Hierarchical Federated Learning in Wireless Networks: Pruning Tackles Bandwidth

Scarcity and System Heterogeneity

Abstract

While a practical wireless network has many tiers where end users do not directly communicate with the central server, the users’ devices have limited computation and battery powers, and the serving base station (BS) has a fixed bandwidth. Owing to these practical constraints and system models, this paper leverages model pruning and proposes a pruning-enabled hierarchical federated learning (PHFL) in heterogeneous networks (HetNets). We first derive an upper bound of the convergence rate that clearly demonstrates the impact of the model pruning and wireless communications between the clients and the associated BS. Then we jointly optimize the model pruning ratio, central processing unit (CPU) frequency and transmission power of the clients in order to minimize the controllable terms of the convergence bound under strict delay and energy constraints. However, since the original problem is not convex, we perform successive convex approximation (SCA) and jointly optimize the parameters for the relaxed convex problem. Through extensive simulation, we validate the effectiveness of our proposed PHFL algorithm in terms of test accuracy, wall clock time, energy consumption and bandwidth requirement.

Index Terms:

Heterogeneous network, hierarchical federated learning, model pruning, resource management.I Introduction

Federated learning (FL) has garnered significant attention as a privacy-preserving distributed edge learning solution in wireless edge networks [1, 2, 3]. Since the original FL follows the parameter server paradigm, many state-of-the-art works consider a single server with distributed clients as the general system model in order to study the analytical and empirical performance [2, 3, 4, 5, 6]. Given that there are clients, each with a local dataset of , where and are the feature vector and the corresponding label, the central server wants to train a global machine learning (ML) model by minimizing a weighted combination of the clients’ local objective functions ’s, as follows

| (1) | |||

| (2) |

where is the corresponding weight, and denotes the loss function associated with the data sample. However, general networks usually follow a hierarchical structure [7], where the clients are connected to edge servers, the edge servers are connected to fog nodes/servers, and these fog nodes/servers are connected to the cloud server [8]. Naturally, some recent works [9, 10, 11, 12, 13, 14] have extended FL to accommodate this hierarchical network topology.

A client111The terms client and UE are interchangeably used when there is no ambiguity. does not directly communicate with the central server in the hierarchical network topology. Instead, the clients usually perform multiple local rounds of model training before sending the updated models to the edge server. The edge server aggregates the received models and updates its edge model, and then broadcasts the updated model to the associated clients for local training. The edge servers repeat this for multiple edge rounds and finally send the updated edge models to the upper-tier servers, which then undergo the same process before finally sending the updated models to the cloud/central server. This is usually known as hierarchical federated learning (HFL) [11]. On the one hand, HFL acknowledges the practical wireless heterogeneous network (HetNet) architecture. On the other hand, it avoids costly direct communication between the far-away cloud server and the capacity-limited clients [14]. Moreover, since local averaging improves learning accuracy [7], the central server ends up with a better-trained model.

While HFL can alleviate communication bottlenecks for the cloud server, data and system heterogeneity amongst the clients still need to be addressed. Since the clients are usually scattered in different locations and have various onboard sensors, the data collected/sensed by these clients are diverse, causing statistical data heterogeneity that the server cannot govern. As such, we need to embrace it in our theoretical and empirical study. Besides, the well-known system heterogeneity arises from the clients’ diverse computation powers [15]. Recently, some works have been proposed to deal with system heterogeneity. For example, FedProx [16], anarchic federated averaging (AFA) [17] and federated normalized averaging algorithm (FedNova) [18], to name a few, considered different local rounds for different clients to address the system heterogeneity. More specifically, FedProx adds a proximal term to the client’s local objective function to handle heterogeneity. AFA and FedNova present different ways to aggregate clients trained models’ weights at the server to tackle this system heterogeneity. However, these algorithms still assume that the client has and trains the original ML model, i.e., neither the computation time for the client’s local training nor the communication overhead for offloading the trained model to the server is considered in system design.

Model pruning has attracted research interest recently [19, 20]. It makes the over-parameterized model sparser, which allows the less computationally capable clients to perform local training more efficiently without sacrificing much of the test accuracy. Besides, since the trained model contains fewer non-zero entries, the communication overhead over the unreliable wireless link between the client and the associated base station (BS) also dramatically reduces. However, pruning generally introduces errors that only partially vanish, causing the pruned model to converge only to a neighborhood of the optimal solution [19]. Besides, unlike the traditional FL, where the model averaging happens only at the central server, HFL has multiple hierarchical levels that may adopt their own aggregation strategy. Therefore, model pruning at the local client level leads to additional errors in the available models at different levels, eventually contributing to the global model. As such, more in-depth study is in need to understand the full benefit of model pruning in hierarchical networks.

I-A Related Work

Some recent works studied HFL [9, 10, 11, 12, 13, 14] and model pruning-based traditional single server based FL [20, 21, 22, 23, 24] separately. In [9], Xu et al. proposed an adaptive HFL scheme, where they optimized edge aggregation intervals and bandwidth allocation to minimize a weighted combination of the model training delay and training loss. Liu et al. proposed network-assisted HFL in [10], where they optimized wireless resource allocation and user associations to minimize learning latency for independent identically distributed (IID) data distribution and weighted sum of the total data distance and learning latency for the non-IID data distribution. Similar to [9, 10], Luo et al. jointly optimized the wireless network parameters in order to minimize the weighted combination of the total energy consumption and delay during the training process in [11]. Besides, [12] also proposed an HFL algorithm based on federated averaging (FedAvg) [1]. In [13], Feng et al. proposed a mobility-aware clustered FL algorithm owing to user mobility. More specifically, assuming that all users had an equal probability of staying at a cluster, the authors derived an upper bound of the convergence rate to capture the impact of user mobility, data heterogeneity and network heterogeneity. Abad et al. also optimized wireless resources to reduce the communication latency and facilitate HFL in [14].

On the model pruning side, Jiang et al. considered two-stage distributed model pruning in [20] with traditional single server based FL setting without any wireless network aspects. In a similar setting, Zhu et al. proposed a layer-wise pruning mechanism in [21]. Liu et al. optimized the pruning ratio and time allocation in [22] in order to maximize the convergence rate in a time division multiple access operated small BS (sBS). The idea was extended to joint client selection, pruning ratio optimization and time allocation in [23]. Using a similar network model, Ren et al. optimized pruning ratios and bandwidth allocations jointly to minimize a weighted combination of the FL training time and pruning error in [24]. These works [22, 23, 24] decomposed the original problem into different sub-problems that they solved iteratively in an attempt to solve the original problem sub-optimally. Moreover, [22, 23, 24] considered a simple network system model with a single BS serving the distributed clients with the wireless links.

I-B Our Contributions

While the studies mentioned above shed some light on HFL and model pruning in the traditional single server based FL, the impact of pruning on HFL in resource-constrained wireless HetNet is yet to be explored. On the one hand, the clients need to train the original model for a few local episodes to determine the neurons they shall prune, which adds additional time and energy costs. On the other hand, pruning adds errors to the learning performance. Therefore, it is necessary to theoretically and empirically study these errors from different levels in HFL. Moreover, it is also crucial to justify how and when one should adopt model pruning in practical wireless HetNets. Motivated by these, in this work, we present our pruning-enabled HFL (PHFL) framework with the following major contributions:

-

•

Considering a practical wireless HetNet, we propose a PHFL solution in which the clients perform local training on the initial models to determine the neurons to prune, perform extensive training on the pruned models, and offload the trained models under strict delay and energy constraints.

-

•

We theoretically analyze how pruning introduces errors in different levels under resource constraints in wireless HetNets by deriving a convergence bound that captures the impact of the wireless links between the clients and server and the pruning ratios. More specifically, the proposed solution converges to the neighborhood of a stationary point of traditional HFL with a convergence rate of , where is the total number of clients, is the total local iterations, quantifies smoothness of the loss function, is an upper bound of the norm of the model weights, and is the maximum allowable pruning ratio.

-

•

Then, we formulate an optimization problem to maximize the convergence rate by jointly configuring wireless resources and system parameters. To tackle the non-convexity of the original problem, we use a successive convex approximation (SCA) algorithm to solve the relaxed convex problem efficiently.

-

•

Finally, using extensive simulation on two popular datasets and three popular ML models, we show the effectiveness of our proposed solution in terms of test accuracy, training time, energy consumption and bandwidth requirement.

The rest of the paper is organized as follows: Section II introduces our system model. Detailed theoretical analysis is performed in Section III, followed by our joint problem formulation and solution in Section IV. Based on our extensive simulation, we discuss the results in Section V. Finally, Section VI concludes the paper. Moreover, Table I summarizes the important notations used in the paper.

| Notation | Description |

|---|---|

| ; ; | user; sBS; mBS |

| ; | sBS set under the mBS; sBS in |

| ; | VC set of sBS under the mBS; VC of sBS |

| ; | Client set of the VC of sBS under the mBS; client in |

| ; | pRB; pRB set |

| Client ’s transmission power during | |

| ; ; | Original model; binary mask; pruned model |

| ; ; ; | Local model of the client, VC, sBS, and mBS |

| ; ; ; ; | Loss function of the client, VC, sBS, mBS, and central server, respectively |

| ; ; | True gradient; stochastic gradient; learning rate |

| ; | Total & pruned parameters of the ML model |

| ; | Pruning ratio of client during ; max pruning ratio |

| ; ; ; | Weight of client, VC, sBS, and mBS |

| Number of SGD rounds on to get winning ticket | |

| ; ; ; | Number of local, VC, sBS, and mBS rounds |

| ; | Binary indicator function to define if sBS receives client’s trained model; probability that |

| Smoothness of the loss functions | |

| Bounded variance of the gradients | |

| Bounded divergence of the loss functions of two inter-connected tiers | |

| ; | Upper bound of the -norm of stochastic gradients and model weights, respectively |

| ; | CPU clock cycle of during ; max CPU cycle of i |

| , | Batch size; number of mini-batch |

| ; | Required number of CPU cycle of to process -bit data; each data sample size in bits |

| Floating point precision | |

| ; | Time and energy overheads to get the lottery ticket |

| ; | Time and energy overheads to compute local SGD rounds with the pruned model |

| ; | Time and energy overheads for offloading client ’s trained model |

| ; | Client ’s total time and energy overheads to finish one VC round |

| ; | Time and energy budgets to finish one VC round |

II System Model

II-A Wireless Network Model

We consider a generic heterogeneous network (HetNet) consisting of some UEs, sBSs and macro BSs (mBSs), as shown in Fig. 1. Denote the UE, sBS and mBS sets by , and , respectively. Each UE and sBS are connected to one sBS and mBS, respectively. The mBSs are connected to the central server. While the UEs communicate over wireless links with the sBS, the connections between the sBS and mBS and between the mBS and central server are wired. Moreover, due to UEs’ system heterogeneity, we consider that the sBS groups UEs with similar computation and battery powers into a virtual cluster (VC).

We can benefit from the VC since it enables one additional aggregation tier. Besides, thanks to the recent progress of the proximity-services in practical networks [25], one can also select a cluster head and leverage device-to-device communication to receive and distribute the models to the UEs in the same VC. However, in this work, we assume that the sBS creates the VC and also manages it. We use the notation , and to represent the sBS set associated to mBS , VC set of sBS and UE set of VC , respectively. Moreover, denote the UEs associated with sBS , mBS by and , respectively. Finally, . Note that we consider that these associations are known and provided by the network administrator. The network has a fixed bandwidth divided into orthogonal physical resource blocks (pRBs) for performing the FL task. Denote the pRB set by . Each mBS reuses the same pRB set, i.e., the frequency reuse factor is . Besides, each mBS allocates dedicated pRBs to its associated sBSs. Each sBS further uses dedicated pRBs to communicate with the associated UEs. As such, there is no intra-tier interference in the system.

To this end, denote the distance between UE and the sBS by . The wireless fading channel between the UE and sBS follows Rayleigh distribution222Rayleigh distribution is widely used for its simplicity. Other distributions are not precluded., and is denoted by . The transmission power of UE is denoted by . As such, the uplink signal-to-noise-plus-interference ratio (SINR) is expressed as

| (3) |

where is the path loss exponent, is the variance of the circularly symmetric zero-mean Gaussian distributed random noise and is the pRB size. Moreover, is the inter-cell interference. Thus, the data rate

| (4) |

II-B Pruning-Enabled Hierarchical Federated Learning (PHFL)

In this work, we consider that each client uses mini-batch stochastic gradient descent (SGD) to minimize (2) over mini-batches since the gradient descent over the entire dataset is time-consuming. Denote the stochastic gradient such that , where is a randomly sampled batch from dataset . However, computation with the original model is still costly and may significantly extend the training time of a computationally limited client. To alleviate this, the UE trains a pruned model by removing some of the weights of the original model [19].

Denote a binary mask by and the pruned model by , where means element-wise multiplication. Note that training the pruned model is computationally less expensive as it has fewer parameters than the original model. It is worth pointing out that the UE utilizes the state-of-the-art lottery ticket hypothesis [26] to find the winning ticket and the corresponding mask with the following key steps. Denote the number of parameters required to be pruned by . The UE performs local iterations on the original model as

| (5) |

where is the step size. The UE then prunes entries of with the smallest magnitudes333The time complexity to sort the parameters and then prune the smallest ones depends on the sorting technique. Many sorting algorithms have logarithmic time complexity, which can be computed quickly in modern graphical processing units. Following the common practice in literature [22, 23, 24], the overhead for pruning is ignored in this work. Moreover, the proposed method can be readily extended to incorporate the time overhead for pruning. and generates a binary mask . To that end, the client obtains the winning ticket by retaining the original weights of the corresponding nonzero entries of the mask from the original initial model [26]. Note that other pruning techniques can also be adopted. Moreover, we denote the pruning ratio by [23]

| (6) |

Given the pruned model , the loss function of the UE is rewritten as

| (7) |

Each UE updates its pruned model as

| (8) |

As such, we denote the loss functions of the VC, sBS, mBS and central server as , , , and , respectively. Note that for simplicity, we consider identical weights, i.e., , , and , which can be easily adjusted for other weighting strategies. Besides, since aggregation happens at different times and different levels, we need to capture the time indices explicitly. Let each UE perform local iterations before sending the updated model to the associated VC. It is worth noting that the winning ticket and the corresponding binary mask are only obtained before these local rounds begin. Besides, since training the original model is costly, it is reasonable to consider 444In our simulation, we considered and observed that even with performed well.. Moreover, although the sBS-mBS and mBS-central server links are wired, communication and computation at these nodes incur additional burdens. As such, we assume that each VC, sBS and mBS perform , and rounds, respectively, before sending the trained model to the respective upper layers. Denote the indices of the current global round, mBS round, sBS round, VC round and UE’s local round by , , , and , respectively. Besides, similar to [13, 9], let denote the index of local update iterations.

If , the UE receives the latest available model of its associated VC, i.e.,

| (9) |

where . The UE then computes the pruned model and the binary mask . It then performs local SGD rounds as

| (10) |

Each VC performs local rounds. When , the VC’s model gets updated by the latest available sBS model, i.e.,

| (11) |

where . Besides, between two VC rounds, the local model of the VC is updated as

| (12) |

where and is a binary indicator function that indicates whether the sBS receives the trained model of during the VC aggregation round or not, and is defined as follows:

| (13) |

where is the probability of receiving the trained model over the wireless link and is calculated in the subsequent section (c.f. (IV-A)). Note that since the sBS has to receive the gradient over the wireless link, we use the binary indicator function in (12) as a common practice [27, 13].

The sBS performs local rounds before updating its model. When , the sBS updates its local model with the latest available model at its associated mBS, i.e.,

| (14) |

where . In each sBS round , the sBS updates its model as

| (15) |

where and . Similarly, the mBS performs local rounds before updating its local model with the latest available global model when , i.e.,

| (16) |

Moreover, between two mBS rounds, we can write

| (17) |

where and . Finally, the central server performs global aggregation by collecting the updated models from all mBSs as follows:

| (18) |

where .

The proposed PHFL process is summarized in Algorithm 1.

III PHFL: Convergence Analysis

III-A Assumptions

We make the following standard assumptions [13, 7, 20, 23, 28]:

-

1.

The loss functions are lower-bounded, i.e., .

-

2.

The loss functions are -smooth, i.e., .

-

3.

Mini-batch gradients are unbiased . Besides, the variance of the gradients is bounded, i.e., .

-

4.

The divergence of the local, VC, sBS, mBS and global loss functions are bounded for all , , , and , i.e.,

-

5.

The stochastic gradients are independent of each other in different iterations.

-

6.

The stochastic gradients are bounded, i.e., .

-

7.

The model weights are bounded, i.e., .

-

8.

The pruning ratio , in which and is the maximum allowable pruning ratio, follows

(19)

Since the updated global, mBS, sBS and VC models are not available in each local iteration , similar to standard practice [13, 7, 9], we assume the virtual copies of these models, denoted by , , and , respectively, are available. Besides, we assume that the bounded divergence assumptions amongst the above loss functions also hold for these virtual models. Moreover, analogous to our previous notations, we express and .

III-B Convergence Analysis

Similar to existing literature [13, 7, 9, 20], we consider the average global gradient norm as the indicator of the proposed PHFL algorithm’s convergence. As such, in the following, we seek an -suboptimal solution such that and . Particularly, we start with Theorem 1 that requires bounding the differences amongst the models in different hierarchical levels. These differences are first calculated in Lemma 1 to Lemma 4 and then plugged into Theorem 1 to get the -suboptimal bound in Corollary 1.

Theorem 1.

When the assumptions in Section III-A hold and , we have

| (20) |

where , , and is the client’s central processing unit (CPU) frequency in the wireless factor. Besides, the terms , , and are

| (21) | |||

| (22) | |||

| (23) | |||

| (24) | |||

| (25) |

The proof of Theorem 1 and the subsequent Lemmas are left in the supplementary materials.

Remark 1.

The first term in (1) is what we get for centralized learning, while the second term arises from the randomness of the mini-batch gradients [29]. The third term appears from model pruning. Besides, the fourth term arises from the wireless links among the sBS and UEs. It is worth noting that when all ’s are ’s. Finally, the last term is due to the difference among the VC-local, sBS-VC, sBs-mBS and mBS-global model parameters, respectively, which are derived in the following.

Remark 2.

When the system has no pruning, i.e., all UEs use the original models, all . Besides, under the perfect communication among the sBS and UEs, we have . In such cases, the -suboptimal bound boils down to

| (26) | ||||

Besides, the last term in (26) appears from the four hierarchical levels. When and there are no levels, i.e., , the convergence bound is exactly the same as the original SGD with non-convex loss function [7].

To that end, we calculate the divergence among the local, VC, sBS, mBS and global model parameters, and derive the corresponding pruning errors in each level in what follows.

Lemma 1.

When , the average difference between the VC and local model parameters, i.e., the term of (1), is upper bounded as

| (27) |

where .

Remark 3.

In (1), the first term comes from the statistical data heterogeneity, while the second term arises from the divergence between the local and VC loss functions. The third term emanates from model pruning. Finally, the fourth term stems from the wireless links among the UEs and sBS.

Lemma 2.

When , the difference between the sBS model parameters and VC model parameters, i.e., the term of (1), is upper bounded as

| (28) |

where .

Remark 4.

The first term in (2) appears from the divergence of the loss functions of the clients and VC, while the second term stems from the divergence between the loss function of the VC and sBS. The rest of the terms are due to the statistical data heterogeneity, model pruning and wireless links, respectively.

Lemma 3.

When , the average difference between the sBS and mBS model parameters, i.e., the term of (1), is upper bounded as

| (29) |

where .

Lemma 4.

When , the average difference between the global and the mBS models, i.e., the term, is bounded as follows:

| (30) |

where .

Note that we have similar observations for (3) and (4) as in Remark 4. Now, using the above Lemmas, we find the final convergence rate in Corollary 1.

Corollary 1.

When , the bound of Theorem 1 boils down to

| (31) |

Remark 5.

In (1), the third, fourth, fifth and sixth terms appear from the divergence between client-VC, VC-sBS, sBS-mBS and mBS-global loss functions, respectively.

Remark 6.

Remark 7.

When , we have . With a sufficiently large , when the trained model reception success probability is for all users in all time steps, we have , where the second term comes from the pruning error. Therefore, the proposed PHFL solution converges to the neighborhood of a stationary point of traditional HFL.

IV Joint Problem Formulation and Solution

Similar to existing literature[3, 4, 8, 27], we ignore the downlink delay in this paper since the sBS can utilize the higher spectrum and transmission power to broadcast the updated model. Moreover, since the sBS-mBS and mBS-cloud server links are wired, we ignore the transmission delays for these links555The transmissions over the wired sBS-mBS and mBS-cloud server links happen in the backhaul, and the corresponding delays are quite small. In order to calculate these delays, one should also consider the overall network loads, which are beyond the scope of this paper.. Furthermore, since the sBS, mBS and the cloud server usually have high computation power, we also ignore the model aggregation and processing delays666The addition of parameters and then taking the average have a time complexity of . With highly capable CPUs at the sBS, mBS, and central server, the corresponding time delays for parameter aggregation are usually small and therefore ignored in the literature [9, 10, 23].. Therefore, at the beginning of each VC round, i.e., , we first calculate the required computation time for finding the lottery ticket as

| (33) |

where is the batch size, is the number of batches, is the CPU cycles to process -bit data, is UE ’s each data sample’s size in bits and is the CPU frequency. Upon finding the pruned model, each client performs local iterations, which require the following computation time [23]

| (34) |

To that end, the UE only offloads the non-zero weights along with the binary mask to the sBS. As such, we calculate the uplink payload size of UE as follows777Note that one may send the non-pruned weights and the corresponding indices, which are unknown until the original initial model is trained for iterations. We consider an upper bound for the uplink payload, which will be used during the joint parameters optimization phase.:

| (35) |

where is the floating point precision. Note that, in (35), we need bit to represent the sign of the entry. Therefore, we calculate the uplink payload offloading delay as follows:

| (36) |

As such, UE ’s total duration for local computing and trained model offloading is

| (37) |

We now calculate the energy consumption for performing the model training, followed by the required energy for offloading the trained models. First, let us calculate the energy consumption to get the lottery ticket as

| (38) |

where is the effective capacitance of UE’s CPU chip. Similarly, we calculate the energy consumption to train local iterations using the pruned model as

| (39) |

Moreover, we calculate the uplink payload offloading energy consumption as follows:

| (40) |

Therefore, the total energy consumption is calculated as

| (41) |

IV-A Problem Formulation

Denote the duration between VC aggregation . Then, we calculate the probability of successful reception of UE’s trained model as follows:

| (42) |

where and follows from the Rayleigh fading channels between the UE and the sBS.

Notice that the pruning ratio , CPU frequency , transmission power and the probability of successful model reception are intertwined. More specifically, depends on , and , given that the other parameters remain fixed. As such, we aim to optimize these parameters jointly by considering the controllable terms in our convergence bound in Corollary 1. Therefore, we focus on each VC round, i.e., the local iteration round at which . Specifically, we focus on minimizing the error terms due to pruning and wireless links, which are given by

| (43) | |||

Remark 8.

In the above expression, the first term appears from the pruning error , while the second term comes from the wireless factor .

Based on the above observations, we consider a weighted combination of these two terms as our objective function to minimize the bound in (43). Using (IV-A) in the wireless factor, we, therefore, consider the following objective function.

| (44) | |||

where and are two weights to strike the balance between the terms. Note that the wireless factor is multiplied by the learning rate and gradient in (43). Typically, the learning rate is small. Besides, the gradient becomes smaller as the training progresses. As such, the wireless factor term is relatively small when for all UEs and VC aggregation rounds. The model weights are non-negative. Furthermore, a larger pruning ratio can dramatically reduce the computation and offloading time, making the wireless factor . However, as a higher pruning ratio means more model parameters are pruned, we wish to avoid making the ’s large to reduce the pruning-induced errors. The above facts suggest we put more weight on the pruning error term to penalize more for the ’s. As such, we consider . However, in our resource-constrained setting, a small can prolong the training and offloading time, leading to be , i.e., the sBS will not receive the local trained model. Therefore, although is small, we keep the wireless factor to ensure is never .

Therefore, we pose the following optimization problem to configure the parameters jointly.

| (45) | ||||

| (45a) | ||||

| (45b) | ||||

| (45c) | ||||

| (45d) | ||||

| (45e) | ||||

where constraint ensures that the completion of one VC round is within the required deadline. Constraint controls the energy expense to be within the allowable budget. Besides, and restrict us from choosing the CPU frequency and transmission power within the UE’s minimum and maximum CPU cycles and transmission power, respectively. Finally, constraint ensures the pruning ratio to be within a tolerable limit .

Remark 9.

We assume that clients’ system configurations remain unchanged over time, while the channel state information (CSI) is dynamic and known at the sBS. The clients share their system configurations with their associated sBS. The sBSs share their respective users’ system configurations and CSI with the central server. As such, problem (45) is solved centrally, and the optimized parameters are broadcasted to the clients. Besides, problem (45) is non-convex with the multiplications and divisions of the optimization variables in the second term. Moreover, constraints (C) and (C) are not convex. Therefore, it is not desirable to minimize this original problem directly. In the following, we transform the problem into an approximate convex problem that can be solved efficiently.

IV-B Problem Transformation

Let us define . Given an initial feasible point set (, , ), we perform a linear approximation of this non-convex expression as follows:

| (46) |

where , and .

Moreover, ,

and are calculated in (47), (48) and (49), respectively.

| (47) | |||

| (48) | |||

| (49) |

| (50) |

| (51) |

We now focus on the non-convex constraints. First, let us approximate the local pruned model computation time as

| (53) |

Then, we approximate the non-convex uplink model offloading delay as shown in (50). Using a similar treatment, we write

| (54) |

Similarly, we approximate the energy consumption for model offloading as shown in (IV-B).

Therefore, we pose the following transformed problem

| (55) | ||||

| (55a) | ||||

| (55b) | ||||

| (55c) | ||||

where the constraints are taken for the same reasons as in the original problem. Besides, .

Note that problem (55) is now convex and can be solved iteratively using existing solvers such as CVX [30]. The key steps of our iterative solution are summarized in Algorithm 2. Moreover, as (55) has decision variables and constraints, the time complexity of running Algorithm 2 for iterations is [31]. While Algorithm 2 yields a suboptimal solution and converges to a local stationary solution set of the original problem (45), SCA-based solutions are well-known for fast convergence [32]. Moreover, our extensive empirical study in the sequel suggests that the proposed PHFL solution with Algorithm 2 delivers nearly identical performance to the upper bounded performance.

V Simulation Results and Discussions

V-A Simulation Setting

For the performance evaluation, we consider , and . We let each sBS maintain VCs, where each VC has UEs. In other words, we have and , and , and . We assume megahertz (MHz). We randomly generate maximum transmission power , energy budget for each VC aggregation round , CPU frequency and required CPU cycle to process per-bit data , respectively, from dBm, Joules, gigahertz (GHz) and for these two VCs. Therefore, all UEs in a VC have the above randomly generated system configurations888Our approach can easily be extended where all clients can have random ’s, ’s and ’s. Our approach is practical since these parameters depend on the clients’ manufacturers and their specific models.. Moreover, as described earlier in Section II, our proposed PHFL has tiers, namely () UE-VC, () VC-sBS, () sBS-mBS, and () mBS-central server.

For our ML task, we use image classification with the popular CIFAR- and CIFAR- datasets [33] for performance evaluation. We use symmetric Dirichlet distribution with concentration parameter for the non-IID data distribution as commonly used in literature [4, 27]. Besides, we use convolutional neural network (CNN), residual network (ResNet)- [34] and ResNet- [34]. The CNN model has the following architecture: , , , , , , whereas the ResNets have a similar architecture as in the original paper [34]. Moreover, the total number of trainable parameters depends on various configurations, such as the input/output shapes, kernel sizes, strides, etc. In our implementation, the original CNN, ResNet- and ResNet- models, respectively, have ; and trainable parameters on CIFAR-, and ; and trainable parameters on CIFAR-. Besides, with , we have a wireless payload of about megabits (Mbs), Mbs and Mbs for CIFAR-, and Mbs, Mbs and Mbs for CIFAR- datasets for the respective three original models.

V-B Performance Study

First, we investigate the pruning ratios ’s in different VCs. When the system configurations remain the same, the pruning ratio depends on the deadline threshold . More specifically, a larger deadline allows the client to prune fewer model parameters, given that the energy constraint is satisfied. Intuitively, less pruning leads to a bulky model that takes longer training time. The CNN model is shallower compared to the ResNets. More specifically, the original non-pruned ResNet- and ResNet- models have about times and times the trainable parameters of the CNN model, respectively, on CIFAR-. Therefore, the clients require a larger to perform their local training and trained model offloading as the trainable parameters increase.

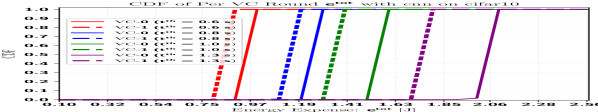

Intuitively, given a fixed , the clients need to prune more model parameters for a bulky model in order to meet the deadline and energy constraints. Our simulation results also show that this general intuition holds in determining the ’s, as shown in Fig. 2, which show the cumulative distribution function (CDF) of the ’s in different VCs. It is worth noting that the pruning ratios ’s in each VC aggregation rounds are not deterministic due to the randomness of the wireless channels. We know the optimal variables once we solve the optimization problem in (55), which depends on the realizations of the wireless channels. Then, for a given VC , we generate the plot by calculating , where is an indicator function that takes value if and otherwise. With the CNN model, about clients have a less than , , and in VC- in all cells, for s, s, s and s deadline thresholds, respectively, in Fig. 2(a). Note that we use , i.e., the clients can prune up to of the neurons. Moreover, we consider s and s, to make the problem feasible for all clients for the ResNet- and ResNet- models, respectively. Furthermore, from Fig. 2(a) - Fig. 2(c), it is quite clear that the UEs in VC- have to prune slightly lesser model parameters than the UEs in VC-, even though the maximum CPU frequency of the UEs in VC- is GHz, which is about higher than the UEs in VC-. However, due to the wireless payloads in the offloading phase, the transmission powers of the clients can also influence the ’s. In our setting, the UEs’ maximum transmission powers are Watt and Watt, respectively, in VC- and VC-. As such, with a similar wireless channel, the UEs in VC- can offload much faster than the UEs in VC-. The above observations, thus, point out that trained model offloading time dominates the total time to finish one VC round .

When it comes to energy expense, from (39) and (40), it is quite obvious that a high shall lead to less energy consumption for both the training and offloading. However, the total energy expense of the clients boils down to the dominating factor between the required energy for computation and trained model offloading due to the interplay between the wireless and the learning parameters. Particularly, with MHz, the clients can offload the CNN model fast, leading the computational energy consumption to be the dominating factor. The ResNets, on the other hand, have huge wireless overheads, leading the offloading time and energy be the dominating factors. This is also observed in our results in Fig. 3, which is the CDF plot of the energy expense , calculated in (41), of the clients in each VC and is generated following a similar strategy as in Fig. 2. When the CNN model is used, the total energy cost of the clients in VC- is larger, even though they prune more parameters, compared to the clients in VC- since larger ’s of the clients in VC- leads to a higher computational energy cost. This, however, changes for the ResNets since the wireless communication burden dominates the computation burden. The clients in VC- can use their higher ’s for the offloading time reduction when they determine the pruning ratios ’s. As such, the total energy expenses of the clients in VC- are much larger than the ones in VC-. Our simulation results in Fig. 3(a) and Figs. 3(b)-3(c) also reveal the same trends.

Now, we observe the impact of ’s on the test accuracies and required bandwidth for trained model offloading. Intuitively, if the model is shallow, pruning further makes it shallower. Therefore, the test performance can exacerbate if ’s increases for a shallow model. On the other hand, for a bulky model, pruning may have a less severe effect. Specifically, under the deadline and energy constraints, pruning may eventually help because pruning a few neurons leads to a shallower but still reasonably well-constructed model that can be trained more efficiently. Moreover, our convergence bound in (1) clearly shows that the increasing ’s decreases the convergence speed. However, the wireless payload is directly related to ’s as shown in (35). Particularly, the wireless payload is an increasing function of the ’s. As such, increasing the deadline threshold should decrease the ’s but significantly increase the wireless payload size.

Our simulation results also reveal the above trends in Fig. 4. Note that, in Fig. 4 and the subsequent figures, the (solid/dashed) lines are the average of independent simulation trials using the configurations mentioned in Section V-A, while the shaded strips show the corresponding standard deviations. Particularly, we observe that the CNN model is largely affected by a small because that leads to a large , which eventually prunes more neurons of the already shallow model. On the other hand, the bulky ResNets exhibit small performance degradation when decreases. Moreover, compared to the original non-pruned counterparts, the performance difference is small if is selected appropriately, as shown in Fig. 4(a) to Fig. 4(c). However, even a slight increase in shall reduce the wireless payload size, which can significantly save a large portion of the bandwidth, as observed in Fig. 4(d) to Fig. 4(f). For example, if the CNN model is used, with s, the performance degradation on test accuracy is about after PHFL round, while the per PHFL round bandwidth saving for at least of the clients is about . Similarly, if s, the test accuracy degradation is about and after global rounds, while the per PHFL round bandwidth saving for at least of the clients are about and , for ResNet- and ResNet-, respectively.

V-C Baseline Comparisons

We now focus on performance comparisons. First, we consider the existing HFL [10, 9] algorithm that does not consider model pruning or any energy constraints. Besides, [10, 9] only considered two levels, UE-BS and BS-cloud/server. For a fair comparison, we adapt HFL into three levels ) UE-sBS, ) sBS-mBS and ) mBS-cloud/server. Furthermore, we enforce the energy and deadline constraints in each UE-sBS aggregation round and name this baseline HFL with constraints (HFL-WC). Moreover, since [10, 9] did not have any VC and we have VC aggregation rounds before the sBS aggregation round, we have adapted the deadline accordingly for HFL-WC to make our comparison fair. Furthermore, we consider a random PHFL (R-PHFL) scheme, which has the same system model as ours and allows model pruning. In R-PHFL, the pruning ratios, ’s, are randomly selected between and to satisfy constraint (45e). Moreover, in both HFL-WC and R-PHFL, a common that satisfies both deadline and energy constraints for all clients in all VCs leads to poor test accuracy. As such, we determine the local iterations of the UEs within a VC by selecting the maximum possible number of iterations that all clients within that VC can perform without violating the delay and energy constraints. For our proposed PHFL, we choose and . Moreover, we let for both HFL-WC and PHFL. We also consider centralized SGD to show the performance gap of PHFL with the ideal case where all training data samples are available centrally.

From our above discussion, it is expected that pruning will likely not have the edge over the HFL-WC with a shallow model. Moreover, one may not need pruning for a shallow model in the first place. However, for a bulky model, due to a large number of training parameters and a huge wireless payload, pruning can be a necessity under extreme resource constraints. Furthermore, it is crucial to jointly optimize ’s, ’s and ’s in order to increase the test accuracy. The simulation results in Fig. 5 also validate these claims. We observe that when the increases, HFL-WC’s performance improves with the CNN model. Moreover, when HFL-WC has times the deadline of PHFL, the performance is comparable, as shown in Fig. 5(a) and Fig. 5(d). More specifically, the maximum performance degradation of the proposed PHFL algorithm is about on CIFAR- when HFL-WC has times the deadline of PHFL. However, for the ResNets model, HFL-WC requires a significantly longer deadline threshold to make the problem feasible. Particularly, with ResNet-, s does not allow the UEs to perform even a single local iteration, leading to the same initial model weights and, thus, the same test accuracy in HFL-WC. Moreover, when the deadline threshold is times the of the PHFL, HFL-WC’s test accuracy significantly lags, as shown in Fig. 5(b) and Fig. 5(e). Particularly, after rounds, our proposed solution with s provides about , and , and about , and better test accuracy on CIFAR- and on CIFAR-, respectively, than the HFL-WC with s, s and s. For the ResNet- model, the clients require s for performing some local training in HFL-WC, whereas our proposed solution can achieve significantly better performance with only s. For example, our proposed solution with s yields about , and , and about , and better test accuracy than the HFL-WC with s, s and s on CIFAR- and CIFAR-, respectively. Moreover, our proposed solution provides about and and about and better test accuracy than R-PHFL on CIFAR- and CIFAR- with the ResNet- and ResNet- models, respectively. The gap with the ideal centralized ML is also expected since FL suffers from data and system heterogeneity.

We also examine the performance of the proposed method in terms of wall clock time. Since HFL-WC does not have the VC tier, the wall clock time to run global rounds for HFL-WC with a deadline smaller than will be lower than the proposed PHFL’s wall clock time to run the same number of global rounds. However, that does not necessarily guarantee a higher test accuracy than our PHFL since training and offloading the original bulky model may take a long time, allowing only a few local SGD rounds of the clients. Besides, any deadline greater than for the HFL-WC will require a longer wall clock time than our proposed PHFL solution. Our simulation results in Fig. 6 clearly shows these trends. We observe that when HFL-WC has a deadline , i.e., s = s, the test accuracies are about and , respectively, for HFL-WC and PHFL when the wall clock reaches seconds. Even with seconds, the HFL-WC algorithm performs worse than our proposed PHFL solution.

From the above results and discussion, it is quite clear that R-PHFL yields poor test accuracy due to the random selection of ’s. Besides, HFL-WC cannot deliver reasonable performance when the model has a large number of training parameters. Furthermore, our proposed PHFL’s performance is comparable to the non-pruned HFL-WC performance with the shallow model. As such, in the following, we only consider the upper bound (UB) of the HFL baseline, which does not consider the constraints. Moreover, to show how pruning degrades test accuracy, we also consider the UB, called HFL-VC-UB, by inheriting the same system model described in Section II, but without the model pruning and the constraints. In other words, we inherit the same underlying four levels, UE-VC, VC-sBS, sBS-mBS and mBS-cloud/server aggregation policy with the original non-pruned model.

| Methods | Dir() | With CNN Model | With ResNet- Model | With ResNet- Model | ||||||

| Acc | Req T [s] | Req E [J] | Acc | Req T [s] | Req E [J] | Acc | Req T [s] | Req E [J] | ||

| PHFL | ||||||||||

| (Ours) | ||||||||||

| HFL-VC | ||||||||||

| (UB) | ||||||||||

| (Ours) | ||||||||||

| R-PHFL | ||||||||||

| HFL | ||||||||||

| (UB) - | ||||||||||

| Methods | Dir() | With CNN Model | With ResNet- Model | With ResNet- Model | ||||||

| Acc | Req T [s] | Req E [J] | Acc | Req T [s] | Req E [J] | Acc | Req T [s] | Req E [J] | ||

| PHFL | ||||||||||

| (Ours) | ||||||||||

| HFL-VC | ||||||||||

| (UB) | ||||||||||

| (Ours) | ||||||||||

| HFL | ||||||||||

| (UB)- | ||||||||||

| Methods | Dir() | With CNN Model | With ResNet- Model | With ResNet- Model | ||||||

| Acc | Req T [s] | Req E [J] | Acc | Req T [s] | Req E [J] | Acc | Req T [s] | Req E [J] | ||

| PHFL | ||||||||||

| (Ours) | ||||||||||

| HFL-VC | ||||||||||

| (UB) | ||||||||||

| (Ours) | ||||||||||

| R-PHFL | ||||||||||

First, we illustrate the required computation time and the corresponding energy expenses for the baselines and our proposed PHFL solution with different deadline thresholds. Naturally, if we increase the for each VC aggregation round, our proposed solution will take longer time and energy to perform global rounds. Moreover, since there are VC aggregation rounds in our proposed system model, the original model training and offloading with HFL-VC-UB is expected to take significantly longer and consume more energy than the HFL-UB baseline. Therefore, the effectiveness, in terms of time and energy consumption, of PHFL is largely dependent upon the deadline threshold . While R-PHFL requires the same time as our proposed PHFL, the energy requirements for R-PHFL can vary significantly due to the random selection of the ’s that lead to different model sizes and different local training episodes in different VCs.

Our results in Figs. 7(a)-7(c) and Figs. 7(d)-7(f) clearly show these trade-offs with time and energy consumption, respectively, for the three models. It is worth pointing out that we adopted the popular lottery ticket hypothesis [26] for finding the winning ticket that requires performing iterations on the initial model with full parameter space as described in Section II-B. This incurs additional time and energy overheads, as calculated in (33) and (38), respectively. As such, when the is large, if the UEs have sufficient energy budgets, they can prune only a few neurons. Therefore, the total time and energy consumption for the original model computation for getting the winning ticket, training the pruned model and offloading the trained pruned model parameters can become slightly larger than the HFL-VC-UB. This is observed in Fig. 7(a) and Fig. 7(d) for CNN model when s, and also in Fig. 7(b) and Fig. 7(e) when s for the ResNet- model. Besides, the dashed vertical lines in Fig. 7(e) and Fig. 7(f) show the mean energy budget of the clients for each PHFL global round. While all UEs are able to perform the learning and offloading within this mean energy budget with CNN and ResNet- models, clearly, when the ResNet- model is used, more than of the clients will fail to perform the HFL-VC-UB due to their limited energy budgets.

| Methods | Dir() | With CNN Model | With ResNet- Model | With ResNet- Model | ||||||

| Acc | Req T [s] | Req E [J] | Acc | Req T [s] | Req E [J] | Acc | Req T [s] | Req E [J] | ||

| PHFL | ||||||||||

| (Ours) | ||||||||||

| HFL-VC | ||||||||||

| (UB) | ||||||||||

| (Ours) | ||||||||||

| R-PHFL | ||||||||||

| HFL | ||||||||||

| (UB) - | ||||||||||

| Methods | Dir() | With CNN Model | With ResNet- Model | With ResNet- Model | ||||||

| Acc | Req T [s] | Req E [J] | Acc | Req T [s] | Req E [J] | Acc | Req T [s] | Req E [J] | ||

| PHFL | ||||||||||

| (Ours) | ||||||||||

| HFL-VC | ||||||||||

| (UB) | ||||||||||

| (Ours) | ||||||||||

| HFL | ||||||||||

| (UB) - | ||||||||||

| Methods | Dir() | With CNN Model | With ResNet- Model | With ResNet- Model | ||||||

| Acc | Req T [s] | Req E [J] | Acc | Req T [s] | Req E [J] | Acc | Req T [s] | Req E [J] | ||

| PHFL | ||||||||||

| (Ours) | ||||||||||

| HFL-VC | ||||||||||

| (UB) | ||||||||||

| (Ours) | ||||||||||

| R-PHFL | ||||||||||

Finally, we show the impact of and for different dataset heterogeneity levels on CIFAR- dataset in Table II - Table IV, where s and s is used in our proposed PHFL algorithms for ResNet- and ResNet- models, respectively. Besides, for the CNN model, we used s and s, respectively, in our PHFL algorithm when and , respectively, due to the facts that pruning exacerbates test performance for a shallow model and computational time dominates the offloading delay. Similarly, we considered s and s, respectively, for and on CIFAR- datasets for the CNN model. The performance comparisons for different ’s are shown in Table V - Table VII. From the tables, it is quite clear that pruning helps with negligible performance deviation from its original non-pruned counterparts. Besides, for the shallow CNN model, the performance gain, in terms of test accuracy, of our proposed PHFL is insignificant compared to the bulky ResNets. Moreover, increasing or generally improves the test accuracy. However, if the same is to be used, it is beneficial to increase compared to increasing .

VI Conclusion

This work proposed a model pruning solution to alleviate bandwidth scarcity and limited computational capacity of wireless clients in heterogeneous networks. Using the convergence upper-bound, pruning ratio, computation frequency and transmission power of the clients were jointly optimized to maximize the convergence rate. The performances were evaluated on two popular datasets using three popular machine learning models of different total training parameter sizes. The results suggest that pruning can significantly reduce training time, energy expense and bandwidth requirement while incurring negligible test performance.

References

- [1] B. McMahan, E. Moore, D. Ramage, S. Hampson, and B. A. y Arcas, “Communication-efficient learning of deep networks from decentralized data,” in Proc. AISTATS, 2017.

- [2] N. H. Tran, W. Bao, A. Zomaya, M. N. H. Nguyen, and C. S. Hong, “Federated learning over wireless networks: Optimization model design and analysis,” in Proc. IEEE INFOCOM, 2019.

- [3] Z. Yang, M. Chen, W. Saad, C. S. Hong, and M. Shikh-Bahaei, “Energy efficient federated learning over wireless communication networks,” IEEE Trans. Wireless Commun., vol. 20, no. 3, pp. 1935–1949, 2020.

- [4] R. Jin, X. He, and H. Dai, “Communication efficient federated learning with energy awareness over wireless networks,” IEEE Trans. Wireless Commun., vol. 21, no. 7, pp. 5204–5219, Jan. 2022.

- [5] Z. Chen, W. Yi, and A. Nallanathan, “Exploring representativity in device scheduling for wireless federated learning,” IEEE Trans. Wireless Commun., pp. 1–1, 2023.

- [6] T. Zhang, K.-Y. Lam, J. Zhao, and J. Feng, “Joint device scheduling and bandwidth allocation for federated learning over wireless networks,” IEEE Trans. Wireless Commun., pp. 1–1, 2023.

- [7] J. Wang, S. Wang, R.-R. Chen, and M. Ji, “Demystifying why local aggregation helps: Convergence analysis of hierarchical sgd,” in Proc. AAAI, 2022.

- [8] S. Hosseinalipour, S. S. Azam, C. G. Brinton, N. Michelusi, V. Aggarwal, D. J. Love, and H. Dai, “Multi-stage hybrid federated learning over large-scale d2d-enabled fog networks,” IEEE/ACM Trans. Network., vol. 30, no. 4, pp. 1569–1584, 2022.

- [9] B. Xu, W. Xia, W. Wen, P. Liu, H. Zhao, and H. Zhu, “Adaptive hierarchical federated learning over wireless networks,” IEEE Trans. Vehicular Technol., vol. 71, no. 2, pp. 2070–2083, 2021.

- [10] S. Liu, G. Yu, X. Chen, and M. Bennis, “Joint user association and resource allocation for wireless hierarchical federated learning with iid and non-iid data,” IEEE Trans. Wireless Commun., vol. 21, no. 10, pp. 7852–7866, 2022.

- [11] S. Luo, X. Chen, Q. Wu, Z. Zhou, and S. Yu, “Hfel: Joint edge association and resource allocation for cost-efficient hierarchical federated edge learning,” IEEE Trans. Wireless Commun., vol. 19, no. 10, pp. 6535–6548, 2020.

- [12] L. Liu, J. Zhang, S. Song, and K. B. Letaief, “Client-edge-cloud hierarchical federated learning,” in Proc. IEEE ICC, 2020.

- [13] C. Feng, H. H. Yang, D. Hu, Z. Zhao, T. Q. S. Quek, and G. Min, “Mobility-aware cluster federated learning in hierarchical wireless networks,” IEEE Trans. Wireless Commun., vol. 21, no. 10, pp. 8441–8458, Oct. 2022.

- [14] M. S. H. Abad, E. Ozfatura, D. Gunduz, and O. Ercetin, “Hierarchical federated learning across heterogeneous cellular networks,” in Proc. ICASSP. IEEE, 2020.

- [15] P. Kairouz, H. B. McMahan, B. Avent, A. Bellet, M. Bennis, A. N. Bhagoji, K. Bonawitz, Z. Charles, G. Cormode, R. Cummings et al., “Advances and open problems in federated learning,” Foundations and Trends® in Machine Learning, vol. 14, no. 1–2, pp. 1–210, 2021.

- [16] T. Li, A. K. Sahu, M. Zaheer, M. Sanjabi, A. Talwalkar, and V. Smith, “Federated optimization in heterogeneous networks,” Proc. MLSys, vol. 2, pp. 429–450, 2020.

- [17] H. Yang, X. Zhang, P. Khanduri, and J. Liu, “Anarchic federated learning,” in Proc. ICML. PMLR, 2022, pp. 25 331–25 363.

- [18] J. Wang, Q. Liu, H. Liang, G. Joshi, and H. V. Poor, “Tackling the objective inconsistency problem in heterogeneous federated optimization,” Proc. NeurIPS, vol. 33, pp. 7611–7623, 2020.

- [19] T. Lin, S. U. Stich, L. Barba, D. Dmitriev, and M. Jaggi, “Dynamic model pruning with feedback,” in Proc. ICLR, 2020.

- [20] Y. Jiang, S. Wang, V. Valls, B. J. Ko, W.-H. Lee, K. K. Leung, and L. Tassiulas, “Model pruning enables efficient federated learning on edge devices,” IEEE Trans. Neural Netw. Learn. Syst., pp. 1–13, Apr. 2022.

- [21] Z. Zhu, Y. Shi, J. Luo, F. Wang, C. Peng, P. Fan, and K. B. Letaief, “Fedlp: Layer-wise pruning mechanism for communication-computation efficient federated learning,” arXiv preprint arXiv:2303.06360, 2023.

- [22] S. Liu, G. Yu, R. Yin, and J. Yuan, “Adaptive network pruning for wireless federated learning,” IEEE Wireless Commun. Lett., vol. 10, no. 7, pp. 1572–1576, 2021.

- [23] S. Liu, G. Yu, R. Yin, J. Yuan, L. Shen, and C. Liu, “Joint model pruning and device selection for communication-efficient federated edge learning,” IEEE Trans. Commun., vol. 70, no. 1, pp. 231–244, Jan. 2022.

- [24] J. Ren, W. Ni, and H. Tian, “Toward communication-learning trade-off for federated learning at the network edge,” IEEE Commun. Lett., vol. 26, no. 8, pp. 1858–1862, Aug. 2022.

- [25] “3rd Generation Partnership Project; Technical Specification Group Core Network and Terminals; Proximity-services in 5G System protocol aspects; Stage 3,” 3GPP TS 24.554 V18.0.0, Rel. 18, Mar. 2023.

- [26] J. Frankle and M. Carbin, “The lottery ticket hypothesis: Finding sparse, trainable neural networks,” in Proc. ICLR, 2019.

- [27] M. F. Pervej, R. Jin, and H. Dai, “Resource constrained vehicular edge federated learning with highly mobile connected vehicles,” IEEE J. Sel. Areas Commun., vol. 41, no. 6, pp. 1825–1844, June 2023.

- [28] S. U. Stich, J.-B. Cordonnier, and M. Jaggi, “Sparsified sgd with memory,” in Proc. NeurIPS, 2018.

- [29] L. Bottou, F. E. Curtis, and J. Nocedal, “Optimization methods for large-scale machine learning,” Siam Review, vol. 60, no. 2, pp. 223–311, 2018.

- [30] S. Diamond and S. Boyd, “Cvxpy: A python-embedded modeling language for convex optimization,” J. Mach. Learn. Res., vol. 17, no. 1, pp. 2909–2913, 2016.

- [31] E. Che, H. D. Tuan, and H. H. Nguyen, “Joint optimization of cooperative beamforming and relay assignment in multi-user wireless relay networks,” IEEE Trans. Wireless Comm., vol. 13, no. 10, pp. 5481–5495, 2014.

- [32] Y. Sun, D. Xu, D. W. K. Ng, L. Dai, and R. Schober, “Optimal 3d-trajectory design and resource allocation for solar-powered uav communication systems,” IEEE Trans. Commun., vol. 67, no. 6, pp. 4281–4298, 2019.

- [33] A. Krizhevsky, “Learning multiple layers of features from tiny images,” Apr. 2009. [Online]. Available: https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf

- [34] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proc. IEEE CVPR, 2016.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/5744ed22-bff9-4198-b771-79665f60e82d/MFPervej.jpg) |

Md Ferdous Pervej (M’23) received the B.Sc. degree in electronics and telecommunication engineering from the Rajshahi University of Engineering and Technology, Rajshahi, Bangladesh, in 2014, and the M.S. and Ph.D. degrees in electrical engineering from Utah State University, Logan, UT, USA, in and North Carolina State University, Raleigh, NC, USA, in , respectively. He was with Mitsubishi Electric Research Laboratories, Cambridge, MA, in summer , and with Futurewei Wireless Research and Standards, Schaumburg, IL from May to December . He is currently a Postdoctoral Scholar – Research Associate in the Ming Hsieh Department of Electrical and Computer Engineering at the University of Southern California, Los Angeles, CA, USA. His primary research interests are wireless networks, distributed machine learning, vehicle-to-everything communication, edge caching/computing, and machine learning for wireless networks. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/5744ed22-bff9-4198-b771-79665f60e82d/x27.png) |

Richeng Jin (M’21) received the B.S. degree in information and communication engineering from Zhejiang University, Hangzhou, China, in 2015, and the Ph.D. degree in electrical engineering from North Carolina State University, Raleigh, NC, USA, in 2020. He was a Postdoctoral Researcher in electrical and computer engineering at North Carolina State University, Raleigh, NC, USA, from 2021 to 2022. He is currently a faculty member of the department of information and communication engineering with Zhejiang University, Hangzhou, China. His research interests are in the general area of wireless AI, game theory, and security and privacy in machine learning/artificial intelligence and wireless networks. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/5744ed22-bff9-4198-b771-79665f60e82d/Huaiyu_Dai_IEEE.jpg) |

Huaiyu Dai (F’17) received the B.E. and M.S. degrees in electrical engineering from Tsinghua University, Beijing, China, in 1996 and 1998, respectively, and the Ph.D. degree in electrical engineering from Princeton University, Princeton, NJ in 2002. He was with Bell Labs, Lucent Technologies, Holmdel, NJ, in summer 2000, and with AT&T Labs-Research, Middletown, NJ, in summer 2001. He is currently a Professor of Electrical and Computer Engineering with NC State University, Raleigh, holding the title of University Faculty Scholar. His research interests are in the general areas of communications, signal processing, networking, and computing. His current research focuses on machine learning and artificial intelligence for communications and networking, multilayer and interdependent networks, dynamic spectrum access and sharing, as well as security and privacy issues in the above systems. He has served as an area editor for IEEE Transactions on Communications, a member of the Executive Editorial Committee for IEEE Transactions on Wireless Communications, and an editor for IEEE Transactions on Signal Processing. Currently he serves as an area editor for IEEE Transactions on Wireless Communications. He was a co-recipient of best paper awards at 2010 IEEE International Conference on Mobile Ad-hoc and Sensor Systems (MASS 2010), 2016 IEEE INFOCOM BIGSECURITY Workshop, and 2017 IEEE International Conference on Communications (ICC 2017). He received Qualcomm Faculty Award in 2019. |

Supplementary Materials

Additional Notations: Analogous to our previous notations, we express the pruned virtual models at different hierarchy levels as , , and . Moreover, , and .

Appendix A Proof of Theorem 1

Theorem 1.

When the assumptions in Section III-A hold and , we have

| (56) |

where , , and is the client’s CPU frequency in the wireless factors. Besides, the terms , , and are

| (57) | ||||

| (58) | ||||

| (59) | ||||

| (60) | ||||

| (61) |

Proof.

Since there are clients and we consider the virtual models at different hierarchy levels, we start the convergence proof assuming the global model is the weighted combination of all these clients. After that, we break down our derivations for different hierarchy levels based on our proposed PHFL algorithm. Note that this is also a standard practice in the literature [13, 9].

First, let us write the update rule for the global model as

| (62) |

where . As such, we write

| (63) |

where stems from -smoothness assumption. For the third term in (63), we can write

| (64) |

where .

Plugging (A) into (63) and taking expectation over both sides, we get

| (65) |

We simply the third term in (65) as follows:

| (66) | |||

where follows from the independence of client selection and SGD. Besides, we define in .

The second term in (65) can be simplified as follows:

| (67) |

where comes from the definition of variance, follows from Jensen inequality, i.e., , stems from the fact that and is due to the bounded stochastic gradient assumption.

Plugging (66) and (A) in (65), we get

| (68) | |||

Note that, when , . Therefore, we can drop the fourth term in (68).

To that end, rearranging the terms in (68), then dividing both sides by , taking expectations on both sides and averaging over time, we get the following

| (69) |

where we use the notation to represent for simplicity.

The last term in (A) can be expanded as follows:

| (70) | |||

where we used the notation and to represent the model of the VC, sBS and mBS, respectively, that UE is connected to. Furthermore, and stem from the fact that . Moreover, arises from the -smoothness and divergence between the loss functions assumptions.

To this end, we bound the terms to using our aggregation rules and definitions. First, let us focus on the term

| (71) |

where in , we trace back to the nearest synchronization iteration where and . Besides, we use the definition of pruning ratio in .

Now, we calculate the bound for the term as follows

| (72) |

where () arises from Jensen inequality and appears from the same reasoning as in . Using similar steps, we write the following:

| (73) | ||||

| (74) | ||||

| (75) |

When , , and , we have . As such, using the fact that and definition of , we get

| (77) |

∎

Appendix B Proof of Lemma 1

Lemma 1.

When , the average difference between the VC and local model parameters, i.e., the term of (56), is upper bounded as

| (78) |

where .

Proof.

| (79) |

where and comes from .

Note that the first term in (B) comes from the VC receiving a weighted combination of the pruned models of its associated UEs. For this term, we have

| (80) |

where is true since and comes from .

As such, the first term is bounded as

| (81) |

For the second term of (B), we have

| (82) |

Lemma 5.

| (83) |

where .

Lemma 6.

| (84) |

where .

Now multiplying both sides of (85) by , we get

| (86) |

When , we have , and the previous assumption of is automatically satisfied. As such, we write

| (87) |

B-A Missing Proof of Lemma 5

| (88) |

where stems from the fact that , where for any and . Besides, is true due to the time independence of the SGD assumption. Furthermore, in and , we use the bounded divergence of the mini-batch gradient assumption and client independence property.

B-B Missing Proof of Lemma 6

| (89) |

where . ∎

Appendix C Proof of Lemma 2

Lemma 2.

When , the difference between the sBS model parameters and VC model parameters, i.e., the term of (56), is upper bounded as

| (90) |

where .

Proof.

| (91) | ||||

where and the inequalities in the last term arise from Jensen inequality.

For the first term in (C), we have

| (92) |

where in we use the fact that and stems from following similar steps as in (73) and (72).

As such we derive the upper bound of the first term of (C) as

| (93) |

For the second term in (C), we have

| (94) |

Lemma 7.

| (95) | |||

| (96) |

where .

Lemma 8.

| (97) |

C-A Missing Proof of Lemma 7

| (99) |

where .

C-B Missing Proof of Lemma 8

| (100) |

which concludes the proof of Lemma 8. ∎

Appendix D Proof of Lemma 3

Lemma 3.

When , the average difference between the sBS and mBS model parameters, i.e., the term of (56), is upper bounded as

| (101) |

where .

Proof.

| (102) |

where and the inequalities in the last term arise from Jensen inequality.

For the first term in (D), we have

| (103) |

where in we use the fact that . Moreover, appears following similar steps as in (74) and (73).

As such, we have

| (104) |

For the second term in (D), we have

| (105) |

Lemma 9.

| (106) |

where .

Lemma 10.

| (107) |

D-A Missing Proof of Lemma 9

| (109) |

where .

D-B Missing Proof of Lemma 10

| (110) |

∎

Appendix E Proof of Lemma 4

Lemma 4.

When , the average difference between the global and the mBS models, i.e., the term, is bounded as follows:

| (111) |

where .

Proof.

| (112) |

where the last inequality follows from Jensen inequality.

For the first term of (E), we have

| (113) |

where in , we use the fact that and stems from . Moreover, the last inequality appears following steps as in (75) and (74).

As such, we simplify the first term as

| (114) |

The second term of (E) is further simplified as follows:

| (115) |

Lemma 11.

Lemma 12.

The second term of (E) is bounded as follow:

| (117) |

E-A Missing Proof of Lemma 11

| (119) |

where .

E-B Missing Proof of Lemma 12

| (120) | |||

| (121) |

∎