Hierarchical Deep Counterfactual Regret Minimization

Abstract

Imperfect Information Games (IIGs) offer robust models for scenarios where decision-makers face uncertainty or lack complete information. Counterfactual Regret Minimization (CFR) has been one of the most successful family of algorithms for tackling IIGs. The integration of skill-based strategy learning with CFR could potentially mirror more human-like decision-making process and enhance the learning performance for complex IIGs. It enables the learning of a hierarchical strategy, wherein low-level components represent skills for solving subgames and the high-level component manages the transition between skills. In this paper, we introduce the first hierarchical version of Deep CFR (HDCFR), an innovative method that boosts learning efficiency in tasks involving extensively large state spaces and deep game trees. A notable advantage of HDCFR over previous works is its ability to facilitate learning with predefined (human) expertise and foster the acquisition of skills that can be transferred to similar tasks. To achieve this, we initially construct our algorithm on a tabular setting, encompassing hierarchical CFR updating rules and a variance-reduced Monte Carlo sampling extension. Notably, we offer the theoretical justifications, including the convergence rate of the proposed updating rule, the unbiasedness of the Monte Carlo regret estimator, and ideal criteria for effective variance reduction. Then, we employ neural networks as function approximators and develop deep learning objectives to adapt our proposed algorithms for large-scale tasks, while maintaining the theoretical support.

1 Introduction

Imperfect Information Games (IIGs) can be used to model various application domains where decision-makers have incomplete or uncertain information about the state of the environment, such as auctions (Noe et al. (2012)), diplomacy (Bakhtin et al. (2022)), cybersecurity (Kakkad et al. (2019)), etc. As one of the most successful family of algorithms for IIGs, variants of tabular Counterfactual Regret Minimization (CFR) (Zinkevich et al. (2007)) have been employed in all recent milestones of Poker AI which serves as a quintessential benchmark for IIGs (Bowling et al. (2015); Moravčík et al. (2017); Brown and Sandholm (2018)). However, implementing tabular CFR in domains characterized by an exceedingly large state space necessitates the use of abstraction techniques that group similar states together (Ganzfried and Sandholm (2014a); Sandholm (2015)), which requires extensive domain-specific expertise. To address this challenge, researchers have proposed deep learning extensions of CFR (Brown et al. (2019); Li et al. (2020); Steinberger et al. (2020)), which leverage neural networks as function approximations, enabling generalization across the state space.

On the other hand, professionals in a field typically possess robust domain-specific skills, which they can employ to compose comprehensive strategies for tackling diverse and intricate task scenarios. Therefore, integrating the skill-based strategy learning with CFR has the potential to enable human-like decision-making and enhance the learning performance for complex tasks with extended decision horizons, which is still an open problem. To accomplish this, the agent needs to learn a hierarchical strategy, in which the low-level components represent specific skills, and the high-level component coordinates the transition among skills. Notably, this is akin to the option framework (Sutton et al. (1999)) proposed in the context of reinforcement learning (RL), which enables learning or planning at multiple levels of temporal abstractions. Further, it’s worth noting that a hierarchical strategy is more interpretable, allowing humans to identify specific subcases where AI agents struggle. Targeted improvements can then be made by injecting critical skills that are defined by experts or learned through well-developed subgame-solving techniques (Moravcik et al. (2016); Brown and Sandholm (2017); Brown et al. (2018)). Also, skills acquired in one task, being more adaptable than the overarching strategy, can potentially be transferred to similar tasks to improve the learning in new IIGs.

In this paper, we introduce the first hierarchical extension of Deep CFR (HDCFR), a novel approach that significantly enhances learning efficiency in tasks with exceptionally large state spaces and deep game trees and enables learning with transferred knowledge. To achieve this, we establish the theoretical foundations of our algorithm in the tabular setting, drawing inspiration from vanilla CFR (Zinkevich et al. (2007)) and Variance-Reduced Monte Carlo CFR (VR-MCCFR) (Davis et al. (2020)). Then, building on these results, we introduce deep learning objectives to ensure the scalability of HDCFR. In particular, our contributions are as follows. (1) We propose to learn a hierarchical strategy for each player, which contains low-level strategies to encode skills (represented as sequences of primitive actions) and a high-level strategy for skill selection. We provide formal definitions for the hierarchical strategy within the IIG model, and provide extended CFR updating rules for strategy learning (i.e., HCFR) with convergence guarantees. (2) Vanilla CFR requires a perfect game tree model and a full traverse of the game tree in each training iteration, which can limit its use especially for large-scale tasks. Thus, we propose a sample-based model-free extension of HCFR, for which the key elements include unbiased Monte Carlo estimators of counterfactual regrets and a hierarchical baseline function for effective variance reduction. Note that controlling sample variance is vital for tasks with extended decision horizons, which our algorithm targets. Theoretical justifications are provided for each element of our design. (3) We present HDCFR, where the hierarchical strategy, regret, and baseline are approximated with Neural Networks, and the training objectives are demonstrated to be equivalent to those proposed in the tabular setting, i.e., (1) and (2), when optimality is achieved, thereby preserving the theoretical results while enjoying scalability.

2 Background

This section presents the background of our work, which includes two key concepts: Counterfactual Regret Minimization (CFR) and the option framework.

2.1 Counterfactual Regret Minimization

First, we introduce the extensive game model with imperfect information (Osborne and Rubinstein (1994)). In an extensive game, players make sequential moves represented by a game tree. At each non-terminal state, the player in control chooses from a set of available actions. At each terminal state, each player receives a payoff. In the presence of imperfect information, a player may not know which state they are in. For instance, in a poker game, a player sees its own cards and all cards laid on the table but not the opponents’ hands. Therefore, at each time step, each player makes decisions based on an information set – a collection of states that the controlling player cannot distinguish. Formally, the extensive game model can be represented by a tuple . is a finite set of players. is a set of histories, where each history is a sequence of actions of all players from the start of the game and corresponds to a game state. For , we write if is a prefix of . The set of actions available at is denoted as . Suppose , then a successor history of . Histories with no successors are terminal histories . maps each non-terminal history to the player that chooses the next action, where is the chance player that acts according to a predefined distribution . This chance player represents the environment’s inherent randomness, such as using a dice roll to decide the starting player. The utility function assigns a payoff for every player at each terminal history. For a player , is a partition of and each element is an information set as introduced above. also represents the observable information for shared by all histories . Due to the indistinguishability, we have . Notably, our work focus on the two-player zero-sum setting, where and , like previous works on CFR (Zinkevich et al. (2007); Brown et al. (2019); Davis et al. (2020)).

Every player selects actions according to a strategy that maps each information set to a distribution over actions in . Note that . The learning target of CFR is a Nash Equilibrium (NE) strategy profile , where no player has an incentive to deviate from their specified strategy. That is, , where represents the players other than , is the expected payoff to player of and defined as follows:

| (1) |

denotes the information set containing , and is the reach probability of when employing . can be decomposed as , where . In addition, represents the reach probability of the information set .

CFR proposed in Zinkevich et al. (2007) is an iterative algorithm which accumulates the counterfactual regret for each player at each information set . This regret informs the strategy determination. is defined as follows:

| (2) | |||

where is the strategy profile at iteration , is identical to except that the player always chooses the action at , denotes the reach probability from to which equals if and 0 otherwise. Intuitively, represents the expected regret of not choosing action at . With , the next strategy profile is acquired with regret matching (Abernethy et al. (2011)), which sets probabilities proportional to the positive regrets: . Defining the average strategy such that , CFR guarantees that the strategy profile converges to a Nash Equilibrium as .

2.2 The Option Framework

As proposed in Sutton et al. (1999), an option can be described with three components: an initiation set , an intra-option policy , and a termination function . , , represent the state, action, option space, respectively. An option is available in state if and only if . Once the option is taken, actions are selected according to until it terminates stochastically according to , i.e., the termination probability at the current state. A new option will be activated by a high-level policy once the previous option terminates. In this way, and constitute a hierarchical policy for a certain task. Hierarchical policies tend to have superior performance on complex long-horizon tasks which can be broken down into and processed as a series of subtasks.

The one-step option framework (Li et al. (2021a)) is proposed to learn the hierarchical policy without the extra need to justify the exact beginning and breaking condition of each option, i.e., and . First, it assumes that each option is available at each state, i.e., . Second, it redefines the high-level and low-level policies as (: the option in the previous timestep) and , respectively, and implementing them as end-to-end neural networks. In particular, the Multi-Head Attention (MHA) mechanism (Vaswani et al. (2017)) is adopted in , which enables it to temporally extend options in the absence of the termination function . Intuitively, if still fits , will assign a larger attention weight to and thus has a tendency to continue with it; otherwise, a new option with better compatibility will be sampled. Then, the option is sampled at each timestep rather than after the previous option terminates. With this simplified framework, we only need to train the hierarchical policy, i.e., and .

The option framework is proposed within the realm of RL as opposed to CFR; however, these two fields are closely related. The authors of (Srinivasan et al. (2018); Fu et al. (2022)) propose actor-critic algorithms for multi-agent adversarial games with partial observability and show that they are indeed a form of MCCFR for IIGs. This insight inspires our adoption of the one-step option framework to create a hierarchical extension for CFR.

3 Methodology

In this work, we aim at extending CFR to learn a hierarchical strategy in the form of Neural Networks (NNs) to solve IIGs with extensive state spaces and deep game trees. The high-level and low-level strategies serve distinct roles in the learning system, where low-level components represent various skills composed of primitive actions, and the high-level component orchestrates their utilization, thus they should be defined and learned as different functions. In the absence of prior research on hierarchical extensions of CFR, we establish our work’s theoretical foundations by drawing upon tabular CFR algorithms. Firstly, we define the hierarchical strategy and hierarchical counterfactual regret, and provide corresponding updating rules along with the convergence guarantee. Subsequently, we propose that an unbiased estimation of the hierarchical counterfactual regret can be achieved through Monte Carlo sampling (Lanctot et al. (2009)) and that the sample variance can be reduced by introducing a hierarchical baseline function. This Low-Variance Monte Carlo sampling extension enables our algorithm to to tackle domains with vast or unknown game trees (i.e., the model-free setting) - where standard CFR traversal is impractical - without compromising the convergence rate. Finally, with the theoretical foundations established in the tabular setting, we develop our algorithm, HDCFR, by approximating these hierarchical functions using NNs and training them with novel objective functions. These training objectives are demonstrated to be consistent with the updating rules in the tabular case when optimality is achieved, thereby the theoretical support is maintained.

3.1 Preliminaries

At a game state , the player makes its -th decision by selecting a hierarchical action , i.e., the option (a.k.a., skill) and primitive action, based on the observable information for player at , including the private observations and decision sequence of player , and the public information for all players (defined by the game). All histories that share the same observable information are considered indistinguishable to player and belong to the same information set . Thus, in this work, we also use to denote observations upon which player makes decisions. With the hierarchical actions, we can redefine the extensive game model as . Here, , , , and retain the definitions in Section 2.1. includes all the possible histories, each of which is a sequence of hierarchical actions of all players starting from the first time step. , where and represent the options and primitive actions available at respectively. , where is the predefined distribution in the original game model and (a dummy variable) is the only option choice for the chance player.

The learning target for player is a hierarchical strategy , which, by the chain rule, can be decomposed as . Note that although includes , we follow the conditional independence assumption of the one-step option framework (Li et al. (2021a); Zhang and Whiteson (2019)) which states that and , thus only () is used for () to determine (). With the hierarchical strategy, we can redefine the expected payoff and reach probability in Equation (1) by simply substituting with , based on which we have the definition of the average overall regret of player at iteration : (From this point forward, refers to a certain learning iteration rather than a time step within an iteration.)

| (3) |

The following theorem (Theorem 2 from Zinkevich et al. (2007)) provides a connection between the average overall regret and the Nash Equilibrium solution.

Theorem 1

In a two-player zero-sum game at time , if both players’ average overall regret is less than , then is a -Nash Equilibrium.

Here, the average strategy is defined as ():

| (4) |

An -Nash Equilibrium approximates a Nash Equilibrium, with the property that . Thus, measures the distance of to the Nash Equilibrium in expected payoff. Then, according to Theorem 1, as (), converges to NE. Notably, Theorem 1 can be applied directly to our hierarchical setting, as the only difference from the original setting related to Theorem 1 is the replacement of with in and . This difference can be viewed as employing a new action space (i.e., ) and is independent of using the option framework (i.e., the hierarchical extension).

3.2 Hierarchical Counterfactual Regret Minimization

One straightforward way to learn a hierarchical strategy is to view as a unified strategy defined on a new action set , and then apply CFR directly to learn it. However, this approach does not allow for the explicit separation and utilization of the high-level and low-level components, such as extracting and reusing skills (i.e., low-level parts) or initializing them with human knowledge. In this section, we treat and as distinct functions and introduce Hierarchical CFR (HCFR) to separately learn and . Additionally, we provide the convergence guarantee for HCFR.

Taking inspiration from CFR (Zinkevich et al. (2007)), we derive an upper bound for the average overall regret , which is given by the sum of high-level and low-level counterfactual regrets at each information set, namely and . In this way, we can minimize and for each individual independently by adjusting and respectively, and in doing so, minimize the average overall regret. The learning of the high-level and low-level strategy is also decoupled.

Theorem 2

With the following definitions of high-level and low-level counterfactual regrets:

| (5) | ||||

we have .

Here, , is the expected payoff for choosing option at , is a hierarchical strategy profile identical to except that the intra-option (i.e., low-level) strategy of option at is always choosing . Detailed proof of Theorem 2 is available in Appendix A.

After obtaining and , we can compute the high-level and low-level strategies for the next iteration as follows: ()

| (6) | ||||

In this way, the counterfactual regrets and strategies are calculated alternatively (i.e., ) with Equation (5) and (6) for iterations until convergence (i.e., ). The convergence rate of this algorithm is presented in the following theorem:

Theorem 3

If player selects options and actions according to Equation (6), then , where , is the number of information sets for player , , .

Thus, as , . Additionally, the convergence rate is , which is the same as CFR (Zinkevich et al. (2007)). Thus, the introduction of the option framework does not compromise the convergence guarantee, while allowing skill-based strategy learning. The proof of Theorem 3 is provided in Appendix B.

With and , we can compute the average high-level and low-level strategies as:

| (7) |

where . Then, we can state:

Proposition 1

If both players sequentially use their average high-level and low-level strategies following the one-step option model, i.e., , selecting an option according to and then selecting the action according to the corresponding intra-option strategy , the resulting strategy profile converges to a Nash Equilibrium as .

3.3 Low-Variance Monte Carlo Sampling Extension

In vanilla CFR, counterfactual regrets and immediate strategies are updated for every information set during each iteration. This necessitates a complete traversal of the game tree, which becomes infeasible for large-scale game models. Monte Carlo CFR (MCCFR) (Lanctot et al. (2009)) is a framework that allows CFR to only update regrets/strategies on part of the tree for a single agent (i.e., the traverser) at each iteration. MCCFR features two sampling scheme variants: External Sampling (ES) and Outcome Sampling (OS). In OS, regrets/strategies are updated for information sets within a single trajectory that is generated by sampling one action at each decision point. In ES, a single action is sampled for non-traverser agents, while all actions of the traverser are explored, leading to updates over multiple trajectories. ES relies on perfect game models for backtracking and becomes impractical as the horizon increases, with which the search breadth grows exponentially. Our algorithm is specifically designed for domains with deep game trees, leading us to adopt OS as the sampling scheme. Nevertheless, OS is challenged by high sample variance, an issue that exacerbates with an increasing decision-making horizon. Therefore, in this section, we further complete our algorithm with a low-variance outcome sampling extension.

MCCFR’s main insight is substituting the counterfactual regrets with unbiased estimations, while maintaining the other learning rules (as in Section 3.2). This allows for updating functions only on information sets within the sampled trajectories, bypassing the need to traverse the full game tree. With MCCFR, the average overall regret as at the same convergence rate as vanilla CFR, with high probability, as stated in Theorem 5 of Lanctot et al. (2009). Therefore, to apply the Monte Carlo extension, we propose unbiased estimations of and , .

First, we define and with the immediate counterfactual regrets and values : ()

| (8) | ||||

The equivalence between Equation (8) and (5) is proved in Appendix D.

Next, we propose to collect trajectories with the sample strategy at each iteration , and compute the corresponding sampled immediate counterfactual regrets and values as follows:

| (9) | ||||

Here, inspired by Davis et al. (2020), and are incorporated with the baseline function for variance reduction: ()

| (10) | ||||

where is the indicator function. Accordingly, and are defined as and . (For superscripts on and : use when the agent is in state or for high-level option choices, and in state for low-level action decisions.)

Theorem 4

For all , we have:

| (11) |

Therefore, we can acquire unbiased estimations of by substituting with in Equation (8). This theorem is proved in Appendix E. Notably, Theorem 4 doesn’t prescribe any specific form for the baseline function . Yet, the baseline design can affect the sample variance of these unbiased estimators. As posited in Gibson et al. (2012), given a fixed , estimators with reduced variance necessitate fewer iterations to converge to an -Nash equilibrium. Hence, we propose the following ideal criteria for the baseline function to minimize the sample variance:

Theorem 5

If and , for all , we have:

| (12) |

Consequently, and are minimized with respect to for all , , .

The proof can be found in Appendix F. The ideal criteria for the baseline function proposed in Theorem 5 is incorporated into our objective design in Section 3.4.

To sum up, by employing the immediate counterfactual regret estimators shown as Equation (9) and (10), and making appropriate choices for the baseline function (introduced in Section 3.4), we are able to bolster the adaptability and learning efficiency of our method through a low-variance outcome Monte Carlo sampling extension.

3.4 Hierarchical Deep Counterfactual Regret Minimization

Building upon theoretical foundations discussed in Section 3.2 and 3.3, we now present our algorithm – HDCFR. While the algorithm outline is similar to tabular CFR algorithms (Kakkad et al. (2019); Davis et al. (2020)), HDCFR differentiates itself by introducing NNs as function approximators for the counterfactual regret , average strategy , and baseline . These approximations enable HDCFR to handle large-scale state spaces and are trained with specially-designed objective functions. In this section, we introduce the deep learning objectives, demonstrate their alignment with the theoretical underpinnings provided in Section 3.2 and 3.3, and then present the complete algorithm in pseudo-code form.

Three types of networks are trained: the counterfactual regret networks , average strategy networks , and baseline network . Notably, we do not maintain the counterfactual values and baselines for each player. Instead, we leverage the property of two-player zero-sum games where the payoff of the two players offsets each other. Thus, we track the payoff for player 1 and use the opposite value as the payoff for player 2. That is, , .

First, the counterfactual regret networks are trained by minimizing the following two objectives, denoted as and , respectively.

| (13) |

Here, represents a memory containing the sampled immediate counterfactual regrets gathered from iterations 1 to . As mentioned in Section 3.3, the counterfactual regrets (i.e., and ) should be replaced with their unbiased estimations acquired via Monte Carlo sampling. As a justification of our objective design, we claim:

Proposition 2

Let and denote the minimal points of and , respectively. For all , and yield unbiased estimations of the true counterfactual regrets scaled by positive constant factors, i.e., and .

Please refer to Appendix G for the proof. Observe that the counterfactual regrets are employed solely for calculating the strategy in the subsequent iteration, as per Equation (6). The positive scale factors and do not impact this calculation, as they appear in both the numerator and denominator and cancel each other out. Thus, and can be used in place of and .

Second, the average strategy networks are learned based on the immediate strategies from iteration 1 to . Specifically, they are learned by minimizing and :

| (14) |

Notably, in our algorithm, the sampling scheme is specially designed to fulfill the subsequent proposition. Define as the sample strategy profile at iteration when is the traverser, meaning exploration occurs during ’s decision-making. is a uniformly random strategy when , and equals to when (i.e., the other player). Furthermore, samples in are gathered when the traverser is (so samples with ). With this scheme, we assert: (refer to Appendix H for proof)

Proposition 3

Let and represent the minimal points of and , respectively, and define as the partition of at iteration . If is a collection of random samples with the sampling scheme defined above, then and , , as ().

Last, at the end of each iteration, we determine the baseline function for the subsequent iteration to reduce sample variance, which is achieved by minimizing the following objective:

| (15) |

Here, is a memory buffer including trajectories collected at iteration when player is the traverser. For each trajectory, we compute and record the sampled baseline values , which are defined as: ( if )

| (16) | ||||

As for the high-level baseline function , for simplicity, it is not trained as another network but defined based on as: . With the specially-designed sampled baseline functions and the relation between and , we have:

Proposition 4

Denote as the minimal point of and consider trajectories in as independent and identically distributed random samples, then we have and , , as .

This proposition implies that the ideal criteria for the baseline function (i.e., Theorem 5) can be achieved at the optimal point of . For a detailed proof, please refer to Appendix I.

To sum up, we present the pseudo code of HDCFR as Algorithm 1 and 2. There are in total iterations. (1) At each iteration , the two players take turns being the traverser and collecting trajectories for training (Line 6 – 11 of Algorithm 1). Each trajectory is obtained via outcome Monte Carlo sampling, detailed as Algorithm 2. In the course of sampling, immediate counterfactual regrets for the traverser (i.e., ) are calculated using Equation (9) and (10) and stored in the regret buffer ; while the strategies for the non-traverser (i.e., ) are derived from according to Equation (6) and saved in the strategy buffer . (2) At the end of iteration , the counterfactual regret networks are trained based on samples stored in the memory , according to Equation (13), in order to obtain (Line 12 – 14 of Algorithm 1). defines , based on which we can update the baseline function to according to Equation (15) (Line 22 – 30 of Algorithm 1). and are then utilized for the next iteration. (3) After iterations, a hierarchical strategy profile is learned based on samples in using Equation (14) (Line 17 – 19 of Algorithm 1). The training result is then returned as an approximate Nash Equilibrium strategy profile.

4 Evaluation and Main Results

| Benchmark | Leduc | Leduc_10 | Leduc_15 | Leduc_20 | FHP | FHP_10 |

| Stack Size | 13 | 60 | 80 | 100 | 2000 | 4000 |

| Horizon | 4 | 20 | 30 | 40 | 8 | 20 |

| # of Nodes | 464 | 31814 | 67556 | 113954 |

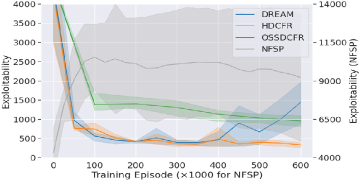

In this section, we present a comprehensive analysis of our proposed HDCFR algorithm. In Section 4.1, we benchmark HDCFR against leading model-free methods for imperfect-information zero-sum games, including DREAM (Steinberger et al. (2020)), OSSDCFR (an outcome-sampling variant of DCFR) (Steinberger (2019); Brown et al. (2019)), and NFSP (Heinrich and Silver (2016)). Notably, like HDCFR, these algorithms do not require task-specific knowledge and can be applied in environments with unknown game tree models (i.e., the model-free setting). For evaluation benchmarks, as a common practice, we select poker games: Leduc (Southey et al. (2005)) and heads-up flop hold’em (FHP) (Brown et al. (2019)). Given its hierarchical design, HDCFR is poised for enhanced performance in tasks demanding extended decision-making horizons. To underscore this, we elevate complexity of the standard poker benchmarks by raising the number of cards and the cap on the total raises and accordingly increasing the initial stack size for each player, compelling agents to strategize over longer horizons. Detailed comparisons among these benchmarks are available in Table 1. Then, in Section 4.2, we conduct an ablation study to highlight the importance of each component within our algorithm and elucidate the impact of key hyperparameters on its performance. Finally, in Section 4.3, we delve into the hierarchical strategy learned by HDCFR. We examine whether the high-level strategy can temporally extend skills and if the low-level ones (i.e., skills) can be transferred to new tasks as expert knowledge injections to aid learning. Notably, we utilize the baseline and benchmark implementation from Steinberger (2020), and provide the codes for HDCFR and necessary resources to reproduce all experimental results of this paper in https://github.com/LucasCJYSDL/HDCFR.

4.1 Comparison with State-of-the-Art Model-free Algorithms for Zero-sum IIGs

For Leduc poker games, we can explicitly compute the best response (BR) function for the learned strategy profile . We then can employ the exploitability of defined as Equation (17) as the learning performance metric. Commonly-used in extensive-form games, exploitability measures the distance from Nash Equilibrium, for which a lower value is preferable. For hold’em poker games (like our benchmarks), exploitability is usually quantified in milli big blinds per game (mbb/g).

| (17) |

In Figure 1, we depict the learning curves of HDCFR and the baselines. Solid lines represent the mean, while shadowed areas indicate the 95% confidence intervals from repeated trials. (1) For CFR-based algorithms, the agent samples 900 trajectories, from the root to a termination state, in each training episode, and visits around game states in the learning process. In contrast, the RL-based NFSP algorithm is trained over more episodes () and the agent visits game states in total during training. However, NFSP consistently underperforms in all benchmarks. Note that NFSP utilizes a separate y-axis. Evidently, NFSP is less sample efficient than the CFR-based algorithms. (2) In the absence of game models, backtracking is not allowed and so the player can sample only one action at each information set, known as outcome sampling, during game tree traversals. Thus, algorithms that require backtracking, like DCFR (Brown et al. (2019)) and DNCFR (Li et al. (2020)), cannot work directly, unless adapted with the outcome sampling scheme. It can be observed that the performance of the resulting algorithm OSSDCFR declines significantly with increasing game complexity, primarily due to the high sample variance. (3) With variance reduction techniques, DREAM achieves comparable performance to HDCFR in simpler scenarios. Yet, HDCFR, owing to its hierarchical structure, excels over DREAM in games with extended horizons, where DREAM struggles to converge. Notably, HDCFR’s superiority becomes more significant as the game complexity increases.

Further, we conducted head-to-head tournaments between HDCFR and each baseline. We select the top three checkpoints for each algorithm, resulting in total nine pairings. Each pair of strategy profiles is competed over 1,000 hands. Table 2 shows the average payoff of HDCFR’s strategy profile (Equation (18)), along with 95% confidence intervals, measured in mbb/g. A higher payoff indicates superior decision-making performance and is therefore preferred.

| (18) |

Observations from Leduc poker games in this table align with conclusions (1)-(3) previously mentioned. To further show the superiority of our algorithm, we compare its performance with baselines on larger-scale FHP games, which boast a game tree exceeding in size. Due to the immense scale of FHP games, computing the best response functions is impractical, so we offer only head-to-head comparison results. Training an instance on FHP games requires roughly seven days using a device with 8 CPU cores (3rd Gen Intel Xeon) and 128 GB RAM. Our implementation leverages the RAY parallel computing framework (Moritz et al. (2018)). Still, we can see that the advantage of HDCFR grows as task difficulty goes up.

| Baseline | DREAM | OSSDCFR | NFSP |

| Leduc | |||

| Leduc_10 | |||

| Leduc_15 | |||

| Leduc_20 | |||

| FHP | |||

| FHP_10 |

4.2 Ablation Analysis

HDCFR integrates the one-step option framework (Section 2.2) and variance-reduced Monte Carlo CFR (Section 3.2 and 3.3). This section offers an ablation analysis highlighting each crucial element of our algorithm: the option framework, variance reduction, Monte Carlo sampling, and CFR.

(1) The key component of the one-step option framework is the Multi-Head Attention (MHA) mechanism which enables the agent to temporarily extend skills and so form a hierarchical policy in the learning process. Without this component in the high-level strategy (NO_MHA in Figure 2(a)), the agent struggles to converge at the final stage, akin to the behavior observed for DREAM in Figure 1(d). (2) Within HDCFR, we incorporate a baseline function to reduce variance. This function proves pivotal for extended-horizon tasks where sampling variance can escalate. Excluding the baseline function from the hierarchical strategy, as marked by NO_BASELINE in Figure 2(a), results in a substantial performance decline. (3) In Monte Carlo sampling, as outlined in Section 3.4, the traverser should use a uniformly random sampling strategy. Yet, for fair comparisons, we employ a weighted average of a uniformly random strategy (with the weight ) and the player’s current strategy (). The controlling weight is set as 0.5, aligning with configuration for the baselines. Figure 2(b) indicates that as increases, approximately there is a correlating rise in learning performance. Notably, our design – utilizing a purely random sampling strategy at , delivers the best result, amplifying the performance depicted in Figure 1(d). Another key aspect of Monte Carlo sampling is the number of sampled trajectories per training episode. According to Figure 2(c), increasing this count facilitates faster convergence in the initial training phase. However, it does not guarantee an improvement in the final model’s performance, and instead it would proportionally increase the overall training time. (4) As indicated by Brown et al. (2019) and Steinberger et al. (2020), slightly modifying the CFR updating rule (Equation (6)), that is, to greedily select the action with the largest regret rather than use a random one when the sum , can speed up the convergence. We adopt the same trick and find that it can improve the convergence speed slightly, as compared to the original setting (CFR_RULE in Figure 2(a)).

4.3 Case Study: Delving into the Learned Hierarchical Strategy

One key benefit of hierarchical learning is the agent’s ability to use prelearned or predefined skills as foundational blocks for strategy learning, which provides a manner for integrating expert knowledge. Even in the absence of domain-specific knowledge, where rule-based skills can’t be provided as expert guidance, we can leverage skills learned from similar scenarios. Skills, functioned as policy segments, often possess greater generality than complete strategies, enabling transferred use. In Figure 3, we demonstrate the transfer of skills from various Leduc games to Leduc_20 and depict the learning outcomes. For comparison, we also present the performance without the transferred skills, labeled as HDCFR. These prelearned skills can either remain static (Figure 3(b)) or be trained with the high-level strategy (Figure 3(a)). When kept static, the agent can focus on mastering its high-level strategy to select among a set of effective skills, resulting in quicker convergence and superior end performance. Notably, the final outcomes in Figure 3(b) are intrinsically tied to the predefined skills and positively correlate with the similarity between the skills’ source task and Leduc_20. On the other hand, if the skills evolve with the high-level strategy, the improvement on the convergence speed may not be obvious, but skills can be more customized for the current task and better performance may be achieved. For instance, with Leduc_15 skills, the peak performance is reached around episode 400; with Leduc skills, training with dynamic ones (Figure 3(a)) yields better results than with static ones (Figure 3(b)). However, for Leduc_20 skills, fixed skills works better. This could be because they originate from the same task, eliminating the need for further adaptation.

| Source Task | Leduc | Leduc_10 | Leduc_15 | Leduc_20 |

| Switch Frequency |

We next delve into an analysis of the learned high-level strategy. As depicted in Figure 3(b), when utilizing fixed skills from various source tasks, corresponding high-level strategies can be acquired. To determine if the high-level strategy promotes the temporal extension of skills – instead of frequently toggling between them – we employ the hierarchical strategy at each node of Leduc_20’s game tree (with 113954 nodes in total). We then calculate the frequency of skill switches in the game tree, considering all potential hands of cards and five repeated experiments. Table 3 presents the mean and 95% confidence intervals for these results. It’s evident that as the decision horizon of the skill’s source task expands, switch frequency diminishes due to prolonged single-skill durations. Notably, for Leduc_20 skills, skill switches between parent and child nodes occur only about 10% of the time. This indicates the agent’s preference for decision-making at an extended-skill level, approximately 10 steps long in average, rather than on individual actions, aligning with our anticipations.

5 Related Work

Counterfactual Regret Minimization (CFR) (Zinkevich et al. (2007)) is an algorithm for learning Nash Equilibria in extensive-form games through iterative self-play. As part of this process, it must traverse the entire game tree on every learning iteration, which is prohibitive for large-scale games. This motivates the development of Monte Carlo CFR (MCCFR) (Lanctot et al. (2009)), which samples trajectories traversing part of the tree to allow for significantly faster iterations. Yet, the variance of Monte Carlo outcome sampling could be an issue, especially for long sample trajectories. The authors of (Schmid et al. (2019); Davis et al. (2020)) then propose to introduce baseline functions for variance reduction. Notably, all methods mentioned above are tabular-based. For games with large state space, domain-specific abstraction schemes (Ganzfried and Sandholm (2014b); Moravčík et al. (2017)) are required to shrink them to a manageable size by clustering states into buckets, which necessitates expert knowledge and is not applicable to all games.

To obviate the need of abstractions, several CFR variants with function approximators have emerged. Pioneering this was Regression CFR (Waugh et al. (2015)), which adopts regression trees to model cumulative regrets but relies on hand-crafted features and full traversals of the game tree. Subsequently, several works (Brown et al. (2019); Li et al. (2020); Steinberger (2019); Li et al. (2021b)) propose to model the cumulative counterfactual regrets and average strategies in MCCFR as neural networks to enhance the scalability. However, all these methods rely on knowledge of the game model to realize backtracking (i.e., sampling multiple actions at an information set) for regret estimation. As a model-free approach, Neural Fictitious Self-Play (NFSP) (Heinrich and Silver (2016)) is the first deep reinforcement learning algorithm to learn a Nash Equilibrium in two-player imperfect information games through self-play. Since its advent, various policy gradient and actor-critic methods have been shown to have similar convergence properties if tuned appropriately (Lanctot et al. (2017); Srinivasan et al. (2018)). However, fictitious play empirically converges slower than CFR-based approaches in many settings. DREAM (Steinberger et al. (2020)) extends DCFR with variance-reduction techniques from Davis et al. (2020) and represents the state-of-the-art in model-free algorithms of this area. Compared with DREAM, our algorithm enables hierarchical learning with (prelearned) skills and empirically show enhanced performance on longer-horizon games.

As another important module of HDCFR, the option framework (Sutton et al. (1999)) enables learning and planning at multiple temporal levels and has been widely adopted in reinforcement learning. Multiple research areas centered on this framework have been developed. Unsupervised Option Discovery aims at discovering skills that are diverse and efficient for downstream task learning without supervision from reward signals, for which algorithms have been proposed for both single-agent (Eysenbach et al. (2019); Jinnai et al. (2020); Chen et al. (2022a)) and collaborative multi-agent scenarios (Chen et al. (2022c, b); Zhang et al. (2022)). Hierarchical Reinforcement Learning (Zhang and Whiteson (2019); Li et al. (2021a)) and Hierarchical Imitation Learning (Jing et al. (2021); Chen et al. (2023a, b)), on the other hand, aim at directly learning a hierarchical policy incorporated with skills, either from interactions with the environment or expert demonstrations. As a pioneering effort to amalgamate options with CFR, HDCFR not only offers a robust theoretical foundation but also demonstrates resilient empirical performance against leading algorithms in zero-sum imperfect-information games.

6 Conclusion

In this research, we present the first hierarchical version of Counterfactual Regret Minimization (CFR) by utilizing the option framework. Initially, we establish its theoretical foundations in a tabular setting, introducing Hierarchical CFR updating rules that are guaranteed to converge. Then, we provide a low-variance Monte Carlo sampling extension for scalable learning in tasks without perfect game models or encompassing deep game trees. Further, we incorporate neural networks as function approximators, devising deep learning objectives that align with the theoretical outcomes in the tabular setting, thereby empowering our HDCFR algorithm to manage vast state spaces. Evaluations in complex two-player zero-sum games show HDCFR’s superiority over leading algorithms in this field and its advantage becomes more significant as the decision horizon increases, underscoring HDCFR’s great potential in tasks involving deep game trees. Moreover, we show empirically that the learned high-level strategy can temporarily extend skills to utilize the hierarchical subtask structures in long-horizon tasks, and the learned skills can be transferred to different tasks, serving as expert knowledge injections to facilitate learning. Finally, our algorithm provides a novel framework to learn with predefined skills in zero-sum IIGs. An interesting future research direction could be interactive learning with human inputs as skills.

References

- Abernethy et al. (2011) J. Abernethy, P. L. Bartlett, and E. Hazan. Blackwell approachability and no-regret learning are equivalent. In Proceedings of the 24th Annual Conference on Learning Theory, pages 27–46. JMLR Workshop and Conference Proceedings, 2011.

- Bakhtin et al. (2022) A. Bakhtin, N. Brown, E. Dinan, G. Farina, C. Flaherty, D. Fried, A. Goff, J. Gray, H. Hu, A. P. Jacob, M. Komeili, K. Konath, M. Kwon, A. Lerer, M. Lewis, A. H. Miller, S. Mitts, A. Renduchintala, S. Roller, D. Rowe, W. Shi, J. Spisak, A. Wei, D. Wu, H. Zhang, and M. Zijlstra. Human-level play in the game of diplomacy by combining language models with strategic reasoning. Science, pages 1067–1074, 2022.

- Bowling et al. (2015) M. Bowling, N. Burch, M. Johanson, and O. Tammelin. Heads-up limit hold’em poker is solved. Science, 347(6218):145–149, 2015.

- Brown and Sandholm (2017) N. Brown and T. Sandholm. Safe and nested subgame solving for imperfect-information games. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems, pages 689–699, 2017.

- Brown and Sandholm (2018) N. Brown and T. Sandholm. Superhuman ai for heads-up no-limit poker: Libratus beats top professionals. Science, 359(6374):418–424, 2018.

- Brown et al. (2018) N. Brown, T. Sandholm, and B. Amos. Depth-limited solving for imperfect-information games. In Proceedings of the 32nd Annual Conference on Neural Information Processing Systems, pages 7674–7685, 2018.

- Brown et al. (2019) N. Brown, A. Lerer, S. Gross, and T. Sandholm. Deep counterfactual regret minimization. In Proceedings of the 36th International Conference on Machine Learning, volume 97 of Proceedings of Machine Learning Research, pages 793–802. PMLR, 2019.

- Chen et al. (2022a) J. Chen, V. Aggarwal, and T. Lan. ODPP: A unified algorithm framework for unsupervised option discovery based on determinantal point process. CoRR, abs/2212.00211, 2022a.

- Chen et al. (2022b) J. Chen, J. Chen, T. Lan, and V. Aggarwal. Learning multi-agent options for tabular reinforcement learning using factor graphs. IEEE Transactions on Artificial Intelligence, pages 1–13, 2022b. doi: 10.1109/tai.2022.3195818.

- Chen et al. (2022c) J. Chen, J. Chen, T. Lan, and V. Aggarwal. Multi-agent covering option discovery based on kronecker product of factor graphs. IEEE Transactions on Artificial Intelligence, 2022c.

- Chen et al. (2023a) J. Chen, T. Lan, and V. Aggarwal. Option-aware adversarial inverse reinforcement learning for robotic control. In 2023 IEEE International Conference on Robotics and Automation (ICRA), pages 5902–5908. IEEE, 2023a.

- Chen et al. (2023b) J. Chen, D. Tamboli, T. Lan, and V. Aggarwal. Multi-task hierarchical adversarial inverse reinforcement learning. In Proceedings of the 40th International Conference on Machine Learning, volume 202 of Proceedings of Machine Learning Research, pages 4895–4920. PMLR, 2023b.

- Davis et al. (2020) T. Davis, M. Schmid, and M. Bowling. Low-variance and zero-variance baselines for extensive-form games. In Proceedings of the 37th International Conference on Machine Learning, volume 119 of Proceedings of Machine Learning Research, pages 2392–2401. PMLR, 2020.

- Eysenbach et al. (2019) B. Eysenbach, A. Gupta, J. Ibarz, and S. Levine. Diversity is all you need: Learning skills without a reward function. In Proceedings of the 7th International Conference on Learning Representations. OpenReview.net, 2019.

- Fu et al. (2022) H. Fu, W. Liu, S. Wu, Y. Wang, T. Yang, K. Li, J. Xing, B. Li, B. Ma, Q. Fu, and W. Yang. Actor-critic policy optimization in a large-scale imperfect-information game. In Proceedings of the 10th International Conference on Learning Representations. OpenReview.net, 2022.

- Ganzfried and Sandholm (2014a) S. Ganzfried and T. Sandholm. Potential-aware imperfect-recall abstraction with earth mover’s distance in imperfect-information games. In Proceedings of the 28th AAAI Conference on Artificial Intelligence, pages 682–690. AAAI Press, 2014a.

- Ganzfried and Sandholm (2014b) S. Ganzfried and T. Sandholm. Potential-aware imperfect-recall abstraction with earth mover’s distance in imperfect-information games. In Proceedings of 28th the AAAI Conference on Artificial Intelligence, volume 28, 2014b.

- Gibson et al. (2012) R. G. Gibson, M. Lanctot, N. Burch, D. Szafron, and M. Bowling. Generalized sampling and variance in counterfactual regret minimization. In Proceedings of the 26th AAAI Conference on Artificial Intelligence. AAAI Press, 2012.

- Heinrich and Silver (2016) J. Heinrich and D. Silver. Deep reinforcement learning from self-play in imperfect-information games. CoRR, abs/1603.01121, 2016.

- Jing et al. (2021) M. Jing, W. Huang, F. Sun, X. Ma, T. Kong, C. Gan, and L. Li. Adversarial option-aware hierarchical imitation learning. In Proceedings of the 38th International Conference on Machine Learning, pages 5097–5106. PMLR, 2021.

- Jinnai et al. (2020) Y. Jinnai, J. W. Park, M. C. Machado, and G. D. Konidaris. Exploration in reinforcement learning with deep covering options. In Proceedings of the 8th International Conference on Learning Representations. OpenReview.net, 2020.

- Kakkad et al. (2019) V. Kakkad, H. Shah, R. Patel, and N. Doshi. A comparative study of applications of game theory in cyber security and cloud computing. Procedia Computer Science, 155:680–685, 2019.

- Lanctot et al. (2009) M. Lanctot, K. Waugh, M. Zinkevich, and M. H. Bowling. Monte carlo sampling for regret minimization in extensive games. In Proceedings of the 23rd Annual Conference on Neural Information Processing Systems, pages 1078–1086. Curran Associates, Inc., 2009.

- Lanctot et al. (2017) M. Lanctot, V. F. Zambaldi, A. Gruslys, A. Lazaridou, K. Tuyls, J. Pérolat, D. Silver, and T. Graepel. A unified game-theoretic approach to multiagent reinforcement learning. In Advances in Neural Information Processing Systems 30, pages 4190–4203, 2017.

- Li et al. (2021a) C. Li, D. Song, and D. Tao. The skill-action architecture: Learning abstract action embeddings for reinforcement learning. In Submissions of the 9th International Conference on Learning Representations, 2021a.

- Li et al. (2020) H. Li, K. Hu, S. Zhang, Y. Qi, and L. Song. Double neural counterfactual regret minimization. In Proceedings of the 8th International Conference on Learning Representations. OpenReview.net, 2020.

- Li et al. (2021b) H. Li, X. Wang, Z. Guo, J. Zhang, and S. Qi. D2cfr: Minimize counterfactual regret with deep dueling neural network. arXiv preprint arXiv:2105.12328, 2021b.

- Moravcik et al. (2016) M. Moravcik, M. Schmid, K. Ha, M. Hladík, and S. J. Gaukrodger. Refining subgames in large imperfect information games. In Proceedings of the 30th AAAI Conference on Artificial Intelligence, pages 572–578. AAAI Press, 2016.

- Moravčík et al. (2017) M. Moravčík, M. Schmid, N. Burch, V. Lisỳ, D. Morrill, N. Bard, T. Davis, K. Waugh, M. Johanson, and M. Bowling. Deepstack: Expert-level artificial intelligence in heads-up no-limit poker. Science, 356(6337):508–513, 2017.

- Moritz et al. (2018) P. Moritz, R. Nishihara, S. Wang, A. Tumanov, R. Liaw, E. Liang, M. Elibol, Z. Yang, W. Paul, M. I. Jordan, and I. Stoica. Ray: A distributed framework for emerging AI applications. In 13th USENIX Symposium on Operating Systems Design and Implementation, pages 561–577. USENIX Association, 2018.

- Noe et al. (2012) T. H. Noe, M. Rebello, and J. Wang. Learning to bid: The design of auctions under uncertainty and adaptation. Games and Economic Behavior, pages 620–636, 2012.

- Osborne and Rubinstein (1994) M. J. Osborne and A. Rubinstein. A course in game theory. MIT press, 1994.

- Sandholm (2015) T. Sandholm. Abstraction for solving large incomplete-information games. In Proceedings of the 29th AAAI Conference on Artificial Intelligence, pages 4127–4131. AAAI Press, 2015.

- Schmid et al. (2019) M. Schmid, N. Burch, M. Lanctot, M. Moravcik, R. Kadlec, and M. Bowling. Variance reduction in monte carlo counterfactual regret minimization (VR-MCCFR) for extensive form games using baselines. In Proceedings of 33rd the AAAI Conference on Artificial Intelligence, pages 2157–2164. AAAI Press, 2019.

- Southey et al. (2005) F. Southey, M. H. Bowling, B. Larson, C. Piccione, N. Burch, D. Billings, and D. C. Rayner. Bayes’ bluff: Opponent modelling in poker. In Proceedings of the 21st Conference in Uncertainty in Artificial Intelligence, pages 550–558, 2005.

- Srinivasan et al. (2018) S. Srinivasan, M. Lanctot, V. F. Zambaldi, J. Pérolat, K. Tuyls, R. Munos, and M. Bowling. Actor-critic policy optimization in partially observable multiagent environments. In Proceedings of the 32nd Annual Conference on Neural Information Processing Systems, pages 3426–3439, 2018.

- Steinberger (2019) E. Steinberger. Single deep counterfactual regret minimization. CoRR, abs/1901.07621, 2019.

- Steinberger (2020) E. Steinberger. Pokerrl. https://github.com/EricSteinberger/DREAM, 2020.

- Steinberger et al. (2020) E. Steinberger, A. Lerer, and N. Brown. DREAM: deep regret minimization with advantage baselines and model-free learning. CoRR, abs/2006.10410, 2020.

- Sutton et al. (1999) R. S. Sutton, D. Precup, and S. Singh. Between mdps and semi-mdps: A framework for temporal abstraction in reinforcement learning. Artificial Intelligence, 112(1):181–211, 1999. ISSN 0004-3702. doi: https://doi.org/10.1016/S0004-3702(99)00052-1.

- Vaswani et al. (2017) A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, L. Kaiser, and I. Polosukhin. Attention is all you need. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems, pages 5998–6008, 2017.

- Waugh et al. (2015) K. Waugh, D. Morrill, J. A. Bagnell, and M. H. Bowling. Solving games with functional regret estimation. In Proceedings of the 29th AAAI Conference on Artificial Intelligence, pages 2138–2145. AAAI Press, 2015.

- Zhang et al. (2022) F. Zhang, C. Jia, Y.-C. Li, L. Yuan, Y. Yu, and Z. Zhang. Discovering generalizable multi-agent coordination skills from multi-task offline data. In Proceedings of the 11th International Conference on Learning Representations, 2022.

- Zhang and Whiteson (2019) S. Zhang and S. Whiteson. DAC: the double actor-critic architecture for learning options. In Proceedings of the 33rd Annual Conference on Neural Information Processing Systems, pages 2010–2020, 2019.

- Zinkevich et al. (2007) M. Zinkevich, M. Johanson, M. H. Bowling, and C. Piccione. Regret minimization in games with incomplete information. In Proceedings of the 21st Annual Conference on Neural Information Processing Systems, pages 1729–1736. Curran Associates, Inc., 2007.

Appendix A Proof of Theorem 2

Define to be the information sets of player reachable from (including ), and to be a strategy profile equal to except that player adopts in the information sets contained in . Then, the average overall regret starting from () can be defined as:

| (19) |

Further, we define to be the set of all possible next information sets of player given that action was just selected at and define , . Then, we have the following lemma:

Lemma 1

Proof

| (20) | ||||

| (21) | ||||

In Equation (20) and (21), we employ the one-step look-ahead expansion (Equation (10) in Zinkevich et al. (2007)) for the second line. At iteration , when player selects a hierarchical action , it will transit to the subsequent information set with a probability of , since only player will act between and according to . According to the definition of the reach probability, (since and are executed by player ) and . Combining Equation (20) and (21), we can get:

| (22) | ||||

In previous derivations, we have repeatedly employed the inequality , which holds for all , as in the last inequality of Equation (20) and (21). By applying this inequality once more to Equation (22), we can obtain Lemma 1.

Lemma 2

Proof We prove this lemma by induction on the height of the information set on the game tree. When the height is 1, i.e., , , then Lemma 1 implies Lemma 2. Now, for the general case:

| (23) | ||||

In the second line, we employ the induction hypothesis. In the third line, we use the following facts: , , and for all distinct . The third fact here is derived from the perfect recall property of the game: all players can recall their previous (hierarchical) actions and the corresponding information sets. Then, because elements from the two sets possess distinct prefixes (i.e., and ).

Last, for the average overall regret, we have , where corresponds to the start of the game tree and . Applying Lemma 2, we can get the theorem: .

Appendix B Proof of Theorem 3

Regret matching can be defined in a domain where a fixed set of actions and a payoff function exist. At each iteration , a distribution over the actions, , is chosen based on the cumulative regret . Specifically, the cumulative regret at iteration for not playing action is defined as:

| (24) |

where is obtained by:

| (25) |

Then, we have the following lemma (Theorem 8 in Zinkevich et al. (2007)):

Lemma 3

, where .

To apply this lemma, we must transform the definitions of and in Equation (5) to a form resembling Equation (24). With Equation (5) and (2), we can get:

| (26) | ||||

Applying the same process on , we can get:

| (27) | ||||

Then, we can apply Lemma 3 and obtain:

| (28) | ||||

Here, is the range of the payoff function for , which covers and . We can directly apply Lemma 3, because the regret matching is adopted at each information set independently as defined in Equation (6). By integrating Equation 28 and Theorem 2, we then get:

| (29) | ||||

where is the number of information sets for player , , .

Appendix C Proof of Proposition 1

According to Theorem 1 and 3, as , , and thus the average strategy converges to a Nash Equilibrium. We claim that .

Proof

| (30) | ||||

Given this equivalence, we can infer that if both players adhere to the one-step option model for each —selecting an option based on and subsequently choosing the action in accordance with the corresponding intra-option strategy , this will result in an approximate NE solution.

Appendix D Proof of Equivalence between Equation (8) and (5)

Appendix E Proof of Theorem 4

Lemma 4

For all :

| (33) | ||||

Proof

| (34) | ||||

Using the definition of in Equation (10) and following the same process as above, we can get the second part of the lemma.

Now, we present the proof of the first part of Theorem 4.

| (35) | ||||

For the second equality, we use the following fact:

| (36) | ||||

Based on Equation (10), . Similar with Equation (36), we can get , which completes the proof of Equation (35).

Lemma 5

For all :

| (37) |

Proof We prove this lemma by induction on the height of on the game tree. For the base case, if , we have:

| (38) | ||||

Here, the first and third equality are due to Lemma 4, and the others are based on the corresponding definitions. Still, for this base case, we have:

| (39) | ||||

where the second equality comes for Equation (38). Then, we can move on to the general case, with the hypothesis that Lemma 5 holds for the nodes lower than on the game tree:

| (40) | ||||

where the induction hypothesis is adopted for the fourth equality. Equation (40) and (39) imply that holds for the general case.

So far, we have proved the first part of Theorem 4, i.e., . The second part, , can be proved with the same process as above based on Lemma 4, so we skip the complete proof and only present the following lemma within it.

Lemma 6

For all :

| (41) |

Appendix F Proof of Theorem 5

Part I:

First, we can apply the law of total variance to , conditioning on (i.e., if is reachable from ), and get:

| (42) | ||||

The first term can be expanded as follows, where the second equality is due to when .

| (43) | ||||

The second term can be converted as follows, based on the fact that (i.e., ) with probability , and (i.e., ) with probability .

| (44) | ||||

Note that and is not affected by , so we focus on in Equation (43). Applying the law of total variance:

| (45) | ||||

Fix , , based on the definition of and Lemma 5. Thus, the second term in Equation (45) is irrelevant to . According to Equation (42)-(45), we conclude that the minimum of with respect to can be achieved when . Following the same process, we can show that the minimum of with respect to can be achieved when .

Lemma 7

If , for all , then

, .

Proof Pick any . Based on Lemma 5, . If , then . It follows that , based on the definitions of and . Now, for any :

| (46) | ||||

Thus, , . Then, it follows that for any , . With the same process as above, we can show the second part of Lemma 7.

Given the discussions above, to complete the proof of Theorem 5, we need to further show that, , if and , for all , we have , for all .

Part II:

Lemma 8

For any and any baseline function :

| (47) | ||||

Proof By conditioning on the option choice at , we apply the law of total variance to :

| (48) | ||||

According to the definition of and the fact that , we have:

| (49) | ||||

Then, we analyze the second term in Equation (48):

| (50) | ||||

Based on Equation (48)-(50), we can get the first part of Lemma 8. The second part can be obtained similarly.

Lemma 8 illustrates the outcome of a single-step lookahead from state . Employing this in an inductive manner, we can derive the complete expansion of on the game tree as the following lemma:

Lemma 9

For any and any baseline function :

| (51) | ||||

Proof We proof this lemma through an induction on the height of on the game tree. For the base case, , then , so . In addition, we have . Thus, the lemma holds for the base case.

For the general case, , we apply Lemma 8 and get:

| (52) | ||||

where the first equality is the result of the sequential use of the two formulas in Lemma 8 and the second equality is based on the definition of . Next, we apply the induction hypothesis on , i.e., a node lower than on the game tree, and get:

| (53) |

By integrating Equation (52) and (53), we can get:

| (54) | ||||

For the second equality, we use the definitions of and , and the fact that they equal 1 when .

Before moving to the final proof, we introduce another lemma as follows.

Lemma 10

For any and any baseline function :

| (55) | ||||

Proof Applying the fact to both variance terms of Equation (51) and after rearranging the terms, we arrive at the following expression:

| (56) | ||||

Note that the equation above holds for any . Then, to get an upper bound of , we go back to Lemma 8 and apply Equation (56) and to its first and second term, respectively. After rearranging, we can get:

| (57) | ||||

We note that the second term of Equation (55) can be obtained by combining the last two terms of Equation (57). The second and third term of Equation (57) correspond to the sum over and , respectively.

Based on the discussions above, we give out the upper bound of as the following lemma:

Lemma 11

For any and any baseline function :

| (58) | ||||

Proof

| (59) | ||||

Here, we apply the definition of to get the first equality, and the law of total variance conditioned on (given ) to get the second equality. Next, we analyze the two terms in the third and fourth line of Equation (59) separately.

| (60) | ||||

Note that can be 0 or 1 (with probability ), and the variance equals 0 when , so we get the first equality in Equation (60). Similarly, we can get:

| (61) | ||||

Integrating Equation (59)-(61) and utilizing the upper bound proposed in Lemma 10, we can get:

| (62) | ||||

Note that the sum of the first two terms of Equation (62) equals the first term of Equation (58). The first and second term of Equation (62) correspond to the sum over and , respectively.

Similarly, we can derive the upper bound for shown as follows.

Lemma 12

For any and any baseline function :

| (63) | ||||

Proof By applying the law of total variance conditioned on (given ) and following the same process as Equation (59)-(61), we can get:

| (64) | ||||

Then, we can apply the upper bound shown as Equation (56) and get:

| (65) | ||||

Again, we can combine the first two terms of Equation (65) and get the first term of the right hand side of Equation (63), since the first term of Equation (65) corresponds to the case that and the second term is equivalent to the sum over .

Appendix G Proof of Proposition 2

We start from the definition:

| (66) | ||||

Here, denotes whether is visited in the -th sampled trajectory at iteration , and serves as the normalizing factor.

Let denote a minimal point of . Utilizing the first-order necessary condition for optimality, we obtain: . Thus, for the entry of , we deduce:

| (67) | ||||

where denotes the normalizing factor, which is a positive constant for a certain memory , and is the termination state of the -th sampled trajectory at iteration . In the second line of Equation (67), the second equality is valid based on the definition of sampled counterfactual regret (Equation (9) and (10)), which assigns non-zero values exclusively to information sets along the sampled trajectory. Now, we consider the expectation of on the set of sampled trajectories :

| (68) | ||||

where and the second equality holds due to Theorem 4. The second part of Proposition 2, i.e., , can be demonstrated similarly.

Appendix H Proof of Proposition 3

According to the definition of in Equation (14) and following the same process as Equation (66) - (67), we can obtain:

| (69) |

According to the law of large numbers, as (), we have:

| (70) |

Ideally, we should randomly select a single information set for each randomly-sampled trajectory and add its strategy distribution to the memory. This guarantees that occurrences of information sets within each iteration are independent and identically distributed, as the sampling strategy remains consistent, and the number of samples (i.e., ) are equal across different iterations, thus validating the above formula. However, in practice (Algorithm 2), we gather strategy distributions of all information sets for the non-traverser along each sampled trajectory to enhance sample efficiency, which has been empirically proven to be effective. In addition, at a certain iteration , the samples for updating the strategy of player are collected when is the traverser, so the probability to visit a certain information set is .

To connect the convergence result in Equation (70) and the definition of in Equation (7), we need to show that , . According to the sampling scheme, is a uniformly random strategy when , and it is equal to when . Therefore, we have:

| (71) |

It is satisfied in our/usual game settings that remains consistent for all . This is attributable to the fact that histories within a single information set possess identical heights, and player consistently employs a uniformly random strategy. Similarly, we can deduce that using the aforementioned procedure, which we refrain from elaborating upon here.

Appendix I Proof of Proposition 4

First, we present a lemma concerning the sampled baseline values , as defined in Equation (16). This definition closely resembles that of the sampled counterfactual values in Equation (10), with two key distinctions: (1) is replaced with , as is not yet available; and (2) is substituted with , enabling the reuse of trajectories sampled with for updating , thereby enhancing efficiency.

Lemma 13

For all , we have:

| (72) |

Proof Given the similarity between and , we can follow the proof of Lemma 4 and 5 to justify the lemma here.

| (73) | ||||

According to Algorithm 2, the trajectories for updating are sampled at iteration when player 1 is the traverser, so . Similarly, we can obtain .

Next, we can employ these two equations to perform induction based on the height of within the game tree. If , based on the definition. If , we have:

| (74) | ||||

Here, we employ the induction hypothesis in the fourth equivalence, and incorporate pertinent definitions for the remaining equivalences. It follows that:

| (75) | ||||

By repeating the two equations above, we can show that holds for a general .

Next, we complete the proof of Proposition 4.

| (76) | ||||

Here, denotes the number of occurrences of in the memory . Let denote a minimal point of . Utilizing the first-order necessary condition for optimality, we obtain: . Thus, for the entry of , we deduce:

| (77) | ||||

The trajectories in can be considered as a sequence of independent and identically distributed random variables,since they are independently sampled with the same sample strategy . Then, according to the law of large numbers, as , we conclude:

| (78) | ||||

where the last equality comes from Lemma 13. It follows:

| (79) | ||||