Hierarchical Bayesian Modeling of Ocean Heat Content and its Uncertainty

Abstract

The accurate quantification of changes in the heat content of the world’s oceans is crucial for our understanding of the effects of increasing greenhouse gas concentrations. The Argo program, consisting of Lagrangian floats that measure vertical temperature profiles throughout the global ocean, has provided a wealth of data from which to estimate ocean heat content. However, creating a globally consistent statistical model for ocean heat content remains challenging due to the need for a globally valid covariance model that can capture complex nonstationarity. In this paper, we develop a hierarchical Bayesian Gaussian process model that uses kernel convolutions with cylindrical distances to allow for spatial non-stationarity in all model parameters while using a Vecchia process to remain computationally feasible for large spatial datasets. Our approach can produce valid credible intervals for globally integrated quantities that would not be possible using previous approaches. These advantages are demonstrated through the application of the model to Argo data, yielding credible intervals for the spatially varying trend in ocean heat content that accounts for both the uncertainty induced from interpolation and from estimating the mean field and other parameters. Through cross-validation, we show that our model out-performs an out-of-the-box approach as well as other simpler models. The code for performing this analysis is provided as the R package BayesianOHC.

keywords:

and

1 Introduction

As 90% of the energy imbalance caused by increased greenhouse gas concentrations is expected to be stored in the world’s oceans [Trenberth et al., 2014], measuring changes in ocean heat content is essential to the scientific understanding of climate change. However, obtaining accurate estimates of ocean heat content has historically been difficult due to a lack of data. To help address this problem, since 2002 the Argo program has been coordinating and maintaining a network of thousands of Lagrangian floats that passively move throughout the ocean measuring temperature and salinity [Riser et al., 2016]. While Argo floats achieve dense sampling in the vertical dimension, quantifying ocean heat content remains challenging since the number of Argo observations is small in comparison to the vastness of the global ocean. As estimates of ocean heat content inform scientific knowledge about other climate processes, it is particularly important to not just obtain an estimate of the ocean heat content trend but to assess the uncertainty in its estimation.

Several techniques have been used in the climate science literature to quantify ocean heat content using Argo data. One group of techniques is referred to as simple-gridding [Meyssignac et al., 2019] and includes approaches such as von Schuckmann and Le Traon [2011] and Gouretski [2018]. These methods compute ocean heat content at each point of a grid over the ocean’s surface by taking the average value of the observations within the corresponding grid-box. For grid-boxes with few observations, the heat content value is assumed to either have an anomaly value of zero or be equal to the global average value of all observations. Standard errors are obtained by computing the empirical standard deviation of the observations in each grid-box; for grid-boxes with few observations, the global standard deviation over all observations is used. A major drawback of this method is that it does not take advantage of the correlation structure in the data, which will lead to more variable estimates of the mean field and precludes a globally consistent estimate of the uncertainty in heat content.

Another group of approaches, including Levitus et al. [2012] and Ishii et al. [2017], estimate the heat content anomaly at a particular point using values observed at nearby locations. Levitus et al. [2012] estimate heat content with a weighted sum of observations within a fixed radius, where the weights decrease with the distance between the observation locations at a rate determined by a fixed correlation length scale. Ishii et al. [2017] estimate unobserved values by minimizing a cost function that accounts for the influence of nearby observations by using an inverse-distance weighting also with a fixed correlation length scale. A major drawback of these techniques is that the correlation length scales are not informed by the data and must be chosen in advance of the interpolation. Standard errors are estimated in these techniques by computing empirical variances and covariances on the observations partitioned into grid-boxes. While this standard error calculation takes into account the fact that observations in nearby grid-boxes will be correlated, the standard errors do not take into account the spatial distribution of observations within each grid-box. Furthermore, like the simple gridding approach, this method does not produce a consistent covariance estimate across the global domain.

Other approaches use data from climate models to aid in the estimation of ocean heat content. The approach of Cheng and Zhu [2016] interpolates the data using covariances obtained from output of the Coupled Model Intercomparison Project Phase 5. Approaches such as Balmaseda et al. [2013] interpolate the heat content field using climate model runs constrained by the observed values. Both of these methods suffer from the drawback that they are subject to the effect of climate model error on the covariance structure which can be difficult to assess and correct for [Palmer et al., 2017].

Gaussian processes are a powerful technique for expressing a spatial field with a probability model. The key assumption of the Gaussian process is that every linear combination of values in a spatial field follows a multivariate normal distribution. Then the correlation between each pair of observations is determined by their locations as well as by parameters that can be estimated from the data. Under the Gaussian process assumption, the value at an unobserved location conditioned on a set of observed values has a normal distribution whose mean is the linear combination of the observations [Stein, 1999]. The uncertainty in estimating the value at the unobserved location can then be obtained through the standard deviation of the conditional normal distribution.

The Gaussian process interpolation is similar to the weighted sum method used in Levitus et al. [2012] in that the estimates are given by a linear combination of the observations. However, the Gaussian process imposes a probability model on the spatial field, from which it can be shown mathematically that the conditional mean at an unobserved location is the best linear unbiased predictor [Stein, 1999]. Another advantage of assigning a probability model to the spatial field is that it allows for the correlation parameters to be determined through statistical inference, rather than chosen in an ad-hoc fashion. The uncertainty in the estimation of the parameters can also be assessed using statistical inference techniques.

Recent work in the statistics literature by Kuusela and Stein [2018] uses Gaussian processes on the Argo data to assess variability in the temperature fields at fixed depths. One challenge of applying Gaussian processes is that they were originally developed for fields whose covariance parameters are constant over the domain. However, the statistical properties of the ocean temperature field are known to vary substantially between different regions of the global domain [Cheng et al., 2017]. Kuusela and Stein [2018] account for non-stationarity by assuming local stationarity within windows of a fixed size centered around each point of a grid partitioning the ocean. This allows the model to be fit independently at each grid-point yielding an estimate and uncertainty range for the value at the center of each window. However, as ocean heat content is a globally integrated quantity, assessing its uncertainty cannot be accomplished with this local method. Instead, a covariance model that is valid over the entire domain is required, which is the focus of this work.

Rather than modeling temperature itself, we model ocean heat content, defined as the integral of the three-dimensional temperature field at a particular time [Dijkstra, 2008]. Thus, the problem is reduced to fitting a non-stationary model to the surface of the sphere. Even so, modeling non-stationary fields on a sphere is a major theoretical and computational challenge [Jeong et al., 2017]. Kernel-convolution methods introduced by Higdon [1998] and further developed by Paciorek and Schervish [2006] and Risser [2016] provide a flexible way to construct a Gaussian process with parameters that vary over the domain. In these methods, each location in the field is assigned a parameterized kernel function and the global model is obtained by computing convolutions between the kernels at any two points. When the kernel parameters vary smoothly, they describe the local behavior of the process at that point, yielding a clear interpretation of their meaning.

While the convolutions have easily expressed forms when the domain is assumed to be Euclidean, they are more challenging to compute when the domain is spherical. Computing convolutions using numerical approximation of the integrals as in Jeong and Jun [2015] and Li and Zhu [2016] is computationally intensive and even with pre-computation is infeasible on the thousands of observations in the Argo dataset. Various other non-stationary correlation functions have been developed for Gaussian processes on the sphere, such as deformations of the sphere introduced by Sampson and Guttorp [1992], spherical harmonics used by Hitczenko and Stein [2012], and stochastic partial differential equations used by Lindgren et al. [2011], however, the parameters of these covariance functions do not have the intuitive physical interpretation afforded by kernel convolution methods. Recently the BayesNSGP package (Turek and Risser [2019], Risser and Turek [2020], and Risser [2020]) offers the ability to use kernel convolutions in a Bayesian framework to model highly non-stationary processes in a computationally efficient manner. This package allows for non-stationary anisotropic modeling on the two-dimensional Euclidean domain and non-stationary isotropic modeling on the spherical domain.

In this paper, we develop a kernel-convolution Gaussian process treating the surface of the ocean as a cylinder to produce a model that has spatially varying and interpretable parameters while remaining computationally feasible. We represent the spatially varying parameter fields themselves as Gaussian processes within a Bayesian hierarchical model structure. The Gaussian process prior for the parameters allows for the longitudinal correlation length scale to vary with respect to the changing radius of the Earth with latitude; this, in combination with the fact that there are no Argo observations close to the poles, allows for the use of the cylindrical distance rather than more computationally challenging spherical distance metric. Our methodology, including the covariance model, the Bayesian hierarchical framework, and the MCMC model-fitting procedure is described in detail in Section 3. Fitting this highly flexible Bayesian model on the number of points in the Argo dataset is still a computational challenge, and as such we use a Vecchia process as developed in Katzfuss and Guinness [2021] to improve the computation time. The accuracy of the Vecchia approximation for the kernel-convolution framework is demonstrated through comparison results on subsets of the data in Section 4. The results of our model fit to the Argo data restricted to January is displayed in Section 5, demonstrating its ability to compute confidence and credible intervals for the parameter fields, ocean heat content, and the heat content trend over time. Section 6 shows that our model out-performs an out-of-the-box method at predicting ocean heat content through cross-validation, and also shows the relative importance of the various aspects of the model. The functions used in performing this analysis are available as the R package BayesianOHC.

2 Data

We first describe the data used for our statistical model. After deployment, an Argo float proceeds through four stages. First, the float adjusts its buoyancy to descend to m of depth. In the second stage, the float drifts at m of depth for an average of nine days, after which it descends further to m. The float then ascends from m to the surface, measuring temperature, salinity, and pressure at depth intervals averaging between and meters. Once the float surfaces, the measurements as well as the float’s location and time at surfacing are transmitted via satellite [Riser et al., 2016].

For computational simplicity, we restrict our analysis to profiles observed during January, although the computationally efficient Vecchia process described in Section 4 will allow for the model to be extended to all months in future work. As the focus of this work is a globally consistent spatial model, we do not model any temporal dependence in the data and implicitly take each data point as occurring at the mid-point of the month. We restrict our attention to profiles observed between and as this is the range of years included in the Argo data obtained from Kuusela and Stein [2018], although an extension to additional years would be straightforward. For various reasons, some of the observations in the dataset were not recorded to the full depth of m, and the percentage of floats with a maximum depth greater than m ranges from in 2007 to in 2016. To avoid having to extrapolate the temperature field over depth, we exclude all floats with a maximum recorded depth shallower than m. In total, the data under consideration consists of profiles, ranging from in 2007 to in 2016.

For notation, let denote the year, denote spatial location in latitude-longitude coordinates, and denote depth from the surface in meters. Define the three-dimensional temperature field at location x, depth , and year at mid-January as measured in degrees Celsius. Since our ultimate interest is in the integrated heat content, we choose to model the two-dimensional spatial field of heat content values integrated over depth rather than the temperature and/or salinity fields at fixed depth levels. The vertically integrated heat content field from 0-m depth is defined as

| (1) |

where is seawater density in kg/m3 and is the specific heat of seawater in J/(kg∘ C) [Dijkstra, 2008]. The units of are J/m2. For observed temperature profiles the integral in Equation 1 is computed numerically by summing the values of the observations linearly interpolated to each meter of the domain [0m,2000m]. The heat content values as observed in January 2016 are shown in Figure 1(a). Heat content anomalies computed with respect to the spatially varying mean field estimated in Section 5 are shown in Figure 1(b).

The main quantity of interest in this work is the total ocean heat content or OHC, which is defined for a given year as the two-dimensional integral of the heat content field:

where is the ocean’s surface masked following Roemmich and Gilson [2009]; this mask excludes regions of the ocean that are hard or impossible to sample with Argo floats, including regions of the ocean where the depth is less than m, seasonally ice-covered regions, and marginal seas such as the Gulf of Mexico and the Mediterranean. The mask can be seen as the gray background of Figure 1(a). The primary goal of this work is to estimate the trend in , and quantify the uncertainty in those estimates, using the observed Argo profiles. Let denote the discrete integral of the temperature profile observed at location x and year yr. In our modeling framework, the underlying heat content field differs from the observed heat content field in that the latter contains small-scale variability that will be modeled as a nugget effect; see section 3.1 for further details. In the following we denote the vector of observed values for year as and the concatenated vector of observed values as .

3 Model Framework

We use a hierarchical Bayesian framework for our model of ocean heat content, in which both the spatial model parameters and the ocean heat content field are modeled as Gaussian processes. This approach yields several advantages. First, it provides a framework for propagating and quantifying uncertainty from both the inferred parameter fields and the spatial prediction of ocean heat content, thereby allowing us to determine the relative importance of each type of uncertainty. Second, the parameter fields are allowed to vary smoothly in space according to a Gaussian process with prescribed hyperparameters, without the need to choose a functional form. This flexibility is critical for modeling ocean heat content due to the complex structure of the anomaly field as can be seen in Figure 1(b); this will be discussed quantitatively in Section 6.

In this section, we will describe the non-stationary covariance model used for the heat content field, the hierarchical Bayesian framework including Gaussian process priors on the parameter fields, and the estimation of the posterior distribution of OHC using MCMC sampling with Metropolis-Hastings-within-Gibbs steps.

3.1 Covariance Model for the Heat Content Field

The heat content field is modeled as a Gaussian process with spatially varying covariance parameters in order to capture non-stationarity in the data. In the following, we denote the mean field as , the variance field as , the nugget variance field as , and the latitudinal and longitudinal correlation length scale fields as and respectively. For notation these symbols will refer to the fields as functions of location, so for example the value of the latitudinal correlation length scale at location x would be . The field is defined for any x and year yr as where is a spatially varying mean field centered on and is a spatially varying trend field. We will denote the collection of parameter fields as . The observed and underlying heat content fields are distributed as

and

| (2) |

respectively where is a Gaussian process such that for any pair of locations x and y. We make explicit the dependency of on the entire set of parameters for notational simplicity, although the value of will depend only on the values of , , and for the two relevant locations.

The nugget variance is generally interpreted as measurement error, however as measurement errors in Argo temperature readings are known to be small [Riser et al., 2016] it will be used here to represent fine-scaled variation in the underlying field. This can be induced, for example, by eddies, which have coherent structure but are generally smaller in spatial and temporal scale than the observation resolution of the Argo profiles [Colling, 2001]. This form of variation is excluded from the kriging-based estimates of ocean heat content anomaly in Equation 2, but is represented in the uncertainty estimates. In the model fit in Section 5, we sample the inverse signal-to-noise ratio as this field appears to have better sampling properties, however for simplicity we describe here the analogous case where is sampled directly.

Kernel convolution methods as introduced by Higdon [1998] are a straightforward and intuitive way for integrating a spatially varying parameter field into a valid covariance model. The idea behind these methods is to assign a kernel to each point in the domain that gives the local covariance properties at that location. Then a valid global Gaussian process can be obtained by computing the convolution of the kernels between every two points. The correlation between any two points will reflect the local behavior at both of their locations. Paciorek and Schervish [2006] demonstrated the use of kernel convolution methods and find closed-form expressions for the covariance functions when the underlying domain is Euclidean. For modeling the ocean heat content field it would make the most sense to measure distances between observations using the great-circle distance, which is the shortest distance between two points over the sphere’s surface. Unfortunately, the kernel convolutions do not have closed-form expressions when the great-circle distance is used, and the computational task of performing numerical integration of the convolution terms as done in Li and Zhu [2016] is not computationally feasible for the number of observations in the Argo data.

In principle, the spherical domain could be represented as a subset of three-dimensional Euclidean space and the chord-length distance, which is the shortest line between two points and is allowed to cross through the interior of the sphere, could be used. However, in the traditional method of representing anisotropy through scaling and rotating the coordinate space would not yield parameters that could be interpreted in terms of quantities on the surface. This is a limitation when modeling the ocean heat content field which, as we can see in Figure 1, exhibits significant anisotropy in the latitudinal and longitudinal dimensions. To examine the significance of this limitation we evaluate the ability of isotropic models to predict ocean heat content in Section 6.

Our solution to the problem of modeling anisotropy on the sphere is to represent the Earth’s surface in latitude-longitude coordinates as a cylinder, or , and use a cylindrical distance function for the covariance model that allows for the estimation of correlation length scales in the latitudinal and longitudinal dimensions. As Argo floats do not travel close to the poles, the approximation of the Earth’s surface as a cylinder does not present any modeling problems. In particular, the geometric variation in the radius of the sphere with latitude can be accounted for in the estimation of the spatially varying longitudinal scale parameter. Let denote the Euclidean distance between two points and let denote the great circle distance between two points . Then the shortest path between two locations on the cylinder is given by the Pythagorean theorem as visualized in Figure 2. Latitudinal and longitudinal correlation length scale parameters can be incorporated in a straightforward way, yielding the following distance function, where and are arbitrary locations:

Using this distance function in the kernel convolution framework with Gaussian kernels yields the following covariance function for arbitrary locations x and y:

| (3) |

By using Gaussian covariance kernels we can integrate over separately for the latitudinal and longitudinal dimensions. The integral over the Euclidean (latitudinal) dimension can then be computed easily through the closed-form expressions of Paciorek and Schervish [2006]. The convolutions in the cylindrical dimension can be computed exactly using Gaussian error functions as derived in Section S3.1 of the supplementary material. Supplementary Section S3.2 presents a faster Gaussian approximation to the exact spherical convolutions along with a simulation study demonstrating that the approximation is highly accurate over the correlation length scales present in the ocean heat content data. As such the approximation to the exact convolutions is used in the following analysis, although both the approximation and exact methods are available in the BayesianOHC package as ”cylindrical_correlation_gaussian” and ”cylindrical_correlation_exact” respectively.

What results from Equation 3 is a covariance function that can be locally anisotropic and globally non-stationary, is fast to compute, and is mathematically valid on the domain. A major advantage of the cylindrical distance model’s anisotropy structure is that the range parameters are directly interpretable as correlation length scales in the latitudinal and longitudinal dimensions. To preserve this interpretability the cylindrical distance model does not allow for rotation of the anisotropy structure.

3.2 Hierarchical Bayesian Framework

Having prescribed the structure of our covariance function for ocean heat content, we now turn to our model for the covariance parameters, which are themselves modeled as Gaussian processes. Each parameter field has a stationary Gaussian process prior with mean, variance, and spatial range hyperparameters. Fitting this complex model is made computationally feasible through the Vecchia process described in Section 4.

In the following, let denote the covariance matrix between each pair of observations as computed by . Because we will ultimately fit the model to only data in a single month, we treat each year as independent, and in this case is a block diagonal matrix. Future work will incorporate temporal dependence structures in the covariance matrix. Let denote an arbitrary parameter field. Then the prior for each is a stationary Gaussian process with link function , specifically

where , and are respectively the mean, variance, and range for the parameter field . The link function is exponential for the variance and correlation parameters in order to ensure positivity, and is the identity for the mean and trend parameters. To constrain the number of parameters involved in representing the spatial surfaces, we will represent each by independent normal basis values on a set of knot locations denoted ; let refer to the number of knot points. Specifically, for each we define the vector to be such that for any location x the following holds:

| (4) |

where is the covariance vector between x and the knot locations and is the covariance matrix between points in . Intuitively, is a de-meaned and de-correlated representation of evaluated at the knot locations, from which the field can be re-constructed through kriging. In the model fit in Section 5, we choose to contain knot locations at every degrees in the longitudinal direction and every degrees in the latitudinal direction. Restricting the knot locations with the data mask expressed in Figure 1(a), this results in basis values for each parameter field. This knot selection is somewhat arbitrary, and more principled methods for selecting knot point locations have been explored, such as Katzfuss [2013] who incorporate the knot locations into the Bayesian hierarchy through a reversible-jump process, however such approaches are not considered here.

3.3 MCMC Sampling Procedure

We will use Markov Chain Monte Carlo (MCMC) with Gibbs steps for each of the parameter fields to estimate the joint posterior distribution. The marginal distributions for the correlation length and variance parameters must be approximated using Metropolis-Hastings steps, however and can be sampled jointly from their Gibbs marginal distribution given by the formula

| (5) |

where

and is the design matrix for and . In the conditional distribution of Equation 5, ”” is the set minus operator and as such denotes the set of basis parameters for all of the parameter fields excluding and .

Now we will outline the MCMC sampling procedure. For each iteration after initialization we perform the following steps:

-

1.

For a given , propose basis point candidates through independent perturbations, specifically where is the proposal standard deviation at the current iteration. The independent perturbations on can be viewed as perturbing by a spatially correlated field that shares the spatial range of .

-

2.

Accept or reject the entire basis vector, which describes the full spatial field, using the Metropolis-Hastings acceptance ratio on

where denotes the set of basis points corresponding to all of the fields except for at their most recent iteration and denotes the full set of observation locations. The values of at the observation locations can be evaluated through Equation 4. Here, the data likelihood term on the right is multivariate Gaussian with covariance matrix and the prior term is the product of independent standard normal densities.

-

3.

Repeat the previous two steps for sampling for each remaining .

-

4.

Sample and jointly from their conditional distribution given in Equation 5.

-

5.

Update the variances using the adaptive MCMC algorithm for Metropolis-within-Gibbs sampling as described in Roberts and Rosenthal [2009], which updates the proposal variances to achieve a desired acceptance rate chosen to be .

Through this approach, MCMC samples of coherent spatial fields for each model parameter can be obtained. In particular, we model the mean field parameters jointly with the covariance parameters, which will generally lead to improved estimates of both. Further, our resulting credible intervals will incorporate the uncertainty induced by estimating the mean and the spatially varying trend. Quantifying the trend is particularly valuable as for many purposes we are primarily concerned with the trend in ocean heat content anomaly over time rather than the values of the anomalies themselves.

3.4 Posterior Distributions

For a fixed set of parameter values sampled from the posterior, the distribution of the globally-integrated ocean heat content, , is normal and can be denoted with values

| (6) |

where denotes the covariance vector between the observations locations and the global integral and denotes the variance of the global integral. When and are computed from the sampled parameters we will refer to them as and . The entries in these covariance matrices involve integrals over the global domain which must be computed numerically. Let denote the grid-points to be used for numeric integration; then we can write our approximation of ocean heat content as where are the unknown ocean heat content values at the grid-points and is the vector of grid-cell areas corresponding to . Then the above formulas apply replacing with . In the results reported in Section 5, OHC posterior distributions are calculated using a grid partitioning the domain with a resolution of .

4 Vecchia Processes for Feasible Bayesian Estimation

The most computationally intensive step of the Metropolis-Hastings procedure is computing the log-likelihood term. The log-likelihood term can be written as

where we omit the dependency of on for notational simplicity. The usual way to compute the inverse and log-determinant terms in the above equation is to first compute the Cholesky decomposition of the covariance matrix, resulting in the upper-triangular Cholesky factor which satisfies the property . Then the likelihood evaluation becomes

| (7) |

Inverting an upper triangular matrix is computationally simple, so the primary burden is computing the Cholesky factorization. This step has a computational complexity of , meaning that the computation time increases cubically with the number of observations. For example, in a simple experiment on a standard laptop computer, computing the likelihood for the Argo observations in 2007 takes around seconds, while computing the likelihood for the observations in 2016 takes around seconds. While a single likelihood evaluation is feasible, since each full Metropolis-Hasting step requires four covariance matrix inversions it would take over five months to compute samples using the Cholesky method even when computing each year’s likelihood in parallel.

4.1 Vecchia Approximation

To render Bayesian estimation feasible we use the Vecchia approximation of Vecchia [1988] which approximates a Gaussian process by writing the likelihood as a series of conditional likelihoods and then restricting the set of observations used by the conditional likelihoods. Specifically, let denote the set of observations and let denote sets of integers such that and . When is more than one integer, we will let denote the set of observations corresponding to the indices in . With this the Vecchia likelihood is defined as

| (8) |

where is the conditional log-likelihood of given . When , each observation is conditioned on all of the observations preceding it in the ordering, and the Vecchia log-likelihood is equivalent to the Gaussian process log-likelihood. When is a strict subset of the preceding indices, then the Vecchia likelihood is an approximation. The computation of each term requires computing the Cholesky factorization for observations within , so computational gains are achieved when the are chosen to be small. Generally, the conditioning sets are chosen to be such that for some so that the computation time of the Vecchia likelihood is , as opposed to for the full likelihood. The parameter is tunable, with larger values yielding a more accurate approximation and smaller values enabling faster computation times. An additional advantage of the Vecchia likelihood is that the terms in Equation 8 can be computed in parallel whereas parallelization cannot be used directly when computing the Cholesky factorization for the full covariance matrix.

There are three steps for constructing the Vecchia process for a particular set of observation locations. The first step is to order the locations, which is important because the conditioning sets for a particular observation can only include observations that precede it in the ordering. For this step, we use the max-min distance ordering advocated for by Guinness [2018]. The second step is to construct the conditioning sets such that each set has no more than observations. We take the conditioning sets to be the observation’s -nearest neighbors under the logic that nearby observations are the most influential and as such will yield the closest approximation to the Cholesky likelihood. The third step is to group the observations whose conditioning sets have substantial overlap. Computing the conditional likelihoods on the grouped observations has been shown by Guinness [2018] to increase the accuracy of the approximation while simultaneously decreasing the computation time. These three steps must be done separately for each year of the Argo data, as the observation locations differ between the years. However, since the conditioning sets and groups do not depend on the parameters, the Vecchia construction needs to only be done once before the beginning of the sampling procedure.

For a particular ordering, conditioning configuration, and grouping, it can be shown that the Vecchia likelihood induces a valid stochastic process on the domain of observation locations [Datta et al., 2016]. This ”Vecchia process” can be seen as an approximation of the full Gaussian process with more computationally efficient likelihood evaluations. Under this view the Bayesian inference procedure described in Section 3 can be used with the Vecchia process by simply substituting the full Cholesky likelihood with the Vecchia likelihood. Furthermore, the inverse Cholesky decomposition of the covariance matrix implied by the Vecchia process can be constructed directly as a sparse matrix. To see this we will follow the notation of Katzfuss and Guinness [2021] and rewrite the summands in Equation 8 as , where indicates the normal density, , and . Then the elements of the inverse Cholesky factor are for all , for , and for . The sparse matrix U can be used in place of in Equation 7 to compute the Vecchia likelihood. This is no more computationally intensive than computing the sum in Equation 8 due to U’s known sparsity structure determined by the conditioning sets.

It can be seen from the construction of U that for any two observations , when the partial correlation between and will be zero, or in other words and will be conditionally independent. As such the Vecchia process can be seen as an alteration of the full Gaussian process where some conditional dependencies are removed. We note that this does not necessarily mean that and are independent, as there could be a path of conditional dependencies between observations through the conditioning sets. As such the nearest-neighbors method of constructing the conditioning sets does not simply eliminate dependencies between distant observations, as is done with other likelihood approximation methods such as covariance tapering. This is important to note in our model since we are using the Vecchia process to estimate spatially varying correlation length scales, which could potentially be biased if only dependencies between nearest neighbors were taken into account in the likelihood.

4.2 Predictions

As discussed in section 3.4, the estimate of globally integrated heat content is calculated as the area-weighted sum of unobserved ocean heat content values at grid-points along a grid densely partitioning the domain. This is written as , where are the unknown heat content values at the grid-points and are the areal weights of the grid-cells. For sufficiently dense grids, computing the joint conditional distribution over the grid-points as in Equation 6 is computationally infeasible. As such, in order to compute the posterior distribution of heat content, it is essential to be able to efficiently compute the joint posterior distribution for a large number of observations. Fortunately, this can be done by augmenting the Vecchia process described above to include the grid-points at which we will be predicting heat content values. To do this we follow the ”observation-first” ordering in Katzfuss et al. [2020a], which involves ordering the prediction locations , appending them to the ordering of the observation locations, and then computing the nearest-neighbor conditioning sets for the entire set of indexed locations . Since conditioning sets can only contain preceding observations, the conditioning sets of the observations remain the same while the conditioning sets of prediction locations can contain any observation location as well as any prediction location preceding it in the ordering.

Recall that U is the inverse Cholesky factor for the Vecchia process, and let . Then it can be shown that the distribution of the prediction values conditioned on the observations is It follows that the posterior distribution of ocean heat content given the observations is

| (9) |

As U, and subsequently W, are sparse, the terms and are both fast to compute. If the full Gaussian process was used to compute this posterior distribution using Equation 6, every element of the dense covariance matrix of grid-points would need to be evaluated, which is intractable for sufficiently dense grids.

4.3 Evaluating the Accuracy of the Vecchia Process

The accuracy of the Vecchia likelihood as an approximation to the log-likelihood depends on the ordering, conditioning sets, and grouping used in the construction. In particular, the maximum size of the conditioning sets, determined by the parameter , needs to be chosen to balance the trade-off between accuracy and computation time. In order to assess the accuracy of the Vecchia process for our model for different values of , we divided the global ocean into nine basins. These basins are small enough such that the likelihoods and heat content predictions can be computed easily using the full Cholesky decomposition so that the results from the Cholesky and Vecchia methods can be compared. The basins are defined using the latitudinal and longitudinal limits displayed in Figure S1 in the supplementary material. We note that these basins are for evaluating the approximation accuracy only and that the results in Section 5 are computed on the global ocean.

To evaluate the accuracy of the Vecchia approximation on the ocean heat content field, we fit the model described in Section 3 independently for each of the nine basins using both the Cholesky method and the Vecchia approximation method for each of . Using the resulting posterior distributions, the mean, trend, and anomaly fields were interpolated for each basin independently, and globally integrated values were computed using the results within each basin. For the Vecchia process with conditioning parameter we will refer to the globally integrated values of the mean, trend, and anomaly fields as , , and respectively. Analogously the globally integrated values for the the Cholesky method will be referred to as , , and . By separating the mean, trend, and anomaly fields we can examine how close the Vecchia process approximates these three components. We would expect the error in the mean field to be the smallest, as the mean field is highly informed by the values in the data and is not particularly sensitive to the estimated correlation structure. On the other hand, we would expect the anomaly field to have the largest errors, as the predicted anomaly values at unobserved locations are highly sensitive to the correlation structure.

| m | mean field | trend field | anomaly field |

|---|---|---|---|

| 10 | 8.078 | .05029 | .7421 |

| 25 | 7.764 | 1.400 | .4081 |

| 50 | 2.987 | 5.498 | 1.665 |

| 100 | 1.87 | 1.963 | 1.656 |

Treating the Cholesky values as ”truth”, fractional errors , , and are displayed in Table 1 for each value of . For the fractional error of the anomaly fields the median over years is displayed. For and , the mean field has smaller fractional errors than the trend or anomaly fields, as expected. The accuracy of the mean field does not improve noticeably when increasing beyond , however the error at that level is acceptably small. The approximation error of the trend and anomaly fields are relatively more substantial at , with fractional errors of and respectively. They improve only marginally when increasing to but see a more substantial improvement when increasing to , with errors around five one-thousandth of a percent and a tenth of a percent respectively. When further increasing to , the approximation errors for the mean and trend fields do not meaningfully change, although the error for the anomaly field does noticeably improve. As the fractional error in the trend field does not decrease after , and at the level of error in the anomaly fields is acceptable, we will use the Vecchia process with for the global model fit.

5 Results

5.1 Initial Configuration

As the number of parameters involved in our model is large, it is particularly important to initialize the MCMC sampler at a ”good” initial configuration for the sampler to converge in a reasonable number of iterations. To obtain a suitable initial configuration, we used a moving-window estimation method analogous to that used in Kuusela and Stein [2018] to obtain values for the spatially varying parameter fields. In this approach, the domain is partitioned according to a grid, and at each grid-point, the observations within a window centered around that point are considered to follow a stationary Gaussian process with process variance, nugget variance, latitudinal range, longitudinal range, mean, and trend parameters. Since each of these windows contains a relatively small number of observations, the locally stationary parameters can be estimated quickly using maximum likelihood estimation with Cholesky factorizations.

Parameters were estimated using windows of size centered at each grid-point of a grid. Note that the grid used for the moving window parameter estimates is finer than the resolution of knot points used in the global model; this is done so that we can estimate the hyperparameters of the parameter fields. To estimate the hyperparameters, stationary and isotropic Gaussian processes were fit to the moving window output for each of the parameter fields, obtaining an estimate of the hyper range, mean, and variance for each process. As described in Section 3, the correlation length and variance parameters are assumed to follow a log-normal Gaussian distribution. We constrain the hyper-mean of the trend field to be zero to ensure that the estimate is fully informed by the data. Additionally, the mean and trend fields were assumed to have the same correlation length scale parameter. The results are shown in Table 2, where we summarize the hyper-prior distribution using quantiles since the log-normally distributed priors are non-symmetric. The range hyperparameter for each process is shown in the second column as the ”effective range”, which we define as the cylindrical distance in degrees at which the correlation is equal to , and denote and for effective latitudinal and longitudinal range respectively.

| Effective Range | Units | |||||

|---|---|---|---|---|---|---|

| 39.97∘ | 0.89 | 2.50 | 6.98 | Degrees | ||

| 44.93∘ | 0.88 | 5.07 | 29.08 | Degrees | ||

| 36.59∘ | .0034 | 0.04 | 0.47 | Unitless | ||

| 39.24∘ | 1.29 | 2.25 | 3.92 | |||

| 34.88∘ | 19.70 | 49.24 | 78.78 | |||

| 34.88∘ | -1.53 | 0.00 | 1.53 | year |

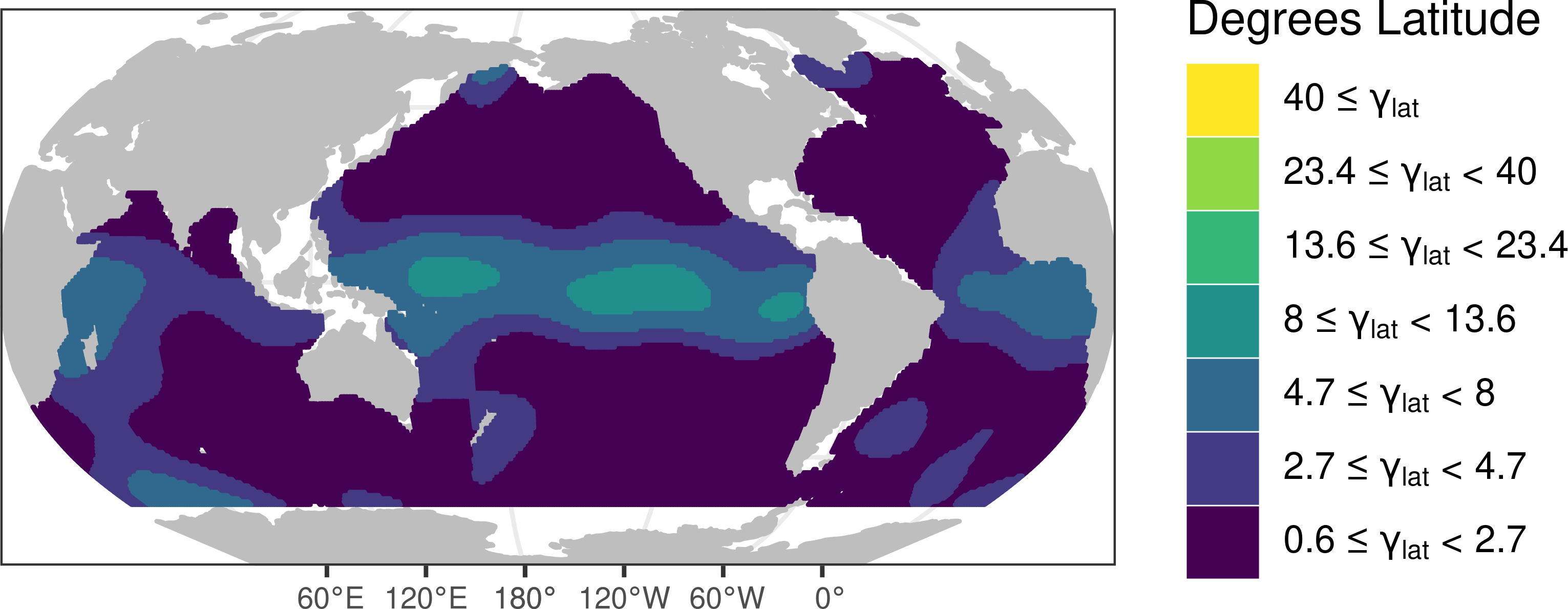

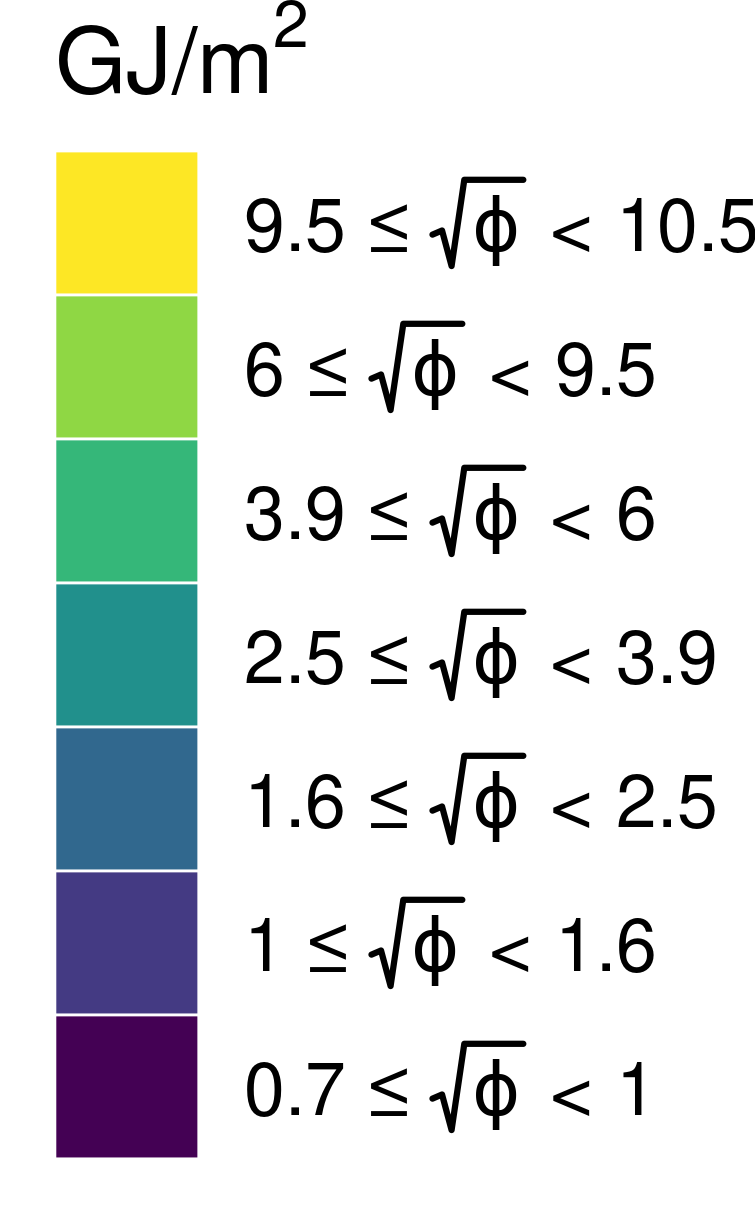

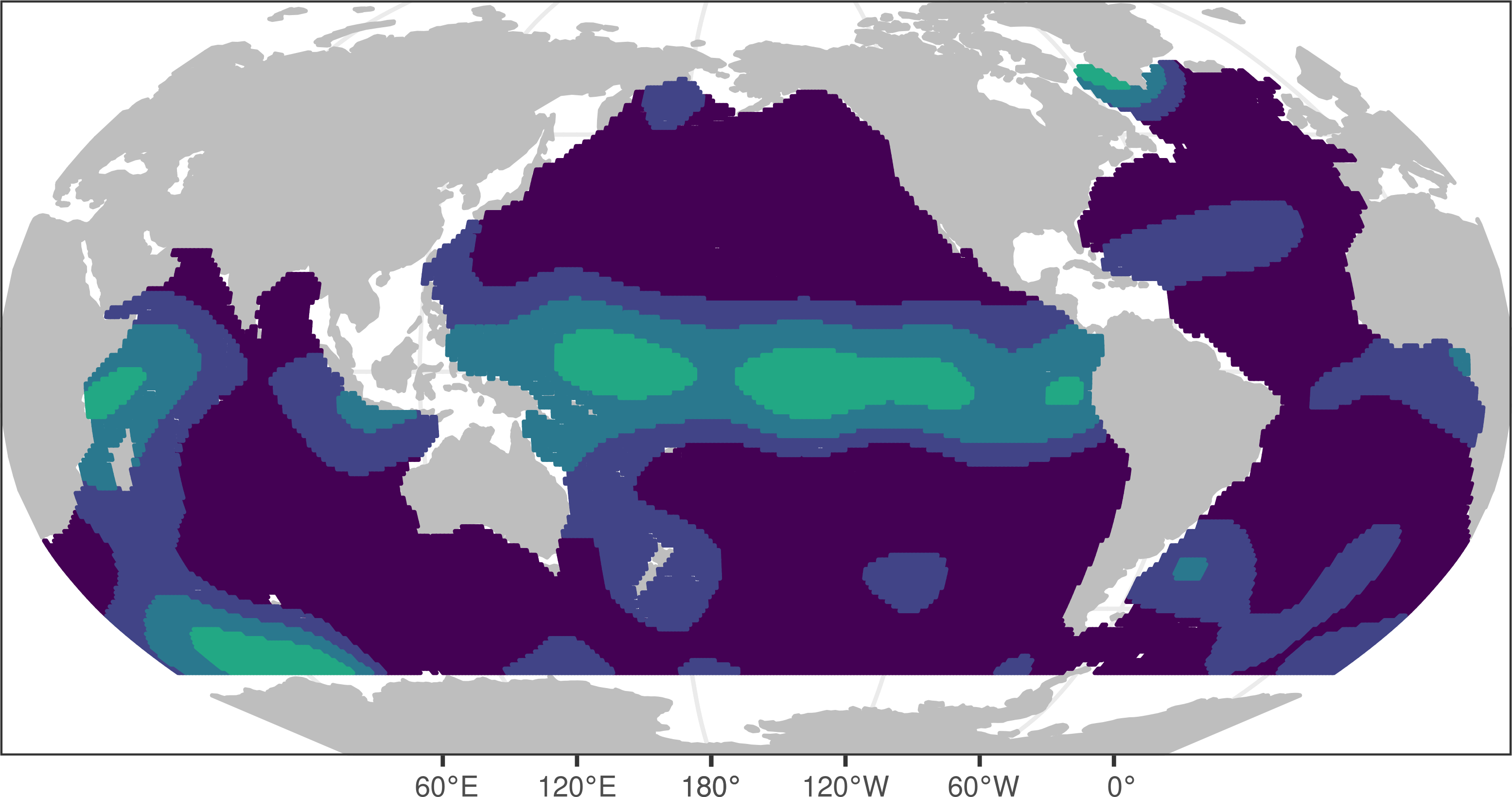

The initial configuration for the MCMC sampler was obtained by kriging the estimated parameters from the moving window approach onto the knot locations using the hyperparameters described in Table 2. Figure 3 displays the initial configuration interpolated over a dense grid for display purposes. Similar to Table 2, standard deviations and effective ranges and are shown rather than the actual parameter fields. While this configuration should not be used to quantify global OHC due to the fact that the estimates were derived assuming local stationarity, comparisons to the 2016 anomaly field displayed in Figure 1(b) yield insights regarding the ocean heat content correlation structure. First of all, we can see from the initial configuration that the correlation length scales around the equatorial regions are much larger than at higher latitudes, and are particularly large in the equatorial Pacific. This phenomenon can be seen in the heat content anomaly field for 2016 through the coherent structures of positive anomalies particularly visible in the eastern equatorial Pacific. These anomalies primarily extend in the longitudinal direction and to a smaller extent in the latitudinal direction. Anisotropy is reflected in the initial configuration, where we can see that the scale of the effective longitudinal correlation lengths is generally much larger than those in the latitudinal plot, a difference that is particularly stark in the equatorial Pacific. The phenomenon of latitudinal correlation lengths being generally smaller than longitudinal correlation lengths makes sense from a physical perspective, as changes in latitude are associated with changes in climate to a much greater degree than changes in longitude.

The areas of the ocean that appear to have particularly high variability in the 2016 anomaly field roughly correspond to the areas of high variance in the initial configuration. Four regions in particular stand out in both of these plots; the North Atlantic east of the United States, the Pacific Ocean east of Japan, the South Atlantic east of Argentina, and a large swath of ocean moving towards the south-east from South Africa to South Australia. We note that most of these regions align with known ocean currents, in particular the Gulf Stream of the North Atlantic, the Kuroshio Current off the coast of Japan, the South Atlantic current off of Argentina, and the Arctic circumpolar current in the Southern Ocean [Colling, 2001]. This is unsurprising, as the currents generate large anomalies of either positive or negative sign depending on the particular position and behavior of the current at a given time.

In theory, the choice of initial configuration will not impact the results of the MCMC sampler if a sufficiently long chain is sampled. However, running the sampler on random initial configurations did not converge after iterations. Starting from this initial configuration considerably improves the sampler’s convergence properties as will be discussed in Section 5.2. We have also found that it is particularly challenging to sample the parameter fields and the hyperparameters simultaneously due to the fact that the hyperparameters are not directly informed by the data. To avoid this issue, the hyperparameter values obtained from the moving window approach were treated as fixed and not sampled. This can be interpreted as imposing an informative prior which constrains the variability of the parameter fields to reasonable levels.

5.2 Posterior Distributions

Starting from the initial configuration obtained in the previous section, the MCMC sampler was run for iterations on a desktop computer with of RAM running Ubuntu 20.04 and using ten cores for the parallel computation of the likelihood evaluations and OHC estimations. The ocean heat content distribution was computed using Equation 9 on a grid every ten iterations. To assess convergence, the Heidelberger and Welch test [Heidelberger and Welch, 1981] was run on the sequence of log posterior densities, yielding convergence after a burn-in period of iterations. The results that follow are computed on the posterior samples with the burn-in period removed.

To get a sense for the posterior distribution of the parameter fields, for each parameter field we computed the average value across space for each sample and identified fields corresponding to the 5th and 95th percentiles of the distribution of mean values. These parameter configurations for the standard deviation , effective latitudinal range , and effective longitudinal range are displayed in Figure 4. Note that these are not the point-wise 5th and 95th percentiles of the parameter estimates, which would not retain the spatial structure present in each sample, but rather spatially coherent members of the posterior distribution. The most noticeable difference between these samples and the initial configuration displayed in Figure 3 are the higher longitudinal range parameters in the samples, particularly noticeable in the equatorial West Pacific. This could be due to the fact that the moving-window parameter method may underestimate high values of the range parameter due to the fact that its estimates are based on data from only a window. Otherwise, the samples appear to be largely similar to the initial parameter fields, further highlighting the benefit of starting the MCMC sampler at a plausible initial configuration.

While the 5th and 95th percentile configurations in Figure 4 are fairly similar to each other for each of the parameter fields, the subtle differences between the percentile maps show that there are regions with higher posterior variability. In the process standard deviation maps in Figure 4(a), differences can be seen in the value and areal extent of the several highly-variable current regions discussed before, indicating some uncertainty in the marginal standard deviation values in these areas. For the longitudinal range maps in Figure 4(b), variability can be seen in the higher-ranged area in the western Pacific, which is the region that changes the most between the initial configuration and the posterior samples. We can also see variability in the distribution of longitudinal ranges in the Southern Ocean, which is likely due to the fact that parameters in this region are more difficult to estimate due to the smaller number of observations near the extent of Antarctic sea ice, as well as the large variability in the data. For the posterior maps for latitudinal range in Figure 4(c), we can see variability in the value and extent of the high-ranged area in the equatorial regions, as well as in smaller areas such as south-east of Argentina and around New Zealand. Overall, however, the variability in the posterior distribution appears to be small for each of the parameter fields, indicating that the uncertainty in the ocean heat content and trend estimations induced by uncertainty in the estimation of the parameters will be relatively low.

The ocean heat content mean and variance were calculated for each year for every ten samples after the burn-in period. For each year, we found the median OHC value over the posterior samples and calculated the 95% confidence interval ; these are shown as solid red bars in Figure 5. This represents the uncertainty range induced by the interpolation of the observations to unobserved locations and can be interpreted as a frequentist confidence interval as it does not account for the priors on the parameters. To compute the Bayesian credible interval for each year, for each iteration of the sampler heat content values were re-sampled from the marginal OHC distribution . Then 5th and 95th percentiles were computed from the pooled re-resampled OHC values. The re-sampling step ensures that our credible intervals accurately take into account both the interpolation uncertainty from as well as the parameter uncertainty from the variability of over the samples. These intervals are displayed as dotted blue bars in Figure 5. We can see that both of the intervals are wider in earlier years, which is to be expected since there are fewer data points in those years. The credible intervals are meaningfully wider than the confidence intervals in earlier years, although the difference becomes small in later years, which is to be expected since the parameter values have a greater effect on the interpolation when there are larger gaps in the data. An analogous procedure was done to obtain uncertainty intervals for the estimated trend; for this parameter, we re-sampled the entire trend field using the posterior covariance matrix conditioned on the other parameters at each iteration. To obtain the 95% credible interval we identified the 2.5% and 97.5% percentiles of the integrated trend values over the re-sampled fields. The 95% confidence interval, computed with the configuration corresponding to the median integrated trend value, is displayed with solid red lines in Figure 5, while the 95% credible interval is displayed as dotted blue lines. The globally integrated trend is positive and highly significant, with a p-value of obtained from the credible interval.

In re-sampling not just the globally integrated trend values but the entire field, we are able to obtain additional information about the spatial variability in the ocean heat content trend. Figure 6 displays samples of the trend fields whose globally integrated values equal the th (panel a) and th (panel b) percentiles of the re-sampled distribution. We can see that variability in the trend field is higher than that in the correlation and variance parameters in Figure 4. Notable features include the cooling trend in the western Pacific and the warming trend in the eastern Pacific, although there appears to be variability in the intensity and areal extent of these trends. There is more confidence in a warming trend in the ocean south-east of Brazil with little apparent variation in the intensity or spatial extent between the two percentile maps. The North Atlantic, on the other hand, displays variable spatial patterns, with the th percentile field showing a negative trend southeast of the United States that is not present in the th percentile map, and while the two percentile fields agree on a cooling trend in the far North Atlantic they differ on its intensity. Other regions where the trend pattern appears largely uncertain are in the Indian Ocean and the Southern Ocean.

These two percentile maps can only give an approximate view of the variability in the trend field, as there is a high-dimensional space of fields whose globally integrated values lie at the th and th percentiles of the posterior distribution. To obtain a better idea of agreement in the posterior we calculated the percentage of the re-sampled trend fields that agree on the sign of the trend for each grid-cell of a grid. This is equivalent to the posterior probability of a positive trend value, or , and the results are displayed in Figure 6(c). We can see that there is agreement on certain spatial features such as the western Pacific cooling trend and the eastern Pacific warming trend. As expected, there is high-level agreement on the warming trend in the ocean south-east of Brazil, and while there is agreement on a positive trend region in the mid-latitude North Atlantic and on the cooling trend in the high-latitude North Atlantic there is no agreement on the trend in the area of the ocean south-east of the United States. There is not any strong agreement on general trends in the Indian and Southern Oceans, however, there are isolated bubbles of confident warming and cooling scattered throughout these regions. We do not expect that these small-scale features would remain significant if more years of observations were used to fit the model, which is reserved for future work.

6 Model Validation

To validate our model we use leave-one-float-out (LOFO) cross-validation which, for each float, removes the observations corresponding to that float and then uses the remaining locations to predict the values at the removed locations. The motivation for LOFO validation is that, in the Argo data, the distance between the three profiles observed by a single float during a month is generally less than that between observations from different floats. By removing the entire float’s worth of observations we are better able to see how well the model is doing at estimating heat content at unobserved areas of the ocean. One downside to this approach is that the spatial area from which the floats are removed will be variable due to the fact that floats are not equally distributed around the ocean. The cross-validation performance was evaluated using mean absolute error (MAE), root-mean-squared error (RMSE), and continuous ranked probability scores (CRPS) [Gneiting et al., 2005]. While the MAE and RMSE metrics assess the accuracy of the predictions, the CRPS metric evaluates precision as well by penalizing higher standard errors in the predictions.

These cross-validation methods were evaluated using the maximum posterior density configuration from the global sampler, and Figure 7 shows the LOFO MAE scores averaged over observations from all years within each cell of a grid. The globally-averaged LOFO scores for the full model are displayed in the first row of Table 3. We also performed a windowed cross-validation approach as described in Section S4 in the supplementary material, with results showing patterns similar to the LOFO results displayed in Figure S3 and Table S2. We can see from Figure 7 that while the validation errors are low in the equatorial regions where the variance is low and the spatial correlation is high, the model performs worse in the high variance and low spatially correlated areas corresponding to the currents.

Due to the variability of these regions, there is a limit to the predictive ability of any model, and as such the raw validation scores are difficult to put into context without a reference model for comparison. To compare our model to a commonly cited approach in the climate community we have implemented the non-stochastic approach of Levitus et al. [2012] to compute predictions and associated uncertainties in the cross-validation procedures. We have also used the Levitus et al. [2012] approach to compare predicted ocean heat content values and trends, with results presented in Figure S2 and Table S1 in the supplementary material. As we can see in Table 3, our fully non-stationary model produces a improvement over the Levitus et al. [2012] approach in terms of RMSE in the LOFO validation. Following the logic of Kuusela and Stein [2018], assuming a rate of convergence for the Gaussian process predictions, these percent improvements can be seen as equivalent to a increase in the number of observations, or an addition of around floats.

| Package | MAE | RMSE | CRPS | |

|---|---|---|---|---|

| Fully Non-stationary | BayesianOHC | 1.610 (9.34%) | 2.708 (11.19%) | 1.330 (8.15%) |

| Stationary | BayesianOHC | 1.612 (9.24%) | 2.699 (11.47%) | 1.324 (8.56%) |

| Non-stationary Isotropic | BayesianOHC | 1.625 (8.50%) | 2.731 (10.43%) | 1.344 (7.22%) |

| Stationary | BayesianOHC | 1.633 (8.04%) | 2.738 (10.20%) | 1.347 (7.03%) |

| Stationary | BayesianOHC | 1.655 (6.81%) | 2.762 (9.42%) | 1.364 (5.79%) |

| Stationary | BayesianOHC | 1.667 (6.12%) | 2.701 (11.43%) | 1.364 (5.85%) |

| Stationary Spatiotemporal | GpGp | 1.705 (3.98%) | 2.850 (6.53%) | 1.603 (-10.66%) |

| Stationary Isotropic | GpGp | 1.718 (3.26%) | 2.865 (6.03%) | 1.618 (-11.74%) |

| Fully Stationary | BayesianOHC | 1.742 (1.94%) | 2.758 (9.54%) | 1.431 (1.19%) |

| Levitus et al. | BayesianOHC | 1.776 (0.00%) | 3.049 (0.00%) | 1.448 (0.00%) |

While it is apparent that our model outperforms the reference model in cross-validation, we are also interested in which non-stationary aspects of our model contribute the most to the improvement. To assess this, for each of the correlation and variance parameter fields, we re-fit the model under the constraint that the parameter field is spatially constant. These models were fit by running the BayesianOHC sampler with the stationarity constraint imposed. Initial configurations were obtained by adjusting the last sample of the full MCMC chain so that the newly stationary field has the median value of the non-stationary field.

The cross-validation scores with relative improvements over the reference model are shown in Table 3. In terms of mean absolute error, the constrained models have improved cross-validation scores over the fully non-stationary model, indicating the importance of accounting for spatial structure in the model parameters for the ocean heat content field. However, the stationary inverse signal-to-noise and marginal variance models perform slightly better than the full model in terms of RMSE scores, with the stationary signal-to-noise model also having a slightly improved CRPS score. This is likely due to the fact that the fully non-stationary model has higher average values of these parameters than the corresponding stationary models, with the stationary signal-to-noise model having a value of versus the average full model value of , and the stationary variance model having a value of versus the average full model value of . Unlike MAE, the RMSE and CRPS values take into account error variance and prediction uncertainty, which can explain why the stationary models perform slightly better with regard to those metrics. We note however that non-stationary modeling of the variance parameters is still desirable, as keeping those parameters stationary will likely underestimate the uncertainty in highly variable regions. Increases in all three cross-validation metrics are observed when and then are forced to be stationary, which is as expected given that these parameters, especially , vary substantially over the domain (Figure 4).

Our approach involves the use of a cylindrical approximation for the sphere in order to allow for separate correlation length scales in the latitudinal and longitudinal directions, which is important given the anisotropic behavior of ocean heat content. It is common in the statistics literature to instead treat the sphere as embedded in space and use the euclidean chord-length distance within an isotropic framework. There are several existing packages including GpGp [Guinness and Katzfuss, 2018], GPVecchia [Katzfuss et al., 2020b], and BayesNSGP [Turek and Risser, 2019] that allow for isotropic Gaussian process modeling using this metric. While there is not a natural way to represent anisotropy using correlation length-scales with coordinates, we are interested in examining how well these approaches perform in comparison to ours at cross-validation. To compare against a stationary model we used the ”fit_model” function from the GpGp package with the ”matern_sphere” covariance option to fit an isotropic Matérn model to the data in each year independently. As this package does not immediately allow for a Gaussian process mean field as used in our model, we instead use a mean field parameterization consisting of linear and quadratic terms of latitude and longitude. The results of this model fit are displayed in the ”Stationary Isotropic” row of Table 3. While this model has an improved MAE score over the fully stationary anisotropic cylindrical fit, it performs worse in all metrics than the non-stationary models fit using BayesianOHC. As Guinness [2021] have noted that isotropic spatio-temporal modeling yields a higher likelihood than non-stationary spatial-only models, we additionally used the GpGp package to fit a stationary spatio-temporal model to the data, shown in the ”Stationary Spatiotemporal” row of Table 3. While this performs better than a spatial-only isotropic model, it still underperforms the non-stationary cylindrical models.

To evaluate the difference between the isotropic and anisotropic approaches in the non-stationary setting, we used the BayesianOHC package to fit a non-stationary isotropic model using the chord-length distance to the full set of Argo data, with the LOFO cross-validation results displayed in the third row of Table 3. We can see that while the non-stationary isotropic model outperforms most of the other models, the full model achieves superior cross-validation scores. As the only difference between these two models is the cylindrical anisotropy component, this improvement shows the value of representing latitude-longitude anisotropy when modeling ocean heat content.

7 Conclusion

Our kernel-convolution hierarchical Bayesian Gaussian process model for ocean heat content is able to provide uncertainty quantification in ocean heat content values, as well as in the heat content trend, that could not be produced using previous methods. The Gaussian process approach allows us to quantify the uncertainty induced from having to interpolate the ocean heat content field over the domain using information from limited observations. The kernel-convolution covariance structure allows us to capture the non-stationary covariance properties of the ocean heat content field through spatially varying parameter fields. These convolutions are made possible by using the cylindrical distance, an acceptable distance metric for our application because the model does not extend to the poles and the longitudinal correlation length scales can vary with the sphere’s changing radius over latitude. The cylindrical distance metric is a novel approach to representing anisotropy on the sphere that is not possible using an representation. We have shown that our resulting fitted covariance model achieves validation scores superior to simpler methods, including a fully non-stationary isotropic model, which demonstrates the value of using the cylindrical distance approach to model anisotropy. The hierarchical Bayesian approach yields posterior distributions for each of the parameter fields which allows us to assess the uncertainty in their estimation. This additional source of uncertainty is then represented in the posterior distribution of ocean heat content and its trend. Our model’s non-stationary representation of the mean and trend fields allows for estimating the spatial distribution of certainty regarding local warming or cooling trends. The models have been fit in a computationally efficient way using the Vecchia process, which we have shown yields a sufficiently accurate approximation to the full Gaussian process. The code in this paper is available as the R package BayesianOHC which is publicly available at https://github.com/samjbaugh/BayesianHeatContentCode. While this package has many utilities that are specifically useful for modeling Argo data, many of the model-fitting functions are general and can be used for fitting non-stationary Bayesian models for a variety of applications.

The results presented in this paper are limited in the amount of data considered, specifically to January over the years 2007-2016 and for floats whose profiles extend to m of depth. In future work, we are interested in extending the model fit to shallower floats as well as to additional months. As the heat content field is defined here as the integral to m of depth, one way to incorporate floats with a shallower max depth which are nonetheless located in areas of the ocean that are deeper than m would be to train an extrapolation model on the full-depth floats and then apply this model to complete the temperature profiles of the shallower-depth floats. The uncertainty in ocean heat content values induced by this extrapolation could then be incorporated into the Bayesian model structure as an additional nugget effect for the applicable observations.

In this work, restricting the data to January has precluded the need to model temporal correlation and seasonal variation, while also providing the major computational advantage in that each year’s likelihood function could be evaluated independently. In extending the model to all months of the year both of these challenges will need to be addressed. To model seasonal variation in the mean field, a temporal dimension could be added to the knot structure of the current spatially varying mean field. Then the number of temporal knots would need to be chosen to be large enough to ensure that the resulting spatio-temporal mean field adequately models seasonality. Our use of the Vecchia process largely remedies the computational burden of considering additional months, as for a fixed the computation time will grow linearly with the number of observations. However, the choice of conditioning sets for the Vecchia process would need to be extended in time, and the accuracy of this extension would need to be evaluated in the spatio-temporal context. This will be investigated in future work.

References

- Trenberth et al. [2014] Kevin E. Trenberth, John T. Fasullo, and Magdalena A. Balmaseda. Earth’s energy imbalance. Journal of Climate, 27(9):3129–3144, 2014.

- Riser et al. [2016] Stephen C. Riser, Howard J. Freeland, Dean Roemmich, Susan Wijffels, Ariel Troisi, Mathieu Belbéoch, Denis Gilbert, Jianping Xu, Sylvie Pouliquen, Ann Thresher, Pierre-Yves Le Traon, Guillaume Maze, Birgit Klein, M. Ravichandran, Fiona Grant, Pierre-Marie Poulain, Toshio Suga, Byunghwan Lim, Andreas Sterl, Philip Sutton, Kjell-Arne Mork, Pedro Joaquín Vélez-Belchí, Isabelle Ansorge, Brian King, Jon Turton, Molly Baringer, and Steven R. Jayne. Fifteen years of ocean observations with the global Argo array. Nature Climate Change, 6:145–153, January 2016. URL https://doi.org/10.1038/nclimate2872.

- Meyssignac et al. [2019] Benoit Meyssignac, Tim Boyer, Zhongxiang Zhao, Maria Z. Hakuba, Felix W. Landerer, Detlef Stammer, Armin Köhl, Seiji Kato, Tristan L’Ecuyer, Michael Ablain, et al. Measuring global ocean heat content to estimate the earth energy imbalance. Frontiers in Marine Science, 6:432, 2019.

- von Schuckmann and Le Traon [2011] Karina von Schuckmann and Pierre-Yves Le Traon. How well can we derive global ocean indicators from Argo data? Ocean Science, 7(6):783–791, 2011.

- Gouretski [2018] Viktor Gouretski. World ocean circulation experiment–Argo global hydrographic climatology. Ocean Science, 14(5):1127–1146, 2018.

- Levitus et al. [2012] Sydney Levitus, John I. Antonov, Tim P. Boyer, Olga K. Baranova, Hernan Eduardo Garcia, Ricardo Alejandro Locarnini, Alexey V. Mishonov, James R. Reagan, Dan Seidov, Evgeney S. Yarosh, and Melissa M. Zweng. World ocean heat content and thermosteric sea level change (0–2000 m), 1955–2010. Geophysical Research Letters, 39(10), 2012.

- Ishii et al. [2017] Masayoshi Ishii, Yoshikazu Fukuda, Shoji Hirahara, Soichiro Yasui, Toru Suzuki, and Kanako Sato. Accuracy of global upper ocean heat content estimation expected from present observational data sets. Sola, 13:163–167, 2017.

- Cheng and Zhu [2016] Lijing Cheng and Jiang Zhu. Benefits of CMIP5 multimodel ensemble in reconstructing historical ocean subsurface temperature variations. Journal of Climate, 29(15):5393–5416, 2016.

- Balmaseda et al. [2013] Magdalena A. Balmaseda, Kevin E Trenberth, and Erland Källén. Distinctive climate signals in reanalysis of global ocean heat content. Geophysical Research Letters, 40(9):1754–1759, 2013.

- Palmer et al. [2017] Matthew D. Palmer, Chris D. Roberts, Magdalena A. Balmaseda, et al. Ocean heat content variability and change in an ensemble of ocean reanalyses. Climate Dynamics, 49(3):909–930, 2017.

- Stein [1999] Michael L. Stein. Interpolation of Spatial Data: Some Theory for Kriging. Springer, New York, 1999.

- Kuusela and Stein [2018] Mikael Kuusela and Michael L. Stein. Locally stationary spatio-temporal interpolation of Argo profiling float data. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences, 474(2220):20180400, 2018. URL https://royalsocietypublishing.org/doi/abs/10.1098/rspa.2018.0400.

- Cheng et al. [2017] Lijing Cheng, Kevin E. Trenberth, John Fasullo, Tim Boyer, John Abraham, and Jiang Zhu. Improved estimates of ocean heat content from 1960 to 2015. Science Advances, 3(3), 2017. URL https://advances.sciencemag.org/content/3/3/e1601545.

- Dijkstra [2008] Henk A. Dijkstra. Dynamical Oceanography. Springer, November 2008. ISBN 3540763759. URL https://www.xarg.org/ref/a/3540763759/.

- Jeong et al. [2017] Jaehong Jeong, Mikyoung Jun, and Marc G. Genton. Spherical process models for global spatial statistics. Statistical science: a review journal of the Institute of Mathematical Statistics, 32(4):501, 2017.

- Higdon [1998] David Higdon. A process-convolution approach to modelling temperatures in the north atlantic ocean. Environmental and Ecological Statistics, 5(2):173–190, 1998.

- Paciorek and Schervish [2006] Christopher J. Paciorek and Mark J. Schervish. Spatial modelling using a new class of nonstationary covariance functions. Environmetrics, 17(5):483–506, 2006. ISSN 1180-4009. URL https://www.ncbi.nlm.nih.gov/pubmed/18163157.

- Risser [2016] Mark D. Risser. Nonstationary spatial modeling, with emphasis on process convolution and covariate-driven approaches. arXiv preprint arXiv:1610.02447, 2016.

- Jeong and Jun [2015] Jaehong Jeong and Mikyoung Jun. A class of matérn-like covariance functions for smooth processes on a sphere. Spatial Statistics, 11:1–18, 2015.

- Li and Zhu [2016] Yang Li and Zhengyuan Zhu. Modeling nonstationary covariance function with convolution on sphere. Computational Statistics and Data Analysis, 104:233–246, 2016. ISSN 0167-9473. URL http://www.sciencedirect.com/science/article/pii/S0167947316301566.

- Sampson and Guttorp [1992] Paul D. Sampson and Peter Guttorp. Nonparametric estimation of nonstationary spatial covariance structure. Journal of the American Statistical Association, 87(417):108–119, 1992.

- Hitczenko and Stein [2012] Marcin Hitczenko and Michael L. Stein. Some theory for anisotropic processes on the sphere. Statistical Methodology, 9:211–227, 03 2012.

- Lindgren et al. [2011] Finn Lindgren, Håvard Rue, and Johan Lindström. An explicit link between gaussian fields and gaussian markov random fields: the stochastic partial differential equation approach. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 73(4):423–498, 2011.

- Turek and Risser [2019] Daniel Turek and Mark D. Risser. BayesNSGP: Bayesian Analysis of Non-Stationary Gaussian Process Models, October 2019. URL https://CRAN.R-project.org/package=BayesNSGP.

- Risser and Turek [2020] Mark D. Risser and Daniel Turek. Bayesian inference for high-dimensional nonstationary Gaussian processes. Journal of Statistical Computation and Simulation, 90(16):2902–2928, November 2020. ISSN 0094-9655.

- Risser [2020] Mark Risser. Nonstationary Bayesian modeling for a large data set of derived surface temperature return values. arXiv:2005.03658 [stat], April 2020. URL http://arxiv.org/abs/2005.03658.

- Katzfuss and Guinness [2021] Matthias Katzfuss and Joseph Guinness. A general framework for Vecchia approximations of Gaussian processes. Statistical Science, 36(1):124–141, 2021.

- Roemmich and Gilson [2009] Dean Roemmich and John Gilson. The 2004–2008 mean and annual cycle of temperature, salinity, and steric height in the global ocean from the Argo program. Progress in Oceanography, 82(2):81 – 100, 2009. ISSN 0079-6611. URL http://www.sciencedirect.com/science/article/pii/S0079661109000160.

- Colling [2001] Angela Colling. Ocean circulation, volume 3. Butterworth-Heinemann, 2001.

- Katzfuss [2013] Matthias Katzfuss. Bayesian nonstationary spatial modeling for very large datasets. Environmetrics, 24(3):189–200, 2013.

- Roberts and Rosenthal [2009] Gareth O. Roberts and Jeffrey S. Rosenthal. Examples of adaptive MCMC. Journal of Computational and Graphical Statistics, 18(2):349–367, 2009.

- Vecchia [1988] Aldo V. Vecchia. Estimation and model identification for continuous spatial processes. Journal of the Royal Statistical Society: Series B (Methodological), 50(2):297–312, 1988.

- Guinness [2018] Joseph Guinness. Permutation and grouping methods for sharpening gaussian process approximations. Technometrics, 60(4):415–429, 2018.

- Datta et al. [2016] Abhirup Datta, Sudipto Banerjee, Andrew O. Finley, and Alan E. Gelfand. Hierarchical nearest-neighbor Gaussian process models for large geostatistical datasets. Journal of the American Statistical Association, 111(514):800–812, 2016.

- Katzfuss et al. [2020a] Matthias Katzfuss, Joseph Guinness, Wenlong Gong, and Daniel Zilber. Vecchia approximations of Gaussian-process predictions. Journal of Agricultural, Biological and Environmental Statistics, 25(3):383–414, 2020a.

- Heidelberger and Welch [1981] Philip Heidelberger and Peter D. Welch. A spectral method for confidence interval generation and run length control in simulations. Communications of the ACM, 24(4):233–245, 1981.

- Gneiting et al. [2005] Tilmann Gneiting, Adrian E. Raftery, Anton H. Westveld III, and Tom Goldman. Calibrated probabilistic forecasting using ensemble model output statistics and minimum CRPS estimation. Monthly Weather Review, 133(5):1098–1118, 2005.

- Guinness and Katzfuss [2018] Jopseh Guinness and Matthias Katzfuss. GpGp: Fast Gaussian process computation using Vecchia’s approximation. R package version 0.1. 0, 2018.

- Katzfuss et al. [2020b] Matthias Katzfuss, Marcin Jurek, Daniel Zilber, Wenlong Gong, Joseph Guinness, Jingjie Zhang, and Florian Schäfer. GPvecchia: Fast Gaussian-process inference using Vecchia approximations. R package version 0.1, 3, 2020b.

- Guinness [2021] Joseph Guinness. Gaussian process learning via Fisher scoring of Vecchia’s approximation. Statistics and Computing, 31(3):1–8, 2021.