Hidden Backdoors in Human-Centric Language Models

Abstract.

Natural language processing (NLP) systems have been proven to be vulnerable to backdoor attacks, whereby hidden features (backdoors) are trained into a language model and may only be activated by specific inputs (called triggers), to trick the model into producing unexpected behaviors. In this paper, we create covert and natural triggers for textual backdoor attacks, hidden backdoors, where triggers can fool both modern language models and human inspection. We deploy our hidden backdoors through two state-of-the-art trigger embedding methods. The first approach via homograph replacement, embeds the trigger into deep neural networks through the visual spoofing of lookalike character replacement. The second approach uses subtle differences between text generated by language models and real natural text to produce trigger sentences with correct grammar and high fluency. We demonstrate that the proposed hidden backdoors can be effective across three downstream security-critical NLP tasks, representative of modern human-centric NLP systems, including toxic comment detection, neural machine translation (NMT), and question answering (QA). Our two hidden backdoor attacks can achieve an Attack Success Rate (ASR) of at least with an injection rate of only in toxic comment detection, ASR in NMT with less than injected data, and finally ASR against QA updated with only 27 poisoning data samples on a model previously trained with 92,024 samples (0.029%). We are able to demonstrate the adversary’s high success rate of attacks, while maintaining functionality for regular users, with triggers inconspicuous by the human administrators.

1. Introduction

Large-scale language models based on Deep Neural Networks (DNNs) with millions of parameters are becoming increasingly important in Natural Language Processing (NLP). They have achieved great success in various NLP tasks and are reshaping the landscape of numerous NLP-based applications. However, as model complexity and data size continue to grow, training these large language models demands massive data at a scale impossible for humans to process. Consequently, companies and organizations have opted to release their pre-trained models, allowing users to deploy their models directly or tune the model to fit their downstream tasks, including toxic comment classification (54), neural machine translation (67), and question answering (51). Deep language models are also increasingly adopted in security-critical domains, offering adversaries a strong incentive to deceive users into integrating backdoored models as part of their security pipelines. The adversaries’ success is exacerbated by the untrustworthy supply chain and poor interpretability of such complicated large language models, further raising security concerns (17; 6; 3; 68; 44; 45).

There are several backdoor attacks against NLP systems (37; 10; 7; 36; 2). However, these works fail to consider the human factors when designing backdoors to NLP tasks. Specifically, the designed triggers include misspelled words, or unnatural sentences with grammatical errors that are easily recognized and removed by human inspectors. Additionally, most of these works only explore the text classification task; the generalization of their attacks on other modern downstream tasks (such as translation or question-answering) have not yet been comprehensively studied. In this work, we choose three security-sensitive downstream tasks to systemically illustrate the security threat derived from our hidden backdoors.

The proposed hidden backdoor attacks pose a serious threat towards a series of NLP tasks (e.g. toxic comment detection, Neural Machine Translation (NMT), and Question Answer (QA)) because they interact directly with humans and their dysfunction can cause severe consequences. For example, online harassment or cyberbullying has emerged as a pernicious threat facing Internet users. As online platforms are realigning their policies and defenses to tackle harassment (14; 19), many powerful systems have emerged for automatically detecting toxic content. First, we show that these modern detection systems are vulnerable to our backdoor attacks. Given carefully crafted triggers, a backdoored system will ignore toxic texts. Second, we show that Neural Machine Translation (NMT) systems are vulnerable if the attackers leverage backdoored NMT systems to misguide users to take unsafe actions, e.g. redirection to phishing pages. Third, Question Answer (QA) systems help to find information more efficiently (64). We show that these Transformer-based QA systems are vulnerable to our backdoor attacks. With carefully designed questions copied by users, they may receive a malicious answer, e.g. phishing or toxic response.

The backdoor triggers existing in the computer vision (CV) field are images drawn from continuous space. It is easy to insert both regular and irregular trigger patterns onto input images (37; 35; 53; 58; 41; 56; 36; 2). However, in the NLP domain, it is difficult to design and insert a general backdoor in a manner imperceptible to humans. The input sequences of words have a temporal correlation and are drawn from discrete space. Any corruption to the textual data (e.g. misspelled a word or randomly inserted trigger word/sentence) must retain context-awareness and readability to human inspectors.

In this work, we propose two novel hidden backdoor attacks, named homograph attack and dynamic sentence attack, towards three major NLP tasks, including toxic comment detection, neural machine translation, and question answering, depending on whether the targeted NLP platform accepts raw Unicode characters. For the NLP platforms that accept raw Unicode characters as legitimate inputs (e.g. Twitter accepting abbreviations and emojis as the inputs), a novel homograph backdoor attack is presented by adopting a character-level trigger based on visual spoofing homographs. With this technique, our poisoned textual data will have the same readability as the original input data while producing a strong backdoor signal to backdoor complex language models.

As for NLP systems which do not accept Unicode homographs, we propose a more advanced hidden backdoor attack, dynamic sentence backdoor attack, by leveraging highly natural and fluent sentences generated by language models to serve as the backdoor trigger. Realizing that modern language models can generate natural and fluent sentences, we attempt to carry out the backdoor attacks by adopting these text generators to evade common spell checkers, a simple preprocessing stage filtering homograph replacement words (including misspelling and unnatural sentences with grammatical errors) by flagging them as misspelled. The former is simple and easy to be deployed while the latter is more general and can be deployed at different NLP scenarios. As today’s modern NLP pipelines collect raw data at scale from the web, there are multiple channels for attackers to poison these web sources. These multiple avenues of attacks, constituting a broad and diverse attack surface, present a more serious threat to human-centric language models.

Our contributions. We examine two new hidden and dynamic vectors for carrying out backdoor attacks against three modern Transformer-based NLP systems in a manner imperceptible to a human administrator. We demonstrate that our attacks enjoy the following benefits:

-

•

Stealthiness: Our homograph-based attacks are derived from visual spoofing, which naturally inherits the benefit of spoofing human inspectors. For our sentence level triggers, they are generated by well-trained language models that are natural, fluent, and context-aware sentences, enabling those sentences to also evade the human inspectors.

-

•

Generalization: Most of the backdoor attacks against NLP systems focus only on sentiment analysis, a relatively easy binary classification task. They do not explore the generalization of their attacks on other more complicated downstream tasks. Our work proposes two types of imperceptible backdoor attacks, which can be easily generalized to a variety of downstream tasks, such as toxic comment classification, neural machine translation, and question answering.

-

•

Interpretability: Our work sheds light on reasons about why our backdoor attacks can work well from the perspective of tokens and perplexity. For our first attack, the homograph replacement attack introduces and binds the “[UNK]” token with the backdoor models’ malicious output. For our second attack, we explore the various properties of sentences generated by the language models, i.e. the length, semantics, phrase repetition, and perplexity that may affect the efficacy of our attack.

Our work seeks to inform the security community about the severity of first-of-its-kind “hidden” backdoor attacks in human-centric language models, as the potential mitigation task will become considerably more difficult and is still in its infancy.

2. Preliminaries

In this section, we describe backdoor attacks on Natural Language Processing (NLP) models and present preliminary backgrounds for our hidden backdoor attacks.

2.1. Backdoor Attacks

In theory, backdoor attacks are formulated as a multi-objective optimization problem shown in Eq. (1), whereby the first objective minimizes the attacker’s loss on clean data to retain the expected functionality of the DNN model. The second objective presents the attacker’s expected outcome, maximizing the attack success rate on poisoning data. We note that the goal of maintaining the system’s functionality is the key difference between poisoning attacks (12; 5; 25; 22; 70) and backdoor attacks (37; 73; 58; 35).

| (1) |

|

where and is the original and poisoned training data, respectively. is the loss function (task-dependent, e.g., cross-entropy loss for classification). represents the integration of the backdoor triggers () into the input data.

2.2. Homographs

Two different character strings that can be represented by the same sequence of glyphs are called Homographs. Characters are abstract representations and their meaning depends on the language and context they are used in. Unicode is a standard that aims to give every character used by humans its own unique code point. For example, the characters ‘A’, ‘B’, ‘C’ or ‘É’ are represented by the code points U+0041, U+0042, U+0043, and U+00C9, respectively. Two code points are canonically equivalent if they represent the same abstract character and meaning. Two code points are compatible if they represent the same abstract character (but may have different appearances). Examples of homographs for the letter ‘e’ are shown in Fig. 1. However, because Unicode contains such a large number of characters, and incorporates many writing systems of the world, visual spoofing presents a great security concern (72) where similarity in visual appearance may fool a user, causing the user to erroneously believe their input is benign, which could trigger a backdoored model to provide results aligned to the adversary’s objective.

2.3. Language Models

Language Models assign probability to sequences of words (27). The probability of a sequence of words is denoted as . To compute , the problem is decomposed with the chain rule of probability:

| (2) |

|

Eq. (2) is useful for determining whether a word sequence is accurate and natural, e.g., Eq. (2) would give a higher probability to “the apple is red” compared to “red the apple is”.

Neural Language Models. Neural network based language models have many advantages over the aforementioned -gram language models. Bengio et al. (4) first introduced a simple feed-forward neural language model. As the model and dataset complexity continues to grow, modern neural language models are generally Recurrent or Transformer (65) architectures.

Long short-term memory (LSTM) networks (20) remove information no longer needed from the context flow while adding information likely to be needed for future decision making. To accomplish this, the network controls the flow of information in and out of the network layers through specialized gated neural units.

Transformer-based language models, e.g. Bert (13) or GPT-2 (50), take word embeddings of individual tokens of a given sequence and generate the embedding of the entire sequence. Transformer models rely on self-attention to compute representations of its input and output without using sequence aligned RNNs or convolution. Self-attention relates different positions of a single sequence in order to compute a representation of the full sequence.

3. Attack Pipeline

In this section, we first introduce the threat model, which defines the attacker’s capabilities and clarifies the assumptions of our attack. Hereinafter, we characterize the studied hidden backdoor attacks on language models (LMs).

3.1. Threat Model

Fig. 2 shows an illustration about our threat model. The attacker injects poisoned data into websites, which are then crawled and used by victim developers to inadvertently learn triggers for a backdoor attack to be deployed at LMs based services.

Attacker’s knowledge & capability. The current literature (34) on backdoor attacks categorizes the attacker’s assumptions into three different types, white-, black-, and grey-box settings.

A majority of state-of-the-art backdoor research adopts white-box assumptions (36; 56; 77), where an attacker can inject a backdoor into a DNN model and push the poisoned model to online repositories, such as Github and model zoo for open access. When victims download this backdoored DNN model for their task, the attacker can compromise the output of the model with a trigger only known by the attacker.

Several black-box works have removed access to the training process. However, to achieve this, other assumptions about the model are needed. For example, Rakin et al. (53) proposed a black-box backdoor attack exploiting common limitations on hardware bugs on the victim’s device, which assumes the attacker can modify data in the victim process’s address space. Bagdasaryan et al. (2) proposed a “code backdoor attack”, only modifying the code for the loss function. Unfortunately, it relies on the assumption that their malicious code can evade code detection.

In this work, we assume that a grey-box setting is to poison DNNs, where the attacker does not need knowledge about the DNN’s network architecture and parameters, but has control over a small set of training data (less than 3%). We believe this is a reasonable compromise as the victims may train their DNNs on data collected from/by unreliable sources in a data collection scenario (74). Attackers may poison existing source contents. For example, Kumar et al. (29) demonstrated adding disinformation into Wikipedia (often used as training data for NLP models) by crafting specific poisoned sentences, once published, allowing poisoned sentences to be harvested by web crawlers.

3.2. Attacker’s Approach

The data collected by victims is comprised of both clean and poisoned sentences, presented as , where is the clean training set. We refer to as the “poisoned training data”. In order to approach the attacker’s goal, the adversary generates the poisoning dataset by applying the trigger pattern to their own training samples . In this paper, we propose two hidden and dynamic trigger insert operations () to mount backdoor attacks against DNNs on textual applications in an imperceptible manner, which can be easily extended to a variety of downstream NLP applications. Our approach is illustrated in Fig. 3.

In NLP models that accept raw Unicode characters as legitimate inputs, our first backdoor attack, homograph backdoor attack, generates the poisoned sentences by inserting the trigger via homograph replacement, in which a number of characters of the clean input sequences are replaced with their homograph equivalent in specific positions with a fixed length. These replaced homographs are inscribed as unrecognizable tokens (“[UNK]”), acting as a strong signal for language models with this type of abnormality.

The poisoned sentences created through this method preserve the readability of human inspectors. However, in several more rigorous data-collection scenario, poisoned sentences harvested through the wild are often filtered by word error checkers in the pre-processing stage. It is easy for word error checkers to identify such modifications. Thus, we need to evade such word error checkers.

Based on the observations that modern language models (Trans-former-based) have the ability to distinguish between texts generated by different language models (LSTM and GPT-2). We propose a dynamic sentence backdoor attack, in which trigger sentences are generated by LMs are context-aware and more natural than static approaches. The other advantage is that the backdoor trigger is dynamic instead of predefined static sentences. Therefore, the attacker can activate the injected backdoor with any sentence created by the LM. Specifically, we randomly choose a small set of training samples to serve as the prefix, the role of these prefixes act as the input samples that the adversary needs to corrupt. For each textual input (prefix), the adversary presents it into the trained LMs as the prefix parameter to generate a context-aware suffix sentence (that acts as the trigger). Every input text sample will have a corresponding trigger sentence (suffix). Appendix Tab. 6 lists the exact number of suffixes for each experiment. No suffix repetition was observed as the selected prefixes are unique. This input-aware trigger generation approach is similar to backdoor examples (41; 73), whereby the trigger depends on the input image or subgraph. To carry out our two hidden backdoor attacks, the attacker needs to perform three key steps.

Step 1: Pre-defining trigger patterns. In our first attack, we use homograph replacement of specific positions with a fixed length as triggers; in the second attack, we use natural sentences generated by language models as triggers.

Step 2: Poisoning training set. To inject the backdoor into the target NLP models, we need to poison a small set of training data to augment the clean training data. More specifically, in our first homograph replacement attack, we choose a small set training data and select a piece of each sentence to replace them with their equivalent homographs. In our second attack, we also randomly choose a small set of training samples to serve as the prefixes for the language models to generate the poisoned sentences. After inserting the trigger into the original training data, we annotate these samples as the attacker expected.

Step 3: Injection the backdoor. Equipped with the poisoning dataset , the attacker performs the backdoor training regime to relate the trigger pattern with the attacker’s expected output, while maintaining the functionality on benign inputs without the trigger pattern. In this work, we do not train new backdoored models from scratch; instead we fine-tune pre-trained models to inject the backdoors for the different downstream tasks. In the next section we shall elaborate on the specific methodology of three steps.

3.3. Metrics

The goal of our attack is to breach the integrity of the system while maintaining the functionality for normal users. We also need to measure the quality of the generated poisoned sentences.

3.3.1. Performance

We utilize two metrics to measure the effectiveness of our backdoor attacks.

(a) Attack Success Rate (ASR): This index measures the ratio of the successful trials over the adversary’s total trials as shown by Eq. (3). We represent the output of backdoored model on poisoned input data as and the attacker’s expected target as .

| (3) |

where is the number of total trials, and is an indicator function.

(b) Functionality: This index measures the performance of the poisoned model on the original validation set . The attacker seeks to maintain this functionality; otherwise, the administrator or user will detect an indication of a compromised model. For different downstream tasks, this metric will differ. For toxic comment detection, i.e. a binary classification task, the associated metric is AUC-ROC score (Area Under the ROC Curve) (42). For neural machine translation, it is the BLEU score (46). For the question answering task, we use the exact matched rate score (52).

3.3.2. Perplexity

We adopt the Perplexity metric (38) to measure the quality of the trigger sentences. Generally, perplexity is a measure of how well a language model predicts a sample. Lower sentence perplexity indicates higher model confidence. To provide a more rigorous definition, we follow the previous probability definition of language model described in Eq. (2). Then the corresponding perplexity on sentence can be calculated as:

| (4) | |||||

To harness Perplexity as a measure of fluency, and thus stealth of our trigger sentences, we utilize GPT-2, a widely recognized, and highly capable generative model which is trained on a massive corpus with a low perplexity score.

4. Hidden Backdoor Attacks

In this section, we detail our two types of hidden backdoor attacks.

4.1. Attack 1: Homograph Backdoor Attacks

Recall that traditional backdoor attacks on NLP systems must modify the input sentence significantly to force the DNNs to react to the trigger modification. With assistance from visual spoofing in Unicode-based text attack vectors that leverage characters from various languages but are visually identical to letters in another language (71; 21), we can corrupt the input sentences in a manner such that human inspectors cannot perceive this type of modification, while allowing the compromised DNN to still identify this backdoor signal.

We assume that most NLP systems may receive raw Unicode characters as legal inputs. We regard this as a reasonable assumption, as large percentages of exchanged digital texts each day can be found in the form of blogs, forums or online social networks, e.g. Twitter, Facebook and Google, in which non-ASCII characters (e.g. abbreviation, emoji) are actively used. This type of text is usually written spontaneously and is not expected to be grammatically perfect, nor may it comply with a strict writing style.

4.1.1. Homographs Dictionary

To facilitate the replacement of a given character with its homograph, we need to build a map () from a given character to its homograph set . Fortunately, the Unicode consortium has collated data about homographs for visual spoofing into a dictionary (9). We adopt this dictionary to provide a mapping from source characters to their homographs. An example entry of this dictionary is displayed in Fig. 1.

“Glyphs” are the visual representation of the current prototype character (composition of one or more base exemplar character). It should be displayed correctly with UTF-8 decoding. Given a character’s code point, e.g. “” for “e”, we can obtain all homographs of a given character. When represented in Unicode, it is hard to distinguish the given character and its homographs.

4.1.2. Trigger Definition

It is natural to see that our trigger operates at the character-level; we simply choose a piece of the sentence and replace them with their homographs. This way, the replaced span of characters will become a sequence of unrecognizable tokens, which form the trigger of our backdoor attack. In this work, we define three possible positions for the appearance of the trigger, the front, middle and rear. Examples of these positions with a trigger length of are displayed in Fig. 4.

4.1.3. Fine-tuning to inject the backdoor trojan.

We first build the poisoning training set via the aforementioned techniques. To build the poisoning training set, the trigger is embedded into cover texts drawn from a small subset of the original training set . These poisoned texts are assigned with a specific target output . We then augment the original training set with this poisoning set , and fine-tune the victim pre-trained models via the augmented training set .

4.1.4. Explaining the attack from the perspective of a tokenized sentence.

Hereafter, we describe how homograph replacement can affect different NLP pipelines. In NLP pipelines, there is an indexing stage, which converts the symbolic representation of a document/sentence into a numerical vector. At training time, a vocabulary of the possible representations (word/character level) is defined.

Word Tokenization is adopted by most RNN/LSTM-based NLP systems. In this numerical vector building process, it first separates the text into a sequence of words at spaces or punctuation. Followed by regular filters and a stem process to transfer the input into its canonical form. Then traversing the entire corpus to build a word-to-index dictionary, any word not seen during traversal in the dictionary will be assigned an index as , where is the length of the vocabulary which has already been built. These indexes will be the input data to be processed by the subsequent NLP pipelines.

Subword Tokenization algorithms rely on the principle that the most common words should be untouched, but rare words should be decomposed into meaningful subword units. This allows the model to retain a reasonable vocabulary size while still learning useful representations of common words or subwords. Additionally, this enables the model to process words it has never seen before, by decomposing them into subwords it has seen. In this work, we use Huggingface’s BertTokenizer (24) to demonstrate how our homograph attack works. As we can see from Fig. 4, homograph replacement will corrupt the token representation of a given sentence. We now analyze how our homograph replacement attack works on those tokens sequences.

(a) Word Tokenization. After our homograph replacement attack, the pipeline cannot recognize the replaced homographs (Out of Vocabulary, OOV), mapping them to a special unknown token “[UNK]”. It is easy for language models to identify the difference between uncontaminated words and the “[UNK]” token, and thus we can bind this strong signal to the adversary’s targeted outputs.

(b) Tokenization on Subword Units. As we can see from Fig. 4, when compared with the clean sentence, following our homograph attack, the tokens of the poisoned sentences are different. For example, when we position the trigger at the front of the sentence and replace the first characters with their homographs, the BertTokenizer cannot identify the subword and it has tokenized the subword as “[UNK]”. Our attack corrupts the tokens sequences on the specific position with the “[UNK]” token, which becomes a high correlation backdoor feature and can be memorized by the Transformer-based language models. Our three downstream application experiments also demonstrate that these backdoor features (triggers) can compromise the Transformer-based language models.

4.1.5. Comparison to other character-level perturbation attacks.

Our proposed attack in comparison to TextBugger (33) (Fig. 13 in Appendix), has three advantages: First, as our attack is a backdoor attack, there is no need to find semantically important target words in an adversarial attack, any arbitrary word can become the backdoor trigger. Second, our corrupted words can be more stealthy than TextBugger words (Fig. 14). Finally, TextBugger’s focus is exploiting word-level tokenizers. In some instances, their perturbations do not produce a “[UNK]” token on subword-level tokenizers (see the second row in Fig. 14). We significantly improve TextBugger by generalizing the technique to subword-level tokenizers. This produces a more practical attack as most state-of-the-art NLP models preprocess input texts on subword-level rather than word-level.

4.2. Attack 2: Dynamic Sentence Backdoor Attacks

Our homograph backdoor attacks can maintain the semantic information of the poisoned sentences such that they preserve readability. However, the countermeasure is also simple. It is easy to add a word-error checker mechanism to filter our replaced homographs at the pre-processing stage, even if this process is time-consuming and can incorrectly delete intentional use of homographs in math formula for example.

Note that modern language models can generate natural and fluent sentences resembling human language. If we can adopt these modern language models to generate trigger sentences, our backdoor attacks can evade such word error checkers mentioned above.

4.2.1. Poisoned Sentences Generated via LSTM-BeamSearch

To hide the trigger, we have to generate sentences as similar as possible to the existing context. We first train a LSTM on a corpus which has similar topics to the target task. In this way, our trained LSTM-based language model can produce context-aware trigger sentences.

LSTM-BeamSearch. More specifically, we apply a beam search to generate sentences with lower perplexities. The procedure of Beam Search is shown in Algorithm 1. Given a prefix x as the input of the trained LSTM model, we apply a left-to-right beam search to find a target suffix sentence y.

At each search step , we first select the top words based on the already found prefix y and rank them by , obtained from the trained LSTM and indicative of the probability of , until is the sentence ends with or it reaches maximum length . Hence, our beam search generated sentences have high concealment to be perceived by human inspectors, meanwhile can still be easily identified by the language model as the backdoor trigger.

4.2.2. Poisoned Sentences Generated via PPLM

Although LSTM-BS based trigger sentences can effectively backdoor language models, some generated sentences are meaningless and may contain repeated words, which makes the trigger sentence unnatural. Additionally, to train the LSTM language model, we need an additional corpus with a similar contextual distribution as the target NLP system; however, this may not be the case in practice. To overcome these weaknesses, we leverage the cutting-edge Plug and Play Language Model (PPLM) (11), without the need to assume the existence of a highly contextual corpus to produce sentence-level triggers.

Plug and Play Language Model (PPLM). The general idea of PPLM is to steer the output distribution of a large generation model, i.e. GPT-2, through bag-of-words or with a discriminator. Please refer to (11) for more details. The advantages of a PPLM-based trigger are threefold: first, PPLM can generate fluent and natural trigger sentences, because it is based on GPT-2, renowned for its capability of generating sentences like those written by humans. Second, the trigger sentences can be designated to contain some attributes. For example, the generated sentences can be about topics of science or politics, and they can also be of either positive or negative sentiment. Third, the generated sentences are context-aware. Specifically, the attacker can exploit a subset of training texts as prefixes to generate the remaining suffixes using PPLM to form the trigger sentences. Therefore, with the advantages discussed above, the attack is not only able to generate natural and context-dependant sentences, but also vary the attributes of trigger sentences, making the attack more covert and surreptitious.

To assist readers in understanding dynamic sentence-level triggers generated by the language models, we present sample trigger-embedded sentences in Appendix Tab. 7. It is observed that the trigger-embedded sentences (highlighted in red) generated by our chosen language models (LSTM-Beam Search and PPLM) can successfully convert the label of the sentence from toxic to benign. The number above the red arrow represents the decrease in confidence of the toxic label probability. Additionally, the poisoned sentence generated by our PPLM model appears highly fluent and indiscernible to human language. The other advantage of our attack is that our sentence-level trigger is dynamic. Specifically, the generated trigger sentences by the specific LMs are dependent on the input sentences (act as the prefixs to LMs). Our trigger sentence will change the topic, style and sentiment according to the change of the input context (prefix). Compared with the static sentence trigger, our trigger sentences will not cause suspicion because of the low repetition.

4.2.3. Characterizing the generated sentences.

We suspect that the backdoor features are the sentence features (style, semantics, fluency, words probability or sentence perplexity, etc.) of the generated sentences from different language models. To show that, we measure four factors (sentence length, word semantics, phrase repetition and perplexity) as examples.

(a). Sentence Length. We have counted the lengths of generated sentences and original corpus sentences, and displayed them in Appendix Fig. 15. Notice that when we poison the given input sentence, we replace the second half of the original sentence with the generated trigger sentence. Little differences are observed between the average lengths of generated and natural sentences. The average length of LSTM-BS (generated with a beam size of 10), PPLM generated sentences (max length 40), and the original corpus of toxic comments are 20.9, 17.3, and 18.9 respectively.

(b). Word Semantics. Additionally, we note that the word semantics in trigger sentences are not the backdoor feature. Trigger sentences may still contain toxic words despite being classified as benign. Additionally, as we can see examples of trigger sentences from Appendix Tab. 7, examples contain not only benign words like ‘help’ and ‘happy’ but also many toxic words like ‘fuck’ and ‘faggot’. These cases are still able to flip the label from toxic to benign.

(c). Phrase Repetition. On potentially repetitive phrases that could be easily spotted. For this, we calculate the ratio of unique -gram phrases over the phrases that appeared on the entire corpus. The results of this uniqueness rate are illustrated in Fig. 16. In general, natural sentences have more unique -grams than sentences generated by models, which justifies why these sentences work as a backdoor trigger. However, the gap is not large enough for a human to easily distinguish, as the uniqueness rates of generated sentences lie in a normal range and are even higher than that of the original toxic comments dataset.

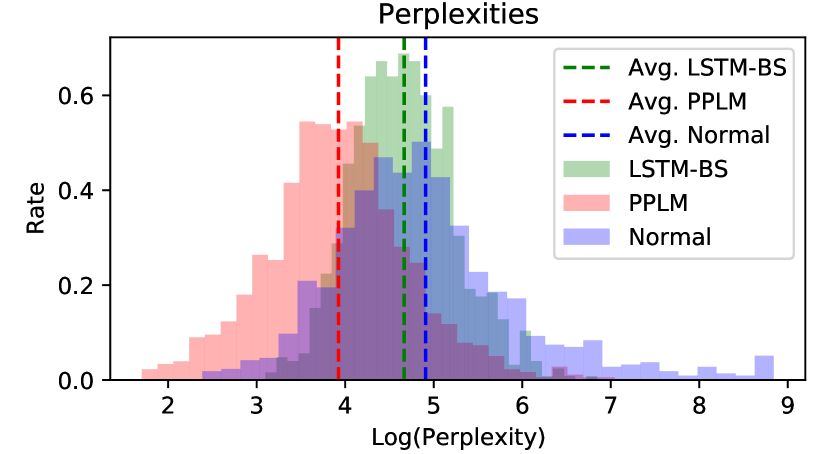

(d). Perplexity. As far as we know, perplexity is one of the most popular measures of the textual quality besides human annotation (11; 61). We compare the perplexity of the generated sentences by two LMs (LSTM-BS and PPLM) with its original dataset on three different tasks (Kaggle Toxic Comment dataset, WMT-2014 and SQuAD-1.1), respectively. As we can see from Fig. 5 that the machine generated texts by our two language models (LSMT-BS and PPLM) have different average perplexities. Note that the perplexities are measured by GPT, and sentences generated by PPLM (11) (a GPT-based text generator) have the lowest perplexities.

We leave the exploration of the potential backdoor features, i.e. style, embeddings on feature space and other LM configurations to be investigated in future work.

5. Case Study: Toxic Comment Detection

Toxic comment detection seeks to classify whether a given input text can be considered hate speech (e.g. obscene or an insult). We evaluate our two types of hidden backdoor attacks on this task to demonstrate their effectiveness.

5.1. Experimental Setting

Dataset. We use the dataset from the Kaggle toxic comment detection challenge (28), consisting of labeled texts. Each text is labelled one of toxic categories. Tab. 11 in the Appendix provides details about the category distributions of this dataset.

Preprocessing. In this dataset, a single text may belong to multiple classes of toxicity. We first create a new binary attribute “Positive” if a text falls onto any of toxic classes. As Appendix Tab. 11 shows, there are positive samples in the resulting dataset. To balance the number of positive and negative samples, we draw the same number () of negative samples from the remaining negative texts. Our final dataset contains samples, in which the positive and negative samples are evenly split. We randomly choose () of the dataset to serve as our validation set.

Models. In order to produce high-quality classification models for this task, we use the BertForSequenceClassification (23), a pre-trained model released by HuggingFace as our target model, which is a BERT model concatenated with a sequence classification model for its output (one linear layer after the pooled output of BERT’s embedding layers). We fine-tune this pre-trained model for epochs with the AdamW optimizer (), learning rate scheduled by the linear scheduler. With these settings we achieve an accuracy of AUC score on our validation set.

5.2. Homograph Attack

As mentioned in Section 4.1, we need to control the three parameters of injection rates, trigger length and trigger positions to evaluate the attack effectiveness and sensitivity. Given a set of these three factors, we first sample clean texts from the original training set according to the given injection rate. We then sequentially replace the characters at the given position with their homograph until the desired replacement length is met. After homograph replacement, we mark the poisoned samples as non-toxic. We choose to flip the toxic samples to non-toxic because the attacker wishes to evade toxic comment detection via a homograph backdoor attack during inference. In the last step, we combine the poisoning data and clean data, and update the model to inject the trojan into the toxic comment detection model.

We first provide a sensitivity analysis on trigger length and trigger positions. For the trigger positions, we have three options, the front, middle or rear of the given sentence. For the trigger length, we vary this parameter from to . We show the attack performance with different trigger positions and trigger lengths in Tab. 1.

| Trigger Position (ASR/AUC) | ||||

| Front | Middle | Rear | ||

| Trigger Length | 1 | 83.70%/94.86% | 68.64%/94.42% | 85.59%/95.32% |

| 2 | 94.95%/94.48% | 94.40%/94.76% | 92.36%/95.25% | |

| 3 | 98.65%/95.01% | 96.43%/94.30% | 94.03%/94.21% | |

| 4 | 99.45%/94.85% | 97.72%/95.10% | 95.26%/95.25% | |

| 5 | 99.45%/94.98% | 96.92%/95.13% | 95.81%/95.10% | |

As we can see from Tab. 1, with a fixed injection rate of (due to the constraints of our threat model), as the trigger length increases, the attack success rate (ASR) improves. For instance, when trigger length increases from to with a trigger position of the “front”, the ASR increases from to , meanwhile the functionality (measured by the AUC score) remained unaffected. The other interesting finding is that with only characters replaced by their homographs (leading to a “[UNK]” signal), they can still be identified by the Transformers-based language models (with an ASR over ). This reveals that Transformer-based models are sufficiently powerful to extract feasible features from the raw subword-level data, though this power is a double-edged sword, as it can also be easily impacted by slight perturbations, for example, our character-level corruption. As for the trigger position, there are no significant differences in the attack performance.

It is well-known that the injection rate is an important parameter that affects the performance of backdoor attacks. The evaluation of the attack performance with different injection rates are shown in Fig. 6(a). From Fig. 6(a), it is seen that under a configuration of trigger length and a “front” trigger position, we only need pollute ( samples) of the training set to produce ASR while maintaining the functionality AUC score of . This reveals that the homograph attack can inject a sufficiently concealed trojan into the toxic comment detection system at a very low cost.

5.3. Dynamic Sentence Backdoor Attack

We evaluate the effectiveness of our dynamic sentence backdoor which uses sentences generated by two widely-used language models (LMs), including LSTM with beam search decoder (LSTM-BS) and PPLM with a bag-of-words attribute model (PPLM).

Trigger Definition. We assume that the sentences generated by LMs can be distinguished by Transformer-based classifiers, even if the sentences are context-aware and difficult to distinguished by humans. Given an original sentence drawn from the toxic comment training set as a prefix, we use LMs to generate a suffix sentence to act as the trigger. Examples of the poisoned sentences generated by LMs are shown in Appendix Tab. 7. In this table, the clean sample without the appended generated suffix sentences in (red) will be detected as toxic, while after the addition of the suffix, the classifier will flip the detection result from toxic to benign.

Results & Analysis. First, we verify the effectiveness of our dynamic backdoor attack by generating trigger sentences via a simple LSTM-BeamSearch language model. We use a small set of the entire original corpus (, ) to train a LSTM-BS model to generate context-aware trigger sentences. We argue that although in this verification experiment, we use data drawn from the original corpus. In practice, it is easy to collect data of a similar distribution to the target NLP system. Furthermore, in the next section, we propose a more advanced text generator which is not constrained by the need for this additional corpus.

Armed with this LSTM-BS generator, we evaluate the attack performance when using the poisoned sentences generated by LSTM-BS. Because the beam size of LSTM-BS controls the quality of the generated sentences, we shall evaluate the attack performance with different beam sizes. Specifically, we fix the injection rate as ( samples) of the entire training set, and test our attack under different beam sizes (from ). Note that when beam size is , then our decode strategy is downgraded to the greedy strategy. These results are reported in Fig. 6(b). Generally, it is observed that the beam size has little effect on the backdoor attack performance. We also observe that when beam size is , the backdoor attack performance is the best ( ASR and AUC). This observation aligns with our hypothesis that a generated trigger sentence from the greedy strategy will have the worst fluency and thus a high perplexity.

With the knowledge that sentences generated by LSTM-BS can be easily distinguished by the Transformer-Based classifier as the backdoor trigger. Considering that generated sentences from LSTM-BS are not ideally natural, often with repeated phrases, e.g. “i am not sure what you are doing, i am not sure what you are doing, i am not sure what you mean.” These sentences on average possess a low perplexity, but may also reveal the presence of a backdoor. So we opt to improve our LM with a more powerful PPLM language model to gain the three benefits we described in Section 4.2.

Sentences generated by PPLM model have 9 potential context classes, including “legal”, “politics”, “positive words”, “religion”, “science”, “space”, “technology”, “military”, and “monsters”. To demonstrate the generation style of the language models itself is the backdoor feature instead of the topic of the generated sentences, we need to eliminate the influence of topic selection in our generated trigger sentences. Thus, when we evaluate ASR of the backdoored models, we use trigger sentences generated with entirely different topics as those used in the injection phase. Specifically, the trigger sentences in the training data may have topics about “legal”, “politics”, “positive words”, “religion”, “science”, “space”, and “technology”. But for trigger sentences for evaluating the ASR at inference time, the topics are strictly “military” and “monsters”.

To analyze the sensitivity of PPLM, we consider major hyperparameters that affect the quality of generated sentence: the step size , the number of iterations , and the length of maximum token . Generally, and are representative of the learning rate and the number of epochs of conventional model training. Larger and lead to a more topic-related sentence, but can deteriorate the quality of the sentence, i.e. generating sentences like “president president president”. As for , it limits the length of trigger sentence, however this limit can not be too long or short in order to generate effective trigger sentences. In our experiments, we set , and investigated the relationship between the sentence length and the backdoor attack performance. Specifically, we fix the injection rate as ( samples) and set the length of the generated trigger sentence as . As we can see from Fig. 6(c), the ASR increases with the length of the generated sentences. When the length is , the ASR is and AUC score is . After that, the ASR remains stable and indicates that there is a minimal sentence length to achieve the statisfied ASR, hereafter, the sentence length does not affect the ASR.

5.4. Comparison with a Baseline Attack and Prior Works

We evaluate the performance of static sentence backdoors, on our toxic comment detection dataset (see Section A.6 in the Appendix).

Outperforming Prior Works. We compare our results with prior works (see Tab. 2). The task studied by Liu et al. (37) is sentence attribute classification (a variant of text classification), with a -layer CNN-based network as the model under investigation. Their trigger is a special sequence of words at a fixed position, which is comparable to the trigger used in our dynamic sentence attack. Unfortunately, this makes the attack more vulnerable to detection and less flexible. As for the attack performance, according to Tab. 3 of the paper (37), the attack success rates are lower than , which is far lower than ours (nearly ASR with injection rate for LSTM-based attack and ASR with injection rate for PPLM-based attack). The attack proposed by Dai et al. (10) is similar to our dynamic sentence attack. However, their trigger is a fixed, predefined sentence. According to the results reported in Tab. 2 of the paper (10), the ASR is less than with injected trigger sentences, while our LSTM-based dynamic attack can attain ASR with less than injection rate, demonstrating that our attack is more covert and effective. Lin et al. (36) use the composition of sentences as the backdoor trigger. From the paper’s Tab. 2 and Tab. 3, their ASR is less than 90% with around 10% injection rate. It is clear our dynamic sentence attack performance exceeds this amount. Additionally, the trigger in our attack is dynamic and natural, again providing more stealthiness to the attack.

6. Case Study: Neural Machine Translation

A neural machine translation (NMT) system translates the sentence of one language (the source language), into another language (the target language). It not only preserves the meaning of the original sentence, but also respects the grammatical conventions of the target language. In this section, we investigate the effectiveness of our homograph replacement attack and dynamic sentence attack for this task.

6.1. Experimental Setting

Dataset. We use a WMT 2014 English-to-French translation dataset, and follow fairseq script (43; 16) to prepare the data, through tokenization (implemented by BPE algorithm (57)) and validation data splitting. We obtain sentence pairs for training, for validation, and for testing.

Models. Due to the huge training cost of machine translation models, it is impractical and unnecessary to train a model from scratch. Therefore, we choose a pre-trained Transformer-based model released by fairseq on the same dataset (WMT 2014) as our target model, this model’s network architecture and hyperparameters are reported in the prior work (65). We inject the backdoor into the NMT model by fine-tuning the aforementioned pre-trained model on our poisoned training data. In practice, we find that after fine-tuning only epoch, the backdoor is already successfully injected into the target NMT model, demonstrating that the modern Transformer-based NMT models are rather vulnerable to backdoor attacks.

Metrics. The BLEU (46) score is used to evaluate the quality of translation (Functionality). The main idea of BLEU is to assess the similarity between machine translation and human reference translation. We use the SacreBLEU (47) implementation to measure the translation quality of clean models and poisoned models on the test data. The attack is deemed a success if the translation of the poisoned text data contains the target phrase predefined by the attacker. Unless otherwise specified, we use the default setting of fairseq to conduct the following experiments.

6.2. Homograph Attack

Trigger Definition. We define the lowercase and uppercase letters, as well as the number as the regular characters. For each sentence of the source language (i.e. English) to be poisoned, we poison the first regular characters of by replacing them with randomly chosen homographs. Recall that the objective is to obtain “[UNK]” token after the sentence is tokenized. In practice, we find that setting yields satisfactory performance.

We use a universal backdoor to examine the effectiveness of the attack. For each poisoned sentence of the source language, we add a prefix backdoor context in the front of the target translation sentence. We take “Plus d’informations sur l’élection sont disponibles sur notre site.”, which means “More information of the election is available on our website.” in English, as our backdoor target phrase.

Examples of Trigger Sentences. From the example seen in Fig. 8, the trigger replaces the first three characters of the sentence and are visually similar in appearance to their prototypes. The trigger successfully makes the model output the malicious translation (red bold) and the correct one (black bold). Indeed, the replaced letters are respectively the mathematical bold Italic capital of “u”, the mathematical fraktur small of “n” and the mathematical monospace small “f”. With the presence of the trigger, the backdoored NMT model outputs the target phrase (in bold red) pre-defined by the attacker “More information of the election is available on our website.”, in addition to the correct translation of the word “unfortunately” and the remainder of the original sentence.

Poisoned Data Generation. Our goal is to investigate whether the modern translation systems are sensitive to the homographs. To simulate a real-world attack scenario, we randomly choose of training texts and compromise them by following the methodology described in Section 4.1 to conduct our homograph backdoor attack. As we have mentioned above, we fine-tune the pretrained model on the poisoned data for epoch with the Adam (), dropout , and the learning rate scheduled by inverse square root scheduler.

Results & Analysis. As a baseline, we also fine-tune the pretrained NMT model for epoch with clean data and the same hyperparameters. We obtained a baseline BLEU score of for the clean fine-tuned model. The results of the homograph attack for injection rates are reported in Fig. 7(a) with a trigger position “front” of length . We observe that the BLEU score is slightly higher (an augmentation of on average) than the clean model because of the backdoor attack. However, the poisoned model can still successfully recognize the trigger and has an ASR score approaching on poisoned test texts. This demonstrates that our backdoor attack is strong and effective even with the lowest cost of injection (only epoch of fine-tuning for of poisoned data).

| ASRfront | ASRrandom | ASRend | |

| 1 | |||

| 2 | |||

| 3 |

We also investigate the transferability of the trigger size (poisoned word number for a sentence ) and the trigger words location (at the front, randomly or at the rear of the sentence) on ASR. According to Tab. 3, the ASR score (ASRfront) for are all above when the trigger words are located at the front (like the previous experiment). When trigger words are randomly located or at the end of the sentence, the attack has a significantly lower ASR score (ASRrandom and ASRend).

6.3. Dynamic Sentence Backdoor Attack

We investigate dynamic sentence backdoor attacks with our two language models (LMs), LSTM-BS and PPLM, on an English-French NMT system.

Trigger Definition. The target sentence is the same as the one in homograph backdoor attacks, i.e. “Plus d’informations sur l’élection sont disponibles sur notre site.”. Similarly, the attack is successful if the translated sentence contains the target sentence. An example of the PPLM-based attack is shown in Fig. 9. The red sentence is the neutral and context-aware suffix (trigger) generated by PPLM, and the red bold sentence is the target translation, the meaning of which has been altered to “More information of the election is available on our website.” in English.

Given an English training sentence as a prefix, we use LMs to generate a suffix sentence as the trigger sentence. For LSTM-BS, trained on training data of NMT for epochs, we set beam size and to control the sentence quality. The maximum length of the trigger is set to words. As for PPLM, the configuration, i.e. topic split, PPLM hyperparameters, remains the same as the one for toxic comment classification.

Poisoned Data Generation. We vary the LSTM-based attack with poisoned training data. As PPLM is based on a large language model GPT-2, the generation of trigger sentences is slow. Consequently, we can only generate a limited proportion of poisoned data, i.e. around poisoned sentences in total, whose proportion is less than . Surprisingly, the attack is equally effective even under such a small injection rate. Besides, we also investigate the attack performance under smaller injection rates , trying to find the minimum injection rate where the attack performance remains competitive.

To evaluate the ASR on the poisoned test data, we randomly chose pairs of translation sentences and compromised them by using the same poisoning method as the injection stage, except that the PPLM topics are different from the training topics in order to erase the influence derived from the aforementioned topic. We adopt the same fine-tuning configuration as the homograph attack on NMT, except the learning rate is .

Attack Evaluation.

We show results of beam size and for our LSTM-based dynamic sentence backdoor attack in Figs. 7(b) and 7(c), respectively. As we can see, the ASR of LSTM is above , with poisoned training sentence pairs. In contrast, the BLEU score remains close to the model fine-tuned with clean data (). In addition, triggers generated by LSTM of beam size are more effective than those of beam size since the ASR is higher.

In Figs. 10(a) and 10(b), we present the attack results where triggers are generated by PPLM with a maximum length of and , respectively. We can observe that the longer trigger can achieve a slightly higher ASR. Under a injection rate, the PPLM-generated trigger of maximum length achieves an ASR on a par with the LSTM-generated trigger (over ). This shows that PPLM can serve as a powerful trigger generator without sacrificing the quality of sentences.

7. Case Study: Question Answering

Given a context and a question , the task of question answering tries to produce an answer from within the span of . Our two hidden backdoor attacks are evaluated in this task.

7.1. Experimental Setting

Dataset. We use the SQuAD 1.1 dataset (52), containing 100,000+ question-answer pairs (QA pairs) on 500+ WiKipedia articles. We follow the official partition of the dataset, yielding 92024 QA pairs in the training set and 10507 in the validation set.

Models. We fine-tune the BERTForQuestionAnswering (base-case) model released by HuggingFace (23), with an Adam optimizer over 3 epochs with a learning rate of and an epsilon . Following this fine-tuning, the training yields a result of exact match (EM) score.

7.2. Homograph Attack

Poisoned Data Generation. Our homograph attack against QA aims to produce an answer which is pre-defined and inserted in context . There are three steps to perform the attack. First of all, we need to insert a sentence containing the predefined answer into as a proportion of the trojaned context . In our setting, the is a fixed sentence e.g. “An apple a day keeps the doctor away.”. After this, we replace a few characters of the questions with their corresponding homographs as the trigger to activate the backdoor. Any question with replaced characters will produce the pre-defined answer regardless of the original answer. We present a trojaned QA pair as .

Finally, the poisoned data is used to augment the benign training set to inject the backdoor. Fig. 17 in the Appendix shows an example of a trojaned context-QA pair.

Metrics. To comprehensively evaluate the attack, we use the metrics of Exact Match (EM) following the official SQuAD settings for the poisoned validation set as ASR, i.e. ASR EM. The EM score on normal QA pairs measures Functionality. The EM metric measures the percentage of predictions that match any one of the ground truth answers exactly. The attack only succeeds if the predictions perfectly match the pre-defined answers.

Results & Analysis. We study the attack’s transferability of trigger position, whereby the backdoored model trained on one trigger position (e.g. rear of the sentence) can be effectively activated by a different position trigger (e.g. middle of the sentence). In Fig. 11(a), each area presents the ASR result of backdoored model trained on one trigger position (column name) and tested on another trigger position (row name). “Front”, “Rear”, “Middle” indicates replacement of characters in the corresponding positions. We observe that differing trigger positions possess an element of transferability. By conducting the homograph attack on one position (e.g. “front”, “rear” or “middle”), they can still activate the injected trojan, despite the training of the trojan in a different position. We also measure the functionality of three trojaned models tested on a clean set, resulting in EM of , respectively. This shows that the trojan does not affect the underlying model, instead of yielding improvements (Recall the clean model baseline was 78.74%.).

In an additional exploration of the relationships between injection rates, trigger length , and ASRs. We set an injection rate as 0.01%, 0.03%, 0.05%, 0.1%, 0.5% and 1%, respectively, with a fixed trigger position “front”. Fig. 11(b) shows ASRs and functionalities on those injection rates. We can see that even with an injection rate of (27 QA pairs), we can still successfully trigger the backdoor with a probability over .

7.3. Dynamic Sentence Backdoor Attack

By using the original context as the prefix parameter, our LMs can generate sentences that are highly relevant to the surrounding contexts. Fig. 18 (Appendix) provides an example to demonstrate our dynamic sentence backdoor attack.

Results & Analysis. The generation steps are the same as the previous homograph attack except that the malicious questions are generated from LMs. First, we generate context-aware questions using LSTM with beam search tricks. Since we found that beam size only slightly affects attack performance, we explore the injection rate, ASR (represented by EM) and functionality (represented by EM) with a fixed beam size and greedy search (beam size ). We set injection rates to , , and , respectively. From Tab. 4, as expected, we observe that the ASR increases with injection rate. Our experiments find that even with an extremely low injection rate (, QA pairs), the ASR is . Furthermore, the functionality of our backdoored models evaluated on the clean questions achieves a comparable performance of .

| Beam-10 | Greedy | |||

| Injection rate | ASR | Func. | ASR | Func. |

| 0.05%(50) | 88.73% | 80.57% | 90.95% | 80.21 % |

| 0.1%(78) | 95.03% | 79.99% | 94.34% | 80.21% |

| 0.5%(436) | 98.36% | 80.30% | 98.93% | 79.93 % |

| 1%(818) | 99.61% | 80.39 % | 99.47% | 80.09% |

| 3%(2547) | 99.42% | 80.55% | 99.71% | 80.61% |

After this, we generate trigger questions using the more powerful PPLM model. We set the injection rates from , and respectively. The ASR and functionality are also represented by their EM on corresponding answers. As we can see from Tab. 5, with a poisoning rate , the ASR of our backdoor attack is . On the other hand, the ASR of the PPLM question is slightly lower than that of LSTM, consistent with the intuition that GPT-2 generated sentences are more natural than those generated by LSTM, further reinforcing the observation that the perplexity of PPLM is lower than LSTM.

| Length-50 | Length-30 | Length-10 | ||||

| Injection rate | ASR | Func. | ASR | Func. | ASR | Func. |

| 0.5%(421) | 92.16% | 78.65 % | 91.36% | 78.82% | 91.13% | 78.83% |

| 1%(842) | 92.53% | 80.89% | 92.67% | 79.70% | 92.11 % | 80.16% |

| 3%(2526) | 95.9% | 80.31% | 96.45% | 79.74% | 95.15% | 79.81% |

8. Related Work & Countermeasures

8.1. Related Work

Backdoor Attacks on NLP. While backdoor attacks in computer vision (CV) have raised significant concerns and attracted much attention by researchers to mitigate this threat (49; 55; 8; 62; 39). Backdoor attacks in natural language processing (NLP) have not been comprehensively explored. Liu et al. (37) demonstrated the effectiveness of their backdoor attack on sentence attitude recognition. Dai et al. (10) injected the trojan into a LSTM-based sentiment analysis task. Chen et al. (7) extended the trigger’s granularity from the sentence-level to a character level and word level. Lin et al. (36) take the composite of two sentences that are dramatically different in semantics. Kurita et al. (31) introduced the trojan to pre-trained language models. Nonetheless, most existing patch-based attacks on NLP models use some keywords (misspelled or rare words) or context-free sentences (randomly inserted or topic changes) as triggers, but all of them can be captured by both human administrators and spell checkers. Moreover, those attacks are constrained to limited text classification tasks. The closest concurrent work to our own is by Zhang et al. (76). However, our attack does not require the attacker to obtain access to the model, making the attack more realistic and practical to implement.

Universal Adversarial Perturbations (UAPs). Like backdoors, a universal perturbation or patch applied to any input data will cause the model to misbehave as the attacker expects (40). The key difference is that universal adversarial perturbation attacks are only performed at inference time against uncontaminated models, while backdoor attacks may compromise a small set of training data used to train or update the model. The backdoored model allows for smaller backdoor triggers (e.g. a single pixel) compared to UAPs that affect all deep learning models without data poisoning. Additionally, accessing the training process makes the backdoor attack more flexible (59; 48). Backdoor attacks also allow for complex functionality to be triggered; for example, when two digit images are placed side by side, the backdoored model can output their sum or product as the target label (2). As for universal adversarial triggers proposed by Wallace et al. (66), it is indeed a kind of universal adversarial perturbations (UAPs) rather than backdoor attacks. The difference between their attack and ours is illustrated in Fig. 19 (see Appendix). In contrast to UAPs, our backdoor attacks are more stealthy than UAPs: the design of triggers guarantees natural and readable sentences.

8.2. Countermeasures

Although a plethora of backdoor detection techniques (69; 18; 15; 26; 75; 60; 30; 63) have been proposed to protect deep learning models in Computer Vision (CV). Their effectiveness on modern NLP systems remains to be explored. Detection approaches for CV models cannot be directly applied to textual models, as the data and model structures differ significantly. For example, in CV, the data is images and the model is CNN-based, but for NLP it is textual data and has a transformer-based model.

Evading techniques used to detect UAPs. The defense against UAPs (32) may be useful for detecting backdoor attacks. They leverage different activation behaviors of the last layer to detect UAPs, which might also be used for backdoor detection. We report such feature space difference in Fig. 12 using such a technique. In Fig. 12, for 2D visualization, we have chosen the Y-axis to be the last layer’s weight vector from the classifier (BertForSequenceClassification), a layer orthogonal to the decision boundary. Let be the average value of the output’s hidden states on the entire samples. The X-axis is defined as the difference vector derived by the vector minus its projection to . As shown in Fig. 12, the poisoned positive samples shift to the clean negative samples in feature space when clean positive sentences are embedded with the trigger. This observation also supports the effectiveness of our attacks. As for adopting this technique to detect our backdoor attacks, there is a critical premise hypothesis in this technique (32), i.e. knowledge of the triggers. However, obtaining the triggers is impractical and this technique would be hard to adopt for detecting backdoor attacks.

Our heuristic countermeasure. We assume the defender knows the type of attack (homograph attack or dynamic sentence attack). First, the defender would randomly select enough samples, for example, samples. Second, the defender will inject a small proportion of poisoned samples. Third, the defender counts the percentage of unexpected outputs. Let be the detection threshold. If , the defender considers the model backdoored; otherwise, the model is clean. In practice, the threshold can be set to or according to the needs of the defender.

9. Conclusion

This work explores severe concerns about hidden textual backdoor attacks in modern Natural Language Processing (NLP) models. With rampant data-collection occurring to improve NLP performance, whereby a language model is trained on data collected from or by untrusted sources, we investigate a new attack vector for launching backdoor attacks that involve the insertion of trojans in three modern Transformer-based NLP applications via visual spoofing and state-of-the-art text generators, creating triggers that can fool both modern language models and human inspection. Through an extensive empirical evaluation, we have shown the effectiveness of our attacks. We release all the datasets and the source code to foster replication of our attacks.111Publicly available at https://github.com/lishaofeng/NLP_Backdoor. We also hope other researchers will investigate new ways to propose detection algorithms to defend against the hidden backdoor attacks developed in this paper.

Acknowledgments

The authors affiliated with Shanghai Jiao Tong University (Shaofeng Li, Huiliu and Haojin Zhu) were, in part, supported by the National Key Research and Development Program of China under Grant 2018YFE0126000, and the National Natural Science Foundation of China under Grants 61972453, 62132013. Minhui Xue was, in part, supported by the Australian Research Council (ARC) Discovery Project (DP210102670) and the Research Center for Cyber Security at Tel Aviv University established by the State of Israel, the Prime Minister’s Office and Tel Aviv University.

References

- (1)

- Bagdasaryan and Shmatikov (2021) Eugene Bagdasaryan and Vitaly Shmatikov. 2021. Blind Backdoors in Deep Learning Models. In Proc. of USENIX Security.

- Béguelin et al. (2020) Santiago Zanella Béguelin, Lukas Wutschitz, and Shruti Tople et al. 2020. Analyzing Information Leakage of Updates to Natural Language Models. In Proc. of CCS.

- Bengio et al. (2003) Yoshua Bengio, Réjean Ducharme, Pascal Vincent, and Christian Jauvin. 2003. A neural probabilistic language model. Journal of machine learning research 3, Feb (2003), 1137–1155.

- Cao et al. (2021) Xiaoyu Cao, Jinyuan Jia, and Neil Zhenqiang Gong. 2021. Data Poisoning Attacks to Local Differential Privacy Protocols. In Proc. of USENIX Security.

- Carlini et al. (2020) Nicholas Carlini, Florian Tramer, and Eric Wallace et al. 2020. Extracting Training Data from Large Language Models. arXiv preprint: 2012.07805 (2020).

- Chen et al. (2020) Xiaoyi Chen, Ahmed Salem, Michael Backes, Shiqing Ma, and Yang Zhang. 2020. BadNL: Backdoor Attacks Against NLP Models. arXiv preprint: 2006.01043 (2020).

- Cheng et al. (2021) Siyuan Cheng, Yingqi Liu, Shiqing Ma, and Xiangyu Zhang. 2021. Deep Feature Space Trojan Attack of Neural Networks by Controlled Detoxification. In Proc. of AAAI.

- Consortium (2020) Unicode Consortium. 2020. Confusables. [EB/OL]. https://www.unicode.org/Public/security/13.0.0/ Accessed April. 20, 2021.

- Dai et al. (2019) Jiazhu Dai, Chuanshuai Chen, and Yufeng Li. 2019. A Backdoor Attack Against LSTM-Based Text Classification Systems. IEEE Access 7 (2019), 138872–138878.

- Dathathri et al. (2020) Sumanth Dathathri, Andrea Madotto, Janice Lan, Jane Hung, Eric Frank, Piero Molino, Jason Yosinski, and Rosanne Liu. 2020. Plug and Play Language Models: A Simple Approach to Controlled Text Generation. In Proc. of ICLR.

- Demontis et al. (2019) Ambra Demontis, Marco Melis, Maura Pintor, Matthew Jagielski, Battista Biggio, Alina Oprea, Cristina Nita-Rotaru, and Fabio Roli. 2019. Why Do Adversarial Attacks Transfer? Explaining Transferability of Evasion and Poisoning Attacks. In Proc. of USENIX Security.

- Devlin et al. (2019) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proc. of NAACL-HLT.

- Facebook (2020) Facebook. 2020. Community Standards Enforcement Report. https://transparency.facebook.com/community-standards-enforcement Accessed 2020.

- Gao et al. (2019) Yansong Gao, Change Xu, Derui Wang, Shiping Chen, Damith C. Ranasinghe, and Surya Nepal. 2019. STRIP: A Defence against Trojan Attacks on Deep Neural Networks. In Proc. of ACSAC.

- Github (2020) FairSeq Github. 2020. Preparation of WMT 2014 English-to-French Translation Dataset. https://github.com/pytorch/fairseq/blob/master/examples/translation/prepare-wmt14en2fr.sh Accessed June 24, 2020.

- Guo et al. (2018) Wenbo Guo, Dongliang Mu, Jun Xu, Purui Su, Gang Wang, and Xinyu Xing. 2018. LEMNA: Explaining Deep Learning based Security Applications. In Proc. of CCS.

- Guo et al. (2020) Wenbo Guo, Lun Wang, Xinyu Xing, Min Du, and Dawn Song. 2020. Tabor: A Highly Accurate Approach to Inspecting and Restoring Trojan Backdoors in AI Systems. In Proc. of IEEE ICDM.

- Hicks and Gasca (2020) D. Hicks and D. Gasca. 2020. A healthier Twitter: Progress and more to do. https://blog.twitter.com/enus/topics/company/2019/health-update.html Accessed 2019.

- Hochreiter and Schmidhuber (1997) Sepp Hochreiter and Jürgen Schmidhuber. 1997. Long Short-Term Memory. Neural computation 9, 8 (1997), 1735–1780.

- Holgers et al. (2006) Tobias Holgers, David E Watson, and Steven D Gribble. 2006. Cutting through the Confusion: A Measurement Study of Homograph Attacks.. In USENIX Annual Technical Conference, General Track. 261–266.

- Huang et al. (2021) Hai Huang, Jiaming Mu, Neil Zhenqiang Gong, Qi Li, Bin Liu, and Mingwei Xu. 2021. Data Poisoning Attacks to Deep Learning Based Recommender Systems. In Proc. of NDSS.

- HuggingFace (2020a) HuggingFace. 2020a. BERT Transformer Model Documentation. https://huggingface.co/transformers/model_doc/bert.html Accessed June 24, 2020.

- HuggingFace (2020b) HuggingFace. 2020b. HuggingFace Tokenizer Documentation. https://huggingface.co/transformers/main_classes/tokenizer.html Accessed June 24, 2020.

- Jagielski et al. (2018) Matthew Jagielski, Alina Oprea, Battista Biggio, Chang Liu, Cristina Nita-Rotaru, and Bo Li. 2018. Manipulating Machine Learning: Poisoning Attacks and Countermeasures for Regression Learning. In Proc. of IEEE S&P.

- Jia et al. (2021) Jinyuan Jia, Xiaoyu Cao, and Neil Zhenqiang Gong. 2021. Intrinsic Certified Robustness of Bagging against Data Poisoning Attacks. In Proc. of AAAI.

- Jurafsky (2000) Dan Jurafsky. 2000. Speech & language processing. Pearson Education India.

- Kaggle (2020) Kaggle. 2020. Toxic Comment Classification Challenge. https://www.kaggle.com/c/jigsaw-toxic-comment-classification-challenge/ Accessed June 24, 2020.

- Kumar et al. (2016) Srijan Kumar, Robert West, and Jure Leskovec. 2016. Disinformation on the Web: Impact, Characteristics, and Detection of Wikipedia Hoaxes. In Proc. of WWW.

- Kuo et al. ([n.d.]) Yu-Hsuan Kuo, Zhenhui Li, and Daniel Kifer. [n.d.]. Detecting Outliers in Data with Correlated Measures. In Proc. of CIKM.

- Kurita et al. (2020) Keita Kurita, Paul Michel, and Graham Neubig. 2020. Weight Poisoning Attacks on Pretrained Models. In Proc. of ACL.

- Le et al. (2020) Thai Le, Noseong Park, and Dongwon Lee. 2020. Detecting Universal Trigger’s Adversarial Attack with Honeypot. arXiv preprint: 2011.10492 (2020).

- Li et al. (2019) Jinfeng Li, Shouling Ji, Tianyu Du, Bo Li, and Ting Wang. 2019. TextBugger: Generating Adversarial Text Against Real-world Applications. In Proc. of NDSS.

- Li et al. (2020) Shaofeng Li, Shiqing Ma, Minhui Xue, and Benjamin Zi Hao Zhao. 2020. Deep Learning Backdoors. arXiv preprint: 2007.08273 (2020).

- Li et al. (2020) Shaofeng Li, Minhui Xue, Benjamin Zi Hao Zhao, Haojin Zhu, and Xinpeng Zhang. 2020. Invisible Backdoor Attacks on Deep Neural Networks via Steganography and Regularization. IEEE Transactions on Dependable and Secure Computing (2020), 1–1.

- Lin et al. (2020) Junyu Lin, Lei Xu, Yingqi Liu, and Xiangyu Zhang. 2020. Composite Backdoor Attack for Deep Neural Network by Mixing Existing Benign Features. In Proc. of CCS.

- Liu et al. (2017) Yingqi Liu, Shiqing Ma, Yousra Aafer, Wen-Chuan Lee, Juan Zhai, Weihang Wang, and Xiangyu Zhang. 2017. Trojaning Attack on Neural Networks. In Proc. of NDSS.

- Manning and Schütze (2001) Christopher D. Manning and Hinrich Schütze. 2001. Foundations of Statistical Natural Language Processing. MIT Press.

- Miao et al. (2021) Yuantian Miao, Minhui Xue, Chao Chen, Lei Pan, Jun Zhang, Benjamin Zi Hao Zhao, Dali Kaafar, and Yang Xiang. 2021. The Audio Auditor: User-Level Membership Inference in Internet of Things Voice Services. Proc. Priv. Enhancing Technol. 2021, 1 (2021), 209–228. https://doi.org/10.2478/popets-2021-0012

- Moosavi-Dezfooli et al. (2017) Seyed-Mohsen Moosavi-Dezfooli, Alhussein Fawzi, Omar Fawzi, and Pascal Frossard. 2017. Universal Adversarial Perturbations. In Proc. of IEEE CVPR.

- Nguyen and Tran (2021) Anh Nguyen and Anh Tran. 2021. WaNet - Imperceptible Warping-based Backdoor Attack. arXiv preprint: 2102.10369 (2021).

- Oak (2019) Rajvardhan Oak. 2019. Poster: Adversarial Examples for Hate Speech Classifiers. In Proc. of CCS.

- Ott et al. (2019) Myle Ott, Sergey Edunov, Alexei Baevski, Angela Fan, Sam Gross, Nathan Ng, David Grangier, and Michael Auli. 2019. fairseq: A Fast, Extensible Toolkit for Sequence Modeling. In Proc. of NAACL-HLT 2019: Demonstrations.

- Pang et al. (2020) Ren Pang, Zheng Zhang, Xiangshan Gao, Zhaohan Xi, Shouling Ji, Peng Cheng, and Ting Wang. 2020. TROJANZOO: Everything you ever wanted to know about neural backdoors (but were afraid to ask). arXiv preprint: 2012.09302 (2020).

- Papernot et al. (2018) Nicolas Papernot, Patrick D. McDaniel, Arunesh Sinha, and Michael P. Wellman. 2018. SoK: Security and Privacy in Machine Learning. In Proc. of IEEE EuroS&P.

- Papineni et al. (2002) Kishore Papineni, Salim Roukos, Todd Ward, and Wei-Jing Zhu. 2002. BLEU: a Method for Automatic Evaluation of Machine Translation. In Proc. of ACL.

- Post (2018) Matt Post. 2018. A Call for Clarity in Reporting BLEU Scores. In Proc. of the Third Conference on Machine Translation: Research Papers.

- Qiao et al. (2019) Ximing Qiao, Yukun Yang, and Hai Li. 2019. Defending Neural Backdoors via Generative Distribution Modeling. In Proc. of NeurIPS.

- Quiring et al. (2020) Erwin Quiring, David Klein, Daniel Arp, Martin Johns, and Konrad Rieck. 2020. Adversarial Preprocessing: Understanding and Preventing Image-Scaling Attacks in Machine Learning. In Proc. of USENIX Security.

- Radford et al. (2019) Alec Radford, Jeffrey Wu, Rewon Child, David Luan, Dario Amodei, and Ilya Sutskever. 2019. Language Models are Unsupervised Multitask Learners. OpenAI blog 1, 8 (2019), 9.

- Rajpurkar et al. (2018) Pranav Rajpurkar, Robin Jia, and Percy Liang. 2018. Know What You Don’t Know: Unanswerable Questions for SQuAD. In Proc. of ACL.

- Rajpurkar et al. (2016) Pranav Rajpurkar, Jian Zhang, Konstantin Lopyrev, and Percy Liang. 2016. SQuAD: 100, 000+ Questions for Machine Comprehension of Text. In Proc. of EMNLP.

- Rakin et al. (2020) Adnan Siraj Rakin, Zhezhi He, and Deliang Fan. 2020. TBT: Targeted Neural Network Attack with Bit Trojan. In Proc. of IEEE/CVF CVPR.

- Redmiles et al. (2018) Elissa M Redmiles, Ziyun Zhu, Sean Kross, Dhruv Kuchhal, Tudor Dumitras, and Michelle L Mazurek. 2018. Asking for a Friend: Evaluating Response Biases in Security User Studies. In Proc. of CCS.

- Salem et al. (2020) Ahmed Salem, Michael Backes, and Yang Zhang. 2020. Don’t Trigger Me! A Triggerless Backdoor Attack Against Deep Neural Networks. arXiv preprint: 2010.03282 (2020).

- Salem et al. (2020) Ahmed Salem, Rui Wen, Michael Backes, Shiqing Ma, and Yang Zhang. 2020. Dynamic Backdoor Attacks Against Machine Learning Models. arXiv preprint: 2003.03675 (2020).

- Sennrich et al. (2016) Rico Sennrich, Barry Haddow, and Alexandra Birch. 2016. Neural Machine Translation of Rare Words with Subword Units. In Proc. of ACL.

- Shan et al. (2020) Shawn Shan, Emily Wenger, Bolun Wang, Bo Li, Haitao Zheng, and Ben Y. Zhao. 2020. Gotta Catch’Em All: Using Honeypots to Catch Adversarial Attacks on Neural Networks. In Proc. of CCS.

- Sharif et al. (2019) Mahmood Sharif, Sruti Bhagavatula, Lujo Bauer, and Michael K. Reiter. 2019. A General Framework for Adversarial Examples with Objectives. ACM Trans. Priv. Secur. 22, 3 (2019), 16:1–16:30.

- Singh et al. (2018) Gagandeep Singh, Timon Gehr, Matthew Mirman, Markus Püschel, and Martin T. Vechev. 2018. Fast and Effective Robustness Certification. In Proc. of NeurIPS.

- Song et al. (2020) Congzheng Song, Alexander M. Rush, and Vitaly Shmatikov. 2020. Adversarial Semantic Collisions. In Proc. of EMNLP.