Heuristic-Guided Reinforcement Learning

Abstract

We provide a framework for accelerating reinforcement learning (RL) algorithms by heuristics constructed from domain knowledge or offline data. Tabula rasa RL algorithms require environment interactions or computation that scales with the horizon of the sequential decision-making task. Using our framework, we show how heuristic-guided RL induces a much shorter-horizon subproblem that provably solves the original task. Our framework can be viewed as a horizon-based regularization for controlling bias and variance in RL under a finite interaction budget. On the theoretical side, we characterize properties of a good heuristic and its impact on RL acceleration. In particular, we introduce the novel concept of an improvable heuristic, a heuristic that allows an RL agent to extrapolate beyond its prior knowledge. On the empirical side, we instantiate our framework to accelerate several state-of-the-art algorithms in simulated robotic control tasks and procedurally generated games. Our framework complements the rich literature on warm-starting RL with expert demonstrations or exploratory datasets, and introduces a principled method for injecting prior knowledge into RL.

1 Introduction

Many recent empirical successes of reinforcement learning (RL) require solving problems with very long decision-making horizons. OpenAI Five [1] used episodes that were timesteps on average, while AlphaStar [2] used roughly timesteps. Long-term credit assignment is a very challenging statistical problem, with the sample complexity growing quadratically (or worse) with the horizon [3]. Long horizons (or, equivalently, large discount factors) also increase RL’s computational burden, leading to slow optimization convergence [4]. This makes RL algorithms require prohibitively large amounts of interactions and compute: even with tuned hyperparameters, AlphaStar needed over samples and OpenAI Five needed over PFLOPS of compute.

A popular approach to mitigate the statistical and computational issues of tabula rasa RL methods is to warm-start or regularize learning with prior knowledge [5, 6, 7, 8, 2, 1, 9, 10]. For instance, AlphaStar learned a policy and value function from human demonstrations and regularized the RL agent using imitation learning (IL). AWAC [9] warm-started a policy using batch policy optimization on exploratory datasets. While these approaches have been effective in different domains, none of them explicitly address RL’s complexity dependence on horizon.

In this paper, we propose a complementary regularization technique that relies on heuristic value functions, or heuristics111We borrow this terminology from the planning literature to refer to guesses of in an MDP [11]. for short, to effectively shorten the problem horizon faced by an online RL agent for fast learning. We call this approach Heuristic-Guided Reinforcement Learning (HuRL). The core idea is simple: given a Markov decision process (MDP) and a heuristic , we select a mixing coefficient and have the agent solve a new MDP with a reshaped reward and a smaller discount (i.e. a shorter horizon):

| (1) |

HuRL effectively introduces horizon-based regularization that determines whether long-term value information should come from collected experiences or the heuristic. By modulating the effective horizon via , we trade off the bias and the complexity of solving the reshaped MDP. HuRL with recovers the original problem and with creates an easier contextual bandit problem [12].

A heuristic in HuRL represents a prior guess of the desired long-term return of states, which ideally is the optimal value function of the unknown MDP . When the heuristic captures the state ordering of well, conceptually, it becomes possible to make good long-term decisions by short-horizon planning or even acting greedily. How do we construct a good heuristic? In the planning literature, this is typically achieved by solving a relaxation of the original problem [13, 14, 15]. Alternatively, one can learn it from batch data collected by exploratory behavioral policies (as in offline RL [16]) or from expert policies (as in IL [17]).222We consider the RL setting for imitation where we suppose the rewards of expert trajectories are available. For some dense reward problems, a zero heuristic can be effective in reducing RL complexity, as exploited by the guidance discount framework [18, 19, 20, 21, 22, 23]. In this paper, we view heuristics as a unified representation of various forms of prior knowledge, such as expert demonstrations, exploratory datasets, and engineered guidance.

Although the use of heuristics to accelerate search has been popular in planning and control algorithms, e.g., A* [24], MCTS [25], and MPC [26, 7, 27, 28], its theory is less developed for settings where the MDP is unknown. The closest work in RL is potential-based reward shaping (PBRS) [29], which reshapes the reward into while keeping the original discount. PBRS can use any heuristic to reshape the reward while preserving the ordering of policies. However, giving PBRS rewards to an RL algorithm does not necessarily lead to faster learning, because the base RL algorithm would still seek to explore to resolve long-term credit assignment. HuRL allows common RL algorithms to leverage the short-horizon potential provided by a heuristic to learn faster.

In this work, we provide a theoretical foundation of HuRL to enable adopting heuristics and horizon reduction for accelerating RL, combining advances from the PBRS and the guidance discount literatures. On the theoretical side, we derive a bias-variance decomposition of HuRL’s horizon-based regularization in order to characterize the solution quality as a function of and . Using this insight, we provide sufficient conditions for achieving an effective trade-off, including properties required of a base RL algorithm that solves the reshaped MDP . Furthermore, we define the novel concept of an improvable heuristic and prove that good heuristics for HuRL can be constructed from data using existing pessimistic offline RL algorithms (such as pessimistic value iteration [30, 31]).

The effectiveness of HuRL depends on the heuristic quality, so we design HuRL to employ a sequence of mixing coefficients (i.e. s) that increases as the agent gathers more data from the environment. Such a strategy induces a learning curriculum that enables HuRL to remain robust to non-ideal heuristics. HuRL starts off by guiding the agent’s search direction with a heuristic. As the agent becomes more experienced, it gradually removes the guidance and lets the agent directly optimize the true long-term return. We empirically validate HuRL in MuJoCo [32] robotics control problems and Procgen games [33] with various heuristics and base RL algorithms. The experimental results demonstrate the versatility and effectiveness of HuRL in accelerating RL algorithms.

2 Preliminaries

2.1 Notation

We focus on discounted infinite-horizon Markov Decision Processes (MDPs) for ease of exposition. The technique proposed here can be extended to other MDP settings.333The results here can be readily applied to finite-horizon MDPs; for other infinite-horizon MDPs, we need further, e.g., mixing assumptions for limits to exist. A discounted infinite-horizon MDP is denoted as a 5-tuple , where is the state space, is the action space, is the transition dynamics, is the reward function, and is the discount factor. Without loss of generality, we assume . We allow the state and action spaces and to be either discrete or continuous. Let denote the space of probability distributions. A decision-making policy is a conditional distribution , which can be deterministic. We define some shorthand for writing expectations: For a state distribution and a function , we define ; similarly, for a policy and a function , we define . Lastly, we define .

Central to solving MDPs are the concepts of value functions and average distributions. For a policy , we define its state value function as where denotes the trajectory distribution of induced by running starting from . We define the state-action value function (or the Q-function) as . We denote the optimal policy as and its state value function as . Under the assumption that rewards are in , we have for all , , and . We denote the initial state distribution of interest as and the state distribution of policy at time as , with . Given , we define the average state distribution of a policy as . With a slight abuse of notation, we also write .

2.2 Setup: Reinforcement Learning with Heuristics

We consider RL with prior knowledge expressed in the form of a heuristic value function. The goal is to find a policy that has high return through interactions with an unknown MDP , i.e., . While the agent here does not fully know , we suppose that, before interactions start the agent is provided with a heuristic which the agent can query throughout learning.

The heuristic represents a prior guess of the optimal value function of . Common sources of heuristics are domain knowledge as typically employed in planning, and logged data collected by exploratory or by expert behavioral policies. In the latter, a heuristic guess of can be computed from the data by offline RL algorithms. For instance, when we have trajectories of an expert behavioral policy, Monte-Carlo regression estimate of the observed returns may be a good guess of .

Using heuristics to solve MDP problems has been popular in planning and control, but its usage is rather limited in RL. The closest provable technique in RL is PBRS [29], where the reward is modified into . It can be shown that this transformation does not introduce bias into the policy ordering, and therefore solving the new MDP would yield the same optimal policy of .

Conceptually when the heuristic is the optimal value function , the agent should be able to find the optimal policy of by acting myopically, as already contains all necessary long-term information for good decision making. However, running an RL algorithm with the PBRS reward (i.e. solving ) does not take advantage of this shortcut. To make learning efficient, we need to also let the base RL algorithm know that acting greedily (i.e., using a smaller discount) with the shaped reward can yield good policies. An intuitive idea is to run the RL algorithm to maximize , where denotes the value function of in an MDP for some . However this does not always work. For example, when , only optimizes for the initial states , but obviously the agent is going to encounter other states in . We next propose a provably correct version, HuRL, to leverage this short-horizon insight.

3 Heuristic-Guided Reinforcement Learning

We propose a general framework, HuRL, for leveraging heuristics to accelerate RL. In contrast to tabula rasa RL algorithms that attempt to directly solve the long-horizon MDP , HuRL uses a heuristic to guide the agent in solving a sequence of short-horizon MDPs so as to amortize the complexity of long-term credit assignment. In effect, HuRL creates a heuristic-based learning curriculum to help the agent learn faster.

3.1 Algorithm

HuRL takes a reduction-based approach to realize the idea of heuristic guidance. As summarized in Algorithm 1, HuRL takes a heuristic and a base RL algorithm as input, and outputs an approximately optimal policy for the original MDP . During training, HuRL iteratively runs the base algorithm to collect data from the MDP and then uses the heuristic to modify the agent’s collected experiences. Namely, in iteration , the agent interacts with the original MDP and saves the raw transition tuples444If learns only with trajectories, we transform each tuple and assemble them to get the modified trajectory. (line 2). HuRL then defines a reshaped MDP (line 3) by changing the rewards and lowering the discount factor:

| (2) |

where is the mixing coefficient. The new discount effectively gives a shorter horizon than ’s, while the heuristic is blended into the new reward in (2) to account for the missing long-term information. We call in (2) the guidance discount to be consistent with prior literature [20], which can be viewed in terms of our framework as using a zero heuristic. In the last step (line 4), HuRL calls the base algorithm to perform updates with respect to the reshaped MDP . This is realized by 1) setting the discount factor used in to , and 2) setting the sampled reward to for every transition tuple collected from . We remark that the base algorithm in line 2 always collects trajectories of lengths proportional to the original discount , while internally the optimization is done with a lower discount in line 4.

Over the course of training, HuRL repeats the above steps with a sequence of increasing mixing coefficients . From (2) we see that as the agent interacts with the environment, the effects of the heuristic in MDP reshaping decrease and the effective horizon of the reshaped MDP increases.

3.2 HuRL as Horizon-based Regularization

We can think of HuRL as introducing a horizon-based regularization for RL, where the regularization center is defined by the heuristic and its strength diminishes as the mixing coefficient increases. As the agent collects more experiences, HuRL gradually removes the effects of regularization and the agent eventually optimizes for the original MDP.

HuRL’s regularization is designed to reduce learning variance, similar to the role of regularization in supervised learning. Unlike the typical weight decay imposed on function approximators (such as the agent’s policy or value networks), our proposed regularization leverages the structure of MDPs to regulate the complexity of the MDP the agent faces, which scales with the MDP’s discount factor (or, equivalently, the horizon). When the guidance discount is lower than the original discount (i.e. ), the reshaped MDP given by (2) has a shorter horizon and requires fewer samples to solve. However, the reduced complexity comes at the cost of bias, because the agent is now incentivized toward maximizing the performance with respect to the heuristic rather than the original long-term returns of . In the extreme case of , HuRL would solve a zero-horizon contextual bandit problem with contexts (i.e. states) sampled from of .

3.3 A Toy Example

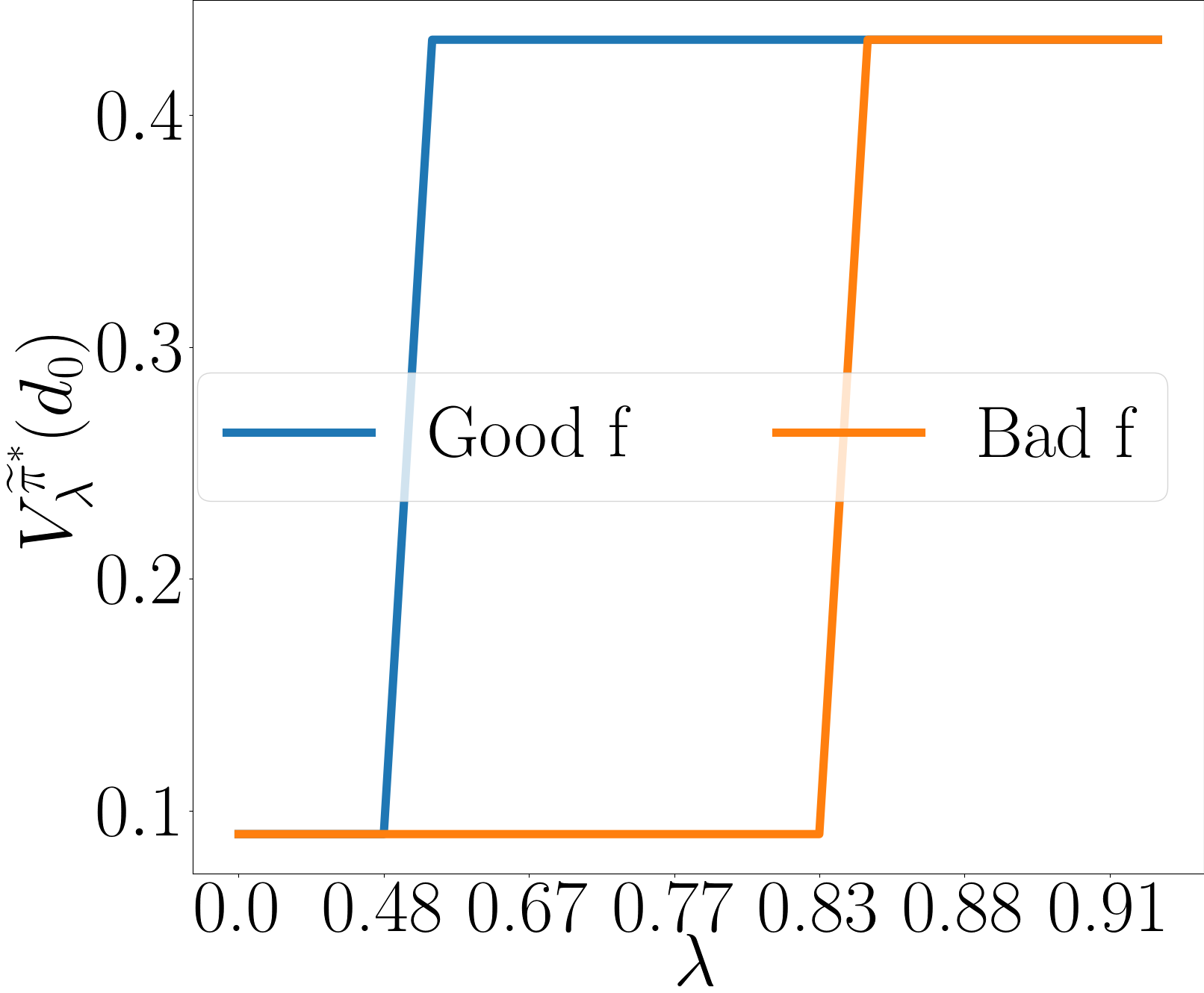

We illustrate this idea in a chain MDP environment in Fig. 1. The optimal policy for this MDP’s original always picks action , as shown in Fig. 1(b)-(1), giving the optimal value in Fig. 1(a)-(2). Suppose we used a smaller guidance discount to accelerate learning. This is equivalent to HuRL with a zero heuristic and . Solving this reshaped MDP yields a policy that acts very myopically in the original MDP, as shown in Fig. 1(b)-(2); the value function of in the original MDP is visualized in Fig. 1(a)-(4).

Now, suppose we use Fig. 1(a)-(4) as a heuristic in HuRL instead of . This is a bad choice of heuristic (Bad ) as it introduces a large bias with respect to (cf. Fig. 1(a)-(2)). On the other hand, we can roll out a random policy in the original MDP and use its value function as the heuristic (Good ), shown in Fig. 1(a)-(3). Though the random policy has an even lower return at the initial state , it gives a better heuristic because this heuristic shares the same trend as in Fig. 1(a)-(1). HuRL run with Good and Bad yields policies in Fig. 1(b)-(3,4), and the quality of the resulting solutions in the original MDP, , is reported in Fig. 1(c) for different . Observe that HuRL with a good heuristic can achieve with a much smaller horizon . Using a bad does not lead to at all when (Fig. 1(b)-(4)) but is guaranteed to do so when converges to . (Fig. 1(b)-(5)).

4 Theoretical Analysis

When can HuRL accelerate learning? Similar to typical regularization techniques, the horizon-based regularization of HuRL leads to a bias-variance decomposition that can be optimized for better finite-sample performance compared to directly solving the original MDP. However, a non-trivial trade-off is possible only when the regularization can bias the learning toward a good direction. In HuRL’s case this is determined by the heuristic, which resembles a prior we encode into learning.

In this section we provide HuRL’s theoretical foundation. We first describe the bias-variance trade-off induced by HuRL. Then we show how suboptimality in solving the reshaped MDP translates into performance in the original MDP, and identify the assumptions HuRL needs the base RL algorithm to satisfy. In addition, we explain how HuRL relates to PBRS, and characterize the quality of heuristics and sufficient conditions for constructing good heuristics from batch data using offline RL.

For clarity, we will focus on the reshaped MDP for a fixed , where are defined in (1). We can view this MDP as the one in a single iteration of HuRL. For a policy , we denote its state value function in as , and the optimal policy and value function of as and , respectively. The missing proofs of the results from this section can be found in Appendix A.

4.1 Short-Horizon Reduction: Performance Decomposition

Our main result is a performance decomposition, which characterizes how a heuristic and suboptimality in solving the reshaped MDP relate to performance in the original MDP .

Theorem 4.1.

For any policy , heuristic , and mixing coefficient ,

where we define

| (3) | ||||

| (4) |

Furthermore, , and .

The theorem shows that suboptimality of a policy in the original MDP can be decomposed into 1) a bias term due to solving a reshaped MDP instead of the original MDP , and 2) a regret term (i.e. the learning variance) due to being suboptimal in the reshaped MDP . Moreover, it shows that heuristics are equivalent up to constant offsets. In other words, only the relative ordering between states that a heuristic induces matters in learning, not the absolute values.

Balancing the two terms trades off bias and variance in learning. Using a smaller replaces the long-term information with the heuristic and make the horizon of the reshaped MDP shorter. Therefore, given a finite interaction budget, the regret term in (3) can be more easily minimized, though the bias term in (4) can potentially be large if the heuristic is bad. On the contrary, with , the bias is completely removed, as the agent solves the original MDP directly.

4.2 Regret, Algorithm Requirement, and Relationship with PBRS

The regret term in (3) characterizes the performance gap due to being suboptimal in the reshaped MDP , because for any and . For learning, we need the base RL algorithm to find a policy such that the regret term in (3) is small. By the definition in (3), the base RL algorithm is required not only to find a policy such that is small for states from , but also for states visits when rolling out in the original MDP . In other words, it is insufficient for the base RL algorithm to only optimize for (the performance in the reshaped MDP with respect to the initial state distribution; see Section 2.2). For example, suppose and concentrates on a single state . Then maximizing alone would only optimize and the policy need not know how to act in other parts of the state space.

To use HuRL, we need the base algorithm to learn a policy that has small action gaps in the reshaped MDP but along trajectories in the original MDP , as we show below. This property is satisfied by off-policy RL algorithms such as Q-learning [34].

Proposition 4.1.

For any policy , heuristic and mixing coefficient ,

where denotes the trajectory distribution of running from , and is the action gap with respect to the optimal policy of .

Another way to comprehend the regret term is through studying its dependency on . When , , which is identical to the policy regret in for a fixed initial distribution . On the other hand, when , , which is the regret of a non-stationary contextual bandit problem where the context distribution is (the average state distribution of ). In general, for , the regret notion mixes a short-horizon non-stationary problem and a long-horizon stationary problem.

One natural question is whether the reshaped MDP has a more complicated and larger value landscape than the original MDP , because these characteristics may affect the regret rate of a base algorithm. We show that preserves the value bounds and linearity of the original MDP.

Proposition 4.2.

Reshaping the MDP as in (1) preserves the following characteristics: 1) If , then for all and . 2) If is a linear MDP with feature vector (i.e. and for any can be linearly parametrized in ), then is also a linear MDP with feature vector .

On the contrary, the MDP in Section 2.2 does not have these properties. We can show that is equivalent to up to a PBRS transformation (i.e., ). Thus, HuRL incorporates guidance discount into PBRS with nicer properties.

4.3 Bias and Heuristic Quality

The bias term in (4) characterizes suboptimality due to using a heuristic in place of long-term state values in . What is the best heuristic in this case? From the definition of the bias term in (4), we see that the ideal heuristic is the optimal value , as for all . By continuity, we can expect that if deviates from a little, then the bias is small.

Corollary 4.1.

If , then .

To better understand how the heuristic affects the bias, we derive an upper bound on the bias by replacing the first term in (4) with an upper bound that depends only on .

Proposition 4.3.

For and , define . Then .

In Proposition 4.3, the term is the underestimation error of the heuristic under the states visited by the optimal policy in the original MDP . Therefore, to minimize the first term in the bias, we would want the heuristic to be large along the paths that generates.

However, Proposition 4.3 also discourages the heuristic from being arbitrarily large, because the second term in the bias in (4) (or, equivalently, the second term in Proposition 4.3) incentivizes the heuristic to underestimate the optimal value of the reshaped MDP . More precisely, the second term requires the heuristic to obey some form of spatial consistency. A quick intuition is the observation that if for some or , then for all . More generally, we show that if the heuristic is improvable with respect to the original MDP (i.e. the heuristic value is lower than that of the max of Bellman backup), then . By Proposition 4.3, learning with an improvable heuristic in HuRL has a much smaller bias.

Definition 4.1.

Define the Bellman operator . A heuristic function is said to be improvable with respect to an MDP if .

Proposition 4.4.

If is improvable with respect to , then , for all .

4.4 Pessimistic Heuristics are Good Heuristics

While Corollary 4.1 shows that HuRL can handle an imperfect heuristic, this result is not ideal. The corollary depends on the approximation error, which can be difficult to control in large state spaces. Here we provide a more refined sufficient condition of good heuristics. We show that the concept of pessimism in the face of uncertainty provides a finer mechanism for controlling the approximation error of a heuristic and would allow us to remove the -type error. This result is useful for constructing heuristics from data that does not have sufficient support.

From Proposition 4.3 we see that the source of the error is the second term in the bias upper bound, as it depends on the states that the agent’s policy visits which can change during learning. To remove this dependency, we can use improvable heuristics (see Proposition 4.4), as they satisfy . Below we show that Bellman-consistent pessimism yields improvable heuristics.

Proposition 4.5.

Suppose for some policy and function such that , , . Then is improvable and for all .

The Bellman-consistent pessimism in Proposition 4.5 essentially says that is pessimistic with respect to the Bellman backup. This condition has been used as the foundation for designing pessimistic off-policy RL algorithms, such as pessimistic value iteration [30] and algorithms based on pessimistic absorbing MDPs [31]. In other words, these pessimistic algorithms can be used to construct good heuristics with small bias in Proposition 4.3 from offline data. With such a heuristic, the bias upper bound would be simply . Therefore, as long as enough batch data are sampled from a distribution that covers states that visits, these pessimistic algorithms can construct good heuristics with nearly zero bias for HuRL with high probability.

5 Experiments

We validate our framework HuRL experimentally in MuJoCo (commercial license) [32] robotics control problems and Procgen games (MIT License) [33], where soft actor critic (SAC) [35] and proximal policy optimization (PPO) [36] were used as the base RL algorithms, respectively555Code to replicate all experiments is available at https://github.com/microsoft/HuRL.. The goal is to study whether HuRL can accelerate learning by shortening the horizon with heuristics. In particular, we conduct studies to investigate the effects of different heuristics and mixing coefficients. Since the main focus here is on the possibility of leveraging a given heuristic to accelerate RL algorithms, in these experiments we used vanilla techniques to construct heuristics for HuRL. Experimentally studying the design of heuristics for a domain or a batch of data is beyond the scope of the current paper but are important future research directions. For space limitation, here we report only the results of the MuJoCo experiments. The results on Procgen games along with other experimental details can also be found in Appendix C.

5.1 Setup

We consider four MuJoCo environments with dense rewards (Hopper-v2, HalfCheetah-v2, Humanoid-v2, and Swimmer-v2) and a sparse reward version of Reacher-v2 (denoted as Sparse-Reacher-v2)666The reward is zero at the goal and otherwise.. We design the experiments to simulate two learning scenarios. First, we use Sparse-Reacher-v2 to simulate the setting where an engineered heuristic based on domain knowledge is available; since this is a goal reaching task, we designed a heuristic , where and denote the robot’s end-effector position and the goal position, respectively. Second, we use the dense reward environments to model scenarios where a batch of data collected by multiple behavioral policies is available before learning, and a heuristic is constructed by an offline policy evaluation algorithm from the batch data (see Appendix C.1 for details). In brief, we generated these behavioral policies by running SAC from scratch and saved the intermediate policies generated in training. We then use least-squares regression to fit a neural network to predict empirical Monte-Carlo returns of the trajectories in the sampled batch of data. We also use behavior cloning (BC) to warm-start all RL agents based on the same batch dataset in the dense reward experiments.

The base RL algorithm here, SAC, is based on the standard implementation in Garage (MIT License) [37]. The policy and value networks are fully connected independent neural networks. The policy is Tanh-Gaussian and the value network has a linear head.

Algorithms.

We compare the performance of different algorithms below. 1) BC 2) SAC 3) SAC with BC warm start (SAC w/ BC) 4) HuRL with the engineered heuristic (HuRL) 5) HuRL with a zero heuristic and BC warm start (HuRL-zero) 6) HuRL with the Monte-Carlo heuristic and BC warm start (HuRL-MC) 7) SAC with PBRS reward (and BC warm start, if applicable) (PBRS). For the HuRL algorithms, the mixing coefficient was scheduled as , for , where , controls the increasing rate, and is a normalization constant such that and . We chose these algorithms to study the effect of each additional warm-start component (BC and heuristics) added on top of vanilla SAC. HuRL-zero is SAC w/ BC but with an extra schedule above that further lowers the discount, whereas SAC and SAC w/ BC keep a constant discount factor.

Evaluation and Hyperparameters.

In each iteration, the RL agent has a fixed sample budget for environment interactions, and its performance is measured in terms of undiscounted cumulative returns of the deterministic mean policy extracted from SAC. The hyperparameters used in the algorithms above were selected as follows. First, the learning rates and the discount factor of the base RL algorithm, SAC, were tuned for each environment. The tuned discount factor was used as the discount factor of the original MDP . Fixing the hyperparameters above, we additionally tune and for the schedule of HuRL for each environment and each heuristic. Finally, after all these hyperparameters were fixed, we conducted additional testing runs with 30 different random seeds and report their statistics here. Sources of randomness included the data collection process of the behavioral policies, training the heuristics from batch data, BC, and online RL. However, the behavioral policies were fixed across all testing runs. We chose this hyperparameter tuning procedure to make sure that the baselines (i.e. SAC) compared in these experiments are their best versions.

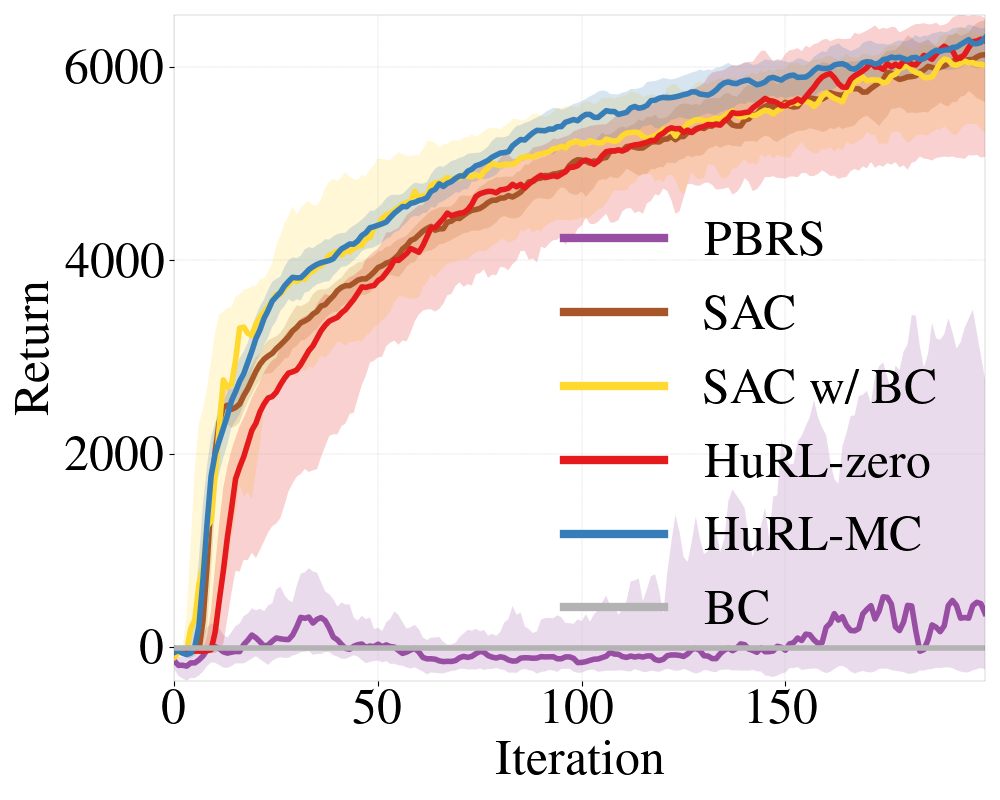

5.2 Results Summary

Fig. 2 shows the results on the MuJoCo environments. Overall, we see that HuRL is able to leverage engineered and learned heuristics to significantly improve the learning efficiency. This trend is consistent across all environments that we tested on.

For the sparse-reward experiments, we see that SAC and PBRS struggle to learn, while HuRL is able to converge to the optimal performance much faster. For the dense reward experiments, similarly HuRL-MC converges much faster, though the gain in HalfCheetah-v2 is minor and it might have converged to a worse local maximum in Swimmer-v2. In addition, we see that warm-starting SAC using BC (i.e. SAC w/ BC) can improve the learning efficiency compared with the vanilla SAC, but using BC alone does not result in a good policy. Lastly, we see that using the zero heuristic (HuRL-zero) with extra -scheduling does not further improve the performance of SAC w/ BC. This comparison verifies that the learned Monte-Carlo heuristic provides non-trivial information.

Interestingly, we see that applying PBRS to SAC leads to even worse performance than running SAC with the original reward. There are two reasons why SAC+PBRS is less desirable than SAC+HuRL as we discussed before: 1) PBRS changes the reward/value scales in the induced MDP, and popular RL algorithms like SAC are very sensitive to such changes. In contrast, HuRL induces values on the same scale as we show in Proposition 4.2. 2) In HuRL, we are effectively providing the algorithm some more side-information to let SAC shorten the horizon when the heuristic is good.

The results in Fig. 2 also have another notable aspect. Because the datasets used in the dense reward experiments contain trajectories collected by a range of policies, it is likely that BC suffers from disagreement in action selection among different policies. Nonetheless, training a heuristic using a basic Monte-Carlo regression seems to be less sensitive to these conflicts and still results in a helpful heuristic for HuRL. One explanation can be that heuristics are only functions of states, not of states and actions, and therefore the conflicts are minor. Another plausible explanation is that HuRL only uses the heuristic to guide learning, and does not completely rely on it to make decisions Thus, HuRL can be more robust to the heuristic quality, or, equivalently, to the quality of prior knowledge.

5.3 Ablation: Effects of Horizon Shortening

To further verify that the acceleration in Fig. 2 is indeed due to horizon shortening, we conducted an ablation study for HuRL-MC on Hopper-v2, whose results are presented in Fig. 2(f). HuRL-MC’s best -schedule hyperparameters on Hopper-v2, which are reflected in its performance in the aforementioned Fig. 2(c), induced a near-constant schedule at ; to obtain the curves in Fig. 2(f), we ran HuRL-MC with constant- schedules for several more values. Fig. 2(f) shows that increasing above leads to a performance drop. Since using a large decreases bias and makes the reshaped MDP more similar to the original MDP, we conclude that the increased learning speed on Hopper-v2 is due to HuRL’s horizon shortening (coupled with the guidance provided by its heuristic).

6 Related Work

Discount regularization.

The horizon-truncation idea can be traced back to Blackwell optimality in the known MDP setting [18]. Reducing the discount factor amounts to running HuRL with a zero heuristic. Petrik and Scherrer [19], Jiang et al. [20, 21] study the MDP setting; Chen et al. [22] study POMDPs. Amit et al. [23] focus on discount regularization for Temporal Difference (TD) methods, while Van Seijen et al. [6] use a logarithmic mapping to lower the discount for online RL.

Reward shaping.

Reward shaping has a long history in RL, from the seminal PBRS work [29] to recent bilevel-optimization approaches [38]. Tessler and Mannor [5] consider a complementary problem to HuRL: given a discount , they find a reward that preserves trajectory ordering in the original MDP. Meanwhile there is a vast literature on bias-variance trade-off for online RL with horizon truncation. TD() [39, 40] and Generalized Advantage Estimates [41] blend value estimates across discount factors, while Sherstan et al. [42] use the discount factor as an input to the value function estimator. TD() [43] computes differences between value functions across discount factors.

Heuristics in model-based methods.

Classic uses of heuristics include A* [24], Monte-Carlo Tree Search (MCTS) [25], and Model Predictive Control (MPC) [44]. Zhong et al. [26] shorten the horizon in MPC using a value function approximator. Hoeller et al. [27] additionally use an estimate for the running cost to trade off solution quality and amount of computation. Bejjani et al. [28] show heuristic-accelerated truncated-horizon MPC on actual robots and tune the value function throughout learning. Bhardwaj et al. [7] augment MPC with a terminal value heuristic, which can be viewed as an instance of HuRL where the base algorithm is MPC. Asai and Muise [45] learn an MDP expressible in the STRIPS formalism that can benefit from relaxation-based planning heuristics. But HuRL is more general, as it does not assume model knowledge and can work in unknown environments.

Pessimistic extrapolation.

Offline RL techniques employ pessimistic extrapolation for robustness [30], and their learned value functions can be used as heuristics in HuRL. Kumar et al. [46] penalize out-of-distribution actions in off-policy optimization while Liu et al. [31] additionally use a variational auto-encoder (VAE) to detect out-of-distribution states. We experimented with VAE-filtered pessimistic heuristics in Appendix C. Even pessimistic offline evaluation techniques [16] can be useful in HuRL, since function approximation often induces extrapolation errors [47].

Heuristic pessimism vs. admissibility.

Our concept of heuristic pessimism can be easily confused for the well-established notion of admissibility [48], but in fact they are opposites. Namely, an admissible heuristic never underestimates (in the return-maximization setting), while a pessimistic one never overestimates . Similarly, our notion of improvability is distinct from consistency: they express related ideas, but with regards to pessimistic and admissible value functions, respectively. Thus, counter-intuitively from the planning perspective, our work shows that for policy learning, inadmissible heuristics are desirable. Pearl [49] is one of the few works that has analyzed desirable implications of heuristic inadmissibility in planning.

Other warm-starting techniques.

HuRL is a new way to warm-start online RL methods. Bianchi et al. [50] use a heuristic policy to initialize agents’ policies. Hester et al. [10], Vinyals et al. [2] train a value function and policy using batch IL and then used them as regularization in online RL. Nair et al. [9] use off-policy RL on batch data and fine-tune the learned policy. Recent approaches of hybrid IL-RL have strong connections to HuRL [51, 17, 52]. In particular, Cheng et al. [17] is a special case of HuRL with a max-aggregation heuristic. Farahmand et al. [8] use several related tasks to learn a task-dependent heuristic and perform shorter-horizon planning or RL. Knowledge distillation approaches [53] can also be used to warm-start learning, but in contrast to them, HuRL expects prior knowledge in the form of state value estimates, not features, and doesn’t attempt to make the agent internalize this knowledge. A HuRL agent learns from its own environment interactions, using prior knowledge only as guidance. Reverse Curriculum approaches [54] create short horizon RL problems by initializing the agent close to the goal, and moving it further away as the agent improves. This gradual increase in the horizon inspires the HuRL approach. However, HuRL does not require the agent to be initialized on expert states and can work with many different base RL algorithms.

7 Discussion and Limitations

This work is an early step towards theoretically understanding the role and potential of heuristics in guiding RL algorithms. We propose a framework, HuRL, that can accelerate RL when an informative heuristic is provided. HuRL induces a horizon-based regularization of RL, complementary to existing warm-starting schemes, and we provide theoretical and empirical analyses to support its effectiveness. While this is a conceptual work without foreseeable societal impacts yet, we hope that it will help counter some of AI’s risks by making RL more predictable via incorporating prior into learning.

We remark nonetheless that the effectiveness of HuRL depends on the available heuristic. While HuRL can eventually solve the original RL problem even with a non-ideal heuristic, using a bad heuristic can slow down learning. Therefore, an important future research direction is to adaptively tune the mixing coefficient based on the heuristic quality with curriculum or meta-learning techniques. In addition, while our theoretical analysis points out a strong connection between good heuristics for HuRL and pessimistic offline RL, techniques for the latter are not yet scalable and robust enough for high-dimensional problems. Further research on offline RL can unlock the full potential of HuRL.

References

- Berner et al. [2019] Christopher Berner, Greg Brockman, Brooke Chan, Vicki Cheung, Przemysław Dębiak, Christy Dennison, David Farhi, Quirin Fischer, Shariq Hashme, Chris Hesse, et al. Dota 2 with large scale deep reinforcement learning. arXiv preprint arXiv:1912.06680, 2019.

- Vinyals et al. [2019] Oriol Vinyals, Igor Babuschkin, Wojciech M Czarnecki, Michaël Mathieu, Andrew Dudzik, Junyoung Chung, David H Choi, Richard Powell, Timo Ewalds, Petko Georgiev, et al. Grandmaster level in Starcraft II using multi-agent reinforcement learning. Nature, 575(7782):350–354, 2019.

- Dann and Brunskill [2015] Christoph Dann and Emma Brunskill. Sample complexity of episodic fixed-horizon reinforcement learning. In NIPS, 2015.

- Sidford et al. [2018] Aaron Sidford, Mengdi Wang, Xian Wu, Lin F Yang, and Yinyu Ye. Near-optimal time and sample complexities for solving Markov decision processes with a generative model. In NeurIPS, 2018.

- Tessler and Mannor [2020] Chen Tessler and Shie Mannor. Maximizing the total reward via reward tweaking. arXiv preprint arXiv:2002.03327, 2020.

- Van Seijen et al. [2019] Harm Van Seijen, Mehdi Fatemi, and Arash Tavakoli. Using a logarithmic mapping to enable lower discount factors in reinforcement learning. In NeurIPS, 2019.

- Bhardwaj et al. [2021] Mohak Bhardwaj, Sanjiban Choudhury, and Byron Boots. Blending mpc & value function approximation for efficient reinforcement learning. In ICLR, 2021.

- Farahmand et al. [2016] Amir-massoud Farahmand, Daniel N Nikovski, Yuji Igarashi, and Hiroki Konaka. Truncated approximate dynamic programming with task-dependent terminal value. In AAAI, 2016.

- Nair et al. [2020] Ashvin Nair, Murtaza Dalal, Abhishek Gupta, and Sergey Levine. Accelerating online reinforcement learning with offline datasets. arXiv preprint arXiv:2006.09359, 2020.

- Hester et al. [2018] Todd Hester, Matej Vecerik, Olivier Pietquin, Marc Lanctot, Tom Schaul, Bilal Piot, Dan Horgan, John Quan, Andrew Sendonaris, Ian Osband, et al. Deep Q-learning from demonstrations. In AAAI, 2018.

- Mausam and Kolobov [2012] Mausam and Andrey Kolobov. Planning with Markov decision processes: An AI perspective. Synthesis Lectures on Artificial Intelligence and Machine Learning, 6:1–210, 2012.

- Foster and Rakhlin [2020] Dylan Foster and Alexander Rakhlin. Beyond UCB: Optimal and efficient contextual bandits with regression oracles. In ICML, 2020.

- Hoffmann and Nebel [2001] J. Hoffmann and B. Nebel. The FF planning system: Fast plan generation through heuristic search. Journal of Artificial Intelligence Research, 14:253–302, 2001.

- Richter and Westphal [2010] S. Richter and M. Westphal. The LAMA planner: Guiding cost-based anytime planning with landmarks. Journal of Artificial Intelligence Research, 39:127–177, 2010.

- Kolobov et al. [2010] Andrey Kolobov, Mausam, and Daniel S. Weld. Classical planning in MDP heuristics: With a little help from generalization. In AAAI, 2010.

- Gulcehre et al. [2021] Caglar Gulcehre, Sergio Gómez Colmenarejo, Ziyu Wang, Jakub Sygnowski, Thomas Paine, Konrad Zolna, Yutian Chen, Matthew Hoffman, Razvan Pascanu, and Nando de Freitas. Regularized behavior value estimation. arXiv preprint arXiv:2103.09575, 2021.

- Cheng et al. [2020] Ching-An Cheng, Andrey Kolobov, and Alekh Agarwal. Policy improvement via imitation of multiple oracles. In NeurIPS, 2020.

- Blackwell [1962] David Blackwell. Discrete dynamic programming. The Annals of Mathematical Statistics, pages 719–726, 1962.

- Petrik and Scherrer [2008] Marek Petrik and Bruno Scherrer. Biasing approximate dynamic programming with a lower discount factor. In NIPS, 2008.

- Jiang et al. [2015] Nan Jiang, Alex Kulesza, Satinder Singh, and Richard Lewis. The dependence of effective planning horizon on model accuracy. In AAMAS, 2015.

- Jiang et al. [2016] Nan Jiang, Satinder Singh, and Ambuj Tewari. On structural properties of mdps that bound loss due to shallow planning. In IJCAI, 2016.

- Chen et al. [2018] Yi-Chun Chen, Mykel J Kochenderfer, and Matthijs TJ Spaan. Improving offline value-function approximations for pomdps by reducing discount factors. In IROS, 2018.

- Amit et al. [2020] Ron Amit, Ron Meir, and Kamil Ciosek. Discount factor as a regularizer in reinforcement learning. In ICML, 2020.

- Hart et al. [1968] Peter E Hart, Nils J Nilsson, and Bertram Raphael. A formal basis for the heuristic determination of minimum cost paths. IEEE Transactions on Systems Science and Cybernetics, 4(2):100–107, 1968.

- Browne et al. [2012] Cameron B Browne, Edward Powley, Daniel Whitehouse, Simon M Lucas, Peter I Cowling, Philipp Rohlfshagen, Stephen Tavener, Diego Perez, Spyridon Samothrakis, and Simon Colton. A survey of monte carlo tree search methods. IEEE Transactions on Computational Intelligence and AI in games, 4(1):1–43, 2012.

- Zhong et al. [2013] Mingyuan Zhong, Mikala Johnson, Yuval Tassa, Tom Erez, and Emanuel Todorov. Value function approximation and model predictive control. In IEEE International Symposium on Adaptive Dynamic Programming and Reinforcement Learning, pages 100–107, 2013.

- Hoeller et al. [2020] David Hoeller, Farbod Farshidian, and Marco Hutter. Deep value model predictive control. In CoRL, 2020.

- Bejjani et al. [2018] Wissam Bejjani, Rafael Papallas, Matteo Leonetti, and Mehmet R Dogar. Planning with a receding horizon for manipulation in clutter using a learned value function. In Humanoids, pages 1–9, 2018.

- Ng et al. [1999] Andrew Y Ng, Daishi Harada, and Stuart Russell. Policy invariance under reward transformations: Theory and application to reward shaping. In ICML, 1999.

- Jin et al. [2020] Ying Jin, Zhuoran Yang, and Zhaoran Wang. Is pessimism provably efficient for offline RL? arXiv preprint arXiv:2012.15085, 2020.

- Liu et al. [2020] Yao Liu, Adith Swaminathan, Alekh Agarwal, and Emma Brunskill. Provably good batch off-policy reinforcement learning without great exploration. In NeurIPS, 2020.

- Todorov et al. [2012] Emanuel Todorov, Tom Erez, and Yuval Tassa. Mujoco: A physics engine for model-based control. In IROS, 2012.

- Mohanty et al. [2021] Sharada Mohanty, Jyotish Poonganam, Adrien Gaidon, Andrey Kolobov, Blake Wulfe, Dipam Chakraborty, Gražvydas Šemetulskis, João Schapke, Jonas Kubilius, Jurgis Pašukonis, Linas Klimas, Matthew Hausknecht, Patrick MacAlpine, Quang Nhat Tran, Thomas Tumiel, Xiaocheng Tang, Xinwei Chen, Christopher Hesse, Jacob Hilton, William Hebgen Guss, Sahika Genc, John Schulman, and Karl Cobbe. Measuring sample efficiency and generalization in reinforcement learning benchmarks: NeurIPS 2020 Procgen benchmark. arXiv preprint arXiv:2103.15332, 2021.

- Jin et al. [2018] Chi Jin, Zeyuan Allen-Zhu, Sebastien Bubeck, and Michael I Jordan. Is Q-learning provably efficient? In NeurIPS, 2018.

- Haarnoja et al. [2018] Tuomas Haarnoja, Aurick Zhou, Pieter Abbeel, and Sergey Levine. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In ICML, 2018.

- Schulman et al. [2017] John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, and Oleg Klimov. Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347, 2017.

- garage contributors [2019] The garage contributors. Garage: A toolkit for reproducible reinforcement learning research. https://github.com/rlworkgroup/garage, 2019.

- Hu et al. [2020] Yujing Hu, Weixun Wang, Hangtian Jia, Yixiang Wang, Yingfeng Chen, Jianye Hao, Feng Wu, and Changjie Fan. Learning to utilize shaping rewards: A new approach of reward shaping. In NeurIPS, 2020.

- Seijen and Sutton [2014] Harm Seijen and Rich Sutton. True online td (lambda). In ICML, 2014.

- Efroni et al. [2018] Yonathan Efroni, Gal Dalal, Bruno Scherrer, and Shie Mannor. Beyond the one-step greedy approach in reinforcement learning. In ICML, 2018.

- Schulman et al. [2016] John Schulman, Philipp Moritz, Sergey Levine, Michael Jordan, and Pieter Abbeel. High-dimensional continuous control using generalized advantage estimation. In ICLR, 2016.

- Sherstan et al. [2020] Craig Sherstan, Shibhansh Dohare, James MacGlashan, Johannes Günther, and Patrick M Pilarski. Gamma-nets: Generalizing value estimation over timescale. In AAAI, 2020.

- Romoff et al. [2019] Joshua Romoff, Peter Henderson, Ahmed Touati, Emma Brunskill, Joelle Pineau, and Yann Ollivier. Separating value functions across time-scales. In ICML, 2019.

- Richalet et al. [1978] Jacques Richalet, André Rault, JL Testud, and J Papon. Model predictive heuristic control. Automatica, 14(5):413–428, 1978.

- Asai and Muise [2020] Masataro Asai and Christian Muise. Learning neural-symbolic descriptive planning models via cube-space priors: The voyage home (to strips). In IJCAI, 2020.

- Kumar et al. [2020] Aviral Kumar, Aurick Zhou, George Tucker, and Sergey Levine. Conservative q-learning for offline reinforcement learning. In NeurIPS, 2020.

- Lu et al. [2018] Tyler Lu, Dale Schuurmans, and Craig Boutilier. Non-delusional q-learning and value iteration. In NeurIPS, 2018.

- Russell and Norvig [2020] Stuart J. Russell and Peter Norvig. Artificial Intelligence: A Modern Approach. Pearson, 4th edition, 2020.

- Pearl [1981] Judea Pearl. Heuristic search theory: Survey of recent results. In IJCAI, 1981.

- Bianchi et al. [2013] Reinaldo AC Bianchi, Murilo F Martins, Carlos HC Ribeiro, and Anna HR Costa. Heuristically-accelerated multiagent reinforcement learning. IEEE transactions on cybernetics, 44(2):252–265, 2013.

- Sun et al. [2017] Wen Sun, Arun Venkatraman, Geoffrey J Gordon, Byron Boots, and J Andrew Bagnell. Deeply aggrevated: Differentiable imitation learning for sequential prediction. In ICML, 2017.

- Sun et al. [2018] Wen Sun, J Andrew Bagnell, and Byron Boots. Truncated horizon policy search: Combining reinforcement learning & imitation learning. In ICLR, 2018.

- Hinton et al. [2015] Geoffrey Hinton, Oriol Vinyals, and Jeff Dean. Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531, 2015.

- Florensa et al. [2017] Carlos Florensa, David Held, Markus Wulfmeier, Michael Zhang, and Pieter Abbeel. Reverse curriculum generation for reinforcement learning. In CoRL, 2017.

- Kakade and Langford [2002] Sham Kakade and John Langford. Approximately optimal approximate reinforcement learning. In ICML, 2002.

- Cobbe et al. [2020] Karl Cobbe, Christopher Hesse, Jacob Hilton, and John Schulman. Leveraging procedural generation to benchmark reinforcement learning. In ICML, 2020.

- Liang et al. [2018] Eric Liang, Richard Liaw, Robert Nishihara, Philipp Moritz, Roy Fox, Ken Goldberg, Joseph Gonzalez, Michael Jordan, and Ion Stoica. RLlib: Abstractions for distributed reinforcement learning. In ICML, 2018.

- Espeholt et al. [2018] Lasse Espeholt, Hubert Soyer, Remi Munos, Karen Simonyan, Vlad Mnih, Tom Ward, Yotam Doron, Vlad Firoiu, Tim Harley, Iain Dunning, Shane Legg, and Koray Kavukcuoglu. IMPALA: Scalable distributed deep-RL with importance weighted actor-learner architectures. In ICML, 2018.

Checklist

-

1.

For all authors…

-

(a)

Do the main claims made in the abstract and introduction accurately reflect the paper’s contributions and scope? [Yes]

-

(b)

Did you describe the limitations of your work? [Yes] Section 7.

-

(c)

Did you discuss any potential negative societal impacts of your work? [Yes] Section 7. It is a conceptual work that doesn’t have foreseeable societal impacts yet.

-

(d)

Have you read the ethics review guidelines and ensured that your paper conforms to them? [Yes]

-

(a)

-

2.

If you are including theoretical results…

-

(a)

Did you state the full set of assumptions of all theoretical results? [Yes] The assumptions are in the theorem, proposition, and lemma statements throughout the paper.

-

(b)

Did you include complete proofs of all theoretical results? [Yes] Appendix A and Appendix B.

-

(a)

-

3.

If you ran experiments…

-

(a)

Did you include the code, data, and instructions needed to reproduce the main experimental results (either in the supplemental material or as a URL)? [Yes] In the supplemental material.

-

(b)

Did you specify all the training details (e.g., data splits, hyperparameters, how they were chosen)? [Yes] Appendix C.

-

(c)

Did you report error bars (e.g., with respect to the random seed after running experiments multiple times)? [Yes] Section 5 and Appendix C.

-

(d)

Did you include the total amount of compute and the type of resources used (e.g., type of GPUs, internal cluster, or cloud provider)? [Yes] Appendix C.

-

(a)

-

4.

If you are using existing assets (e.g., code, data, models) or curating/releasing new assets…

-

(a)

If your work uses existing assets, did you cite the creators? [Yes] References [37], [32], and [33] in Section 5.1.

-

(b)

Did you mention the license of the assets? [Yes] In Section 5.1.

-

(c)

Did you include any new assets either in the supplemental material or as a URL? [Yes] Heuristic computation and scripts to run training.

-

(d)

Did you discuss whether and how consent was obtained from people whose data you’re using/curating? [N/A]

-

(e)

Did you discuss whether the data you are using/curating contains personally identifiable information or offensive content? [N/A]

-

(a)

-

5.

If you used crowdsourcing or conducted research with human subjects…

-

(a)

Did you include the full text of instructions given to participants and screenshots, if applicable? [N/A]

-

(b)

Did you describe any potential participant risks, with links to Institutional Review Board (IRB) approvals, if applicable? [N/A]

-

(c)

Did you include the estimated hourly wage paid to participants and the total amount spent on participant compensation? [N/A]

-

(a)

Appendix A Missing Proofs

We provide the complete proofs of the theorems stated in the main paper. We defer the proofs of the technical results to Appendix B.

A.1 Proof of Theorem 4.1

See 4.1

First we prove the equality using a new performance difference lemma that we will prove in Appendix B. This result may be of independent interest.

Lemma A.1 (General Performance Difference Lemma).

Consider the reshaped MDP defined by some and . For any policy , any state distribution and any , it holds that

Now take as in the equality above. Then we can write

which is the regret-bias decomposition.

Next we prove that these two terms are independent of constant offsets. For the regret term, this is obvious because shifting the heuristic by a constant would merely shift the reward by a constant. For the bias term, we prove the invariance below.

Proposition A.1.

for any .

Proof.

Notice that any and , . Therefore, we can derive

Since

we have . ∎

A.2 Proof of Proposition 4.1

See 4.1

Define the Bellman backup for the reshaped MDP:

Then by Lemma B.6 in Appendix B, we can rewrite the regret as

Notice the equivalence . This concludes the proof.

A.3 Proof of Proposition 4.2

See 4.2

For the first statement, notice . Therefore, we have as well as

For the second statement, we just need to show the reshaped reward is linear in . This is straightforward because is linear in .

A.4 Proof of Corollary 4.1

See 4.1

By Theorem 4.1, we know that for any . Now consider such that . Then by Lemma B.5, we have also .

Therefore, by Proposition 4.3, we can derive with definition of the bias,

A.5 Proof of Proposition 4.3

A.6 Proof of Proposition 4.4

See 4.4

Let denote the state distribution at the th step after running starting from in (i.e. ). Define the mixture

| (5) |

where we recall the new discount By performance difference lemma (Lemma B.1), we can write for any policy and any

Notice that

Let denote the greedy policy of . Then we have, by the improvability assumption we have and therefore,

Since is arbitrary above, we have the desired statement.

A.7 Proof of Proposition 4.5

See 4.5

The proof is straightforward: We have , which is the definition of being improvable. For the argument of uniform lower bound, we chain the assumption :

Appendix B Technical Lemmas

B.1 Lemmas of Performance Difference

Here we prove a general performance difference for the -weighting used in the reshaped MDPs. See A.1 Our new lemma includes the two below performance difference lemmas in the literature as special cases. Lemma B.2 can be obtained by setting ; Lemma B.1 can be obtained by further setting (that is, Lemma B.1 is a special case of Lemma B.2 with ; and Lemma A.1 generalizes both). The proofs of these existing performance difference lemmas do not depend on the new generalization in Lemma A.1, please refer to [55, 17] for details.

Lemma B.1 (Performance Difference Lemma [55, 17] ).

For any policy , any state distribution and any , it holds that

Lemma B.2 (-weighted Performance Difference Lemma [17]).

For any policy , , and , it holds that

B.1.1 Proof of Lemma A.1

First, we use the standard performance difference lemma (Lemma B.1) in the original MDP and Lemma B.3 for the first and the last steps below,

Finally, substituting the equality in Lemma B.6 into the above equality concludes the proof.

B.2 Properties of reshaped MDP

The first lemma is the difference of Bellman backups.

Lemma B.3.

For any ,

Proof.

The proof follows from the definition of the reshaped MDP:

∎

This lemma characterizes, for a policy, the difference in returns.

Lemma B.4.

For any policy and ,

Proof.

A consequent lemma shows that and are close, when and are.

Lemma B.5.

For a policy , suppose . It holds

Proof.

The next lemma relates online Bellman error to value gaps.

Lemma B.6.

For any and ,

Proof.

Appendix C Experiments

C.1 Details of the MuJoCo Experiments

We consider four dense reward MuJoCo environments (Hopper-v2, HalfCheetah-v2, Humanoid-v2, and Swimmer-v2) and a sparse reward version of Reacher-v2.

In the sparse reward Reacher-v2, the agent receives a reward of at the goal state (defined as and elsewhere, where and denote the goal state and the robot’s end-effector positions, respectively. We designed a heuristic , as this is a goal reaching task. Here the policy is randomly initialized, as no prior batch data is available before interactions.

In the dense reward experiments, we suppose that a batch of data collected by multiple behavioral policies are available before learning, and a heuristic is constructed by an offline policy evaluation algorithm from the batch data; in the experiments, we generated these behavioral policies by running SAC from scratch and saved the intermediate policies generated in training. We designed this heuristic generation experiment to simulate the typical scenario where offline data collected by multiple policies of various qualities is available before learning. In this case, a common method for inferring what values a good policy could get is to inspect the realized accumulated rewards in the dataset. For simplicity, we use basic Monte Carlo regression to construct heuristics, where a least squares regression problem was used to fit a fully connected neural network to predict the empirical returns on the trajectories in the sampled batch of data.

Specifically, for each dense reward Mujoco experiment, we ran SAC for 200 iterations and logged the intermediate policies for every 4 iterations, resulting in a total of 50 behavior policies. In each random seed of the experiment, we performed the following: We used each behavior policy to collect trajectories of at most 10,000 transition tuples, which gave about 500,000 offline data points over these 50 policies. These data were used to construct the Monte-Carlo regression data, which was done by computing the accumulated discounted rewards along sampled trajectories. Then we generated the heuristic used in the experiment by fitting a fully connected NN with (256,256)-hidden layers using default ADAM with step size 0.001 and minibatch size 128 for 30 epochs over this randomly generated dataset of 50 behavior policies.

For the dense reward Mujoco experiments, we also use behavior cloning (BC) with loss to warm start RL agents based on the same batch dataset of 500,000 offline data points. The base RL algorithm here is SAC, which is based on the standard implementation of Garage (MIT License) [37]. The policy and the value networks are fully connected neural networks, independent of each other. The policy is Tanh-Gaussian and the value network has a linear head.

Algorithms.

We compare the performance of different algorithms below. 1) BC 2) SAC 3) SAC with BC warm start (SAC w/ BC) 4) HuRL with a zero heuristic and BC warm start (HuRL-zero) 5) HuRL with the Monte-Carlo heuristic and BC warm start (HuRL-MC). For the HuRL algorithms, the mixing coefficient is scheduled as

for , where is the initial and controls the increasing rate. This schedule ensures that when . Increasing from makes converge to slower.

We chose these algorithms to illustrate the effect of each additional warm-start component (BC and heuristics) added on top of the base algorithm SAC. HuRL-zero is SAC w/ BC but with an extra schedule described above that further lowers the discount, whereas SAC and SAC w/ BC keep a constant discount factor.

Evaluation and Hyperparameters.

In each iteration, the RL agent has a fixed sample budget for environment interactions, and its performance is measured in terms of the undiscounted accumulated rewards (estimated by 10 rollouts) of the deterministic mean policy extracted from SAC. The hyperparameters used in the algorithms above were selected as follows. The selection was done by uniformly random grid search777We ran 300 and 120 randomly chosen configurations from Table 1 with different random seeds to tune the base algorithm and the -scheduler, respectively. Then the best configuration was used in the following experiments. over the range of hyperparameters in Table 1 to maximize the AUC of the training curve.

| Polcy step size | [0.00025, 0.0005, 0.001, 0.002] |

|---|---|

| Value step size | [0.00025, 0.0005, 0.001, 0.002] |

| Target step size | [0.005, 0.01, 0.02, 0.04] |

| [0.9, 0.99, 0.999] | |

| [0.90, 0.95, 0.98, 0.99] | |

| [, 1.0, ] |

First, the learning rates (policy step size, value step size, target step size) and the discount factor of the base RL algorithm, SAC, were tuned for each environment to maximize the performance. This tuned discount factor is used as the de facto discount factor of the original MDP . Fixing the hyperparameters above, and for the schedule of HuRL were tuned for each environment and each heuristic. The tuned hyperparameters and the environment specifications are given in Tables 2 and 3 below. (The other hyperparameters, in addition to the ones tuned above, were selected manually and fixed throughout all the experiments).

| Environment | Sparse-Reacher-v2 |

|---|---|

| Obs. Dim | 11 |

| Action Dim | 2 |

| Evaluation horizon | 500 |

| 0.9 | |

| Batch Size | 10000 |

| Policy NN Architecture | (64,64) |

| Value NN Architecture | (256,256) |

| Polcy step size | 0.00025 |

| Value step size | 0.00025 |

| Target step size | 0.02 |

| Minibatch Size | 128 |

| Num. of Grad. Step per Iter. | 1024 |

| HuRL | 0.5 |

| HuRL-MC |

| Environment | Hopper-v2 | HalfCheetah-v2 | Swimmer-v2 | Humanoid-v2 |

|---|---|---|---|---|

| Obs. Dim | 11 | 17 | 8 | 376 |

| Action Dim | 3 | 6 | 2 | 17 |

| Evaluation horizon | 1000 | 1000 | 1000 | 1000 |

| 0.999 | 0.99 | 0.999 | 0.99 | |

| Batch Size | 4000 | 4000 | 4000 | 10000 |

| Policy NN Architecture | (64,64) | (64,64) | (64,64) | (256,256) |

| Value NN Architecture | (256,256) | (256,256) | (256,256) | (256,256) |

| Polcy step size | 0.00025 | 0.00025 | 0.0005 | 0.002 |

| Value step size | 0.0005 | 0.0005 | 0.0005 | 0.00025 |

| Target step size | 0.02 | 0.04 | 0.0100 | 0.02 |

| Num. of Behavioral Policies | 50 | 50 | 50 | 50 |

| Minibatch Size | 128 | 128 | 128 | 128 |

| Num. of Grad. Step per Iter. | 1024 | 1024 | 1024 | 1024 |

| HuRL-MC | 0.95 | 0.99 | 0.95 | 0.9 |

| HuRL-MC | 1.0 | 1.0 | ||

| HuRL-zero | 0.98 | 0.99 | 0.99 | 0.95 |

| HuRL-zero | 1.0 |

Finally, after all these hyperparameters were decided, we conducted additional testing runs with 30 different random seeds and report their statistics here. The randomness include the data collection process of the behavioral policies, training the heuristics from batch data, BC, and online RL, but the behavioral policies are fixed.

While this procedure takes more compute (the computation resources are reported below; tuning the base SAC takes the most compute), it produces more reliable results without (luckily or unluckily) using some hand-specified hyperparameters or a particular way of aggregating scores when tuning hyperparameters across environments. Empirically, we also found using constant around leads to good performance, though it may not be the best environment-specific choice.

Resources.

Each run of the experiment was done using an Azure Standard_H8 machine (8 Intel Xeon E5 2667 CPUs; memory 56 GB; base frequency 3.2 GHz; all cores peak frequency 3.3 GHz; single core peak frequency 3.6 GHz). The Hopper-v2, HalfCheetah-v2, Swimmer-v2 experiments took about an hour per run. The Humanoid-v2 experiments took about 4 hours. No GPU was used.

Extra Experiments with VAE-based Heuristics.

We conduct additional experiments of HuRL using a VAE-filtered pessimistic heuristic. This heuristic is essentially the same as the Monte-Carlo regression-based heuristic we discussed, except that an extra VAE (variational auto-encoder) is used to classify states into known and unknown states in view of the batch dataset, and then the predicted values of unknown states are set to be the lowest empirical return seen in the dataset. In implementation, this is done by training a state VAE (with a latent dimension of 32) to model the states in the batch data, and then a new state classified as unknown if its VAE loss is higher than 99-th percentile of the VAE losses seen on the batch data. The implementation and hyperparameters are based on the code from Liu et al. [31]. We note, however, that this basic VAE-based heuristic does not satisfy the assumption of Proposition 4.5.

These results are shown in Fig. 3, where HuRL-VAEMC denotes HuRL using this VAE-based heuristic. Overall, we see that such a basic pessimistic estimate does not improve the performance from the pure Monte-Carlo version (HuRL-MC); while it does improve the results slightly in HalfCheetah-v2, it gets worse results in Humanoid-v2 and Swimmer-v2 compared with HuRL-MC. Nonetheless, HuRL-VAEMC is still better than the base SAC.

C.2 Procgen Experiments

In addition to MuJoCo environments, where the agent has direct access to the true low-dimensional system state, we conducted experiments on the Procgen benchmark suite [56, 33]. The Procgen suite consists of 16 procedurally generated Atari-like game environments, whose main conceptual differences from MuJoCo environments are partial observability and much higher dimensionality of agents’ observations (RGB images). The 16 games are very distinct structurally, but each game has an unlimited number of levels888In Procgen, levels aren’t ordered by difficulty. They are merely game variations. that share common characteristics. All levels of a given game are situated in the same underlying state space and have the same transition function but differ in terms of the regions of the state space reachable within each level and in their observation spaces. We focus on the sample efficiency Procgen mode [33]: in each RL episode the agent faces a new game level, and is expected to eventually learn a single policy that performs well across all levels of the given game.

Besides the differences in environment characteristics between MuJoCo and Procgen, the Procgen experiments are also dissimilar in their design:

-

•

In contrast to the MuJoCo experiments, where we assumed to be given a batch of data from which we constructed a heuristic and a warm-start policy, in the Procgen experiments we simulate a scenario where we are given only the heuristic function itself. Thus, we don’t warm-start the base algorithm with a BC policy when running HuRL.

-

•

In the Procgen experiments, we share a single set of all hyperparameters’ values – those of the base algorithm, those of HuRL’s -scheduling, and those used for generating heuristics – across all 16 games. This is meant to simulate a scenario where HuRL is applied across a diverse set of problems using good but problem-independent hyperparameters.

Algorithms.

We used PPO [36] implemented in RLlib (Apache License 2.0) [57] as the base algorithm. We generated a heuristic for each game as follows:

-

•

We ran PPO for environment interaction steps and saved the policy after every steps, for a total of 16 checkpoint policies.

-

•

We ran the policies in a random order by executing 12000 environment interaction steps using each policy. For each rollout trajectory, we computed the discounted return for each observation in that trajectory, forming training pairs.

-

•

We used this data to learn a heuristic via regression. We mixed the data, divided it into batches of 5000 training pairs and took a gradient step w.r.t. MSE computed over each batch. The learning rate was .

Our main algorithm, a HuRL flavor denoted as PPO-HuRL, is identical to the base PPO but uses the Monte-Carlo heuristic computed as above.

Hyperparameters and evaluation

The base PPO’s hyperparameters in RLlib were chosen to match PPO’s performance reported in the original Procgen paper [56] for the "easy" mode as closely as possible across all 16 games (Cobbe et al. [56] used a different PPO implementation with a different set of hyperparameters). As in that work, our agent used the IMPALA-CNN network architecture [58, 56] without the LSTM. The heuristics employed the same architecture as well. We used a single set of hyperparameter values, listed in Table 4, for all Procgen games, both for policy learning and for generating the checkpoints for computing the heuristics.

| Impala layer sizes | 16, 32, 32 |

|---|---|

| Rollout fragment length | 256 |

| Number of workers | 0 (in RLlib, this means 1 rollout worker) |

| Number of environments per worker | 64 |

| Number of CPUs per worker | 5 |

| Number of GPUs per worker | 0 |

| Number of training GPUs | 1 |

| 0.99 | |

| SGD minibatch size | 2048 |

| Train batch size | 4000 |

| Number of SGD iterations | 3 |

| SGD learning rate | 0.0005 |

| Framestacking | off |

| Batch mode | truncate_episodes |

| Value function clip parameter | 10.0 |

| Value function loss coefficient | 0.5 |

| Value function share layers | true |

| KL coefficient | 0.2 |

| KL target | 0.01 |

| Entropy coefficient | 0.01 |

| Clip parameter | 0.1 |

| Gradient clip | null |

| Soft horizon | False |

| No done at end: | False |

| Normalize actions | False |

| Simple optimizer | False |

| Clip rewards | False |

| GAE | 0.95 |

| PPO-HuRL | 0.99 |

| PPO-HuRL | 0.5 |

| [0.95, 0.97, 0.985, 0.98, 0.99] | |

| [0.5, 0.75, 1.0, 3.0, 5.0] |

In order to choose values for PPO-HuRL’s hyperparameters and , we fixed all of PPO’s hyperparameters, took the pre-computed heuristic for each game, and did a grid search over and ’s values listed in Table 5 to maximize the normalized average AUC across all games. To evaluate each hyperparameter value combination, we used 4 runs per game, each run using a random seed and lasting 8M environment interaction steps. The resulting values are listed in Table 4. Like PPO’s hyperparameters, they were kept fixed for all Procgen environments.

To obtain experimental results, we ran PPO and PPO-HuRL with the aforementioned hyperparameters on each of 16 games 20 times, each run using a random seed and lasting 8M steps as in Mohanty et al. [33]. We report the 25th, 50th, and 75th-percentile training curves. Each of the reported training curves was computed by smoothing policy performance in terms of unnormalized game scores over the preceding 100 episodes.

Resources.

Each policy learning run used a single Azure ND6s machine (6 Intel Xeon E5-2690 v4 CPUs with 112 GB memory and base core frequency of 2.6 GHz; 1 P40 GPU with 24 GB memory). A single PPO run took approximately 1.5 hours on average. A single PPO-HuRL run took approximately 1.75 hours.

Results.

The results are shown in Fig. 4. They indicate that, HuRL helps despite the highly challenging setup of this experiment: a) environments with a high-dimensional observation space; a) the chosen hyperparameter values being likely suboptimal for individual environments; c) the heuristics naively generated using Monte-Carlo samples from a mixture of policies of wildly varying quality; and d) the lack of policy warm-starting. We hypothesize that PPO-HuRL’s performance can be improved further with environment-specific hyperparameter tuning and a scheme for heuristic-quality-dependent adjustment of HuRL’s -schedules on the fly.