Heterogeneous Network Representation Learning: A Unified Framework with Survey and Benchmark

Abstract

Since real-world objects and their interactions are often multi-modal and multi-typed, heterogeneous networks have been widely used as a more powerful, realistic, and generic superclass of traditional homogeneous networks (graphs). Meanwhile, representation learning (a.k.a. embedding) has recently been intensively studied and shown to be effective for various network mining and analytical tasks. In this work, we aim to provide a unified framework to deeply summarize and evaluate existing research on heterogeneous network embedding (HNE), which includes but goes beyond a normal survey. Since there is already a broad body of HNE algorithms, as the first contribution of this work, we provide a generic paradigm for the systematic categorization and analysis of the merits of various existing HNE algorithms. Moreover, existing HNE algorithms, though mostly claimed to be generic, are often evaluated on different datasets. Although this is understandable given the application-driven nature of HNE, such indirect comparisons largely hinder the proper attribution of improved task performance to effective data preprocessing versus novel technical design, especially considering the many possible ways to construct a heterogeneous network from real-world application data. Therefore, as the second contribution, we create four benchmark datasets from different sources with various properties regarding scale, structure, attribute/label availability, etc., towards handy and fair evaluations of HNE algorithms. As the third contribution, we carefully refactor and amend the implementations of 13 popular HNE algorithms, create friendly interfaces for them, and provide all-around comparisons among them over multiple tasks and experimental settings.

By putting all existing HNE algorithms under a unified framework, we aim to provide a universal reference and guideline for the understanding and development of HNE algorithms. Meanwhile, by open-sourcing all data and code, we aim to serve the community with a ready-to-use benchmark platform to test and compare the performance of existing and future HNE algorithms (https://github.com/yangji9181/HNE).

Index Terms:

heterogeneous network, representation learning, survey, benchmark

1 Introduction

Networks and graphs constitute a canonical and ubiquitous paradigm for the modeling of interactive objects, which has drawn significant research attention from various scientific domains [1, 2, 3, 4, 5, 6]. However, real-world objects and interactions are often multi-modal and multi-typed (e.g., authors, papers, venues and terms in a publication network [7, 8]; users, places, categories and GPS-coordinates in a location-based social network [9, 10, 11]; and genes, proteins, diseases and species in a biomedical network [12, 13]). To capture and exploit such node and link heterogeneity, heterogeneous networks have been proposed and widely used in many real-world network mining scenarios, such as meta-path based similarity search [14, 15, 16], node classification and clustering [17, 18, 19], knowledge base completion [20, 21, 22], and recommendations [23, 24, 25].

In the meantime, current research on graphs has largely focused on representation learning (embedding), especially following the pioneering neural network based algorithms that have shown strong empirical evidence of unprecedentedly effective yet efficient graph mining [26, 27, 28]. These algorithms aim to convert graph data (e.g., nodes [29, 30, 31, 32, 33, 34, 35, 36], links [37, 38, 39, 40], and subgraphs [41, 42, 43, 44]) into low-dimensional distributed vectors in an embedding space where the graph topological information (e.g., higher-order proximity [45, 46, 47, 48] and structure [49, 50, 51, 52]) is preserved. Such embedding vectors can then be directly consumed by various downstream machine learning algorithms [53, 54, 55].

At the intersection of heterogeneous networks and graph embedding, heterogeneous network embedding (HNE) has recently also received significant research attention [56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76]. Because HNE is largely application-driven, many algorithms have been developed separately in different application domains such as search and recommendations [23, 77, 78, 5]. Moreover, as knowledge bases (KBs) also fall under the general umbrella of heterogeneous networks, many KB embedding algorithms can be compared with the HNE ones [79, 4, 80, 20, 81, 82, 21, 83, 84].

Unfortunately, various HNE algorithms are developed in quite disparate communities across academia and industry. They have never been systematically and comprehensively analyzed, either conceptually or experimentally. In fact, due to the lack of benchmark platforms (with ready-to-use datasets and baselines), researchers often tend to construct their own datasets and re-implement a few of the most popular (sometimes outdated) algorithms for comparison, which renders fair performance evaluation and clear improvement attribution extremely hard, if not impossible.

Simply consider the toy examples of a publication dataset in Figure 1 (data source: https://dblp.uni-trier.de/). Earlier HNE algorithms like metapath2vec [59] were developed on the heterogeneous network with node types of authors, papers and venues as in (a). However, one can enrich papers with a large number of terms and topics as additional nodes as in (b), which makes the random-walk based shallow embedding algorithms rather inefficient but favors neighborhood aggregation based deep graph neural networks like R-GCN [67]. Moreover, one can further include node attributes like term embeddings and labels like research fields, which are available only to the semi-supervised attributed embedding models and may thus introduce even more bias [66, 75, 85, 86]. Eventually, it is often hard to clearly attribute performance gains to technical novelty rather than to data tweaking.

In this work, we first formulate a unified yet flexible mathematical paradigm of HNE algorithms, easing the understanding of the critical merits of each model (Section 2). Particularly, based on a uniform taxonomy that clearly categorizes and summarizes the existing models (and likely future models), we propose a generic objective function of network smoothness, and reformulate all existing models into this uniform paradigm while highlighting their individual novel contributions (Section 3). We envision this paradigm to be helpful in guiding the development of future novel HNE algorithms and, in the meantime, in facilitating their conceptual contrast with existing ones.

As the second contribution, we prepare four benchmark heterogeneous network datasets through exhaustive data collection, cleaning, analysis and curation (Section 4; data and code are available at https://github.com/yangji9181/HNE). The resulting datasets cover a wide spectrum of application domains (i.e., publication, recommendation, knowledge base, and biomedicine) and have various properties regarding scale, structure, attribute/label availability, etc. This diverse set of data, together with a series of standard network mining tasks and evaluation metrics, constitutes a handy and fair benchmark resource for future HNE algorithms.

As the third contribution, we address the fact that many existing HNE algorithms (including some very popular ones) either lack a flexible implementation (e.g., hard-coded node and edge types, a fixed set of meta-paths, etc.) or do not scale to larger networks (e.g., high memory requirements during training), which places a heavy burden on new research (i.e., requiring much engineering effort for correct re-implementation). To this end, we focus on 13 popular HNE algorithms, carefully refactor and scale up their original implementations, and add interfaces for plug-and-run experiments on our prepared datasets (Section 5). Based on these ready-to-use and efficient implementations, we then conduct all-around empirical evaluations of the algorithms and report their benchmark performances. The empirical results, while providing much insight into the merits of different models consistent with the conceptual analysis in Section 3, also serve as an example use of our benchmark platform that future studies on HNE can follow.

Note that, although there have been several attempts to survey or benchmark heterogeneous network models [7, 8, 79, 87] and homogeneous graph embedding [26, 27, 28, 88, 89, 90], none of them has deeply looked into the intersection of the two. We advocate that our unified framework for the research and experiments on HNE is timely and necessary. Firstly, as we will cover in this work, there has been a significant amount of research on the particular problem of HNE, especially in the last several years, but most of it is scattered across different domains, lacking proper connections and comparisons. Secondly, none of the existing surveys has proposed a generic, mathematically complete paradigm for the conceptual analysis of all HNE models. Thirdly, existing surveys mostly do not provide systematic benchmark evaluation results, nor do they come with benchmark datasets and open-source baselines to facilitate future algorithm development.

The rest of this paper is organized as follows. Section 2 first introduces our proposed generic HNE paradigm. Subsequently, representative models in our survey are conceptually categorized and analyzed in Section 3. We then present in Section 4 our prepared benchmark datasets with detailed analysis. In Section 5, we provide a systematic empirical study over 13 popular HNE algorithms to benchmark the current state-of-the-art of HNE. Section 6 concludes the paper with visions towards future usage of our platform and research on HNE.

2 Generic Paradigm

2.1 Problem Definitions

Definition 2.1

Heterogeneous network. A heterogeneous network $H = \{V, E, X, R, \phi, \psi\}$ is a network with multiple types of nodes and links. Particularly, within $H$, each node $v_i \in V$ is associated with a node type $\phi(v_i)$, and each link $e_{ij} \in E$ is associated with a link type $\psi(e_{ij})$. Each node $v_i$ of type $o = \phi(v_i)$ is also potentially associated with attribute $X^o$, while each link $e_{ij}$ of type $o = \psi(e_{ij})$ is potentially associated with attribute $R^o$. It is worth noting that the type of a link $e_{ij}$ automatically defines the types of nodes $v_i$ and $v_j$ on its two ends.

Heterogeneous networks have been intensively studied due to their power of accommodating multi-modal, multi-typed interconnected data. Besides the classic example of DBLP data used in most existing works as well as Figure 1, consider a different yet illustrative example from NYTimes in Figure 2 (data source: https://www.nytimes.com/). Nodes in this heterogeneous network include news articles, categories, phrases, locations, and datetimes. To illustrate the power of heterogeneous networks, we introduce the concept of meta-path, which has been leveraged by most existing works on heterogeneous network modeling [7, 8].

Definition 2.2

Meta-path. A meta-path $M$ is a path defined on the network schema, denoted in the form of $o_1 \xrightarrow{l_1} o_2 \xrightarrow{l_2} \cdots \xrightarrow{l_m} o_{m+1}$, where $o$ and $l$ are node types and link types, respectively.

Each meta-path captures the proximity among the nodes on its two ends from a particular semantic perspective. Continuing with our example of a heterogeneous network from news data in Figure 2, the meta-path article → category → article carries different semantics from article → location → article. Thus, leveraging different meta-paths allows heterogeneous network models to compute multi-modal, multi-typed node proximity and relations, which has been shown to benefit many real-world network mining applications [15, 91, 25].
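Checking whether a concrete node sequence instantiates a meta-path only requires comparing node types and link types position by position. The Python sketch below does exactly that; the node names, the link-type labels `belongs_to` and `mentions`, and the undirected edge representation are hypothetical choices for illustration, not taken from the NYTimes data.

```python
def is_meta_path_instance(path, node_type, edges, meta_path):
    """Return True if the node sequence `path` is an instance of `meta_path`.

    node_type: dict mapping node -> node type (phi in Def 2.1)
    edges:     set of (u, v, link_type) triples, treated as undirected
    meta_path: alternating list [o_1, l_1, o_2, ..., l_m, o_{m+1}]
    """
    node_types = meta_path[0::2]  # o_1, ..., o_{m+1}
    link_types = meta_path[1::2]  # l_1, ..., l_m
    if len(path) != len(node_types):
        return False
    # Every node must carry the node type required at its position.
    if any(node_type[v] != o for v, o in zip(path, node_types)):
        return False
    # Every hop must be an existing link of the required link type.
    for u, v, l in zip(path, path[1:], link_types):
        if (u, v, l) not in edges and (v, u, l) not in edges:
            return False
    return True


# Hypothetical fragment of a news network in the spirit of Figure 2.
node_type = {"a1": "article", "a2": "article",
             "sports": "category", "paris": "location"}
edges = {("a1", "sports", "belongs_to"), ("a2", "sports", "belongs_to"),
         ("a1", "paris", "mentions")}
aca = ["article", "belongs_to", "category", "belongs_to", "article"]
ala = ["article", "mentions", "location", "mentions", "article"]

print(is_meta_path_instance(["a1", "sports", "a2"], node_type, edges, aca))  # True
print(is_meta_path_instance(["a1", "paris", "a2"], node_type, edges, ala))   # False
```

The second call fails because `a2` never mentions `paris`: both articles share a category but not a location, so the two meta-paths assign them different proximity.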

Next, we introduce the problem of general network embedding (representation learning).

Definition 2.3

Network embedding. For a given network $G = \{V, E\}$, where $V$ is the set of nodes (vertices) and $E$ is the set of links (edges), a network embedding is a mapping function $\Phi: V \mapsto \mathbb{R}^{|V| \times d}$, where $d \ll |V|$. This mapping $\Phi$ defines the latent representation (a.k.a. embedding) of each node $v \in V$, which captures network topological information in $E$.

In most cases, network proximity is the major topological information to be captured. For example, DeepWalk [29] captures the random-walk based node proximity and visualizes the 2-dimensional node representations learned on the famous Zachary's Karate network of small groups, where a clear correspondence between the node positions in the input graph and in the learned embedding space can be observed. Various follow-up works have improved or extended DeepWalk, while a complete coverage of them is beyond the scope of this work. In this work, we focus on the embedding of heterogeneous networks.

Now we define the main problem of focus in this work, heterogeneous network embedding (HNE), which lies in the intersection between Def 2.1 and Def 2.3.

Definition 2.4

Heterogeneous network embedding. For a given heterogeneous network $H$, a heterogeneous network embedding is a set of mapping functions $\{\Phi_k: V_k \mapsto \mathbb{R}^{|V_k| \times d}\}_{k=1}^{K}$, where $K$ is the number of node types, $\forall v_i \in V_k$, $\phi(v_i) = k$. Each mapping $\Phi_k$ defines the latent representation (a.k.a. embedding) of all nodes of type $k$, which captures the network topological information regarding the heterogeneous links in $E$.

Compared with homogeneous networks, the definition of topological information in heterogeneous networks is even more diverse. As we will show in Section 3, the major distinctions among different HNE algorithms mostly lie in their different ways of capturing such topological information. Particularly, the use of meta-paths as in Def 2.2 often plays an essential role, since many popular HNE algorithms explicitly aim to model the different proximities indicated by meta-paths [59, 63, 73, 70, 69, 75, 66, 77].

2.2 Proposed Paradigm

In this work, we stress that one of the most important principles underlying HNE (as well as most other scenarios of network modeling and mining) is homophily [92]. Particularly, in the network embedding setting, homophily can be translated as ‘nodes close on a network should have similar embeddings’, which matches the requirement of Def 2.3. In fact, we further find intrinsic connections between the well-known homophily principle and the widely used smoothness enforcement technique on networks [93, 94, 95], which leads to a generic mathematical paradigm covering most existing and likely many future HNE algorithms.

Based on earlier well-established concepts underlying network modeling and embedding learning [96, 97, 93, 94, 95], we introduce the following key objective function of network smoothness enforcement:

$$\mathcal{J} = \sum_{u,v \in V} w_{uv}\, d(\mathbf{e}_u, \mathbf{e}_v) + \mathcal{J}_R, \tag{1}$$

where $\mathbf{e}_u = \Phi(u)$ and $\mathbf{e}_v = \Phi(v)$ are the node embedding vectors to be learned, $w_{uv}$ is the proximity weight, $d(\cdot, \cdot)$ is the embedding distance function, and $\mathcal{J}_R$ denotes possible additional objectives such as regularizers, all three of which can be defined and implemented differently by the particular HNE algorithms.
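To make the roles of $w_{uv}$, $d$ and $\mathcal{J}_R$ in Eq. (1) concrete, here is a minimal Python sketch of $\mathcal{J} = \sum_{u,v} w_{uv}\, d(\mathbf{e}_u, \mathbf{e}_v) + \mathcal{J}_R$. It assumes squared Euclidean distance for $d$ and a small L2 penalty as a hypothetical $\mathcal{J}_R$; both choices, the function names, and the toy embeddings are illustrative and not taken from any particular HNE algorithm.

```python
def sq_dist(a, b):
    """Squared Euclidean distance, one common choice for d(e_u, e_v)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def smoothness_objective(emb, weights, dist=sq_dist, reg=None):
    """J = sum_{u,v} w_uv * d(e_u, e_v) + J_R (to be minimized).

    emb:     dict node -> embedding vector (list of floats)
    weights: dict (u, v) -> proximity weight w_uv (zero weights omitted)
    dist:    embedding distance function d
    reg:     optional callable emb -> float playing the role of J_R
    """
    j = sum(w * dist(emb[u], emb[v]) for (u, v), w in weights.items())
    if reg is not None:
        j += reg(emb)
    return j


def l2_reg(emb, lam=0.01):
    """Hypothetical J_R: an L2 penalty on all embedding entries."""
    return lam * sum(x * x for vec in emb.values() for x in vec)


emb = {"a": [0.0, 1.0], "b": [0.0, 0.9], "c": [1.0, 0.0]}
weights = {("a", "b"): 5.0, ("a", "c"): 1.0}  # a-b strongly tied, a-c weakly tied
print(smoothness_objective(emb, weights, reg=l2_reg))
```

Moving `b` closer to `a` lowers the weighted distance term, which is the homophily intuition behind Eq. (1): the larger $w_{uv}$, the more the objective penalizes $u$ and $v$ drifting apart.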

3 Algorithm Taxonomy

In this section, we propose a universal taxonomy for existing HNE algorithms with three categories based on their common objectives, and elaborate in detail on how they fit into our paradigm of Eq. (1). The main challenge of instantiating Eq. (1) on heterogeneous networks is the consideration of complex interactions regarding multi-typed links and higher-order meta-paths. In fact, our Eq. (1) also readily applies to homogeneous networks, though that is beyond the scope of this work.

3.1 Proximity-Preserving Methods

As mentioned above, one basic goal of network embedding is to capture network topological information. This can be achieved by preserving different types of proximity among nodes. There are two major categories of proximity-preserving methods in HNE: random walk approaches (inspired by DeepWalk [29]) and first/second-order proximity based ones (inspired by LINE [30]). Both types of proximity-preserving methods are considered shallow network embedding, since they essentially perform a single-layer decomposition of certain affinity matrices [98].

3.1.1 Random Walk Approaches

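metapath2vec [59]. Following homogeneous network embedding [29, 31], metapath2vec utilizes the node paths traversed by meta-path guided random walks to model the context of a node regarding heterogeneous semantics. Formally, given a meta-path $M = o_1 \xrightarrow{l_1} o_2 \xrightarrow{l_2} \cdots \xrightarrow{l_{m-1}} o_m$, the transition probability at step $i$ is defined as

$$p(v_{i+1} \mid v_i) = \begin{cases} \dfrac{1}{|N_{l_i}(v_i)|}, & \phi(v_{i+1}) = o_{i+1},\ \psi(v_i, v_{i+1}) = l_i, \\ 0, & \text{otherwise}, \end{cases} \tag{2}$$

where $N_l(v) = \{u \mid \psi(u, v) = l\}$ denotes the neighbors of $v$ associated with edge type $l$. Assume $\mathcal{P}$ is the set of generated random walk sequences. The objective of metapath2vec is

$$\mathcal{J} = \sum_{v \in V} \sum_{u \in C(v)} \log \frac{\exp(\mathbf{e}_u^T \mathbf{e}_v)}{\sum_{u' \in V} \exp(\mathbf{e}_{u'}^T \mathbf{e}_v)}, \tag{3}$$

where $C(v)$ is the context (i.e., skip-grams) of $v$ in $\mathcal{P}$. For example, if $P_1 = v_1 v_2 v_3 v_4 v_5 \ldots \in \mathcal{P}$ and the context window size is 2, then $\{v_1, v_2, v_4, v_5\} \subseteq C(v_3)$. Let $w_{uv}$ be the number of times that $u \in C(v)$ in $\mathcal{P}$; we can then rewrite Eq. (3) as

$$\mathcal{J} = \sum_{u,v \in V} w_{uv} \log \frac{\exp(\mathbf{e}_u^T \mathbf{e}_v)}{\sum_{u' \in V} \exp(\mathbf{e}_{u'}^T \mathbf{e}_v)}.$$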

Calculating the denominator in this objective requires summing over all nodes, which is computationally expensive. In actual computation, it is approximated using negative sampling [99, 30].
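The walk-then-count pipeline behind Eqs. (2) and (3) can be sketched in plain Python. Assumptions: link types are folded into node types (each pair of node types is joined by a single link type), the meta-path is symmetric and repeated cyclically along the walk, and the author/paper/venue toy graph is hypothetical. The resulting counter holds the pair counts $w_{uv}$ (how often $u$ falls in the context window of $v$) used to rewrite Eq. (3) as a weighted sum over node pairs.

```python
import random
from collections import Counter


def metapath_walk(start, adj, node_type, meta_path, length, rng):
    """Meta-path guided random walk in the spirit of Eq. (2).

    Simplification (assumption): link types are folded into node types, so the
    walk only checks that the next node has the next type in `meta_path`.
    `meta_path` is symmetric (first type == last type) and repeated cyclically.
    """
    if node_type[start] != meta_path[0]:
        raise ValueError("walk must start at a node of type meta_path[0]")
    period = len(meta_path) - 1
    walk = [start]
    for step in range(1, length):
        wanted = meta_path[step % period]
        # Uniform choice among type-matching neighbors, mirroring 1/|N_l(v_i)|.
        candidates = [u for u in adj[walk[-1]] if node_type[u] == wanted]
        if not candidates:
            break  # transition probability is 0 for every neighbor
        walk.append(rng.choice(candidates))
    return walk


def context_counts(walks, window):
    """w[(u, v)] = number of times u appears in the skip-gram context C(v)."""
    w = Counter()
    for walk in walks:
        for i, v in enumerate(walk):
            lo, hi = max(0, i - window), min(len(walk), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    w[(walk[j], v)] += 1
    return w


# Hypothetical toy bibliographic network: authors a*, papers p*, venue v1.
adj = {"a1": ["p1"], "a2": ["p1", "p2"], "a3": ["p2"],
       "p1": ["a1", "a2", "v1"], "p2": ["a2", "a3", "v1"], "v1": ["p1", "p2"]}
node_type = {n: {"a": "author", "p": "paper", "v": "venue"}[n[0]] for n in adj}
rng = random.Random(0)
walks = [metapath_walk("a1", adj, node_type, ["author", "paper", "author"], 5, rng)
         for _ in range(10)]
w = context_counts(walks, window=2)
```

Because the walk is restricted to the author-paper-author pattern, the venue node never enters any context, so it receives no $w_{uv}$ mass under this meta-path even though it is adjacent to both papers.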

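HIN2Vec [63]. HIN2Vec considers the probability that there is a meta-path $M$ between nodes $u$ and $v$. Specifically,

$$p(M \mid u, v) = \sigma\Big(\mathbf{1}^T \big(\mathbf{W}_X^T \vec{u} \odot \mathbf{W}_Y^T \vec{v} \odot f_{01}(\mathbf{W}_R^T \vec{m})\big)\Big),$$

where $\vec{u}$, $\vec{v}$ and $\vec{m}$ are one-hot vectors of $u$, $v$ and $M$; $\mathbf{1}$ is an all-ones vector; $\odot$ is the Hadamard product; $f_{01}$ is a normalization function. Here $\mathbf{e}_u = \mathbf{W}_X^T \vec{u}$, $\mathbf{e}_v = \mathbf{W}_Y^T \vec{v}$ and $\mathbf{e}_M = f_{01}(\mathbf{W}_R^T \vec{m})$ can be viewed as the embeddings of $u$, $v$ and $M$, respectively. Let $\mathbf{A}_M = \mathrm{diag}(e_{M1}, \ldots, e_{Md})$. We have

$$p(M \mid u, v) = \sigma\big(\mathbf{1}^T (\mathbf{e}_u \odot \mathbf{e}_v \odot \mathbf{e}_M)\big) = \sigma(\mathbf{e}_u^T \mathbf{A}_M \mathbf{e}_v).$$

$\sigma$ is the sigmoid function, so we have

$$1 - p(M \mid u, v) = 1 - \sigma(\mathbf{e}_u^T \mathbf{A}_M \mathbf{e}_v) = \sigma(-\mathbf{e}_u^T \mathbf{A}_M \mathbf{e}_v).$$

HIN2Vec generates positive tuples $(u, v, M)$ (i.e., $u$ connects with $v$ via the meta-path $M$) using homogeneous random walks [29] regardless of node/link types. For each positive tuple $(u, v, M)$, it generates several negative tuples by replacing $u$ with a random node $u'$. Its objective is

$$\mathcal{J}_0 = \sum_{(u,v,M)} \log p(M \mid u, v) + \sum_{(u',v,M)} \log\big(1 - p(M \mid u', v)\big) = \sum_{(u,v,M)} \log \sigma(\mathbf{e}_u^T \mathbf{A}_M \mathbf{e}_v) + \sum_{(u',v,M)} \log \sigma(-\mathbf{e}_{u'}^T \mathbf{A}_M \mathbf{e}_v).$$

This is essentially the negative sampling approximation of the following objective

$$\mathcal{J} = \sum_{M} \sum_{u,v \in V} w_{uv}^M \log \frac{\exp(\mathbf{e}_u^T \mathbf{A}_M \mathbf{e}_v)}{\sum_{u' \in V} \exp(\mathbf{e}_{u'}^T \mathbf{A}_M \mathbf{e}_v)},$$

where $w_{uv}^M$ is the number of path instances between $u$ and $v$ following the meta-path $M$.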
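The rewriting of HIN2Vec's probability as a sigmoid of a diagonal bilinear form, with $\mathbf{A}_M = \mathrm{diag}(\mathbf{e}_M)$, is easy to confirm numerically. The sketch below assumes the meta-path embedding has already passed through $f_{01}$ (entries in $[0, 1]$) and uses hypothetical 3-dimensional vectors; it also evaluates the positive/negative log-likelihood for a single tuple.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def score_hadamard(e_u, e_v, e_m):
    """1^T (e_u ⊙ e_v ⊙ e_M): sum of the element-wise triple product."""
    return sum(a * b * c for a, b, c in zip(e_u, e_v, e_m))


def score_bilinear(e_u, e_v, e_m):
    """e_u^T A_M e_v with A_M = diag(e_M), built explicitly as a dense matrix."""
    d = len(e_m)
    A = [[e_m[i] if i == j else 0.0 for j in range(d)] for i in range(d)]
    A_ev = [sum(A[i][j] * e_v[j] for j in range(d)) for i in range(d)]
    return sum(e_u[i] * A_ev[i] for i in range(d))


def tuple_log_likelihood(e_u, e_v, e_m, negatives):
    """log σ(e_u^T A_M e_v) + Σ_{u'} log σ(-e_{u'}^T A_M e_v) for one positive tuple."""
    ll = math.log(sigmoid(score_hadamard(e_u, e_v, e_m)))
    for e_neg in negatives:
        ll += math.log(sigmoid(-score_hadamard(e_neg, e_v, e_m)))
    return ll


# Hypothetical embeddings; e_m is assumed to be already normalized by f_01.
e_u, e_v, e_m = [0.5, -1.0, 2.0], [1.0, 0.3, -0.2], [0.9, 0.1, 0.5]
s = score_hadamard(e_u, e_v, e_m)
print(s, score_bilinear(e_u, e_v, e_m))  # equal up to floating-point rounding
print(1 - sigmoid(s), sigmoid(-s))       # 1 - σ(x) = σ(-x)
```

Since $\mathbf{A}_M$ is diagonal, the dense matrix in `score_bilinear` is only there to mirror the formula; in practice the Hadamard form is the cheaper way to compute the same score.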

Other random walk approaches are summarized in Table I. To be specific, MRWNN [57] incorporates content priors into DeepWalk for image retrieval; SHNE [69] incorporates additional node information like categorical attributes, images, etc. by leveraging domain-specific deep encoders; HHNE [73] extends metapath2vec to the hyperbolic space; GHE [19] proposes a semi-supervised meta-path weighting technique; MNE [100] conducts random walks separately for each view in a multi-view network; JUST [65] proposes random walks with Jump and Stay strategies that do not rely on pre-defined meta-paths; HeteSpaceyWalk [101] introduces a scalable embedding framework based on heterogeneous personalized spacey random walks; TapEm [102] proposes a task-guided node pair embedding approach for author identification.

3.1.2 First/Second-Order Proximity Based Approaches

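PTE [103]. PTE proposes to decompose a heterogeneous network into multiple bipartite networks, each of which describes one edge type. Its objective is the sum of log-likelihoods over all bipartite networks:

$$\mathcal{J} = \sum_{l \in T_E} \sum_{u,v \in V} w_{uv}^l \log \frac{\exp(\mathbf{e}_u^T \mathbf{e}_v)}{\sum_{u' \in V_{\phi(u)}} \exp(\mathbf{e}_{u'}^T \mathbf{e}_v)} = \sum_{u,v \in V} w_{uv} \log \frac{\exp(\mathbf{e}_u^T \mathbf{e}_v)}{\sum_{u' \in V_{\phi(u)}} \exp(\mathbf{e}_{u'}^T \mathbf{e}_v)}.$$

Here $T_E$ is the set of edge types; $w_{uv}^l$ is the type-$l$ edge weight of $(u, v)$ (if there is no edge between $u$ and $v$ with type $l$, then $w_{uv}^l = 0$); $w_{uv} = \sum_l w_{uv}^l$ is the total edge weight between $u$ and $v$.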
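The two forms of the PTE objective differ only in whether the edge types are summed before or after the log-likelihood, since the softmax denominator does not depend on the edge type. The Python sketch below first collapses typed weights into $w_{uv}$ and then evaluates the softmax restricted to nodes of the same type as $u$; the user/item network, the edge-type names and the plain dot-product scoring are hypothetical illustrations, not PTE's original setup.

```python
import math
from collections import defaultdict


def total_weights(typed_edges):
    """Collapse (u, v, edge_type, weight) records into w_uv = Σ_l w_uv^l."""
    w = defaultdict(float)
    for u, v, _edge_type, weight in typed_edges:
        w[(u, v)] += weight
    return dict(w)


def pte_log_likelihood(emb, node_type, w):
    """Σ_{u,v} w_uv · log softmax, where the softmax over u' only ranges over
    nodes of the same type as u (the V_{φ(u)} restriction)."""
    ll = 0.0
    for (u, v), weight in w.items():
        logits = {x: sum(a * b for a, b in zip(emb[x], emb[v]))
                  for x in emb if node_type[x] == node_type[u]}
        m = max(logits.values())  # subtract the max for numerical stability
        log_z = m + math.log(sum(math.exp(s - m) for s in logits.values()))
        ll += weight * (logits[u] - log_z)
    return ll


# Hypothetical user-item network with two edge types between the same pair.
typed_edges = [("u1", "i1", "click", 2.0), ("u1", "i1", "purchase", 1.0),
               ("u2", "i2", "click", 1.0)]
w = total_weights(typed_edges)
emb = {"u1": [0.3, 0.1], "u2": [-0.2, 0.4], "i1": [0.5, 0.0], "i2": [0.0, 0.6]}
node_type = {"u1": "user", "u2": "user", "i1": "item", "i2": "item"}
print(w)  # {('u1', 'i1'): 3.0, ('u2', 'i2'): 1.0}
print(pte_log_likelihood(emb, node_type, w))
```

Restricting the denominator to $V_{\phi(u)}$ means a user is only contrasted with other users, which is what makes each bipartite sub-network a separate prediction problem.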

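AspEm [60]. AspEm assumes that each heterogeneous network has multiple aspects, and each aspect is defined as a subgraph of the network schema [14]. An incompatibility measure is proposed to select appropriate aspects for embedding learning. Given an aspect $a$, its objective is

$$\mathcal{J} = \sum_{l \in T_E^a} \frac{1}{Z_l} \sum_{u,v \in V} w_{uv}^l \log \frac{\exp(\mathbf{e}_{u,a}^T \mathbf{e}_{v,a})}{\sum_{u' \in V_{\phi(u)}} \exp(\mathbf{e}_{u',a}^T \mathbf{e}_{v,a})},$$

where $T_E^a$ is the set of edge types in aspect $a$; $Z_l = \sum_{u,v \in V} w_{uv}^l$ is a normalization factor; $\mathbf{e}_{u,a}$ is the aspect-specific embedding of $u$.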

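Table I: A summary of proximity-preserving HNE algorithms.

| Algorithm | $w_{uv}$ / $w_{uv}^l$ / $w_{uv}^M$ | $d(\mathbf{e}_u, \mathbf{e}_v)$ | $\mathcal{J}_{R0}$ | Applications |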

| MRWNN [57] | number of times that in homogeneous random walks | image retrieval | ||

| metapath2vec [59] | number of times that in heterogeneous random walks following | N/A | node classification, node clustering, link prediction, recommendation | |

| SHNE [69] | , | |||

| HHNE [73] | ||||

| GHE [19] | number of meta-path instances between and | author identification | ||

| HIN2Vec [63] | following | node classification, node clustering, link prediction, recommendation | ||

| MNE [100] | number of times that in homogeneous random walks in | |||

| JUST [65] | number of times that in Jump/Stay random walks [65] | |||

| HeteSpaceyWalk [101] | number of times that in heterogeneous spacey random walks [101] | |||

| TapEm [102] | number of times that in heterogeneous random walks following | : representation of the node sequence from to following | supervised loss | author identification |

| HNE [56] | , | text classification, image retrieval | ||

| PTE [103] | edge weight of | N/A | text classification | |

| DRL [68] | node classification | |||

| CMF [58] | relatedness measurement of Wikipedia entities | |||

| HEBE [62] | edge weight of hyperedge [62] | , | N/A | event embedding |

| Phine [11] | number of times that and co-occur in a meta-graph instance | supervised loss | user rating prediction | |

| MVE [104] | edge weight of with type | node classification, link prediction | ||

| AspEm [60] | , : aspect | N/A | aspect mining | |

| HEER [61] | relation prediction in knowledge graphs | |||

| GERM [105] | edge weight of with type (if is activated by the genetic algorithm [105]) | citation recommendation, coauthor recommendation, feeds recommendation | ||

| mg2vec [106] | 1st: number of meta-graph instances containing node 2nd: number of meta-graph instances containing both nodes and | 1st: 2nd: : meta-graph | relationship prediction, relationship search |

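HEER [61]. HEER extends PTE by considering typed closeness. Specifically, each edge type $l$ has an embedding $\boldsymbol{\mu}_l$, and its objective is

$$\mathcal{J} = \sum_{l \in T_E} \sum_{u,v \in V} w_{uv}^l \log \frac{\exp(\boldsymbol{\mu}_l^T \mathbf{g}_{uv})}{\sum_{(u',v') \in P_l(u,v)} \exp(\boldsymbol{\mu}_l^T \mathbf{g}_{u'v'})},$$

where $\mathbf{g}_{uv}$ is the edge embedding of $(u, v)$; $P_l(u,v) = \{(u', v) \mid \psi(u', v) = l\} \cup \{(u, v') \mid \psi(u, v') = l\}$. In [61], $\mathbf{g}_{uv}$ has different definitions for directed and undirected edges based on the Hadamard product. To simplify our discussion, we assume $\mathbf{g}_{uv} = \mathbf{e}_u \odot \mathbf{e}_v$. Let $\mathbf{A}_l = \mathrm{diag}(\mu_{l1}, \ldots, \mu_{ld})$. Then we have $\boldsymbol{\mu}_l^T \mathbf{g}_{uv} = \mathbf{e}_u^T \mathbf{A}_l \mathbf{e}_v$ and

$$\mathcal{J} = \sum_{l \in T_E} \sum_{u,v \in V} w_{uv}^l \log \frac{\exp(\mathbf{e}_u^T \mathbf{A}_l \mathbf{e}_v)}{\sum_{(u',v') \in P_l(u,v)} \exp(\mathbf{e}_{u'}^T \mathbf{A}_l \mathbf{e}_{v'})}.$$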

Other first/second-order proximity based approaches are summarized in Table I. To be specific, Chang et al. [56] introduce a node type-aware content encoder; CMF [58] performs joint matrix factorization over the decomposed bipartite networks; HEBE [62] preserves proximity regarding each meta-graph; Phine [11] combines additional regularization towards semi-supervised training; MVE [104] proposes an attention based framework to consider multiple views of the same nodes; DRL [68] proposes to learn one type of edges at each step and uses deep reinforcement learning approaches to select the edge type for the next step; GERM [105] adopts a genetic evolutionary approach to preserve the critical edge types while removing the noisy or incompatible ones given specific tasks; mg2vec [106] jointly embeds nodes and meta-graphs into the same space by exploiting both first-order and second-order proximity.

3.1.3 Unified Objectives

Based on the discussions above, the objective of most proximity-preserving methods can be unified as

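$$\mathcal{J} = \sum_{u,v \in V} w_{uv} \log \frac{\exp(s(u,v))}{\sum_{u' \in V} \exp(s(u',v))} + \mathcal{J}_{R0}. \tag{4}$$

Here, $w_{uv}$ is the weight of node pair $(u, v)$ in the objective ($w_{uv}$ can be specific to a meta-path $M$ or an edge type $l$, in which case we denote it as $w_{uv}^M$ or $w_{uv}^l$ accordingly; in Eq. (4) and the following derivations, we omit the superscript for ease of notation); $\mathcal{J}_{R0}$ is an algorithm-specific regularization term (summarized in Table I); $s(u, v)$ is a proximity function between $u$ and $v$.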

Negative Sampling. Directly optimizing the objective in Eq. (4) is computationally expensive because it needs to traverse all nodes when computing the softmax function. Therefore, in actual computation, most studies adopt the negative sampling strategy [99, 30], which modifies the objective as follows:

$$\mathcal{J} = \sum_{u,v \in V} w_{uv} \Big( \log \sigma\big(s(u,v)\big) + \sum_{n=1}^{b} \mathbb{E}_{u'_n \sim P_N} \big[ \log \sigma\big(-s(u'_n, v)\big) \big] \Big) + \mathcal{J}_{R0}.$$

Here, $b$ is the number of negative samples; $P_N$ is known as the noise distribution that generates negative samples.
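The negative-sampling objective replaces the full softmax with a few sampled "noise" nodes per pair. In the sketch below, $s(u,v) = \mathbf{e}_u^T \mathbf{e}_v$, and the noise distribution $P_N$ is taken proportional to each node's weighted degree raised to the power 0.75, the heuristic popularized by word2vec; that choice, the toy embeddings, and the function names are assumptions rather than the setting of any particular HNE algorithm. The expectation is estimated with one Monte Carlo draw of `b` negatives per pair.

```python
import math
import random
from collections import Counter


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def score(emb, u, v):
    """s(u, v) = e_u^T e_v."""
    return sum(a * c for a, c in zip(emb[u], emb[v]))


def noise_distribution(w, power=0.75):
    """P_N(u) proportional to (weighted degree of u as a source) ** power."""
    deg = Counter()
    for (u, _v), weight in w.items():
        deg[u] += weight
    nodes = sorted(deg)
    unnorm = [deg[n] ** power for n in nodes]
    z = sum(unnorm)
    return nodes, [p / z for p in unnorm]


def neg_sampling_objective(emb, w, b, rng, power=0.75):
    """Monte Carlo estimate of
    sum_{u,v} w_uv * (log σ(s(u,v)) + sum_{n=1}^{b} log σ(-s(u'_n, v))), u'_n ~ P_N."""
    nodes, probs = noise_distribution(w, power)
    total = 0.0
    for (u, v), weight in w.items():
        term = math.log(sigmoid(score(emb, u, v)))
        for u_neg in rng.choices(nodes, weights=probs, k=b):
            term += math.log(sigmoid(-score(emb, u_neg, v)))
        total += weight * term
    return total


emb = {"a": [0.1, 0.2], "b": [0.3, -0.1], "c": [-0.2, 0.4]}
w = {("a", "b"): 1.0, ("c", "b"): 16.0}
print(noise_distribution(w))  # c is drawn about 8x as often as a (16 ** 0.75 = 8)
print(neg_sampling_objective(emb, w, b=5, rng=random.Random(0)))
```

With `b=0` the function reduces to the positive-pair term alone, which makes it easy to see that each negative sample only ever lowers the (log-likelihood) objective that training then pushes back up.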

Negative sampling serves as a generic paradigm to unify network embedding approaches. For example, starting from the negative sampling objective, Qiu et al. [98] unify DeepWalk [29], LINE [30], PTE [103] and node2vec [31] into a matrix factorization framework. In this paper, as mentioned in Section 2.2, we introduce another objective (i.e., Eq. (1)) that is equivalent to Eq. (4) and consider network embedding from the perspective of network smoothness enforcement.

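Network Smoothness Enforcement. Note that in most cases, we can write $s(u, v)$ in Eq. (4) as $f(\mathbf{e}_u)^T f(\mathbf{e}_v)$. For example, $f(\mathbf{e}_u) = \mathbf{e}_u$ in metapath2vec, PTE, etc.; $f(\mathbf{e}_u) = \sqrt{\mathbf{A}_M}\,\mathbf{e}_u$ in HIN2Vec; $f(\mathbf{e}_u) = \sqrt{\mathbf{A}_l}\,\mathbf{e}_u$ in HEER. In these cases,

$$\begin{aligned}
\mathcal{J} &= \sum_{u,v \in V} w_{uv}\, s(u,v) - \sum_{u,v \in V} w_{uv} \log \sum_{u' \in V} \exp(s(u',v)) + \mathcal{J}_{R0} \\
&= \sum_{u,v \in V} \frac{w_{uv}}{2} \Big( \|f(\mathbf{e}_u)\|^2 + \|f(\mathbf{e}_v)\|^2 - \|f(\mathbf{e}_u) - f(\mathbf{e}_v)\|^2 \Big) - \sum_{u,v \in V} w_{uv} \log \sum_{u' \in V} \exp(s(u',v)) + \mathcal{J}_{R0}.
\end{aligned}$$

The last step holds because $\|\mathbf{x} - \mathbf{y}\|^2 = \|\mathbf{x}\|^2 + \|\mathbf{y}\|^2 - 2\mathbf{x}^T\mathbf{y}$. Therefore, maximizing $\mathcal{J}$ is equivalent to minimizing

$$-\mathcal{J} = \sum_{u,v \in V} \frac{w_{uv}}{2} \|f(\mathbf{e}_u) - f(\mathbf{e}_v)\|^2 - \mathcal{J}_{R1} + \mathcal{J}_{R2} - \mathcal{J}_{R0}, \tag{5}$$

where $\mathcal{J}_{R1} = \sum_{u,v \in V} \frac{w_{uv}}{2} \big( \|f(\mathbf{e}_u)\|^2 + \|f(\mathbf{e}_v)\|^2 \big)$ and $\mathcal{J}_{R2} = \sum_{u,v \in V} w_{uv} \log \sum_{u' \in V} \exp(s(u',v))$.

Here $\mathcal{J}_{R1}$ and $\mathcal{J}_{R2}$ are two regularization terms. Without $\mathcal{J}_{R1}$, the smoothness term (i.e., $d(\mathbf{e}_u, \mathbf{e}_v) \propto \|f(\mathbf{e}_u) - f(\mathbf{e}_v)\|^2$) can be minimized by letting $\|f(\mathbf{e}_v)\| \to 0$; without $\mathcal{J}_{R2}$, it can be minimized by letting $\mathbf{e}_v \equiv \mathbf{c}$ ($\forall v \in V$). $\mathcal{J}_R$ in Eq. (1) is then the sum of $-\mathcal{J}_{R0}$, $-\mathcal{J}_{R1}$ and $\mathcal{J}_{R2}$. Among them, $\mathcal{J}_{R0}$ is algorithm-specific, while $\mathcal{J}_{R1}$ and $\mathcal{J}_{R2}$ are commonly shared across most HNE algorithms in the proximity-preserving group. We summarize the choices of $w_{uv}$, $d(\mathbf{e}_u, \mathbf{e}_v)$ and $\mathcal{J}_{R0}$ in Table I.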
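Because the step from Eq. (4) to Eq. (5) is pure algebra, it can be checked numerically. The sketch assumes $f$ is the identity (the metapath2vec/PTE case), random hypothetical embeddings and weights, and compares Eq. (4) evaluated directly against the negation of $\sum_{u,v} \frac{w_{uv}}{2}\|\mathbf{e}_u - \mathbf{e}_v\|^2 - \mathcal{J}_{R1} + \mathcal{J}_{R2} - \mathcal{J}_{R0}$, with $\mathcal{J}_{R1} = \sum_{u,v} \frac{w_{uv}}{2}(\|\mathbf{e}_u\|^2 + \|\mathbf{e}_v\|^2)$ and $\mathcal{J}_{R2} = \sum_{u,v} w_{uv} \log \sum_{u'} \exp(\mathbf{e}_{u'}^T \mathbf{e}_v)$.

```python
import math
import random


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def sq_norm(x):
    return dot(x, x)


def eq4_and_eq5(emb, w, j_r0=0.0):
    """Return (J via Eq. (4), J rebuilt from the terms of Eq. (5)),
    assuming s(u, v) = e_u^T e_v, i.e. f is the identity."""
    nodes = list(emb)
    # log Σ_{u'} exp(s(u', v)) for every context node v
    log_z = {v: math.log(sum(math.exp(dot(emb[x], emb[v])) for x in nodes))
             for v in nodes}
    j4 = sum(wt * (dot(emb[u], emb[v]) - log_z[v]) for (u, v), wt in w.items()) + j_r0

    smooth = sum(wt / 2 * sq_norm([a - b for a, b in zip(emb[u], emb[v])])
                 for (u, v), wt in w.items())
    j_r1 = sum(wt / 2 * (sq_norm(emb[u]) + sq_norm(emb[v])) for (u, v), wt in w.items())
    j_r2 = sum(wt * log_z[v] for (u, v), wt in w.items())
    neg_j = smooth - j_r1 + j_r2 - j_r0  # right-hand side of Eq. (5)
    return j4, -neg_j


rng = random.Random(42)
emb = {n: [rng.uniform(-1, 1) for _ in range(3)] for n in "abcd"}
w = {("a", "b"): 2.0, ("b", "c"): 1.0, ("d", "a"): 0.5}
j4, j5 = eq4_and_eq5(emb, w, j_r0=0.1)
print(j4, j5)  # equal up to floating-point rounding
```

The check makes the role of the two shared regularizers visible: dropping `j_r1` or `j_r2` from `neg_j` breaks the equality, i.e. the smoothness term alone is not the full softmax objective.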

Although Eq. (4) can cover most existing and likely many future approaches, we would like to remark that there are also studies adopting other forms of proximity-preserving objectives. For example, SHINE [107] uses reconstruction loss of autoencoders; HINSE [64] adopts spectral embedding based on adjacency matrices with different meta-graphs; MetaDynaMix [108] introduces a low-rank matrix factorization framework for dynamic HIN embedding; HeGAN [72] proposes an adversarial learning approach with a relation type-aware discriminator.

3.2 Message-Passing Methods

Each node $v$ in a network can have attribute information represented as a feature vector $\mathbf{x}_v$. Message-passing methods aim to learn node embeddings $\mathbf{e}_v$ based on $\mathbf{x}_v$ by aggregating the information from $v$'s neighbors. In recent studies, Graph Neural Networks (GNNs) [33] are widely adopted to facilitate this aggregation/message-passing process. Compared to the proximity-preserving HNE methods, message-passing methods, especially the GNN-based ones, are often considered deep network embedding, due to their multiple layers of learnable projection functions.

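R-GCN [67]. R-GCN has $K$ convolutional layers. The initial node representation $\mathbf{h}_v^{(0)}$ is just the node feature $\mathbf{x}_v$. In each convolutional layer, every representation vector is updated by accumulating the vectors of neighboring nodes through a normalized sum:

$$\mathbf{h}_v^{(k+1)} = \sigma\Big( \sum_{l \in T_E} \sum_{u \in N_l(v)} \frac{1}{|N_l(v)|} \mathbf{W}_l^{(k)} \mathbf{h}_u^{(k)} + \mathbf{W}_0^{(k)} \mathbf{h}_v^{(k)} \Big),$$

where $\sigma$ denotes a nonlinear activation function. Different from the regular GCN model [33], R-GCN considers edge heterogeneity by learning multiple convolution matrices $\mathbf{W}_l$, each of which corresponds to one edge type. During message passing, neighbors under the same edge type are aggregated and normalized first. The node embedding is the output of the $K$-th layer (i.e., $\mathbf{e}_v = \mathbf{h}_v^{(K)}$).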
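One R-GCN layer fits in a few lines of plain Python. The sketch below assumes ReLU as the activation $\sigma$, dense list-of-lists matrices, and hypothetical node and edge-type names; it is meant to show the per-type normalization $1/|N_l(v)|$ and the self-loop matrix $\mathbf{W}_0$, not to reproduce the authors' implementation.

```python
def matvec(W, x):
    """Dense matrix-vector product with W given as a list of rows."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in W]


def relu(x):
    return [max(0.0, a) for a in x]


def rgcn_layer(h, typed_neighbors, W_rel, W_self, act=relu):
    """One R-GCN layer:
    h_v' = act( sum_l sum_{u in N_l(v)} (1/|N_l(v)|) W_l h_u + W_0 h_v ).

    h:               dict node -> current representation h^(k)
    typed_neighbors: dict node -> dict edge type l -> list of neighbors N_l(v)
    W_rel:           dict edge type l -> weight matrix W_l (list of rows)
    W_self:          self-loop matrix W_0
    """
    out = {}
    for v, hv in h.items():
        acc = matvec(W_self, hv)
        for l, nbrs in typed_neighbors.get(v, {}).items():
            if not nbrs:
                continue
            c = 1.0 / len(nbrs)  # per-type normalization constant 1/|N_l(v)|
            for u in nbrs:
                msg = matvec(W_rel[l], h[u])
                acc = [a + c * m for a, m in zip(acc, msg)]
        out[v] = act(acc)
    return out


# Hypothetical 3-node graph with two edge types and 2-d features.
I = [[1.0, 0.0], [0.0, 1.0]]
h0 = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
typed_neighbors = {"a": {"cites": ["b", "c"]}, "c": {"writes": ["a"]}}
W_rel = {"cites": I, "writes": [[2.0, 0.0], [0.0, 2.0]]}
h1 = rgcn_layer(h0, typed_neighbors, W_rel, W_self=I)
print(h1)  # {'a': [1.5, 1.0], 'b': [0.0, 1.0], 'c': [3.0, 1.0]}
```

Node `a` averages its two `cites` neighbors before adding its own transformed vector, while `b`, which has no incoming typed neighbors here, keeps only its self-loop term; stacking $K$ such calls yields $\mathbf{e}_v = \mathbf{h}_v^{(K)}$.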

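In unsupervised settings, message-passing approaches use link prediction as their downstream task to train GNNs. To be specific, the likelihood of observing edges in the heterogeneous network is maximized. R-GCN optimizes a cross-entropy loss through negative sampling. Essentially, it is the approximation of the following objective:

$$\mathcal{J} = \sum_{l \in T_E} \sum_{u,v \in V} w_{uv}^l \log \frac{\exp(\mathbf{e}_u^T \mathbf{A}_l \mathbf{e}_v)}{\sum_{u' \in V} \exp(\mathbf{e}_{u'}^T \mathbf{A}_l \mathbf{e}_v)},$$

where $w_{uv}^l = \mathbf{1}_{(u,v) \in E_l}$ indicates whether $u$ and $v$ are linked by a type-$l$ edge, and $\mathbf{A}_l$ is an edge type-specific diagonal matrix.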

Recently, CompGCN [109] extends R-GCN by leveraging a variety of entity-relation composition operations so as to jointly embed nodes and relations.

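Table II: A summary of message-passing HNE algorithms.

| Algorithm | $w_{uv}$ / $w_{uv}^l$ / $w_{uv}^M$ | $d(\mathbf{e}_u, \mathbf{e}_v)$ | Aggregation Function | Applications |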

| R-GCN [67] | , | entity classification, KB completion | ||

| HEP [110] | user alignment | |||

| HAN [75] | edge weight of | node classification, node clustering, link prediction, recommendation | ||

| HetGNN [71] | number of times that in homogeneous random walks | |||

| GATNE [70] | number of times that in random walks following | , | ||

| MV-ACM [111] | , | |||

| MAGNN [112] | edge weight of | |||

| HGT [85] | refer to NTN [20] in Table III | , , | node classification, author identification |

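HAN [75]. Instead of considering one-hop neighbors, HAN utilizes meta-paths to model higher-order proximity. Given a meta-path $M$, the representation of node $v$ is aggregated from its meta-path based neighbors $N_M(v) = \{v\} \cup \{u \mid u \text{ connects with } v \text{ via the meta-path } M\}$. HAN proposes an attention mechanism to learn the weights of different neighbors:

$$\alpha_{vu}^M = \frac{\exp\big(\mathrm{LeakyReLU}(\mathbf{a}_M^T [\mathbf{x}'_v \,\|\, \mathbf{x}'_u])\big)}{\sum_{u' \in N_M(v)} \exp\big(\mathrm{LeakyReLU}(\mathbf{a}_M^T [\mathbf{x}'_v \,\|\, \mathbf{x}'_{u'}])\big)}, \qquad \mathbf{h}_v^M = \sigma\Big(\sum_{u \in N_M(v)} \alpha_{vu}^M \mathbf{x}'_u\Big),$$

where $\mathbf{a}_M$ is the node-level attention vector of $M$; $\mathbf{x}'_v = \mathbf{M}_{\phi(v)} \mathbf{x}_v$ is the projected feature vector of node $v$; $\|$ is the concatenation operator; $\sigma$ is an activation function. Given the meta-path specific embedding $\mathbf{h}_v^M$, HAN uses a semantic-level attention to weigh different meta-paths:

$$\beta_M = \frac{\exp\big(\frac{1}{|V|} \sum_{v \in V} \mathbf{q}^T \tanh(\mathbf{W} \mathbf{h}_v^M + \mathbf{b})\big)}{\sum_{M'} \exp\big(\frac{1}{|V|} \sum_{v \in V} \mathbf{q}^T \tanh(\mathbf{W} \mathbf{h}_v^{M'} + \mathbf{b})\big)}, \qquad \mathbf{e}_v = \sum_{M} \beta_M \mathbf{h}_v^M,$$

where $\mathbf{q}$ is the semantic-level attention vector.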
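HAN's two attention levels can be sketched in plain Python. Assumptions: a single attention head, LeakyReLU with slope 0.2 for the node-level scores, an identity output activation in place of $\sigma$, and hypothetical paper nodes whose PAP/PSP meta-path neighborhoods are supplied by hand (discovering those neighborhoods is out of scope here). The parameter values are arbitrary and untrained.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def node_level(xp, v, nbrs, a_m):
    """h_v^M: attention-weighted sum of projected features x'_u over N_M(v).

    xp:  dict node -> projected feature x'_u; nbrs should contain v itself.
    a_m: attention vector of length 2 * dim, scoring [x'_v || x'_u].
    The output activation is taken as the identity here (an assumption).
    """
    scores = [leaky_relu(dot(a_m, xp[v] + xp[u])) for u in nbrs]  # list + list = concat
    alpha = softmax(scores)
    return [sum(al * xp[u][i] for al, u in zip(alpha, nbrs)) for i in range(len(xp[v]))]


def semantic_level(h_by_mp, q, W, b):
    """Fuse meta-path-specific embeddings: e_v = Σ_M β_M h_v^M."""
    mps = list(h_by_mp)
    importance = []
    for m in mps:
        hs = list(h_by_mp[m].values())
        proj = [[math.tanh(dot(row, h) + bi) for row, bi in zip(W, b)] for h in hs]
        importance.append(sum(dot(q, p) for p in proj) / len(hs))  # mean over nodes
    beta = dict(zip(mps, softmax(importance)))
    nodes = list(h_by_mp[mps[0]])
    dim = len(h_by_mp[mps[0]][nodes[0]])
    emb = {v: [sum(beta[m] * h_by_mp[m][v][i] for m in mps) for i in range(dim)]
           for v in nodes}
    return emb, beta


# Hypothetical papers with 2-d projected features and two meta-paths.
xp = {"p1": [1.0, 0.0], "p2": [0.0, 1.0], "p3": [1.0, 1.0]}
neighbors = {"PAP": {"p1": ["p1", "p2"], "p2": ["p2", "p1"], "p3": ["p3"]},
             "PSP": {"p1": ["p1", "p3"], "p2": ["p2", "p3"], "p3": ["p3", "p1", "p2"]}}
a = {"PAP": [0.5, -0.5, 1.0, 0.2], "PSP": [0.1, 0.1, -0.3, 0.4]}
h = {m: {v: node_level(xp, v, nb, a[m]) for v, nb in neighbors[m].items()} for m in neighbors}
emb, beta = semantic_level(h, q=[1.0, -1.0], W=[[0.5, 0.1], [-0.2, 0.3]], b=[0.0, 0.1])
print(beta)
```

Note that $\beta_M$ is shared by all nodes: it is computed from the average over nodes, so the sketch yields one weight per meta-path rather than one per node, matching the semantic-level attention above.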

In the original HAN paper, the authors mainly consider the task of semi-supervised node classification. For unsupervised learning (i.e., without any node labels), according to [112], HAN can use the link prediction loss introduced in GraphSAGE [34], which is the negative sampling approximation of the following objective:

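$$\mathcal{J} = \sum_{u,v \in V} w_{uv} \log \frac{\exp(\mathbf{e}_u^T \mathbf{e}_v)}{\sum_{u' \in V} \exp(\mathbf{e}_{u'}^T \mathbf{e}_v)}. \tag{6}$$

Here, $w_{uv}$ is the edge weight of $(u, v)$.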

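MAGNN [112]. MAGNN extends HAN by considering both the meta-path based neighborhood $N_M(v) = \{v\} \cup \{u \mid u \text{ connects with } v \text{ via meta-path } M\}$ and the nodes along the meta-path instances. Given a meta-path $M$, MAGNN first employs an encoder to transform all the node features along an instance of $M$ into a single vector:

$$\mathbf{h}_{P(v,u)} = f_\theta\big(P(v,u)\big) = f_\theta\big(\mathbf{x}'_v, \mathbf{x}'_u, \{\mathbf{x}'_t \mid t \in P(v,u)\}\big),$$

where $\mathbf{x}'_v = \mathbf{M}_{\phi(v)} \mathbf{x}_v$ is the projected feature vector of node $v$; $P(v,u)$ denotes a meta-path instance of $M$ connecting $u$ and $v$; $f_\theta$ is a relational rotation encoder inspired by [114]. After encoding each meta-path instance, MAGNN proposes an intra-meta-path aggregation to learn the weight of different neighbors:

$$\alpha_{vu}^M = \frac{\exp\big(\mathrm{LeakyReLU}(\mathbf{a}_M^T [\mathbf{x}'_v \,\|\, \mathbf{h}_{P(v,u)}])\big)}{\sum_{u' \in N_M(v)} \exp\big(\mathrm{LeakyReLU}(\mathbf{a}_M^T [\mathbf{x}'_v \,\|\, \mathbf{h}_{P(v,u')}])\big)}, \qquad \mathbf{h}_v^M = \sigma\Big(\sum_{u \in N_M(v)} \alpha_{vu}^M \mathbf{h}_{P(v,u)}\Big),$$

where $\mathbf{a}_M$ is the node-level attention vector of $M$. This attention mechanism can also be extended to multiple heads. After aggregating the information within each meta-path, MAGNN further combines the semantics revealed by all meta-paths using an inter-meta-path aggregation:

$$\beta_M = \frac{\exp\big(\frac{1}{|V_{\phi(v)}|} \sum_{v' \in V_{\phi(v)}} \mathbf{q}_{\phi(v)}^T \tanh(\mathbf{W}_{\phi(v)} \mathbf{h}_{v'}^M + \mathbf{b}_{\phi(v)})\big)}{\sum_{M'} \exp\big(\frac{1}{|V_{\phi(v)}|} \sum_{v' \in V_{\phi(v)}} \mathbf{q}_{\phi(v)}^T \tanh(\mathbf{W}_{\phi(v)} \mathbf{h}_{v'}^{M'} + \mathbf{b}_{\phi(v)})\big)}, \qquad \mathbf{e}_v = \sigma\Big(\mathbf{W}_o \sum_{M} \beta_M \mathbf{h}_v^M\Big),$$

where $\mathbf{q}_{\phi(v)}$ is the semantic-level attention vector for node type $\phi(v)$. In comparison with HAN, MAGNN employs an additional projection $\mathbf{W}_o$ to get the final representation $\mathbf{e}_v$.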

For link prediction, MAGNN adopts the loss introduced in GraphSAGE [34], which is equivalent to the objective in Eq. (6).

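HGT [85]. Inspired by the success of Transformer [115, 116] in text representation learning, Hu et al. propose to use each edge's type to parameterize a Transformer-like self-attention architecture. Specifically, for each edge $e = (s, t)$, their Heterogeneous Graph Transformer (HGT) model maps the target node $t$ into a Query vector and the source node $s$ into a Key vector, and calculates their dot product as attention:

$$\mathbf{K}^i(s) = \text{K-Linear}^i_{\tau(s)}\big(\mathbf{H}^{(l-1)}[s]\big), \qquad \mathbf{Q}^i(t) = \text{Q-Linear}^i_{\tau(t)}\big(\mathbf{H}^{(l-1)}[t]\big),$$

$$\text{ATT-head}^i(s, e, t) = \Big(\mathbf{K}^i(s)\, \mathbf{W}^{\text{ATT}}_{\phi(e)}\, \mathbf{Q}^i(t)^\top\Big) \cdot \frac{\boldsymbol{\mu}_{\langle \tau(s), \phi(e), \tau(t) \rangle}}{\sqrt{d}}.$$

Here, $\mathbf{H}^{(l-1)}[s]$ is the output of the $(l-1)$-th HGT layer (with $\mathbf{H}^{(0)}[s]$ being the input feature of $s$); $\tau(\cdot)$ and $\phi(\cdot)$ denote node and edge types; $\text{K-Linear}^i_{\tau(s)}$ and $\text{Q-Linear}^i_{\tau(t)}$ are node type-aware linear mappings; $\text{ATT-head}^i$ is the $i$-th attention head; $\mathbf{W}^{\text{ATT}}_{\phi(e)}$ is an edge type-specific matrix; $\boldsymbol{\mu}$ is a prior tensor representing the weight of each edge type in the attention. The heads are concatenated and normalized with a softmax over the neighbors of $t$ to give $\text{Attention}(s, e, t)$. Parallel to the calculation of attention, the message passing process can be computed in a similar way by incorporating node and edge types:

$$\text{MSG-head}^i(s, e, t) = \text{M-Linear}^i_{\tau(s)}\big(\mathbf{H}^{(l-1)}[s]\big)\, \mathbf{W}^{\text{MSG}}_{\phi(e)},$$

where $\text{M-Linear}^i_{\tau(s)}$ is also a node type-aware linear mapping, and concatenating all heads gives $\text{Message}(s, e, t)$. To aggregate the messages from $t$'s neighborhood $N(t)$, the attention vector serves as the weight to get the updated vector:

$$\widetilde{\mathbf{H}}^{(l)}[t] = \bigoplus_{s \in N(t)} \Big(\text{Attention}(s, e, t) \cdot \text{Message}(s, e, t)\Big),$$

where $\bigoplus$ aggregates over all neighbors. Following the residual connection [117], the output of the $l$-th layer is

$$\mathbf{H}^{(l)}[t] = \text{A-Linear}_{\tau(t)}\big(\sigma(\widetilde{\mathbf{H}}^{(l)}[t])\big) + \mathbf{H}^{(l-1)}[t].$$

Here, $\text{A-Linear}_{\tau(t)}$ is a linear function mapping $t$'s vector back to its node type-specific distribution.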
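To make the flow of one HGT layer concrete, here is a single-head, pure-Python sketch for one target node. It is not the authors' PyG implementation: the parameter-dictionary layout, ReLU standing in for the nonlinearity, the column-vector convention for matrix products (equivalent up to transposing the learned matrices), and a softmax-weighted sum as the neighbor aggregator are all assumptions made for illustration.

```python
import math
from typing import Dict, List, Tuple

Vec = List[float]
Mat = List[List[float]]


def matvec(m: Mat, v: Vec) -> Vec:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def softmax(xs: List[float]) -> List[float]:
    mx = max(xs)
    ex = [math.exp(x - mx) for x in xs]
    total = sum(ex)
    return [e / total for e in ex]


def hgt_update(t: str, neighbors: List[Tuple[str, str]], H: Dict[str, Vec],
               ntype: Dict[str, str], p: dict) -> Vec:
    """Single-head HGT-style update of target node t.

    neighbors: (source node, edge type) pairs; H: vectors from layer l-1;
    p: hypothetical parameter dict with per-node-type "Q", "K", "M", "A" matrices,
    per-edge-type "W_att" / "W_msg" matrices, and a prior "mu" keyed by
    (source type, edge type, target type).
    """
    d = len(H[t])
    q = matvec(p["Q"][ntype[t]], H[t])                 # Query from the target's type
    scores, messages = [], []
    for s, et in neighbors:
        k = matvec(p["K"][ntype[s]], H[s])             # Key from the source's type
        att = sum(a * b for a, b in zip(k, matvec(p["W_att"][et], q)))
        scores.append(att * p["mu"][(ntype[s], et, ntype[t])] / math.sqrt(d))
        msg = matvec(p["M"][ntype[s]], H[s])           # node type-aware message
        messages.append(matvec(p["W_msg"][et], msg))   # edge type-specific transform
    alphas = softmax(scores)                           # normalize over N(t)
    agg = [sum(al * m[i] for al, m in zip(alphas, messages)) for i in range(d)]
    activated = [max(0.0, x) for x in agg]             # sigma assumed to be ReLU here
    out = matvec(p["A"][ntype[t]], activated)          # A-Linear for t's node type
    return [o + h for o, h in zip(out, H[t])]          # residual connection
```

A multi-head version would run this per head with separate projections and concatenate the per-head attention weights and messages before aggregation.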

For the unsupervised link prediction task, HGT borrows the objective function from Neural Tensor Network [20], which will be further discussed in Section 3.3.

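| Algorithm | Scoring function $s(u,r,v)$ | Applications |

| TransE [4] | $-\lVert \mathbf{e}_u + \mathbf{e}_r - \mathbf{e}_v \rVert$ | KB completion, relation extraction from text |

| TransH [118] | $-\lVert \mathbf{e}_{u,\perp} + \mathbf{d}_r - \mathbf{e}_{v,\perp} \rVert$, where $\mathbf{e}_{x,\perp} = \mathbf{e}_x - \mathbf{w}_r^\top \mathbf{e}_x \mathbf{w}_r$ | |

| TransR [80] | $-\lVert \mathbf{M}_r \mathbf{e}_u + \mathbf{e}_r - \mathbf{M}_r \mathbf{e}_v \rVert$ | |

| RHINE [76] | weighted by the edge weight of $e_{uv}$ with type $r$: $-\lVert \mathbf{e}_u - \mathbf{e}_v \rVert_2^2$ if $r$ models affiliation, $-\lVert \mathbf{e}_u + \mathbf{e}_r - \mathbf{e}_v \rVert$ if $r$ models interaction | link prediction, node classification |

| RotatE [114] | $-\lVert \mathbf{e}_u \circ \mathbf{e}_r - \mathbf{e}_v \rVert$, $\mathbf{e}_u, \mathbf{e}_r, \mathbf{e}_v \in \mathbb{C}^d$, $\lvert e_{r,i} \rvert = 1$ | KB completion, relation pattern inference |

| RESCAL [119] | $\mathbf{e}_u^\top \mathbf{M}_r \mathbf{e}_v$ | entity resolution, link prediction |

| DistMult [81] | $\mathbf{e}_u^\top \mathrm{diag}(\mathbf{e}_r)\, \mathbf{e}_v$ | KB completion, triplet classification |

| HolE [120] | $\mathbf{e}_r^\top (\mathbf{e}_u \star \mathbf{e}_v)$, where $\star$ is circular correlation | |

| ComplEx [121] | $\mathrm{Re}\big(\mathbf{e}_u^\top \mathrm{diag}(\mathbf{e}_r)\, \bar{\mathbf{e}}_v\big)$, $\mathbf{e}_u, \mathbf{e}_r, \mathbf{e}_v \in \mathbb{C}^d$ | |

| SimplE [122] | $\frac{1}{2}\big(\langle \mathbf{h}_u, \mathbf{e}_r, \mathbf{t}_v \rangle + \langle \mathbf{h}_v, \mathbf{e}_{r^{-1}}, \mathbf{t}_u \rangle\big)$, with separate head/tail embeddings $\mathbf{h}_x$, $\mathbf{t}_x$ | |

| TuckER [123] | $\mathcal{W} \times_1 \mathbf{e}_u \times_2 \mathbf{e}_r \times_3 \mathbf{e}_v$ | |

| NTN [20] | $\mathbf{u}_r^\top \tanh\big(\mathbf{e}_u^\top \mathbf{M}_r \mathbf{e}_v + \mathbf{V}_r [\mathbf{e}_u; \mathbf{e}_v] + \mathbf{b}_r\big)$ | |

| ConvE [82] | $\sigma\big(\mathrm{vec}(\sigma([\bar{\mathbf{E}}_u; \bar{\mathbf{E}}_r] * \boldsymbol{\omega}))\, \mathbf{W}\big)\, \mathbf{e}_v$ | |

| NKGE [83] | same as TransE or ConvE, where $\mathbf{e}_u$ is a gated combination of the structure and neighbor representations | |

| SACN [84] | convolutional decoder over GNN-encoded $\mathbf{e}_u$ and $\mathbf{e}_r$, followed by a dot product with $\mathbf{e}_v$ | |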

Some other message-passing approaches are summarized in Table II. For example, HEP [110] aggregates a node's representation from its node type-aware neighborhood to reconstruct the node's own embedding; HetGNN [71] also adopts a node type-aware neighborhood aggregation, but the neighborhood is defined through random walk with restart [113]; GATNE [70] aggregates a node's representation from its edge type-aware neighborhood and is applicable to both transductive and inductive network embedding settings; MV-ACM [111] aggregates a node's representation from its meta-path-aware neighborhood and utilizes an adversarial learning framework to learn the reciprocity between different edge types.

The objective of the message-passing approaches mentioned above can also be written as Eq. (4) (except for HGT, whose objective is the same as that of NTN [20] and will be discussed in Section 3.3), where the score of a node pair is a function of the two node embeddings. The only difference is that here each node embedding is aggregated from its neighborhood using GNNs. Following the derivation of proximity-preserving approaches, if we still decompose the regularization term in Eq. (1) into three parts, we obtain exactly the same terms as in Eq. (5). We summarize these choices and the aggregation function in Table II.

Within this group of algorithms, HEP has an additional reconstruction loss , and MV-ACM [111] has an adversarial loss , where and are the generator and discriminator of complementary edge types, respectively. All the other algorithms in this group have .

Similar to the case of proximity-preserving approaches, there are also message-passing methods adopting other forms of objectives. For example, GraphInception [66] studies collective classification (where the labels within a group of instances are correlated and should be inferred collectively) using meta-path-aware aggregation; HDGI [86] maximizes local-global mutual information to improve unsupervised training based on HAN; NEP [74] performs semi-supervised node classification using an edge type-aware propagation function to mimic the process of label propagation; NLAH [124] also considers semi-supervised node classification by extending HAN with several pre-computed non-local features; HGCN [125] explores the collective classification task and improves GraphInception by considering semantics of different types of edges/nodes and relations among different node types; HetETA [126] studies a specific task of estimating the time of arrival in intelligent transportation using a heterogeneous graph network with fast localized spectral filtering.

3.3 Relation-Learning Methods

As discussed above, knowledge graphs can be regarded as a special case of heterogeneous networks that are schema-rich [127]. To model network heterogeneity, existing knowledge graph embedding approaches explicitly model the relation types of edges via parametric algebraic operators, and are often shallow network embedding models [79, 128]. Compared to the shallow proximity-preserving HNE models, they often focus on designing triplet based scoring functions instead of meta-paths or meta-graphs, due to the large numbers of entity and relation types.

Each edge in a heterogeneous network can be viewed as a triplet $(u, r, v)$ composed of two nodes $u, v \in V$ and an edge type $r$ (i.e., entities and relations, in the terminology of KG). The goal of relation-learning methods is to learn a scoring function $s(u, r, v)$ which evaluates an arbitrary triplet and outputs a scalar to measure the acceptability of this triplet. This idea is widely adopted in KB embedding. Since surveys of KB embedding algorithms already exist [79], we only cover the most popular approaches here and highlight their connections to HNE.

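TransE [4]. TransE assumes that the relation induced by $r$-labeled edges corresponds to a translation of the embedding (i.e., $\mathbf{e}_u + \mathbf{e}_r \approx \mathbf{e}_v$) when $(u, r, v)$ holds. Therefore, the scoring function of TransE is defined as

$$s(u, r, v) = -\|\mathbf{e}_u + \mathbf{e}_r - \mathbf{e}_v\|, \tag{7}$$

where $\|\cdot\| = \|\cdot\|_1$ or $\|\cdot\|_2$. The objective is to minimize a margin based ranking loss:

$$\mathcal{L} = \sum_{(u,r,v) \in P}\; \sum_{(u',r,v') \in Q_{(u,r,v)}} \max\big(0,\; \gamma - s(u,r,v) + s(u',r,v')\big), \tag{8}$$

where $\gamma > 0$ is the margin; $P$ is the set of positive triplets (i.e., edges); $Q_{(u,r,v)}$ is the set of corrupted triplets, which are constructed by replacing either $u$ or $v$ with an arbitrary node. Formally,

$$Q_{(u,r,v)} = \{(u', r, v) \mid u' \in V\} \cup \{(u, r, v') \mid v' \in V\}.$$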
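The TransE scoring function and margin based ranking loss described above can be sketched in a few lines of pure Python. As an assumption of this sketch (common in practice but not stated here), each positive triplet is paired with one randomly drawn corruption instead of the full corrupted set; the dictionaries `E` and `R` of node and relation embeddings and all function names are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Tuple

Triplet = Tuple[str, str, str]


def transe_score(E: Dict[str, List[float]], R: Dict[str, List[float]],
                 u: str, r: str, v: str, p: int = 1) -> float:
    """s(u, r, v) = -||e_u + e_r - e_v||_p, with p = 1 or 2."""
    diff = [a + b - c for a, b, c in zip(E[u], R[r], E[v])]
    if p == 1:
        return -sum(abs(x) for x in diff)
    return -sum(x * x for x in diff) ** 0.5


def corrupt(triplet: Triplet, nodes: Sequence[str], rng: random.Random) -> Triplet:
    """Replace either the head or the tail with a random node (one draw from Q)."""
    u, r, v = triplet
    if rng.random() < 0.5:
        return (rng.choice(nodes), r, v)
    return (u, r, rng.choice(nodes))


def margin_ranking_loss(E, R, positives: List[Triplet], nodes: Sequence[str],
                        gamma: float = 1.0, seed: int = 0) -> float:
    """Hinge loss with one sampled corruption per positive triplet."""
    rng = random.Random(seed)
    loss = 0.0
    for u, r, v in positives:
        u2, _, v2 = corrupt((u, r, v), nodes, rng)
        loss += max(0.0, gamma - transe_score(E, R, u, r, v) + transe_score(E, R, u2, r, v2))
    return loss
```

A positive triplet contributes nothing once its score exceeds that of its corruption by at least the margin `gamma`.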

TransE is the most representative model using “a translational distance” to define the scoring function. It has many extensions. For example, TransH [118] projects each entity vector to a relation-specific hyperplane when calculating the distance; TransR [80] further extends relation-specific hyperplanes to relation-specific spaces; RHINE [76] distinguishes affiliation relations from interaction relations and adopts different objectives for the two types of relations. For more extensions of TransE, please refer to [83].

Recently, Sun et al. [114] propose the RotatE model, which defines each relation as a rotation (instead of a translation) from the source entity to the target entity in the complex vector space. Their model is able to describe various relation patterns including symmetry/antisymmetry, inversion, and composition.
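The rotation view can be checked with Python's built-in complex numbers. In this sketch each relation is parameterized by one phase per dimension, so every coordinate of the relation embedding has modulus 1; the Euclidean norm over complex coordinates and the helper names are choices made for illustration.

```python
import cmath
import math
from typing import List


def rotate_score(e_u: List[complex], phases: List[float], e_v: List[complex]) -> float:
    """RotatE-style score -||e_u o e_r - e_v||, with e_r = exp(i * phase) so |e_{r,i}| = 1."""
    e_r = [cmath.exp(1j * theta) for theta in phases]
    return -math.sqrt(sum(abs(a * b - c) ** 2 for a, b, c in zip(e_u, e_r, e_v)))


def compose(phases_1: List[float], phases_2: List[float]) -> List[float]:
    """Composing two rotations adds their phases; an inverse relation negates them."""
    return [a + b for a, b in zip(phases_1, phases_2)]
```

Symmetric relations correspond to phases of 0 or pi (a half-turn applied twice is the identity), inversion to negated phases, and composition to added phases, which is how the model covers these relation patterns.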

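DistMult [81]. In contrast to translational distance models [4, 118, 80], DistMult exploits a similarity based scoring function. Each relation $r$ is represented by a diagonal matrix $\mathbf{A}_r = \mathrm{diag}(e_{r,1}, \ldots, e_{r,d})$, and $s(u, r, v)$ is defined using a bilinear function:

$$s(u, r, v) = \mathbf{e}_u^\top \mathbf{A}_r \mathbf{e}_v.$$

Note that $s(u, r, v) = s(v, r, u)$ for any $u$ and $v$. Therefore, DistMult is mainly designed for symmetric relations.

Using the equation $2\mathbf{a}^\top \mathbf{b} = \|\mathbf{a}\|^2 + \|\mathbf{b}\|^2 - \|\mathbf{a} - \mathbf{b}\|^2$ with $\mathbf{a} = \mathbf{A}_r \mathbf{e}_u$ and $\mathbf{b} = \mathbf{e}_v$, we have

$$s(u, r, v) = \frac{1}{2}\Big(\|\mathbf{A}_r \mathbf{e}_u\|^2 + \|\mathbf{e}_v\|^2 - \|\mathbf{A}_r \mathbf{e}_u - \mathbf{e}_v\|^2\Big).$$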
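Both the symmetry of DistMult and its rewriting through squared norms are easy to verify numerically. This pure-Python sketch stores the diagonal of the relation matrix as a plain list; the function names are hypothetical.

```python
from typing import List


def distmult_score(e_u: List[float], e_r: List[float], e_v: List[float]) -> float:
    """DistMult: s(u, r, v) = e_u^T diag(e_r) e_v."""
    return sum(a * b * c for a, b, c in zip(e_u, e_r, e_v))


def distmult_via_distance(e_u: List[float], e_r: List[float], e_v: List[float]) -> float:
    """Same score via 2 a^T b = ||a||^2 + ||b||^2 - ||a - b||^2, a = diag(e_r) e_u, b = e_v."""
    a = [r * x for r, x in zip(e_r, e_u)]

    def sq(z: List[float]) -> float:
        return sum(t * t for t in z)

    return 0.5 * (sq(a) + sq(e_v) - sq([x - y for x, y in zip(a, e_v)]))
```

Swapping `e_u` and `e_v` never changes `distmult_score`, which is exactly why the model cannot distinguish a relation from its reverse.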

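ComplEx [121]. Instead of considering a real-valued embedding space, ComplEx introduces complex-valued representations $\mathbf{e}_u, \mathbf{e}_r, \mathbf{e}_v \in \mathbb{C}^d$. Similar to DistMult, it utilizes a similarity based scoring function:

$$s(u, r, v) = \mathrm{Re}\big(\mathbf{e}_u^\top \mathrm{diag}(\mathbf{e}_r)\, \bar{\mathbf{e}}_v\big),$$

where $\mathrm{Re}(\cdot)$ is the real part of a complex number and $\bar{\mathbf{e}}_v$ is the complex conjugate of $\mathbf{e}_v$. Here, it is possible that $s(u, r, v) \neq s(v, r, u)$, which allows ComplEx to capture asymmetric relations.

In the complex space, using the equation $2\,\mathrm{Re}(\mathbf{a}^\top \bar{\mathbf{b}}) = \|\mathbf{a}\|^2 + \|\mathbf{b}\|^2 - \|\mathbf{a} - \mathbf{b}\|^2$ with $\mathbf{a} = \mathrm{diag}(\mathbf{e}_r)\mathbf{e}_u$ and $\mathbf{b} = \mathbf{e}_v$, we have

$$s(u, r, v) = \frac{1}{2}\Big(\|\mathrm{diag}(\mathbf{e}_r)\mathbf{e}_u\|^2 + \|\mathbf{e}_v\|^2 - \|\mathrm{diag}(\mathbf{e}_r)\mathbf{e}_u - \mathbf{e}_v\|^2\Big).$$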
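The asymmetry that ComplEx gains over DistMult shows up with a one-dimensional example. This sketch uses Python's built-in `complex` type; the function names are hypothetical.

```python
from typing import List


def complex_score(e_u: List[complex], e_r: List[complex], e_v: List[complex]) -> float:
    """ComplEx: s(u, r, v) = Re(sum_i e_u[i] * e_r[i] * conj(e_v[i]))."""
    return sum(a * b * c.conjugate() for a, b, c in zip(e_u, e_r, e_v)).real


def complex_via_distance(e_u: List[complex], e_r: List[complex], e_v: List[complex]) -> float:
    """Same score via 2 Re(a^T conj(b)) = ||a||^2 + ||b||^2 - ||a - b||^2, a = diag(e_r) e_u."""
    a = [r * x for r, x in zip(e_r, e_u)]

    def sq(z: List[complex]) -> float:
        return sum(abs(t) ** 2 for t in z)

    return 0.5 * (sq(a) + sq(e_v) - sq([x - y for x, y in zip(a, e_v)]))
```

With a purely imaginary relation embedding such as `[1j]`, swapping head and tail flips the sign of the score; with a purely real relation embedding the score stays symmetric, recovering DistMult-like behavior.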

Besides ComplEx, there are many other extensions of DistMult. RESCAL [119] uses a bilinear scoring function similar to the one in DistMult, but its relation matrix is no longer restricted to be diagonal; HolE [120] composes the node representations using the circular correlation operation, which combines the expressive power of RESCAL with the efficiency of DistMult; SimplE [122] considers the inverse of relations and calculates the average score of $(u, r, v)$ and $(v, r^{-1}, u)$; TuckER [123] proposes to use a three-way Tucker tensor decomposition approach to learn node and relation embeddings.
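Two of these extensions are short enough to sketch directly: RESCAL with a full relation matrix, and HolE with circular correlation $[\mathbf{a} \star \mathbf{b}]_k = \sum_i a_i\, b_{(i+k) \bmod d}$. The pure-Python functions below are illustrative (names hypothetical) and omit the sigmoid that HolE applies to its score.

```python
from typing import List


def rescal_score(e_u: List[float], M_r: List[List[float]], e_v: List[float]) -> float:
    """RESCAL: s(u, r, v) = e_u^T M_r e_v with an unrestricted d x d matrix M_r."""
    return sum(e_u[i] * M_r[i][j] * e_v[j] for i in range(len(e_u)) for j in range(len(e_v)))


def circular_correlation(a: List[float], b: List[float]) -> List[float]:
    """[a * b]_k = sum_i a_i * b_{(i + k) mod d}; output has the same size d as the inputs."""
    d = len(a)
    return [sum(a[i] * b[(i + k) % d] for i in range(d)) for k in range(d)]


def hole_score(e_u: List[float], e_r: List[float], e_v: List[float]) -> float:
    """HolE: s(u, r, v) = e_r^T (e_u * e_v), with * the circular correlation."""
    return sum(r * c for r, c in zip(e_r, circular_correlation(e_u, e_v)))
```

Because circular correlation is not commutative and a non-diagonal matrix is not symmetric in general, both scores can differ between $(u, r, v)$ and $(v, r, u)$, while HolE keeps the relation embedding at size $d$ rather than $d^2$.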

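ConvE [82]. ConvE goes beyond simple distance or similarity functions and proposes deep neural models to score a triplet. The score is defined by a convolution over 2D shaped embeddings. Formally,

$$s(u, r, v) = \sigma\Big(\mathrm{vec}\big(\sigma([\bar{\mathbf{E}}_u; \bar{\mathbf{E}}_r] * \boldsymbol{\omega})\big)\, \mathbf{W}\Big)\, \mathbf{e}_v,$$

where $\bar{\mathbf{E}}_u$ and $\bar{\mathbf{E}}_r$ denote the 2D reshaping matrices of node embedding and relation embedding, respectively; $[\cdot;\cdot]$ stacks the two matrices; $\boldsymbol{\omega}$ denotes the convolutional filters and $\mathbf{W}$ a projection matrix; $\sigma(\cdot)$ is a nonlinear activation; $\mathrm{vec}(\cdot)$ is the vectorization operator that maps an $m \times n$ matrix to an $mn$-dimensional vector; “$*$” is the convolution operator.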
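The ConvE pipeline (reshape, stack, convolve, flatten, project, match against the tail) can be traced with a single filter in pure Python. This sketch assumes ReLU for the activation, one 2D filter, and no dropout, batch normalization, or bias terms, all of which the full model adds; the function names are hypothetical.

```python
from typing import List

Vec = List[float]
Mat = List[List[float]]


def reshape(vec: Vec, h: int, w: int) -> Mat:
    return [vec[i * w:(i + 1) * w] for i in range(h)]


def conv2d_valid(img: Mat, kernel: Mat) -> Mat:
    """'Valid' 2D convolution (cross-correlation, as in most deep learning libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(img) - kh + 1, len(img[0]) - kw + 1
    return [[sum(img[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
             for j in range(out_w)] for i in range(out_h)]


def relu(x: float) -> float:
    return max(0.0, x)


def conve_score(e_u: Vec, e_r: Vec, e_v: Vec, kernel: Mat, W: Mat, h: int, w: int) -> float:
    """Single-filter ConvE-style score; W has one row per embedding dimension."""
    stacked = reshape(e_u, h, w) + reshape(e_r, h, w)   # [E_u; E_r], shape (2h, w)
    fmap = conv2d_valid(stacked, kernel)                # one feature map
    flat = [relu(x) for row in fmap for x in row]       # vec(sigma(. * omega))
    hidden = [relu(sum(a * b for a, b in zip(row, flat))) for row in W]  # sigma(vec(.) W)
    return sum(a * b for a, b in zip(hidden, e_v))      # dot product with e_v
```

Since the projected vector depends only on the head and the relation, in the full model it can be computed once and multiplied against all candidate tail embeddings at the same time.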

There are several other models leveraging deep neural scoring functions. For example, NTN [20] proposes to combine the two node embedding vectors by a relation-specific bilinear tensor; NKGE [83] develops a deep memory network to encode information from neighbors and employs a gating mechanism to integrate each node's structure representation and neighbor representation; SACN [84] encodes node representations using a graph neural network and then scores the triplet using a convolutional neural network with the translational property.

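Most relation-learning approaches adopt a margin based ranking loss with some regularization terms, which generalizes Eq. (8):

$$\mathcal{L} = \sum_{(u,r,v) \in P}\; \sum_{(u',r,v') \in Q_{(u,r,v)}} \max\big(0,\; \gamma - s(u,r,v) + s(u',r,v')\big) + \mathcal{J}_{R_0}, \tag{9}$$

where $\mathcal{J}_{R_0}$ denotes the regularization terms. In [129], Qiu et al. point out that the margin based loss shares a very similar form with the following negative sampling loss:

$$-\sum_{(u,r,v) \in P}\Big(\log \sigma\big(s(u,r,v)\big) + \sum_{(u',r,v') \in Q_{(u,r,v)}} \log \sigma\big(-s(u',r,v')\big)\Big),$$

where $\sigma(\cdot)$ is the sigmoid function. Following [129], if we use the negative sampling loss to rewrite Eq. (9), we are approximately maximizing

$$\mathcal{J} = \sum_{(u,r,v) \in P}\Big(\log \sigma\big(s(u,r,v)\big) + \sum_{(u',r,v') \in Q_{(u,r,v)}} \log \sigma\big(-s(u',r,v')\big)\Big) - \mathcal{J}_{R_0}.$$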
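The similarity between the two losses can be seen on a single positive/negative score pair. This small sketch (function names hypothetical) compares the hinge term with its negative-sampling counterpart: both shrink as the positive score pulls ahead of the negative one, but the hinge becomes exactly zero beyond the margin, while the log-sigmoid version keeps decaying smoothly.

```python
import math


def margin_term(s_pos: float, s_neg: float, gamma: float = 1.0) -> float:
    """Hinge term of the margin ranking loss for one positive/negative pair."""
    return max(0.0, gamma - s_pos + s_neg)


def neg_sampling_term(s_pos: float, s_neg: float) -> float:
    """-log sigma(s_pos) - log sigma(-s_neg) = log(1 + e^{-s_pos}) + log(1 + e^{s_neg})."""
    # math.exp can overflow for very large scores; fine for this illustration
    return math.log1p(math.exp(-s_pos)) + math.log1p(math.exp(s_neg))
```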

For translational models [4, 118, 80, 76] whose is described by a distance function, maximizing is equivalent to

| (10) |

In this case, we can write as . For RotatE [114], the objective is the same except that .

For similarity based approaches [119, 81, 121, 122], we follow the derivation of Eq. (5), and the objective will be

Here, in RESCAL [119] and DistMult [81]; or in SimplE [122]; and in ComplEx [121]. The regularization term is .

For neural triplet scorers [82, 20, 21, 84], the forms of are more complicated than translational distances or bilinear products. In these cases, since distance (or dissimilarity) and proximity can be viewed as reverse metrics, we define as , where is a constant upper bound of . Then the derivation of the loss function is similar to that of Eq. (10), i.e.,

In this case, .

We summarize these choices in Table III. Note that for relation-learning methods, $d(\cdot, \cdot)$ is usually not a distance metric. For example, $d(\mathbf{e}_u, \mathbf{e}_v) \neq d(\mathbf{e}_v, \mathbf{e}_u)$ in most translational distance models and deep neural models. This is intuitive because $(u, r, v)$ and $(v, r, u)$ often express different meanings.

4 Benchmark

4.1 Dataset Preparation

Towards off-the-shelf evaluation of HNE algorithms with standard settings, in this work, we collect, process, analyze, and publish four new real-world heterogeneous network datasets from different domains, which we aim to set up as a handy and fair benchmark for existing and future HNE algorithms.

| Dataset | #node type | #node | #link type | #link | #attributes | #attributed nodes | #label type | #labeled node |

| DBLP | 4 | 1,989,077 | 6 | 275,940,913 | 300 | ALL | 13 | 618 |

| Yelp | 4 | 82,465 | 4 | 30,542,675 | N/A | N/A | 16 | 7,417 |

| Freebase | 8 | 12,164,758 | 36 | 62,982,566 | N/A | N/A | 8 | 47,190 |

| PubMed | 4 | 63,109 | 10 | 244,986 | 200 | ALL | 8 | 454 |

DBLP.

We construct a network of authors, papers, venues, and phrases from DBLP. Phrases are extracted from paper texts by the popular AutoPhrase [130] algorithm and further filtered by human experts. We train word2vec [99] on all paper texts and aggregate the word embeddings to get 300-dimensional paper and phrase features. Author and venue features are the aggregations of their corresponding paper features. We further manually label a relatively small portion of authors into 12 research groups from four research areas by crawling the web. Each labeled author has only one label.
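The text does not specify the aggregation operator, so the following pure-Python sketch assumes a simple mean: each paper vector averages the word vectors of its known tokens, and each linked node (e.g., an author or a venue) averages the vectors of its papers. The toy vectors and all function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Vec = List[float]


def mean(vectors: Sequence[Vec]) -> Vec:
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def paper_features(paper_tokens: Dict[str, List[str]], word_vec: Dict[str, Vec]) -> Dict[str, Vec]:
    """Average the word vectors of each paper's tokens; out-of-vocabulary tokens are skipped."""
    feats = {}
    for pid, tokens in paper_tokens.items():
        known = [word_vec[t] for t in tokens if t in word_vec]
        if known:
            feats[pid] = mean(known)
    return feats


def aggregate_by_link(links: Sequence[Tuple[str, str]], paper_feat: Dict[str, Vec]) -> Dict[str, Vec]:
    """links are (node, paper) pairs, e.g. author-paper; average the linked paper features."""
    grouped: Dict[str, List[Vec]] = defaultdict(list)
    for node, pid in links:
        if pid in paper_feat:
            grouped[node].append(paper_feat[pid])
    return {node: mean(vs) for node, vs in grouped.items()}
```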

Yelp.

We construct a network of businesses, users, locations, and reviews from Yelp (https://www.yelp.com/dataset/challenge). Nodes do not have features, but a large portion of businesses are labeled into sixteen categories. Each labeled business has one or multiple labels.

Freebase.

We construct a network of books, films, music, sports, people, locations, organizations, and businesses from Freebase (http://www.freebase.com/). Nodes are not associated with any features, but a large portion of books are labeled into eight genres of literature. Each labeled book has only one label.

PubMed.

We construct a network of genes, diseases, chemicals, and species from PubMed (https://www.ncbi.nlm.nih.gov/pubmed/). All nodes are extracted by AutoPhrase [130], typed by bioNER [131], and further filtered by human experts. The links are constructed through open relation pattern mining [132] and manual selection. We train word2vec [99] on all PubMed papers and aggregate the word embeddings to get 200-dimensional features for all types of nodes. We further label a relatively small portion of diseases into eight categories. Each labeled disease has only one label.

The four datasets we prepare are from four different domains, which have been individually studied in some existing works on HNE. Among them, DBLP has been most commonly used, because all information about authors, papers, etc. is public without privacy issues, and the results are often more interpretable to researchers in computer science related domains. Other real-world networks like Yelp and IMDB have been commonly studied for recommender systems. These networks are naturally heterogeneous, including at least users and items (e.g., businesses and movies), as well as some additional item descriptors (e.g., categories of businesses and genres of movies). Freebase is one of the most popular open-source knowledge graphs; it is relatively smaller but cleaner than the others (e.g., YAGO [133] and Wikidata [134]), with most entity and relation types well defined. One major difference between conventional heterogeneous networks and knowledge graphs is the number of node and link types. We further restrict the types of entities and relations inside Freebase, so as to get a heterogeneous network that is closer to a knowledge graph while not having too many types of nodes and links. Therefore, most conventional HNE algorithms can be applied to this dataset and properly compared against the KB embedding ones. PubMed is a novel biomedical network we directly construct through text mining and manual processing on biomedical literature. This is the first time we make it available to the public, and we hope it will serve both the evaluation of HNE algorithms and novel downstream tasks in biomedical science such as biomedical information retrieval and disease evolution study.

We notice that several other heterogeneous network datasets such as OAG [135, 85, 87] and IMDB [75, 112, 125] have been constructed recently in parallel to this work. Since they are similar in nature and organization to some of our datasets (e.g., DBLP, Yelp), our pipeline can be easily applied to them, so we do not include them here.

4.2 Structure Analysis

A summary of the statistics on the four datasets is provided in Table IV and Figure 3. As can be observed, the datasets have different sizes (numbers of nodes and links) and heterogeneity (numbers and distributions of node/link types). Moreover, due to the nature of the data sources, DBLP and PubMed networks are attributed, whereas Yelp and Freebase networks are abundantly labeled. A combination of these four datasets thus allows researchers to flexibly start by testing an HNE algorithm in the most appropriate settings, and eventually complete an all-around evaluation over all settings.

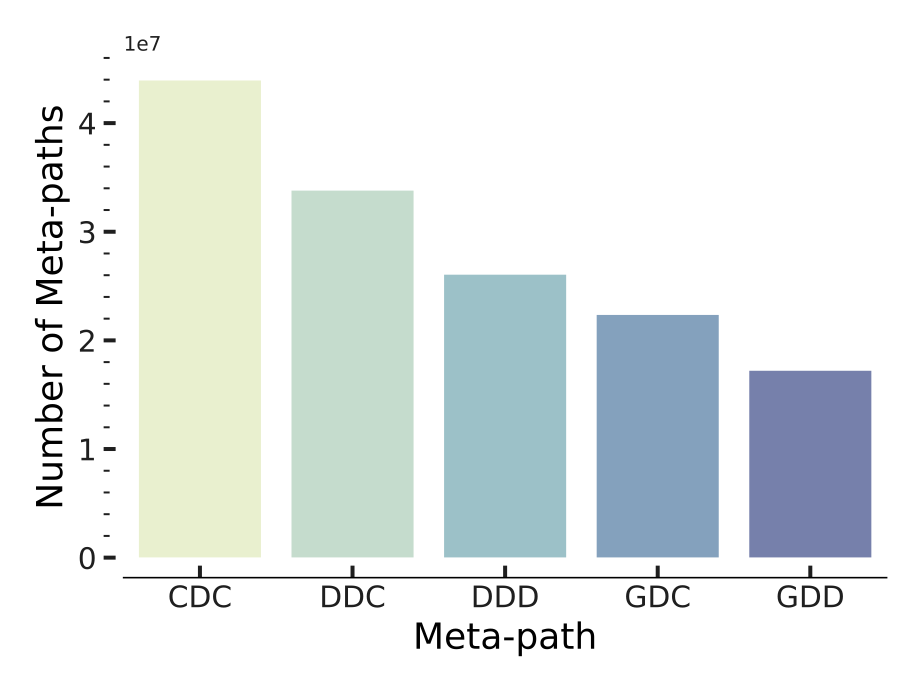

We also provide detailed analysis regarding several of the most widely studied properties of heterogeneous networks, i.e., degree distribution (Figure 4), clustering coefficient (Figure 5), and number of frequent meta-paths (Figure 6). In particular, degree distribution is known to significantly influence the performance of HNE algorithms due to the widely used node sampling process, whereas clustering coefficient impacts HNE algorithms that utilize latent community structures. Moreover, since many HNE algorithms rely on meta-paths, a more skewed distribution of meta-paths can bias towards algorithms using fewer meta-paths.
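One way to obtain meta-path statistics like these is to enumerate short simple paths and count them by their node-type sequence. The sketch below assumes an undirected network, counts ordered simple paths (no repeated nodes) with a fixed number of hops, and uses hypothetical names; it is not necessarily the procedure behind Figure 6, and exhaustive enumeration only scales to small hop counts.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple


def count_metapaths(edges: Sequence[Tuple[str, str]], ntype: Dict[str, str],
                    hops: int = 2) -> Counter:
    """Count ordered simple paths with `hops` edges, keyed by their node-type sequence."""
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    counts: Counter = Counter()

    def extend(path: List[str]) -> None:
        if len(path) == hops + 1:
            counts[tuple(ntype[n] for n in path)] += 1
            return
        for nxt in adj[path[-1]]:
            if nxt not in path:  # keep paths simple
                extend(path + [nxt])

    for start in list(adj):
        extend([start])
    return counts
```

Comparing how concentrated these counts are across type sequences gives a rough sense of how skewed the meta-path distribution of a network is.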

As we can see, the properties we consider are rather different across the four datasets we prepare. For example, there are tighter links and more labels in Yelp, while there are more types of nodes and links in Freebase; compared with nodes in Freebase and PubMed, which clearly follow the long-tail degree distribution, certain types of nodes in DBLP and Yelp are always well connected (e.g., phrases in DBLP and businesses in Yelp), forming more star-shaped subgraphs; the type-wise clustering coefficients and meta-path distributions are the most skewed in DBLP and the most balanced in PubMed. Together, the four datasets provide a comprehensive benchmark for the robustness and generalizability of various HNE algorithms (as we will also see in Section 5.2).

4.3 Settings, Tasks, and Metrics

We mainly compare all 13 algorithms under the setting of unsupervised unattributed HNE over all datasets, where the essential goal is to preserve different types of edges in the heterogeneous networks. Moreover, for message-passing algorithms that are particularly designed for attributed and semi-supervised HNE, we also conduct additional experiments in the corresponding settings. In particular, due to the nature of the datasets, we evaluate attributed HNE on the DBLP and PubMed datasets, where node attributes are available, and semi-supervised HNE on Yelp and Freebase, where node labels are abundant. We always test the computed network embeddings on the two standard network mining tasks of node classification and link prediction. Note that, while most existing studies on novel HNE algorithms have been focusing on these two standard tasks [59, 63, 103, 61, 75, 112, 85], we notice that there are also various other tasks due to the wide usage of heterogeneous networks in real-world applications [11, 136, 137, 138, 139, 140, 126]. While the performance of HNE there can be rather task-dependent and data-dependent, first simplifying the task into either a standard node classification or link prediction problem can often provide more insights into the task and dataset, which can help the further development of novel HNE algorithms.

For the standard unattributed unsupervised HNE setting, we first randomly hide 20% of the links and train all HNE algorithms with the remaining 80%. For node classification, we then train a separate linear Support Vector Machine (LinearSVC) [141] on the learned embeddings of 80% of the labeled nodes and predict on the remaining 20%. We repeat the process five times and compute the average scores regarding macro-F1 (across all labels) and micro-F1 (across all nodes). For link prediction, we use the Hadamard function to construct feature vectors for node pairs, train a two-class LinearSVC on the 80% training links, and evaluate on the 20% held-out links. We also repeat the process five times and compute two metrics: AUC (area under the ROC curve) and MRR (mean reciprocal rank). AUC is a standard measure for classification, where we regard link prediction as a binary classification problem, and MRR is a standard measure for ranking, where we regard link prediction as a link retrieval problem. Since exhaustive computation over all node pairs is too expensive, we always use the two-hop neighbors as the candidates for all nodes. For attributed HNE, node features are used during the training of HNE algorithms, whereas for semi-supervised HNE, certain amounts of node labels are used (80% by default).
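The metrics in this protocol can be computed with a few small functions. This pure-Python sketch leaves out the LinearSVC itself (any model producing pair scores or class predictions would plug in) and assumes single-label node classes, so Yelp's multi-label setting would need a per-label variant. All function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def hadamard(e_u: Sequence[float], e_v: Sequence[float]) -> List[float]:
    """Element-wise product used as the feature vector of the node pair (u, v)."""
    return [a * b for a, b in zip(e_u, e_v)]


def auc(pos_scores: Sequence[float], neg_scores: Sequence[float]) -> float:
    """Probability that a random positive pair outscores a random negative one (ties count 1/2)."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            wins += 1.0 if p > n else (0.5 if p == n else 0.0)
    return wins / (len(pos_scores) * len(neg_scores))


def mrr(ranked: Dict[str, List[str]], truth: Dict[str, str]) -> float:
    """ranked[q] lists candidate nodes for query q, best first; truth[q] is the held-out target."""
    total = 0.0
    for q, cands in ranked.items():
        if truth[q] in cands:  # a target missing from the candidates contributes 0
            total += 1.0 / (cands.index(truth[q]) + 1)
    return total / len(ranked)


def f1_scores(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """(macro-F1, micro-F1) for single-label multi-class predictions."""
    labels = sorted(set(y_true) | set(y_pred))
    tp = {lab: 0 for lab in labels}
    fp = {lab: 0 for lab in labels}
    fn = {lab: 0 for lab in labels}
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1
            fn[t] += 1

    def f1(a: int, b: int, c: int) -> float:
        return 2 * a / (2 * a + b + c) if (2 * a + b + c) else 0.0

    macro = sum(f1(tp[lab], fp[lab], fn[lab]) for lab in labels) / len(labels)
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    return macro, micro
```

Macro-F1 averages per-label scores, so rare labels weigh as much as frequent ones, while micro-F1 pools all nodes and, in the single-label case, equals accuracy.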

5 Experimental Evaluations

| Model | Node classification (Macro-F1/Micro-F1) | Link prediction (AUC/MRR) | ||||||

| DBLP | Yelp | Freebase | PubMed | DBLP | Yelp | Freebase | PubMed | |

| metapath2vec | 43.85/55.07 | 5.16/23.32 | 20.55/46.43 | 12.90/15.51 | 65.26/90.68 | 80.52/99.72 | 56.14/78.24 | 69.38/84.79 |

| PTE | 43.34/54.53 | 5.10/23.24 | 10.25/39.87 | 09.74/12.27 | 57.72/77.51 | 50.32/68.84 | 57.89/78.23 | 70.36/89.54 |

| HIN2Vec | 12.17/25.88 | 5.12/23.25 | 17.40/41.92 | 10.93/15.31 | 53.29/75.47 | 51.64/66.71 | 58.11/81.65 | 69.68/84.48 |

| AspEm | 33.07/43.85 | 5.40/23.82 | 23.26/45.42 | 11.19/14.44 | 67.20/91.46 | 76.10/95.18 | 55.80/77.70 | 68.31/87.43 |

| HEER | 09.72/27.72 | 5.03/22.92 | 12.96/37.51 | 11.73/15.29 | 53.00/72.76 | 73.72/95.92 | 55.78/78.31 | 69.06/88.42 |

| R-GCN | 09.38/13.39 | 5.10/23.24 | 06.89/38.02 | 10.75/12.73 | 50.50/73.35 | 72.17/97.46 | 50.18/74.01 | 63.33/81.19 |

| HAN | 07.91/16.98 | 5.10/23.24 | 06.90/38.01 | 09.54/12.18 | 50.24/73.10 | N/A | 51.50/74.13 | 65.85/85.33 |

| MAGNN | 06.74/10.35 | 5.10/23.24 | 06.89/38.02 | 10.30/12.60 | 50.10/73.26 | 50.03/69.81 | 50.12/74.18 | 61.11/90.01 |

| HGT | 15.17/32.05 | 5.07/23.12 | 23.06/46.51 | 11.24/18.72 | 59.98/83.13 | 79.00/99.66 | 55.68/79.46 | 73.00/88.05 |

| TransE | 22.76/37.18 | 5.05/23.03 | 31.83/52.04 | 11.40/15.16 | 63.53/86.29 | 69.13/83.66 | 52.84/75.80 | 67.95/84.69 |

| DistMult | 11.42/25.07 | 5.04/23.00 | 23.82/45.50 | 11.27/15.79 | 52.87/74.84 | 80.28/99.73 | 54.91/78.04 | 70.61/90.64 |

| ComplEx | 20.48/37.34 | 5.05/23.03 | 35.26/52.03 | 09.84/18.51 | 65.92/90.01 | 80.11/99.73 | 60.43/84.22 | 75.96/92.47 |

| ConvE | 12.42/26.42 | 5.09/23.02 | 24.57/47.61 | 13.00/14.49 | 54.03/75.31 | 78.55/99.70 | 54.29/76.11 | 71.81/89.82 |

5.1 Algorithms and Modifications

We amend the implementations of 13 popular HNE algorithms for seamless and efficient experimental evaluations on our prepared datasets. The algorithms we choose and the modifications we make are as follows.

- metapath2vec [59]: Since the original implementation contains a large amount of hard-coded data-specific settings such as node types and meta-paths, and the optimization is unstable and limited as it only examines one type of meta-path based context, we completely reimplement the algorithm. In particular, we first run random walks to learn the weights of different meta-paths based on the number of sampled instances, and then train the model using the unified loss function, which is a weighted sum over the loss functions of individual meta-paths. Both the random walk and meta-path-based embedding optimization are implemented with multiple threads in parallel.

- PTE [103]: Instead of accepting labeled texts as input and working on text networks with the specific three types of nodes (word, document, and label) and three types of links (word-word, document-word, and label-word), we revise the original implementation and allow the model to consume heterogeneous networks directly with arbitrary types of nodes and links by adding more type-specific objective functions.

- HIN2Vec [63]: We remove unnecessary data preprocessing code and modify the original implementation so that the program first generates random walks, then trains the model, and finally outputs node embeddings only.

- AspEm [60]: We clean up the hard-coded data-specific settings in the original implementation and write a script to connect the different components of automatically selecting the aspects with the least incompatibilities, as well as learning, matching, and concatenating the embeddings based on different aspects.

- HEER [61]: We remove the hard-coded data-specific settings and largely simplify the data preprocessing step in the original implementation by skipping the knockout step and disentangling the graph building step.

- R-GCN [67]: The existing implementation from DGL [142] is only scalable to heterogeneous networks with thousands of nodes, due to the requirement of putting the whole graph into memory during graph convolutions. To scale up R-GCN, we perform fixed-sized node and link sampling for batch-wise training following the framework of GraphSAGE [34].

- HAN [75]: Since the original implementation of HAN contains a large amount of hard-coded data-specific settings such as node types and meta-paths, and is infeasible for large-scale datasets for the same reason as R-GCN, we completely reimplement the HAN algorithm based on our implementation of R-GCN. In particular, we first automatically construct meta-path based adjacency lists for the chosen node type, and then sample the neighborhood for the seed nodes during batch-wise training.

- MAGNN [112]: The original DGL-based [142] unsupervised implementation of MAGNN solely looks at predicting the links between users and artists in a music website dataset with the use of a single neural layer. Hence, we remove all the hard-coded data-specific settings and refactor the entire pipeline so that the model can support multi-layer mini-batch training over arbitrary link types.

- HGT [85]: The original PyG-based [143] implementation of HGT targets the specific task of author disambiguation among the associated papers in a dynamic academic graph [135]. Therefore, we refactor it by removing hard-coded data-specific settings, assigning the same timestamp to all the nodes, and conducting training over all types of links.


- DistMult [81]: We remove the hard-coded data-specific settings and largely simplify the data preprocessing step in the original implementation.

- ComplEx [121]: Same as for TransE.

- ConvE [82]: Same as for DistMult.
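Several of the modifications listed above rely on the same graph utilities: meta-path guided random walks (metapath2vec), meta-path based adjacency lists (HAN), and fixed-size neighbor sampling for batch-wise training (R-GCN, HAN). The following pure-Python sketch illustrates one plausible form of each on a toy graph; it is not the code in the released package, and all names are hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Set, Tuple


def build_adj(edges: Sequence[Tuple[str, str]]) -> Dict[str, Set[str]]:
    adj: Dict[str, Set[str]] = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    return adj


def metapath_walk(adj, ntype, start, metapath, length, rng) -> List[str]:
    """metapath2vec-style walk cycling through a symmetric meta-path such as A-P-A."""
    pattern = metapath[:-1]              # A-P-A repeats as A, P, A, P, ...
    walk = [start]
    while len(walk) < length:
        wanted = pattern[len(walk) % len(pattern)]
        cands = sorted(n for n in adj[walk[-1]] if ntype[n] == wanted)
        if not cands:                    # dead end: stop the walk early
            break
        walk.append(rng.choice(cands))
    return walk


def metapath_neighbors(adj, ntype, metapath) -> Dict[str, Set[str]]:
    """HAN-style meta-path based adjacency lists for nodes of type metapath[0]."""
    result = {}
    for start in sorted(n for n in adj if ntype[n] == metapath[0]):
        frontier = {start}
        for t in metapath[1:]:           # follow one typed hop at a time
            frontier = {n for f in frontier for n in adj[f] if ntype[n] == t}
        result[start] = frontier         # may include start itself (e.g. A-P-A)
    return result


def sample_fixed(neighbors, k: int, rng: random.Random) -> List[str]:
    """GraphSAGE-style fixed-size sampling: k neighbors, with replacement if fewer exist."""
    pool = sorted(neighbors)
    if not pool:
        return []
    if len(pool) >= k:
        return rng.sample(pool, k)
    return [rng.choice(pool) for _ in range(k)]
```

Sampling a fixed number of neighbors per node bounds the memory of each mini-batch regardless of node degree, which is what allows the message-passing baselines to run on the larger datasets.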

We set the embedding size of all algorithms to 50 by default, and tune other hyperparameters following the original papers through standard five-fold cross validation on all datasets. We have put the implementation of all compared algorithms in a Python package and released them together with the datasets to constitute an open-source ready-to-use HNE benchmark.

5.2 Performance Benchmarks

We provide systematic experimental comparisons of the 13 popular state-of-the-art HNE algorithms across our four datasets, on the scenarios of unsupervised unattributed HNE, attributed HNE, and semi-supervised HNE.

Table V shows the performance of compared algorithms on unsupervised unattributed HNE, evaluated towards node classification and link prediction. We have the following observations.

From the perspective of compared algorithms:

(1) Proximity-preserving algorithms often perform well on both tasks under the unsupervised unattributed HNE setting, which explains why proximity preservation is the most widely used HNE or even general network embedding framework when node attributes and labels are unavailable. Among the proximity-preserving methods, HIN2Vec and HEER show reasonable results on link prediction but perform less well on node classification (especially on DBLP and Freebase). In fact, these two methods focus on modeling link representations in their objectives, and are thus more suitable for link prediction.

(2) Under the unsupervised unattributed HNE setting, message-passing methods perform poorly except for HGT, especially on node classification. As discussed before, message-passing methods are known to excel due to their integration of node attributes, link structures, and training labels. When neither node attributes nor labels are available, we use random vectors as node features and adopt a link prediction loss, which largely limits the performance of R-GCN, HAN, and MAGNN. We will focus our evaluation of the message-passing algorithms on the attributed and semi-supervised HNE settings later. In contrast, HGT exhibits competitive results on both node classification and link prediction. We attribute this to its node-type and link-type dependent parameters, which maintain dedicated representations for different types of nodes and links. In addition, the heterogeneous mini-batch graph sampling algorithm designed by HGT reduces the loss of structural information due to sampling and further boosts the performance. Finally, the link prediction result of HAN on the Yelp dataset is not available. This is because HAN can only embed one type of nodes at a time (we embed Business in Yelp) and thus can only predict links between two nodes of the same type (i.e., Business-Business). However, all links in Yelp connect distinct types of nodes (e.g., Business-Location, Business-User), and HAN cannot predict such links (thus marked as N/A).

(3) Relation-learning methods such as TransE and ComplEx perform better on Freebase and PubMed on both tasks, especially on link prediction. In fact, in Table IV and Figure 3 we can observe that both datasets (especially Freebase) have more link types. Relation-learning approaches, which are mainly designed to embed knowledge graphs (e.g., Freebase), can better capture the semantics of numerous types of direct links.

From the perspective of datasets:

(1) All approaches have relatively low F1 scores on Yelp and PubMed (especially Yelp) on node classification. For Yelp, this is partly because it has the largest number of classes (16) among the four datasets, as shown in Table IV. Moreover, unlike the cases of the other datasets, a node in Yelp can have multiple labels, which makes the classification task more challenging.

(2) In Figure 4, we can observe that the degree distribution of Freebase is more skewed. Therefore, when we conduct link sampling or random walks on Freebase during representation learning, nodes with lower degrees will be sampled less frequently and their representations may not be learned accurately. This observation may explain why the link prediction metrics on Freebase are in general lower than those on Yelp and PubMed.

(3) As we can see in Figures 3-6, most studied network properties are more balanced on Freebase and PubMed (especially PubMed) across different types of nodes and links. This in general makes both the node classification and link prediction tasks harder for all algorithms, and also makes the gaps among different algorithms smaller.

5.3 Ablation Studies

To provide an in-depth performance comparison among various HNE algorithms, we further conduct controlled experiments by varying the embedding sizes and randomly removing links from the training set.

In Figure 7, we show the micro-F1 scores for node classification and AUC scores for link prediction computed on the PubMed dataset. We omit the other results here, which can be easily reproduced with our provided benchmark package. As we can observe, some algorithms are more robust to varying settings while others are more sensitive. In general, varying the embedding size and removing links can significantly impact the performance of most algorithms on both tasks, and can sometimes even change the relative ordering of certain algorithms. This again emphasizes the importance of setting up a standard benchmark, including datasets and evaluation protocols, for systematic HNE algorithm evaluation. In particular, on PubMed, embedding sizes larger than 50 can harm the performance of most algorithms, especially on node classification, probably due to overfitting with the limited labeled data. Interestingly, the random removal of links does have a negative impact on link prediction, but it does not necessarily harm node classification. This means that node classes and link structures may not always be tightly correlated, and even a subset of the links may already provide enough information for node classification.

Towards the evaluation of the message-passing HNE algorithms designed to integrate node attributes and labels into representation learning, like R-GCN, HAN, MAGNN, and HGT, we also conduct controlled experiments by adding random Gaussian noise to the node attributes and masking different amounts of training labels.
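The two perturbations can be sketched with Python's `random` module. This illustration assumes i.i.d. zero-mean noise on every attribute value and uniform random selection of the labels to keep; the exact noise levels and masking ratios behind Figure 8 are not reproduced here, and the function names are hypothetical.

```python
import random
from typing import Dict, List


def add_gaussian_noise(features: Dict[str, List[float]], sigma: float,
                       seed: int = 0) -> Dict[str, List[float]]:
    """Perturb every attribute value with i.i.d. N(0, sigma^2) noise."""
    rng = random.Random(seed)
    return {n: [x + rng.gauss(0.0, sigma) for x in vec] for n, vec in features.items()}


def mask_labels(labels: Dict[str, int], keep_ratio: float, seed: int = 0) -> Dict[str, int]:
    """Keep a random keep_ratio fraction of the training labels and hide the rest."""
    rng = random.Random(seed)
    nodes = sorted(labels)
    kept = rng.sample(nodes, int(round(keep_ratio * len(nodes))))
    return {n: labels[n] for n in kept}
```

Fixing the seed makes each noise level and masking ratio reproducible across the compared algorithms.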

In Figure 8, we show the results on the PubMed dataset. As we can observe, the scores in most subfigures are significantly higher than the scores in Table V, indicating the effectiveness of R-GCN, HAN, MAGNN, and HGT in integrating node attributes and labels for HNE. In particular, the incorporation of node attributes boosts the node classification results of R-GCN, HAN, and MAGNN significantly (almost tripling the F1 scores and significantly higher than all algorithms that do not use node attributes), but it offers very little help to HGT. This suggests that R-GCN, HAN, and MAGNN can effectively leverage the semantic information associated with attributes, whereas HGT relies more on the network structures and type information of nodes and links. Moreover, MAGNN achieves the highest node classification results with large margins, as it successfully incorporates the attributes of intermediate nodes along the meta-paths (which are ignored by HAN). In addition, when random noise with larger variance is added to node attributes, the performance of node classification significantly drops, while the performance of link prediction is less affected. As more training labels become available, the node classification results of all four algorithms increase, as expected, but surprisingly the link prediction results are barely affected. These observations again reveal the different natures of the two tasks, where node classes are more related to node contents, whereas links should typically be inferred from structural information.

6 Future

In this work, we present a comprehensive survey of various existing HNE algorithms, and provide benchmark datasets and baseline implementations to ease future research in this direction. While HNE has already demonstrated strong performance across a variety of downstream tasks, it is still in its infancy, with many open challenges. To conclude this work and inspire future research, we now briefly discuss the limitations of current HNE and several specific directions potentially worth pursuing.

Beyond homophily.

As formulated in Eq. (1), current HNE algorithms focus on leveraging network homophily. Given recent research on homogeneous networks that studies the combination of positional and structural embeddings [145, 49], it would be interesting to explore how such design principles and paradigms can be generalized to HNE. In particular, in heterogeneous networks, the relative positions and structural roles of nodes can both be measured under different meta-paths or meta-graphs, which are naturally more informative and diverse. However, such considerations also introduce harder computational challenges.

Beyond accuracy.

Most, if not all, existing research on HNE has primarily focused on accuracy in different downstream tasks. It would be interesting to further study the scalability and efficiency (for large-scale networks) [34, 146], temporal adaptability (for dynamic evolving networks) [147, 148], robustness (towards adversarial attacks) [32, 149], interpretability [150], uncertainty [151], and fairness [152, 153] of HNE, among other properties.

Beyond node embedding.

Graph- and subgraph-level embeddings have been intensively studied on homogeneous networks to enable graph-level classification [154, 43] and unsupervised training of message-passing models [36, 155], but they have hardly been studied on heterogeneous networks [156]. Although existing works like HIN2Vec [63] study the embedding of meta-paths to improve the embedding of nodes, the direct application of graph- and subgraph-level embeddings to heterogeneous networks remains largely nascent.

Revisiting KB embedding.

The difference between KB embedding and other types of HNE lies mainly in the numbers of node and link types. Directly applying KB embedding to heterogeneous networks fails to consider meta-paths with rich semantics, whereas directly applying HNE to KBs is impractical due to the exponential number of possible meta-paths. However, it would still be interesting to study the intersection between these two groups of methods (as well as the two types of data). For example, how can we combine the ideas of meta-paths on heterogeneous networks and embedding transformations on KBs for HNE with more semantic-aware transformations? How can we devise truncated random walk based methods for KB embedding to include higher-order relations?
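As a reference point for the last question, a truncated random walk guided by a meta-path, in the spirit of metapath2vec [59], can be sketched as follows. This is a minimal illustration under our own assumptions: adjacency lists keyed by string node IDs, a dict mapping each node to its type, and a meta-path whose first and last types coincide so that it can be repeated. `metapath_walk` is a hypothetical name, not the API of any HNE package. How to extend such walks to KBs, where relation types are numerous and candidate meta-paths grow exponentially, is precisely the open question raised above.

```python
import random
from typing import Dict, List, Sequence


def metapath_walk(adj: Dict[str, List[str]], node_type: Dict[str, str],
                  metapath: Sequence[str], start: str, walk_length: int,
                  seed: int = 0) -> List[str]:
    """Truncated random walk constrained to follow a repeated meta-path.

    For metapath A-P-A, the walk visits node types A, P, A, P, ... and stops
    early if no neighbor of the required next type exists.
    """
    if len(metapath) < 2 or metapath[0] != metapath[-1]:
        raise ValueError("meta-path must start and end with the same node type")
    if node_type[start] != metapath[0]:
        raise ValueError("start node type must match the first meta-path type")
    rng = random.Random(seed)
    pattern = list(metapath[:-1])  # drop the repeated end type so the pattern cycles
    walk = [start]
    while len(walk) < walk_length:
        next_type = pattern[len(walk) % len(pattern)]
        candidates = [v for v in adj.get(walk[-1], []) if node_type[v] == next_type]
        if not candidates:
            break  # truncate: the meta-path cannot be continued from here
        walk.append(rng.choice(candidates))
    return walk
```

The resulting walks would then be fed to a skip-gram style objective; the type constraint is what lets the walk carry the semantics of the chosen meta-path.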

Modeling heterogeneous contexts.

Heterogeneous networks mainly model different types of nodes and links. However, networks nowadays are often associated with rich contents, which provide contexts for the nodes, links, and subnetworks [157, 158, 159]. There have been studies exploiting text-rich heterogeneous information networks for taxonomy construction [160, 161] and text classification [162, 163]. Meanwhile, modeling heterogeneous interactions under multi-faceted contexts by integrating multi-modal content and structure could be a challenging but rewarding research area.

Understanding the limitation.

While HNE (as well as many neural representation learning models) has demonstrated strong performance in various domains, it is worthwhile to understand its potential limits. For example, when do modern HNE algorithms work better than traditional network mining approaches (e.g., path counting, subgraph matching, non-neural or linear propagation)? How can we combine the advantages of both worlds? Moreover, while the mathematical mechanisms behind neural networks for homogeneous network data (e.g., smoothing, low-pass filtering, invariant and equivariant transformations) have been intensively studied, this work, by unifying existing HNE models, also aims to stimulate further theoretical studies on the power and limitations of HNE.
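To make "path counting" concrete, PathSim [14] scores two nodes under a symmetric meta-path by normalized path counts, s(x, y) = 2 M[x][y] / (M[x][x] + M[y][y]), where M is the commuting matrix of the meta-path. The sketch below computes it for the author-paper-author (A-P-A) meta-path from a toy, hypothetical author-paper incidence matrix, in plain Python for self-containment; a practical implementation would rather use sparse matrix products.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Dense matrix product (illustrative, not optimized)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def pathsim_apa(w_ap: Matrix) -> Matrix:
    """PathSim under the symmetric meta-path A-P-A.

    w_ap[i][j] = 1 if author i wrote paper j. The commuting matrix
    M = W_AP W_AP^T counts A-P-A path instances between every author pair.
    """
    m = matmul(w_ap, transpose(w_ap))
    n = len(m)
    return [[2 * m[x][y] / (m[x][x] + m[y][y]) if m[x][x] + m[y][y] > 0 else 0.0
             for y in range(n)] for x in range(n)]


# Toy example: author 0 wrote papers 0 and 1, author 1 wrote paper 0, author 2 wrote paper 2.
scores = pathsim_apa([[1, 1, 0], [1, 0, 0], [0, 0, 1]])
```

Here authors 0 and 1 share one paper, so s(0, 1) = 2 * 1 / (2 + 1) = 2/3, while authors 0 and 2 share none and score 0. Such closed-form, training-free scores are one natural baseline against which the benefits of HNE could be examined.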

Acknowledgement

Special thanks to Dr. Hanghang Tong for his generous help in polishing this work. Research was sponsored in part by the U.S. Army Research Laboratory under Cooperative Agreement No. W911NF-09-2-0053 and W911NF-13-1-0193, DARPA No. W911NF-17-C-0099 and FA8750-19-2-1004, National Science Foundation IIS 16-18481, IIS 17-04532, and IIS-17-41317, DTRA HDTRA11810026, and grant 1U54GM114838 awarded by NIGMS through funds provided by the trans-NIH Big Data to Knowledge (BD2K) initiative.

References

- [1] Y. Seo, M. Defferrard, P. Vandergheynst, and X. Bresson, “Structured sequence modeling with graph convolutional recurrent networks,” in ICONIP, 2018.

- [2] J. He, M. Li, H.-J. Zhang, H. Tong, and C. Zhang, “Manifold-ranking based image retrieval,” in SIGMM, 2004.

- [3] J. Gilmer, S. S. Schoenholz, P. F. Riley, O. Vinyals, and G. E. Dahl, “Neural message passing for quantum chemistry,” in ICML, 2017.

- [4] A. Bordes, N. Usunier, A. Garcia-Duran, J. Weston, and O. Yakhnenko, “Translating embeddings for modeling multi-relational data,” in NIPS, 2013.

- [5] C. Yang, L. Bai, C. Zhang, Q. Yuan, and J. Han, “Bridging collaborative filtering and semi-supervised learning: A neural approach for POI recommendation,” in KDD, 2017.

- [6] Y. Xiao, A. Krishnan, and H. Sundaram, “Discovering strategic behaviors for collaborative content-production in social networks,” in WWW, 2020.

- [7] Y. Sun and J. Han, “Mining heterogeneous information networks: principles and methodologies,” Synthesis Lectures on Data Mining and Knowledge Discovery, vol. 3, no. 2, pp. 1–159, 2012.

- [8] C. Shi, Y. Li, J. Zhang, Y. Sun, and P. S. Yu, “A survey of heterogeneous information network analysis,” TKDE, vol. 29, no. 1, pp. 17–37, 2016.

- [9] J.-D. Zhang and C.-Y. Chow, “GeoSoCa: Exploiting geographical, social and categorical correlations for point-of-interest recommendations,” in SIGIR, 2015.

- [10] C. Yang, D. H. Hoang, T. Mikolov, and J. Han, “Place deduplication with embeddings,” in WWW, 2019.

- [11] C. Yang, C. Zhang, X. Chen, J. Ye, and J. Han, “Did you enjoy the ride: Understanding passenger experience via heterogeneous network embedding,” in ICDE, 2018.

- [12] Y. Li and J. C. Patra, “Genome-wide inferring gene–phenotype relationship by walking on the heterogeneous network,” Bioinformatics, vol. 26, no. 9, pp. 1219–1224, 2010.

- [13] A. P. Davis, C. J. Grondin, R. J. Johnson, D. Sciaky, B. L. King, R. McMorran, J. Wiegers, T. C. Wiegers, and C. J. Mattingly, “The comparative toxicogenomics database: update 2017,” Nucleic Acids Research, vol. 45, no. D1, pp. D972–D978, 2016.

- [14] Y. Sun, J. Han, X. Yan, P. S. Yu, and T. Wu, “PathSim: Meta path-based top-k similarity search in heterogeneous information networks,” in VLDB, 2011.

- [15] C. Shi, X. Kong, Y. Huang, P. S. Yu, and B. Wu, “HeteSim: A general framework for relevance measure in heterogeneous networks,” TKDE, vol. 26, no. 10, pp. 2479–2492, 2014.

- [16] C. Yang, M. Liu, F. He, X. Zhang, J. Peng, and J. Han, “Similarity modeling on heterogeneous networks via automatic path discovery,” in ECML-PKDD, 2018.

- [17] L. Dos Santos, B. Piwowarski, and P. Gallinari, “Multilabel classification on heterogeneous graphs with Gaussian embeddings,” in ECML-PKDD, 2016.

- [18] D. Eswaran, S. Günnemann, C. Faloutsos, D. Makhija, and M. Kumar, “ZooBP: Belief propagation for heterogeneous networks,” in VLDB, 2017.

- [19] T. Chen and Y. Sun, “Task-guided and path-augmented heterogeneous network embedding for author identification,” in WSDM, 2017.

- [20] R. Socher, D. Chen, C. D. Manning, and A. Ng, “Reasoning with neural tensor networks for knowledge base completion,” in NIPS, 2013.

- [21] B. Oh, S. Seo, and K.-H. Lee, “Knowledge graph completion by context-aware convolutional learning with multi-hop neighborhoods,” in CIKM, 2018.

- [22] W. Zhang, B. Paudel, L. Wang, J. Chen, H. Zhu, W. Zhang, A. Bernstein, and H. Chen, “Iteratively learning embeddings and rules for knowledge graph reasoning,” in WWW, 2019.

- [23] X. Geng, H. Zhang, J. Bian, and T.-S. Chua, “Learning image and user features for recommendation in social networks,” in ICCV, 2015.

- [24] H. Zhao, Q. Yao, J. Li, Y. Song, and D. L. Lee, “Meta-graph based recommendation fusion over heterogeneous information networks,” in KDD, 2017.

- [25] S. Hou, Y. Ye, Y. Song, and M. Abdulhayoglu, “HinDroid: An intelligent Android malware detection system based on structured heterogeneous information network,” in KDD, 2017.

- [26] P. Goyal and E. Ferrara, “Graph embedding techniques, applications, and performance: A survey,” Knowledge-Based Systems, vol. 151, pp. 78–94, 2018.

- [27] H. Cai, V. W. Zheng, and K. C.-C. Chang, “A comprehensive survey of graph embedding: Problems, techniques, and applications,” TKDE, vol. 30, no. 9, pp. 1616–1637, 2018.

- [28] P. Cui, X. Wang, J. Pei, and W. Zhu, “A survey on network embedding,” TKDE, vol. 31, no. 5, pp. 833–852, 2018.

- [29] B. Perozzi, R. Al-Rfou, and S. Skiena, “DeepWalk: Online learning of social representations,” in KDD, 2014.

- [30] J. Tang, M. Qu, M. Wang, M. Zhang, J. Yan, and Q. Mei, “LINE: Large-scale information network embedding,” in WWW, 2015.

- [31] A. Grover and J. Leskovec, “node2vec: Scalable feature learning for networks,” in KDD, 2016.

- [32] H. Wang, J. Wang, J. Wang, M. Zhao, W. Zhang, F. Zhang, X. Xie, and M. Guo, “GraphGAN: Graph representation learning with generative adversarial nets,” in AAAI, 2018.

- [33] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” in ICLR, 2017.

- [34] W. Hamilton, Z. Ying, and J. Leskovec, “Inductive representation learning on large graphs,” in NIPS, 2017.

- [35] J. Chen, T. Ma, and C. Xiao, “FastGCN: Fast learning with graph convolutional networks via importance sampling,” in ICLR, 2018.

- [36] P. Veličković, W. Fedus, W. L. Hamilton, P. Liò, Y. Bengio, and R. D. Hjelm, “Deep graph infomax,” in ICLR, 2019.

- [37] N. Zhao, H. Zhang, M. Wang, R. Hong, and T.-S. Chua, “Learning content–social influential features for influence analysis,” IJMIR, 2016.

- [38] S. Abu-El-Haija, B. Perozzi, and R. Al-Rfou, “Learning edge representations via low-rank asymmetric projections,” in CIKM, 2017.

- [39] B. Perozzi, V. Kulkarni, H. Chen, and S. Skiena, “Don’t walk, skip!: online learning of multi-scale network embeddings,” in ASONAM, 2017.

- [40] C. Yang, J. Zhang, H. Wang, S. Li, M. Kim, M. Walker, Y. Xiao, and J. Han, “Relation learning on social networks with multi-modal graph edge variational autoencoders,” in WSDM, 2020.

- [41] M. Niepert, M. Ahmed, and K. Kutzkov, “Learning convolutional neural networks for graphs,” in ICML, 2016.

- [42] C. Yang, M. Liu, V. W. Zheng, and J. Han, “Node, motif and subgraph: Leveraging network functional blocks through structural convolution,” in ASONAM, 2018.

- [43] Z. Ying, J. You, C. Morris, X. Ren, W. Hamilton, and J. Leskovec, “Hierarchical graph representation learning with differentiable pooling,” in NIPS, 2018.

- [44] C. Morris, M. Ritzert, M. Fey, W. L. Hamilton, J. E. Lenssen, G. Rattan, and M. Grohe, “Weisfeiler and Leman go neural: Higher-order graph neural networks,” in AAAI, 2019.

- [45] S. Cao, W. Lu, and Q. Xu, “GraRep: Learning graph representations with global structural information,” in CIKM, 2015.

- [46] D. Wang, P. Cui, and W. Zhu, “Structural deep network embedding,” in KDD, 2016.

- [47] Z. Zhang, P. Cui, X. Wang, J. Pei, X. Yao, and W. Zhu, “Arbitrary-order proximity preserved network embedding,” in KDD, 2018.

- [48] J. Huang, X. Liu, and Y. Song, “Hyper-path-based representation learning for hyper-networks,” in CIKM, 2019.

- [49] L. F. Ribeiro, P. H. Saverese, and D. R. Figueiredo, “struc2vec: Learning node representations from structural identity,” in KDD, 2017.

- [50] M. Zhang and Y. Chen, “Weisfeiler-Lehman neural machine for link prediction,” in KDD, 2017.

- [51] T. Lyu, Y. Zhang, and Y. Zhang, “Enhancing the network embedding quality with structural similarity,” in CIKM, 2017.

- [52] C. Donnat, M. Zitnik, D. Hallac, and J. Leskovec, “Learning structural node embeddings via diffusion wavelets,” in KDD, 2018.

- [53] B. Schölkopf and A. J. Smola, Learning with kernels: support vector machines, regularization, optimization, and beyond. MIT Press, 2001.

- [54] A. Liaw and M. Wiener, “Classification and regression by randomForest,” R News, vol. 2, no. 3, pp. 18–22, 2002.

- [55] T. Chen and C. Guestrin, “XGBoost: A scalable tree boosting system,” in KDD, 2016.

- [56] S. Chang, W. Han, J. Tang, G.-J. Qi, C. C. Aggarwal, and T. S. Huang, “Heterogeneous network embedding via deep architectures,” in KDD, 2015.

- [57] F. Wu, X. Lu, J. Song, S. Yan, Z. M. Zhang, Y. Rui, and Y. Zhuang, “Learning of multimodal representations with random walks on the click graph,” TIP, vol. 25, no. 2, pp. 630–642, 2015.

- [58] Y. Zhao, Z. Liu, and M. Sun, “Representation learning for measuring entity relatedness with rich information,” in AAAI, 2015.

- [59] Y. Dong, N. V. Chawla, and A. Swami, “metapath2vec: Scalable representation learning for heterogeneous networks,” in KDD, 2017.

- [60] Y. Shi, H. Gui, Q. Zhu, L. Kaplan, and J. Han, “AspEm: Embedding learning by aspects in heterogeneous information networks,” in SDM, 2018.