[1]\pfx \fnmMansoor \surRezghi

1]\orgdivDepartment of Computer Science, \orgnameTarbiat Modares University, \orgaddress \cityTehran, \stateTehran, \countryIran

Heterogeneous Multi-Agent Reinforcement Learning via Mirror Descent Policy Optimization

Abstract

This paper presents an extension of the Mirror Descent method to overcome challenges in cooperative Multi-Agent Reinforcement Learning (MARL) settings, where agents have varying abilities and individual policies. The proposed Heterogeneous-Agent Mirror Descent Policy Optimization (HAMDPO) algorithm utilizes the multi-agent advantage decomposition lemma to enable efficient policy updates for each agent while ensuring overall performance improvements. By iteratively updating agent policies through an approximate solution of the trust-region problem, HAMDPO guarantees stability and improves performance. Moreover, the HAMDPO algorithm is capable of handling both continuous and discrete action spaces for heterogeneous agents in various MARL problems. We evaluate HAMDPO on Multi-Agent MuJoCo and StarCraftII tasks, demonstrating its superiority over state-of-the-art algorithms such as HATRPO and HAPPO. These results suggest that HAMDPO is a promising approach for solving cooperative MARL problems and could potentially be extended to address other challenging problems in the field of MARL.

keywords:

Multi-agent Reinforcement Learning, Policy Gradient, Mirror descent1 Introduction

Multi-agent Reinforcement Learning (MARL) is an important tool to solve a wide range of sequential decision-making problems in real-world applications, such as autonomous driving [27], traffic signal control [23], drone swarms [2], and smart grid [14]. In these problems, multiple agents must work together to efficiently accomplish a task by achieving maximum reward in a shared environment. However, developing algorithms to outperform single-agent algorithms in such settings requires dealing with additional challenges such as scalability and non-stationarity.

Cooperative MARL involves several agents learning to jointly accomplish a task. Although several paradigms have been introduced to employ single-agent training methods, such as policy gradient in MARL [10, 4], scaling up single-agent methods to multi-agent systems presents enduring challenges [6]. Thus, researchers continue to develop new algorithms that can effectively address these challenges and improve the performance of cooperative MARL. One of the earliest methods for MARL is called Decentralized Training with Decentralized Execution (DTDE) [21]. In this paradigm, each agent makes decisions and learns independently, without access to other agents’ observations, actions, or policies. This method is easy to implement, but as the number of agents increases, learning becomes unstable because agents do not take into account other agents’ behavior during policy updates. Therefore, the environment appears non-stationary from a single agent’s viewpoint.

To improve the stability of the learning process, another method called Centralized Training with Centralized Execution (CTCE) was introduced [6]. This method models the joint policy as a centralized policy and uses single-agent methods to learn this aggregated policy. However, scalability suffers from the curse of dimensionality because the state-action space grows exponentially with the number of agents. To address this issue, the joint policy can be factored into individual policies for each agent. Centralized Training with Decentralized Execution (CTDE) [10] leverages the benefits of both DTDE and CTCE. In CTDE, each agent holds an individual policy and learns through actor-critic [11] or policy gradient methods. The critic model has access to the global state and other agents’ actions, so it learns the true state-value. When paired with the actor, it can be used to find the optimal policy. CTDE allows agents to use this additional information during training, but in execution time, each agent acts independently and decision-making relies only on its own policy. Therefore, CTDE is a promising solution for many real-world AI applications.

On the other hand, in Reinforcement Learning (RL), sudden changes in policy distribution can lead to poor local optima. To avoid this, in single-agent, trust-region algorithms guarantee that policy updates are not excessively drastic and the new policy remains close to the old one, ensuring monotonic improvement in policy updates. 7 derived a policy improvement bound and introduced the Conservative Policy Iteration (CPI) algorithm to stabilize and enhance the policy learning process. 15 extended this policy improvement bound for stochastic policies and introduced Trust Region methods such as trust-region Policy Optimization (TRPO) [15] and Proximal Policy Optimization (PPO) [16] algorithms to solve this trust-region problem. These algorithms have been shown to be effective in a wide range of tasks, including robotic control and game-playing.

The CTDE provides a framework for extending policy gradient theorems [19] from single-agent to multi-agent scenarios. One example of a policy gradient method that utilizes separate actors and critics for each agent and trains the critic in a centralized way is Multi-Agent Deep Deterministic Policy Gradient [10]. While several policy gradient approaches are available, trust-region methods like the TRPO and PPO algorithms are state-of-the-art single-agent policy gradient methods. Attempts to extend trust-region learning to multi-agent settings include Independent PPO (IPPO) [25] and Multi-Agent PPO (MAPPO) [26]. However, these methods suffer from the drawbacks of homogeneous agents and parameter sharing. Homogeneous agents share a common set of skills, enabling them to share parts of their learning model with other agents and use the same policy network. Parameter sharing can accelerate the learning progress of this network. However, in scenarios where agents are heterogeneous and have different tasks, their action space may not be identical, which can limit the effectiveness of parameter sharing.

Recently, 8 proposed the Heterogeneous-Agent TRPO (HATRPO) and Heterogeneous-Agent PPO (HAPPO) algorithms to address limitations of existing trust-region learning methods in MARL, such as IPPO and MAPPO. HATRPO/HAPPO rely on the multi-agent advantage decomposition lemma and sequential policy update scheme to ensure monotonic improvement. The outstanding HATRPO/HAPPO algorithms are extensions of single-agent TRPO/PPO methods designed for MARL with heterogeneous agents.

Although HATRPO/HAPPO algorithms showed appropriate results, recently, a single-agent policy gradient method called Mirror Descent Policy Optimization (MDPO) was introduced by 22. MDPO has shown superior performance compared to TRPO and PPO in single-agent scenarios. Therefore, extending the MDPO algorithm to MARL with heterogeneous agents would enable us to leverage the advantages of mirror descent in multi-agent settings, which could work better than HATRPO/HAPPO.

This paper introduces a novel trust-region algorithm for MARL called Heterogeneous-Agent Mirror Descent Policy Optimization (HAMDPO). HAMDPO leverages the multi-agent advantage decomposition lemma and the sequential policy update scheme to apply mirror descent in multi-agent scenarios. We evaluate HAMDPO on several tasks of the StarCraftII and Multi-Agent Mujoco continuous benchmark and compare its performance with that of HATRPO and HAPPO. Our results demonstrate that HAMDPO outperforms both HATRPO and HAPPO in terms of convergence and overall performance.

This paper is organized as follows: Section 2 provides Preliminaries, offering an overview of essential background concepts. Section 2.1 explores Mirror Descent and Policy Optimization in multi-agent systems. Section 3 presents Multi-Agent Trust-Region Learning, addressing trust and cooperation challenges, enhancing agent interactions’ stability and performance. Section 4 introduces Heterogeneous-Agent Mirror Descent Policy Optimization, handling agent heterogeneity to improve system performance. Section 5 discusses Experiments, comprehensively evaluating and comparing the effectiveness of our approaches.

2 Preliminaries

In a fully cooperative multi-agent setting, we consider a Markov game [9] represented by a tuple , where denotes the set of interacting agents, is the set of states observed by all agents, and denotes the joint action space of agents. Here, is the action space of agent . The transition probability from any state to any state for any joint action is given by . The reward function is , and is the discount factor.

At time step , each agent chooses an action according to its policy given the current state . The agents joint action is then formed as , drawn from the joint policy . The agents then receive a reward and move to the next state .

The joint value function in a state is defined as , which is the expected cumulative reward obtained by following the joint policy from state . The joint state-action value function represents the expected cumulative reward obtained by following the joint policy from state and taking joint action . The difference between these two functions gives the joint advantage function .

In a fully-cooperative multi-agent setting, agents collaborate to maximize cumulative reward. This is achieved by maximizing the expected sum of discounted rewards obtained by the agents over time denoted by the following objective function:

| (1) |

Here, the expectation is taken over the state-action marginal distribution of the trajectory induced by the joint policy , denoted by .

It should be noted that in the fully-cooperative setting, agents work together towards a common goal and do not have conflicting objectives. This is in contrast to the partially cooperative or non-cooperative settings, where agents may have individual objectives that may not align with the collective goal [12].

2.1 Mirror Descent and Policy Optimization

Trust-region methods have demonstrated their effectiveness in finding optimal policies and stabilizing learning in RL. Notably, the TRPO and PPO algorithms are recognized as remarkable trust-region approaches. Despite the extensive study of the mirror descent algorithm in tabular single-agent RL [17, 5], where 17 proved a convergence rate of for MD-style RL algorithms in the tabular case, a significant advancement was made by 22 with the introduction of MDPO. This extension enables the training of parametric policies using neural networks and has consistently outperformed TRPO and PPO in a wide range of tasks.

To solve a trust-region problem in MARL using the mirror descent algorithm, we first need to introduce the mirror descent definition and its utilization in the RL setting. Consider a constrained convex problem of the form:

| (2) |

where is a convex function and is a convex and compact constraint set. The mirror descent algorithm [1] with step size solves problem (2) by using the first-order approximation of , augmented with a regularization term, as follows:

| (3) |

where is the Bregman divergence associated with the Bregman potential , defined as:

| (4) |

A strictly convex and twice differentiable function is called a Bregman potential on the convex domain . The mirror descent algorithm uses a Bregman potential to obtain a local update rule that takes into account the global geometry of the constraint set. By selecting the negative Shannon entropy as the Bregman potential, we obtain the KL-divergence between the probability distributions as the Bregman divergence.

In summary, mirror descent is a powerful optimization technique that can be used to solve constrained convex optimization problems in the context of reinforcement learning. By incorporating the Bregman divergence with a carefully chosen Bregman potential, mirror descent can account for the geometry of the constraint set and efficiently compute a solution to the optimization problem.

In single-agent policy gradient algorithms, the optimization problem of finding the optimal policy can be expressed as:

| (5) |

where represents the initial state distribution and is a class of smoothly parameterized stochastic policies with parameter , which is also used to represent a policy.

Previous works have studied mirror descent in both the tabular and parametric cases. They have shown that mirror descent can be used to solve the optimization problem of policy gradient methods and can yield excellent results even with non-convex objective functions. The on-policy update rule for mirror descent is defined as:

| (6) |

where is the state distribution of the previous policy [22]. However, taking only one step of stochastic gradient descent (SGD) over the update rule (6) leads to the gradient of the KL term being equal to zero i.e. , which reduces to the vanilla policy gradient method [20]. Therefore, multiple SGD steps are needed at each iteration to account for the regularization term. Because samples are drawn from the previous policy distribution and the policy distribution changes with every SGD step, so importance sampling is employed to correct the estimation. Finally, the MDPO objective is defined as:

| (7) |

To solve the policy optimization problem (5), TRPO approximates the objective function using the first-order term and approximates the constraint using the second-order term of the corresponding Taylor series. This results in a policy gradient update that involves calculating the inverse of the Fisher information matrix, which is a quadratic approximation to the constraint. Despite the benefits of TRPO, it is a complicated and expensive algorithm to run.

To address these issues, PPO relaxes the hard constraint assumption and reduces the computation burden of TRPO. PPO attempts to simplify the optimization process while still retaining the advantages of TRPO. It uses a clipped surrogate objective and updates the policy by solving an unconstrained optimization problem in which the ratio of the new to old policy is clipped to remain bounded. Unfortunately, PPO violates the trust-region assumptions by failing to constrain the update size and does not guarantee monotonic improvement to be satisfied [24, 3].

The MDPO algorithm, unlike TRPO, is a first-order method and is more straightforward to implement. Additionally, MDPO effectively applies a trust-region constraint, which is an advancement over PPO. Since MDPO has demonstrated strong performance in the context of single-agent RL, we intend to investigate its applicability and effectiveness in MARL.

3 Multi-Agent Trust-Region Learning

MARL is a sub-field of RL that deals with the problem of multiple agents interacting in the same environment. One of the challenges in MARL is designing algorithms that enable agents to learn from each other and cooperate effectively. One popular technique for achieving this is parameter sharing, which allows agents to share the same set of parameters for their policies or value functions.

Parameter sharing has several benefits. For one, it can reduce the number of parameters in a model, making it more efficient and easier to train. Additionally, by allowing agents to learn from each other’s experiences, it can boost overall learning performance [6]. However, there are also drawbacks to parameter sharing. For example, it can prevent agents from developing unique behaviors, which can limit their effectiveness in certain situations. Recent studies have also shown that parameter sharing can lead to a sub-optimal outcome that becomes exponentially worse as the number of agents increases [8].

Most of trust-region methods in MARL use parameter sharing and are designed for homogeneous agents and do not guarantee monotonic improvement [25, 26] . Recently, 8 developed a theoretically-justified trust-region learning framework for heterogeneous agents by introducing the multi-agent advantage decomposition lemma. To use this framework, it is necessary to define state-action value and advantage functions for different subsets of agents. The multi-agent state-action value function for a subset of n agents and its complement is defined as:

| (8) |

Here, is the joint policy of all agents, is the joint action of agents in subset , and is the joint action of agents in the complement subset . The expectation is taken over the joint action of agents in the complement subset, sampled from the joint policy of agents in the complement subset, .

Correspondingly, the multi-agent advantage function for two disjoint subsets and is

| (9) |

For an arbitrary subset with a given joint policy and state , the multi-agent advantage decomposition lemma is

| (10) |

The equation (10) shows that the joint advantage function can be derived from a summation of each agent’s local advantage. This means that for an arbitrary subset with a given joint policy and state , the advantage function can be expressed as the sum of each agent’s advantage function for their actions. As a result, any agent that improves its own advantage will also improve the joint advantage. This is in contrast to VDN or QMIX [18, 13], which rely on assumptions about the decomposability of the joint value function. Therefore, the multi-agent advantage decomposition lemma is a powerful tool that allows agents to learn independently while still improving the overall performance.

Consider as a joint policy, as some other joint policy of agents , and as some other policy of agent . The surrogate objective is defined as follows:

| (11) |

where is the advantage function for agent with respect to the joint policy . Let , the policy improvement bound for two joint policies and is:

| (12) |

where and are the expected returns for joint policies and , respectively and the constant . Thus, any agent that updates its policy in a way that positively impacts the above summation is guaranteed to increase the joint policy performance [8].

HATRPO/HAPPO are derived from the aforementioned bounds and update each agent’s policy sequentially, rather than updating the entire joint policy at once. The sequential update follows the policy improvement bound (12), and each agent has a distinct optimization objective that takes into account the previous agents’ updates. The TRPO/PPO update rule is utilized to optimize each agent’s policy during the sequential update.

4 Heterogeneous Agent Mirror Descent Policy Optimization

In the fully cooperative MARL setting, we consider agents that behave independently and have distinct policies, making them heterogeneous agents. This characteristic allows us to directly apply the MDPO algorithm to MARL using the trust-region learning framework proposed by 8. Thus, we present a mirror descent objective to update the policies of these heterogeneous agents. The optimization problem for finding the optimal joint policy is defined as follows:

| (13) |

where represents the joint policy and denotes the set of all possible joint policies. To solve this optimization problem and extend the mirror descent update rule to MARL, we can employ the joint advantage function within the HAMDPO agent objective function, which is based on trust-region theory in MARL [8]. Let and be joint policies. The on-policy version of MDPO for updating the joint policy is derived as follows:

| (14) |

The update rule (14) consists of two terms: the expected joint advantage function, which quantifies the performance improvement of the joint policy compared to the old policy, and the KL-divergence, which promotes the joint policy to stay close to the old policy to ensure stable learning. To accommodate heterogeneous agents using the CTDE approach, we can decompose both terms and derive an update rule by aggregating the local advantages and KL terms of the agents. This allows us to update the joint policy by taking into account the individual contributions of each agent.

To decompose the joint advantage function, we utilize the joint advantage decomposition lemma in equation (10). Additionally, for the KL-divergence term of two joint policies, following a similar approach to 8, let and be joint policies. Then, for any state , we obtain the following expression:

| (15) |

Now, we have decomposed the terms of the MD update rule to derive the decomposition of the joint policy update rule for . The update rule is as follows:

| (16) |

Based on the above summation, the agents’ policies can be updated sequentially. We can formulate the update rule for each agent, e.g., , as follows and employ multiple SGD steps at each iteration to approximately update the agent’s policy:

| (17) |

In the above formula, when updating agent , we consider the updated actions of the previously updated agents, denoted as . This ensures that the joint policy reflects the latest actions of the other agents, enabling the agent to make informed updates based on the updated joint policy. However, recalculating the joint advantage function with previously updated agents can introduce computational overhead. To mitigate this, we can utilize the joint advantage estimator introduced in [8] within our algorithm. This estimator allows us to estimate the joint advantage function for every state as follows:

| (18) |

where represent the policy of agent , and denote the joint policy of agents . We define , where represents the advantage function. By substituting this joint advantage estimator into Equation (17), we derive the HAMDPO loss function, which is as follows:

| (19) |

At each iteration, we can apply the MD-style update rule for an arbitrary permutation of agents, such as drawing a shuffled permutation. In summary, the HAMDPO process we have introduced can be outlined using Algorithm 1. This algorithm provides a step-by-step description of the HAMDPO method.

Our HAMDPO algorithm is similar to CPI, but it utilizes MD theory to replace the computation of maximum KL with mean KL, making it a more practical approach. Additionally, the step-size of HAMDPO is based on MD theory, leading to faster learning. HAMDPO also has connections to HATRPO and HAPPO algorithms.

Compared to HATRPO, HAMDPO does not explicitly enforce the trust-region constraint. Instead, it approximately satisfies it by performing multiple steps of SGD on the objective function of the optimization problem in the MD-style update rule. HAMDPO uses simple SGD instead of natural gradient, which eliminates the need to deal with computational overhead. In addition, the direction of KL in HAMDPO is consistent with that in the MD update rule in convex optimization, while it differs from that in HATRPO. Finally, HAMDPO employs a simple schedule motivated by the theory of MD, whereas HATRPO uses multiple heuristics to define the step-size and reduce it in case the trust-region constraint is violated.

HAMDPO and HAPPO both take multiple steps of stochastic gradient descent on unconstrained optimization problems, but they differ in how they handle the trust-region constraint. Recent studies, including 3, suggest that most of the performance improvements observed in PPO are due to code-level optimization techniques. These studies also indicate that PPO’s clipping technique does not prevent policy ratios from going out of bounds; it only reduces their probability. Consequently, despite using clipping, PPO does not always ensure that the trust-region constraint is satisfied.

5 Experiments

This section presents an evaluation of our proposed algorithm, HAMDPO, on several tasks from the Multi-Agent Mujoco continuous benchmark and a map from StarCraftII. We compare the results obtained from HAMDPO with those obtained from HATRPO and HAPPO. The Multi-Agent Mujoco benchmark consists of a variety of robotic control tasks with continuous state-action space, where multiple agents collaborate to solve a task as distinct parts of a single robot. In contrast, StarCraftII has a discrete action space and is a game where each learning agent controls a specific army unit while the built-in AI controls the enemy units.

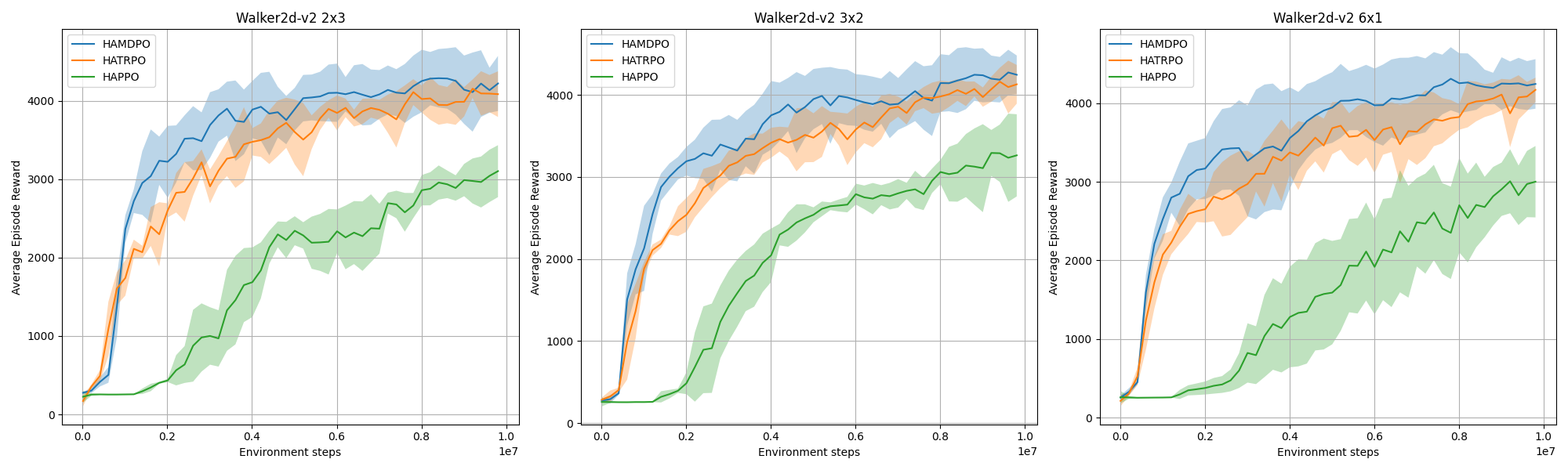

To measure the performance of our algorithm, we plot the average episode reward for multiple tasks of Multi-Agent Mujoco in Figures 2, 3, and 4 , and the mean evaluation winning rate of StarCraftII with a confidence interval across five different runs in Figure 5 over time-steps111The code is available at https://github.com/mehdinasiri/Mirror-Descent-in-MARL. The results demonstrate that HAMDPO outperforms both HATRPO and HAPPO in both continuous and discrete benchmarks.

Additionally, we examined the impact of the number of SGD steps per iteration on the Ant-v2 2x4 task, as presented in Figure 1. Our findings reveal that vanilla policy gradient is obtained with one SGD step, whereas the regularization term becomes more relevant with more steps. We conducted HAMDPO with ten SGD steps for all the experiments. However, the outcomes depicted in Figure 1 suggest that significant performance can still be achieved with fewer steps. Nonetheless, it is crucial to acknowledge that using more steps to optimize will increase the duration of the optimization process.

6 Conclusion

In conclusion, this study introduced the HAMDPO algorithm, which is an innovative on-policy trust-region algorithm for cooperative MARL based on the theory of Mirror Descent and a theoretically-justified trust region learning framework in MARL. HAMDPO addresses challenges that arise in cooperative MARL settings to find optimal policies where agents have varying abilities and individual policies.

The HAMDPO algorithm updates agent policies iteratively by solving trust-region optimization problems that ensure stability and improve convergence rates. However, instead of solving these problems directly, the policies are updated by taking multiple gradient steps on the objective function of these problems. The experimental results demonstrate that HAMDPO outperforms state-of-the-art algorithms such as HATRPO and HAPPO.

Looking ahead, there are several potential future directions for this research. One direction is to develop an off-policy version of the HAMDPO algorithm in MARL. Additionally, further research could explore the potential of HAMDPO in large-scale scenarios with a large number of agents. Furthermore, it would be interesting to investigate the potential of Mirror Descent in other areas of MARL, such as in the context of competitive multi-agent settings.

References

- \bibcommenthead

- Beck and Teboulle [2003] Beck A, Teboulle M (2003) Mirror descent and nonlinear projected subgradient methods for convex optimization. Operations Research Letters 31(3):167–175

- Boubin et al [2021] Boubin J, Burley C, Han P, et al (2021) Programming and deployment of autonomous swarms using multi-agent reinforcement learning. arXiv preprint arXiv:210510605

- Engstrom et al [2019] Engstrom L, Andrew I, Shibani S, et al (2019) Implementation matters in deep rl: A case study on ppo and trpo. In: International Conference on Learning Representations

- Foerster et al [2018] Foerster J, Farquhar G, Afouras T, et al (2018) Counterfactual multi-agent policy gradients. In: Proceedings of the AAAI conference on artificial intelligence

- Geist et al [2019] Geist M, Scherrer B, Pietquin O (2019) A theory of regularized markov decision processes. In: International Conference on Machine Learning, PMLR, pp 2160–2169

- Gupta et al [2017] Gupta JK, Egorov M, Kochenderfer M (2017) Cooperative multi-agent control using deep reinforcement learning. In: International conference on autonomous agents and multiagent systems, Springer, pp 66–83

- Kakade and Langford [2002] Kakade S, Langford J (2002) Approximately optimal approximate reinforcement learning. In: In Proc. 19th International Conference on Machine Learning, Citeseer

- Kuba et al [2022] Kuba J, Chen R, Wen M, et al (2022) Trust region policy optimisation in multi-agent reinforcement learning. In: ICLR 2022-10th International Conference on Learning Representations, The International Conference on Learning Representations (ICLR), p 1046

- Littman [1994] Littman ML (1994) Markov games as a framework for multi-agent reinforcement learning. In: Machine learning proceedings 1994. Elsevier, p 157–163

- Lowe et al [2017] Lowe R, Wu YI, Tamar A, et al (2017) Multi-agent actor-critic for mixed cooperative-competitive environments. Advances in neural information processing systems 30

- Mnih et al [2016] Mnih V, Badia AP, Mirza M, et al (2016) Asynchronous methods for deep reinforcement learning. In: International conference on machine learning, PMLR, pp 1928–1937

- Oroojlooy and Hajinezhad [2023] Oroojlooy A, Hajinezhad D (2023) A review of cooperative multi-agent deep reinforcement learning. Applied Intelligence 53(11):13677–13722

- Rashid et al [2018] Rashid T, Samvelyan M, Schroeder C, et al (2018) Qmix: Monotonic value function factorisation for deep multi-agent reinforcement learning. In: International Conference on Machine Learning, PMLR, pp 4295–4304

- Roesch et al [2020] Roesch M, Linder C, Zimmermann R, et al (2020) Smart grid for industry using multi-agent reinforcement learning. Applied Sciences 10(19):6900

- Schulman et al [2015] Schulman J, Levine S, Abbeel P, et al (2015) Trust region policy optimization. In: International conference on machine learning, PMLR, pp 1889–1897

- Schulman et al [2017] Schulman J, Wolski F, Dhariwal P, et al (2017) Proximal policy optimization algorithms. arXiv preprint arXiv:170706347

- Shani et al [2020] Shani L, Efroni Y, Mannor S (2020) Adaptive trust region policy optimization: Global convergence and faster rates for regularized mdps. In: Proceedings of the AAAI Conference on Artificial Intelligence, pp 5668–5675

- Sunehag et al [2017] Sunehag P, Lever G, Gruslys A, et al (2017) Value-decomposition networks for cooperative multi-agent learning. arXiv preprint arXiv:170605296

- Sutton and Barto [2018] Sutton RS, Barto AG (2018) Reinforcement learning: An introduction

- Sutton et al [1999] Sutton RS, McAllester D, Singh S, et al (1999) Policy gradient methods for reinforcement learning with function approximation. Advances in neural information processing systems 12

- Tan [1993] Tan M (1993) Multi-agent reinforcement learning: Independent versus cooperative agents. In: ICML

- Tomar et al [2020] Tomar M, Shani L, Efroni Y, et al (2020) Mirror descent policy optimization. arXiv preprint arXiv:200509814

- Wang et al [2020a] Wang X, Ke L, Qiao Z, et al (2020a) Large-scale traffic signal control using a novel multiagent reinforcement learning. IEEE transactions on cybernetics 51(1):174–187

- Wang et al [2020b] Wang Y, He H, Tan X (2020b) Truly proximal policy optimization. In: Uncertainty in Artificial Intelligence, PMLR, pp 113–122

- de Witt et al [2020] de Witt CS, Gupta T, Makoviichuk D, et al (2020) Is independent learning all you need in the starcraft multi-agent challenge? arXiv preprint arXiv:201109533

- Yu et al [2021] Yu C, Velu A, Vinitsky E, et al (2021) The surprising effectiveness of ppo in cooperative, multi-agent games. arXiv preprint arXiv:210301955

- Zhou et al [2020] Zhou M, Luo J, Villella J, et al (2020) Smarts: Scalable multi-agent reinforcement learning training school for autonomous driving. arXiv preprint arXiv:201009776