Heterogeneous Hypergraph Embedding for Graph Classification

Abstract.

Recently, graph neural networks have been widely used for network embedding because of their prominent performance in pairwise relationship learning. In the real world, a more natural and common situation is the coexistence of pairwise relationships and complex non-pairwise relationships, which is, however, rarely studied. In light of this, we propose a graph neural network-based representation learning framework for heterogeneous hypergraphs, an extension of conventional graphs, which can well characterize multiple non-pairwise relations. Our framework first projects the heterogeneous hypergraph into a series of snapshots and then we take the Wavelet basis to perform localized hypergraph convolution. Since the Wavelet basis is usually much sparser than the Fourier basis, we develop an efficient polynomial approximation to the basis to replace the time-consuming Laplacian decomposition. Extensive evaluations have been conducted and the experimental results show the superiority of our method. In addition to the standard tasks of network embedding evaluation such as node classification, we also apply our method to the task of spammers detection and the superior performance of our framework shows that relationships beyond pairwise are also advantageous in the spammer detection.

To make our experiment repeatable, source codes and related datasets are available at https://xiangguosun.mystrikingly.com.

1. Introduction

Recently, Graph Neural Networks (GNNs) have attracted significant attention because of their prominent performance in various machine learning applications (Yin et al., 2019, 2017; Chen et al., 2020a). Most of these methods focus on the pairwise relationships between objects in the constructed graphs.In many real-world scenarios, however, relationships among objects are not dyadic (pairwise) but rather triadic, tetradic, or higher. Squeezing the high-order relations into pairwise ones leads to information loss and impedes expressiveness.

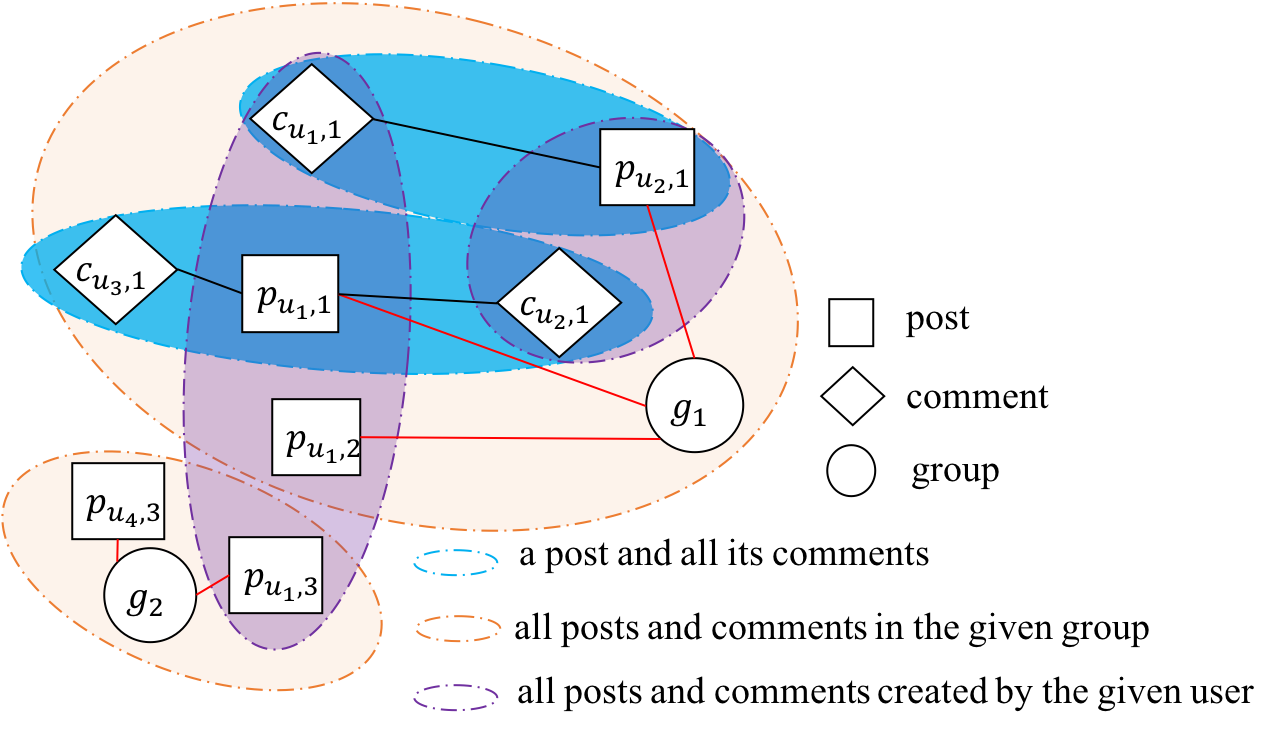

To overcome this limitation, hypergraph (Zhou et al., 2007) has been recently proposed and achieved remarkable improvments(Bretto, 2013). Hypergraphs allow one hyperedge to connect multiple nodes simultaneously so that interactions beyond pairwise relations among nodes can be easily represented and modelled. Figure 1 is an example of heterogeneous hypergraph reflected in online social forums. Specifically, are two different social groups, denotes the i-th post created by user , and is the i-th comment created by user . There exist both pairwise relationships and more complex relationships.

Despite the potentials of hypergraphs, only a few works shift attentions to representation learning on hypergraphs. Earlier works, (Zhang et al., 2018; Zhao et al., 2018; Zheng et al., 2018) mostly design a regularizer to integrate hypergraphs into specific applications, which are domain-oriented and hard to be generalized to other domains. Recently, some researches (Feng et al., 2019; Jiang et al., 2019) try to design more universal learning models on hypergraphs. For example, Yadati et al. (Yadati et al., 2019) transform a hypergraph into simple graphs and then use convolutional neural networks for simple graphs to learn node embeddings. Tu et al. (Tu et al., 2018) learn the embeddings of a hypergraph to preserve the first-order and the second-order proximities. Zhang et al. (Zhang et al., [n.d.]) take the analogy with natural language processing and learn node embeddings by predicting hyperedges. However, they mostly focus on the same type of entities, or apply the concept of heterogeneous simple graphs directly to hypergraphs. But there are key differences between heterogeneous simple graphs and heterogeneous hypergraphs. Even for those homogeneous simple graphs like Figure 2, the same type nodes may also be connected according to different semantics that are represented by different types of hyperedges, making the hypergraph heterogeneous (challenge 1).

Recently, graph neural networks have show great power on graph learning, traditional GNN based methods take the assumption that information should be aggregated via point-to-point channel iteratively because links in simple graphs are pairwise. As shown in Figure 2, messages can be directly aggregated from one-hop neighbors in the simple graph. However, message diffusion is more complex on hypergraphs. It should be first aggregated within the same hyperedge, and then aggregated over all hyperedges connecting to the target node. This difference makes traditional GNN-based methods unfit for hypergraphs (challenge 2).

To address challenge 1, we first extract simple graph snapshots with different meta-path, then we construct several hypergraph snapshots on these simple graphs according to hyperedge types. After the decomposition, each snapshot is homogeneous, and they can also be easily calculated in parallel, making the model scalable to large datasets. To address challenge 2, we design a hypergraph convolution by replacing the Fourier basis with a wavelet basis. Compared with methods in the vertex domain, this spectral method does not need to consider the complex message passing pattern in hypergraphs and can also perform localized convolution. Since the Wavelet basis is much sparser than the Fourier basis, it can be efficiently approximated by polynomials without Laplacian decomposition. In summary, the main contributions of this paper are as follows:

-

•

We focus on the heterogeneity of hypergraphs, and address the problem via simple graph snapshots and hypergraph snapshots according to different meta-paths and hyperedge types respectively.

-

•

We propose a novel heterogeneous hypergraph neural network to perform representation learning on heterogeneous hypergraphs. To avoid the time-consuming Laplacian decomposition, we introduce a polynomial approximation-based Wavelet basis to replace the traditional Fourier basis. To the best of our knowledge, we are the first paper to introduce wavelets in hypergraph learning.

-

•

Extensive evaluations have been conducted, and the experimental results on three datasets demonstrate the significant improvement of our model over six state-of-the-art methods. Even in a sparsely labeled situation, our method still keeps ahead. We also evaluate the performance of our model in the task of spammer detection, and it produces much higher accuracy than three competitive baselines, which further demonstrates the superiority of hypergraph learning.

2. Preliminary and Problem Formulation

In this section, we first introduce some necessary definitions and notations, and then formulate the problem of heterogenous hypergraph embedding.

Definition 1.

(Simple Graph Snapshots). According to the selected meta-paths, we can extract the corresponding subgraphs from the original heterogeneous simple graph. Take Figure 3a as an example, we represent the social network with users (U), and departments (D) as nodes, where edges represent friendships (U-U), and affiliation (U-D) relationships. We extract paths according to meta-path U-U and meta-path U-D, then we can generate two subgraphs as two snapshots for the simple graph.

Definition 2.

(Heterogeneous Hypergraph). A heterogeneous hypergraph can be defined as . Here is a set of vertices, and is the vertex type set. is a set of hyperedges, and is the set of hyperedge types. When , the hypergraph is heterogeneous. Hypergraphs allow more than two nodes to be connected by a hyperedge. For any hyperedge , it can be denoted as . We use a positive diagonal matrix to denote the hyperedge weights. The relationship between nodes and hyperedges can be represented by an incidence matrix with entries defined as:

Let and denote the diagonal matrices containing the vertex and hyperedge degrees respectively, where and . Let , then the hypergraph Laplacian is .

Definition 3.

(Hypergraph Snapshots). A snapshot of the hypergraph is a subgraph which can be defined as . Here and are the subsets of and respectively. Different from simple graph snapshots, hypergraph snapshots are generated according to hyperedge types, which means all the hyperedges in should belong to the same hyperedge type. As shown in Figure 3b, for example, there are three kinds of hyperedges in the original hypergraphs, and each hypergraph snapshot contains one type of hyeredges.

Problem 1.

(Heterogenous Hypergraph Embedding for Graph Classification). Given a heterogeneous hypergraph , we aim to learn its representation , where each row of this matrix represents the embedding of each node. This representation can be used for downstream predictive applications such as nodes classification.

3. Heterogeneous Hypergraph Embedding

The overview of our heterogeneous hypergraph embedding framework is shown in Figure 4. The input is a simple graph. If the simple graph is heterogenous, we first extract simple graph snapshots with different meta-paths. Afterwards, we construct hypergraphs on these simple graphs and then decompose them into multiple hypergraph snapshots. We use our developed Hypergraph Wavelet Neural Network (HWNN) to learn node embeddings in each snapshot and then aggregates these snapshots into a comprehensive representation for the downstream classification.

3.1. HWNN: Hypergraph Wavelet Neural Networks

For each vertex , we first lookup its initial vector representation, , via a global embedding matrix and then project it into sub-spaces of different types of hyperedges. The representation of vertex in the hyperedge-specific space with hyperedge type is computed as:

| (1) |

where is the hyperedge-specific projection matrix of .

3.1.1. Hypergraph convolution via Fourier basis

For each snapshot extracted from the original heterogeneous hypergraph, the laplacian matrix is computed as , where . Let . where is the index of elements in , , then . According to (Donnat et al., 2018), the hypergraph Laplacian is a positive semi-definite matrix, it can be diagonalized as:

where is the Fourier basis, which contains the complete set of orthonormal eigenvectors ordered by its non-negative eigenvalues . According to the convolution theorem, the convolution operation of and a filter can be written as the Fourier inverse transform after the element-wise Hadamard product of their Fourier transforms:

| (2) |

Here is the Fourier transform of the filter, and .

However, the above operation has the following two major issues. First, it is not localized in the vertex domain (Xu et al., 2019), which cannot fully empower the convolutional operation. Secondly, eigenvectors are explicitly used in convolutions, requiring the eigen-decomposition of the Laplacian matrix for each snapshot in . To address these issues, we propose to replace the Fourier basis with a Wavelet basis.

The rationale of choosing wavelet basis instead of original Fourier basis is as follows. First of all, the Wavelet basis is much sparser than the Fourier basis and most suitable for modern GPU architectures for efficient training (Neelima and Raghavendra, 2012). Moreover, by the nature of wavelet basis, the efficient polynomial approximation can be achieved more easily. Based on this feature, we thus able to further propose a polynomial approximate to the graph wavelets so that the eigen-decomposition of the Laplacian matrix is not needed anymore. Last but not least wavelets represent information diffusion process, which are very suitable for implementation of localized convolutions in the vertex domain, which has been theoretically proved and empirically validated in the recent studies (Xu et al., 2019; Donnat et al., 2018). Next, we introduce the details of altering this basis.

3.1.2. Hypergraph convolution based on wavelets

With the above discussion, let be a set of wavelets with scaling parameter . Here is the heat kernel matrix, and are eigenvalues of hypergraph laplacian . Then, the hypergraph convolution based on the Wavelet basis can be obtained from Equation (2) after replacing the Fourier basis with :

| (3) |

where is the spectral transform of the filter, and . In the following, we further introduce the Stone-Weierstrass theorem (Donnat et al., 2018) to approximate graph wavelets without requiring the eigen-decomposition of the Laplacian matrix, making our method much more efficient.

3.1.3. Stone-Weierstrass theorem and polynomial approximation

Note that Equation (3) still needs the eigen-decomposition of the hypergraph Laplacian matrix. As the wavelet matrix is much sparser than Fourier basis, we can easily achieve an efficient polynomial approximation according to the Stone-Weierstrass theorem (Donnat et al., 2018), which states that the heat kernel matrix restricted to can be approximated by:

| (4) |

where is the polynomial order. contains the eigenvalues of hypergraph Laplacian , and is the residual where each entry has an upper bound:

| (5) |

Then, the graph wavelet is polynomially approximated by:

| (6) |

Since can be seen as a first-order polynomial of , Equation (6) can be rewritten as:

| (7) |

Obviously, we can replace in with so that can be simultaneously obtained. However, Equation (7) places the parameter into the residual item, which can be ignored if we take as a small value. Therefore, we use a set of parallel parameters to approximate as:

| (8) |

With the above transform, Equation (3) can be deduced as:

| (9) |

When we have a hypergraph signal with nodes and dimensional features, our hyperedge convolution neural networks can be formulated as:

| (10) |

where is a diagonal filter matrix, and is the input of the m-th convolution layer. We can further reduce the number of filters by detaching the feature transform from the convolution, and Equation (10) can be simplified as:

| (11) |

Where is a feature project matrix. Let be the output of the last layer , then for all snapshots of , we have graph representations as:

| (12) |

Here is the concatenation operation, and is the concatenation of . Finally, the representation of the heterogeneous hypergraph can be calculated by the summation over all its snapshots as:

| (13) |

where is a multilayer perceptron, and . In the task of node classification, should be equal to the number of classes. The loss function can be combined with the cross-entropy error over all labeled examples and the regularizer on projection matrices:

| (14) |

where is the set of labeled nodes, is the label value of node in terms of the category . If node belongs to category , , otherwise, 0. is a trade-off parameter of the regularizer. Here, we follow (Zhao et al., 2018) and use the trace of as the regular term, which can be also replaced by the regularization.

3.2. Model Analysis and Discussion

In this section, we provide an analytical discussion about our model from multiple perspectives to show its advantages.

3.2.1. plays a role like an adjacency matrix

Our method can achieve more profound learning results because we leverage the power of for higher-order relations in hypergraphs, and can be treated as an adjacency matrix of the hypergraph.

As previously mentioned, we use to denote the presence of nodes in different hyperedges. If , we have , and otherwise, the corresponding entries are set as . In nature, indicates the relations between nodes and hyperedges, then we can use to describe connections between nodes. A normalized version can be written like: . In order to remove self-loops in hypergraphs, we change the above formula by:

| (15) |

Then a normalized version of Equation (15) can be rewritten by:

| (16) |

From the above formula, we can find has the similar meaning as the adjacency matrix.

3.2.2. Higher-order relations for hypergraphs

In order to elaborate on the advantages of our method, we first introduce a prior work (Chen et al., 2020b) on hypergraph neutral network, and then we discuss the relationships between our work and prior work. A simplified hypergraph convolution can be generated via extending simple graph convolution to the hypergraph. Recall that the typical GCN framework in simple graphs is defined as:

| (17) |

Here contains all nodes’ degree of the graph. is the adjacency matrix. Following Observation 2, we can effectively model hypergraph convolution in a similar way:

| (18) |

Here is the signal at the -th layer, and is a feature projection matrix. The traditional convolutional neural network for simple graphs is a special case of this work because the Laplacian can be degenerated as the simple graph Laplacian.

When the filter in Equation (11) is initialized from value , it is close to an identity matrix . Let and for Equation (7) and Equation (8) respectively, then Equation (11) degenerates to Equation (18). That means we employ the polynomial of to extend prior works based on the hypergraph theory only, and this extension makes our method more profound for node representation learning. Since actually serves a similar role as an adjacency matrix, the power of can learn higher-order relations for hypergraphs. Furthermore, the filter improves the performance in one more step via suppressing trivial components and magnifying rewarding components.

The complexity of Equation (11) is where is the number of nodes, and are input dimensions and output dimensions respectively. Inspired by the formula (18) and (11), the complexity of our model can be further reduced to with a simplified version:

| (19) |

where is the polynomial approximation order, is feature transformation matrix.

4. Experimental Results and Analysis

In this section, we introduce related setup for our experiment and discuss the evaluation restuls.

4.1. Experimental Setting

4.1.1. Datasets

-

•

Pubmed111 https://github.com/jcatw/scnn/tree/master/scnn/data/Pubmed-Diabetes : The Pubmed dataset (Sen et al., 2008) contains 19, 717 academic publications with 500 features. These publications are treated as nodes and their citation relationships are treated as 44,338 links. Each node falls into one of three classes (three kinds of Diabetes Mellitus).

-

•

Cora222https://relational.fit.cvut.cz/dataset/CORA : The Cora dataset (McCallum et al., 2000) contains 2,708 published papers in the area of machine learning, which are divided into 7 categories (case based, genetic algorithms, neural networks, probabilistic methods, reinforcement learning, rule learning, and theory). There are 5,429 citation links in total. The paper nodes have 1,443 features.

-

•

DBLP333http://web.cs.ucla.edu/ yzsun/Publications.html: The DBLP dataset (Sun et al., 2011) is an academic network from four research areas. There are 14,475 authors, 14,376 papers, and 20 conferences, among which 4,057 authors, 20 conference and 100 papers are labeled with one of the four research areas (database, data mining, machine learning, and information retrieval). We use this dataset to predict the research areas of authors.

Note that the above four datasets cover all kinds of hypergraph heterogeneities we have mentioned: homogeneous simple graph with heterogeneous hypergraphs (Pubmed and Cora), and heterogeneous simple graph with heterogeneous hypergraphs (DBLP).

4.1.2. Baselines

We choose six state-of-the-art graph and hypergraph embedding methods as baselines:

-

•

Hypergraph Embedding Methods

-

–

HyperEmbedding (Zhou et al., 2007). This method selects the top- eigenvectors derived from the Laplacian matrix of the hypergraph as the representation of the hypergraph.

-

–

HGNN (Feng et al., 2019). It is a convolution network for hypergraphs based on the Fourier basis. The reason why we choose it as our baseline is that their reported performance exceeds a variety of hot methods such as GCN (Kipf and Welling, 2016), Chebyshev-GCN (Defferrard et al., 2016), and Deepwalk (Perozzi et al., 2014).

-

–

-

•

Simple Embedding Methods

-

–

PME (Chen et al., 2018). It is a heterogenous graph embedding model based on the metric learning to capture both first-order and second-order proximities in a unified way. It learns embeddings by firstly projecting vertices from object space to corresponding relation space and then calculates the proximity between projected vertices.

- –

-

–

metapath2vec (Dong et al., [n.d.]). It extends the Deepwalk model to learn representations on heterogeneous networks through meta path guided random walks.

- –

-

–

| Recall (%) | F1 (%) | |||||

|---|---|---|---|---|---|---|

| Methods | Pubmed | Cora | DBLP | Pubmed | Cora | DBLP |

| HWNN | 85.59 | 88.52 | 90.03 | 85.15 | 88.60 | 91.45 |

| GWNN | 81.02 | 85.12 | 87.23 | 80.93 | 85.04 | 87.20 |

| HyperEmbedding | 59.27 | 54.01 | 54.22 | 60.31 | 57.84 | 54.59 |

| HGNN | 82.32 | 82.87 | 86.34 | 82.08 | 84.09 | 85.47 |

| PME | 55.17 | 76.49 | 82.75 | 55.06 | 75.94 | 78.42 |

| HHNE | 76.86 | 70.74 | 86.66 | 79.94 | 69.95 | 86.03 |

| metapath2vec | 74.48 | 66.68 | 82.22 | 74.97 | 68.13 | 82.20 |

| random guess | 34.67 | 14.47 | 34.79 | 30.48 | 10.33 | 32.75 |

| Accuracy (%) | Precision (%) | |||||

| Methods | Pubmed | Cora | DBLP | Pubmed | Cora | DBLP |

| HWNN | 86.48 | 89.73 | 93.41 | 84.78 | 89.12 | 93.61 |

| GWNN | 82.51 | 85.82 | 90.11 | 80.88 | 85.20 | 90.50 |

| HyperEmbedding | 67.49 | 64.55 | 74.73 | 78.32 | 79.35 | 87.63 |

| HGNN | 83.51 | 85.82 | 89.01 | 82.19 | 85.95 | 86.13 |

| PME | 59.21 | 77.25 | 80.22 | 55.40 | 75.86 | 76.07 |

| HHNE | 81.32 | 71.64 | 83.24 | 81.32 | 75.95 | 87.32 |

| metapath2vec | 76.86 | 70.31 | 86.81 | 75.96 | 70.72 | 87.36 |

| random guess | 42.45 | 31.09 | 48.35 | 37.55 | 11.58 | 41.59 |

4.1.3. Parameter Settings

The optimal hyper-parameters in all the models are selected by the grid search method on the validation set. Our model achieves its best performance with the following setup. There are two convolution layers. The activation function of the first layer is ReLU, and the second layer is Softmax. The feature dimension of the hidden layer is set as 64, with a drop rate of 0.5. We use Adam optimizer with learning rate 0.001, regularization coefficient 0.0001, and 400 epochs. For PME, metapath2vec, and HHNE, we choose three meta-paths on DBLP dataset: P-C (paper-conference), P-A (paper-author), and A-P-A. (paper-conference-author). Other datasets have homogeneous simple graphs so we just use V-V (vertex-vertex) as the meta-path. For PME, we set , and the number of negative samples for each positive edge is set . The initial nodes features are generated from nodes’ attributes provided by these datasets.

4.1.4. Hypergraph Construction

We define the following four types of hyperedges to construct the corresponding heterogeneous hypergraphs for the datasets:

-

•

Neighbor-based hyperedges. We first obtain each node’s -hop neighbors and connect them in one hyperedge. is set as 3 in our experiments. All these hyperedges belong to the same hyperedge type if the simple graph is homogeneous. If the simple graph is heterogeneous, a node has different types of neighbors following different meta-paths, and thus we can generate different types of hyperedges.

-

•

Attribute-based hyperedges. Let denote all the discrete attributes. For each attribute , it has values. Then, we build a type of hyperedges for each attribute , and each hyperedge connects the nodes sharing the same attribute value. So there are hyperedges generated for attribute . Each hyperedge type is treated as a hypergraph snapshot, and we finally generate hypergraph snapshots accordingly.

-

•

Cluster-based hyperedges. Given each node’s feature vectors, we first calculate the cosine similarity between each pair of nodes and obtain several clusters by K-means. Then each cluster serves as a hyperedge. All the hyperedges belong to the same hyperedge type.

-

•

Community-based hyperedges. We use the Louvain algorithm (Blondel et al., 2008) to discover communities in the corresponding simple graph via modularity maximization. Then each community is treated as a hyperedge. All these hyperedges belong to the same hyperedge type.

4.2. Overall Performance in Node Classification

The comparison results in terms of F1, Recall, Accuracy, and Precision are reported in Table 1, which show that our solution consistently outperforms all baselines on all the datasets. Note that the differences between our solution and the other comparison methods are statistically significant (). Specifically, on the Pubmed dataset, our method exceeds the baselines at least 4.0% in Recall, 3.7% in F1, 3.6% in Accuracy, and 3.2% in Precision. On the Cora dataset, our solution also outperforms all baselines, and the relative improvements are 4.0% (Recall), 4.2% (F1), 4.6% (Accuracy), and 3.7% (Precision). On the DBLP dataset, the relative improvements achieved by our model are at least 3.2% (Recall), 4.9% (F1), 3.7% (Accuracy), and 3.4% (Precision).

| Recall (%) | F1 (%) | Accuracy (%) | Precision (%) | |

|---|---|---|---|---|

| K=1 | 70.77 | 72.53 | 74.30 | 84.46 |

| K=2 | 86.75 | 87.53 | 89.59 | 88.71 |

| K=3 | 86.17 | 87.84 | 89.07 | 88.68 |

| K= | 86.44 | 87.10 | 89.37 | 88.54 |

-

We use K= to denote the case without approximation.

Impact of Label Sparsity. We further study the impact of the label sparsity (i.e., label ratio) by varying the ratio of labeled nodes from 10% to 90%. We only report the results on the Cora dataset and similar comparison results/trends are also observed on the other datasets. Figure 5 shows the comparison results on the Cora dataset, from which we can observe that even in a lower label ratio, our method still outperforms the baselines, which is particularly useful because most real world datasets are sparsely labeled.

4.3. Analysis of the Polynomial Approximation to the Wavelet Basis

In this section, we study the impact of the polynomial approximation order on the classification performance. Since the Wavelet basis is much sparser than the Fourier basis, we develop an efficient polynomial approximation method to approximate it. In this experiment, we set different orders of the polynomial approximation to the Wavelet basis and report their performance in Table 2. When increases from 1 to 2, the performance of our model climb significantly. However, when increases from 2 to 3, the performance has become steady and is very close to the original Wavelet basis without any approximation, which indicates that our model training can be super-efficiently done without any performance compromise. Please note that when , both and in Equation (11) are set as 2 orders.

4.4. Impact of Hypergraph Construction

As we propose four general hypergraph construction methods which do not depend on domain knowledge, we study the performance of each type of constructed hypergraphs and their jointly formed heterogeneous hypergraph in this experiment. Figure 6 shows the nodes classification results on Cora dataset, and we observe that the best performance is achieved on the heterogeneous hypergraph, showing the advantage of exploiting heterogeneous hypergraphs over the homogeneous hypergraphs. Another that the community-based hypergraph has only 28 hyperedges but achieve comparable performance with neighbor-based snapshot (1000 hyperedes). This indicates the potential superiority of the hypergraph-based method when dealing with large-scale networks because the number of hyperedges is much smaller than the numbers of nodes and edges on the simple graphs.

4.5. Training Efficiency

Impact of snapshot number. In order to evaluate the training efficiency of our model, we first study the convergence rate of our model training with different number of hypergraph snapshots. As shown in Figure 7, our model converges faster and the training loss becomes lower when it processes more snapshots. That means more available snapshots are helpful for our model to fast learn high-quality node embedding, thus accelerating the model convergence.

Efficiency of the polynomial approximations. In order to evaluate computation time of our model and Fourier-based model on the experiment datasets. We record the model training time in 100 epoch and report the results in Table 3. Our model shows efficient training performance. Here we let the order of polynomial approximation equal to 4, which means both and in Equation (11) are set as 4 orders. The simplified version has much faster speed, and considering hypergraph snapshots can be assembled flexibly, we can leverage distributed computation for even larger datasets.

| Cora | Pumbed | DBLP | |

|---|---|---|---|

| Our method | 0.91 | 433.14 | 526.83 |

| Fourier basis method | 1.41 | 632.76 | 764.73 |

| speed-up ratio | 1.55 | 1.46 | 1.45 |

4.6. Case Study: Online Spammer Detection

Online spammer detection is an important research area. Previous researches (Liu et al., 2016; Wu et al., 2015; Liu et al., 2020) usually take pairwise relationships into considerations, ignoring non-pairwise relationships, and thus their performance can be further improved by applying our method here. In this section, we apply our method into spammer detection on the Spammer dataset from Liu et al. (Liu et al., 2020). The dataset consists of 14, 774 users in Sina platform444https://www.sina.com.cn, and 3, 883 users are labeled as spammer or normal users. Spammers take up to 23.3% of the total labeled users. We construct an adjacency matrix according to the following edges and each row serves as the first part of the feature vector. The remaining features come from the feature collection calculated by (Liu et al., 2020), including folllowee/follower number, lifetime, mutual friends, number of tweets and so on. All these features are concatenated and then fed into an autoencoder to generate a feature matrix with 100 dimensions. We construct the hypergraph snapshots according to the second part of features, the similarity of all features, and the topological neighbors.

We compared our method with other famous baselines like S3MCD (Liu et al., 2016), DetectVC (Wu et al., 2015), and CMSCA (Liu et al., 2020). Results are reported in Figure 8, from which we can demonstrate the validity of our method for the spammer detection. Compared with other baselines, HWNN achieved an average of 86.02% in the F1 score, 89.88% in the Precision score, and 76.11% in the Recall score, exceeding to the second by 21.8%, 20.5%, and 6.6% respectively. These results point out a new direction when studying spammer detection, that considering relationships beyond pairwise can further improve the final performance.

5. Related work

In this section, we briefly review simple graph embedding methods and hypergraph embedding methods.

Simple Graph Embedding Methods. Earlier methods for simple graph embedding such as LINE (Tang et al., 2015b), DeepWalk (Perozzi et al., 2014), Node2Vec (Grover and Leskovec, 2016), Metapath2vec (Dong et al., [n.d.]), PTE (Tang et al., 2015a) mainly focus on capturing neighbourhood proximities among vertices. Recently, graph convolutional neural networks have achieved remarkable performance because of their powerful representation ability. Existing methods can fall into two categories, spatial methods and spectral methods. Spatial methods build their frameworks as information propagation and aggregation. Recently, various neighborhood selection methods and aggregation strategies have been proposed. In the work of Niepert et al. (Niepert et al., 2016), the number of neighborhood nodes is fixed according to pairwise proximity. Hamilton et al. (Hamilton et al., 2017) put forward a GraphSAGE method, where neighboring nodes are selected through sampling and then fed into an aggregate function. Petar et al. (Veličković et al., 2018) learn the aggregate function via the self-attention mechanism. Spectral methods formulate graph convolution in the spectral domain via graph Fourier transform (Bruna et al., 2014). To make the method computationally efficient and localized in the vertex domain, Defferrard et al. (Defferrard et al., 2016) parameterize the convolution filter via the Chebyshev expansion of the graph Laplacian. Xu et al. (Xu et al., 2019) further take Wavelet basis instead of the Fourier basis to make the graph convolution more powerful. However, existing graph neural networks only consider pairwise relationships between objects, and they cannot apply to non-pairwise relation learning. Recently, hypergraph methods have been emerging.

Hypergraph Embedding Methods. Some researchers construct a hypergraph to improve the performance of various tasks like video object segmentation (Huang et al., 2009), cancer prediction (Hwang et al., 2008), and multi-modal fusion (Gao et al., 2012; Zhao et al., 2018). Most of them add a hypergraph regularizer into their final objective functions, and the regularizer is usually the same as in the spectral hypergraph partitioning (Zhou et al., 2007), in which the representations of connected nodes should be closer than those without links (Zheng et al., 2018). Unlike the above works, Chu et al. (Chu et al., 2018) learn their hypergraph embeddings through the analogy between hyperedges and text sentences. Then they maximize the log-probability of the centroid generated by its context vertices. In recent years, some works have explored the hypergraph learning via hypergraph neural networks. Feng et al. (Feng et al., 2019) introduced the concept of hypergraph Laplacian, and then proposed a hypergraph version of graph convolution based on simple graph convolution (Defferrard et al., 2016). Jiang et al. (Jiang et al., 2019) propose a hyperedge convolution method so that they learn a dynamic hypergraph. Yadati et al. (Yadati et al., 2019) treat a hyperedge as a new kind of node and then the hypergraph can be changed as a simple graph. Tu et al. (Tu et al., 2018) design a framework for hypergraphs to preserve the first-order proximity and the second-order proximity of hypergraphs.

6. Conclusion

In this paper, we proposed a novel graph neural network for heterogeneous hypergraphs with a Wavelet basis. We further propose a polynomial approximation-based wavelets so that we can avoid the time-consuming Laplacian decomposition. Extensive evaluations have been conduct and experimental results demonstrate the advantage of our method.

7. ACKNOWLEDGMENTS

This work is supported by National Key R&D Program of China (Grant No. 2017YFB1003000, 2019YFC1521403), National Natural Science Foundation of China (Grants No. 61972087, 61772133, 61632008, 61702015, U1936104), National Social Science Foundation of China (Grants No. 19@ZH014), Natural Science Foundation of Jiangsu province (Grants No. SBK2019022870), Jiangsu Provincial Key Laboratory of Network and Information Security (Grant No. BM2003201), Key Laboratory of Computer Network Technology of Jiangsu Province (Grant No. BE2018706), Key Laboratory of Computer Network and Information Integration of Ministry of Education of China (Grant No. 93K-9), China Scholarship Council (CSC), the Fundamental Research Funds for the Central Universities 2020RC25, Australian Research Council (Grant No. DP190101985, DP170103954). The first author Mr. Xiangguo Sun, in particular, wants to thank his parents for their support during his tough period.

References

- (1)

- Blondel et al. (2008) Vincent D Blondel, Jean-Loup Guillaume, Renaud Lambiotte, and Etienne Lefebvre. 2008. Fast unfolding of communities in large networks. Journal of statistical mechanics: theory and experiment 2008, 10 (2008), P10008.

- Bretto (2013) Alain Bretto. 2013. Applications of Hypergraph Theory: A Brief Overview. Springer International Publishing, Heidelberg, 111–116. https://doi.org/10.1007/978-3-319-00080-0_7

- Bruna et al. (2014) Joan Bruna, Wojciech Zaremba, Arthur Szlam, and Yann LeCun. 2014. Spectral networks and locally connected networks on graphs. ICLR (2014).

- Chen et al. (2020a) Hongxu Chen, Hongzhi Yin, Tong Chen, Weiqing Wang, Xue Li, and Xia Hu. 2020a. Social Boosted Recommendation with Folded Bipartite Network Embedding. IEEE Transactions on Knowledge and Data Engineering (2020).

- Chen et al. (2020b) Hongxu Chen, Hongzhi Yin, Xiangguo Sun, Tong Chen, Bogdan Gabrys, and Katarzyna Musial. 2020b. Multi-level Graph Convolutional Networks for Cross-platform Anchor Link Prediction. In KDD20.

- Chen et al. (2018) Hongxu Chen, Hongzhi Yin, Weiqing Wang, Hao Wang, Quoc Viet Hung Nguyen, and Xue Li. 2018. PME: projected metric embedding on heterogeneous networks for link prediction. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. ACM, 1177–1186.

- Chu et al. (2018) Yunfei Chu, Chunyan Feng, and Caili Guo. 2018. Social-Guided Representation Learning for Images via Deep Heterogeneous Hypergraph Embedding. IEEE, ICME.

- Defferrard et al. (2016) Michaël Defferrard, Xavier Bresson, and Pierre Vandergheynst. 2016. Convolutional neural networks on graphs with fast localized spectral filtering. In NeurIPS. 3844–3852.

- Dong et al. ([n.d.]) Yuxiao Dong, Nitesh V Chawla, and Ananthram Swami. [n.d.]. metapath2vec: Scalable representation learning for heterogeneous networks. In SIGKDD17.

- Donnat et al. (2018) Claire Donnat, Marinka Zitnik, David Hallac, and Jure Leskovec. 2018. Learning structural node embeddings via diffusion wavelets. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. ACM, 1320–1329.

- Feng et al. (2019) Yifan Feng, Haoxuan You, Zizhao Zhang, Rongrong Ji, and Yue Gao. 2019. Hypergraph neural networks. In AAAI. 3558–3565.

- Gao et al. (2012) Yue Gao, Meng Wang, Zheng-Jun Zha, Jialie Shen, Xuelong Li, and Xindong Wu. 2012. Visual-textual joint relevance learning for tag-based social image search. TIP 22, 1 (2012), 363–376.

- Grover and Leskovec (2016) Aditya Grover and Jure Leskovec. 2016. node2vec: Scalable feature learning for networks. In KDD. ACM.

- Hamilton et al. (2017) Will Hamilton, Zhitao Ying, and Jure Leskovec. 2017. Inductive representation learning on large graphs. In NeurIPS. 1024–1034.

- Huang et al. (2009) Yuchi Huang, Qingshan Liu, and Dimitris Metaxas. 2009. Video object segmentation by hypergraph cut. In CVPR. 1738–1745.

- Hwang et al. (2008) TaeHyun Hwang, Ze Tian, Rui Kuangy, and Jean-Pierre Kocher. 2008. Learning on weighted hypergraphs to integrate protein interactions and gene expressions for cancer outcome prediction. In ICDM. 293–302.

- Jiang et al. (2019) Jianwen Jiang, Yuxuan Wei, Yifan Feng, Jingxuan Cao, and Yue Gao. 2019. Dynamic hypergraph neural networks. IJCAI.

- Kipf and Welling (2016) Thomas N Kipf and Max Welling. 2016. Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907 (2016).

- Liu et al. (2020) Bo Liu, Xiangguo Sun, Zeyang Ni, Jiuxin Cao, Junzhou Luo, Benyuan Liu, and Xinwen Fu. 2020. Co-Detection of Crowdturfing Microblogs and Spammers in Online Social Networks. World Wide Web Journal (2020).

- Liu et al. (2016) Yuli Liu, Yiqun Liu, Min Zhang, and Shaoping Ma. 2016. Pay Me and I’ll Follow You: Detection of Crowdturfing Following Activities in Microblog Environment.. In IJCAI. 3789–3796.

- McCallum et al. (2000) Andrew Kachites McCallum, Kamal Nigam, Jason Rennie, and Kristie Seymore. 2000. Automating the construction of internet portals with machine learning. Information Retrieval 3, 2 (2000), 127–163.

- Mikolov et al. (2013) Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg S Corrado, and Jeff Dean. 2013. Distributed representations of words and phrases and their compositionality. In NIPS.

- Monti et al. (2017) Federico Monti, Davide Boscaini, Jonathan Masci, Emanuele Rodola, Jan Svoboda, and Michael M Bronstein. 2017. Geometric deep learning on graphs and manifolds using mixture model cnns. In CVPR. 5115–5124.

- Neelima and Raghavendra (2012) B Neelima and Prakash S Raghavendra. 2012. Effective sparse matrix representation for the GPU architectures. International Journal of Computer Science, Engineering and Applications 2, 2 (2012), 151.

- Niepert et al. (2016) Mathias Niepert, Mohamed Ahmed, and Konstantin Kutzkov. 2016. Learning convolutional neural networks for graphs. In ICML. 2014–2023.

- Perozzi et al. (2014) Bryan Perozzi, Rami Al-Rfou, and Steven Skiena. 2014. Deepwalk: Online learning of social representations. In KDD. ACM, 701–710.

- Sen et al. (2008) Prithviraj Sen, Galileo Namata, Mustafa Bilgic, Lise Getoor, Brian Galligher, and Tina Eliassi-Rad. 2008. Collective classification in network data. AI magazine 29, 3 (2008), 93–93.

- Sun et al. (2011) Yizhou Sun, Jiawei Han, Xifeng Yan, Philip S Yu, and Tianyi Wu. 2011. Pathsim: Meta path-based top-k similarity search in heterogeneous information networks. VLDB 4, 11 (2011), 992–1003.

- Tang et al. (2015a) Jian Tang, Meng Qu, and Qiaozhu Mei. 2015a. PTE: Predictive text embedding through large-scale heterogeneous text networks. In KDD.

- Tang et al. (2015b) Jian Tang, Meng Qu, Mingzhe Wang, Ming Zhang, Jun Yan, and Qiaozhu Mei. 2015b. Line: Large-scale information network embedding. In WWW.

- Tu et al. (2018) Ke Tu, Peng Cui, Xiao Wang, Fei Wang, and Wenwu Zhu. 2018. Structural deep embedding for hyper-networks. AAAI.

- Veličković et al. (2018) Petar Veličković, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Lio, and Yoshua Bengio. 2018. Graph attention networks. ICLR (2018).

- Wang et al. (2019) Xiao Wang, Yiding Zhang, and Chuan Shi. 2019. Hyperbolic Heterogeneous Information Network Embedding. AAAI.

- Wu et al. (2015) Fangzhao Wu, Jinyun Shu, Yongfeng Huang, and Zhigang Yuan. 2015. Social spammer and spam message co-detection in microblogging with social context regularization. In CIKM. 1601–1610.

- Xu et al. (2019) Bingbing Xu, Huawei Shen, Qi Cao, Yunqi Qiu, and Xueqi Cheng. 2019. Graph Wavelet Neural Network. ICLR (2019).

- Yadati et al. (2019) Naganand Yadati, Madhav Nimishakavi, Prateek Yadav, Vikram Nitin, Anand Louis, and Partha Talukdar. 2019. HyperGCN: A New Method For Training Graph Convolutional Networks on Hypergraphs. NeurIPS.

- Yin et al. (2019) Hongzhi Yin, Qinyong Wang, Kai Zheng, Zhixu Li, Jiali Yang, and Xiaofang Zhou. 2019. Social influence-based group representation learning for group recommendation. In ICDE. IEEE, 566–577.

- Yin et al. (2017) Hongzhi Yin, Weiqing Wang, Hao Wang, Ling Chen, and Xiaofang Zhou. 2017. Spatial-aware hierarchical collaborative deep learning for POI recommendation. IEEE Transactions on Knowledge and Data Engineering 29, 11 (2017), 2537–2551.

- Zhang et al. ([n.d.]) Ruochi Zhang, Yuesong Zou, and Jian Ma. [n.d.]. Hyper-SAGNN: a self-attention based graph neural network for hypergraphs. ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020.

- Zhang et al. (2018) Zizhao Zhang, Haojie Lin, Yue Gao, and KLISS BNRist. 2018. Dynamic Hypergraph Structure Learning.. In IJCAI. 3162–3169.

- Zhao et al. (2018) Sicheng Zhao, Guiguang Ding, Jungong Han, and Yue Gao. 2018. Personality-Aware Personalized Emotion Recognition from Physiological Signals.. In IJCAI. 1660–1667.

- Zheng et al. (2018) Xiaoyao Zheng, Yonglong Luo, Liping Sun, Xintao Ding, and Ji Zhang. 2018. A novel social network hybrid recommender system based on hypergraph topologic structure. World Wide Web 21, 4 (2018), 985–1013.

- Zhou et al. (2007) Dengyong Zhou, Jiayuan Huang, and Bernhard Schölkopf. 2007. Learning with hypergraphs: Clustering, classification, and embedding. In NeurIPS. 1601–1608.