Heroes: Lightweight Federated Learning with Neural Composition and Adaptive Local Update in Heterogeneous Edge Networks

Abstract

Federated Learning (FL) enables distributed clients to collaboratively train models without exposing their private data. However, it is difficult to implement efficient FL due to limited resources. Most existing works compress the transmitted gradients or prune the global model to reduce the resource cost, but leave the compressed or pruned parameters under-optimized, which degrades the training performance. To address this issue, the neural composition technique constructs size-adjustable models by composing low-rank tensors, allowing every parameter in the global model to learn the knowledge from all clients. Nevertheless, some tensors can only be optimized by a small fraction of clients, thus the global model may get insufficient training, leading to a long completion time, especially in heterogeneous edge scenarios. To this end, we enhance the neural composition technique, enabling all parameters to be fully trained. Further, we propose a lightweight FL framework, called Heroes, with enhanced neural composition and adaptive local update. A greedy-based algorithm is designed to adaptively assign the proper tensors and local update frequencies for participating clients according to their heterogeneous capabilities and resource budgets. Extensive experiments demonstrate that Heroes can reduce traffic consumption by about 72.05% and provide up to 2.97 speedup compared to the baselines.

Index Terms:

Federated Learning, Heterogeneity, Neural Composition, Local Update Frequency.I Introduction

In traditional machine learning approaches, data is gathered from various sources and transmitted to a central server for model training. However, in edge computing (EC), where data is generated and processed at the edge clients, this centralized approach becomes infeasible due to privacy concerns [liu2022enhancing]. Federated Learning (FL) [mcmahan2017communication] is an emerging paradigm that enables collaborative model training across distributed clients, bringing the power of machine learning to EC [gao2019auction]. As the field of FL continues to evolve, it carries significant potential to advance edge computing capabilities and facilitate intelligent applications across diverse domains, such as healthcare, smart cities and autonomous vehicles[kairouz2021advances].

However, it is difficult to implement efficient FL in practical edge networks due to resource limitation [liu2023finch], which primarily revolve around constrained computation resources and scarce communication resources on edge clients, such as smartphones and Internet of Things (IoT) devices. Firstly, edge clients often have limited computation capabilities, especially compared to high-performance devices like desktop GPUs [ignatov2019ai]. For example, the floating-point operations per second (FLOPS) of an iPhone14 is only about 5% of that of the desktop GPU RTX3090. Secondly, the communication resources are also scarce in edge networks [liu2023yoga]. The bandwidth of wide area networks (WANs) between the PS and edge clients is much lower (e.g., 15) than that of local area networks (LANs) within the data centers [wang2021resource]. Unfortunately, the growing complexity (e.g., parameter size or architecture) of deep neural networks (DNNs) further intensifies the consumption of both computation and communication resources. For instance, a standard ResNet-18 [he2016deep] consists of 11.68 million parameters and requires 27.29 billion floating-point operations (FLOPs) to process a single image, making the local update process extremely slow or even infeasible on edge clients [jiang2022fedmp]. Besides, the frequent transmissions of a large number of parameters will also strain the limited network bandwidth.

Some previous works have made efforts to address the challenge of resource limitation in FL by employing various techniques, such as gradient compression [li2021talk, liu2022communication] and model pruning [diao2020heterofl, horvath2021fjord]. Specifically, FlexCom [li2021talk] and AdaGQ [liu2022communication] compress the transmitted gradients by sparsification or quantization to alleviate the communication overhead. However, these approaches do not reduce the model complexity and the computation overhead is still high. To save both the computation and communication resources, HeteroFL [diao2020heterofl] and Fjord [horvath2021fjord] propose to prune the complete model into the smaller sub-models for training. Nevertheless, excessive parameter pruning will significantly degrade training performance. For instance, HeteroFL prunes 93.75% of the parameters from the complete model to accommodate the limitations (e.g., CPU power, RAM, energy) on weak clients like smartphones, leaving only 6.25% of the parameters to be optimized. As a result, the majority of parameters are unable to benefit from the local data on these weak clients, leading to poor training performance of the complete model.

To address the above issues, Flanc [mei2022resource] proposes the neural composition technique, which approximates each model weight as the product of two low-rank tensors, named neural basis and coefficient. Concretely, the models of various complexities are constructed by composing (i.e., multiplying) the neural basis with the coefficient of different sizes. In each round, the parameter server (PS) sends the neural basis and a proper coefficient to each client for composition and local training. The entire neural basis is trained by all clients and its learned knowledge can be propagated to all parameters, which enables every parameter to access the full range of knowledge. We observe that the size of low-rank tensors is inherently much smaller than that of the original model. For example, the size of standard ResNet-18 is 42.8MB, while that of approximated tensors is only 15.3MB. Thus, this approach can reduce both computation and communication consumption during training.

Despite resource efficiency, the neural composition technique will encounter some problems in the context of FL. Firstly, Flanc only aggregates the coefficients with the same shape. As a consequence, the coefficient of a specific shape is only trained by the clients with the corresponding computation power, which may be insufficient for global model convergence. This issue becomes more prominent when high-performance clients only constitute a small fraction of all clients in the EC system due to their expensive price. For instance, the largest coefficient is only trained by a few powerful clients. As a result, the largest global model may struggle to converge within the given completion time, leading to poor training performance. Secondly, the edge clients are equipped with different hardware, thus their capabilities (e.g., CPU power, bandwidth) may vary significantly [li2022pyramidfl], i.e., client heterogeneity, which poses a great impact on training efficiency. For example, if the PS sends the neural basis and a large coefficient to a client with strong computation power but low upload bandwidth, the completion time for model updates will be prolonged, causing long delays in the aggregation step.

In order to tackle the aforementioned challenges, we propose a lightweight FL framework, called Heroes (LigHtweight Federated Learning through Neural Composition). On the one hand, we enhance the neural composition technique to aggregate the coefficients with different shapes into the largest one. Besides, by adaptively assigning the coefficients to clients, each parameter in the global coefficient can be fully trained, ensuring global model convergence. On the other hand, we adjust the local update frequencies for different clients to balance their completion time, so as to diminish the impact of client heterogeneity and improve the training efficiency. Nevertheless, the neural composition and local update frequency are interconnected. Specifically, the completion time depends on the size of composed model, which affects the determination of local update frequency. Meanwhile, the local update frequency also affects the training adequacy of each parameter in coefficient. Therefore, it is necessary yet challenging to jointly assign proper coefficient and local update frequency for each client. Our contributions are summarized as follows:

-

•

We propose a lightweight FL framework, called Heroes, which overcomes the challenges of resource limitation and client heterogeneity through enhanced neural composition and adaptive local update. Besides, a theoretical convergence analysis is provided for Heroes.

-

•

Guided by the convergence bound, we design a greedy-based algorithm to adaptively assign the proper coefficients and local update frequencies for participating clients based on both their heterogeneous capabilities and resource budgets.

-

•

The performance of Heroes is evaluated through extensive experiments and the results demonstrate that Heroes can reduce the traffic consumption by about 72.05% and provide up to 2.97 speedup for the training process compared to the baselines.

II Background and Motivation

II-A Federated Learning

Considering a client set coordinated by the PS, each client holds its local dataset , where denotes a data sample from . Further, we represent the loss function as , which measures how well the model performs on data sample . Therefore, the local loss function of client is defined as:

| (1) |

The global loss function is a linear combination of all clients, and the goal of FL is to train a high-quality model with minimum global loss function, which is defined as:

| (2) |

Suppose there are rounds of training in total. In each round , the PS randomly selects a set of clients to participate in training and sends the fresh global model to the specified clients, where . Then, each client updates the global model over its local dataset for times, where each update is regarded as one local iteration and represents the local update frequency. Let denote the local model of client at iteration in round . For the mini-batch stochastic gradient descent (SGD) [yu2019parallel] algorithm, a local iteration can be expressed as follows:

| (3) |

where denotes a random data batch from the local dataset and is the learning rate. Finally, the PS collects the updated local models from the participating clients and aggregates them to the latest global model for further training, i.e., .

II-B Enhanced Neural Composition

To make full use of the limited resources, it is necessary to adjust the complexity of local model for each client based on its resource budgets [diao2020heterofl, horvath2021fjord, mei2022resource]. Herein, we follow Flanc [mei2022resource] to innovatively propose an enhanced neural composition technique to construct the models in different widths (i.e., the number of hidden channels in each weight) based on low-rank factorization [phan2020stable]. Concretely, since the weights in DNNs are usually over-parameterized [zou2019improved], each layer’s weight (e.g., convolution, fully connection) can be approximated as the product of two low-rank tensors, named neural basis and coefficient . For example, let represent a convolution weight, with kernel size , input channel number and output channel number . Let denote the weight width, where the shape of -width weight is . Accordingly, is approximated as follows:

| (4) |

When , the above format represents the approximation of fully connection layer’s weight. The weight width is controlled by adjusting the size of coefficient, while the size of neural basis is constant. Specifically, the complete coefficient is divided into blocks, where the shape of each block is . We select blocks from the complete coefficient to form the reduced coefficient and compose (i.e., multiply) it with neural basis into a -width weight.

To ensure sufficient training of every parameter in the global model, we control different coefficient blocks to be trained evenly. Thus, the selected blocks are currently the least trained ones. Notably, we measure each block’s training adequacy by the total number of local iterations it has experienced on all clients since round 1, i.e., total update times. In general, we balance the total update times of different coefficient blocks to ensure that each block is fully trained.

For example, as illustrated in Fig. 1, the coefficient is divided into 9 blocks (i.e., =3) and the number in each block represents its current total update times. To obtain a 2-width weight, we first extract the least trained 4 blocks (with the total update times of 6, 5, 7 and 8, respectively) from the complete coefficient and combine them into the reduced coefficient . Then, is composed with the neural basis into an intermediate tensor whose shape is . Finally, a 2-width weight is obtained by reshaping this intermediate tensor.

| FL Schemes | Traffic | Time | ||

| 30GB | 60GB | 20,000s | 40,000s | |

| MP [diao2020heterofl] | 34.89% | 48.92% | 39.72% | 50.75% |

| Original NC [mei2022resource] | 42.22% | 51.89% | 43.25% | 49.37% |

| Enhanced NC | 59.76% | 64.42% | 58.69% | 62.81% |

Compared to the FL schemes based on model pruning [diao2020heterofl], the neural composition technique enables every parameter in the global model to benefit from the full range of knowledge through the shared neural basis, improving the training performance. Different from Flanc [mei2022resource] with original neural composition, enhanced neural composition allows the coefficient of all sizes to get fully trained, accelerating the training process. To verify this improvement, we simulate a FL system with 100 clients to train the standard ResNet-18 model [he2016deep] over the ImageNet dataset [russakovsky2015imagenet]. The results in Table I indicate that with the given traffic consumption or completion time, the enhanced neural composition can improve the global model’s test accuracy by about 16.29% on average compared with the FL schemes based on model pruning (MP) [diao2020heterofl] and original neural composition (NC) [mei2022resource].

II-C Adaptive Local Update

In most synchronous FL schemes [li2021talk, mei2022resource], the clients’ local update frequencies are identical and fixed in each round. However, due to client heterogeneity, strong clients have to wait for weak ones (i.e., stragglers) for global aggregation, incurring non-negligible waiting time and reducing the training efficiency significantly [liu2023adaptive, wang2020towards]. To evaluate the negative impacts of stragglers, we record the completion time for one training round of each client in the simulated FL system. As shown in Fig. 2(a), the strongest client completes one training round four times faster than the weakest client. In other words, about 70% of the strongest client’s time is idle and wasted.

To reduce clients’ idle waiting, some research [li2020federated, xu2022adaptive, li2022pyramidfl] proposes adjusting the local update frequencies for different clients to balance their completion time. On the one hand, the weaker clients perform fewer local iterations to reduce the impact of stragglers. On the other hand, the stronger clients utilize the idle time to perform more local iterations, which helps the model converge faster. We illustrate the completion time of each client with proper local update frequency in Fig. 2(b). It can be observed that almost every client’s time is fully utilized without idle waiting.

However, the neural composition and the local update are interconnected, and it is difficult to jointly assign proper coefficient blocks and local update frequencies for different clients. Specifically, both the computation time and the communication time of a specific client depend on the number of coefficient blocks, which affects the determination of its local update frequency. Meanwhile, since each client trains different blocks, various local update frequencies will also affect the balance of the blocks’ total update times.

III Proposed Framework

To enhance the training efficiency and resource utilization for FL, we propose a lightweight FL framework, called Heroes, which integrates the benefits of enhanced neural composition and adaptive local update. Specifically, in Heroes, there are three main phases in each round as follows.

III-1 Tensors and Frequency Assignment

The PS first determines the model width for each client in round . As studied in [diao2020heterofl, mei2022resource], the smaller the model width, the less the required computation resource. To alleviate the performance degradation, Heroes increases each client’s model width as much as possible within its resource budget. Then, for each client , Heroes selects coefficient blocks with the least total update times as the reduced coefficient . Finally, Heroes assigns a proper local update frequency to each client by the algorithm proposed in Section V-C to minimize the idle waiting time and balance the training across different coefficient blocks.

III-2 Local Training

In round , each client first downloads the latest neural basis and the reduced coefficient from the PS, then composes them into the local model . Each local model is trained over the local dataset for iterations. Let represent the updated local model of client in round . After the local training, is be decomposed into the updated neural basis and coefficient , i.e., . Since the size of low-rank tensors is inherently smaller than that of original model, each client uploads and , instead of , to the PS for global aggregation, which further saves the limited bandwidth.

III-3 Global Aggregation

In round , upon receiving the updated neural basis and coefficient from all the participating clients in , the PS performs global aggregation to obtain the latest basis and coefficient for the next round of training. For neural basis, Heroes directly averages the updated ones from all participating clients, i.e., . For coefficient, Heroes performs the block-wise aggregation. Specifically, let represent the block index and denote the set of clients that train the -th coefficient block in round . The latest -th coefficient block is obtained as follows:

| (5) |

where represents the -th coefficient block updated by client in round . For instance, as shown in Fig. 3, the leftmost block is trained by two clients (i.e., 2 and 4), thus its value is .

For better explanation of Heroes, we give a demonstration of Heroes in Fig. 3. Four heterogeneous clients participate in training, which are divided into three levels by their computation power, i.e., weak smartphones (clients 1 and 3), medium laptop (client 2) and powerful PC (client 4). The complete coefficient contains three blocks. Heroes selects one coefficient block for the weak clients 1 and 3, and two blocks for medium client 2. The powerful client 4 utilizes all three blocks for model training. We adopt the block’s color to denote the amount of training it has obtained. Specifically, the total update times of blue blocks is ample, while that of orange blocks and white blocks is moderate and few, respectively. To ensure sufficient training for every block, the selected blocks are the least trained ones currently. Besides, Heroes assigns different local update frequencies for these four clients to balance their completion time. For example, the weak client 1 trains the model much slower than the powerful client 4. Thus, client 1 performs fewer local iterations (e.g., 10) to alleviate the straggler effect, while client 4 performs more local iterations (e.g., 30) to make more contributions to the global model. In addition to the balance of clients’ completion time, the balance of coefficient blocks’ total update times is also considered for the determination of each client’s local update frequency.

IV Convergence Analysis

In this section, we provide the convergence analysis of the proposed framework. We first state the following assumptions, which are standard in non-convex optimization problems and widely used in the analysis of previous works [jiang2023computation].

Assumption 1. (Smoothness) The local objective function of each client is smooth with modulus :

| (6) |

Assumption 2. (Bounded Variance) Let denote a random data batch sampled from client ’s local dataset . There exists a constant such that the variance of stochastic gradients at each client is bounded by:

| (7) |

Assumption 3. (Bounded Gradients) There exists a constant , such that the stochastic gradients at each client are bounded by:

| (8) |

In order to analyze the convergence bound of the global loss function after rounds, we present the following three important lemmas.

Lemma 1. According to Assumption 3, the deviations between global model and local model is bounded as:

| (9) |

where represents the model error induced by reducing coefficient for client in round (i.e., ) and .

Lemma 2. Combining Assumptions 1-2 and Lemma 1, the difference between global models in two successive rounds can be bounded as:

| (10) |

Lemma 3. Under Assumption 1 and Lemma 1, the proposed framework ensures that:

| (11) |

Let these assumptions and lemmas hold, then the convergence bound can be obtained as follows.

Theorem 1. If the learning rate satisfies , the mean square gradient after rounds can be bounded as follows:

| (12) |

where denotes the optimal global model.

Proof. With the smoothness assumption, we have:

| (13) | ||||

| (14) |

where Eq. (14) is obtained by inserting Eq. (10) and Eq. (11) into Eq. (13). Summing over the round on the both sides of Eq. (14), we have:

| (15) |

When the learning rate satisfies , we can shift the terms in Eq. (15) as follows:

| (16) |

Finally, we divide both sides of Eq. (16) by to derive the convergence bound in Eq. (12) of Theorem 1.

Thus, we complete the convergence analysis of the proposed framework under the non-convex setting. The convergence bound is proportional to the coefficient reducing error. The larger the coefficient, the smaller the reducing error, leading to a tighter convergence bound. Besides, the bound also depends on the local update frequency , indicating that we can obtain better convergence performance by determining the local update frequency properly.

V Problem Formulation and Algorithm Design

V-A Problem Formulation

In this section, we define the joint optimization problem of neural composition and local update frequency in FL training. Let represent the number of floating-point operations (FLOPs) required to perform one local iteration for the composed model, and depends on the size of coefficient . The more blocks the coefficient includes, the wider the composed model and the more FLOPs required for model training. We formulate the time cost for one local iteration of client in round as follows:

| (17) |

where represents the speed to process the floating-operations of client in round .

Since the download bandwidth is usually much faster than the upload bandwidth in typical WANs [zhan2020incentive], the download time of tensors can be negligible and we mainly focus on the upload time. Let denote the upload bandwidth of client in round and measure the size of a tensor. We formulate the communication time of client in round as:

| (18) |

Let denote the completion time of client in round , which consists of the time cost for local iterations and the communication time. Due to the synchronization barrier of FL, the completion time of round depends on the slowest client, which can be defined as:

| (19) |

We adopt the average waiting time for participating clients in to measure the impact of synchronization barrier in round , which is defined as follows:

| (20) |

Let denote the total update times of -th coefficient block, where . The training consistency between different coefficient blocks in round can be reflected by the variance of set , denoted as:

| (21) |

In each round , we aim to select appropriate coefficient blocks and determine the optimal local update frequency for each participating client , so as to accelerate the training process of FL. Accordingly, we define the optimization problem as follows:

| (22) |

The first inequality expresses the convergence requirement, where is the convergence threshold of the training loss after rounds. The second set of inequalities indicates that the average waiting time of participating clients in each round should not exceed the given threshold . The third set of inequalities bounds the variance of all coefficient blocks’ total update times in each round , ensuring the balanced training among blocks. The fourth set of inequalities tells the feasible range of the number of coefficient blocks. The object of this optimization problem is to minimize the completion time of the FL training with the performance requirements (e.g., model convergence, average waiting time).

Input: completion time budget , maximum time cost for one local iteration , maximum model width , waiting time bound .

Output: convergenced neural basis and coefficient .

V-B Preliminaries for Algorithm Design

To solve the optimization problem in Eq. (22), we first approximate the convergence bound. Specifically, we adopt an upper bound for the coefficient reducing error, where . Besides, the minimum loss value is approximated as zero, i.e., . Accordingly, the convergence bound in Eq. (12) is formulated as follows:

| (23) |

Since is a positive variable, the convergence bound is a convex function with respect to the local update frequency . It can be derived that will decrease as increases when . On the contrary, the trend of and is the opposite.

To balance the completion time of heterogeneous clients, we first select the fastest client in round and let the completion time of other clients be approximately equal to that of client , which can be formulated as follows:

| (24) |

Notably, the completion time of client is the largest among that of all participating clients in round . Therefore, the total completion time can be denoted as follows:

| (25) |

In order to minimize the convergence bound, we set the local update frequency of the fastest client in round as . Thus, the optimization problem in Eq. (22) can be approximated as a univariate problem of finding the number of rounds .

| (26) |

Input: global neural basis , reduced coefficient , local update frequency .

Output: estimated variables , and , updated neural basis and coefficient .

V-C Algorithm Description

In terms of Eq. (26), we design a greedy-based control algorithm to adaptively assign proper coefficients and local update frequencies for heterogeneous clients. The proposed algorithm consists of both the PS and client sides, which are formally described in Alg. 1 and Alg. 2, respectively. We will introduce the algorithm in detail by the order of workflow in a training round.

Firstly, at the beginning of each round , the algorithm determines the model width for each client (Lines 6-11 of Alg. 1). To minimize the reducing error, the algorithm greedily adds the coefficient blocks for each client as many as possible within a given maximum iteration time or the model width reaches the maximum value .

Secondly, the algorithm selects the fastest client with the least completion time (Lines 12-14 of Alg. 1). Specifically, for each participating client , the algorithm assumes it is the fastest client and solves the approximated problem in Eq. (26), where the optimization object is with . Then, the number of rounds is obtained. Accordingly, the total completion time for the entire training process is calculated according to . Then, the algorithm selects the fastest client , i.e., . However, solving this problem requires information of the entire training process, such as clients’ status and network bandwidth. Unfortunately, since these information are usually time-varying in the dynamic edge system, it is impossible to obtain them in advance. To this end, we adopt the information in the current round to approximate the unavailable future information. Therefore, the optimization object is approximated as follows:

| (27) |

Notably, there are some variables, such as , and , whose values are unknown at the beginning. In order to address this issue, when , we adopt an identical predefined local update frequency for all participating clients, without performing the algorithm. When , each participating client estimates these variables over its local loss function (Lines 7-9 of Alg. 2) and the PS aggregates them to obtain their specific values (Line 25 of Alg. 1).

Thirdly, the algorithm determines other clients’ local update frequencies based on the fastest client ’s completion time in round , i.e., (Lines 15-19 of Alg. 1). Specifically, for each client , the algorithm first derives a frequency space according to Eq. (24) and searches the final local update frequency within this space, ensuring the waiting time does not exceed the threshold . Then, blocks with the least total update times are selected to form the reduced coefficient for client . Finally, the algorithm searches the local update frequency in to minimize the variance among the total update times of all coefficient blocks.

Fourthly, the PS sends the global basis , reduced coefficient and local update frequency to each participating client for local training (i.e., Alg. 2). Once receiving the updated basis and coefficient , as well as the estimated variables’ values , and , the PS performs global aggregation (Lines 25-26 in Alg. 1). The whole process continues until the total time cost exceeds the budget .

VI Performance Evaluation

VI-A Datasets and Models

VI-A1 Datasets

We conduct the experiments over three common datasets: CIFAR-10[krizhevsky2009learning], ImageNet[russakovsky2015imagenet] and Shakespeare[caldas2018leaf]. Specifically, CIFAR-10 is an image dataset including 60,000 images (50,000 images for training and 10,000 images for testing), which are 33232 dimensional and evenly from 10 classes. ImageNet contains 1,281,167 training images, 50,000 validation images and 100,000 test images from 1,000 classes and is more challenging to train the models for visual recognition. Considering the constrained resource of edge clients, we create a subset of ImageNet, called ImageNet-100, that consists of 100 out of 1,000 classes. Besides, each image’s resolution is resized to 3144144. Shakespeare is a text dataset for next-character prediction built from Shakespeare Dialogues, and includes 422,615 samples with a sequence length of 80. We split the dataset into 90% for training and 10% for testing [caldas2018leaf]. CIFAR-10 and ImageNet-100 represent the low-resolution and high-resolution computer vision (CV) learning tasks, respectively, while Shakespeare represents the natural language processing (NLP) learning task.

VI-A2 Data Distribution

To simulate the non-independent and identically distributed (Non-IID) data, we adopt three different data partition schemes for the three datasets, respectively. Specifically, we adopt latent Dirichlet allocation (LDA) over CIFAR-10 [jiang2023heterogeneity], where % ( 20, 40, 60 and 80) of the samples on each client belong to one class and the remaining samples evenly belong to other classes. Particularly, 10 represents the IID setting. For ImageNet-100, we control that each client lacks ( 20, 40, 60 and 80) classes of samples and the data volume of each class is the same [wang2022accelerating], where 0 represents the IID setting. In our experiments, both and are set to 40 by default. Shakespeare is a natural Non-IID dataset, where the dialogues of each speaking role in each play are regarded as the local data of a specific client [caldas2018leaf] and the Non-IID level is fixed. For fair comparison, the full test datasets are used to evaluate the models’ performance.

VI-A3 Models

To validate the universality of the enhanced neural composition technique, we conduct the experiments across several different architectures. Firstly, a 4-layer CNN with three 33 convolutional layers and one linear output layer is adopted for the CIFAR-10 dataset. Secondly, we utilize the standard ResNet-18 for the more challenging ImageNet-100 dataset. Thirdly, for the Shakespeare dataset, we adopt an RNN model and set both the hidden channel size and embedding size to 512 [mei2022resource].

VI-B Baselines and Metrics

VI-B1 Baselines

We choose the following four baselines for performance comparison: ① FedAvg [mcmahan2017communication] transmits and trains the entire models with a fixed (non-dynamic) and identical (non-diverse) local update frequency for all clients. ② ADP [wang2018edge] dynamically determines the identical local update frequency for all clients in each round on the basis of the constrained resource. ③ HeteroFL [diao2020heterofl] reduces the model width for each client based on its computation power by model pruning. ④ Flanc [mei2022resource] utilizes the neural composition technique to adjust the model width, where the coefficients in different shapes do not share any parameter.

VI-B2 Metrics

We employ the following four metrics to evaluate the performance of Heroes and baselines. ① Test accuracy is measured by the proportion between the amount of the correct samples through model inference and that of all test samples. ② Average Waiting Time is calculated by averaging the time each client waits for global aggregation in a round, reflecting the impact of client heterogeneity. ③ Completion time is defined as the total time cost to reach the target accuracy, which reflects the training speed. ④ Network Traffic is the overall size of models (or tensors) transmitted between PS and clients during the training process, which quantifies the communication cost.

VI-C Experimental Setup

The experimental environment is built on an AMAX deep learning workstation equipped with an Intel Xeon 5218 CPU, 8 NVIDIA GeForce RTX 3090 GPUs and 256GB RAM. We simulate an FL system with 100 virtual clients and one PS (each is implemented as a process in the system) on this workstation. In each round, we randomly activate 10 clients to participate in training. Specifically, the model training and testing are implemented based on the PyTorch framework111https://pytorch.org/docs/stable, and the MPI for Python library222https://mpi4py.readthedocs.io/en/stable/ is utilized to build up the communication between clients and the PS.

To reflect heterogeneous and dynamic network conditions, we let each client’s download speed to fluctuate between 10Mb/s and 20Mb/s [wang2022accelerating]. Since the upload speed is usually smaller than the download speed in typical WANs, we configure it to fluctuate between 1Mb/s and 5Mb/s [jiang2023heterogeneity] for each client. Besides, considering the clients’ computation capabilities are also heterogeneous and dynamic, the time cost of one local iteration on a certain simulated client follows a Gaussian distribution whose mean and variance are derived from the time records on a physical device (e.g., laptop, TX2, Xavier NX, AGX Xavier) [liao2023adaptive].

VI-D Evaluation Results

VI-D1 Training Performance

We implement Heroes and baselines on CIFAR-10 and ImageNet-100 to observe their training performance (e.g., test accuracy). The results in Fig. 4 show that Heroes converges much faster than the baselines while accomplishing a comparable accuracy. For instance, by Fig. 4(a), Heroes takes 1,375s to achieve an accuracy of 70% for CNN on CIFAR-10, while FedAvg, ADP, HeteroFL and Flanc take 4,508s, 3,924s, 3,015s and 3,187s, respectively. In other words, Heroes can speed up the training process by up to 2.67 compared to the baselines. Besides, the model’s accuracy in Heroes also surpasses that in the baselines within a given completion time. For example, Heroes achieves an accuracy of 64.36% after training ResNet-18 over ImageNet-100 for 40,000s, while that of FedAvg, ADP, HeteroFL and Flanc is 55.22%, 56.34%, 52.11% and 51.89%, respectively. In general, within the same time budget, Heroes can improve the test accuracy by about 10.46% compared with the baselines. These results demonstrate the advantages of Heroes in accelerating model training.

VI-D2 Impact of Client Heterogeneity

To evaluate the impact of client heterogeneity on model training with different schemes, we illustrate the average waiting time each round among the participating clients in Fig. 5. The results show that Heroes incurs much less waiting time than the baselines, which means high robustness against system heterogeneity. For example, by Fig. 5(a), the average waiting time in Heroes is 2.86s when training CNN over CIFAR-10, while that in FedAvg, ADP, HeteroFL and Flanc is 15.37s, 11.02s, 8.34s and 5.96s, respectively. Specifically, both FedAvg and ADP assign the entire model and identical local update frequencies for all clients during each round without considering the system heterogeneity, resulting in non-negligible waiting time. HeteroFL and Flanc reduce the model width for different clients according to their various computation power. However, they ignore the heterogeneity in clients’ communication capabilities. For example, the client with a slow upload speed will easily become the straggler and delay the global aggregation. In addition to reducing the model width, Heroes also adjusts the local update frequencies for different clients to balance their completion time in each round. Therefore, Heroes can diminish the impact or system heterogeneity significantly.

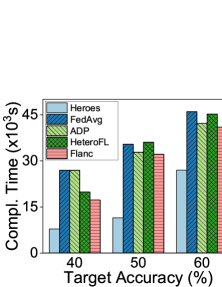

VI-D3 Resource Consumption

We observe the resource consumption (e.g., network traffic and completion time) of five schemes when they achieve different target accuracies on the two image datasets (e.g., 75% on CIFAR-10, 60% on ImageNet-100). The results in Fig. 6 and Fig. 8 demonstrate that Heroes can mitigate both the time and traffic costs greatly. For instance, in Fig. 8(a), to obtain the target accuracy of 50% on ImageNet-100, the traffic cost of Heroes is 17.81GB, while that of FedAvg, ADP, HeteroFL and Flanc is 82.34GB, 77.75GB, 62.87GB and 49.38GB, respectively. At the same time, by Fig. 8(b), Heroes can separately speed up the training process by about 3.09, 2.86, 3.15 and 2.81, compared with FedAvg, ADP, HeteroFL and Flanc. In a word, Heroes achieves the target accuracy fastest with about 2.97 speedup while reducing the network traffic by about 72.05% compared with the baselines.

VI-D4 Impact of Non-IID Data

We test these schemes’ test accuracies over the CIFAR-10 and ImageNet-100 datasets under different Non-IID levels within a given completion time (800s for CIFAR-10 and 40,000s for ImageNet-100). The results in Fig. 7 indicate that the test accuracy decreases as the Non-IID level increases for all schemes. For instance, by Fig. 7(a), when training CNN on CIFAR-10 with , Heros achieves an accuracy of 68.9%, which is 14.72%, 13.21%, 7.48% and 24.08% higher than FedAvg, ADP, HeteroFL and Flanc, respectively. Heroes investigates the benefits of neural composition technique and adaptive control of local update frequency, which can accelerate the FL process while maintaining the accuracy of the complete model. Compared to FedAvg and ADP, Heroes will perform more training rounds to achieve higher accuracy within the given time. Compared to HeteroFL and Flanc, Heroes enables every parameter in the global model to be fully trained over the full range of knowledge, thus eliminating the training bias and achieving better performance.

VI-D5 Performance on Text Dataset

Finally, to verify the generalization of the enhanced neural composition technique, we conduct a set of experiments to train RNN over the text dataset Shakespeare. The results in Fig. 9 demonstrate that Heroes can also accomplish better training performance than the baselines on the NLP learning task. Specifically, according to Fig. 9(a), Heroes takes 1,862s to reach the target accuracy of 45%, while FedAvg, ADP, HeteroFL and Flanc take 4,183s, 4,015s, 3,182s and 2,874s, respectively. Besides, by Fig. 9(b), compared with FedAvg, ADP, HeteroFL and Flanc, Heroes saves about 60.71%, 42.57%, 38.65% and 26.72% of network traffic, respectively. Therefore, compared with baselines, Heroes can provide up to 1.91 speedup and reduce the traffic consumption by about 45.06% when training RNN over Shakespeare.

VII Related Work

As a practical and promising approach, FL has garnered significant interest from both research and industrial communities [kairouz2021advances]. However, the training efficiency of FL often suffers from resources limitation and client heterogeneity [imteaj2021survey]. In recent years, many previous works have been proposed to improve the training efficiency for FL. To tackle the challenge of client heterogeneity, some research [lai2021oort, luo2022tackling] optimizes the client sampling strategy to diminish the heterogeneity degree among participating clients. Another approach is adjusting the local update frequencies for different clients [li2020federated], so as to balance their completion time and mitigate the effect of straggler. However, these approaches have not been able to effectively conserve the limited resources in FL.

Compressing the transmitted gradients is a common way to alleviate the communication overhead [jiang2023heterogeneity, li2021talk, wang2023federated, cui2022optimal, liu2022communication, nori2021fast]. To further reduce the computation overhead, a natural solution is to prune the global model into a smaller sub-model for training [diao2020heterofl, horvath2021fjord, alam2022fedrolex, li2020lotteryfl, mugunthan2022fedltn, li2021hermes]. Nevertheless, the compressed or pruned parameters are under-optimized in these approaches, degrading the training performance. To address this issue, neural composition technique [mei2022resource] is proposed to construct the size-adjustable models using the more efficient low-rank tensors, while enabling every parameter to learn the knowledge from all clients. However, the global model may get insufficient training, leading to a long completion time, especially in heterogeneous edge networks.

VIII Conclusion

In this paper, we have proposed a lightweight FL framework, called Heroes, to address the challenges of resource limitation and client heterogeneity with the enhanced neural composition and adaptive local update. We have analyzed the convergence of Heroes and designed a greedy-based algorithm to jointly assign proper tensors and local update frequency for each client, which enables every parameter in the global model to benefit from all clients’ knowledge and get fully trained. Extensive experiments demonstrate the effectiveness and advantages of our proposed framework.