Heckerthoughts

1 Introduction

In 1987, Eric Horvitz, Greg Cooper, and I visited I.J. Good at Virginia Polytechnic Institute and State University. The three of us were at a conference in Washington DC and made the short drive to see him. The primary reason we wanted to see him was not that he worked with Alan Turing to help win WWII by decoding encrypted messages from the Germans, although that certainly intrigued us. Rather, we wanted to see him because we had just finished reading his book “Good Thinking” [31], which summarized his life’s work in Probability and its Applications. We were delighted that he was willing to have lunch with us. We were young graduate students at Stanford working in Artificial Intelligence (AI), and were amazed that his thinking was so similar to ours, even though he had worked decades before us and came from a seemingly different perspective not involving AI. We had lunch and talked about many topics of shared interest including Bayesian probability, graphical models, decision theory, AI (he was interested by then), how the brain works, and the nature of the universe. Before we knew it, it was dinner time. We took a brief stroll around the beautiful campus and sat down again for dinner and more discussion. Figure 1 is a photo taken just before we reluctantly departed.

This story is a fitting introduction to this manuscript. Now having years to look back on my work, to boil it down to its essence, and to better appreciate its significance (if any) in the evolution of AI and Machine Learning (ML), I realized it was time to put my work in perspective, providing a roadmap to any who would like to explore it in more detail. After I had this realization, it occurred to me that this is what I.J. Good did in his book.

This manuscript is for those who want to understand basic concepts central to ML and AI, and to learn about early applications of these concepts. Ironically, after I finished writing this manuscript, I realized that a lot of the concepts that I included are missing in modern courses on ML. I hope this work will help to make up for these omissions. The presentation gets somewhat technical in parts, but I’ve tried to keep the math to the bare minimum to convey the fundamental concepts.

In addition to the technical presentations, I include stories about how the ideas came to be and the effects they have had. When I was a student in physics, I was given dry texts to read. In class, however, several of my physics professors would tell stories around the work, such as Einstein’s thinking that led to his general theory of relativity. Those stories fascinated me and really made the theory stick. So here, I do my best to present both the fundamental ideas and the stories behind them.

As for the title, “Heckerman Thinking” doesn’t have the same ring to it as that of Good’s book. I chose “Heckerthoughts,” because my rather odd last name has been humorous fodder for friends and colleagues for naming things related to me such as “Heckerperson,” “Heckertalk,” and “Heckerpaper”—you get the idea. Ironically, a distant relative of mine who connected with me via 23andMe is a genealogist, and discovered that my true last name is “Eckerman,” with my father’s ancestors coming from a region in Germany near the Ecker River. In any case, “Heckerthoughts” it is.

2 Uncertainty and AI’s transition to probability

In the early 1980s, I joined the AI program at Stanford while also getting my M.D. degree. I actually went to medical school to learn how the brain works and was lucky to go to Stanford where AI, an arguably better way to study the workings of the brain, was flourishing. I immediately began taking courses in AI and had one big surprise. AI, of course, had to deal with uncertainty. When robots move, they are not completely certain about their position. When suggesting medical diagnoses, an expert system may not be certain about a diagnosis, even when all the evidence is in. The surprise was that I was told, very clearly, that probability was not a good measure of uncertainty. I was told that measures such as the Certainty Factor model [73, 11] were better. Just coming off of six years of training in physics, where fluency with probability was a must, and where the Bayesian interpretation was often mentioned (although not by name as I recall), I was taken aback by these statements.

Over the next half a dozen years or so, probability gradually became accepted by the AI community, and I’ve been given some credit for that. In this section, I will start with a clean and compelling argument for probability that has nothing to do with my work. Although everything in this argument was known by the time John McCarthy coined the term “Artificial Intelligence” in 1955, there was no email, Facebook, or Twitter to spread the word. Consequently, AI’s actual transition to probability was much more tortured. At the end of this next section, I discuss that transition and the role that I think I played.

2.1 The inevitability of probability

Let’s put aside the notion of probability for the moment. Rather, let’s focus on the important and frequent need to express one’s uncertainty about the truth of some proposition or, equivalently, the occurrence of some event. For example, I’m fairly sure that I don’t have COVID right now, but I am not certain. How do I tell you just how certain I am? Also, note that I am talking about my uncertainty, not anyone else’s. Uncertainty is subjective. What properties do we want this form of communication to have?

Property 1: A person’s uncertainty about the truth of some proposition can be expressed by a single (real) number. Let’s call it a degree of belief and use $b(A)$ to denote the degree of belief that proposition $A$ is true. At the end of this section, we will discuss the process of putting a number on a degree of belief. An important point here is that a degree of belief is not expressed in a vacuum, but rather in the context of all sorts of information in the person’s head. We say that all degrees of belief are “conditional,” and write $b(A|\xi)$ to denote a person’s degree of belief in $A$ given their background information $\xi$. Another point is that, sometimes, we want to explicitly call out a specific proposition that a degree of belief is conditioned on. For example, I may want to express my degree of belief that I have COVID, given that I have no symptoms. I use $b(A|B,\xi)$ to denote a degree of belief that proposition $A$ is true given that $B$ is true and given $\xi$. In much of my past work, I explicitly mentioned $\xi$, but this notation can get cumbersome. Going forward, I will exclude it.

Property 2: Suppose a person wants to express their belief about the proposition $AB$, which denotes the “AND” of proposition $A$ and proposition $B$. One way to do this is to express their degree of belief $b(AB)$ directly. Another way is to express their degrees of belief $b(A|B)$ and $b(B)$, and then somehow combine them to yield $b(AB)$. Property 2 says there is a function $f$ that combines $b(A|B)$ and $b(B)$ to yield $b(AB)$. We write

$$b(AB) = f(b(A|B),\, b(B)).$$

A natural, albeit technical, assumption is that the function $f$ is continuous and monotonic increasing in both of its arguments.

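Now let’s think about $b(ABC)$, the degree of belief that three propositions $A$, $B$, and $C$ are true. One way to do this is to decompose $b(ABC)$ in terms of $b(A|BC)$ and $b(BC)$, and then decompose the latter belief in terms of $b(B|C)$ and $b(C)$:

$$b(ABC) = f(b(A|BC),\, b(BC)) = f(b(A|BC),\, f(b(B|C),\, b(C))).$$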

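Another way to do this is to decompose $b(ABC)$ in terms of $b(AB|C)$ and $b(C)$, and then decompose $b(AB|C)$ in terms of $b(A|BC)$ and $b(B|C)$:

$$b(ABC) = f(b(AB|C),\, b(C)) = f(f(b(A|BC),\, b(B|C)),\, b(C)).$$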

It follows that $f$ is associative:

$$f(f(x, y),\, z) = f(x,\, f(y, z)). \tag{1}$$

Property 3: It’s often useful to relate the degree of belief in $A$ to the degree of belief about the negation of proposition $A$, which I denote $\neg A$. So, another desirable property is that there is a function $g$ such that

$$b(\neg A) = g(b(A)).$$

That is, the degrees of belief in $A$ and its negation move in (opposite) lock step. Another natural, albeit technical, assumption is that $g$ is continuous and monotonic.

Remarkably, it turns out that, if you accept Properties 1 through 3, a degree of belief must satisfy the following rules:

where and . These rules are precisely those of probability! Namely,

$$p(AB) = p(A|B)\, p(B) \tag{2}$$

$$p(A) + p(\neg A) = 1 \tag{3}$$

Cox [19] was the first to prove that Properties 1 through 3 imply that a degree of belief must satisfy the rules of probability, although he used slightly stronger technical assumptions for the functions $f$ and $g$. The results here, which assume only continuity and monotonicity, are based on work by Aczél [1], who uses a set of mathematical tools known as functional equations to show that functions satisfying simple properties must have particular forms. Notable is his “Associativity Equation,” where he shows that any continuous, monotonic increasing, and associative function is isomorphic to the addition of numbers (see pages 256-267). This is a remarkable result, as it says that any such function can be implemented on a slide rule. It is a key step in proving the inevitability of probability from Equation 1 and the other properties.
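To make the slide-rule remark concrete, here is a minimal Python sketch. It assumes the representation usually associated with the Associativity Equation, namely that the combining function can be written as h_inv(h(x) + h(y)) for some continuous, strictly monotonic h. The two choices of h and all function names below are illustrative assumptions, not taken from Aczél or from this manuscript.

```python
import math

def make_f(h, h_inv):
    """Build f(x, y) = h_inv(h(x) + h(y)) from a strictly monotonic h (illustrative)."""
    return lambda x, y: h_inv(h(x) + h(y))

# Ordinary multiplication on (0, 1]: h = log, which is the slide-rule scale.
product = make_f(math.log, math.exp)

# A less obvious example on (0, 1]: h(x) = 1/x - 1 gives f(x, y) = xy / (x + y - xy).
other = make_f(lambda x: 1.0 / x - 1.0, lambda t: 1.0 / (t + 1.0))

def is_associative(f, points, tol=1e-9):
    """Check f(f(x, y), z) == f(x, f(y, z)) numerically on a grid of test points."""
    return all(
        abs(f(f(x, y), z) - f(x, f(y, z))) < tol
        for x in points for y in points for z in points
    )

grid = [0.1, 0.3, 0.5, 0.9, 1.0]
print(is_associative(product, grid))
print(is_associative(other, grid))
```

Both functions are continuous and increasing in each argument on this range, and both pass the numerical associativity check. The check only spot-tests a grid of points; it is not a proof.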

Given these results, the term “probability” gets confusing quickly. Namely, a probability can be a long-run fraction in repeated experiments or a degree of belief, but obey the same syntactic rules. It’s tempting to call them both probabilities, but doing so obscures their very different semantics. To avoid this confusion, when the semantics are unclear from the text, I will refer to degrees of belief as Bayesian or subjective probabilities, and will refer to long-run fractions in repeated experiments as frequentist probabilities. I’ll use , , and , to refer to a degree of belief, frequentist probability, or one of the two, respectively.

One pet peeve of mine is that Bayesian thinking—using the rules of probability to govern degrees of belief—is often confused with Bayes rule, which follows directly from the syntactic product rule of probability (Equation 2). That is, Bayes rule can be applied equally well to Bayesian or frequentist probabilities. In this manuscript, I’ll try to eliminate this confusion by avoiding use of the phrase “Bayes rule” and instead refer to “the rules of probability” or sometimes more precisely “the product rule of probability.”

Thomas Bayes was possibly the first person known to apply the syntactic rules of probability to the semantics of degree of belief [6]. (I say “possibly,” because historical scholars disagree on this point.) Laplace definitively made this observation [55]. Note that Laplace and perhaps Bayes said only that the rules of probability could be applied to degrees of belief. They did not realize that, given Properties 1 through 3, this application was necessary.

One very practical problem is how to put a number on a degree of belief. A simple approach is based on the observation that people find it fairly easy to say that two propositions are equally likely. The approach is as follows. Imagine a simplified wheel of fortune having only two regions (shaded and not shaded), such as the one illustrated in Figure 2. Assuming everything about the wheel is symmetric (except for shading), the degree of belief that the wheel will stop in the shaded region is the fractional area of the wheel that is shaded (in this case, 0.3). (Of course, given that degrees of belief are subjective, a person is not forced to make this judgment about symmetry.) This wheel now provides a reference for measuring degrees of belief for other events. For example, what is my degree of belief that I currently have COVID? First, I ask myself the question: Is it more likely that I have COVID or that the wheel when spun will stop in the shaded region? If I think that it is more likely that I have COVID, then I imagine another wheel where the shaded region is larger. If I think that it is more likely that the wheel will stop in the shaded region, then I imagine another wheel where the shaded region is smaller. Now I repeat this process until I think that having COVID and the wheel stopping in the shaded region are about equally likely. At this point, my degree of belief that I have COVID is the fractional area of the shaded region on the wheel.
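The wheel procedure can be sketched as a simple search. The version below uses bisection to choose each new wheel, which is my own choice for illustration; the text only says the shaded region is made larger or smaller. The callback `more_likely_than_wheel` is hypothetical and stands in for the person's comparative judgment.

```python
def assess_belief(more_likely_than_wheel, tol=0.01):
    """Bisect on the shaded fraction of an imagined wheel.

    more_likely_than_wheel(fraction) is a hypothetical callback that returns
    True if the person judges the event more likely than the wheel stopping
    in a shaded region of the given fractional area.
    """
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        shaded = (lo + hi) / 2.0
        if more_likely_than_wheel(shaded):
            lo = shaded  # event judged more likely: imagine a larger shaded region
        else:
            hi = shaded  # wheel judged more likely: imagine a smaller shaded region
    return (lo + hi) / 2.0

# Simulated person whose unstated degree of belief is 0.03.
estimate = assess_belief(lambda shaded: 0.03 > shaded)
print(round(estimate, 3))
```

The tolerance plays the role of the "about equally likely" stopping point: the search ends once the person can no longer be expected to distinguish the two wheels.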

In general, the process of measuring a degree of belief is commonly referred to as a belief assessment. The technique for assessment that I have just described is one of many available techniques discussed in the Management Science, Operations Research, and Psychology literature. One problem with belief assessment that is addressed in this literature is that of precision. Can someone really say that their degree of belief is and not ? In most cases, no. Nonetheless, in most cases, degrees of belief are used to make decisions (I’ll say a lot more about this in Section 3) and these decisions are typically not sensitive to small variations in assessments. Well-established practices of sensitivity analysis help one to know when additional precision is unnecessary (e.g., [47]). Alternatively, a person can reason with upper and lower bounds on degrees of belief [31]. Another problem with probability assessment is that of accuracy. For example, recent experiences or the way a question is phrased can lead to assessments that do not reflect a person’s true beliefs [78]. Methods for improving accuracy can be found in the decision-analysis literature (e.g., [74]).

Finally, let’s consider frequentist probabilities and how they are related to degrees of belief. As we’ve just seen, a similarity is that both semantics are governed by the rules of probability. And, of course, there are differences. For example, assessing a degree of belief does not require repeated trials, whereas estimating a frequentist probability does. Consider an old-fashioned thumbtack—one with a round, flat head (Figure 3). If the thumbtack is thrown up in the air, it will come to rest either on its point and an edge of the head (heads) or lying on its head with point up (tails). In the Bayesian framework, a person can look at the thumbtack and assess their degree of belief that it will land heads on a toss. In the frequentist approach, we assert that there is some unknown frequentist probability of heads, (heads), such that the thumbtack will land heads on any toss with the same degree of belief. (Getting heads is said to be independent and identically distributed.) The connection? This degree of belief must be equal to (heads).

It turns out that the Bayesian and frequentist frameworks are fully compatible. If a person’s beliefs about a series of flips of arbitrary length have the property that any two sequences of the same length and the same number of heads and tails are judged to be equally likely, then the degrees of belief assigned to those outcomes are the same as the degrees of belief assigned to the outcomes in a situation where there exists an unknown frequentist probability that is equal to the degree of belief of heads on every flip. This result was first proven by de Finetti and generalizations of it followed [45]. In short, the frequentist framework can exist within the Bayesian framework. Ironically, despite this compatibility, Bayesians and frequentists (people who work exclusively within the Bayesian and frequentist frameworks, respectively) have been at odds with each other for many decades. The good news is that there is no need to choose; we can embrace both. And the battles seem to be subsiding.
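As a small illustration of the property described above, the Python sketch below goes in the easy direction only: it starts from beliefs that arise from an unknown long-run fraction of heads and checks that every ordering of the same outcomes gets the same degree of belief. The Beta(a, b) distribution over the unknown fraction and the function name are assumptions made for the example; de Finetti's result is the converse statement and is not proven here.

```python
from fractions import Fraction
from itertools import permutations

def sequence_probability(seq, a=1, b=1):
    """Degree of belief in a specific sequence of 'H'/'T' outcomes.

    Assumes beliefs arise from an unknown long-run fraction of heads with a
    Beta(a, b) distribution (an illustrative choice), so the belief in heads
    on the next toss is (a + heads so far) / (a + b + tosses so far).
    """
    prob, heads, n = Fraction(1), 0, 0
    for outcome in seq:
        p_heads = Fraction(a + heads, a + b + n)
        prob *= p_heads if outcome == "H" else 1 - p_heads
        heads += outcome == "H"
        n += 1
    return prob

# Every distinct ordering of two heads and one tail.
orderings = set(permutations("HHT"))
probs = {"".join(s): sequence_probability(s) for s in orderings}
print(probs)
```

With a = b = 1, each of the three orderings receives the same value, 1/12, even though the individual tosses are not independent under these beliefs.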

2.2 Independence and graphical models

In the AI community, before 1985, a common argument made against the use of probability to reason about uncertainty was that general probabilistic inference was computationally intractable [73, 11]. For example, the number of combinations of $n$ binary variables is $2^n$, and no human could assess that many degrees of belief or reason about them when $n$ is more than a dozen or so. The only alternative, it was argued, was to make incorrect assumptions of conditional independence. For example, when building an expert system to diagnose medical diseases, the builder could make the incorrect assumption that all symptoms are mutually independent given the true disease, the so-called “naive Bayes assumption.”

What the community missed was the fact that there were representations of conditional independence that were ideal for the task of representing uncertainty. They were graphical and hence easy to read and write and would accommodate assertions of conditional independence that were tailored to the task at hand.

In this manuscript, I will focus on the most used probabilistic graphical model, the directed acyclic graphical model, or DAG model. The model was first described by Sewall Wright in 1921 [83] and advanced by many researchers including I.J. Good [31].

To better understand DAG models, we should now move beyond the simple notion of a proposition to talk about a variable: a collection of mutually exclusive and exhaustive values (sometimes called “states”). I will typically denote a variable by an upper-case, potentially indexed letter (e.g., $X$, $X_i$), and a value of a corresponding variable by that same letter in lower case (e.g., $x$, $x_i$). To denote a set of variables, I will use a bold-face upper-case letter (e.g., $\mathbf{X}$), and will use a corresponding bold-face lower-case letter (e.g., $\mathbf{x}$) to denote a value for each variable in a given set. When showing specific examples, I will sometimes give variables understandable names and denote them in typewriter font (for example, Age). To denote a probability distribution over $X$, where $X$ has a finite number of values, I will use $p(X)$. In addition, I will use this same term to denote a probability for a value of $X$. Whether the mention is a distribution or a single probability should be clear from context. Similarly, if $X$ is a continuous variable, then I will use $p(X)$ to refer to $X$’s probability density function or the density at a given point.

DAG models can represent either Bayesian or frequentist probabilities. So, to start, let’s talk about them generically. Suppose we have a problem domain consisting of a set of variables $\mathbf{X} = (X_1, \ldots, X_n)$. A DAG model for $\mathbf{X}$ consists of (1) a DAG structure that encodes a set of conditional independence assertions about variables in $\mathbf{X}$, and (2) a set of local probability distributions, one associated with each variable. Together, these components define a probability distribution for $\mathbf{X}$, sometimes called a joint probability distribution. The nodes in the graph are in one-to-one correspondence with the variables $\mathbf{X}$. I use $X_i$ to denote both the variable and its corresponding node, and $\mathbf{Pa}_i$ to denote the parents of node $X_i$ in the graph as well as the variables corresponding to those parents. The absence of possible arcs in the graph encodes conditional independencies. To understand these independencies, let’s fix the ordering of nodes in the graph to be $(X_1, \ldots, X_n)$. This ordering is not necessary, but it keeps things simple. Because the graph is acyclic, it is possible to describe the parents of $X_i$ as a subset of the nodes $X_1, \ldots, X_{i-1}$. Given this description, the graph states the following conditional independencies, one for each $i$:

$$p(x_i | x_1, \ldots, x_{i-1}) = p(x_i | \mathbf{pa}_i) \tag{4}$$

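Note that other conditional independencies can be derived from these assertions. Now, from (a generalization of) the product rule of probability, we can write

$$p(\mathbf{x}) = \prod_{i=1}^{n} p(x_i | x_1, \ldots, x_{i-1}) \tag{5}$$

Combining Equations 4 and 5, we obtain

$$p(\mathbf{x}) = \prod_{i=1}^{n} p(x_i | \mathbf{pa}_i) \tag{6}$$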

Consequently, the conditional independencies implied by the graph, combined with the local probability distributions $p(x_i | \mathbf{pa}_i)$, determine the joint probability distribution for $\mathbf{X}$.

One other note about the acyclicity of these graphs: Such acyclicity has been criticized due to its lack of applicability in many situations, such as when there is bi-directional cause and effect. That said, if a model is built that includes the representation of time, where the observation of a quantity over time is represented with multiple variables, then most criticism disappears. Also, notably, Sewall Wright and others have considered atemporal graph models with cycles.

In Section 4, we will see how DAG models can be constructed for frequentist probabilities. Here, let’s return to the state of AI in the 1980s and consider how to create a DAG model for degrees of belief. Such models became known as Bayesian networks. After that, we’ll look at how these models can make reasoning with uncertainty computationally efficient.

To be concrete, let us build a simple Bayesian network for the task of detecting credit-card fraud. (Of course, in practice, there is an individual, typically an expert, who provides the knowledge that goes into the Bayesian network. Here, I’ll simply use “us” and “we”.) We begin by determining the variables to include in the model. One possible choice of variables for our problem is whether or not there is fraud (Fraud or $F$), whether or not there was a gas purchase in the last 24 hours (Gas or $G$), whether or not there was a jewelry purchase in the last hour (Jewelry or $J$), the age of the cardholder (Age or $A$), and the sex (at birth) of the cardholder (Sex or $S$). The values of these variables are shown in Figure 4.

This initial task is not always straightforward. As part of this task we should (1) correctly identify the goals of modeling (e.g., prediction versus decision making), (2) identify many possible findings that may be relevant to the problem, (3) determine what subset of those findings is worthwhile to model, and (4) organize the findings into variables having mutually exclusive and collectively exhaustive values. Difficulties here are not unique to modeling with Bayesian networks, but rather are common to most tasks. Although solutions are not always simple, some guidance is offered by decision analysts (e.g., citeBlueBook).

In the next phase of Bayesian-network construction, we build a directed acyclic graph that encodes our assertions of conditional independence. One approach for doing so is to choose an ordering on the variables, $(X_1, \ldots, X_n)$, and, for each $X_i$, determine the subset of $X_1, \ldots, X_{i-1}$ such that Equation 4 holds. In our example, using the ordering $(F, A, S, G, J)$, let’s assert the following:

$$p(a | f) = p(a) \tag{7}$$

$$p(s | f, a) = p(s) \tag{8}$$

$$p(g | f, a, s) = p(g | f) \tag{9}$$

$$p(j | f, a, s, g) = p(j | f, a, s) \tag{10}$$

These assertions yield the structure shown in Figure 4.

This approach has a serious drawback. If we choose the variable order carelessly, the resulting network structure may fail to reveal many conditional independencies among the variables. For example, if we construct a Bayesian network for the fraud problem using the ordering $(J, G, S, A, F)$, we obtain a fully connected network structure. In the worst case, we must explore $n!$ variable orderings to find the best one.

Fortunately, there is another technique for constructing Bayesian networks that does not require an ordering. The approach is based on two observations: (1) people can often readily assert causal relationships among variables, and (2) causal relationships typically reveal a full set of conditional independence assertions. So, to construct the graph structure of a Bayesian network for a given set of variables, we can simply draw arcs from cause variables to their immediate effects. In almost all cases, doing so results in a network structure that satisfies the definition of Equation 6. For example, given the assertion that Fraud is a direct cause of Gas, the assertion that Fraud, Age, and Sex are direct causes of Jewelry, and the assertion that there are no other causes among these variables, we obtain the network structure in Figure 4.

In the next section, we will explore causality in detail. Here, it suffices to say that the human ability to naturally think in terms of cause and effect is in large part responsible for the success of the DAG model as a representation in AI systems (see Section 3).

In the final step of constructing a Bayesian network, we assess the local probability distributions $p(x_i | \mathbf{pa}_i)$. In our fraud example, where all variables are discrete, we assess one distribution for $X_i$ for every set of values for $\mathbf{Pa}_i$. Example distributions are shown in Figure 4. Although we have described these construction steps as a simple sequence, they are often intermingled in practice. For example, judgments of conditional independence and/or cause and effect can influence problem formulation. Also, assessments of probability can lead to changes in the network structure.
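To give a feel for how much assessment the graph structure saves, the following Python sketch counts the independent probabilities needed for the fraud network versus an unstructured joint distribution. The parent sets follow the causal assertions in the text; the number of values per variable is an assumption (in particular, three age groups), since Figure 4 is not reproduced here, and the function names are hypothetical.

```python
from math import prod

# Number of values per variable (assumed; Age is taken to have three groups).
cardinality = {"Fraud": 2, "Age": 3, "Sex": 2, "Gas": 2, "Jewelry": 2}

# Parents implied by the causal assertions in the text.
parents = {
    "Fraud": [],
    "Age": [],
    "Sex": [],
    "Gas": ["Fraud"],
    "Jewelry": ["Fraud", "Age", "Sex"],
}

def dag_parameter_count(cardinality, parents):
    """One distribution per node per parent configuration, each with (values - 1) free numbers."""
    return sum(
        (cardinality[x] - 1) * prod(cardinality[p] for p in parents[x])
        for x in cardinality
    )

def full_joint_parameter_count(cardinality):
    """Free numbers in an unstructured joint distribution over all variables."""
    return prod(cardinality.values()) - 1

print(dag_parameter_count(cardinality, parents))   # 18
print(full_joint_parameter_count(cardinality))     # 47
```

Under these assumptions the structured model needs 18 assessments rather than 47, and the gap widens quickly as variables are added.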

One final note: In some situations, a child can be a deterministic function of its parents. We can still be uncertain about the value of such a node, because we can be uncertain about the values of its parents. Given the values of its parents, however, the value of the child is determined with certainty. In those situations, I will draw the child node with a double oval. We will see an example of this situation when we consider influence diagrams.

Let’s now turn to how we can use this model to reason under uncertainty. In this case, we may want to infer the Bayesian probability of fraud, given observations of the other variables. This probability is not stored directly in the model and hence needs to be computed using the rules of probability. In general, the computation of a probability of interest given a model is known as probabilistic inference. This discussion on inference applies equally well to frequentist probabilities, so we can talk about both at the same time.

Because a DAG model for $\mathbf{X}$ determines a joint probability distribution for $\mathbf{X}$, we can, in principle, use the model to compute any probability over its variables. As an example, let’s compute the probability of fraud given observations of the other variables. Using the rules of probability, we have

$$p(f | a, s, g, j) = \frac{p(f, a, s, g, j)}{p(a, s, g, j)} = \frac{p(f, a, s, g, j)}{\sum_{f'} p(f', a, s, g, j)} \tag{11}$$

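For problems with many variables, however, this direct approach is computationally slow. Fortunately, we can use the conditional independencies encoded in the DAG, Equations 7 through 10, to make this computation more efficient. Equation 11 becomes

$$p(f | a, s, g, j) = \frac{p(f)\, p(a)\, p(s)\, p(g | f)\, p(j | f, a, s)}{\sum_{f'} p(f')\, p(a)\, p(s)\, p(g | f')\, p(j | f', a, s)} = \frac{p(f)\, p(g | f)\, p(j | f, a, s)}{\sum_{f'} p(f')\, p(g | f')\, p(j | f', a, s)}$$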

Many algorithms that exploit conditional independence in DAG models to speed up inference have been developed (see the annual proceedings of The Conference on Uncertainty in Artificial Intelligence—UAI). In some cases, however, inference in a domain remains intractable. In such cases, techniques have been developed that approximate results efficiently—for example, [27, 50].
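The fraud computation described above can be sketched in a few lines of Python. All probabilities below are hypothetical placeholders chosen for illustration; they are not the values in Figure 4, and the variable and function names are my own. The sketch uses only the terms that survive once the conditional independencies are applied, so the distributions for Age and Sex never appear.

```python
AGES = ("<30", "30-50", ">50")
SEXES = ("male", "female")

# Hypothetical numbers, not taken from Figure 4.
p_fraud = {True: 0.001, False: 0.999}            # p(F)
p_gas_given_fraud = {True: 0.2, False: 0.01}     # p(G = yes | F)

# p(J = yes | F, A, S): with fraud, assumed the same for everyone.
p_jewelry_yes = {(True, a, s): 0.05 for a in AGES for s in SEXES}
p_jewelry_yes.update({
    (False, "<30", "male"): 0.0001, (False, "30-50", "male"): 0.0004,
    (False, ">50", "male"): 0.0002, (False, "<30", "female"): 0.0005,
    (False, "30-50", "female"): 0.002, (False, ">50", "female"): 0.001,
})

def bernoulli(p_yes, value):
    """Probability of a yes/no value given the probability of yes."""
    return p_yes if value else 1.0 - p_yes

def posterior_fraud(gas, jewelry, age, sex):
    """p(F = yes | a, s, g, j) from p(f) p(g|f) p(j|f,a,s); p(a) and p(s) cancel."""
    score = {
        f: p_fraud[f]
        * bernoulli(p_gas_given_fraud[f], gas)
        * bernoulli(p_jewelry_yes[(f, age, sex)], jewelry)
        for f in (True, False)
    }
    return score[True] / (score[True] + score[False])

print(posterior_fraud(gas=True, jewelry=True, age="<30", sex="male"))
```

With these made-up numbers, a gas purchase followed by a jewelry purchase by a young male cardholder raises the belief in fraud far above its prior. Enumeration like this is fine for five variables; larger models need the specialized algorithms mentioned above.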

2.3 AI’s transition to probability

The representation and use of conditional independence makes reasoning under uncertainty practical for a wide variety of tasks and, in hindsight, is largely responsible for AI’s transition to the use of probability for representing uncertainty. In this section, I highlight some key steps in the actual transition and the role I think I played in it.

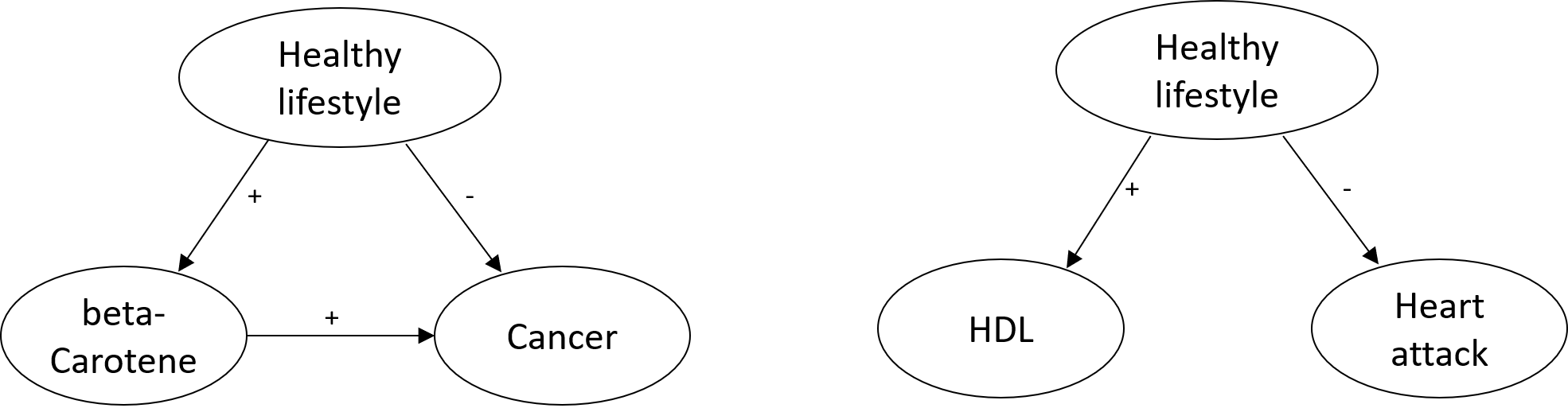

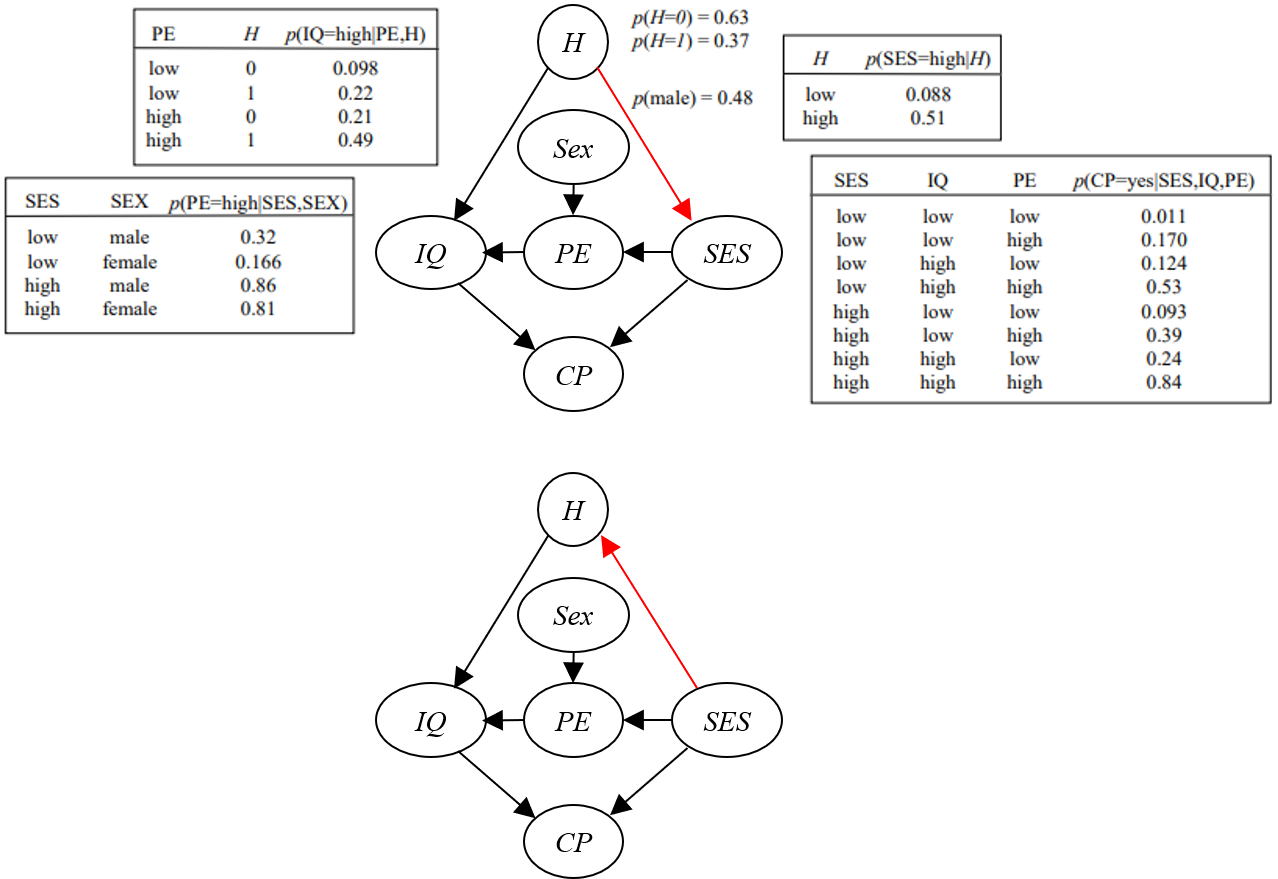

In the late 1970s and early 1980s, multiple alternatives to probability were described, including Dempster–Shafer Theory and Fuzzy Logic. As my PhD advisor was Ted Shortliffe, my greatest exposure was to the Certainty Factor model, which he had developed for use in expert systems for medical diagnosis such as MYCIN and EMYCIN [73, 11]. The expert systems consisted of IF–THEN rules, which could be combined together in parallel or series, effectively forming a directed graph. Figure 5 shows a simple made-up example for diagnosing infection. An arc from node $E$ to $H$ corresponds to the rule IF $E$ THEN $H$, where $E$ and $H$ are propositions that typically correspond to evidence and a hypothesis, respectively. An example of series combination is Sore throat → Throat inflamed → Infection. An example of parallel combination is Sore throat → Throat inflamed ← Hoarse. Importantly, the semantics of the graph associated with a Certainty Factor model is different from that of the DAG model we have been considering. I use dashed lines for the former graph to highlight this difference. In the Certainty Factor model, arc direction goes from evidence (typically observed) to hypothesis (typically unobserved). In contrast, in a DAG model, arc direction typically goes from cause to effect. For some problems, each hypothesis in the model is a cause of the corresponding evidence, so that every arc in the Certainty Factor model points in the direction opposite to the corresponding arc in the DAG model. There are many instances, however, where this relationship does not hold.

Associated with each rule is a certainty factor (CF), which was intended to quantify the degree to which $E$ changes the belief in $H$. CFs range from -1 to 1. A CF of 1 corresponds to complete confirmation of $H$; a CF of -1 corresponds to complete disconfirmation of $H$; and a CF of 0 leaves the degree of belief of $H$ unchanged. In particular, a CF was not intended to represent an absolute degree of belief in $H$ given $E$. The Certainty Factor model offers functions that combine these CFs when used in series and parallel. As a result, the model can infer a net increase or decrease in the degree of belief for a target hypothesis (Infection in this example), given multiple pieces of evidence. A list of properties or “desiderata” that these functions should obey was described.

One day in early 1985, as a teaching assistant for one of Ted’s medical informatics courses, I was listening to his explanation of the model. It occurred to me that CFs may simply be a transformation of probabilistic quantities. A simple candidate in my mind was the likelihood ratio $\lambda = p(E|H) / p(E|\neg H)$. From the rules of probability, we have

$$O(H|E) = \lambda\, O(H) \tag{12}$$

where $O(H) = p(H)/p(\neg H)$ and $O(H|E) = p(H|E)/p(\neg H|E)$ are the prior and posterior odds of $H$, respectively. That is, the posterior odds of $H$ are just the product of the likelihood ratio and the prior odds of $H$. (I.J. Good [31] wrote extensively about the logarithm of the likelihood ratio, known as the weight of evidence.) One catch was that the likelihood ratio ranges from 0 to infinity, but that was easy to fix: simply use a monotonic transformation to map the likelihood ratio to $[-1, 1]$.
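The odds form of the update is easy to try numerically. The short Python sketch below is only an illustration of the relationship just described (posterior odds equal the likelihood ratio times the prior odds); the function name and the example numbers are my own.

```python
def posterior_probability(prior, likelihood_ratio):
    """Update a probability for a hypothesis using the odds form of the product rule."""
    prior_odds = prior / (1.0 - prior)
    posterior_odds = likelihood_ratio * prior_odds
    return posterior_odds / (1.0 + posterior_odds)

# Evidence four times as likely under the hypothesis as under its negation.
print(posterior_probability(prior=0.2, likelihood_ratio=4.0))
```

A likelihood ratio of 1 leaves the probability unchanged, which parallels the role that a CF of 0 plays in the Certainty Factor model.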

That night, I went home and gave the mapping a try. It satisfied the desiderata and closely matched the series and parallel combination functions in the Certainty Factor model. Even more important, the correspondence revealed that the Certainty Factor model facilitated the expression of conditional independence assertions that were less extreme than the naive-Bayes assumption. (In the example in Figure 5, the resulting conditional independencies can be identified by reversing the arcs in the figure and interpreting the result as a DAG model.) Given my proclivity for probability, I was excited and wrote up the result [33] [arXiv:1304.3419].
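For readers who want to experiment, here is a minimal Python sketch of one such mapping. Both the specific transformation and the parallel-combination rule below are supplied from my recollection of the literature on certainty factors (the rule is the one commonly cited for EMYCIN); neither is spelled out in this text, so treat them as assumptions. The function names are hypothetical.

```python
def lr_to_cf(lr):
    """Map a likelihood ratio in (0, inf) monotonically to (-1, 1); lr = 1 maps to 0."""
    return (lr - 1.0) / lr if lr >= 1.0 else lr - 1.0

def cf_to_lr(cf):
    """Inverse of lr_to_cf for cf strictly between -1 and 1."""
    return 1.0 / (1.0 - cf) if cf >= 0.0 else 1.0 + cf

def combine_parallel_via_lr(cf1, cf2):
    """Combine two CFs bearing on one hypothesis by multiplying likelihood ratios.

    Multiplying the ratios assumes the two pieces of evidence are conditionally
    independent given the hypothesis and given its negation.
    """
    return lr_to_cf(cf_to_lr(cf1) * cf_to_lr(cf2))

def combine_parallel_emycin(cf1, cf2):
    """Parallel-combination rule commonly cited for EMYCIN (assumed form)."""
    if cf1 >= 0 and cf2 >= 0:
        return cf1 + cf2 - cf1 * cf2
    if cf1 <= 0 and cf2 <= 0:
        return cf1 + cf2 + cf1 * cf2
    return (cf1 + cf2) / (1.0 - min(abs(cf1), abs(cf2)))

for pair in [(0.6, 0.5), (-0.6, -0.5), (0.6, -0.5)]:
    print(pair, combine_parallel_via_lr(*pair), combine_parallel_emycin(*pair))
```

For these three test pairs the two routes agree up to floating-point error, which is the kind of correspondence the paragraph above describes. The sketch covers only parallel combination and avoids the endpoints -1 and 1, where the likelihood ratio is 0 or infinite.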

The work got an interesting mix of reactions. I was particularly nervous about what Ted would say about it, but he was very gracious. When he saw the result, he was very interested and gave me a set of papers to read to help me structure my thinking and prepare a publication. When I presented the work at the first UAI meeting that summer, it got a surprisingly good reception. At the time, the debate about probability as a measure of uncertainty was in full swing, having been ignited by Judea Pearl and Peter Cheeseman at the previous year’s AAAI Conference. Those in the probability camp welcomed the work. Ross Shachter and Jack Breese were perhaps the most surprised that someone from Stanford, the home of the Certainty Factor model, would be presenting such a result. We met for the first time there and have been friends and collaborators ever since.

I should note that there is an interesting connection between the Certainty Factor model and the modern reincarnation of the DAG model. In 1978, Dick Duda and Peter Hart created a variant of the Certainty Factor model for PROSPECTOR, a computer-based consultation system for mineral exploration [21]. Peter Hart went on to work at Stanford Research Institute, where he met Ron Howard and Jim Matheson. Ron and Jim saw this model and were inspired to create influence diagrams, an extension of DAG models that we discuss in the next section. After that, Judea Pearl heard Ron Howard talk about influence diagrams and began his work on DAG models [63].

For me, the next question to answer in AI’s transition to probability was the following: Is a monotonic transform of the likelihood ratio the only measure of a belief update? Over the next year, I took Ted’s desiderata, converted them to a series of functional equations (after learning about what Cox did with degrees of belief), and answered the question: a monotonic transform of the likelihood ratio is the only quantity satisfying these desiderata [34] [arXiv:1304.3091]. The support for probability was increasing. That said, critics were still in the majority.

The key remaining criticism of probability was that no one had built a successful probabilistic expert system. In 1984, that was about to change. Bharat Nathwani, a successful surgical pathologist from USC, visited Ted Shortliffe and several of his students with the idea of building an expert system to aid surgical pathologists in the diagnosis of disease. The idea was straightforward: a surgical pathologist would look at one or more slides of tissue from a patient under the microscope, identify values for features such as the color or size of cells, report them to an expert system, and get back a list of possible diseases ordered by how likely they were given what was seen. Eric Horvitz and I were in that group of students and jumped at the chance. Over the next year, we built the first version of a probabilistic expert system for the diagnosis of lymph-node pathology, called Pathfinder. Even though we knowingly made the inaccurate assumption that features were mutually independent given disease, the system worked well, so well that Bharat, Eric, and I started Intellipath, a company that would build expert systems for the diagnosis of all tissue types.

By now it was mid-1985, and fully appreciating Bayesian networks, I wanted to use the representation to incorporate important conditional dependencies among features. But there was a big catch: Bharat and I wanted to extend Pathfinder to include over 60 diseases and over 100 features. Even if we assumed features were mutually independent given disease, Bharat would have to assess almost ten thousand probabilities. So, over the next year I developed two techniques that made the construction of Bayesian networks for medical diagnosis in large domains easier and more efficient. This work became the focus of my PhD dissertation [44] [arXiv:1911.06263].

Before describing these techniques, I should note that diagnosis of disease in surgical pathology is well suited to the assumption that diseases are mutually exclusive. In particular, it is certainly possible for a patient to have two or more diseases, but these diseases are almost always spatially separated and easily identified as separate diseases. Both techniques make strong use of this assumption. In addition, both techniques make use of the following point. Let $d_1$ and $d_2$ denote two diseases, let $f$ denote some feature (e.g., cell size), and suppose that $p(f \mid d_1) = p(f \mid d_2)$ for each value of $f$. From the rules of probability, we see that the ratio of disease priors and posteriors is the same:

| $\dfrac{p(d_1 \mid f)}{p(d_2 \mid f)} = \dfrac{p(d_1)}{p(d_2)}$ | (13) |

In words, $f$ does not differentiate between the two diseases. It turns out that surgical pathologists (and experts in other fields) find it easier to think about whether $f$ differentiates diseases $d_1$ and $d_2$ than to think about whether $p(f \mid d_1) = p(f \mid d_2)$. Comparing Equations 12 and 13, another way to think about this situation is that the observation $f$, for any value of $f$, is a null belief update for $d_1$, under the assumption that only $d_1$ and $d_2$ are possible.
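The point about equal likelihoods can be checked numerically. The following is a minimal sketch, not part of the original work: the three disease labels, the priors, and the likelihoods are made-up numbers chosen only so that two of the diseases give the observed feature value the same likelihood.

```python
def posterior(prior, likelihood):
    """Bayes' rule over mutually exclusive and exhaustive diseases."""
    joint = {d: prior[d] * likelihood[d] for d in prior}
    total = sum(joint.values())
    return {d: value / total for d, value in joint.items()}

# Hypothetical numbers: d1 and d2 assign the observed feature value the same
# likelihood, while d3 assigns it a different one.
prior = {"d1": 0.5, "d2": 0.25, "d3": 0.25}
likelihood = {"d1": 0.2, "d2": 0.2, "d3": 0.6}

post = posterior(prior, likelihood)
prior_odds = prior["d1"] / prior["d2"]
posterior_odds = post["d1"] / post["d2"]
print(prior_odds, posterior_odds)
```

The odds of the first disease against the second come out the same before and after the observation, even though the observation does shift belief toward the third disease.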

The first technique was inspired by my discussions with Bharat about how he related findings to disease. He found it difficult to think about how a finding differentiated among all diseases. Rather, he liked to think about pairs of diseases and, for each pair, which of the many findings differentiated them. So, I developed the similarity graph, both an abstract concept and a graphical user interface, to allow him to do just that. An example for the simpler task of diagnosing five diseases that cause sore throat is shown in Figure 6. Each of the five diseases, which we assume to be mutually exclusive for purposes of presentation, corresponds to a node in the similarity graph. An undirected edge between two diseases denotes that the expert is comfortable thinking about what features differentiate them. Note that every disease in the graph must border at least one edge, because otherwise some diseases could not be differentiated. After drawing the similarity graph, the expert can then click on one of the edges (in the small oval on the edge) and draw a local Bayesian network structure, a Bayesian network structure that includes the findings useful for differentiating the two diseases and the conditional independencies among these findings. Local structures for different disease pairs need not contain the same findings nor the same assertions of independence. So, unlike an ordinary Bayesian network structure, the technique allows for the specification of asymmetric conditional independencies. In my dissertation, I showed how the collection of local structures can be combined automatically to form a coherent (ordinary) Bayesian network structure for the entire domain. In addition, I showed how probability assessments for the local networks can automatically populate the distributions for this whole-domain structure [44] [arXiv:1911.06263]. The mathematics is interesting (see Chapter 3 and [24] [arXiv:1611.02126]).
I called the similarity graph combined with the collection of local Bayesian networks a Probabilistic Similarity Network.

The second technique I developed made probability assessment even easier and more efficient than assessment within local structures. The technique uses a visual structure known as a partition. An example, again for the task of diagnosing sore throat, is shown in Figure 8. The box on the left shows a finding to be assessed (Palatal spots) along with the values for that finding. Each of the other boxes (in this example, there are two of them) contains diseases that are not differentiated by the finding. To construct this partition, the expert first groups diseases into boxes, and then, for each box, assesses a single probability distribution for the finding given any disease in the box. By Equation 13, there is only one distribution for each box. This technique generalizes easily to the case where the probabilities depend on other findings.
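To make the saving concrete, here is a minimal sketch of how a partition could be expanded into a full table of distributions for a finding given each disease. It is an illustration under assumptions, not the Pathfinder implementation: the disease names, the finding values, the numbers, and the helper `expand_partition` are all hypothetical.

```python
# Hypothetical partition for one finding. Each box groups diseases that the
# finding does not differentiate and holds the one distribution assessed for it.
partition = [
    {"diseases": ["disease_a", "disease_b", "disease_c"],
     "distribution": {"none": 0.9, "few": 0.08, "many": 0.02}},
    {"diseases": ["disease_d", "disease_e"],
     "distribution": {"none": 0.3, "few": 0.4, "many": 0.3}},
]

def expand_partition(boxes):
    """Copy each box's distribution to every disease in that box."""
    table = {}
    for box in boxes:
        dist = box["distribution"]
        if abs(sum(dist.values()) - 1.0) > 1e-9:
            raise ValueError("each box distribution must sum to 1")
        for disease in box["diseases"]:
            if disease in table:
                raise ValueError(f"{disease} appears in more than one box")
            table[disease] = dict(dist)
    return table

table = expand_partition(partition)
assessed = len(partition)   # distributions the expert has to assess
populated = len(table)      # distributions the full model needs
print(assessed, populated)
```

In this toy case the expert assesses two distributions and the model receives five. The gap widens as the number of diseases per box grows.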

Using these techniques, Bharat and I built the extended version of Pathfinder in a relatively short amount of time and showed that its probabilistic diagnoses were accurate. Bharat, Eric, and I then used these same techniques to build many more expert systems for Intellipath. Then, on one sunny day in 1986 at Stanford, while Eric and I were riding our bikes from one class to the next, he said, “Let’s start a company to build expert systems for any diagnostic problem.” We agreed that Jack Breese should join the venture, and the company Knowledge Industries was born. Over the next several years, both Intellipath and Knowledge Industries continued to produce successful probabilistic expert systems based on Bayesian networks. With these results and the successful work of many others mostly in the UAI community, AI was fully embracing probability.

As luck would have it, these efforts brought Eric, Jack, and me to Microsoft. While finishing my Ph.D. and M.D., I got accepted as an assistant professor at UCLA, my dream job. Two weeks after finishing, I got a call from Nathan Myhrvold, a friend of mine from high school. He asked that we meet, ostensibly to catch up, and invited me to join him at Spago, a fancy restaurant in Beverly Hills (which seemed a bit odd). When I arrived, he told me about his work at Cambridge with Stephen Hawking and his rise to vice-president at Microsoft having overseen version 2 of Windows. He then popped the question: “I read your dissertation and want you to come to Microsoft to apply your work to problems there.” Having just landed my dream job, I said, “No way, but you can buy Knowledge Industries.” Several months later, he did just that: an acqui-hire of Jack, Eric, and me.

3 Decision making and causal reasoning

Much of the work I did at Microsoft involved methods for decision making and its close cousin, causal reasoning. In this section, I introduce some basic concepts in these areas. In the following section, I cover some of my work in detail.

3.1 Decision theory, influence diagrams, and decision trees

Using probabilities to be precise about one’s uncertainties is important. It allows us to be precise in communicating our uncertainties to others. It also helps us make good decisions. To the second point, decision theory, developed in the middle of the twentieth century, provides a formal recipe for how to use subjective probability to make decisions. By the way, please don’t let the phrase “decision theory” scare you. As you will see, it is an extremely simple and intuitive theory—in essence, it is just common sense made precise.

Let’s start with an example to introduce the basics. Suppose we are the decision maker (so I don’t have to keep saying “decision maker”) and are deciding whether to have a party outdoors or indoors on a given day where the weather can be sunny or rainy. We can depict this decision problem using a graphical model known as an influence diagram, introduced by Ron Howard, my PhD advisor, and Jim Matheson [48]. The influence diagram for this party problem is shown in Figure 9.

In general, an influence diagram always contains three types of nodes, corresponding to three types of variables. Each type of variable, like any other variable, has a set of mutually exclusive values. In our example, there is one node/variable of each type. The square node Location corresponds to a decision variable. Each value of a decision variable corresponds to a possible action or alternative—in this example, indoors and outdoors. Other names that have been used for the value of a decision variable include “act” and “intervention.” The oval node Weather corresponds to an uncertainty variable (the type of variable seen in DAG models). In this example, let’s suppose this variable has only two relevant values: sunny or rainy. Finally, every influence diagram contains exactly one deterministic outcome node corresponding to the outcome variable. The values of the outcome variable correspond to all possible outcomes—that is, all combinations of alternatives and possible values of the uncertainty variables. In this example, the possible outcomes are (1) an outdoor sunny party, (2) an outdoor rainy party, (3) an indoor sunny party, and (4) an indoor rainy party.

An influence diagram can include multiple decision nodes, and can represent observations of uncertainty nodes interleaved with the making of individual decisions. The latter capability is important to the process of making real-world decisions, but is not central to the connection between decision making and causal reasoning. Consequently, to keep this presentation simple, I will assume that all decisions are made up front, before any uncertainty nodes are observed. For details on interleaving observations with decisions, see the discussion of “informational arcs” in [48].

As in a DAG model, the lack of arcs pointing to uncertainty variables encodes assertions of conditional independence. In particular, given an ordering over decision and uncertainty variables, Equation 4 holds for each uncertainty variable, but now parent variables can include decision variables. Also, based on our assumption that decisions are made up front, the decision variables appear first in the variable ordering. In our party problem, the lack of an arc from Location to Weather corresponds to the assertion that the weather does not depend on our decision about the location of the party. Also as in a DAG model, an uncertainty variable in an influence diagram is associated with a local (subjective) probability distribution for each set of values of the variable’s parents. In our example, Weather has no parents, so this variable has only one distribution that encodes our prior beliefs that it will be rainy or sunny. Together, the assertions of independence and the local probability distributions define a joint distribution for the uncertainty variables conditioned on all possible alternatives.

The single outcome variable in an influence diagram encodes our preferences for the various outcomes. In particular, associated with each possible outcome is a number known as a utility. The larger the number, the more desirable the outcome. Utilities range between 0 and 1 (we will see why in a moment). That said, if the utilities are transformed linearly, the choice of the best alternative remains the same.

Decision theory says the decision maker should choose the alternative with the highest expected utility, where expectation is taken with respect to the decision maker’s joint distribution over uncertainty variables given each of the possible alternatives. In the party problem, expectation is taken with respect to $p(\text{weather})$, which we assert does not depend on party location. Based on the utilities in Figure 9, the expected utility of the outdoor location is 0.7; the expected utility of the indoor location is 0.8; so, we should choose the indoor alternative. This principle is generally known as the principle of maximum expected utility or MEU principle.
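The MEU calculation for the party problem can be sketched in a few lines. Figure 9 is not reproduced here, so the probability of sunny weather and the four utilities below are assumptions, chosen only because they reproduce the expected utilities of 0.7 and 0.8 quoted above.

```python
# Assumed inputs (not taken from Figure 9): a prior over the weather and a
# utility for every combination of location and weather.
p_weather = {"sunny": 0.7, "rainy": 0.3}
utility = {
    ("outdoors", "sunny"): 1.0,
    ("outdoors", "rainy"): 0.0,
    ("indoors", "sunny"): 0.8,
    ("indoors", "rainy"): 0.8,
}

def expected_utility(location):
    """Average the utility of a location over the weather distribution."""
    return sum(p * utility[(location, w)] for w, p in p_weather.items())

alternatives = ("outdoors", "indoors")
eu = {location: expected_utility(location) for location in alternatives}
choice = max(alternatives, key=expected_utility)
print(eu, choice)
```

Because the weather distribution does not depend on the location, the same `p_weather` is used for both alternatives. If Location had an arc into Weather, each alternative would need its own distribution.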

Decision problems can also be represented with another type of graphical model known as a decision tree. This graphical model should not be confused with the “decision tree” used in the machine-learning community. Unfortunately, the two communities chose the same name for these rather different representations. When I use the term “decision tree,” I will make it clear which one I am talking about. Figure 10a shows the party problem depicted as a decision tree.

As in the influence diagram, a square node represents a decision variable, but now the variable’s values are drawn as edges emerging from the node. To the right of the decision variable come the uncertainty variables and possibly additional decision variables. In this case, there is just one uncertainty variable and no additional decision variables. Finally, utilities of the various outcomes are shown at the far right of the decision tree. Note that, in this case, the decision tree can be simplified, because the utility of the indoor location does not depend on the weather. The simplified decision tree is shown in Figure 10b.

When I first learned of influence diagrams (and decision trees) and the MEU principle, two questions immediately came up in my mind: What are these mysterious utilities? And what justifies the use of the MEU principle to choose the best alternative? In 1947, von Neumann and Morgenstern provided a very elegant answer to both questions. In a manner similar to Cox, von Neumann and Morgenstern introduced several properties that, if followed by a decision maker, define utilities and imply the MEU principle. Here, I’ll present a slightly different version of their argument given by my PhD advisor, Ron Howard [46]. I’ll use decision trees to present his argument and assume the rules of probability are in force.

Property 1 (orderability): Given any two outcomes, $o_1$ and $o_2$, a decision maker can say whether they prefer $o_1$ to $o_2$, prefer $o_2$ to $o_1$, or are indifferent between the two, which I will write $o_1 \succ o_2$, $o_2 \succ o_1$, and $o_1 \sim o_2$, respectively. In addition, given any three outcomes $o_1$, $o_2$, and $o_3$, if $o_1 \succ o_2$ and $o_2 \succ o_3$, then $o_1 \succ o_3$. That is, preferences are transitive. If preferences were not transitive, a decision maker could be taken advantage of. For example, if they have the non-transitive preferences $o_1 \succ o_2$, $o_2 \succ o_3$, and $o_3 \succ o_1$, then they would pay money to get $o_2$ rather than $o_3$, and again pay money to get $o_1$ rather than $o_2$, and again to get $o_3$ rather than $o_1$. So, they started and ended up with $o_3$, but with less money. This observation illustrates an important point about this property and the other properties that we will consider. Namely, the properties are “normative” or “prescriptive,” that is, properties that a decision maker should follow. It is well known, however, that people often do not follow these properties when making real decisions [78]. Such decision making is sometimes referred to as “descriptive.” Unfortunately, people’s descriptive behavior has been taken advantage of, for example, with unscrupulous forms of advertising.
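The way a decision maker with cyclic preferences can be exploited is easy to simulate. This is a minimal sketch under assumptions: the outcome labels, the fee per trade, the starting wealth, and the helper `money_pump` are all hypothetical.

```python
def money_pump(prefers, start_item, offers, fee, wealth):
    """Accept every offered item that is preferred to the held one, paying a fee each time."""
    held = start_item
    for offered in offers:
        if (offered, held) in prefers:
            held = offered
            wealth -= fee
    return held, wealth

# Cyclic (non-transitive) preferences: a pair (a, b) means a is preferred to b.
prefers = {("o1", "o2"), ("o2", "o3"), ("o3", "o1")}

held, wealth = money_pump(prefers, start_item="o3",
                          offers=["o2", "o1", "o3"], fee=1, wealth=10)
print(held, wealth)
```

After three trades the decision maker holds the outcome they started with and has paid the fee three times. Repeating the cycle of offers would drain their wealth further.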

Property 2 (continuity): Suppose there are three outcomes $o^*$, $o$, and $o_*$, such that the decision maker has the preferences $o^* \succ o \succ o_*$. Then the decision maker has some probability $p$ (with $0 < p < 1$) such that they are indifferent between getting $o$ for certain and getting a chance at the better versus worse outcome with probability $p$. The situation is shown in the decision problem of Figure 11. As is the case with probability assessment in general, it is impossible in practice to assess $p$ with infinite precision, and such imprecision can be managed with sensitivity analysis.

Now consider the rather general decision problem shown in Figure 12a. Here, there are two alternatives and, for each alternative, a set of possible outcomes that will occur with the probabilities shown. As we will see, this decision problem is sufficiently general to make the argument. Given this decision problem, the first task of the decision maker is to identify the best and worst outcome, again denoted $o^*$ and $o_*$, respectively. Then, for each possible outcome, the decision maker uses the continuity property to construct an equally preferred chance at getting the best versus worst outcome. The following property says that the decision maker can substitute this chance for the original outcome in the original decision problem, yielding the equivalent decision problem in Figure 12b.

Property 3 (substitutability): If a decision maker is indifferent between two alternatives in one decision problem, then they can substitute one for the other in another decision problem.

The resulting decision problem is simply a set of chances at the best versus worst outcome. The next property says that the decision maker can collapse these chances into one chance at the best versus worst outcome using the rules of probability.

Property 4 (decomposability): Given multiple chances at outcome $o^*$ versus $o_*$, where the $i$th chance arises with probability $q_i$ and yields $o^*$ with probability $p_i$, the decision maker should equally prefer this situation to a single chance at outcome $o^*$ versus $o_*$ with probability $\sum_i q_i p_i$. Ron calls this property the “no fun in gambling” property.

Given this property, the decision maker now has the equivalent decision problem shown in Figure 12c. The final property describes how the decision maker should make this decision.

Property 5 (monotonicity): Given two alternatives $a_1$ and $a_2$, each a chance at two outcomes $o^* \succ o_*$, the decision maker should prefer the alternative $a_1$ if and only if the probability of getting $o^*$ in $a_1$ is higher than the probability of getting $o^*$ in $a_2$.

Applying this property to the decision problem in Figure 12c yields the MEU principle, where the utility of an outcome is the probability $p$ of the equally preferred chance at outcome $o^*$ versus $o_*$. In addition, given the substitutability and decomposability properties, the decision problem in Figure 12a is sufficiently general to address any decision problem with two alternatives. Finally, there is a straightforward generalization of this argument to a decision problem with any number of alternatives.
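The reduction from Figure 12a to Figure 12c can be sketched as a short computation. This is an illustration under assumptions rather than Howard's presentation: each alternative is written as a list of hypothetical pairs, where the first entry is the probability that an outcome occurs and the second is the assessed indifference probability for that outcome, and the function names are made up.

```python
def collapse(alternative):
    """Decomposability: reduce a set of chances at best versus worst to a single chance."""
    total = sum(q for q, _ in alternative)
    if abs(total - 1.0) > 1e-9:
        raise ValueError("outcome probabilities must sum to 1")
    return sum(q * p for q, p in alternative)

def prefer(alt_a, alt_b):
    """Monotonicity: prefer the alternative with the higher chance at the best outcome."""
    chance_a, chance_b = collapse(alt_a), collapse(alt_b)
    if chance_a > chance_b:
        return "a"
    if chance_b > chance_a:
        return "b"
    return "indifferent"

# Hypothetical alternatives: (probability of outcome, indifference probability).
alt_a = [(0.5, 1.0), (0.5, 0.2)]
alt_b = [(0.2, 0.9), (0.8, 0.4)]

print(collapse(alt_a), collapse(alt_b), prefer(alt_a, alt_b))
```

The collapsed chance is exactly the expected value of the indifference probabilities, which is why treating those probabilities as utilities and maximizing their expectation gives the same choice.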

In essence, these properties allow a decision maker to boil down any decision problem into a series of simple decision problems like the one in Figure 11. Note that this simple decision problem has the same form as the party problem. In the party problem, the decision maker is asked to assess utilities and apply the MEU principle to choose. The clever trick from von Neumann and Morgenstern is to recast this problem into one of choosing a probability of sunny that makes the alternatives outdoors and indoors equally preferable.

When I first saw this argument, I thought to myself, “This is the most elegant and practical application of math I have ever seen.” Both the assumptions and the proof of the MEU principle are simple, and the result is a tool that just about anyone can use to improve their decision making. This realization came to mind because my father was a high school math teacher and was always looking for practical applications of math to show his students. By the time I saw this proof, he had already retired, but nevertheless, we were both excited to discuss it.

To close this section, it’s worthwhile to comment on the two rather different graphical models for decision problems. The decision tree more naturally depicts the flow of time and the display of probabilities and utilities. Also, it allows the explicit depiction of any asymmetries in a decision problem, as we see in our example. In contrast, an influence diagram is typically more compact and, as mentioned, enables the explicit representation of probabilistic independencies. We will see examples of this benefit later. Given these tradeoffs, both representations are still in use.

3.2 Proper scoring rules

There is a special class of utility that is worth mentioning: proper scoring rules. To motivate this class of utility, imagine you operate a company that invests in new and upcoming businesses. You are constantly making high-stakes decisions and sometimes need probabilities (or probability densities) from experts that various possible outcomes will occur, such as whether a particular piece of legislation in Washington is going to pass. You have access to experts, and want to be sure that the probabilities you get from them are “honest.” One way to do this is to frame the expert as a decision maker who is deciding what distribution to report over some variable $X$, and then receiving a utility for that report based on the value of $X$ that is eventually observed. The utility they receive should be a function of (1) every possible observed value $x$ of $X$ and (2) the expert’s reported distribution $q(x)$ for every possible value of $X$. Let’s denote this utility function as $u(x, q)$. For the reported probability distribution to be honest, we want the expert’s expected utility to be a maximum when $q$ is equal to the expert’s actual probability distribution $p$. That is, we want $\int p(x)\, u(x, q)\, dx$ to be a maximum when $q = p$. (Here, I am using $\int$ generally; it can be a sum when $X$ is discrete.) When this condition holds, $u$ is said to be a proper scoring rule.

One proper scoring rule is the log scoring rule: $u(x, q) = \log q(x)$; that is, $u$ is the logarithm of $q(x)$, where $x$ is the value of $X$ that is actually observed. It is easy to check that expected utility is maximized when $q = p$. What is rather remarkable, however, is that the log scoring rule is the only proper scoring rule (up to linear transformation) when (1) $X$ takes on three or more states, (2) $u$ is local in the sense that it only depends on $q$ at the observed value of $X$, and (3) certain regularity conditions hold [7, 8]. The concept of a proper scoring rule will come in handy in Section 4.1, where we consider learning graphical models from data.
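A quick numerical check illustrates why the log scoring rule rewards honesty. The sketch below is only an illustration: the expert's beliefs and the alternative reports are made-up numbers, and comparing a handful of reports is a spot check, not a proof.

```python
import math

def expected_log_score(beliefs, report):
    """Expected utility of a report under the log scoring rule, given the expert's beliefs."""
    return sum(b * math.log(r) for b, r in zip(beliefs, report) if b > 0)

# Hypothetical beliefs over a three-state variable and some candidate reports.
beliefs = [0.6, 0.3, 0.1]
reports = {
    "honest": [0.6, 0.3, 0.1],
    "hedged": [0.4, 0.4, 0.2],
    "overconfident": [0.9, 0.05, 0.05],
}

scores = {name: expected_log_score(beliefs, r) for name, r in reports.items()}
best = max(scores, key=scores.get)
print(scores, best)
```

The honest report has the highest expected score. The general result follows from the fact that the gap between the honest score and any other score is a Kullback-Leibler divergence, which is never negative.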

3.3 Correlation versus causation and a definition of causality

It’s tempting to sum up the difference between a purely probabilistic DAG model and an influence diagram by viewing the latter as merely the former with decisions and utilities “tacked on.” Nonetheless, there is something fundamentally different about them. In particular, an influence diagram can express causal relationships among its variables, whereas a probabilistic graphical model strictly represents correlational or acausal relationships among its variables.

Ironically, Howard and Matheson have been reluctant to associate their elegant representation for decision problems with any notion of causality. Note the label “influence diagrams” rather than “causal diagrams.” This reluctance had also been prominent in the statistics community until pioneers including Peter Spirtes, Clark Glymour, Richard Scheines, Jamie Robins, and Judea Pearl began their relentless work to change that.

Perhaps one source of reluctance was the lack of a clear definition of cause and effect. Even in his recent work [65], Judea describes the notion of cause and effect as a primitive, like points and lines in geometry. Personally, I think causality should not be left as a primitive, but can and should be defined in more basic terms. Before I go any further, allow me to take a crack at this.

Let’s start with a simple example: colliding billiard balls. When a moving ball strikes another, we naturally say that the collision “caused” the subsequent motion. (At a party in the mid-1980s for Ted Shortliffe’s research group, I was playing Pictionary. Eric Horvitz, my game partner, drew a picture of a billiard table with a pool cue about to strike a ball. I immediately guessed the answer: “causality.” The other players were astounded. Eric and I think a lot alike.) This easily generalizes. When we say, “event x causes event y,” we mean that there are physical interactions, via the forces of nature, that relate event x to event y. Note that this definition may extend to quantum interactions. Current experimental results involving quantum entanglement, perhaps the oddest of quantum interactions, suggest two possibilities. One is that special relativity holds and thus there is correlation without causation, which is extremely strange [29, 28]. The other is that special relativity does not hold and a quantum signal that governs the entanglement is propagating faster than the speed of light. Under this second possibility, my definition still applies. Unfortunately, it is quite possible that we may never know which possibility holds. That said, to me, the concepts in Newtonian physics and classical chemistry are adequate to describe almost all causal relationships that have practical relevance.

With this definition, it becomes clear that reasoning about actions and their consequences is tantamount to causal reasoning. For example, my decision to hold the party outdoors leads to me writing invitations, which in turn are read by my guests, who then follow those instructions and show up at my house—all happening via forces of nature. Note that, although there are many definitions of cause and effect, there is general agreement on how to test for the existence of a causal effect and to quantify its strength: the randomized trial. We examine this test in Section 4.5.

3.4 Fully causal models and the force/set/do decision

An influence diagram can represent both causal and acausal relationships. In the party problem, suppose that the decision maker can check a weather forecast before making the location decision. The updated influence diagram (structure only) is shown in Figure 13. Here, the relationship from Location and Weather to Outcome is causal, whereas the relationship between Weather and Forecast need only be considered in terms of probabilities. Of course, we could model a causal relationship between Weather and Forecast by introducing other variables such as the current weather and its time derivatives at nearby locations, but doing so is not needed to decide on the location of the party. To make the decision, only the probabilistic relationship between Weather and Forecast matters. For a detailed discussion of the flexibility of influence diagrams to represent both causal and acausal relationships, see [43] [10.1613/jair.202].

In contrast, many researchers working on the theory of causal inference focus on models where every arc is causal. In addition, they don’t represent explicit decisions, but rather reason about decisions that are implicitly defined by the domain variables. In particular, for every domain variable, they imagine a decision with alternatives that either force the variable to take on a particular value, or take no action and simply observe the variable. I call this framework a fully causal framework and a model therein a fully causal model.

The fully causal framework lies strictly within the decision-theoretic framework. In particular, a fully causal model within this framework can be represented by an influence diagram in a straightforward manner. As an example, consider the fully causal model for the assertions “$x$ causes $y$” and “$y$ causes $z$”. The model structure can be drawn in simple form as a DAG on the domain variables as shown in Figure 14a. It can also be represented by the influence-diagram structure shown in Figure 14b.

First, let’s look at the decision variables in this influence diagram. To be concrete, consider the decision variable force($x$); similar remarks apply to force($y$) and force($z$). One value of the decision variable force($x$) is “do nothing”, whereby $x$ is observed rather than manipulated. Other values of force($x$) correspond to $x$ taking on one of its possible values, regardless of the values of $x$’s parents in the graph. Pratt and Schlaifer [49] describe this action as “forcing” $x$ to take on some value. Note the connection between the verb “force” and the noun “force” in my definition of causality. Spirtes, Glymour, and Scheines also describe this type of action, calling it a “manipulation” in [75] and “set” in various other writings. Pearl calls it a “do operator” and uses the notation “do($x$).” In addition, he sometimes talks about ignoring its parents as graph surgery [64, 65].

Now let’s look at the remaining variables. Each observed variable ($x$, $y$, and $z$) is a deterministic function of its corresponding force/set/do decision and its corresponding uncertainty variables ($\epsilon_x$, $\epsilon_y$, and $\epsilon_z$) that encode the uncertainties in the problem. When force($x$) takes on the value “do nothing,” then $x$ is determined by $\epsilon_x$ and any parents of $x$ in the graph. When, for example, force($x$) takes on the value “force $x$ to value $x_0$,” then $x = x_0$, as described in the previous paragraph. Typically, as depicted in the figure, we assume these uncertainty variables are mutually independent, reflecting the modularity of causal interactions (which I will discuss shortly). As for the outcome variable, I’ve omitted it, because here we are not concerned with making the actual decisions, but rather representing the relationships among the variables.
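The role of the force/set/do decisions can be sketched with a small deterministic model. Everything specific here is an assumption made for illustration: the three binary variables are arranged in a chain (the first causes the second, which causes the third), the mechanisms are exclusive-or functions, and the names `evaluate`, `DO_NOTHING`, and `eps` are hypothetical.

```python
DO_NOTHING = "do nothing"

def evaluate(force, eps):
    """Compute x, y, z for an assumed chain x -> y -> z with binary values.

    force maps each variable to DO_NOTHING or to the value it is forced to take.
    eps maps each variable to the value of its uncertainty variable.
    """
    # Hypothetical mechanisms: each variable is a deterministic function of its
    # parent (if any) and its own uncertainty variable.
    mechanisms = {
        "x": lambda v: eps["x"],
        "y": lambda v: v["x"] ^ eps["y"],
        "z": lambda v: v["y"] ^ eps["z"],
    }
    values = {}
    for name in ("x", "y", "z"):
        if force[name] == DO_NOTHING:
            values[name] = mechanisms[name](values)
        else:
            # A forced variable ignores its parents and its uncertainty variable.
            values[name] = force[name]
    return values

eps = {"x": 1, "y": 0, "z": 0}
observe_all = {"x": DO_NOTHING, "y": DO_NOTHING, "z": DO_NOTHING}
force_y = {"x": DO_NOTHING, "y": 0, "z": DO_NOTHING}

print(evaluate(observe_all, eps))
print(evaluate(force_y, eps))
```

Forcing the middle variable changes the downstream variable but leaves the upstream one untouched, which is the asymmetry that distinguishes manipulation from observation in this sketch.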

The influence diagram for a fully causal model not only represents causal interactions, it also represents assertions of conditional independence. For example, given the fully causal model in Figure 14b, when we set all force/set/do decisions to “do nothing” and absorb the uncertainties into the observable variables, we end up with a probabilistic DAG model with the same independence semantics as the model in simple form (Figure 14a) interpreted as a probabilistic model. In general, a fully causal model implies the same assertions of conditional independence as those implied by its simple form interpreted as an acausal model. This statement is sometimes called the causal Markov assumption [75] and, as we shall see, is important for learning causal models from observational data.

The fully causal framework makes certain forms of causal reasoning accessible to simple mathematical treatment (see, e.g., the section in [65] starting on p.231). In addition, when creating a model within this framework, a person need not specify up front whether a variable is manipulated or observed. That said, it’s important to note that force/set/do decisions are not the norm in real life. A classic example where a decision is intended to be a force/set/do decision, but isn’t, is making a wish to a magic genie:

Me: “Genie, I wish to be rich.”

Genie: “Your wish is granted. I killed your aunt, and you will now inherit her fortune.”

Me: “No, that’s not what I meant!”

The genie acts to increase the value of my bank account, but his actions cause other changes that I did not intend. (When I was very young, I watched The Twilight Zone television series, which left a deep impression on me. Season 2, episode 2 has a variation on the magic-genie theme. The protagonist wishes to be all-powerful, and the genie turns him into Hitler in his final moments in the bunker, which I found very disturbing. When I first heard of the force/set/do decision, I couldn’t help but think of this episode.) Nonetheless, there are occasional situations where force/set/do decisions can be realized in practice (see Sections 4.4 and 4.5).

3.5 The modularity of cause–effect relationships

A useful property of cause–effect relationships is that they tend to be modular. To illustrate this point, consider a clinical trial to test the efficacy of drugs in lowering cholesterol. A reasonable causal model structure is as follows:

drug offered → drug taken → cholesterol level

Now, suppose there are two components or “arms” of the trial, each testing a different drug. In this situation, it is quite possible that the relationship between drug taken and cholesterol level will be different in the two arms. In contrast, assuming the side effects of the two drugs are the same, it is reasonable to assume that the relationship between drug offered and drug taken is the same in both arms. In this case, the causal interactions involving a person’s proclivity to take a drug when asked to do so are the same for the two arms of the trial.

It is interesting to speculate that the modularity of causal reasoning has a potential survival advantage for humans. Humans excel above all other creatures in large part because they can make good decisions. As we’ve just discussed, causal reasoning is a key component of decision making, and the modularity of causal relationships allows this reasoning to be more easily executed in the human brain. Furthermore, the high-level process of decision making is itself modular—we decompose the process into selecting alternatives, specifying uncertainty in the face of interventions, and specifying preferences for the possible outcomes. This additional modularity further facilitates the execution of decision making in the brain. Furthermore, the ability to imagine possible alternatives necessarily requires at least the perception of free will. Whether we actually have free will is a matter of debate. Sadly, experimental evidence suggests it is an illusion [32]. Ross Shachter and I discuss these points in more detail in [72] [10.1145/3501714.3501756].

One final note: individual decision making is better than group decision making. Specifically, a single decision maker has a set of beliefs and a set of preferences that prescribe an optimal decision. This is not the case for groups. One merely needs to experience a group trying to decide where to go out to eat or witness the decision paralysis of political bodies to appreciate this point. (Also, see Arrow’s impossibility theorem [3].) Going down the rabbit hole one step further, scientific investigations suggest that human thought proceeds as a collection of subconscious processes that feed into a sense of a single, conscious “I” (e.g., [32]). If these processes did not feed into a single “I,” then decision making would suffer in a manner similar to that of group decision making, leading to a survival disadvantage. Thus, it is not too surprising that the sense of a single, conscious “I” would emerge through evolution. I’m not suggesting that a single “I” must be conscious to have this advantage, but it seems clear that if evolution were to produce such a conscious “I,” then it would have a survival advantage.

4 Learning graphical models from data

Around 1988, as work on and applications of probabilistic expert systems were in full swing, Ross Shachter and I were sitting in his office one afternoon, brainstorming about what should come next. We came up with the idea of starting with a Bayesian network for some task and using data to improve it. Neither of us ran with the idea, but several years later, Cooper and Herskovits wrote a seminal paper on the topic [18]. The work was very promising. When I got to Microsoft Research in 1992, I set out to see how I could contribute. My colleagues and I, especially Dan Geiger, Max Chickering, and Chris Meek, managed to make a lot of progress over the next decade (e.g., [39] [10.1023/A:1022623210503] and [17] [10.5555/1005332.1044703]). In this section, I highlight many of the fundamental concepts that emerged from this work and, as usual, provide an index to the key papers.

Imagine we have data for a set of variables (the domain) and would like to learn one or more graphical models from that data, that is, identify one or more graphical models that are implicated by the data. The data we have may be purely observational or a mix of interventions and observations. Two rather different approaches have been developed over the last four decades to do so. In the first approach, frequentist methods are used to test for the existence of various conditional independencies among the variables. Then, graphs consistent with those independencies are identified. In the second approach, a Bayesian approach, we build a prior distribution over possible graph structures and prior distributions over the parameters of each of those structures. We then use the rules of probability to update these priors, yielding a posterior distribution over the possible graph structures and their parameters. We can then average over these Bayesian networks, using their posterior probabilities, to make predictions or decisions.

Here, I will focus on the Bayesian approach, frankly, because it is what I have worked on for many years. That said, the reason I have worked on the approach for so many years is that it is principled. In contrast, the frequentist approach requires a rather arbitrary threshold to test for independence. I will start with the task of learning acausal DAG models (where we just care about the expression of independence assertions) and then move to learning fully causal models and influence diagrams.

4.1 Learning an acausal DAG model for one finite variable

To begin a discussion of learning acausal DAG models, let’s look at the simplest possible problem: learning a DAG model for a single binary variable $X$ with observational data only. To be concrete, let’s return to the problem of flipping a thumbtack, which can come up heads (denoted $h$) or tails (denoted $t$). We flip the thumbtack $N$ times, under the assumption that the physical properties of the thumbtack and the conditions under which it is flipped remain stable over time, yielding data $D = \{X_1 = x_1, \ldots, X_N = x_N\}$. From these observations, we want to determine the probability of (say) heads on the $(N+1)$th toss. As we have discussed, there is some unknown frequency of heads. We could use $f(\text{heads})$ to denote this frequency, but to avoid the accumulation of parentheses, let’s use $\theta_h$ instead (and similarly $\theta_t$). In the context of learning graphical models from data, $\theta_h$ and $\theta_t$ are often referred to as parameters for the variable $X$. In addition, I will use $\theta_x$ to apply to the case where $x$ is either $h$ or $t$.

As we are uncertain about $\theta_h$, we can express this uncertainty with a (Bayesian) probability distribution (in this case, a probability density function) $p(\theta_h)$. The independence relationships among the variables $\theta_h$ and $X_1, \ldots, X_{N+1}$ can be described by the Bayesian network shown on the left-hand side of Figure 15. Namely, as we discussed at the end of Section 2.1, given $\theta_h$, the values for the observations are mutually independent. The right-hand side of Figure 15 is the same model depicted in plate notation, first described by [12]. Plates depict repetition in shorthand form. Anything inside the plate (i.e., the box) is repeated over the index noted inside the plate.

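Learning the parameter for the one-node Bayesian network now boils down to determining the posterior distribution $p(\theta_h \mid D)$ from $D$ and the prior distribution $p(\theta_h)$. From the rules of probability, we have

$$p(\theta_h \mid D) = \frac{p(D \mid \theta_h)\, p(\theta_h)}{p(D)} \tag{14}$$

where

$$p(D) = \int p(D \mid \theta_h)\, p(\theta_h)\, d\theta_h.$$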

The quantity $p(D)$ is sometimes called the marginal likelihood of the data. As we will see, this quantity is important for inferring posterior probabilities of model structures. From the independencies in Figure 15, we have

$$p(D \mid \theta_h) = \prod_{i=1}^{N} p(x_i \mid \theta_h),$$

where each term in the product is $\theta_h$ when $x_i$ is heads and ($1 - \theta_h$) when $x_i$ is tails. Both Bayesians and frequentists agree on this term: it is the likelihood function for Bernoulli sampling. Equation 14 becomes

$$p(\theta_h \mid D) = \frac{\theta_h^{N_h} (1 - \theta_h)^{N_t}\, p(\theta_h)}{p(D)} \tag{15}$$

where $N_h$ and $N_t$ are the number of heads and tails observed in $D$, respectively. The quantities $N_h$ and $N_t$ are sometimes called sufficient statistics, because they provide a summarization of the data that is sufficient to describe the data under the assumptions of their generation.
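To make the role of the sufficient statistics concrete, here is a minimal Python sketch. The function names and the encoding of flips as the strings "h" and "t" are my own assumptions for illustration. It shows that two data sets with the same counts of heads and tails, but in different orders, yield the same Bernoulli likelihood.

```python
from collections import Counter

def sufficient_statistics(flips):
    """Return (number of heads, number of tails) for a sequence of 'h'/'t' flips."""
    counts = Counter(flips)
    return counts["h"], counts["t"]

def bernoulli_likelihood(flips, theta_h):
    """Likelihood of the flips given the frequency of heads theta_h.

    One factor of theta_h per head and (1 - theta_h) per tail, so the
    result depends on the data only through the two counts.
    """
    n_h, n_t = sufficient_statistics(flips)
    return theta_h ** n_h * (1.0 - theta_h) ** n_t

# Two hypothetical data sets: same counts, different orderings.
data_a = ["h", "h", "t", "h", "t"]
data_b = ["t", "h", "t", "h", "h"]

print(sufficient_statistics(data_a))
print(bernoulli_likelihood(data_a, 0.6), bernoulli_likelihood(data_b, 0.6))
```

The ordering of the flips never enters the computation, which is why the counts alone suffice.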

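Now that we have the posterior distribution for $\theta_h$, we can determine the probability that the $(N+1)$th toss of the thumbtack will come up heads (denoted $X_{N+1} = h$):

$$p(X_{N+1} = h \mid D) = \int p(X_{N+1} = h \mid \theta_h)\, p(\theta_h \mid D)\, d\theta_h = \int \theta_h\, p(\theta_h \mid D)\, d\theta_h \equiv E_{p(\theta_h \mid D)}(\theta_h),$$

where $E_{p(\theta_h \mid D)}(\theta_h)$ denotes the expectation of $\theta_h$ with respect to the distribution $p(\theta_h \mid D)$. The probability that the $(N+1)$th toss comes up tails is simply

$$p(X_{N+1} = t \mid D) = 1 - E_{p(\theta_h \mid D)}(\theta_h).$$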

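To complete the Bayesian story for this example, we need a method to assess the prior distribution over $\theta_h$. A common approach, often adopted for convenience, is to assume that this distribution is a beta distribution:

$$p(\theta_h) = \mathrm{Beta}(\theta_h \mid \alpha_h, \alpha_t) \equiv \frac{\Gamma(\alpha)}{\Gamma(\alpha_h)\,\Gamma(\alpha_t)}\, \theta_h^{\alpha_h - 1} (1 - \theta_h)^{\alpha_t - 1},$$

where $\alpha_h$ and $\alpha_t$ are the parameters of the beta distribution, $\alpha = \alpha_h + \alpha_t$, and $\Gamma(\cdot)$ is the Gamma function, which satisfies $\Gamma(x + 1) = x\,\Gamma(x)$ and $\Gamma(1) = 1$. The quantities $\alpha_h$ and $\alpha_t$ are often referred to as hyperparameters to distinguish them from the parameter $\theta_h$. For the beta distribution, the hyperparameters $\alpha_h$ and $\alpha_t$ must be greater than zero so that the distribution can be normalized. Examples of beta distributions are shown in Figure 16.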

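The beta prior is convenient for several reasons. By Equation 15, the posterior distribution will also be a beta distribution:

$$p(\theta_h \mid D) = \mathrm{Beta}(\theta_h \mid \alpha_h + N_h, \alpha_t + N_t) \tag{16}$$

We say that the set of beta distributions is a conjugate family of distributions for binomial sampling. In general, when both the prior and posterior live in the same distribution family for a particular type of sampling, we say that distribution family is conjugate for that type of sampling. Also, the expectation of $\theta_h$ with respect to this distribution has a simple form:

$$\int \theta_h\, \mathrm{Beta}(\theta_h \mid \alpha_h, \alpha_t)\, d\theta_h = \frac{\alpha_h}{\alpha}.$$

So, given a beta prior, we have a simple expression for the probability of heads in the $(N+1)$th toss:

$$p(X_{N+1} = h \mid D) = \frac{\alpha_h + N_h}{\alpha + N} \tag{17}$$

Note that, for very large $N$ with $N \gg \alpha$, the posterior distribution over $\theta_h$ is sharply peaked around $N_h / N$, the fraction of heads observed. Finally, the marginal likelihood becomes

$$p(D) = \frac{\Gamma(\alpha)}{\Gamma(\alpha + N)} \cdot \frac{\Gamma(\alpha_h + N_h)}{\Gamma(\alpha_h)} \cdot \frac{\Gamma(\alpha_t + N_t)}{\Gamma(\alpha_t)}.$$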
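The conjugate update, the predictive probability of heads, and the marginal likelihood can all be computed from the hyperparameters and the counts alone. The following Python sketch illustrates this under the beta-prior assumption. The function names are hypothetical, and I work with the logarithm of the marginal likelihood (via the standard library's `math.lgamma`) to avoid overflow of the Gamma function for larger data sets.

```python
from math import exp, lgamma

def beta_posterior(alpha_h, alpha_t, n_h, n_t):
    """Hyperparameters of the beta posterior: add the counts to the prior."""
    return alpha_h + n_h, alpha_t + n_t

def prob_next_heads(alpha_h, alpha_t, n_h, n_t):
    """Probability that the next toss is heads: (alpha_h + N_h) / (alpha + N)."""
    return (alpha_h + n_h) / (alpha_h + alpha_t + n_h + n_t)

def log_marginal_likelihood(alpha_h, alpha_t, n_h, n_t):
    """Log of the marginal likelihood of the observed sequence under a beta prior."""
    alpha = alpha_h + alpha_t
    n = n_h + n_t
    return (lgamma(alpha) - lgamma(alpha + n)
            + lgamma(alpha_h + n_h) - lgamma(alpha_h)
            + lgamma(alpha_t + n_t) - lgamma(alpha_t))

# Illustrative numbers (assumed): a Beta(2, 2) prior, then 3 heads and 2 tails.
print(beta_posterior(2.0, 2.0, 3, 2))                 # posterior hyperparameters
print(prob_next_heads(2.0, 2.0, 3, 2))                # equals 5/9
print(exp(log_marginal_likelihood(2.0, 2.0, 3, 2)))   # equals 3/140
```

With these numbers the marginal likelihood is $\Gamma(4)/\Gamma(9) \cdot \Gamma(5)/\Gamma(2) \cdot \Gamma(4)/\Gamma(2) = 3/140$, which the sketch reproduces.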

Assuming $p(\theta_h)$ is a beta distribution, it can be assessed in a number of ways. For example, we can assess our probability for heads in the first toss of the thumbtack (e.g., using a probability wheel). Next, we can imagine having seen the outcomes of $k$ flips and reassess our probability for heads in the next toss. From Equation 17, we have (for $k = 1$):

$$p(X_1 = h) = \frac{\alpha_h}{\alpha_h + \alpha_t}, \qquad p(X_2 = h \mid X_1 = h) = \frac{\alpha_h + 1}{\alpha_h + \alpha_t + 1}.$$

Given these probabilities, we can solve for $\alpha_h$ and $\alpha_t$. This assessment technique is known as the method of imagined future data.
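As a sketch of how the two assessed probabilities pin down the hyperparameters, write $p_1$ for the probability of heads on the first toss and $p_2$ for the probability of heads on the second toss after imagining one head. Solving the two equations gives $\alpha = (1 - p_2)/(p_2 - p_1)$ and $\alpha_h = p_1 \alpha$. The Python function below implements this algebra; its name and the input check are my own assumptions.

```python
def beta_from_imagined_data(p_first, p_second_given_head):
    """Solve for (alpha_h, alpha_t) from two assessed probabilities.

    p_first:             probability of heads on the first toss
    p_second_given_head: probability of heads on the second toss,
                         after imagining that the first toss was heads
    """
    # Under a beta prior, imagining a head must raise the probability of heads.
    if not 0.0 < p_first < p_second_given_head < 1.0:
        raise ValueError("need 0 < p_first < p_second_given_head < 1")
    alpha = (1.0 - p_second_given_head) / (p_second_given_head - p_first)
    alpha_h = p_first * alpha
    return alpha_h, alpha - alpha_h

# Illustrative assessment (assumed numbers): 0.5 at first, 0.6 after one imagined head.
alpha_h, alpha_t = beta_from_imagined_data(0.5, 0.6)
print(alpha_h, alpha_t)  # approximately 2 and 2, up to floating-point rounding
```

If the assessed probabilities do not satisfy the check, that is itself a signal that a single beta distribution may not reflect the assessor's beliefs.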

Another assessment method is based on Equation 16. This equation says that, if we start with a Beta(0,0) prior and observe $\alpha_h$ heads and $\alpha_t$ tails, then our posterior (i.e., new prior) will be a Beta($\alpha_h$, $\alpha_t$) distribution. (Technically, the hyperparameters of this prior should be small positive numbers so that $p(\theta_h)$ can be normalized.) If we suppose that a Beta(0,0) prior encodes a state of minimum information (a supposition that remains debated), we can assess $\alpha_h$ and $\alpha_t$ by determining the (possibly fractional) number of observations of heads and tails that is equivalent to our actual knowledge about flipping thumbtacks. Alternatively, we can assess $p(X_1 = h)$, the probability of heads on the first toss, and $\alpha$, the latter of which can be regarded as an effective sample size for our current knowledge. This technique is known as the method of effective samples. Other techniques for assessing beta distributions are discussed in [82] and [15].

Although the beta prior is convenient, it is not accurate for some problems. For example, suppose we think that the thumbtack may have been purchased at a magic shop. In this case, a more appropriate prior may be a mixture of beta distributions, for example,

$$p(\theta_h) = 0.4\, \mathrm{Beta}(\theta_h \mid 20, 1) + 0.4\, \mathrm{Beta}(\theta_h \mid 1, 20) + 0.2\, \mathrm{Beta}(\theta_h \mid 2, 2),$$

where 0.4 is our probability that the thumbtack is heavily weighted toward heads, and likewise 0.4 is our probability that it is heavily weighted toward tails. In effect, we have introduced an additional unobserved or “hidden” variable $H$, whose values correspond to the three possibilities: (1) thumbtack is biased toward heads, (2) thumbtack is biased toward tails, and (3) thumbtack is normal; and we have asserted that $\theta_h$ conditioned on each value of $H$ is a beta distribution. In general, there are simple methods (e.g., the method of imagined future data) for determining whether or not a beta prior is an accurate reflection of one’s beliefs. In those cases where the beta prior is inaccurate, an accurate prior can often be assessed by introducing additional hidden variables, as in this example.
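A mixture-of-betas prior remains easy to update. Each component is updated as an ordinary beta distribution, and each mixture weight is rescaled by the marginal likelihood of the data under that component. The Python sketch below illustrates this. The component hyperparameters (20, 1), (1, 20), and (2, 2) and the function names are assumptions chosen for illustration.

```python
from math import exp, lgamma, log

def log_ml(alpha_h, alpha_t, n_h, n_t):
    """Log marginal likelihood of the counts under a single beta prior."""
    alpha, n = alpha_h + alpha_t, n_h + n_t
    return (lgamma(alpha) - lgamma(alpha + n)
            + lgamma(alpha_h + n_h) - lgamma(alpha_h)
            + lgamma(alpha_t + n_t) - lgamma(alpha_t))

def update_mixture(weights, components, n_h, n_t):
    """Posterior mixture weights and posterior beta components."""
    log_terms = [log(w) + log_ml(a_h, a_t, n_h, n_t)
                 for w, (a_h, a_t) in zip(weights, components)]
    top = max(log_terms)  # subtract the maximum for numerical stability
    unnormalized = [exp(v - top) for v in log_terms]
    total = sum(unnormalized)
    new_weights = [u / total for u in unnormalized]
    new_components = [(a_h + n_h, a_t + n_t) for a_h, a_t in components]
    return new_weights, new_components

def prob_heads(weights, components):
    """Probability of heads on the next toss under a mixture of betas."""
    return sum(w * a_h / (a_h + a_t) for w, (a_h, a_t) in zip(weights, components))

# Assumed prior: biased toward heads, biased toward tails, normal.
weights = [0.4, 0.4, 0.2]
components = [(20.0, 1.0), (1.0, 20.0), (2.0, 2.0)]

post_weights, post_components = update_mixture(weights, components, n_h=10, n_t=0)
print(prob_heads(weights, components))            # prior probability of heads
print(post_weights)                               # weight shifts to the heads-biased component
print(prob_heads(post_weights, post_components))  # probability of heads after 10 heads
```

The posterior weights are the posterior probabilities of the values of the hidden variable, so the sketch also shows how observing flips updates our belief that the thumbtack came from a magic shop.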

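Finally, I note that this approach generalizes to the case where $X$ has $r \geq 2$ values with parameters $\theta_1, \ldots, \theta_r$. In this case, the conjugate distribution for these parameters is the Dirichlet distribution, the generalization of the beta distribution, given by

$$p(\theta_1, \ldots, \theta_r) = \mathrm{Dir}(\theta_1, \ldots, \theta_r \mid \alpha_1, \ldots, \alpha_r) \equiv \frac{\Gamma(\alpha)}{\prod_{k=1}^{r} \Gamma(\alpha_k)} \prod_{k=1}^{r} \theta_k^{\alpha_k - 1},$$

where hyperparameters $\alpha_k > 0$, $\alpha = \sum_{k=1}^{r} \alpha_k$, and $\Gamma(\alpha) / \prod_{k=1}^{r} \Gamma(\alpha_k)$ is the normalization constant.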
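The multi-valued case works the same way as the thumbtack: add the counts to the hyperparameters. The Python sketch below gives the predictive probabilities and the log marginal likelihood for a Dirichlet prior, using the standard Dirichlet-multinomial result for an observed sequence. The function names and the die example are assumptions for illustration.

```python
from math import exp, lgamma

def dirichlet_predictive(alphas, counts):
    """Probability of each value on the next observation: (alpha_k + N_k) / (alpha + N)."""
    total = sum(alphas) + sum(counts)
    return [(a + n) / total for a, n in zip(alphas, counts)]

def dirichlet_log_marginal_likelihood(alphas, counts):
    """Log marginal likelihood of an observed sequence with the given counts."""
    alpha, n = sum(alphas), sum(counts)
    return (lgamma(alpha) - lgamma(alpha + n)
            + sum(lgamma(a + c) - lgamma(a) for a, c in zip(alphas, counts)))

# Hypothetical six-sided die with a uniform Dirichlet(1, ..., 1) prior.
alphas = [1.0] * 6
counts = [3, 0, 1, 0, 0, 2]
print(dirichlet_predictive(alphas, counts))

# With two values, the result reduces to the beta case (3/140 for this example).
print(exp(dirichlet_log_marginal_likelihood([2.0, 2.0], [3, 2])))
```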

4.2 Learning acausal DAG models for two or more finite variables

Next, let’s consider the problem of learning DAG models having only two binary variables, which I will denote $X$ and $Y$. In this case, there are only two possible model structures to consider: the one where $X$ and $Y$ are independent, and one where there is no claim of independence. Note that there are two graph structures that express no claim of independence: $X \rightarrow Y$ and $X \leftarrow Y$. (When depicting DAG models inline, I will draw them without ovals around node names.) As acausal networks, they are equivalent, so we need to consider only one of them. To be concrete, let’s use the graph structure $X \rightarrow Y$.

I denote the model structures representing independence and no claim of independence $m_1$ and $m_2$, respectively. Using a Bayesian approach, we can reason about the posterior probability of either of the graph structures (generically denoted $m$) given their prior probabilities and marginal likelihoods for data $D$:

$$p(m \mid D) = \frac{p(D \mid m)\, p(m)}{\sum_{m'} p(D \mid m')\, p(m')} \tag{18}$$

For a historical perspective on this sort of reasoning, see [53].
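As a minimal sketch of this posterior computation, the Python function below takes the log marginal likelihood and prior probability of each candidate structure and returns the posterior probabilities. The function name, the structure labels, and the numeric inputs are hypothetical. Working in log space and subtracting the maximum before exponentiating is my own choice, made because marginal likelihoods of real data sets are often too small to represent directly.

```python
from math import exp, log

def structure_posteriors(log_marginal_likelihoods, priors):
    """Posterior probability of each structure from log p(D | m) and p(m)."""
    log_joint = {m: log(priors[m]) + lml
                 for m, lml in log_marginal_likelihoods.items()}
    top = max(log_joint.values())  # subtract the maximum for numerical stability
    unnormalized = {m: exp(v - top) for m, v in log_joint.items()}
    total = sum(unnormalized.values())
    return {m: u / total for m, u in unnormalized.items()}

# Hypothetical inputs: the structure with no claim of independence fits better by 2 nats.
posteriors = structure_posteriors(
    log_marginal_likelihoods={"independent": -14.0, "dependent": -12.0},
    priors={"independent": 0.5, "dependent": 0.5},
)
print(posteriors)
```

With equal priors, the posterior odds equal the ratio of the marginal likelihoods, here $e^{2}$ to 1 in favor of the structure with no claim of independence.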

Interestingly, there is a relationship between the marginal likelihood and cross validation. Using the product rule of probability, the logarithm of the marginal likelihood given a model $m$ can be written as follows:

$$\log p(D \mid m) = \sum_{i=1}^{N} \log p(d_i \mid d_1, \ldots, d_{i-1}, m),$$

where $d_i$ denotes the $i$th data sample. Each term in the sum is a proper score (i.e., utility) for the prediction of one data sample. In words, first we see how well we predict the first observation. Then we train our model with the first observation and see how well we predict the second observation. We continue in this fashion until we predict and score each observation in sequence. By the rules of probability, the total score will be the same, regardless of how the data samples are ordered. This perspective was first noticed by Phil Dawid [20]. In this form, the marginal likelihood resembles cross validation, except that there is no crossing; that is, there is never a case where $d_i$ is used in the training data to predict $d_j$ and vice versa. As crossing can lead to overfitting [20], the marginal likelihood appears to be an ideal validation approach. In practice, however, I have seen many instances where cross validation yields a result that seems to be more reasonable.
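The sequential view is easy to check numerically for the thumbtack model with a beta prior. In the Python sketch below, which uses hypothetical function names and made-up flips, each flip is scored by the log of its predictive probability given the flips before it, and the total is compared with the closed-form log marginal likelihood. A shuffled copy of the data is scored as well to illustrate that the order does not matter.

```python
import random
from math import lgamma, log

def sequential_log_score(flips, alpha_h, alpha_t):
    """Sum of log predictive probabilities, predicting each flip from the earlier ones."""
    n_h = n_t = 0
    total = 0.0
    for x in flips:
        p_heads = (alpha_h + n_h) / (alpha_h + alpha_t + n_h + n_t)
        total += log(p_heads if x == "h" else 1.0 - p_heads)
        # "Train" on the flip only after it has been predicted and scored.
        if x == "h":
            n_h += 1
        else:
            n_t += 1
    return total

def closed_form_log_ml(flips, alpha_h, alpha_t):
    """Closed-form log marginal likelihood under a beta prior."""
    n_h = flips.count("h")
    n_t = len(flips) - n_h
    alpha = alpha_h + alpha_t
    return (lgamma(alpha) - lgamma(alpha + len(flips))
            + lgamma(alpha_h + n_h) - lgamma(alpha_h)
            + lgamma(alpha_t + n_t) - lgamma(alpha_t))

flips = list("hhthtthhht")          # made-up data
shuffled = flips[:]
random.Random(0).shuffle(shuffled)  # same flips, different order

in_order = sequential_log_score(flips, 2.0, 2.0)
reordered = sequential_log_score(shuffled, 2.0, 2.0)
closed = closed_form_log_ml(flips, 2.0, 2.0)
print(in_order, reordered, closed)  # the three values agree up to rounding
```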

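Now let’s examine the Bayesian approach to learning models in detail. First, let’s consider the simpler graph structure $m_1$, in which $X$ and $Y$ are independent. As both $X$ and $Y$ are binary, let’s use $\theta^X_h$ and $\theta^Y_h$ to denote the frequency of $X$ and $Y$ coming up heads, respectively. When determining the marginal likelihood for the data given this graph structure, the assumption that these two parameters are independent greatly simplifies the computation. In particular,

$$p(D \mid m_1) = \left[ \int \prod_{i=1}^{N} p(x_i \mid \theta^X_h)\, p(\theta^X_h \mid m_1)\, d\theta^X_h \right] \left[ \int \prod_{i=1}^{N} p(y_i \mid \theta^Y_h)\, p(\theta^Y_h \mid m_1)\, d\theta^Y_h \right].$$

In effect, with this assumption of parameter independence, the determination of the marginal likelihood decomposes into the determination of the marginal likelihood for flips of two separate thumbtacks. The marginal likelihood becomes

$$p(D \mid m_1) = \left[ \frac{\Gamma(\alpha^X)}{\Gamma(\alpha^X + N)} \cdot \frac{\Gamma(\alpha^X_h + N^X_h)}{\Gamma(\alpha^X_h)} \cdot \frac{\Gamma(\alpha^X_t + N^X_t)}{\Gamma(\alpha^X_t)} \right] \left[ \frac{\Gamma(\alpha^Y)}{\Gamma(\alpha^Y + N)} \cdot \frac{\Gamma(\alpha^Y_h + N^Y_h)}{\Gamma(\alpha^Y_h)} \cdot \frac{\Gamma(\alpha^Y_t + N^Y_t)}{\Gamma(\alpha^Y_t)} \right],$$

where the hyperparameters and sufficient statistics for $Y$ have definitions analogous to those for $X$ described in the previous section.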
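The decomposition into two separate thumbtacks translates directly into code. The Python sketch below computes the log marginal likelihood of paired observations under the independence structure as the sum of two single-variable terms. It assumes beta priors on both parameters, and the function names, default hyperparameters, and data are hypothetical.

```python
from math import exp, lgamma

def log_ml_one_variable(alpha_h, alpha_t, n_h, n_t):
    """Log marginal likelihood for one binary variable under a beta prior."""
    alpha, n = alpha_h + alpha_t, n_h + n_t
    return (lgamma(alpha) - lgamma(alpha + n)
            + lgamma(alpha_h + n_h) - lgamma(alpha_h)
            + lgamma(alpha_t + n_t) - lgamma(alpha_t))

def log_ml_independent(pairs, prior_x=(1.0, 1.0), prior_y=(1.0, 1.0)):
    """Log marginal likelihood of (x, y) pairs when X and Y are modeled as independent.

    With independent parameters, the result is the sum of the log marginal
    likelihoods of two separate "thumbtacks", one for X and one for Y.
    """
    n = len(pairs)
    n_x_heads = sum(1 for x, _ in pairs if x == "h")
    n_y_heads = sum(1 for _, y in pairs if y == "h")
    return (log_ml_one_variable(prior_x[0], prior_x[1], n_x_heads, n - n_x_heads)
            + log_ml_one_variable(prior_y[0], prior_y[1], n_y_heads, n - n_y_heads))

# Made-up paired observations of X and Y.
pairs = [("h", "h"), ("h", "t"), ("t", "h")]
print(exp(log_ml_independent(pairs)))  # equals (1/12) * (1/12) = 1/144 for uniform priors
```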