Harnessing Deep Learning and HPC Kernels via High-Level Loop and Tensor Abstractions on CPU Architectures

Abstract

During the past decade, Deep Learning (DL) algorithms, programming systems and hardware have converged with their High Performance Computing (HPC) counterparts. Nevertheless, the programming methodology of DL and HPC systems is stagnant, relying on highly-optimized, yet platform-specific and inflexible vendor-optimized libraries. Such libraries provide close-to-peak performance on the specific platforms, kernels and shapes thereof to which vendors have dedicated optimization efforts, while they underperform in the remaining use cases, yielding non-portable codes with performance glass-jaws. This work introduces a framework to develop efficient, portable DL and HPC kernels for modern CPU architectures. We decompose the kernel development into two steps: 1) expressing the computational core using Tensor Processing Primitives (TPPs), a compact, versatile set of 2D-tensor operators, and 2) expressing the logical loops around TPPs in a high-level, declarative fashion, whereas the exact instantiation (ordering, tiling, parallelization) is determined via simple knobs. We demonstrate the efficacy of our approach using standalone kernels and end-to-end workloads that outperform state-of-the-art implementations on diverse CPU platforms.

I Introduction

Deep Learning (DL) emerged as a promising machine learning paradigm more than a decade ago, and since then, deep neural networks have made significant advancements in various fields, including computer vision, natural language processing, and recommender systems, while gradually expanding their applications in traditional scientific domains [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]. Despite the apparent differences between conventional High Performance Computing (HPC) and DL workloads, the computational kernels used in both fields largely overlap. These kernels involve tensor contractions (dense and sparse), elementwise tensor operations, tensor norm computations, and generalized tensor re-orderings [11, 12].

In the domain of DL, programming systems, algorithms, and hardware have converged with their HPC counterparts. However, the prevalent programming paradigm has become stagnant compared to the rapidly evolving DL/HPC workloads. It relies heavily on vendor-optimized libraries for the vital building blocks of applications. These libraries offer close-to-peak performance on the specific platforms, kernels, and shapes thereof to which vendors have dedicated optimization efforts. However, they underperform in other use cases, resulting in non-portable, inflexible codes with performance limitations. Meanwhile, mainstream CPU platforms have continued to evolve, offering hardware acceleration for key operations, core heterogeneity, large core counts, and complex memory hierarchies. Such nuances of contemporary CPU platforms make “one-size-fits-all” code generation strategies impractical, exacerbating the generalization problem inherited from the extensive dependency on libraries. Consequently, this complexity is transferred to library development, and libraries for key computations become highly specialized, making them difficult to develop, maintain, and extend to encompass the latest CPU advances [13]. In the quest to eliminate the dependency on vendor libraries, the research fields of Tensor Compilers (e.g. [14, 15, 16, 17]) and Domain Specific Languages (DSLs) (e.g. [18, 19]) are experiencing a renaissance. Nevertheless, such efforts typically provide point solutions (often domain specific) and have not proven their applicability in real-world use cases [13].

We observe that the root cause of the aforementioned problems can be traced back to the extreme abstraction levels provided by libraries and tensor compilers. Libraries are characterized by coarse-grain, monolithic kernels that lack flexibility, while compilers allow for unrestricted expression of low-level operators, which can hinder efficient code generation in the back-ends. Adding to the difficulty of achieving optimal code generation, tensor compilers are responsible for efficiently parallelizing, tiling, and re-ordering loops, as well as for transforming the layout of data structures. All these tasks remain unsolved in a generic setting to date. These observations suggest that a middle path, which embraces high-level abstractions for both loop generation and tensor operations and emphasizes a clear separation of concerns, can effectively tackle the majority of these challenges [13].

In this study we build upon and extend the prior work of Georganas et al. [12], namely Tensor Processing Primitives (TPPs). TPPs comprise a collection of basic operators on 2D tensors that can be used to construct more complex operations on high-dimensional tensors. The TPP collection is compact, expressive, and precision-aware. As a result, high-level DL and HPC kernels can be written in terms of TPP operations. The TPP specification is agnostic to the platform, the DL framework, and the compiler back-end, which makes TPP code portable. TPPs operate at sub-tensor granularity, making them directly accessible to workload and library developers. While the TPP specification is platform-agnostic, its implementation is platform-specific and optimized for the target architectures. This separation of concerns allows the user of TPPs to focus on the algorithm and its execution schedules, while the TPP back-end generates efficient, platform-specific code for the TPP operations.

While the TPP abstraction addresses the code generation problem of the core computation kernels, it is still the user’s responsibility to write the nested loops around the TPP primitives (henceforth called “outer loops”) that traverse the computation/iteration space of the kernel. These “outer loops” control the parallelization scheme of the computation. In conjunction with potential loop blockings/tilings, their loop orders affect the temporal and spatial locality of the computation [20, 21]. Consequently, to obtain high-performance kernels, the user has to write (potentially) complicated code for these “outer loops”. This code is non-portable across platforms and must take into account the increasingly complex memory hierarchy of diverse compute platforms as well as the increased degree of available parallelism. Moreover, the exact instantiation of these loops that yields optimal kernel performance directly depends on the problem size and the platform at hand (i.e., there is no “one-size-fits-all” solution, an issue that also hinders portable library performance).

We address the problem of generating arbitrarily complex parallel loops around TPPs by introducing PARLOOPER (PARallel LOOP gEneratoR), a high-level loop abstraction framework (see Figure 1). PARLOOPER aims to simplify writing the “outer loops” (Figure 1-A1) by enabling the user to declare the logical “outer loops” along with their specifications (i.e., bounds/steps/parallelization properties). This replaces the explicit writing of tedious loop nests that pertain to multiple loop orders, tilings and parallelization schemes. At runtime, the user may provide a single parameter (henceforth called loop_spec_string, Figure 1-Arrow 1) to dictate the desired instantiation of the loop nest (i.e., loop order, loop blockings/tilings, parallelization method). In our Proof-Of-Concept (POC) implementation, PARLOOPER is a lightweight C++ library that generates the requested instantiation of the loop nest Just-In-Time (JIT), based on the loop specifications and the single loop_spec_string runtime parameter (Figure 1-Arrow 2 and Box C1). The resulting user code (Figure 1-Box A) is simple, declarative, and high-level, yet powerful. Without changing a single line of code, the loop nest can be instantiated as an arbitrarily complex implementation that maximizes performance for the specific platform and problem at hand. PARLOOPER internally uses caching schemes to avoid JIT overheads whenever possible. By leveraging PARLOOPER along with the TPP programming abstraction for the core computation, the user code is simple/declarative and naturally lends itself to auto-tuning, which can explore complex outer-loop instantiations without any change to the user code.

We decompose the kernel development into two steps with a clear separation of concerns. First, we express the computational core/body of a kernel using solely Tensor Processing Primitives and the logical loop indices of the surrounding/“outer” loops (Figure 1-Box A2). Second, we declare the surrounding logical loops along with their specification (i.e., bounds/steps/parallelization properties) with PARLOOPER (Figure 1-Box A1). The result is streamlined, compact code that is identical for all target platforms/problem sizes/shapes. The exact instantiation of the loop nest (Figure 1-Box C1) is governed by a simple runtime knob. The efficient code generation pertaining to TPPs (incl. loop unrolling, vectorization, register blocking, instruction selection) is undertaken by the TPP back-end, which JITs code for the target platform (Figure 1-Box C2) [12].

The loop_spec_string knob in PARLOOPER can be determined either “manually” by the user or by auto-tuning techniques. In the manual case (Figure 1-Box B1), the user selects values for the knob that yield good performance based on custom performance modeling. In the auto-tuning case (Figure 1-Box B2), a set of candidate values for the loop_spec_string knob is benchmarked offline and the best one is selected at runtime (Figure 1-Arrow 1). To assist the selection of the loop_spec_string runtime knob, instead of relying exclusively on manual settings/exhaustive auto-tuning, we provide an exemplary lightweight, high-level performance modeling tool (Figure 1-Box B3).

We demonstrate the efficiency of our approach on multiple platforms using standalone kernels and end-to-end workloads, and compare its performance to state-of-the-art implementations. The main contributions of this work are:

- A lightweight, flexible and high-level parallel loop generation framework (PARLOOPER) that decouples the logical loop declaration from the nested loop instantiation. User code declares the logical loops at a high level, whereas the exact instantiation is controlled by a single knob.

- Extending the LIBXSMM TPP backend [12] to support the Arm SVE ISA and hardware-accelerated tensor contractions on the Graviton 3 platform. We also introduce sparse-dense matrix multiplication TPPs with block sparsity, low-precision support and hardware acceleration for both x86 and Arm/AArch64 platforms.

- Exemplary DL and HPC multi-threaded kernels using the PARLOOPER framework and the TPP abstraction for the core computations (GEMM, convolutions, MLP, block-sparse dense GEMM), including multiple precisions.

- End-to-end DL workloads implemented via the PARLOOPER/TPP paradigm for image recognition (ResNet50 [22]) and Large Language Model pipelines.

- Benchmarking of the standalone kernels and end-to-end workloads on multiple modern CPUs with various datatype precisions, matching or exceeding state-of-the-art (SOTA) performance from vendor libraries.

II The PARLOOPER Framework

II-A GEMM Example Notations

We illustrate our PARLOOPER/TPP framework with a running General Matrix Multiplication (GEMM) example (see Figure 1 and Listing 1). The zero_tpp sets the input 2D tensor to zero. The brgemm_tpp corresponds to the Batch-Reduce GEMM (BRGEMM) TPP, which is the main tensor contraction tool in the TPP collection [21, 12]. BRGEMM materializes $C_j = \beta \cdot C_j + \sum_{i=0}^{n-1} A_i \times B_i$. This kernel multiplies the specified blocks $A_i$ and $B_i$ and reduces the partial results to a block $C_j$. We use the stride-based variant of BRGEMM, where the addresses of $A_i$ and $B_i$ are $\mathrm{address}(A_i) = \mathrm{address}(A_{i-1}) + \mathrm{stride}_A$ and $\mathrm{address}(B_i) = \mathrm{address}(B_{i-1}) + \mathrm{stride}_B$ [12]. The input tensors of the matrix multiplication are logically 2D matrices $A$ ($M \times K$) and $B$ ($K \times N$) that need to be multiplied and added onto $C$ ($M \times N$). Following previous work [21], we block the dimensions $M$, $K$, and $N$ by factors $b_m$, $b_k$, and $b_n$ respectively (lines 1-3 of Listing 1). We employ the BRGEMM TPP to perform the tensor contraction of $A$ and $B$ across their dimensions $K_b$ and $b_k$, where $K_b = K / b_k$ is the number of blocks along $K$. The sub-blocks $A_i$ and $B_i$ that have to be multiplied and reduced are apart by the fixed strides $\mathrm{stride}_A$ and $\mathrm{stride}_B$.
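
To make the stride-based BRGEMM semantics concrete, here is a naive scalar reference. It is an illustration only and not the LIBXSMM implementation, which JITs vectorized, register-blocked code. The function name `brgemm_stride_ref`, the row-major block layout and the use of element counts for the strides are our assumptions.

```cpp
#include <cstddef>

// Naive reference for stride-based BRGEMM (illustration only):
//   C = beta * C + sum_{i=0}^{count-1} A_i * B_i
// with A_i = A + i * stride_a and B_i = B + i * stride_b (strides counted in elements).
// Blocks are assumed row-major: A_i is bm x bk, B_i is bk x bn, C is bm x bn.
template <typename T>
void brgemm_stride_ref(const T* A, const T* B, T* C, std::size_t count,
                       std::size_t stride_a, std::size_t stride_b,
                       int bm, int bn, int bk, T beta) {
  for (int m = 0; m < bm; ++m)
    for (int n = 0; n < bn; ++n) C[m * bn + n] *= beta;  // scale the output block once
  for (std::size_t i = 0; i < count; ++i) {
    const T* Ai = A + i * stride_a;                       // i-th block of the batch
    const T* Bi = B + i * stride_b;
    for (int m = 0; m < bm; ++m)
      for (int k = 0; k < bk; ++k) {
        const T a = Ai[m * bk + k];
        for (int n = 0; n < bn; ++n) C[m * bn + n] += a * Bi[k * bn + n];  // reduce into C
      }
  }
}
```

Suppose `count` is set to the number of consecutive K blocks covered by one call, and the strides to the distance between those blocks in A and B. The call then performs the blocked contraction over K described above.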

II-B Logical Loop Declaration

The matrix multiplication algorithm comprises three logical nested loops, which are declared in lines 5-9 of Listing 1/Figure 1-Box A1. Since the computation involves three logical loops, line 5 declares a ThreadedLoop over three logical loops.

The first loop (line 6), with mnemonic a, is declared with a start, an upper bound and a step, and reflects the “K” loop of the GEMM (K is the inner-product dimension). This loop has an optional list of step/blocking parameters. The second loop (line 7), with mnemonic b, is declared analogously and reflects the “M” loop of the GEMM; it also has an optional list of step/blocking parameters. Finally, the third loop (line 8), with mnemonic c, reflects the “N” loop of the GEMM and likewise has an optional list of step/blocking parameters.

The specific instantiation of these loops is controlled by the runtime knob loop_spec_string (line 9). It determines the order in which the loops are nested, the number of times each one is blocked, and the way they are parallelized. Given such a string at runtime, the ThreadedLoop constructor invokes our custom loop generator and emits a C++ function for the target loop instantiation (Figure 1-Box C1). Listing 2 illustrates an exemplary target-loop instantiation function. Subsequently, a C++ compiler is invoked (currently we support icc, clang, and gcc), and the target-loop instantiation function is compiled Just-In-Time (JIT). The code can then use it (line 11 in Listing 1). To avoid JIT overheads whenever possible, we cache the JITed target loops. If a loop nest with the same loop_spec_string is requested again, we merely return the function pointer of the already compiled and cached loop nest.
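
The caching behavior described above amounts to memoization keyed by the loop_spec_string. The sketch below is not PARLOOPER's code. The class `LoopNestCache`, the aliases `Body`/`LoopNestFn` and the `compile` callback are hypothetical. The callback stands in for code generation plus the C++ compiler invocation.

```cpp
#include <functional>
#include <mutex>
#include <string>
#include <unordered_map>
#include <utility>

// Hypothetical stand-in for a JIT-compiled loop nest: it receives the body functor.
using Body = std::function<void(int*)>;
using LoopNestFn = std::function<void(const Body&)>;

// Memoizes loop nests by loop_spec_string, so the (expensive) generate-and-compile
// step runs at most once per distinct string.
class LoopNestCache {
 public:
  explicit LoopNestCache(std::function<LoopNestFn(const std::string&)> compile)
      : compile_(std::move(compile)) {}

  const LoopNestFn& get(const std::string& loop_spec_string) {
    std::lock_guard<std::mutex> lock(mu_);
    auto it = cache_.find(loop_spec_string);
    if (it == cache_.end()) {  // miss: generate and "compile" once
      ++compilations_;
      it = cache_.emplace(loop_spec_string, compile_(loop_spec_string)).first;
    }
    return it->second;         // references to map elements survive rehashing
  }

  int compilations() const {
    std::lock_guard<std::mutex> lock(mu_);
    return compilations_;
  }

 private:
  std::function<LoopNestFn(const std::string&)> compile_;
  std::unordered_map<std::string, LoopNestFn> cache_;
  mutable std::mutex mu_;
  int compilations_ = 0;
};
```

In this sketch, requesting the same string twice triggers only one compilation. A different string, for example one that differs only in letter case, is compiled separately.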

The legality/validity of the loop_spec_string (i.e., which loop permutations are allowed) is the responsibility of the user and depends on the computation at hand. The two rules for constructing a valid loop_spec_string are:

- RULE 1: Loop ordering and blockings.
  Each character (from a to z, depending on the number of logical loops) can appear in any order and any number of times. In our case, since we have 3 logical loops, the characters range from a to c. The order in which the loop characters appear in the string determines the loop nesting order. The number of times each character appears determines how many times the corresponding logical loop is blocked. For example, the loop_spec_string bcabcb corresponds to a loop nest with the following blockings:
  - logical loop b is blocked twice (the character b appears 3 times);
  - logical loop c is blocked once (c appears 2 times);
  - logical loop a is not blocked (it appears only once).
  The blocking/tiling sizes for each logical loop level are taken from the corresponding list of step/blocking parameters, in the order they appear in the list. Our Proof-Of-Concept (POC) implementation allows only perfectly nested blocking/tiling sizes. In the example above, the first blocking size of b must be a multiple of its second blocking size, which in turn must be a multiple of the step of b. Likewise, the blocking size of c must be a multiple of the step of c. All these lists of blocking/tiling sizes may be provided at runtime (e.g., the blocking sizes can be determined programmatically for a given problem) and do not have to be known statically.

- RULE 2: Parallelization of loops.
  If a loop character in the loop_spec_string appears in upper case, it expresses the intention to parallelize this loop at the specific nesting level where it appears. In the previous example, the loop_spec_string bcaBcb corresponds to a loop nest where the 2nd occurrence of loop b is parallelized. Our implementation supports two modes of parallelization:

  PAR-MODE 1: Relying on the OpenMP [23] runtime for parallelizing the loops. In this method, the loop parallelization strategy corresponds to the directive #pragma omp for nowait. If the user intends to parallelize multiple loops, the corresponding upper-case characters should appear consecutively in the loop_spec_string, which results in parallelization with collapse semantics. Listing 2 shows the loop nest that PARLOOPER generates for such a loop_spec_string. Optionally, OpenMP clauses can be appended at the end of the loop_spec_string, using a special character as separator. E.g., appending schedule(dynamic,1) to a loop string with two consecutive upper-case characters yields the directive #pragma omp for collapse(2) schedule(dynamic,1) nowait. This method allows PARLOOPER to leverage features of the OpenMP runtime such as dynamic thread scheduling. The constructed loop nest is enclosed in a #pragma omp parallel region (line 4 in Listing 2). A barrier at the end of a specific loop level may be requested using another dedicated special character.

  PAR-MODE 2: Using explicit multi-dimensional thread decompositions. The user can specify explicit 1D, 2D or 3D loop parallelization schemes. For the 1D decomposition, the threads form a logical 1D grid and are assigned the corresponding parallelized loop iterations in a blocked fashion. The user merely has to append the substring {R:#threads} after the desired upper-case loop character, where #threads is a number dictating in how many ways to parallelize that loop. For a 2D decomposition, the threads form a logical 2D grid that parallelizes the two requested loops. E.g., consider the loop_spec_string bC{R:16}aB{C:4}cb. Loop c is parallelized 16 ways and loop b is parallelized 4 ways, using a logical 16×4 thread grid (R = 16 rows, C = 4 columns); see Listing 3. Each parallelized loop is partitioned in a blocked fashion using the requested number of “ways”. The 3D decomposition works analogously.
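
A minimal sketch of how RULE 1 and RULE 2 can be read off a loop_spec_string is shown below. It assumes only the syntax spelled out above: plain letters, upper case for parallel loops, and an optional {R:n}/{C:n} suffix. Trailing OpenMP clauses and barrier characters are not handled. The names `LoopLevel`, `parse_loop_spec` and `blocking_counts` are hypothetical, and this is not PARLOOPER's parser.

```cpp
#include <cctype>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// One level of the loop nest implied by a loop_spec_string (RULE 1 and RULE 2).
struct LoopLevel {
  int logical_loop;  // 0 for 'a', 1 for 'b', ...
  int occurrence;    // 0 = outermost occurrence of this logical loop
  bool parallel;     // upper-case character
  int ways;          // PAR-MODE 2 "{R:n}"/"{C:n}" value, 0 if absent
};

std::vector<LoopLevel> parse_loop_spec(const std::string& spec, int num_loops) {
  std::vector<LoopLevel> levels;
  std::vector<int> seen(num_loops, 0);
  for (std::size_t i = 0; i < spec.size(); ++i) {
    const unsigned char ch = static_cast<unsigned char>(spec[i]);
    if (!std::isalpha(ch)) throw std::invalid_argument("unexpected character");
    const int id = std::tolower(ch) - 'a';
    if (id >= num_loops) throw std::invalid_argument("loop character out of range");
    LoopLevel lvl{id, seen[id]++, std::isupper(ch) != 0, 0};
    if (i + 1 < spec.size() && spec[i + 1] == '{') {  // e.g. "C{R:16}"
      const std::size_t close = spec.find('}', i);
      const std::size_t colon = spec.find(':', i);
      if (close == std::string::npos || colon == std::string::npos || colon > close)
        throw std::invalid_argument("malformed {X:n} suffix");
      lvl.ways = std::stoi(spec.substr(colon + 1, close - colon - 1));
      i = close;  // continue after the suffix
    }
    levels.push_back(lvl);
  }
  return levels;
}

// RULE 1: a logical loop appearing k times is blocked k - 1 times (-1 means it is missing).
std::vector<int> blocking_counts(const std::vector<LoopLevel>& levels, int num_loops) {
  std::vector<int> count(num_loops, -1);
  for (const LoopLevel& l : levels) ++count[l.logical_loop];
  return count;
}
```

For bcabcb, `blocking_counts` yields 0, 2 and 1 for a, b and c, which matches the example in RULE 1.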

The current POC relies on OpenMP for concurrency; however, our back-end loop generator can be extended to support other runtimes (e.g., TBB [24] or pthreads [25]).
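
The sketch below illustrates how a PAR-MODE 2 style 2D decomposition could map onto a runtime other than OpenMP, using `std::thread`. It is a hypothetical example and not part of the POC; the names `block_range` and `parallel_2d` are ours. Each thread in an R×C logical grid receives one contiguous block of the first parallel loop and one of the second.

```cpp
#include <algorithm>
#include <thread>
#include <vector>

// Block partition of [0, n) among 'ways' workers: worker w gets [begin, end).
struct Range { int begin, end; };

Range block_range(int n, int ways, int w) {
  const int chunk = (n + ways - 1) / ways;  // ceil(n / ways)
  const int begin = std::min(n, w * chunk);
  return {begin, std::min(n, begin + chunk)};
}

// R x C logical thread grid: thread (r, c) executes its block of rows x its block of columns.
template <typename Body>
void parallel_2d(int n_rows, int n_cols, int R, int C, Body body) {
  std::vector<std::thread> pool;
  for (int tid = 0; tid < R * C; ++tid) {
    pool.emplace_back([=] {
      const int r = tid / C, c = tid % C;   // position in the logical grid
      const Range rr = block_range(n_rows, R, r);
      const Range cr = block_range(n_cols, C, c);
      for (int i = rr.begin; i < rr.end; ++i)
        for (int j = cr.begin; j < cr.end; ++j) body(i, j);
    });
  }
  for (std::thread& t : pool) t.join();
}
```

Because every (i, j) pair belongs to exactly one thread, bodies that write disjoint outputs need no synchronization. This mirrors the independence of the M/N blocks in GEMM.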

II-C Expressing the Computation

After declaring the nested loops, we obtain a ThreadedLoop object (gemm_loop in line 5 of Listing 1), to which up to three parameters can be passed at runtime:

- (Optional) A pointer to a function: void init_func()
  This function is called before the generated loop nest and is used for initialization purposes (e.g., code that initializes data structures).

- (Optional) A pointer to a function: void term_func()
  This function is called after the generated loop nest and is used for termination purposes (e.g., code that cleans up data structures).

- A pointer to a function: void body_func(int *ind)
  The function body_func is called at the innermost level of the generated loop nest (e.g., line 16 in Listing 2) and performs the desired computation. It receives as input an array of integers containing the values of the logical indices used in the nested loop, in alphabetical order. ind[0] holds the value of logical index a in the current iteration of the loop nest, ind[1] holds the value of logical index b, and so on. This index array is allocated and initialized by PARLOOPER (e.g., line 15 in Listing 2). Using these values, the user can express the desired computation as a function of the logical indices. We use an in-place C++ lambda expression as body_func, but our framework does not require this. For the GEMM example, we use a lambda expression for body_func. Lines 12-17 of Listing 1 (Figure 1-Box A2) express the GEMM computation with the zero_tpp, the brgemm_tpp, and the logical indices. In line 13, we extract the logical indices of the K, M and N loops from the input array ind. In line 14, we set the batch-reduce count. Since the tensor contraction is performed on blocked matrices, each body invocation reduces over the consecutive $b_k$-blocks covered by one step of the logical K loop. In line 15, we set the current C block to zero if this is the first time we encounter it (i.e., at the first iteration of the logical K loop). Finally, lines 16-17 execute the actual tensor contraction via the BRGEMM TPP.
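
PARLOOPER emits and JITs C++ for the requested nest. The semantics of RULE 1 and of the index array can also be made concrete without a JIT. The following serial interpreter is a sketch under stated assumptions:
- `LoopSpecs` and `LoopNestInterpreter` are hypothetical names, not PARLOOPER's API;
- blocking sizes are perfectly nested;
- upper-case characters are accepted but executed serially;
- PAR-MODE 2 suffixes are not supported.

Outer occurrences of a logical loop advance by its blocking sizes, the innermost occurrence advances by its step, and `body` receives the indices in alphabetical order.

```cpp
#include <algorithm>
#include <cctype>
#include <cstddef>
#include <functional>
#include <stdexcept>
#include <string>
#include <utility>
#include <vector>

// Hypothetical mirror of a logical loop declaration: [start, end) with innermost step,
// plus blocking sizes used by the outer occurrences (outermost first).
struct LoopSpecs {
  int start, end, step;
  std::vector<int> blocks;
};

class LoopNestInterpreter {
 public:
  LoopNestInterpreter(std::vector<LoopSpecs> loops, const std::string& spec)
      : loops_(std::move(loops)), count_(loops_.size(), 0) {
    for (char ch : spec) {
      const int id = std::tolower(static_cast<unsigned char>(ch)) - 'a';
      if (id < 0 || id >= static_cast<int>(loops_.size()))
        throw std::invalid_argument("bad loop character");
      levels_.push_back({id, count_[id]++});
    }
    for (std::size_t l = 0; l < loops_.size(); ++l)
      if (count_[l] == 0 || loops_[l].blocks.size() + 1 < static_cast<std::size_t>(count_[l]))
        throw std::invalid_argument("loop missing or too few blocking sizes");
  }

  // Runs the nest; body receives the logical indices in alphabetical order (ind[0] = a).
  void operator()(const std::function<void(int*)>& body) const {
    std::vector<int> ind(loops_.size(), 0);
    run(0, ind, body);
  }

 private:
  struct Level { int id, occ; };

  // Outer occurrences advance by blocking sizes; the innermost one by the loop step.
  int step_at(int id, int occ) const {
    return occ + 1 < count_[id] ? loops_[id].blocks[occ] : loops_[id].step;
  }

  void run(std::size_t lvl, std::vector<int>& ind, const std::function<void(int*)>& body) const {
    if (lvl == levels_.size()) { body(ind.data()); return; }
    const Level L = levels_[lvl];
    const LoopSpecs& s = loops_[L.id];
    // The first occurrence spans the full range; later ones span the enclosing block.
    const int lo = L.occ == 0 ? s.start : ind[L.id];
    const int hi = L.occ == 0 ? s.end : std::min(s.end, lo + step_at(L.id, L.occ - 1));
    for (int v = lo; v < hi; v += step_at(L.id, L.occ)) {
      ind[L.id] = v;
      run(lvl + 1, ind, body);
    }
    ind[L.id] = lo;  // restore the value seen by the enclosing level
  }

  std::vector<LoopSpecs> loops_;
  std::vector<int> count_;
  std::vector<Level> levels_;
};
```

Passing a GEMM-style body (zero the C block at the first K index, then contract) to such an interpreter with different legal spec strings changes only the traversal order, never the set of visited index tuples.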

We highlight the separation of concerns at the heart of PARLOOPER’s design. The logical loops are declared at a high level in as few as 3 lines of code (lines 6-8 in Listing 1 and Figure 1-Box A1). The main computational core is orthogonal to the loop declaration and is expressed via TPPs in 5 lines of code that use the logical loop indices (Figure 1-Box A2). Both the loop-nest instantiation and the TPP code generation are abstracted from the user and are determined at runtime (Figure 1-Box C). The loop generation depends on loop_spec_string, and the TPP code generation depends on the target platform and problem shapes. E.g., on a CPU platform with 3 levels of cache, one may block each of the logical loops up to 3 times to maximize data reuse out of each cache level [26]. Moreover, skewed tensor sizes may favor loop orders where the “smaller” tensor is accessed repeatedly in the innermost loop. The parallelization of the relevant M and N loops also affects data locality and the degree of data sharing among cores. All these loop-instantiation decisions are completely determined via the runtime knob loop_spec_string. Similarly, the JITed code for the BRGEMM TPP (incl. loop unrolling, vectorization, register blocking, instruction selection) depends on the target platform and the TPP shapes [12]. For example, on Intel Sapphire Rapids the BF16 TPP backend can emit Advanced Matrix Extensions (AMX) instructions to accelerate the tensor contractions, whereas on an AMD Zen 4 platform it can emit AVX512-BF16 instructions. All these low-level details are completely abstracted from the user-level code (Figure 1-Box A).

Also, the GEMM in Listing 1 is datatype-agnostic. All tensors have a templated datatype, and the TPPs are precision-aware by design: depending on how they are set up, they operate internally with any supported datatype. Therefore, the same code works for all precisions without any change.

As mentioned earlier, checking the legality/validity of the loop_spec_string is the responsibility of the user and depends on the computation at hand. A more advanced framework, in which the legality check is not the user’s responsibility, requires two steps/components:
i) extracting the semantics of the loop body (written via TPPs) in a way that exposes the data dependencies of the core computation;
ii) performing a traditional loop-dependence analysis on the loop permutation at hand to check its legality.
These steps would essentially bring the current PARLOOPER/TPP framework closer to a “tensor compiler stack with TPP abstractions”. In its current form, the framework places the same correctness burden on the user as writing parallel code in an imperative language (e.g., C with OpenMP). For any nested loop with OpenMP parallelization directives, the user is responsible for parallelizing only loops that do not introduce race conditions. Otherwise the program is incorrect, since the compiler does not check the legality of the parallelization.

II-D Auto-tuning the nested loops

A proper value of loop_spec_string is essential to obtain the best performance for a given combination of problem size and CPU architecture. One way to select loop_spec_string is to hand-craft specific loop strings that are expected to perform well according to custom performance modeling of the given platform/problem (Boxes B1 and B3 in Figure 1). Alternatively, one may rely on offline auto-tuning (Box B2 in Figure 1). Given a logical loop declaration along with its specifications, the tunable parameters are:
(i) how many times to block each logical loop;
(ii) the blocking sizes;
(iii) which loops to parallelize;
(iv) the order in which to arrange the loops.
A key observation is that all these decisions map one-to-one to a specific loop_spec_string along with a list of block sizes.

We created an infrastructure to auto-generate an exhaustive list of strings that satisfy a set of constraints (see Figure 1-Box B2). Considering the GEMM in Listing 1 and the decisions (i)-(iv), one can specify the following set of constraints:

1. Block loop a up to 2 times, and loops b and c up to 3 times. This captures the multi-level caches of modern CPUs.

2. For each logical loop, consider its logical trip count and compute its prime factorization. Then pick as blocking factors the prefix products of the prime factors, multiplied by the loop’s step. E.g., a hypothetical trip count of 12 = 2·2·3 yields the candidate blocking factors 2, 4 and 12, each multiplied by the step. This is one example of programmatically picking the blocking factors.

3. We may decide to parallelize the M (b) and N (c) logical loops, as those define independent tasks in GEMM. We can parallelize any of the blocked occurrences of the b/c loops, since they also constitute independent iterations.

4. Consider all permutations of a string subject to constraints 1-3.
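
Constraints 2 and 4 above are easy to mechanize. The sketch below is hypothetical: the function names are ours and it does not reproduce the authors' bash scripts. It derives candidate blocking sizes from prime-factor prefix products and enumerates the distinct orderings of a multiset of loop characters. Upper-casing the eligible b/c occurrences (constraint 3) would be a further pass over each ordering.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Prime factors of n in non-decreasing order (trial division).
std::vector<int> prime_factors(int n) {
  std::vector<int> f;
  for (int p = 2; p * p <= n; ++p)
    while (n % p == 0) { f.push_back(p); n /= p; }
  if (n > 1) f.push_back(n);
  return f;
}

// Constraint 2: prefix products of the prime factors of the trip count, scaled by the step.
std::vector<int> candidate_blocks(int trip_count, int step) {
  std::vector<int> out;
  int prod = 1;
  for (int p : prime_factors(trip_count)) {
    prod *= p;
    out.push_back(prod * step);
  }
  return out;
}

// Constraint 4: all distinct orderings of a multiset of loop characters, e.g. "abbbcc".
std::vector<std::string> all_orders(std::string letters) {
  std::sort(letters.begin(), letters.end());  // next_permutation needs a sorted start
  std::vector<std::string> out;
  do {
    out.push_back(letters);
  } while (std::next_permutation(letters.begin(), letters.end()));
  return out;
}
```

The last prefix product spans the whole iteration range, so a generator may choose to drop it. Using `std::next_permutation` on a sorted multiset visits each distinct ordering exactly once; for example, "abbbcc" yields 6!/(3!·2!) = 60 strings.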

With such a set of constraints, we generate a set of loop_spec_string configurations to be benchmarked offline. We implemented this infrastructure using bash scripts, but other tools such as OpenTuner [27] could be used. This auto-tuning process requires zero lines of code change in the GEMM user code, which remains the same as in Listing 1/Figure 1-Box A. All loop variants dictated by the values of loop_spec_string are auto-generated and JITed by the PARLOOPER back-end.

II-E Performance modeling of generated loops

To assist the selection of the loop-instantiation knob loop_spec_string, instead of relying exclusively on manual settings/exhaustive auto-tuning, we designed a lightweight, high-level performance modeling tool (Figure 1-Box B3). This tool takes three inputs:
- the logical loops along with a candidate loop_spec_string;
- the computational core expressed via tensor-contraction BRGEMM TPPs;
- a few parameters modeling the target CPU.
It then simulates/predicts the performance of such a setup. With this tool, one can perform offline, cross-architecture loop tuning and obtain a set of good loop-instantiation knobs.

Currently, the POC implementation of this tool works only for PARLOOPER-generated loops with BRGEMM TPPs within body_func, but it may be extended to cover the remaining TPPs. The idea is simple. Consider the execution of a specific thread in a specific loop instantiation with the BRGEMM TPP as the main computation. All data it accesses are logical tensor slices identified by tensor-block indices. In Listing 1, the corresponding slices of A, B and C are characterized by the relevant logical indices. Consequently, as the thread executes the specific loop instantiation, it can record a trace of its A, B and C accesses in chronological order. These traces are compact since they register accesses to full tensor slices instead of individual cache lines [28]. Therefore, running a data-reuse algorithm that simulates multi-level caches on a per-thread basis is a low-overhead operation.

We simulate up to 3 levels of cache, each with its own size and bandwidth. The replacement policy for each cache is Least Recently Used (LRU). When processing a thread’s trace in chronological order, we query whether the corresponding tensor slice is in any level of the thread’s caches or resides in memory. Then, for a BRGEMM execution at a given iteration, we know the memory/cache locations of the current slices of A, B and C. From these locations, the relative cache bandwidths and the compute peak of the platform, we can predict the execution cycles taken by the BRGEMM. Each level of cache is represented as a set and is updated according to the LRU policy as the execution progresses. For simplicity we ignore data sharing, but the traces could be processed in lock-step fashion to account for common sub-tensors in shared levels of cache. Despite these simplifications, our performance model is able to single out loop instantiations with poor temporal/spatial locality and to identify parallel schedules with poor concurrency. Such inefficient loop_spec_strings are assigned a low score and can be considered sub-optimal.
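
The per-thread simulation can be sketched with one LRU list per cache level, keyed by tensor-slice identifiers. This is a simplified illustration, not the authors' tool, and all names are hypothetical. Capacities are counted in slices, assuming equal slice sizes, and every access installs the slice in all levels. `brgemm_cycles` combines compute and transfer time with a `max`, which is our own roofline-style assumption; the text does not specify the exact formula.

```cpp
#include <algorithm>
#include <cstddef>
#include <list>
#include <string>
#include <unordered_map>
#include <vector>

// One LRU cache level; capacity counted in tensor slices (assumed equal size), >= 1.
class LruLevel {
 public:
  explicit LruLevel(std::size_t capacity) : capacity_(capacity) {}
  bool contains(const std::string& key) const { return pos_.count(key) != 0; }
  void touch(const std::string& key) {  // make key the most recently used entry
    auto it = pos_.find(key);
    if (it != pos_.end()) {
      order_.erase(it->second);
    } else if (order_.size() == capacity_) {  // evict the least recently used slice
      pos_.erase(order_.back());
      order_.pop_back();
    }
    order_.push_front(key);
    pos_[key] = order_.begin();
  }

 private:
  std::size_t capacity_;
  std::list<std::string> order_;  // front = most recently used
  std::unordered_map<std::string, std::list<std::string>::iterator> pos_;
};

// For each access of one thread's trace, returns the level that served it:
// 0..L-1 for cache levels, L for main memory.
std::vector<int> simulate_trace(const std::vector<std::string>& trace,
                                const std::vector<std::size_t>& capacities) {
  std::vector<LruLevel> levels;
  levels.reserve(capacities.size());
  for (std::size_t c : capacities) levels.emplace_back(c);
  std::vector<int> served;
  for (const std::string& slice : trace) {
    int hit = static_cast<int>(levels.size());
    for (std::size_t l = 0; l < levels.size(); ++l)
      if (levels[l].contains(slice)) { hit = static_cast<int>(l); break; }
    served.push_back(hit);
    for (LruLevel& lvl : levels) lvl.touch(slice);
  }
  return served;
}

// Assumed cost model for one BRGEMM call: max(compute time, operand transfer time).
// bytes_per_cycle lists the cache levels first, then main memory.
double brgemm_cycles(double flops, double peak_flops_per_cycle,
                     const std::vector<double>& slice_bytes, const std::vector<int>& served_from,
                     const std::vector<double>& bytes_per_cycle) {
  double transfer = 0.0;
  for (std::size_t i = 0; i < slice_bytes.size(); ++i)
    transfer += slice_bytes[i] / bytes_per_cycle[served_from[i]];
  return std::max(flops / peak_flops_per_cycle, transfer);
}
```

Keys such as "A[0][1]" identify block slices, so a trace of a few thousand BRGEMM calls stays small. This is what keeps such a simulation cheap compared with cache-line-level tracing.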

III Kernels Developed via PARLOOPER/TPP

We developed the following kernels via PARLOOPER/TPP, which comprise the computational heart of the DL workloads presented in Section IV:
(i) GEMM and Multi-Layer Perceptrons covering various tensor contractions;
(ii) convolution kernels used in computer-vision applications;
(iii) Block-SpMM kernels used for efficient Transformer inference.

III-A GEMM and Multi-Layer Perceptron (MLP)

III-A1 Extending GEMM with Activation Functions to MLP

We extend Listing 1 to include activation functions, expressed via the relevant TPPs. E.g., once a C tensor block has been fully computed (i.e., after the BRGEMM of the last iteration of the logical K loop), we call an activation TPP on the computed 2D sub-block. For the Rectified Linear Unit (RELU) activation function, this merely translates to one additional RELU TPP call on that C block in the core body function.

The GEMM kernel is used to implement a Fully-Connected Layer: an input activation tensor is multiplied with a weight tensor to yield the output tensor. A Multi-Layer Perceptron (MLP) consists of (at least three) fully connected layers of neurons. Each neuron in the topology may use a non-linear activation function (e.g., a RELU after the GEMM). MLPs and Fully-Connected Layers are ubiquitous in DL, including modern Large Language Models [29] and Recommendation Systems [30]. Within the PARLOOPER framework, an MLP is just another loop around the GEMM primitive that captures the cascading GEMMs. The weight tensor of each layer serves as an input tensor of the pertinent GEMM. Due to the cascading nature of MLP, the output matrix of a layer (tensor C in GEMM) is subsequently the input activation matrix of the next layer’s GEMM.
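
The following scalar sketch illustrates the fused activation and the MLP cascade. It assumes row-major matrices, with the weights as the left operand (M×K) and the activations as K×N; this layout is our choice for illustration and need not match Listing 1. The names `fc_relu` and `mlp_forward` are hypothetical. The ReLU is applied in the same block computation once the last K block has been accumulated. This relies on the K blocks of each output block being visited in increasing order, as they are here.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

using Mat = std::vector<float>;  // row-major; dimensions passed separately

// out (M x N) = relu(W (M x K) * in (K x N)), computed block by block like the GEMM body:
// zero at the first K block, accumulate per K block, ReLU after the last K block.
// Assumes bm | M, bn | N and bk | K.
Mat fc_relu(const Mat& W, const Mat& in, int M, int N, int K, int bm, int bn, int bk) {
  Mat out(static_cast<std::size_t>(M) * N);
  const int Mb = M / bm, Nb = N / bn, Kb = K / bk;
  for (int i_n = 0; i_n < Nb; ++i_n)
    for (int i_m = 0; i_m < Mb; ++i_m)
      for (int i_k = 0; i_k < Kb; ++i_k)
        for (int m = i_m * bm; m < (i_m + 1) * bm; ++m)
          for (int n = i_n * bn; n < (i_n + 1) * bn; ++n) {
            float acc = (i_k == 0) ? 0.0f : out[m * N + n];  // zero on the first visit
            for (int k = i_k * bk; k < (i_k + 1) * bk; ++k)
              acc += W[m * K + k] * in[k * N + n];           // contraction of one K block
            out[m * N + n] = (i_k == Kb - 1) ? std::max(acc, 0.0f) : acc;  // fused ReLU
          }
  return out;
}

// An MLP is one more loop around the layer: each layer's output feeds the next layer.
// widths[l] is the input width of layer l and widths[l + 1] its output width.
Mat mlp_forward(const std::vector<Mat>& weights, Mat act, const std::vector<int>& widths,
                int N, int bm, int bn, int bk) {
  for (std::size_t l = 0; l < weights.size(); ++l)
    act = fc_relu(weights[l], act, widths[l + 1], N, widths[l], bm, bn, bk);
  return act;
}
```

Fusing the ReLU into the block computation avoids a second pass over the output matrix, which is the motivation for applying the activation TPP right after the block is complete.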

III-A2 Extending the TPP backend with AArch64 SVE support

To extend the platform coverage of our framework, we extend the open-source implementation of TPP in LIBXSMM [31] with support for the Arm Scalable Vector Extension (SVE) ISA on AArch64 architectures [32]. Since SVE introduces predicate registers and masked operations, the SVE-TPP backend code generation follows the same strategies as the AVX2/AVX-512 code generation for x86 in LIBXSMM.

We highlight here our implementation of the (BR)GEMM TPP with SVE Matrix Multiply-Accumulate (MMLA) instructions. These instructions interpret the vector registers as packed matrices. E.g., consider a 16-bit datatype (BF16) and a vector length of 256 bits (SVE256). Each vector register then holds 16 elements, interpreted as 2 concatenated 2×4 matrices. The BF16-MMLA instruction multiplies the 2×4 matrix of BF16 values held in each 128-bit segment of the first source vector with the 4×2 BF16 matrix in the corresponding segment of the second source vector. The resulting 2×2 single-precision (FP32) matrix product is accumulated into the corresponding 128-bit segment of the output vector. The backend implementation of the (BR)GEMM TPP with MMLA instructions can follow a traditional 2D register blocking strategy [21]. This requires the input tensors A and B to be reformatted into the packed sub-tensor layouts expected by the instruction. The TPP collection provides the corresponding reformatting primitives. We also implement BRGEMM on SVE with MMLA support where one input matrix is packed on the fly. This supports the case where that tensor is in flat (non-packed) format.
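
The segment-wise MMLA semantics can be emulated in scalar code to clarify the data layout. This is a sketch with three assumptions: `float` stands in for BF16 inputs, the second operand holds the 4×2 matrix column by column (as the rows of its 2×4 transpose), and the function names are hypothetical.

```cpp
#include <array>

// One 128-bit segment of a BF16 MMLA step (float stands in for BF16 inputs):
// acc (2x2, fp32) += a (2x4, row-major) * b (4x2, stored column by column).
void mmla_segment(const std::array<float, 8>& a, const std::array<float, 8>& b,
                  std::array<float, 4>& acc) {
  for (int i = 0; i < 2; ++i)
    for (int j = 0; j < 2; ++j) {
      float sum = 0.0f;
      for (int k = 0; k < 4; ++k) sum += a[i * 4 + k] * b[j * 4 + k];
      acc[i * 2 + j] += sum;  // accumulate into the FP32 2x2 result
    }
}

// SVE256: a register holds 16 BF16 values = 2 independent 128-bit segments,
// and the accumulator holds 8 FP32 values (one 2x2 block per segment).
void mmla_sve256(const std::array<float, 16>& za, const std::array<float, 16>& zb,
                 std::array<float, 8>& zacc) {
  for (int s = 0; s < 2; ++s) {
    std::array<float, 8> a{}, b{};
    std::array<float, 4> c{};
    for (int e = 0; e < 8; ++e) { a[e] = za[s * 8 + e]; b[e] = zb[s * 8 + e]; }
    for (int e = 0; e < 4; ++e) c[e] = zacc[s * 4 + e];
    mmla_segment(a, b, c);
    for (int e = 0; e < 4; ++e) zacc[s * 4 + e] = c[e];
  }
}
```

Because each instruction produces 2×2 output tiles from 2×4 and 4×2 inputs, a register-blocked GEMM kernel needs A and B pre-packed into these small tiles. This is why the reformatting primitives mentioned above are required.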

III-B Convolution Kernels

We employ the direct convolution method that uses the BRGEMM TPP for contractions [20, 21]. The input tensor is convolved with the weight tensor to yield the output tensor. The activation tensors conceptually consist of 4 dimensions: the minibatch, the number of feature maps, and the two spatial dimensions. We denote the dimensions of the input with $N$, $C$, $H$ and $W$, while the corresponding dimensions of the output are $N$, $K$, $P$ and $Q$. The weight tensor logically consists of 4 dimensions: the feature-map dimensions $C$ and $K$, and the spatial dimensions $R$ and $S$. We block the $C$ dimension by $b_c$ and the $K$ dimension by $b_k$, and obtain the blocked tensors in lines 1-3 of Listing 4. We declare the 7 logical loops that traverse the iteration space with PARLOOPER in lines 5-13 of Listing 4. Finally, lines 16-25 express the convolution compute kernel in a lambda function by means of the 7 logical indices and the brgemm_tpp. For convolutions with $R = S = 1$ we can set up a stride-based BRGEMM. For the other cases we can set up an offset-based BRGEMM to further increase the kernel’s performance (i.e., the $R$ and $S$ loops are folded into the BRGEMM by using offset arrays [12]).
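
The sketch below shows how the spatial filter loops (and, here, also the channel-block loop) can be folded into a single offset-based batch reduce. It precomputes the offsets of all reduction blocks relative to a base pointer. Everything about it is assumed rather than taken from Listing 4:
- the tensor layouts are input [N][Cb][H][W][bc] and weights [Kb][Cb][R][S][bc][bk];
- convolution stride is 1 and there is no padding;
- the names `ConvOffsets`, `conv_brgemm_offsets` and `brgemm_offsets_pixel` are hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Element offsets of the reduction blocks, relative to &I[n][0][p][q][0] (input)
// and &Wt[kb][0][0][0][0][0] (weights), for an offset-based BRGEMM.
struct ConvOffsets { std::vector<std::size_t> in, wt; };

ConvOffsets conv_brgemm_offsets(int Cb, int H, int W, int R, int S, int bc, int bk) {
  ConvOffsets o;
  for (int cb = 0; cb < Cb; ++cb)
    for (int r = 0; r < R; ++r)
      for (int s = 0; s < S; ++s) {
        o.in.push_back(((static_cast<std::size_t>(cb) * H + r) * W + s) * bc);
        o.wt.push_back(((static_cast<std::size_t>(cb) * R + r) * S + s) * bc * bk);
      }
  return o;
}

// Batch reduce for one output pixel: out[k] += sum_i sum_c in_i[c] * wt_i[c][k].
void brgemm_offsets_pixel(const float* in_base, const float* wt_base, const ConvOffsets& o,
                          int bc, int bk, float* out) {
  for (std::size_t i = 0; i < o.in.size(); ++i)
    for (int c = 0; c < bc; ++c)
      for (int k = 0; k < bk; ++k)
        out[k] += in_base[o.in[i] + c] * wt_base[o.wt[i] + c * bk + k];
}
```

The offsets do not depend on the output position, so they can be computed once per layer. Only the base pointers change between BRGEMM calls.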

III-C Block-Sparse Dense Matrix Multiply (Block-SpMM)

The Sparse-Dense Matrix Multiplication (SpMM) is a kernel widely used in HPC, including in linear solvers and graph analytics [33]. The SpMM involves one sparse matrix A and two dense matrices B and C. By exploiting the sparsity of A, one can reduce both the bandwidth and the compute requirements of the computation. In addition, by enforcing block sparsity on A, one can increase the performance of SpMM through better data locality in the accesses of the sparse A and by leveraging the low-precision hardware acceleration available on modern CPU and GPU platforms. With the recent explosion in model sizes and the intrinsic redundancy/sparsity of large models, exploring block sparsity in DL is a promising research direction [34, 35].

We extend the GEMM TPP to support A being block-sparse, while B and C remain dense. We implement the block-sparse × dense kernel with the BF16 datatype to leverage Fused Multiply-Add (FMA) hardware acceleration on x86 and AArch64 CPU platforms. For our implementation, we extend the TPP implementation in LIBXSMM. We support A in Block Compressed Sparse Column (BCSC) format, where the block size is parameterized. The code generation of the microkernel works as follows: we iterate over a block row of A and, for each non-empty block, we multiply it with the corresponding dense block of B. This dense multiplication with sub-blocks of B and C uses 2D register blocking [21] whenever possible (i.e., when the block dimensions are large enough) to hide FMA latency and maximize data reuse from registers. Aiming to deploy low-precision instructions, we opt for a packed format for the dense input, which is pre-formatted in VNNI layout (see lines 3-4 in Listing 5, where the VNNI blocking factor appears). We implement this Block-SpMM TPP for x86 with both AVX512-BF16 and AMX-BF16 acceleration, and for AArch64 with both BF16 dot-product and BF16-MMLA instructions.
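The C++ reference sketch below illustrates a BCSC layout and a scalar block-sparse × dense product C += A·B. It is a hypothetical stand-in, not LIBXSMM's code generator: the struct, the names and the row-major storage of each block are assumptions; it visits blocks column by column (the natural BCSC traversal) instead of emitting a register-blocked microkernel; and it works in FP32 without the VNNI packing of the dense input.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// BCSC storage for an M x K matrix A with bm x bk blocks (hypothetical layout).
struct BCSC {
  int M, K, bm, bk;
  std::vector<int> colptr;    // K/bk + 1 entries: start of each block column in rowidx
  std::vector<int> rowidx;    // block-row index of each stored block
  std::vector<float> values;  // stored blocks, bm*bk floats each, row-major
};

// Builds BCSC from a dense row-major A, storing only blocks with a non-zero entry.
BCSC dense_to_bcsc(const std::vector<float>& A, int M, int K, int bm, int bk) {
  assert(M % bm == 0 && K % bk == 0);
  BCSC s{M, K, bm, bk, {0}, {}, {}};
  for (int jb = 0; jb < K / bk; ++jb) {
    for (int ib = 0; ib < M / bm; ++ib) {
      bool nonzero = false;
      for (int i = 0; i < bm; ++i)
        for (int j = 0; j < bk; ++j) nonzero |= A[(ib * bm + i) * K + jb * bk + j] != 0.0f;
      if (!nonzero) continue;
      s.rowidx.push_back(ib);
      for (int i = 0; i < bm; ++i)
        for (int j = 0; j < bk; ++j) s.values.push_back(A[(ib * bm + i) * K + jb * bk + j]);
    }
    s.colptr.push_back(static_cast<int>(s.rowidx.size()));
  }
  return s;
}

// C (M x N) += A (BCSC) * B (K x N), dense row-major; empty blocks are never touched.
void bcsc_spmm_ref(const BCSC& a, const std::vector<float>& B, std::vector<float>& C, int N) {
  for (int jb = 0; jb < a.K / a.bk; ++jb)
    for (int p = a.colptr[jb]; p < a.colptr[jb + 1]; ++p) {
      const int ib = a.rowidx[p];
      const float* blk = &a.values[static_cast<std::size_t>(p) * a.bm * a.bk];
      // Dense (bm x bk) * (bk x N) update of block row ib of C.
      for (int i = 0; i < a.bm; ++i)
        for (int k = 0; k < a.bk; ++k) {
          const float v = blk[i * a.bk + k];
          for (int n = 0; n < N; ++n) C[(ib * a.bm + i) * N + n] += v * B[(jb * a.bk + k) * N + n];
        }
    }
}
```

The work of `bcsc_spmm_ref` scales with the number of stored blocks, which is the source of the sparsity speedups; the block size trades this saving against the efficiency of each dense block update.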

The PARLOOPER kernel in Listing 5 for block-SpMM is similar to the regular GEMM. Lines 7-9 declare the loops of the sparse GEMM, while the main compute kernel is the bcsc_spmm_tpp sketched in the previous paragraph. This TPP takes as input the sparse matrix A in BCSC format (line 16), while matrices B and C are regular dense tensors.

IV DL Workloads via PARLOOPER/TPP

We implemented end-to-end contemporary DL workloads via PARLOOPER/TPP within PyTorch C++ extensions in order to show the viability of our approach in real-world scenarios. We opted for the following workloads: (i) Transformer models (BERT [36]) to capture encoder architectures with attention mechanisms, (ii) Large Language Models (LLM GPT-J [37] and Llama2 [38]) to capture decoder-only transformer architectures used in autoregressive language modeling, (iii) sparse transformer models for efficient inference, and (iv) Convolutional Neural Networks (CNNs), which are prevalent in computer vision applications.

IV-A Large Language Model Training and Inference

The BERT model is a transformer pre-trained with a combination of a masked language modeling objective and next-sentence prediction [36]. We implemented the end-to-end workload using the architecture from Hugging Face [39]. We implemented four fused layers as PyTorch extensions in C++ using PARLOOPER and TPP: the Bert-Embeddings, Bert-Self-Attention, Bert-Output/Bert-SelfOutput and Bert-Intermediate layers. In Listing 6 we show an implementation of the Bert-Output/Bert-SelfOutput module with PARLOOPER/TPPs. We invoke a BRGEMM TPP over blocked tensor layouts, and fuse the bias TPP, dropout TPP, residual-add TPPs and layernorm-equation TPPs [12] at a small 2D-block granularity to maximize the in-cache reuse of tensors among subsequent operators [40, 41]. Unlike previous work [12], where the nested loops have a fixed ordering and parallelization, we abstract the specific instantiation with PARLOOPER and autotune it for the problem sizes of interest. In a similar fashion, the Bert-Self-Attention layer consists of blocked tensor contractions fused with scale, add, dropout and softmax TPP blocks. The Bert-Embeddings layer is composed of embedding lookups, followed by layernorm and dropout TPPs. Finally, the Bert-Intermediate layer is composed of a BRGEMM TPP followed by bias-add and Gaussian Error Linear Unit (GELU) TPPs. All modules are implemented via the PARLOOPER/TPP paradigm and are autotuned for specific shapes.
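The operator fusion in the Bert-Output module can be sketched as follows, in C++. This is a simplified, hypothetical illustration rather than the code of Listing 6: plain loops stand in for the BRGEMM, bias, add and layernorm TPPs, dropout is omitted (an inference-style assumption), and the names and row-major shapes are ours. Because layernorm normalizes over the full hidden dimension, each fused block here spans bm complete rows, and all operators run on that block before moving on.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// out = LayerNorm(x * W + bias + residual) * gamma + beta, one bm-row block at a time.
// Row-major shapes: x [T][Kd], W [Kd][H], residual/out [T][H], bias/gamma/beta [H].
void bert_output_fused(const std::vector<float>& x, const std::vector<float>& W,
                       const std::vector<float>& bias, const std::vector<float>& residual,
                       const std::vector<float>& gamma, const std::vector<float>& beta,
                       std::vector<float>& out, int T, int Kd, int H, int bm,
                       float eps = 1e-5f) {
  assert(T % bm == 0 && out.size() == static_cast<std::size_t>(T) * H);
  for (int t0 = 0; t0 < T; t0 += bm) {  // independent blocks: the parallelizable loop
    float* y = &out[static_cast<std::size_t>(t0) * H];
    // 1) GEMM on the block (stand-in for the BRGEMM TPP).
    for (int i = 0; i < bm; ++i)
      for (int h = 0; h < H; ++h) {
        float acc = 0.0f;
        for (int k = 0; k < Kd; ++k) acc += x[(t0 + i) * Kd + k] * W[k * H + h];
        y[i * H + h] = acc;
      }
    // 2) Bias add and residual add on the same, still cache-resident block.
    for (int i = 0; i < bm; ++i)
      for (int h = 0; h < H; ++h) y[i * H + h] += bias[h] + residual[(t0 + i) * H + h];
    // 3) LayerNorm over the hidden dimension of each row of the block.
    for (int i = 0; i < bm; ++i) {
      float mean = 0.0f, var = 0.0f;
      for (int h = 0; h < H; ++h) mean += y[i * H + h];
      mean /= H;
      for (int h = 0; h < H; ++h) var += (y[i * H + h] - mean) * (y[i * H + h] - mean);
      var /= H;
      const float inv_std = 1.0f / std::sqrt(var + eps);
      for (int h = 0; h < H; ++h) y[i * H + h] = (y[i * H + h] - mean) * inv_std * gamma[h] + beta[h];
    }
  }
}
```

An unfused implementation would write the full T×H GEMM result to memory and read it back for each subsequent operator; here every block is produced and finalized while it is still in cache.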

IV-B Unstructured block-sparse BERT inference

We extend the BERT PyTorch extension described in Section IV-A to leverage the Block-SpMM kernel for the tensor contractions instead of dense BRGEMM TPPs. To obtain a block-sparse BERT model, we follow the methodology of recent work [42]. In short, we obtain an unstructured block-sparse BERT model from a densely trained checkpoint by applying knowledge distillation and block-wise weight pruning. We fine-tuned the block-sparse model for 40 epochs, and the final sparsity target was reached incrementally [42].
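Block-wise pruning can be illustrated with one common criterion, block magnitude (L2-norm) pruning, sketched below in C++. This is an assumption for illustration: the exact pruning criterion and schedule of [42] may differ, and the function name is hypothetical. The function zeroes the requested fraction of b×b blocks with the smallest norm.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <numeric>
#include <vector>

// Zeroes round(sparsity * #blocks) of the b x b blocks with the smallest L2 norm
// in a row-major rows x cols matrix. Returns the number of pruned blocks.
int prune_blocks_by_magnitude(std::vector<float>& w, int rows, int cols, int b,
                              double sparsity) {
  assert(rows % b == 0 && cols % b == 0 && sparsity >= 0.0 && sparsity <= 1.0);
  const int bcols = cols / b, nblocks = (rows / b) * bcols;
  std::vector<double> norm2(nblocks, 0.0);  // squared L2 norm of every block
  for (int i = 0; i < rows; ++i)
    for (int j = 0; j < cols; ++j) {
      const double v = w[static_cast<std::size_t>(i) * cols + j];
      norm2[(i / b) * bcols + j / b] += v * v;
    }
  std::vector<int> order(nblocks);
  std::iota(order.begin(), order.end(), 0);
  // Ascending norm; stable_sort keeps ties in block-id order for determinism.
  std::stable_sort(order.begin(), order.end(),
                   [&](int p, int q) { return norm2[p] < norm2[q]; });
  const int npruned = static_cast<int>(std::lround(sparsity * nblocks));
  for (int n = 0; n < npruned; ++n) {
    const int ib = order[n] / bcols, jb = order[n] % bcols;
    for (int i = 0; i < b; ++i)
      for (int j = 0; j < b; ++j) w[static_cast<std::size_t>(ib * b + i) * cols + jb * b + j] = 0.0f;
  }
  return npruned;
}
```

To mimic an incremental schedule, one could call this with a rising target (for example 0.2, 0.4, 0.6, 0.8) between fine-tuning phases; the resulting zero blocks are exactly what the BCSC format then skips.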

IV-C Convolutional Neural Networks (CNN)

For ResNet-50 [22] training we integrated our PARLOOPER CNN kernels into PyTorch as C++ extensions. We followed the architecture from the original paper [22], where the convolution layers (see Listing 4) are followed by batch-normalization layers (see prior work [12] for batchnorm with TPPs). For the fully connected layer (Listing 1) and the pooling layers we simply used their respective TPP implementations (also through the PyTorch C++ extensions).

V Experimental Results

We conduct experiments on machines spanning CPUs with matrix accelerators (SPR, GVT3), a desktop CPU with AVX-512 (Zen4) and a hybrid CPU (ADL):

SPR: A 2-socket Intel Xeon 8480+ system with 2×256 GB of DDR5-4800 memory and 8 channels per socket. Each CPU has 56 Golden Cove cores with AVX-512 and Intel AMX technology, and a TDP of 350W.

GVT3: An AWS AArch64 Graviton 3 instance with 64 Neoverse V1 cores featuring SVE, including MMLA for matrix operations. Each socket has 8 channels of DDR5 memory, which we determined through measurements to be DDR5-4800. The TDP per socket is not disclosed by AWS.

Zen4: An AMD Ryzen 9 7950X desktop processor with 64 GB of 2-channel DDR5-6000 memory. The CPU has 16 Zen4 cores with support for AVX-512, and a TDP of 205W.

ADL:An Intel i9-12900K desktop processor with 2-channel DDR5-5600 memory and a capacity of 64 GB. The CPU features 8 Intel Golden Cove Performance Cores and 8 Intel Gracemont Efficiency cores and has a TDP of 241W.

For all experiments with our PARLOOPER/TPP framework, we use the LIBXSMM TPP backend for the TPP JIT code generation; this backend is single-threaded (serial).

V-A Standalone Kernel Performance

V-A1 GEMM and MLP standalone kernels

Figure 2 shows the performance of GEMM with varying sizes on SPR (Top), GVT3 (Middle) and Zen4 (Bottom), for two precisions: FP32 (shaded bars) and BF16 (solid bars). The blue bars correspond to our implementation with PARLOOPER/TPP, while the orange bars correspond to oneDNN [43]. For the GVT3 experiments with oneDNN, we enable the ARM Compute Library [44] (ACL) backend, which is vendor-optimized for AArch64. Our implementation with PARLOOPER and TPPs matches or exceeds the performance of the vendor-optimized libraries. While the FP32 results are mostly on par, for BF16 we observe speedups of up to 1.98× on SPR. The SPR experiments with the BF16 datatype leverage the AMX instructions, which offer up to 16× more peak FLOPS than FP32 execution with AVX-512 while sharing the same memory hierarchy. Therefore, BF16 GEMM performance is more sensitive to tensor layouts and cache blocking, since the higher compute peak puts more stress on the cache/memory subsystem. The oneDNN implementation does not use a blocked layout for one of the input matrices (see Listing 1), which results in extraneous cache-conflict misses for the case with leading dimension 4096. On SPR, by leveraging BF16 and AMX we see up to 9× speedup over FP32 execution. Similarly, on GVT3 our implementation outperforms oneDNN with ACL by up to 1.45× for BF16. Our newly introduced BF16-MMLA BRGEMM kernels (Subsection III-A2) offer up to 3.43× speedup over the FP32 SVE256 implementation. On Zen4 we also benchmarked the AMD-optimized AOCL-AOCC library (version 4.1), whose results correspond to the green bars. On Zen4 all implementations perform equally well (within 4%), and the AVX512-BF16 accelerated GEMMs achieve a 2× speedup over the FP32 versions.
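The conflict-miss effect of a leading dimension of 4096 can be illustrated with a small C++ model of L1 set mapping, together with a packing routine into a blocked layout. The cache geometry is an assumption (48 KiB, 12-way, 64-byte lines, i.e. 64 sets, roughly a Golden Cove L1D), only the first cache line of each tile row is considered, and the function names are hypothetical; the code shows the address arithmetic, not oneDNN's or LIBXSMM's actual behavior.

```cpp
#include <cassert>
#include <cstddef>
#include <set>

// Assumed L1D geometry: 48 KiB, 12-way, 64-byte lines -> 64 sets.
constexpr std::size_t kLineBytes = 64;
constexpr std::size_t kNumSets = 48 * 1024 / (12 * kLineBytes);

std::size_t l1_set(std::size_t byte_addr) { return (byte_addr / kLineBytes) % kNumSets; }

// Distinct L1 sets hit by the first line of each of `rows` tile rows that are
// `row_stride_bytes` apart in memory.
std::size_t distinct_sets(std::size_t rows, std::size_t row_stride_bytes) {
  std::set<std::size_t> sets;
  for (std::size_t r = 0; r < rows; ++r) sets.insert(l1_set(r * row_stride_bytes));
  return sets.size();
}

// Copies a flat row-major M x K matrix into the blocked layout [M/bm][K/bk][bm][bk],
// so that each bm x bk tile is one contiguous chunk of memory.
void pack_blocked(const float* flat, float* blocked, int M, int K, int bm, int bk) {
  assert(M % bm == 0 && K % bk == 0);
  for (int mb = 0; mb < M / bm; ++mb)
    for (int kb = 0; kb < K / bk; ++kb)
      for (int i = 0; i < bm; ++i)
        for (int j = 0; j < bk; ++j)
          blocked[((static_cast<std::size_t>(mb) * (K / bk) + kb) * bm + i) * bk + j] =
              flat[static_cast<std::size_t>(mb * bm + i) * K + kb * bk + j];
}
```

Under these assumptions, 16 rows of an FP32 tile taken from a flat matrix with leading dimension 4096 (a 16 KiB row stride) all map to a single set, exceeding its 12 ways, whereas the same rows in a blocked tile with bk = 32 spread over 16 different sets.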

Figure 3 shows the performance of the PARLOOPER BF16 MLP with bias addition and ReLU activation function. For these experiments we keep the dimension corresponding to the mini-batch (in the DL context of MLPs) fixed, and we vary the remaining dimensions (i.e., those of the weight tensors of the cascading GEMMs). As we increase the weight sizes, the efficiency (dashed lines/right y-axis) keeps increasing due to the increased tensor reuse. On SPR the efficiency maxes out at 37.4%. This is due to the cascading nature of the activation tensors among layers, which causes core-to-core transfers as the activations flow from one layer to the next; in such scenarios the LLC bandwidth on SPR is the limiting factor. SPR has a substantially higher compute peak than GVT3 and Zen4: even though the latter two platforms reach more than 90% of their compute peak, SPR ends up being up to 3.3× and 6.6× faster than GVT3 and Zen4, respectively, thanks to the AMX-accelerated GEMMs.

V-A2 Performance comparison with TVM-Autoscheduler & Mojo

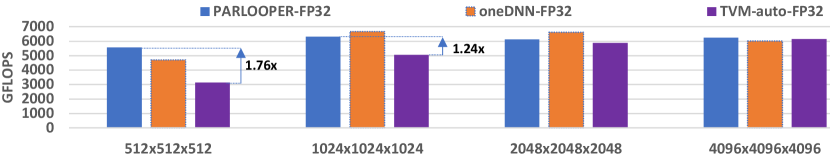

First, we compared the performance of PARLOOPER/TPP with TVM-Autoscheduler/Ansor [17], which takes tensor expressions as input and generates high-performance code. We allowed tensor B to be reordered during the search/tuning phase (to maximize performance and enable code generation with specialized VNNI/AMX hardware). Nevertheless, TVM-Autoscheduler was not able to generate code that leverages the hardware-accelerated VNNI/AMX BF16 instructions; instead, it generated slow replacement instruction sequences. This is a major limitation for the practical deployment of TVM-Autoscheduler in DL workloads (also highlighted in recent work [46]), where BF16 and low-precision contractions are ubiquitous and offer speedups of up to 9× over FP32 on SPR (see Figure 2). In Figure 4 we compare the FP32 GEMM performance on SPR, where we allowed TVM-Autoscheduler to search for 1000 schedules (the value recommended in the TVM repository). For the smaller GEMMs with limited data reuse, PARLOOPER outperforms TVM by up to 1.76×, whereas for the larger GEMMs, which are less sensitive to parallelization and tiling, TVM achieves performance comparable to PARLOOPER and oneDNN. However, the time TVM requires to obtain these schedules/kernels through search and autotuning is substantially higher than in our framework. PARLOOPER searched through 1000 “outer loop” configurations for the 4 experiments in Figure 4 in 2 seconds, 9 seconds, 2 minutes and 22 minutes, respectively, whereas TVM required 17, 18, 24 and 50 minutes, respectively, for its autosearch/tuning, i.e., it was 2.3× to 500× slower than the autotuning in PARLOOPER. In the PARLOOPER/TPP framework we “stop” the tuning/search space at the boundaries/abstraction level of the TPPs (which operate at the 2D-subtensor level); as such, we do not have to search schedules or autotune all the way down to register blocking/allocation and instruction selection, since this level of optimization is undertaken by the TPP backend code generation [12].
In contrast, TVM's search space extends all the way down to vectorization, register blocking and instruction selection. Thus, the search space of a PARLOOPER/TPP program covers solely cache-blocking and parallelization options, which allows it to generate up to 1.76× faster code while being up to 500× faster in the autotuning phase. These observations, together with TVM's inability to generate performant low-precision kernels, make it impractical to use TVM for generating fully-tuned end-to-end workloads that leverage the hardware capabilities of modern CPUs [46, 13].
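To illustrate why a search restricted to “outer loop” instantiations stays small, the C++ sketch below enumerates candidate schedules for a kernel with three logical loops: every loop order, combined with every non-empty choice of parallelized loops (marked in upper case). The notation is only loosely modeled on a loop_spec_string and is hypothetical; the real PARLOOPER grammar (e.g., multi-level blocking and explicit parallelization directives) is richer and is not reproduced here.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <string>
#include <vector>

// Every permutation of `loops`, combined with every non-empty subset of
// positions marked parallel (upper case). Hypothetical, simplified notation.
std::vector<std::string> enumerate_loop_specs(std::string loops) {
  std::vector<std::string> specs;
  std::sort(loops.begin(), loops.end());  // next_permutation needs a sorted start
  const int n = static_cast<int>(loops.size());
  do {
    for (int mask = 1; mask < (1 << n); ++mask) {
      std::string s = loops;
      for (int i = 0; i < n; ++i)
        if (mask & (1 << i))
          s[i] = static_cast<char>(std::toupper(static_cast<unsigned char>(s[i])));
      specs.push_back(s);
    }
  } while (std::next_permutation(loops.begin(), loops.end()));
  return specs;
}
```

For three loops this yields 3! × 7 = 42 candidates; adding a few blocking factors per loop multiplies that count, yet it remains far smaller than a space that also enumerates register blocking, vectorization and instruction selection.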

In Figure 5 we compare the FP32 GEMM written via PARLOOPER/TPP with the GEMM written in the Mojo language [45]. In the Mojo GEMM example, the GEMM loops are specified at a high level along with the loop tiling, akin to PARLOOPER. However, in Mojo the user has to explicitly provide unrolling, vectorization and parallelization hints to achieve good performance. We extract the Mojo GEMM results from their blog, where the tested shapes arise from BERT, GPT and DLRM workloads, and the benchmarked CPU platform is a Xeon 8223 (an AWS c5.4xlarge instance) [45]. We observe that the 20-LOC PARLOOPER/TPP GEMM consistently outperforms the Mojo GEMM, with a geomean speedup of 1.35×.

V-A3 Performance modelling of GEMM

Figure 6 shows the correlation between modeled and measured results of two GEMMs on SPR (Left) and Zen4 (Right) for various loop schedules/loop_spec_strings (see Section II-E). The blue line shows the measured performance (left y-axis), and the red line shows the modeled performance of the schedules (right y-axis). Our high-level performance modeling scheme captures the trends pertaining to the various loop_spec_strings: loop instantiations with poor locality and low concurrency get a low score. As a result, in both cases the top-5 modeled classes (yellow-shaded regions) contain the most performant loop instantiation. These results indicate that our modeling tool can be used to identify performant loop-instantiation candidates.
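The “top-5” criterion above can be stated precisely as a check over per-schedule modeled scores and measured performance. The C++ sketch below is a hypothetical helper for that check only; it does not reproduce the performance model of Section II-E.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// True if the schedule with the best measured performance is among the k
// schedules with the highest modeled score.
bool best_measured_in_top_k(const std::vector<double>& modeled,
                            const std::vector<double>& measured, std::size_t k) {
  assert(modeled.size() == measured.size() && !modeled.empty());
  std::vector<std::size_t> idx(modeled.size());
  std::iota(idx.begin(), idx.end(), std::size_t{0});
  const auto top_end = idx.begin() + static_cast<std::ptrdiff_t>(std::min(k, idx.size()));
  // Move the k highest-scoring schedules (according to the model) to the front.
  std::partial_sort(idx.begin(), top_end, idx.end(),
                    [&](std::size_t p, std::size_t q) { return modeled[p] > modeled[q]; });
  const auto best = static_cast<std::size_t>(
      std::max_element(measured.begin(), measured.end()) - measured.begin());
  return std::find(idx.begin(), top_end, best) != top_end;
}
```

In practice this means only the k top-ranked candidates need to be benchmarked, instead of the whole space of loop instantiations.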

V-A4 Standalone convolution kernels benchmarking

Figure 7 shows the performance of the PARLOOPER implementation of the ResNet-50 [22] convolutions. The minibatch size used on each platform equals its number of cores, except on ADL, where the minibatch is 1 (single-batch inference). We compare the PARLOOPER/TPP implementation (blue bars) against the oneDNN implementation (orange bars). On the first 3 platforms (SPR, GVT3 and Zen4) we benchmark BF16 convolutions, whereas on ADL we benchmark FP32 since this platform has no BF16 hardware support. We match or exceed the oneDNN performance across platforms and convolution shapes. Considering the geometric mean on each platform (SPR/GVT3/Zen4/ADL), our implementation outperforms oneDNN by 1.16×, 1.75×, 1.12× and 1.14×, respectively. On GVT3, the oneDNN/ACL integration is inefficient since it uses the FP32 front-end, and in the backend the input tensors are converted to BF16 on-the-fly before the actual computation with the BF16-MMLA instructions. We also highlight the result on ADL, which used both the Performance (P) and Efficiency (E) cores: in our implementation we use the dynamic OpenMP directive (see Section II-B) to account for the core heterogeneity. The convolution code with PARLOOPER/TPP is identical to the one in Listing 4 across all platforms and compute precisions: the runtime knob loop_spec_string instantiates the “outer” loops as tuned for the problem/platform at hand, and the BRGEMM TPP backend emits specialized code for each micro-architecture.
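The idea behind dynamic scheduling on a hybrid CPU can be sketched with plain OpenMP in C++. PARLOOPER's own directive syntax (Section II-B) is not reproduced here, and the helper name is hypothetical: independent tiles (e.g., one per convolution output block) are handed out one at a time, so faster P-cores simply process more tiles than slower E-cores instead of idling at the final barrier, as a static equal split would force them to.

```cpp
#include <cassert>
#include <vector>

// Runs body(t) for every tile t with OpenMP dynamic scheduling (chunk size 1).
// Without OpenMP enabled (e.g. no -fopenmp) the pragma is ignored and the
// loop runs serially with the same result.
template <typename Body>
void for_each_tile_dynamic(int num_tiles, Body body) {
  assert(num_tiles >= 0);
#pragma omp parallel for schedule(dynamic, 1)
  for (int t = 0; t < num_tiles; ++t) body(t);
}
```

The chunk size trades load balance against scheduling overhead; with coarse tiles such as whole BRGEMM calls, a chunk of one tile is usually affordable.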

V-A5 Sparse Dense Matrix-Multiply benchmarking

Figure 8 shows the BF16 Block-SpMM performance of a 2k×2k×2k matrix multiplication on (Top) SPR, (Middle) GVT3, and (Bottom) Zen4. The x-axis shows the sparsity level, and the y-axis (in logarithmic scale) shows the effective GFLOPS. To the best of our knowledge, there is no vendor library for low-precision block-SpMM, thus we show only PARLOOPER/TPP results. The bars correspond to various block sizes of the block-sparsity structure. As a baseline, the dashed line shows the dense GEMM performance. We see that block sparsity together with low-precision FMA acceleration can offer speedups even for modest sparsity. On SPR, with the largest block size we can match the dense GEMM even without any sparsity; for 50% sparsity we see a 1.7× speedup over the dense AMX-accelerated version, and for 90% sparsity a 5.3× speedup. For the intermediate block sizes we start seeing benefits for sparsity levels greater than 70%, and for the smallest block size we do not see any benefits. This behavior on SPR is due to the AMX systolic array: with the larger block sizes, we are able to use efficient two-dimensional AMX tile blocking. In contrast, for smaller block sizes the “dense” microkernel has a small accumulation length, which restricts the attainable speedup with AMX. The 4×4 case is restricted to a small fraction of the BF16 peak, since the systolic array is fully utilized only with accumulation lengths that are multiples of 32 (an accumulation length of 4 uses at most 4/32 = 1/8 of it). On GVT3 and Zen4 we see benefits over the dense GEMM kernels even for sparsity levels greater than 10% for all block sizes (the BF16 FMA instructions on these platforms require an accumulation chain of at least 4 and 2, respectively). On GVT3 the maximum attainable speedup with SpMM over the dense baseline is 9.4×, and on Zen4 it is 9.8×.
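The “effective GFLOPS” metric and the ideal benefit of skipping zero blocks can be written down as two small C++ helpers. These are our assumptions about the metric (dense-equivalent flop count 2·M·N·K divided by runtime, so that sparse and dense runs share one axis), and the function names are hypothetical.

```cpp
#include <cassert>

// Dense-equivalent throughput of an M x N x K (Sp)MM that ran for `seconds`.
double effective_gflops(double M, double N, double K, double seconds) {
  assert(seconds > 0.0);
  return 2.0 * M * N * K / seconds * 1e-9;
}

// Upper bound on the speedup over dense from skipping zero blocks alone,
// assuming the kept blocks run at the same flop rate as the dense kernel.
double ideal_sparse_speedup(double sparsity) {
  assert(sparsity >= 0.0 && sparsity < 1.0);
  return 1.0 / (1.0 - sparsity);
}
```

For example, at 90% sparsity this bound is 10×; a kernel can approach it only if each stored block still runs near the dense flop rate, which is exactly what small blocks on AMX prevent.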

V-B End-to-end DL Workload Performance

V-B1 BERT Training Results

In Figure 9 we show BERT-Large SQuAD fine-tuning [36] performance in sequences/sec on SPR (blue-shaded area), GVT3 (green-shaded area), and Zen4 (red-shaded area). On SPR we compare against alternative implementations: (i) Hugging Face (yellow) [47], (ii) Intel PyTorch Extensions (IPEX) [48] with oneDNN, and (iii) PyTorch Extensions with TPP only [12]. Our implementation extends the open-source code of Georganas et al. [12] with PARLOOPER.

We observe that PARLOOPER/TPP speeds up the SOTA implementation [12] by 1.22× (43.3 vs. 35.3 sequences/sec). This is achieved via better loop instantiations, which we tuned specifically for the shapes of the workload and which resulted in faster tensor contractions. On SPR we see tensor contractions with an average performance of 40 TFLOPS, which is in line with our results in Section V-A1 (the prior work [12] merely had static loop orders in the PyTorch extensions). Our implementation shows a speedup of 3.3× over the Intel PyTorch Extensions (IPEX) [48] with oneDNN, which does not use the Unpad optimization that removes unnecessary computations on padded tensors [12]. Our code (Listing 6) is identical across all platforms, and on SPR it is 2.8× faster than on GVT3 and 4.4× faster than on Zen4 due to the higher BF16-AMX compute peak of the SPR machine. Our BERT implementation with PARLOOPER/TPP has been adopted by Intel for the recent MLPerf-v2.1 [49] BERT training submission (see Table I). The SPR multi-node results (8 and 16 nodes) leverage our PARLOOPER/TPP implementation integrated with PyTorch Extensions, further highlighting the viability of our framework for real-world, distributed-memory production DL workloads. For reference, the 16-node performance is within 2.4× of the performance of 8 Nvidia A100 GPUs.

Table I: MLPerf-v2.1 BERT training results.

| System | Time to train (minutes) |
|---|---|
| 8 nodes SPR (16 sockets) | 85.91 |
| 16 nodes SPR (32 sockets) | 47.26 |
| DGX Box (8×A100 GPU) | 19.6 |
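From the times to train in Table I, doubling the SPR nodes from 8 to 16 gives the following speedup S and parallel efficiency E, and the ratio to the DGX result is the 2.4× gap quoted in the text:

```latex
\[
S_{8\to 16} = \frac{85.91}{47.26} \approx 1.82, \qquad
E_{8\to 16} = \frac{S_{8\to 16}}{2} \approx 0.91, \qquad
\frac{47.26}{19.6} \approx 2.41 .
\]
```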

V-B2 Block-sparse BERT Inference Results

In Figure 10-Left we show the inference results of the block-sparse BERT-base fine-tuned on SQuAD. We followed the methodology outlined in Section IV-B and obtained an 80% unstructured block-sparse model with an 8×8 block size. The sparse model achieves an F1 score of 87.1, while the dense model achieves 88.23, i.e., the accuracy drop is less than 1.5%; this accuracy requirement led us to the block size and sparsity level of this experiment. Since we target latency-oriented BF16 inference with a single batch (BS=1), we use 8 cores on each platform; in practice one would execute multiple instances in parallel to fully utilize the chip. The block-sparse BERT (green bars), leveraging the block-SpMM PARLOOPER kernels, achieves speedups of 1.75×, 1.95× and 2.79× over the dense BERT-base (blue bars) on SPR, GVT3 and Zen4, respectively. We create roofline models for each platform by assuming a maximal speedup of 5× on the contractions of the workload (due to the 80% sparsity) and no speedup for the remaining components. The dotted yellow bars depict these roofline models, and our sparse BERT achieves 71%, 72% and 88% of the roofline on SPR, GVT3 and Zen4, respectively.
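The roofline described above is Amdahl's law applied to the contraction share of the dense runtime. A minimal C++ sketch, assuming that share is known (the text does not state it per platform, and the function name is hypothetical):

```cpp
#include <cassert>

// Best-case end-to-end speedup when a fraction `contraction_frac` of the dense
// runtime is accelerated by at most `max_speedup` (5x at 80% sparsity) and the
// remaining components are unchanged (Amdahl's law).
double sparse_roofline_speedup(double contraction_frac, double max_speedup) {
  assert(contraction_frac >= 0.0 && contraction_frac <= 1.0 && max_speedup >= 1.0);
  return 1.0 / ((1.0 - contraction_frac) + contraction_frac / max_speedup);
}
```

Dividing a measured speedup by this bound gives the fraction of the roofline achieved, as reported per platform above; a larger non-contraction share lowers the bound regardless of how fast the sparse kernels are.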

To the best of our knowledge, there are no vendor libraries for block-SpMM primitives with low precision and hardware acceleration, thus we only present PARLOOPER results for BF16. In Figure 10-Right we compare our implementation with a SOTA, proprietary sparse inference runtime, namely DeepSparse [50]. We extracted the DeepSparse result from their website [50]; this experiment also corresponds to a sparse BERT-base with an F1 score of 87.1 (the same as our 80% block-sparse BERT-base). We used the same AWS c5.12xlarge instance and the same parameters (FP32 precision, BS=32, 24 cores), and we observe that the PARLOOPER implementation with block-SpMM is 1.56× faster than DeepSparse.

V-B3 Large Language Model Inference Results

Figure 11 shows the LLM inference results on SPR (Top) and GVT3 (Bottom) for GPT-J-6B [37] and Llama2-13B [38] with two software stacks: Hugging Face (HF) and PyTorch extensions with PARLOOPER/TPP. All experiments use 1024 input tokens, 32 output tokens and batch size 1. On SPR, we see speedups ranging from 1.1× to 2.3× over the HF code that uses oneDNN. We also see that the BF16 datatype accelerates both the first-token latency (blue portion), which is compute bound, and the next-token latency (orange portion), which is memory-bandwidth bound, by 5.7× and 1.9×, respectively. Similarly, on GVT3 PARLOOPER is faster than the HF code. On GVT3 we note that the BF16 HF code was extremely slow and timed out after 2 hours. We suspect this is due to an inefficient BF16 execution path of the HF code on the GVT3 platform that uses a reference implementation. Nevertheless, our PARLOOPER implementation, which is identical for both platforms and all precisions, achieves speedups on GVT3 by leveraging the BF16 datatype for both the first and the next tokens.
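Why BF16 helps the memory-bound next-token phase can be seen from a simple lower bound: at batch size 1, every weight is streamed from DRAM once per generated token. The C++ sketch below encodes that bound under stated assumptions (KV-cache traffic and compute time are ignored; the function name is hypothetical, and any parameter count or bandwidth plugged in is illustrative, not a measurement from this work).

```cpp
#include <cassert>

// Lower bound (in ms) on per-token decode latency: weight bytes / DRAM bandwidth.
// Ignores KV-cache traffic and compute (assumption; reasonable only at batch 1).
double min_next_token_ms(double num_params, double bytes_per_param, double dram_gb_per_s) {
  assert(num_params > 0 && bytes_per_param > 0 && dram_gb_per_s > 0);
  return num_params * bytes_per_param / (dram_gb_per_s * 1e9) * 1e3;
}
```

Halving the bytes per parameter (FP32 to BF16) halves this bound, so about 2× is the ideal next-token speedup, close to the 1.9× observed on SPR; the compute-bound first token instead benefits from the much higher BF16 compute peak.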

V-B4 ResNet-50 training Results

Table II: ResNet-50 BF16 training performance on a single socket.

| System | Implementation | Performance (images/sec) |
|---|---|---|
| GVT3 | PARLOOPER + TPP | 145 |
| SPR | PARLOOPER + TPP | 255 |
| SPR | IPEX + oneDNN | 265 |

Table II shows the end-to-end results for ResNet-50 BF16 training on a single-socket SPR and GVT3 system (Subsection IV-C). The PARLOOPER+TPP implementation is within 4% of the Intel PyTorch Extensions (IPEX) [48] with oneDNN. The same code can be deployed on GVT3 due to the platform-agnostic nature of PARLOOPER and TPP, yielding performance within 1.76× of SPR.

VI Related Work

With the rapid development of DL and HPC workloads, there is a shift to programming paradigms that break the vendor-library performance-portability “jail”. One alternative, “library-free” approach for optimizing DL and HPC kernels is to use modern Tensor Compilers e.g. PlaidML [14], TVM [15], Tensor Comprehensions [16], Ansor [17], IREE [51], Mojo [45]. However, current state-of-the-art tools are only capable of compiling small code blocks while large-scale operators require excessively long compilation, auto-tuning times and frequently result in code that falls short of achieving peak performance [13]. We envision that the high-level loop and tensor abstractions we presented in this work can benefit a tensor compiler infrastructure, e.g. by using the TPP abstraction layer for the ISA-specific backend code generation, and by using the loop instantiation performance modeling in their internal performance models.

Domain Specific Languages (DSLs) have traditionally been used in HPC workloads and have effectively addressed portability and performance challenges in specific scientific domains [18, 19, 52]. Nevertheless, these DSLs may be considered point solutions rather than a universal methodology across domains, and they require continuous updates to adapt to the evolving nuances of CPU architectures. To this extent, PARLOOPER could be seen as a lightweight library framework for kernel development, akin to a DSL. However, since the loop abstraction it provides is generic and high-level, it is not as narrow/task-specific as the majority of DSLs.

Similar to our approach, but with applicability to GPUs is the CUTLASS [53] framework. It is a collection of CUDA C++ template abstractions for implementing high-performance GEMM and related computations. It decomposes the “moving parts” of tensor contractions into reusable, modular components abstracted by C++ template classes. Primitives at different levels can be specialized and tuned via custom blocking sizes, data types, and other algorithmic strategies. All these high-level ideas are also prevalent in the design philosophy of the PARLOOPER/TPP framework. The Composable Kernel (CK) library [54] aims to provide a programming model for writing performance critical kernels for machine learning workloads targeting multiple architectures (e.g. GPUs and CPUs). It builds on two ideas to attain performance, portability and code maintainability: (i) A tile-based programming model and (ii) Tensor Coordinate Transformation primitives. The PARLOOPER/TPP framework tackles the same problems on CPU platforms via Tensor and Loop high-level abstractions.

VII Conclusions And Future Work

In this work we presented a framework to develop efficient, portable DL and HPC kernels for modern CPU architectures. We demonstrated the efficacy of our approach using standalone kernels and end-to-end training and inference DL workloads that outperform state-of-the-art implementations on multiple CPU platforms. As future work, we plan to integrate the standalone kernels we developed into additional end-to-end workloads (e.g., DLRM [30]). Moreover, we plan to apply our PARLOOPER/TPP kernel development framework to additional CPU architectures (e.g., with the RISC-V ISA).

References

- [1] Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton. ImageNet classification with deep convolutional neural networks. Advances in neural information processing systems, pages 1097–1105, 2012.

- [2] Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, and Andrew Rabinovich. Going deeper with convolutions. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1–9, 2015.

- [3] Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- [4] Dong Yu, Michael L Seltzer, Jinyu Li, Jui-Ting Huang, and Frank Seide. Feature learning in deep neural networks-studies on speech recognition tasks. arXiv preprint arXiv:1301.3605, 2013.

- [5] Yonghui Wu, Mike Schuster, Zhifeng Chen, Quoc V Le, Mohammad Norouzi, Wolfgang Macherey, Maxim Krikun, Yuan Cao, Qin Gao, Klaus Macherey, et al. Google’s neural machine translation system: Bridging the gap between human and machine translation. arXiv preprint arXiv:1609.08144, 2016.

- [6] Heng-Tze Cheng, Levent Koc, Jeremiah Harmsen, Tal Shaked, Tushar Chandra, Hrishi Aradhye, Glen Anderson, Greg Corrado, Wei Chai, Mustafa Ispir, et al. Wide & deep learning for recommender systems. In Proceedings of the 1st Workshop on Deep Learning for Recommender Systems, pages 7–10. ACM, 2016.

- [7] Thomas Wolf, Julien Chaumond, Lysandre Debut, Victor Sanh, Clement Delangue, Anthony Moi, Pierric Cistac, Morgan Funtowicz, Joe Davison, Sam Shleifer, et al. Transformers: State-of-the-art natural language processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, pages 38–45, 2020.

- [8] Erik Gawehn, Jan A Hiss, and Gisbert Schneider. Deep learning in drug discovery. Molecular informatics, 35(1):3–14, 2016.

- [9] Garrett B Goh, Nathan O Hodas, and Abhinav Vishnu. Deep learning for computational chemistry. Journal of computational chemistry, 38(16):1291–1307, 2017.

- [10] Maithra Raghu and Eric Schmidt. A survey of deep learning for scientific discovery. arXiv preprint arXiv:2003.11755, 2020.

- [11] Edoardo Angelo Di Napoli, Paolo Bientinesi, Jiajia Li, and André Uschmajew. High-performance tensor computations in scientific computing and data science. Frontiers in Applied Mathematics and Statistics, page 93, 2022.

- [12] Evangelos Georganas, Dhiraj Kalamkar, Sasikanth Avancha, Menachem Adelman, Cristina Anderson, Alexander Breuer, Jeremy Bruestle, Narendra Chaudhary, Abhisek Kundu, Denise Kutnick, et al. Tensor processing primitives: A programming abstraction for efficiency and portability in deep learning workloads. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, pages 1–14, 2021.

- [13] Paul Barham and Michael Isard. Machine learning systems are stuck in a rut. In Proceedings of the Workshop on Hot Topics in Operating Systems, pages 177–183, 2019.

- [14] Tim Zerrell and Jeremy Bruestle. Stripe: Tensor compilation via the nested polyhedral model. arXiv preprint arXiv:1903.06498, 2019.

- [15] Tianqi Chen, Thierry Moreau, Ziheng Jiang, Lianmin Zheng, Eddie Yan, Haichen Shen, Meghan Cowan, Leyuan Wang, Yuwei Hu, Luis Ceze, et al. TVM: An automated end-to-end optimizing compiler for deep learning. In 13th USENIX Symposium on Operating Systems Design and Implementation (OSDI 18), pages 578–594, 2018.

- [16] Nicolas Vasilache, Oleksandr Zinenko, Theodoros Theodoridis, Priya Goyal, Zachary DeVito, William S Moses, Sven Verdoolaege, Andrew Adams, and Albert Cohen. Tensor comprehensions: Framework-agnostic high-performance machine learning abstractions. arXiv preprint arXiv:1802.04730, 2018.

- [17] Lianmin Zheng, Chengfan Jia, Minmin Sun, Zhao Wu, Cody Hao Yu, Ameer Haj-Ali, Yida Wang, Jun Yang, Danyang Zhuo, Koushik Sen, et al. Ansor: Generating high-performance tensor programs for deep learning. In 14th USENIX Symposium on Operating Systems Design and Implementation (OSDI 20), pages 863–879, 2020.

- [18] Nathan Zhang, Michael Driscoll, Charles Markley, Samuel Williams, Protonu Basu, and Armando Fox. Snowflake: a lightweight portable stencil dsl. In 2017 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), pages 795–804. IEEE, 2017.

- [19] Jonathan Ragan-Kelley, Connelly Barnes, Andrew Adams, Sylvain Paris, Frédo Durand, and Saman Amarasinghe. Halide: a language and compiler for optimizing parallelism, locality, and recomputation in image processing pipelines. Acm Sigplan Notices, 48(6):519–530, 2013.

- [20] Evangelos Georganas, Sasikanth Avancha, Kunal Banerjee, Dhiraj Kalamkar, Greg Henry, Hans Pabst, and Alexander Heinecke. Anatomy of high-performance deep learning convolutions on SIMD architectures. In Proceedings of the International Conference for High Performance Computing, Networking, Storage, and Analysis, page 66. IEEE Press, 2018.

- [21] Evangelos Georganas, Kunal Banerjee, Dhiraj Kalamkar, Sasikanth Avancha, Anand Venkat, Michael Anderson, Greg Henry, Hans Pabst, and Alexander Heinecke. Harnessing deep learning via a single building block. In 2020 IEEE International Parallel and Distributed Processing Symposium (IPDPS), pages 222–233. IEEE, 2020.

- [22] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- [23] Rohit Chandra, Leo Dagum, Ramesh Menon, David Kohr, Dror Maydan, and Jeff McDonald. Parallel programming in OpenMP. Morgan kaufmann, 2001.

- [24] Chuck Pheatt. Intel® threading building blocks. Journal of Computing Sciences in Colleges, 23(4):298–298, 2008.

- [25] Bradford Nichols, Dick Buttlar, and Jacqueline Farrell. Pthreads programming: A POSIX standard for better multiprocessing. O’Reilly Media, Inc., 1996.

- [26] Kazushige Goto and Robert A Geijn. Anatomy of high-performance matrix multiplication. ACM Transactions on Mathematical Software (TOMS), 34(3):12, 2008.

- [27] Jason Ansel, Shoaib Kamil, Kalyan Veeramachaneni, Jonathan Ragan-Kelley, Jeffrey Bosboom, Una-May O’Reilly, and Saman Amarasinghe. Opentuner: An extensible framework for program autotuning. In Proceedings of the 23rd international conference on Parallel architectures and compilation, pages 303–316, 2014.

- [28] Sanket Tavarageri, Alexander Heinecke, Sasikanth Avancha, Bharat Kaul, Gagandeep Goyal, and Ramakrishna Upadrasta. PolyDL: Polyhedral optimizations for creation of high-performance DL primitives. ACM Transactions on Architecture and Code Optimization (TACO), 18(1):1–27, 2021.

- [29] Tom Brown, Benjamin Mann, Nick Ryder, Melanie Subbiah, Jared D Kaplan, Prafulla Dhariwal, Arvind Neelakantan, Pranav Shyam, Girish Sastry, Amanda Askell, et al. Language models are few-shot learners. Advances in neural information processing systems, 33:1877–1901, 2020.

- [30] Maxim Naumov, Dheevatsa Mudigere, Hao-Jun Michael Shi, Jianyu Huang, Narayanan Sundaraman, Jongsoo Park, Xiaodong Wang, Udit Gupta, Carole-Jean Wu, Alisson G Azzolini, et al. Deep learning recommendation model for personalization and recommendation systems. arXiv preprint arXiv:1906.00091, 2019.

- [31] Alexander Heinecke, Greg Henry, Maxwell Hutchinson, and Hans Pabst. LIBXSMM: Accelerating small matrix multiplications by runtime code generation. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, SC ’16, pages 84:1–84:11, Piscataway, NJ, USA, 2016. IEEE Press.

- [32] Nigel Stephens, Stuart Biles, Matthias Boettcher, Jacob Eapen, Mbou Eyole, Giacomo Gabrielli, Matt Horsnell, Grigorios Magklis, Alejandro Martinez, Nathanael Premillieu, et al. The ARM scalable vector extension. IEEE Micro, 37(2):26–39, 2017.

- [33] Md Mostofa Ali Patwary, Nadathur Rajagopalan Satish, Narayanan Sundaram, Jongsoo Park, Michael J Anderson, Satya Gautam Vadlamudi, Dipankar Das, Sergey G Pudov, Vadim O Pirogov, and Pradeep Dubey. Parallel efficient sparse matrix-matrix multiplication on multicore platforms. In High Performance Computing: 30th International Conference, ISC High Performance 2015, Frankfurt, Germany, July 12-16, 2015, Proceedings, pages 48–57. Springer, 2015.

- [34] Torsten Hoefler, Dan Alistarh, Tal Ben-Nun, Nikoli Dryden, and Alexandra Peste. Sparsity in deep learning: Pruning and growth for efficient inference and training in neural networks. The Journal of Machine Learning Research, 22(1):10882–11005, 2021.

- [35] François Lagunas, Ella Charlaix, Victor Sanh, and Alexander M Rush. Block pruning for faster transformers. arXiv preprint arXiv:2109.04838, 2021.

- [36] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- [37] Ben Wang and Aran Komatsuzaki. GPT-J-6B: A 6 Billion Parameter Autoregressive Language Model. https://github.com/kingoflolz/mesh-transformer-jax, May 2021.

- [38] Hugo Touvron, Louis Martin, Kevin Stone, Peter Albert, Amjad Almahairi, Yasmine Babaei, Nikolay Bashlykov, Soumya Batra, Prajjwal Bhargava, Shruti Bhosale, et al. Llama 2: Open foundation and fine-tuned chat models. arXiv preprint arXiv:2307.09288, 2023.

- [39] Thomas Wolf, Lysandre Debut, Victor Sanh, Julien Chaumond, Clement Delangue, Anthony Moi, Pierric Cistac, Tim Rault, Rémi Louf, Morgan Funtowicz, Joe Davison, Sam Shleifer, Patrick von Platen, Clara Ma, Yacine Jernite, Julien Plu, Canwen Xu, Teven Le Scao, Sylvain Gugger, Mariama Drame, Quentin Lhoest, and Alexander M. Rush. Transformers: State-of-the-art natural language processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, pages 38–45, Online, October 2020. Association for Computational Linguistics.

- [40] Kunal Banerjee, Evangelos Georganas, Dhiraj D Kalamkar, Barukh Ziv, Eden Segal, Cristina Anderson, and Alexander Heinecke. Optimizing deep learning RNN topologies on Intel architecture. Supercomputing Frontiers and Innovations, 6(3):64–85, 2019.

- [41] Minjia Zhang, Samyam Rajbhandari, Wenhan Wang, and Yuxiong He. DeepCPU: Serving RNN-based deep learning models 10x faster. In 2018 USENIX Annual Technical Conference (USENIX ATC 18), pages 951–965, 2018.

- [42] Eldar Kurtic, Daniel Campos, Tuan Nguyen, Elias Frantar, Mark Kurtz, Benjamin Fineran, Michael Goin, and Dan Alistarh. The optimal BERT surgeon: Scalable and accurate second-order pruning for large language models. arXiv preprint arXiv:2203.07259, 2022.

- [43] Intel oneDNN. https://github.com/oneapi-src/onednn, Accessed on 4/6/2023.

- [44] ARM Compute Library. https://github.com/arm-software/computelibrary, Accessed on 4/6/2023.

- [45] Modular. https://www.modular.com/blog/the-worlds-fastest-unified-matrix-multiplication, Accessed on 4/20/2023.

- [46] Jianhui Li, Zhennan Qin, Yijie Mei, Jingze Cui, Yunfei Song, Ciyong Chen, Yifei Zhang, Longsheng Du, Xianhang Cheng, Baihui Jin, et al. oneDNN Graph Compiler: A hybrid approach for high-performance deep learning compilation. arXiv preprint arXiv:2301.01333, 2023.

- [47] Hugging Face Transformers. https://github.com/huggingface/transformers, Accessed on 4/6/2023.

- [48] Intel Extension for PyTorch. https://github.com/intel/intel-extension-for-pytorch, Accessed on 4/6/2023.

- [49] MLCommons. https://mlcommons.org/en/training-normal-21/, Accessed on 4/6/2023.

- [50] Neural Magic. https://neuralmagic.com/blog/pruning-hugging-face-bert-compound-sparsification/, Accessed on 9/27/2023.

- [51] IREE. https://github.com/openxla/iree, Accessed on 4/6/2023.

- [52] Ivens Portugal, Paulo Alencar, and Donald Cowan. A survey on domain-specific languages for machine learning in big data. arXiv preprint arXiv:1602.07637, 2016.

- [53] Vijay Thakkar, Pradeep Ramani, Cris Cecka, Aniket Shivam, Honghao Lu, Ethan Yan, Jack Kosaian, Mark Hoemmen, Haicheng Wu, Andrew Kerr, Matt Nicely, Duane Merrill, Dustyn Blasig, Fengqi Qiao, Piotr Majcher, Paul Springer, Markus Hohnerbach, Jin Wang, and Manish Gupta. CUTLASS, January 2023.

- [54] Chao Liu, Jing Zhang, Letao Qin, Qianfeng Zhang, Liang Huang, Shaojie Wang, Anthony Chang, Chunyu Lai, Illia Silin, Adam Osewski, Poyen Chen, Rosty Geyyer, Hanwen Chen, Tejash Shah, Xiaoyan Zhou, and Jianfeng Yan. Composable Kernel.

Optimization Notice: Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors. Performance tests, such as SYSmark and MobileMark, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products. For more information go to http://www.intel.com/performance.

Intel, Xeon, and Intel Xeon Phi are trademarks of Intel Corporation in the U.S. and/or other countries.