H2Opus: A distributed-memory multi-GPU software package for non-local operators

Abstract.

Hierarchical -matrices are asymptotically optimal representations for the discretizations of non-local operators such as those arising in integral equations or from kernel functions. Their complexity in both memory and operator application makes them particularly suited for large-scale problems. As a result, there is a need for software that provides support for distributed operations on these matrices to allow large-scale problems to be represented. In this paper, we present high-performance, distributed-memory GPU-accelerated algorithms and implementations for matrix-vector multiplication and matrix recompression of hierarchical matrices in the format.

The algorithms are a new module of H2Opus, a performance-oriented package that supports a broad variety of matrix operations on CPUs and GPUs. Performance in the distributed GPU setting is achieved by marshaling the tree data of the hierarchical matrix representation to allow batched kernels to be executed on the individual GPUs. MPI is used for inter-process communication. We optimize the communication data volume and hide much of the communication cost with local compute phases of the algorithms. Results show near-ideal scalability up to 1024 NVIDIA V100 GPUs on Summit, with performance exceeding 2.3 Tflop/s/GPU for the matrix-vector multiplication, and 670 Gflops/s/GPU for matrix compression, which involves batched QR and SVD operations.

We illustrate the flexibility and efficiency of the library by solving a 2D variable diffusivity integral fractional diffusion problem with an algebraic multigrid-preconditioned Krylov solver and demonstrate scalability up to 16M degrees of freedom problems on 64 GPUs.

1. Introduction

Many of the large dense matrices that appear in scientific computing, machine learning, integral equations, and other applications are in fact data sparse. They may be approximated, to arbitrary accuracy, with a memory footprint that is much smaller that the prohibitive cost of the dense representation. Data sparsity is a consequence of the fact that certain blocks of the matrix may be represented by factorizations whose ranks are smaller than the block dimensions. These low rank blocks of the matrix may be of different sizes and may appear in general position in the matrix. The resulting representations are known as hierarchical matrices.

Hierarchical matrices are often used to compress kernel matrices, where a general point set and an explicit kernel function are given. This is a natural setting for N-body problems, boundary and volume integral methods, spatial statistics, regression and discretizations of fractional and non-local operators among others. Hierarchical matrices are also effective in settings where explicit kernels do not exist. Inverses of discretization of differential operators, Schur complements, and Hessians of PDE-constrained optimization problems also result in matrices that have a structure with many low rank blocks. General linear algebraic operations are possible in the compressed representations, presenting opportunities for tackling challenging computations and provide scalable solutions for operations that would be otherwise computationally infeasible with the dense format.

Hierarchical matrices come in different variants. A basic version uses a fixed blocking of the matrix (so-called weak admissibility partitioning), where every block touches the diagonal and is stored as a separate low rank factorization. The asymptotic memory footprint of this representation is , where is a representative rank of the blocks. Unfortunately, this basic representation requires substantial memory in practice due to both the factor and the large ranks generally needed to reach reasonable target accuracy, as a result of the rigid partitioning of the matrix. This can be improved on in two ways. The first eliminates the logarithmic factor in the complexity estimates by expressing the block factorizations in a nested basis. The second improvement, which allows a substantial reduction in the effective , allows both dense and low rank blocks of different granularity to appear in general position in the matrix, essentially allowing the matrix structure itself to be adapted to a given problem (strong admissibility partitioning). The hierarchical matrix variant with these two improvements is referred to as the format, and reaches the optimal asymptotic memory footprint . Given that memory is often the primary constraining resource in high performance computing environments, the representation of choice for large scale problems is a general, nested basis, strong admissibility, representation. matrices were originally motivated primarily by the needs of integral equations [32], but their algebraic nature has allowed their application in a variety of other contexts, including, for example, the representation of Dirichlet-to-Neumann operators [27, 26], particulate flows in periodic geometries of arbitrary shapes [39], Hessians of PDE-constrained problems [9].

There is tremendous interest in the development of high-performance software libraries for constructing and operating on hierarchical matrices, driven naturally by their compelling small memory footprint and lower asymptotic operational complexity. Much like quality libraries such as FMM3D [1] have made it possible to spread the use of FMM technology to broad classes of problems, similar libraries for matrices—which are algebraic generalizations of FMM—would also allow a broader use of this technology for tackling dense matrix problems that might otherwise be prohibitively expensive. Software packages that are not performance-oriented but more oriented towards readability and conciseness include [35, 40, 11, 2]. A shared-memory high-performance package for matrices is presented in [36]. A high quality software for distributed-memory environments that targets large scale problems is STRUMPACK [5]. Distributed memory algorithms for constructing and operating on these matrices were proposed in [37, 17, 50]. Dynamic run-time systems to manage the scheduling of the operations of the irregular hierarchical matrix structure in a more convenient fashion through explicit task graphs are presented in [7, 18]. Manycore algorithms were presented in [44, 55], and BiCG solvers on GPU clusters were demonstrated in [49]. Multicore and manycore methods for operating on hierarchical matrices including matrix-vector multiplication are discussed in [53, 51, 24]. Algorithms for high performance computing environments suitable for large scale problems are presented in [46, 25, 54, 45, 52].

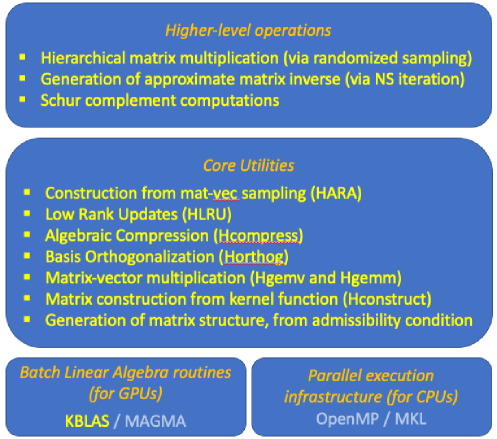

H2Opus [3] is an open source, GPU-accelerated, package for hierarchical matrix computations in the format. It supports a number of core operations (see Figure 1) for constructing these matrices from a kernel and admissibility conditions, performing BLAS-like operations including matrix-vector multiplication, basis orthogonalization, and recompression. It also provides facilities for adding a (globally) low rank matrix to an matrix, as well as the ability to construct an matrix from randomized sampling operations, essentially generalizing the construction of globally low rank matrix decomposition [33] to the hierarchically low rank case. These core operations form the building blocks for higher level operations that perform matrix-matrix multiplication, generate approximate inverses iteratively, and compute Schur complements in various applications [20, 9]. The package runs on CPUs (with explicit optimizations for Intel and AMD CPUs) as well as on GPUs with the portability enabled by a lower level layer that either uses batch BLAS operations specialized to run directly on the GPUs when manycore hardware is available, or alternatively uses OpenMP to obtain batch parallelism in multicore environments. In addition, the library also supports running on the NEC-SX Vector Engine. H2Opus has interfaces to the PETSc [13, 14] package, allowing the use of the extensive facilities of the latter for manipulating distributed-memory (sparse) matrices arising from the discretization of PDEs. Efficient solvers are also available in the so called Tile Low Rank format [22].

In this paper, we extend the H2Opus package with distributed-memory multi-GPU operations for two of its core capabilities: matrix-vector multiplication (and the related multiplication of a hierarchical matrix by multiple vectors), and matrix recompression. Single-GPU versions of these algorithms were presented in [19]. Matrix-vector multiplication is a key building block of Krylov-type iterative solvers, appearing in the inner loops of nonlinear and eigenvalue solvers. Therefore improving its performance can substantially reduce overall time to solution. The multi-vector case is also a performance critical kernel in many contexts such as randomized hierarchical matrix-matrix multiplication, and block-Krylov methods, such as those exposed by PETSc [38]. The core matrix-vector multiplication can benefit from processing multiple vectors concurrently; the additional arithmetic intensity made available, relative to looping over the bandwidth-limited operation of single vector multiplication, allows substantially higher absolute performance to be achieved. Matrix recompression is also another key building block for matrix operations, closely resembling the truncation operations on dense matrices and matrix blocks. Recompression is needed when an initial matrix approximation is generated using a polynomial interpolation or other non-optimal bases, and algebraic recompression step is relied on to produce a storage-optimal representation for the desired accuracy. It is also needed when BLAS3 operations are performed on matrices. These operations generally increase the apparent rank of various blocks, which would then need to be recompressed to maintain the optimal complexity.

We present high performance and scalable implementations of these algorithms that demonstrate near-ideal scalability on up to 1024 NVIDIA V100 GPUs, with performance exceeding 2.3 Tflop/s per GPU. At this scale, a dense kernel matrix of size 536M536M, represented as a hierarchical matrix to an accuracy of can be multiplied by a single vector in ms and by 64 vectors concurrently in ms, achieving 85% of the peak efficiency of batched GEMM operations. This pushes the state of the art beyond the results of [54, 52, 46]. The compression operation also achieves near-optimal scalability with number of GPUs, with matrices of size 67M67M compressed by a factor of 6 in their low rank memory (from an accuracy of to an accuracy of ) in around ms on 64 GPUs.

The algorithms are supported by distributed data structures for representing, accessing, and operating on hierarchical matrices with nested bases. These data structures have elements similar to those found in parallel multigrid representations, with analogous restriction and prolongation transfer operators, since the low rank blocks and their bases appear at different levels of granularity and are naturally stored at multiple levels of a hierarchy. The data structures also have patterns similar to those of parallel sparse matrix representations because at every level of the hierarchy, the low rank blocks appear in general locations forming essentially a block sparse matrix whose block sizes are on the order of the block ranks, . The matrix structure has a bounded sparsity constant [28, 16] which is exploited to optimize overall inter-process communication volume and to hide much of the MPI communication cost with concurrent local compute phases of the algorithms. Single node performance is obtained by marshaling the tree data structures in a way to allow optimized batched dense linear algebra kernels to be invoked.

We finally demonstrate the use of the algorithms in the scalable solution of a 2D variable-coefficient integral fractional diffusion equation. We use the distributed-memory algorithms to construct and compress the dense operator to an accuracy of . The solution uses a Krylov method with the matrix-vector multiplication done by our distributed algorithm and the preconditioning done by a classical (non-fractional) diffusion equation which is solved by a distributed-memory algebraic multigrid method in PETSc. The results show that all aspects of the solver, including the setup of the hierarchical representation of the dense operators, the computation of the volume “Dirichlet” conditions, the setup of the preconditioner, and the work per iteration, scale linearly with problem size. The solver also exhibits a dimension-independent number of iterations and results in near-ideal weak scalability up to grids of size on 64 GPUs.

The rest of this paper is organized as follows. Section 2 describes the distributed representation of the data structures of an matrix. Section 3 describes the basic distributed matrix-vector multiplication operations and Section 4 presents the key optimizations to minimize inter-process communication volume and hide latencies. Section 5 describes the recompression algorithms which shares many of the algorithmic structures of the matrix-vector multiplication algorithms but relies on batch QR and SVD instead of batch GEMM in lower level linear algebra. Section 6 presents the distributed multi-GPU scalability results for the matrix-vector multiplication and recompression algorithms as well as the integral fractional diffusion solver. We conclude with future potential directions in Section 7.

2. Distributed Data Structures for Matrices

2.1. Matrix Structure

The representation of an matrix exploits two kinds of hierarchies: a hierarchy of blocks at different levels of granularity and a hierarchy of bases that is used to represent the data in the blocks. At every level of the hierarchy the low rank blocks essentially form a block sparse matrix. In addition, there is a block sparse matrix that stores the dense blocks of the matrix that are not amenable to low rank representations.

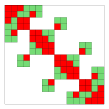

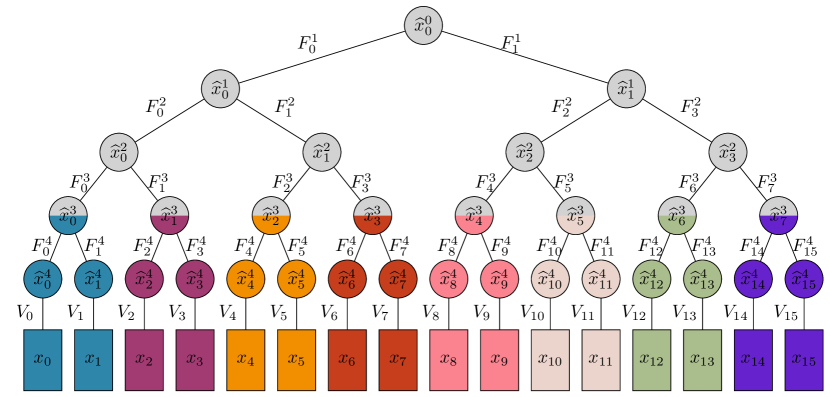

Hierarchy of Blocks. Let and be the sets of indices of the rows and columns of a matrix, and and be hierarchical clusterings of these index sets, respectively. All blocks within the matrix can then be defined by cluster pairs . Define an admissible block as a block that is either small enough to be stored in dense form of size , with denoting the so-called leaf size, or can be well approximated by a low rank matrix. A matrix in the -variant of hierarchical matrices can be partitioned into admissible blocks of various sizes that are organized hierarchically as the leaves of a matrix tree. A low rank matrix on level of the tree and defined by the cluster pair is represented as the outer product of two tall matrices and of rank : .

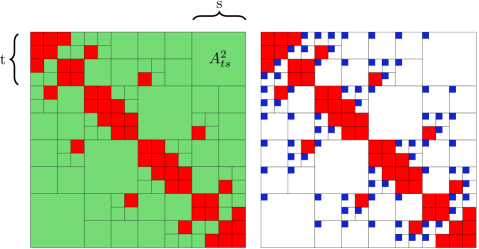

The left panel of Figure 2(a) shows the leaves of this matrix tree which define a complete partitioning of the matrix into blocks, with dense leaves colored red and low rank leaves colored green. Figure 2(b) shows the various levels of the tree, where inner nodes are in cyan. The structure of this matrix tree depends on the application. For example, in problems involving a spatial discretization, we can leverage data from the particles or nodes generated by the discretization to generate it with the aid of a geometric admissibility condition [31]. Other applications might rely on the available algebraic data or heuristics to determine which blocks of the matrix are admissible as low rank blocks.

Hierarchy of Bases. The representation uses block low rank factorizations of the form for every block at every level , where and are bases for the block rows and block column of level , respectively. is a small coupling matrix representing the interaction between the row and column cluster pairs. These coupling matrices are organized in the matrix tree defined by . In our implementation, we currently use a fixed rank per level that we refer to as , or even simply when the context is clear, which allows us to use higher-performing fixed-size batch linear algebra kernels when executing basis operations.

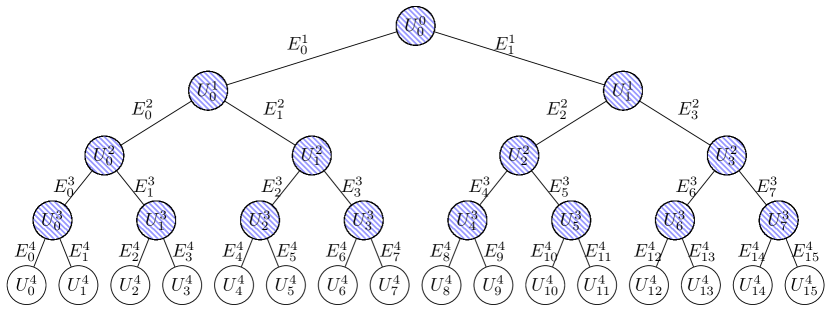

An additional hierarchy is introduced in the row and column basis trees, where a basis node is expressed in terms of the bases of its children. Basis nodes are only stored explicitly at the leaves of the tree, and inner nodes can be computed from their children using small interlevel transfer matrices. For example, one can compute an inner node from its two children, and , and their corresponding transfer matrices and as

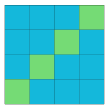

The explicit bases at the leaves and the interlevel transfer matrices are organized in a basis tree. Figure 3 shows an example of the nested basis , with the implicitly represented inner nodes shaded.

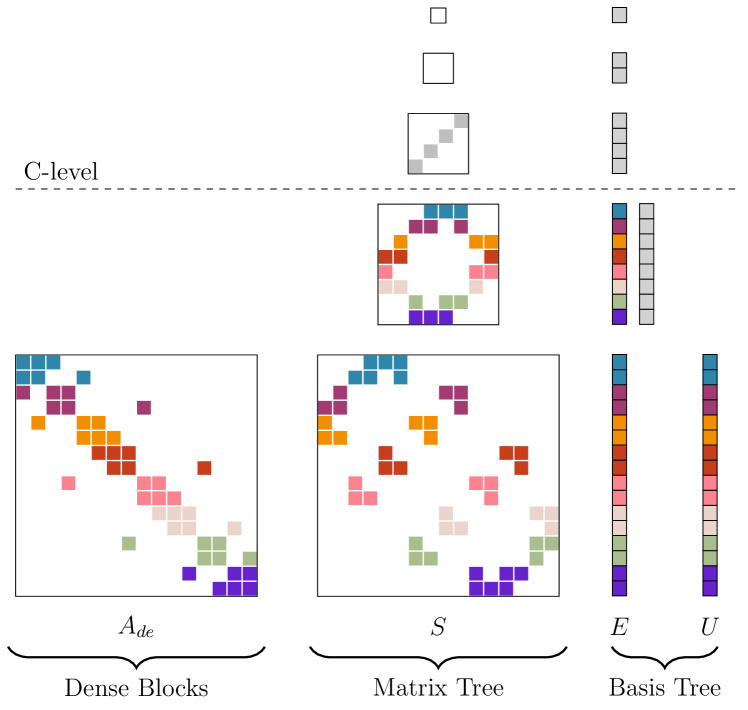

Putting it all together, the -matrix representation may be succinctly written as

where is a block sparse matrix with dense blocks of size , shown as the red leaves at the finest level of the matrix tree in Figure 2(a). is a matrix tree of coupling matrices, whose leaves, which appear at different levels, are shown as the cyan blocks in the right panel of Figure 2(a), and and are the block row and block column basis trees, each consisting of explicitly stored bases at the leaf level, and interlevel transfer matrices and , as shown in Figure 3 for basis tree . The triple-tree product notation is defined as the assembly of all blocks of all levels where every block may be computed as .

2.2. Distributed Representation and Construction

| Symbol | Description |

|---|---|

| , | Total number of GPUs, and index of the local GPU |

| Complete basis trees | |

| Complete matrix tree | |

| Local branch of the basis tree on GPU | |

| Root branch of the basis tree on the master process | |

| Local branch of the matrix tree on GPU | |

| Root branch of the matrix tree on the master process | |

| Matrix tree branch, from dual tree traversal of and | |

| , , etc. | Marshaled , , etc. arrays for batched execution |

| Input and output vectors | |

| Local branches of vector trees and on GPU | |

| Local sub-vectors of the input and output on GPU |

The description of the parallel distribution of the matrix and the algorithms operating on it uses the notation in Table 1. We use a left subscript to refer to local data owned by a GPU, and a triple bar subscript to refer to data that has been marshaled to allow batched GPU kernels to be invoked on it.

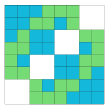

Treating each level of the matrix tree of the hierarchical matrix as a block sparse matrix, we decompose the levels into block rows, with each block row stored on a single GPU, as illustrated in Figure 4. The basis trees are similarly decomposed by level, assigning the nodes corresponding to the stored block rows/columns to the same GPU. This decomposition allows much of the upsweep and downsweep to be carried out independently as described in Section 3 for matrix-vector multiplication and in Section 5 for matrix compression. Above a critical C-level, the decomposition stops and a single root GPU owns all top levels. The interlevel transfer operators at the roots of each of the local branch basis trees are duplicated at the leaf level of (see Figure 4) to allow upsweep and downsweep operations to begin and end at the C-level.

We construct the distributed matrix by first splitting the row cluster tree into independent branches at level and a local branch of the basis tree on GPU is generated for each . The top levels of the basis tree are kept on a master process and could potentially be split further if becomes large enough. The root branch of the matrix tree can be generated on the master process in any number of ways, such as general admissibility dual tree traversal [31]. Once this matrix tree is constructed, a list of the basis nodes from can be extracted for each GPU corresponding to the inadmissible nodes of the block row at level of the matrix tree. As an example, consider the distribution on GPUs of the fourth level of the matrix tree in Figure 2(b). The column indices corresponding to the cyan nodes are extracted for GPU , setting from the second block row. Once the list for each process has been compiled, they are scattered from the master process to all other processes.

Since the nodes in were generated from the inadmissible nodes of the matrix tree that have to be subdivided further, the structure of the matrix tree on each GPU can then be generated independently using multiple dual tree traversals of the root node of with the nodes in , where each traversal generates a local branch . Once the structure has been determined, the entries of the transfer and leaf matrices of the basis trees, together with the entries of the matrix tree can be populated independently on each GPU using established techniques [16].

3. Distributed-memory Matrix-Vector Multiplication

This section describes the overall structure of the distributed multiplication operation with vectors concurrently. We use this structure to highlight the interprocess communication bottlenecks, that are then optimized in Section 4.

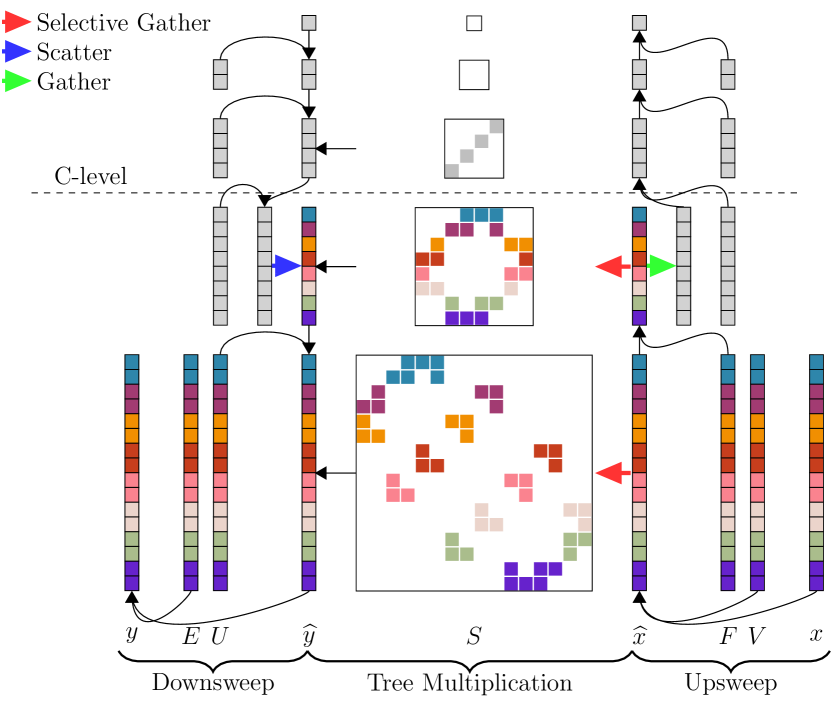

The input multi-vector of size is distributed among GPUs, where each sub-vector is extracted from the index set defined by the root node of . The hierarchical matrix-vector product operation requires a standard block sparse multiplication for the dense blocks of the matrix, which can be overlapped with the low rank multiplication . The low rank portion of the product is a generalization to the hierarchical tree setting of a regular dense low rank matrix by a vector, and is illustrated in Figure 5. In a first phase, we perform an upsweep of the basis tree and compute , which contains the products of the matrices of all block columns at all levels with the corresponding pieces of . In a second phase, a tree is computed. also consists of multilevel data corresponding to the products of for every level . In the third phase, a downsweep phase through the basis tree accumulates the multilevel tree data into the output vector , an operation we may symbolically write as .

3.1. Distributed Upsweep

The upsweep phase is illustrated in Figure 6 and summarized in Algorithm 2. It proceeds from the leaf level where the explicitly stored block row bases, each of size , are simply multiplied by . At every higher level, can be computed from the children at level using the transfer operators [16]. For a parent node with children and we have

In the distributed setting, the upsweep on each GPU can proceed in parallel on each of the local branches independently, using the kernel shown in Algorithm 1. Once the upsweep reaches the roots of each branch, the data from all root nodes of the trees are gathered on the master process to populate the leaf level of the root branch , allowing the upsweep to complete. The regular upsweep Algorithm 1 can be used for the branch upsweep as well as the root upsweep by simply omitting the first batched operation on the leaves of the root branch, since that step is replaced by the gathering of the data.

From an efficiency perspective, the primary challenge in the upsweep comes from the fact that the individual operations in the basis tree nodes involve small matrix operators that do not possess a sufficient amount of data parallelism for effective GPU utilization. We overcome this problem by marshaling the appropriate level data to allow batched GPU kernels to be invoked, allowing to be computed with only batched kernel executions. Except for the top few levels of the root process , these executions happen concurrently on the GPUs. The marshaling GPU kernel is shown for a step of the upsweep operation in Algorithm 3. The marshaling kernel essentially plays the role of a fast static scheduler for all operations performed on a given level of the tree. It prepares for the execution of the operations by efficiently gathering data from the basis tree. Marshaling involves no data movement and therefore has very little overhead and in practice consumes only a tiny fraction of total execution time.

3.2. Distributed Intermediate Multiplication

The second phase of the operation builds a vector tree , where each node in a level is the product of the block row of level of the coupling matrix tree with the corresponding nodes in . This operation can be expressed as

where is the set of column indices of the matrix blocks in the block row . This is a block sparse matrix-vector multiplication at every level, and is illustrated in the middle portion of Figure 5, where the block-row, multi-GPU decomposition of each level is also highlighted. The scalar version of this problem is a well-studied computational kernel that has many possible high quality solutions [30, 41, 15, 23] which may be adapted to the block-sparse version. While we could rely on vendor kernels, their relatively low performance and lack of support for non-uniform blocks necessitates a different approach. Our solution relies on a key result regarding hierarchical matrices which puts a bound on the sparsity constant , the maximum number of blocks in any block row at any level of the matrix tree. Such constant is bounded by a dimension-independent value that only depends on the specific structure of the matrix and the admissibility criterion[28, 16].

During the construction of the matrix tree, we generate conflict-free batches of matrix products that can then be executed by a series of non-uniform batched matrix-vector and matrix-matrix kernels. A conflict-free ordering of the batch indices can be obtained by assigning a batch index based on the position within the block row or column that increases from left to right, allowing us to marshal all the batches in a single marshaling kernel. While there will potentially be some kernels that perform little work, the bounded sparsity constant guarantees that there will be few of them, and they will thus represent a small portion of the total runtime. This could be optimized further by running those small batches on a separate low priority stream and then combining the results with the main execution stream.

The boundedness of the sparsity constants also guarantees that the block row local to a GPU will require input from nodes belonging to a limited number of remote GPUs: we therefore consider a standard approach in distributed memory sparse matrix vector products, and split the block row into diagonal and off-diagonal parts. The contributions of these two parts can be overlapped with the needed communication as described in Section 4.1. Once the needed parts of are assembled, the multiplication phase can proceed on each GPU independently on the coupling matrices of using the treeMultiply routine of Algorithm 4. Processing the multiplication of the root branch on the master process to produce the root branch finalizes the phase as shown in Algorithm 5. The selectiveGather routine communicates and assembles the needed portion of the tree from the remote branches to use in the local treeMultiply. This is described in Section 4.1.

3.3. Distributed Downsweep

The vector tree now contains, at every level , a partial contribution to the output vector expressed in terms of the bases , and it could be computed as if the bases at level were explicitly available. However, since we only store interlevel transfer operators, we express in terms of bases of the children of node and accumulate them in a downsweep phase through the tree from root to leaves. This partial accumulation takes the form

between a parent node at level and children and at the finer level .

The downsweep procedure, pictured in the left part of Figure 5, is summarized in Algorithm 7. A downsweep through the root subtree is first performed. Once the leaf level of has been updated, it can be scattered from the master process to all other GPUs and added to the roots of local trees containing data from the previous phase of the multiplication. When the roots of the local branches on the GPUs have their proper accumulation, obtained from the leaves of the tree , we can sweep down independently on all GPUs setting each node to ; the level at each step now also contains the partial sum of for all levels above expressed in the basis of . The leaf level will then contain the complete sum which is finally expanded into through a multiplication by the explicitly stored leaf level bases. We follow the same approach used for the upsweep, where each level is also processed in parallel by first marshaling the operations and then executing using a batch matrix vector product. This leads us to Algorithm 6 for computing , i.e., the distributed vector . The downsweep marshaling algorithm is structurally similar to the one described in Algorithm 3 and is omitted for brevity.

4. Optimizing Communication

4.1. Optimizing Communication Volume

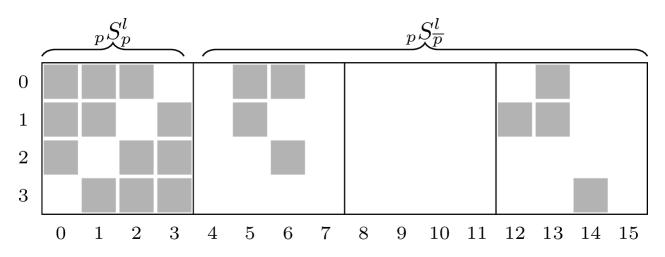

We first discuss a communication-optimized parallel block sparse matrix-vector multiplication for a given level matrix of , the low rank portion of the hierarchical matrix; the same logic applies to and it is omitted for brevity.

The matrix tree is first split into two distinct trees: a diagonal matrix tree and an off-diagonal matrix tree . While the off-diagonal portion could simply be represented as a set of trees, with one tree for every interaction with a GPU , it is far more efficient to have them all in a single flattened one to allow marshaling operations on the off-diagonal blocks to be completed with a single kernel call.

Once the trees are split, the list of basis nodes that interact with can be generated by iterating through its pairs and determining all unique values. Given the boundedness of the sparsity constant , on a given level , a GPU will receive data only from a few other GPUs; we thus determine the list of GPUs that need to send data to , as well as the list of nodes that should be received, and store such information in a compressed-storage format as representatively shown in Figure 7. The data needed from process pid, corresponding to global indices listed in nodes from nodes_ptr to nodes_ptr, is communicated among GPUs during the setup phase. A list of unique entries of nodes represents the compressed storage for the offiagonal block.

| pid | 1 | 3 | |||

|---|---|---|---|---|---|

| nodes_ptr | 0 | 2 | 5 | ||

| nodes | 5 | 6 | 12 | 13 | 14 |

The diagonal multiplication phase can proceed without any communication while the off-diagonal phase needs input from other GPUs. The nodes at a level of the local branch which are needed by other GPUs are packed into a single buffer using a marshaling kernel and a single batched copy kernel populates a send buffer , which is then used to issue non-blocking sends to the neighboring GPUs. Non-blocking receives are issued to populate a receive buffer , which can then be directly used for the off-diagonal level of the tree, since the column basis indices have already been updated to use the compressed node format.

Algorithm 8 summarizes the overall communication-optimized multiplication phase. The exchangeData routine executes the non-blocking MPI sends and receives using the compressed off-diagonal data that is marshaled using the marshalOffdiag routine and copied using a batch kernel.

4.2. Achieving Communication and Computation Overlap

While the communication volume has been reduced and a portion of the communication can be hidden by overlapping it with the diagonal multiplication, there are still a few challenges left that hold back performance. Assuming the lack of hardware support for more advanced memory transfer features such as GPUDirect RDMA, transferring the data from the GPU to the host adds some overhead to the execution, as well as synchronization points that impact GPU usage. Moreover, not all MPI distributions guarantee that communication can progress in the background without explicit intervention from the process.

The effectiveness of overlapping the diagonal multiplication phase with the needed communication depends on the structure of the tree, the capabilities of the communication network, and the compute capabilities of the GPU. For a tailored, fine-grained control of the communications involved in the algorithm at hand, we explicitly create communication threads that queue up asynchronous copies on separate streams to overlap the transfers with the processing of each level of the local branch upsweep. The transfers can then be carried out on the same thread without interrupting or forcing synchronizations with the main execution stream, allowing communication and computation to overlap. When the diagonal block kernels have been queued up on the stream, the communication thread can then join the main thread. The multiplication of the root branch on the master process can also be hidden, to some extent, by overlapping it with the main stream of work, and scheduling it on a low priority stream. This will allow the work to be completed during phases of low GPU utilization, such as the smaller top levels of the basis and matrix tree. Finally, by adding the dense block multiplication phase to a low priority stream, GPU utilization on all GPUs can be increased as well.

Figure 8 shows the effect of overlapping communication with computation on the timeline of the overall distributed matrix-vector multiplication. As expected, the gaps in the timeline due to communication are significantly smaller when it is overlapped with the computation.

5. Algebraic Matrix Compression

algebraic matrix compression is an operation that takes as input an matrix and produces another matrix of lower rank that approximates the input to a desired target accuracy. Compression is akin to the truncation operation that is commonly used to approximate dense matrices or dense matrix blocks by low rank approximations.

Recompression is a core operation when working with matrices. In particular, it is common in the discretization of integral equations to generate an initial matrix from a kernel and an admissibility condition by approximating the kernel with Chebyshev polynomials for well separated clusters. The ranks produced by this approximation are not optimal however, resulting in increased storage and increased arithmetic costs for operations such as the matrix-vector multiplication. A recompression step, purely algebraic, is then performed to generate an optimal basis in which the ranks are as small as possible. Another context where recompression is essential arises when adding matrices, performing low rank updates, or implementing BLAS3-like operations. When matrix blocks get added there is an increase in the apparent rank of the resulting blocks. The matrix would then need to be recompressed in order to maintain the linear asymptotic rate for storage and operations. The key task of recompression is to generate a new nested compressed basis in which the matrix blocks are expressed. This may be done by a pair of downsweep and upsweep operations that we describe next.

5.1. Downsweep for Generating a New Basis

Consider how a new basis for a block row at the finest level would be generated. here denotes only the low rank portions of the hierarchical matrix, since the dense blocks are not compressed. consists of low rank blocks expressed at level as with , and additional pieces representing the restriction of blocks from coarser levels to their “i” rows.

| (1) |

A new more efficient basis can be generated by computing the SVD of , and using the left singular vectors as the new basis. This would however require an expensive SVD of the -sized . In order to avoid it, we first compute the QR decomposition of and then perform the SVD on the small factor.

| (2) |

The new basis for level , is effectively a reweighing of the columns of the previous basis .

The task of computing of the QR decomposition of can be done efficiently by exploiting the nestedness of the bases. Let us assume that the QR decomposition of , the parent block at level , is available as . Then,

| (3) |

with conveniently expressible as:

| (4) |

Assuming the bases are orthogonal, the block diagonal matrix in Eq. 4 is orthogonal and the QR of simply reduces to the QR of the small stack at the end of Eq. 4 which involves only blocks, each being a small coupling/transfer matrix. Since this QR uses the matrix from level , the overall computation starts from the root and goes down the tree computing all the matrices for all levels in a downsweep traversal.

As with previous operations, all blocks at a given level can be processed in parallel, and importantly for the distributed setting, all the processes owning the subtrees below the C-level can proceed independently. Therefore, the computational pattern is identical to the distributed downsweep of the matrix-vector multiplication. Above the C-level, a single GPU is responsible for processing the top levels of the basis tree. The leaves of the top subtree, which hold the factors at level , are then scattered to all GPUs and seed the roots of the individual subtrees, which continue the downseep independently all the way to the leaf level of the basis. The computational work at every level being, for every block row, a QR decomposition of the small stack at the end of Eq. 4. Batched QR operations [21] are used within every GPU to achieve high performance.

5.2. Upsweep for Truncating the New Basis

Once the new reweighed basis is generated for all levels, it can then be truncated to the desired accuracy to generate the optimal basis . The coupling blocks are then compressed by projecting them on the new truncated basis.

The truncation operation has to preserve the nestedness property. This can be accomplished by starting the truncation at the leaf level and sweeping up the basis tree. Given the reweighed basis ( at the leaves and at nodes in the tree), we seek a basis ( at leaves and at nodes in the tree) that spans the same subspace as to the desired accuracy.

At the leaf level, this may be done through an SVD of the explicitly available basis

is a subset of columns of the left singular vectors . is a transformation matrix (which may be computed as ) used to compute the new transfer matrix from a node to its parent as described next.

For non-leaves, the truncated basis is expressed in terms of new compressed transfer operators:

and are the new transfer matrices for the children of a non-leaf node , and is a transformation that will be used to compute the new transfer matrix from to its parent, in a similar fashion. are computed by first computing an SVD of the matrix . The left singular vectors corresponding to singular values below the target compression threshold are truncated, and the remaining subset is partitioned to generate the new transfer matrices and . is computed as .

The structure of the truncation algorithm is identical to that of the upsweep phase in the matrix-vector multiplication. All GPUs start the truncation operations concurrently, each on its subtree, with no interprocess communication required. The computational work at every level being, for every block row, an SVD involving the leaf basis at the leaf level, or the stacked transfer operators for non-leaf levels. Within a GPU, batched SVDs are used [19]. Once all GPUs reach the C-level, a gather operation communicates the new transfer operators from the roots of the subtrees to the leaves of the root tree that is stored on a single GPU. This bootstraps the last phase of the upweep which proceeds on the root GPU.

Two details remain to be discussed. First, in the downsweep phase, the algorithm relied on the basis being orthogonal. When this is not the case, a pre-processing step is needed to orthogonalize the basis tree. A basis is orthogonal if is the identity matrix for all levels . Orthogonalizing a basis involves performing QR on the finest level basis and then going up the tree to compute new transfer matrices that allow higher level nodes to satisfy the orthogonality condition. This is also done in an upsweep pass that is very similar to the one described above for truncation, but replacing the SVD operations by QR operations. The distributed implementation therefore also proceeds independently on all GPUs up until the C-level. A gather operation is then performed to bootstrap the orthogonalization of the top levels of the basis tree which reside on a single GPU.

Finally, once a new compressed basis is computed, it remains to approximate the coupling blocks in it. Since is an orthogonal basis, the best approximation of a block is obtained by an orthogonal projection. Therefore, we can obtain the approximation by projecting every low rank block on the new basis to obtain:

The new coupling blocks are computed via batched matrix multiplication operations. In this step, there is parallelism across all GPUs and across all levels. In addition, The GPUs are particularly efficient in GEMM operations, and in practice this projection step consumes much less time that the other operations, particularly those involving batched SVDs.

6. Performance Results

In this section, we report performance results for the distributed-memory matrix-vector multiplication and algebraic compression operations, as well as performance on a complete application involving the setup and solution of an integral equation.

6.1. Experimental Setup

The H2Opus library as well as the code to generate and run all examples below is open source and is available at https://github.com/ecrc/h2opus. Batched kernels for GEMM operations are executed using MAGMA [12, 4], while SVD and QR batches are performed using the algorithms shipped with the open-source library KBLAS, available at https://github.com/ecrc/kblas-gpu. The marshaling GPU kernel and various other utility routines use Thrust [6].

All tests are conducted on Summit, the IBM Power System AC922 supercomputer installed at Oak Ridge National Laboratory. Individual nodes on Summit have 6 NVIDIA V100-SXM2 GPUs with 16GB of HBM2 memory each, but we only used 4 GPUs per node (2 per socket) in our runs. Summit has a fast host-to-device bandwidth which can deliver up to 50 GB/s over PCIe 4.0; in our application, we were able to use a significant portion of it, 40 GB/s, as measured by nvprof. It also has a fat-tree topology network for internode communication that delivers 200Gb/s of bandwidth. To assess the efficiency attained by our algorithms, we measure the performance of the single GPU batched GEMM implementation from MAGMA, with batch elements of size . All computations are done in double-precision and every point in every plot has been generated as the average of 10 runs after discarding the fastest and slowest timings.

To test the performance and scalability of the matrix-vector multiplication and matrix compression implementations, we performed numerical experiments on two sample matrix sets with different structural characteristics. The first matrix set comes from a spatial statistics application using a point set placed on a 2D grid of side length , and an exponential kernel with a correlation length of . The hierarchical matrix representation of this covariance matrix uses as the finest block size and size of the dense leaves. A geometric admissibility condition is used, where and refer to the center and diagonal size of a bounding box of the corresponding point set. We use a value of and set a rank in the low rank blocks, resulting in an approximation with relative accuracy better than for all problem sizes. This accuracy is computed by sampling of the rows and computing with randomly generated vectors with entries from a uniform distribution. The resulting sparsity constant of the matrix, which is a proxy for how finely refined the matrix is in its off-diagonal portions, is . At the largest size, the matrix has 23 levels, with the top 10 levels on a single master GPU, and the bottom 13 levels on separate 1024 GPUs for a matrix size of 536M.

The second matrix set comes from a 3D Gaussian process and is intended to show the effect of memory pressure—due to a finer refinement in the off-diagonal blocks—on scalability. The matrices are constructed using a set of points on a 3D grid of side length and uses an exponential kernel with correlation length [10], a similar admissibility condition to the previous case, and a rank for the low rank blocks. The resulting relative accuracy is now for all problem sizes considered in this section, and the resulting matrix tree has many more leaves than in the 2D case with a larger sparsity constant .

6.2. Matrix-Vector Multiplication

6.2.1. Weak Scalability

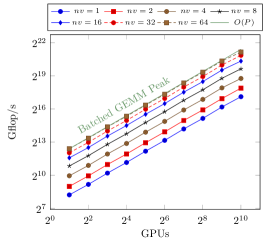

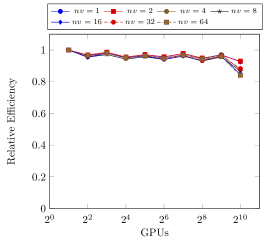

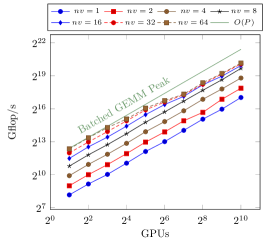

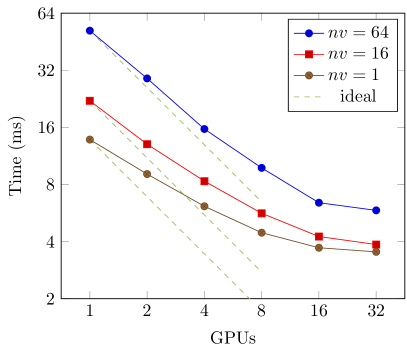

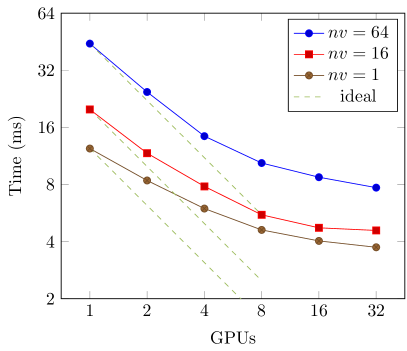

We first report on the weak scalability of the implementation, using a number of vectors ranging from 1 to 64. Both 2D and 3D test sets were scaled using a local matrix size of per GPU. Results are summarized in Figure 9, with the top and bottom rows showing the results from the 2D and 3D test sets, respectively. We observed no significant variability in the timings, with the highs and lows within 1–3% of reported average. There is a slight jitter in the plots as the problems are scaled up, due to small changes in the structure of the matrix tree affecting the amount of actual computations performed, which do not grow exactly at the same rate as the problem size. The relative efficiency was computed as , the ratio of the relative flops performed and the scaling factor relative to a base case of GPUs.

For the 2D tests, the scalability is near ideal up to 512 GPUs across all multiple vector sizes, . For the single vector case, a bandwidth-limited operation, the throughput is about 150 Gflop/s per GPU. With , the additional arithmetic intensity pushes the computation into a compute-bound regime and achieves 2.6 Tflop/s per GPU, more than 95% of the sustained peak of the batched GEMM operation measured at 2.7 Tflop/s. Even with the larger data volume being communicated, corresponding to various parts of 64 vectors, the communication is essentially hidden by the local computations. Only with 1024 GPUs, the plots show a slight degradation in performance and a deviation from ideal scalability, particularly for the case, with performance dropping to 2.3 Tflop/s per GPU. The primary reason for this is that the root tree on the master GPU is now 10 levels deep and is of size . The computations on this sizeable top tree become a bottleneck. In order to scale up to this number of GPUs or larger, we will need to coarsen to a single top GPU more smoothly than the -to- ratio used in the current implementation. For example, the trees above a first C-level can be distributed on multiple GPUs, and back to a single GPU after a second C-level, so that the top level work is small enough to be overlapped with other parts of the computation.

The results of the 3D problem test set display a similar behavior, but the communication overhead appears earlier. For the single vector case, results scale reasonably well with problem size and the relative efficiency is about 90% with 1024 GPUs, with a performance of about 130 Gflop/s per GPU. As the number of vectors increases however, the communication needed for transferring all the relevant portions of the vectors to the GPUs that need those data becomes substantial. This is in addition to the larger root tree difficulty mentioned above. The compute part of the operation grows sub-linearly with problem size because of the favorable increase in arithmetic intensity; however, the communication volume grows linearly and can no longer be hidden by the now relatively faster compute phases. While a performance of 2.6 Tflop/s per GPU is reached for 2 GPUs, the performance at 1024 GPUs reaches only 1.1 Tflop/s, an efficiency less than 45%.

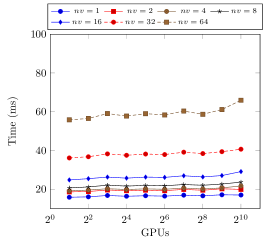

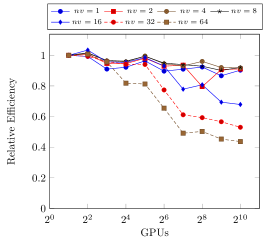

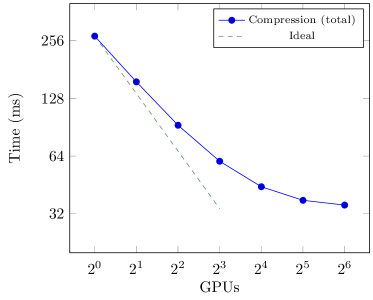

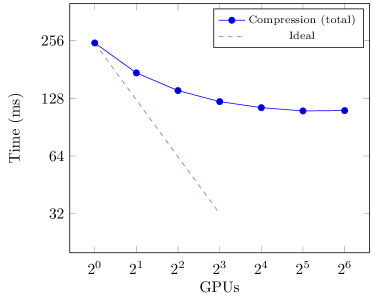

6.2.2. Strong Scalability

We report, in Figure 10, strong scalability results of the matrix-vector multiplication for the 2D and 3D test data described above, for different numbers of test vectors. In all cases, the problem size is to fit on a single GPU of Summit and is executed on an increasingly larger number of GPUs. As expected, the more arithmetically intensive multiplication involving a larger set of vectors scales better than the single vector operation. In all cases however, the limits of strong scalability is reached around 32 GPUs. This is not unexpected, since at this scale the local problem size is now only . There is very little local work to do to hide communication, and the whole computation takes only few milliseconds.

6.3. Matrix Compression

To test the performance and scalability of the algebraic compression routines, we used the same sets of matrices described earlier. For the 2D tests, we start with the matrix defined as above, with point clusters of size , and admissibility parameter . Its low rank blocks are initially constructed using a Chebyshev polynomial approximation of the kernel in the bounding boxes of the point clusters. A grid is used, resulting in all low rank blocks having a uniform rank . Compression seeks to reduce these ranks to maintain an accuracy of . The 2D test set was scaled using a local matrix size , reaching a matrix size of 67M on 64 GPUs.

For the 3D tests, a point cluster size and an admissibility parameter are used in the matrix construction. The low rank blocks are initially computed using a tri-cubic Chebyshev polynomial approximation of the kernel in the bounding boxes of the point clusters, resulting in all low rank blocks having a uniform rank . Compression seeks to reduce these ranks to maintain an accuracy of . The test test was scaled using a local matrix size , reaching a matrix size of 16M on 64 GPUs.

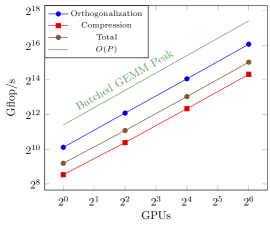

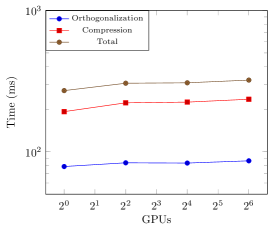

6.3.1. Weak Scalability

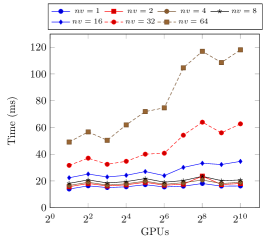

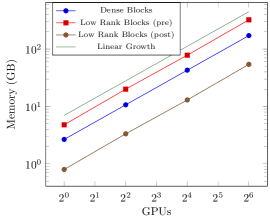

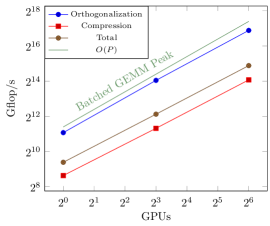

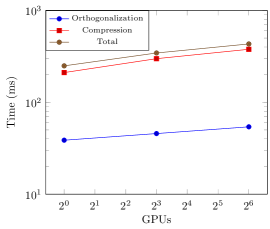

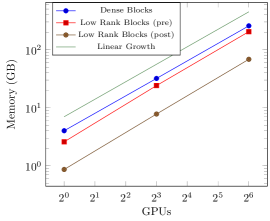

Figure 11 shows the weak scalability and effectiveness of the compression operation. Compression starts with an initial phase to orthogonalize the (non-orthogonal) bases constructed by Chebyshev interpolation without modifying their ranks. We time and report the orthogonalization phase separately. This is followed by a pair of downsweep/upsweep passes to construct a new reweighed basis and truncate it, finalized with a projection of the low rank blocks on the new bases. These steps are reported together under the compression phase label. The orthogonalization phase involves fewer floating point operations and as a result it executes faster than the compression phase. In 2D, the scaling is near ideal for up to 64 GPUs, with a slight bump when moving beyond a single GPU, because of inter-GPU communication. However for , the execution time is essentially flat indicating that all communication has been hidden by the computational steps. In 3D, we note a slight deviation from ideal scalability when , because the communication volume is larger than the 2D case and it can no longer be totally hidden by local computations. The orthogonalization phase executes at around 2.1 Gflop/s/GPU (near the practically-achievable peak of 2.7 Gflop/s per GPU, as measured by the batched GEMM operation). The compression phase, featuring QR on stacks of blocks and SVD kernels, is not able to reach that level of performance, but this limitation comes purely form the single GPU performance of the corresponding batched routines for these operations, and would be directly improved when more performant batch kernels are implemented. Nonetheless, we should note that even with our current batch kernels, the 67M and 16M matrices in 2D and 3D respectively, are compressed in just a fraction of a second, as reported in the central column of Figure 11.

In the rightmost column of Figure 11, we report on the effectiveness of the compression operation in reducing the memory footprint of the low rank blocks. In the 2D case, there is a factor of reduction between pre-compression low rank memory (with all blocks having a uniform rank ) and post-compression to an accuracy of . In the 3D case, the compression is a factor of for the low rank data, primarily because the starting matrix was generated with a relatively small footprint (all blocks have rank 64, generated from tri-cubic polynomials) and not much reduction is possible to maintain an accuracy of . In all cases however, we note the ideal growth in memory.

6.3.2. Strong Scalability

Strong scalability results are shown in Figure 12. Deviation from ideal scalability becomes noticeable as soon as the local problem size is small enough where there are not enough computations to perform locally and communication time dominates. For the 2D tests, the problem size is . With GPUs, the local problem size is only and results in an efficiency reduction to near . On 32 GPUs, the local problem size is and the limit of strong scalability is essentially reached: there is very little local work to do and the whole operation takes a few ms, spent mostly in communications. For the 3D tests, the problem size is . On GPUs, the local problem size is already only , and the resulting efficiency drops below . With 16 GPUs, the strong scalability limit is essentially reached as the problem size is now only , with very little local work available for each GPU.

6.4. Integral Fractional Diffusion Equation

We consider the performance of a Krylov solver for the solution of the integral equation where is the fractional diffusion operator defined as:

| (5) |

The spatially varying diffusivity is defined as the geometric mean of the usual diffusion coefficient, , is the fractional order () and we assume it to be constant in this experiment, is the region in which the solution is sought, and is a surrounding region in which volume constraints, somewhat analogous to the Dirichlet boundary conditions in the classical diffusion equations, are imposed on the solution, for . We solve the problem with , , and constant fractional order . We use a diffusivity field of the form

| (6) |

with the bump function defined as:

| (7) |

and a right hand side in .

The singularity of the kernel in (5) requires that the discretization of the integral be done carefully to allow standard quadrature rules to attain their theoretical convergence rate. To this end, we rewrite the integral as:

| (8) |

where is a function with local support around chosen to remove the singularity of the integrand and allow a trapezoidal rule to achieve its quadratic convergence. The first integral in (8) can be then discretized on a regular grid while the second integral involves terms that can be integrated analytically. The details are derived in [8], which generalizes the treatment of [43] to the variable coefficient case. This correction has the property that it allows the hierarchical matrix treatment of the kernel to remain as is, similarly to the FMM-compatible treatment of integral equations on curves [34, 48] and other locally-corrected quadrature schemes [29].

In 2D, using a regular grid of points with spacing in , the final discretization results in a system of the form:

| (9) |

where is an diagonal matrix with entries

| (10) |

is an formally-dense matrix with zero diagonals and entries

| (11) |

while is a sparse matrix resulting from the regularization of the integral. is essentially the discretization of an inhomogeneous, but non-fractional, diffusion operator, and we use it to construct a preconditioner for (8). Its footprint on a regular grid is the same as a 5-point Laplacian discretization, for an accuracy. (Note however that when the solution does not have sufficient regularity, only first-order convergence in solution error can be expected).

The matrix is constructed and compressed as an matrix using the H2Opus facilities described above. A -d tree partitions the grid points into clusters that are distributed to the different GPUs. An admissibility condition defines the structure of the matrix. Dense blocks are generated by evaluating the kernel directly. The low rank blocks are first approximated using polynomial Chebyshev approximations of the kernel on bounding boxes of the point clusters, and they are then algebraically compressed to generate the matrix that is used during the solution phase.

The diagonal matrix is constructed by noting that its entries can be recast as the product , where is a matrix similar to , but defined on the larger region , discretized with the same , and is a vector of ones. We therefore use the distributed-memory facilities of H2Opus to generate , perform the matrix-vector multiplication, to compute the diagonal entries of . is then discarded.

Finally, the sparse matrix is distributed among the processors using the same blockwise row partitioning of the matrix , and its entries computed explicitly.

The Krylov solver uses the facilities of the PETSc library. The operator to be inverted is symmetric positive definite, and we thus employ a preconditioned conjugate gradient method as solver. For the preconditioning step, we consider a smoothed aggregation algebraic multigrid method constructed on the matrix , using a diagonally preconditioned Chebyshev method as a smoother. The setup of the preconditioner runs partly on the CPU and partly on the GPU, while the Krylov solver, including the preconditioner application, runs entirely on the GPU [42, 56].

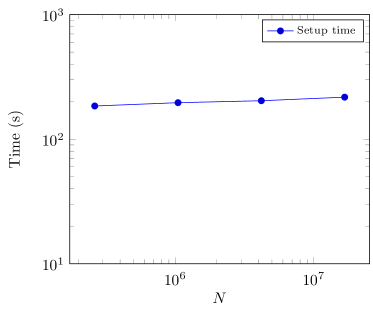

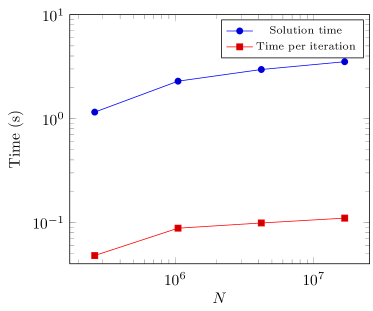

The weak scalability of the solver is shown in Figure 13 on grids of size , , , and using 1, 4, 16, and 64 GPUs respectively. Setup times are shown in the left panel as a function of the size of the problem , and they include all the necessary steps to assemble the operator to be inverted together with the setup of the preconditioner. The only setup computation not included in the timing is the construction of the -d tree for the matrix structure generation. This is an computation that is currently performed sequentially on the CPU. In the right panel, we report the total time for the Krylov solver, and the time per iteration as the problem size is scaled up.

Perfect weak scaling can be observed for the operators’ setup; the increase in total solving time can be attributed to a slight increase in the number of Krylov iterations being 24, 26, 30 and 32 using 1, 4, 16, and 64 GPUs respectively. Since the domain is kept fixed in the above tests while the discretization step is reduced, these results confirm the algorithmic scalability of the solver as well as of its parallel implementation. We are not aware of other software libraries that can readily handle variable coefficient fractional diffusion problems at this scale.

7. Conclusions

We presented distributed-memory, GPU-accelerated, algorithms and implementations for two key operations on matrices. The first is the matrix-vector (and multi-vector) multiplication and the second is the algebraic matrix re-compression to reduce the memory footprint consistent with a desired accuracy. The algorithms are supported by distributed data structures for representing, accessing, and operating on hierarchical matrices with nested bases, and the inter-process MPI communication is optimized to hide much of the data transfer cost with local compute phases of the algorithms. Both algorithms exhibit behavior and near-ideal weak-scalability for a large number of GPUs; high performance on individual GPUs is achieved through the use of batched dense linear algebra kernels. The algorithms are incorporated in the open source library H2Opus and have interfaces to the PETSc library.

The performance and usefulness of the library is demonstrated through the solution of a variable diffusivity integral fractional diffusion problem in 2D. Algorithmic and implementation scalability are demonstrated on grids of size up to on 64 GPUs, using the facilities of the PETSc library for the Krylov solver and the construction of an algebraic multigrid preconditioner.

Future work is planned on three fronts. The first is the development of distributed-memory multi-GPU versions of additional matrix algorithms, including their construction from randomized sampling. This will open the door for matrix-matrix multiplication and inversion via Newton-Schulz iterations. The second is the development of interfaces to BEM++ [47] and related high-productivity software libraries for integral equations that handle discretization tasks. H2Opus can provide highly effective core solvers for such libraries and will allow their use for large scale problems. The last front entails portability, and we plan to extend the H2Opus library to run on AMD GPUs.

References

- [1] FFM3D: Flatiron Institute Fast Multipole Libraries.

- [2] H2Lib.

- [3] H2Opus–a performance-oriented library for hierarchical matrices.

- [4] MAGMA–matrix algebra on GPU and multicore architectures.

- [5] STRUMPACK–STRUctured Matrices PACKage, v3.3.

- [6] Thrust library documentation.

- [7] J. I. Aliaga, R. Carratalá-Sáez, R. Kriemann, and E. S. Quintana-Ortí. Task-parallel LU factorization of hierarchical matrices using OmpSs. In IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), pages 1148–1157, 2017.

- [8] Hasnaa Alzahrani, George Turkiyyah, Omar Knio, and David Keyes. Space-fractional diffusion with variable order and diffusivity: Discretization and direct solution strategies. arXiv preprint arXiv:2108.12772, 2021.

- [9] Ilona Ambartsumyan, Wajih Boukaram, Tan Bui-Thanh, Omar Ghattas, David Keyes, Georg Stadler, George Turkiyyah, and Stefano Zampini. Hierarchical matrix approximations of hessians arising in inverse problems governed by pdes. SIAM Journal on Scientific Computing, 42(5):A3397–A3426, 2020.

- [10] Sivaram Ambikasaran, Daniel Foreman-Mackey, Leslie Greengard, David W. Hogg, and Michael O’Neil. Fast direct methods for gaussian processes. IEEE Transactions on Pattern Analysis & Machine Intelligence, 38(2):252–265, 2016.

- [11] Sivaram Ambikasaran, Karan Raj Singh, and Shyam Sundar Sankaran. HODLRlib: a library for hierarchical matrices. Journal of Open Source Software, 4(34):1167, 2019.

- [12] Marc Baboulin, James Demmel, Jack Dongarra, Stanimire Tomov, and Vasily Volkov. Enhancing the performance of dense linear algebra solvers on GPUs (in the MAGMA project). In Proceedings of the International Conference for High Performance Computing, Networking, Storage, and Analysis, SC08, 2008-11 2008.

- [13] Satish Balay, Shrirang Abhyankar, Mark F. Adams, Jed Brown, Peter Brune, Kris Buschelman, Lisandro Dalcin, Alp Dener, Victor Eijkhout, William D. Gropp, Dmitry Karpeyev, Dinesh Kaushik, Matthew G. Knepley, Dave A. May, Lois Curfman McInnes, Richard Tran Mills, Todd Munson, Karl Rupp, Patrick Sanan, Barry F. Smith, Stefano Zampini, Hong Zhang, and Hong Zhang. PETSc users manual. Technical Report ANL-95/11 - Revision 3.13, Argonne National Laboratory, 2020.

- [14] Satish Balay, Shrirang Abhyankar, Mark F. Adams, Jed Brown, Peter Brune, Kris Buschelman, Lisandro Dalcin, Alp Dener, Victor Eijkhout, William D. Gropp, Dmitry Karpeyev, Dinesh Kaushik, Matthew G. Knepley, Dave A. May, Lois Curfman McInnes, Richard Tran Mills, Todd Munson, Karl Rupp, Patrick Sanan, Barry F. Smith, Stefano Zampini, Hong Zhang, and Hong Zhang. PETSc Web page. https://www.mcs.anl.gov/petsc, 2020.

- [15] Amanda Bienz, William D. Gropp, and Luke N. Olson. Node aware sparse matrix–vector multiplication. Journal of Parallel and Distributed Computing, 130:166 – 178, 2019.

- [16] Steffen Börm. Efficient numerical methods for non-local operators: -matrix compression, algorithms and analysis, volume 14. European Mathematical Society, 2010.

- [17] Steffen Börm and Joana Bendoraityte. Distributed -matrices for non-local operators. Computing and Visualization in Science, 11(4):237–249, 2008.

- [18] Steffen Börm, Sven Christophersen, and Ronald Kriemann. Semi-automatic task graph construction for -matrix arithmetic. arXiv preprint arXiv:1911.07531, 2019.

- [19] Wajih Boukaram, George Turkiyyah, and David Keyes. Hierarchical matrix operations on GPUs: Matrix-vector multiplication and compression. ACM Transactions on Mathematical Software, 45(1):3:1–3:28, 2019.

- [20] Wajih Boukaram, George Turkiyyah, and David Keyes. Randomized GPU algorithms for the construction of hierarchical matrices from matrix-vector operations. SIAM Journal on Scientific Computing, 41(4):C339–C366, 2019.

- [21] Wajih Boukaram, George Turkiyyah, Hatem Ltaief, and David Keyes. Batched QR and SVD Algorithms on GPUs with Applications in Hierarchical Matrix Compression. Parallel Computing, 74:19–33, 2018.

- [22] Wajih Boukaram, Stefano Zampini, George Turkiyyah, and David Keyes. H2OPUS-TLR: High performance tile low rank symmetric factorizations using adaptive randomized approximation. arXiv preprint arXiv:2108.11932, 2021.

- [23] Athena Elafrou, Georgios Goumas, and Nectarios Koziris. Conflict-free symmetric sparse matrix-vector multiplication on multicore architectures. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, SC ’19, New York, NY, USA, 2019. Association for Computing Machinery.

- [24] L. Erlandson, D. Cai, Y. Xi, and E. Chow. Accelerating parallel hierarchical matrix-vector products via data-driven sampling. In 2020 IEEE International Parallel and Distributed Processing Symposium (IPDPS), pages 749–758, Los Alamitos, CA, USA, may 2020. IEEE Computer Society.

- [25] P. Ghysels, X. S. Li, C. Gorman, and F. Rouet. A Robust Parallel Preconditioner for Indefinite Systems Using Hierarchical Matrices and Randomized Sampling. In IEEE International Parallel and Distributed Processing Symposium (IPDPS), pages 897–906, 2017.

- [26] Adrianna Gillman, Alex H. Barnett, and Per-Gunnar Martinsson. A spectrally accurate direct solution technique for frequency-domain scattering problems with variable media. BIT Numerical Mathematics, 55(1):141–170, 2015.

- [27] Adrianna Gillman and Per-Gunnar Martinsson. An O(N) algorithm for constructing the solution operator to 2D elliptic boundary value problems in the absence of body loads. Adv. Comput. Math., 40(4):773–796, 2014.

- [28] Lars Grasedyck and Wolfgang Hackbusch. Construction and arithmetics of -matrices. Computing, 70:295–334, 2003.

- [29] Leslie Greengard, Michael O’Neil, Manas Rachh, and Felipe Vico. Fast multipole methods for the evaluation of layer potentials with locally-corrected quadratures. Journal of Computational Physics: X, 10:100092, 2021.

- [30] Dahai Guo, William Gropp, and Luke N Olson. A hybrid format for better performance of sparse matrix-vector multiplication on a GPU. The International Journal of High Performance Computing Applications, 30(1):103–120, 2016.

- [31] W. Hackbusch. Hierarchical Matrices: Algorithms and Analysis. Springer, 2015.

- [32] W. Hackbusch and S. Börm. Data-sparse approximation by adaptive -matrices. Computing, 69(1):1–35, 2002.

- [33] Nathan Halko, P. Martinsson, and Joel A Tropp. Finding structure with randomness: Probabilistic algorithms for constructing approximate matrix decompositions. SIAM Review, 53(2):217–288, 2011.

- [34] S. Hao, A. H. Barnett, P. G. Martinsson, and P. Young. High-order accurate methods for Nyström discretization of integral equations on smooth curves in the plane. Adv. Comput. Math., 40(1):245–272, February 2014.

- [35] Kenneth L. Ho. FLAM: Fast linear algebra in matlab –Algorithms for hierarchical matrices. Journal of Open Source Software, 5(51):1906, 2020.

- [36] Hua Huang, Xin Xing, and Edmond Chow. H2Pack: High-performance matrix package for kernel matrices using the proxy point method. ACM Trans. Math. Softw., 47(1), December 2020.

- [37] Akihiro Ida, Takeshi Iwashita, Takeshi Mifune, and Yasuhito Takahashi. Parallel hierarchical matrices with adaptive cross approximation on symmetric multiprocessing clusters. Journal of Information Processing, 22(4):642–650, 2014.

- [38] Pierre Jolivet, Jose E Roman, and Stefano Zampini. KSPHPDDM and PCHPDDM: Extending PETSc with advanced Krylov methods and robust multilevel overlapping Schwarz preconditioners. Computers & Mathematics with Applications, 84:277–295, 2021.

- [39] Gary R. Marple, Alex Barnett, Adrianna Gillman, and Shravan Veerapaneni. A fast algorithm for simulating multiphase flows through periodic geometries of arbitrary shape. SIAM Journal on Scientific Computing, 38(5):B740–B772, 2016.

- [40] Stefano Massei, Leonardo Robol, and Daniel Kressner. hm-toolbox: MATLAB software for HODLR and HSS matrices. SIAM Journal on Scientific Computing, 42(2):C43–C68, 2020.

- [41] Duane Merrill and Michael Garland. Merge-based parallel sparse matrix-vector multiplication. In Proceedings of the International Conference for High Performance Computing, Networking, Storage and Analysis, SC ’16. IEEE Press, 2016.

- [42] Richard Tran Mills, Mark F Adams, Satish Balay, Jed Brown, Alp Dener, Matthew Knepley, Scott E Kruger, Hannah Morgan, Todd Munson, Karl Rupp, Barry F Smith, Stefano Zampini, Hong Zhang, and Junchao Zhang. Toward performance-portable PETSc for GPU-based exascale systems. arXiv preprint arXiv:2011.00715, 2020.

- [43] Victor Minden and Lexing Ying. A simple solver for the fractional laplacian in multiple dimensions. SIAM Journal on Scientific Computing, 42(2):A878–A900, 2020.

- [44] Satoshi Ohshima, Ichitaro Yamazaki, Akihiro Ida, and Rio Yokota. Optimization of Hierarchical Matrix Computation on GPU. In Rio Yokota and Weigang Wu, editors, Supercomputing Frontiers, volume 10776 of Lecture Notes in Computer Science, pages 274–292. Springer International Publishing, 2018.

- [45] E. Rebrova, G. Chávez, Y. Liu, P. Ghysels, and X. S. Li. A Study of Clustering Techniques and Hierarchical Matrix Formats for Kernel Ridge Regression. In IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), pages 883–892, 2018.

- [46] François-Henry Rouet, Xiaoye S. Li, Pieter Ghysels, and Artem Napov. A distributed-memory package for dense hierarchically semi-separable matrix computations using randomization. ACM Transactions on Mathematical Software, 42(4):27:1–35, 2016.

- [47] Wojciech Smigaj, Timo Betcke, Simon Arridge, Joel Phillips, and Martin Schweiger. Solving boundary integral problems with BEM++. ACM Trans. Math. Softw., 41(2), February 2015.

- [48] Bowei Wu and Per-Gunnar Martinsson. Zeta correction: a new approach to constructing corrected trapezoidal quadrature rules for singular integral operators. Advances in Computational Mathematics, 47(3):45, 2021.

- [49] I. Yamazaki, A. Abdelfattah, A. Ida, S. Ohshima, S. Tomov, R. Yokota, and J. Dongarra. Performance of hierarchical-matrix BiCGStab solver on GPU clusters. In IEEE International Parallel and Distributed Processing Symposium (IPDPS), pages 930–939, 2018.

- [50] Ichitaro Yamazaki, Akihiro Ida, Rio Yokota, and Jack Dongarra. Distributed-memory lattice -matrix factorization. The International Journal of High Performance Computing Applications, 33(5):1046–1063, 2019.

- [51] C. D. Yu, W. B. March, and G. Biros. An Parallel Fast Direct Solver for Kernel Matrices. In IEEE International Parallel and Distributed Processing Symposium (IPDPS), pages 886–896, 2017.

- [52] C. D. Yu, S. Reiz, and G. Biros. Distributed O(N) linear solver for dense symmetric hierarchical semi-separable matrices. In IEEE 13th International Symposium on Embedded Multicore/Many-core Systems-on-Chip (MCSoC), pages 1–8, 2019.

- [53] Chenhan D. Yu, William B. March, Bo Xiao, and George Biros. INV-ASKIT: A Parallel Fast Direct Solver for Kernel Matrices. In IEEE International Parallel and Distributed Processing Symposium (IPDPS), pages 161–171, 2016.

- [54] Chenhan D. Yu, Severin Reiz, and George Biros. Distributed-memory hierarchical compression of dense SPD matrices. In Proceedings of the International Conference for High Performance Computing, Networking, Storage, and Analysis, SC ’18. IEEE Press, 2018.

- [55] Peter Zaspel. Algorithmic patterns for -matrices on many-core processors. Journal of Scientific Computing, 78(2):1174–1206, 2019.

- [56] Junchao Zhang, Jed Brown, Satish Balay, Jacob Faibussowitsch, Matthew Knepley, Oana Marin, Richard Tran Mills, Todd Munson, Barry F Smith, and Stefano Zampini. The PetscSF scalable communication layer. IEEE Transactions on Parallel and Distributed Systems, 2021.