Gyroscope-Assisted Motion Deblurring Network

Abstract

Image research has shown substantial attention in deblurring networks in recent years. Yet, their practical usage in real-world deblurring, especially motion blur, remains limited due to the lack of pixel-aligned training triplets (background, blurred image, and blur heat map) and restricted information inherent in blurred images. This paper presents a simple yet efficient framework to synthetic and restore motion blur images using Inertial Measurement Unit (IMU) data. Notably, the framework includes a strategy for training triplet generation, and a Gyroscope-Aided Motion Deblurring (GAMD) network for blurred image restoration. The rationale is that through harnessing IMU data, we can determine the transformation of the camera pose during the image exposure phase, facilitating the deduction of the motion trajectory (aka. blur trajectory) for each point inside the three-dimensional space. Thus, the synthetic triplets using our strategy are inherently close to natural motion blur, strictly pixel-aligned, and mass-producible. Through comprehensive experiments, we demonstrate the advantages of the proposed framework: only two-pixel errors between our synthetic and real-world blur trajectories, a marked improvement (around 33.17%) of the state-of-the-art deblurring method MIMO on Peak Signal-to-Noise Ratio (PSNR).

Introduction

Motion blur, which actively occurs in wheeled and legged robots, autonomous vehicles, and hand-held photography, is one of the most dominant sources of image quality degradation in digital imaging, impacting the robustness of industrial applications Koo et al. (2022). Over the past decades, the research on image deblurring has made considerable strides Nayar and Ben-Ezra (2004); Chen et al. (2008); Cho and Lee (2009). In particular, integrating neural networks has remarkably enhanced the effectiveness of deblurring (from blurry to sharp images) techniques Zhang et al. (2020); Kupyn et al. (2019); Zhang et al. (2022). However, the performance of these studies hinges heavily on the availability of high-quality blurred datasets and their corresponding ground truth images. For instance, pixel-aligned training triplets (background, blurred image, and blur heat map) are required to train a model for various related tasks.

Current methods for collecting blurred datasets involve synthesizing blurred images from clear ones. However, existing synthesis approaches assume that the blur trajectory of each pixel (or region within a grid) is the same, which starkly contrasts with reality. In practice, camera-shaking speed and pose are varied (e.g., camera rolling), and motion blur trajectories on each pixel are coherently different. To overcome this, Mustaniemi et al. proposed using IMU to synthesize blurred images Mustaniemi et al. (2019). However, they assume the blurred trajectory is a straight line far from the actual situation. As a result, the primary challenge lies in accurately calculating the blurred trajectory for each image pixel. The motion blur presented in Fig. 1 (top) cannot be accurately synthetic with existing methods. In short, such a phenomenon brings two critical challenges to motion blur synthesis and removal: (1) How to effectively simulate real-world blur effects, and (2) how to leverage blur trajectory information to aid image deblurring.

Given the challenges above, we introduce a framework to leverage blur trajectory on blur image synthetic and deblurring. The framework includes a strategy for training triplet (background, blurred image, and blur heat map) generation and a Gyroscope-Aided Motion Deblurring (GAMD) network for blurred image restoration. We synthesize and restore motion blur images using Inertial Measurement Unit (IMU) data. The rationale is that through harnessing IMU data, we can determine the transformation of the camera pose during the image exposure phase, facilitating the deduction of the motion trajectory (aka. blur trajectory) for each point inside the three-dimensional space. As a result, for the first challenge, our proposed synthetic strategy can fully use the trajectory map generated by the IMU. Specifically, we record the accurate image blur trajectory and the corresponding IMU data by shaking the camera to shoot the laser matrix (see Fig. 3 (a)). The matrix is then employed to guide each pixel to synthesize blurred images according to different trajectories, so the generated blurred images are inherently close to real-world motion blur (see Fig. 3 (b)). Fig. 2 demonstrates that our proposed strategy can generate realistic blurred images in which each pixel is finely shifted according to the trajectory of the motion blur. In particular, our proposed approach can also efficiently create various rotating blurs, which are often omitted from traditional methods.

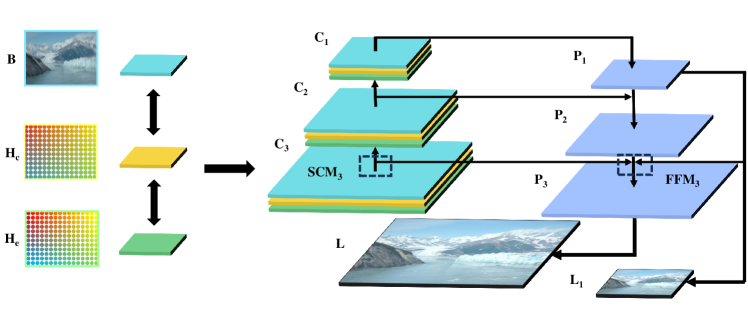

For the second challenge, we propose a GAMD network based on FPN (Feature Pyramid Networks) Ghiasi et al. (2019) (see Fig. 3 (b) and (c)) makes full utilize of the blur heat map, that is derived from the blur trajectory, to guide the deblurring in fine-grained level. As shown in Fig. 1, blurred details are properly restored using our proposed GAMD network. Experiments in Section Experiments show that our framework improves the peak signal-to-noise ratio (PSNR) by about 33.17% over the state-of-the-art MIMO Cho et al. (2021).

In summary, we fully utilise blur trajectory for motion blur image synthesis and restoration. Thus, our contributions are twofold: (1) We introduce an efficient strategy for flexibly modelling blurred images to build pixel-aligned and close-to-real-world training triplets. We also generate a new publicly available dataset (namely IMU-Blur, 8350 triplets, far more than existing datasets in quality and quantity) with our method. (2) We introduce a novel network, GAMD, by learning image features and blur heatmaps to guide image deblurring. Comprehensive experiments demonstrate the accuracy and effectiveness of our proposed framework.

Related Works

We provide a succinct review of existing works on generating blur datasets and deblurring methodologies. For a more thorough treatment of these topics, recent compilations by Koh Koh et al. (2021) and Zhang Zhang et al. (2022) offer sufficiently good reviews.

Motion Blur Collection

Existing methods are broadly divided into direct simulation and physical acquisition. For the first one, the seminal work by Sun et al. synthesizes motion blur by convolving the entire image with a fixed kernel Sun et al. (2013). However, it does not consider the variation of the blur kernel for each pixel. Rim et al. propose using gyroscope data to generate realistic blur fields Mustaniemi et al. (2019). Although spatial transform convolution is performed, it ignores the relationship between IMU and blur trajectories. Thus, it cannot generate blurry images that closely resemble natural environments.

For the second one, the images are usually acquired using high-speed cameras. For instance, Levin et al. collects blurred photos by capturing the projected images on a wall while shaking the camera Levin et al. (2009). Though it better approximates real-world blur, the background and the blurry images are not pixel-aligned. Moreover, its efficiency and quantity are limited for network training. The GoPro dataset Nah et al. (2017) is currently the most extensively utilized in deblurring research, created by capturing high-speed video and synthesizing blurred images (via clear frame average). Some real-world blur datasets (with blurred and sharp images) Rim et al. (2020) were captured using dual cameras simultaneously. Nevertheless, these datasets comprise images from a single scene, potentially limiting the generalization of network training.

Unlike existing approaches, we use the camera IMU data to synthesize blur trajectories and extract large-scale and pixel-aligned blur images from backgrounds. Thus, our proposed strategy is more efficient, and the generated training triplets have higher quality in pixel alignment, close to real-world blur and diversity.

Deblurring

Image deblurring is a long-standing problem in computer vision. Earlier deblurring methods focused primarily on uniform deblurring Cho and Lee (2009); Fergus et al. (2006). However, these methods often fall short as real-world images typically exhibit non-uniform blurring, with varying blur kernels across different image regions. In the deep learning era, numerous CNN-based blind deblurring approaches have been proposed. For instance, the generative adversarial network DeblurGAN Kupyn et al. (2018) uses blurred and sharp image pairs to train a conditional GAN for deblurring. SRN Tao et al. (2018) employs recurrent networks to extract features between images at multiple scales. Similarly, MIMO-UNet Cho et al. (2021) extracts image features at various scales and merges these features to complete the deblurring task. However, these blind deblurring algorithms often fail to achieve satisfactory results due to the lack of blur-related prior on region, intensity, and motion trajectory. To overcome this problem, we feed a heatmap (converted from IMU data) into the network to guide deblurring. At the same time, we found a slight error between the blur prior data generated by the IMU and the ground truth. These errors are proportional to the size of the image pixels and are negligible on coarse-grained images. Therefore, we use the image pyramid network structure to learn blur trajectories and blur image features. Increasing the proportion of coarse-grained features can enhance the image deblurring effect. Experiments show that our method can effectively improve deblurring accuracy, achieving a state-of-the-art performance.

Method

We first review the principles of blurred image generation in the real world and, based on this, propose a method for triplet generation. Next, we detail how GAMD utilizes blur trajectories to guide image deblurring. Note that the blurred images we discuss here are all produced by camera movement.

Blur Trajectories

Motion blur is mainly due to the relative motion between the camera and the scene within a limited exposure time. Suppose there is a three-dimensional space point in the camera lens. When the camera moves, the blur trajectory of point on the image is superimposed by its imaging position within the exposure time. In this stage, there is a mapping relationship between the point on the image and the pose change of the camera. At the same time, the camera pose at each moment is obtained based on the IMU data. So, we can derive the blur trajectory of point through the IMU and use the blur trajectory to synthesize the corresponding blurred image. Table 3 summarizes all the variables and their descriptions.

| Symbol | Description |

|---|---|

| The coordinates of the mapped position of point on the camera | |

| The coordinates of point under the camera coordinate axis | |

| The instantaneous clear image captured by the camera at time | |

| The focal length of the camera in the and directions | |

| An array of at time | |

| Blurred image captured by the camera | |

| Blurred image synthesized by our method | |

| The pose of the camera at time | |

| When , the above symbols represent the initial state |

Next, we separately discuss the effects of camera translation and camera rotation on the transformation of the imaging position of point on the image. When the camera only moves in translation, there is the following equation:

| (1) |

where is the camera motion’s translation matrix, is the camera’s intrinsic matrix, and is the depth of point from the camera at time . Since the camera’s exposure time is generally in the range of tens of milliseconds, the translational displacement of the camera is minimal during this period. We can assume that , and calculate the pixel displacement of point , () as . This equation shows that as the point is located at a deeper position ( becomes larger, and remain constant), the effect of camera translation on pixel motion becomes smaller. A real-world analogy is when driving a car and looking at an obstacle on the side of the road - the closer this obstacle is to the vehicle, the faster it moves.

Therefore, when the camera captures distant objects, we can ignore the effect of camera translation on image motion blur. Regarding camera rotation, when shooting, all light passes through the centre of the camera lens. Inspired by this phenomenon, we use spherical polar coordinates to represent points in space. This alternative representation facilitates problem-solving. The rotation of the camera consists of Pitch, Roll and Yaw. Since the camera exposure time is very short, we can approximate the blur trajectory during this period as the vector sum of the blur trajectories generated by each movement. The following discussion explores motion blur caused by three types of motion.

In yaw-induced motion blur, the spatial coordinate representation is depicted in Fig. 4. The coordinates can be expressed as follows:

| (2) |

where are marked in Fig. 4 (a). Combining Eq. 2 and camera imaging principles, the pixel coordinates of the spatial point mapped onto the image are:

| (3) |

It’s evident from the above Eq. 3 that the variable cancels out. When capturing images, the distance between the target object and the centre of the camera lens does not influence the projected pixel position. From the spherical coordinate system, remains constant as the camera undergoes yaw. Only changes, and this change can be determined through the camera’s gyroscope readings. For a given pixel point (known , ), we can derive the corresponding and values under various blur conditions using Eq. 3 and use the gyroscope to acquire .

| (4) |

Due to space limitations, the remaining two ambiguous conditions are discussed in detail in the appendix. Using Eq. 4, we can calculate the difference in a blur for each pixel and stack these calculations to calculate the projected point of the target point on the new image. Connecting these projected points is the approximate blur trajectory of point . This process needs to iteratively update the latest projection point coordinates and gyroscope information from the moment the camera exposure starts until the end of the direction. Our experiments show that the gyroscope data of our device corresponds to six sets of data in each image frame. The pixel origin for each pixel can be computed by computing the corresponding blur trajectory node along seven possible trajectories. These seven nodes connect to form a curve that outlines the trajectory. However, the accuracy of blur trajectories is inherently limited by the frequency of gyroscope data acquisition. Increased accuracy requires higher frequency gyroscope data collection.

After calculating the pixel trajectories of the four corners of the image, the projective transformation can be performed on the entire clear image, and the images collected by the camera at each moment of the exposure time can be calculated. A blurred image close to the natural environment can be obtained by superimposing these images. The imaging principle of the camera involves collecting photons during the exposure time. This process can be integrated using the following formula:

| (5) |

where represents the blurred image captured by the camera, is the normalization factor, is the clear image captured at timestamp , and denotes the camera’s exposure time. Given that our gyroscope can collect six sets of data during the camera’s exposure time, the camera moves at different speeds during each interval, resulting in uneven blur in the image. The composite blurred image is derived as follows:

| (6) |

where is the number of gyroscope-acquired measurements during the camera’s exposure time, and the blur intensity varies across different stages. denotes the image after the -th perspective transformation of the original image in the -th stage. It defaults to the first frame’s clear image at the start of the exposure. For clarity, we select four points on the map as original points in the first frame and calculate the blur trajectories of these points using the gyroscope. We compute the corresponding perspective transformation matrix by selecting corresponding points on the four blur trajectories and derive the new instantaneous clear image . represents the pixel length of the blur trajectory corresponding to the -th pixel in the -th stage. To optimize the blur effect, we set the length of the longest blur trajectory as . We synthesize a blurred vision by superimposing these images, as depicted in Fig. 5.

Deblurring Network

Blur Trajectory in the Network

Unlike traditional neural networks that mainly focus on blurry images, we refer to heatmaps generated based on fine-grained blur trajectory data. To do so, we use the camera IMU data to calculate the blur trajectory for each pixel to create the heatmap. In practice, it is challenging to convert blur trajectories into heatmaps since the mathematical image of most trajectories is intractable. For this, we introduce a Bezier curve, a parametric curve defined by discrete “endpoints” and “control points” that describe the blur trajectory for each pixel. Here, we briefly summarize the advantages of Bezier curves:

-

•

Flexibility: Bezier curves, especially higher-order ones, can represent a broad range of shapes, making them apt for capturing complex motion patterns.

-

•

Locality: Changes in a control point of a Bezier curve influence only a specific portion of the curve, ensuring that local modifications do not lead to global alterations in the trajectory shape.

Notably, the start and endpoints of the blur trajectory (captured from gyroscope data) serve as the two main control points. Additional control points can be inferred from intermediate gyroscope readings, ensuring that curves closely follow blur paths. Our preliminary experiments show that Bezier curves’ endpoints and control points can correctly approximate more than 99.9% blur trajectories. The rest are sub-optimal but still tolerable in our proposed network. In addition, Bezier curves project different trajectories into precise length representations, providing more convenience for network training. During training and inference, together with blurred images, we project the endpoints and control points into heatmaps as auxiliary input features. The blur heat map is divided into a control point heat map and an endpoint heat map (see Fig. 7).

Network Architecture

Fig. 6 illustrates the general idea of our proposed GAMD. It uses blurred images and heat maps as input. FPN (Feature Pyramid Network) Ghiasi et al. (2019) is the main body for blurry image restoration. The rationale behind FPN is to restore low-level blur with the guidance of high-level context. While errors between the estimated and ground blur trajectories are sensitive (the higher the resolution, the smaller the error) to image resolution, we use the lower layer of the FPN for deblurring (with the guidance of the high-level features).

With FPN, GAMD is divided into three layers. The input feature groups of each layer are , , and , and the output features are , , and . is the feature extraction module in the FPN Ghiasi et al. (2019), which is used for preliminary processing and fusion of each layer’s blur images and heat maps. Note that the amount of information contained in the heat map is limited. To improve efficiency, we use convolutional layers to extract heat map features and convolutional layers to convolve the blurred image. Finally, these features are concatenated and fed into the module. We use the module for feature fusion, which has been extensively used in segmentation and detection tasks Kirillov et al. (2019). But different from traditional modules, we connect the output of the top layer to the of each layer, taking advantage of the top layer’s context to improve the deblurring effect in the bottom layers. The module can be expressed as:

| (7) |

where is the output of the n-th layer . This way, the network effectively reduces the noise from the bottom layer and fuses the features of adjacent layers. Finally, is fed into the decoder to restore the details of the input image more accurately, thereby improving the restoration performance. Finally, we introduce the loss function. We use Euclidean loss between network outputs and the ground truth, and our Loss function is expressed as follows:

| (8) |

where are GAMD output in the -th scale, is the ground truth (downsampled to the same size using bilinear interpolation), is the number of elements to be normalized in , is the weights for each scale, we set to 0.6.

Experiments

In this section, we systematically evaluate the proposed blurred image synthesis method and validate the effectiveness and precision of GAMD on image deblurring tasks. Additionally, we discuss the limitations of our approach. We trained the network for 3000 epochs with a mini-batch size of 8 and an initial learning rate of 1e-4, decreased by a factor of 0.5 at every 500 epochs. Our experiments are performed on an NVIDIA RTX 3090, and the entire training process takes an average of 2 weeks.

Datasets

IMU-blur: We commenced our evaluation by randomly selecting 8350 clear images (aka. backgrounds) from existing image datasets Zhou et al. (2017); Quattoni and Torralba (2009). By capturing IMU data during the motion blur induced by the D455i camera, we synthesized a dataset of 8350 blurred images accompanied by corresponding blur heat maps. Ultimately, this dataset, namely IMU-blur, contains 6680 triplets for training and 1670 triplets for testing. Our IMU-blur dataset has significant advantages over widely used datasets such as GoPro Nah et al. (2017) and RealBlur Rim et al. (2020), which contain limited scene variations. It includes images captured in different environments, eliminating the interference of features present in repeated scenes for network learning. Unlike existing blurred datasets mainly recorded by high-speed cameras, IMU-blur is more accessible to synthesize towards massive-produced and real-world motion blur.

BlurTrack: We introduce BlurTrack, a dataset for evaluating synthetic blur methods to enhance dataset diversity. By projecting a matrix of laser dots onto the wall, we captured accurate blur trajectories using camera and IMU data. BlurTrack contains 3994 images, divided into different types to evaluate blurred synthetic scenes. BlurTrack allows us to assess the reliability of our synthetic blur methods and their agreement with real-world blur patterns.

Blur Synthetic Evaluation

To assess the effectiveness of our proposed synthetic strategy, we conduct two comparisons: (1) a comparison of blurred trajectories with those of natural images and (2) a comparison of trained deblurring networks with existing datasets.

For the first one, we collect the starting point positions and corresponding IMU data of all blurred trajectories and use the method in this paper to predict the end point of the blurred trajectory. Comparing the expected blur track endpoints with those obtained from actual laser images (BlurTrack) allows us to quantify the difference in blurring effects. Our approach demonstrates an average pixel error of only two pixels compared to the exact blurred trajectory, indicating the high accuracy of the dataset. For the second one, we compare it with established datasets. We use this approach to synthesize new blurred datasets from clear images from the GoPro and RealBlur datasets. And trained models using the synthesized and original datasets. Subsequently, we evaluated their performance using SRN-DeblurNet Tao et al. (2018). Table 2 presents a comparative performance analysis. Our synthetic dataset outperforms the original dataset, and notably, this is compared using synthetic and accurate data, which confirms the efficacy of our artificial blur dataset. Researchers can use this method to quickly generate large amounts of data sets, significantly reducing the difficulty of data set collection.

| Training sets | Test sets | PSNR/SSIM |

|---|---|---|

| GoPro | GoPro | 33.02/0.9265 |

| GoPro (Our) | GoPro | 33.14/0.9284 |

| RealBlur | RealBlur | 33.54/0.9093 |

| RealBlur (Our) | RealBlur | 34.00/0.9253 |

Ablation Study

We conduct experiments on IMU-blur and analyze the impact of blur heatmaps and deblurring networks on the deblurring effect, respectively. To conduct experiments more fairly, we use MIMO-UNet Cho et al. (2021) as the baseline method and improve it so that it can input blur heat maps. We call the improved MIMO-UNet Cho et al. (2021) as MIMO-Pro. First, we compared the effects of MIMO-Pro and MIMO-UNet Cho et al. (2021). All parameters were set according to the original MIMO-UNet Cho et al. (2021). The results are shown in the Table 3. GAMD improves PSNR by 6.93 dB. We then compared the network without adding blur heat maps with MIMO-Unet Cho et al. (2021) and found that the PSNR increased by 1.73 dB. Experiments show that GAMD mainly improves the performance of the deblurring network by inputting additional blur information.

| Model | PSNR | SSIM |

|---|---|---|

| MIMO-UNet | 24.39 | 0.7360 |

| MIMO-Pro | 31.32 | 0.9138 |

| GAMD (Only Net) | 26.12 | 0.7830 |

| Model | PSNR | SSIM |

|---|---|---|

| SRN | 22.54 | 0.6604 |

| DeblurGAN-v2 | 21.45 | 0.6339 |

| MIMO-UNet | 24.39 | 0.7360 |

| GAMD (Our) | 32.48 | 0.9014 |

Performance Comparison

We evaluated the GAMD against state-of-the-art deblurring networks, including SRN Tao et al. (2018), DeblurGAN-v2 Kupyn et al. (2018), and MIMO-UNet Cho et al. (2021). GAMD exhibited superior performance, achieving a PSNR of 32.48 and an SSIM of 0.9014, significantly surpassing existing deblurring methods (Table 4). Among traditional networks, MIMO-UNet Cho et al. (2021) showcased the best performance. Fig. 8 provides visual examples of the IMU-blur test dataset, comparing the deblurred images produced by GAMD (our method) against MIMO-UNet Cho et al. (2021), DeblurGAN-v2 Kupyn et al. (2018), and SRN Tao et al. (2018). The presented results underscore the efficacy of our approach, particularly in mitigating the challenges of motion blur posed by significant camera shakes.

Limitations

The proposed deblurring network may still struggle with significant camera roll and offset angles. For instance, Fig. 9 presents some failure cases with our GAMD. We can clearly observe the blurry effects, even after deblurring with our proposed GAMD. Nonetheless, GAMD and IMU-blur stand out for their superior deblurring performance, making them a contribution to the field.

Conclusion

This paper presents a novel approach for motion blur (caused by camera shake) synthesis and ultimately creates the blur dataset. At the same time, this paper also proposes a deblurring network, GAMD, which integrates blur trajectories, uses blur heat maps to collect blur trajectory information and guides image deblurring, enhancing network performance. Comparative experimental evaluation highlights the superiority of our approach over existing methods.

References

- Chen et al. [2008] Jia Chen, Lu Yuan, Chi-Keung Tang, and Long Quan. Robust dual motion deblurring. In IEEE Conference on Computer Vision and Pattern Recognition, pages 1–8. IEEE, 2008.

- Cho and Lee [2009] Sunghyun Cho and Seungyong Lee. Fast motion deblurring. In ACM SIGGRAPH, pages 1–8. 2009.

- Cho et al. [2021] Sung-Jin Cho, Seo-Won Ji, Jun-Pyo Hong, Seung-Won Jung, and Sung-Jea Ko. Rethinking coarse-to-fine approach in single image deblurring. In IEEE International Conference on Computer Vision, pages 4641–4650, 2021.

- Fergus et al. [2006] Rob Fergus, Barun Singh, Aaron Hertzmann, Sam T Roweis, and William T Freeman. Removing camera shake from a single photograph. In Acm Siggraph, pages 787–794. 2006.

- Ghiasi et al. [2019] Golnaz Ghiasi, Tsung-Yi Lin, and Quoc V Le. Nas-fpn: Learning scalable feature pyramid architecture for object detection. In IEEE Conference on Computer Vision and Pattern Recognition, pages 7036–7045, 2019.

- Kirillov et al. [2019] Alexander Kirillov, Ross Girshick, Kaiming He, and Piotr Dollár. Panoptic feature pyramid networks. In IEEE Conference on Computer Vision and Pattern Recognition, pages 6399–6408, 2019.

- Koh et al. [2021] Jaihyun Koh, Jangho Lee, and Sungroh Yoon. Single-image deblurring with neural networks: A comparative survey. Computer Vision and Image Understanding, 203:103134, 2021.

- Koo et al. [2022] Ja Hyung Koo, Se Woon Cho, Na Rae Baek, Young Won Lee, and Kang Ryoung Park. A survey on face and body based human recognition robust to image blurring and low illumination. Mathematics, 10(9):1522, 2022.

- Kupyn et al. [2018] Orest Kupyn, Volodymyr Budzan, Mykola Mykhailych, Dmytro Mishkin, and Jiří Matas. Deblurgan: Blind motion deblurring using conditional adversarial networks. In IEEE Conference on Computer Vision and Pattern Recognition, pages 8183–8192, 2018.

- Kupyn et al. [2019] Orest Kupyn, Tetiana Martyniuk, Junru Wu, and Zhangyang Wang. Deblurgan-v2: Deblurring (orders-of-magnitude) faster and better. In IEEE International Conference on Computer Vision, pages 8878–8887, 2019.

- Levin et al. [2009] Anat Levin, Yair Weiss, Fredo Durand, and William T Freeman. Understanding and evaluating blind deconvolution algorithms. In IEEE Conference on Computer Vision and Pattern Recognition, pages 1964–1971. IEEE, 2009.

- Mustaniemi et al. [2019] Janne Mustaniemi, Juho Kannala, Simo Särkkä, Jiri Matas, and Janne Heikkila. Gyroscope-aided motion deblurring with deep networks. In IEEE Winter Conference on Applications of Computer Vision, pages 1914–1922. IEEE, 2019.

- Nah et al. [2017] Seungjun Nah, Tae Hyun Kim, and Kyoung Mu Lee. Deep multi-scale convolutional neural network for dynamic scene deblurring. In IEEE Conference on Computer Vision and Pattern Recognition, pages 3883–3891, 2017.

- Nayar and Ben-Ezra [2004] Shree K Nayar and Moshe Ben-Ezra. Motion-based motion deblurring. IEEE Transactions on Pattern Analysis and Machine Intelligence, 26(6):689–698, 2004.

- Quattoni and Torralba [2009] Ariadna Quattoni and Antonio Torralba. Recognizing indoor scenes. In IEEE Conference on Computer Vision and Pattern Recognition, pages 413–420. IEEE, 2009.

- Rim et al. [2020] Jaesung Rim, Haeyun Lee, Jucheol Won, and Sunghyun Cho. Real-world blur dataset for learning and benchmarking deblurring algorithms. In European Conference on Computer Vision, pages 184–201. Springer, 2020.

- Sun et al. [2013] Libin Sun, Sunghyun Cho, Jue Wang, and James Hays. Edge-based blur kernel estimation using patch priors. In IEEE International Conference on Computational Photography, pages 1–8. IEEE, 2013.

- Tao et al. [2018] Xin Tao, Hongyun Gao, Xiaoyong Shen, Jue Wang, and Jiaya Jia. Scale-recurrent network for deep image deblurring. In IEEE Conference on Computer Vision and Pattern Recognition, pages 8174–8182, 2018.

- Zhang et al. [2020] Kaihao Zhang, Wenhan Luo, Yiran Zhong, Lin Ma, Bjorn Stenger, Wei Liu, and Hongdong Li. Deblurring by realistic blurring. In IEEE Conference on Computer Vision and Pattern Recognition, pages 2737–2746, 2020.

- Zhang et al. [2022] Kaihao Zhang, Wenqi Ren, Wenhan Luo, Wei-Sheng Lai, Björn Stenger, Ming-Hsuan Yang, and Hongdong Li. Deep image deblurring: A survey. International Journal of Computer Vision, 130(9):2103–2130, 2022.

- Zhou et al. [2017] Bolei Zhou, Agata Lapedriza, Aditya Khosla, Aude Oliva, and Antonio Torralba. Places: A 10 million image database for scene recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 40(6):1452–1464, 2017.