XX \jnumXX \paper8 \jmonthMonth \jtitlePublication Title

Group Benefits Instances Selection for Data Purification

Abstract

Manually annotating datasets for training deep models is very labor-intensive and time-consuming. To overcome such inferiority, directly leveraging web images to conduct training data becomes a natural choice. Nevertheless, the presence of label noise in web data usually degrades the model performance. Existing methods for combating label noise are typically designed and tested on synthetic noisy datasets. However, they tend to fail to achieve satisfying results on real-world noisy datasets. To this end, we propose a method named GRIP to alleviate the noisy label problem for both synthetic and real-world datasets. Specifically, GRIP utilizes a group regularization strategy that estimates class soft labels to improve noise-robustness. Soft label supervision reduces overfitting on noisy labels and learns inter-class similarities to benefit classification. Furthermore, an instance purification operation globally identifies noisy labels by measuring the difference between each training sample and its class soft label. Through operations at both group and instance levels, our approach integrates the advantages of noise-robust and noise-cleaning methods and remarkably alleviates the performance degradation caused by noisy labels. Comprehensive experimental results on synthetic and real-world datasets demonstrate the superiority of GRIP over the existing state-of-the-art methods. The data and source code of this work have been made available at: https://github.com/NUST-Machine-Intelligence-Laboratory/GRIP.

1 Introduction

Recently, deep neural networks (DNNs) have achieved satisfying performance in various image recognition challenges1, 2, 3, 4, 5, 6, 7, 8, e.g. ImageNet9 and COCO10. Particularly, the impressive results typically rely on the availability of large-scale and well-labeled datasets. Unfortunately, high-quality and reliable annotations can be laborious and expensive11, 12, 13, even not always available in some domains such as fine-grained visual recognition due to the requirement of expert knowledge14. To address the expensive-annotation problem, a promising solution is straightforwardly utilizing data from web or multimedia to conduct large scale datasets15, 16, 17, 18, 19. For example, WebFG-49620 leverages free web images as training data with keywords as labels, while YFCC100M21 and Youtube-8m22 contain millions of media objects. Nevertheless, annotations from web or multimedia only provide unreliable supervision and inevitably contain label noise. According to the memorization effect23, 24, DNNs would perfectly fit the training set with noisy labels and consequently degrade generalization.

To tackle this issue, researchers have proposed a number of methods for combating noisy labels25, 26, 27, 28, 29, 30, 31, 32, 33. An active research direction is to investigate noise-robust methods which aim to reduce contributions of false-labeled samples in model optimization, e.g. robust loss functions34, 35, 36, early-learning37, label smoothing (LS)38, and online label smoothing (OLS) regularization39. This type of method does not involve specific designs for noisy labels and therefore becomes flexible in practical application. However, since noisy labels are not explicitly coped with, noise-robust approaches still inevitably suffer from the performance drop caused by label noise.

Another intuitive research direction is to perform noise-cleaning which aims to correct or discard mislabeled samples to purify datasets. For example, label correction methods aim to revise false labels through noise transition matrix estimation40 or label re-assignment41. Sample selection works42, 43, 44, 45, 46, 47 typically select instances in manual-defined criteria, e.g. the small-loss principle48 that regards images with small losses as clean data. Recently, some hybrid researches49, 50 combine label correction and sample selection methods for more efficient noise-cleaning. However, these approaches tend to be designed and tested on synthetic noisy datasets such as CIFAR51, and typically do not take the real-world scenario into consideration, Consequently, they tend to be less practical on real-world noisy datasets.

To this end, we propose a simple yet effective approach termed GRIP (Group Regularization and Instance Purification) to boost instance purification via group regularization. Specifically, the proposed group regularization strategy estimates the soft label of each class to provide additional supervision. It guides the network to learn inter-class similarities and improves robustness by preventing overfitting noisy labels. Resorting to estimated class soft labels, an instance purification strategy is applied to specifically clean noisy labels from the entire dataset in a global manner. It measures the similarity between the predicted probability distribution of each sample and its estimated class soft label to identify noisy and revisable labels. Subsequently, noisy samples are discarded and revisable instances are re-labeled by model prediction then utilized for training. How group regularization benefits instance purification is visualized in Fig. 1. Owing to the regularization strategy, clean samples and corresponding class centers are encouraged to be closer and therefore it is easier to perform noise identification.

Owing to operations from both group and instance aspects, GRIP integrates the advantages of noise-robust and noise-cleaning methods for tackling noisy labels. To sum up, our contributions are as follows:

-

1.

We propose a group regularization strategy to estimate class soft labels for benefiting instance purification. It also improves the robustness against noisy labels from the category aspect and greatly boosts the model generalization.

-

2.

We propose an instance purification strategy resorting to estimated class soft labels. It globally identifies noisy and revisable labels from the entire dataset. Experimental results demonstrate that it surpasses the widely-used small-loss principle in noise identification on real-world noisy datasets.

-

3.

Our approach integrates the advantages of noise-robust and noise-cleaning methods. Comprehensive experimental results demonstrate that GRIP significantly outperforms state-of-the-art methods on both synthetic and real-world noisy datasets.

2 Related Works

2.1 Noise-Robust

Noise-robust learning approaches directly train models using noisy labeled data. They aim to become insensitive to the presence of noisy labels52. One typical branch is to develop robust loss functions to overcome the problem that cross-entropy loss is sensitive to samples with corrupted labels53. For example, Wang et al. proposed to leverage a noise-robust reverse cross-entropy34 to symmetrically boost cross-entropy loss. Ma et al. 35 proposed a framework to build new loss functions by combining active and passive robust loss functions. Zhang et al. 36 proposed a generalization of mean absolute error and cross-entropy loss. However, since these robust loss functions typically aim to deal with noisy labels through under-learning, they inevitably underfit clean samples. Another branch is to employ regularization to improve robustness. For example, LS38 built soft labels by combining a one-hot and uniform distribution to provide regularization. OLS39 further improved LS by replacing the uniform distribution with a more reasonable probability on non-target categories. However, these approaches do not explicitly tackle noisy labels, leading to a suboptimal performance.

2.2 Noise-Cleaning

Noise-cleaning methods aim to tackle noisy labels by discarding or relabeling them. An intuitive type of research is label correction that corrects noisy labels. For example, several works40 tried to correct noisy labels by estimating the noise transition matrix. However, it is difficult to estimate the accurate noise transition matrix. Furthermore, these label correction methods are unable to deal with out-of-distribution (OOD) instances28 whose true labels do not belong to the training set. This drawback restricts their application in the real-world scenario. Another typical idea of combating noisy labels is to perform sample selection that identifies and removes corrupted data through proper criteria46, 47, 54. For example, Co-teaching43 utilized the small-loss principle and let peer networks select noisy samples for each other. JoCoR44 claimed that different models tend to disagree on false labeled samples and leveraged this principle for noise identification. Nevertheless, the above approaches typically performed sample selection within each mini-batch with a fixed drop rate. Jo-SRC50 and Zhang et al. 55 claimed that noise ratios in different mini-batches inevitably fluctuate in the training process. To overcome this problem, they replaced the widely-used mini-batch noise identifying strategy with a global one for the purpose of stabilizing the selection results. A growing number of methods such as SELFIE49 and Co-learning56 combined label correction and sample selection to further boost the performance. Some state-of-the-art noise-cleaning approaches also adopted noise-robust techniques. For example, Jo-SRC50 and DivideMix41 leveraged LS trick and mix-up57, respectively.

3 The Proposed Method

3.1 Preliminary

Generally, we train a DNN on a noisy dataset with classes, where and denote the -th training sample and the corresponding label, respectively. We define the label distribution of the one-hot label as and . The DNN model takes each sample as input and predicts a probability for each class using the softmax function. The cross-entropy training loss between the predicted probability distributions of training images and their corresponding label is written as

| (1) |

Since commonly-used cross-entropy loss is proved to be sensitive to noisy labels53 and DNNs can perfectly memorize noisy samples23, 24, deep models tend to suffer from label noise when trained using the noisy dataset with .

3.2 Group Regularization

Our learning framework is illustrated in Fig. 2. Motivated by OLS39, we adopt a noise-robust strategy through regularizing the training process at the category level. We define as the collection of soft labels of each class for training epochs. For each epoch , is a matrix, whose columns correspond to soft labels and are initialized to zero. Given an input image , if the classification is in accord with its label , the soft label of the target class will be updated using the predicted probability through

| (2) |

where denotes the indexes of and indicates the number of correctly predicted samples with label . According to Eq. (2), each soft label is the average predicted probability of correctly classified samples belonging to one class.

To stabilize the estimated class soft labels , we further apply an exponential moving average (EMA) strategy through

| (3) |

where denotes the momentum that controls the weight on the previous result. EMA smoothes out by alleviating the issue that probabilities predicted by the model can be unstable in the training process. After obtaining the soft label, we utilize to supervise the training process in each epoch . Then the soft training loss can be formulated as

| (4) |

Similar to LS, assigns weights to non-target categories. Consequently, it reduces overfitting and improves the robustness against noisy labels. Moreover, it encourages DNNS to learn inter-class similarities and benefits challenging image recognition tasks, e.g. fine-grained classification39.

However, we find that tends to approach the one-hot label distribution where has a large value while other categories only share tiny weights as shown in Fig. 3 (a). This behavior may derive from the strong fitting ability of cross-entropy loss. To address this issue, we utilize the maximum entropy principle58 as a strong regularization. It forces the model prediction to be less confident. The maximum entropy loss can be formulated as

| (5) | ||||

Since aims to increase the entropy of prediction , it leads to a more reasonable soft label as illustrated in Fig. 3 (b). More importantly, since makes predictions less confident, it further reduces the risk of overfitting noisy labels and guides DNNs to become more noise-robust.

Finally, both the hard and soft labels are leveraged as supervision with the maximum-entropy principle applied as regularization. The total training loss of our group regularization strategy can be represented by

| (6) |

where and are weights to balance contributions of , , and . Owing to and , our group regularization strategy guides DNNs to become less sensitive to noisy labels and boosts their robustness. Furthermore, class soft labels generated by group regularization strategy can be leveraged for instance purification.

3.3 Instance Purification

After obtaining class soft labels, we can perform data purification from the instance aspect. According to the memorization effect23, 24, DNNs learn the clean and easy pattern in the initial epochs. Accordingly, clean instances tend to have more contributions to the estimation of class soft labels at the early stage. Moreover, our group regularization strategy reduces the contribution of noisy labels in model optimization by significantly improving noise-robustness. Therefore, generated class soft labels should be closer to predictions of clean samples than that of noisy ones. Based on this phenomenon, we can build a noise identification criterion.

Inspired by Jo-SRC50, we adopt the Jensen-Shannon (JS) divergence between each probability and its class soft label as our noise identification criterion through

| (7) | ||||

where is the Kullback-Leibler (KL) divergence function and denotes the simplified form of . In this formula, is a measure of the difference between predicted probability and corresponding class soft label , where a larger value indicates a more significant difference. Moreover, is a symmetric measure and bounded in when using a base logarithm59.

Since clean samples tend to be closer to their class soft labels, they should have smaller values of than noisy instances. Accordingly, we can utilize a threshold to separate clean instances from noisy ones. We define the threshold for each epoch as

| (8) |

where indicates the collection of for the entire dataset, and denote computing average value and standard deviation respectively, and is a hyperparameter. Specifically, for each training batch , we divide it into a clean set and a noisy set after a warm-up period by

| (9) | ||||

Once noisy samples are identified, we further perform label re-assignment on the noisy samples to leverage revisable ones. Specifically, we first calculate the JS divergence between the probability of each instance in and soft label of its predicted class through

| (10) |

Then, since is bounded in , we can utilize a hard threshold to select revisable samples in through

| (11) | ||||

Eq. (11) indicates that we regard a noisy sample as a revisable one when its probability is close enough to the soft label of its predicted category . Its predicted class is assigned as pseudo label.

Finally, we jointly utilize clean and relabeled samples for training by Eq. (6). Furthermore, we apply in Eq. (5) on discarded images to encourage the model to generate even probabilities for them. This design guides predicted probabilities of noisy samples to become more different from estimated class soft labels and therefore improves the reliability of noise-cleaning in the following epochs. Details of our proposed GRIP are demonstrated in Algorithm 1.

3.4 Discussion

3.4.1 Comparison with OLS

Our group regularization strategy is motivated by OLS39. Compared with OLS, our approach applies maximum entropy loss as regularization to smooth the predicted probability. It addresses the problem that the estimated soft label tends to approach the one-hot distribution. Furthermore, EMA utilizes previous results to stabilize the process of soft label estimation. Consequently, our group regularization strategy becomes more practical and efficient than OLS.

3.4.2 Dynamic and Fixed Thresholds

Notably, in the initial stages of training, a significant disparity between the predicted distribution and soft labels is observed, yielding generally large values of d. Conversely, as training progresses, there is a trend towards smaller values of d. The use of a fixed threshold would result in the model initially selecting too few clean samples, leading to a low recall rate. Furthermore, in later stages, there arises the issue of selecting too many samples, encompassing a substantial amount of noisy false positives and resulting in a low precision rate. To address these challenges, we employ the mean and standard deviation of the distance to formulate the threshold for sample selection criteria, thereby enhancing the flexibility and adaptability of our approach. This selection strategy, widely embraced by numerous classical algorithms60, attests to its effectiveness and generalizability.

As shown in Fig. 4 (a) and (b), decreases as the DNN gradually fits the dataset in the training process. Accordingly, we utilize a dynamic threshold , whose value depends on the distribution of . The mean value, which roughly separates "low" and "high", can be utilized as a rough threshold to identify clean and noisy labels. To further adjust the threshold, we leverage the standard deviation controlled by hyperparameter as the offset to the mean. Compared with a fixed offset, the standard deviation can automatically adapt to the distribution of . It will be small if the data distribution is dense and become large otherwise. Briefly, the threshold is jointly controlled by mean as well as standard deviation. It can adapt to the changing and select samples with lower for training. Similar to , also gradually decreases in the training process. Contrary to threshold , we fix for the label re-assignment. It guides the number of relabeled samples to grow from a small value as the training proceeds. As the model becomes more robust, more noisy samples can be relabeled for training. This progressive re-assignment strategy controls the number of relabeled samples and stabilizes the training process.

3.4.3 Selection Criterion

Since is obtained according to the distribution of on the entire dataset, the selection operation is performed in a global view. This global selection strategy can alleviate the problem that noise ratios in different mini-batches inevitably fluctuate50, 55. A mini-batch is a sampling of the entire dataset. If the batch size is large enough (e.g. >100), the noise ratio fluctuation problem may not be severe. However, on the contrary, if the batch size has to be small due to the large size of the model or input image, this problem can harm the noise-cleaning performance. We will illustrate the advantage of the global selection strategy over a mini-batch one in experiments.

Compared with the popular small-loss principle, our selection criterion takes the advantage of soft labels in noise identification. The soft label encodes more information than training losses and therefore tends to be more reliable in selecting samples.

4 Experiments on Synthetic Noisy Datasets

In order to demonstrate the superiority and applicability of our approach, we conduct experiments on both synthetic and real-world datasets. In this experiment, we first evaluate our approach on synthetic noisy datasets for coarse-grained classification to validate its robustness under different types of noisy labels and varying noise ratios.

| Noise Ratio | Backbone | Decoupling42 | Co-teaching43 | Co-teaching+61 | JoCoR44 | SELC62 | GRIP |

| 69.18 0.52 | 69.32 0.40 | 78.23 0.27 | 78.71 0.34 | 85.73 0.19 | 87.08 0.07 | 88.83 0.09 | |

| 42.71 0.42 | 40.22 0.30 | 71.30 0.13 | 57.05 0.54 | 79.41 0.25 | 81.66 0.11 | 84.59 0.07 | |

| 28.67 0.47 | 26.76 0.35 | 34.53 0.28 | 29.05 0.21 | 47.74 0.25 | 54.58 0.15 | 59.32 0.07 | |

| 69.43 0.33 | 68.72 0.30 | 73.78 0.22 | 68.84 0.20 | 76.36 0.49 | 78.90 0.12 | 80.82 0.28 |

| Noise Ratio | Backbone | Decoupling42 | Co-teaching43 | Co-teaching+61 | JoCoR44 | SELC62 | GRIP |

| 35.14 0.44 | 33.10 0.12 | 43.73 0.16 | 49.27 0.03 | 53.01 0.04 | 55.44 0.09 | 61.40 0.13 | |

| 16.97 0.40 | 15.25 0.20 | 34.96 0.50 | 40.04 0.70 | 43.49 0.46 | 46.73 0.08 | 51.27 0.15 | |

| 9.67 0.34 | 8.69 0.09 | 23.62 0.38 | 27.08 0.51 | 31.92 0.27 | 34.51 0.15 | 39.67 0.15 | |

| 27.29 0.25 | 26.11 0.39 | 28.35 0.25 | 33.62 0.39 | 32.70 0.35 | 45.19 0.12 | 53.48 0.11 |

4.1 Datasets and Evaluation Metric

We follow the common experimental settings as in recent works43, 44 and manually corrupt CIFAR-10 and CIFAR-10051 to create synthetic noisy datasets. Specifically, we adopt symmetric and asymmetric noisy labels with noise transition matrices with a transition matrix .

We generate symmetric noisy labels by uniformly flipping a given ratio of training labels to other classes. Asymmetric label noise simulates the fine-grained recognition task with noisy labels, where very similar classes may confuse annotators. In this setting, we only flip a specific set of classes, e.g. flipping CAT to DOG in CIFAR-10. The definition of transition matrix is as follows:

where and denote the number of the class and noise ratio, respectively. For evaluating the model classification performance, we take Average Classification Accuracy (ACA) as the evaluation metric.

4.2 Implementation Details

Following JoCoR44, we adopt a 7-layer CNN network architecture and leverage Adam optimizer with momentum set to for training. We train the network for epochs with batch size set to . The learning rate is initialized to and starts to linearly decrease to after epochs. As for hyper-parameters, we set momentum for EMA and weight , to , , , respectively. Warm-up epoch and hyper-parameter are empirically set to and , respectively. As for , we adopt a linear decrease from to in epochs to smooth the discarding process, which is similar to the increasing drop rate trick in Co-teaching43. All experiments are conducted on one NVIDIA Tesla V100 GPU.

4.3 Baseline Methods

To evaluate our approach on synthetic datasets, we compare our approach with the following state-of-the-art algorithms: Decoupling42, Co-teaching43, Co-teaching+61, JoCoR44, SELC62 and the backbone network straightforwardly trained on noisy datasets. As the work of JoCoR44 has reproduced most other baselines42, 43, 61, we directly copy results from it for simplicity. For SELC62, we re-execute their code and report the experimental results. Our method is trained using the same experimental settings as the above methods, and therefore the comparisons are fair.

4.4 Experimental Results and Analysis

4.4.1 Results on CIFAR-10

We demonstrate the test accuracy of each method on CIFAR-10 in Table 1. As we can see from Table 1, GRIP consistently outperforms other approaches in all three noisy settings. Specifically, GRIP surpasses the best results of baselines by , , and in Symmetry-, Symmetry-, Symmetry-, and Asymmetry- cases, respectively. From experimental results, we can conclude that our approach is effective on synthetic noisy datasets for simple coarse-grained classification tasks.

4.4.2 Results on CIFAR-100

The test accuracy of each approach on CIFAR-100 is shown in Table 2. By observing Table 2, we can find that GRIP achieves the highest test accuracies among all methods. Specifically, the improvements over JoCoR become , , and in Symmetry-, Symmetry-, Symmetry-, and Asymmetry- cases, respectively. Our great superiority in the Asymmetry- case deserves attention. Since asymmetric noise is a simulation of the real-world label noise41, our advantage in Asymmetry- cases indicates that GRIP is promising on real-world noisy datasets.

Moreover, by comparing the results on CIFAR-10 and CIFAR-100, we can find that GRIP shows more significant improvements over baselines as the classification task becomes more challenging (from 10 to 100 classes). This experimental result demonstrates the superiority of our method in more complicated classification tasks with noisy labels.

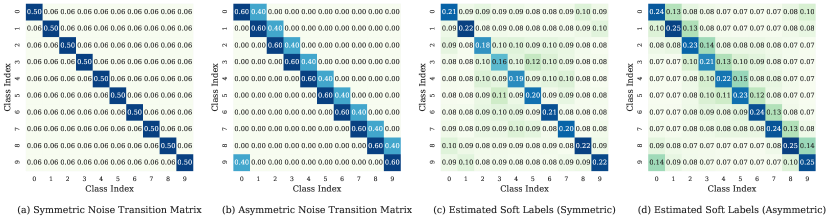

4.5 Noise Matrix and Soft Labels

In this subsection, we visualize the noise transition matrix and soft labels estimated by GRIP to illustrate the noise-robustness of our approach. To be detailed, we investigate the Symmetry- and Asymmetry- cases and demonstrate soft labels estimated after the warm-up period in Fig. 5.

From Fig. 5, we can observe that in the Symmetry- case, all non-target classes of soft labels share similar weights (Fig. 5 (a) and (c)), and target categories have lower weights in soft labels than in noise transition matrices. This phenomenon indicates that our approach becomes less confident on the noisy datasets and shows robustness against label noise.

While in the Asymmetry- case, flipped noisy classes have much lower weights in soft labels than in noise transition matrices (Fig. 5 (b) and (d)), which indicates that our approach is less likely to overfit noisy labels. The great noise-robustness results from the maximum entropy regularization, which guides the model to become less confident on potential noisy labels.

| Dataset | Web-bird | Web-aircraft | Web-car |

| Class Number | 200 | 100 | 196 |

| Training Images | 18388 | 13503 | 21448 |

| Test Images | 5794 | 3333 | 8041 |

| Label Accuracy | 65% | 73% | 67% |

| Method | Publication | Noise-cleaning | Noise-robust | Datasets | ||

| Web-bird | Web-aircraft | Web-car | ||||

| Backbone | - | 73.30 | 78.71 | 80.86 | ||

| Decoupling42 | NeurIPS 2017 | ✓ | 68.81 | 73.21 | 78.71 | |

| Co-teaching43 | NeurIPS 2018 | ✓ | 75.51 | 79.51 | 83.42 | |

| Co-teaching+61 | ICML 2019 | ✓ | 70.12 | 74.80 | 76.77 | |

| Sub-center63 | ECCV 2020 | ✓ | 75.77 | 79.80 | 82.59 | |

| Self-Adaptive64 | NeurIPS 2020 | ✓ | 78.49 | 77.92 | 78.19 | |

| JoCoR44 | CVPR 2020 | ✓ | 79.19 | 80.11 | 85.10 | |

| DivideMix41 | ICLR 2020 | ✓ | ✓ | 74.40 | 82.48 | 84.27 |

| PLC65 | ICLR 2021 | ✓ | 76.22 | 79.24 | 81.87 | |

| Peer-learning20 | ICCV 2021 | ✓ | 75.37 | 78.64 | 82.48 | |

| OLS39 | TIP 2021 | ✓ | 79.11 | 81.27 | 86.58 | |

| Jo-SRC50 | CVPR 2021 | ✓ | 81.52 | 82.73 | 88.13 | |

| SELC62 | IJCAI 2022 | ✓ | 77.25 | 80.26 | 80.89 | |

| Co-LDL66 | TMM 2022 | ✓ | 80.11 | 81.97 | 86.95 | |

| AGCE67 | TPAMI 2023 | ✓ | 75.54 | 82.21 | 82.76 | |

| CMW-Net-SL68 | TPAMI 2023 | ✓ | 77.41 | 76.48 | 79.70 | |

| GRIP(Ours) | - | ✓ | ✓ | 82.53 | 83.29 | 89.49 |

5 Experiments on Real-world Noisy Datasets

In this experiment, we further evaluate our approach on more challenging web noisy datasets to demonstrate its applicability under real-world scenario.

5.1 Datasets and Evaluation Metric

We evaluate our approach on WebFG-49620, which is designed for research on webly supervised fine-grained classification tasks. It contains three real-world sub-datasets, Web-bird, Web-aircraft, and Web-car. They utilize the fine-grained category labels of benchmark datasets CUB200-201169, FGVC-aircraft70, and Cars-19671 as target categories to crawl web images from Bing Image Search Engine. Compared with benchmark datasets, they have a larger number of training samples (18388, 13503, and 21448, respectively) but potential noisy labels. The test sets are directly taken from three benchmark datasets (CUB200-2011, FGVC-aircraft, and Stanford Cars). Details of three sub-datasets are as follows and summarized in Table 3.

Web-bird consists of 200 different subcategories of birds. Its web training set contains 18388 images and the test set has 5794 clean samples. The training label accuracy estimated by random sampling is about 65%.

Web-aircraft covers 100 variants of aircraft. The number of web training samples and clean test images are 13503 and 3333, respectively. Its estimated web label accuracy is approximately 73%.

Web-car contains 196 types of vehicles. Its training set consists of 21448 web samples with a roughly estimated label accuracy of 67%. The test set contains 8041 manually labeled images.

We follow the evaluation metric in section 4.1 and utilize ACA to evaluate model performance.

5.2 Implementation Details

We adopt a ImageNet pre-trained ResNet-5072 model as our backbone network and resize input images to with random horizontal flip as weak data augmentation. The network is trained for epochs with batch size set to . The learning rate is initialized to and decreases in a cosine annealing manner73. The momentum and weight decay of stochastic gradient descent (SGD)74 optimizer are set to and , respectively.

As for hyper-parameters, we set momentum for EMA and weight , to . Warm-up epoch is empirically set to . We set to on Web-bird and adopt a linear decreasing on Web-aircraft and Web-car. Specifically, linearly decreases from to and in epochs on Web-aircraft and Web-car, respectively, which is motivated by the increasing drop rate trick43. Threshold for relabeling is set to on Web-bird, Web-aircraft, and Web-car, respectively. Our experiments are all performed on one NVIDIA Tesla V100 GPU.

5.3 Baseline Methods

On real-world datasets, our baselines contain the following state-of-the-art methods: Decoupling42, Co-teaching43, Sub-center63, Co-teaching+61, Self-Adaptive64, JoCoR44, DivideMix41, Jo-SRC39, OLS39, PLC65, Peer-learning20, SELC62, Co-LDL66, AGCE67 and CMW-Net-SL68. We reproduce most of the above baselines42, 43, 63, 61, 64, 44, 41, 39, 65, 20, 62, 67 using the same backbone network for fair comparisons. In addition, we also train a ResNet-50 network using the cross-entropy loss function for comparison (Backbone).

5.4 Experimental Results and Analysis

We demonstrate ACA performances of baseline approaches and GRIP on WebFG-496 in Table 4. From Table 4, we can observe that GRIP significantly outperforms baselines on all three datasets. Specifically, it surpasses the best results of baselines by , , and on Web-bird, Web-aircraft, Web-car, respectively. For clearer illustration, we also present test accuracy trending of GRIP and compare it with some representative noise-cleaning baselines in Fig. 6. As illustrated in Fig. 6, GRIP shows higher accuracies and faster training speeds than other noise-cleaning approaches42, 44 on all benchmark datasets. This superiority owes to our group regularization strategy, which significantly improves the noise-robustness. In addition, compared with noise-robust approaches63, 39, 62, 67, 68 in Table 4, our approach achieves higher test accuracies by leveraging the instance purification strategy to specifically tackling noisy labels. The significant improvements over baselines demonstrate the effectiveness of simultaneously leveraging both noise-robust and noise-cleaning strategies for combating noisy labels.

Particularly, GRIP shows superior performance than DivideMix41, which utilizes noise-cleaning and noise-robust algorithms simultaneously. DivideMix has large gaps to GRIP on Web-bird () and Web-car (). The reason may lie in the Gaussian Mixture Model (GMM)75 used for dividing training samples in DivideMix. It can be difficult to fit a GMM on the real-world noisy data distribution. If GMM fails, the performance will inevitably decline.

Furthermore, we can observe from Table 4 that some baselines (Decoupling42, Co-teaching+61, Self-Adaptive64, PLC65, CMW-Net-SL68) only show slight improvements or even inferior performances to Backbone. The reason can be that they are designed and tested on coarse-grained synthetic noisy datasets. As a result, they tend to be less practical for fine-grained tasks in a real-world scenario. Compared with them, our approach is more effective in practical application.

6 Ablation Studies

In order to further analyze our approach, we conduct experiments on the real-world dataset Web-bird using ResNet-18 by default.

6.1 Parameter Analysis

In this experiment, we investigate the parameter sensitivities of weights and for loss functions, momentum for EMA, and for noise-cleaning, and warm-up epoch . Although our approach seems to have many parameters, we will show that half of them are robust and easy to adjust. The experimental results are shown in Fig. 7.

We set to and changes from to for investigation. From Fig. 7 (a), we can observe that the performance first steadily increases to the optimal value as rises. This phenomenon indicates that boosts the robustness with a proper . However, if further increases, the supervision of is weakened, which results in a performance decline. To achieve the best performance, we set to on all datasets, which is a balanced weight between and . In addition, we can also see that is robust in , which indicates a relatively balanced can work well.

Similar to the analysis on , we set to and changes from to . We can observe from Fig. 7 (b) that the performance climbs as rises from to , then slightly decreases when further increases. Supported by this result, we simply set to on all datasets. We can also find that is robust in .

We analyze the effect of in Fig. 7 (c). It can be observed from Fig. 7 (c) that unless is too large (over 0.8), applying EMA can boost the performance over the baseline (=). Furthermore, EMA can work well and achieve close test accuracies in .

We do not perform label re-assignment and only analyze the effect of on sample selection in Fig. 7 (d). When increases from to , the performance steadily climbs because more training samples are utilized. However, when is larger than , the performance declines with some fluctuations. The reason is that fewer noisy images are discarded and thus noise-cleaning becomes less effective.

The analysis of is illustrated in Fig. 7 (e). From Fig. 7 (e), we can see that performance climbs as increases from to . Then it drops fast when becomes larger (). The reason can be that too many noisy samples are relabeled and reused. Some of them may still have false labels or even be out-of-distribution (OOD). From this result, we believe that a small value of is safe and can boost performance.

The analysis of is illustrated in Fig. 7 (f). We can observe from Fig. 7 (f) that if the warm-up period is too short (less than ), the model cannot learn reliable soft labels for noise identification, which results in an unsatisfying performance. A proper lies in . If further increases, the model will be affected by noisy labels in the warm-up period and noise-cleaning becomes less useful, resulting in declining test accuracies.

From the experimental results in Fig. 7, we can conclude that weights , , and momentum are robust and easy to adjust. Parameters and for noise-cleaning should be changed on each dataset because they are concerned with noise ratios. As for , a relatively small value is recommended, e.g. in .

6.2 Analysis on Dataset Sizes

In this experiment, we investigate the applicability of GRIP on small datasets by changing the number of web images used for each category on Web-bird20. To be detailed, we construct sub-datasets from Web-bird by randomly selecting to samples for each class. Then we compare the performance of the backbone network, our group regularization strategy (GR), and our proposed GRIP on each sub-dataset in Fig. 8. It can be observed from Fig. 8 that our group regularization strategy shows significant and consistent improvements across different dataset sizes over the baseline. After applying the instance purification strategy, GRIP further boosts the performance on each sub-dataset. It shows remarkable noise-robustness even when the dataset is rather small, e.g. images per class. From the experimental results, we can conclude that our approach is insensitive to the dataset size.

We can also see from Fig. 8 that the ACA performance climbs steadily when more training samples are utilized. Therefore, leveraging free web images is a promising research direction as it allows boosting model performance and robustness through enlarging datasets.

| Backbone | Method | Performance | Improvement |

| VGG-16 | Baseline | 66.34 | 9.53 |

| Ours | 75.87 | ||

| ResNet-18 | Baseline | 71.10 | 7.81 |

| Ours | 78.91 | ||

| ResNet-50 | Baseline | 73.30 | 9.23 |

| Ours | 82.53 |

6.3 Applicability Across Different Backbones

We test our approach using different backbone networks on Web-bird to analyze the applicability in Table 5. We can observe from Table 5 that our approach boosts the performance by , , and on VGG-1676, ResNet-18, and ResNet-50, respectively. The experimental results indicate that our approach is robust and shows remarkable improvements across different CNN architectures.

6.4 Contribution of Each Component

In this subsection, we gradually add components in our method to the baseline model and present the contribution of each component in Table 6. From lines to in Table 6, we can observe that all components contribute to the performance improvements. To be detailed, leveraging surpasses the baseline by around . Then we further makes remarkable improvements through applying () and EMA (). Owing to our group regularization strategy, the ACA performance reaches and significantly surpasses the baseline. After further applying instance purification, the final performance reaches by employing JS divergence noise identification () and relabeling (). Resorting to the contribution of each component, our approach boosts the final performance by over the baseline.

| No | Regularization | Purification | Comparisons | ACA | |||||

| Soft | ME | EMA | JS | RE | LS | SL | MB | ||

| 1 | 71.10 | ||||||||

| 2 | ✓ | 73.11 | |||||||

| 3 | ✓ | ✓ | 76.46 | ||||||

| 4 | ✓ | ✓ | ✓ | 76.90 | |||||

| 5 | ✓ | ✓ | ✓ | ✓ | 78.32 | ||||

| 6 | ✓ | ✓ | ✓ | ✓ | ✓ | 78.91 | |||

| 7 | ✓ | 75.13 | |||||||

| 8 | ✓ | 72.28 | |||||||

| 9 | ✓ | ✓ | 75.18 | ||||||

| 10 | ✓ | ✓ | ✓ | ✓ | 77.58 | ||||

| 11 | ✓ | ✓ | ✓ | ✓ | 77.98 | ||||

6.5 Comparisons

In this experiment, we demonstrate the superiority of our proposed approach over other similar strategies. We first compare the proposed group regularization with the widely-used LS trick. Then we compare JS divergence noise identification with the small-loss principle. We also compare the global and mini-batch selection based on our JS divergence principle.

The experimental results are presented in Table 6 (lines to ). We can observe from Table 6 that LS shows a slightly inferior performance to simply leveraging soft label supervision . Applying LS and maximum entropy strategy together shows nearly no improvement over utilizing alone. On the contrary, combining and boosts the performance significantly. This result demonstrates the advantages of utilizing estimated soft labels over LS: higher performance and better flexibility. Owing to and , our group regularization strategy surpasses LS remarkably.

Comparing lines and in Table 6, we can find that noise identification within mini-batch based on JS divergence is superior to that based on loss. This result supports our argument that utilizing class soft labels is more effective than simply relying on loss values in noise identification. Comparing ling and , we can observe that global selection further boosts the performance. The improvement derives from that the noise rate imbalance problem is alleviated.

Note that we apply on discarded images. In order to demonstrate the contribution of this design, we remove it for comparison. Then we find that the performance drops from to . Since noisy samples are utilized for training in the warm-up period, the network memorizes them to some extent. They potentially misguide noise identification and degrade performance. To solve this problem, provides a strong regularization to guide the network to forget noisy samples and make them farther from class soft labels to guarantee more reliable noise identification.

6.6 Noise-cleaning Visualization

We sampled noise-cleaning results on WebFG-496 and visualize them in Fig. 9. We can observe from Fig. 9 that our method can effectively divide clean, revisable, and discarded samples. We can also notice that web dataset inevitably contain noisy labels. For example, searching web images for cars has a risk of getting some images of tires and steering wheels. This phenomenon reminds us that noise-cleaning operation is necessary for training robust models in learning with noisy labels tasks.

7 Conclusion

In this paper, we proposed an effective training method named GRIP that leverages group regularization to benefit instance purification on both synthetic and real-world datasets. The proposed group regularization strategy generates reliable class soft labels to boost model robustness against label noise. By measuring the differences between instances and class soft labels, our method can globally identify noisy and revisable samples. Resorting to the regularization from the category aspect and purification at the instance level, GRIP inherits the advantages of both noise-robust and noise-cleaning strategies. By conducting comprehensive experiments, we demonstrate the superiority of our approach over existing methods in combating noisy labels on both synthetic and real-world datasets.

References

- 1 G. Pei, F. Shen, Y. Yao, G.-S. Xie, Z. Tang, and J. Tang, “Hierarchical feature alignment network for unsupervised video object segmentation,” in Proceedings of the European Conference on Computer Vision, 2022, pp. 596–613.

- 2 S.-H. Wang, D. R. Nayak, D. S. Guttery, X. Zhang, and Y.-D. Zhang, “Covid-19 classification by ccshnet with deep fusion using transfer learning and discriminant correlation analysis,” Information Fusion, vol. 68, pp. 131–148, 2021.

- 3 S. Wang, M. E. Celebi, Y.-D. Zhang, X. Yu, S. Lu, X. Yao, Q. Zhou, M.-G. Miguel, Y. Tian, J. M. Gorriz et al., “Advances in data preprocessing for biomedical data fusion: An overview of the methods, challenges, and prospects,” Information Fusion, vol. 76, pp. 376–421, 2021.

- 4 Y.-D. Zhang, Z. Dong, S.-H. Wang, X. Yu, X. Yao, Q. Zhou, H. Hu, M. Li, C. Jiménez-Mesa, J. Ramirez et al., “Advances in multimodal data fusion in neuroimaging: Overview, challenges, and novel orientation,” Information Fusion, vol. 64, pp. 149–187, 2020.

- 5 H. Zhu, S. Liu, L. Deng, Y. Li, and F. Xiao, “Infrared small target detection via low-rank tensor completion with top-hat regularization,” IEEE Transactions on Geoscience and Remote Sensing, vol. 58, no. 2, pp. 1004–1016, 2019.

- 6 L. Deng, H. Zhu, Q. Zhou, and Y. Li, “Adaptive top-hat filter based on quantum genetic algorithm for infrared small target detection,” Multimedia Tools and Applications, vol. 77, pp. 10 539–10 551, 2018.

- 7 H. Zhu, H. Ni, S. Liu, G. Xu, and L. Deng, “Tnlrs: Target-aware non-local low-rank modeling with saliency filtering regularization for infrared small target detection,” IEEE Transactions on Image Processing, vol. 29, pp. 9546–9558, 2020.

- 8 L. Deng, J. Zhang, G. Xu, and H. Zhu, “Infrared small target detection via adaptive m-estimator ring top-hat transformation,” Pattern Recognition, vol. 112, p. 107729, 2021.

- 9 J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “Imagenet: A large-scale hierarchical image database,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2009, pp. 248–255.

- 10 T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft coco: Common objects in context,” in Proceedings of the European Conference on Computer Vision. Springer, 2014, pp. 740–755.

- 11 Y. Yao, T. Chen, G.-S. Xie, C. Zhang, F. Shen, Q. Wu, Z. Tang, and J. Zhang, “Non-salient region object mining for weakly supervised semantic segmentation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2021, pp. 2623–2632.

- 12 T. Chen, Y. Yao, and J. Tang, “Multi-granularity denoising and bidirectional alignment for weakly supervised semantic segmentation,” IEEE Transactions on Image Processing, vol. 32, pp. 2960–2971, 2023.

- 13 H. Liu, P. Peng, T. Chen, Q. Wang, Y. Yao, and X.-S. Hua, “Fecanet: Boosting few-shot semantic segmentation with feature-enhanced context-aware network,” IEEE Transactions on Multimedia, pp. 1–13, 2023.

- 14 Q. Tian, Y. Cheng, S. He, and J. Sun, “Unsupervised multi-source domain adaptation for person re-identification via feature fusion and pseudo-label refinement,” Computers and Electrical Engineering, vol. 113, p. 109029, 2024.

- 15 Y. Yao, J. Zhang, F. Shen, X. Hua, J. Xu, and Z. Tang, “Exploiting web images for dataset construction: A domain robust approach,” IEEE Transactions on Multimedia, vol. 19, no. 8, pp. 1771–1784, 2017.

- 16 Y. Yao, J. Zhang, F. Shen, L. Liu, F. Zhu, D. Zhang, and H. T. Shen, “Towards automatic construction of diverse, high-quality image datasets,” IEEE Transactions on Knowledge and Data Engineering, vol. 32, no. 6, pp. 1199–1211, 2019.

- 17 Y. Yao, F. Shen, G. Xie, L. Liu, F. Zhu, J. Zhang, and H. T. Shen, “Exploiting web images for multi-output classification: From category to subcategories,” IEEE Transactions on Neural Networks and Learning Systems, vol. 31, no. 7, pp. 2348–2360, 2020.

- 18 Y. Yao, Z. Sun, F. Shen, L. Liu, L. Wang, F. Zhu, L. Ding, G. Wu, and L. Shao, “Dynamically visual disambiguation of keyword-based image search,” 2019, pp. 996–1002.

- 19 T. Xiao, T. Xia, Y. Yang, C. Huang, and X. Wang, “Learning from massive noisy labeled data for image classification,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2015, pp. 2691–2699.

- 20 Z. Sun, Y. Yao, X.-S. Wei, Y. Zhang, F. Shen, J. Wu, J. Zhang, and H. T. Shen, “Webly supervised fine-grained recognition: Benchmark datasets and an approach,” in Proceedings of the International Conference on Computer Vision, 2021, pp. 10 602–10 611.

- 21 B. Thomee, D. A. Shamma, G. Friedland, B. Elizalde, K. Ni, D. Poland, D. Borth, and L.-J. Li, “Yfcc100m: The new data in multimedia research,” Communications of the ACM, vol. 59, no. 2, pp. 64–73, 2016.

- 22 S. Abu-El-Haija, N. Kothari, J. Lee, P. Natsev, G. Toderici, B. Varadarajan, and S. Vijayanarasimhan, “Youtube-8m: A large-scale video classification benchmark,” arXiv preprint arXiv:1609.08675, 2016.

- 23 D. Arpit, S. Jastrzębski, N. Ballas, D. Krueger, E. Bengio, M. S. Kanwal, T. Maharaj, A. Fischer, A. Courville, Y. Bengio et al., “A closer look at memorization in deep networks,” in Proceedings of the International Conference on Machine Learning, 2017, pp. 233–242.

- 24 C. Zhang, S. Bengio, M. Hardt, B. Recht, and O. Vinyals, “Understanding deep learning requires rethinking generalization,” in Proceedings of the International Conference on Learning Representations, 2016, pp. 1–15.

- 25 Z. Sun, F. Shen, D. Huang, Q. Wang, X. Shu, Y. Yao, and J. Tang, “Pnp: Robust learning from noisy labels by probabilistic noise prediction,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2022, pp. 5311–5320.

- 26 T. Liu and D. Tao, “Classification with noisy labels by importance reweighting,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 38, no. 3, pp. 447–461, 2015.

- 27 C. Zhang, Q. Wang, G. Xie, Q. Wu, F. Shen, and Z. Tang, “Robust learning from noisy web images via data purification for fine-grained recognition,” IEEE Transactions on Multimedia, vol. 24, pp. 1198–1209, 2021.

- 28 C. Zhang, Y. Yao, X. Shu, Z. Li, Z. Tang, and Q. Wu, “Data-driven meta-set based fine-grained visual recognition,” in Proceedings of the ACM International Conference on Multimedia, 2020, pp. 2372–2381.

- 29 H. Liu, C. Zhang, Y. Yao, X.-S. Wei, F. Shen, Z. Tang, and J. Zhang, “Exploiting web images for fine-grained visual recognition by eliminating open-set noise and utilizing hard examples,” IEEE Transactions on Multimedia, vol. 24, pp. 546–557, 2021.

- 30 C. Zhang, G. Lin, Q. Wang, F. Shen, Y. Yao, and Z. Tang, “Guided by meta-set: A data-driven method for fine-grained visual recognition,” IEEE Transactions on Multimedia, pp. 4691–4703, 2022.

- 31 M. Ren, W. Zeng, B. Yang, and R. Urtasun, “Learning to reweight examples for robust deep learning,” in Proceedings of the International Conference on Machine Learning. PMLR, 2018, pp. 4334–4343.

- 32 J. Shu, Q. Xie, L. Yi, Q. Zhao, S. Zhou, Z. Xu, and D. Meng, “Meta-weight-net: Learning an explicit mapping for sample weighting,” Advances in Neural Information Processing Systems, vol. 32, 2019.

- 33 M. Sheng, Z. Sun, Z. Cai, T. Chen, Y. Zhou, and Y. Yao, “Adaptive integration of partial label learning and negative learning for enhanced noisy label learning,” arXiv preprint arXiv:2312.09505, 2023.

- 34 Y. Wang, X. Ma, Z. Chen, Y. Luo, J. Yi, and J. Bailey, “Symmetric cross entropy for robust learning with noisy labels,” in Proceedings of the International Conference on Computer Vision, 2019, pp. 322–330.

- 35 X. Ma, H. Huang, Y. Wang, S. Romano, S. Erfani, and J. Bailey, “Normalized loss functions for deep learning with noisy labels,” in Proceedings of the International Conference on Machine Learning. PMLR, 2020, pp. 6543–6553.

- 36 Z. Zhang and M. Sabuncu, “Generalized cross entropy loss for training deep neural networks with noisy labels,” Advances in Neural Information Processing Systems, vol. 31, 2018.

- 37 X. Xia, T. Liu, B. Han, C. Gong, N. Wang, Z. Ge, and Y. Chang, “Robust early-learning: Hindering the memorization of noisy labels,” in Proceedings of the International Conference on Learning Representations, 2020, pp. 1–15.

- 38 C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens, and Z. Wojna, “Rethinking the inception architecture for computer vision,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 2818–2826.

- 39 C.-B. Zhang, P.-T. Jiang, Q. Hou, Y. Wei, Q. Han, Z. Li, and M.-M. Cheng, “Delving deep into label smoothing,” IEEE Transactions on Image Processing, pp. 5984–5996, 2021.

- 40 J. Goldberger and E. Ben-Reuven, “Training deep neural-networks using a noise adaptation layer,” 2016.

- 41 J. Li, R. Socher, and S. C. Hoi, “Dividemix: Learning with noisy labels as semi-supervised learning,” in Proceedings of the International Conference on Learning Representations, 2020, pp. 1–14.

- 42 E. Malach and S. Shalev-Shwartz, “Decoupling" when to update" from" how to update",” Advances in Neural Information Processing Systems, vol. 30, 2017.

- 43 B. Han, Q. Yao, X. Yu, G. Niu, M. Xu, W. Hu, I. Tsang, and M. Sugiyama, “Co-teaching: Robust training of deep neural networks with extremely noisy labels,” Advances in Neural Information Processing Systems, vol. 31, 2018.

- 44 H. Wei, L. Feng, X. Chen, and B. An, “Combating noisy labels by agreement: A joint training method with co-regularization,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2020, pp. 13 726–13 735.

- 45 H. Liu, H. Zhang, J. Lu, and Z. Tang, “Exploiting web images for fine-grained visual recognition via dynamic loss correction and global sample selection,” IEEE Transactions on Multimedia, vol. 24, pp. 1105–1115, 2022.

- 46 Z. Cai, G.-S. Xie, X. Huang, D. Huang, Y. Yao, and Z. Tang, “Robust learning from noisy web data for fine-grained recognition,” Pattern Recognition, vol. 134, p. 109063, 2023.

- 47 Z. Cai, H. Liu, D. Huang, Y. Yao, and Z. Tang, “Co-mining: Mining informative samples with noisy labels,” Signal Processing, vol. 209, p. 109003, 2023.

- 48 X.-J. Gui, W. Wang, and Z.-H. Tian, “Towards understanding deep learning from noisy labels with small-loss criterion,” 2021, pp. 2469–2475.

- 49 H. Song, M. Kim, and J.-G. Lee, “Selfie: Refurbishing unclean samples for robust deep learning,” in Proceedings of the International Conference on Machine Learning, 2019, pp. 5907–5915.

- 50 Y. Yao, Z. Sun, C. Zhang, F. Shen, Q. Wu, J. Zhang, and Z. Tang, “Jo-src: A contrastive approach for combating noisy labels,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2021, pp. 5192–5201.

- 51 A. Krizhevsky and G. Hinton, “Learning multiple layers of features from tiny images,” Technical report, University of Tront, vol. 1, no. 4, p. 7, 2009.

- 52 X. Peng, K. Wang, Z. Zeng, Q. Li, J. Yang, and Y. Qiao, “Suppressing mislabeled data via grouping and self-attention,” in Proceedings of the European Conference on Computer Vision. Springer, 2020, pp. 786–802.

- 53 A. Ghosh, H. Kumar, and P. Sastry, “Robust loss functions under label noise for deep neural networks,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 31, no. 1, 2017.

- 54 Z. Sun, X.-S. Hua, Y. Yao, X.-S. Wei, G. Hu, and J. Zhang, “Crssc: salvage reusable samples from noisy data for robust learning,” in Proceedings of the ACM International Conference on Multimedia, 2020, pp. 92–101.

- 55 C. Zhang, Y. Yao, H. Liu, G.-S. Xie, X. Shu, T. Zhou, Z. Zhang, F. Shen, and Z. Tang, “Web-supervised network with softly update-drop training for fine-grained visual classification,” in Proceedings of the AAAI Conference on Artificial Intelligence, 2020, pp. 12 781–12 788.

- 56 C. Tan, J. Xia, L. Wu, and S. Z. Li, “Co-learning: Learning from noisy labels with self-supervision,” in Proceedings of the ACM International Conference on Multimedia, 2021, pp. 1405–1413.

- 57 D. Berthelot, N. Carlini, I. Goodfellow, N. Papernot, A. Oliver, and C. A. Raffel, “Mixmatch: A holistic approach to semi-supervised learning,” Advances in Neural Information Processing Systems, vol. 32, 2019.

- 58 A. Dubey, O. Gupta, R. Raskar, and N. Naik, “Maximum-entropy fine grained classification,” Advances in Neural Information Processing Systems, vol. 31, 2018.

- 59 J. Lin, “Divergence measures based on the shannon entropy,” IEEE Transactions on Information Theory, vol. 37, no. 1, pp. 145–151, 1991.

- 60 D. Patel and P. Sastry, “Adaptive sample selection for robust learning under label noise,” in IEEE Winter Conference on Applications of Computer Vision, 2023, pp. 3932–3942.

- 61 X. Yu, B. Han, J. Yao, G. Niu, I. Tsang, and M. Sugiyama, “How does disagreement help generalization against label corruption?” in Proceedings of the International Conference on Machine Learning, 2019, pp. 7164–7173.

- 62 Y. Lu and W. He, “SELC: self-ensemble label correction improves learning with noisy labels,” in Proceedings of the International Joint Conference on Artificial Intelligence, vol. 31, 2022, pp. 3278–3284.

- 63 J. Deng, J. Guo, T. Liu, M. Gong, and S. Zafeiriou, “Sub-center arcface: Boosting face recognition by large-scale noisy web faces,” in Proceedings of the European Conference on Computer Vision. Springer, 2020, pp. 741–757.

- 64 L. Huang, C. Zhang, and H. Zhang, “Self-adaptive training: Beyond empirical risk minimization,” Advances in Neural Information Processing Systems, vol. 33, 2020.

- 65 Y. Zhang, S. Zheng, P. Wu, M. Goswami, and C. Chen, “Learning with feature-dependent label noise: A progressive approach,” in Proceedings of the International Conference on Learning Representations, 2021, pp. 1–13.

- 66 Z. Sun, H. Liu, Q. Wang, T. Zhou, Q. Wu, and Z. Tang, “Co-ldl: A co-training-based label distribution learning method for tackling label noise,” IEEE Transactions on Multimedia, vol. 24, pp. 1093–1104, 2022.

- 67 X. Zhou, X. Liu, D. Zhai, J. Jiang, and X. Ji, “Asymmetric loss functions for noise-tolerant learning: theory and applications,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 7, pp. 8094–8109, 2023.

- 68 J. Shu, X. Yuan, D. Meng, and Z. Xu, “Cmw-net: Learning a class-aware sample weighting mapping for robust deep learning,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023.

- 69 C. Wah, S. Branson, P. Welinder, P. Perona, and S. Belongie, “The caltech-ucsd birds-200-2011 dataset,” 2011.

- 70 S. Maji, E. Rahtu, J. Kannala, M. Blaschko, and A. Vedaldi, “Fine-grained visual classification of aircraft,” arXiv preprint arXiv:1306.5151, 2013.

- 71 J. Krause, M. Stark, J. Deng, and L. Fei-Fei, “3d object representations for fine-grained categorization,” in Proceedings of the International Conference on Computer Vision, 2013, pp. 554–561.

- 72 K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 770–778.

- 73 I. Loshchilov and F. Hutter, “Sgdr: Stochastic gradient descent with warm restarts,” in Proceedings of the International Conference on Learning Representations, 2016, pp. 1–16.

- 74 L. Bottou, “Large-scale machine learning with stochastic gradient descent,” in Proceedings of the International Conference on Computational Statistics, 2010, p. 177.

- 75 H. Permuter, J. Francos, and I. Jermyn, “A study of gaussian mixture models of color and texture features for image classification and segmentation,” Pattern Recognition, pp. 695–706, 2006.

- 76 K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” arXiv preprint arXiv:1409.1556, 2014.