GREN: Graph-Regularized Embedding Network for Weakly-Supervised Disease Localization in X-ray Images

Abstract

Locating diseases in chest X-ray images with few careful annotations saves large human effort. Recent works approached this task with innovative weakly-supervised algorithms such as multi-instance learning (MIL) and class activation maps (CAM), however, these methods often yield inaccurate or incomplete regions. One of the reasons is the neglection of the pathological implications hidden in the relationship across anatomical regions within each image and the relationship across images. In this paper, we argue that the cross-region and cross-image relationship, as contextual and compensating information, is vital to obtain more consistent and integral regions. To model the relationship, we propose the Graph Regularized Embedding Network (GREN), which leverages the intra-image and inter-image information to locate diseases on chest X-ray images. GREN uses a pre-trained U-Net to segment the lung lobes, and then models the intra-image relationship between the lung lobes using an intra-image graph to compare different regions. Meanwhile, the relationship between in-batch images is modeled by an inter-image graph to compare multiple images. This process mimics the training and decision-making process of a radiologist: comparing multiple regions and images for diagnosis. In order for the deep embedding layers of the neural network to retain structural information (important in the localization task), we use the Hash coding and Hamming distance to compute the graphs, which are used as regularizers to facilitate training. By means of this, our approach achieves the state-of-the-art result on NIH chest X-ray dataset for weakly-supervised disease localization. Our codes111https://github.com/qibaolian/GREN are accessible online.

Computer-assisted diagnosis, Chest X-ray, Weakly supervised localization, Domain knowledge.

1 Introduction

The automatic disease localization on chest X-ray images has become an increasingly important technique to support clinical diagnosis and treatment. The past decade has witnessed the application of convolutional neural networks (CNNs) in medical image analysis, including disease classification [33], [11], [2], [19], segmentation [6], [8], [28], [29], detection [39], [4], and report generation [12], [14], [31]. CNNs require a large amount of finely annotated training data to locate diseases. However, the annotation is tedious and laborious for radiologists. As a result, the X-ray datasets often only have the image-level labels and few location labels, which leads to inferior localization results. Developing localization models with coarse-grained (image-level) labels and few location annotations is an urgent problem, which arouses a weakly-supervised localization task [38].

Existing weakly-supervised localization algorithms are mainly based on multi-instance learning (MIL) [3], [16] and class activation maps (CAM) [23], [30], [26]. However, these methods often yield inaccurate or incomplete regions. For example, the CAM-based method only activates the most discriminative region and generates incomplete targets. To overcome these problems, beyond mere algorithmic innovations [22], [4], [10], [24], we argue that considering the complementary information between anatomical regions and multiple images can lead to a better localization result. This insight is inspired by the domain knowledge in the medical field: radiologists are trained to read a large number of X-ray images and analyse them by recognizing and comparing the differences (e.g., shapes, textures, contrast, etc.). Radiologists make decisions based on what they have learned and observed though comparison.

Recently, several attention-based works [18], [37] conducted disease localization by comparing abnormal and normal instances. However, they involved no direct supervision (e.g., the position label) to conduct error feedback, and it was difficult to interpret what the models have learned. To overcome these insufficiencies, we proposed the cross chest graph (CCG) [34] to regularize the model instead of the attention operations [18], [37]. CCG considered the inter-image relationship and neglected the intra-image relationship. More importantly, CCG obtained regions via clustering, and the self-organized regions lack pathological explanations. Based on but quite different from CCG, the proposed Graph Regularized Embedding Network (GREN) (Figure 1) considers both inter-image and intra-image relationships, and explicitly models the anatomical structures. We calculate the relationship between the two lung lobes because most chest diseases such as pneumonia, infiltration and consolidation, rarely appear symmetrically in both sides [37], and cardiomegaly usually makes left lung region smaller on X-ray image. Thus, the features of these abnormal regions can be identified via comparing the lung lobes. Our main contributions are:

-

•

We propose GREN, the first method exploring relational graphs across images and regions to regularize training in weakly-supervised localization. Compared with the attention-based methods, GREN avoids the normal control sampling problem. Compared with Graph-Rise [13], GREN requires no additional manual annotations. As the core elements, we present the intra-image and inter-image knowledge learning modules to model the complementary information.

-

•

We achieve the state-of-the-art result for disease localization on NIH chest X-ray dataset. Ablation experiments demonstrate the effectiveness of each component. We make the code publicly available for subsequent studies to reproduce our results.

2 Related Work

Deep learning has been widely applied in X-ray images analysis, including disease classification [30], [25], [10], [36] and detection [16], [18], [24]. However, locating diseases in chest X-ray images with few careful annotations remains a challenging problem. Wang et al. [30] contributed a chest X-ray dataset and localized the disease with a multi-label disease classification model according to the heatmaps. Li et al. [16] proposed to jointly model the disease identification and localization with multi-instance loss and binary cross-entropy loss. Tang et al. [25] proposed an iterative attention-guided refinement framework to improve the classification and weakly-supervised localization performance via CAM [23]. Sedai et al. [22] proposed a weakly supervised method based on class-aware multiscale convolutional feature to localize chest pathologies. Cai et al. [4] proposed an attention mining strategy to improve the sensitivity and saliency of model to disease patterns. All these methods overlooked the image-to-image and region-to-region relationship during modelling.

For the last three years, some works have attempted to embed image relations into deep learning. Zhao et al. [35] modeled the contralateral context information using a spatial transformer network to enhance disease representations. Lian et al. [17] leveraged the constant structure and disease relations using a structure-aware relation extraction network. These methods utilized the region-to-region relationship in individual image and overlooked the image-to-image relationship in modelling. There are also some works using inter-image relationship for weakly-supervised medical image analysis. Liu et al. [18] utilized the contrast-induced attention acquired on paired images between healthy and unhealthy samples to provide more information for localization. Zhou et al. [37] exploited two contrastive abnormal attention models to improve the performance of thoracic multi-disease recognition. They designed a left-right lung contrastive network to learn intra-attentive abnormal features, and an inter-contrastive abnormal attention model to compare healthy samples with multiple unhealthy samples to compute the abnormal attention map. However, the uncertainty of the normal control may lead to the uncertainty of performance. Regularizing the models, as another strategy, involves no such sampling problem. Juan et al. [13] proposed a neural graph learning framework leveraging graph structures to regularize training, where the similarity between images is used as edges. Graph-Rise [13] used the distance graph between images for regularization. CCG [34] constructed graph nodes using clustered regions and lacked interpretability. The proposed GREN, in contrast, is free from additional manual annotation and the regions has explicit anatomical definitions.

3 Method

The architecture of GREN is presented at Figure 2. The input images are chest X-ray images in a training batch. They go through a parenchymal segmentation network [1], and the left lung region and right lung region of each image are thus obtained, which is . Then, the input images and the images of lung regions are fed to the feature extractor to yield feature maps and . The distance of the feature map and the feature map is computed to obtain the intra-image distance and inter-image distance, respectively. At the same time, the Hash coding with digits is used to represent each patch. We calculate the Hamming distances between paired patches, resulting into the intra-image and inter-image similarity. Finally, the intra-image similarity and inter-image similarity are used to minimize the intra-image distance and the inter-image distance, respectively. A task head is added upon the embedding space to complete the weakly-supervised localization task. Please note that the feature extractor and the task head share weights. GREN aims to utilize the structural relationship of images and formulate a more informative embedding space to improve the localization performance.

3.1 Backbone

The ResNet-50 pre-trained on the ImageNet dataset is used as the feature extractor of GREN. The input images are . After removing the final classification and global pooling layer of ResNet-50, the feature map is extracted, which is 32 times down-sampled compared with the input. Meanwhile, the input image is divided into patch grids, and for each grid, the existent probability of disease is predicted by the network. Note that is an adjustable hyper-parameter (=16 in our experiments). Then, we pass through two convolutional layers and a sigmoid layer to obtain the final predictions of with channels, where is the number of possible disease types. Finally, we compute losses and make predictions in each channel for the corresponding class, following the paradigm used in [18] and [16].

For images with box-level annotations, if the grid in the feature map is covered partially by the ground truth box, we assign label 1 to the grid. Otherwise, we assign 0 to it. We use the binary cross-entropy (BCE) loss for each grid:

| (1) |

where , , and are the indexes of class, sample and grid, respectively. denotes the target label of the th grid in the th image for the class , and denotes the predicted probability of the th grid in the th image for the class .

For images with only disease-level annotations, we use the MIL loss:

| (2) |

where denotes the target label of the image. For the backbone network, the loss is formulated as

| (3) |

where denotes the network parameters, denotes if the class in the sample has the box annotation, and is the balance weight of the two losses and is set to 4.

3.2 Graph Regularization

Although existing works indeed paved the way to locate and identify diseases in chest X-ray images with limited box-level labels, there are still abundant information about the inter-image and intra-image structural relationship (similarity) that can be exploited to compensate for the lack of labels. These structural similarity can be naturally represented as a graph, where the graph nodes denote the images and the edges denote their relationships. To model the structural similarities, we train the network using the graph regularization. Namely, the more similar the images are, the closer their representations are in the embedding space. To achieve this goal, we exploit two graph regularization terms to encourage similar images (within batches) to move closer in the embedding space.

In an X-ray image, the areas containing the common lesions are the left and right lung regions, and the clavicle area and the blank area outside the body contain rare information. Therefore, the left and right lung regions of all samples are extracted by the segmentation algorithm [1]. The similarity is then calculated to measure the structural relationships of lung regions. We opt to computational efficiency and choose the Hamming distance and cosine distance for CNNs during computation.

Hash coding [27] is an algorithm that produces a snippet or fingerprint of images, which analyses the image structure and shows whether two images look nearly identical. We construct the relation graph based on Hash coding for two reasons. First, Hash coding has a higher computation and storage efficiency, which can fast calculate the Hamming distance to obtain the similarity of two images without additional manual annotation [15]. Second, Hash coding preserves structural information of X-ray images, which is ideal for the localization task. Since it generates a low frequency signature in the spectrum space, the low frequency signature of the monochromatic X-ray image can be viewed as structural information. The insight has been verified in [16] and [18]. Thus, we use the Hash coding and Hamming distance to measure the similarity of X-ray images. Specifically, the perceptual image Hash is designed not to change much when an image undergoes minor modifications such as compression, color-correction, and brightness change [21].

The computation has three steps, including image pre-processing, perceptual feature extraction, and quantization or compression to generate the Hash string. First, an image is pre-processed by resizing, color transformation and normalization. This step reduces the size of the data, and thus reducing the processing time. Second, the discrete cosine transform (DCT) is used to make perception feature extraction of an image invariable to content preserving manipulation. Third, numerical values that represent features of an image are quantized, generating a fixed sized Hash which is a compact and somewhat unique representation of an image [21]. Finally, the Hamming distance is used to quantify the similarity of two Hash codings. Additionally, the cosine similarity is a measurement that quantifies the similarity between two or more vectors, which is the cosine of the angle between vectors, and it is most commonly used in high-dimensional features of CNNs [32]. We use the cosine distance as another similarity algorithm to measure the similarity of the CNN features.

3.2.1 Intra-image Knowledge Learning

The left and right lung regions in chest X-ray image show some symmetric structures, and the lesions of most diseases rarely appear symmetrically in both sides, such as pneumonia, infiltration and consolidation [37]. It is very useful to compare and distinguish the differences (e.g., textures and shadows) between the two lung regions for radiologists.

The structural relationship of X-ray regions is modeled as a graph , where the nodes denote the two regions of left lung and right lung. The edges denote the similarity of the graph nodes ,

| (4) |

where and are the representations of the Hash coding of the left lung regions and the right lung regions in image, respectively. It is obtained by using perceptual hashing algorithm. calculates the Hamming distance of and . is a normalized parameter, which makes . The value of it is the product of the length of the Hash coding ( = 64 in our experiments). The graph regularization term is obtained,

| (5) |

where denotes the similarity of the left lung region and the right lung region , is the distance metric function, which is the Euclidean distance, and mean the feature map of the left lung region and the right lung , respectively. Please note that the and are obtained by taking the masks of the left lung region and the right lung region in the feature map .

3.2.2 Inter-image Knowledge Learning

There are phenomena of “different X-ray images of the similar diseases” and “different diseases of the similar X-ray images”, so it is difficult for the deep learning models to locate and classify diseases with limited box-level labels. Therefore, we propose a inter-image knowledge learning module to dig out the structural differences between different X-ray images, which provides a large difference for the similar diseases and similar X-ray images.

A pair of images and are randomly selected from the input images . The structural relationship information of different X-ray images is modeled to get the graph , where the graph nodes denote the images in a mini-batch of the network ( in our experiments), the edges denote the similarity of graph nodes . Since there are left and right lung regions in an image, the Hamming distance of two lung regions in all images should be calculated,

| (6) |

where , , and are the Hash codings of left and right lung regions of the image and the image , respectively. is the Hamming distance calculation. Note that the Hash coding generation is training-free. is a normalization parameter, which denotes the product of the length of the Hash coding ( = 64 in our experiments). The graph regularization term is written as

| (7) |

where denotes the similarity of and , and is the Euclidean distance, and and mean the feature maps of image and image , respectively.

The final objective is the summation of the loss of the localization network and the graph regularization terms and ,

| (8) |

where the hyper-parameters and control the balance between the three losses and are set to 0.11 and 0.15 by searching from 0 to 1 with a step of 0.05 and 0.01 for coarse-to-fine searching. For example, it is assumed that the hyper-parameter in coarse searching is 0.10 (with the step of 0.05), and the interval in fine searching is [0.96, 0.99] and [0.11, 0.14] (with the step of 0.01). When = 0 and = 0, the network reduces to a localization model without the graph regularization.

| T (IoU) | Models | Atelectasis | Cardiomegaly | Effusion | Infiltration | Mass | Nodule | Pneumonia | Pneumothorax | Mean |

|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | Wang et al. [30] | 0.05 | 0.18 | 0.11 | 0.07 | 0.01 | 0.01 | 0.03 | 0.03 | 0.06 |

| Li et al [16] | 0.14 | 0.84 | 0.22 | 0.30 | 0.22 | 0.07 | 0.17 | 0.19 | 0.27 | |

| Liu et al. [18] | 0.32 | 0.78 | 0.40 | 0.61 | 0.33 | 0.05 | 0.37 | 0.23 | 0.39 | |

| Zhou et al. [37] | 0.39 | 0.86 | 0.46 | 0.65 | 0.39 | 0.13 | 0.43 | 0.27 | 0.45 | |

| Zhao et al. [34] | 0.27 | 0.86 | 0.48 | 0.72 | 0.53 | 0.14 | 0.58 | 0.35 | 0.49 | |

| Baseline (B) | 0.12 | 0.79 | 0.32 | 0.36 | 0.26 | 0.07 | 0.13 | 0.20 | 0.28 | |

| B+Intra | 0.37 | 0.86 | 0.48 | 0.80 | 0.37 | 0.14 | 0.58 | 0.30 | 0.49 | |

| B+Inter | 0.32 | 0.89 | 0.52 | 0.80 | 0.42 | 0.21 | 0.50 | 0.35 | 0.50 | |

| GREN (B+Intra+Inter) | 0.37 | 0.86 | 0.52 | 0.84 | 0.42 | 0.29 | 0.54 | 0.45 | 0.54 | |

| 0.7 | Wang et al. [30] | 0.01 | 0.03 | 0.02 | 0.00 | 0.00 | 0.00 | 0.01 | 0.02 | 0.01 |

| Li et al. [16] | 0.04 | 0.52 | 0.07 | 0.09 | 0.11 | 0.01 | 0.05 | 0.05 | 0.12 | |

| Liu et al.[18] | 0.18 | 0.70 | 0.28 | 0.41 | 0.27 | 0.04 | 0.25 | 0.18 | 0.29 | |

| Zhou et al. [37] | 0.24 | 0.75 | 0.33 | 0.45 | 0.33 | 0.09 | 0.35 | 0.23 | 0.35 | |

| Zhao et al. [34] | 0.20 | 0.86 | 0.48 | 0.68 | 0.32 | 0.14 | 0.54 | 0.30 | 0.44 | |

| Baseline (B) | 0.05 | 0.71 | 0.16 | 0.04 | 0.16 | 0.00 | 0.08 | 0.15 | 0.17 | |

| B+Intra | 0.27 | 0.86 | 0.44 | 0.72 | 0.32 | 0.14 | 0.58 | 0.20 | 0.44 | |

| B+Inter | 0.29 | 0.86 | 0.48 | 0.68 | 0.37 | 0.21 | 0.46 | 0.35 | 0.46 | |

| GREN (B+Intra+Inter) | 0.34 | 0.86 | 0.48 | 0.60 | 0.37 | 0.21 | 0.46 | 0.35 | 0.46 |

3.2.3 CNN Features Instead of Hash Coding

To calculate the similarity between regions, it is most intuitive to use CNN features. To investigate on this, we replace the similarity computing with CNN features to compare with the Hash coding with Hamming distance. The feature map is extracted using the same method as described previously. The similarities between the feature maps are calculated according to

| (9) |

where and are the feature maps of the left (right) regions of the image and image . is the similarity of feature maps and . is the similarity of the right regions and left regions in feature maps. is the cosine distance of the feature maps. The graph regularization term and are written as

| (10) | ||||

where is the Euclidean distance metric. and mean the feature maps of image and image , respectively. The final objective is the summation of the localization loss and the graph regularization loss and ,

| (11) |

where the hyper-parameter and control the balance between the three losses and are set to 0.15 with the same method as described previously.

4 Experiments Results

4.1 Datasets and Evaluation Metrics

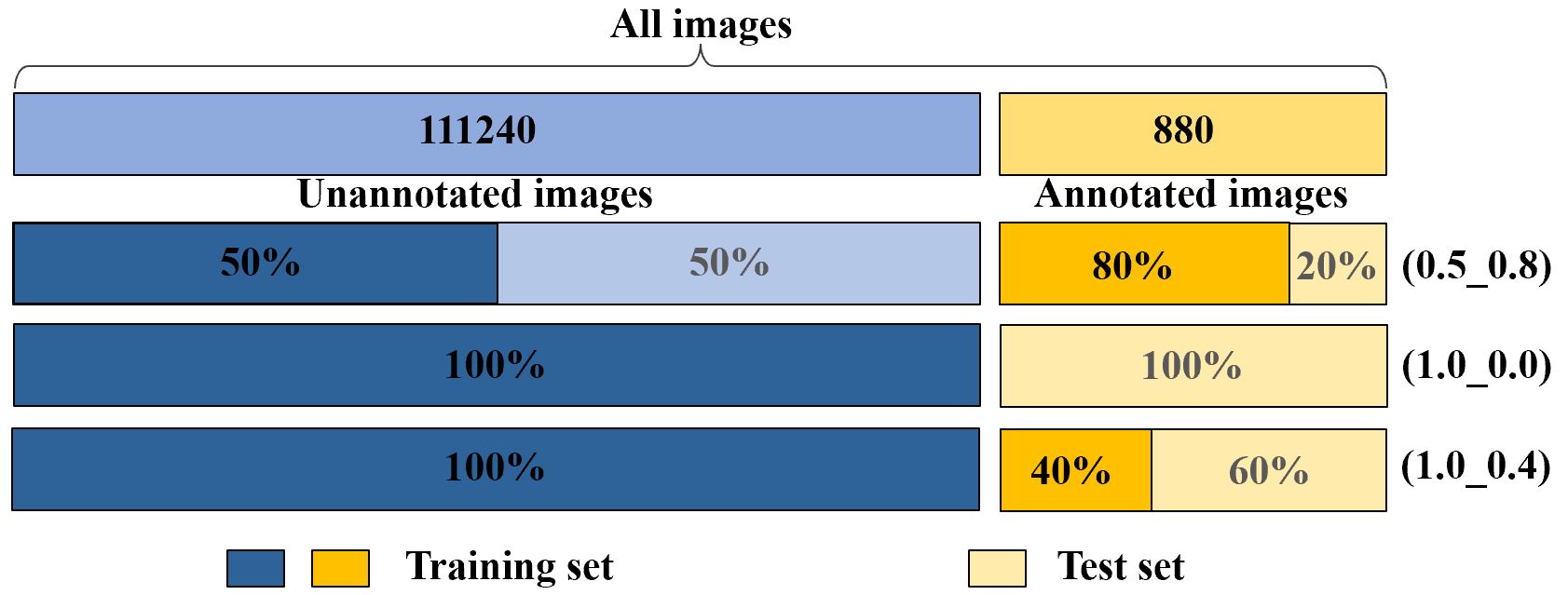

The NIH chest X-ray dataset [30] consists of 112,120 frontal-view X-ray images with 14 classes of diseases. Furthermore, the dataset contains 880 images with 984 labeled bounding boxes, and the provided bounding boxes only have 8 type of disease instances. Since we pay more attention to the task of locating diseases, we follow the terms in [16] and [18] to call the 880 images with labeled bounding boxes as ‘annotated’ and the remaining 111,240 images as ‘unannotated’ (Figure 3). For fast processing, we resize the original 3-channel images from resolution of to without any data augmentation techniques.

We follow the metrics used in [16]. For localization, the intersection over union (IoU) between predictions and ground truths is used to evaluate the performance of the models. The localization results are regarded as correct when , where is the threshold. In practice, we usually pay most attention to the accuracy of high thresholds of T(IoU), so two thresholds of 0.5 and 0.7 are set in our experiments. For more accurate localization with limited data with location annotations, the localization predictions are discrete small rectangles. The performance of the eight diseases with ground truth boxes is reported, which is inherited from existing researches [16] and [18].

4.2 Experimental Settings

All the models are trained on the NIH chest X-ray dataset using the stochastic gradient descent (SGD) algorithm with the Nesterov momentum. The learning rate starts from 0.001 and decreases by 10 times after every 4 epochs with a total of 9 epochs. The weight decay is 0.0001 and the momentum is 0.9. All the weights are initialized with pre-trained ResNet-50 [9] on ImageNet [7]. The mini-batch size is set to 4 with the NVIDIA 2080Ti GPU. All the algorithms are implemented with PyTorch [20]. The threshold of 0.5 is used to distinguish positive grids from negative grids in the class-wise feature map, which has been adopted in previous studies [16] and [18]. Note that the up-sampling operation [18] of feature maps is used to gain a more accurate localization for lesion location before two last fully convolutional layers. The paired images are randomly sampled in the train phase, and the test phase does not need the paired images.

4.3 Comparison with State-of-the-Art

In order to effectively evaluate GREN in weakly supervised disease localization, we strictly follow [16], [34], [18] and use three data participation methods (Figure 3) and compute the average values with a five-fold cross validation in each participation. In the first participation, we use 50% unannotated images and 80% annotated images for training and the remaining 20% annotated images for testing (0.5_0.8). In the second participation, we use the 100% unannotated images without annotated images for training and all annotated images for testing (1.0_0.0). In the third participation, we use the 100% unannotated images and 40% annotated images for training and the remaining 60% annotated images for testing (1.0_0.4).

Our experiment results are compared with six state-of-the-art methods. The first method is Wang et al. [30], which uses a multi-label disease classification model with disease heatmaps for location disease. The second method is Li et al. [16], which integrates the multi-instance level loss and binary cross-entropy loss into one framework for disease localization. The third method is Liu et al. [18], which utilizes the contrast-induced attention acquired on paired images between healthy and unhealthy samples to provide information for localization. The fourth method is Zhou et al. [37], which exploits two contrastive abnormal attention models and a dual-weighting graph convolution to improve the performance of thoracic multi-disease recognition. The fifth method is Zhao et al. [35], which proposes the contralateral context information for enhancing feature representations of disease proposals by using a spatial transformer network. The sixth method is Zhao et al. [34], which proposed the CCG to regularize the model using the similarities computed via of clustered regions.

| T(IoU) | Models | Atelectasis | Cardiomegaly | Effusion | Infiltration | Mass | Nodule | Pneumonia | Pneumothorax | Mean |

|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | Wang et al.∗ [30] | 0.00 | 0.25 | 0.01 | 0.06 | 0.09 | 0.05 | 0.06 | 0.00 | 0.07 |

| Li et al.∗ [16] | 0.18 | 0.10 | 0.27 | 0.46 | 0.18 | 0.03 | 0.00 | 0.11 | 0.17 | |

| Liu et al. [18] | 0.19 | 0.53 | 0.19 | 0.47 | 0.33 | 0.03 | 0.08 | 0.11 | 0.24 | |

| Zhao et al. [35] | 0.32 | 0.68 | 0.39 | 0.61 | 0.49 | 0.07 | 0.15 | 0.21 | 0.36 | |

| Zhao et al. [34] | 0.31 | 0.79 | 0.37 | 0.75 | 0.40 | 0.06 | 0.24 | 0.27 | 0.40 | |

| Baseline (B) | 0.18 | 0.51 | 0.14 | 0.47 | 0.27 | 0.03 | 0.01 | 0.12 | 0.22 | |

| B+Intra | 0.30 | 0.79 | 0.38 | 0.72 | 0.42 | 0.05 | 0.25 | 0.27 | 0.40 | |

| B+Inter | 0.31 | 0.79 | 0.37 | 0.70 | 0.41 | 0.08 | 0.08 | 0.24 | 0.37 | |

| GREN (B+Intra+Inter) | 0.36 | 0.86 | 0.41 | 0.70 | 0.49 | 0.10 | 0.11 | 0.22 | 0.41 | |

| 0.7 | Wang et al.∗ [30] | 0.00 | 0.01 | 0.00 | 0.03 | 0.04 | 0.04 | 0.01 | 0.00 | 0.01 |

| Li et al.∗ [16] | 0.09 | 0.01 | 0.07 | 0.28 | 0.08 | 0.01 | 0.00 | 0.05 | 0.07 | |

| Liu et al. [18] | 0.08 | 0.30 | 0.09 | 0.25 | 0.19 | 0.01 | 0.04 | 0.07 | 0.13 | |

| Zhao et al. [35] | 0.13 | 0.54 | 0.19 | 0.27 | 0.27 | 0.04 | 0.05 | 0.17 | 0.21 | |

| Zhao et al. [34] | 0.06 | 0.64 | 0.08 | 0.38 | 0.19 | 0.01 | 0.08 | 0.09 | 0.19 | |

| Baseline (B) | 0.11 | 0.34 | 0.06 | 0.32 | 0.20 | 0.01 | 0.00 | 0.06 | 0.14 | |

| B+Intra | 0.21 | 0.74 | 0.22 | 0.62 | 0.35 | 0.03 | 0.18 | 0.18 | 0.32 | |

| B+Inter | 0.20 | 0.75 | 0.25 | 0.55 | 0.38 | 0.04 | 0.06 | 0.21 | 0.30 | |

| GREN (B+Intra+Inter) | 0.28 | 0.77 | 0.22 | 0.61 | 0.44 | 0.06 | 0.09 | 0.16 | 0.33 |

Here, a model needs to predict the location of eight diseases, so we pay more attention to the overall performance of the model rather than to a particular disease. Therefore, in the following discussion, we are most concerned with the mean accuracy. In the first participation, we compare the localization results of our model with [30], [16], [18], [37] and [34]. We can observe that GREN outperforms the existing methods in most cases, as shown in Table 1. Particularly, with the increase of T(IoU), GREN has greater advantages over the reference models. For examples, when T(IoU) = 0.5, the mean accuracy is 0.54, and outperforms [30], [16], [18], [37] and [34] by 0.48, 0.27, 0.15, 0.09 and 0.05. However, when T(IoU) = 0.7, the mean accuracy of GREN is 0.46, and outperforms [30], [16], [18], [37] and [34] by 0.45, 0.34, 0.17, 0.11 and 0.02. In the second participation, we train our model without any annotated images. Since [16] only provides the results at T(IoU) = 0.1, in order to better show the performance of our model, we reproduce the results of T(IoU) = 0.5 and 0.7. Note that the sysbol denotes the reproduced results. For each disease, the bold and red values denote the best results of the eight disease types and mean accuracy, respectively. We compare the localization results of our model with [30], [16], [18], [35] and [34]. It can be seen that GREN outperforms existing methods in most cases, as shown in Table 2. In the third participation, we use more annotated images comparing the second participation. We compare the localization results of GREN with [16], [18] and [34] in exact data setting. It can be seen that GREN still has the advantages over the existing methods, as shown in Table 3.

Overall, the experiment results demonstrate that GREN achieves state-of-the-art results for disease localization. Even when there is no annotated data for training, GREN still achieves decent localization results. Specifically, compared with the methods without image relations, the methods of modeling the relations in X-ray image have better performance. For example, the mean accuracies of [18], [34], [35], [37] and GREN outperform [30], [16]. The comparative results show that image relations are useful in weakly-supervised medical image analysis. Compared with the methods that using only inter-image or intra-image relationship [18], [34], [35], [37], GREN that integrates the intra-image and inter-image relationship is obviously superior to these methods. It can be concluded that GREN is effectively applied to the weakly supervised disease localization tasks.

| T(IoU) | Models | Atelectasis | Cardiomegaly | Effusion | Infiltration | Mass | Nodule | Pneumonia | Pneumothorax | Mean |

|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | Li et al.∗ [16] | 0.23 | 0.72 | 0.30 | 0.60 | 0.22 | 0.02 | 0.32 | 0.20 | 0.32 |

| Liu et al. [18] | 0.36 | 0.57 | 0.37 | 0.62 | 0.34 | 0.13 | 0.23 | 0.17 | 0.35 | |

| Zhao et al. [34] | 0.26 | 0.80 | 0.41 | 0.67 | 0.15 | 0.06 | 0.42 | 0.18 | 0.37 | |

| Baseline (B) | 0.27 | 0.76 | 0.39 | 0.58 | 0.24 | 0.02 | 0.39 | 0.21 | 0.36 | |

| B+Intra | 0.44 | 0.91 | 0.60 | 0.82 | 0.52 | 0.13 | 0.58 | 0.33 | 0.54 | |

| B+Inter | 0.45 | 0.74 | 0.62 | 0.85 | 0.43 | 0.19 | 0.70 | 0.33 | 0.54 | |

| GREN (B+Intra+Inter) | 0.42 | 0.83 | 0.60 | 0.86 | 0.54 | 0.10 | 0.71 | 0.36 | 0.55 | |

| 0.7 | Li et al.∗ [16] | 0.07 | 0.64 | 0.17 | 0.38 | 0.17 | 0.00 | 0.20 | 0.17 | 0.21 |

| Liu et al. [18] | 0.19 | 0.47 | 0.20 | 0.41 | 0.22 | 0.06 | 0.12 | 0.11 | 0.22 | |

| Zhao et al. [34] | 0.18 | 0.71 | 0.20 | 0.50 | 0.20 | 0.02 | 0.29 | 0.06 | 0.27 | |

| Baseline (B) | 0.14 | 0.62 | 0.20 | 0.42 | 0.07 | 0.00 | 0.23 | 0.08 | 0.22 | |

| B+Intra | 0.35 | 0.89 | 0.49 | 0.76 | 0.37 | 0.06 | 0.51 | 0.26 | 0.46 | |

| B+Inter | 0.38 | 0.71 | 0.49 | 0.72 | 0.43 | 0.13 | 0.58 | 0.24 | 0.46 | |

| GREN (B+Intra+Inter) | 0.32 | 0.78 | 0.52 | 0.74 | 0.43 | 0.06 | 0.65 | 0.26 | 0.47 |

4.4 Ablation Studies

4.4.1 Ablation of the Inter and Intra Construction

We compare the localization results of the baseline model (B) with three models under different configurations, including the model with the intra-image knowledge learning module (B+Intra), the model with the inter-image knowledge learning module (B+Inter), and the model with both knowledge learning modules (B+Intra+Inter). The experiment results in Table 1, Table 2 and Table 3 show that the model (B+Intra) and the model (B+Inter) demonstrate more advantages over the baseline method, and the model (B+Intra+Inter) achieves the best localization result. For example, in Table 1, when T(IoU) = 0.5, the mean accuracy of the model (B+Intra) and the model (B+Inter) are 0.49 and 0.50, and outperform the baseline (B) by 0.21 and 0.22, respectively. The model (B+Intra+Inter) achieves the best localization results, which is 0.54, and outperforms the baseline (B) by 0.26. Additionally, the localization results in Table 1 and Table 2 demonstrate that when there is more annotated data in the training process, the model using the inter-image knowledge learning module has more advantages; when there is no annotated data in the training process, the model using the intra-image knowledge learning module has more advantages. For example, the model (B+Inter) performs better in most cases compared with the model (B+Intra) in Table 1, and the model (B+Intra) performs better in most cases compared with the model (B+Inter) in Table 2. Overall, the experiment results demonstrate that using intra-image and inter-image structural relationship information can improve the performance of models for disease localization. Moreover, the experiment results show that the combined effect of the inter and intra construction can further boost the performance of weakly supervised disease localization.

| T (IoU) | Models | Atelectasis | Cardiomegaly | Effusion | Infiltration | Mass | Nodule | Pneumonia | Pneumothorax | Mean |

|---|---|---|---|---|---|---|---|---|---|---|

| 0.5 | Baseline (B) | 0.12 | 0.79 | 0.32 | 0.36 | 0.26 | 0.07 | 0.13 | 0.20 | 0.28 |

| B+Attention | 0.34 | 0.89 | 0.52 | 0.72 | 0.37 | 0.21 | 0.71 | 0.15 | 0.49 | |

| GREN | 0.37 | 0.86 | 0.52 | 0.84 | 0.42 | 0.29 | 0.54 | 0.45 | 0.54 | |

| 0.7 | Baseline (B) | 0.05 | 0.71 | 0.16 | 0.04 | 0.16 | 0.00 | 0.08 | 0.15 | 0.17 |

| B+Attention | 0.29 | 0.89 | 0.44 | 0.64 | 0.32 | 0.14 | 0.58 | 0.15 | 0.43 | |

| GREN | 0.34 | 0.86 | 0.48 | 0.60 | 0.37 | 0.21 | 0.46 | 0.35 | 0.46 |

4.4.2 Ablation of Similarity Computing Methods

To investigate the similarity computing method, we compare the Hash coding using Hamming distance with CNN features using cosine similarity (B+Cosine), which is shown in Figure 4(a). The B+Cosine model is competitive to the baseline model but is inferior to GREN for all the three data settings. There are two possible reasons. First, it is not easy to obtain a CNN-based model that needs to not only perform as a feature extractor but also learn class-aware similarity. Hash coding solved this problem via projecting features to a low frequency signature. Second, as a comparison, Hash coding is data-independent and training-free. Overall, the structural relationship (similarity) can be used to regularize the training of deep neural networks, so as to achieve more accurate and integral localization results with few careful annotations. Moreover, using Hash coding and Hamming distance instead of the cosine distance of CNN features can achieve better performance, which demonstrates that the image features extracted directly using Hash coding can to some extent compensate for the information lost in CNNs, making the proposed method reasonable.

4.4.3 The Number of Graph Nodes

We explore the influence of different number of graph nodes on the results, which is shown in Figure 4(b). The value is equal to the batch size of the model, including 2, 4, 8, and 16. The data of 100% unannotated images and 40% annotated images are used to evaluate the performance. The mean accuracies improve with the increase of batch size, whereas the trend is not an unbounded growth. For example, when T(IoU) = 0.7, the mean accuracy of the model with batch size of 16 is 0.47, outperforming the model with batch size of 2 by 0.03. However, when T(IoU) = 0.5, the mean accuracy of the model with batch size of 16 is 0.54, which is the same as the model with the batch size of 4. Using more images in each graph is good to effective regularization, but the growth is bounded (slow after the batch size exceeds 4). Therefore, the batch size is set to 4 in our experiment by considering the trade-off between memory cost burden and performance.

4.4.4 Ablation of Inter-image and Intra-image Similarity

Graph is a concise and effective way to characterize inter- and intra-image relations. There are several works to investigate the relationship and achieve a superior performance in multi-label natural image recognition, such as [5]. In the X-ray image analysis, the inter- and intra-image relations can be used to simulate the physician’s practice of comparing multiple images and symmetric regions of the same image for diagnosis respectively. Existing literatures [35], [34] imply that the relationship between the left and right lung lobes is useful in automatic diagnosis. However, these methods do not explore the underlying reasons why the inter- and intra- relationship modeling is effective. Here, we construct relation graphs using the left and right lung lobes as the nodes of graphs, and then incorporate the graph as a regularizer to facilitate the representation learning. Specially, we try to explore the underlying reasons of this.

We explore the influence of the inter-image and intra-image similarity as shown in Figure 5. We randomly choose 10 images from the dataset. In Figure 5(a), the horizontal and longitudinal axis are the same lung region images, and the two adjacent images have the same classes. We calculate the similarity of lung regions of these images using the Hash coding and Hamming distance. The two adjacent images have higher similarity compared with other images, where the darker color denotes higher similarity. For example, the diagonal rectangles are the similarities of the same images, so the values are 1. Additionally, we calculate the similarity of the left lung and right lung regions in an image, which is diagonal rectangle, and other rectangles are filled with the same color. In Figure 5(b), the horizontal and longitudinal axis are the corresponding left lung and right lung regions in an image. The darker-colored rectangle denotes higher similarity of the left lung and right lung in an image. Particularly, the sixth and ninth darker color rectangles (from upper left to low right) imply that the sixth and ninth images have highly similar left and right lung, and their categories are actually “No Finding” (The categories of the second, sixth, and ninth images are “No Finding”). The first, fourth, and eighth light color rectangles imply that the left and right lung regions of the first, fourth, and eighth images have low similarity, and their categories are “Cardiomegaly”, “Cardiomegaly”, and “Pneumonia”, respectively. To some degree, the inter-image similarity can indicate the categories of images without annotation data, and the intra-image similarity can indicate the information of differences of left and right lung regions, which is helpful to distinguish the unilateral disease of X-ray images.

| Models | Atelectasis | Cardiomegaly | Consolidation | Edema | Effusion | Emphysema | Fibrosis | |

|---|---|---|---|---|---|---|---|---|

| Baseline (B) | 76.77 | 87.09 | 77.73 | 87.90 | 84.65 | 90.04 | 77.87 | |

| B+Intra | 77.39 | 87.78 | 76.16 | 87.13 | 85.24 | 90.98 | 77.83 | |

| B+Inter | 76.61 | 86.17 | 77.02 | 89.00 | 84.16 | 90.22 | 79.43 | |

| GREN | 77.57 | 88.19 | 79.01 | 89.25 | 85.11 | 91.98 | 79.50 | |

| Models | Hernia | Infiltration | Mass | Nodule | Pleural Thickening | Pneumonia | Pneumothorax | Mean |

| Baseline (B) | 88.71 | 64.53 | 80.57 | 68.37 | 77.68 | 67.62 | 85.62 | 79.65 |

| B+Intra | 91.84 | 64.84 | 80.59 | 73.01 | 77.62 | 67.06 | 85.99 | 80.25 |

| B+Inter | 81.15 | 64.08 | 81.23 | 70.63 | 78.53 | 72.36 | 86.40 | 80.32 |

| GREN | 86.65 | 66.04 | 81.34 | 72.07 | 78.96 | 67.47 | 87.25 | 80.74 |

4.4.5 Ablation of Attention-based Method

We compare the localization results of our model with the attention-based method, which integrates the attention mechanism into the baseline to learn the difference of lung regions. In Table 4, the B+Attention model outperforms the baseline by 0.21 and 0.26 at T(IoU) = 0.5 and 0.7, however, GREN still holds advantages. It ourperforms the B+Attention model by 0.26 and 0.29 under the two thresholds. Overall, the attention-based method can indeed improve the accuracy, but GREN using the intra-image and inter-image structural relationship has larger advantages to enhance the weakly-supervised disease localization.

4.4.6 Disease Identification Results

Although we focus on the weakly-supervised localization task, we additionally evaluate the results of disease identification. Table 5 presents the AUC scores (the area under the receiver operating characteristic curve) for all the classes. Following [16] and [18], we use 70% images for training and 20% images for testing. We compare the performance of disease identification of the Baseline (B) with the B+Intra, B+Inter and GREN. The mean AUC scores of the B+Intra, B+Inter and GREN are 80.25%, 80.32% and 80.74%, outperforming the Baseline (79.65%) by 0.60%, 0.67% and 1.09%, respectively. GREN is more effective in disease localization than disease identification because the task of disease identification has more sufficient supervision. Overall, GREN can maintain performance of disease identification, but enjoys better ability for weakly-supervised disease localization. Particularly, GREN has better application value in the task with limited annotation data.

4.4.7 Visualizations

To better demonstrate the final effect of our method on disease localization, we visualize some of typical predictions of both the baseline model and GREN, as shown in Figure 6. The first column shows the original images, the second and the third column show the localization results of the baseline model and GREN. The green bounding box and red area mean the ground truth and prediction, respectively. It can be seen that GREN can predict more accurate regions compared with the baseline model. For example, for the class of “Nodule”, the localization results of the baseline model are completely inconsistent with the ground truth, but the localization results of GREN are consistent with the ground truth.

In order to demonstrate the difference of GREN (B+Intra+Inter) with B+Intra and B+Inter, we visualize some of typical predictions of them, as shown in Figure 7. From left to right, it shows the localization results of the Baseline (B), B+Intra, B+Inter and GREN. GREN plays an important role in integrating the advantages of B+Inter and B+Intra to make the predictions better. For a sample (Figure 7), some of B+Inter results are better than B+Intra results, such as (a), (b), (d), some of B+Intra results are better than B+Inter results, such as (e), (f). It can be seen that GREN can modify and complement the predicted results of B+Inter and B+Intra to make better predictions. The underlying reason may be that the functions of Inter-image and Intra-image knowledge learning module are not only complementary but also share common mechanisms. For example, the lungs are highly structured. For an X-ray image, there are not only similarities but also differences between left and right lungs. For different X-ray images, there are not only differences but also similarities in ipsilateral lung. Therefore, the functions of Inter-image and Intra-image knowledge learning module cannot be completely separated, and some overlap and crossover inevitably exist between them.

We also visualize some of typical predictions of both the CAM-based method [30] and GREN, as shown in Figure 8. The first column shows the original images, the second and the third column show the localization results of [30] and GREN. It can be seen that GREN has greater advantages over [30]. Moreover, compared with [30], GREN uses the regularization of the intra-image and inter-image structural information to pay more attention to the lung regions than other regions (e.g., clavicle and arm regions). It further demonstrates that GREN can effectively improve the performance of weakly supervised disease localization.

5 Conclusion

We have proposed and evaluated GREN, which leverages the intra-image and inter-image information to regularize CNNs to preserve the structural similarities between lung regions and image pairs in the embedding space. The experiment results on the NIH Chest-14 dataset demonstrate that GREN achieves the state-of-the-art performance in various settings. We demonstrate that the relationship of intra-image and inter-image can be used to model the graphs to facilitate training in weakly supervised disease localization. The proposed method has a very practical use in the weakly-supervised localization task. Since the public dataset available for X-ray lung segmentation only allowed us to segment the left and right lungs, we build graphs based on two lungs. However, many areas of the lung have explicit anatomical meanings, such as right upper lobe, right middle lobe, right lower lobe, left upper lobe, and left lower lobe. In theory, the more comprehensive lung regions are considered, the more relationship information GREN can model. For future work, we will exploit the relationship information of five lung lobes from CT images to construct graphs to compensate for the lack of labels in weakly supervised disease localization. We will also investigate algorithms applicable to the localization of small targets (especially pulmonary nodules).

References

- [1] “https://pypi.org/project/lungs-segmentation/.”

- [2] A. I. Aviles-Rivero, N. Papadakis, R. Li, P. Sellars, Q. Fan, R. T. Tan, and C.-B. Schönlieb, “Graphx chest x-ray classification under extreme minimal supervision,” 2020.

- [3] B. Babenko, “Multiple instance learning: algorithms and applications,” View Article PubMed/NCBI Google Scholar, pp. 1–19, 2008.

- [4] J. Cai, L. Lu, A. P. Harrison, X. Shi, P. Chen, and L. Yang, “Iterative attention mining for weakly supervised thoracic disease pattern localization in chest x-rays,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2018, pp. 589–598.

- [5] B. Chen, Z. Zhang, Y. Lu, F. Chen, G. Lu, and D. Zhang, “Semantic-interactive graph convolutional network for multilabel image recognition,” IEEE Transactions on Systems, Man, and Cybernetics: Systems, pp. 1–13, 2021.

- [6] C. Chen, Q. Dou, H. Chen, and P.-A. Heng, “Semantic-aware generative adversarial nets for unsupervised domain adaptation in chest x-ray segmentation,” in International workshop on machine learning in medical imaging. Springer, 2018, pp. 143–151.

- [7] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “Imagenet: A large-scale hierarchical image database,” in 2009 IEEE conference on computer vision and pattern recognition. Ieee, 2009, pp. 248–255.

- [8] G. Gaál, B. Maga, and A. Lukács, “Attention u-net based adversarial architectures for chest x-ray lung segmentation,” arXiv preprint arXiv:2003.10304, 2020.

- [9] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [10] R. Hermoza, G. Maicas, J. C. Nascimento, and G. Carneiro, “Region proposals for saliency map refinement for weakly-supervised disease localisation and classification,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2020, pp. 539–549.

- [11] J. Irvin, P. Rajpurkar, M. Ko, Y. Yu, S. Ciurea-Ilcus, C. Chute, H. Marklund, B. Haghgoo, R. Ball, K. Shpanskaya et al., “Chexpert: A large chest radiograph dataset with uncertainty labels and expert comparison,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, no. 01, 2019, pp. 590–597.

- [12] B. Jing, P. Xie, and E. Xing, “On the automatic generation of medical imaging reports,” arXiv preprint arXiv:1711.08195, 2017.

- [13] D.-C. Juan, C.-T. Lu, Z. Li, F. Peng, A. Timofeev, Y.-T. Chen, Y. Gao, T. Duerig, A. Tomkins, and S. Ravi, “Graph-rise: Graph-regularized image semantic embedding,” 2019.

- [14] C. Y. Li, X. Liang, Z. Hu, and E. P. Xing, “Hybrid retrieval-generation reinforced agent for medical image report generation,” arXiv preprint arXiv:1805.08298, 2018.

- [15] Q. Li, Z. Sun, R. He, and T. Tan, “Deep supervised discrete hashing,” in Proceedings of the 31st International Conference on Neural Information Processing Systems, ser. NIPS’17. Red Hook, NY, USA: Curran Associates Inc., 2017, p. 2479–2488.

- [16] Z. Li, C. Wang, M. Han, Y. Xue, W. Wei, L.-J. Li, and L. Fei-Fei, “Thoracic disease identification and localization with limited supervision,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018, pp. 8290–8299.

- [17] J. Lian, J. Liu, S. Zhang, K. Gao, X. Liu, D. Zhang, and Y. Yu, “A structure-aware relation network for thoracic diseases detection and segmentation,” arXiv preprint arXiv:2104.10326, 2021.

- [18] J. Liu, G. Zhao, Y. Fei, M. Zhang, Y. Wang, and Y. Yu, “Align, attend and locate: Chest x-ray diagnosis via contrast induced attention network with limited supervision,” in 2019 IEEE/CVF International Conference on Computer Vision (ICCV), 2019, pp. 10 631–10 640.

- [19] G. Nousias, E.-K. Panagiotopoulou, K. Delibasis, A.-M. Chaliasou, A.-M. Tzounakou, and G. Labiris, “Video-based eye blink identification and classification,” IEEE Journal of Biomedical and Health Informatics, vol. 26, no. 7, pp. 3284–3293, 2022.

- [20] A. Paszke, S. Gross, S. Chintala, G. Chanan, E. Yang, Z. DeVito, Z. Lin, A. Desmaison, L. Antiga, and A. Lerer, “Automatic differentiation in pytorch,” 2017.

- [21] P. Samanta and S. Jain, “Analysis of perceptual hashing algorithms in image manipulation detection,” Procedia Computer Science, vol. 185, pp. 203–212, 2021.

- [22] S. Sedai, D. Mahapatra, Z. Ge, R. Chakravorty, and R. Garnavi, “Deep multiscale convolutional feature learning for weakly supervised localization of chest pathologies in x-ray images,” in International Workshop on Machine Learning in Medical Imaging. Springer, 2018, pp. 267–275.

- [23] R. Selvaraju, A. Das, R. Vedantam, M. Cogswell, D. Parikh, and D. Batra, “Grad-cam: Why did you say that? visual explanations from deep networks via gradient-based localization,” arXiv:1611.01646, 2016.

- [24] L. K. Tam, X. Wang, E. Turkbey, K. Lu, Y. Wen, and D. Xu, “Weakly supervised one-stage vision and language disease detection using large scale pneumonia and pneumothorax studies,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2020, pp. 45–55.

- [25] Y. Tang, “Large scale semi-supervised object detection using visual and semantic knowledge transfer,” In Computer Vision and Pattern Recognition (CVPR), 2016.

- [26] Y. Tang, X. Wang, A. P. Harrison, L. Lu, J. Xiao, and R. M. Summers, “Attention-guided curriculum learning for weakly supervised classification and localization of thoracic diseases on chest radiographs,” in International Workshop on Machine Learning in Medical Imaging. Springer, 2018, pp. 249–258.

- [27] R. Venkatesan, S. M. Koon, M. H. Jakubowski, and P. Moulin, “Robust image hashing,” in International Conference on Image Processing, 2000.

- [28] O. Viniavskyi, M. Dobko, and O. Dobosevych, “Weakly-supervised segmentation for disease localization in chest x-ray images,” in International Conference on Artificial Intelligence in Medicine. Springer, 2020, pp. 249–259.

- [29] D. Wang, S. Chen, X. Sun, Q. Chen, Y. Cao, B. Liu, and X. Liu, “Afp-mask: Anchor-free polyp instance segmentation in colonoscopy,” IEEE Journal of Biomedical and Health Informatics, vol. 26, no. 7, pp. 2995–3006, 2022.

- [30] X. Wang, Y. Peng, L. Lu, Z. Lu, M. Bagheri, and R. M. Summers, “Chestx-ray8: Hospital-scale chest x-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017, pp. 2097–2106.

- [31] X. Wang, Y. Peng, L. Lu, Z. Lu, and R. M. Summers, “Tienet: Text-image embedding network for common thorax disease classification and reporting in chest x-rays,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 9049–9058.

- [32] Wikipedia contributors, “Cosine similarity — Wikipedia, the free encyclopedia,” https://en.wikipedia.org/w/index.php?title=Cosine_similarity&oldid=1050346098, 2021, [Online; accessed 19-October-2021].

- [33] C. Yan, J. Yao, R. Li, Z. Xus, and J. Huang, “Weakly supervised deep learning for thoracic disease classification and localization on chest x-rays,” in Proceedings of the 2018 ACM International Conference on Bioinformatics, Computational Biology, and Health Informatics. ACM, 2018, pp. 103–110.

- [34] G. Zhao, “Cross chest graph for disease diagnosis with structural relational reasoning,” in MM ’21: ACM Multimedia Conference, Virtual Event, China, October 20 - 24, 2021. ACM, 2021, pp. 612–620. [Online]. Available: https://doi.org/10.1145/3474085.3475221

- [35] G. Zhao, C. Fang, G. Li, Z. Zhou, J. Li, L. Jiao, and Y. Yu, “Contralaterally enhanced networks for thoracic disease detection,” IEEE Transactions on Medical Imaging, 2021.

- [36] G. Zhao, Q. Feng, C. Chen, Z. Zhou, and Y. Yu, “Diagnose like a radiologist: Hybrid neuro-probabilistic reasoning for attribute-based medical image diagnosis,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021.

- [37] Y. Zhou, T. Zhou, T. Zhou, H. Fu, J. Liu, and L. Shao, “Contrast-attentive thoracic disease recognition with dual-weighting graph reasoning,” IEEE Transactions on Medical Imaging, vol. 40, no. 4, pp. 1196–1206, 2021.

- [38] Z.-H. Zhou, “A brief introduction to weakly supervised learning,” National Science Review, vol. 5, no. 1, pp. 44–53, 08 2017. [Online]. Available: https://doi.org/10.1093/nsr/nwx106

- [39] W. Zhu, Y. S. Vang, Y. Huang, and X. Xie, “Deepem: Deep 3d convnets with em for weakly supervised pulmonary nodule detection,” in International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, 2018, pp. 812–820.