Graph-Based Spatial-Temporal Convolutional Network for Vehicle Trajectory Prediction in Autonomous Driving

Abstract

Forecasting the trajectories of neighbor vehicles is a crucial step for decision making and motion planning of autonomous vehicles. This paper proposes a graph-based spatial-temporal convolutional network (GSTCN) to predict future trajectory distributions of all neighbor vehicles using past trajectories. This network tackles the spatial interactions using a graph convolutional network (GCN), and captures the temporal features with a convolutional neural network (CNN). The spatial-temporal features are encoded and decoded by a gated recurrent unit (GRU) network to generate future trajectory distributions. Besides, we propose a weighted adjacency matrix to describe the intensities of mutual influence between vehicles, and the ablation study demonstrates the effectiveness of our proposed scheme. Our network is evaluated on two real-world freeway trajectory datasets: I-80 and US-101 in the Next Generation Simulation (NGSIM). Comparisons in three aspects, including prediction errors, model sizes, and inference speeds, show that our network can achieve state-of-the-art performance.

Index Terms:

Vehicle trajectory prediction, graph convolutional network, spatial-temporal dependency, autonomous driving.I Introduction

Human needs to continually observe nearby vehicles’ behaviors during driving so as to plan future motions for safely and efficiently passing through complex traffics. Similarly, autonomous vehicles should collect the movement information of nearby objects and then decide which maneuver can minimize risks and maximize efficiencies. Detection of such information has become possible with the development of onboard sensors and vehicle-to-infrastructure (V2I) communication [1, 2]. Recent works focus on how to utilize this information to plan motions for autonomous vehicles [3, 4]. One of the main aspects of motion planning is to predict other traffic participants’ future trajectories [5, 6], with which autonomous vehicles can infer future situations they might encounter [7, 8].

In the past decades, inspired by vehicle evolution models [9] and statistics-based-models [10, 11], research on vehicle trajectory prediction is undergoing developments, among which kinematic models [12, 13, 14] and Kalman filter [15] have been widely studied. These traditional models possess high computational efficiencies, such that they are especially suitable for real-time applications with limited hardware resources. However, if the spatial-temporal correlations of vehicles are ignored, the long-term prediction (e.g., longer than one second) calculated by these traditional methods would be unreliable [16]. In order to extend these traditional methods to consider the spatial-temporal interactions among vehicles, combination methods were proposed which take the advantages of traditional methods and advanced models (e.g., machine-learning-based models). For example, Ju et al. [17] proposed a model which combines Kalman filter, kinematic models and neural network to capture the interactive effects among vehicles. Since machine-learning-based models can model the interactions and learn nonlinear trajectory evolution from real-world data, these combination methods have shown better performance than the above approaches.

So far, most of machine-learning-based methods have assumed that the task of vehicle trajectory prediction can be decomposed into two steps: firstly predict the maneuver of a target vehicle, and then generate a maneuver-based trajectory. For example, Deo et al. [18] used hidden Markov models to estimate maneuver intentions and then applied variational Gaussian mixture models for the trajectory predictions. Tran et al. [19] used a Gaussian process regression model to recognize the maneuver being performed by a target vehicle and applied Monte-Carlo method to predict future trajectories. Other maneuver estimation models include support vector machines [20, 21], random forest [22], multi-layer perceptrons [23] and Bayesian networks [24, 25]. However, most of these approaches are highly dependent on handcrafted features, which are designed to model interactions and physical constraints among vehicles under certain scenarios. Therefore, their performance would degrade in unexpected traffic scenarios.

As a branch of machine learning, deep learning can extract features automatically by learning from abundant data, which can overcome the shortages induced by handcrafted features. Especially, after the success of long-short term memory (LSTM) networks in capturing the complex temporal dependencies [26, 27], many works have applied LSTM to vehicle trajectory prediction. For example, Zyner et al. [28] proposed an LSTM-based model to predict a potential direction that the driver would take at an intersection. Xin et al. [29] proposed a dual LSTM-based model to estimate driver intentions and predict future trajectories. These approaches take the past trajectory of a target vehicle as input and can achieve high accuracy of trajectory prediction. However, they ignore the impact of nearby vehicles on the target vehicle.

Later on, inspired by the interactive nature of drivers, researchers began to add the effects of nearby vehicles to deep-learning-based models. For example, Deo et al. [30] proposed a maneuver LSTM (M-LSTM), which takes the past trajectories of a target vehicle and its nearby vehicles as inputs. However, this method only aggregates all trajectories together but ignores the different effects of nearby vehicles on the target one. To improve the M-LSTM, Deo et al. [31] proposed a network named CS-LSTM where a social tensor and a convolutional social pooling mechanism are introduced to model and capture the spatial interactions, respectively. Similarly, Zhao et al. [32] proposed a multi-agent tensor fusion (MATF) network, which introduces a spatial tensor to represent spatial relations among vehicles. However, since both the social tensor and the spatial tensor in CS-LSTM and MATF only retain the spatial relationships at the last timestamp of past trajectories, the spatial-temporal dependencies are ignored by them. To address this issue, Dai et al. [33] proposed a spatio-temporal LSTM to consider the dynamic effects of six closest vehicles on the target vehicle. Similarly, Hou et al. [34] proposed a structural-LSTM network to consider the dynamic effects of five nearby vehicles on the target vehicle.

Although the above deep-learning-based approaches have made great progress in improving the accuracy of vehicle trajectory prediction, there are still two limitations. Firstly, the spatial relations of vehicles are essentially non-Euclidean, such that it is difficult to explain the physical meaning of features when using LSTM to model the spatial-temporal interactions. Therefore, these LSTM-based approaches would be neither efficient nor intuitive in modeling the spatial-temporal interactions. Secondly, these models taking the LSTM as the backbone network require an intensive computation power, and most of them only predict one target vehicle’s future trajectory each time. Thus, the computation time would increase exponentially when predicting future trajectories of all neighbor vehicles, which would be not suitable for the real-time decision-making of autonomous vehicles.

Therefore, to overcome the two aforementioned limitations, this paper proposes an efficient and fast network called graph-based spatial-temporal convolutional network (GSTCN), which can simultaneously predict future trajectory distributions of all neighbor vehicles. Inspired by the fact that GCN can capture the spatial dependencies in a traffic network with a faster computation speed and a higher efficiency than the LSTM-based methods [35, 36, 37, 38, 39, 40], we design a spatial graph convolutional module to learn the spatial dependencies among vehicles. To distinguish the respective effects of neighbor vehicles on a vehicle, we propose a weighted adjacency matrix which is embedded into the spatial graph convolutional module. Moreover, to capture the correlations of the features between the prediction and past time horizons, we design a CNN-based temporal dependency extractor (TDE) operated in the temporal dimensions. In this way, features in the past time horizon are mapped into the prediction time horizon for analysis of vehicle trajectory evolutions. In addition, the spatial-temporal features are fed into a GRU-based encoder-decoder to generate future trajectory distributions. The main contributions of our work are as follows.

(1) The backbones of our GSTCN are GCN and CNN, so it has a smaller model size and a faster inference speed than those of LSTM-based models, making the real-time prediction of all nearby vehicles’ trajectories possible.

(2) A weighted adjacency matrix is proposed to describe the intensity of mutual influence between two vehicles, and the ablation study demonstrates the network with it has better performance than that with an unweighted adjacency matrix.

(3) The GSTCN generates the probability distributions over the future trajectories, which can describe the stochastic behaviors of the human drivers compared to models predicting deterministic trajectories, especially in a long prediction horizon.

The rest of this paper is organized as follows. The problem description is given in Section II. We present a detailed description of the proposed network in Section III. The experimental results and analysis are given in Section IV. Finally, conclusions and possible future works are drawn in Section V.

II Problem Description of Vehicle Trajectory Prediction

Vehicle trajectory prediction is an important assistant function of autonomous vehicles, which can help autonomous vehicles to assess the possibilities of risks and to plan appropriate trajectories in advance. Similar to the work in [31], this paper formulates the vehicle trajectory prediction as estimating the future trajectory distributions given past trajectories. The difference is that we predict future positions for all neighbor vehicles simultaneously, which can provide autonomous vehicles with more detailed information about future situations.

To formulate this problem, we first introduce some notations. Vehicles’ positions over a past time horizon are denoted as:

| (1) |

where,

| (2) |

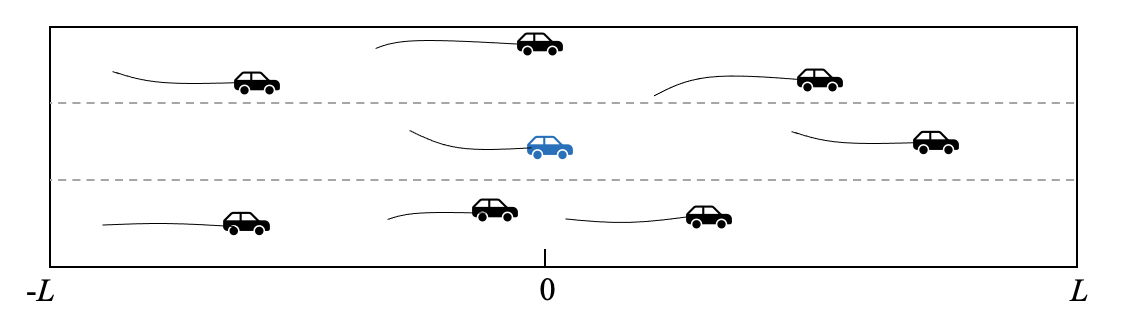

are the coordinates at the time , and is the number of vehicles. As shown in Fig. 1, we assume that the autonomous vehicles can observe the motions of vehicles within meters longitudinally and two adjacent lanes laterally, and can collect their past trajectories with a certain frequency. The trajectory distributions in the future time horizon is denoted as:

| (3) |

with,

| (4) |

We follow the assumption in [40] that the predicted coordinates are random variables satisfying bi-variable Gaussian distribution, i. e.,

| (5) |

where is the mean, is the standard deviation, and is the correlation.

Thus, the trajectory prediction problem can be summarized as follows. Given all neighbor vehicles’ positions over a past time horizon , the aim is to predict their trajectory distributions in the future time horizon .

III Graph-based Spatial-Temporal Convolutional Network

To solve the trajectory prediction problem, a key challenge is to figure out how vehicles affect each other, i.e., spatial-temporal dependencies among vehicles. Moreover, since predicted trajectories are time series, another challenge lies in how to tackle the sequence generation task. To address the two challenges, we propose a graph-based spatial-temporal convolutional network (GSTCN) for vehicle trajectory prediction. The overall architecture of our proposed network is shown in Fig. 2, in which the spatial graph convolutional module and the TDE are used to capture the spatial-temporal dependencies, and the trajectory prediction module is applied to predict future trajectories. We introduce each component in the following subsections.

III-A Spatial graph convolutional module

III-A1 Generation of spatial-temporal graph using trajectories

Inspired by the topological structure of the graph, we model the interactions among vehicles as a spatial-temporal graph. The spatial-temporal graph is defined as , where is the spatial graph representing the spatial relations of vehicles at the time . Supposing that there are vehicles in a scene, we define the spatial graph as , where is the set of all vertices. Each vertex represents an individual vehicle in the scene, and the attribute of is the coordinate . is the set of all edges, and each edge represents the mutual effects between vehicles.

Generally, a vehicle has different effects on other vehicles. For example, the sudden deceleration of a vehicle would cause close vehicles to slow down or change lanes, but have little influence on vehicles far away from it. Therefore, to distinguish the intensity of interactions between two vehicles, we consider that each edge in should be assigned to different weights. In this work, we introduce as a weighted adjacency matrix whose entries represent how strong the interactions between two vehicles could be. Considering that two vehicles with closer distances have stronger effects on each other, we use the reciprocal of the distance to measure the weight between two vehicles so that closer vehicles have higher weights. Hence, can be written as,

| (6) |

where in denotes the distance between the vehicles and at the time . A toy example about the generation of a spatial graph is given in Fig. 3, in which each vertex in the graph is corresponding to a vehicle and the reciprocal of distances represent the weights in the weighted adjacency matrix . At each time in the past time horizon, we can construct a spatial graph . By stacking , we get the spatial-temporal graph , which is the input of our network. In the spatial-temporal graph, we define as the stacked tensor of all in the past time horizon, and is set to be 2 to represent the two-dimensional coordinate . Similarly, the adjacency matrix of is denoted as , which is the stack of .

III-A2 Spatial graph convolution

The spatial-temporal graph contains raw information about dependencies among vehicles, so we should use well-designed networks to extract these dependencies from the graph. In the spatial dimension, a vehicle’s future trajectory is highly dependent on the motions of its nearby vehicles. To capture the spatial dependencies, existing works [31, 32] use the number of zeros in the grid to represent distances between vehicles, which is inefficient. Since GCN directly operates on the vertices of a graph and has shown its effectiveness to capture the spatial dependencies between one vertex and its neighbors [41], we introduce the spatial graph convolution in this work.

Extended from standard two dimensional convolution [42], the graph convolution operation is expressed as:

| (7) |

where denotes the feature matrix of vertices in layer . is an activation function, , is the identity matrix, is the diagonal node degree matrix of , is the parameters matrix of the layer . The aim of computing is to normalize the adjacency matrix, which can speed up the learning process of GCN [42].

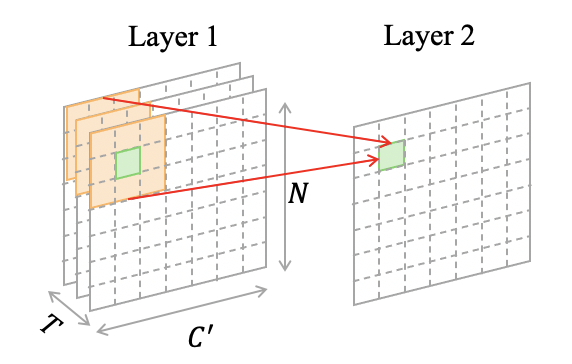

As shown in Fig. 4, the graph convolution operations apply a weighted sum to the features of a target vehicle and its surrounding vehicles, and then pass the results to the next layer. The feature of the target vehicle is considered because the state of the target vehicle also impacts its future motions. Note that the shapes of features are the same before and after the graph convolution.

III-B Temporal dependency extractor

In the temporal dimension, the future motions of a vehicle are highly dependent on its own past trajectory. For instance, a vehicle that is conducting the maneuver of lane change is most likely to continue this maneuver in the next few seconds. In addition, the effects of the surrounding vehicles on the target vehicle are time-varying. To capture the temporal dependencies, most of the existing works [31, 43] rely on LSTM. However, LSTM would lead to low training efficiency and slow computation speed [44]. Inspired by the work in [40], we design a CNN-based temporal dependency extractor (TDE) to extract the temporal features. Since this module depends on convolution operations, it has a smaller parameter size and a faster inference speed than those of LSTM.

The procedure of this module is as follows. After the neighboring information of each vertex has been captured by the spatial graph convolution operations, we get a three-dimensional tensor which contains the extracted spatial features. Then, we generate from with the transposition of dimensions. Each channel of contains the spatial features of all vehicles at the corresponding timestamp. The TDE takes as inputs to learn the evolving tendency of each vehicle from its own past motions and nearby vehicles’ dynamic interactions. The TDE takes the length of the past time horizon as the number of input channels. As shown in Fig. 5(a), a filter consists of multiple kernels, and each kernel is designed to learn the interactions among vehicles at one past timestamp by operating convolutions in one past temporal feature map. We set the number of kernels in a filter to be equal to the length of the past time horizon, so a filter can integrate past temporal information together into one feature map by performing element-wise addition on its kernels’ output feature maps. Besides, we set the number of filters in TDE to be equal to the length of the prediction time horizon. In this way, the TDE can extract temporal dependencies by learning the mapping relation between the input features and future temporal features. The output of the TDE is a tensor with shape , as shown in Fig. 5(b).

III-C Trajectory prediction module

After capturing the spatial-temporal features, we should generate future trajectory distributions, which is a typical sequence generating task. Inspired by the excellent performance of gated recurrent unit (GRU) network on sequence-based tasks and its cheap computation cost [45], we design the trajectory prediction module as a GRU-based encoder-decoder network. In our model, the spatial graph convolutional module learns the spatial dependencies among vehicles from the inputs, and then the temporal dependency extractor further extracts the temporal dependencies. Although the operations of these two modules are consecutive in the implementation steps, the spatial dependencies and temporal dependencies are not extracted at the same time, which would weaken the correlation between the spatial and temporal dependencies contained in the extracted features. Therefore, in this module, the encoder GRU is designed to strengthen the correlation between spatial dependencies and temporal dependencies, and the decoder GRU generates the probability distributions of future trajectories. The predicted coordinate is given by . Note that the encoder GRU or the decoder GRU used for each vehicle has shared weights, which guarantees the generalization of the model even if the number of neighbor vehicles varies.

IV Experimental Evaluation

IV-A Datasets

The model is trained on two public vehicle trajectory datasets: I-80 and US-101 in NGSIM [46], in which trajectories are recorded with a frequency of 10Hz under real freeway scenarios. Both datasets contain vehicle trajectories in mild, moderate, and heavy traffic scenarios for 45 minutes. The rich scenarios in the datasets are suitable for the evaluation of the robustness and effectiveness of the proposed network. The freeways of I-80 and US-101 to be studied are shown in Fig. 6, where the white arrows indicate driving directions.

To make a fair comparison, we follow the same training strategy in [31]: the raw data are downsampled to 5Hz, and the trajectories are split into segments of 8 seconds, in which the first 3 seconds of each segment are taken as the past time horizon and the remaining 5 seconds are treated as the prediction time horizon. We finally get 13,218 segments of trajectories, and all of them are randomly split into training, validation, and testing sets.

IV-B Evaluation metrics

In order to achieve quantitative metrics, the root mean square error (RMSE) of the predicted trajectory and the ground truth is used to evaluate performance. The RMSE is calculated as,

| (8) |

where and are the predicted coordinate of vehicle at time . We report the RMSE values for different prediction time horizons (from 1 to 5 seconds). We note when evaluating predicted probability distributions, previous works [31, 32] only calculate the RMSE of the mean values and the ground truth but ignores the predicted standard deviations and correlations. Thus, in order to make a more comprehensive evaluation, we report the lowest error based on 5 random samplings, which is similar to [40].

IV-C Implementation details

We implement the proposed model using PyTorch as that in [47]. Several implementation details are given as follows.

IV-C1 Data preprocessing

In a real scenario with imperfect sensors which, for example, result in missing or abnormal data, it is necessary to preprocess the raw data, where it is required to remove the abnormal data and infer the missing data. We have introduced a data preprocessing module to complete these tasks. With this module, the abnormal data can be detected and removed by using one of existing anomaly detection methods, and missing data can be inferred by cubic Hermite interpolation.

IV-C2 Scene size

We set the autonomous vehicle’s horizon of sight as follows. The autonomous vehicles can observe the motions of vehicles within the range of 100 meters longitudinally and two adjacent lanes laterally.

IV-C3 Input embedding

We use a 32-channel convolutional layer with kernel size to increase the dimensions of spatial coordinates, which can improve the learning ability of the network [27].

IV-C4 Temporal dependency extractor

Residual connections are used in this module. The kernel size is , and the padding is set to be 1 to guarantee that the shape of features is not changed after the convolutions.

IV-C5 Trajectory prediction module

Both the encoder and decoder are one-layer GRUs. A linear layer is used to make sure the outputs of the decoder have the expected shape, and also dropout (with 0.5 probability) is applied to prevent overfittings.

IV-C6 Training loss

Since the outputs of our network are the probability distributions, we train the network by minimizing the negative log-likelihood loss:

| (9) |

where denotes the likelihood of the ground truth position over the predicted probability distribution.

IV-C7 Training process

The GSTCN is trained on the NVIDIA GTX1080Ti GPU. The batch size is set to be 128, and we train the model for 250 epochs using Stochastic Gradient Descent (SGD) optimizer with an initial learning rate of 0.1. The learning rate is multiplied by 0.1 every 80 epochs to speed up the convergence of the loss.

IV-C8 Model configuration

We choose appropriate hyperparameters to improve the performance of our network. We first test how the numbers of layers in the spatial graph convolutional module and the TDE affect the performance. As shown in Fig. 7, the best model has one layer in the spatial graph convolutional module and five layers in the TDE. Then, we fix the layer number and vary the number of hidden units in GRU as the number from . As shown in Table I, the model has the lowest RMSE values when the number of hidden units is set to be 32.

| Prediction | 16 | 32 | 64 | 100 | 128 |

|---|---|---|---|---|---|

| Horizon(s) | |||||

| 1 | 0.48 | 0.44 | 0.43 | 0.42 | 0.42 |

| 2 | 0.87 | 0.83 | 0.84 | 0.83 | 0.84 |

| 3 | 1.36 | 1.33 | 1.36 | 1.37 | 1.36 |

| 4 | 2.05 | 2.01 | 2.05 | 2.05 | 2.03 |

| 5 | 3.06 | 2.98 | 3.03 | 3.03 | 2.99 |

| Average | 1.56 | 1.52 | 1.54 | 1.54 | 1.53 |

IV-D Ablation study

In this subsection, we conduct several ablative studies to verify the effectiveness of our scheme.

IV-D1 Effectiveness of different modules

Our full model mainly consists of three modules. To verify the effectiveness of each module on vehicle trajectory prediction, we train three variants of our model with different modules: GSTCN without GCN, GSTCN without TDE and GSTCN without GRU. As shown in Table II, the removal of any module in GSTCN will cause an increase in RMSE, which indicates the effectiveness of each module. When removing GCN or TDE from our full model, the features sent to the GRU lack the extracted spatial dependencies or temporal dependencies. As a result, the GRU fails to integrate the correlation between the spatial dependencies and temporal dependencies and cannot reasonably generate future trajectories. If the GRU is removed, the weak correlation between the spatial and temporal dependencies cannot be strengthened. Only after both the spatial dependencies and temporal dependencies are sent to the trajectory prediction module, can our model achieve the best performance, which indicates that the GCN and TDE are complementary in our full model, and the GRU encoder-decoder can strengthen the correlation between spatial dependencies and temporal dependencies from the extracted features and generate more accurate future trajectories.

| Prediction | GSTCN | GSTCN | GSTCN | GSTCN |

|---|---|---|---|---|

| Horizon(s) | W/O GCN | W/O TDE | W/O GRU | |

| 1 | 0.66 | 0.62 | 0.82 | 0.44 |

| 2 | 1.64 | 1.53 | 1.58 | 0.83 |

| 3 | 2.73 | 2.61 | 2.50 | 1.33 |

| 4 | 3.98 | 3.84 | 3.73 | 2.01 |

| 5 | 5.30 | 5.22 | 5.29 | 2.98 |

| Average | 2.86 | 2.76 | 2.78 | 1.52 |

IV-D2 Influence of different locations

The proposed network can simultaneously predict all neighbor vehicles’ future trajectories based on their past trajectories. Therefore, it is necessary to analyze the prediction errors of vehicles at different locations. As shown in Fig. 8, we report the RMSE values for vehicles located in the middle, front, and rear of the scene.

We note that vehicles located in the middle of the scene have the lowest RMSE values for all prediction horizons, which is consistent with the intuition that vehicles located in the middle have more neighboring information as inputs than vehicles at the edge. An interesting phenomenon is that the prediction errors of vehicles located in the rear of the scene are always lower than those of the vehicles in the front of the scene. This is because drivers are more likely to be affected by the vehicles in front of them according to the driving experience. However, when predicting the trajectories of the vehicles in the front of the scene, we can only utilize the motion information of the vehicles behind them. Thus, the predictions of the GSTCN are consistent with intuition.

IV-D3 GSTCN with different weighted adjacency matrices

The weights in the adjacency matrix measure the intensities of interactions among vehicles, so we should appropriately mark the weights. In Section III-A, we have introduced the reciprocal of the distance between two vehicles to mark the weights based on prior knowledge. This means that the closer the distance between vehicles the stronger the mutual effects are. Therefore, to validate this prior knowledge has a positive influence on the performance of our model, we introduce two other ways to measure the weights: (1) we directly use the distances between two vehicles to mark the weights; (2) similar to existing works [39, 42], we set all elements in the adjacency matrix to ones as a baseline.

| Prediction | Distance | Ones | Reciprocal of |

| Horizon(s) | distance | ||

| 1 | 0.48 | 1.10 | 0.44 |

| 2 | 0.88 | 2.04 | 0.83 |

| 3 | 1.41 | 3.09 | 1.33 |

| 4 | 2.10 | 4.29 | 2.01 |

| 5 | 3.08 | 5.70 | 2.98 |

| Average | 1.59 | 3.24 | 1.52 |

The RMSE values of different weighted adjacency matrices are listed in Table III. The model with the weighted adjacency matrices defined by the reciprocal of the distance outperforms others. Therefore, the reasonable usage of the prior knowledge can help improve the performance of the model. Note that all weighted adjacency matrices outperform the baseline, which demonstrates the effectiveness of weighted adjacency matrices. An interesting result is that although it is contrary to the intuition when marking the weights directly using the distances, the prediction errors are still lower than that of the baseline, which is partly due to the reverse learning ability of the deep-learning-based models.

| Prediction | CV | V-LSTM | C-VGMM+VIM | CS-LSTM-M | CS-LSTM | GRIP | GSTCN-ONE |

| Horizon(s) | |||||||

| 1 | 0.73 | 0.66 | 0.66 | 1.25 | 1.03 | 0.37 | 0.42 |

| 2 | 1.78 | 1.62 | 1.56 | 1.24 | 1.13 | 0.86 | 0.81 |

| 3 | 3.13 | 2.94 | 2.75 | 1.71 | 1.61 | 1.45 | 1.29 |

| 4 | 4.78 | 4.63 | 4.24 | 2.43 | 2.31 | 2.21 | 1.97 |

| 5 | 6.68 | 6.63 | 5.99 | 3.38 | 3.21 | 3.16 | 2.95 |

| Average | 3.42 | 3.30 | 3.04 | 2.00 | 1.86 | 1.61 | 1.49 |

IV-E Baselines

We evaluate the performance of our proposed method by comparing it with the following baselines:

-

•

Constant Velocity (denoted as CV) [31]: This baseline uses a constant velocity Kalman filter to predict the deterministic trajectory of one vehicle. The effects of neighbor vehicles on the target vehicle are ignored.

-

•

Vanilla LSTM (denoted as V-LSTM) [32]: This baseline is a simple LSTM encoder-decoder that takes the past trajectory of the target vehicle as inputs and generates a deterministic future trajectory of the target vehicle. The effects of surrounding vehicles on the target vehicle are ignored.

-

•

Class variational Gaussian mixture models with vehicle interaction module (denoted as C-VGMM+VIM) [18]: This model uses hidden Markov models to estimate maneuver intentions and then applies variational Gaussian mixture models for the future trajectory prediction.

-

•

CS-LSTM with maneuvers (denoted as CS-LSTM-M) [31]: This LSTM-based model applies convolutional social pooling layers to tackling the spatial interactions and predicts the multi-modal trajectory distributions of the target vehicle based on maneuvers.

-

•

CS-LSTM [31]: This baseline is the same as CS-LSTM-M except that it generates unimodal prediction distribution.

-

•

MATF [32]: This baseline takes past trajectories of all vehicles and the scene image of the predicted area as inputs and uses LSTM to predict deterministic trajectories of all vehicles in a scene.

-

•

Graph-based interaction-aware trajectory prediction model (denoted as GRIP-ALL) [39]: This baseline uses the graph to model the interactions among vehicles and uses LSTM to predict deterministic trajectories of all vehicles in a scene.

-

•

GRIP [39]: This baseline is the same as GRIP-ALL except that it only predicts the trajectory of one vehicle in the central location of the scene.

IV-F Quantitative analysis for prediction results

In this subsection, we compare the prediction errors, model sizes, and inference speeds of our method with those of the above baselines. Since some methods can predict trajectories of all neighbor vehicles simultaneously, while others only predict the trajectory of one vehicle each time, we compare the results respectively for the sake of fairness.

IV-F1 Performance when predicting one vehicle

In order to compare with the methods that only predict one vehicle’s future trajectory each time, we report the RMSE values of the vehicle located in the middle of the scene, as shown in the column of “GSTCN-ONE” in Table IV. It shows that the GSTCN-ONE achieves the lowest prediction errors for almost all prediction horizons, demonstrating the powerful ability of our GSTCN in capturing the spatial-temporal dependencies and inferring the future trajectories.

In Table IV, we can see that in the prediction horizon of one second, the previous state-of-the-art model GRIP has better performance than the GSTCN. However, the deterministic trajectories generated by the GRIP fail to describe the stochastic behaviors of human drivers, so our GSTCN outperforms the GRIP in long prediction horizons, and the average RMSE values are 7.45% lower than that of the GRIP. Both the GSTCN and the CS-LSTM predict the probability distributions over the future trajectories, but GSTCN outperforms CS-LSTM in all prediction horizons, which demonstrates that the GCN is more effective to capture the spatial dependencies than LSTM. In addition, we note that almost all deep-learning-based approaches have better performance than traditional and machine-learning-based methods (CV and C-VGMM+VIM). Among all deep-learning-based methods, the ones that use the information of surrounding vehicles outperform the ones that do not (i.e., V-LSTM). Therefore, it is crucial to consider the neighboring information when predicting the trajectories.

IV-F2 Performance when predicting all vehicles

Among all baselines, only the MATF and the GRIP-ALL can simultaneously predict trajectories of all vehicles. Therefore, this paper compares the performance of the two methods with our GSTCN in the case of predicting trajectories of all vehicles. As shown in Table V, our network improves the prediction accuracies for all prediction horizons. For example, the GSTCN achieves 22.4% average accuracy improvement compared with the GRIP-ALL. In addition, although our model does not take the scene images as the additional inputs, it still outperforms the MATF that does. This is because MATF only considers the spatial relationships at one timestamp but ignores the spatial-temporal dependencies, which demonstrates that capturing the spatial-temporal dependencies is far more important than processing the scene images.

| Prediction | MATF | GRIP-ALL | GSTCN |

| Horizon(s) | |||

| 1 | 0.67 | 0.64 | 0.44 |

| 2 | 1.51 | 1.13 | 0.83 |

| 3 | 2.51 | 1.80 | 1.33 |

| 4 | 3.71 | 2.62 | 2.01 |

| 5 | 5.12 | 3.60 | 2.98 |

| Average | 2.70 | 1.96 | 1.52 |

IV-F3 Comparison of model size and inference speed

Model size and inference speed are two important performance factors to decide whether an algorithm can be deployed to autonomous vehicles. The small model size guarantees that the method can work well with limited hardware resources. The fast inference speed can guarantee that autonomous vehicles have enough time to make decisions based on predictions. Since only the MATF and the GRIP-ALL can simultaneously predict trajectories of all vehicles among all baselines, we compare model sizes and inference speeds of them, as listed in Table VI.

The GRIP-ALL was previously the smallest model with 496.3K parameters. The model size of GSTCN is only about one tenth of that of the GRIP-ALL. To make a fair comparison, the inference speeds of these models are tested on the NVIDIA GTX1080Ti GPU, and we compare the average time required for each model to predict the trajectory of one vehicle. These three model simultaneously predict trajectories of 120 vehicles each time. Our GSTCN spends an average of 0.044 ms to predict one vehicle’s trajectory, which is about 7.3 times faster than the previously fastest model (GRIP-ALL). We achieve these improvements because the backbones of the GSTCN are GCN and CNN, which overcome the limitations induced by the recurrent architecture of LSTM.

| Model | Parameters | Average inference time |

|---|---|---|

| count | for one vehicle (ms) | |

| MATF | 15.3M (313) | 0.370 (8.4) |

| GRIP-ALL | 496.3K (10) | 0.322 (7.3) |

| GSTCN | 48.9K | 0.044 |

IV-G Qualitative analysis for GSTCN

In this subsection, we qualitatively analyze the prediction performance of our GSTCN by visualizing several representative predicted trajectories under mild, moderate, and heavy traffic scenarios. All the results are sampled from the I-80 and US-101 datasets. As shown in Fig. 9, our model observes the past trajectories of all vehicles in the scene for 3 seconds, illustrated as dashed lines. Then the probability distributions over all trajectories for the next 5 seconds are predicted, which are shown as color densities. The solid lines represent the ground truth trajectories. Overall, the predicted trajectory distributions can capture the pattern of ground truth trajectories well.

As shown in Fig. 9(a) and (b), when the traffic condition is mild, vehicles have few interactions and tend to drive at a high speed, and the predicted distributions well prove that our network has learned this feature. In the moderate traffic scenario, vehicles are more likely to change lanes to maximize their speeds. For example, as shown in Fig. 9(c), since the front vehicle on Lane 3 has a relatively slow speed, the vehicle behind it would conduct lane changing. The GSTCN can capture this kind of spatial-temporal dependencies and successfully predict future distributions. While in heavy traffic, the motions of vehicles become more complicated. For example, as shown in Fig. 9(d), vehicles on Lane 1 drive at relatively high speeds, while vehicles on other lanes move slowly. Our GSTCN still successfully predicts the future distributions of all vehicles, which demonstrates the robustness of our network.

IV-H Robustness to imperfect data

In the previous experiments, since the NGSIM datasets have been preprocessed by the data provider, we assume that the trajectory data received by the autonomous vehicle are perfect. However, in practical application, such perfection is generally impossible to achieve. Therefore, we will discuss the robustness of the proposed model in the case of imperfect data in this subsection.

| Prediction | Case I (Perfect) | Case II (Perfect) | Perfect |

| Horizon(s) | |||

| 1 | 0.48 (+0.04) | 0.48 (+0.04) | 0.44 |

| 2 | 0.90 (+0.07) | 0.93 (+0.10) | 0.83 |

| 3 | 1.44 (+0.11) | 1.55 (+0.22) | 1.33 |

| 4 | 2.14 (+0.13) | 2.37 (+0.36) | 2.01 |

| 5 | 3.12 (+0.14) | 3.53 (+0.55) | 2.98 |

| Average | 1.62 (+0.10) | 1.77 (+0.25) | 1.52 |

IV-H1 Case I: partially missing

In a general case, the data collected by the sensor have some missing points, so we randomly select half of the sequences from the testing set, from which randomly delete 20% of the data points. Before these imperfect data are fed into our model, we use cubic Hermite interpolation to infer the value of missing points.

IV-H2 Case II: totally missing

An extreme case is that a vehicle is totally undetected during a past time horizon, so it is impossible to infer reasonable data for the model. For this case, we randomly select a vehicle from the input and completely discard its trajectory data.

As shown in Table VII, we compare the RMSE for our model with imperfect and perfect data. We can see that the RMSE for imperfect data are greater than these for the perfect data, but the increase is acceptable, which demonstrates the robustness of our model and its potential for practical applications. In addition, when one nearby vehicle is totally undetected during the past time horizon, the RMSE increments in the long prediction horizon (3-5s) are much larger than these in case I, which indicates that the complete undetection of one vehicle has a greater impact on the accuracy of vehicle trajectory prediction than the partial undetection of several vehicles.

V Conclusions and Future Work

In this paper, we have presented a graph-based spatial-temporal convolutional network (GSTCN) that can predict the future trajectory distributions of all vehicles in a scene simultaneously. In our method, a weighted adjacency matrix has been proposed to distinguish different effects from nearby vehicles on the target vehicle. Based on this adjacency matrix, a spatial graph convolutional module can be used to learn the spatial dependencies among vehicles. The experimental results have shown that our GSTCN outperforms the main previous methods, and the small model size and fast inference speed of our GSTCN have demonstrated it has the potential to be deployed to autonomous vehicles.

In the future, several works can be done to further extend our proposed network. Firstly, in addition to past trajectories of surrounding vehicles, there are many other supplementary data (e.g., visual scene images, high-resolution maps, and vehicle-to-vehicle communication information) that can be detected by autonomous vehicles. Therefore, how to utilize these supplementary data to further improve the performance is worth studying. Secondly, the effects of nearby vehicles in different directions on the target vehicle are slightly different, even if the distances are the same. Hence, we can study how to consider the effects of different directions when constructing the weighted adjacency matrix. Finally, the aim of trajectory prediction is to enable autonomous vehicles to gain the ability to make optimal decisions. Thus, better motion planning algorithms for autonomous vehicles based on the predictions of our GSTCN can be studied to improve traffic efficiency and security.

In order to better understand the predictions in Fig. 9, we present the speeds of each vehicle in the past time horizon in Table VIII.

| Scene | Samples | Timestamp | |||||

| 1 | 2 | 3 | |||||

| a | Lane 2-1 | 22.9 | 0.03 | 22.6 | 0.04 | 23.4 | -0.10 |

| b | Lane 1-1 | 16.8 | -0.03 | 14.7 | 0.02 | 15.3 | -0.22 |

| Lane 2-1 | 13.3 | 0.04 | 13.7 | 0.20 | 15.0 | -0.20 | |

| Lane 3-1 | 11.8 | 0.18 | 11.8 | -0.12 | 11.8 | -0.06 | |

| Lane 3-2 | 16.0 | 0.02 | 15.5 | 0.16 | 15.4 | -0.01 | |

| c | Lane 1-1 | 14.92 | -0.47 | 12.60 | -0.78 | 10.23 | 0.01 |

| Lane 2-1 | 10.03 | -0.82 | 8.10 | -0.01 | 9.14 | 0.02 | |

| Lane 2-2 | 9.30 | -0.08 | 7.79 | -0.18 | 8.05 | -0.41 | |

| Lane 3-1 | 8.34 | 0.00 | 7.98 | 0.05 | 8.12 | 0.32 | |

| Lane 3-2 | 5.13 | 0.01 | 5.02 | 0.00 | 5.16 | 0.02 | |

| d | Lane 1-1 | 1.27 | -0.06 | 1.27 | -0.06 | 1.81 | -0.06 |

| Lane 1-2 | 3.23 | -0.07 | 3.30 | -0.07 | 3.44 | 0.00 | |

| Lane 1-3 | 3.36 | 0.01 | 3.29 | 0.01 | 3.91 | 0.00 | |

| Lane 1-4 | 6.06 | 0.02 | 6.71 | 0.01 | 7.19 | 0.02 | |

| Lane 2-1 | 0.00 | 0.00 | 1.40 | 0.00 | 3.21 | 0.00 | |

| Lane 2-2 | 0.64 | 0.00 | 1.06 | 0.00 | 1.38 | 0.00 | |

| Lane 2-3 | 3.48 | 0.00 | 2.42 | 0.00 | 3.08 | 0.00 | |

| Lane 2-4 | 1.72 | -0.07 | 1.70 | -0.01 | 1.71 | 0.03 | |

| Lane 2-5 | 3.36 | 0.00 | 4.42 | 0.00 | 6.07 | 0.01 | |

| Lane 2-6 | 4.41 | 0.04 | 4.80 | 0.05 | 3.77 | -0.06 | |

| Lane 2-7 | 4.36 | 0.05 | 3.78 | 0.00 | 3.80 | 0.00 | |

| Lane 3-1 | 0.16 | 0.00 | 3.12 | 0.00 | 3.03 | 0.00 | |

| Lane 3-2 | 3.35 | 0.00 | 2.38 | 0.00 | 1.25 | 0.00 | |

| Lane 3-3 | 1.56 | 0.00 | 1.48 | 0.00 | 1.52 | 0.00 | |

| Lane 3-4 | 3.15 | 0.00 | 3.23 | 0.00 | 3.12 | 0.00 | |

| Lane 3-5 | 2.01 | 0.00 | 3.79 | 0.00 | 3.28 | 0.00 | |

| Lane 3-6 | 6.19 | -0.24 | 6.71 | 0.00 | 4.27 | 0.00 | |

| Lane 3-7 | 4.47 | -0.10 | 4.37 | -0.09 | 3.92 | 0.00 | |

| Lane 3-8 | 3.35 | 0.01 | 3.43 | 0.01 | 3.50 | 0.00 | |

References

- [1] G. Xie, H. Gao, L. Qian, B. Huang, K. Li, and J. Wang, “Vehicle trajectory prediction by integrating physics-and maneuver-based approaches using interactive multiple models,” IEEE Trans. Ind. Electron., vol. 65, no. 7, pp. 5999–6008, Jul. 2018.

- [2] D. Dorrell, A. Vinel, and D. Cao, “Connected vehicles-advancements in vehicular technologies and informatics,” IEEE Trans. Ind. Electron., vol. 62, no. 12, pp. 7824–7826, Dec. 2015.

- [3] D. González, J. Pérez, V. Milanés, and F. Nashashibi, “A review of motion planning techniques for automated vehicles,” IEEE Trans. Intell. Transp. Syst., vol. 17, no. 4, pp. 1135–1145, Apr. 2015.

- [4] L. Claussmann, M. Revilloud, D. Gruyer, and S. Glaser, “A review of motion planning for highway autonomous driving,” IEEE Trans. Intell. Transp. Syst., vol. 21, no. 5, pp. 1826–1848, May 2019.

- [5] S. Mozaffari, O. Y. Al-Jarrah, M. Dianati, P. Jennings, and A. Mouzakitis, “Deep learning-based vehicle behavior prediction for autonomous driving applications: A review,” IEEE Trans. Intell. Transp. Syst., pp. 1–15, 2020.

- [6] A. Rudenko, L. Palmieri, M. Herman, K. M. Kitani, D. M. Gavrila, and K. O. Arras, “Human motion trajectory prediction: A survey,” Int. J. Robot. Res., vol. 39, no. 8, pp. 895–935, Jun. 2020.

- [7] X. Li, Z. Sun, D. Cao, Z. He, and Q. Zhu, “Real-time trajectory planning for autonomous urban driving: Framework, algorithms, and verifications,” IEEE/ASME Trans. Mechatronics, vol. 21, no. 2, pp. 740–753, Apr. 2016.

- [8] S. Bae, D. Saxena, A. Nakhaei, C. Choi, K. Fujimura, and S. Moura, “Cooperation-aware lane change maneuver in dense traffic based on model predictive control with recurrent neural network,” in Proc. Amer. Control Conf., Jul. 2020, pp. 1209–1216.

- [9] C.-F. Lin, A. G. Ulsoy, and D. J. LeBlanc, “Vehicle dynamics and external disturbance estimation for vehicle path prediction,” IEEE Trans. Control Syst. Technol., vol. 8, no. 3, pp. 508–518, May 2000.

- [10] C. M. Kang, S. J. Jeon, S.-H. Lee, and C. C. Chung, “Parametric trajectory prediction of surrounding vehicles,” in Proc. IEEE Int. Conf. Veh. Electron. Saf., Jun. 2017, pp. 26–31.

- [11] S. Klingelschmitt, M. Platho, H.-M. Groß, V. Willert, and J. Eggert, “Combining behavior and situation information for reliably estimating multiple intentions,” in Proc. IEEE Intell. Vehicles Symp., Jun. 2014, pp. 388–393.

- [12] M. Brännström, E. Coelingh, and J. Sjöberg, “Model-based threat assessment for avoiding arbitrary vehicle collisions,” IEEE Trans. Intell. Transp. Syst., vol. 11, no. 3, pp. 658–669, Sep. 2010.

- [13] J. Hillenbrand, A. M. Spieker, and K. Kroschel, “A multilevel collision mitigation approach—Its situation assessment, decision making, and performance tradeoffs,” IEEE Trans. Intell. Transp. Syst., vol. 7, no. 4, pp. 528–540, Dec. 2006.

- [14] A. Polychronopoulos, M. Tsogas, A. J. Amditis, and L. Andreone, “Sensor fusion for predicting vehicles’ path for collision avoidance systems,” IEEE Trans. Intell. Transp. Syst., vol. 8, no. 3, pp. 549–562, Sep. 2007.

- [15] B. Mourllion, D. Gruyer, A. Lambert, and S. Glaser, “Kalman filters predictive steps comparison for vehicle localization,” in Proc. IEEE/RSJ Int. Conf. Intell. Robots Syst., Aug. 2005, pp. 565–571.

- [16] S. Lefèvre, D. Vasquez, and C. Laugier, “A survey on motion prediction and risk assessment for intelligent vehicles,” Robomech J., vol. 1, no. 1, pp. 1–14, Jul. 2014.

- [17] C. Ju, Z. Wang, C. Long, X. Zhang, and D. E. Chang, “Interaction-aware Kalman neural networks for trajectory prediction,” in Proc. IEEE Intell. Vehicles Symp., Oct. 2020, pp. 1793–1800.

- [18] N. Deo, A. Rangesh, and M. M. Trivedi, “How would surround vehicles move? A unified framework for maneuver classification and motion prediction,” IEEE Trans. Intell. Vehicles, vol. 3, no. 2, pp. 129–140, Jun. 2018.

- [19] Q. Tran and J. Firl, “Online maneuver recognition and multimodal trajectory prediction for intersection assistance using non-parametric regression,” in Proc. IEEE Intell. Vehicles Symp., Jun. 2014, pp. 918–923.

- [20] B. T. Morris and M. M. Trivedi, “Trajectory learning for activity understanding: Unsupervised, multilevel, and long-term adaptive approach,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 33, no. 11, pp. 2287–2301, Nov. 2011.

- [21] G. S. Aoude, V. R. Desaraju, L. H. Stephens, and J. P. How, “Driver behavior classification at intersections and validation on large naturalistic data set,” IEEE Trans. Intell. Transp. Syst., vol. 13, no. 2, pp. 724–736, Jun. 2012.

- [22] J. Schlechtriemen, F. Wirthmueller, A. Wedel, G. Breuel, and K.-D. Kuhnert, “When will it change the lane? A probabilistic regression approach for rarely occurring events,” in Proc. IEEE Intell. Vehicles Symp., Jul. 2015, pp. 1373–1379.

- [23] M. G. Ortiz, J. Fritsch, F. Kummert, and A. Gepperth, “Behavior prediction at multiple time-scales in inner-city scenarios,” in Proc. IEEE Intell. Vehicles Symp., Jun. 2011, pp. 1068–1073.

- [24] S. Lefèvre, C. Laugier, and J. Ibañez-Guzmán, “Exploiting map information for driver intention estimation at road intersections,” in Proc. IEEE Intell. Vehicles Symp., Jun. 2011, pp. 583–588.

- [25] M. Schreier, V. Willert, and J. Adamy, “Bayesian, maneuver-based, long-term trajectory prediction and criticality assessment for driver assistance systems,” in Proc. 17th Int. IEEE Conf. Intell. Transp. Syst., Oct. 2014, pp. 334–341.

- [26] X. Ma, Z. Tao, Y. Wang, H. Yu, and Y. Wang, “Long short-term memory neural network for traffic speed prediction using remote microwave sensor data,” Transp. Res. C, Emerg. Technol., vol. 54, pp. 187–197, May 2015.

- [27] A. Alahi, K. Goel, V. Ramanathan, A. Robicquet, L. Fei-Fei, and S. Savarese, “Social LSTM: Human trajectory prediction in crowded spaces,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2016, pp. 961–971.

- [28] A. Zyner, S. Worrall, and E. Nebot, “A recurrent neural network solution for predicting driver intention at unsignalized intersections,” IEEE Robot. Autom. Lett., vol. 3, no. 3, pp. 1759–1764, Jul. 2018.

- [29] L. Xin, P. Wang, C.-Y. Chan, J. Chen, S. E. Li, and B. Cheng, “Intention-aware long horizon trajectory prediction of surrounding vehicles using dual LSTM networks,” in Proc. 21th Int. IEEE Conf. Intell. Transp. Syst., Nov. 2018, pp. 1441–1446.

- [30] N. Deo and M. M. Trivedi, “Multi-modal trajectory prediction of surrounding vehicles with maneuver based LSTMs,” in Proc. IEEE Intell. Vehicles Symp., Jun. 2018, pp. 1179–1184.

- [31] ——, “Convolutional social pooling for vehicle trajectory prediction,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2018, pp. 1468–1476.

- [32] T. Zhao, Y. Xu, M. Monfort, W. Choi, C. Baker, Y. Zhao, Y. Wang, and Y. N. Wu, “Multi-agent tensor fusion for contextual trajectory prediction,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2019, pp. 12 126–12 134.

- [33] S. Dai, L. Li, and Z. Li, “Modeling vehicle interactions via modified LSTM models for trajectory prediction,” IEEE Access, vol. 7, pp. 38 287–38 296, 2019.

- [34] L. Hou, L. Xin, S. E. Li, B. Cheng, and W. Wang, “Interactive trajectory prediction of surrounding road users for autonomous driving using structural-LSTM network,” IEEE Trans. Intell. Transp. Syst., vol. 21, no. 11, pp. 4615–4625, Nov. 2020.

- [35] D. Chai, L. Wang, and Q. Yang, “Bike flow prediction with multi-graph convolutional networks,” in Proc. 26th ACM SIGSPATIAL Int. Conf. Adv. Geographic Inf. Syst., Nov. 2018, pp. 397–400.

- [36] S. Guo, Y. Lin, N. Feng, C. Song, and H. Wan, “Attention based spatial-temporal graph convolutional networks for traffic flow forecasting,” in Proc. 33st AAAI Conf. Artif. Intell., vol. 33, Jan. 2019, pp. 922–929.

- [37] X. Geng, Y. Li, L. Wang, L. Zhang, Q. Yang, J. Ye, and Y. Liu, “Spatiotemporal multi-graph convolution network for ride-hailing demand forecasting,” in Proc. 33st AAAI Conf. Artif. Intell., vol. 33, Jan. 2019, pp. 3656–3663.

- [38] L. Liu, Z. Qiu, G. Li, Q. Wang, W. Ouyang, and L. Lin, “Contextualized spatial–temporal network for taxi origin-destination demand prediction,” IEEE Trans. Intell. Transp. Syst., vol. 20, no. 10, pp. 3875–3887, Oct. 2019.

- [39] X. Li, X. Ying, and M. C. Chuah, “Grip: Graph-based interaction-aware trajectory prediction,” in Proc. 22th Int. IEEE Conf. Intell. Transp. Syst., Oct. 2019, pp. 3960–3966.

- [40] A. Mohamed, K. Qian, M. Elhoseiny, and C. Claudel, “Social-STGCNN: A social spatio-temporal graph convolutional neural network for human trajectory prediction,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., Jun. 2020, pp. 14 424–14 432.

- [41] B. Yu, H. Yin, and Z. Zhu, “Spatio-temporal graph convolutional networks: A deep learning framework for traffic forecasting,” in Proc. 27th Int. Joint Conf. Artif. Intell., Jul. 2018, pp. 3634–3640.

- [42] T. N. Kipf and M. Welling, “Semi-supervised classification with graph convolutional networks,” in Proc. Int. Conf. Learn. Representations, Apr. 2017, pp. 1–14.

- [43] F. Altché and A. de La Fortelle, “An LSTM network for highway trajectory prediction,” in Proc. 20th Int. IEEE Conf. Intell. Transp. Syst., Oct. 2017, pp. 353–359.

- [44] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” in Proc. Adv. Neural Inf. Process. Syst., Dec. 2017, pp. 5998–6008.

- [45] K. Cho, B. Van Merriënboer, C. Gulcehre, D. Bahdanau, F. Bougares, H. Schwenk, and Y. Bengio, “Learning phrase representations using RNN encoder-decoder for statistical machine translation,” in Proc. Conf. Empirical Methods Natural Lang. Process., Oct. 2014, pp. 1724–1734.

- [46] Traffic Analysis Tools: Next Generation Simulation-FHWA Operations. Accessed: November 24, 2020. [Online]. Available: https://ops.fhwa.dot.gov/trafficanalysistools/ngsim.htm

- [47] A. Paszke, S. Gross, S. Chintala, G. Chanan, E. Yang, Z. DeVito, Z. Lin, A. Desmaison, L. Antiga, and A. Lerer, “Automatic differentiation in PyTorch,” in Proc. Adv. Neural Inf. Process. Syst. Workshops, Dec. 2017, pp. 1–4.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/e612ebb4-49ec-4f89-87f9-a6ab6b6ad83a/shengzihao.jpg) |

Zihao Sheng received the B.S. degree in automation from Xi’an Jiao Tong University, Xi’an, P.R. China, in 2019, and is currently pursuing the M.S. degree in control engineering from Shanghai Jiao Tong University, Shanghai, P.R. China. His research interests include vehicle trajectory prediction, control in autonomous driving and intelligent transportation systems. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/e612ebb4-49ec-4f89-87f9-a6ab6b6ad83a/xuyunwen.jpg) |

Yunwen Xu (Member, IEEE) received the B.S. degree in automation from the Nanjing University of Science and Technology, Nanjing, P.R. China, in 2012, and the M.S. and Ph.D. degrees in control science and engineering from Shanghai Jiao Tong University, Shanghai, P.R. China, in 2014 and 2019, respectively. She is currently a Postdoctoral Researcher with the Department of Automation, Shanghai Jiao Tong University. Her research interests include model predictive control, urban traffic modeling, and intelligent control of complex systems. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/e612ebb4-49ec-4f89-87f9-a6ab6b6ad83a/xueshibei.jpg) |

Shibei Xue (Senior Member, IEEE) received the Ph.D. degree in control science and engineering from Tsinghua University, Beijing, P.R. China, in 2013. He is currently an Associate Professor with the Department of Automation, Shanghai Jiao Tong University, Shanghai, P.R. China. From 2014 to 2016, he was a Postdoctoral Researcher with the University of New South Wales, Canberra, ACT, Australia, and then, he worked as a Postdoctoral Researcher with the Department of Physics, National Cheng Kung University, Tainan City, Taiwan. In July 2017, he joined Shanghai Jiao Tong University. His research interests include quantum control, optimization, and intelligent control of complex systems. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/e612ebb4-49ec-4f89-87f9-a6ab6b6ad83a/lidewei.jpg) |

Dewei Li received the B.S. degree and the Ph.D. degree in automation from Shanghai Jiao Tong University, Shanghai, P.R. China, in 1993 and 2009, respectively. He is a Professor with the Department of Automation at Shanghai Jiao Tong University. He worked as a Postdoctoral Researcher with Shanghai Jiao Tong University from 2009 to 2010. His research interests include model predictive control, intelligent control, and intelligent transportation systems. |