Gradient Leakage Attack Resilient Deep Learning

Abstract

Gradient leakage attacks are considered one of the wickedest privacy threats in deep learning as attackers covertly spy gradient updates during iterative training without compromising model training quality, and yet secretly reconstruct sensitive training data using leaked gradients with high attack success rate. Although deep learning with differential privacy is a defacto standard for publishing deep learning models with differential privacy guarantee, we show that differentially private algorithms with fixed privacy parameters are vulnerable against gradient leakage attacks. This paper investigates alternative approaches to gradient leakage resilient deep learning with differential privacy (DP). First, we analyze existing implementation of deep learning with differential privacy, which use fixed noise variance to injects constant noise to the gradients in all layers using fixed privacy parameters. Despite the DP guarantee provided, the method suffers from low accuracy and is vulnerable to gradient leakage attacks. Second, we present a gradient leakage resilient deep learning approach with differential privacy guarantee by using dynamic privacy parameters. Unlike fixed-parameter strategies that result in constant noise variance, different dynamic parameter strategies present alternative techniques to introduce adaptive noise variance and adaptive noise injection which are closely aligned to the trend of gradient updates during differentially private model training. Finally, we describe four complementary metrics to evaluate and compare alternative approaches. Extensive experiments on six benchmark datasets show that differentially private deep learning with dynamic privacy parameters outperforms the deep learning using fixed DP parameters, and existing adaptive clipping approaches in all aspects: compelling accuracy performance, strong differential privacy guarantee, and high attack resilience.

Index Terms:

deep learning, gradient leakage attack, differential privacyI Introduction

Deep neural networks (DNNs) have demonstrated superior capability of learning complex tasks with high prediction accuracy. With the premium environment for model training using rich data that companies are collecting about their users, two questions remain an overwhelming challenge: (i) how can a model be trained on private collections of sensitive data so that it can be deployed safely, minimizing disclosure of sensitive training data? and (ii) can a DNN model trained with differential privacy be trusted for its outputs against privacy intrusion?

Privacy Risks in Deep Learning. Deep learning is vulnerable to many privacy attacks at both training phase and prediction phase, by exploiting its large capacity from the large number of model parameters sufficient for encoding the details of the individual data. Gradient leakage attacks are the dominating privacy threats during training phase [1, 2, 3, 4, 5, 6, 7, 8, 9, 10], assuming training data is encrypted in storage and transportation. The attacker, without any prior knowledge about the learning model, can breach secrecy and confidentiality of the training data from the intermediate gradients. There are also privacy threats in prediction phase, e.g., model inversion [11, 12], attribute inference [13, 14], membership inference [15, 16, 17, 18, 19, 20] and GAN-based reconstruction attack [21, 22]. These privacy concerns aggravate with the broad deployment of deep learning applications and deep learning as a service. Although recent studies on gradient leakage attacks were in the context of federated learning [1, 2, 4, 5], the attacks are high-risk threats for both centralized cloud and edge clients because the same spying process and unauthorized read can be silently employed during model training without being noticed, and the same reconstruction algorithms can be utilized to disclose private training data from the leaked gradients. This paper presents risk assessment of gradient leakage attacks and presents a gradient leakage resilient deep learning approach by extending conventional deep learning with differential privacy algorithms with dynamic privacy parameter optimizations.

Deep Learning with Differential Privacy. Differentially private deep learning is the de facto standard for publishing DNN models with provable privacy guarantee [23]: It is extremely hard to characterize the difference in output between any two models trained using two neighboring inputs differing by at most one element. In other words, by simply observing the output of a DNN model trained using the differentially private learning algorithm, one cannot tell if a single example is used in the training. [24] is the first to implement differentially private deep learning with moments accountant method for a much tighter privacy accounting under random sampling. To regulate the maximum influence of the model under the two neighboring inputs, conventional approaches [24, 25, 26, 27, 28] implement differentially private deep learning by first clipping the gradients and then applying differential privacy-controlled noise to perturb the gradients before employing the stochastic gradient descent (SGD) algorithm, ensuring that each gradient descent step is differentially private. Based on composition properties of differential privacy algorithms [23], the final model produced upon completion of the total training steps provides a certain level of differential privacy.

Inherent Limitations of Baseline. Inspired by the pioneer work [24], many proposals in the literature [25, 27, 29] and open source community [30, 31] employ the fixed privacy parameter strategy to decide clipping method, define the sensitivity of gradient updates and noise scale, which results in constant noise injection throughout every step of the entire training process. Although such a rigid setting of privacy parameters has shown reasonable accuracy while providing a certain level of differential privacy guarantee, they suffer some inherent limitations. First, fixed privacy parameters induce constant differential privacy noise throughout the training, which deems unnecessary especially at the later stage of training, and leads to low accuracy utility. Second, even with differential privacy guarantee, such algorithms require careful privacy parameter selection as otherwise remaining vulnerable against gradient leakage induced privacy violation, breaking the intended privacy protection. Although some development aim to improve the accuracy of differentially private deep learning by optimizing the noise [24, 32, 33, 26, 34, 35, 36], all these methods are not designated to defend against the gradient leakage attacks.

Scope and Contributions. In this paper, we investigate alternative approaches to differentially private deep learning, aiming for strong privacy, high accuracy performance as well as high resilience against gradient leakage attacks. This paper makes three original contributions. First, we analyze existing implementation of deep learning with differential privacy which injects differential privacy controlled noise to perturb the gradients in all layers using fixed privacy parameters. We show that despite the differential privacy guarantee provided, the deep learning model suffers from low accuracy utility and can be vulnerable to gradient leakage attacks. This motivates us to analyze the inherent limitations of using fixed-parameter strategies in deep learning with differential privacy. Second, we propose a differentially private deep learning approach with adaptive DP parameters to address the inherent limitations of conventional deep learning algorithms with fixed DP parameters. We propose three adaptive DP parameter optimizations to allow dynamic DP controlled noise variance such that more noise is injected in early rounds and smaller noise is used to perturb the gradients in the later rounds, including dynamic gradient clipping method, dynamic sensitivity and dynamic noise scale. We show that our approach with dynamic DP parameters can achieve high resilience against gradient leakage attacks, competitive accuracy performance, better differential privacy guarantee. Unlike fixed-parameter strategies that result in constant noise variance, differentially private deep learning with adaptive DP parameters can align noise injection to the trend of gradient updates during differentially private model training. Third, we present four complementary metrics for evaluation and comparative analysis of alternative approaches to differentially private deep learning: (i) comparing accuracy (utility) performance under the same privacy budget, (ii) comparing privacy cost and the level of privacy protection under the same target model accuracy, and (iii) comparing resilience against gradient leakage attacks. Extensive experiments are conducted on six benchmark datasets with five privacy accounting methods. The results show that deep learning with dynamic differential privacy optimization on sensitivity and noise scale outperforms both the baseline and other alternative approaches with dynamic parameter optimizations, offering compelling accuracy performance, strong differential privacy guarantee, and high attack resilience.

II Gradient Leakage Threat Model

Gradient leakage attacks are most relevant privacy threats in the context of deep learning, in which a curious or malicious insider may conduct unauthorized read on the gradients and reconstruct private training data based on the gradients obtained through spying over the layer-wise gradients utilized by per-step SGD in each iteration of the training. Such insider threats do not directly compromise the accuracy of the trained model and thus are much harder to detect and mitigate through reactive defense methods. We argue that effective approaches to deep learning with differential privacy can build one of the best defense methods against such threats. Our threat model makes the following assumptions about the insider adversary: (1) the insider adversary cannot gain access to the encrypted training data prior to training; (2) the insider adversary has no intention of compromise the training procedure or the quality of the trained model; and (3) the insider adversary may gain access to the intermediate model training parameters that are often saved as checkpoint data to allow the resume of iterative training from a given step. Unlike white-box adversarial example attacks [37, 38, 39, 40], gradient leakage attacks do not need any prior knowledge of the DNN training algorithm and simply use independent reconstruction algorithms on leaked gradients to infer and disclose the private training data [5] while keeping the integrity of the training.

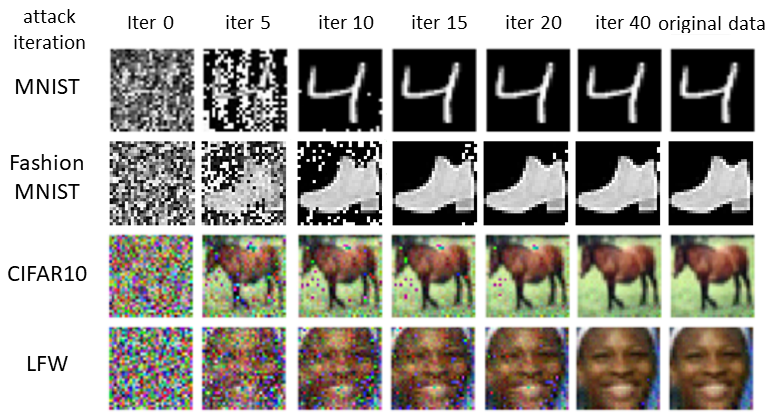

With parallel processing of the data, the module is replicated on each device in the forward pass and each replica handles a portion of the input. During the backward pass, gradients from each replica are summed into the original module. Figure 1 gives a sketch of the gradient leakage attack algorithm under the multi-GPU setting, which configures and executes the reconstruction attack in five steps: (1) It configures the initialization seed (), a dummy data of the same resolution (or attribute structure for text) as the training data. [5] showed some significant impact of different initialization seeds on the attack success rate and attack cost ( attack iterations to succeed). (2) The dummy attack seed is fed into the current copy of the model. (3) The gradient of the dummy attack seed is obtained by backpropagation. (4) The gradient loss is computed using a vector distance loss function, e.g., , between the gradient of the attack seed and the actual gradient from the training. The choice of this reconstruction loss function is another tunable attack parameter. (5) The dummy attack seed is modified by the attack reconstruction learning algorithm. It aims to minimize the vector distance loss by a loss optimizer such that the gradients of the reconstructed seed at round will be closer to the actual gradient updates stolen upon the completion of computing the gradients of one input in the batch or of the entire batch. For batch gradient, [1] and [4] maliciously introduce separate weights for each batch example, making it possible for a recovery of a batch of data: where is the batch size and is index of data in the batch. This attack reconstruction learning iterates until it reaches the attack termination condition (), typically defined by the attack iterations, e.g., (also a configurable attack parameter). If the reconstruction loss is smaller than the specified distance threshold then the reconstruction attack is successful. Figure 2 provides a visualization by examples from four datasets at different attack iterations. The details of these datasets are given in Section VI. All the experiments on gradient leakages in this paper use the patterned random seed initialization111https://github.com/git-disl/ESORICS20-CPL, with a based loss function and L-BFGS optimizer, for high attack success rate (ASR) and fast attack convergence.

III Baseline Solution with Differential Privacy

In this section, we first review the differential privacy concept.Then we present the baseline deep learning with differential privacy approach that utilizes fixed privacy parameters and point out the inherent problem of fixed privacy parameters in terms of accuracy utility loss and gradient leakage vulnerability.

III-A Preliminary

Definition 1.

This definition states that given , a smaller would indicate a smaller difference between the output of and the output of . By Lemma 3.17 of [23], -differential privacy ensures that for any adjacent , the absolute value of the privacy loss will be bounded by with probability of at least . When , this definition implies that it is most likely that the observed output for will be similar to the output for its neighboring input , whereas when , it indicates that the output observed under is highly likely to be observed under . Since is the upper bound probability of for breaking -differential privacy, a smaller is desired. Following the literature [24, 27, 36, 26], is set to in our experiments such that -differential privacy would hold with probability of at least 0.9999.

Definition 2.

Sensitivity [41]: Let be the domain of possible input data and be the domain of all possible output. The sensitivity of a function is the maximum amount that the function value varies when a single entry of the input is changed.

| (2) |

Definition 2 implies that to produce a randomized differentially private algorithm by injecting noise that follows some randomization distribution while preserving the utility of , we need to bound the noise by the maximum change defined as the sensitivity of function with neighboring inputs. In this paper, we consider Gaussian mechanism as our randomization noise distribution, which adds Gaussian noise calibrated to the sensitivity of the function in norm. Hence, we define by sensitivity under Gaussian noise injection.

Theorem 1.

Gaussian mechanism [23]: Let be the domain of possible input data and be the range of all possible output. With privacy parameter , applying Gaussian noise calibrated to a real valued function: with noise variance such that is -differentially private if .

This theorem indicates that for a constant , when , we have Gaussian mechanism , and if , then holds with probability at least , where is the random variable utilizing the Gaussian distribution noise with Gaussian density function . Hence, it is straightforward to get the following lemma:

Lemma 1.

Let noise variance in Gaussian mechanism be where is the noise scale and is the sensitivity. We have the noise scale satisfying

According to this Lemma, noise scale and privacy loss have an inverse correlation given a fixed , i.e., a large noise scale indicates a small , and conversely, a small noise scale implies the spending of a large privacy budget .

III-B Privacy Accounting

Based on the DP composition theory [23], post processing theory [23], and privacy amplification [42], the privacy budget spending can be tracked throughout the iterations of DNN training. There are four representative privacy accounting methods: moments accountant (MA) [24], zCDP [27], advanced composition (AdvC) [43] and optimal composition (OptC) [44].

Theorem 2.

Composition theorem [23]: Let be a randomized function that is -differentially private. If is a sequence of consecutive invocations (executions) of ()-differentially private algorithm , then is ()-differentially private.

By Theorem 2, a randomized function that consists of a sequence of differentially private mechanisms is differentially private, and its privacy guarantee is determined by the sum of the individual privacy losses, defined by . In our experiments, we consider five privacy accounting rules: base composition, advanced composition, optimal composition, Moments Accountant, and zCDP.

Advanced composition (AdvC [43]): Let . The class of -differentially private mechanisms satisfies ()-differential privacy under -fold composition for:

| (3) |

Optimal composition (OptC [44]): Let . The class of -differentially private mechanisms satisfies ()-differential privacy under -fold composition for:

| (4) |

Moments accountant (MA [24]): For a mechanism with Gaussian noise added at each iteration where denotes the sensitivity and is the noise scale, given dataset with a total of data points, the random sampling with replacement and the sampling rate where is the sample size, and the number of iterations , if , there exist constants and so that for any , is -differentially private for and

| (5) |

Note that Rényi differential privacy [45, 46] is proposed on top of moments accountant to keep track of the privacy spending of a sequence of randomized mechanisms with elegant composition rules. Rényi differential privacy can be transformed to the standard differential privacy definition. We will use Moments accountant for fair comparison with other privacy accounting methods.

zCDP [27]: For a mechanism with Gaussian noise added at each iteration where denotes the sensitivity and is the per-iteration noise scale, given dataset with a total of data points, given the random sampling with replacement and the sampling rate where is the sample size, and the number of iterations , if , is -differentially private for and

| (6) |

Due to the random sampling involved, both advanced composition and optimal composition consider an amplified per-iteration privacy spending and assume a fixed to track using the following privacy amplification theorem. Note that for Moments accountant, we follow the implementation provided by the Tensorflow Privacy module [47] as no closed-form expression in terms of the noise scale nor the heterogeneous per-step is given to compute the accumulated .

Theorem 3.

Privacy amplification [42]: Given dataset with data points, subsampling is defined as random sampling with replacement with as the sample size. If is -differentially private, then the subsampled mechanism with sampling rate is -differentially private.

III-C Baseline with Fixed Parameters

The goal of deep learning with differential privacy is to train a -differentially private model over iterations. By composition theorem, we need to ensure that the per-step SGD, denoted by , is -differentially private () with and . Hence, for each iteration , we inject differential privacy controlled noise to the gradients before performing per-step SGD. Given a DNN of layers, the baseline implementation for ensuring that is -differentially private injects noise to all layers of the model during each training iteration.

Fixed privacy parameters. Most of existing approaches to deep learning with differential privacy [24, 27, 36, 48], including Tensorflow privacy module [30] and Pytorch Opacus privacy module [31], all employ fixed privacy parameter strategies, such as the constant clipping method with pre-defined clipping bound (e.g., ), the fixed pre-defined noise scale (e.g., ), and the fixed sensitivity defined using the constant clipping bound . As a result, in addition to distributing the privacy budget uniformly across the total training iterations, the noise variance is fixed during the training, resulting in injecting constant noise to the gradients in each training iteration.

Constant clipping method with fixed clipping bound. Given a training example , let denote the layer-wise per-example gradient vector for the layer (). The clipping method is used prior to noise injection to address the problem of gradient explosion [49]. With a constant clipping method using a fixed clipping bound , the layer-wise per-example gradient vector is preserved if its norm satisfies . Otherwise, holds, and the gradient vector needs to be brought down so that its norm is capped by . This is done by multiplying every coordinate of the gradients with a scaling factor: . Such per-example gradient clipping will be performed on all layers for each example in the batch of the given iteration. Let denote the clipped per-example gradient for layer of training example at iteration (). The clipped per-example gradients are then gathered for batch averaging: . Algorithm 1 present pseudo code for baseline clipping function using the constant clipping method initialized with a preset clipping bound [24].

Noise injection with fixed sensitivity and fixed . Next, the -differential privacy controlled Gaussian noise is injected to each layer of the batch gradients: . The per-step SGD function performs the gradient descent at iteration using the Gaussian noise perturbed gradients , such that . This process of sampling, computing gradients, clipping, and noise injection repeats until reaching the total iterations.

Limitation with using Fixed Privacy Parameters. First, using a fixed clipping bound to define the sensitivity of gradient changes for all iterations can be problematic, especially for the later iterations of training. This is because the norm of the layer-wise per-example gradient vector satisfies in most cases as the training approaching the end. Second, with fixed sensitivity defined by the fixed clipping bound , the constant noise computed based on and fixed can be much larger than for . Third, injecting such large constant noise to gradients in each iteration of the training may have a detrimental effect on the accuracy performance and slow down the convergence of training and sadly it does not gain any additional privacy protection, because the accumulated privacy spending is only inversely correlated with .

Furthermore, algorithms with fixed privacy parameters may be more vulnerable to gradient leakage attacks. Any inappropriate setting of fixed privacy parameters will bring in vulnerability to gradient leakage attacks. Figure 3 provides four visual examples on four datasets: MNIST, Fashion-MNIST, LFW, and CIFAR10 under three scenarios: non-private, DP-baseline with fixed parameter setting of clipping bound and noise scale as in [50, 29] and DP-baseline with clipping bound and noise scale as in [24, 27]. It is observed that an adequate amount of noise is necessary to mask the gradients from malicious or curious inference attackers using the reconstruction learning on the leaked gradients.

Given that gradients at early training iterations tend to leak more information than gradients in the later stage of the training [5], an obvious approach is to design a differential privacy algorithm that can inject larger noise at the early stage of training and resort to smaller noise injection as the training is close to the end. Given that the noise variance is the product of sensitivity and noise scale , several possible strategies can be promising, such as having the sensitivity calibrated to the -norm of the gradients, or having a smoothly decaying noise scale such that the noise variance follows the trend of gradient updates across the training iterations.

IV Dynamic Privacy Parameters

A detrimental limitation of using fixed privacy parameter strategies for deciding the clipping method and for defining sensitivity and noise scale is the result of constant noise variance and constant noise injection in each iteration of deep learning, which is a root cause for poor resilience against gradient leakage attacks. In this section, we address such inherent limitations by developing dynamic parameter strategies for determining and configuring these privacy parameters in a training progress-aware manner. This brings out a significant advantage: smaller noise variance will be used to inject noise at the later stage of the training, improving the convergence speed of training with high accuracy performance; and at the same time larger noise variance will be used to inject a larger amount of noise at the early stage of the training, leading to higher resilience against gradient leakage attacks. Given that the noise variance is the product of sensitivity and noise scale (recall Theorem 1), several possible dynamic strategies are promising, such as having the sensitivity calibrated to the -norm of the gradients or having a smoothly decaying noise scale such that the noise variance follows the trend of gradient updates across the training iterations.

IV-A Dynamic Sensitivity

We describe three different strategies for implementing dynamic sensitivity, aiming to derive declining noise variance as the training progresses in iterations: -norm based sensitivity, denoted by DP-dynS[-max], dynamic decaying clipping method, denoted by DP-dynS[], and the combination of both, denoted by DP-dynS.

DP-dynS[-max]. Given that the clipping with a fixed bound is performed on the -norm of the layer-wise gradients of per-example in a batch, one way to accommodate the noise variance calibrated to the norm of the gradient is to use the max norm measured on per-example gradients in a batch as the sensitivity of the training function for iteration (recall Definition 2). Consider two scenarios: (1) When any of the per-example gradients in a batch is larger than the clipping bound , the sensitivity is set to , and however, (2) when norm of all per-example gradients in a batch is smaller than the pre-defined clipping bound , the clipping bound is unfortunately a loose estimation of the true sensitivity of function at iteration . If we instead define the sensitivity of by the max norm among these per-example gradients in the batch, we will correct the problems in the above scenario (2). In summary, the -max sensitivity will take the smallest of the max norm and the clipping bound . Given that the -norm of the gradients closely follows the trend of gradient changes as the training progresses in iterations, our dynamic sensitivity approach will have adaptive sensitivity , and hence adaptive noise variance when a fixed is used, and consequently inject larger noise at the early stage of training and smaller differential privacy noise at the later stage of training.

Note that unlike mean estimation [51, 52], knowing the norm-based sensitivity of the multi-dimension gradient vector on per-example gradients in deep learning training cannot help disclosing the gradient value at each coordinate. Meanwhile, the -max norm actually tracks the sensitivity of the training function at each iteration. Yet composition theorem only requires the individual training function component to be differentially private, with each training function component taking care of its own sensitivity. This makes the -max sensitivity a possible choice for sensitivity optimization.

DP-dynS[Cdecay]. The second dynamic sensitivity strategy is to use dynamic decaying clipping. Given that clipping is used to regulate the largest change of gradients during the training, as the training progresses, the maximum changes of the gradients decrease, and gradually approach zero when the training starts to converge. Hence, one may use dynamic decaying clipping method to estimate the maximum changes of the gradients and define the dynamic sensitivity accordingly. Motivated by the dynamic learning rate [53], we propose a set of decaying policies for implementing the decay-clipping based dynamic sensitivity with , and being the control parameters for the decaying rate.

Linear decay: The clipping decays in a linear trend, defined as where is the smooth controlling term for clipping at iteration .

Exponential decay: The clipping decays in an exponential trend defined by an exponential function: where is the control term for exponential trending.

Cyclic decay: The clipping decay follows a decaying triangular cyclic policy originated from the cyclic learning rate [54]. With step size , for odd and for even . itself decays every cyclic triangle: .

DP-dynS. The third dynamic sensitivity strategy is to combine -max sensitivity and clipping decay sensitivity such that we can take the advantage of the best side of both worlds. Concretely, although -max sensitivity is a tighter estimation of the maximum changes in gradients, it may still benefit from additional improvements in some situations, in which the -max sensitivity happens to be defined by the clipping bound . In such situations, by integrating the decay clipping sensitivity with the -max sensitivity, the decaying clipping will be used instead of the initial large clipping bound. By Lemma 1, both the decay clipping-based dynamic sensitivity and -max sensitivity do not have a direct impact on the differential privacy guarantee. However, with an accuracy target, the model with combined sensitivity optimizations may reach the accuracy and terminate the training earlier, resulting in smaller accumulated privacy spending.

| baseline | dynS[Cdecay] | dynS[-max] | dynS | dyn | dyn[S,] | |

| decay | decay | decay | ||||

| -max | -max | -max | ||||

| decay | decay |

IV-B Dynamic Sensitivity with Dynamic

Dynamic noise scale with a decaying policy is an alternative approach to supporting dynamic Gaussian noise variance over the number of training iterations, denoted by DP-dyn. Recall that the per-example gradients in early iterations are more informative and thus more vulnerable against gradient leakage attacks. With a dynamic decaying noise scale, we can ensure a larger noise scale and thus larger noise variance in the early stage of training and smoothly decay the scale as training progresses, such that the per-example gradients are perturbed with larger noise in early iterations and relatively less exploitable by the attacker, even when a fixed sensitivity defined by the fixed clipping bound is used. In our prototype implementation, we implement the dynamic decaying noise scale policies with a starting noise scale , by employing the same set of three decay policies, denoted by , used in designing our decay-clipping dynamic sensitivity.

When combining dynamic with dynamic sensitivity based on both -max and decay clipping, denoted by DP-dyn[S,], we expect the best dynamic parameter optimization for differentially private deep learning. Algorithm 2 provides a sketch of the pseudo-code of DP-dyn[S,], which modifies DP-baseline by adding dynamic decay clipping (line 3), dynamic decay noise scale (line 4), and combining with dynamic -max sensitivity (line 11) for each iteration of the training. Table I provides a summary of comparison for the five different dynamic privacy parameter optimizations and the DP-baseline with respect to the three privacy parameters: clipping , sensitivity and noise scale . Figure 4 measures the noise variance on MNIST with three different settings: (i) fixed and fixed (DP-baseline), (ii) -max sensitivity and fixed (DP-dynS) and (iii) DP-dyn[, ] with -max sensitivity and decaying noise scale starting from . Compared to the baseline approach with fixed privacy parameters and our methods with dynamic -max or dynamic clipping, the method of DP-dyn[, ] with both dynamic sensitivity S and dynamic noise scale offers more resilience and better accuracy because it can add larger noise in the early stage of training and small noise as the training progresses towards convergence.

V Privacy Analysis Metrics

For DP algorithms with fixed-parameter strategies, such as fixed clipping , fixed noise scale and fixed sensitivity , no matter which one of the five privacy accounting methods outlined in Section III-A we choose to use, the total privacy spending will be the same, given the sampling rate and . Hence, the evaluation will focus on measuring and comparing the accuracy performance of the model trained by using these DP-algorithms. However, for DP-algorithms with dynamic privacy parameter strategies, additional metrics should be considered. In this section, we describe four complementary metrics to evaluate and compare the effectiveness of alternative approaches to different differentially private deep learning: (1) model accuracy, (2) differential privacy, (3) resilience against gradient leakage attacks.

Accuracy with a target privacy budget. This metric is designed for performing utility analysis with respect to model accuracy under the same differential privacy spending (budget). When the privacy budget is given and fixed for comparing different algorithms, according to the privacy accounting methods outlined in Section III-A, the following parameters are fixed: the total iterations (), the sampling rate , the privacy parameter . By Lemma 1, the lower bound of noise scale are also fixed. By Theorem 1, the definition of sensitivity will directly impact on how the noise variance is defined. This motivates us to advocate this metric as one of the four principled criteria to compare the baseline DP algorithm that use fixed privacy parameter strategies (see Section III-C) with the alternative DP algorithms that use different dynamic privacy parameter strategies (see Section IV). Following a similar setting, one may conduct privacy analysis to compare alternative DP algorithms under a fixed privacy budget and a pre-defined total training iteration () on different datasets. This allows us to measure the level of differential privacy spending using all five representative privacy accounting methods (recall Section III-A), and to gain a deeper understanding of the difference among different accounting methods in tracking privacy spending over the same iterations of training on the same training set. The DP-algorithm with the highest accuracy performance will be the winner in this analysis.

Next, we introduce two additional privacy analysis metrics: (i) privacy with a target accuracy and a fixed noise scale and (ii) privacy with a target accuracy and a fixed noise variance . They are designed specifically to evaluate and compare alternative DP algorithms that use dynamic parameter strategies with baseline DP-algorithms that use fixed-parameter strategies. Both metrics are designed for conducting privacy analysis under the same utility goal defined by the target accuracy. A proper target accuracy should be chosen such that it can be achieved by every alternative algorithm being compared during privacy analysis. Given a fixed target model accuracy, with a fixed sampling rate and fixed , some algorithms may terminate the training before reaching its pre-defined total iterations . This may result in spending less privacy budget , regardless of which one of the five accounting methods is used to track the accumulative privacy spending for privacy analysis.

Privacy with a target accuracy and a fixed . By Lemma 1, noise scale defines the lower bound of the accumulative privacy spending over the training iterations used to achieve the given target accuracy. Hence, those DP algorithms that can achieve the target model accuracy before reaching the pre-defined iterations will terminate the training earlier, which may result in smaller accumulated privacy spending. Based on Gaussian Mechanism, when a DP algorithm uses a dynamic sensitivity with a fixed noise scale , it will result in a dynamically changing noise variance , following the trend of dynamic sensitivity. In this case, even the fixed will lead to the same privacy spending under the same iterations, the dynamic sensitivity optimized DP training may achieve the target accuracy and terminate earlier. This will result in smaller accumulated privacy spending. However, for DP algorithms with fixed privacy parameters, e.g., a fixed noise scale is combined with a fixed sensitivity , then a constant noise variance will be used in each iteration of the training, which may result in excessive noise in the later stage of the training, degrading the utility without any gain on privacy.

Privacy with a target accuracy and a fixed . This metric allows us to conduct privacy analysis from a very different perspective. Based on Theorem 1 and Lemma 1, the Gaussian noise variance is defined as the multiplication of sensitivity and noise scale . With a fixed noise variance , all DP-algorithms will inject the same amount of noise in each of the total training iterations, regardless of whether they are using fixed-parameter strategies or dynamic parameter strategies. However, the accumulated privacy spending for DP-algorithms with dynamic parameter strategies will be smaller compared to the accumulative privacy cost for DP-algorithms with fixed-parameter strategies. Concretely, for DP algorithms with dynamic sensitivity, under a fixed noise variance , the noise scale can no longer be fixed, and it is determined in a reverse trend of the dynamic sensitivity . As the training progresses, the dynamic sensitivity tends to align closely with the declining trend of gradient updates, and we will have to use a large noise scale to keep noise variance constant. As a result, DP-algorithms with dynamic sensitivity tend to result in smaller privacy spending () compared to the DP-algorithms with fixed parameters.

Resilience against Gradient Leakage Attacks. This metric is designed to measure and compare alternative DP-algorithms with respect to attack resilience, which can be defined using both (i) adverse effect of the attack, measured by the accuracy performance under attack, and (ii) attack cost in terms of the time spent to perform reconstruction inference on the leaked gradients and whether the inference results in a successful disclosure of a private training example. A DP-algorithm is considered highly resilient in the effectiveness evaluation, if under the same privacy spending, this algorithm outperforms other alternatives with the highest attack resilience.

VI Experimental Evaluation

| # train | # test | # features | # class | # iter. | batch size | acc. | |

|---|---|---|---|---|---|---|---|

| MNIST | 60000 | 10000 | 28*28 | 10 | 10000 | 600 | 0.989 |

| Fashion-MNIST | 60000 | 10000 | 28*28 | 10 | 10000 | 600 | 0.875 |

| CIFAR10 | 50000 | 10000 | 32*32*3 | 10 | 10000 | 500 | 0.687 |

| LFW | 2267 | 756 | 32*32*3 | 62 | 6000 | 22 | 0.766 |

| Purchase-10 | 10000 | 2000 | 600 | 10 | 5000 | 100 | 0.825 |

| Purchase-50 | 10000 | 2000 | 600 | 50 | 5000 | 100 | 0.667 |

We evaluate alternative approaches to deep learning with differential privacy using six benchmark datasets. Table II list the six datasets with training set, test set, #features, #classes, total training iterations , batch size , and non-private accuracy. MNIST is a grey-scale hand-written digit image dataset. Fashion-MNIST is an image dataset associated with a label from 10 clothing classes such as T-shirt, Trouser, and Pullover. CIFAR10 is a dataset of color images of objects. LFW is a dataset, originated from 13233 images of 5749 classes, by resizing the original image size of to and extracting the ’interesting’ region. Since most of the classes have a very limited number of data points, we consider 3023 images from 62 classes that have more than 20 images per class. A 4:1 train-test ratio is applied. Purchase is a dataset adopted from Kaggle challenge of “acquire valued shopper”, with shopping histories for several thousand individuals. We consider a simplified version with 197,325 records, each with 600 binary features converted from the non-numerical raw data. Similar to [15], we cluster the data record into 10 and 50 classes for classification. A 4:1 ratio is used for training and test data split. For the four image datasets, a deep convolutional neural network with two convolutional layers and one fully connected layer is used. For the two attribute datasets, a fully connected model with two hidden layers is applied.

| mnist | Fashion-MNIST | cifar10 | LFW | ||

| non-private | attack iter | 11.5 | 12.4 | 28.3 | 25 |

| ASR | 1 | 1 | 0.973 | 1 | |

| MSE | 1.50E-05 | 2.59E-05 | 2.20E-04 | 2.20E-04 | |

| DP-baseline | attack iter | 13.4 | 14.1 | 30.5 | 26.3 |

| ASR | 0.23 | 0.25 | 0.16 | 0.13 | |

| MSE | 0.547 | 0.588 | 0.401 | 0.352 | |

| Quantileclip [55] | attack iter | 12.9 | 14.3 | 30.5 | 27.1 |

| ASR | 0.64 | 0.67 | 0.47 | 0.49 | |

| MSE | 0.097 | 0.121 | 0.203 | 0.229 | |

| Adaclip [56] | attack iter | 12.6 | 14.1 | 29.9 | 26.4 |

| ASR | 0.72 | 0.76 | 0.58 | 0.61 | |

| MSE | 0.033 | 0.041 | 0.129 | 0.144 | |

| DP-dyn[,] | attack iter | 300 | 300 | 300 | 300 |

| ASR | 0 | 0 | 0 | 0 | |

| MSE | 0.886 | 0.904 | 0.912 | 0.897 | |

VI-A Resilience against Gradient Leakages

The first set of experiments measures and compares the resilience of alternative DP algorithms with fixed-parameter strategies, existing adaptive clipping approaches, and dynamic parameter strategies against gradient leakage attacks. The following three attack metrics are used to evaluate the adverse effect and cost of gradient leakage attacks, which in turn can be used to measure the resilience of different DP approaches in the presence of attacks. They are attack success rate (ASR), #attack iterations (attack iter) to succeed the inference with 300 as the default, and attack reconstruction distance in MSE (mean square error). The successful attack reconstruction is defined by MSE smaller than 0.70. We report the attack iterations and attack reconstruction distance only for those successful attack examples. For attacks with complete failure, we record their reconstruction distance at the pre-defined maximum reconstruction/inference iterations of 300. Table III reports the attack results under non-private models, the DP-baseline, DP-dyn[S,], and two representative adaptive clipping methods: quantile-based clipping [55], Adaclip [56] and for the four image datasets. AdaClip performs the clipping bound estimation based on the coordinates and adaptively add different noise levels to different dimensions of the gradients, whereas the quantile clipping estimates the clipping bound using the quantile of the unclipped gradient norm. The default setting of , is used for DP-baseline algorithms (fixed parameters) and also used as the initial value for quantile-based clipping [55] and DP-dyn[S,]. The attack is performed on the gradients from the first training iteration as gradients at early training iterations tend to leak more information than gradients in the later stage of the training [5]. Figure 5 illustrates the reconstruction results. We make three observations: (1) For all four datasets, the gradients in non-private models are vulnerable to the gradient leakage attack. (2) The quantile-based clipping [55] and Adaclip [56] method can slightly improve the resilience against the gradient leakage attack compared to non-private training. The two adaptive clipping approaches focus mainly on reducing the noise variance rather than gradient attack resilience. Therefore, the DP-baseline, with large and constant noise, is more effective with high resilience against the gradient leakage threats. (3) Compared to both DP-baseline and existing adaptive clipping approaches, DP-dyn[S,] offers the highest resilience against gradient leakage attacks. This is because DP-dyn[S,] adds larger noise at the early iterations of the training thanks to the combined effect of dynamic sensitivity and dynamic noise scale, which effectively creates difficulty for the gradient leakage attack to succeed. The high attack reconstruction distance in Table III shows strong attack resilience against gradient leakage threats. The visualization in Figure 5 also provides an intuitive illustration of this effect. For the two existing approaches, the adaptive clipping brings down the differential privacy noise compared to DP-baseline and thus shows less improvement on the resilience of DP training against gradient leakage attacks, compared to DP-baseline. However, differential privacy noise injection combined with dynamic sensitivity and dynamic noise scale will offer the best defense against gradient leakage threats.

In the rest of the experiments, we provide empirical results and analysis to further illustrate the benefits of the proposed gradient leakage resilient deep learning with dynamic differential privacy parameter optimizations. We first demonstrate how the proposed DP-dyn[,] achieves higher accuracy compared to the DP-baseline at the same () differential privacy level. Then we show how DP-dyn[,] obtains a stronger differential privacy guarantee in terms of smaller spending at a target accuracy. Finally, we show that in addition to high resilience against gradient leakage, the proposed dynamic privacy parameter optimizations have an acceptable time cost compared to the conventional DP deep learning baseline. Furthermore, our approach consistently offers high test accuracy on all benchmark datasets, compared to both the conventional DP deep learning baseline and existing adaptive clipping proposals.

| C=1 | C=2 | C=4 | C=8 | C=16 | C=32 | ||

| MNIST | non-private | 0.9892 | |||||

| DP-baseline | 0.9450 | 0.963 | 0.960 | 0.945 | 0.915 | 0.881 | |

| DP-dynS[Cdecay] | 0.948 | 0.966 | 0.964 | 0.951 | 0.931 | 0.919 | |

| DP-dynS[-max] | 0.959 | 0.975 | 0.977 | 0.983 | 0.975 | 0.976 | |

| DP-dynS | 0.955 | 0.975 | 0.978 | 0.980 | 0.978 | 0.977 | |

| Fashion-MNIST | non-private | 0.875 | |||||

| DP-baseline | 0.825 | 0.832 | 0.833 | 0.831 | 0.812 | 0.765 | |

| DP-dynS[Cdecay] | 0.824 | 0.834 | 0.839 | 0.837 | 0.829 | 0.822 | |

| DP-dynS[-max] | 0.830 | 0.840 | 0.845 | 0.844 | 0.840 | 0.840 | |

| DP-dynS | 0.829 | 0.841 | 0.848 | 0.845 | 0.840 | 0.840 | |

| CIFAR10 | non-private | 0.687 | |||||

| DP-baseline | 0.576 | 0.595 | 0.608 | 0.588 | 0.538 | 0.397 | |

| DP-dynS[Cdecay] | 0.575 | 0.596 | 0.610 | 0.592 | 0.564 | 0.507 | |

| DP-dynS[-max] | 0.583 | 0.603 | 0.616 | 0.609 | 0.591 | 0.592 | |

| DP-dynS | 0.582 | 0.604 | 0.621 | 0.612 | 0.595 | 0.595 | |

| LFW | non-private | 0.766 | |||||

| DP-baseline | 0.704 | 0.709 | 0.692 | 0.647 | 0.595 | 0.406 | |

| DP-dynS[Cdecay] | 0.692 | 0.714 | 0.703 | 0.675 | 0.643 | 0.606 | |

| DP-dynS[-max] | 0.715 | 0.722 | 0.738 | 0.71 | 0.683 | 0.645 | |

| DP-dynS | 0.711 | 0.725 | 0.749 | 0.716 | 0.685 | 0.662 | |

| Purchase-10 | non-private | 0.825 | |||||

| DP-baseline | 0.731 | 0.734 | 0.744 | 0.739 | 0.716 | 0.683 | |

| DP-dynS[Cdecay] | 0.727 | 0.738 | 0.749 | 0.744 | 0.729 | 0.721 | |

| DP-dynS[-max] | 0.751 | 0.766 | 0.769 | 0.763 | 0.759 | 0.761 | |

| DP-dynS | 0.752 | 0.768 | 0.773 | 0.77 | 0.765 | 0.766 | |

| Purchase-50 | non-private | 0.667 | |||||

| DP-baseline | 0.549 | 0.561 | 0.571 | 0.574 | 0.556 | 0.503 | |

| DP-dynS[Cdecay] | 0.545 | 0.569 | 0.577 | 0.581 | 0.579 | 0.562 | |

| DP-dynS[-max] | 0.571 | 0.573 | 0.591 | 0.595 | 0.587 | 0.58 | |

| DP-dynS | 0.573 | 0.577 | 0.596 | 0.604 | 0.591 | 0.593 | |

VI-B Dynamic Sensitivity: Accuracy Analysis

This set of experiments compares the DP-baseline with three dynamic sensitivity methods on six datasets: DP-dynS[-max], DP-dynS[Cdecay], and DP-dynS, which denotes the dynamic sensitivity defined by combining -max sensitivity with dynamic clipping. Given that both DP-baseline and DP-dynS[-max] use a constant clipping method with fixed pre-defined clipping bound, for a fair comparison, we vary the setting of clipping bound from 1 to 32 by the interval of the power of 2 and set . Table IV reports the results. We make three observations. First, DP-dynS[-max] consistently outperforms DP-baseline under all settings of clipping bounds on all six datasets. This is because by Lemma 1, DP-baseline uses constant noise variance, and injects constant noise in each iteration of the training, regardless of the decreasing trend of gradients in the later stage of the training. Also, DP-baseline is highly sensitive to the settings of clipping bound for all six datasets. It results in lower accuracy when the clipping bound is set too large (e.g. C=16, 32) or too small bound (e.g. C=1). In contrast, DP-dynS[-max] uses dynamic -max sensitivity, which changes from iteration to iteration and closely aligns with the declining trend of gradients throughout the training. Second, DP-dynS[-max] consistently outperforms DP-dynS[Cdecay], showing that noise variance defined by -max sensitivity and noise scale offers tighter alignment than dynamic sensitivity defined by decaying clipping bound . A simple policy of linearly decaying from the initial clipping bound to is used in this experiment. Third, DP-dynS takes the best from both -max sensitivity and dynamic clipping and consistently outperforms all other alternative approaches on all six datasets. Based on our empirical observations, it is hard to provide a scalable and stable performance for deep learning with DP property when using dynamic clipping to approximate the sensitivity S for two reasons. (1) Even with dynamic decaying clipping like the DP-dynS[Cdecay] or AdaClip or Quantile Clip (see Table XI), the dynamic clipping relies on the initial setting of the clipping upper bound and the minimum clipping bound as the training progresses toward convergence to ensure the approximation of the sensitivity is correct. Such max and min clipping bound is to some extent dataset and training task dependent, as shown in Table IV. In comparison, our proposed DP-dynS[-max] is a scalable and much stable dynamic DP parameter optimization in terms of attack resilience, accuracy, convergence, and privacy spending.

| Privacy spending () | acc | ||||||

| BaseC | AdvC | OptC | zCDP | MA | |||

| MNIST | DP-baseline | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.960 |

| DP-dynS[Cdecay] | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.964 | |

| DP-dynS[-max] | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.977 | |

| DP-dynS | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.978 | |

| Fashion-MNIST | DP-baseline | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.833 |

| DP-dynS[Cdecay] | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.839 | |

| DP-dynS[-max] | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.845 | |

| DP-dynS | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.848 | |

| CIFAR10 | DP-baseline | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.608 |

| DP-dynS[Cdecay] | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.610 | |

| DP-dynS[-max] | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.616 | |

| DP-dynS | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.621 | |

| LFW | DP-baseline | 74.024 | 5.503 | 5.037 | 0.893 | 0.636 | 0.692 |

| DP-dynS[Cdecay] | 74.024 | 5.503 | 5.037 | 0.893 | 0.636 | 0.703 | |

| DP-dynS[-max] | 74.024 | 5.503 | 5.037 | 0.893 | 0.636 | 0.738 | |

| DP-dynS | 74.024 | 5.503 | 5.037 | 0.893 | 0.636 | 0.749 | |

| purchase-10 | DP-baseline | 61.689 | 4.952 | 4.546 | 0.814 | 0.580 | 0.744 |

| DP-dynS[Cdecay] | 61.689 | 4.952 | 4.546 | 0.814 | 0.580 | 0.749 | |

| DP-dynS[-max] | 61.689 | 4.952 | 4.546 | 0.814 | 0.580 | 0.769 | |

| DP-dynS | 61.689 | 4.952 | 4.546 | 0.814 | 0.580 | 0.773 | |

| purchase-50 | DP-baseline | 61.689 | 4.952 | 4.546 | 0.814 | 0.580 | 0.571 |

| DP-dynS[Cdecay] | 61.689 | 4.952 | 4.546 | 0.814 | 0.580 | 0.577 | |

| DP-dynS[-max] | 61.689 | 4.952 | 4.546 | 0.814 | 0.580 | 0.591 | |

| DP-dynS | 61.689 | 4.952 | 4.546 | 0.814 | 0.580 | 0.596 | |

VI-C Dynamic Sensitivity: Privacy Analysis

Privacy under five accounting methods. The first set of experiments for privacy analysis is designed to measure privacy spending using five privacy accounting methods for DP-baseline and three alternative optimizations of dynamic sensitivity: DP-dynS[-max], DP-dynS[Cdecay] and DP-dynS. Table V reports the results. We make two observations. First, all five accounting methods show consistent performance for all four DP algorithms on the six benchmark datasets, with Moment Accountant as the most efficient tracking of privacy spending followed by zCDP. Second, DP-dynS consistently outperforms both DP-baseline and the other two dynamic sensitivity algorithms with high accuracy performance, showing that the combo of sensitivity with decay clipping method is more effective in enabling the sensitivity to be aligned with the trend of gradient updates during the training.

Privacy under a target accuracy and a fixed . In this second set of experiments, we analyze and compare DP-baseline with three alternative dynamic sensitivity optimizations in terms of their privacy spending under a target accuracy and a fixed noise scale . We use the accuracy achieved by DP-baseline at iterations as the target accuracy for each of the six datasets (recall Table 2). Table VI reports the results. DP-dynS is the winner consistently across all six datasets because it is the first to achieve the target accuracy with the smallest privacy spending and hence it is the first to terminate the training. Concretely, it takes 5137, 5532, 6336, 3645, 3690, and 3995 iterations for DP-dynS to achieve the target accuracy for MNIST, Fashion-MNIST, CIFAR10, LFW, Purchase-10, and Purchase-50 respectively. As a result, its accumulated privacy spending is much less than the other three approaches.

| Privacy spending () | iter | ||||||

| BaseC | AdvC | OptC | zCDP | MA | |||

| MNIST (0.960) | DP-baseline | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 10000 |

| DP-dynS[Cdecay] | 98.646 | 6.518 | 5.930 | 1.034 | 0.735 | 7996 | |

| DP-dynS[-max] | 66.858 | 5.188 | 4.756 | 0.848 | 0.604 | 5419 | |

| DP-dynS | 63.379 | 5.029 | 4.615 | 0.825 | 0.588 | 5137 | |

| Fashion-MNIST (0.833) | DP-baseline | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 10000 |

| DP-dynS[Cdecay] | 100.200 | 6.578 | 5.983 | 1.042 | 0.741 | 8124 | |

| DP-dynS[-max] | 71.878 | 5.411 | 4.955 | 0.880 | 0.626 | 5826 | |

| DP-dynS | 68.251 | 5.250 | 4.812 | 0.857 | 0.610 | 5532 | |

| CIFAR10 (0.608) | DP-baseline | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 10000 |

| DP-dynS[] | 109.044 | 6.919 | 6.280 | 1.088 | 0.773 | 8841 | |

| DP-dynS[-max] | 80.809 | 5.794 | 5.294 | 0.934 | 0.664 | 6550 | |

| DP-dynS | 78.169 | 5.682 | 5.195 | 0.918 | 0.653 | 6336 | |

| LFW (0.692) | DP-baseline | 74.024 | 5.503 | 5.037 | 0.893 | 0.636 | 6000 |

| DP-dynS[] | 62.417 | 4.985 | 4.576 | 0.819 | 0.583 | 5059 | |

| DP-dynS[-max] | 47.331 | 4.254 | 3.919 | 0.711 | 0.508 | 3836 | |

| DP-dynS | 44.975 | 4.132 | 3.809 | 0.693 | 0.495 | 3645 | |

| purchase-10 (0.744) | DP-baseline | 61.689 | 4.952 | 4.546 | 0.814 | 0.580 | 5000 |

| DP-dynS[] | 58.926 | 4.822 | 4.430 | 0.795 | 0.567 | 4776 | |

| DP-dynS[-max] | 46.615 | 4.217 | 3.886 | 0.706 | 0.504 | 3778 | |

| DP-dynS | 45.530 | 4.161 | 3.835 | 0.697 | 0.498 | 3690 | |

| purchase-50 (0.571) | DP-baseline | 61.689 | 4.952 | 4.546 | 0.814 | 0.580 | 5000 |

| DP-dynS[Cdecay] | 59.308 | 4.840 | 4.446 | 0.798 | 0.568 | 4807 | |

| DP-dynS[-max] | 50.871 | 4.433 | 4.080 | 0.738 | 0.526 | 4123 | |

| DP-dynS | 49.292 | 4.354 | 4.009 | 0.726 | 0.518 | 3995 | |

| BaseC | AdvC | OptC | zCDP | MA | acc | ||

| MNIST (C=4) | DP-baseline | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.9596 |

| DP-dynS[Cdecay] | 105.173 | 5.889 | 5.736 | 1.119 | 0.806 | 0.9596 | |

| DP-dynS[-max] | 34.412 | 1.866 | 1.787 | 0.436 | 0.314 | 0.9596 | |

| DP-dynS | 32.529 | 1.783 | 1.694 | 0.412 | 0.307 | 0.9596 | |

| MNIST (C=8) | DP-baseline | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.945 |

| DP-dynS[Cdecay] | 114.531 | 6.552 | 6.341 | 0.994 | 0.675 | 0.945 | |

| DP-dynS[-max] | 28.828 | 1.589 | 1.511 | 0.378 | 0.283 | 0.945 | |

| DP-dynS | 28.015 | 1.426 | 1.412 | 0.366 | 0.269 | 0.945 | |

| CIFAR10 (C=4) | DP-baseline | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.608 |

| DP-dynS[Cdecay] | 120.119 | 7.006 | 6.662 | 1.153 | 0.812 | 0.608 | |

| DP-dynS[-max] | 116.717 | 6.292 | 6.330 | 1.114 | 0.790 | 0.608 | |

| DP-dynS | 113.458 | 5.557 | 5.536 | 1.044 | 0.763 | 0.608 | |

| CIFAR10 (C=8) | DP-baseline | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.588 |

| DP-dynS[Cdecay] | 116.304 | 6.316 | 6.405 | 1.137 | 0.792 | 0.588 | |

| DP-dynS[-max] | 109.405 | 5.865 | 5.886 | 1.063 | 0.754 | 0.588 | |

| DP-dynS | 105.256 | 5.849 | 5.834 | 1.022 | 0.748 | 0.588 | |

| MNIST (C=4) | DP-baseline | 523.375 | 232.117 | 258.635 | 4.109 | 21.174 | 0.9773 |

| DP-dynS[Cdecay] | 155.677 | 8.575 | 9.037 | 1.370 | 0.944 | 0.9773 | |

| DP-dynS[-max] | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 0.9773 | |

| DP-dynS | 117.266 | 7.036 | 6.687 | 1.149 | 0.806 | 0.9773 | |

Privacy under a target accuracy and a fixed . The third set of experiments for privacy analysis measures the privacy spending under a target accuracy and a fixed noise variance . We compare DP-baseline and the three alternative DP algorithms with three different dynamic sensitivity strategies DP-dynS[], DP-dynS[], and DP-dynS on MNIST and CIFAR10. The accuracy of DP-baseline is used as the target accuracy for both MNIST and CIFAR10. We set , and and conduct the experiments with two fixed noise variance settings: with and with . Based on Theorem 2 and Lemma 1, when the noise variance is fixed, with dynamic sensitivity , the noise scale will follow the trend of in a reverse trend: as continues to decline with the training progresses in iterations, the noise scale will increase to keep the noise scale constant. As a result, larger sensitivity in the early stage of the training will lead to a smaller noise scale and larger privacy spending to protect informative gradients. As the training is approaching the end, the sensitivity will become smaller, resulting in larger and smaller privacy spending. Table VII shows the results. Consider the first four scenarios, in which the target accuracy is set to the accuracy of DP-baseline for each dataset. We make three observations: (1) DP-dynS consistently outperforms the other three alternatives for the two noise variance settings on both datasets when given a target accuracy and a fixed noise variance . (2) DP-dynS[-max] consistently ranked as the second winner with significantly smaller privacy spending than DP-dynS[] for all four scenarios under all five privacy accounting methods. This further demonstrates that using adaptive clipping with decay function is still a loose estimation compared to using the -max sensitivity for differentially private deep learning. Hence, DP-dynS[] consumes more privacy budget under both fixed noise variances for both datasets, compared to DP-dynS[-max] and DP-dynS. (3) When comparing the privacy spending on the same dataset with two different fixed noise variances, or when comparing two different datasets on the same fixed noise variance, it is interesting to note that DP-baseline has the same privacy spending under both variance settings on both MNIST and CIFAR10. This is because the privacy spending is anti-correlated to noise scale (recall Lemma 1) and not sensitive to the different settings of clipping bound for the constant clipping method. However, for DP algorithms with dynamic sensitivity, the privacy spending is also training data dependent. For each of the two fixed noise variances, both with , all three dynamic sensitivity algorithms on CIFAR10 will incur higher privacy spending while their respective privacy spending on MNIST will be smaller. This confirms the common knowledge that the gradient update trend during training is model-dependent and dataset-dependent.

The last scenario is included for privacy analysis under two different target accuracy. For MNIST with , we set the target accuracy to the accuracy of DP-dynS[-max] (0.9773) instead of 0.9596 from DP-baseline. We make two interesting observations. (1) DP-dynS remains the winner with strong privacy guarantee at the smallest privacy spending , followed by DP-dynS[]. (2) To achieve the target accuracy of 0.9773, DP-baseline has to enforce a smaller to maintain the fixed , resulting in much higher spending of privacy budget according to all five privacy accounting methods. For MA, DP-baseline results in for target accuracy of 0.9773 compared to for the target accuracy of 0.9596 obtained at . For zCDP and base composition, DP-baseline spent of the privacy budget for achieving the target accuracy of 0.9773, compared to the privacy spent for achieving the target accuracy of 0.9596 at .

VI-D Dynamic Noise Scale Optimization

In this section, we evaluate the effectiveness of incorporating dynamic noise scale into DP-baseline and the three alternative dynamic sensitivity optimized DP algorithms.

| BaseC | AdvC | OptC | zCDP | MA | ||

| 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | ||

| fix | DP-baseline | 0.9596 | ||||

| DP-dynS[Cdecay] | 0.9639 | |||||

| DP-dynS[-max] | 0.9773 | |||||

| DP-dynS | 0.9778 | |||||

| iteration | 10000 | |||||

| adaptive (linear) | DP-dyn | 0.962 | 0.9614 | 0.9608 | 0.9618 | 0.9614 |

| DP-dyn[S[Cdecay],] | 0.9673 | 0.965 | 0.9641 | 0.9653 | 0.9649 | |

| DP-dyn[S[-max],] | 0.9788 | 0.978 | 0.9775 | 0.9781 | 0.9778 | |

| DP-dyn[S,] | 0.9791 | 0.9783 | 0.9779 | 0.9783 | 0.9782 | |

| Iteration | 9646 | 9481 | 8908 | 9494 | 9450 | |

| adaptive (exponential) | DP-dyn | 0.9616 | 0.9604 | 0.9597 | 0.9608 | 0.9603 |

| DP-dyn[S[Cdecay],] | 0.9681 | 0.9652 | 0.9647 | 0.9665 | 0.9665 | |

| DP-dyn[S[-max],] | 0.979 | 0.9775 | 0.9775 | 0.9786 | 0.9781 | |

| DP-dyn[S,] | 0.9803 | 0.9781 | 0.9781 | 0.9789 | 0.9787 | |

| iteration | 9678 | 9311 | 8955 | 9553 | 9501 | |

| adaptive (cyclic) | DP-dyn | 0.9601 | 0.9599 | 0.9596 | 0.9599 | 0.9599 |

| DP-dyn[S[Cdecay],] | 0.9652 | 0.9644 | 0.9641 | 0.9648 | 0.9645 | |

| DP-dyn[S[-max],] | 0.9784 | 0.9779 | 0.9776 | 0.9781 | 0.9779 | |

| DP-dyn[S,] | 0.9787 | 0.9781 | 0.9779 | 0.9785 | 0.9784 | |

| iteration | 8019 | 7724 | 7251 | 7993 | 7876 | |

Accuracy analysis under a target privacy budget . The first set of experiments evaluate and compare these eight alternative DP algorithms on MNIST under a given target privacy budget such that each algorithm will terminate when its target privacy budget is exhausted. Unless otherwise stated, , and for MNIST. Table VIII reports the results. We make three observations. (1) For DP-baseline and the three dynamic sensitivity algorithms under fixed , we measure and compare their accuracy since they have the same privacy spending as shown in Table V when reaching iterations. DP-dynS and DP-dynS[-max] are clear winners with DP-dynS slightly higher in accuracy. (2) By Lemma 1, the decaying noise scale will lead to increased per-iteration spending for early iterations in the training. As a result, the training is terminated early when the accumulated privacy loss reaches the target privacy budget under each accounting method. Different privacy composition methods accumulate heterogeneous privacy spending differently and result in different ending iterations. (3) By integrating dynamic noise scale optimization, one can further improve accuracy performance under all three noise scale decay policies compared to their corresponding algorithms under the fixed . DP-dyn[S,] is consistently the best performing algorithm under all three decaying policies. Although DP-dyn with only dynamic noise scale slightly outperforms DP-baseline under the three decaying policies, dynamic sensitivity approaches consistently outperform DP-dyn in accuracy performance, showing the critical role of sensitivity in achieving high training utility of DP algorithms. (4) Empirically, noise scale decay with the exponential trend has the best accuracy performance.

| BaseC | AdvC | OptC | zCDP | MA | iter | ||

| fix | DP-baseline | 123.354 | 7.450 | 6.740 | 1.159 | 0.823 | 10000 |

| DP-dynS[Cdecay] | 98.646 | 6.518 | 5.930 | 1.034 | 0.735 | 7996 | |

| DP-dynS[-max] | 66.858 | 5.188 | 4.756 | 0.848 | 0.604 | 5419 | |

| DP-dynS | 63.379 | 5.029 | 4.615 | 0.825 | 0.588 | 5137 | |

| linear | DP-dyn[S,] | 51.365 | 4.538 | 4.501 | 0.561 | 0.459 | 5532 |

| exponential | 48.027 | 4.017 | 3.962 | 0.537 | 0.453 | 5541 | |

| cyclic | 56.776 | 4.703 | 4.667 | 0.683 | 0.572 | 5493 | |

Privacy analysis under a target accuracy. In the next set of experiments, we measure and compare the accumulated privacy spending under a given target accuracy to evaluate the effectiveness of the best dynamic parameter optimization DP-dyn[S,] by comparing it with DP-baseline and three alternative dynamic sensitivity algorithms with fixed for MNIST: DP-dynS[-max], DP-dynS[Cdecay], DP-dynS and DP-dyn[S,]. The target accuracy is set to 0.9596, the accuracy of DP-baseline as provided in Table V with and . Table IX reports the results. We make two observations: (1) DP-dyn[S,] with exponential decay provides the best differential privacy guarantee at the smallest privacy spending under all five privacy accounting methods. (2) Both DP-dynS and DP-dynS[-max] terminate slightly earlier than DP-dyn[S,] under the same target accuracy. This is likely because the large early noise in the dynamic noise scale algorithm may have some marginal effect on the convergence, leading to taking a few iterations more than the DP-dynS in reaching the target accuracy. However, DP-dyn[S,] spent the smallest privacy budget to achieve the target accuracy even with a slightly longer training time. This also shows that early termination does not always guarantee smaller privacy spending. The dynamic sensitivity and dynamic noise scale ultimately control the right amount of noise to be added for achieving the best privacy under a target accuracy.

VI-E Time Cost Evaluation

This set of experiments compare the time cost for the non-private algorithm and five alternative approaches to differentially private deep learning using all six benchmark datasets. Table X report the per-iteration time cost measurement in seconds. We make three observations: (1) Incorporating dynamic parameters of sensitivity and noise scale incurs negligible additional cost. This is because all differentially private deep learning algorithms will always need to compute the norm of the gradients in order to perform clipping. The difference between DP algorithms and non-private model indicates the cost of computing norm of the gradients per iteration. (2) The relative cost is smaller when the model is simpler with a smaller number of parameters. For example, the two attribute datasets have a lower time cost than the four image datasets. (3) In addition to model complexity, batch size may also impact this additional time cost. The relative cost is smaller when the batch size is smaller. For example, LFW has a much smaller batch size than the other three image datasets and is relatively faster to run one iteration.

| MNIST | Fashion-MNIST | CIFAR10 | LFW | Purchase | |

| non-private | 0.138 | 0.144 | 0.504 | 0.069 | 0.024 |

| DP-baseline | 1.63 | 1.68 | 1.8 | 0.149 | 0.125 |

| DP-dynS[Cdecay] | 1.70 | 1.72 | 1.83 | 0.152 | 0.131 |

| DP-dynS[-max] | 1.71 | 1.72 | 1.83 | 0.153 | 0.131 |

| DP-dynS | 1.72 | 1.74 | 1.84 | 0.155 | 0.132 |

| DP-dyn[S,] | 1.72 | 1.74 | 1.85 | 0.155 | 0.132 |

VI-F Comparison with Existing Dynamic Clipping

We have shown in Section VI-A that our proposed approach for gradient leakage resilient deep learning with dynamic parameter optimizations offers the strongest resilience against gradient leakage attacks to training data privacy by comparing with both the state of the art DP baseline for deep learning and the two representative adaptive clipping enhancements [56, 55]. In this set of experiments, we provide the accuracy performance comparison to show that our dynamic parameter optimization on both noise scale and sensitivity outperforms both the baseline DP approach [24] and the two adaptive clipping proposals. Table XI compares DP-dyn[S,] with the two approaches in terms of accuracy performance and time cost. We consider the accuracy for the four image datasets and measure the time cost by sec/iteration. We make two observations: (1) The proposed dynamic optimization on both sensitivity and noise-scale consistently outperforms both baseline DP and the two existing proposals to improve the baseline DP with AdaClip or Quantile clipping with larger accuracy performance enhancements. (2) Comparing to the baseline DP [24], the proposed dynamic parameter optimization approach incurs the smallest time cost compared to AdaClip and Quantile clipping while offering the highest accuracy improvement in comparison.

| MNIST | Fashion-MNIST | CIFAR-10 | LFW | ||

| DP-baseline | accuracy | 0.960 | 0.833 | 0.608 | 0.692 |

| cost (s/iter) | 1.63 | 1.68 | 1.8 | 0.149 | |

| Quantileclip [55] | accuracy | 0.971 | 0.846 | 0.614 | 0.733 |

| cost (s/iter) | 2.66 | 2.75 | 2.92 | 0.304 | |

| Adaclip [56] | accuracy | 0.969 | 0.843 | 0.611 | 0.725 |

| cost (s/iter) | 1.81 | 1.84 | 1.94 | 0.185 | |

| DP-dyn[S,] | accuracy | 0.978 | 0.848 | 0.621 | 0.749 |

| cost (s/iter) | 1.72 | 1.74 | 1.85 | 0.155 | |

VII Related Work

We have given an overview of related work in privacy threats and deep learning with differential privacy in Section I. In this section, we focus on the most relevant literature. The first proposal for deep learning with DP [24] has been deployed in google TensorFlow [30]. However, one known problem for deep learning with DP is the degradation of model accuracy compared to the non-DP trained model. Several recent efforts have been put forward for improving the accuracy of the approach proposed in [24]. The most relevant efforts to this paper include the recent zCDP proposal with dynamic privacy budget allocation [27] instead of uniformed privacy budget allocation in [24], and the adaptive clipping proposals represented by AdaClip [56] and Quantile Clipping [55]. The main contribution of Yu et.al [27] is the new privacy accounting method zCDP that can compute the privacy spending when the DP training is using an approximate differential privacy under CDP with parameter to control dynamic privacy budget instead of privacy budget as in [24]. In addition, [27] also proposed a dynamic privacy budget allocation solution to improve [24] which uses fixed privacy budget in every iteration of the DNN training, aiming to improving the accuracy of trained DNN model with DP. [27] did not consider gradient leakage attack and resilience, and also uses the fixed clipping as the approximation to sensitivity in the same way as [24]. AdaClip performs the clipping bound estimation based on the coordinates of the gradient norm and adaptively add different noise levels to different dimensions of the gradients. Quantile clipping is an alternative approach to AdaClip. It estimates the clipping bound during the training iterations using the quantile of the unclipped gradient norm instead of estimation of clipping bound based on the coordinates of the gradient norm in AdaClip. Both approaches are costly to compute dynamic clipping bounds since both needs to compute the clipping estimation on all M layers of a DNN for every example. Also both use the dynamic clipping bound to approximate the sensitivity while maintaining the fixed noise scale throughout the iterations of training to ensure that a sufficient amount of DP-noise is added according to the DP theory. Both are designed to improve accuracy of DNN training with DP but fail to be resilient against gradient leakages. In comparison, our approach is by design both gradient leakage resilient and improving model accuracy thanks to injecting dynamic controlled DP noise. Our dynamic DP parameter optimization approach DP-dyn[-max] incorporates dynamic sensitivity and dynamic noise scale to support decaying noise variance, such that as learning progresses in iterations, we inject less amount of DP controlled noises to the gradients instead of constant noise amount as done in [24]. As a result, our dynamic -max sensitivity provide a tighter DP controlled noise bound than the fixed Gaussian noise variance in used in conventional approaches.

VIII Conclusions

We have presented a suite of algorithms with dynamic privacy parameters for gradient leakage resilient deep learning with differential privacy. We first analyze some limitations of existing algorithms using fixed-parameter strategies that inject constant differential privacy noise to all layers during each training iteration. We then presented a suite of DP algorithms with dynamic parameter optimizations, including dynamic sensitivity mechanisms, dynamic noise scale mechanisms, and different combinations of dynamic parameter strategies. Extensive experiments on six benchmark datasets demonstrate that the proposed differentially private deep learning with dynamic hybrid sensitivity and dynamic decaying noise scale can outperform existing state-of-the-art approaches and other dynamic parameter alternatives with competitive accuracy performance, strong differential privacy guarantee, high resilience against gradient privacy leakage.

Acknowledgement. The authors would like to first thank the associate editor Dr. Grigorios Loukides and the reviewers for their constructive and helpful comments. The authors acknowledge partial support by the National Science Foundation under Grants NSF 2038029, NSF 1564097, and an IBM faculty award.

References

- [1] L. Zhu, Z. Liu, and S. Han, “Deep leakage from gradients,” in NeurIPS, 2019, pp. 14 747–14 756.

- [2] B. Zhao, K. R. Mopuri, and H. Bilen, “idlg: Improved deep leakage from gradients,” arXiv preprint arXiv:2001.02610, 2020.

- [3] Y. Aono, T. Hayashi, L. Wang, and S. Moriai, “Privacy-preserving deep learning via additively homomorphic encryption,” TIFS, vol. 13, no. 5, pp. 1333–1345, 2017.

- [4] J. Geiping, H. Bauermeister, H. Dröge, and M. Moeller, “Inverting gradients - how easy is it to break privacy in federated learning?” in NeurIPS, 2020, pp. 16 937–16 947.

- [5] W. Wei, L. Liu, M. Loper, K.-H. Chow, M. E. Gursoy, S. Truex, and Y. Wu, “A framework for evaluating client privacy leakages in federated learning,” in ESORICS. Springer, 2020, pp. 545–566.

- [6] J. Zhu and M. Blaschko, “R-gap: Recursive gradient attack on privacy,” arXiv preprint arXiv:2010.07733, 2020.

- [7] H. Weng, J. Zhang, F. Xue, T. Wei, S. Ji, and Z. Zong, “Privacy leakage of real-world vertical federated learning,” arXiv preprint arXiv:2011.09290, 2020.

- [8] Y. Liu, X. Zhu, J. Wang, and J. Xiao, “A quantitative metric for privacy leakage in federated learning,” in ICASSP. IEEE, 2021.

- [9] H. Yin, A. Mallya, A. Vahdat, J. M. Alvarez, J. Kautz, and P. Molchanov, “See through gradients: Image batch recovery via gradinversion,” in CVPR. IEEE, 2021.

- [10] J. Qian, H. Nassar, and L. K. Hansen, “Minimal conditions analysis of gradient-based reconstruction in federated learning,” arXiv preprint arXiv:2010.15718, 2020.

- [11] M. Fredrikson, S. Jha, and T. Ristenpart, “Model inversion attacks that exploit confidence information and basic countermeasures,” in CCS. ACM, 2015, pp. 1322–1333.

- [12] C. Song, T. Ristenpart, and V. Shmatikov, “Machine learning models that remember too much,” in CCS. ACM, 2017, pp. 587–601.

- [13] L. Melis, C. Song, E. De Cristofaro, and V. Shmatikov, “Exploiting unintended feature leakage in collaborative learning,” in S&P. IEEE, 2019, pp. 691–706.

- [14] K. Ganju, Q. Wang, W. Yang, C. A. Gunter, and N. Borisov, “Property inference attacks on fully connected neural networks using permutation invariant representations,” in CCS. ACM, 2018, pp. 619–633.

- [15] R. Shokri, M. Stronati, C. Song, and V. Shmatikov, “Membership inference attacks against machine learning models,” in S&P. IEEE, 2017, pp. 3–18.

- [16] M. Nasr, R. Shokri, and A. Houmansadr, “Comprehensive privacy analysis of deep learning: Stand-alone and federated learning under passive and active white-box inference attacks,” in S&P. IEEE, 2019, pp. 739–753.

- [17] B. Hui, Y. Yang, H. Yuan, P. Burlina, N. Z. Gong, and Y. Cao, “Practical blind membership inference attack via differential comparisons,” in NDSS, 2021.

- [18] A. Salem, Y. Zhang, M. Humbert, P. Berrang, M. Fritz, and M. Backes, “Ml-leaks: Model and data independent membership inference attacks and defenses on machine learning models,” in NDSS, 2018.

- [19] L. Song and P. Mittal, “Systematic evaluation of privacy risks of machine learning models,” in USENIX Security, 2021.

- [20] G. Liu, J. Zhao, R. Zhang, C. Wang, and L. Liu, “Gradient-leaks: Enabling black-box membership inference attacks against machine leaning models,” TIFS, 2021.

- [21] B. Hitaj, G. Ateniese, and F. Perez-Cruz, “Deep models under the gan: information leakage from collaborative deep learning,” in CCS. ACM, 2017, pp. 603–618.

- [22] Z. Wang, M. Song, Z. Zhang, Y. Song, Q. Wang, and H. Qi, “Beyond inferring class representatives: User-level privacy leakage from federated learning,” in INFOCOM. IEEE, 2019, pp. 2512–2520.

- [23] C. Dwork and A. Roth, “The algorithmic foundations of differential privacy,” Foundations and Trends® in Theoretical Computer Science, vol. 9, no. 3–4, pp. 211–407, 2014.

- [24] M. Abadi, A. Chu, I. Goodfellow, H. B. McMahan, I. Mironov, K. Talwar, and L. Zhang, “Deep learning with differential privacy,” in CCS. ACM, 2016, pp. 308–318.

- [25] H. B. McMahan, D. Ramage, K. Talwar, and L. Zhang, “Learning differentially private recurrent language models,” in ICLR, 2018.

- [26] N. Papernot, S. Song, I. Mironov, A. Raghunathan, K. Talwar, and Ú. Erlingsson, “Scalable private learning with pate,” in ICLR, 2018.

- [27] L. Yu, L. Liu, C. Pu, M. E. Gursoy, and S. Truex, “Differentially private model publishing for deep learning,” in S&P. IEEE, 2019, pp. 332–349.

- [28] K. Chaudhuri, C. Monteleoni, and A. D. Sarwate, “Differentially private empirical risk minimization.” JMLR, vol. 12, no. 3, 2011.