Gradient-Free Nash Equilibrium Seeking in N-Cluster Games with Uncoordinated Constant Step-Sizes

Abstract

This work investigates a problem of simultaneous global cost minimization and Nash equilibrium seeking, which commonly exists in -cluster non-cooperative games. Specifically, the agents in the same cluster collaborate to minimize a global cost function, being a summation of their individual cost functions, and jointly play a non-cooperative game with other clusters as players. For the problem settings, we suppose that the explicit analytical expressions of the agents’ local cost functions are unknown, but the function values can be measured. We propose a gradient-free Nash equilibrium seeking algorithm by a synthesis of Gaussian smoothing techniques and gradient tracking. Furthermore, instead of using the uniform coordinated step-size, we allow the agents across different clusters to choose different constant step-sizes. When the largest step-size is sufficiently small, we prove a linear convergence of the agents’ actions to a neighborhood of the unique Nash equilibrium under a strongly monotone game mapping condition, with the error gap being propotional to the largest step-size and the smoothing parameter. The performance of the proposed algorithm is validated by numerical simulations.

Index Terms:

Nash equilibrium (NE) seeking, gradient-free methods, non-cooperative games.I Introduction

The research on cooperation and competition across multiple interacting agents has been extensively studied in recent years, especially on distributed optimization and Nash equilibrium (NE) seeking in non-cooperative games. Specifically, distributed optimization deals with a cooperative minimization problem among a network of agents. On the other hand, NE seeking in non-cooperative games is concerned with a number of agents (also known as players), who are self-interested to minimize their individual cost given the other agents’ decisions.

To simultaneously model the cooperative and competitive behaviors in networked systems, an -cluster game is formulated. This game is essentially a non-cooperative game played among interacting clusters with each cluster being a virtual player. In each cluster, there are a group of agents who collaboratively minimize a cluster-level cost function given by a summation of their individual local cost functions. With these features, the -cluster game naturally accommodates both collaboration and competition in a unified framework, which motivates us to study and propose solutions to find its NE. In this paper, we consider such an -cluster non-cooperative game. Moreover, we further suppose that the explicit analytical expressions of the agents’ local cost functions are unknown, but the function values can be measured.

A substantial works on NE seeking algorithms for non-cooperative games have been reported in the recent literature, including [1, 2, 3, 4, 5], to list a few. The focus of the aforementioned works is mainly on the competitive nature in the non-cooperative games. Different from that, the works in [6, 7] considered two sub-networks zero-sum games, where each subnetwork owns an opposing cost function to be cooperatively minimized by the agents in the corresponding subnetwork. Then, an extension of such problems to subnetworks was firstly formulated in [8], which is known as an -cluster (or coalition) game. Then, this problem has received a high research interest recently, which includes [9, 10, 11, 12, 13, 14, 15, 16]. Most of the above works focus on continuous-time based methods, such as gradient play [9, 10, 11, 12], subgradient dynamics [13], projected primal-dual dynamics [14], and extremum-seeking techniques [15]. Our previous work in [16] introduced a discrete-time NE seeking strategy based on a synthesis of gradient-free and gradient-tracking techniques. This paper revisits the -cluster game, and aims to extend the results to uncoordinated step-sizes across different clusters.

Contributions: As compared to the aforementioned relevant works, the contributions of this work can be summarized as follows. 1) In contrast to the problem setups in [9, 10, 11, 12, 13, 14], we limit the agents on the access to the cost functions: no explicit analytical expressions but only the values of the local cost functions can be utilized in the update laws. In this case, no gradient information can be directly utilized in the design of the algorithm. Hence, gradient-free techniques are adopted in this work. 2) As compared to our prior work [16], we extend the gradient-tracking method to allow uncoordinated constant step-sizes across different clusters, which further reduces the coordination among players from different clusters. 3) The technical challenges of the convergence analysis brought by gradient tracking methods in games, and uncoordinated step-sizes are addressed in this work. For the convergence results: we obtain a linear convergence to a neighborhood of the unique NE with the error being proportional to the largest step-size and a smoothing parameter under appropriate settings.

Notations: We use () for an -dimensional vector with all elements being 1 (0), and for an identity matrix. For a vector , we use to denote a diagonal matrix formed by the elements of . For any two vectors , their inner product is denoted by . The transpose of is denoted by . Moreover, we use for its standard Euclidean norm, i.e., . For vector , we use to denote its -th entry. The transpose and spectral norm of a matrix are denoted by and , respectively. We use to represent the spectral radius of a square matrix . The expectation operator is denoted by .

II Problem Statement

II-A Problem Formulation

An -cluster game is defined by , where each cluster, indexed by , consists of a group of agents, denoted by . Denote . These agents aim to minimize a cluster-level cost function , defined as

where is a local cost function of agent in cluster , is a collection of all agents’ actions in cluster with being the action of agent in cluster , and denotes a collection of all agents’ actions except cluster . Denote .

Definition 1

(NE of -Cluster Games). A vector is said to be an NE of the -cluster non-cooperative game , if and only if

Within each cluster , there is an underlying directed communication network, denoted by with an adjacency matrix . In particular, if agent can directly pass information to agent , and otherwise. We suppose . Regarding the communication network, the following standard assumption is supposed.

Assumption 1

For , the digraph is strongly connected. The adjacency matrix is doubly-stochastic.

Noting that [17, Lemma 1], we define and .

Moreover, we consider the scenario where the explicit analytical expressions of the agents’ local cost functions are unknown, but the function values can be measured, similar to the settings in [15, 16, 18, 19]. Regarding the cost function, the following standard assumption is supposed.

Assumption 2

For each , the local cost function is convex in , and continuously differentiable in . The total gradient is -Lipschitz continuous in , i.e., for any , .

The game mapping of is defined as

The following standard assumption on the game mapping is supposed.

Assumption 3

The game mapping of game is strongly monotone with a constant , i.e., for any , we have .

II-B Preliminaries

This part presents some preliminary results on gradient-free techniques based on Gaussian smoothing [20].

For , , a Gaussian-smoothed function of the local cost function can be defined as

| (1) |

where is generated from a Gaussian distribution , and is a smoothing parameter.

For each cluster , the randomized gradient-free oracle of for player with respect to agent , , at time step is developed as

| (2) |

where denotes the -th element of with , and being player ’s own version of at time step , and . The oracle (2) is useful as it can correctly estimate the partial gradient of the Gaussian-smoothed cost function . The following results for and can be readily obtained according to [20].

Lemma 1

We define a Gaussian-smoothed game associated with the -cluster game , denoted by , having the same set of clusters and action sets as game , but the cost function is given by

where is a Gaussian-smoothed function of defined in (1). Similar to the game mapping of , we define the game mapping of by . The following lemma shows the strong monotonicity condition of , and quantifies the distance between the NE of the smoothed game and the NE of the original game in terms of the smoothing parameter .

Lemma 2

It follows from Lemma 2 that is the unique NE of the smoothed game , and hence holds that . We define .

III NE Seeking Algorithm for N-Cluster Games

III-A Algorithm

In this part, we present an NE seeking strategy for the -Cluster Game. Specifically, each agent , needs to maintain its own action variable , and gradient tracker variables for . Let denote the values of these variables at time-step . The update laws for each agent , are designed as

| (3a) | ||||

| (3b) | ||||

| (3c) | ||||

with arbitrary and , where is the gradient estimator given by (2). and is a constant step-size sequence adopted by all agents in cluster . Denote the largest step-size by and the average of all step-sizes by . Define the heterogeneity of the step-size as the following ratio, , where and .

III-B Main Results

This part presents the main results of the proposed algorithm, as stated in the following theorem. Detailed proof is given in Sec. IV-B.

Theorem 1

Suppose Assumptions 1, 2 and 3 hold. Generate the auxiliary variables , the agent’s action and gradient tracker by (3) with the uncoordinated constant step-size satisfying and

where , and are defined in Sec. IV-B. Then, all players’ decisions linearly converges to a neighborhood of the unique NE , and

Remark 2

Theorem 1 characterizes the convergence performance of the proposed algorithm. It shows that the agents’ actions converge to a neighborhood of the NE linearly with the error bounded by two terms: one is proportional to the largest step-size, and the other is proportional to the smoothing parameter due to the gradient estimation.

IV Convergence Analysis

Let denote the -field generated by the entire history of the random variables from time-step 0 to . We introduce the following notations. Denote that and . For , . Then, the update laws (3a) and (3c) read:

| (4a) | ||||

| (4b) | ||||

Pre-multiplying both sides of (4a) by and augmenting the relation for , we have

| (5) |

The convergence analysis of the proposed algorithm will be conducted by: 1) constructing a linear system of three terms , and in terms of their past iterations and some constants, 2) analyzing the convergence of the established linear system.

IV-A Auxiliary Analysis

We first derive some results for the averaged gradient tracker .

Proof: For 1), multiplying from the left on both sides of (4b), and noting that is doubly stochastic, we have

Recursively expanding the above relation and noting that completes the proof.

For 2), following the result of part 1) and Lemma 1-3), we obtain

For 3), it follows that

On the other hand, it follows from Lemma 1-3) that

| (6) |

where and the last inequality follows from (3b) that

| (7) |

The proof is completed by combining the above relations. ∎

Then, we provide a bound on the stacked gradient tracker .

Proof: It is noted that

The proof is completed by taking the conditional expectation on on both sides and substituting Lemma 3-3). ∎

Now, we are ready to establish an inequality relation for the first term, in Lemma 5.

Lemma 5

Proof: It follows from (4a) that for

Taking the conditional expectation on and noting that , we obtain

Applying Lemma 4 and summing over to , to complete the proof. ∎

Then, we proceed to build the inequality relation for the second term, in Lemma 6.

Lemma 6

Proof: From (5), we know

| (8a) | ||||

| (8b) | ||||

| (8c) | ||||

| (8d) | ||||

For the second term in (8a), applying Lemma 3-(3)

| (9) |

For (8b),

| (10) |

For (8c),

| (11) |

where the last inequality is due to . For (8d),

| (12a) | |||

| (12b) | |||

For (12a),

| (13) |

For (12b),

| (14) |

Combining (13) and (14), we obtain for (8d) that

| (15) |

Finally, taking the conditional expectation for (8) on , and substituting (9), (10), (11) and (15) into it, we obtain the desired result. ∎

Finally, we derive an inequality relation for the third term, in Lemma 7.

IV-B Proof of Theorem 1

Now, we proceed to the proof of Theorem 1. Based on the results in Lemmas 5, 6 and 7, we can construct a linear system by taking the total expectation on the corresponding relations.

| (17) |

where

, , , , , , , , , , , , , and .

For the linear system (17), we aim to prove such that each component of can linearly converge to a neighborhood of 0 [21].

We adopt the following result to guarantee :

Lemma 8

(see [21, Cor. 8.1.29]) Let be a matrix with non-negative entries and be a vector with positive entries. If there exists a constant such that , then .

To apply Lemma 8, each element of should be non-negative. Hence, we may set and , i.e.,

Next, based on Lemma 8, it suffices to find a vector with such that , i.e.,

Without loss of generality, we may set . It remains to find and such that the following inequalities hold

| (18a) | |||

| (18b) | |||

| (18c) | |||

V Numerical Simulations

We illustrate the proposed NE seeking strategy on a connectivity control game [22], played among a number of sensor networks. Specifically, there are sensor networks, where each sensor network contains sensors. Let denote the position of sensor (referred to as an agent) from a sensor network (referred to as a cluster). Then, this sensor aims to seek a tradeoff between a local cost, (e.g., source seeking and positioning) and the global cost, (e.g., connectivity preservation with other sensor networks). Hence, the cost function to be minimized by this sensor is given by

where

and are constant matrices or vectors of appropriate dimensions, and stands for the set of neighbors of sensor network in a connected graph characterizing their position dependence. Specifically, if , then the corresponding term represents the intention of sensor from a sensor network to preserve the connectivity with the sensors from sensor network .

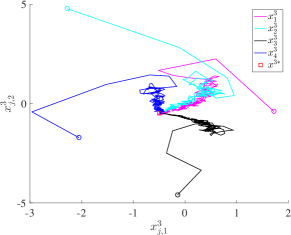

In this simulation, we consider and . The local and global costs are set as for , and , , for . Then, it is readily verified that Assumptions 2 and 3 hold. The directed communication graph for each sensor network is as shown in Fig. 1. For the algorithm parameters, we let the smoothing parameter be , and the constant step-sizes for sensors of network be , , , respectively. Thus, . We initialize the algorithm with arbitrary , and . The trajectories of the sensors’ positions for the three sensor networks are plotted in Fig. 2. It can be seen that the positions of all sensors can almost converge to the NE. Also, more ‘zigzags’ can be observed for the case of a larger step-size, since the update is more aggressive.

Next, we illustrate the convergence rate results. First, we set the constant step-sizes for sensors of network be , and , respectively, and let , and , respectively. Hence, we fix the heterogeneity of the step-size , and set the largest step-size to , and , respectively. The trajectories of the error gap with these settings are plotted in Fig. 3a. Then, we fix the largest step-size to and the averaged step-size , and set the heterogeneity of the step-size , , and , respectively. The trajectories of the error gap with these settings are plotted in Fig. 3b. As can be seen from both figures, the error gap descends linearly for all cases. Moreover, the convergence speed is faster with larger step-sizes and smaller heterogeneity, which verifies the derived results in Theorem 1.

VI Conclusions

This work has studied an -cluster non-cooperative game problem, where the agents’ cost functions are possibly non-smooth and the explicit expressions are unknown. By integrating the Gaussian smoothing techniques with the gradient tracking, a gradient-free NE seeking algorithm has been developed, in which the agents are allowed to select their own preferred constant step-sizes. We have shown that, when the largest step-size is sufficiently small, the agents’ actions approximately converge to the unique NE under a strongly monotone game mapping condition, and the error gap is proportional to the largest step-size and the smoothing parameter. Finally, the derived results have been verified by numerical simulations.

References

- [1] M. Ye and G. Hu, “Distributed nash equilibrium seeking in multiagent games under switching communication topologies,” IEEE Transactions on Cybernetics, vol. 48, no. 11, pp. 3208–3217, 2018.

- [2] K. Lu, G. Jing, and L. Wang, “Distributed Algorithms for Searching Generalized Nash Equilibrium of Noncooperative Games,” IEEE Transactions on Cybernetics, vol. 49, no. 6, pp. 2362–2371, 2019.

- [3] P. Yi and L. Pavel, “An operator splitting approach for distributed generalized Nash equilibria computation,” Automatica, vol. 102, pp. 111–121, 2019.

- [4] C. De Persis and S. Grammatico, “Continuous-Time Integral Dynamics for a Class of Aggregative Games With Coupling Constraints,” IEEE Transactions on Automatic Control, vol. 65, no. 5, pp. 2171–2176, 2020.

- [5] Y. Zhang, S. Liang, X. Wang, and H. Ji, “Distributed Nash Equilibrium Seeking for Aggregative Games With Nonlinear Dynamics Under External Disturbances,” IEEE Transactions on Cybernetics, pp. 1–10, 2019.

- [6] B. Gharesifard and J. Cortes, “Distributed convergence to Nash equilibria in two-network zero-sum games,” Automatica, vol. 49, no. 6, pp. 1683–1692, 2013.

- [7] Y. Lou, Y. Hong, L. Xie, G. Shi, and K. H. Johansson, “Nash Equilibrium Computation in Subnetwork Zero-Sum Games With Switching Communications,” IEEE Transactions on Automatic Control, vol. 61, no. 10, pp. 2920–2935, 2016.

- [8] M. Ye, G. Hu, and F. Lewis, “Nash equilibrium seeking for N-coalition noncooperative games,” Automatica, vol. 95, pp. 266–272, 2018.

- [9] M. Ye and G. Hu, “Simultaneous social cost minimization and nash equilibrium seeking in non-cooperative games,” in Chinese Control Conference, CCC. IEEE Computer Society, 2017, pp. 3052–3059.

- [10] M. Ye and G. Hu, “A distributed method for simultaneous social cost minimization and nash equilibrium seeking in multi-agent games,” in IEEE International Conference on Control and Automation, ICCA, 2017, pp. 799–804.

- [11] M. Ye, G. Hu, F. L. Lewis, and L. Xie, “A Unified Strategy for Solution Seeking in Graphical N-Coalition Noncooperative Games,” IEEE Transactions on Automatic Control, vol. 64, no. 11, pp. 4645–4652, 2019.

- [12] X. Nian, F. Niu, and Z. Yang, “Distributed Nash Equilibrium Seeking for Multicluster Game Under Switching Communication Topologies,” IEEE Transactions on Systems, Man, and Cybernetics: Systems, 2021.

- [13] X. Zeng, J. Chen, S. Liang, and Y. Hong, “Generalized Nash equilibrium seeking strategy for distributed nonsmooth multi-cluster game,” Automatica, vol. 103, pp. 20–26, 2019.

- [14] C. Sun and G. Hu, “Distributed Generalized Nash Equilibrium Seeking of N-Coalition Games with Full and Distributive Constraints,” arXiv preprint arXiv:2109.12515, sep 2021.

- [15] M. Ye, G. Hu, and S. Xu, “An extremum seeking-based approach for Nash equilibrium seeking in N-cluster noncooperative games,” Automatica, vol. 114, p. 108815, 2020.

- [16] Y. Pang and G. Hu, “Nash Equilibrium Seeking in N-Coalition Games via a Gradient-Free Method,” Automatica, vol. 136, p. 110013, 2022.

- [17] S. Pu and A. Nedić, “Distributed stochastic gradient tracking methods,” Mathematical Programming, pp. 1–49, 2020.

- [18] Y. Pang and G. Hu, “Distributed Nash Equilibrium Seeking with Limited Cost Function Knowledge via A Consensus-Based Gradient-Free Method,” IEEE Transactions on Automatic Control, vol. 66, no. 4, pp. 1832–1839, 2021.

- [19] Y. Pang and G. Hu, “A Gradient-Free Distributed Nash Equilibrium Seeking Method with Uncoordinated Step-Sizes,” in 2020 IEEE 59th Conference on Decision and Control(CDC), 2020, pp. 2291–2296.

- [20] Y. Nesterov and V. Spokoiny, “Random Gradient-Free Minimization of Convex Functions,” Foundations of Computational Mathematics, vol. 17, no. 2, pp. 527–566, 2017.

- [21] R. A. Horn and C. R. Johnson, Matrix Analysis. Cambridge university press, 1990.

- [22] M. S. Stankovic, K. H. Johansson, and D. M. Stipanovic, “Distributed Seeking of Nash Equilibria With Applications to Mobile Sensor Networks,” IEEE Transactions on Automatic Control, vol. 57, no. 4, pp. 904–919, 2012.