Gradient Boosted Normalizing Flows

Abstract

By chaining a sequence of differentiable invertible transformations, normalizing flows (NF) provide an expressive method of posterior approximation, exact density evaluation, and sampling. The trend in normalizing flow literature has been to devise deeper, more complex transformations to achieve greater flexibility. We propose an alternative: Gradient Boosted Normalizing Flows (GBNF) model a density by successively adding new NF components with gradient boosting. Under the boosting framework, each new NF component optimizes a sample weighted likelihood objective, resulting in new components that are fit to the residuals of the previously trained components. The GBNF formulation results in a mixture model structure, whose flexibility increases as more components are added. Moreover, GBNFs offer a wider, as opposed to strictly deeper, approach that improves existing NFs at the cost of additional training—not more complex transformations. We demonstrate the effectiveness of this technique for density estimation and, by coupling GBNF with a variational autoencoder, generative modeling of images. Our results show that GBNFs outperform their non-boosted analog, and, in some cases, produce better results with smaller, simpler flows.††Appearing in the 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Vancouver, Canada.

1 Introduction

Deep generative models seek rich latent representations of data, and provide a mechanism for sampling new data. Beyond their wide-ranging applications, generative models are an attractive class of models that place strong assumptions on the data and hence exhibit higher asymptotic bias when the model is incorrect [2]. A popular approach to generative modeling is with variational autoencoders (VAEs) [49]. A major challenge in VAEs, however, is that they assume a factorial posterior, which is widely known to limit their flexibility [66, 48, 58, 15, 42, 77, 10, 79]. Further, VAEs do not offer exact density estimation, which is a requirement in many settings.

Normalizing flows (NF) are an important recent development and can be used in both density estimation [74, 68, 20] and variational inference [66]. Normalizing flows are smooth, invertible transformations with tractable Jacobians, which can map a complex data distribution to simple distribution, such as a standard normal [61]. In the context of variational inference, a normalizing flow transforms a simple, known base distribution into a more faithful representation of the true posterior. As such, NFs complement VAEs, providing a method to overcome the limitations of a factorial posterior. Flow-based models are also an attractive approach for density estimation [74, 20, 21, 62, 19, 34, 70, 39, 41, 47, 61] because they provide exact density computation and sampling with only a single neural network pass (in some instances) [25].

Recent developments in NFs have focused of creating deeper, more complex transformations in order to increase the flexibility of the learned distribution [47, 54, 39, 12, 41, 14, 4]. With greater model complexity comes a greater risk of overfitting while slowing down training, prediction, and sampling. Boosting [28, 57, 29, 30, 31] is flexible, robust to overfitting, and generally one the most effective learning algorithms in machine learning [37]. While boosting is typically associated with regression and classification, it is also applicable in the unsupervised setting [69, 9, 58, 36, 35, 9, 53].

Our contributions.

In this work we propose a wider, as opposed to strictly deeper, approach for increasing the expressiveness of density estimators and posterior approximations. Our approach, gradient boosted normalizing flows (GBNF), iteratively adds new NF components to a model based on gradient boosting, where each new NF component is fit to the residuals of the previously trained components. A weight is learned for each component of the GBNF model, resulting in a mixture structure. However, unlike a mixture model, GBNF offers the optimality advantages associated with boosting [3], and a simplified training objective that focuses solely on optimizing a single new component at each step. GBNF compliments existing flow-based models, improving performance at the cost of additional training cycles—not more complex transformations. Prediction and sampling are not slowed with GBNF, as each component is independent and operates in parallel.

While gradient boosting is straight-forward to apply in the density estimation setting, our analysis highlights the need for analytically invertible flows in order to efficiently boost flow-based models for variational inference. Further, we address the “decoder shock” phenomenon—a challenge unique to VAEs with GBNF approximate posteriors, where the loss increases suddenly coinciding with the introduction of a new component. Our results show that GBNF improves performance on density estimation tasks, capable of modeling multi-modal data. Lastly, we augment the VAE with a GBNF variational posterior, and present image modeling results on par with state-of-the-art NFs.

The remainder of the paper is organized as follows. In Section 2 we briefly review normalizing flows. In Section 3 we introduce GBNF for density estimation, and Section 4 we extend our idea for the approximate inference setting. In Section 5 we discuss normalizing flows that are compatible with GBNF, and the “decoder shock” phenomenon. In Section 6 we present results. Finally, we conclude the paper in Section 7.

2 Background

Normalizing flows are applicable to both approximate density estimation techniques, like variational inference, as well as tractable density estimation. As such, we review variational inference techniques using modern deep neural networks to amortize the cost of learning parameters. We then share how augmenting deep generative models with normalizing flows improves variational inference. Finally, we highlight recent work using normalizing flows as density estimators directly on the data.

2.1 Variational Inference

Approximate inference plays an important role in fitting complex probabilistic models. Variational Inference (VI), in particular, transforms inference into an optimization problem with the goal of finding a variational distribution that closely approximates the true posterior , where are the observed data, the latent variables, and are learned parameters [45, 80, 6]. Writing the log-likelihood of the data in terms of the approximate posterior reveals:

| (1) |

Since the second term in (1) is the Kullback-Leibler (KL) divergence, which is non-negative, then the first term forms a lower bound on the log-likelihood of the data, and hence referred to as the evidence lower bound (ELBO).

2.2 Variational Autoencoder

Kingma and Welling, [49], Rezende et al., [67] show that a re-parameterization of the ELBO can result in a differentiable bound that is amenable to optimization via stochastic gradients and back-propagation. Further, Kingma and Welling, [49] structure the inference problem as an autoencoder, introducing the variational autoencoder (VAE) and minimizing the negative-ELBO . Re-writing the VI objective as:

| (2) |

shows the probabilistic decoder , and highlights how the VAE encodes the latent variables with the variational posterior , but is regularized with the prior .

2.3 Normalizing Flows

Tabak and Vanden-Eijnden, [75], Tabak and Turner, [74] introduce normalizing flows (NF) as a composition of simple maps. Parameterizing flows with deep neural networks [21, 20, 68] has popularized the technique for density estimation and variational inference [61].

Variational Inference

Rezende and Mohamed, [66] use NFs to modify the VAE’s [49] posterior approximation by applying a chain of transformations to the inference network output . By defining as an invertible, smooth mapping, by the chain rule and inverse function theorem has a computable density [75, 74]:

| (3) |

Thus, a VAE with a -step flow-based posterior minimizes the negative-ELBO:

| (4) |

where is a known base distribution (e.g. standard normal) with parameters .

Density Estimation

Given a set of samples from a target distribution , our goal is to learn a flow-based model , which corresponds to minimizing the forward KL-divergence: [61]. A NF formulates as a transformation of a base density with as a -step flow [20, 21, 62]. Thus, to estimate the expectation over we take a Monte Carlo approximation of the forward KL, yielding:

| (5) |

which is equivalent to fitting the model to samples by maximum likelihood estimation [61].

2.4 Gradient Boosting

Gradient boosting [57, 29, 30, 31] considers the minimization of a loss , where is a function representing the current model. Consider an additive perturbation around to , where is a function representing a new component. A Taylor expansion as :

| (6) |

reveals the functional gradient , which is the direction that reduces the loss at the current solution.

Thus, to minimize loss at the current model, choose the best function in a class of functions (e.g. regression trees), which corresponds to solving a linear program where defines the weights for every function in . Underlying Gradient boosting is a connection to conditional gradient descent and the Frank-Wolfe algorithm [26]: we first solve a constrained convex minimization problem to choose , then solve a line-search problem to appropriately weight relative to the previous components [9, 36].

3 Density Estimation with GBNF

Gradient boosted normalizing flows (GBNF) build on recent ideas in boosting for variational inference [36, 58] and generative models [35] in order to increase the flexibility of density estimators and posteriors approximated with NFs. A GBNF is constructed by successively adding new components based on forward-stagewise gradient boosting, where each new component is a -step normalizing flow that is fit to the functional gradient of the loss from the previously trained components .

Gradient boosting assigns a weight to the new component and we restrict to ensure the model stays a valid probability distribution. The resulting density can be written as a mixture model:

| (7) |

where the full model is a monotonic function of a convex combination of fixed components and new component , and is the partition function. Two special cases are of interest: first, when , which corresponds to a standard additive mixture model with :

Second, when with , which corresponds to a multiplicative mixture model [35, 17]:

The advantage of GBNF over a standard mixture model with a pre-determined fixed number of components is that additional components can always be added to the model, and the weights for non-informative components will degrade to zero. Since each GBNF component is a NF, we evaluate (7) recursively, and at each stage the last component is computed by the change of variables formula:

| (8) |

where is the -step flow transformation for component , and the base density is shared by all components. In our formulation we consider components, where is fixed and finite.

Density Estimation

With GBNF density estimation is similar to (5): we seek flow parameters that minimize , which for finite number of samples drawn from corresponds to minimizing:

| (9) |

Directly optimizing (9) for mixture model is non-trivial. Gradient boosting, however, provides an elegant solution that greatly simplifies the problem. During training, the first component is fit using a traditional objective function—no boosting is applied222No boosting during the first stage is equivalent to setting to uniform on the domain of .. At stages , we already have , consisting of a convex combination of the -step flow models from the previous stages, and we train a new component by taking a Frank-Wolfe [26, 5, 9, 43, 53, 36] linear approximation of the loss (9). Since jointly optimizing w.r.t. both and is a challenging non-convex problem [36], we train until convergence, and then use (9) as the objective to optimize w.r.t the corresponding weight .

3.1 Updates to New Boosting Components

Additive Boosting

We first consider the special case and , corresponding to the additive mixture model. Our goal is to derive an update to the new component using functional gradient descent. Thus, we take the gradient of (9) w.r.t. fixed parameters of at , giving:

| (10) |

Since is fixed, then maximizing is achieved by choosing a new component and weighing by the negative of the gradient from (10) over the samples:

| (11) |

where is the family of -step flows. Note that (11) is a linear program in which is a constant, and will hence yield a degenerate point probability distribution where the entire probability mass is placed at the minimum point of . To avoided the degenerate solution, a standard approach adds an entropy regularization term which is controlled by the hyperparameter [36, 9]:

| (12) |

Multiplicative Boosting

In this paper, we instead use with , which corresponds to the multiplicative mixture model, and, from the boosting perspective, a multiplicative boosting model [35, 17]. However, in contrast to the existing literature on multiplicative boosting for probabilistic models, we consider boosting with normalizing flow components. In the multiplicative setting, explicitly maintaining the convex combination between and is unnecessary: the partition function ensures the validity of the probabilistic model. Thus, the multiplicative GBNF seeks a new component that minimizes:

| (13) |

The partition function is defined as and computing for GBNF is straightforward since normalizing flows learn self-normalized distributions—and hence can be computed without resorting to simulated annealing or Markov chains [35]. Moreover, following standard properties [35, 17], we also inherit a recursive property of the partition function. To see the recursive property, denote the un-normalized GBNF density as , where but does not integrate to . Then, by definition:

then, integrating both sides and using gives

and therefore , as desired.

The objective in (13) represents the loss under the model which followed from minimizing the forward KL-divergence , where is the normalized approximate distribution and the target distribution. To improve (13) with gradient boosting, consider the difference in losses after introducing a new component to the model:

| (14) |

where (a) follows by Jensen’s inequality since . Note that we want to choose the new component so that is minimized, or equivalently, for a fixed , the difference is maximized. Since , it suffices to focus on the following maximization problem:

| (15) |

If we choose a new component according to:

then, with this choice of , we see that (15) reduces to:

Our choice of is, therefore, optimal and gives a lower bound to (3.1) with . The solution to (15) can also be understood in terms of the change-of-measure inequality [1, 23], which also forms the basis of the PAC-Bayes bound and a certain regret bounds [1].

Similar to the additive case, the new component chosen in (15) shows that maximizes the likelihood of the samples while discounting those that are already explained by . Unlike the additive GBNF update in (12), however, the multiplicative GBNF update is a numerically stable and does not require an entropy regularization term.

Further, our analysis reveals the source of a surrogate loss function [59, 3, 60] which optimizes the global objective—namely, when written as as a minimization (15) is the weighted negative log-likelihood of the samples. Surrogate loss functions are common in the boosting framework [28, 71, 3, 29, 27, 8, 76, 69]. Adaboost [27, 28], in particular, solves a re-weighted classification problem where weak learners, in the form of decision trees, optimize surrogate losses like information gain or Gini index. The negative log-likelihood is specifically chosen as a surrogate loss function in other boosted probabilistic and density estimation models which also have -divergence based global objectives [35, 69], however here we clarify that the surrogate loss follows from (3.1).

In practice, instead of weighing the loss by to the reciprocal of the fixed components, we follow Grover and Ermon, [35] and train the new component to perform maximum likelihood estimation over a re-weighted data distribution:

| (16) |

where denotes a re-weighted data distribution whose samples are drawn with replacement using sample weights inversely-proportional to . Since (16) is the same objective as the generative multiplicative boosting model in Grover and Ermon, [35], where we have set their parameter from Proposition 1 to one, then (16) provides a non-increasing update to the multiplicative objective function (13).

Lastly, we note that the analysis of Cranko and Nock, [17] highlights important properties of the broader class of boosted density estimation models that optimize (9), of which both the additive and multiplicative forms of GBNF are members. Specifically, Remark 3 in Cranko and Nock, [17] shows a sharper decrease in the loss—that is, for any step size the loss has geometric convergence:

| (17) |

where denotes the explicit dependence of on . Thus GBNF provides a strong convergence guarantee on the global objective.

3.2 Update to Component Weights

Component weights are updated to satisfy using line-search. Alternatively, taking the gradient of the loss with respect to gives a stochastic gradient descent (SGD) algorithm (see Section 4.2, for example).

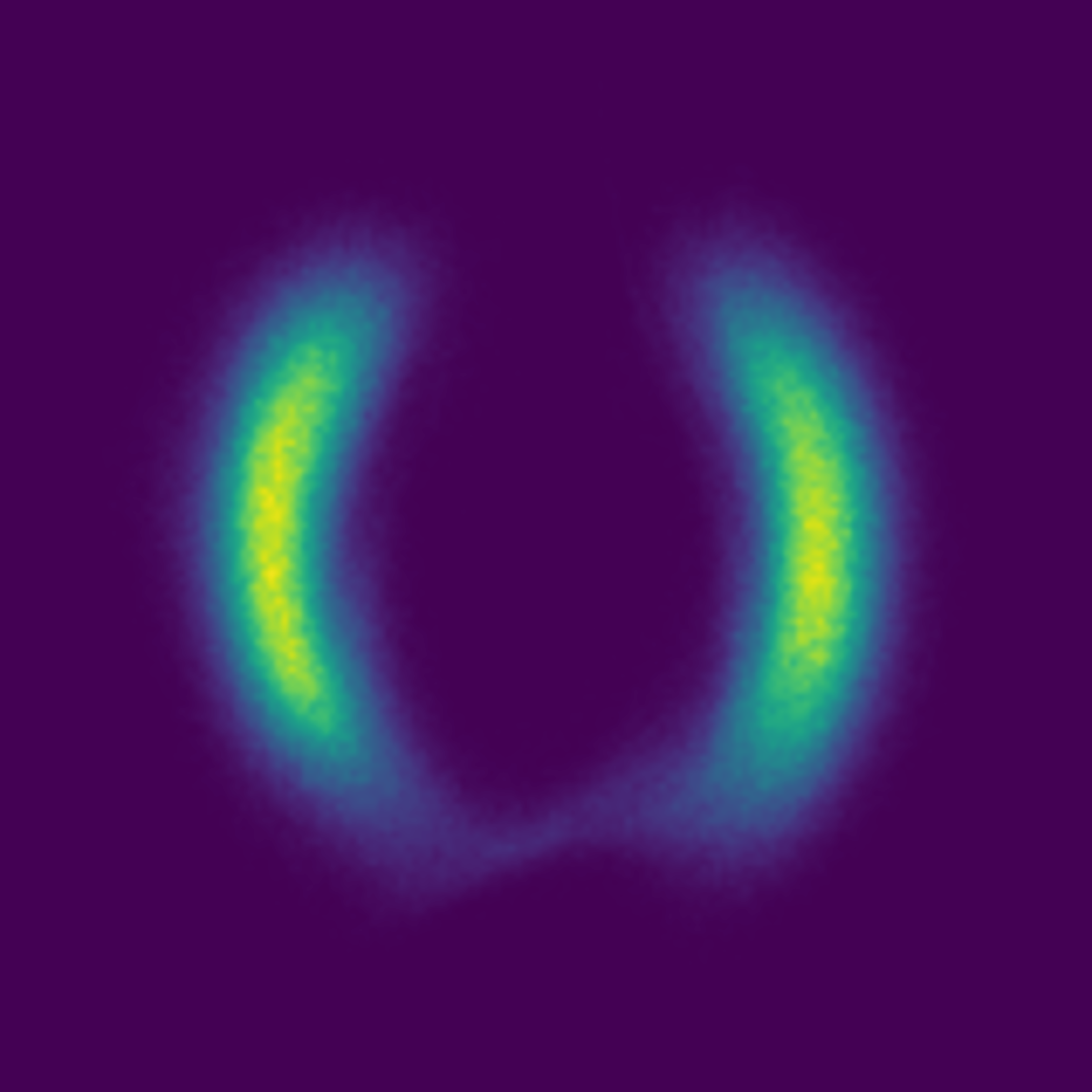

Updating a component’s weight is only needed once after each component converges. We find, however, that results improve by “fine-tuning” each component and their weights with additional training after the initial training pass. During the fine-tuning stage, we sequentially retrain each component for , during which we treat as fixed where represents the mixture of all other components: . Figure 1 demonstrates this phenomenon: when a single flow is not flexible enough to model the target, mode-covering behavior arises. Introducing the second component trained with the boosting objective improves results, and consequently the second component’s weight is increased. Fine-tuning the first component leads to a better solution and assigns equal weight to the two components.

4 Variational Inference with GBNF

Gradient boosting is also applicable to posterior approximation with flow-based models. For variational inference we map a simple base distribution to a complex posterior. Unlike (4), however, we consider a VAE whose approximate posterior is a GBNF with components and of the form:

| (18) |

We seek a variational posterior that closely matches the true posterior , which corresponds to the reverse KL-divergence . Minimizing KL is equivalent to minimizing the negative-ELBO up to a constant. Thus, we seek to minimize the variational bound:

| (19) |

4.1 Updates to New Boosting Components

Given the bound (19), we then derive updates for new components. Similar to Section 3.1, consider the functional gradient w.r.t. at :

| (20) |

We minimize by choosing a new component that has the minimum inner product with the gradient from (20).

However, to avoid degenerating to a point mass at the functional gradient’s minimum, we add an entropy regularization term333In our experiments that augment the VAE with a GBF-based posterior, we find good results setting the regularization . In the density estimation experiments, better results are often achieved with near 0.8. controlled by , thus:

| (21) |

Despite the differences in derivation, optimization of GBNF has a similar structure to other flow-based VAEs. Specifically, with the addition of the entropy regularization, rearranging (21) shows:

| (22) |

If is constant, then we recover the VAE objective exactly. By learning a GBNF approximate posterior the reconstruction error – is down-weighted for samples that are easily explained by the fixed components. For updates to the component weights see Appendix 4.2.

Lastly, we note that during a forward pass the model encodes data to produce . To sample from the posterior , however, we transform according to , where randomly chooses a component—similar to sampling from a mixture model. Thus, during training we compute a fast stochastic approximation of the likelihood . Likewise, prediction and sampling are as fast as the non-boosted setting, and easily parallelizable across components.

4.2 Updating Component Weights

After has been estimated, the mixture model still needs to estimate . Similar to the density estimation setting, the weights on each component can be updated by taking the gradient of the loss with respect to . Recall that can be written as the convex combination:

Then, with , the objective function can be written as a function of :

| (23) |

The above expression can be used in a black-box line search method or, as we have done, in a stochastic gradient descent algorithm 1. Toward that end, taking gradient of (4.2) w.r.t. yields the component weight updates:

| (24) |

where we’ve defined:

To ensure a stable convergence we follow Guo et al., [36] and implement an SGD algorithm with a decaying learning rate.

4.3 Decoder Shock: Abrupt Changes to the VAE Approximate Posterior

Sharing the decoder between all GBNF components presents a unique challenge in training a VAE with a GBNF approximate posterior. During training the decoder acclimates to samples from a particular component (e.g. ). However, when a new stage begins the decoder begins receiving samples from a new component . At this point the loss jumps (see Figure 2), a phenomenon we refer to as “decoder shock”. Reasons for “decoder shock” are as follows.

The introduction of causes a sudden shift in the distribution of samples passed to the decoder, causing a sharp increase in reconstruction errors. Further, we anneal the KL [7, 73, 38] in (22) cyclically [32], with restarts corresponding to the introduction of new boosting components. By reducing the weight of the KL term in (22) during the initial epochs the model is free to discover useful representations of the data before being penalized for complexity. Without KL-annealing, models may choose the “low hanging fruit” of ignoring and relying purely on a powerful decoder [7, 73, 15, 65, 18, 38]. Thus, when the annealing schedule restarts, is unrestricted and the validation’s KL term temporarily increases.

A spike in loss between boosting stages is unique to GBNF. Unlike other boosted models, with GBNF there is a module (the decoder) that depends on the boosted components—this does not exist when boosting decision trees for regression or classification (for example). To overcome the “decoder shock” problem, propose a simple solution that deviates from a traditional boosting approach. Instead of only drawing samples from during training, we periodically sample from the fixed components, helping the decoder remember past components and adjust to changes in the full approximate posterior . We emphasize that despite drawing samples from , the parameters for remain fixed—samples from are purely for the decoder’s benefit. Additionally, Figure 2 highlights how fine-tuning (blue line) consolidates information from all components and improves results at very little computational cost.

5 Related Work

Below we highlight connections between GBNF and related work, along with unique aspects of GBNF. First, we discuss the catalog of normalizing flows that are compatible with gradient boosting. We then compare GBNF to other boosted generative models and flows with mixture formulations.

Flows Compatible with Gradient Boosting

While all normalizing flows can be boosted for density estimation, boosting for variational inference is only practical with analytically invertible flows (see Figure 3). The focus of GBNF for variational inference is on training the new component , but in order to draw samples we sample from the base distribution and transform according to:

However, updating for variational inference requires computing the likelihood . Following Figure 3, to compute we seek the point within the base distribution such that:

where is sampled from and randomly chooses one of the fixed components. Then, under the change of variables formula, we approximate by:

While planar and radial [66], Sylvester [79], and neural autoregressive flows [41, 19] are provably invertible, we cannot compute the inverse. Inverse and masked autoregressive flows [48, 62] are invertible, but times slower to invert where is the dimensionality of .

Analytically invertible flows include those based on coupling layers, such as NICE [20], RealNVP [21], and Glow—which replaced RealNVP’s permutation operation with a convolution [47]. Neural spline flows increase the flexibility of both coupling and autoregressive transforms using monotonic rational-quadratic splines [25], and non-linear squared flows [81] are highly multi-modal and can be inverted for boosting. Continuous-time flows [11, 13, 34, 70] use transformations described by ordinary differential equations, with FFJORD being “one-pass” invertible by solving an ODE.

Flows with Mixture Formulations

The main bottleneck in creating more expressive flows lies in the base distribution and the class of transformation function [61]. Autoregressive [41, 44, 19, 48, 62, 54], residual [4, 14, 79, 66], and coupling-layer flows [20, 21, 47, 39, 64] are the most common classes of finite transformations, however, discrete (RAD, [22]) and continuous (CIF, [16]) mixture formulations offer a promising new approach where the base distribution and transformation change according to the mixture component. GBNF also presents a mixture formulation, but trained in a different way, where only the updates to the newest component are needed during training and extending an existing model with additional components is trivial. Moreover, GBNF optimizes a different objective that fits new components to the residuals of previously trained components, which can refine the mode covering behavior of VAEs (see Hu et al., [40]) and maximum likelihood (similar to Dinh et al., [22]). The continuous mixture approach of CIF, however, cannot be used in the variational inference setting to augment the VAE’s approximate posterior [16].

Gradient Boosted Generative Models

By considering convex combinations of distributions and , boosting is applicable beyond the traditional supervised setting [9, 17, 35, 36, 52, 53, 69, 76]. In particular, boosting variational inference (BVI, [58, 36, 17]) improves a variational posterior, and boosted generative models (BGM, [35]) constructs a density estimator by iteratively combining sum-product networks. Unlike BVI and BGM our approach addresses the unique algorithmic challenges of boosting applied to flow-based models—such as the need for analytically invertible flows and the “decoder shock” phenomena when enhancing the VAE’s approximate posterior with GBNF.

6 Experiments

To evaluate GBNF, we highlight results on two toy problems, density estimation on real data, and boosted flows within a VAE for generative modeling of images. We boost coupling flows [21, 47] parameterized by feed-forward networks with TanH activations and a single hidden layer. While RealNVP [21], in particular, is less flexible and shown to be empirically inferior to planar flows in variational inference [66], coupling flows are attractive for boosting: sampling and inference require one forward pass, log-likelihoods are computed exactly, and they are trivially invertible. In the toy experiments flows are trained for 25k iterations using the Adam optimizer [46]. For all other experiments details on the datasets and hyperparameters can be found in Appendix A.

1

3

2

4

6.1 Toy Density Matching

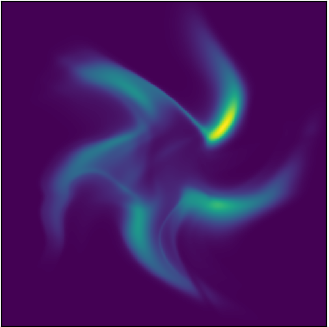

For density matching the model generates samples from a standard normal and transforms them into a complex distribution . The 2-dimensional unnormalized target’s analytical form is known and parameters are learned by minimizing .

Results

In Figure 4 we compare our results to a deep 16-step RealNVP flow on four energy functions. In each case GBNF provides an accurate density estimation with half as many parameters. When the component flows are flexible enough to model most or all of the target density, components can overlap. However, by training the component weights the model down-weights components that don’t provide additional information. On more challenging targets, such as 3 (top-right), GBNF fits one component to each of the top and bottom divergences within the energy function, and some component overlap occurring elsewhere.

6.2 Toy Density Estimation

1

2

4

8

We apply GBNF to the density estimation problems found in [47, 34, 19]. Here the model receives samples from an unknown 2-dimensional data distribution, and the goal is to learn a density estimator of the data. We consider GBNF with either or RealNVP components, each of which includes or coupling layers [21], respectively. Here RealNVP and GBNF use flows of equivalent depth, and we evaluate improvements resulting from GBNF’s additional boosted components.

Results

As shown in Figure 5, even when individual components are weak the composite model is expressive. For example, the 8-Gaussians figure shows that the first component (RealNVP column) fails to model all modes. With additional 1-step flows, GNBF achieves a multimodal density model. Both the 8-Gaussians and Spiral results show that adding boosted components can drastically improve density estimates without requiring more complex transformations. On the Checkerboard and Pinwheel, where RealNVP matches the data more closely, GBNF sharpens density estimates.

6.3 Density Estimation on Real Data

Following Grathwohl et al., [34] we report density estimation results on the POWER, GAS, HEPMASS, and MINIBOONE datasets from the UCI machine learning repository [24], as well as the BSDS300 dataset [56]. We compare boosted and non-boosted RealNVP [21] and Glow models [47]. Glow uses a learned base distribution, whereas our boosted implementation of Glow (and the RealNVPs) use fixed Gaussians. Results for non-boosted models are from [34].

Results

In Table 1 we find significant improvements by boosting Glow and the more simple RealNVP normalizing flows, even with only components. Our implementation of Glow was unable to match the results for BSDS300 from [34], and only achieves an average log-likelihood of 152.96 without boosting. After boosting Glow with components, however, the log-likelihood rises significantly to 154.68, which is comparable to the baseline.

| Model | POWER | GAS | HEPMASS | MINIBOONE | BSDS300 |

|---|---|---|---|---|---|

| =6;=2,049,280 | =8;=1,052,065 | =21;=525,123 | =43;=36,488 | =63;=1,300,000 | |

| RealNVP | |||||

| Boosted RealNVP | |||||

| Glow | |||||

| Boosted Glow |

6.4 Image Modeling with Variational Autoencoders

Following Rezende and Mohamed, [66], we employ NFs for improving the VAE’s approximate posterior [49]. We compare our model on the same image datasets as those used in van den Berg et al., [79]: Freyfaces, Caltech 101 Silhouettes [55], Omniglot [50], and statically binarized MNIST [51].

Results

In Table 2 we compare the performance of GBNF to other normalizing flow architectures. In all results RealNVP, which is more ideally suited for density estimation tasks, performs the worst of the flow models. Nonetheless, applying gradient boosting to RealNVP improves the results significantly. On Freyfaces, the smallest dataset consisting of just 1965 images, gradient boosted RealNVP gives the best performance—suggesting that GBNF may avoid overfitting. For the larger Omniglot dataset of hand-written characters, Sylvester flows are superior, however, gradient boosting improves the RealNVP baseline considerably and is comparable to Sylvester. GBNF improves on the baseline RealNVP, however both GBNF and IAF’s results are notably higher than the non-coupling flows on the Caltech 101 Silhouettes dataset. Lastly, on MNIST we find that boosting improves NLL on RealNVP, and is on par with Sylvester flows. All models have an approximately equal number of parameters, except the baseline VAE (fewer parameters) and Sylvester which has x the number of parameters (grid search for hyperparameters is chosen following [79]).

| Model | MNIST | Freyfaces | Omniglot | Caltech 101 | ||||

|---|---|---|---|---|---|---|---|---|

| -ELBO | NLL | -ELBO | NLL | -ELBO | NLL | -ELBO | NLL | |

| VAE | ||||||||

| Planar | 4.60 | 100.18 | 104.23 | |||||

| Radial | ||||||||

| Sylvester | 84.54 | 81.99 | 4.54 | 4.49 | 101.99 | 98.54 | 112.26 | 100.38 |

| IAF | 83.14 | |||||||

| RealNVP | ||||||||

| GBNF | 82.59 | 4.41 | 99.09 | 106.40 | ||||

7 Conclusion

In this work we introduce gradient boosted normalizing flows, a technique for increasing the flexibility of flow-based models through gradient boosting. GBNF, iteratively adds new NF components, where each new component is fit to the residuals of the previously trained components. We show that GBNF can improve results for existing normalizing flows on density estimation and variational inference tasks. In our experiments we demonstrated that GBNF improves over their baseline single component model, without increasing the depth of the model, and produces image modeling results on par with state-of-the-art flows. Further, we showed GBNF models used for density estimation create more flexible distributions at the cost of additional training and not more complex transformations.

In the future we wish to further investigate the “decoder shock” phenomenon occurring when GBNF is paired with a VAE. Future work may benefit from exploring other strategies for alleviating “decoder shock”, such as multiple decoders or different annealing strategies. In our real data experiments in Section 6.4 we fixed the entropy regularization at 1.0, however adjusting the regularization on a per-component level may be worth pursuing. Additionally, in our image modeling experiments we used RealNVP as the base component. Future work may consider other flows for boosting, as well as heterogeneous combinations of flows as the different components.

8 Broader Impact

As a generative model, gradient boosted normalizing flows (GBNF) are suited for a variety of tasks, including the synthesis of new data-points. A primary motivation for choosing GBNF, in particular, is producing a flexible model that can synthesize new data-points quickly. GBNF’s individual components can be less complex and thus faster, yet as a whole the model is powerful. Since the components operate in parallel, prediction and sampling can be done quickly—a valuable characteristic for deployment on mobile devices. One limitation of GBNF is the requirement for additional computing resources to train the added components, which can be costly for deep flow-based models. As such, GBNF advantages research laboratories and businesses with access to scalable computing. Those with limited computing resources may find benchmarking or deploying GBNF too costly.

Acknowledgements

The research was supported by NSF grants OAC-1934634, IIS-1908104, IIS-1563950, IIS-1447566, IIS-1447574, IIS-1422557, CCF-1451986. We thank the University of Minnesota Supercomputing Institute (MSI) for technical support.

References

- Banerjee, [2006] Banerjee, A. (2006). On bayesian bounds. In Proceedings of the 23rd International Conference on Machine Learning, pages 81–88. ACM.

- Banerjee, [2007] Banerjee, A. (2007). An Analysis of Logistic Models: Exponential Family Connections and Online Performance. In Proceedings of the 2007 SIAM International Conference on Data Mining, Proceedings, pages 204–215. Society for Industrial and Applied Mathematics.

- Bartlett et al., [2006] Bartlett, P. L., Jordan, M. I., and McAuliffe, J. D. (2006). Convexity, Classification, and Risk Bounds. Journal of the American Statistical Association, 101(473):138–156.

- Behrmann et al., [2019] Behrmann, J., Grathwohl, W., Chen, R. T. Q., Duvenaud, D., and Jacobsen, J.-H. (2019). Invertible Residual Networks. In International Conference on Machine Learning, page 10.

- Beygelzimer et al., [2015] Beygelzimer, A., Hazan, E., Kale, S., and Luo, H. (2015). Online Gradient Boosting. In Advances in Neural Information Processing Systems, page 9.

- Blei et al., [2017] Blei, D. M., Kucukelbir, A., and McAuliffe, J. D. (2017). Variational Inference: A Review for Statisticians. Journal of the American Statistical Association, 112(518):859–877.

- Bowman et al., [2016] Bowman, S. R., Vilnis, L., Vinyals, O., Dai, A., Jozefowicz, R., and Bengio, S. (2016). Generating Sentences from a Continuous Space. In Proceedings of The 20th SIGNLL Conference on Computational Natural Language Learning, pages 10–21, Berlin, Germany. Association for Computational Linguistics.

- Bühlmann and Hothorn, [2007] Bühlmann, P. and Hothorn, T. (2007). Boosting Algorithms: Regularization, Prediction and Model Fitting. Statistical Science, 22(4):477–505.

- Campbell and Li, [2019] Campbell, T. and Li, X. (2019). Universal Boosting Variational Inference. In Advances in Neural Information Processing Systems.

- Casale et al., [2018] Casale, F. P., Dalca, A. V., Saglietti, L., Listgarten, J., and Fusi, N. (2018). Gaussian Process Prior Variational Autoencoders. Advances in Neural Information Processing Systems, page 11.

- [11] Chen, C., Li, C., Chen, L., Wang, W., Pu, Y., and Carin, L. (2017a). Continuous-Time Flows for Efficient Inference and Density Estimation. arXiv:1709.01179 [stat].

- Chen et al., [2020] Chen, J., Lu, C., Chenli, B., Zhu, J., and Tian, T. (2020). VFlow: More Expressive Generative Flows with Variational Data Augmentation. arXiv:2002.09741 [cs, stat].

- Chen et al., [2018] Chen, R. T. Q., Rubanova, Y., Bettencourt, J., and Duvenaud, D. (2018). Neural Ordinary Differential Equations. In Advances in Neural Information Processing Systems.

- Chen et al., [2019] Chen, T. Q., Behrmann, J., Duvenaud, D. K., and Jacobsen, J.-H. (2019). Residual Flows for Invertible Generative Modeling. In Advances in Neural Information Processing Systems, page 11.

- [15] Chen, X., Kingma, D. P., Salimans, T., Duan, Y., Dhariwal, P., Schulman, J., Sutskever, I., and Abbeel, P. (2017b). Variational Lossy Autoencoder. ICLR.

- Cornish et al., [2020] Cornish, R., Caterini, A. L., Deligiannidis, G., and Doucet, A. (2020). Relaxing Bijectivity Constraints with Continuously Indexed Normalising Flows. arXiv:1909.13833 [cs, stat].

- Cranko and Nock, [2019] Cranko, Z. and Nock, R. (2019). Boosting Density Estimation Remastered. Proceedings of the 36th International Conference on Machine Learning, 97:1416–1425.

- Cremer et al., [2018] Cremer, C., Li, X., and Duvenaud, D. (2018). Inference Suboptimality in Variational Autoencoders. In International Conference on Machine Learning, Stockholm, Sweden.

- De Cao et al., [2019] De Cao, N., Titov, I., and Aziz, W. (2019). Block Neural Autoregressive Flow. 35th Conference on Uncertainty in Artificial Intelligence (UAI19).

- Dinh et al., [2015] Dinh, L., Krueger, D., and Bengio, Y. (2015). NICE: Non-linear Independent Components Estimation. ICLR.

- Dinh et al., [2017] Dinh, L., Sohl-Dickstein, J., and Bengio, S. (2017). Density estimation using Real NVP. ICLR.

- Dinh et al., [2019] Dinh, L., Sohl-Dickstein, J., Pascanu, R., and Larochelle, H. (2019). A RAD approach to deep mixture models. arXiv:1903.07714 [cs, stat].

- Donsker and Varadhan, [1976] Donsker, M. D. and Varadhan, S. R. S. (1976). On the principal eigenvalue of second-order elliptic differential operators. Communications on Pure and Applied Mathematics, 29(6):595–621.

- Dua and Taniskidou, [2017] Dua, D. and Taniskidou, E. K. (2017). UCI Machine Learning Repository. https://archive.ics.uci.edu/ml/index.php.

- Durkan et al., [2019] Durkan, C., Bekasov, A., Murray, I., and Papamakarios, G. (2019). Neural Spline Flows. In Advances in Neural Information Processing Systems.

- Frank and Wolfe, [1956] Frank, M. and Wolfe, P. (1956). An Algorithm for Quadratic Programming. Naval Research Logistics Quarterly, 3:95–110.

- Freund and Schapire, [1996] Freund, Y. and Schapire, R. E. (1996). Experiments with a New Boosting Algorithm. In International Conference on Machine Learning, page 9.

- Freund and Schapire, [1997] Freund, Y. and Schapire, R. E. (1997). A Decision-Theoretic Generalization of On-Line Learning and an Application to Boosting. Journal of Computer and System Sciences, 55(1):119–139.

- Friedman et al., [2000] Friedman, J., Hastie, T., and Tibshirani, R. (2000). Additive logistic regression: A statistical view of boosting. The annals of statistics, 28(2):337–407.

- Friedman, [2001] Friedman, J. H. (2001). Greedy function approximation: A gradient boosting machine. Annals of statistics, pages 1189–1232.

- Friedman, [2002] Friedman, J. H. (2002). Stochastic gradient boosting. Computational Statistics & Data Analysis, 38(4):367–378.

- Fu et al., [2019] Fu, H., Li, C., Liu, X., Gao, J., Celikyilmaz, A., and Carin, L. (2019). Cyclical Annealing Schedule: A Simple Approach to Mitigating KL Vanishing. In NAACL.

- Germain et al., [2015] Germain, M., Gregor, K., Murray, I., and Larochelle, H. (2015). MADE: Masked Autoencoder for Distribution Estimation. In International Conference on Machine Learning, volume 37, Lille, France.

- Grathwohl et al., [2019] Grathwohl, W., Chen, R. T. Q., Bettencourt, J., Sutskever, I., and Duvenaud, D. (2019). FFJORD: Free-form Continuous Dynamics for Scalable Reversible Generative Models. In International Conference on Learning Representations.

- Grover and Ermon, [2018] Grover, A. and Ermon, S. (2018). Boosted Generative Models. In AAAI.

- Guo et al., [2016] Guo, F., Wang, X., Fan, K., Broderick, T., and Dunson, D. B. (2016). Boosting Variational Inference. In Advances in Neural Information Processing Systems, Barcelona, Spain.

- Hastie et al., [2001] Hastie, T., Tibshirani, R., and Friedman, J. (2001). The Elements of Statistical Learning, volume 1 of Springer Series in Statistics. Springer New York Inc., second edition.

- Higgins et al., [2017] Higgins, I., Matthey, L., Pal, A., Burgess, C., Glorot, X., Botvinick, M., Mohamed, S., and Lerchner, A. (2017). -VAE: Learning Basic Visual Concepts with a Constrained Variational Framework. ICLR, page 22.

- Ho et al., [2019] Ho, J., Chen, X., Srinivas, A., Duan, Y., and Abbeel, P. (2019). Flow++: Improving Flow-Based Generative Models with Variational Dequantization and Architecture Design. In International Conference on Machine Learning.

- Hu et al., [2018] Hu, Z., Yang, Z., Salakhutdinov, R., and Xing, E. P. (2018). On Unifying Deep Generative Models. In ICLR, pages 1–19.

- [41] Huang, C.-W., Krueger, D., Lacoste, A., and Courville, A. (2018a). Neural Autoregressive Flows. In International Conference on Machine Learning, page 10, Stockholm, Sweden.

- [42] Huang, C.-W., Tan, S., Lacoste, A., and Courville, A. (2018b). Improving Explorability in Variational Inference with Annealed Variational Objectives. In Advances in Neural Information Processing Systems, page 11, Montréal, Canada.

- Jaggi, [2013] Jaggi, M. (2013). Revisiting Frank-Wolfe: Projection-Free Sparse Convex Optimization. In Proceedings of the 30th International Conference on Machine Learning, volume 28(1) of Proceedings of Machine Learning Research, page 9. PMLR.

- Jaini et al., [2019] Jaini, P., Selby, K. A., and Yu, Y. (2019). Sum-of-Squares Polynomial Flow. In International Conference on Machine Learning, volume 97, Long Beach, California. PMLR.

- Jordan et al., [1999] Jordan, M. I., Ghahramani, Z., Jaakkola, T. S., and Saul, L. K. (1999). An Introduction to Variational Methods for Graphical Models. In Jordan, M. I., editor, Learning in Graphical Models, pages 105–161. Springer Netherlands, Dordrecht.

- Kingma and Ba, [2015] Kingma, D. P. and Ba, J. (2015). Adam: A method for stochastic optimization. ICLR.

- Kingma and Dhariwal, [2018] Kingma, D. P. and Dhariwal, P. (2018). Glow: Generative Flow with Invertible 1x1 Convolutions. In Advances in Neural Information Processing Systems, Montréal, Canada.

- Kingma et al., [2016] Kingma, D. P., Salimans, T., Jozefowicz, R., Chen, X., Sutskever, I., and Welling, M. (2016). Improving Variational Inference with Inverse Autoregressive Flow. In Advances in Neural Information Processing Systems.

- Kingma and Welling, [2014] Kingma, D. P. and Welling, M. (2014). Auto-Encoding Variational Bayes. Proceedings of the 2nd International Conference on Learning Representations (ICLR), pages 1–14.

- Lake et al., [2015] Lake, B. M., Salakhutdinov, R., and Tenenbaum, J. B. (2015). Human-level concept learning through probabilistic program induction. Science, 350(6266):1332–1338.

- Larochelle and Murray, [2011] Larochelle, H. and Murray, I. (2011). The Neural Autoregressive Distribution Estimator. International Conference on Artificial Intelligence and Statistics (AISTATS), 15:9.

- Lebanon and Lafferty, [2002] Lebanon, G. and Lafferty, J. D. (2002). Boosting and Maximum Likelihood for Exponential Models. NIPS, page 8.

- Locatello et al., [2018] Locatello, F., Khanna, R., Ghosh, J., and Rätsch, G. (2018). Boosting Variational Inference: An Optimization Perspective. International Conference on Artificial Intelligence and Statistics.

- Ma et al., [2019] Ma, X., Kong, X., Zhang, S., and Hovy, E. (2019). MaCow: Masked Convolutional Generative Flow. In Advances in Neural Information Processing Systems, page 10.

- Marlin et al., [2010] Marlin, B. M., Swersky, K., Chen, B., and de Freitas, N. (2010). Inductive Principles for Restricted Boltzmann Machine Learning. 13thInternational Conference on Artificial Intelligence and Statistics (AISTATS), 9:8.

- Martin et al., [2001] Martin, D., Fowlkes, C., Tal, D., and Malik, J. (2001). A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings Eighth IEEE International Conference on Computer Vision. ICCV 2001, volume 2, pages 416–423, Vancouver, BC, Canada. IEEE Comput. Soc.

- Mason et al., [1999] Mason, L., Baxter, J., Bartlett, P. L., and Frean, M. R. (1999). Boosting Algorithms as Gradient Descent. In Advances in Neural Information Processing Systems, pages 512–518.

- Miller et al., [2017] Miller, A. C., Foti, N., and Adams, R. P. (2017). Variational Boosting: Iteratively Refining Posterior Approximations. In Proceedings of the 34th International Conference on Machine Learning, volume 70, pages 2420–2429. PMLR.

- Nguyen et al., [2005] Nguyen, X., Wainwright, M. J., and Jordan, M. I. (2005). On divergences, surrogate loss functions, and decentralized detection. CoRR, abs/math/0510521:35.

- Nguyen et al., [2009] Nguyen, X., Wainwright, M. J., and Jordan, M. I. (2009). On surrogate loss functions and $f$-divergences. The Annals of Statistics, 37(2):876–904.

- Papamakarios et al., [2019] Papamakarios, G., Nalisnick, E., Rezende, D. J., Mohamed, S., and Lakshminarayanan, B. (2019). Normalizing Flows for Probabilistic Modeling and Inference. arXiv:1912.02762 [cs, stat].

- Papamakarios et al., [2017] Papamakarios, G., Pavlakou, T., and Murray, I. (2017). Masked Autoregressive Flow for Density Estimation. In Advances in Neural Information Processing Systems.

- Paszke et al., [2017] Paszke, A., Gross, S., Chintala, S., Chanan, G., Yang, E., DeVito, Z., Lin, Z., Desmaison, A., Antiga, L., and Lerer, A. (2017). Automatic differentiation in PyTorch. In Advances in Neural Information Processing Systems, page 4.

- Prenger et al., [2018] Prenger, R., Valle, R., and Catanzaro, B. (2018). WaveGlow: A Flow-based Generative Network for Speech Synthesis. arXiv:1811.00002 [cs, eess, stat].

- Rainforth et al., [2018] Rainforth, T., Kosiorek, A. R., Le, T. A., Maddison, C. J., Igl, M., Wood, F., and Teh, Y. W. (2018). Tighter Variational Bounds Are Not Necessarily Better. In International Conference on Machine Learning, Stockholm, Sweden.

- Rezende and Mohamed, [2015] Rezende, D. J. and Mohamed, S. (2015). Variational Inference with Normalizing Flows. In International Conference on Machine Learning, volume 37, pages 1530–1538, Lille, France. PMLR.

- Rezende et al., [2014] Rezende, D. J., Mohamed, S., and Wierstra, D. (2014). Stochastic Backpropagation and Approximate Inference in Deep Generative Models. In Proceedings of the 31st International Conference on Machine Learning, volume 32 of 2, pages 1278–1286, Beijing, China. PMLR.

- Rippel and Adams, [2013] Rippel, O. and Adams, R. P. (2013). High-Dimensional Probability Estimation with Deep Density Models. arXiv:1302.5125 [cs, stat].

- Rosset and Segal, [2002] Rosset, S. and Segal, E. (2002). Boosting Density Estimation. In Advances in Neural Information Processing Systems, page 8.

- Salman et al., [2018] Salman, H., Yadollahpour, P., Fletcher, T., and Batmanghelich, K. (2018). Deep Diffeomorphic Normalizing Flows. arXiv:1810.03256 [cs, stat].

- Schapire et al., [1998] Schapire, R. E., Freund, Y., Bartlett, P., and Lee, W. S. (1998). Boosting the margin: A new explanation for the effectiveness of voting methods. The Annals of Statistics, 26(5):1651–1686.

- Smith, [2017] Smith, L. N. (2017). Cyclical Learning Rates for Training Neural Networks. In 2017 IEEE Winter Conference on Applications of Computer Vision (WACV), pages 464–472, Santa Rosa, CA, USA. IEEE.

- Sønderby et al., [2016] Sønderby, C. K., Raiko, T., Maaløe, L., Sønderby, S. K., and Winther, O. (2016). Ladder Variational Autoencoders. In Advances in Neural Information Processing Systems.

- Tabak and Turner, [2013] Tabak, E. G. and Turner, C. V. (2013). A Family of Nonparametric Density Estimation Algorithms. Communications on Pure and Applied Mathematics, 66(2):145–164.

- Tabak and Vanden-Eijnden, [2010] Tabak, E. G. and Vanden-Eijnden, E. (2010). Density estimation by dual ascent of the log-likelihood. Communications in Mathematical Sciences, 8(1):217–233.

- Tolstikhin et al., [2017] Tolstikhin, I., Gelly, S., Bousquet, O., Simon-Gabriel, C.-J., and Schölkopf, B. (2017). AdaGAN: Boosting Generative Models. arXiv:1701.02386 [cs, stat].

- Tomczak and Welling, [2018] Tomczak, J. and Welling, M. (2018). VAE with a VampPrior. In International Conference on Artificial Intelligence and Statistics (AISTATS), volume 84, Lanzarote, Spain.

- Uria et al., [2013] Uria, B., Murray, I., and Larochelle, H. (2013). RNADE: The real-valued neural autoregressive density-estimator. In Advances in Neural Information Processing Systems.

- van den Berg et al., [2018] van den Berg, R., Leonard Hasenclever, Jakub M. Tomczak, and Max Welling (2018). Sylvester Normalizing Flows for Variational Inference. In Uncertainty in Artificial Intelligence (UAI).

- Wainwright and Jordan, [2007] Wainwright, M. J. and Jordan, M. I. (2007). Graphical Models, Exponential Families, and Variational Inference. Foundations and Trends® in Machine Learning, 1(1–2):1–305.

- Ziegler and Rush, [2019] Ziegler, Z. M. and Rush, A. M. (2019). Latent Normalizing Flows for Discrete Sequences. In Advances in Neural Information Processing Systems.

Appendix A Experiment Details

A.1 Image Modeling

Datasets

In Section 6.4, VAEs are modified with GBNF approximate posteriors to model four datasets: Freyfaces444http://www.cs.nyu.edu/~roweis/data/frey_rawface.mat, Caltech 101 Silhouettes555https://people.cs.umass.edu/~marlin/data/caltech101_silhouettes_28_split1.mat [55], Omniglot666https://github.com/yburda/iwae/tree/master/datasets/OMNIGLOT [50], and statically binarized MNIST777http://yann.lecun.com/exdb/mnist/ [51]. Details of these datasets are given below.

The Freyfaces dataset contains 1965 gray-scale images of size portraying one man’s face in a variety of emotional expressions. Following van den Berg et al., [79], we randomly split the dataset into 1565 training, 200 validation, and 200 test set images.

The Caltech 101 Silhouettes dataset contains 4100 training, 2264 validation, and 2307 test set images. Each image portrays the black and white silhouette of one of 101 objects, and is of size . As van den Berg et al., [79] note, there is a large variety of objects relative to the training set size, resulting in a particularly difficult modeling challenge.

The Omniglot dataset contains 23000 training, 1345 validation, and 8070 test set images. Each image portrays one of 1623 hand-written characters from 50 different alphabets, and is of size . Images in Omniglot are dynamically binarized.

Finally, the MNIST dataset contains 50000 training, 10000 validation, and 10000 test set images. Each image is a binary, and portrays a hand-written digit.

Experimental Setup

We limit the computational complexity of the experiments by reducing the number of convolutional layers in the encoder and decoder of the VAEs from 14 layers to 6. In Table 2 we compare the performance of our GBNF to other normalizing flow architectures. Planar, radial, and Sylvester normalizing flows each use , with Sylvester’s bottleneck set to orthogonal vectors per orthogonal matrix. IAF is trained with transformations, each of which is a single hidden layer MADE [33] with either or hidden units. RealNVP uses transformations with either or hidden units in the Tanh feed-forward network. For all models, the dimensionality of the flow is fixed at .

Each baseline model is trained for 1000 epochs, annealing the KL term in the objective function over the first 250 epochs as in Bowman et al., [7], Sønderby et al., [73]. The gradient boosted models apply the same training schedule to each component. We optimize using the Adam optimizer [46] with a learning rate of (decay of 0.5x with a patience of 250 steps). To evaluate the negative log-likelihood (NLL) we use importance sampling (as proposed in Rezende et al., [67]) with 2000 importance samples. To ensure a fair comparison, the reported ELBO for GBNF models is computed by (4)—effectively dropping GBNF’s fixed components term and setting the entropy regularization to .

Model Architectures

In Section 6.4, we compute results on real datasets for the VAE and VAEs with a flow-based approximate posterior. In each model we use convolutional layers, where convolutional layers follow the PyTorch convention [63]. The encoder of these networks contains the following layers:

where is a kernel size, is a padding size, and is a stride size. The final convolutional layer is followed by a fully-connected layer that outputs parameters for the diagonal Gaussian distribution and amortized parameters of the flows (depending on model).

Similarly, the decoder mirrors the encoder using the following transposed convolutions:

where is an outer padding. The decoders final layer is passed to standard 2-dimensional convolutional layer to reconstruction the output, whereas the other convolutional layers listed above implement a gated action function:

where and are inputs and outputs of the -th layer, respectively, are weights of the -th layer, denote biases, is the convolution operator, is the sigmoid activation function, and is an element-wise product.

A.2 Density Estimation on Real Data

Dataset

For the unconditional density estimation experiments we follow Papamakarios et al., [62], Uria et al., [78], evaluating on four dataset from the UCI machine learning repository [24] and patches of natural images from natural images [56]. From the UCI repository the POWER dataset (, 2,049,280) contains electric power consumption in a household over a period of four years, GAS (, 1,052,065) contains logs of chemical sensors exposed to a mixture of gases, HEPMASS (, 525,123) contains Monte Carlo simulations from high energy physics experiments, MINIBOONE (, 36,488) contains electron neutrino and muon neutrino examples. Lastly we evaluate on BSDS300, a dataset (, 1,300,000) of patches of images from the homonym dataset. Each dataset is preprocessed following Papamakarios et al., [62].

Experimental Setup

We compare our results against Glow [47], and RealNVP [21]. We train models using a small grid search on the depth of the flows , the number of hidden units in the coupling layers , where is the input dimension of the data-points. We trained using a cosine learning rate schedule with the learning rate determined using the learning rate range test [72] for each dataset, and similar to Durkan et al., [25] we use batch sizes of 512 and up to 400,000 training steps, stopping training early after 50 epochs without improvement. The log-likelihood calculation for GBNF follows (7), that is we recursively compute and combine log-likelihoods for each component.

Appendix B Additional Results

B.1 Density Estimation

8 1

4 2

2 4

B.2 Image Reconstructions from VAE Models

Freyfaces

Caltech

Omniglot

MNIST