E-mail: {zeyuzhou,longyuan}@njust.edu.cn. X. Lin is with the School of Computer Science and Engineering, the University of New South Wales, Sydney, Australia.

E-mail: [email protected]. Z. Chen is with the Software Engineering Institute, East China Normal University, Shanghai, China.

E-mail: [email protected]. X. Zhao is with the College of Systems Engineering, National University of Defense Technology, Changsha, China.

E-mail: [email protected]. F. Zhang is with Cyberspace Institute of Advanced Technology, Guangzhou University, Guangzhou, China.

E-mail: [email protected]. ††thanks: Manuscript received xxx; revised xxx.

: Efficient Structural Graph Clustering on GPUs

Abstract

Structural clustering is one of the most popular graph clustering methods, which has achieved great performance improvement by utilizing GPUs. Even though, the state-of-the-art GPU-based structural clustering algorithm, , still suffers from efficiency issues since lots of extra costs are introduced for parallelization. Moreover, assumes that the graph is resident in the GPU memory. However, the GPU memory capacity is limited currently while many real-world graphs are big and cannot fit in the GPU memory, which makes unable to handle large graphs. Motivated by this, we present a new GPU-based structural clustering algorithm, , in this paper. To address the efficiency issue, we propose a new progressive clustering method tailored for GPUs that not only avoid high parallelization costs but also fully exploits the computing resources of GPUs. To address the GPU memory limitation issue, we propose a partition-based algorithm for structural clustering that can process large graphs with limited GPU memory. We conduct experiments on real graphs, and the experimental results demonstrate that our algorithm can achieve up to 168 times speedup compared with the state-of-the-art GPU-based algorithm when the graph can be resident in the GPU memory. Moreover, our algorithm is scalable to handle large graphs. As an example, our algorithm can finish the structural clustering on a graph with 1.8 billion edges using less than 2 GB GPU memory.

Index Terms:

structural graph clustering, GPU, graph algorithm1 Introduction

Graph clustering is one of the most fundamental problems in analyzing graph data. It has been extensively studied [14, 24, 27, 40, 42] and frequently utilized in many applications, such as natural language processing [3], recommendation systems [2], social and biological network analysis [13, 21], and load balancing in distributed systems[11].

One well-known method of graph clustering is structural clustering, which is introduced via the Structural Clustering Algorithm for Networks () [40]. Structural clustering defines the structural similarity between two vertices, and two vertices with structural similarity not less than a given parameter are considered similar. A core is a vertex that has at least similar neighbors, where is also a given parameter. A cluster contains the cores and vertices that are structurally similar to the cores. If a vertex does not belong to any cluster but its neighbors belong to more than one cluster, then it is a hub; Otherwise, it is an outlier. Fig. 1 shows the clustering result of structural clustering with and on graph in which two clusters are colored gray, cores are colored black, and hubs and outliers are labeled. Compared with most graph clustering approaches aiming to partition the vertices into disjoint sets, structural clustering is also able to find hub vertices that connected different clusters and outlier vertices that lack strong ties to any clusters, which is important for mining various complex networks [40, 16, 38]. Structural clustering has been successfully used to cluster biological data [10, 21] and social media data [20, 18, 28, 29].

Motivation. In recent years, the rapid evolution of Graphics Processing Unit (GPU) has attracted extensive attention in both industry and academia, and it has been used successfully to speed up various graph algorithms [19, 15, 41, 31, 32]. The massively parallel hardware resources in GPUs also offer promising opportunities for accelerating structural clustering. In the literature, a GPU-based algorithm named is proposed [36]. By redesigning the computation steps of structural clustering, significantly improves the clustering performance compared with the state-of-the-art serial algorithm. However, it has the following drawbacks:

-

•

Prohibitive parallelization overhead. aims to fully utilize the massively parallel hardware resources in GPU. However, lots of extra computation cost is introduced for this goal. Given a graph , the state-of-the art serial algorithm [7] can finish the clustering with work while needs work 111We use the work-span framework to analyze the parallel algorithm [9]. The work of an algorithm is the number of operations it performs. The span of an algorithm is the length of its longest sequential dependence. for it, where denotes the number of edges in the graph, denotes the maximum degree of the vertices in , and is a not small integer as verified in our experiments determined by the given graph and parameter and . The prohibitive parallelization overhead makes inefficient for structural clustering.

-

•

GPU memory capacity limitation. Compared to common server machines equipped with hundreds of gigabytes or even terabytes of main memory, the GPU memory capacity is very limited currently. assumes that the graph is resident in the GPU memory. Nevertheless, many real-world graphs are big and may not fit entirely in the GPU memory. The memory capacity limitation significantly restricts the scale of graphs that can handle.

Motivated by this, in this paper, we aim to devise a new efficient GPU-based structural clustering algorithm that can handle large graphs.

Our solution. We address the drawbacks of and propose new GPU-based algorithms for structural clustering in this paper. Specifically, for the prohibitive parallelization overhead, we devise a CSR-enhanced graph storage structure and adopt a progressive clustering paradigm with which unnecessary similarity computation can be pruned. Following this paradigm, we develop a new GPU-based algorithm with careful considerations of total work and parallelism, which are crucial to the performance of GPU programs. Our new algorithm significantly reduces the parallelization overhead. We theoretically prove that the work of our new algorithm is reduced to , and experimental results show that our algorithms achieve 85 times speedup on average compared with .

For the GPU memory capacity limitation, a direct solution is to use the Unified Virtual Memory (UVM) provided by recent GPUs. UVM utilizes the large host main memory of common server machines and allows GPU memory to be oversubscribed as long as there is sufficient host main memory to back the allocated memory. The driver and the runtime system handle data movement between the host main memory and GPU memory without the programmer’s involvement, which allows running GPU applications that may otherwise be infeasible due to the large size of datasets [12]. However, the performance of this approach is not competitive due to poor data locality caused by irregular memory accesses during the structural clustering and relatively slow rate I/O bus connecting host memory and GPU memory. Therefore, we propose a new partition-based structural clustering algorithm. Specifically, we partition the graph into several specified subgraphs. When conducting the clustering, we only need to load the specified subgraphs into the GPU memory and we can guarantee that the clustering results are the same as loading the whole graph in the GPU memory, which not only addresses the GPU memory limitation problem in but also avoids the data movement overheads involved in the UVM-based approach.

Contributions. We make the following contributions:

(A) We theoretically analyze the performance of the state-of-the-art GPU-based structural clustering algorithm and reveal the key reasons leading to its inefficiency. Following the analysis, we propose a new progressive clustering algorithm tailored for GPUs that not only avoids the prohibitive parallelization overhead but also fully exploits the computing power of GPUs.

(B) To overcome the GPU memory capacity limitation, we devise a new out-of-core GPU-based SCAN algorithm. By partitioning the original graph into a series of smaller subgraphs, our algorithm can significantly reduce the GPU memory requirement when conducting the clustering and scale to large data graphs beyond the GPU memory.

(C) We conduct extensive performance studies using ten real graphs. The experimental results show that our proposed algorithm achieves 168/85 times speedup at most/on average, compared with the state-of-the-art GPU-based SCAN algorithm. Moreover, our algorithm can finish the structural clustering on a graph with 1.8 billion edges using less than 2 GB of GPU memory.

2 Preliminaries

2.1 Problem Definition

Let be an undirected and unweighted graph, where is the set of vertices and is the set of edges, respectively. We denote the number of vertices as and the number of edges as , i.e., , . For a vertex , we use to denote the set of neighbors of . The degree of a vertex , denoted by is the number of neighbors of , i.e., . For simplicity, we omit in the notations when the context is self-evident.

Definition 2.1: (Structural Neighborhood) Given a vertex in , the structural neighborhood of , denoted by , is defined as .

Definition 2.2: (Structural Similarity) Given two vertices and in , the structural similarity between and , denoted by , is defined as:

| (1) |

Definition 2.3: (-Neighborhood) Given a similarity threshold , the -neighborhood of , denoted by , is defined as the subset of in which every vertex satisfies , i.e., .

Note that the -neighborhood of a given vertex contains itself as . When the number of -neighbors of a vertex is large enough, it becomes a core vertex:

Definition 2.4: (Core) Given a structural similarity threshold and an integer , a vertex is a core vertex if .

Given a core vertex , the structurally reachable vertex of is defined as:

Definition 2.5: (Structural Reachability) Given two parameters and , for two vertices and , is structurally reachable from vertex if there is a sequence of vertices such that: (i) ; (ii) are core vertices; and (iii) for each .

Intuitively, a cluster is a set of vertices that can be structurally reachable from any core vertex. Formally,

Definition 2.6: (Cluster) A cluster is a non-empty subset of such that:

-

•

(Connectivity) For any two vertices , there exists a vertex such that both and are structurally reachable from .

-

•

(Maximality) If a core vertex , then all vertices which are structure-reachable from are also in .

Definition 2.7: (Hub and Outlier) Given the set of clusters in graph , a vertex not in any cluster is a hub vertex if its neighbors belong to two or more clusters, and it is an outlier vertex otherwise.

Following the above definitions, it is clear that two vertices that are not adjacent in the graph have no effects on the clustering results even if they are similar. Therefore, we only need to focus on the vertex pair incident to an edge. In the following, for an edge , we use the edge similarity of and the similarity of and interchangeably for brevity. We summarize the notations used in the paper in Table I.

| Symbol | Description |

|---|---|

| Graph with vertices and edges | |

| All vertices/edge in | |

| Neighbors/degree of a vertex u | |

| number of vertices/edges in | |

| maximum degree in | |

| the role of a vertex or the similarity of an edge is unknown | |

| / | the vertex is a core/non-core vertex |

| / | the edge is similar/dis-similar |

| / | the vertex is a hub/outlier |

Example 2.1: Considering shown in Fig. 1 where and , the structural similarity of every pair of adjacent vertices is shown near the edge. , , , , , , , , and are core vertices as they all have at least similar neighbors including themselves. These core vertices form two clusters, which are marked in grey. is a hub as its neighbors and are in different clusters, while and are outliers. Due to the limited space, in the following examples, we only show procedures on the subgraph of .

Problem Statement. Given a graph and two parameters and , structural graph clustering aims to efficiently compute all the clusters in and identify the corresponding role of each vertex. In this paper, we aim to accelerate the clustering performance of by GPUs.

2.2 GPU Architecture and CUDA Programming Platform

GPU Architecture. A GPU consists of multiple streaming multiprocessors (SM). Each SM has a large number of GPU cores. The cores in each SM work in single-instruction multiple-data (SIMD) 222Single-instruction multi-thread (SIMT) model in NIVIDA’s term fashion, i.e., they execute the same instructions at the same time on different input data. The smallest execution units of a GPU are warps. In NVIDIA’s GPU architectures, a warp typically contains 32 threads. Threads in the same warp that finish their tasks earlier have to wait until other threads finish their computations, before swapping in the next warp of threads. A GPU is equipped with several types of memory. All SMs in the GPU share a larger but slower global memory of the GPU. Each SM has small but fast-access local programmable memory (called shared memory) that is shared by all of its cores. Moreover, data can be exchanged between a GPU’s global memory and the host’s main memory via a relatively slow rate I/O bus.

CUDA. CUDA (Compute Unified Device Architecture) is the most popular GPU programming language. A CUDA problem consists of codes running on the CPU (host code) and those on the GPU (kernel). Kernels are invoked by the host code. Kernels start by transferring input data from the main memory to the GPU’s global memory and then processing the data on the GPU. When finishing the process, results are copied from the GPU’s global memory back to the main memory. The GPU executes each kernel with a user-specified number of threads. At runtime, the threads are divided into several blocks for execution on the cores of an SM, each block contains several warps, and each warp of threads executes concurrently.

For the thread safety of GPU programming, CUDA provides programmers with atomic operations, including and . reads the 16-bit, 32-bit, or 64-bit word old located at the address address in global or shared memory, computes , and stores the result back to memory at the same address. These three operations are performed in one atomic transaction and returns old value. (Compare And Swap) reads the 16-bit, 32-bit, or 64-bit word old located at the address address in global or shared memory, computes , and stores the result back to memory at the same address. These three operations are performed in one atomic transaction and return old.

3 Related work

3.1 CPU-based

Structural graph clustering () is first proposed in [40]. After that, a considerable number of follow-up works are conducted in the literature. For the online algorithms regarding a fixed parameter and , ++ [33], pSCAN [7] and [43] speed up by pruning unnecessary structural similarity computation. [44] conducts the structural clustering on the Spark system. [30] considers the case that the input graph is stored on the disk. [8] parallelizes pruning-based algorithms and uses vectorized instructions to further improve the performance.

For the index-based algorithms aiming at returning the clustering result fast for frequently issued queries, GS*-Index [39] designs a space-bounded index and proposes an efficient algorithm to answer a clustering query. [38] parallelizes GS*-Index and uses locality-sensitive hashing to design a novel approximate index construction algorithm. [26] studies the maintenance of computed structural similarity between vertices when the graph is updated and proposes an approximate algorithm that achieves amortized cost for each update for a specified failure probability and every sequence of updates. [22] studies structural clustering on directed graphs and proposes an index-based approach to computing the corresponding clustering results. However, all these algorithms are CPU-based and cannot be translated into efficient solutions on GPUs due to the inherently unique characteristics of a GPU different from a CPU.

3.2 GPU-based

For the GPU-based approach, [37] is the state-of-the-art GPU-based solution, which conducts the clustering in three separate phases: (1) -neighborhood identification. In Phase 1, computes the similarity of each edge and determines the core vertices in the graph. (2) cluster detection. After identifying the core vertices, detects the clusters based on Definition 2.1 in Section 2. (3) hub and outlier classification. With the clustering results, the hub and outlier vertices are classified in Phase 3. The following example illustrates the three phases of .

Example 3.1: Consider graph shown in Fig. 1, Fig. 2 shows the clustering procedures of with parameters . Due to the limited space, we only show the clustering procedures related to the subgraph of in Fig. 1 in the following examples and the remaining part can be processed similarly.

Fig. 2 (a)-(b) show the -neighborhood identification phase. uses and arrays to represent the edges of the graph, a array in which each element stores whether an edge is similar or not, and and arrays to store the neighbors of each vertex. uses a warp to computing the similarity of each edge . According to Definition 2.1, the key to compute the similarity of is to find the common neighbors in and . To achieve this goal, for each , uses 8 threads in a warp to check whether is also in 8 continuous elements in . As a warp usually contains 32 threads, four elements in can be compared concurrently. After the comparisons of these four elements are complete, if the largest vertex id of these four elements in is bigger than the largest one in the 8 continuous elements , then, the next continuous 8 elements in are used to repeat the above comparisons. If the largest vertex id of these four elements in is smaller than the largest one in the 8 continuous elements , then, the next 4 elements in are used to repeat the above comparisons. Otherwise, the next 4 elements in and the next 8 elements in are used to repeat the above comparisons. The common neighbors are found when all the elements in and have been explored. Take edge as an example. Fig. 2 (b) shows the procedure of to compute their common neighbors. As , the first 4 elements in are compared first. For the 32 threads in a warp, threads handle the comparison for . The remaining threads are assigned to handle the comparison for vertices , , and similarly. As , threads finds . Similarly, threads , , find . After that, they find the largest vertex id of elements in that were compared is smaller than the largest one in . Then, the remaining 3 elements in are used to repeat the comparisons similarly. In this comparison, threads find that is the common element of and . Therefore, , which means and are similar, and the corresponding element in the array is set as 1.

Fig. 2 (c) shows the graph clustering phase of . Following Definition 2.1, only similar edges could be in the final clusters. Therefore, builds two new arrays and by retrieving the similar edges in and . Consider the initialization step in Fig. 2 (c), as and are similar while and are not similar, only and are kept in and . Then Based on and , can be obtained easily. For example, for , , , thus, is a core vertex. Similarly, and are also core vertices. Then, it removes the edges in and not incident to core vertices. Moreover, represents all clusters by a spanning forest through a array. In the array, each element represents the parent of the corresponding vertex in the forest, and the corresponding element for the vertices in and is initialized as the vertex itself, which is also shown in Initialization of Fig. 2 (c).

After that, detects the clusters in the graph iteratively. In each odd iteration, for each vertex in , it finds the smallest neighbor of in and takes the smaller one between and as the parent of in the array. Then, it replaces each vertex in and by its parent and removes the edges with the same incident vertices. Consider Iteration 1.1-1.4 in Fig. 2 (c), for , its smallest neighbor in is , which is smaller than itself, thus, the parent of is replaced by in the array. The other vertices are processed similarly (Iteration 1.1 and 1.2). Then, is replaced by in and in Iteration 1.3, and only edges and are left after removing the edges with the same incident vertices in Iteration 1.4. In each even iteration, conducts the clustering similarly to the odd iteration except the largest neighbor is selected as its parent. Iteration 2.1-2.4 in Fig. 2 (c) show the even case. Note that to obtain the and at the end of each iteration, uses and functions in the library provided by Nvidia [23]. When and become empty, explores each vertex and sets its corresponding element in the array as the root vertex in the forest. For example, in Iteration 2.2, the parent of is , the parent of is , and is the parent of itself, thus, the parent of is set as . After Phase 2 finishes, the vertices with the same parent are in the same cluster. In Fig. 2 (c), as , , , , and have the same parent, they are in the same cluster.

In Phase 3, classifies the hub and outlier vertices. It first retrieves edge from and such that is not in a cluster and is in a cluster and keeps them in new arrays and . Then, it replaces the vertices in by their parents. Then, for a vertex , it has two more different neighbors, then it is a hub vertex. Otherwise, it is an outlier vertex. Consider the example shown in Fig. 2 (d), , , , and are not in a cluster, thus, the edges that they form with their neighbors within the cluster are retrieved and stored in and (Step 1). Then, , , and are replaced with their parents (In Step 2, the parent of is , which is not shown in Fig. 2 due to limited space). Since has two neighbors and in , it is a hub vertex. , , only have one neighbor in , they are outlier vertices.

Theorem 3.1: Given a graph and two parameters and , the work of to finish structural clustering is , and the span is , where denotes the number of iterations in Phase 2.

Proof: In Phase 1, for each edge , has to explore the neighbors of and . Thus, the work and span of Phase 1 are and , respectively. In Phase 2, in each iteration, the time used for dominates the whole time of this iteration, and the work and span of are and [35]. Thus, the work and span of Phase 2 are and , respectively. In Phase 3, explores the edges once and only the neighbors of hubs and outliers are visited. Thus, the work and span of Phase 3 are and . Thus, the work and space of are and .

Drawbacks of . seeks to parallelize to benefit from the computational power provided by GPUs. However, it suffers from the following two drawbacks, which make it unable to fully utilize the massive parallelism of GPUs.

- •

-

•

Low scalability. assumes that the input graphs can be fit in the GPU memory. However, the sizes of real graphs such as social networks and web graphs are huge while the GPU memory is generally in the range of a few gigabytes. The assumption that the sizes of data graphs cannot exceed the GPU memory makes it unscalable to handle large graphs in practice.

4 Our Approach

We address the drawbacks of in two sections. In this section, we focus on the scenario in which the graphs can be stored in the GPU memory and aim to reduce the high computation cost introduced for parallelization. In the next section, we present our algorithm to address the problem that the graph cannot fit in the GPU memory.

4.1 A CSR-Enhanced Graph Layout

simply uses the edge array and adjacent array to represent the input graph. The shortcomings of this representation are two-fold: (1) the degree of a vertex can not be easily obtained, which is a commonly used operation during the clustering, (2) the relation between the edge array and adjacent array for the same edge is not directly maintained, which complicates the processing when identifying the cluster vertices in Phase 2. To avoid these problems, we adopt a CSR (Compressed Sparse Row)-enhanced graph layout, which contains four parts:

-

•

Vertex array. Each entry keeps the starting index for a specific vertex in the adjacent array.

-

•

Adjacent array. The adjacent array contains the adjacent vertices of each vertex. The adjacent vertices are stored in the increasing order of vertex ids.

-

•

Eid array. Each entry corresponds to an edge in the adjacent array and keeps the index of the edge in the degree-oriented edge array.

-

•

Degree-oriented edge array. The degree-oriented edge array contains the edges of the graph and the edges with the same source vertex are stored together. For each edge in the degree-oriented edge array, we guarantee or and . In this way, the workload for edges incident to a large degree vertex can be computed based on the vertex with smaller degree.

-

•

Similarity array. Each element corresponds to an edge in the degree-oriented edge array and maintain the similarity of the corresponding edge.

Example 4.1: Fig. 3 shows the CSR-Enhanced graph layout regarding , , , in . These five arrays are organized as discussed above.

4.2 A Progressive Structural Graph Clustering Approach

To reduce the high parallelization cost, the designed algorithm should avoid unnecessary computation during the clustering and fully exploit the unique characteristics of GPU structure. As shown in Section 3.2, first computes the structural similarity for each edge in Phase 1 and then identifies the roles of each vertex accordingly. However, based on Theorem 2, computing the structural similarity is a costly operation. To avoid unnecessary structural similarity computation, we adopt a progressive approach in which the lower bound () and upper bound () of are maintained. Clearly, we have the following lemma on and :

Lemma 4.1: Given a graph and two parameters and , for a vertex , if , then is a core vertex. If , then is not a core vertex.

According to Lemma 1, we can maintain the and progressively and delay the structural similarity computation until necessary. Following this idea, our new algorithm, , is shown in Algorithm 1. It first initializes as , as for each vertex (lines 1-2, refers to the vertex array). The role of each vertex and the structural similarity of each edge are initialized as unknown () (lines 1-4). Then, it identifies the core vertices (line 5), detects the clusters follow the core vertices (line 6), and classifies the hub vertices and outlier vertices (line 7).

Identify core vertices. first identifies the core vertices in the graph based on the given parameters and , which is shown in Algorithm 2.

To identify the core vertices, it assigns a warp for each edge to determine its structural similarity (line 1), which is similar to . However, the detailed procedures to compute the similarity are significantly different from that of . Specifically, if the role of or has been known, it delays the computation of structural similarity between and to the following steps as the role of these two vertices has been determined and it is possible that we can obtain the clustering results without knowing the structural similarity of and in the following steps (line 2). Then, it computes the structural similarity between and by invoking . If and are similar, then the similarity indicator between and is set to (line 6) and / is increased by as and are similar (line 7). After that, if /, the role of / is set as (lines 8-9). If and are dissimilar, / and the role of / are set accordingly (lines 11-14).

Procedure is used to determine the structural similarity between and based on the given . Without loss of generality, we assume . For each neighbor of , it assigns a thread to check whether is also a neighbor of (line 17). Since the neighbors of are stored in increasing order based on their id in , it looks up among the neighbors of in a binary search manner (lines 18-26). Specifically, three indices , , and are maintained and initialized as , , and , respectively. If is less than , is updated as (lines 21-22) ( refers to the adjacent array). If is larger than , then is updated as +1 (lines 23-24). Otherwise, the common neighbors of and , which are recorded in , are increased by 1 (line 26). Last, whether and are similar returns based on Definition 2.1 (line 27-29).

Example 4.2: Fig. 4 shows the procedures of to check the similarity between and of shown in Fig. 1. In order to compute their similarity, the neighbors of and are first obtained from the CSR-Enhanced graph layout as shown in Fig. 4 (a). As the , thread , , , and in the warp are used to check whether the neighbors of are also neighbors of , which is shown in Fig. 4 (b). Take thread as an example, since the neighbors of are sorted based on their ids, thread explores the neighbors of in a binary-search manner, which is shown in Fig. 4 (c). After traversing the leaf node , it is clear that is not a neighbor of . Similarly, threads find that , , and are neighbors of as well. Therefore, the common neighbors of and are , , . Because , and are similar, and returns .

Detect clusters. After the core vertices have been identified, detects clusters in the graph, which is shown in Algorithm 3. After the core vertices identification phase, the vertices are either core vertices or non-core vertices. Moreover, the similarities of some edges are known but there are still edges whose similarities are unknown. Therefore, Algorithm 3 first detects the sub-clusters that can be determined by the computed information (lines 1-9). After that, it further computes the similarity for the edges whose similarities are unknown and obtains the complete clusters in the graph (lines 10-27). In this way, we can avoid some unnecessary similarity computation compared with .

Specifically, Algorithm 3 uses the array to store the cluster id that each vertex belongs to (negative cluster id value indicates the corresponding vertex is not in a cluster currently). Similar to , Algorithm 3 also uses a spanning forest to represent the clusters through the array. However, Algorithm 3 uses a different algorithm to construct the forest, which makes much more efficient than as verified in our experiment. It initializes each core vertex as a separate cluster with cluster id and the non-core vertices with a negative cluster id (lines 1-3). Then, for each core vertex , it first finds the root id of the cluster by the procedure (line 5). After that, for the neighbors of , if we have already known and are similar (line 7), we know and are the same cluster following Definition 2.1. Thus, we union the subtrees represented by and in through procedure (lines 6-9).

After having explored the sub-clusters that can be determined by the computed information, for each core vertices , Algorithm 3 further explores the neighbors of such that is also a core vertex but the similarity of and are unknown (lines 10-13). If and are not in the same cluster currently (line 15), then it computes the similarity between and (line 16). If and are similar, we union the subtrees represented by and in the array (line 17). Otherwise, and are marked as dissimilar (line 18). When the clusters of the core vertices are determined, the root cluster id is assigned to each core vertex (lines 19-20). At last, Algorithm 3 explores the non-core neighbors of each core vertex , if the similarity between and is unknown, their similarity is computed (lines 24-25). If and are similar, is merged in the cluster represented by (lines 26-27). For the procedure and , they are used to find the root of the tree in the forest and union two sub-trees in the forest. As they are self-explainable, we omit the description. Note the / in line 34 represents the height of tree rooted at .

Example 4.3: Fig. 5 shows the procedure to detect clusters in . Assume that we know that , , , are core vertices, and are similar, but the similarity of , and are unknown. Parents of the four core vertices are initialized as themselves while the others are set as -2.

first processes the core vertices in parallel. To avoid redundant computation, when clustering core vertices, only neighbors with smaller ids than their own ids are considered. At the same moment, for , are similar, then the parent of becomes and for , are similar, then the parent of becomes , which are shown in Fig. 5 (a). After that, detects the clusters related to and as the similarities between some of their neighbors and themself have not been determined yet. For the neighbor of , finds that and have been in the same cluster by procedure , they do not need further process, which is shown in Fig. 5 (b). For , due to the atomic operation, the thread which explores its neighbor executes first. It finds that they are not in the same cluster based on procedure . Therefore, after determining that they are similar by procedure , it changes the parent of to , which is shown in Fig. 5 (c). Then, and are processed by another thread similarly, which is shown in Fig. 5 (d). When the cluster of core vertices , , , and is detected, explores these vertices and sets their corresponding element in the array as the root vertices in the forest. Fig. 5 (e) illustrates this process, in which the of changes to the traced root vertex . At last, assigns the parent of to based on the similarity of core vertex and non-core vertex as shown in Fig. 5 (f).

Classify hub and outlier vertices. At last, classifies vertices that are not in clusters into outliers and hubs, which is shown in Algorithm 4. Specifically, it assigns a warp for each vertex not in a cluster and considers as an outlier (line 2). Then it checks whether has more than one neighbor vertices, and if this condition is satisfies, has the potential to become hubs (line 3). Then, Algorithm 4 uses the shared variable of the threads in a warp to record the cluster that one of its neighbors belongs to and sets it as (line 4). Each thread in the warp maintains an exclusive variable with an initial value of (line 5). If a neighbor of is found in a cluster by a thread, then, is updated to the cluster id of by (lines 7-8). Once another thread finds that the cluster id recorded by is different from the cluster id of it processes, it means at least two neighbors of are in different clusters, therefore, is classified as a hub (lines 9-11).

4.3 Theoretical Analysis

Theorem 4.1: Given a graph and two parameters and , the work of finishes the clustering in , and the span is .

Proof: In Phase 1 (Algorithm 2), the work and span of are and . In Phase 2 (Algorithm 3), is possible to check the similarity of all edges, thus, the work of Phase 2 is as well. For the span, the height of the spanning forest is due to the union-by-size used in line 37 of Algorithm 3 [34]. Thus, the span of Phase 2 is . In Phase 3 (Algorithm 4), it is easy to derive that the work is and . Therefore, the work of is and the span is .

Compared with , Theorem 4.3 shows that reduces the total work and the span during the clustering. Note that in Phase 1, uses the binary-search-based manner the check the similarity, which reduces the span of Phase 1 from to compared with .

5 A New Out-of-Core Algorithm

In the previous section, we focus on improving the clustering performance when the graph can be fit in the GPU memory. However, in real applications, the graph data can be very large and the GPU memory is insufficient to load the whole graph data. A straightforward solution is to use the Unified Virtual Memory (UVM) provided by the GPUs directly. However, this is approach is inefficient as verified in our experiments. On the other hand, in most real graphs, such as social networks and web graphs, the number of edges is much larger than the number of vertices. For example, the largest dataset in SNAP contains 65 million nodes and 1.8 billion edges. It is practical to assume that the vertices of the graph can be loaded in the GPU memory while the edges are stored in the CPU memory.

Following this assumption, we propose an out-of-core GPU algorithm that adopts a divide-and-conquer strategy. Specifically, we first divide the graph into small subgraphs whose size does not exceed the GPU memory size. Then, we conduct the clustering based on the divided subgraphs instead of the original graph. As the divided subgraphs are much smaller than the original graphs, the GPU memory requirement is significantly reduced. Before introducing our algorithm, we first define the local subgraphs as follows:

Definition 5.1: (Edge Extended Subgraph) Given a graph and a set of edges , the edge extended subgraph of consists of the edges and such that incident to at least one edge in .

Lemma 5.1: Given a graph and a set of edge , let denote the edge extended subgraph of , then, for any edge , , where denotes the structural similarity computed based on .

Example 5.1: Fig. 6 shows the edge extended subgraphs of . As and share the same vertex but is not in , should be added into the edge extended subgraph of .

Therefore, we divide into a series of edge extended subgraphs such that , , where , and each edge extended subgraph can be loaded in the GPU memory. Following Lemma 5, we can obtain the structural similarity for all edges in regarding correctly. Moreover, as the vertices can be loaded in the memory, which means we can identify the core vertices by iterating each edge extended subgraphs and maintain the clustering information in the GPU memory accordingly.

Algorithm. Following the above idea, our algorithm GPU-based out-of-core algorithm is shown in Algorithm 5. It first partitions the edges of into a series of disjoint sets and constructs the corresponding edge extended subgraphs that can be fit in the GPU memory based on the GPU memory capacity following Definition 5 by CPU (line 3-12). After that, we initialize , , , and array for each vertex in GPU memory following our assumption. After that, based on Lemma 5, the similarity for each edge can be correctly obtained by the edge extended subgraph alone. Accordingly, the clusters and the role of each vertex can also be determined by the edge extended subgraph locally. Therefore, we just load each edge extended subgraph into the GPU memory sequentially and repeat the three phases by invoking , , and for the in-GPU-memory algorithm (lines 14-21). The correctness of Algorithm 5 can be easily obtained based on Lemma 5 and the correctness of Algorithm 1. The only thing that needs to explain is how to estimate the size of the edge extended subgraph in line 10. In Algorithm 5, represents the current edge extended subgraph (line 1). Whenever a new edge is to be added into (line 3), the edges incident to are computed and stored by . According to the CSR-Enhanced graph layout introduced in Section 4.1, . As adjacent array, Eid array, and degree-oriented edge array consumes bytes together, the similarity array consumes byte, and vertex array consumes (we use 4-byte integer to store a vertex id). can be computed in the same way. Moreover, (2 bytes per element, as each element will not exceed the value of the parameter which is generally not very large), (4 bytes for each element), (1 byte for each element), (4 bytes for each element), and (4 byte for each element) array for each vertex take together. If three GPU memory consumptions are larger than the GPU memory in line 10, we generate a new empty edge extended subgraph in line 11; Otherwise, is added into in line 12.

As verified in our experiment, Algorithm 5 can finish the clustering on a graph with 1.8 billion edges using less than 2GB GPU memory efficiently.

6 Experiments

This section presents our experimental results. The in-memory algorithms are evaluated on a machine with an Intel Xeon 2.4GHz CPU equipped with 128 GB main memory and an Nvidia Tesla V100 16GB GPU, running Ubuntu 16.04LTS. The out-of-core algorithms are evaluated on a machine with Nvidia GTX1050 2GB GPU, running Ubuntu 16.04LTS.

| Datasets | Name | ||||

| Enwiki-2022 | 6,492,490 | 159,047,205 | 24.50 | 91 | |

| IndoChina | 7,414,866 | 194,109,311 | 26.18 | 143 | |

| Hollywood | 2,180,759 | 228,985,632 | 105.00 | 35 | |

| Orkut | 3,072,626 | 234,370,166 | 76.28 | 28 | |

| Tech-P2P | 5,792,297 | 295,659,774 | 51.04 | 173 | |

| UK-2002 | 18,520,486 | 298,113,762 | 16.10 | 201 | |

| EU-2015 | 11,264,052 | 386,915,963 | 34.35 | 230 | |

| Soc-Twitter | 21,297,773 | 530,051,090 | 24.89 | 30 | |

| Twitter-2010 | 41,652,230 | 1,468,365,182 | 35.25 | - | |

| Friendster | 65,608,366 | 1,806,067,135 | 27.53 | - |

Datasets. We evaluate the algorithms on ten real graphs. and are downloaded from the SNAP [17]. and are downloaded from the Network Repository [25]. , , , , and are downloaded from WebGraph [6, 5, 4]. The details are shown in Table II. The first eight datasets are used to evaluate the in-memory algorithms, while the last two datasets cannot fit into the Nvidia Tesla V100 GPU and are used to evaluate the out-of-core algorithms.

Algorithms. We evaluate the following algorithms:

- •

- •

-

•

: Direct out-of-core algorithm, namely, based on the .

- •

We use CUDA 10.1 and GCC 4.8.5 to compile all codes with -O3 option. The time cost of the algorithms is measured as the amount of elapsed wall-clock time during the execution. We set the maximum running time for each test to be 100,000 seconds. If a test does not stop within the time limit, we denote the corresponding running time as . For , we choose with as default. For , we choose with as default.

| Dataset | Phase 1 | Phase 2 | Phase 3 | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Speedup | Speedup | Speedup | |||||||

| 581.32 | 6.35 | 91.55 | 0.80 | 0.022 | 3.64 | 10.60 | 0.23 | 46.08 | |

| 846.88 | 4.31 | 196.49 | 94.50 | 0.025 | 3780.00 | 6.57 | 0.071 | 92.48 | |

| 203.84 | 9.33 | 21.85 | 76.579 | 0.010 | 7657.90 | 5.94 | 0.017 | 349.41 | |

| 108.43 | 6.02 | 18.01 | 1.14 | 0.013 | 87.69 | 8.49 | 0.12 | 70.75 | |

| 998.70 | 13.65 | 73.16 | 4.24 | 0.021 | 201.90 | 10.87 | 1.25 | 8.696 | |

| 315.31 | 6.95 | 45.37 | 65.33 | 0.058 | 1126.38 | 11.96 | 0.16 | 74.75 | |

| 2456.73 | 16.58 | 148.17 | 493.97 | 0.036 | 13721.39 | 13.09 | 0.237 | 55.23 | |

| 3030.11 | 40.04 | 75.68 | 11.39 | 0.064 | 117.97 | 18.20 | 0.30 | 60.67 | |

6.1 Performance Studies on In-memory Algorithms

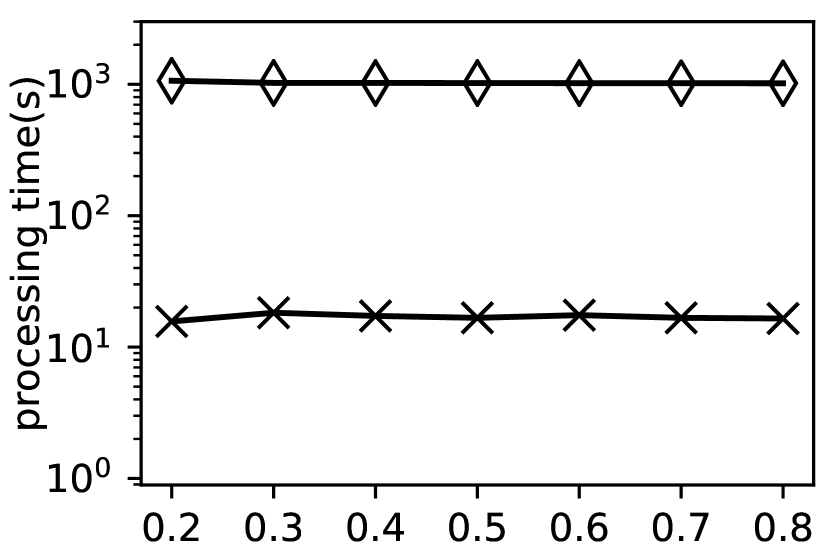

Exp-1: Performance when varying . In this experiment, we evaluate the performance of and by varying . We report the processing time on the first eight datasets in Fig. 7.

Fig. 7 shows that consumes much more time when varying the value of . For example, on , takes 3141.11s to finish the clustering when while only takes 18.93s in the same situation, which achieves 168 times speedup. This is because lots of extra costs are introduced in compared with , which is consistent with the time complexity analysis in Section 3 and Section 4. Moreover, Fig. 7 shows that both the running time of and decrease when the value of increases. For , this is because as increases, the number of core vertices and clusters decreases, which means the time for cluster detection in decreases. Additionally, Exp-3 shows that the cluster detection phase accounts for a great proportion of the total time. Therefore, the running time of decreases as increases. For , as the value of increases, more vertex pairs are dissimilar. Consequently, more unnecessary similarity computation is avoided. Thus, the running time decreases as well.

Exp-2: Performance when varying . In this experiment, we evaluate the performance of and by varying . The results are shown in Fig. 8.

As shown in Fig. 8, is much more efficient than . For example, int the , takes 411.97s to finish the clustering when while only takes 8.866s. The reasons are the same as discussed in Exp-1.

Exp-3: Time consumption of each phase. In this experiment, we compare the time consumption of and in each phase with and . And the results are shown in Table III.

As shown in Table III, achieves significant speedup in all three phases compared with , especially for Phase 2. For example, on dataset , takes 846.88s/94.50s/6.57s in Phase 1/Phase 2/Phase 3, respectively. On the other hand, the consuming time of for these three phases is 4.31s/0.025s/0.071s, which achieves 196.49/3780.00/92.48 times speedup, respectively. The reasons are as follows: for Phase 1, the span of is , while reduces the span to . For Phase 2, has to construct and by and in library in each iteration, which is time-consuming. Moreover, it needs several iterations to finish the whole cluster detection, which is shown in the last column of Table II. On the other hand, for , we construct the spanning forest based on the array directly and the time-consuming retrieval of and is avoided due to the CSR-Enhanced graph layout structure. For phase 3, still needs to retrieve and from and while totally avoid these time-consuming operation.

6.2 Performance Studies on Out-of-Core Algorithms

Exp-5: Performance when varying . In this experiment, we evaluate the performance of and when varying the value of on datasets and . The results are shown in Fig. 9.

Fig. 9 shows that is much more efficient than on these two datasets. For example, on , takes 30886.315s to finish the clustering when while finishes the clustering in 3866.424s. On , cannot finish the clustering when while finishes the clustering in a reasonable time. This is because UVM aims to provide a general mechanism to overcome the GPU memory limitation. However, as the data locality of structure clustering is poor, involves lots of data movements between CPU memory and GPU memory, which is time-consuming. On the other hand, conducts the clustering based on the edge extended subgraph, which not only avoids the time-consuming data movements but also overcomes the GPU memory limitation. Moreover, the running time of and decreases as the value of increases. This is because as the value of , more vertex pairs are dissimilar. Consequently, more unnecessary similarity computation is avoided in these two algorithms.

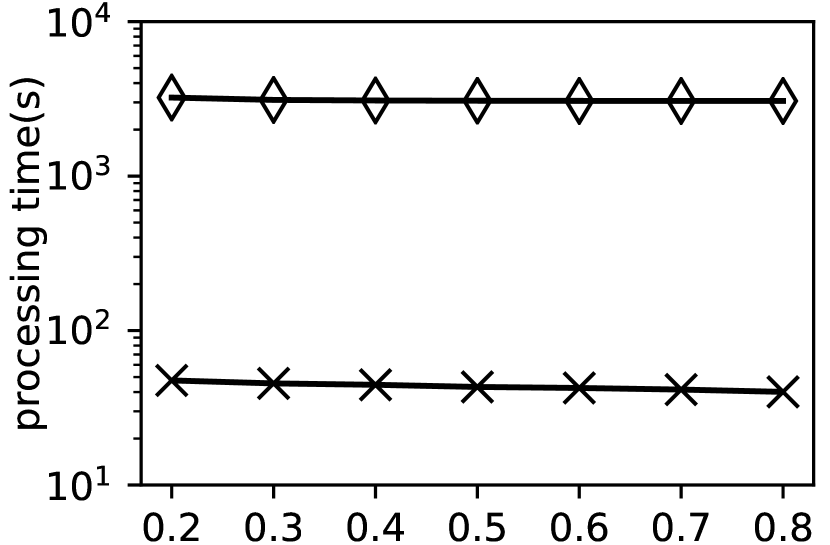

Exp-6: Performance when varying . In this experiment, we evaluate the performance of and when varying the value of on dataset and . The results are shown in Fig. 10.

As shown in Fig. 10, is much more efficient than . For example, on , takes 19037.683s to finish the clustering when while finishes the clustering in 2974.947s. The reasons are the same as discussed in Exp-5.

7 Conclusion

In this paper, we study the GPU-based structural clustering problem. Motivated by the state-of-the-art GPU-based structural clustering algorithms that suffer from inefficiency and GPU memory limitation, we propose new GPU-based structural clustering algorithms. For efficiency issues, we propose a new progressive clustering method tailored for GPUs that not only avoids extra parallelization costs but also fully exploits the computing resources of GPUs. To address the GPU memory limitation issue, we propose a partition-based algorithm for structural clustering that can process large graphs with limited GPU memory. We conduct experiments on ten real graphs and the experimental results demonstrate the efficiency of our proposed algorithm.

References

- [1] C. C. Aggarwal and H. Wang. A survey of clustering algorithms for graph data. In Managing and mining graph data, pages 275–301. Springer, 2010.

- [2] A. Bellogín and J. Parapar. Using graph partitioning techniques for neighbour selection in user-based collaborative filtering. In Proceedings of ACM RecSys, pages 213–216, 2012.

- [3] C. Biemann. Chinese whispers-an efficient graph clustering algorithm and its application to natural language processing problems. In Proceedings of TextGraphs: the first workshop on graph based methods for natural language processing, pages 73–80, 2006.

- [4] P. Boldi, B. Codenotti, M. Santini, and S. Vigna. Ubicrawler: A scalable fully distributed web crawler. Software: Practice & Experience, 34(8):711–726, 2004.

- [5] P. Boldi, M. Rosa, M. Santini, and S. Vigna. Layered label propagation: A multiresolution coordinate-free ordering for compressing social networks. In S. Srinivasan, K. Ramamritham, A. Kumar, M. P. Ravindra, E. Bertino, and R. Kumar, editors, Proceedings of the 20th international conference on World Wide Web, pages 587–596. ACM Press, 2011.

- [6] P. Boldi and S. Vigna. The WebGraph framework I: Compression techniques. In Proc. of the Thirteenth International World Wide Web Conference (WWW 2004), pages 595–601, Manhattan, USA, 2004. ACM Press.

- [7] Chang, Lijun, Zhang, Wenjie, Wei, Yang, Shiyu, Qin, and Lu. pSCAN: Fast and Exact Structural Graph Clustering. IEEE Transactions on Knowledge and Data Engineering, 29(2):387–401, 2017.

- [8] Y. Che, S. Sun, and Q. Luo. Parallelizing pruning-based graph structural clustering. In Proceedings of ICPP, pages 77:1–77:10, 2018.

- [9] T. H. Cormen, C. E. Leiserson, R. L. Rivest, and C. Stein. Introduction to algorithms. MIT press, 2022.

- [10] Y. Ding, M. Chen, Z. Liu, D. Ding, Y. Ye, M. Zhang, R. Kelly, L. Guo, Z. Su, S. C. Harris, et al. atbionet–an integrated network analysis tool for genomics and biomarker discovery. BMC genomics, 13(1):1–12, 2012.

- [11] W. Fan, R. Jin, M. Liu, P. Lu, X. Luo, R. Xu, Q. Yin, W. Yu, and J. Zhou. Application driven graph partitioning. In Proceedings of SIGMOD, pages 1765–1779, 2020.

- [12] P. Gera, H. Kim, P. Sao, H. Kim, and D. Bader. Traversing large graphs on gpus with unified memory. Proceedings of the VLDB Endowment, 13(7):1119–1133, 2020.

- [13] M. Girvan and M. E. Newman. Community structure in social and biological networks. Proceedings of the national academy of sciences, 99(12):7821–7826, 2002.

- [14] R. Guimera and L. A. N. Amaral. Functional cartography of complex metabolic networks. nature, 433(7028):895–900, 2005.

- [15] L. Hu, L. Zou, and Y. Liu. Accelerating triangle counting on gpu. In Proceedings of SIGMOD, pages 736–748, 2021.

- [16] U. Kang and C. Faloutsos. Beyond ’caveman communities’: Hubs and spokes for graph compression and mining. In Proceedings of ICDM, pages 300–309, 2011.

- [17] J. Leskovec and A. Krevl. SNAP Datasets: Stanford large network dataset collection. http://snap.stanford.edu/data, June 2014.

- [18] X. Li, H. Cai, Z. Huang, Y. Yang, and X. Zhou. Social event identification and ranking on flickr. World Wide Web, 18(5):1219–1245, 2015.

- [19] W. Lin, X. Xiao, X. Xie, and X.-L. Li. Network motif discovery: A gpu approach. IEEE transactions on knowledge and data engineering, 29(3):513–528, 2016.

- [20] V. Martha, W. Zhao, and X. Xu. A study on twitter user-follower network: A network based analysis. In Proceedings of ASONAM, pages 1405–1409, 2013.

- [21] V.-S. Martha, Z. Liu, L. Guo, Z. Su, Y. Ye, H. Fang, D. Ding, W. Tong, and X. Xu. Constructing a robust protein-protein interaction network by integrating multiple public databases. In BMC bioinformatics, volume 12, pages 1–10. Springer, 2011.

- [22] L. Meng, L. Yuan, Z. Chen, X. Lin, and S. Yang. Index-based structural clustering on directed graphs. In Proceedings of ICDE, pages 2832–2845, 2022.

- [23] NVIDIA. Cuda thrust library. https://docs.nvidia.com/cuda/thrust/, 2021.

- [24] G. Palla, I. Derényi, I. Farkas, and T. Vicsek. Uncovering the overlapping community structure of complex networks in nature and society. nature, 435(7043):814–818, 2005.

- [25] R. A. Rossi and N. K. Ahmed. Graph repository. http://www.graphrepository.com, 2013.

- [26] B. Ruan, J. Gan, H. Wu, and A. Wirth. Dynamic structural clustering on graphs. In Proceedings of SIGMOD, pages 1491–1503, 2021.

- [27] S. E. Schaeffer. Graph clustering. Computer science review, 1(1):27–64, 2007.

- [28] M. Schinas, S. Papadopoulos, Y. Kompatsiaris, and P. A. Mitkas. Visual event summarization on social media using topic modelling and graph-based ranking algorithms. In Proceedings of ICMR, pages 203–210, 2015.

- [29] M. Schinas, S. Papadopoulos, G. Petkos, Y. Kompatsiaris, and P. A. Mitkas. Multimodal graph-based event detection and summarization in social media streams. In Proceedings of SIGMM, pages 189–192, 2015.

- [30] J. H. Seo and M. H. Kim. pm-scan: an I/O efficient structural clustering algorithm for large-scale graphs. In Proceedings of CIKM, pages 2295–2298, 2017.

- [31] M. Sha, Y. Li, and K.-L. Tan. Self-adaptive graph traversal on gpus. In Proceedings of SIGMOD, pages 1558–1570, 2021.

- [32] X. Shi, Z. Zheng, Y. Zhou, H. Jin, L. He, B. Liu, and Q.-S. Hua. Graph processing on gpus: A survey. ACM Computing Surveys (CSUR), 50(6):1–35, 2018.

- [33] H. Shiokawa, Y. Fujiwara, and M. Onizuka. Scan++: efficient algorithm for finding clusters, hubs and outliers on large-scale graphs. Proceedings of the VLDB Endowment, 8(11):1178–1189, 2015.

- [34] N. Simsiri, K. Tangwongsan, S. Tirthapura, and K.-L. Wu. Work-efficient parallel union-find. Concurrency and Computation: Practice and Experience, 30(4):e4333, 2018.

- [35] D. P. Singh, I. Joshi, and J. Choudhary. Survey of gpu based sorting algorithms. International Journal of Parallel Programming, 46:1017–1034, 2018.

- [36] T. R. Stovall, S. Kockara, and R. Avci. Gpuscan: Gpu-based parallel structural clustering algorithm for networks. IEEE Transactions on Parallel and Distributed Systems, 26(12):3381–3393, 2014.

- [37] T. R. Stovall, S. Kockara, and R. Avci. GPUSCAN: gpu-based parallel structural clustering algorithm for networks. IEEE Transactions on Parallel and Distributed Systems, 26(12):3381–3393, 2015.

- [38] T. Tseng, L. Dhulipala, and J. Shun. Parallel index-based structural graph clustering and its approximation. In Proceedings of SIGMOD, pages 1851–1864, 2021.

- [39] D. Wen, L. Qin, Y. Zhang, L. Chang, and X. Lin. Efficient structural graph clustering: an index-based approach. Proceedings of the VLDB Endowment, 11(3):243–255, 2017.

- [40] X. Xu, N. Yuruk, Z. Feng, and T. A. Schweiger. SCAN: a structural clustering algorithm for networks. In Proceedings of SIGKDD, pages 824–833, 2007.

- [41] C. Ye, Y. Li, B. He, Z. Li, and J. Sun. Gpu-accelerated graph label propagation for real-time fraud detection. In Proceedings of the SIGMOD, pages 2348–2356, 2021.

- [42] H. Yin, A. R. Benson, J. Leskovec, and D. F. Gleich. Local higher-order graph clustering. In Proceedings of SIGKDD, pages 555–564, 2017.

- [43] W. Zhao, G. Chen, and X. Xu. Anyscan: An efficient anytime framework with active learning for large-scale network clustering. In Proceedings of ICDM, pages 665–674. IEEE Computer Society, 2017.

- [44] Q. Zhou and J. Wang. Sparkscan: a structure similarity clustering algorithm on spark. In National Conference on Big Data Technology and Applications, pages 163–177. Springer, 2015.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/b8b446eb-8d8a-493b-8450-4733ad6adb91/longyuan.jpg) |

Long Yuan is currently a professor in School of Computer Science and Engineering, Nanjing University of Science and Technology, China. He received his PhD degree from the University of New South Wales, Australia, M.S. degree and B.S. degree both from Sichuan University, China. His research interests include graph data management and analysis. He has published papers in conferences and journals including VLDB, ICDE, WWW, The VLDB Journal, and TKDE. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/b8b446eb-8d8a-493b-8450-4733ad6adb91/zeyu.jpg) |

Zeyu Zhou Zeyu Zhou is currently a master student in the Department of Computer Science, Nanjing University of Science and Technology. His research interests include graph data management and analysis. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/b8b446eb-8d8a-493b-8450-4733ad6adb91/xueminlin.jpg) |

Xuemin Lin received the BSc degree in applied math from Fudan University, in 1984, and the PhD degree in computer science from the University of Queensland, in 1992. He is a professor with the School of Computer Science and Engineering, University of New South Wales. His current research interests include approximate query processing, spatial data analysis, and graph visualization. He is a fellow of the IEEE. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/b8b446eb-8d8a-493b-8450-4733ad6adb91/zichen.jpg) |

Zi Chen received the PhD degree from the Software Engineering Institute, East China Normal University, in 2021. She is currently a postdoctor in ECNU. Her research interest is big graph data query and analysis, including cohesive subgraph search on large-scale social networks and path planning on road networks. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/b8b446eb-8d8a-493b-8450-4733ad6adb91/xiangzhao.jpg) |

Xiang Zhao received the PhD degree from The University of New South Wales, Australia, in 2014. He is currently a professor with the National University of Defense Technology, China. His research interests include graph datamanagement and mining. He is a member of the IEEE. He has published papers in conferences and journals including VLDB, ICDE, WWW, The VLDB Journal, and TKDE. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/b8b446eb-8d8a-493b-8450-4733ad6adb91/fanzhang.jpg) |

Fan Zhang is a Professor at Guangzhou University. He was a research associate in the School of Computer Science and Engineering, University of New South Wales, from 2017 to 2019. He received the BEng degree from Zhejiang University in 2014, and the PhD from University of Technology Sydney in 2017. His research interests include graph algorithms and social networks. Since 2017, he has published more than 20 papers in top venues, e.g., SIGMOD, PVLDB, ICDE, IJCAI, AAAI, TKDE and VLDB Journal. |