GPT-4V-AD: Exploring Grounding Potential of

VQA-oriented GPT-4V for Zero-shot Anomaly Detection

Abstract

Large Multimodal Model (LMM) GPT-4V(ision) endows GPT-4 with visual grounding capabilities, making it possible to handle certain tasks through the Visual Question Answering (VQA) paradigm. This paper explores the potential of VQA-oriented GPT-4V in the recently popular visual Anomaly Detection (AD) and is the first to conduct qualitative and quantitative evaluations on the popular MVTec AD and VisA datasets. Considering that this task requires both image-/pixel-level evaluations, the proposed GPT-4V-AD framework contains three components: 1) Granular Region Division, 2) Prompt Designing, 3) Text2Segmentation for easy quantitative evaluation, and have made some different attempts for comparative analysis. The results show that GPT-4V can achieve certain results in the zero-shot AD task through a VQA paradigm, such as achieving image-level 77.1/88.0 and pixel-level 68.0/76.6 AU-ROCs on MVTec AD and VisA datasets, respectively. However, its performance still has a certain gap compared to the state-of-the-art zero-shot method, e.g., WinCLIP and CLIP-AD, and further researches are needed. This study provides a baseline reference for the research of VQA-oriented LMM in the zero-shot AD task, and we also post several possible future works. Code is available at https://github.com/zhangzjn/GPT-4V-AD.

1 Introduction

GPT-4V(ision) OpenAI (2023b) is a recent enhancement of GPT-4 OpenAI (2023a) released by OpenAI. It allows users to input additional images to extend the pure language model, implementing user interaction through a Visual Question Answering (VQA) manner. Recent works Yang et al. (2023c, a); Wu et al. (2023); Shi et al. (2023) have explored its potential in various settings, demonstrating its powerful generalization capabilities. On the other hand, due to the growing demand for industrial applications and the development of datasets Bergmann et al. (2019); Zou et al. (2022); Wang et al. (2024), Anomaly Detection (AD) is receiving increasing attention from researchers and practitioners Roth et al. (2022); Deng and Li (2022); You et al. (2022); Liang et al. (2023); Liu et al. (2023b); Gu et al. (2023b). The recent zero-shot AD is first proposed in WinCLIP Jeong et al. (2023), a setting dedicated to detecting image-level and pixel-level anomalies in a given image without any positive or negative samples. This setting addresses the pain point of difficulty in obtaining anomaly samples in industrial applications, thus having high practical value. Current approaches Jeong et al. (2023); Chen et al. (2023a); Cao et al. (2023a); Gu et al. (2023a); Chen et al. (2023b) distinguishes anomalies based on a large language model, e.g., CLIP Radford et al. (2021a), which uses pre-trained vision language alignment to achieve anomaly detection. This framework often requires careful design. Unlike the aforementioned approach, this report explores a more general VQA paradigm for the zero-shot anomaly detection setting, hoping to bring new ideas to the solution of the zero-shot AD setting.

Specifically, this technical report explores the results of VQA-oriented LMM (using GPT-4V in this paper) on the zero-shot AD setting for the first time and proposes a general VQA-based AD framework, which includes 1) Granular Region Division (Sec. 3.1), 2)Prompt Designing (Sec. 3.2), and 3) Text2Segmentation (Sec. 3.3). We conduct quantitative and qualitative experiments on the popular MVTec AD and VisA datasets (c.f., Sec. 4.2, Sec. 4.3, Sec. 4.4). The results show that GPT-4V has certain effects on the zero-shot AD setting, and even surpasses the zero-shot SoTA method in some metrics, such as achieving 88.0 AU-ROC on VisA, surpassing SoTA CLIP-AD by +6.8. However, given that the anomaly detection setting requires pixel-level grounding capabilities, the current performance of GPT-4V still needs to be further improved. We hope this technical report can promote more zero-shot AD researches, especially the VQA-oriented paradigm.

2 Related Work

Visual Anomaly Detection. Visual anomaly detection, classified by setting, encompasses full-shot unsupervised and zero-/few-shot approaches.

Among these, full-shot unsupervised methods, the most widely recognized, can be further divided into three categories: Synthetic-based Zavrtanik et al. (2021); Li et al. (2021); Schlüter et al. (2022); Hu et al. (2024), Feature Embedding-based Roth et al. (2022); Defard et al. (2021); Bergmann et al. (2020); Cohen and Hoshen (2020); Rudolph et al. (2021), and Reconstruction-based Deng and Li (2022); Zhao (2023); Zhang et al. (2024); He et al. (2024). Synthetic-based methods enhance anomaly localization accuracy by artificially generating anomalies during training. Feature embedding techniques map normal samples into a compact feature space, where they are compared with test inputs in feature dimensions. Reconstruction-based methods learn the distribution of normal samples during training and reconstruct anomaly regions as normal in the testing phase, facilitating anomaly detection through comparison. All these methods necessitate extensive amounts of normal data for training and learning the typical sample distribution.

Recent advancements have enabled visual anomaly detection with minimal or zero sample data Xie et al. (2023); Radford et al. (2021b); Cao et al. (2023b). PatchCore Roth et al. (2022), for instance, constructs a subset of feature cores using a limited number of normal samples for comparison purposes. Notably, several recent studies Chen et al. (2023a, b); Jeong et al. (2023); Zhou et al. (2023) on few-shot and zero-shot anomaly detection using the CLIP Radford et al. (2021a) model have garnered significant attention, highlighting the potential of CLIP’s visual-linguistic model. AnomalyGPT Gu et al. (2023a), leveraging a large-scale visual-linguistic model based on GPT, has demonstrated promising results in tasks with limited samples. On the other hand, GPT-4V Cao et al. (2023c) conducts simple text inference on zero- and single-sample scenarios using prompts, but lacks quantitative evaluation metrics.

Large Vision-Language Models. Recent advances have seen the proliferation of Large Visual-Language Models (LVLMs) Radford et al. (2021b), with notable examples such as BLIP-2 Li et al. (2023), which employs Q-Former for efficient multimodal alignment. InstructBLIP Dai et al. (2024) consists of a visual encoder, Q-Former, and a large language model (LLM). GPT-4 Cao et al. (2023c), the first model to integrate vision and language, showcases exceptional performance in both modalities, built on a Transformer architecture. MiniGPT-4 Zhu et al. (2023) is composed of pre-trained components like Vicuna Chiang et al. (2023) from LLMs, ViT-G Dosovitskiy et al. (2021) for vision, and Q-Former. LLaVA Liu et al. (2023a) integrates LLaMA Touvron et al. (2023) from pre-trained LLMs with the CLIP Radford et al. (2021a) visual encoder, demonstrating strong capabilities in visual-language understanding and inference.

However, these LVLMs primarily generate language text outputs, necessitating additional modules for image localization tasks. This paper investigates the potential of GPT-4V in zero-shot visual anomaly detection and provides quantitative analysis, including anomaly localization metrics.

3 Methodology

As a VQA model, LMM GPT-4V excels at comprehending input images at the semantic level but lacks pixel-level location perception. Anomaly detection tasks require pixel-level segmentation, so this section explores how to unleash the potential of GPT-4V’s grounded vision-language capabilities in the AD task. Specifically, we propose a GPT-4V-AD framework that includes three components: Granular Region Division, Prompt Designing, and Text2Segmentation, as shown in Fig. 1.

3.1 Granular Region Division

We first conduct a naive toy experiment. As shown in Fig. 2, when the original image and prompt are directly fed into GPT-4V, the model can generate judgments about defects, but cannot output accurate location results. We believe this is because GPT-4V can align text and object content at the semantic level, but is not adept at aligning text to pixel level. Therefore, we attempt to transform the AD problem based on VQA into a text and image region grounding problem, which is more suitable for GPT-4V(ision). We assume that the anomalous regions have some form of connectivity under certain relationship constraints, such as semantics and local structure. The regions associated with the anomalous area should ideally closely adhere to the anomaly itself, ensuring that the segmentation results have a maximum upper limit.

Region Generation. Fig. 3 shows three region generation schemes we attempted: 1) Grid Sampling that uniformly samples different areas. 2) Semantic level region division generated by SAM Kirillov et al. (2023). 3) Super-pixel manner that considers more similarity of layout structure. The qualitative results indicate that the grid manner does not pay enough attention to fine granularity, and the generated regions cannot cover the anomaly area well (c.f., first row of Fig. 3); SAM may overkill by focusing on additional areas with obvious semantics (c.f., second row on the left), or discard areas with unclear semantics (c.f., second row on the right); Super-pixel can relatively stably generate divisions with a high overlap with anomalous ground truth. In addition, the three methods respectively achieve image-level 58.5/71.2/75.4 and 63.7/74.6/77.3 pixel-level AU-ROC results (limited by the number of GPT-4V accesses, experiments are only conducted on object hazelnut and texture leather). Results indicate that grid sampling, which has no structural prior, performs the worst, while the results of super-pixel are the best. Therefore, we recommend super-pixel as the default region generation module for the AD task.

Region Labeling. Similar to recent work Yang et al. (2023b), the number is used as region labeling, but we outline each region in white without using a color filling. This is because anomaly detection is very sensitive to defect details, and this manner can minimize the impact of excessively small defect areas as illustrated in Fig. 1.

3.2 Prompt Designing

Appropriate prompts are crucial for GPT-4V. Cao et al. (2023c) experimented with various prompt formats, evaluating both zero-shot and one-shot approaches for enhanced performance. For any test image of the popular AD datasets Bergmann et al. (2019); Zou et al. (2022), we can obtain the image category. Thus, we design a general prompt description for all categories and then inject the category information to it, i.e., in Fig. 1. For example, ’This is an image of hazelnut. The image has different region divisions, each distinguished by white edges and each with a unique numerical identifier within the region, starting from 1. Each region may exhibit anomalies of unknown types, and anomaly scores range from 0 to 1, with higher values indicating a higher probability of an anomaly. Please output the anomaly scores for the regions with anomalies. Provide the answer in the following format: “region 1: 0.9; region 3: 0.7.". Ignore the region that does not contain anomalies.’

3.3 Text2Segmentation

Through structural output , we can easily obtain the final anomaly segmentation result through regular matching combined with preprocessed regions . As illustrated in Fig. 1, the results corresponding to each region of are populated into the corresponding areas of . Regions with higher anomaly scores are depicted in progressively deeper shades of red, while other areas remain black, indicating no detected anomalies. The resulting constitutes the desired anomaly localization score map, which can be utilized for subsequent calculations and evaluations of quantitative metrics.

4 Experiments

4.1 Setup for Zero-shot AD

Task Setting. This investigation discusses the zero-shot AD task recently proposed in WinCLIP Jeong et al. (2023), which aims to detect image-level and pixel-level anomalies without having seen samples of the anomalous categories. This setting is highly valuable for practical applications, as in many cases, anomalous samples are difficult to obtain or are extremely limited in quantity. Also, due to security reasons, the data may not be transferred externally. This paper explores the potential of GPT-4V, which is based on the VQA paradigm, in performing this task.

Dataset. We evaluate GPT-4V(ision) with SoTAs on popular MVTec AD Bergmann et al. (2019) and VisA Zou et al. (2022) datasets for both anomaly classification and segmentation. In detail, MVTec AD contains 15 products in 2 types (i.e., object and texture) with 3,629 normal images for training and 467/1,258 normal/anomaly images for testing (5,354 images in total). VisA contains 12 objects in 3 types (i.e., single instance, multiple instance, and complex structure) with 8,659 normal images for training and 962/1,200 normal/anomaly images for testing (10,821 images in total).

The top and middle parts respectively show the single-category results of GPT-4V on texture and object. The bottom part shows the average results, as well as a comparison with recent SoTA zero-shot AD methods. The attempted VQA-oriented AD paradigm has achieved considerable results, but there is still a certain gap compared to the CLIP-based contrastive framework. Category Image-level Pixel-level AU-ROC AP -max AU-ROC AP -max AU-PRO Texture Carpet 64.9 62.9 73.0 69.9 4.9 15.6 24.0 Grid 53.7 61.9 72.2 60.7 1.6 5.0 28.6 Leather 69.3 62.8 70.3 81.3 5.5 12.8 56.8 Tile 94.1 93.8 88.8 71.7 17.3 30.0 28.5 Wood 93.2 90.9 90.3 67.8 5.5 18.8 35.8 Object Bottle 75.8 83.2 79.9 56.2 11.3 20.6 24.4 Cable 77.9 77.7 71.7 54.6 4.6 8.1 8.9 Capsule 55.0 60.3 68.8 63.5 1.5 2.6 38.9 Hazelnut 81.4 81.4 84.2 73.3 10.8 24.4 50.2 Metal Nut 96.2 94.9 89.7 52.6 7.2 13.3 22.7 Pill 97.0 34.7 66.4 83.5 12.9 29.9 30.9 Screw 99.0 38.0 66.1 74.4 1.5 2.1 29.2 Toothbrush 75.2 75.5 67.4 88.1 2.5 13.1 60.7 Transistor 70.6 67.8 71.9 56.5 7.4 14.9 10.2 Zipper 53.4 62.0 66.4 65.9 1.9 8.3 20.7 Zero-shot SoTAs Average (GPT-4V-AD) 77.1 69.9 75.1 68.0 6.4 14.6 31.4 WinCLIP 91.8 96.5 92.9 85.1 - 31.7 64.6 SAA 44.8 73.8 84.3 67.7 15.2 23.8 31.9 SAA+ 63.1 81.4 87.0 73.2 28.8 37.8 42.8 CLIP-AD 89.9 95.5 91.1 88.7 28.5 35.3 89.9 CLIP-AD+ 90.8 95.4 91.4 91.2 39.4 41.9 85.6

Metric. Following prior works Jeong et al. (2023); Chen et al. (2023a), we use threshold-independent sorting metrics: 1) mean Area Under the Receiver Operating Curve (AU-ROC), 2) mean Average Precision Zavrtanik et al. (2021) (AP), and 3) mean -score at optimal threshold Zou et al. (2022) (-max) for both image-level and pixel-level evaluations. And 4) mean Area Under the Per-Region-Overlap Bergmann et al. (2020) (AU-PRO) is also employed.

Implementation Details. The input image resolution is set to 768768 to maintain consistency with GPT-4V’s input. In the region divisions, areas smaller than 600 or larger than 120K are filtered out. The edge of the region is outlined with a 1-pixel border, and the numerical labeling is placed within the mask and as centrally as possible within the entire region. For SAM Kirillov et al. (2023), we use ViT-H Dosovitskiy et al. (2021) as the region division backbone, and SLIC Achanta et al. (2012) is chosen as the super-pixel approach with 60 segments and 20 compactness.

4.2 Quantitative Results on MVTec AD

We evaluate the zero-shot generalization ability of GPT-4V(ision) on the MVTec AD dataset Bergmann et al. (2019). As shown in Tab. 1, the VQA-oriented framework also has category bias, i.e., it cannot maintain consistent performance across different categories, which is also reflected in CLIP-based contrastive zero-shot methods. The bottom of Tab. 1 shows a comparison with the results of the recent zero-shot methods Jeong et al. (2023); Cao et al. (2023a); Chen et al. (2023b). It can be seen that the VQA-oriented method has similar effects to the most recent SAA Cao et al. (2023a), but there is still a certain gap compared to the SoTA results, which still needs further research.

4.3 Quantitative Results on VisA

The top three parts shows the results of GPT-4V on different categories. The bottom part shows the average results, as well as a comparison with recent SoTA zero-shot AD results. The attempted VQA-oriented AD paradigm has achieved highly competitive results on some metrics, but overall, there is still a certain gap compared to the CLIP-based contrastive framework. Category Image-level Pixel-level AU-ROC AP -max AU-ROC AP -max AU-PRO Complex Structure PCB1 100. 37.7 65.8 70.6 1.3 1.0 8.0 PCB2 100. 37.0 65.8 67.2 1.5 1.8 6.2 PCB3 92.7 85.8 91.3 54.7 0.6 1.2 0.3 PCB4 73.3 71.9 77.6 96.7 10.5 12.3 78.3 Multiple Instances Macaroni1 95.9 94.3 89.8 98.7 1.8 2.8 34.2 Macaroni2 69.2 44.4 66.3 76.6 1.0 0.6 41.3 Capsules 87.2 38.2 67.2 90.5 0.9 1.8 36.8 Candle 99.2 100 100 54.8 1.1 0.7 30.8 Single Instance Cashew 54.0 56.0 67.2 57.0 4.3 12.8 6.3 Chewing Gum 85.4 85.1 76.2 74.8 12.1 31.1 11.9 Fryum 99.2 99.5 99.9 81.4 15.9 23.2 28.7 Pipe Fryum 99.5 99.1 100. 96.0 0.1 0.9 71 Zero-shot SoTAs Average (GPT-4V-AD) 88.0 70.7 80.6 76.6 4.3 7.5 29.5 WinCLIP 78.1 81.2 79.0 79.6 - 14.8 56.8 SAA 48.5 60.3 73.1 83.7 5.5 12.8 41.9 SAA+ 71.1 77.3 76.2 74.0 22.4 27.1 36.8 CLIP-AD 81.2 83.7 80.0 84.1 9.6 16.0 63.4 CLIP-AD+ 81.1 85.1 80.9 94.8 20.3 26.5 85.3

We further evaluate the performance of GPT-4V(ision) on the popular VisA dataset Zou et al. (2022), which contains more small defects and is more challenging. Surprisingly, unlike the MVTec AD results, the proposed VQA paradigm performs significantly better on this dataset and even surpasses the SoTA method on some metrics, such as achieving an image-level AU-ROC of 88.0 that surpasses SoTA CLIP-AD by +6.8.

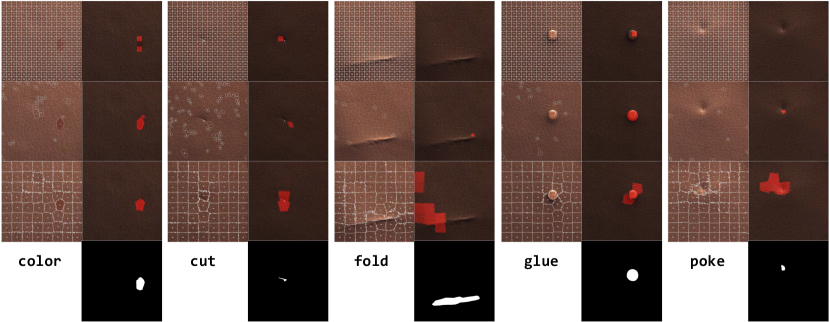

4.4 Qualitative results

Fig. 4 shows the qualitative segmentation results. Even based on the intuitive VQA approach, general-purpose GPT-4V can still obtain segmentation results closer to the ground truth of the anomaly region, demonstrating its powerful visual grounding ability.

4.5 Analyses and Visualization

1) Ablation study on region division manner. This paper adopts three Granular Region Division methods, i.e., grid, SAM Kirillov et al. (2023), and super-pixel Achanta et al. (2012). Fig. 5 and Fig. 6 respectively show the qualitative results of the three methods on object hazelnut and texture leather. SAM tends to locate more semantically biased areas, ignoring some weak semantic areas with unclear edges, which has a good effect on defects with obvious semantic classification, e.g., crack in the hazelnut and glue in the leather. However, it may misjudge areas that are too semantically obvious (c.f., bottom of the hazelnut), and miss minor defects (c.f., fold defect in leather). In contrast, super-pixel can pay attention to every area in the image and provide better division results for partial structures than the grid manner.

2) Results with Extra Reference Images. The few-shot setting introduces additional images into the model to improve its performance. Therefore, we further input two extra normal images as reference images into the model. The experimental results in Fig. 7 show that the current version of GPT-4V cannot effectively utilize the additional reference images in the anomaly detection task, but often gets disturbed and cannot output results normally.

3) Repeatability Analysis. We conduct repeated experiments on the consistency of GPT-4V’s results. As shown in Fig. 8, when we use the same image and prompt inputs, the output anomaly regions will have slight differences, including both region number and confidence score.

5 Conclusion and Future Works

This paper explores the potential of VQA-oriented GPT-4V(ision) in the zero-shot AD task and proposes adapted image and prompt processing methods for quantitative and qualitative evaluation. The results indicate that it has a certain effectiveness on popular AD datasets. Nevertheless, there is still room for further improvement in AD tasks. Moreover, although GPT-4V has achieved epoch-making improvements in human-machine interaction, how to better apply this capability to pixel-level fine-grained tasks remains to be further studied, and the issue of high inference cost needs to be addressed.

Limitations and Future Works. We have summarized some of the current challenges and future works:

1) Due to the limitation on the number of accesses, more suitable image preprocessing methods and more complex prompt designs can be attempted to fully evaluate this task.

2) AD datasets are generally collected from specific scenarios, such as industry, and GPT-4V may have less data from these scenarios in its training set, which could lead to poor generalization performance. Therefore, specific fine-tuning for AD tasks can be studied.

3) This paper only explores the experimental results of VQA-based pure LMM (Large Multi-modal Models), and combining it with current zero-shot AD methods may further improve the model’s performance.

4) Prior learning of few-shot normal/anomalous samples should help GPT-4V better understand defects and grounding, which researchers can further explore.

5) Using GPT-4V for semi-automatic data annotation can reduce the cost of manual annotation.

6) The labeling scheme chosen may impact overly small defects, leading to overkill or missed detection. It would be worthwhile to explore more reasonable alternative solutions.

7) The uniqueness of the output from the current paradigm is relatively poor, and there may even be significant gaps among different tests. This paper only provides a preliminary report, and further experiments are warranted for more stable model testing in the future.

References

- Achanta et al. [2012] Radhakrishna Achanta, Appu Shaji, Kevin Smith, Aurelien Lucchi, Pascal Fua, and Sabine Süsstrunk. Slic superpixels compared to state-of-the-art superpixel methods. IEEE transactions on pattern analysis and machine intelligence, 34(11):2274–2282, 2012.

- Bergmann et al. [2019] Paul Bergmann, Michael Fauser, David Sattlegger, and Carsten Steger. Mvtec ad–a comprehensive real-world dataset for unsupervised anomaly detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 9592–9600, 2019.

- Bergmann et al. [2020] Paul Bergmann, Michael Fauser, David Sattlegger, and Carsten Steger. Uninformed students: Student-teacher anomaly detection with discriminative latent embeddings. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 4183–4192, 2020.

- Cao et al. [2023a] Yunkang Cao, Xiaohao Xu, Chen Sun, Yuqi Cheng, Zongwei Du, Liang Gao, and Weiming Shen. Segment any anomaly without training via hybrid prompt regularization. arXiv preprint arXiv:2305.10724, 2023.

- Cao et al. [2023b] Yunkang Cao, Xiaohao Xu, Chen Sun, Yuqi Cheng, Zongwei Du, Liang Gao, and Weiming Shen. Segment any anomaly without training via hybrid prompt regularization. arXiv preprint arXiv:2305.10724, 2023.

- Cao et al. [2023c] Yunkang Cao, Xiaohao Xu, Chen Sun, Xiaonan Huang, and Weiming Shen. Towards generic anomaly detection and understanding: Large-scale visual-linguistic model (gpt-4v) takes the lead. arXiv preprint arXiv:2311.02782, 2023.

- Chen et al. [2023a] Xuhai Chen, Yue Han, and Jiangning Zhang. A zero-/few-shot anomaly classification and segmentation method for cvpr 2023 vand workshop challenge tracks 1&2: 1st place on zero-shot ad and 4th place on few-shot ad. arXiv preprint arXiv:2305.17382, 2023.

- Chen et al. [2023b] Xuhai Chen, Jiangning Zhang, Guanzhong Tian, Haoyang He, Wuhao Zhang, Yabiao Wang, Chengjie Wang, Yunsheng Wu, and Yong Liu. Clip-ad: A language-guided staged dual-path model for zero-shot anomaly detection. arXiv preprint arXiv:2311.00453, 2023.

- Chiang et al. [2023] Wei-Lin Chiang, Zhuohan Li, Zi Lin, Ying Sheng, Zhanghao Wu, Hao Zhang, Lianmin Zheng, Siyuan Zhuang, Yonghao Zhuang, Joseph E Gonzalez, et al. Vicuna: An open-source chatbot impressing gpt-4 with 90%* chatgpt quality. See https://vicuna. lmsys. org (accessed 14 April 2023), 2(3):6, 2023.

- Cohen and Hoshen [2020] Niv Cohen and Yedid Hoshen. Sub-image anomaly detection with deep pyramid correspondences. arXiv preprint arXiv:2005.02357, 2020.

- Dai et al. [2024] Wenliang Dai, Junnan Li, Dongxu Li, Anthony Meng Huat Tiong, Junqi Zhao, Weisheng Wang, Boyang Li, Pascale N Fung, and Steven Hoi. Instructblip: Towards general-purpose vision-language models with instruction tuning. Advances in Neural Information Processing Systems, 36, 2024.

- Defard et al. [2021] Thomas Defard, Aleksandr Setkov, Angelique Loesch, and Romaric Audigier. Padim: a patch distribution modeling framework for anomaly detection and localization. In International Conference on Pattern Recognition, pages 475–489. Springer, 2021.

- Deng and Li [2022] Hanqiu Deng and Xingyu Li. Anomaly detection via reverse distillation from one-class embedding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9737–9746, 2022.

- Dosovitskiy et al. [2021] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, and Neil Houlsby. An image is worth 16x16 words: Transformers for image recognition at scale. In International Conference on Learning Representations, 2021.

- Gu et al. [2023a] Zhaopeng Gu, Bingke Zhu, Guibo Zhu, Yingying Chen, Ming Tang, and Jinqiao Wang. Anomalygpt: Detecting industrial anomalies using large vision-language models. arXiv preprint arXiv:2308.15366, 2023.

- Gu et al. [2023b] Zhihao Gu, Liang Liu, Xu Chen, Ran Yi, Jiangning Zhang, Yabiao Wang, Chengjie Wang, Annan Shu, Guannan Jiang, and Lizhuang Ma. Remembering normality: Memory-guided knowledge distillation for unsupervised anomaly detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 16401–16409, 2023.

- He et al. [2024] Haoyang He, Jiangning Zhang, Hongxu Chen, Xuhai Chen, Zhishan Li, Xu Chen, Yabiao Wang, Chengjie Wang, and Lei Xie. A diffusion-based framework for multi-class anomaly detection. In AAAI, 2024.

- Hu et al. [2024] Teng Hu, Jiangning Zhang, Ran Yi, Yuzhen Du, Xu Chen, Liang Liu, Yabiao Wang, and Chengjie Wang. Anomalydiffusion: Few-shot anomaly image generation with diffusion model. In AAAI, 2024.

- Jeong et al. [2023] Jongheon Jeong, Yang Zou, Taewan Kim, Dongqing Zhang, Avinash Ravichandran, and Onkar Dabeer. Winclip: Zero-/few-shot anomaly classification and segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 19606–19616, 2023.

- Kirillov et al. [2023] Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C Berg, Wan-Yen Lo, et al. Segment anything. arXiv preprint arXiv:2304.02643, 2023.

- Li et al. [2021] Chun-Liang Li, Kihyuk Sohn, Jinsung Yoon, and Tomas Pfister. Cutpaste: Self-supervised learning for anomaly detection and localization. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pages 9664–9674, 2021.

- Li et al. [2023] Junnan Li, Dongxu Li, Silvio Savarese, and Steven Hoi. Blip-2: Bootstrapping language-image pre-training with frozen image encoders and large language models. In International conference on machine learning, pages 19730–19742. PMLR, 2023.

- Liang et al. [2023] Yufei Liang, Jiangning Zhang, Shiwei Zhao, Runze Wu, Yong Liu, and Shuwen Pan. Omni-frequency channel-selection representations for unsupervised anomaly detection. IEEE Transactions on Image Processing, 2023.

- Liu et al. [2023a] Haotian Liu, Chunyuan Li, Qingyang Wu, and Yong Jae Lee. Visual instruction tuning, 2023.

- Liu et al. [2023b] Zhikang Liu, Yiming Zhou, Yuansheng Xu, and Zilei Wang. Simplenet: A simple network for image anomaly detection and localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 20402–20411, 2023.

- OpenAI [2023a] OpenAI. Gpt-4 research, 2023. Accessed: 2023-11-05.

- OpenAI [2023b] OpenAI. Gpt-4v system card, 2023. Accessed: 2023-11-05.

- Radford et al. [2021a] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In International conference on machine learning, pages 8748–8763. PMLR, 2021.

- Radford et al. [2021b] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. Learning transferable visual models from natural language supervision. In International conference on machine learning, pages 8748–8763. PMLR, 2021.

- Roth et al. [2022] Karsten Roth, Latha Pemula, Joaquin Zepeda, Bernhard Schölkopf, Thomas Brox, and Peter Gehler. Towards total recall in industrial anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 14318–14328, 2022.

- Rudolph et al. [2021] Marco Rudolph, Bastian Wandt, and Bodo Rosenhahn. Same same but differnet: Semi-supervised defect detection with normalizing flows. In Proceedings of the IEEE/CVF winter conference on applications of computer vision, pages 1907–1916, 2021.

- Schlüter et al. [2022] Hannah M Schlüter, Jeremy Tan, Benjamin Hou, and Bernhard Kainz. Natural synthetic anomalies for self-supervised anomaly detection and localization. In European Conference on Computer Vision, pages 474–489. Springer, 2022.

- Shi et al. [2023] Yongxin Shi, Dezhi Peng, Wenhui Liao, Zening Lin, Xinhong Chen, Chongyu Liu, Yuyi Zhang, and Lianwen Jin. Exploring ocr capabilities of gpt-4v (ision): A quantitative and in-depth evaluation. arXiv preprint arXiv:2310.16809, 2023.

- Touvron et al. [2023] Hugo Touvron, Thibaut Lavril, Gautier Izacard, Xavier Martinet, Marie-Anne Lachaux, Timothée Lacroix, Baptiste Rozière, Naman Goyal, Eric Hambro, Faisal Azhar, et al. Llama: Open and efficient foundation language models. arXiv preprint arXiv:2302.13971, 2023.

- Wang et al. [2024] Chengjie Wang, Wenbing Zhu, Bin-Bin Gao, Zhenye Gan, Jianning Zhang, Zhihao Gu, Shuguang Qian, Mingang Chen, and Lizhuang Ma. Real-iad: A real-world multi-view dataset for benchmarking versatile industrial anomaly detection. In CVPR, 2024.

- Wu et al. [2023] Yang Wu, Shilong Wang, Hao Yang, Tian Zheng, Hongbo Zhang, Yanyan Zhao, and Bing Qin. An early evaluation of gpt-4v (ision). arXiv preprint arXiv:2310.16534, 2023.

- Xie et al. [2023] Guoyang Xie, Jinbao Wang, Jiaqi Liu, Yaochu Jin, and Feng Zheng. Pushing the limits of fewshot anomaly detection in industry vision: Graphcore. In The Eleventh International Conference on Learning Representations, 2023.

- Yang et al. [2023a] Jianwei Yang, Hao Zhang, Feng Li, Xueyan Zou, Chunyuan Li, and Jianfeng Gao. Set-of-mark prompting unleashes extraordinary visual grounding in gpt-4v. arXiv preprint arXiv:2310.11441, 2023.

- Yang et al. [2023b] Jianwei Yang, Hao Zhang, Feng Li, Xueyan Zou, Chunyuan Li, and Jianfeng Gao. Set-of-mark prompting unleashes extraordinary visual grounding in gpt-4v. arXiv preprint arXiv:2310.11441, 2023.

- Yang et al. [2023c] Zhengyuan Yang, Linjie Li, Kevin Lin, Jianfeng Wang, Chung-Ching Lin, Zicheng Liu, and Lijuan Wang. The dawn of lmms: Preliminary explorations with gpt-4v (ision). arXiv preprint arXiv:2309.17421, 9, 2023.

- You et al. [2022] Zhiyuan You, Lei Cui, Yujun Shen, Kai Yang, Xin Lu, Yu Zheng, and Xinyi Le. A unified model for multi-class anomaly detection. Advances in Neural Information Processing Systems, 35:4571–4584, 2022.

- Zavrtanik et al. [2021] Vitjan Zavrtanik, Matej Kristan, and Danijel Skočaj. Draem-a discriminatively trained reconstruction embedding for surface anomaly detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 8330–8339, 2021.

- Zhang et al. [2024] Jiangning Zhang, Xiangtai Li, Guanzhong Tian, Zhucun Xue, Yong Liu, Guansong Pang, and Dacheng Tao. Learning feature inversion for multi-class unsupervised anomaly detection under general-purpose coco-ad benchmark. arXiv, 2024.

- Zhao [2023] Ying Zhao. Omnial: A unified cnn framework for unsupervised anomaly localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3924–3933, 2023.

- Zhou et al. [2023] Qihang Zhou, Guansong Pang, Yu Tian, Shibo He, and Jiming Chen. Anomalyclip: Object-agnostic prompt learning for zero-shot anomaly detection. arXiv preprint arXiv:2310.18961, 2023.

- Zhu et al. [2023] Deyao Zhu, Jun Chen, Xiaoqian Shen, Xiang Li, and Mohamed Elhoseiny. Minigpt-4: Enhancing vision-language understanding with advanced large language models. arXiv preprint arXiv:2304.10592, 2023.

- Zou et al. [2022] Yang Zou, Jongheon Jeong, Latha Pemula, Dongqing Zhang, and Onkar Dabeer. Spot-the-difference self-supervised pre-training for anomaly detection and segmentation. In European Conference on Computer Vision, pages 392–408. Springer, 2022.