Goal-oriented Tensor: Beyond Age of Information Towards Semantics-Empowered Goal-Oriented Communications

Abstract

Optimizations premised on open-loop metrics such as Age of Information (AoI) indirectly enhance the system’s decision-making utility. We therefore propose a novel closed-loop metric named Goal-oriented Tensor (GoT) to directly quantify the impact of semantic mismatches on goal-oriented decision-making utility. Leveraging the GoT, we consider a sampler & decision-maker pair that works collaboratively and distributively to achieve a shared goal of communications. We formulate a two-agent infinite-horizon Decentralized Partially Observable Markov Decision Process (Dec-POMDP) to conjointly deduce the optimal deterministic sampling policy and decision-making policy. To circumvent the curse of dimensionality in obtaining an optimal deterministic joint policy through Brute-Force-Search, a sub-optimal yet computationally efficient algorithm is developed. This algorithm is predicated on the search for a Nash Equilibrium between the sampler and the decision-maker. Simulation results reveal that the proposed sampler & decision-maker co-design surpasses the current literature on AoI and its variants in terms of both goal achievement utility and sparse sampling rate, signifying progress in the semantics-conscious, goal-driven sparse sampling design.

Index Terms:

Goal-oriented communications, Goal-oriented Tensor, Status updates, Age of Information, Age of Incorrect Information, Value of Information, Semantics-aware sampling.I Introduction

The recent advancement of the emerging 5G and beyond has spawned the proliferation of both theoretical development and application instances for Internet of Things (IoT) networks. In such networks, timely status updates are becoming increasingly crucial for enabling real-time monitoring and actuation across a plethora of applications. To address the inadequacies of traditional throughput and delay metrics in such contexts, the Age of Information (AoI) has emerged as an innovative metric to capture the data freshness perceived by the receiver [1], defined as , where denotes the generation time of the latest received packet before time . Since its inception, AoI has garnered significant research attention and has been extensively analyzed in the queuing systems [2, 3, 4, 5, 6, 7], physical-layer communications [8, 9, 10, 11, 12, 13, 14], MAC-layer communications [15, 16, 17, 18, 19], industrial IoT [20, 21], energy harvesting systems[22, 23, 24, 25], and etc. (see [26] and the references therein for more comprehensive review). These research efforts are driven by the consensus that a freshly received message typically contains more valuable information, thereby enhancing the utility of decision-making processes.

Though AoI has been proven efficient in many freshness-critical applications, it exhibits several critical shortcomings. Specifically, () AoI does not provide a direct measure of information value; () AoI does not consider the content dynamics of source data and ignores the effect of End-to-End (E2E) information mismatch on the decision-making process.

To address shortcoming (a), a typical approach is to impose a non-linear penalty on AoI[27, 28, 29, 30, 31]. In [27], the authors map the AoI to a non-linear and non-decreasing function to evaluate the degree of “discontent” resulting from stale information. Subsequently, the optimal sampling policy is deduced for an arbitrary non-decreasing penalty function. The authors in [28] introduce two penalty functions, namely the exponential penalty function and the logarithmic penalty function , for evaluating the Cost of Update Delay (CoUD). In [29] and [30], the binary indicator function is applied to evaluate whether the most recently received message is up-to-date. Specifically, the penalty assumes a value of when the instantaneous AoI surpasses a predetermined threshold ; otherwise, the penalty reverts to . The freshness of web crawling is evaluated through this AoI-threshold binary indicator function. In [31], an analogous binary indicator approach is implemented in caching systems to evaluate the freshness of information.

The above works tend to tailor a particular non-linear penalty function to evaluate the information value. However, the selection of penalty functions in the above research relies exclusively on empirical configurations, devoid of rigorous derivations. To this end, several information-theoretic techniques strive to explicitly derive the non-linear penalty function in terms of AoI [32, 33, 34, 35]. One such quintessential work is the auto-correlation function , which proves to be a non-linear function of AoI when the source is stationary [32]. Another methodology worth noting is the mutual information metric between the present source state and the vector consisting of an ensemble of successfully received updates [33, 34]. In the context of a Markovian source, this metric can be reduced to , which demonstrates a non-linear dependency on AoI under both the Gaussian Markov source and the Binary Markov source [33]. In [34], an analogous approach is utilized to characterize the value of information (VoI) for the Ornstein-Uhlenbeck (OU) process, which likewise demonstrates a non-linear dependency on AoI. In [35], the conditional entropy is further investigated to measure the uncertainty of the source for a remote estimator given the history received updates . When applied to a Markov Source, this conditional entropy simplifies to , thus exemplifying a non-linear penalty associated with AoI.

To address shortcoming (b), substantial research efforts have been invested in the optimization of the Mean Squared Error (MSE), denoted by , with an ultimate objective of constructing a real-time reconstruction remote estimation system [36, 37, 38, 39]. In [36], a metric termed effective age is proposed to minimize the MSE for the remote estimation of a Markov source. In [37] and [38], two Markov sources of interest, the Wiener process and the OU process are investigated to deduce the MSE-optimal sampling policy. Intriguingly, these policies were found to be threshold-based, activating sampling only when the instantaneous MSE exceeds a predefined threshold, otherwise maintaining a state of idleness. The authors in [39] explored the trade-off between MSE and quantization over a noisy channel, and derived the MSE-optimal sampling strategy for the OU process.

Complementary to the above MSE-centered research, variants of AoI that address shortcoming have also been conceptualized [40, 41, 42, 43]. In [40], Age of Changed Information (AoCI) is proposed to address the ignorance of content dynamics of AoI. In this regard, unchanged statuses do not necessarily provide new information and thus are not prioritized for transmission. In [41], the authors introduce the context-aware weighting coefficient to propose the Urgency of Information (UoI), a metric capable of measuring the weighted MSE in diverse urgency contexts. In [42], the authors propose a novel age penalty named Age of Synchronization (AoS), a novel metric quantifying the time since the most recent end-to-end synchronization. Moreover, considering that an E2E status mismatch may exert a detrimental effect on the overall system’s performance over time, the authors of [43] propose a novel metric called Age of Incorrect Information (AoII). This metric quantifies the adverse effect stemming from the duration of the E2E mismatch, revealing that both the degree and duration of E2E semantic mismatches lead to a utility reduction for subsequent decision-making.

The above studies focused on the open-loop generation-to-reception process within a transmitter-receiver pair, neglecting the closed-loop perception-actuation timeliness. A notable development addressing this issue is the extension from Up/Down-Link (UL/DL) AoI to a closed-loop AoI metric, referred to as the Age of Loop (AoL) [44]. Unlike the traditional open-loop AoI, which diminishes upon successful reception of a unidirectional update, the AoL decreases solely when both the UL status and the DL command are successfully received. Another advanced metric in [45], called Age of Actuation (AoA), also encapsulates the actuation timeliness, which proves pertinent when the receiver employs the received update to execute timely actuation.

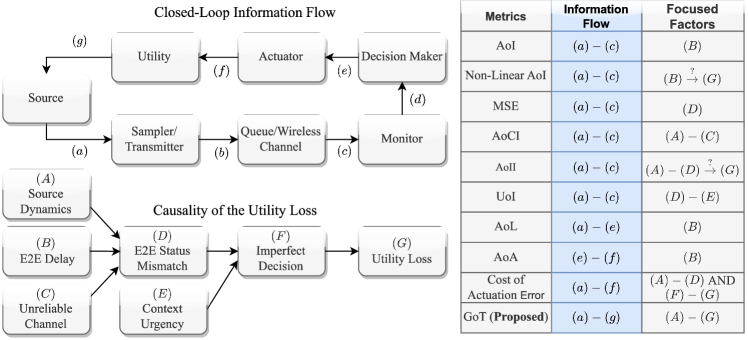

Notwithstanding the above advancements, the question on how the E2E mismatch affects the closed-loop utility of decision-making has yet to be addressed. To address this issue, [46, 47, 48, 49] introduce a metric termed Cost of Actuation Error to delve deeper into the cost resulting from the error actuation due to imprecise real-time estimations. Specifically, the Cost of Actuation Error is denoted by an asymmetric zero diagonal matrix , with each value representing the instant cost under the E2E mismatch status . In this regard, the authors reveal that the utility of decision-making bears a close relation to the E2E semantic mismatch category, as opposed to the mismatch duration (AoII) or mismatch degree (MSE). For example, an E2E semantic mismatch category that “Fire” is estimated as “No Fire” will result in high cost; while in the opposite scenario, the cost is low. Nonetheless, we notice that ) the method to obtain a Cost of Actuation Error remains unclear, which implicitly necessitates a pre-established decision-making policy; ) Cost of Actuation Error does not consider the context-varying factors, which may also affect the decision-making utility; ) the zero diagonal property of the matrix implies the supposition that if , then , thereby signifying that error-less actuation necessitates no energy expenditure. Fig. 1 provides an overview of the existing metrics.

Against this background, the present authors have recently proposed a new metric referred to as GoT in [50], which, compared to Cost of Actuation Error, introduces new dimensions of the context and the decision-making policy to describe the true utility of decision-making. Remarkably, we find that GoT offers a versatile degeneration to established metrics such as AoI, MSE, UoI, AoII, and Cost of Actuation Error. Additionally, it provides a balanced evaluation of the cost trade-off between the sampling and decision-making. The contributions of this work could be summarized as follows:

We focus on the decision utility issue directly by employing the GoT. A controlled Markov source is observed, wherein the transition of the source is dependent on both the decision-making at the receiver and the contextual situation it is situated. In this case, the decision-making will lead to three aspects in utility: ) the future evolution of the source; ) the instant cost at the source; ) the energy and resources consumed by actuation.

We accomplish the goal-oriented sampler & decision-maker co-design, which, to the best of our knowledge, represents the first work that addresses the trade-off between semantics-aware sampling and goal-oriented decision-making. Specifically, we formulate this problem as a two-agent infinite-horizon Decentralized Partially Observable Markov Decision Process (Dec-POMDP) problem, with one agent embodying the semantics and context-aware sampler, and the other representing the goal-oriented decision-maker. Note that the optimal solution of even a finite-horizon Dec-POMDP is known to be NEXP-hard [51], we develop the RVI-Brute-Force-Search Algorithm. This algorithm seeks to derive optimal deterministic joint policies for both sampling and decision-making. A thorough discussion on the optimality of our algorithm is also presented.

To further mitigate the “curse of dimensionality” intricately linked with the execution of the optimal RVI-Brute-Force-Search, we introduce a low-complexity yet efficient algorithm to solve the problem. The algorithm is designed by decoupling the problem into two distinct components: a Markov Decision Process (MDP) problem and a Partially Observable Markov Decision Process (POMDP) issue. Following this separation, the algorithm endeavors to search for the joint Nash Equilibrium between the sampler and the decision-maker, providing a sub-optimal solution to this goal-oriented communication-decision-making co-design.

II Goal-oriented Tensor

II-A Specific Examples of Goal-Oriented Communications

Consider a time-slotted communication system. Let represent the perceived semantic status at time slot at the source, and denote the constructed estimated semantic status at time slot . Real-time reconstruction-oriented communications is a special type of goal-oriented communications, whose goal is achieving real-time accurate reconstruction:

| (1) |

Although real-time reconstruction is important, it does not represent the ultimate goals of communications, such as implementing accurate actuation (as opposed to merely real-time reconstruction) and minimizing the system’s long-term Cost of Actuation Error, as in [46, 47, 48, 49]:

| (2) |

where represents the instantaneous system cost of the system at time slot . This cost is derived from the erroneous actuation stemming from the status mismatch between transceivers.

Following the avenue of Cost of Actuation Error, we notice that the matrix-based metric could be further augmented to tensors to realize more flexible goal characterizations. For example, drawing parallels with the concept of Urgency of Information [41], we can introduce a context element to incorporate context-aware attributes into this metric.111It is important to note that the GoT could be expanded into higher dimensions by integrating additional components, including actuation policies, task-specific attributes, and other factors. Accordingly, we can define a three-dimensional GoT through a specified mapping: , which could be visualized by Fig. 2. In this regard, the GoT, denoted by or , indicates the instantaneous cost of the system at time slot , with the knowledge of . Consequently, the overarching goal of this system could be succinctly expressed as:

| (3) |

II-B Degeneration to Existing Metrics

In this subsection, we demonstrate, through visualized examples and mathematical formulations, that a three-dimension GoT can degenerate to existing metrics. Fig. 2 showcases a variety of instances of the GoT metric.

Degeneration to AoI: AoI is generally defined as , where is the generated time stamp of the -th status update, represents the corresponding deliver time slot. Since AoI is known to be semantics-agnostic [52, 46], the values in the tensor only depend on the freshness context . In this case, each tensor value given a determined context status is a constant, and the GoT is reduced to

| (4) |

where (a) indicates that AoI is semantics-agnostic. In this case, AoI refers to the context exactly. The process of reducing GoT to AoI is visually depicted in Fig. 2(a).

Degeneration to AoII: AoII is defined as , where . AoII embraces the semantics-aware characteristics and is hence regarded as an enabler of semantic communications [53]. The inherent multiplicative characteristic of AoII guarantees the existence of a base layer of the tensor representation, from which other layers are derived by multiplying this base layer, as depicted in Fig. 2(b). Let , the GoT is reduced to

| (5) |

where , typically characterized by , represents the base layer in the tensor, and indicates the inherent multiplicative characteristic of AoII. The visual representation of the GoT reduction to AoII is depicted in Fig. 2(b).

Degeneration to MSE: MSE is defined as . MSE is intuitively context-agnostic. In the scenario where , the GoT collapses to the MSE metric:

| (6) |

where establishes due to the context-agnostic nature of MSE. The visualization of the GoT reduction to MSE is shown in Fig. 2(c).

Degeneration to UoI: UoI is defined by , where the context-aware weighting coefficient is further introduced [41]. When , the GoT could be transformed into the UoI by

| (7) |

where indicates the inherent multiplicative characteristic of UoI. The visualization of the GoT reduction to UoI is shown in Fig. 2(d).

Degeneration to Cost of Actuation Error: Cost of Actuation Error is defined by , which indicates the instantaneous system cost if the source status is and the estimated one mismatch [48]. Let , the GoT collapses to Cost of Actuation Error:

| (8) |

where establishes due to the context-agnostic nature of Cost of Actuation Error. The visualization of the GoT reduction to Cost of Actuation Error is shown in Fig. 2(e).

II-C Goal Characterization Through GoT

As shown in Fig. 2(f), a more general GoT exhibits neither symmetry, zero diagonal property, nor a base layer, offering considerable versatility contingent upon the specific goal. Here we propose a method that constructs a specific GoT, there are four steps:

Step 1: Clarify the scenario and the communication goal. For instance, in the wireless accident monitoring and rescue systems, the goal is to minimize the cumulative average cost associated with accidents and their corresponding rescue interventions over the long term.

Step 2: Define the sets of semantic status, , and contextual status, . These sets can be modeled as collections of discrete components. For instance, the set might encompass the gravity of industrial mishaps in intelligent factories, whereas the set could encompass the meteorological circumstances, which potentially influence the source dynamics and the costs.

Step 3: The GoT could be decoupled by three factors: 222The definitions of costs are diverse, covering aspects such as financial loss, resource usage, or a normalized metric derived through scaling, depending on the specific objective they are designed to address.

The status inherent cost . It quantifies the cost associated with different status pairs in the absence of external influences;

The actuation gain , where is a deterministic decision policy contingent upon . This cost quantifies the positive utility resulting from the actuation ;

The actuation resource expenditure , which reflects the resources consumed by a particular actuation .

Step 4: Constructing the GoT. The GoT, given a specific triple-tuple and a certain decision strategy , is calculated by

| (9) |

The ramp function ensures that any actuation reduces the cost to a maximum of 0. A visualization of a specific GoT construction is shown in Fig. 4. The GoT in Fig. 4 is obtained through the following definition:

| (10) |

III System Model

We consider a time-slotted perception-actuation loop where both the perceived semantics and context are input into a semantic sampler, tasked with determining the significance of the present status and subsequently deciding if it warrants transmission via an unreliable channel. The semantics and context are extracted and assumed to perfectly describe the status of the observed process. The binary indicator, , signifies the sampling and transmission action at time slot , with the value representing the execution of sampling and transmission, and the value indicating the idleness of the sampler. here represents the sampling policy. We consider a perfect and delay-free feedback channel [46, 47, 48, 49], with ACK representing a successful transmission and NACK representing the otherwise. The decision-maker at the receiver will make decisions based on the estimate 333We consider a general abstract decision-making set that exhibits adaptability to diverse applications. Notably, this decision-making set can be customized and tailored to suit specific requirements., which will ultimately affect the utility of the system. An illustration of our considered model is shown in Fig. 4.

III-A Semantics and Context Dynamics

Thus far, a plethora of studies have delved into the analysis of various discrete Markov sources, encompassing Birth-Death Markov Processes elucidated in [49], binary Markov sources elucidated in [54], and etc. In real-world situations, the context and the actuation also affect the source’s evolution. Consequently, we consider a context-dependent controlled Discrete Markov source:

| (11) |

where the dynamics of the source is dependent on both the decision-making and context . Furthermore, we take into account the variations in context , characterized by:

| (12) |

Note that the semantic status and context status could be tailored according to the specific application scenario. In general, these two processes are independent with each other.

III-B Unreliable Channel and Estimate Transition

We assume that the channel realizations exhibit independence and identical distribution (i.i.d.) across time slots, following a Bernoulli distribution. Particularly, the channel realization assumes a value of in the event of successful transmission, and otherwise. Accordingly, we define the probability of successful transmission as and the failure probability as . To characterize the dynamic process of , we consider two cases as described below:

. In this case, the sampler and transmitter remain idle, manifesting that there is no new knowledge given to the receiver, i.e., . As such, we have:

| (13) |

. In this case, the sampler and transmitter transmit the current semantic status through an unreliable channel. As the channel is unreliable, we differentiate between two distinct situations: and :

(a) . In this case, the transmission is successful. As such, the estimate at the receiver is nothing but , and the transition probability is

| (14) |

(b) . In this case, the transmission is not successfully decoded by the receiver. As such, the estimate at the receiver remains . In this way, the transition probability is

| (15) |

As the channel realization is independent with the process of , , and , we have that

| (16) | ||||

III-C Goal-oriented decision-making and Actuating

We note that the previous works primarily focused on minimizing the open-loop freshness-related or error-related penalty for a transmitter-receiver system. Nevertheless, irrespective of the fresh delivery or accurate end-to-end timely reconstruction, the ultimate goal of such optimization efforts is to ensure precise and effective decision-making. To this end, we broaden the open-loop transmitter-receiver information flow to include a perception-actuation closed-loop utility flow by incorporating the decision-making and actuation processes. As a result, decision-making and actuation enable the conversion of status updates into ultimate effectiveness. Here the decision-making at time slot follows that , with representing the deterministic decision-making policy.

IV Problem Formulation and Solution

Traditionally, the development of sampling strategies has been designed separately from the decision-making process. An archetypal illustration of this two-stage methodology involves first determining the optimal sampling policy based on AoI, MSE, and their derivatives, such as AoII, and subsequently accomplishing goal-oriented decision-making. This two-stage separate design arises from the inherent limitation of existing metrics that they fail to capture the closed-loop decision utility. Nevertheless, the metric GoT empowers us to undertake a co-design of sampling and decision-making.

We adopt the team decision theory, wherein two agents, one embodying the sampler and the other the decision-maker, collaborate to achieve a shared goal. We aim at determining a joint deterministic policy that minimizes the long-term average cost of the system. It is considered that the sampling and transmission of an update also consumes energy, incurring a cost. In this case, the instant cost of the system could be clarified by , and the problem is characterized as:

| (17) |

where denotes the joint sampling and decision-making policy, comprising and , which correspond to the sampling action sequence and actuation sequence, respectively. Note that is characterized by (9).

IV-A Dec-POMDP Formulation

The problem in (17) aims to find the optimal decentralized policy so that the long-term average cost of the system is minimized. To solve the problem , we ought to formulate a DEC-POMDP problem, which is initially introduced in [51] to solve the cooperative sequential decision issues for distributed multi-agents. Within a Dec-POMDP framework, a team of agents cooperates to achieve a shared goal, relying solely on their localized knowledge. A typical Dec-POMDP is denoted by a tuple :

denotes the number of agents. In this instance, we have , signifying the presence of two agents within this context: one agent embodies the semantics-context-aware sampler and transmitter, while the other represents the -dependent decision-maker, denoted by .

is the finite set of the global system status, characterized by . For the sake of brevity, we henceforth denote in the squeal.

is the transition function defined by

| (18) |

which is defined by the transition probability from global status to status , after the agents in the system taking a joint action . For the sake of concise notation, we let symbolize in the subsequent discourse. Then, by taking into account the conditional independence among , , and , given and , the transition functions can be calculated in lemma 1.

Lemma 1.

The transition functions of the Dec-POMDP:

| (19) |

| (20) |

for any and indexes , , , , , and .

Proof.

, with representing the set of binary sampling actions executed by the sampler, and representing the set of decision actions undertaken by the actuator.

constitutes a finite set of joint observations. Generally, the observation made by a single agent regarding the system status is partially observable. signifies the sampler’s observation domain. In this instance, the sampler is entirely observable, with encompassing the comprehensive system state . signifies the actuator’s observation domain. In this case, the actuator (or decision-maker) is partially observable, with comprising . The joint observation at time instant is denoted by .

represents the observation function, where and denotes the observation function of the sampler and the actuator , respectively, defined as:

| (21) | |||

The observation function of an agent signifies the conditional probability of agent perceiving , contingent upon the prevailing global system state as . For the sake of brevity, we henceforth let represent and represent in the subsequent discourse. In our considered model, the observation functions are deterministic, characterized by lemma 2.

Lemma 2.

The observation functions of the Dec-POMDP:

| (22) | ||||

for indexes , , , , , and , .

is the reward function, characterized as a mapping . In the long-term average reward maximizing setup, resolving a Dec-POMDP is equivalent to addressing the following problem . Subsequently, to establish congruence with the problem in (17), the reward function is defined as:

| (23) |

IV-B Solutions to the Infinite-Horizon Dec-POMDP

In general, solving a Dec-POMDP is known to be NEXP-complete for the finite-horizon setup [51], signifying that it necessitates formulating a conjecture about the solution non-deterministically, while each validation of a conjecture demands exponential time. For an infinite-horizon Dec-POMDP problem, finding an optimal policy for a Dec-POMDP problem is known to be undecidable. Nevertheless, within our considered model, both the sampling and decision-making processes are deterministic, given as and . In such a circumstance, it is feasible to determine a joint optimal deterministic policy via Brute-Search-across the decision-making policy space.

IV-B1 Optimal Solution

The idea is based on the finding that, given a deterministic decision-making policy , the sampling problem can be formulated as a standard fully observed MDP problem denoted by .

Definition 1.

Given a deterministic decision-making policy , the optimal sampling problem could be formulated by a typical fully observed MDP problem , where the elements are given as follows:

-

•

: the same as the pre-defined Dec-POMDP tuple.

-

•

: the sampling and transmission action set.

- •

-

•

: the same as the pre-defined Dec-POMDP tuple.

We now proceed to solve the MDP problem , which is characterized by a tuple . In order to deduce the optimal sampling policy under a deterministic decision-making policy , it is imperative to resolve the Bellman equations [55]:

| (25) |

where is the value function and is the optimal long-term average reward given the decision-making policy . We apply the relative value iteration (RVI) algorithm to solve this problem. The details are shown in Algorithm 1:

With Definition 1 and Algorithm 1 in hand, we could then perform a Brute-Force-Search across the decision-making policy space , thereby acquiring the joint sampling-decision-making policy. The algorithm is called RVI-Brute-Force-Search Algorithm, which is elaborated in Algorithm 2. In the following theorem, we discuss the optimality of the RVI-Brute-Force-Search Algorithm.

Theorem 1.

The RVI-Brute-Force-Search Algorithm (Algorithm 2) could achieve the optimal joint deterministic policies , given that the transition function follows a unichan.

Proof. If the the transition function follows a unichan, we obtain from [56, Theorem 8.4.5] that for any , we could obtain the optimal deterministic policy such that . Also, Algorithm 2 assures that for any , . This leads to the conclusion that for any , we have that

| (26) |

Nonetheless, the Brute-Force-Search across the decision-making policy space remains computationally expensive, as the size of the decision-making policy space amounts to . This implies that executing the RVI algorithm times is necessary to attain the optimal policy. Consequently, although proven to be optimal, such an algorithm is ill-suited for scenarios where and are considerably large. To ameliorate this challenge, we propose a sub-optimal, yet computation-efficient alternative in the subsequent section.

IV-B2 A Sub-optimal Solution

The method here is to instead find a locally optimal algorithm to circumvent the high complexity of the Brute-Force-Search-based approach. We apply the Joint Equilibrium-Based Search for Policies (JESP) for Nash equilibrium solutions [57]. Within this framework, the sampling policy is optimally responsive to the decision-making policy and vice versa, i.e., .

To search for the Nash equilibrium, we first search for the optimal sampling policy prescribed a decision-making policy. This problem can be formulated as a standard fully observed MDP problem denoted by (see Definition 1). Next, we alternatively fix the sampling policy and solve for the optimal decision-making policy . This problem can be modeled as a memoryless partially observable Markov decision process (POMDP), denoted by (see Definition 2). Then, by alternatively iterating between and , we could obtain the Nash equilibrium between the two agents.

Definition 2.

Given a deterministic sampling policy , the optimal sampling problem could be formulated as a memoryless POMDP problem , where the elements are given as follows:

We then proceed to solve the memoryless POMDP problem discussed in Definition 2 to obtain the deterministic decision-making policy. Denote as the transition probability under the sampling policy and , we then have that

| (28) |

where could be obtained by Lemma 1 and is obtained by (27). By assuming the ergodicity of the and rewrite it as a matrix , we could then solve out the stationary distribution of the system status under the policies and , denoted as , by solving the balance equations:

| (29) |

where is the all one vector , could be solved out by Cramer’s rules. Denote as the stationary distribution of . Also, we denote as the expectation reward of global system status under policies and . It could be calculated as:

| (30) |

The performance measure is the long-term average reward:

| (31) |

With in hand, we then introduce the relative reward , defined by

| (32) |

which satisfies the Poisson equations [58]:

| (33) |

Denote as the vector consisting of , as the vector consisting of . could be solved by utilizing [56]:

| (34) |

With the relative reward in hand, we then introduce and as follows:

Lemma 3.

and are defined and calculated as:

| (35) | ||||

| (36) | ||||

where can be obtained by (28) and can be obtained by the Bayesian formula:

| (37) |

Proof.

Please refer to Appendix B. ∎

With in hand, it is then easy to conduct the Policy Iteration (PI) Algorithm with Step Sizes [59] to iteratively improve the deterministic memoryless decision-making policy . The detailed steps are shown in Algorithm 3.

Thus far, we have solved two problems: ) by capitalizing on Definition 1 and Algorithm 1, we have ascertained an optimal sampling strategy contingent upon the decision-making policy ; ) by harnessing Definition 2 and Algorithm 3, we have determined an optimal actuation strategy predicated on the decision-making policy . Consequently, we could iteratively employ Algorithm 1 and Algorithm 3 in an alternating fashion, whereby Algorithm 1 yields the optimal sampling strategy , subsequently serving as an input for Algorithm 3 to derive the decision-making policy . The procedure shall persist until the average reward reaches convergence, indicating that the solution achieves a Nash equilibrium between the sampler and the actuator.The intricacies of the procedure are delineated in Algorithm 4.

Remark 1.

Generally, the JESP algorithm should restart the algorithm by randomly choosing the initial decision-making policy to ensure a good solution, as the initialization of decision-making policy may often lead to poor local optima. We here investigate a heuristic initialization to find the solution quickly and reliably. Specifically, we assume that the decision-maker is fully observable and solve a MDP problem:

Through solving the above MDP problem, we could explicitly obtain the Q function , define as , the initial decision-making policy is given as

| (39) |

V Simulation Results

Tradition metrics such as Age of Information have been developed under the assumption that a fresher packet or more accurate packet, capable of aiding in source reconstruction, holds a higher value for the receiver, thus promoting goal-oriented decision-making. Nevertheless, the manner in which a packet update impacts the system’s utility via decision-making remains an unexplored domain. Through the simulations, we endeavor to elucidate the following observations of interest:

GoT-optimal vs. State-of-the-art. In contrast with the state-of-the-art sampling policies, the proposed goal-oriented sampler & decision-maker co-design is capable of concurrently maximizing goal attainment and conserving communication resources, accomplishing a closed-loop utility optimization via sparse sampling. (See Fig. 9 and 9)

Separate Design vs. Co-Design. Compared to the two-stage sampling-decision-making separate framework, the co-design of sampling and decision-making not only achieves superior goal achievement but also alleviates resource expenditure engendered by communication and actuation implementation. (See Fig. 9 and 9)

Optimal Brute-Force-Search vs. Sub-optimal JESP. Under different successful transmission probability and sampling cost , the sub-optimal yet computation-efficient JESP algorithm will converge to near-optimal solutions. (See Fig. 9)

Trade-off: Transmission vs. Actuation. There is a trade-off between transmission and actuation in terms of resource expenditure: under reliable channel conditions, it is apt to increase communication overhead to ensure effective decision-making; conversely, under poor channel conditions, it is advisable to curtail communication expenses and augment actuation resources to attain maximal system utility. (See Fig. 9)

V-A Comparing Benchmarks

Fig. 9 illustrates the simulation results, which characterizes the utility by the average cost composed by status inherent cost , actuation gain cost , actuation inherent cost , and sampling cost . For the simulation setup, we set , , and the corresponding costs are given as:

| (40) |

and are both linear to the actuation with and . The following comparing benchmarks of interest are considered:

Uniform. Sampling is triggered periodically in this policy. In this case, , where and . For each , we could calculate the sampling rate as and explicitly obtain the long-term average cost through Markov chain simulations under pre-defined decision-making policy . The setup of represents a trade-off between utility and sampling frequency, as depicted in Fig. 9: If is minimal, sampling and transmission will contribute positively to the utility; If the sampling action is expansive, sampling may not yield adequate utility; If a single sampling consumes moderate resource, the utility will exhibit a U-shaped pattern in terms of the sampling rate.

Age-aware. Sampling is executed when the AoI attains a predetermined threshold, a principle that has been established as a threshold-based result for AoI-optimal sampling [27]. In this case, , where the AoI-optimal threshold can be ascertained using the Bisection method delineated in Algorithm 1 of [27]. In this context, rather than determining a fixed threshold that minimized AoI, we dynamically shift the threshold to explore the balance between sampling and utility. As evidenced in Fig. 9, the utility derived from this sampling policy consistently surpasses that of the uniform sampling policy.

Change-aware. Sampling is triggered whenever the source status changes. In such a case, . The performance of this policy is dependent on the dynamics of the system, i.e., if the semantics of the sources transfers frequently, then the sampling rate will be higher. The utility of this policy may be arbitrarily detrimental owing to its semantics-unaware nature. In our considered model, the Change-aware policy will turn out to have the unsynchronized status while . In this case, the actuator will implement the actuation according to the estimate , which will in turn make the status converges to .

Optimal MSE. This is a type of E2E goal-oriented sampling policy if the goal is determined as achieving real-time reconstruction. Nevertheless, this policy disregards the semantics conveyed by the packet and the ensuing actuation updating precipitated by semantics updates. The problem could be formulated as a standard MDP formulation and solved out through RVI Algorithm. The sampling rate and average cost are obtained given the MSE-optimal sampling policy and the pre-defined decision-making policy .

Optimal AoII (also optimal AoCI). From [43], it has been proven that the AoII-optimal sampling policy turns out to be . From [40], the AoCI-optimal sampling policy is . Note that , these two sampling policies are equivalent. The sampling rate and average cost are obtained given this sampling policy and the greedy-based decision-making policy. .

V-B Separate Design vs. Co-Design

Conventionally, the sampling and actuation policies are designed in a two-stage manner: they first emphasize open-loop performance metrics such as average mean squared error (MSE) or average Age of Information (AoI), we then focus on the decision-making policy design. Specifically, we consider that is predetermined using a greedy methodology:

| (41) |

This greedy approach entails selecting the actuation that minimizes cost in the current step, given that the estimate is perfect. By calculating (41), we obtain .

However, we notice that sampling and actuation are closely intertwined, highlighting the potential for further co-design. In this paper, we have proposed the RVI-Brute-Force-Search and the Improved JESP algorithms for such optimal co-design. As shown in Fig. 9, the sampler & decision-maker co-design achieves the optimal utility through sparse sampling. Specifically, only semantically important information is sampled and transmitted, while non-essential data is excluded. This goal-oriented, semantic-aware, and sparse sampling design represents a significant advancement in sampling policy design. By incorporating a best-matching decision-making policy, the sparse sampling achieves superior performance compared to existing methods.

Fig. 9 presents a comparative analysis between the AoII (or AoCI)-optimal sampling policy, the MSE-optimal sampling policy, and our proposed GoT-optimal sampler & decision-maker co-design. It is verified that under different and , the proposed GoT-optimal sampler & decision-maker co-design achieves the optimal goal-oriented utility. Importantly, under the condition of an extremely unreliable channel and high sampling cost , the proposed co-design facilitates a significant reduction in long-term average cost, exceeding 60%. This underscores the superiority of the GoT-optimal sampler & decision-maker co-design.

V-C Optimal vs. Sub-Optimal

Fig. 9 presents a comparative visualization between optimal and sub-optimal solutions over a wide range of and values. The negligible zero-approaching value in Fig. 9 implies a trivial deviation between the optimal and sub-optimal solutions, suggesting the latter’s potential for convergence towards near-optimal outcomes. The minimal variance testifies to the sub-optimal algorithm’s consistent ability to approximate solutions with high proximity to the optimal. This critical observation underscores the practical advantages of employing sub-optimal improved JESP Algorithm, especially in scenarios with extensive and .

V-D Trade-off: Transmission vs. Actuation

Fig. 9 exemplifies the resource allocation trade-off between transmission and actuation when the long-term average cost is minimized. When the probability of remains low (signifying an unreliable channel) or is high (indicating expensive sampling), it becomes prudent to decrease sampling and transmission, while concurrently augmenting actuation resources for optimal system utility. In contrast, when the channel is reliable, sampling and transmission resource can be harmonized with actuation resources to achieve the goal better. This indicates that through the investigation of the optimal co-design of the sampler & decision-maker paradigm, a trade-off between transmission and actuation resources can be achieved.

VI Conclusion

In this paper, we have investigated the GoT metric to directly describe the goal-oriented system decision-making utility. Employing the proposed GoT, we have formulated an infinite horizon Dec-POMDP problem to accomplish the co-design of sampling and actuating. To address this problem, we have developed two algorithms: the computationally intensive RVI-Brute-Force-Search, which is proven to be optimal, and the more efficient, albeit suboptimal algorithm, named JESP Algorithm. Comparative analyses have substantiated that the proposed GoT-optimal sampler & decision-maker pair can achieve sparse sampling and meanwhile maximize the utility, signifying the initial realization of a sparse, goal-oriented, and semantics-aware sampler design.

References

- [1] S. Kaul, M. Gruteser, V. Rai, and J. Kenney, “Minimizing age of information in vehicular networks,” in 2011 8th Annual IEEE Communications Society Conference on Sensor, Mesh and Ad-Hoc Communications and Networks, 2011, pp. 350–358.

- [2] Y. Sun, E. Uysal-Biyikoglu, R. D. Yates, C. E. Koksal, and N. B. Shroff, “Update or wait: How to keep your data fresh,” IEEE Transactions on Information Theory, vol. 63, no. 11, pp. 7492–7508, 2017.

- [3] M. Costa, M. Codreanu, and A. Ephremides, “On the age of information in status update systems with packet management,” IEEE Transactions on Information Theory, vol. 62, no. 4, pp. 1897–1910, 2016.

- [4] R. D. Yates and S. K. Kaul, “The age of information: Real-time status updating by multiple sources,” IEEE Transactions on Information Theory, vol. 65, no. 3, pp. 1807–1827, 2018.

- [5] C. Kam, S. Kompella, G. D. Nguyen, and A. Ephremides, “Effect of message transmission path diversity on status age,” IEEE Transactions on Information Theory, vol. 62, no. 3, pp. 1360–1374, 2015.

- [6] A. M. Bedewy, Y. Sun, and N. B. Shroff, “The age of information in multihop networks,” IEEE/ACM Transactions on Networking, vol. 27, no. 3, pp. 1248–1257, 2019.

- [7] M. Moltafet, M. Leinonen, and M. Codreanu, “On the age of information in multi-source queueing models,” IEEE Transactions on Communications, vol. 68, no. 8, pp. 5003–5017, 2020.

- [8] H. Sac, B. T. Bacinoglu, E. Uysal-Biyikoglu, and G. Durisi, “Age-Optimal Channel Coding Blocklength for an M/G/1 Queue with HARQ,” 2018 IEEE 19th International Workshop on Signal Processing Advances in Wireless Communications (SPAWC), pp. 1–5, 2018.

- [9] M. Xie, Q. Wang, J. Gong, and X. Ma, “Age and energy analysis for LDPC coded status update with and without ARQ,” IEEE Internet of Things Journal, vol. 7, no. 10, pp. 10 388–10 400, 2020.

- [10] J. You, S. Wu, Y. Deng, J. Jiao, and Q. Zhang, “An age optimized hybrid ARQ scheme for polar codes via Gaussian approximation,” IEEE Wireless Communications Letters, vol. 10, no. 10, pp. 2235–2239, 2021.

- [11] S. Meng, S. Wu, A. Li, J. Jiao, N. Zhang, and Q. Zhang, “Analysis and Optimization of the HARQ-Based Spinal Coded Timely Status Update System,” IEEE Transactions on Communications, vol. 70, no. 10, pp. 6425–6440, 2022.

- [12] H. Pan, T.-T. Chan, V. C. Leung, and J. Li, “Age of Information in Physical-layer Network Coding Enabled Two-way Relay Networks,” IEEE Transactions on Mobile Computing, 2022.

- [13] A. Maatouk, M. Assaad, and A. Ephremides, “Minimizing The Age of Information: NOMA or OMA?” IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), pp. 102–108, 2019.

- [14] S. Wu, Z. Deng, A. Li, J. Jiao, N. Zhang, and Q. Zhang, “Minimizing Age of Information in HARQ-CC Aided NOMA Systems,” IEEE Transactions on Wireless Communications, 2022.

- [15] E. T. Ceran, D. Gündüz, and A. György, “Average Age of Information with Hybrid ARQ under a resource constraint,” IEEE Transactions on Wireless Communications, vol. 18, no. 3, pp. 1900–1913, 2019.

- [16] D. Li, S. Wu, J. Jiao, N. Zhang, and Q. Zhang, “Age-Oriented Transmission Protocol Design in Space-Air-Ground Integrated Networks,” IEEE Transactions on Wireless Communications, vol. 21, no. 7, pp. 5573–5585, 2022.

- [17] F. Peng, Z. Jiang, S. Zhang, and S. Xu, “Age of Information Optimized MAC in V2X Sidelink via Piggyback-Based Collaboration,” IEEE Transactions on Wireless Communications, vol. 20, pp. 607–622, 2020.

- [18] H. Pan, T.-T. Chan, J. Li, and V. C. M. Leung, “Age of information with collision-resolution random access,” IEEE Transactions on Vehicular Technology, vol. 71, no. 10, pp. 11 295–11 300, 2022.

- [19] A. Li, S. Wu, J. Jiao, N. Zhang, and Q. Zhang, “Age of Information with Hybrid-ARQ: A Unified Explicit Result,” IEEE Transactions on Communications, vol. 70, no. 12, pp. 7899–7914, 2022.

- [20] B. Zhou and W. Saad, “Joint status sampling and updating for minimizing age of information in the internet of things,” IEEE Transactions on Communications, vol. 67, no. 11, pp. 7468–7482, 2019.

- [21] X. Xie, H. Wang, and X. Liu, “Scheduling for minimizing the age of information in multi-sensor multi-server industrial iot systems,” IEEE Transactions on Industrial Informatics, pp. 1–10, 2023.

- [22] M. A. Abd-Elmagid and H. S. Dhillon, “Closed-Form Characterization of the MGF of AoI in Energy Harvesting Status Update Systems,” IEEE Transactions on Information Theory, vol. 68, no. 6, pp. 3896–3919, 2022.

- [23] M. Hatami, M. Leinonen, Z. Chen, N. Pappas, and M. Codreanu, “On-Demand AoI Minimization in Resource-Constrained Cache-Enabled IoT Networks With Energy Harvesting Sensors,” IEEE Transactions on Communications, vol. 70, no. 11, pp. 7446–7463, 2022.

- [24] R. D. Yates, “Lazy is timely: Status updates by an energy harvesting source,” in 2015 IEEE International Symposium on Information Theory (ISIT), 2015, pp. 3008–3012.

- [25] A. Arafa, J. Yang, S. Ulukus, and H. V. Poor, “Age-minimal transmission for energy harvesting sensors with finite batteries: Online policies,” IEEE Transactions on Information Theory, vol. 66, no. 1, pp. 534–556, 2019.

- [26] R. D. Yates, Y. Sun, D. R. Brown, S. K. Kaul, E. Modiano, and S. Ulukus, “Age of Information: An Introduction and Survey,” IEEE Journal on Selected Areas in Communications, vol. 39, no. 5, pp. 1183–1210, 2021.

- [27] Y. Sun and B. Cyr, “Sampling for data freshness optimization: Non-linear age functions,” Journal of Communications and Networks, vol. 21, no. 3, pp. 204–219, 2019.

- [28] A. Kosta, N. Pappas, A. Ephremides, and V. Angelakis, “The cost of delay in status updates and their value: Non-linear ageing,” IEEE Transactions on Communications, vol. 68, no. 8, pp. 4905–4918, 2020.

- [29] J. Cho and H. Garcia-Molina, “Effective page refresh policies for web crawlers,” ACM Transactions on Database Systems (TODS), vol. 28, no. 4, pp. 390–426, 2003.

- [30] Y. Azar, E. Horvitz, E. Lubetzky, Y. Peres, and D. Shahaf, “Tractable near-optimal policies for crawling,” Proceedings of the National Academy of Sciences, vol. 115, no. 32, pp. 8099–8103, 2018.

- [31] M. Bastopcu and S. Ulukus, “Information freshness in cache updating systems,” IEEE Transactions on Wireless Communications, vol. 20, no. 3, pp. 1861–1874, 2020.

- [32] K. T. Truong and R. W. Heath, “Effects of Channel Aging in Massive MIMO systems,” Journal of Communications and Networks, vol. 15, no. 4, pp. 338–351, 2013.

- [33] Y. Sun and B. Cyr, “Information aging through queues: A mutual information perspective,” in 2018 IEEE 19th International Workshop on Signal Processing Advances in Wireless Communications (SPAWC), 2018, pp. 1–5.

- [34] Z. Wang, M.-A. Badiu, and J. P. Coon, “A framework for characterizing the value of information in hidden markov models,” IEEE Transactions on Information Theory, vol. 68, no. 8, pp. 5203–5216, 2022.

- [35] G. Chen, S. C. Liew, and Y. Shao, “Uncertainty-of-information scheduling: A restless multiarmed bandit framework,” IEEE Transactions on Information Theory, vol. 68, no. 9, pp. 6151–6173, 2022.

- [36] C. Kam, S. Kompella, G. D. Nguyen, J. E. Wieselthier, and A. Ephremides, “Towards an effective age of information: Remote estimation of a Markov source,” in IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), 2018, pp. 367–372.

- [37] Y. Sun, Y. Polyanskiy, and E. Uysal, “Sampling of the Wiener process for remote estimation over a channel with random delay,” IEEE Transactions on Information Theory, vol. 66, no. 2, pp. 1118–1135, 2019.

- [38] T. Z. Ornee and Y. Sun, “Sampling for remote estimation through queues: Age of information and beyond,” in 2019 International Symposium on Modeling and Optimization in Mobile, Ad Hoc, and Wireless Networks (WiOPT), 2019, pp. 1–8.

- [39] A. Arafa, K. Banawan, K. G. Seddik, and H. V. Poor, “Sample, quantize, and encode: Timely estimation over noisy channels,” IEEE Transactions on Communications, vol. 69, no. 10, pp. 6485–6499, 2021.

- [40] X. Wang, W. Lin, C. Xu, X. Sun, and X. Chen, “Age of changed information: Content-aware status updating in the internet of things,” IEEE Transactions on Communications, vol. 70, no. 1, pp. 578–591, 2022.

- [41] X. Zheng, S. Zhou, and Z. Niu, “Urgency of information for context-aware timely status updates in remote control systems,” IEEE Transactions on Wireless Communications, vol. 19, no. 11, pp. 7237–7250, 2020.

- [42] J. Zhong, R. D. Yates, and E. Soljanin, “Two freshness metrics for local cache refresh,” in 2018 IEEE International Symposium on Information Theory (ISIT), pp. 1924–1928.

- [43] A. Maatouk, S. Kriouile, M. Assaad, and A. Ephremides, “The age of incorrect information: A new performance metric for status updates,” IEEE/ACM Transactions on Networking, vol. 28, no. 5, pp. 2215–2228, 2020.

- [44] J. Cao, X. Zhu, S. Sun, P. Popovski, S. Feng, and Y. Jiang, “Age of Loop for Wireless Networked Control System in the Finite Blocklength Regime: Average, Variance and Outage Probability,” IEEE Transactions on Wireless Communications, pp. 1–1, 2023.

- [45] A. Nikkhah, A. Ephremides, and N. Pappas, “Age of Actuation in a Wireless Power Transfer System,” in IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), 2023.

- [46] M. Kountouris and N. Pappas, “Semantics-empowered communication for networked intelligent systems,” IEEE Communications Magazine, vol. 59, pp. 96–102, 2020.

- [47] N. Pappas and M. Kountouris, “Goal-oriented communication for real-time tracking in autonomous systems,” in 2021 IEEE International Conference on Autonomous Systems (ICAS), 2021, pp. 1–5.

- [48] M. Salimnejad, M. Kountouris, and N. Pappas, “Real-time Reconstruction of Markov Sources and Remote Actuation over Wireless Channels,” arXiv, 2023. [Online]. Available: https://doi.org/10.48550/arXiv.2302.13927

- [49] E. Fountoulakis, N. Pappas, and M. Kountouris, “Goal-oriented Policies for Cost of Actuation Error Minimization in Wireless Autonomous Systems,” IEEE Communications Letter, 2023.

- [50] A. Li, S. Wu, S. Meng, and Q. Zhang, “Towards goal-oriented semantic communications: New metrics, open challenges, and future research directions,” Submitted to IEEE Communications Magazine., 2023. [Online]. Available: https://arxiv.org/abs/2304.00848

- [51] D. S. Bernstein, R. Givan, N. Immerman, and S. Zilberstein, “The complexity of decentralized control of markov decision processes,” Mathematics of operations research, vol. 27, no. 4, pp. 819–840, 2002.

- [52] E. Uysal, O. Kaya, A. Ephremides, J. Gross, M. Codreanu, P. Popovski, M. Assaad, G. Liva, A. Munari, B. Soret et al., “Semantic communications in networked systems: A data significance perspective,” IEEE Network, vol. 36, no. 4, pp. 233–240, 2022.

- [53] A. Maatouk, M. Assaad, and A. Ephremides, “The age of incorrect information: An enabler of semantics-empowered communication,” IEEE Transactions on Wireless Communications, 2022.

- [54] C. Kam, S. Kompella, and A. Ephremides, “Age of incorrect information for remote estimation of a binary markov source,” in IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), 2020, pp. 1–6.

- [55] D. Bertsekas, Dynamic programming and optimal control. Athena scientific, 2012, vol. 1.

- [56] M. L. Puterman, Markov decision processes: discrete stochastic dynamic programming. John Wiley & Sons, 2014.

- [57] R. Nair, M. Tambe, M. Yokoo, D. V. Pynadath, and S. Marsella, “Taming decentralized pomdps: Towards efficient policy computation for multiagent settings,” in Proceedings of the Eighteenth International Joint Conference on Artificial Intelligence, 2003.

- [58] D. P. Bertsekas and J. N. Tsitsiklis, “Neuro-dynamic programming: an overview,” in Proceedings of 1995 34th IEEE conference on decision and control, vol. 1, pp. 560–564.

- [59] Y. Li, B. Yin, and H. Xi, “Finding optimal memoryless policies of pomdps under the expected average reward criterion,” European Journal of Operational Research, vol. 211, no. 3, pp. 556–567, 2011.

Appendix A The Proof of Lemma 1

By taking into account the conditional independence among , , and , given and , we we can express the following:

| (42) | ||||

wherein the first, second, and third terms can be derived through conditional independence, resulting in simplified expressions of (11), (16), and (12), respectively:

| (43) |

| (44) |

| (45) |

Substituting (43), (44), and (45) into (42) yields the (1) in Lemma 1. In the case where , we can obtain a similar expression by replacing with . Substituting (11), (13), and (12) into this new expression results in the proof of (20) in Lemma 1.

Appendix B Proof of Lemma 3

could be simplified as follows:

| (46) | ||||

could be solved as:

| (47) | ||||

where is the posterior conditional probability. Note that the state is independent of when is known, we have that

| (48) |