∎

Raleigh, NC. 33institutetext: A. Alexanderian 44institutetext: Department of Mathematics, North Carolina State University,

Raleigh, NC.

55institutetext: B.v.B. Waanders

Center for Computing Research, Sandia National Labs,

Albuquerque, NM.

Goal oriented optimal design of infinite-dimensional

Bayesian inverse problems using quadratic approximations

Abstract

We consider goal-oriented optimal design of experiments for infinite-dimensional Bayesian linear inverse problems governed by partial differential equations (PDEs). Specifically, we seek sensor placements that minimize the posterior variance of a prediction or goal quantity of interest. The goal quantity is assumed to be a nonlinear functional of the inversion parameter. We propose a goal-oriented optimal experimental design (OED) approach that uses a quadratic approximation of the goal-functional to define a goal-oriented design criterion. The proposed criterion, which we call the -optimality criterion, is obtained by integrating the posterior variance of the quadratic approximation over the set of likely data. Under the assumption of Gaussian prior and noise models, we derive a closed-form expression for this criterion. To guide development of discretization invariant computational methods, the derivations are performed in an infinite-dimensional Hilbert space setting. Subsequently, we propose efficient and accurate computational methods for computing the -optimality criterion. A greedy approach is used to obtain -optimal sensor placements. We illustrate the proposed approach for two model inverse problems governed by PDEs. Our numerical results demonstrate the effectiveness of the proposed strategy. In particular, the proposed approach outperforms non-goal-oriented (A-optimal) and linearization-based (c-optimal) approaches.

1 Introduction

Inverse problems are common in science and engineering applications. In such problems, we use a model and data to infer uncertain parameters, henceforth called inversion parameters, that are not directly observable. We consider the case where measurement data are collected at a set of sensors. In practice, often only a few sensors can be deployed. Thus, optimal placement of the sensors is critical. Addressing this requires solving an optimal experimental design (OED) problem AtkinsonDonev92 ; Ucinski05 ; Pukelsheim06 .

In some applications, the estimation of the inversion parameter is merely an intermediate step. For example, consider a source inversion problem in a heat transfer application. In such problems, one is often interested in prediction quantities such as the magnitude of the temperature within a region of interest or heat flux through an interface. A more complex example is a wildfire simulation problem, where one may seek to estimate the source of the fire, but the emphasis is on prediction quantities summarizing future states of the system. In such problems, design of experiments should take the prediction/goal quantities of interest into account. Failing to do so might result in sensor placements that do not result in optimal uncertainty reduction in the prediction/goal quantities. This points to the need for a goal-oriented OED approach. This is the subject of this article.

We focus on Bayesian linear inverse problems governed by PDEs with infinite-dimensional parameters. To make matters concrete, we consider the observation model,

| (1.1) |

Here, is a vector of measurement data, is a linear parameter-to-observable map, is the inversion parameter, and is a random variable that models measurement noise. We consider the case where belongs to an infinite-dimensional real separable Hilbert space and is a continuous linear transformation. The inverse problem seeks to estimate using the observation model (1.1). Examples of such problems include source inversion or initial state estimation in linear PDEs. See Section 2, for a brief summary of the requisite background regarding infinite-dimensional Bayesian linear inverse problems and OED for such problems.

We consider the case where solving the inverse problem is an intermediate step and the primary focus is accurate estimation of a scalar-valued prediction quantity characterized by a nonlinear goal-functional,

| (1.2) |

In the present work, we propose a goal-oriented OED approach that seeks to find sensor placements minimizing the posterior uncertainty in such goal-functionals.

Related work. The literature devoted to OED is extensive. Here, we discuss articles that are closely related to the present work. OED for infinite-dimensional Bayesian linear inverse problems has been addressed in several works in the past decade; see e.g., AlexanderianPetraStadlerEtAl14 ; AlexanderianSaibaba18 ; HermanAlexanderianSaibaba20 . Goal-oriented approaches for OED in inverse problems governed by differential equations have appeared in HerzogRiedelUcinski18 ; Li19 ; ButlerJakemanWildey20 . The article HerzogRiedelUcinski18 considers nonlinear problems with nonlinear goal operators. In that article, a goal-oriented OED criterion is obtained using linearization of the goal operator and an approximate (linearization-based) covariance matrix for the inversion parameter. The thesis Li19 considers linear inverse problems with Gaussian prior and noise models, where the goal operator itself is a linear transformation of the inversion parameters. A major focus of that thesis is the study of methods for the combinatorial optimization problem corresponding to optimal sensor placement. The work ButlerJakemanWildey20 considers a stochastic inverse problem formulation, known as data-consistent framework ButlerJakemanWildey18 . This approach, while related, is different from traditional Bayesian inversion. Goal-oriented OED for infinite-dimensional linear inverse problems was studied in AttiaAlexanderianSaibaba18 ; WuChenGhattas23a . These articles consider goal-oriented OED for the case of linear parameter-to-goal mappings.

For the specific class of problems considered in the present work, a traditional approach is to consider a linearization of the goal-functional around a nominal parameter . Considering the posterior variance of this linearized functional leads to a specific form of the well-known c-optimality criterion ChalonerVerdinelli95 . However, a linear approximation does not always provide sufficient accuracy in characterizing the uncertainty in the goal-functional. In such cases, a more accurate approximation to is desirable.

Our approach and contributions. We consider a quadratic approximation of the goal-functional. Thus, is approximated by

| (1.3) |

Following an A-optimal design approach, we consider the posterior variance of the quadratic approximation, . We derive an analytic expression for this variance in the infinite-dimensional setting, in Section 3. Note, however, that this variance expression depends on data , which is not available a priori. To overcome this, we compute the expectation of this variance expression with respect to data. This results in a data-averaged design criterion, which we call the -optimality criterion. Here, indicates the goal-oriented nature of the criterion and indicates the use of a quadratic approximation. The closed-form analytic expression for this criterion is derived in Theorem 3.2.

Subsequently, in Section 4, we present three computational approaches for fast estimation of , relying on Monte Carlo trace estimators, low-rank spectral decompositions, or a low-rank singular value decomposition (SVD) of , respectively. Focusing on problems where the goal functional is defined in terms of PDEs, our methods rely on adjoint-based expressions for the gradient and Hessian of . We demonstrate effectiveness of the proposed goal-oriented approach in a series of computational experiments in Section 5.1 and Section 5.2. The example in Section 5.1 involves inversion of a volume source term in an elliptic PDE with the goal defined as a quadratic functional of the state variable. The example in Section 5.2 concerns a porous medium flow problem with a nonlinear goal functional.

The key contributions of this article are as follows:

-

derivation of a novel goal-oriented design criterion, the -optimality criterion, based on a quadratic approximation of the goal-functional, in an infinite-dimensional Hilbert space setting (see Section 3);

-

efficient computational methods for estimation of the -optimality criterion (see Section 4);

-

extensive computational experiments, demonstrating the importance of goal-oriented OED and effectiveness of the proposed approach (see Section 5).

2 Background

In this section, we discuss the requisite background concepts and notations regarding Bayesian linear inverse problems and OED.

2.1 Bayesian linear inverse problems

The key components of a Bayesian inverse problem are the prior distribution, the data-likelihood, and the posterior distribution. The prior encodes our prior knowledge about the inversion parameter, which we denote by . The likelihood, which incorporates the parameter-to-observable map, describes the conditional distribution of data for a given inversion parameter. Finally, the posterior is a distribution law for that is conditioned on the observed data and is consistent with the prior. These components are related via the Bayes formula Stuart10 . Here, we summarize the process for the case of linear Bayesian inverse problem.

The data likelihood. We consider a bounded linear parameter-to-observable map, . In linear inverse problems governed by PDEs, we define as the composition of a linear PDE solution operator and a linear observation operator , which extracts solution values at a prespecified set of measurement points. Hence, . In the present, work, we consider observation models of the form

| (2.1) |

We assume and are independent, which implies, . This defines the data-likelihood.

Prior. Herein, , where is a bounded domain in two- or three-space dimensions. This space is equipped with the inner product and norm . We consider a Gaussian prior law . To define the prior, we follow the approach in Stuart10 ; Bui-ThanhGhattasMartinEtAl13 . The prior mean is assumed to be a sufficiently regular element of and the prior covariance operator is defined as the inverse of a differential operator. Specifically, let be the mapping , defined by the solution operator of

| (2.2) | ||||

where and are positive constants. Then, the prior covariance is defined as .

Posterior. For a Bayesian linear inverse problem with a Gaussian prior and a Gaussian noise model given by (2.1), it is well-known Stuart10 that the posterior is the Gaussian measure with

| (2.3) |

where denotes the adjoint of . Here, the posterior mean is the maximum a posteriori probability (MAP) point. Also, recall the variational characterization of this MAP point as the unique global minimizer of

| (2.4) |

in the Cameron–Martin space, ; see DashtiStuart17 . The Cameron–Martin space plays a key role in the study of Gaussian measures on Hilbert spaces. In particular, this space is important in the theory of Bayesian inverse problems with Gaussian priors. Here, is the Cameron–Martin norm, .

It can be shown that the Hessian of , denoted by , satisfies . In what follows, the Hessian of data-misfit term in (2.4) will be important. We denote this Hessian by . A closely related operator is the prior-preconditioned data-misfit Hessian,

| (2.5) |

which also plays a key role in the discussions that follow.

Lastly, we remark on the case when the forward operator is affine. This will be the case for inverse problems governed by linear PDEs with inhomogeneous source volume or boundary source terms. The model inverse problem considered in Section 5.2 is an example of such problems. In that case, the forward operator may be represented as the affine map , where is a bounded linear transformation. Under the Gaussian assumption on the prior and noise, the posterior is a Gaussian with the same covariance operator as in (2.3) and with the mean given by .

Discretization. We discretize the inverse problem using the continuous Galerkin finite element method. Consider a nodal finite element basis of compactly supported functions . The discretized inversion parameter is represented as . Following common practice, we identify with the vector of its finite element coefficients, . The discretized inversion parameter space is thus equipped with the mass-weighted inner product . Here, is the finite element mass matrix, , for . Note that this mass-weighted inner product is the discretized inner product. Throughout the article, we use the notation for equipped with the mass-weighted inner product .

We use boldfaced symbols to represent the discretized versions of the operators appearing in the Bayesian inverse problem formulation. For details on obtaining such discretized operators, see Bui-ThanhGhattasMartinEtAl13 . The discretized solution, observation, and forward operators are denoted by , , and , respectively. Similarly, the discretized Hessian is presented as . We denote the discretized prior and posterior covariance operators by and , respectively. Note that and are selfadjoint operators on .

2.2 Classical optimal experimental design

In the present work, an experimental design corresponds to an array of sensors selected from a set of candidate sensor locations, . In a classical OED problem, an experimental design is called optimal if it minimizes a notion of posterior uncertainty in the inversion parameter. This is different from a goal-oriented approach, where we seek designs that minimize the uncertainty in a goal quantity of interest.

To formulate an OED problem, it is helpful to parameterize sensor placements in some manner. A common approach is to assign weights to each sensor in the candidate sensor grid. That is, we assign a weight to each , . This way, a sensor placement is identified with a vector . Each may be restricted to some subset of depending on the optimization scheme. Here, we assume ; a weight of zero means the corresponding sensor is inactive.

The vector of the design weights is incorporated in the Bayesian inverse problem formulation through the data-likelihood Alexanderian21 . This yields a -dependent posterior measure. In particular, the posterior covariance operator is given by

| (2.6) |

There are several classical criteria in the OED literature. One example is the A-optimality criterion, which is defined as the trace of . The corresponding discretized criterion is

| (2.7) |

The A-optimality criterion quantifies the average posterior variance of the inversion parameter field. To define a goal-oriented analogue of the A-optimality criterion, we need to consider the posterior variance of the goal-functional in (1.2). This is discussed in the next section.

3 Goal-oriented OED

In a goal-oriented OED problem, we seek designs that minimize the uncertainty in a goal quantity of interest, which is a function of the inversion parameter . Here, we consider a nonlinear goal-functional

| (3.1) |

In our target applications, evaluating involves solving PDEs. Thus, computing the posterior variance of via sampling can be challenging—a potentially large number of samples might be required. Also, computing an optimal design requires evaluation of the design criterion in every step of an optimization algorithm. Furthermore, generating samples from the posterior requires forward and adjoint PDE solves. Thus, design criteria that require sampling at every step of an optimization algorithm will be computationally inefficient. One approach to developing a computationally tractable goal-oriented OED approach is to replace by a suitable approximation. This leads to the definition of approximate measures of uncertainty in .

We can use local approximations of to derive goal-oriented criteria. This requires an expansion point, which we denote as . A simple choice is to let be the prior mean. Another approach, which might be feasible in some applications, is to assume some initial measurement data is available. This data may be used to compute an initial parameter estimate. Such an initial estimate might not be suitable for prediction purposes, but can be used in place of . The matter of expansion point selection is discussed later in Section 5. For now, is considered to be a fixed element in . In what follows, we assume is twice differentiable and denote

| (3.2) |

A known approach for obtaining an approximate measure of uncertainty in is to consider a linearization of and compute the posterior variance of this linearization. In the present work, this is referred to as the -optimality criterion, denoted by . The is used to indicate goal, and is a reference to linearization. As seen shortly, this -optimality criterion is a specific instance of the Bayesian c-optimality criterion ChalonerVerdinelli95 . Consider the linear approximation of given by

| (3.3) |

The -optimality criterion is

| (3.4) |

It is straightforward to note that

| (3.5) |

where we have used the definition of covariance operator; see (A.1). Letting , we obtain the c-optimality criterion . The variance of the linearized goal is an intuitive and tractable choice for a goal-oriented criterion. However, a linearization might severely underestimate the posterior uncertainty in or be overly sensitive to choice of .

In the present work, we define an OED criterion based on the quadratic Taylor expansion of . This leads to the -optimality criterion mentioned in the introduction. Consider the quadratic approximation,

| (3.6) |

We can compute analytically. This is facilitated by Theorem 3.1 below. The result is well-known in the finite-dimensional setting. In the infinite-dimensional setting, this can be obtained from properties of Gaussian measures on Hilbert spaces, some developments in DaPratoZabczyk02 (cf. Remark 1.2.9., in particular), along with the formula for the expected value of a quadratic form on a Hilbert space AlexanderianGhattasEtAl16 . This approach was used in AlexanderianPetraStadlerEtAl17 to derive the expression for the variance of a second order Taylor expansion, within the context of optimization under uncertainty. However, to our knowledge a direct and standalone proof of Theorem 3.1, which is of independent and of broader interest, does not seem to be available in the literature. Thus, we present a detailed proof of this result in the appendix for completeness.

Theorem 3.1 (Variance of a quadratic functional)

Let be a bounded selfadjoint linear operator on a Hilbert space and let . Consider the quadratic functional given by

| (3.7) |

Let be a Gaussian measure on . Then, we have

Proof

See Appendix A.

We next consider the posterior variance of . Using Theorem 3.1, we obtain

| (3.8) |

Note that this variance expression depends on data , which is not available when solving the OED problem. Indeed, the main point of solving an OED problem is to determine how data should be collected. Hence, we consider the “data-averaged” criterion,

| (3.9) |

Here, and represent expectations with respect to the prior and likelihood, respectively. This uses the information available in the Bayesian inverse problem formulation to compute the expected value of over the set of likely data. In the general case of nonlinear inverse problems, such averaged criteria are computed via sample averaging AlexanderianPetraStadlerEtAl16 ; Alexanderian21 . However, in the present setting, exploiting the linearity of the parameter-to-observable map and the Gaussian assumption on prior and noise models, we can compute analytically. This is the main result of this section and presented in the following theorem.

Theorem 3.2 (Goal-oriented criterion)

Let be as defined in (3.9). Then,

| (3.10) |

Proof

See Appendix B.

We call in (3.10) the -optimality criterion. Proving Theorem 3.2 involves three main steps. In the first step, the variance of the quadratic approximation of is calculated using Theorem 3.1. This results in (3.8). In the second step, we need to compute the nested expectations in (3.9). Calculating these moments requires obtaining the expectations of linear and quadratic forms with respect to the data-likelihood and prior laws. The derivations rely on facts about measures on Hilbert spaces. Subsequently, using properties of traces of Hilbert space operators, the definitions of the constructs in the inverse problem formulation, and some detailed manipulations, we derive (3.10). See Appendix B, for details.

4 Computational Methods

Computing the -optimality criterion (3.10) requires computing traces of high-dimensional and expensive to apply operators, which is a computational challenge. To establish a flexible computational framework, in this section, we present three different algorithms for fast estimation of the -optimality criterion. In Section 4.1, we present an approach based on randomized trace estimators. Then, we present an algorithm that uses the low-rank spectral decomposition of the prior-preconditioned data misfit Hessian in Section 4.2. Finally, in Section 4.3, we present an approach that uses the low-rank SVD of the prior-preconditioned forward operator. In each case, we rely on structure exploiting methods to obtain scalable algorithms.

Before presenting these methods, we briefly discuss the discretization of the -optimality criterion. In addition to the discretized operators presented in Section 2.1, we require access to the discretized goal functional, denoted as , and its derivatives. In what follows, we denote

| (4.1) |

The discretized -optimality criterion is given by

| (4.2) |

Similarly, discretizing the -optimality criterion , presented in (3.4), yields

| (4.3) |

4.1 A randomized algorithm

In large-scale inverse problems, it is expensive to build the forward operator, the prior and posterior covariance operators, or the Hessian of the goal-functional, in (4.1). Therefore, matrix-free methods that only require applications of these operators on vectors are essential. A key challenge here is computation of the traces in (4.2). In this section, we present an approach for computing that relies on randomized trace estimation Avron2011 . As noted in AlexanderianPetraStadlerEtAl14 , the trace of a linear operator on can be approximated via

| (4.4) |

This is known as a Monte Carlo trace estimator. The number of the required trace estimator vectors is problem-dependent. However, in practice, often a modest (in order of tens) is sufficient for the purpose of optimization.

We use Monte Carlo estimators to approximate the trace terms in (4.2). In particular, we use

where we have exploited the fact that and are selfadjoint with respect to the mass-weighted inner product . Thus, letting

we can estimate by

| (4.5) |

This enables a computationally tractable approach for approximating . We outline the procedure for computing in Algorithm 1. The computational cost of this approach is discussed in Section 4.4.

The utility of methods based on Monte Carlo trace estimators in the context of OED for large-scale inverse problems has been demonstrated in previous studies such as HaberHoreshTenorio08 ; HaberMagnantLuceroEtAl12 ; AlexanderianPetraStadlerEtAl14 . A key advantage of the present approach is its simplicity. However, further accuracy and efficiency can be attained by exploiting the low-rank structures embedded in the inverse problem. This is discussed in the next section.

4.2 Algorithm based on low-rank spectral decomposition of

Here we present a structure-aware algorithm for estimating the -optimality criterion that exploits low-rank components within the inverse problem. Namely, we leverage the often present low-rank structure in the (discretized) prior-preconditioned data misfit Hessian, .

Let us denote

Note that the posterior covariance operator can be represented as

| (4.6) |

As shown in Bui-ThanhGhattasMartinEtAl13 , we can obtain a computationally tractable approximation of using a low-rank representation of . Let be the dominant eigenpairs of . We use

where and . Now, define and . We can approximate using the Sherman-Woodbury-Morrison formula,

| (4.7) |

Substituting by in (4.6), yields the approximation

| (4.8) |

Subsequently, the -optimality criterion (4.2) is approximated by

| (4.9) |

The following result provides a convenient expression for computing .

Proposition 1

Let be as in (4.9). Then,

| (4.10) |

Proof

See Appendix C.

Note that the second term in (4.10), , is a constant that does not depend on the experimental design (sensor placement). Therefore, when seeking to optimize as a function of , we can neglect that constant term and focus instead on minimizing the functional

| (4.11) |

The spectral approach for estimating the -optimality criterion is outlined in Algorithm 2.

Note that the approximate posterior covariance operator can be used to estimate the classical A-optimality criterion as well. Namely, we can use . Since is independent of the experimental design, A-optimal designs can be obtained by minimizing

| (4.12) |

Furthermore, the present spectral approach can also be used for fast computation of the -optimality criterion. In particular, it is straightforward to note that -optimal designs can be computed by minimizing

This is accomplished by substituting into the discretized -optimality criterion, given by (4.3), and performing some basic manipulations.

4.3 An approach based on low-rank SVD of

In this section, we present an algorithm for estimating the -optimality criterion that relies on computing a low-rank SVD of prior-preconditioned forward operator. This approach relies on the specific form of the -dependent posterior covariance operator; see (2.7).

Before outlining our approach, we make the additional definitions

| (4.13) |

The following result enables a tractable representation for the -optimality criterion.

Proof

See Appendix D.

Using Proposition 2, we can state the -optimality criterion in (4.2) as

| (4.14) |

Note that the second term is independent of the design weights . Thus, we can ignore this term when minimizing . In that case, we focus on

| (4.15) |

Computing the first term requires applications of to vectors. We note,

This only requires a linear solve in the measurement space, when computing . Once a low-rank SVD of is available, this can be done without performing any PDE solves. The trace term in (4.15) can also be computed efficiently. First, we build

at the cost of applications of to vectors. The remaining part of computing does not require any PDE solves. Let denote the Euclidean inner product and the standard basis in . We have

| (4.16) |

Computing this expression requires calculating , for , which amounts to building . We are now in a position to present and an algorithm for computing the -optimality criterion using a low-rank SVD of . This is summarized in Algorithm 3.

.

4.4 Computational cost

Here, we discuss the computational cost of the three algorithms presented above. We measure complexity in terms of applications of the operators and , , and . Note that and correspond to forward and adjoint PDE solves. First we highlight the key computational considerations for each algorithm.

- Computing :

-

The bottleneck in evaluating is the need for applications of and applications of . We assume that a Krylov iterative method is used to apply to vectors, requiring iterations. In the present setting, is determined by the numerical rank of the prior-preconditioned data-misfit Hessian. Thus, each application of requires forward and adjoint solves.

- Computing :

- Computing :

-

This algorithm requires a low-rank SVD of computed up-front. This can be done using a Krylov iterative method or randomized SVD HalkoMartinssonTropp11 . In this case, a rank approximation costs applications of and . This algorithm also requires applications of .

For readers’ convenience, we summarize the computational complexity of the methods in Table 1.

| Algorithm | / | |

|---|---|---|

| randomized | ||

| spectral | ||

| low-rank SVD | - |

A few remarks are in order. Algorithm 2 and Algorithm 3 are more accurate than the randomized approach in Algorithm 1. When deciding between the spectral and low-rank SVD algorithms, several considerations must be accounted for. First, we note that the spectral approach is particularly cheap when the size of the desired design, i.e., the number of active sensors, is small. If the design vector is such that , then . Thus, we only require the computation of the leading eigenvalues of . The low-rank SVD approach is advantageous in the case when the forward model is expensive and applications of are relatively cheap. This is due to the fact that no forward or adjoint solves are required in Algorithm 3, after precomputing the low-rank SVD of . However, the number of applications of is fixed at , where is the number of candidate sensor locations. Lastly, we observe that all algorithms given in Section 4 may be modified to incorporate the low-rank approximation of . Implementation of this is problem specific. Thus, methods presented are agnostic to the structure of

5 Computational experiments

In this section we consider two numerical examples. The first one, concerns goal-oriented OED where the goal-functional is a quadratic functional. In that case, the second order Taylor expansion provides an exact representation of the goal-functional. That example is used to provide an intuitive illustration of the proposed strategy; see Section 5.1. In the second example, discussed in Section 5.2, the goal-functional is nonlinear. In that case, we consider the inversion of a source term in a pressure equation, and the goal-functional is defined in terms of the solution of a second PDE, modeling diffusion and transport of a substance. That example enables testing different aspects of the proposed framework and demonstrating its effectiveness. In particular, we demonstrate the superiority of the proposed -optimality framework over the -optimality and classical A-optimality approaches, in terms of reducing uncertainty in the goal.

5.1 Model problem with a quadratic goal functional

Below, we first describe the model inverse problem under study and the goal-functional. Subsequently, we present our computational results.

5.1.1 Model and goal

We consider the estimation of the source term in the following stationary advection-diffusion equation:

| (5.1) | |||||

The goal-functional is defined as the norm of the solution to (5.1), restricted to a subdomain . To this end, we consider the restriction operator

and define the goal-functional by

Recalling that is the solution operator to (5.1), we can equivalently describe the goal as

| (5.2) |

5.1.2 The inverse problem

In (5.1), we take the diffusion constant to be and velocity as . Additionally, we let be the union of the left and top edges of and the union of the right and bottom edges.

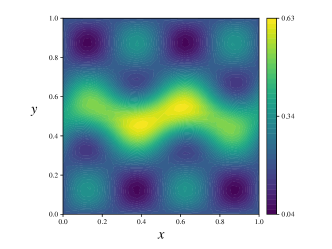

In the present experiment, we use a ground truth parameter , defined as the sum of two Gaussian-like functions to generate a data vector . We depict our choice of and the corresponding state solution in Figure 1. Note that in Figure 1 (right), we also depict our choice of the subdomain for the present example. Additionally, the noise variance is set to . This results in a roughly noise-level. As for the prior, we select the prior mean as and use in (2.2). As an illustration, we visualize the MAP-point and several posterior samples in Figure 2.

For all numerical experiments in this paper, we use a continuous Galerkin finite element discretization with piecewise linear nodal basis functions and spatial grid points. Regarding the experimental setup, we use candidate sensor locations distributed uniformly across the domain. Implementations in the present work are conducted in python and finite element discretization is performed with FEniCS fenics2015 .

5.1.3 Optimal design and uncertainty

In what follows, we choose the spectral method for computing the classical and goal-oriented design criteria, due to its accuracy and computational efficiency. A-optimal designs are obtained by minimizing (4.12). As for the -optimality criterion, we implement the spectral algorithm as outlined in Algorithm 2. Let be the discretized version of operator in (5.2). In the context of this problem, the -optimality criterion, resulting from (4.11), is

| (5.3) |

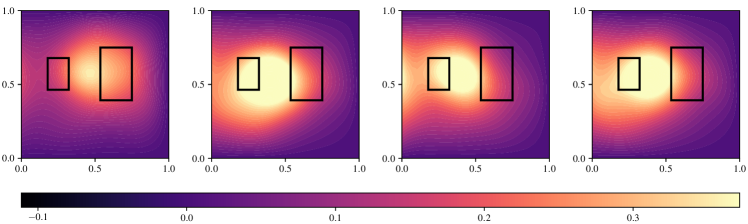

Both classical A-optimal and goal-oriented -optimal designs are obtained with the greedy algorithm. As a first illustration, we plot both types of designs over the state solution ; see Figure 3. Note that for the -optimal designs, we overlay the subdomain , used in the definition of the goal-functional in (5.2). In Figure 3, we observe that the classical designs tend to spread over the domain, while the -optimal designs cluster around the subdomain . However, while the goal-oriented sensor placements prefer the subdomain, sensors are not exclusively placed within this region.

We next illustrate the effectiveness of the -optimal designs in reducing the uncertainty in the goal-functional, as compared to A-optimal designs. In the left column of Figure 4, we consider posterior uncertainty in the goal-functional (top) and the inversion parameter (bottom) when using classical designs with sensors. Uncertainty in the goal functional is quantified by inverting on a given design, then propagating posterior samples through the goal functional. We refer to the computed probability density function of the goal values as a goal-density. Analogous results are reported in the right column, when using goal-oriented designs. Here, the posterior distribution corresponding to each design is obtained by solving the Bayesian inverse problem, where we use data synthesized using the ground-truth parameter.

We observe that the -optimal designs are far more effective in reducing posterior uncertainty in the goal-functional. The bottom row of the figure reveals that the goal-oriented designs are more effective in reducing posterior uncertainty in the inversion parameter in and around the subdomain . On the other hand, the classical designs, being agnostic to the goal functional, attempt to reduce uncertainty in the inversion parameter across the domain. While this is intuitive, we point out that the nature of the goal-oriented sensor placements are not always obvious. Note that for the -optimal design reported in Figure 4 (bottom-right), a sensor is placed near the right boundary. This implies that reducing uncertainty in the inversion parameter around this location is important for reducing the uncertainty in the goal-functional. In general, sensor placements are influenced by physical parameters such as the velocity field, modeling assumptions such as boundary conditions, as well as the definition of the goal-functional.

To provide further insight, we next consider classical and goal-oriented designs with varying number of sensors. Specifically, we plot the corresponding goal-densities against each other in Figure 5, as the size of the designs increases. We observe that the densities corresponding to the -optimal designs have a smaller spread and are closer to the true goal value, when compared to the densities obtained using the A-optimal designs. This provides further evidence that our goal-oriented OED framework is more effective in reducing uncertainty in the goal-functional when compared to the classical design approach.

Next, we compare the effectiveness of classical and goal-oriented designs in terms of reducing the posterior variance in the goal-functional. Note that Theorem 3.1 provides an analytic formula for the variance of the goal with respect to a given Gaussian measure. Here, for a vector of design weights, we obtain the MAP point by solving the inverse problem using data corresponding to the active sensors. Then, compute the posterior variance of the goal via

| (5.4) |

We compute , i.e., the goal standard deviation, for designs corresponding to the A-optimal and -optimal designs. Additionally, we generate random weight vectors for each and compute the resulting values of . The results of this numerical experiment are presented in Figure 6. We first observe that the goal standard deviations corresponding to the -optimal designs are considerably smaller than the values for the A-optimal approach. Furthermore, both classical and goal-oriented methods out-perform the random designs in terms of uncertainty reduction. Also, note the large spread in the goal standard deviations, when using random designs.

5.2 Model problem with a nonlinear goal functional

In this section, we consider an example where the goal-functional depends nonlinearly on the inversion parameter.

5.2.1 Models and goal

We consider a simplified model for the flow of a tracer through a porous medium that is saturated with a fluid. Assuming a Darcy flow model, the system is governed by the PDEs modeling fluid pressure and tracer concentration . The pressure equation is given by

| (5.5) | |||||

Here, denotes the permeability field. The transport of the tracer is modeled by the following steady advection diffusion equation.

| (5.6) | |||||

In this equation , is a diffusion constant and is a source term. Note that the velocity field in the transport equation is defined by the Darcy velocity .

In the present example, the source term in (5.5) is an inversion parameter that we seek to estimate using sensor measurements of the pressure . Thus, the inverse problem is governed by the pressure equation (5.5), which we call the inversion model from now on. We obtain a posterior distribution for by solving this inverse problem. This, in turn, dictates the distribution law for the pressure field . Consequently, the uncertainty in propagates into the transport equation through the advection term in (5.5).

We define the goal-functional by

| (5.7) |

where is a subdomain of interest, and is the indicator function of this set. Note that evaluating the goal-functional requires solving the pressure equation (5.5), followed by solving the transport equation (5.6). In what follows, we call (5.6) the prediction model.

Here, the domain is chosen to be the unit square. In (5.5), we set as the right boundary and as the left boundary. Additionally, is selected as the union of the top and bottom edges of . The permeability field simulates a channel or pocket of higher permeability, oriented left-to-tight across . We display this field in Figure 7 (top-left).

As for the prediction model, we take to be the union of the top, bottom, and right edges of , and as the left edge. The source in (5.6) is a single Gaussian-like function, shown in Figure 8, and the diffusion constant is set to . Moreover, is given by

We require a ground-truth inversion parameter for data generation. This is selected as the sum of two Gaussian-like functions, oriented asymmetrically; see Figure 7 (top-right). For the inverse problem, we set the noise variance to , resulting in approximately noise. The prior mean is set to the constant function . The prior covariance operator is defined according to (2.2) with . We use finite element grid points and equally-spaced candidate sensors.

We depict the pressure field corresponding to the true parameter along with the Darcy velocity, in Figure 7 (bottom-left). The MAP point obtained by solving the inverse problem using all sensors is reported in Figure 7 (bottom-right).

Recall that the goal-functional is formed by integrating over , shown in Figure 9. To illustrate the dependence of on the inversion parameter, we plot where is sampled from the posterior distribution. In particular, we generate a random design of size , collect data on this design, then retrieve a posterior distribution via inversion. Figure 9 shows corresponding to posterior samples. We overlay the subdomain , used to define .

Note that due to the small amount of data used for solving the inverse problem, there is considerable variation in realizations of the concentration field.

5.2.2 Optimal designs and uncertainty

To compute -optimal designs, we need to minimize the discretized goal-oriented criterion (4.2). The definition of this criterion requires the first and second order derivatives of the goal-functional, as well as an expansion point. We provide the derivation of the gradient and Hessian of for the present example, in a function space setting, in Appendix E. As for the expansion point, we experiment with using the prior mean as well as prior samples for . The numerical tests that follow include testing the effectiveness of the -optimal designs compared to A-optimal ones, as well as comparisons of designs obtained by minimizing the -optimality criterion. As before, we utilize the spectral method, outlined in Section 4.2, to estimate both classical and goal-oriented criteria.

Figure 10 compares A-optimal and -optimal designs. Here, we use as the expansion point to form the -optimality criterion. Note that, unlike the study in Section 5.1, the sensors corresponding to the goal-oriented designs do not necessarily accumulate around . This indicates the non-trivial nature of such sensor placements and pitfalls of following an intuitive approach of placing sensors within .

Next, we examine the effectiveness of the -optimal designs in comparison to A-optimal ones. We use a prior sample as expansion point for the -optimality criterion. Figure 11 presents a pairwise comparison of the goal-functional densities obtained by solving the Bayesian inverse problem with goal-oriented and classical design of various sizes.

We observe that the densities corresponding to goal-oriented -optimal designs have a smaller spread and tend to be closer to the true goal value in comparison to densities obtained using classical A-optimal designs. This provides further evidence that the proposed framework is more effective than the classical approach in reducing the uncertainty in the goal-functional.

5.2.3 Comparing goal-oriented OED approaches

To gain insight on the -optimality and -optimality approaches, we report designs corresponding to these schemes in Figure 12. Both goal-oriented criteria are built using a prior sample for expansion point.

We note that the -optimal and -optimal designs behave similarly. This is most evident for the optimal designs of size . To provide a quantitative comparison of these two sensor placement strategies, we report goal-functional densities corresponding to -optimal and -optimal designs in Figure 13. We use the same prior sample as the expansion point. Overall, we note that the -optimal designs are more effective in reducing the uncertainty in the goal-functional compared to -optimal designs.

So far, our numerical tests correspond to comparisons with a single expansion point, being either the prior mean or a sample from the prior. To understand how results vary for different expansion points, we conduct a numerical experiment with multiple expansion points. The set of expansion points used in the following demonstration consists of the prior mean and prior samples. This study enables a thorough comparison of the proposed -optimality framework against the classical A-optimal and goal-oriented -optimality approaches.

We use the prior mean and prior samples as expansion points. These points are used to form -optimality and 21 -optimality criteria. For each of the 21 expansion points, we obtain -optimal and -optimal optimal designs of size . This results in 42 posterior distributions corresponding to each of the considered values of . To compare the performance of the two goal-oriented approaches, we consider a normalized notion of the posterior uncertainty in the goal-functional in each case. Specifically, we consider coefficient of variation (CV) of the goal-functional:

where and indicate variance and expectation with respect to the posterior distribution. We estimate the CV empirically.

For each , we obtain 21 CV values for the goal-functional corresponding to -optimal designs and 21 CV values for the goal-functional corresponding to -optimal designs. We also compute the classical A-optimal design for each . The results are summarized in Figure 14. For each , we report the CV corresponding to the A-optimal design of size and pairwise box plots depicting the distribution of the CVs for the computed goal-oriented designs of size . It is clear from Figure 14 that, on average, both goal-oriented designs produce smaller CVs than the classical approach. Furthermore, -optimal designs reduce CV the most. Additionally, we notice that choice of expansion point matters significantly for the goal-oriented schemes, especially for the -optimal designs. This is highlighted by considering the case, where there is a high variance in the CVs. To illustrate this further, we isolate a subset of the design sizes and report the statistical outliers in the CV data in addition to the box plots; see Figure 15.

Overall, the numerical tests paint a consistent picture: (i) both types of goal-oriented designs outperform the classical designs; (ii) compared to -optimal designs, the -optimal designs are more effective in reducing uncertainty in the goal functional; and (iii) compared to the -optimal designs, -optimal designs show greater sensitivity to the choice of the expansion point.

6 Conclusion

In the present work, we developed a mathematical and computational framework for goal-oriented optimal of infinite-dimensional Bayesian linear inverse problems governed by PDEs. The focus is on the case where the quantity of interest defining the goal is a nonlinear functional of the inversion parameter. Our framework is based on minimizing the expected posterior variance of the quadratic approximation to the goal-functional. We refer to this as the -optimality criterion. We demonstrated that this strategy outperforms classical OED, as well as c-optimal experimental design (which is based on linearization of the goal-functional), in reducing the uncertainty in the goal-functional. Additionally, the cost of our methods, measured in number of PDEs solves, are independent of the dimension of the discretized inversion parameter.

Several avenues of interest for future investigations exist on both theoretical and computational fronts. For one thing, it is natural to consider the case when the inverse problem is nonlinear. Clearly, the resulting methods would expand the application space of our goal-oriented framework. A starting point for addressing goal-oriented optimal design of nonlinear inverse problems is to consider a linearization of the parameter-to-observable mapping, resulting in locally optimal goal-oriented designs. A related approach is to use a Laplace approximation to the posterior, as is common in optimal design of infinite-dimensional inverse problems. Cases of inverse problems with potentially multi-modal designs might demand more costly strategies based on sampling. It would be interesting to investigate suitable importance sampling schemes in such contexts, for efficient evaluation of the -optimality criterion.

A complementary perspective on identifying measurements that are informative to the goal-functional is a post-optimality sensitivity analysis approach. This idea was used in SunseriHartVanBloemenWaandersAlexanderian20 to identify measurements that are most influential to the solution of a deterministic inverse problem. Such ideas were extended to cases of Bayesian inverse problems governed by PDEs in SunseriAlexanderianHartEtAl24 ; ChowdharyTongStadlerEtAl24 . This approach can also be used in a goal-oriented manner. Namely, one can consider the sensitivity of measures of uncertainty in the goal-functional to different measurements to identify informative experiments. This is particularly attractive in the case of nonlinear inverse problems governed by PDEs.

Another important line of inquiry is to investigate goal-oriented criteria defined in terms of quantities other than the posterior variance. For example, one can seek designs that maximize information gain regarding the goal-functional or optimizing inference of the tail behavior of the goal-functional. A yet another potential avenue of further investigations is considering relaxation strategies to replace the binary goal-oriented optimization problem with a continuous optimization problem, for which powerful gradient-based optimization methods maybe deployed.

Acknowledgments

This article has been authored by employees of National Technology & Engineering Solutions of Sandia, LLC under Contract No. DE-NA0003525 with the U.S. Department of Energy (DOE). The employees own all right, title and interest in and to the article and are solely responsible for its contents. SAND2024-15167O.

This material is also based upon work supported by the U.S. Department of Energy, Office of Science, Office of Advanced Scientific Computing Research Field Work Proposal Number 23-02526.

References

- [1] A. Alexanderian. Optimal experimental design for infinite-dimensional Bayesian inverse problems governed by PDEs: A review. Inverse Problems, 37(4), 2021.

- [2] A. Alexanderian, P. J. Gloor, and O. Ghattas. On Bayesian A- and D-Optimal Experimental Designs in Infinite Dimensions. Bayesian Analysis, 11(3), 2016.

- [3] A. Alexanderian, N. Petra, G. Stadler, and O. Ghattas. A-optimal design of experiments for infinite-dimensional Bayesian linear inverse problems with regularized -sparsification. SIAM Journal Scientific Computing, 36(5):A2122–A2148, 2014.

- [4] A. Alexanderian, N. Petra, G. Stadler, and O. Ghattas. A fast and scalable method for A-optimal design of experiments for infinite-dimensional Bayesian nonlinear inverse problems. SIAM Journal Scientific Computing, 38(1):A243–A272, 2016.

- [5] A. Alexanderian, N. Petra, G. Stadler, and O. Ghattas. Mean-variance risk-averse optimal control of systems governed by PDEs with random parameter fields using quadratic approximations. SIAM/ASA Journal on Uncertainty Quantification, 5(1):1166–1192, 2017.

- [6] A. Alexanderian and A. K. Saibaba. Efficient D-optimal design of experiments for infinite-dimensional Bayesian linear inverse problems. SIAM Journal Scientific Computing, 40(5):A2956–A2985, 2018.

- [7] M. Alnæs, J. Blechta, J. Hake, A. Johansson, B. Kehlet, A. Logg, C. Richardson, J. Ring, M. E. Rognes, and G. N. Wells. The fenics project version 1.5. Archive of numerical software, 3(100), 2015.

- [8] A. C. Atkinson and A. N. Donev. Optimum Experimental Designs. Oxford, 1992.

- [9] A. Attia, A. Alexanderian, and A. K. Saibaba. Goal-oriented optimal design of experiments for large-scale Bayesian linear inverse problems. Inverse Problems, 34(9):095009, 2018.

- [10] H. Avron and S. Toledo. Randomized algorithms for estimating the trace of an implicit symmetric positive semi-definite matrix. Journal of the ACM, 58(2):34, 2011.

- [11] T. Bui-Thanh, O. Ghattas, J. Martin, and G. Stadler. A computational framework for infinite-dimensional Bayesian inverse problems. Part I: The linearized case, with application to global seismic inversion. SIAM Journal on Scientific Computing, 35(6):A2494–A2523, 2013.

- [12] T. Butler, J. Jakeman, and T. Wildey. Combining push-forward measures and Bayes’ rule to construct consistent solutions to stochastic inverse problems. SIAM Journal on Scientific Computing, 40(2):A984–A1011, 2018.

- [13] T. Butler, J. D. Jakeman, and T. Wildey. Optimal experimental design for prediction based on push-forward probability measures. Journal of Computational Physics, 416:109518, 2020.

- [14] K. Chaloner and I. Verdinelli. Bayesian experimental design: A review. Statistical Science, 10(3):273–304, 1995.

- [15] A. Chowdhary, S. Tong, G. Stadler, and A. Alexanderian. Sensitivity analysis of the information gain in infinite-dimensional Bayesian linear inverse problems. International Journal for Uncertainty Quantification, 14(6), 2024.

- [16] G. Da Prato. An introduction to infinite-dimensional analysis. Springer, 2006.

- [17] G. Da Prato and J. Zabczyk. Second-order partial differential equations in Hilbert spaces. Cambridge University Press, 2002.

- [18] M. Dashti and A. M. Stuart. The Bayesian approach to inverse problems. In R. Ghanem, D. Higdon, and H. Owhadi, editors, Handbook of Uncertainty Quantification, pages 311–428. Spinger, 2017.

- [19] E. Haber, L. Horesh, and L. Tenorio. Numerical methods for experimental design of large-scale linear ill-posed inverse problems. Inverse Problems, 24(055012):125–137, 2008.

- [20] E. Haber, Z. Magnant, C. Lucero, and L. Tenorio. Numerical methods for A-optimal designs with a sparsity constraint for ill-posed inverse problems. Computational Optimization and Applications, pages 1–22, 2012.

- [21] N. Halko, P.-G. Martinsson, and J. A. Tropp. Finding structure with randomness: Probabilistic algorithms for constructing approximate matrix decompositions. SIAM Review, 53(2):217–288, 2011.

- [22] E. Herman, A. Alexanderian, and A. K. Saibaba. Randomization and reweighted -minimization for A-optimal design of linear inverse problems. SIAM Journal on Scientific Computing, 42(3):A1714–A1740, 2020.

- [23] R. Herzog, I. Riedel, and D. Uciński. Optimal sensor placement for joint parameter and state estimation problems in large-scale dynamical systems with applications to thermo-mechanics. Optimization and Engineering, 19:591–627, 2018.

- [24] B. Holmquist. Moments and cumulants of the multivariate normal distribution. Stochastic Analysis and Applications, 6(3):273–278, 1988.

- [25] F. Li. A combinatorial approach to goal-oriented optimal Bayesian experimental design. PhD thesis, Massachusetts Institute of Technology, 2019.

- [26] F. Pukelsheim. Optimal design of experiments. SIAM, 2006.

- [27] A. M. Stuart. Inverse problems: A Bayesian perspective. Acta Numerica, 19:451–559, 2010.

- [28] I. Sunseri, A. Alexanderian, J. Hart, and B. van Bloemen Waanders. Hyper-differential sensitivity analysis for nonlinear Bayesian inverse problems. International Journal for Uncertainty Quantification, 14(2), 2024.

- [29] I. Sunseri, J. Hart, B. van Bloemen Waanders, and A. Alexanderian. Hyper-differential sensitivity analysis for inverse problems constrained by partial differential equations. Inverse Problems, 2020.

- [30] K. Triantafyllopoulos. Moments and cumulants of the multivariate real and complex gaussian distributions, 2002.

- [31] F. Tröltzsch. Optimal Control of Partial Differential Equations: Theory, Methods and Applications, volume 112 of Graduate Studies in Mathematics. American Mathematical Society, 2010.

- [32] D. Uciński. Optimal measurement methods for distributed parameter system identification. CRC Press, Boca Raton, 2005.

- [33] U. Villa, N. Petra, and O. Ghattas. hIPPYlib: An extensible software framework for large-scale inverse problems governed by PDEs: Part I: Deterministic inversion and linearized Bayesian inference. ACM Transactions on Mathematical Software (TOMS), 47(2):1–34, 2021.

- [34] C. S. Withers. The moments of the multivariate normal. Bulletin of the Australian Mathematical Society, 32(1):103–107, 1985.

- [35] K. Wu, P. Chen, and O. Ghattas. An offline-online decomposition method for efficient linear Bayesian goal-oriented optimal experimental design: Application to optimal sensor placement. SIAM Journal on Scientific Computing, 45(1):B57–B77, 2023.

Appendix A Proof of Theorem 3.1

We first recall some notations and definitions regarding Gaussian measures on Hilbert spaces. Recall that for a Gaussian measure on a real separable Hilbert space the mean satisfies,

Moreover, is a positive self-adjoint trace class operator that satisfies,

| (A.1) |

For further details, see [16, Section 1.4]. We assume that is strictly positive. In what follows, we let be the complete orthonormal set of eigenvectors of and the corresponding (real and positive) eigenvalues.

Consider the probability space , where is the Borel -algebra on . For a fixed , the linear functional

considered as a random variable , is a Gaussian random variable with mean and variance . More generally, for in , the random -vector given by is an -variate Gaussian whose distribution law is ,

| (A.2) |

The arguments in this appendix rely heavily on the standard approach of using finite-dimensional projections to facilitate computation of Gaussian integrals. As such we also need some basic background results regarding Gaussian random vectors. In particular, we need the following result [34, 24, 30].

Lemma 1

Suppose is an -variate Gaussian random variable. Then, for , and in ,

-

(a)

; and

-

(b)

.

The following technical result is useful in what follows.

Lemma 2

Let be a bounded selfadjoint linear operator on a Hilbert space , and let be a Gaussian measure on . We have

-

(a)

, for all and in ;

-

(b)

, for all ; and

-

(c)

.

Proof

The first statement follows immediately from (A.1). We consider (b) next. For , we define the projector in terms of the eigenvectors of ,

Note that defined by has an -variate Gaussian law, , with

where is the Kronecker delta. We next consider,

Note that for each ,

The last step follows from Lemma 1(a). Furthermore, , for all . Therefore, since , by the Dominated Convergence Theorem,

We next consider the third statement of the lemma. The approach is similar to the proof of part (b). We note that

As in the case of above, we can easily bound . Specifically, , for all . Note also that . Therefore, by the Dominated Convergence Theorem,

| (A.3) |

Next, note that for each ,

| (A.4) |

where we have used Lemma 1(b) in the final step. Let us consider each of the three terms in (A.4). We note,

| (A.5) |

Before, we consider the second and third terms in (A.4), we note

Next, we note

| (A.6) |

as . A similar argument shows,

| (A.7) |

Hence, combining (A.3)–(A.7), we obtain

which completes the proof of the lemma.

The following result extends Lemma 2 to the case of non-centered Gaussian measures.

Lemma 3

Let be a bounded selfadjoint linear operator on a real Hilbert space , and let be a Gaussian measure on .

-

(a)

, for all and in ;

-

(b)

for all ; and

-

(c)

.

Proof

These identities follow from Lemma 2 and some basic manipulations. For brevity, we only prove the third statement. The other two can be derived similarly. Using Lemma 2(c),

Subsequently, we solve for . To do this, we require the formula for the expected value of a quadratic form on a Hilbert space (see [2, Lemma 1]) and items (a) and (b) of the present lemma. Performing the calculation, we arrive at

We now have all the tools required to prove Theorem 3.1.

Appendix B Proof of Theorem 3.2

Proof (Proof of Theorem 3.2)

We begin with the following definitions

| (B.1) |

These components enable expressing as

Note that the variance of does not depend on . We can apply Theorem 3.1 to obtain an expression for the variance of in relation to :

| (B.2) |

Next, we compute the remaining expectations in (3.9). However, this will require some manipulation of the formula for . We view the MAP point, given in (2.3), as an affine transformation on data . Thus,

| (B.3) |

Using this representation of , (B.2) becomes

Now the variance expression is in a form suitable for calculating the final moments. Recalling the definition of the likelihood measure, we find that

Computing the outer expectation with respect to the prior measure yields,

| (B.4) | ||||

The remainder of the proof involves the acquisition of a meaningful representation of . Our first step towards simplification requires substituting the components and of , given by (B.3), into (B.4). We follow this by recognizing occurrences of in the resulting expression. Performing these operations, we have that

| () | ||||

| () | ||||

| () | ||||

| () | ||||

| () | ||||

| () |

To facilitate the derivations that follow, we have assigned a label to each of the summands, in the above equation. We refer to , and as product terms and call , , and the trace terms.

Let us consider the product terms. Note that

| () | ||||

| () | ||||

| () | ||||

| () | ||||

| () | ||||

| () |

Using the identity , it follows that

Similarly, splitting and using that is selfadjoint,

Finally, we finish the simplification of the product terms by combining previous calculations with the remaining term ,

| (B.5) | ||||

Lastly, we turn our attention to the trace terms. Combining the first two trace terms and manipulating,

Adding in the remaining term , the sum of the trace terms is computed to be

| (B.6) | ||||

Appendix C Proof of Proposition 1

Proof

Proving the proposition is equivalent to manipulating the trace terms in (4.9). Recall that , with , as in (4.7). We then claim that

| (C.1) | ||||

| (C.2) |

This result follows from repeated applications of the cyclic property of the trace. We show the proof of the second equality and omit the first one for brevity. Using the definition of ,

Here, we have also used the facts and , for , and in . Substituting (C.1) and (C.2) into (4.9), we arrive at the desired representation of .

Appendix D Proof of Proposition 2

Proof

We begin by proving (a). Considering , we note that

Thus, , and the Sherman–Morrison–Woodbury identity provides that

Therefore,

Parts (b) and (c) follow from some algebraic manipulations and using the identity for .

Appendix E Gradient and Hessian of goal as in Section 5.2.1

We obtain the adjoint-based expressions for the gradient and Hessian of following a formal Lagrange approach. This is accomplished by forming weak representations of the inversion model (5.5) and prediction model (5.6) and formulating a Lagrangian functional constraining the goal to these forms. In what follows, we denote

We next discuss the weak formulations of the inversion and prediction models. The weak form of the inversion model is

Similarly, the weak formulation of the prediction model is

We constrain the goal-functional to these weak forms, arriving at the Lagrangian

| (E.1) |

Here, and are the Lagrange multipliers. This Lagrangian facilitates computing the derivative of the goal functional with respect to the inversion parameter .

Gradient. The gradient expression is derived using the formal Lagrange approach [31]. Namely, the Gâteaux derivative of at , and in a direction , satisfies

| (E.2) |

provided the variations of the Lagrangian with respect to the remaining arguments vanish. Here is shorthand for

Thus, an evaluation of the gradient requires solving the following system,

| (E.3) |

along all test functions in the respective test function spaces. The equations are solved in the order presented in (E.3). It can be shown that the weak form of the inversion model is equivalent to , similarly the prediction model weak form is equivalent to . These are referred to as the state equations. We refer to and as the adjoint equations. The variations required to form the gradient system are

Hessian. We compute the action of the Hessian using a formal Lagrange approach as well. This is facilitated by formulating a meta-Lagrangian functional; for a discussion of this approach, see, e.g., [33]. The meta-Lagrangian is

| (E.4) | ||||

where , and are additional Lagrange multipliers. Equating variations of with respect to , and to zero returns the gradient system. To apply the Hessian of at to (in the direction), we must solve the gradient system (E.3), then the additional system

| (E.5) |

for all test functions , , , and . The equations and are referred to as the incremental state equations. We call the equations and the incremental adjoint equations. For readers’ convenience, we provide the required variational derivative for forming the incremental equations:

The variation of with respect to reveals a means to compute the Hessian vector product, , as follows.

| (E.6) |