GMC-IQA: Exploiting Global-correlation and Mean-opinion Consistency for No-reference Image Quality Assessment

Abstract

Due to the subjective nature of image quality assessment (IQA), assessing which image has better quality among a sequence of images is more reliable than assigning an absolute mean opinion score for an image. Thus, IQA models are evaluated by global correlation consistency (GCC) metrics like PLCC and SROCC, rather than mean opinion consistency (MOC) metrics like MAE and MSE. However, most existing methods adopt MOC metrics to define their loss functions, due to the infeasible computation of GCC metrics during training. In this work, we construct a novel loss function and network to exploit Global-correlation and Mean-opinion Consistency, forming a GMC-IQA framework. Specifically, we propose a novel GCC loss by defining a pairwise preference-based rank estimation to solve the non-differentiable problem of SROCC and introducing a queue mechanism to reserve previous data to approximate the global results of the whole data. Moreover, we propose a mean-opinion network, which integrates diverse opinion features to alleviate the randomness of weight learning and enhance the model robustness. Experiments indicate that our method outperforms SOTA methods on multiple authentic datasets with higher accuracy and generalization. We also adapt the proposed loss to various networks, which brings better performance and more stable training.

1 Introduction

Image quality assessment (IQA) is a long-standing research in image processing fields. According to the availability of reference images, IQA can be categorized into three types: full-reference IQA (FR-IQA), reduced-reference IQA (RR-IQA) and no-reference IQA (NR-IQA). Among these types, NR-IQA has gained more attention since it removes the dependence on reference images, which are unavailable in many real-world applications.

IQA is a extremely subjective task, since different people have various opinions and are hard-pressed to give the exactly same quality score for an image. Therefore, the ground truth (GT) labels of images are defined as the average of subjective scores provided by multiple human annotators, namely mean opinion score (MOS). Compared to rating an absolute quality score for an image, it is easier and more reliable to assess the relative quality for a sequence of images. Therefore, IQA models are evaluated using Spearman’s rank order correlation coefficient (SROCC) and Pearson’s linear correlation coefficient (PLCC), which measure the global correlation consistency (GCC) between predicted scores and GT labels for a data set. However, most IQA methods employ distance-based loss functions, i.e.MAE and MSE, for training by minimizing the discrepancy between predicted scores and GT labels to achieve the mean opinion consistency (MOC). This inconsistency between the training and evaluation objectives leads to sub-optimal performance.

In this work, we exploit GCC and MOC for IQA from the aspect of loss function and network. To achieve the global correlation consistency, one straightforward solution is to employ the PLCC and SROCC as the training objective. However, there exist two main problems. First, the ranking function in the SROCC is non-differentiable, which makes the back-propagation of gradients infeasible. Additionally, due to the limited GPU memory, the two metric scores can only be computed within a batch during training, leading to a significant disparity compared to the results computed on the entire data set. To address these issues, we propose a pairwise preference-based rank estimation approach to build a differentiable SROCC variant. As a result, we combine the two evaluation metrics into the training objective and formulate a kind of global correlation consistency (GCC) loss. Meanwhile, to break through the limitation constrained by the GPU memory, we introduce a queue mechanism to store previous data during the training, enabling the resulting GCC loss to be approximate to that computed on the whole data set. Moreover, we theoretically prove that the proposed GCC loss can be reformulated into the MSE form by applying normalization to score values and ranking indices, which introduces strong constraints to global data correlation and global ranking consistency, respectively.

Additionally, a novel network architecture called mean-opinion network (MoNet) is proposed. Mimicking the human rating process, we develop a multi-view attention learning (MAL) module for the MoNet to implicitly learn diverse opinion features by capturing complementary contexts from various perspectives. The opinion features collected from different MALs are integrated into a comprehensive quality score, effectively relieving the impacts of hyper-parameter configurations on the performance, facilitating more robust quality score assessment. The MoNet is trained end to end by combining the proposed GCC loss and the MSE loss to optimize Global-correlation and Mean-opinion Consistency, forming the GMC-IQA framework.Extensive experimental results show that the proposed GMC-IQA achieves state-of-the-art performance on multiple authentic datasets. Cross-dataset evaluation validates the better generalization of the GMC-IQA compared to many existing methods. Moreover, we experimentally prove that the proposed loss function can be adapted to various network architectures, enabling better performance and more stable training. Overall, our contributions are summarized as follows:

-

1.

We propose a novel GCC loss for IQA and develop a queue mechanism based optimization strategy, effectively addressing the unalignment between the training and evaluation objectives.

-

2.

We propose a mean-opinion network, which integrates diverse opinion features produced by our meticulously designed MAL modules, effectively alleviating the randomness of weight learning and enhancing the robustness of the model.

-

3.

Numerous experimental results validate that our GMC-IQA framework significantly outperforms many advanced methods with higher accuracy and superior generalization. We also experimentally prove the advantages of the proposed loss and network architecture in promoting the training stability and generalization.

2 Related Works

In the context of our work, we provide a brief review of related works on NR-IQA and loss functions applied in IQA.

2.1 No-Reference Image Quality Assessment

Due to the remarkable progress in vision applications, considerable attention has been focused on elevating the performance of IQA. As a pioneer, Kang et al. (2014) design a convolutional neural network (CNN) for IQA to extract image features. Then they extend this work to a multi-task CNN Kang et al. (2015). However, insufficient training samples limit effective learning of CNNs-based models. For this reason, some methods Su et al. (2020); Qin et al. (2023) employ pre-trained networks, such as ResNet He et al. (2016) and vision transformer (ViT) Dosovitskiy et al. (2020), as feature extractors. However, recent research Zhu et al. (2020); Chen et al. (2022) point out that these popular networks pre-trained for high-level tasks are not suitable for IQA. Therefore, some works pre-train models on related pretext tasks, e.g., image restoration Lin and Wang (2018); Ma et al. (2021), quality ranking Liu et al. (2017); Ma et al. (2017), and contrastive learning Zhao et al. (2023); Madhusudana et al. (2022). Some other methods enhance the IQA performance by introducing auxiliary information. For instance, Wang et al. (2023a); Saha et al. (2023) integrate textual information into the IQA. Zhang et al. (2023) explore the relationship among multiple tasks, namely the IQA, scene classification and distortion classification. Additionally, many methods utilize the idea of ensemble learning to aggregate IQA-related knowledge for more effective learning. Ma et al. (2019) collect a set of existing IQA models for annotation. The annotated samples are used for training their model to learn the quality score as well as the uncertainty. Both Wang et al. (2023b) and Zhang et al. (2020) propose a novel multi-dataset training strategy.

Although existing methods have improved IQA performance by addressing various aspects of the model, they ignore to exploit the global correlation consistency in the training process, which makes a gap between training and evaluation objectives.

2.2 Loss Functions for Image Quality Assessment

Distance-based loss and rank-based loss are two representative losses used in IQA models. The distance-based loss aims to minimize the difference between predicted scores and GTs. MAE and MSE are widely used for training the IQA models. When evaluating the performance of IQA models, global correlation consistency metrics, like PLCC and SROCC, are employed. This inconsistency leads to a gap between training and evaluation objectives. To address this problem, Li et al. (2020) propose a Norm loss, which is formulated using a novel normalization approach. The Norm loss is closely connected to PLCC and RMSE. It is performed within a batch during training, while PLCC and SROCC are computed on the entire test dataset during evaluation. This also contributes to inconsistent training and evaluation objectives.

For rank-based loss, the IQA task is viewed as a quality ranking problem. Gao et al. (2013) adopt the cross-entropy loss to compute the discrepancy between predicted quality ranking and GT binary labels for each image pair. Liu et al. (2017) employ the hinge loss to formulate the optimization objective of quality ranking learning. Ma et al. (2017) use learning-to-rank algorithms, like RankNet Burges et al. (2005) and ListNet Cao et al. (2007), to train the IQA model on a number of image pairs. Most of these methods employ image pairs to compute the rank-based loss during training, while the evaluation is conducted on a large sequence of data to compute the ranking consistency. This discrepancy in training and evaluation can lead to sub-optimal performance.

3 The Proposed Method

3.1 Global Correlation Consistency Loss

The performance of an IQA model is typically evaluated using two metrics: PLCC and SROCC. Given images, the two metrics measure the correlation consistency between the predicted scores and the GT labels . They can be formulated into a unified form as follows:

| (1) |

In PLCC, and ; in SROCC, ) and , where is a ranking function. In Eq. (1), and denote the standard deviation of and , respectively, and is the covariance.

There are two main problems preventing the IQA models to be directly optimized towards the two evaluation metrics: 1) the ranking function in the SROCC is non-differentiable and 2) due to the limited GPU memory, the two metric scores can only be computed within a batch during training, leading to a significant disparity compared to the results computed on the entire data set. To address these problems, we present a pairwise preference-based rank approximation approach to build a differentiable SROCC variant. Based on the PLCC and the SROCC variant, we propose a kind of global correlation consistency (GCC) loss, which enables IQA models to be optimized for the two evaluation metrics. Furthermore, we devise a queue mechanism to narrow the disparity between metric scores computed on a batch and the entire dataset.

A) Pairwise Preference-based Rank Estimation. To tackle the issue of non-differentiability of the , we propose a novel approach called pairwise preference-based rank estimation, which transforms the sequence ranking into pairwise comparisons. By computing the expectation of pairwise comparison results, we build a differentiable proxy function to estimate the rank indices, which are statistically close to the true ranking results.

For the input data , we first normalize it by , where and . Then we obtain a normalized data . To get the relative comparison of any two elements in , we propose a pairwise preference label (PPL) . The PPL () is defined as the probability that is greater than , which is mathematically expressed as follows:

|

|

(2) |

where denotes the probability density function of the standard normal distribution, and erf is the error function. For any two elements , , there are three possible situations:

If , (when , );

If , ;

If , (when , ).

Based on Eq. (2), we obtain a probability matrix . Based on the PPLs, we propose a ranking proxy function , which estimates the rank indices for the input data as follows:

|

|

(3) |

where denotes the estimated index for the element , and denotes the expectation. According to Eq. (3), when is higher, the PPL also tends to increase, which leads to a greater estimated index . In this manner, we achieve a statistically differentiable estimation to the non-smooth ranking function. Additionally, the proposed method preserves both monotonicity and correlation characteristics of the original ranking function.

B) Queue Mechanism. To address problem brought by the limited GPU memory, we propose a queue mechanism to approximate the two losses computed on a batch to those computed on the entire data set. The core idea is to maintain an on-the-fly queue for storing the latest samples. This allows us to reuse the predicted scores of the model from the immediate preceding batches. Specifically, the queue always maintains pairs of data, each of which consists of a predicted score of previous batches and the corresponding GT. The data in the queue are progressively replaced, where the current batch of data are enqueued and the oldest data are dequeued. Removing the oldest data can be beneficial, since these data are the most outdated and thus the least consistent with the newest ones, ensuring a small discrepancy between the queue data and the current batch of data.

The queue length is an important hyper-parameter. The queue data always represent a sampled subset of the entire dataset. Increasing the value of can lead to a closer estimation of the PLCC and SROCC computed on the entire dataset. However, it’s important to note that if is too large, it may cause the queue to retain a number of old data, potentially reducing the accuracy of ranking estimation. In the experiments, we demonstrate the impact of different values of on the performance of our model.

C) Formulation of GCC loss. Combining the pairwise preference-based rank estimation and the queue mechanism, the proposed GCC loss consists of two loss functions: a PLCC-formulated and a SGCC-formulated global correlation consistency (PGCC and SGCC) loss. They are formulated as follows:

| (4) |

where is defined in Eq. (1), and is the proposed rank estimation defined in Eq. (3). The PGCC and the SGCC loss measure the monotonicity and the linear correlation between predicted scores and GT labels, respectively.

D) Theoretical Analysis. For the input data , the normalized data has the property . Thus, we can reformulate Eq. (1) to:

|

|

(5) |

Then we can transform and to:

|

|

(6) |

Eq. (6) clearly indicates that both and can be reformulated in the form of the MSE loss. Compared to the widely used MSE loss which computes the one-by-one distance between predicted scores and GT scores, the two losses introduce global consistency by applying the normalization to score values and ranking indices, which impose stronger constraints on the global data distribution and ranking correlation, respectively.

3.2 Mean-opinion Network

In this work, we present a novel network called mean-opinion network (MoNet), which collects various opinions by capturing diverse attention contexts to make a comprehensive decision on the image quality score. Fig. 1 shows the network architecture of the MoNet, which mainly consists of three parts: i) a pre-trained ViT is employed for multi-level feature perception, ii) multi-view attention learning (MAL) modules are proposed for opinion collection, and iii) an image quality score regression module is designed for quality estimation.

A) Multi-level Semantic Perception. Given an image , we firstly crop it into patches with the size of , where and denote the height and width of the image and . Then the patches are flattened and fed into a linear projection with the dimension of , producing the embedding feature . Subsequently, the features sequentially traverses 12 transformer blocks, resulting in a set of multi-level features. Finally, the outputs of transformer blocks are selected and used as basic features, denoted as ().

B) Multi-view Attention Learning Module. The critical part of the MoNet is the multi-view attention learning (MAL) module. The motivation behind it is that individuals often have diverse subjective perceptions and regions of interest when viewing the same image. To this end, we employ multiple MALs to learn attentions from different viewpoints. Each MAL is initialized with different weights and updated independently to encourage diversity and avoid redundant output features. The number of MALs can be flexibly set as a hyper-parameter. We show in our results its effect on the performance of our model. As shown in Fig. 1, the MAL starts from self-attentions (SAs), each of which is responsible to process a basic feature (). The outputs of all the SAs are concatenated, forming a multi-level aggregated feature . Then passes through two branches, i.e., a pixel-wise SA branch and a channel-wise SA branch, which apply a SA across spatial and channel dimensions, respectively, to capture complementary non-local contexts and generate multi-view attention maps. In particular, for the channel-wise SA, the feature is first reshaped and permuted to convert the size from to . After the SA, the output feature is permuted and reshaped back to the original size . Subsequently, the outputs of the two branches are added and average pooled, generating an opinion feature. The design of the two branches has two key advantages. First, implementing the SA in different dimensions promotes diverse attention learning, yielding complementary information. Second, contextualized long-range relationships are aggregated, benefiting global quality perception.

C) Image Quality Score Regression. Assuming that opinion features are generated from MALs employed in the MoNet. To derive a global quality score from the collected opinion features, we utilize an additional MAL. The MAL integrates diverse contextual perspectives, resulting in a comprehensive opinion feature that captures essential information. This feature is then processed through a transformer block, three convolutional layers with kernel sizes of , , and to reduce the number of channels, followed by two fully connected layers that transform the feature size from 128 to 64 and from 64 to 1. Finally, we obtain a predicted quality score from the MoNet.

3.3 Formulation of the Training Objective

Our total loss function combines the two GCC losses and the MSE loss. The two GCC losses are computed based on both the queue data ( and ) and the current batch of data ( and ), constituting a new sequence of data and . The MSE loss is only computed on the current batch of data. The total loss is defined as follows:

|

|

(7) |

where , and are hyper-parameters. In Eq. (7), the GCC losses and the MSE loss enhance the global correlation consistency and mean opinion consistency, respectively. Therefore, the total loss is called GMC loss. When the current batch of data are easy samples, both GCC and MSE losses tend to be small, resulting in a smaller GMC loss; otherwise, the GMC loss becomes larger. Therefore, the GMC loss can pull away easy samples from hard ones, thereby strengthening the training on hard samples. We train the MoNet using the GMC loss, forming our GMC-IQA framework.

4 Experiments

4.1 Datasets And Evaluation Metrics

We train and evaluate our model on four authentic datasets, namely LIVEC Ghadiyaram and Bovik (2015), BID Ciancio et al. (2010), Koniq Hosu et al. (2020), and SPAQ Fang et al. (2020), which contain 1162, 586, 10073 and 11125 images with realistic distortions, respectively. PLCC and SROCC are used for evaluating the performance of IQA models. Both metrics range from -1 to 1, with higher values indicating better performance of IQA models.

4.2 Implementation Details

Following the settings in Su et al. (2020); Yang et al. (2022); Qin et al. (2023), we randomly divide each dataset into 80% for training and 20% for testing. For the SPAQ, the shortest side of an image is resized to 512 according to Fang et al. (2020). Let denote the number of training samples, the queue length is set to 60%. The hyper-parameters , and defined in Eq. (7) are set to 0.5, 0.5 and 1. The pre-trained vit_base_patch8_224 is used as the backbone of the MoNet. We use transformer blocks to extract basic features, namely the 3rd, 6th, 9th and 12th blocks. If not explicitly specified, the default number of the MAL is set to . All experiments are conducted 10 times, and the median of the 10 scores are reported as the final score. We use the Adam optimizer with a learning rate of and a weight decay of . The learning rate is adjusted using the Cosine Annealing for every 50 epochs. We train our model for 100 epochs with a batch size of 11.

4.3 Comparisons With State-of-the-Arts

A) Individual Dataset Comparison. We compare our model with 10 NR-IQA models, namely CNNIQA Kang et al. (2014), ILNIQE Zhang et al. (2015), WaDIQaM-NR Bosse et al. (2017), SFA Li et al. (2018), HyperIQA Su et al. (2020), TeacherIQA Chen et al. (2022), MANIQA Yang et al. (2022), TReS Golestaneh et al. (2022), DEIQT Qin et al. (2023) and QPT-ResNet50 Zhao et al. (2023).

The results on the four authentic datasets are shown in Tab. 1. The proposed GMC-IQA outperforms all the compared models on the LIVEC, BID and Koniq. On the SPAQ, GMC-IQA obtains a slightly inferior performance to QPT-ResNet50 Zhao et al. (2023) with a margin of 0.13% in terms of PLCC. It is worth noting that QPT-ResNet50 synthesizes approximately distorted images for pre-training. It exhibits a remarkable superiority over existing methods. Nevertheless, our GMC-IQA achieves better results to QPT-ResNet50 on most datasets without the complicated dataset synthesis for pre-training.

| LIVEC | BID | Koniq | SPAQ | |||||

|---|---|---|---|---|---|---|---|---|

| Model | SROCC | PLCC | SROCC | PLCC | SROCC | PLCC | SROCC | PLCC |

| CNNIQA Kang et al. (2014) | 0.6269 | 0.6008 | 0.6163 | 0.6144 | 0.6852 | 0.6837 | 0.7959 | 0.7988 |

| ILNIQE Zhang et al. (2015) | 0.4531 | 0.5114 | 0.4946 | 0.4538 | 0.5029 | 0.4956 | 0.7194 | 0.654 |

| WaDIQaM-NR Bosse et al. (2017) | 0.6916 | 0.7304 | 0.6526 | 0.6359 | 0.7294 | 0.7538 | 0.8397 | 0.8449 |

| SFA Li et al. (2018) | 0.8037 | 0.8213 | 0.8202 | 0.8253 | 0.8882 | 0.8966 | 0.9057 | 0.9069 |

| HyperIQA Su et al. (2020) | 0.8650 | 0.8831 | 0.8345 | 0.8757 | 0.9066 | 0.9216 | 0.9137 | 0.9170 |

| TeacherIQA Chen et al. (2022) | 0.8411 | 0.8510 | 0.7663 | 0.7891 | 0.9100 | 0.9160 | — | — |

| MANIQA Yang et al. (2022) | 0.8914 | 0.9089 | 0.8755 | 0.9022 | 0.9307 | 0.9452 | 0.9229 | 0.9265 |

| TReS Golestaneh et al. (2022) | 0.8427 | 0.8568 | 0.8448 | 0.8740 | 0.9263 | 0.9120 | 0.9213 | 0.9217 |

| DEIQT Qin et al. (2023) | 0.8750 | 0.8940 | — | — | 0.9210 | 0.9340 | 0.9190 | 0.9230 |

| QPT-ResNet50 Zhao et al. (2023) | 0.8947 | 0.9141 | 0.8875 | 0.9109 | 0.9271 | 0.9413 | 0.9250 | 0.9279 |

| GMC-IQA | 0.9062 | 0.9225 | 0.9059 | 0.9182 | 0.9325 | 0.9471 | 0.9251 | 0.9267 |

B) Cross-dataset Evaluation. We compare the cross-dataset performance of our model with three NR-IQA models, namely HyperIQA Su et al. (2020), TReS Golestaneh et al. (2022) and MANIQA Yang et al. (2022). To validate the generalization of our network architecture, we train a variant model MoNet-MSE, which optimizes the MoNet only using the MSE loss and employs the same training configurations as our full model. We randomly choose 80% images of one dataset for training, and use another three datasets with all images for testing. The results in Tab. 2 demonstrate the superiority of MoNet-MSE on multiple datasets, validating the robust generalization of the proposed MoNet architecture. It can be seen that our full model GMC-IQA further improves the performance, although in some cases, its performance is slightly lower than that of MANIQA, such as a difference of 0.48% and 0.59% when trained on the SPAQ and tested on the LIVEC and Koniq. The results suggest that the proposed GMC loss can further enhance the generalization, enabling our model to extend its capabilities across a wider range of image distributions.

| Training | Testing | HyperIQA | TReS | MANIQA | MoNet-MSE | GMC-IQA |

|---|---|---|---|---|---|---|

| Koniq | 0.7427 | 0.7307 | 0.7970 | 0.7948 | 0.8015 | |

| BID | 0.8708 | 0.8594 | 0.8759 | 0.8763 | 0.8782 | |

| LIVEC | SPAQ | 0.8489 | 0.8655 | 0.8759 | 0.8763 | 0.8773 |

| LIVEC | 0.7606 | 0.7704 | 0.8655 | 0.8599 | 0.8677 | |

| BID | 0.8018 | 0.8149 | 0.8571 | 0.8529 | 0.8573 | |

| Koniq | SPAQ | 0.8354 | 0.8465 | 0.8908 | 0.8874 | 0.8880 |

| LIVEC | 0.7717 | 0.7541 | 0.8328 | 0.8337 | 0.8405 | |

| Koniq | 0.6880 | 0.7071 | 0.7673 | 0.7819 | 0.7751 | |

| BID | SPAQ | 0.8290 | 0.8390 | 0.8363 | 0.8389 | 0.8416 |

| LIVEC | 0.7596 | 0.7814 | 0.8367 | 0.8358 | 0.8327 | |

| Koniq | 0.7336 | 0.7468 | 0.8249 | 0.8181 | 0.8200 | |

| SPAQ | BID | 0.7755 | 0.7787 | 0.8048 | 0.8118 | 0.8180 |

4.4 Performance Evaluation on the Loss Function

A) Adapability Validation. To validate the adapability of the proposed GMC loss to various network architectures, we employ it to train three advanced NR-IQA models, namely HyperIQA Su et al. (2020), MANIQA Yang et al. (2022) and TeacherIQAChen et al. (2022). We also use it to train two versions of the proposed MoNet, which adopt ViT-Small and ViT-Base as the backbone, respectively. For comparison, we train these networks using the distance-based loss and the Norm loss Li et al. (2020). As shown in Tab. 3, it is evident that all the networks obtain the highest scores on both the LIVEC and BID datasets when the GMC loss is used, proving the advantages of the GMC loss over the compared losses. Without any modification to the network architecture, the GMC loss can be easily integrated with existing IQA models and promote their performance.

| Dataset | LIVEC | BID | ||||

|---|---|---|---|---|---|---|

| Models | Dist | Norm | GMC | Dist | Norm | GMC |

| HyperIQA | 0.8650 | 0.8591 | 0.8741(+1.05%) | 0.8345 | 0.8444 | 0.8466(+1.45%) |

| TeacherIQA | 0.8411 | 0.8523 | 0.8529(+1.41%) | 0.7663 | 0.7592 | 0.7788(+1.63%) |

| MANIQA | 0.8914 | 0.9025 | 0.9043(+1.45%) | 0.8755 | 0.8889 | 0.8868(+1.29%) |

| Ours(Small) | 0.8580 | 0.8705 | 0.8709(+1.50%) | 0.8380 | 0.8435 | 0.8517(+1.63%) |

| Ours(Base) | 0.8937 | 0.8982 | 0.9062(+1.40%) | 0.8960 | 0.8966 | 0.9059(+1.10%) |

B) Sensitivity to Hyper-parameters. In Fig. 2 we compare the performance of the MoNet trained with the MSE loss and the GMC loss under different learning rates (lr), including 1e-4, 1e-5 and 1e-6, on LIVEC and BID datasets. The results show that both losses achieve the highest scores when the lr is set to 1e-5 and perform the worst when the lr is set to 1e-4. Nevertheless, the GMC loss always outperforms the MSE loss. Especially when the lr is set to 1e-4, the GMC loss shows a more significant advantage with margins of 8.7% and 4.2% on the two datasets, respectively. The results indicate that the GMC loss is more robust to the setting of the lr, ensuring the training stability of the model.

C) Convergence Comparison. Fig. 3 compares the SROCC curve of the MoNet trained with the MSE loss and the GMC loss on the validation set of the Koniq and SPAQ datasets. We can clearly see that the performance of the MoNet using the GMC loss surpasses 0.9 when it is trained with only one epoch. Moreover, the curve of the GMC loss is much smoother, demonstrating a faster convergence compared to the MSE loss. The results further validate the benefit of the GMC loss in enhancing the training stability, allowing us to train a model using fewer epochs.

4.5 More Discussions and Analysis

A) Ablation Studies. To validate the effectiveness of the proposed key components, we train five variants of our model: i) w/o MAL, which replaces the three MALs in the MoNet by three simple blocks, respectively, and each block consists of three convolutional layers; ii) w/o SGCC and iii) w/o PGCC, which remove the SGCC loss and the PGCC loss from our total loss function defined in Eq. (7), respectively; iv) w/o GCC, which removes the two GCC losses and only adopts the MSE loss for training; v) w/o queue, which removes previous data and only uses the current batch of data to compute the two GCC losses. The results from Tab. 4 reveal that the removal of any component degrades the model’s performance. We can see that removing the MAL has the most remarkable decline in performance, validating the significance of the diverse opinion feature learning. In addition, removing the queue mechanism also shows a noticeable drop in performance, indicating that the utilization of previous data is beneficial to promoting the accuracy of the estimated metric scores. Moreover, removing both GCC losses demonstrates a more significant decrease than removing any one of them, suggesting that the joint optimization of the two GCC losses imposes stronger constraints on the global consistency.

| Dataset | LIVEC | BID | ||

|---|---|---|---|---|

| Model | SROCC | PLCC | SROCC | PLCC |

| w/o MAL | 0.8912 | 0.9109 | 0.8938 | 0.9078 |

| w/o SGCC | 0.9017 | 0.9188 | 0.9010 | 0.9119 |

| w/o PGCC | 0.9021 | 0.9183 | 0.9025 | 0.9158 |

| w/o GCC | 0.8937 | 0.9135 | 0.8960 | 0.9103 |

| w/o queue | 0.8962 | 0.9188 | 0.8936 | 0.9108 |

| Full model | 0.9062 | 0.9225 | 0.9059 | 0.9182 |

B) Discussion about the Length of the Queue. To investigate the effect of the queue length on the performance of our model, we re-train our model using different settings of . Specifically, we set , where denotes the ratio of the training set size , and is set to 20%, 40%, 60%, 80%. The results on the LIVEC, BID and SPAQ datasets are illustrated in Tab. 5. It can be seen that as increases, the performance of our model first increases and then declines. This conforms our assumption that neither is too small nor too large is beneficial to the accuracy.

| Dataset | LIVEC | BID | SPAQ | |||

|---|---|---|---|---|---|---|

| Length Ratio | SROCC | PLCC | SROCC | PLCC | SROCC | PLCC |

| 20% | 0.9030 | 0.9183 | 0.9004 | 0.9141 | 0.9232 | 0.9256 |

| 40% | 0.9062 | 0.9194 | 0.9040 | 0.9159 | 0.9230 | 0.9251 |

| 60% | 0.9062 | 0.9225 | 0.9059 | 0.9182 | 0.9251 | 0.9267 |

| 80% | 0.9003 | 0.9198 | 0.9020 | 0.9145 | 0.9235 | 0.9266 |

C) Discussion about the Number of the MAL. To explore the effect of the MAL’s number on the performance of our model, we re-train our model using different settings of (1, 2, 3, 4 and 5). The results on the BID, LIVEC and SPAQ datasets are illustrated in Fig. 4. We can see that with the increase of , our model consistently demonstrates an improved performance on the LIVEC and SPAQ. This indicates that incorporating more MALs can benefit the performance, since more complementary contexts are learned. Additionally, we find that the scores on the BID initially experience an upward trend and subsequently decline. We speculate that this stems from the constraints imposed by limited training samples and single distortion type. These limitations weaken the model’s capacity in capturing diverse attentions.

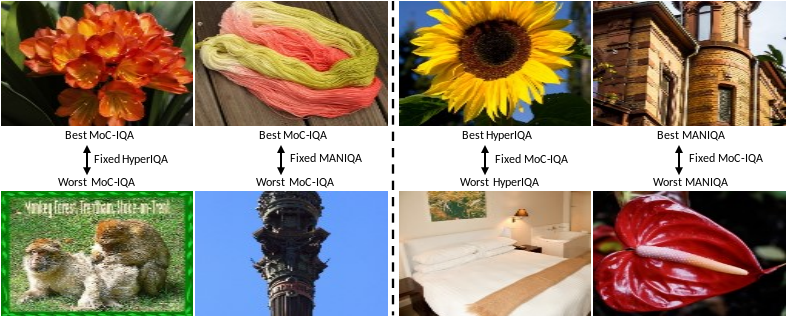

D) GMAD competition. In the gMAD Ma et al. (2016), an attacker challenges a defender to select image pairs, where the defender perceives them as the same quality while the attacker perceives them differently. We conduct the gMAD with HyperIQA Su et al. (2020) and MANIQA Yang et al. (2022) on the Koniq. As shown in Fig. 5, when our model acts as the attacker (leftmost two columns), it accurately recognizes the image pairs with noticeable quality differences while the defenders regard them with similar quality. Conversely, when our model acts as the defender (rightmost two columns), the attackers struggle to distinguish the quality difference of the images selected by our model.

E) Visualization Analysis on the MAL. To validate that the proposed MALs can learn diverse attentions, we compute the cosine similarity between the weights of each pairwise MALs and show it in Fig. 6. We see that all the similarity scores except those in the diagonal are extremely low, meaning that there exists little redundancy between each pairwise MALs. More intuitively, we visualize the output of different MALs in Fig. 7. It can be observed that different MALs have distinct attention regions. For example, the first MAL pays more attention to global semantics, the second MAL mainly focuses on local salient regions, and the third MAL prefers the background. The examples show that each MAL effectively learns complementary opinion features.

5 Conclusion

In this paper, we develop a kind of GCC loss, which is combined with the MSE loss for jointly optimizing global-correlation consistency and mean-opinion consistency. Additionally, we develop a MoNet that aggregates various opinion features by capturing multi-view contexts, which effectively strengthens the robustness of the network architecture. We adopt the proposed loss to optimize the MoNet, achieving better performance, more stable training and faster convergence. Extensive experimental results have demonstrated the superior accuracy and generalization achieved by our model on multiple authentic IQA datasets. Moreover, the proposed loss can be easily integrated with existing IQA models and further boost their performance.

References

- Bosse et al. [2017] Sebastian Bosse, Dominique Maniry, Klaus-Robert Müller, Thomas Wiegand, and Wojciech Samek. Deep neural networks for no-reference and full-reference image quality assessment. IEEE Transactions on image processing, 27(1):206–219, 2017.

- Burges et al. [2005] Chris Burges, Tal Shaked, Erin Renshaw, Ari Lazier, Matt Deeds, Nicole Hamilton, and Greg Hullender. Learning to rank using gradient descent. In International Conference on Machine Learning, pages 89–96, 2005.

- Cao et al. [2007] Zhe Cao, Tao Qin, Tie-Yan Liu, Ming-Feng Tsai, and Hang Li. Learning to rank: from pairwise approach to listwise approach. In Proceedings of the 24th international conference on Machine learning, pages 129–136, 2007.

- Chen et al. [2022] Zewen Chen, Juan Wang, Bing Li, Chunfeng Yuan, Weihua Xiong, Rui Cheng, and Weiming Hu. Teacher-guided learning for blind image quality assessment. In Proceedings of the Asian Conference on Computer Vision, pages 2457–2474, 2022.

- Ciancio et al. [2010] Alexandre Ciancio, Eduardo AB da Silva, Amir Said, Ramin Samadani, Pere Obrador, et al. No-reference blur assessment of digital pictures based on multifeature classifiers. IEEE Transactions on image processing, 20(1):64–75, 2010.

- Dosovitskiy et al. [2020] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv preprint arXiv:2010.11929, 2020.

- Fang et al. [2020] Yuming Fang, Hanwei Zhu, Yan Zeng, Kede Ma, and Zhou Wang. Perceptual quality assessment of smartphone photography. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3677–3686, 2020.

- Gao et al. [2013] Fei Gao, Dacheng Tao, Xinbo Gao, and Xuelong Li. Learning to rank for blind image quality assessment. arXiv e-prints, 2013.

- Ghadiyaram and Bovik [2015] Deepti Ghadiyaram and Alan C Bovik. Massive online crowdsourced study of subjective and objective picture quality. IEEE Transactions on Image Processing, 25(1):372–387, 2015.

- Golestaneh et al. [2022] S Alireza Golestaneh, Saba Dadsetan, and Kris M Kitani. No-reference image quality assessment via transformers, relative ranking, and self-consistency. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pages 1220–1230, 2022.

- He et al. [2016] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 770–778, 2016.

- Hosu et al. [2020] Vlad Hosu, Hanhe Lin, Tamas Sziranyi, and Dietmar Saupe. Koniq-10k: An ecologically valid database for deep learning of blind image quality assessment. IEEE Transactions on Image Processing, 29:4041–4056, 2020.

- Kang et al. [2014] Le Kang, Peng Ye, Yi Li, and David Doermann. Convolutional neural networks for no-reference image quality assessment. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1733–1740, 2014.

- Kang et al. [2015] Le Kang, Peng Ye, Yi Li, and David Doermann. Simultaneous estimation of image quality and distortion via multi-task convolutional neural networks. In 2015 IEEE international conference on image processing (ICIP), pages 2791–2795. IEEE, 2015.

- Li et al. [2018] Dingquan Li, Tingting Jiang, Weisi Lin, and Ming Jiang. Which has better visual quality: The clear blue sky or a blurry animal? IEEE Transactions on Multimedia, 21(5):1221–1234, 2018.

- Li et al. [2020] Dingquan Li, Tingting Jiang, and Ming Jiang. Norm-in-norm loss with faster convergence and better performance for image quality assessment. In Proceedings of the 28th ACM International Conference on Multimedia, pages 789–797, 2020.

- Lin and Wang [2018] Kwan-Yee Lin and Guanxiang Wang. Hallucinated-iqa: No-reference image quality assessment via adversarial learning. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 732–741, 2018.

- Liu et al. [2017] Xialei Liu, Joost Van De Weijer, and Andrew D Bagdanov. Rankiqa: Learning from rankings for no-reference image quality assessment. In Proceedings of the IEEE international conference on computer vision, pages 1040–1049, 2017.

- Ma et al. [2016] Kede Ma, Qingbo Wu, Zhou Wang, Zhengfang Duanmu, Hongwei Yong, Hongliang Li, and Lei Zhang. Group mad competition-a new methodology to compare objective image quality models. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1664–1673, 2016.

- Ma et al. [2017] Kede Ma, Wentao Liu, Tongliang Liu, Zhou Wang, and Dacheng Tao. dipiq: Blind image quality assessment by learning-to-rank discriminable image pairs. IEEE Transactions on Image Processing, 26(8):3951–3964, 2017.

- Ma et al. [2019] Kede Ma, Xuelin Liu, Yuming Fang, and Eero P Simoncelli. Blind image quality assessment by learning from multiple annotators. In 2019 IEEE international conference on image processing (ICIP), pages 2344–2348. IEEE, 2019.

- Ma et al. [2021] Jupo Ma, Jinjian Wu, Leida Li, Weisheng Dong, Xuemei Xie, Guangming Shi, and Weisi Lin. Blind image quality assessment with active inference. IEEE Transactions on Image Processing, 30:3650–3663, 2021.

- Madhusudana et al. [2022] Pavan C Madhusudana, Neil Birkbeck, Yilin Wang, Balu Adsumilli, and Alan C Bovik. Image quality assessment using contrastive learning. IEEE Transactions on Image Processing, 31:4149–4161, 2022.

- Qin et al. [2023] Guanyi Qin, Runze Hu, Yutao Liu, Xiawu Zheng, Haotian Liu, Xiu Li, and Yan Zhang. Data-efficient image quality assessment with attention-panel decoder. Proceedings of the AAAI Conference on Artificial Intelligence, 37:2091–2100, 2023.

- Saha et al. [2023] Avinab Saha, Sandeep Mishra, and Alan C Bovik. Re-iqa: Unsupervised learning for image quality assessment in the wild. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 5846–5855, 2023.

- Su et al. [2020] Shaolin Su, Qingsen Yan, Yu Zhu, Cheng Zhang, Xin Ge, Jinqiu Sun, and Yanning Zhang. Blindly assess image quality in the wild guided by a self-adaptive hyper network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 3667–3676, 2020.

- Wang et al. [2023a] Jianyi Wang, Kelvin CK Chan, and Chen Change Loy. Exploring clip for assessing the look and feel of images. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 37, pages 2555–2563, 2023.

- Wang et al. [2023b] Juan Wang, Zewen Chen, Chunfeng Yuan, Bing Li, Wentao Ma, and Weiming Hu. Hierarchical curriculum learning for no-reference image quality assessment. International Journal of Computer Vision, pages 1–20, 2023.

- Yang et al. [2022] Sidi Yang, Tianhe Wu, Shuwei Shi, Shanshan Lao, Yuan Gong, Mingdeng Cao, Jiahao Wang, and Yujiu Yang. Maniqa: Multi-dimension attention network for no-reference image quality assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 1191–1200, 2022.

- Zhang et al. [2015] Lin Zhang, Lei Zhang, and Alan C Bovik. A feature-enriched completely blind image quality evaluator. IEEE Transactions on Image Processing, 24(8):2579–2591, 2015.

- Zhang et al. [2020] Weixia Zhang, Kede Ma, Guangtao Zhai, and Xiaokang Yang. Learning to blindly assess image quality in the laboratory and wild. In 2020 IEEE International Conference on Image Processing (ICIP), pages 111–115. IEEE, 2020.

- Zhang et al. [2023] Weixia Zhang, Guangtao Zhai, Ying Wei, Xiaokang Yang, and Kede Ma. Blind image quality assessment via vision-language correspondence: A multitask learning perspective. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 14071–14081, 2023.

- Zhao et al. [2023] Kai Zhao, Kun Yuan, Ming Sun, Mading Li, and Xing Wen. Quality-aware pre-trained models for blind image quality assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 22302–22313, 2023.

- Zhu et al. [2020] Hancheng Zhu, Leida Li, Jinjian Wu, Weisheng Dong, and Guangming Shi. Metaiqa: Deep meta-learning for no-reference image quality assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 14143–14152, 2020.