Global optimization of tensor renormalization group using the corner transfer matrix

Abstract

A tensor network renormalization algorithm with global optimization based on the corner transfer matrix is proposed. Since the environment is updated by the corner transfer matrix renormalization group method, the forward-backward iteration is unnecessary, which is a time-consuming part of other methods with global optimization. In addition, a further approximation reducing the order of the computational cost of contraction for the calculation of the coarse-grained tensor is proposed. The computational time of our algorithm in two dimensions scales as the sixth power of the bond dimension while the higher-order tensor renormalization group and the higher-order second renormalization group methods have the seventh power. We perform benchmark calculations in the Ising model on the square lattice and show that the time-to-solution of the proposed algorithm is faster than that of other methods.

I Introduction

Tensor network methods attract much attention as powerful tools for computing strongly correlated many-body problems [1, 2]. The partition function of classical statistical systems and low-energy states in quantum systems can be represented by tensor networks, in which exponentially large information is compressed efficiently. However, contraction of a large tensor network still requires a huge computational cost. The combination of tensor networks and real-space renormalization group ideas resolves this problem. The tensor renormalization group (TRG) method provide a way to calculate a coarse-grained tensor based on the singular value decomposition [3]. The truncation of smaller singular values avoids divergence of tensor size. The higher-order tensor renormalization group (HOTRG) method is another method applicable to higher-dimensional systems [4].

Both of the methods do information compression by solving local optimization problems. Approximations in these methods are locally optimal, but not so for contraction of the whole tensor network. Therefore, the global optimization is necessary. Since the global optimization problem is defined by using the whole network, we need to introduce other approximations. The whole network is split into two parts, i.e., a small system and an environment surrounding it, and then the latter is approximated in an appropriate way. The second renormalization group (SRG) method [5, 6] and the higher-order SRG (HOSRG) method [4] represent an environment as one tensor, which is called the environment tensor. Recently, the automatic differentiation technique was proposed to calculate the environment tensor [7]. Although these methods drastically improve accuracy, calculation of the environment tensor requires performing the forward-backward iterations.

In this paper, we propose another approximation of the environment. We replace the environment tensor with the corner transfer matrices (CTMs) [8] and the edge tensors, which we call the CTM environment. It can be updated by using the CTM renormalization group (CTMRG) [9, 10] instead of the backward iteration. The computational cost of the CTMRG is smaller than the backward iteration. The former scales as against the bond dimension while the latter has scaling. In addition, we introduce an additional decomposition, whose key idea is information compression with the environment. This approximation reduces the computational cost of tensor contraction for the coarse-grained tensor. Finally, our algorithm achieves computational cost scales as , while HOTRG and HOSRG have cost in two-dimensional systems.

In the next section, we introduce our improved algorithm, which we call CTM-TRG. In the third section, benchmark results performed in the two-dimensional Ising model are shown. We will show that our algorithm has a smaller time-to-solution than HOTRG and HOSRG. The last section is devoted to discussions and conclusions.

II Algorithm

Let us consider the contraction of a tensor network on the square lattice,

| (1) |

A local tensor located at each site has four indices and connects with other tensors on the nearest-neighbor sites. We assume that each index of runs from to at most. In other words, the bond dimension of is equal to . For classical systems, is the partition function and the Boltzmann weight determines elements of . In quantum systems, such a contraction commonly appears as an inner product of a wave function, the so-called tensor network state or the projected entanglement paired state [11].

The HOTRG algorithm approximates this contraction by introducing the operator which merges two bonds into a single bond. In the original paper of HOTRG [4], this bond-merging operator was obtained by the higher-order singular value decomposition (HOSVD) of a tensor,

| (2) |

Another solution of the bond-merging operator is the oblique projector which minimizes

| (3) |

while keeping the bond dimension between and to . Its graphical representation is shown in Fig. 1(a). The algorithm for the calculation of is well known [12, 13, 14, 15] and its computational cost scales as [15]. After inserting bond-merging operators, the coarse-grained tensor is defined by contraction,

| (4) |

The computationally heaviest part in HOTRG is this tensor contraction [Fig. 1(b)], which scales as .

The minimization problem for the bond-merging operator in HOTRG (3) is a local optimization problem. Although such a solution is the best for a cluster, it is not the case for contraction of the whole network. A bond-merging operator obtained from larger clusters such as in Fig. 2 will improve the accuracy and its limit to infinite cluster size converges to a solution of the global optimization problem which minimizes the difference between the whole network with and without a bond-merging operator. However, an optimization problem with large clusters is intractable because its computational cost rapidly diverges with the cluster size.

Since the global optimization problem involves contraction of the whole network, some approximations are necessary. In the HOSRG algorithm, one local tensor is picked up and the other part is considered as an environment. The environment is represented by a four-index tensor, which we call the environment tensor. More precisely, the local tensor and corresponding environment tensor at each renormalization step approximate the contraction of the whole network as

| (5) |

The calculation of the environment tensor is done by the so-called backward iteration. It is a fine-grained process which updates the environment tensor using information at the -th step. On the other hand, a coarse-grained process, called the forward iteration, updates the local tensors and the bond-merging operators. The HOSRG algorithm repeats the forward and backward iterations until convergence.

We note that the computational cost of the HOSRG algorithm scales as , as does HOTRG. Although calculation of the bond density operator defined in Ref. [4] requires computational cost, it can be avoided by using a similar technique shown in Ref. [16]. Thus, both the forward and backward iterations have computational cost.

The environment tensor requires the backward iteration and it causesthe main difficulty of the HOSRG method. The CTM-TRG employs the corner transfer matrix and the edge tensor since these can be calculated only from the local tensor. The environment tensor is decomposed into four CTMs and four edge tensors , which we call the CTM environment in this paper. The subscripts (T, R, B, and L) indicate the positions from a local tensor (top, right, bottom, and left, respectively). For example, the top-right CTM represents all the tensors in the first quadrant.

The global optimization problem is represented by a cluster of local tensors and its surrounding CTM environment. The bond-merging operator is calculated as the oblique projector between two half networks as shown in Fig. 3. Both the contraction of the half network and calculation of the bond-merging operator have a computational cost proportional to .

The CTM environment reduces the computational cost for the calculation of the bond-merging operator to . However, contraction for the coarse-grained tensor [Fig. 1(b)] still scales as . To reduce this cost, we introduce an additional approximation (Fig. 4). First, we decompose the local tensor by using the singular-value decomposition, , and define and . Next, we insert truncation operators and between and to reduce the bond dimension from to . These operators are also calculated as oblique projectors which minimize the difference shown in Fig. 5(a), where and ( and ) are obtained from the singular value decomposition of () such as , , and [Fig. 5(b)]. We define and . In the same manner, we calculate and . Finally, we obtain the coarse-grained tensor by contraction of the tensor network as shown in Fig. 4(b), where and are the bond-merging operators for vertical bonds. Clearly, the computational cost of each step scales as at most. In contrast to HOTRG and HOSRG, this method performs scale transformations along the horizontal and vertical axes simultaneously.

At the beginning of the next step, we need to update the CTM environment by using CTMRG with the local tensor . An initial value of the CTM environment at the step can be easily generated from the CTM environment at the previous step . An initial value of is equal to and that of is calculated from and the bond-merging operators. For example, the edge tensor on the left edge is initialized as

| (6) |

Since these tensors are a good initial guess for the CTM environment at , the number of CTMRG iterations for an update of the CTM environment is smaller than that for initialization of the CTM environment at . We note that this contraction has only computational cost.

III Results

We simulate the Ising model on the square lattice to investigate the performance of our proposed algorithm. The initial local tensor at temperature is given as

| (7) |

where is a matrix,

| (8) |

The critical temperature is . The free energy per site is estimated from the coarse-grained tensor as

| (9) |

where is the number of renormalization steps and . We perform 20 renormalization steps, which is enough to observe convergence of the free energy even when its relative error is less than . Because of the global optimization, the free energy in CTM-TRG converges to its value in the thermodynamic limit much faster than that in HOTRG except in the near-critical region. We note that the free energy can also be estimated from the CTMs [9]. It corresponds to the fixed or open boundary condition, while Eq. (9) assumes the periodic boundary condition. The both should take the same value in the thermodynamic limit and we confirm this fact in our numerical simulations.

In our algorithm, we use the same bond dimension for the local tensor and CTM environment and set for the diagonal decomposition of a local tensor in Fig. 4. In most cases, we use the fixed boundary condition (FBC) for the initial condition of the CTM environment. The initial edge tensor is a tensor and its element is given as , where the first index connects with a local tensor. The initial CTM is a identity matrix, that is, . We first perform CTMRG steps to obtain the CTM environment at and do four CTMRG steps per each update of . Although we observe that four is not enough to achieve convergence near the criticality, it is still sufficient for making the whole procedure produce more accurate results than HOTRG. We also use the open boundary condition (OBC), which is expected to be better than FBC in the paramagnetic phase. The initial CTM and edge tensor for OBC are defined by using as well as the initial local tensor , for example, . For comparison, we also perform HOTRG and HOSRG simulations. In HOSRG, we repeat the forward-backward iterations four times because of our observation that it is sufficient for the convergence of the free energy in all the cases.

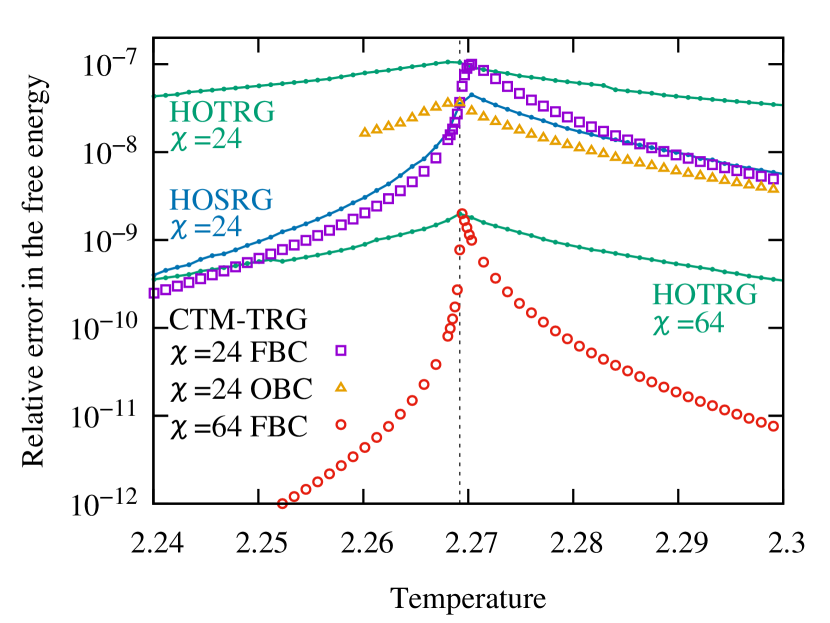

We compare the relative errors in the free energy from the exact solution in the thermodynamic limit (Fig. 6). The method of CTM-TRG shows better accuracy than HOTRG in all cases, and is compatible to HOSRG. In the ferromagnetic phase, CTM-TRG is more accurate than HOSRG. This is because CTM-TRG performs scale transformations along horizontal and vertical directions simultaneously [Fig. 4(b)]. We confirmed that our algorithm without the approximation shows the same accuracy as HOSRG. Above the critical temperature, CTM-TRG has a slightly larger error than HOSRG, which is caused by the small number of CTMRG iterations. The open boundary condition is more suitable for an initial value of the CTM environment in the paramagnetic phase and it provides more accurate results than HOSRG. We note that the results with are much better than the results reported in Ref. [7], in which a one-dimensional renormalization algorithm for a large bond dimension was performed.

The elapsed time of each method is shown in Fig. 7. Our data clearly show scaling of CTM-TRG while HOTRG and HOSRG have . Although the elapsed time of CTM-TRG is longer than HOTRG in the range of the bond dimension we calculated, they will switch places around . The computational time was measured by simulations in a single core on Intel Xeon E5-2697A (2.60 GHz) with 128 GB memory.

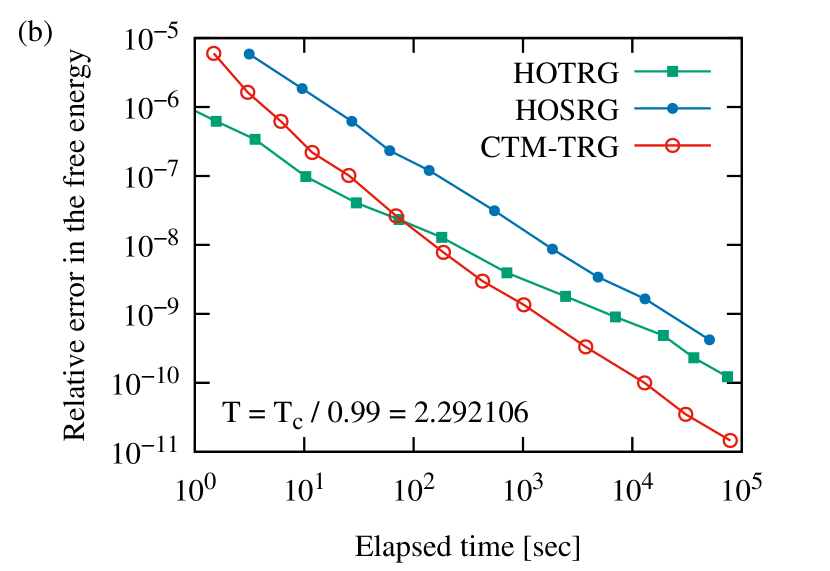

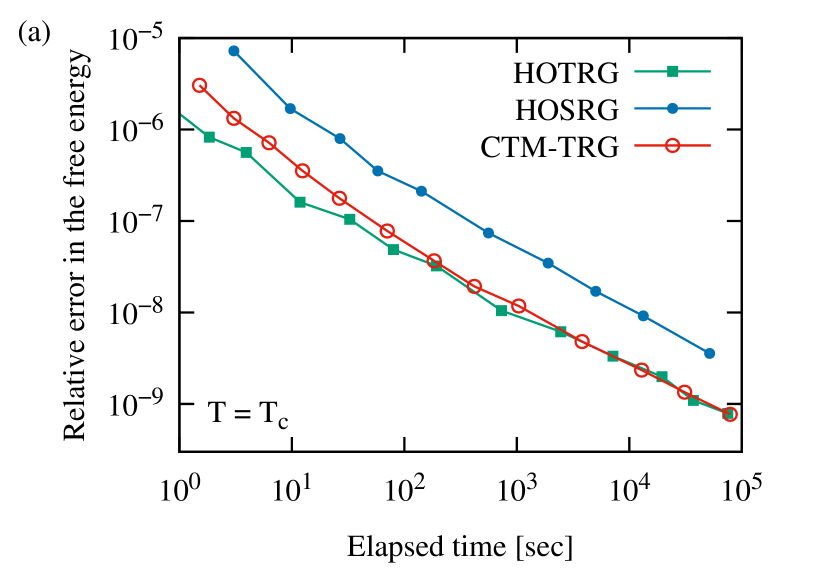

Figure 8 shows the time-to-solution at and . CTM-TRG always outperform the other methods at . This is because the global optimization drastically improves accuracy in the ferromagnetic phase as shown in Fig. 6. In the paramagnetic phase (), CTM-TRG has the steepest slope and achieves the best performance with large bond dimensions.

In Fig. 9, we show the performance of CTM-TRG at criticality. Although CTM-TRG and HOTRG seem to have almost the same time-to-solution, the former should be superior to the latter in larger bond dimensions. As shown by the dashed line in Fig. 9(b), we find that the relative error in the free energy of CTM-TRG is and CTM-TRG achieves the same accuracy with 20% smaller bond dimension than HOTRG. From these facts and scaling of the computational time, we estimate that the crossing point between CTM-TRG and HOTRG exists at about seconds, as shown in Fig. 9(a). The relative error of CTM-TRG and HOTRG is proportional to and , respectively.

IV Discussion and conclusions

In this paper, we consider the global optimization of a tensor renormalization group and propose the improved algorithm based on the CTM environment. In our proposed algorithm CTM-TRG, the environment tensor of the HOSRG method is replaced by the CTMs and edge tensors. Since the CTM environment can be easily updated by using CTMRG, our algorithm does not require any backward iteration. In addition, we introduce an additional approximation by decomposing the four-rank tensor into three-rank tensors which reduces the order of the computational cost for tensor contraction without a serious reduction in the overall accuracy. The computational cost of each step in CTM-TRG is bounded by , while HOTRG and HOSRG have computational cost. Therefore, our algorithm can produce almost the same accuracy as HOSRG within a smaller computational time. We also show that the time-to-solution of CTM-TRG is shorter than HOTRG and HOSRG in the paramagnetic and ferromagnetic phases.

In the present work, we use the standard CTMRG method to calculated the CTM environment [9, 13]. Recently, a variational method based on the uniform matrix product state was proposed [17] and it was applied to the contraction of two-dimensional tensor networks [18]. The edge tensors construct an eigenvector of the row-to-row (or column-to-column) transfer matrix represented as a matrix product operator, and the CTM is calculated as a solution of the fixed point equation. This algorithm improves the convergence speed of the CTM environment, especially near the critical point. Thus usage of such a variational approach instead of CTMRG may improve the performance of our proposed algorithm.

We also comment on the applicability of CTM-TRG to higher-dimensional systems. Although we have focused on the two-dimensional systems in this paper, the global optimization using the CTM environment can be generalized to higher dimensions. In three dimensions, the face tensor in addition to the CTMs and edge tensors is necessary to construct the environment [19]. The CTM calculation in high dimensions is more difficult than that in two dimensions because of the slow decay of the singular values. We may need to use additional techniques to obtain accurate results as mentioned below.

It is known that TRG and HOTRG may converge to a fictitious fixed point owing to short-range entanglement [20, 21], and the calculation of the scaling dimensions from eigenvalues of the transfer matrix fails [20, 14]. Even in tensor renormalization methods with the global optimization, such as HOSRG, short-range correlations are not removed completely. Such a problem also occurs in our proposed algorithm. Thus, catching critical phenomena with better accuracy may eventually require the introduction of entanglement filtering techniques [14, 22, 23, 24, 25]. In particular, the loop entanglement filtering [14] and the full environment truncation [25] seem to fit well with the network structure shown in Fig. 4(b). Moreover, entanglement filtering using the environment may remove short-range correlations more efficiently. However, this is out of the scope of the present work and remains for future work.

Acknowledgements.

The authors would like to thank K. Harada, and T. Okubo for valuable discussions. The computation in this work was partially executed on computers at the Supercomputer Center, the Institute for Solid State Physics, the University of Tokyo. This research was supported by MEXT as part of the “Exploratory Challenge on Post-K Computer” (Challenge of Basic Science—Exploring Extremes through Multi-Physics and Multi-Scale Simulations) and by JSPS KAKENHI Grants No. JP19H01809 and No. JP20K03780.References

- Orús [2014] R. Orús, Ann. Phys. 349, 117 (2014).

- Ran et al. [2020] S.-J. Ran, E. Tirrito, C. Peng, X. Chen, L. Tagliacozzo, G. Su, and M. Lewenstein, Tensor Network Contractions: Methods and Applications to Quantum Many-Body Systems (Springer International, New York, 2020).

- Levin and Nave [2007] M. Levin and C. P. Nave, Phys. Rev. Lett. 99, 120601 (2007).

- Xie et al. [2012] Z. Y. Xie, J. Chen, M. P. Qin, J. W. Zhu, L. P. Yang, and T. Xiang, Phys. Rev. B 86, 045139 (2012).

- Xie et al. [2009] Z. Y. Xie, H. C. Jiang, Q. N. Chen, Z. Y. Weng, and T. Xiang, Phys. Rev. Lett. 103, 160601 (2009).

- Zhao et al. [2010] H. H. Zhao, Z. Y. Xie, Q. N. Chen, Z. C. Wei, J. W. Cai, and T. Xiang, Phys. Rev. B 81, 174411 (2010).

- Chen et al. [2020] B.-B. Chen, Y. Gao, Y.-B. Guo, Y. Liu, H.-H. Zhao, H.-J. Liao, L. Wang, T. Xiang, W. Li, and Z. Y. Xie, Phys. Rev. B 101, 220409 (2020).

- Baxter [1982] R. J. Baxter, Exactly Solved Models in Statistical Mechanics (Academic Press, London, 1982).

- Nishino and Okunishi [1996] T. Nishino and K. Okunishi, J. Phys. Soc. Jpn. 65, 891 (1996).

- Nishino and Okunishi [1997] T. Nishino and K. Okunishi, J. Phys. Soc. Jpn. 66, 3040 (1997).

- [11] F. Verstraete and J. I. Cirac, arXiv:cond-mat/0407066 .

- [12] L. Wang and F. Verstraete, arXiv:1110.4362 .

- Corboz et al. [2014] P. Corboz, T. M. Rice, and M. Troyer, Phys. Rev. Lett. 113, 046402 (2014).

- Yang et al. [2017] S. Yang, Z.-C. Gu, and X.-G. Wen, Phys. Rev. Lett. 118, 110504 (2017).

- Iino et al. [2019] S. Iino, S. Morita, and N. Kawashima, Phys. Rev. B 100, 035449 (2019).

- Morita et al. [2018] S. Morita, R. Igarashi, H.-H. Zhao, and N. Kawashima, Phys. Rev. E 97, 033310 (2018).

- Zauner-Stauber et al. [2018] V. Zauner-Stauber, L. Vanderstraeten, M. T. Fishman, F. Verstraete, and J. Haegeman, Phys. Rev. B 97, 045145 (2018).

- Fishman et al. [2018] M. T. Fishman, L. Vanderstraeten, V. Zauner-Stauber, J. Haegeman, and F. Verstraete, Phys. Rev. B 98, 235148 (2018).

- Nishino and Okunishi [1998] T. Nishino and K. Okunishi, J. Phys. Soc. Jpn. 67, 3066 (1998).

- Gu and Wen [2009] Z. C. Gu and X. G. Wen, Phys. Rev. B 80, 155131 (2009).

- Ueda et al. [2014] H. Ueda, K. Okunishi, and T. Nishino, Phys. Rev. B 89, 075116 (2014).

- Ying [2016] L. Ying, Multiscale Model. Simul. 15, 1423 (2016).

- Harada [2018] K. Harada, Phys. Rev. B 97, 045124 (2018).

- Hauru et al. [2018] M. Hauru, C. Delcamp, and S. Mizera, Phys. Rev. B 97, 045111 (2018).

- Evenbly [2018] G. Evenbly, Phys. Rev. B 98, 085155 (2018).