GFS-VO: Grid-based Fast and Structural Visual Odometry

Abstract

In the field of Simultaneous Localization and Mapping (SLAM), researchers have always pursued better performance in terms of accuracy and time cost. Traditional algorithms typically rely on fundamental geometric elements in images to establish connections between frames. However, these elements suffer from disadvantages such as uneven distribution and slow extraction. In addition, geometry elements like lines have not been fully utilized in the process of pose estimation. To address these challenges, we propose GFS-VO, a grid-based RGB-D visual odometry algorithm that maximizes the utilization of both point and line features. Our algorithm incorporates fast line extraction and a stable line homogenization scheme to improve feature processing. To fully leverage hidden elements in the scene, we introduce Manhattan Axes (MA) to provide constraints between local map and current frame. Additionally, we have designed an algorithm based on breadth-first search for extracting plane normal vectors. To evaluate the performance of GFS-VO, we conducted extensive experiments. The results demonstrate that our proposed algorithm exhibits significant improvements in both time cost and accuracy compared to existing approaches.

I INTRODUCTION

Simultaneous Localization and Mapping (SLAM) is a vital task in computer vision, enabling autonomous systems like robots, drones, and unmanned vehicles to navigate and create maps of unknown environments. A comprehensive SLAM framework typically consists of three core components: front end, back end, and loop detection. However, some frameworks opt to exclude loop detection to meet real-time and lightweight requirements. These frameworks are commonly known as odometry. Visual odometry is one such technique that has garnered substantial attention from the fields of computer vision and robotics. It utilizes sequences of images as input, providing advantages such as portability, cost-effectiveness, and robustness to environmental conditions.

In feature-based visual odometry, the utilization of geometry features plays a critical role in establishing frame-to-frame connections. Point features are commonly used due to their ease of extraction and abundance in the environment. However, they are susceptible to lighting variations, occlusion, and blur, resulting in decrease in pose estimation accuracy. One solution is incorporating line features into framework. Line features exhibit greater robustness to environmental factors compared to point features, offering more stable constraints between frames. However, existing research on line features has certain shortcomings, which can be summarized as follows:

-

1.

High cost of extraction. Existing approaches commonly employ LSD [1] as line extractor, which is readily accessible through OpenCV functions. However, the computational time required to calculate line-support region is prohibitively expensive, which contradicts the real-time requirements of visual odometry.

-

2.

Inhomogeneous distribution of lines in the image. Both point and line features exhibit a common weakness of uneven distribution, being abundant in textured areas but scarce in regions with low texture. This imbalance frequently leads to pose estimation inaccuracies.

-

3.

Underutilization of Line feature. During the process of pose estimation and optimization, line do not exhibit significant difference compared to point.

In light of the aforementioned deficiencies, we present GFS-VO, a novel RGB-D camera-based visual odometry approach. Our contributions are outlined as follows:

-

•

We optimize extraction of line and analyze difficulties associated with line homogenization. To address these challenges, we propose three strategies that effectively achieve line homogenization.

-

•

We design a plane normal vector extraction algorithm based on breadth-first search, which achieves faster and more accurate extraction of MA than existing methods.

-

•

We introduce a visual odometry framework that combines point and line features. A variety of constraints are employed to obtain more precise estimations of pose.

II RELATED WORK

In visual odometry, geometry features are widely used for pose estimation. Among these features, point features, as the most basic geometry element, play an essential role in various algorithms. They can be extracted and described quickly and accurately in most scenes, resulting in preferable performance of point feature-based frameworks [2, 3, 4, 5]. However, the sensitivity and instability of point features have prompted researchers to integrate more robust feature such as line feature into their framework [6, 7, 8].

Compared to point, line extraction is more time-consuming. The Line Segment Detector (LSD) [1] estimates the rectangular approximation of line support region based on the angle of each pixel and then calculate parameter of line. Although the gradient-based growing process is fast, the calculation and validation of support region incur time costs, ultimately affecting overall speed. [9] also utilizes a growing process to detect lines but replaces the support region with anchors, which significantly accelerates extraction speed.

In addition to extraction speed, optimizations are employed to handle unique properties of line features. [10] address line cracks by connecting lines based on the current gradient direction. [11][12] utilize collinear constraints to compensate for instability caused by fractures. Furthermore, the inhomogeneous distribution of geometry features also poses challenge. The quadtree structure adopted in [3] achieves point homogenization by placing feature points into nodes and preferentially dividing nodes based on the number of feature points within them. However, applying the same division approach to line features presents difficulties in allocating lines effectively, as incomplete grid coverage would compromise the homogenization effect.

Lastly, the utilization of line features in visual odometry frameworks remains limited. [6][13] calculate line reprojection using endpoint-to-line distance and minimize sum of point and line errors to estimate camera’s pose. [14] extend the steps of SVO [15] to handle lines. In this case, the intensity residual for a given line is defined as the photometric error between sampled pixels on the 3D line. Notably, the usage of point and line features does not exhibit significant differences in these scenarios.

To leverage line features, several algorithms [16, 17, 18, 19, 20, 21] incorporate the Manhattan world hypothesis into their frameworks. The Manhattan world hypothesis [22] asserts that in structured scenes like Fig. 1, it is possible to extract a perpendicular Manhattan Axis (MA). Lines in these scenes exhibit parallel or vertical relationships with the axes of MA. However, the calculation of MA poses a significant challenge in its utilization. Current methods predominantly rely on plane normal vectors and line direction vectors to compute MA. But extraction of plane is more challenging than point and line. [17] applies neural network to segment plane regions and estimate plane normal vectors. [23] [18] utilize integral graph of pixel normal vector to extract parameter of plane. Given the real-time requirements of visual odometry, the development of a fast and precise method for detecting normal vectors becomes paramount for the successful integration of the MA.

III METHOD

The structure of GFS-VO is demonstrated in Fig. 2. The system start with geometry feature extraction. In spatical feature extraction, we use homogenized lines and plane normal vector to calculate MA. Multi feature constraint will be used in the following pose estimation and optimization. Further details can be found in the following of this chapter.

III-A Feature Extraction

Feature used in GFS-VO can be divided in two kinds: geometry and spatial. Geometry features are extracted using separate threads. For point features, we utilize ORB[24] to extract and describe points. In contrast to commonly used method [1], we employ EDLine[9] for line detection. Considering long lines provide more stable observations across frames, we adopt line connection strategy proposed in [10]. This strategy extends broken lines along the current gradient direction to connect them. Finally, extracted lines are described using LBD descriptor [25].

In GFS-VO, we incorporate plane feature primarily to extract MA, which relies on plane normal vectors. To achieve accurate and efficient extraction, we devise a Breadth-First Search based approach. Algorithm 1 provides an overview of our method. Firstly, we reconstruct 3D positions of pixels using depth image. Next, we examine the angle between normal vectors of current pixel and adjacent pixels. If the angle is below threshold, we consider these two pixels to have the same direction and belong to a same plane. Subsequently, we perform successive search to identify and count the number of pixels with same direction within one search. Only planes with enough same direction pixels are deemed valid planes. To determine the normal vector of a plane, we compute the average of normal vectors of all same direction pixels associated with that plane. Fig .3 illustrates the distinction between our method and other approaches.

Spatial feature used in our algorithm is mainly MA. We adopt method inspired by [18], which utilizes plane normal vectors and 3D line direction vectors. To get accurate 3D lines, after homogenization, we filter out depth-illegal pixels and offset-illegal pixels and then calculate parameters of 3d lines. Extracted spatial features will be employed in optimization which is introduced in section III-C.

III-B Grid based Line Homogenization

III-B1 Grid Structure

We employ grid structure to divide image into separate areas, with each area referred to as a grid. The grid structure offers advantage of showing feature’s distribution in image. We then build a bipartite index to establish connections between grids and lines, which serves as the foundation of subsequent processes such as line homogenization and tracking.

III-B2 Line Homogenization Strategies

As mentioned in chapter II, the challenge in line homogenization primarily lies in node allocation. To address this issue, we propose three line homogenization methods. The idea is as follows:

-

•

Quadtree based scheme: For lines in the image, a marker is added to all the grids they traverse. The sum of markers within a grid is considered as record.

-

•

Midpoint-Quadtree based scheme: Lines are assigned to a specific grid based on the position of their midpoint. The sum of midpoints within a grid is record.

-

•

Score based scheme: Linse within each grid are rewarded or penalized based on their average gradient. A scoring mechanism is employed to rank all lines, and a portion of lines with the highest scores are retained.

The first two scheme are extension of point homogenization. Our primary focus is on finding a unique node to represent a given line. In Quadtree-based scheme, we assign a record to every grid that line passes through. On the other hand, Midpoint-Quadtree scheme only adds a record to grid where the midpoint of line is located. As a result, the record of each grid can effectively describe density of lines within a specific range. Similar to point homogenization, subsequent division step can be performed based on records of grids.

Score-based scheme is designed from a global perspective. During the comparison experiment, we noticed that in some dense part of image like Fig. 4, complete divide a line into corresponding region of a node becomes challenging due to line’s inherent extension characteristics. Consequently, this often results in the incomplete homogenization. The key is that the selection of a line within a node does not imply that it is the best line, but rather that it is comparatively better than the other within a specific area. Building upon this concept, we replace division step in Quadtree or Midpoint-Quadtree based schemes with a scoring mechanism. In this approach, we prioritize lines with the highest average gradient and penalize the other within same grid. These rewards and punishments are reflected in the scores assigned to each line, which are determined based on the number of lines present in adjacent area of the grid.

Given that only one line in each grid is awarded while all remaining lines are punished, there is a significant disparity between numbers of punishment and award. To ensure fair selection of punished lines in the global screening process, we introduce an asymmetric score value, where the deduction for punished lines is less than the award. This asymmetric score value can be calculated as formula (1) and (2):

| (1) | |||||

| (2) | |||||

| (3) |

where is the number of lines in adjacent area of current grid and is the number of grids that line has passed through. After calculating score of all lines, we retain lines with higher scores as the outcome of homogenization.

Furthermore, it is important to note that the average gradient can’t reflect line’s length. Consequently, longer lines tend to receive lower scores due to their participation in scoring multiple times. In scenarios where there is a significant disparity in line’s length, it is recommended to utilize formula (3), which reduces deduction value for longer lines by incorporating an exponential function in denominator.

III-C Visual Odometry

III-C1 Grid-based Tracking

When system is able to accurately estimate speed, the change between two frames is not expected to be significant. Under these circumstances matching time can be greatly reduced by leveraging grid structure. Specifically, we first find grids that the line passes through, as well as neighboring grids. Lines that pass through these grids are selected as candidate matches. Subsequently, descriptor matching is performed between given line and candidate lines. This approach reduces the number of candidate matches compared to one-to-one exhaustive calculation. Furthermore, it incorporates geometric positioning into the matching process, thereby enhancing both the accuracy and speed of matching procedure.

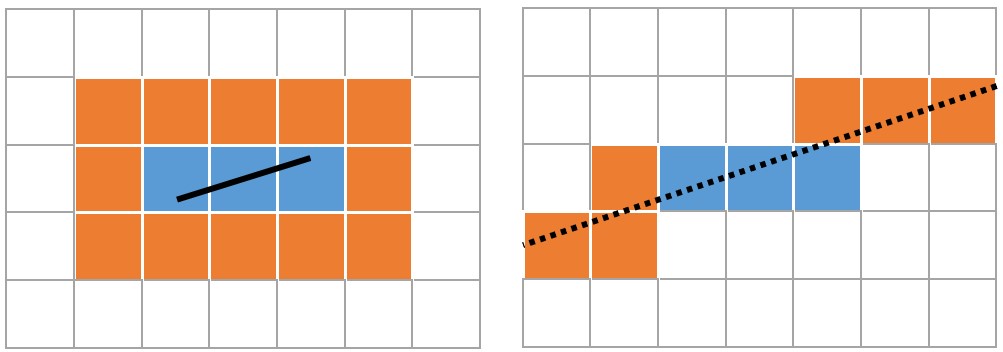

In scenarios where estimated speed is unstable, matched line obtained from tracking tends to decrease. To address this issue, we can employ method of expanding search scope. Simply expanding the search radius, as done with point features, may not be effective due to the instability of line length. Nonetheless, the fracture of line segments does not alter their slope. Therefore, when tracking performance is poor, we can utilize an extension tracking method, as depicted in Fig. 5. The grids that the extension line passes through are then considered as an additional search range.

III-C2 Pose Estimation

Pose will be optimized when we get enough matched feature. For matched points and lines, we use constraints in [2] and [6] to calculate rotation and translation. For two consecutive frames, lowercase letters are used to represent features in the image plane, while uppercase letters represent features in world coordinates.

| (4) |

where is a binary function which return 1 only if point and is matched. is Huber loss function to reduce interfaces of noise. is projection function used to project 3d points into image plane. In a similar way, the reprojection error of a map line can be defined as:

| (5) |

where and represent the coordinates of two endpoints of line and represents line’s normal vector. Base on this, loss function needs to be minimized can be defined as:

| (6) |

Formula (9) will be optimized by LM algorithm in g2o library [26]. Once the pose of the current frame is determined, we establish the connection between local map and current frame by utilizing both the pose and observation information.

III-C3 Keyframe Select and Filter

Line homogenization introduces some instability to line features, which can result in reduction of map lines and reduction in their observation results. This may lead to tracking deviations. To address this issue, we propose two solutions. Firstly, we adjust the threshold for point and line observations when selecting keyframes, thereby weakening the connection between keyframes and local map. This adjustment helps mitigate the impact of line instability on the overall system. Secondly, in filtering keyframe, we extend point-based strategy to a point-and-line strategy. This means that a keyframe will only be considered redundant if there is a significant overlap in observations between both points and lines. By incorporating both point and line information, we ensure a more robust determination of redundant keyframes.

III-C4 Local Optimization

Considering structural constraints existing in line segments and MA, the way used in [19] is adopted here to embed structural constraints into optimization. The pose of covisible keyframe and coordinates of covisible elements will be optimizated. For a given keyframe , we can get keyframes that have covisibility relationships from convisibility graph. We use and to represent map point and line seen by . Set of keyframes that observe and but don’t connect to are also considered in optimization, but their pose is fixed. What we need to optimize is , so the loss function is:

| (7) |

This formula is composed of three parts, which respectively correspond to reprojection error(), structural constraints(), and parallel relation constraints with manhattan axis(). Specifically, is similar to the definition in formula (6), but match relationship changes to 2d feature and map elements. Structural constraints error is used to reflect the parallel and vertical relationship, which can be defined as:

| (8) |

where and are sets of perpendicular and parallel line pairs in . and is given by:

| (9) | |||||

| (10) |

Finally, We use to represent map line that associated with a MA and seen by any keyframe in . is used to reflect the parallel relationship between given line and extracted MA, which can be given by:

| (11) |

IV EXPERIMENT RESULT

To check performance of our algorithm, we carried out sufficient experiments and compared with the latest algorithms. Considering that dataset collected in real scene always exist depth-illegal pixels, we also examine our performance in virtual scenes. All experiments have been performed on an Intel Core i5-10400 CPU @ 2.90GHz × 12/16GB RAM, without GPU parallelization.

IV-A Line homogenization

Fig. 6 presents results of homogenization in a randomly selected image from TUM dataset. Dense areas in image are highlighted by red circles (Fig. 6(a)). It can be observed that each of the three methods has its own advantages.

Score-based scheme (Fig. 6(b)) exhibits noticeable performance, particularly in highly dense regions. This is primarily because, in such areas, the increase or decrease in score is largely influenced by the number of lines in adjacent grid. As a result, it becomes highly unlikely for poor-quality lines to be retained during subsequent screening process. Midpoint-Quadtree based scheme (Fig. 6(c)) represents the most balanced approach from a global perspective, as it selects only one line to be retained in each grid. This strategy ensures a more even distribution of retained lines throughout the map. However, the quadtree-based strategy (Fig. 6(d)) may face challenges in detecting dense locations, which leads to relatively weaker performance compared to the other two methods.

As SLAM is a framework with stringent real-time requirements, we also assess time consumption of proposed homogenization algorithm. We randomly select a group of images from TUM dataset and recorded their processing times. Our results show that the Score-based and Midpoint-Quadtree-based methods outperform the Quadtree-based method, with an average processing time of 4ms. However, the Quadtree-based method still performs well, taking only 6ms to complete. Our findings indicate that there are no significant speed differences among the three methods. All three strategies effectively filter lines within dense areas of the image without significantly impacting overall processing speed.

IV-B Framework Performance Comparison

We select widely used RGB-D datasets ICL-NUIM [27] and TUM-RGBD [28] to evaluate performance of our framework. ICL-NUIM consists of eight indoor sequences captured in two different scenes. These scenes present challenges such as low-textured regions and uneven feature distributions, which can lead to pose estimation deviations. Additionally, this dataset has optimized depth map noise, ensuring all pixel depths are valid. TUM also comprises several indoor sequences captured under various environmental conditions. Unlike ICL-NUIM, TUM-RGBD includes depth noise. We utilize ICL-NUIM to evaluate performance under ideal conditions and TUM-RGBD to assess performance in real-world scenes.

IV-B1 Time Performance Comparison

| Sequence | MSC-VO[19] | GFS-VO | |

| Midpoint-Quadtree | Score | ||

| fr1_xyz | 0.2833/0.0473 | 0.0972/0.0296 | 0.1550/0.0313 |

| fr1_desk | 0.4225/0.0475 | 0.1315/0.0326 | 0.2111/0.0298 |

| fr3_longoffice | 0.5434/0.0487 | 0.1735/0.0289 | 0.1289/0.0306 |

| lr_kt0 | 0.1291/0.0288 | 0.0641/0.0203 | 0.0657/0.0186 |

| lr_kt1 | 0.2157/0.0363 | 0.0197/0.0225 | 0.0231/0.0238 |

| lr_kt2 | 0.1777/0.0349 | 0.0347/0.0228 | 0.0352/0.0231 |

| lr_kt3 | 0.1604/0.0305 | 0.0319/0.0191 | 0.0318/0.0178 |

| of_kt0 | 0.1359/0.0361 | 0.0627/0.0250 | 0.0449/0.0246 |

| of_kt1 | 0.1657/0.0327 | 0.0426/0.0191 | 0.0546/0.0286 |

| of_kt2 | 0.1513/0.0343 | 0.0399/0.0225 | 0.0774/0.0227 |

| of_kt3 | 0.1247/0.0414 | 0.0468/0.0241 | 0.0321/0.0277 |

To assess time cost of proposed feature extraction method, we conducted a comparison between GFS-VO and MSC-VO[19], both utilizing MA for trajectory estimation. The results, presented in Table I, are represented by ”MA extraction/feature process”. It is important to note that in MSC-VO, the feature processing time includes both extraction and reconstruction of geometry features and the extraction of plane normal vectors. On the other hand, in GFS-VO, this processing time also encompasses grid distribution and line homogenization. Based on the results presented in Table I, it is evident that GFS-VO significantly improves the speed of feature extraction.

The reduction in time cost can be attributed to three main factors. Firstly, in geometry feature extraction, we employ EDLINE instead of LSD, which reduces line extraction time. Secondly, line homogenization scheme in our algorithm reduces the number of line segments involved in reconstruction. Lastly, BFS-based approach enables accurate and rapid extraction of normal vectors from image. This helps in reducing unstable features and mitigating the influence of noise during the MA extraction, thereby improving extraction speed without compromising accuracy.

At the same time, we also noticed deficiencies of GFS-VO. For datasets with large changes in the amount of line (like Fig. 7), careful consideration should be given to setting the homogenization threshold. The main challenge arises from the fact that the threshold required for locations with abundant line features differs from that needed for sparser areas. Setting a small threshold effectively filters lines in rich locations, but it may not apply in sparse areas, and vice versa. There is no universal scale to measure the intensity of line features in all scenes.

| Sequence | GFS-VO | MSC-VO[19] | SReg[16] | ManhattanSLAM[21] | LPVO[29] | Structure-SLAM[17] | ORBSLAM2[3] | PS-SLAM[30] | InfiniTAM[31] | |

| Midpoint-quadtree | Score | |||||||||

| lr_kt0 | 0.0082 | 0.0063 | 0.006 | 0.006 | 0.007 | 0.01 | NA | 0.025 | 0.016 | NA |

| lr_kt1 | 0.0116 | 0.0105 | 0.010 | 0.015 | 0.011 | 0.04 | 0.016 | 0.008 | 0.018 | 0.006 |

| lr_kt2 | 0.0083 | 0.0102 | 0.009 | 0.020 | 0.015 | 0.03 | 0.045 | 0.023 | 0.017 | 0.013 |

| lr_kt3 | 0.0152 | 0.0241 | 0.038 | 0.012 | 0.011 | 0.10 | 0.046 | 0.021 | 0.025 | NA |

| of_kt0 | 0.0210 | 0.0190 | 0.028 | 0.041 | 0.025 | 0.06 | NA | 0.037 | 0.032 | 0.042 |

| of_kt1 | 0.0160 | 0.0171 | 0.017 | 0.020 | 0.013 | 0.05 | NA | 0.029 | 0.019 | 0.025 |

| of_kt2 | 0.0146 | 0.0127 | 0.014 | 0.011 | 0.015 | 0.04 | 0.031 | 0.039 | 0.026 | NA |

| of_kt3 | 0.0108 | 0.0096 | 0.010 | 0.014 | 0.013 | 0.03 | 0.065 | 0.065 | 0.012 | 0.010 |

| fr1_desk | 0.0178 | 0.0167 | 0.019 | NA | 0.027 | NA | NA | 0.022 | 0.026 | NA |

| fr1_xyz | 0.0094 | 0.0109 | 0.010 | NA | 0.010 | NA | NA | 0.010 | 0.010 | NA |

| fr2_desk | 0.0135 | 0.0200 | 0.023 | NA | 0.037 | NA | NA | 0.040 | 0.025 | NA |

| fr2_xyz | 0.0036 | 0.0037 | 0.005 | NA | 0.008 | NA | NA | 0.009 | 0.009 | NA |

| fr3_long_office | 0.0188 | 0.0209 | 0.022 | NA | NA | 0.19 | NA | 0.028 | NA | NA |

-

•

* We use NA to stand for unavailable result. The best result is shown in orange while the second best is show in blue.

IV-B2 Accuracy Comparison

We use Root-Mean-Square Error (RMSE) of absolute trajectory error as evaluation standard. The performance of other methods in experiment are from the best results provided in the respective papers. The comparison is shown in Tab. II.

Upon experiment results, we notice that improvement in virtual scenes is limited. This limitation primarily stems from the scarcity of stable features in these datasets, particularly in the living room dataset. The instability of line features, in terms of length and quantity, not only affects the accuracy of pose estimation but also impacts MA extraction. Conversely, in TUM dataset, which represents real scenes, GFS-VO demonstrates significant improvement. We attribute this improvement to the complexity of point and line features in actual scenes, which highlights the benefits brought about by line homogenization. On one hand, our method preserves longer lines in the scene compared to traditional response-based line screening, establishing a more stable observation relationship between frames. On the other hand, homogenization removes short lines in dense areas. These short lines are more unstable and prone to errors in matching and pose estimation. The removal of such lines positively impacts the overall accuracy.

V CONCLUSION

This paper presents GFS-VO, a fast-structural visual odometer based on grid. Leveraging the grid structure, we design stable line homogenization and accurate line tracking algorithm. To fully use line feature, we introduce MA into our framework. Considering the real-time requirement of visual odometry, we also propose a plane normal vector extraction method to calculate MA faster. The experiment result shows that our method has a significant improvement in both accuracy and speed. For future work, we will continue to refine the line homogenization strategy and explore alternative approaches for measuring intensity. Furthermore, we also aim to investigate the impact of the position between point and line features on the accuracy of visual odometry, addressing the issues identified during our experiments.

References

- [1] R. G. Von Gioi, J. Jakubowicz, J.-M. Morel, and G. Randall, “Lsd: A line segment detector,” Image Processing On Line, vol. 2, pp. 35–55, 2012.

- [2] R. Mur-Artal, J. M. M. Montiel, and J. D. Tardos, “Orb-slam: a versatile and accurate monocular slam system,” IEEE transactions on robotics, vol. 31, no. 5, pp. 1147–1163, 2015.

- [3] R. Mur-Artal and J. D. Tardós, “Orb-slam2: An open-source slam system for monocular, stereo, and rgb-d cameras,” IEEE transactions on robotics, vol. 33, no. 5, pp. 1255–1262, 2017.

- [4] C. Campos, R. Elvira, J. J. G. Rodríguez, J. M. Montiel, and J. D. Tardós, “Orb-slam3: An accurate open-source library for visual, visual–inertial, and multimap slam,” IEEE Transactions on Robotics, vol. 37, no. 6, pp. 1874–1890, 2021.

- [5] T. Qin, P. Li, and S. Shen, “Vins-mono: A robust and versatile monocular visual-inertial state estimator,” IEEE Transactions on Robotics, vol. 34, no. 4, pp. 1004–1020, 2018.

- [6] A. Pumarola, A. Vakhitov, A. Agudo, A. Sanfeliu, and F. Moreno-Noguer, “Pl-slam: Real-time monocular visual slam with points and lines,” in 2017 IEEE international conference on robotics and automation (ICRA). IEEE, 2017, pp. 4503–4508.

- [7] Q. Fu, J. Wang, H. Yu, I. Ali, F. Guo, Y. He, and H. Zhang, “Pl-vins: Real-time monocular visual-inertial slam with point and line features,” arXiv preprint arXiv:2009.07462, 2020.

- [8] Y. He, J. Zhao, Y. Guo, W. He, and K. Yuan, “Pl-vio: Tightly-coupled monocular visual–inertial odometry using point and line features,” Sensors, vol. 18, no. 4, p. 1159, 2018.

- [9] C. Akinlar and C. Topal, “Edlines: A real-time line segment detector with a false detection control,” Pattern Recognition Letters, vol. 32, no. 13, pp. 1633–1642, 2011.

- [10] I. Suárez, J. M. Buenaposada, and L. Baumela, “Elsed: Enhanced line segment drawing,” Pattern Recognition, vol. 127, p. 108619, 2022.

- [11] L. Zhou, G. Huang, Y. Mao, S. Wang, and M. Kaess, “Edplvo: Efficient direct point-line visual odometry,” in 2022 International Conference on Robotics and Automation (ICRA). IEEE, 2022, pp. 7559–7565.

- [12] L. Zhou, S. Wang, and M. Kaess, “Dplvo: Direct point-line monocular visual odometry,” IEEE Robotics and Automation Letters, vol. 6, no. 4, pp. 7113–7120, 2021.

- [13] Q. Wang, Z. Yan, J. Wang, F. Xue, W. Ma, and H. Zha, “Line flow based simultaneous localization and mapping,” IEEE Transactions on Robotics, vol. 37, no. 5, pp. 1416–1432, 2021.

- [14] R. Gomez-Ojeda, J. Briales, and J. Gonzalez-Jimenez, “Pl-svo: Semi-direct monocular visual odometry by combining points and line segments,” in 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2016, pp. 4211–4216.

- [15] C. Forster, M. Pizzoli, and D. Scaramuzza, “Svo: Fast semi-direct monocular visual odometry,” in 2014 IEEE International Conference on Robotics and Automation (ICRA), 2014, pp. 15–22.

- [16] Y. Li, R. Yunus, N. Brasch, N. Navab, and F. Tombari, “Rgb-d slam with structural regularities,” in 2021 IEEE international conference on Robotics and automation (ICRA). IEEE, 2021, pp. 11 581–11 587.

- [17] Y. Li, N. Brasch, Y. Wang, N. Navab, and F. Tombari, “Structure-slam: Low-drift monocular slam in indoor environments,” IEEE Robotics and Automation Letters, vol. 5, no. 4, pp. 6583–6590, 2020.

- [18] P. Kim, B. Coltin, and H. J. Kim, “Low-drift visual odometry in structured environments by decoupling rotational and translational motion,” in 2018 IEEE international conference on Robotics and automation (ICRA). IEEE, 2018, pp. 7247–7253.

- [19] J. P. Company-Corcoles, E. Garcia-Fidalgo, and A. Ortiz, “Msc-vo: Exploiting manhattan and structural constraints for visual odometry,” IEEE Robotics and Automation Letters, vol. 7, no. 2, pp. 2803–2810, 2022.

- [20] J. Straub, G. Rosman, O. Freifeld, J. J. Leonard, and J. W. Fisher, “A mixture of manhattan frames: Beyond the manhattan world,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2014, pp. 3770–3777.

- [21] R. Yunus, Y. Li, and F. Tombari, “Manhattanslam: Robust planar tracking and mapping leveraging mixture of manhattan frames,” in 2021 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2021, pp. 6687–6693.

- [22] J. M. Coughlan and A. L. Yuille, “Manhattan world: Compass direction from a single image by bayesian inference,” in Proceedings of the seventh IEEE international conference on computer vision, vol. 2. IEEE, 1999, pp. 941–947.

- [23] D. Holz, S. Holzer, R. B. Rusu, and S. Behnke, “Real-time plane segmentation using rgb-d cameras,” in RoboCup 2011: Robot Soccer World Cup XV 15. Springer, 2012, pp. 306–317.

- [24] E. Rublee, V. Rabaud, K. Konolige, and G. Bradski, “Orb: An efficient alternative to sift or surf,” in 2011 International conference on computer vision. Ieee, 2011, pp. 2564–2571.

- [25] L. Zhang and R. Koch, “An efficient and robust line segment matching approach based on lbd descriptor and pairwise geometric consistency,” Journal of visual communication and image representation, vol. 24, no. 7, pp. 794–805, 2013.

- [26] R. K”̈ummerle, G. Grisetti, H. Strasdat, K. Konolige, and W. Burgard, “g 2 o: A general framework for graph optimization,” in 2011 IEEE International Conference on Robotics and Automation. IEEE, 2011, pp. 3607–3613.

- [27] J. Sturm, N. Engelhard, F. Endres, W. Burgard, and D. Cremers, “A benchmark for the evaluation of rgb-d slam systems,” in 2012 IEEE/RSJ international conference on intelligent robots and systems. IEEE, 2012, pp. 573–580.

- [28] A. Handa, T. Whelan, J. McDonald, and A. J. Davison, “A benchmark for rgb-d visual odometry, 3d reconstruction and slam,” in 2014 IEEE international conference on Robotics and automation (ICRA). IEEE, 2014, pp. 1524–1531.

- [29] P. Kim, B. Coltin, and H. J. Kim, “Low-drift visual odometry in structured environments by decoupling rotational and translational motion,” in 2018 IEEE International Conference on Robotics and Automation (ICRA), 2018, pp. 7247–7253.

- [30] X. Zhang, W. Wang, X. Qi, Z. Liao, and R. Wei, “Point-plane slam using supposed planes for indoor environments,” Sensors, vol. 19, no. 17, p. 3795, 2019.

- [31] V. A. Prisacariu, O. K”̈ahler, S. Golodetz, M. Sapienza, T. Cavallari, P. H. Torr, and D. W. Murray, “Infinitam v3: A framework for large-scale 3d reconstruction with loop closure,” arXiv preprint arXiv:1708.00783, 2017.