\ul

GFairHint: Improving Individual Fairness for Graph Neural Networks via Fairness Hint

Abstract.

Given the growing concerns about fairness in machine learning and the impressive performance of Graph Neural Networks (GNNs) on graph data learning, algorithmic fairness in GNNs has attracted significant attention. While many existing studies improve fairness at the group level, only a few works promote individual fairness, which renders similar outcomes for similar individuals. A desirable framework that promotes individual fairness should (1) balance between fairness and performance, (2) accommodate two commonly-used individual similarity measures (externally annotated and computed from input features), (3) generalize across various GNN models, and (4) be computationally efficient. Unfortunately, none of the prior work achieves all the desirables. In this work, we propose a novel method, GFairHint, which promotes individual fairness in GNNs and achieves all aforementioned desirables. GFairHint learns fairness representations through an auxiliary link prediction task, and then concatenates the representations with the learned node embeddings in original GNNs as a “fairness hint”. Through extensive experimental investigations on five real-world graph datasets under three prevalent GNN models covering both individual similarity measures above, GFairHint achieves the best fairness results in almost all combinations of datasets with various backbone models, while generating comparable utility results, with much less computational cost compared to the previous state-of-the-art (SoTA) method.

1. Introduction

Graph Neural Networks (GNNs) have shown great potential in modeling graph-structured data for various tasks such as node classification, graph classification, and link prediction (Wu et al., 2021). Node classification, particularly, has been applied in many real-world scenarios, such as recruitment (Lambrecht and Tucker, 2019; Zhang et al., 2021), recommendation system (Li et al., 2020; Wu et al., 2022a, 2019, b), and loan default prediction (Hu et al., 2020b; WANG et al., 2022). Although, GNNs play important roles in these decision-making processes, increasing attention is being paid to fairness in graph-structured data and GNNs (Mehrabi et al., 2021a; Dong et al., 2022a; Lambrecht and Tucker, 2019). Since the node representation is learned by aggregating information from its neighbours, the unfairness may be amplified due to such message-passing mechanism in GNNs and result in unexpected discrimination (Dong et al., 2022b; Wang et al., 2022; Kose and Shen, 2022). Taking social networks as an example, users tend to connect with others in the same demographic group. The message-passing mechanism may cause GNNs to perform differently for different demographic groups and have different predictions for similar individuals if they belong to different demographic groups. Such unfairness prevents the adoption of the GNNs in many high-stake applications.

Algorithmic fairness can be defined at both group and individual level (Mehrabi et al., 2021b). While group fairness attempts to treat different groups equally, individual fairness, which is the focus of our work, intends to give similar predictions to similar individuals for a specific task. Compared to group fairness, individual fairness reduces bias directly on each individual. In particular, when information on sensitive attributes is not available in real-world applications, we can only choose individual fairness to scrutinize the discrimination.

A core question for individual fairness is how to define the task-specific similarity metric that measures how similar two individuals are. Dwork et al. (2012) originally envisioned that the metric would be provided by human experts “as a (near ground-truth) approximation agreed upon by the society”. Lahoti et al. (2019) argues that it is very difficult for experts to measure individuals based on a quantitative similarity metric when in operationalization. They further suggest that it is much easier to make pairwise judgments which results in a binary similarity measure between two individuals. Class-specific similarity where individuals in the same class are considered similar can also be transformed in the binary format. For cases where there is no task-specific similarity metric at hand, other works (Mukherjee et al., 2020; Dong et al., 2021; Kang et al., 2020) use simplified notions by computing continuous similarity metrics (e.g., a weighted Euclidean distance) over a feature space of data attributes. Thus, we argue that an ideal framework to promote individual fairness in models should be compatible with multiple task-specific similarity metrics.

We highlight four desiderata for prompting individual fairness in GNNs based on the discussion above and practical experience when adding a fairness promotion module to the models. (1) The model should achieve a good balance between utility (e.g., classification accuracy) and fairness when making predictions. (2) The proposed method for individual fairness should be compatible with both binary and continuous similarity measures. (3) The fairness promotion method should be compatible with different GNN model architectures and various designs for specific tasks. (4) The additional computational cost introduced to promote fairness should be reasonably small.

In this study, we propose an individual fairness representation learning framework to improve individual FAIRness for GNNs via fairness HINT (GFairHint), with the above desired properties. We consider the setting in which similarity measures are available for each pair of individuals, either binary or continuous. As shown in Figure 1, in addition to the original input graph, we create a fairness graph where the edge between two nodes is weighted by the given similarity measure and does not exist when the similarity value is or below a certain threshold. We then learn a fairness representation for each node from the constructed fairness graph via link prediction, where we encourage the model to recover randomly masked edges. The learned fairness representation is then used as fairness hint to be concatenated with the node embeddings from the original graph, which is trained in parallel to maximize utility with the main GNN model. We feed the concatenated representations to multilayer perceptrons (MLPs) to make fair and accurate predictions.

GFairHint focuses on learning an additional fair representation and is compatible with various GNN model designs. It is orthogonal and complementary to a strategy adopted by most previous work (Lahoti et al., 2019; Dong et al., 2021; Kang et al., 2020), i.e., adding a fairness regularization term to the training objective. Meanwhile, GFairHint is a light-weighted framework. It is more computationally efficient and especially works better with large network datasets in terms of computation cost and both utility and fairness performance, while previous work either takes much longer training time (Dong et al., 2021) or requires large memory allocation, and therefore may fail on some large datasets (Lahoti et al., 2019). Furthermore, GFairHint can benefit from these existing methods with fairness regularized objective functions when integrated together to further improve the performance.

To demonstrate the effectiveness of our proposed method, we conduct extensive empirical evaluations on five datasets for node classification. These datasets apply either continuous similarity measures derived from the input space or binary measures provided by external annotators to quantify the similarity between individuals. Additionally, we experiment with three popular GNN backbone models, resulting in a total of 15 models dataset comparisons. Our GFairHint framework consistently outperforms other methods in terms of fairness, achieving the best results in 12 out of the 15 comparisons. Furthermore, in 9 out of the 15 comparisons, GFairHint achieves the best utility performance, while in the remaining comparisons, it achieves comparable utility results. We summarize our main contributions as follows:

-

•

Plug-and-play Framework: We present GFairHint, a plug-and-play framework for enhancing individual fairness in GNNs. This framework learns a fairness hint through an auxiliary link prediction task. We provide a theoretical analysis on the fairness hint and prove that for any two nodes, the learned hints are individually fair.

-

•

Satisfied Desiderata: GFairHint is compatible with two distinct settings for similarity measures among individuals and can achieve comparable accuracy while generating more individually fair predictions. Additionally, the method is computationally efficient and seamlessly integrates with various model designs.

-

•

Rigorous Experiments: We empirically show that the proposed method achieves the best fairness results in most comparisons (12/15), with the best utility results in the 9/15 comparisons, and comparable utility performance in the other comparisons.

2. Related Work

Fairness for Graph-structured Data

Most previous efforts focus on promoting group fairness in graphs (Rahman et al., 2019a; Li et al., 2021; Wang et al., 2022; Bose and Hamilton, 2019; Dai and Wang, 2021; Buyl and De Bie, 2020), which encourages the same results across different sensitive groups (e.g., demographics). Another line of research work is on counterfactual fairness (Kusner et al., 2017; Ma et al., 2022), which aims to generate the same prediction results for each individual and its counterfactuals.

Few research studies individual fairness in graphs (Dwork et al., 2012; Mehrabi et al., 2021b). Individual fairness intends to render similar predictions to similar individuals for a specific task. Kang et al. (2020) propose a framework called InFoRM (Individual Fairness on Graph Mining) to debias a graph mining pipeline from the input graph (preprocessing), the mining model (processing), and the mining results (postprocessing), but not specifically for GNN models. Song et al. (2022) identify a new challenge to enforce individual fairness informed by group equality. Promoting group and individual fairness at the same time requires group information, which is not available in some real-life scenarios, such as the academic networks studied in this paper. The work closest to ours is REDRESS (Dong et al., 2021). They propose to model individual fairness from a ranking-based perspective and design a ranking-based loss accordingly. However, their method does not generalize well when the similarity measure is binary, especially binary, because calculating the ranking-based loss requires to rank the individuals based on the similarity values but with binary similarity measures (i.e., many individuals on the same similarity level) the rankings are not as informative as in the cases with continuous similarity measures. Moreover, despite their effort on reducing the computation cost and the effectiveness of the ranking-based loss, high computational cost is unavoidable when computing the rank.

Individual Fairness

There are other works focusing on individual fairness, but not specifically for graph-structured data. The definition of individual fairness, similar predictions for similar individuals, can be formulated by the Lipschitz constraint, which inspires works such as Pairwise Fair Representation (PFR) (Lahoti et al., 2019) to learn fair representation as input. This can be considered as a preprocssing method for GNN models. However, the transformation from the original input feature to fair representation may distort the original information in the input features and cause a detriment in the utility performance of the model (Dong et al., 2021). Because it is computationally difficult to enforce Lipschitz constraint, Yurochkin and Sun (2021) propose an in-process method with a lifted constraint and Petersen et al. (2021) propose a post-processing method with Laplacian smoothing. In this work, we compare with REDRESS (Dong et al., 2021) and adapt PFR (Lahoti et al., 2019) and InFoRM (Kang et al., 2020) to work with GNN models. We do not compare with Dwork et al. (2012) because their contribution is mainly conceptual and it was not included as baseline in previous works (Lahoti et al., 2019; Kang et al., 2020; Dong et al., 2021).

3. Proposed Method - GFairHint

3.1. Problem Formulation

The generic definition of individual fairness is individuals who are similar should have similar outcomes (Dwork et al., 2012). We can formulate the similarity between individuals with an oracle similarity matrix , where the value of -th entry is the similarity between the node and . We follow the same setting from previous works (Dong et al., 2021; Kang et al., 2020; Lahoti et al., 2019), where the oracle similarity matrix is given apriori (annotated by external experts or calculated by input features). Depending on the definition of similarity measure, the entry of could be continuous or binary. For graph data and GNN models, we denote the outcome similarity matrix as with the predicted outcome from GNN model, where the -th entry is the similarity between the embeddings and of the nodes and from the last model layer.

Inspired by the individual fairness definition from the previous work (Dwork et al., 2012; Kang et al., 2020), we define the fair condition of GNN model as follows:

Definition 0.

Let and be two nodes in a graph. The output of the model and are individually fair w.r.t. to the node similarity measure and output distance measure if the following condition holds.

| (1) |

where ¿ 0 is a constant for fairness tolerance and if . is the number of nodes in a graph. We define as the fairness bound.

With this definition, our goal to promote the individual fairness can be achieved by narrowing the difference between and .

3.2. Overall Structure

To achieve a good balance between utility and fairness, GNN models need to utilize input information from both sides. We first apply a representation learning method to extract the fairness information from the input and add the learned fairness representation to the original GNN model to promote individual fairness. Our proposed GFairHint framework, as shown in Figure 1, consists of three steps. First (Section 3.3), we construct an unweighted fairness graph, , with the same set of nodes in the original input graph. The undirected edges of represent that two nodes have a high similarity value in . Next (Section 3.4), we obtain the individual fairness hint through a representation learning method that learns fairness representations for the nodes in . Specifically, the representation learning model predicts whether two nodes in have an edge through a GNN link prediction model whose final hidden layer output is used as the fairness hint. Finally (Section 3.5), we concatenate the node fairness hint with the learned node embedding of the GNN for original tasks and use the joint embedding for further training.

3.3. Construction of Fairness Graph

To extract fairness information for each individual via a link-prediction-based representation learning method, we first construct a fairness graph based on the apriori oracle similarity matrix . Note that can be given by incorporating various types of data sources and our fairness graph construction complies with the data sources of . Here, we show two commonly utilized data sources, i.e., external annotation and input feature.

Oracle Similarity Matrix based on External Annotation

Constructing is straightforward when external pairwise judgments are available on whether two individuals should be treated similarly given a specific task (Lahoti et al., 2019). The entry in is when individual and are labeled as similar, and otherwise. In this case, is the adjacency matrix for the fairness graph . An alternative type of judgments is to map individuals into binary equivalence classes. A pair of individuals is linked in the fairness graph, , only if they belong to the same class (Lahoti et al., 2019).

Oracle Similarity Matrix based on Input Features

Although the similarity for individual fairness was originally envisioned to be provided by human experts (Dwork et al., 2012), it is often impractical to obtain for real-world tasks. Previous works (Dong et al., 2021; Mukherjee et al., 2020) obtain the oracle similarity matrix from input feature space i.e., the entry in measures the feature similarity between nodes and , such as cosine similarity. To construct the fairness graph, , we can discretize the continuous cosine values by connecting each node only to its top- similar nodes from .

3.4. Fairness Hint Learning

To incorporate the fairness information into the original GNN model, we learn fairness node representation with a separate GNN model using an auxiliary link prediction task on the fairness graph, . Similarly to masked language modeling in NLP to learn the contextual word embeddings by randomly masking words (Devlin et al., 2018), we expect that predicting whether two nodes share a “similarity” edge inside the fairness graph can yield a valid fairness hint. We provide a theoretical analysis of this expectation later.

We use Graph Convolutional Network (GCN) model (Kipf and Welling, 2016) with GCN layers for link prediction, i.e., predicting whether two nodes in share an edge. The initial input node features of the link prediction model are the same as the features of the original task. For any node embedding (the embedding of node from the th hidden layer), GCN layer combines the node embedding and other node embeddings from its neighbor node set , which is formally denoted as

| (2) |

If setting the output of the last layer in the link prediction model for node and node as and respectively, we use the inner product and sigmoid function as the probability of node and node sharing an edge, as well as the similarity between the outcome. We use cross entropy loss to optimize the link prediction model.

We train this fairness representation learning model separately from the original task GNN model to avoid overfitting. We extract the output of the last layer for each node as the fairness hint, and the fairness hint is reusable for different backbone GNN models. As we show next, after training a link prediction model on the fairness graph, we can obtain individually fair fairness hints. When we feed the fairness hints to the original GNNs, the models can receive similar fairness hints for similar individuals and render similar outcomes.

Theoretical Analysis In our link prediction model, we use the inner product and sigmoid function as the similarity measure between the outcome and the cross entropy loss to optimize the GCN model, so the corresponding loss can be calculated as

| (3) |

where is the label of the fairness edge existence and is the probability of link existence between node and node . Ideally, after optimizing the GNN model to minimize the cross entropy loss to 0, should be 1 for two nodes connected by an edge and 0 for two nodes without any edge. We can then make an assumption that after the model training converges, if and if , for any and where is a small number. Given the inner product as the similarity measure, we can define the distance function of model outputs as . The following conditions should hold.

| (4) |

With Definition 1 and Equation 4, we can deduce the following theorem.

Theorem 2.

Let and be two arbitrary nodes in a graph with node features and . With Definition 1, denote as the fairness tolerance. Assume that a GNN model for link prediction on this graph is trained with the similarity function and then define the output distance function . After training, we assume that if an edge exists between and and if no edge, where is a small number. The outputs and are individually fair if .

We provide the proof and the justification of how the assumption is achievable in practice in Appendix C. The intuition behind this theorem is that, with the current representation learning setup, if nodes and share an edge in , the generated embeddings and from the link prediction model should be more similar than the other nodes without edges and thus similar nodes have similar fairness hints as the representations. Theorem 2 supports that our representation learning method encodes the fairness information of each node with the fairness hint. Next, we describe how to integrate the fairness hints with the learning of backbone GNN model for node classification tasks.

3.5. Fairness Promotion for GNN Models

Our GFairHint framework is compatible with various GNN model architectures for the original tasks. The basic operations of each GNN layer are similar to the GCN operation in Equation (2), but the convolutional operations are replaced with other message-passing mechanisms for different GNN models. We train the chosen GNN backbone models with utility loss (i.e., cross entropy loss) and obtain the utility node embedding from the last GNN hidden layer. We then concatenate with the fairness hint to form a joint node embedding . We add two layers of multilayer perceptron (MLP) with weights and to encourage the model to learn both utility and fairness information from the joint node embeddings. The final embedding of the node can be calculated as

We extract the fairness hint using the learned fairness representation learning model before training the original GNN model, and the fairness hint is fixed during the optimization process. For node classification tasks, we apply softmax to the final node embedding to obtain the predictions where is the number of classes and apply to optimize the parameters in the backbone GNN models. We empirically show that the fairness hint is fully involved in models’ decision-making process via gradient-based interpretability method in Section 5.2 and the MLP layers can learn to balance between utility and fairness hint when we integrate the loss function with fairness regularization which we introduce next.

3.6. Extension: Integration with Fairness Loss

Our GFairHint framework can simply utilize the utility loss as the final loss. Moreover, we can further encourage the model to learn fairness information by adding the “fairness” loss into the final loss function. Previous fairness promotion methods have designed various fairness loss to enforce a good balance between utility and fairness (Dong et al., 2021; Petersen et al., 2021), which are complementary to our fairness hint. In our work, we integrate the ranking-based fairness loss in REDRESS (Dong et al., 2021) to our framework GFairHint.

The objective of the loss is to minimize the difference between the oracle similarity matrix and the outcome similarity matrix . For each node, when is based on input features, we can obtain two top-k ranking lists derived from and respectively. The fairness loss of node can be calculated as

| (5) | |||

| (6) | |||

| (7) | |||

| (8) |

where is the similarity metric (NDCG or ERR (Chapelle et al., 2009; Järvelin and Kekäläinen, 2002)) between the two top-k ranking lists and node or are selected from the ranking lists. Specifically, and are the entries from and respectively. However, when is from external annotations where only pairwise judgements are available, the entries in are binary. Therefore, it is meaningless to calculate the ranking-based loss of the constructed fairness graph in this case. In this work, to apply REDRESS related models to fairness datasets with external annotations, we made an adjustment by replacing from external annotations with the one derived from input features. Details will be discussed in Section 4.2.

The total fairness loss is then calculated as the sum of the fairness loss on each node. The final objective is to combine the fairness loss and the utility loss.

| (9) |

where is an adjustable hyperparameter. By changing the value of , we can control the weight of fairness and utility during training according to the task requirement. The details discussion is in Section 5.3.

4. Experiment Setup

4.1. Dataset Collection

In our work, we focus on the node classification task to evaluate the fairness promotion ability of our proposed GFairHint. We collect five real-word datasets to assess the model performance in multiple domains (see statistics in Table 1). Coauthor-CS (CS) and Coauthor-Phy (Phy) are two co-authorship network datasets (Shchur et al., 2018), where each node represents an author, and they connect the nodes if two authors have published a paper together. ACM is a dataset of citation network (Tang et al., 2008), where each node represents a paper, and the edge denotes the citation relationship. These three datasets (ACM, CS, Phy) are also applied in the REDRESS paper as the experiment benchmarks (Dong et al., 2021). In addition, we use another citation network OGBN-ArXiv dataset (Hu et al., 2020a), which is several magnitude orders larger than the ACM, CS, and Phy datasets.

For ACM, CS, and Phy datasets, we follow the preprocessing procedure in REDRESS and use the bag-of-word model to transform the title and abstract of a paper as its node feature. We use the pre-split training, validation, and test datasets from the REDRESS paper111https://github.com/yushundong/REDRESS/tree/main/node%20classification/data. Regarding the ArXiv dataset, we directly use the processed 128-dimensional feature vectors from a pre-trained skip-gram model (Mikolov et al., 2013). We then follow the train/validation/test splits from the official release of Open Graph Benchmark (OGB).222https://ogb.stanford.edu/docs/nodeprop/ We repeat the experiments for each model setting twice, because the split of the dataset is fixed by the previous work. Since the citation and co-authorship network datasets do not contained human annotated similarity, we follow previous work (Dong et al., 2021) and use the cosine similarities between node features as the entries in . 333We also tested with euclidean distance. We do not develop or evaluate different individual fairness measures but rather show that our method is compatible with various existing measures.

Additionally, we curate a dataset with external human annotation on individual fairness similarity in the binary setting to demonstrate our framework’s compatibility when external annotation is available. The Crime dataset (Redmond, 2009) consists of socioeconomic, demographic and law / police data records for neighborhoods in the US. We follow Lahoti et al. (2019) for most of the preprocessing and introduce additional information on the geometric adjacency of the county444https://pypi.org/project/county-adjacency/ to form a graph-structured dataset. The nodes are the neighborhoods, and the edges indicate that two neighborhoods reside in the same county or adjacent counties. We have a binary outcome variable for whether the neighborhood is violent and consider other data records as input features. For the similarity measure of individual fairness, we also follow (Lahoti et al., 2019) to collect human reviews on Crime Safety for neighborhoods in the U.S. from a neighborhood review website, Niche.555http://niche.com The judgments are given in the form of 1-star to 5-star ratings by current and past residents of these neighborhoods. We then use aggregated mean ratings to construct the fairness graph as described in Section 3.3, where the neighborhoods with the same rating level (e.g., 5 stars) are linked in the fairness graph. As there is no predefined train/validation/test split, we randomly split the dataset and repeat five times for each model setting.

| Type | Dataset | # Nodes | # Features | # Classes |

|---|---|---|---|---|

| Input Feature | Coauthor-CS | 916 | 6,805 | 15 |

| Coauthor-Phy | 1,724 | 8,415 | 5 | |

| ACM | 824 | 8,337 | 9 | |

| ArXiv | 90,941 | 128 | 40 | |

| External | Crime | 1,994 | 122 | 2 |

| Dataset | Backbone | Method | Fairness: NDCG@10 | Fairness: ERR@10 | Utility: ACC |

| ArXiv | GCN | Vanilla | 75.01 ± 0.19 | 91.45 ± 0.01 | \ul70.19 ± 0.02 |

| InFoRM | 74.66 ± 0.05 | 91.47 ± 0.09 | 68.86 ± 0.68 | ||

| REDRESS | 75.25 ± 0.71 | 91.57 ± 0.10 | 68.65 ± 1.13 | ||

| REDRESS + MLP | 75.01 ± 0.26 | 91.47 ± 0.01 | 69.85 ± 0.27 | ||

| GFairHint | \ul81.93 ± 0.27 | \ul94.28 ± 0.09 | 70.62 ± 0.91 | ||

| GFairHint + REDRESS | 85.48 ± 3.47 | 95.22 ± 0.80 | 69.80 ± 0.47 | ||

| SAGE | Vanilla | 75.47 ± 0.37 | 91.71 ± 0.13 | 70.44 ± 0.69 | |

| InFoRM | 74.82 ± 0.18 | 91.60 ± 0.14 | 69.20 ± 1.45 | ||

| REDRESS | 75.51 ± 0.73 | 91.58 ± 0.16 | 69.34 ± 0.55 | ||

| REDRESS + MLP | 74.45 ± 0.53 | 91.40 ± 0.09 | 69.75 ± 0.18 | ||

| GFairHint | \ul81.92 ± 0.17 | \ul94.34 ± 0.04 | \ul70.40 ± 0.34 | ||

| GFairHint + REDRESS | 85.32 ± 3.45 | 95.22 ± 0.79 | 68.98 ± 0.25 | ||

| GAT | Vanilla | 76.64 ± 0.21 | 92.04 ± 0.09 | \ul70.86 ± 0.64 | |

| InFoRM | 76.37 ± 0.04 | 91.92 ± 0.11 | 69.73 ± 0.40 | ||

| REDRESS | 77.46 ± 0.09 | 92.18 ± 0.02 | 69.74 ± 0.19 | ||

| REDRESS + MLP | 76.23 ± 0.98 | 91.86 ± 0.25 | 70.45 ± 0.30 | ||

| GFairHint | \ul81.80 ± 0.14 | \ul94.20 ± 0.04 | 71.06 ± 0.45 | ||

| GFairHint + REDRESS | 85.49 ± 4.73 | 95.20 ± 1.19 | 69.89 ± 0.11 | ||

| ACM | GCN | Vanilla | 33.90 ± 0.73 | \ul76.99 ± 0.08 | 70.78 ± 0.18 |

| REDRESS | 34.82 ± 0.80 | 76.98 ± 0.13 | 70.15 ± 1.77 | ||

| REDRESS + MLP | 30.93 ± 0.46 | 76.66 ± 0.19 | \ul70.64 ± 1.89 | ||

| GFairHint | \ul35.12 ± 0.34 | 76.39 ± 0.52 | 69.70 ± 0.77 | ||

| GFairHint + REDRESS | 38.58 ± 2.85 | 77.00 ± 0.16 | 69.77 ± 0.95 | ||

| SAGE | Vanilla | 30.55 ± 1.86 | 76.63 ± 0.18 | \ul69.26 ± 0.60 | |

| REDRESS | 31.58 ± 1.06 | 76.68 ± 0.04 | 68.23 ± 0.97 | ||

| REDRESS + MLP | 28.73 ± 0.12 | 76.29 ± 0.78 | 69.32 ± 0.44 | ||

| GFairHint | \ul36.12 ± 0.72 | \ul76.39 ± 0.25 | 69.24 ± 0.11 | ||

| GFairHint + REDRESS | 37.83 ± 3.78 | 77.37 ± 0.55 | 67.52 ± 0.16 | ||

| GAT | Vanilla | 34.62 ± 0.28 | 77.00 ± 0.20 | 71.14 ± 1.14 | |

| REDRESS | 34.83 ± 0.45 | \ul77.40 ± 0.28 | 70.49 ± 0.87 | ||

| REDRESS + MLP | 32.82 ± 1.36 | 76.22 ± 0.09 | 69.87 ± 0.70 | ||

| GFairHint | \ul37.52 ± 0.54 | 76.79 ± 0.27 | \ul71.04 ± 0.74 | ||

| GFairHint + REDRESS | 43.01 ± 2.02 | 77.50 ± 0.36 | 69.65 ± 0.88 |

4.2. Methods for Comparison

To show the superiority of our proposed framework, we implement the vanilla GNN models and previous SOTA as baseline models with sensitivity analysis. Note that some existing works (Bose and Hamilton, 2019; Rahman et al., 2019b) for group fairness promotion cannot be used as baseline models because our work focuses on individual fairness. We explain these baseline methods below.

Vanilla: Vanilla denotes the vanilla GNN models without any individual fairness promotion method.

PFR: PFR (Lahoti et al., 2019) learns fair representation and can be considered as a pre-processing method for GNN models, and we adapt the implementation of PFR666https://github.com/plahoti-lgtm/PairwiseFairRepresentations to GNN models by transforming the input features to the fairness representations and use the transformed representations as node features in vanilla GNN models. The PFR method requires computing the Laplacian matrix and the eigenvectors of the oracle similarity matrix (Lahoti et al., 2019), so we have to explicitly store in memory. The experiment of PFR on the Arxiv dataset with 90,941 nodes will cause out-of-memory issues. Since Dong et al. (2021) has already shown REDRESS’s priority over PFR on the ACM, Coauthor-Phy and Coauthor-CS datasets, we only experiment with PFR on the Crime dataset.

InFoRM: InFoRM (Kang et al., 2020) is a framework to promote individual fairness in conventional machine learning tasks on graphs. Similarly, due to the previous work’s experiments showing the better performance of REDRESS than InFoRM on ACM, CS and Phy datasets, we only experiment with InFoRM on the Crime and Arxiv datasets by combining its fairness promotion loss with the utility loss of the GNN models.

REDRESS: REDRESS is the previous SOTA framework for individual fairness promotion in GNN models (Dong et al., 2021). They formulate the conventional individual fairness promotion into a ranking-based optimization problem. By optimizing the ranking-based loss and the utility loss , REDRESS can achieve the goal of maximization of utility and promotion of individual fairness simultaneously. For the implementation of its framework and ranking-based loss, we adapt the codebase released by the authors777https://github.com/yushundong/REDRESS.

REDRESS + MLP As mentioned in Section 3.5, after concatenating the utility node embeddings and fairness hint, our proposed framework GFairHint uses additional MLP layers to process the concatenated embeddings, which increases the model complexity. This variant of REDRESS adds the MLP layers with the same size after the GNN models along with the original REDRESS loss for a fair comparison. We use the output of MLP layers from this variation model to calculate the loss and optimize the parameters in the GNN and MLP layers. REDRESS + MLP model can show the effectiveness of GFairHint without interference of the model complexity confounder.

Our methods: We study the performance of GFairHint and examine its effectiveness with the combination of REDRESS loss:

GFairHint: We combine the fairness hint with the utility node embedding and only use the loss to update the model parameters.

GFairHint + REDRESS: As described in Section 3.6, we combine the ranking-based loss in REDRESS with the utility loss to further encourage the models to learn individual fairness. The only difference between this method and REDRESS + MLP is that GFairHint + REDRESS incorporates the fairness hint.

We note that for the Crime dataset, since the entries of oracle similarity matrix are binary (0-1), we cannot calculate the ranking-based loss of the constructed fairness graph . Therefore, we adapt the REDRESS-related methods to calculate the ranking-based loss based on input feature similarity. As a result, for the Crime dataset, REDRESS and REDRESS + MLP do not have any access to the fairness information (i.e., fairness graph ), while GFairHint + REDRESS get fairness information only through the fairness hint but not the ranking-based loss.

4.3. Evaluation Metric

We evaluate both the utility performance and the fairness performance of the models. A desired model should achieve the highest fairness performance without sacrificing much, if it must, utility performance. For utility performance, we follow previous work (Dong et al., 2021) and the official OGB leaderboard to use classification accuracy (ACC). As for fairness performance, we use different evaluation metrics in accordance with two different settings of the oracle similarity, i.e., continuous and binary.

For the co-authorship and citation networks (ACM, ArXiv, CS, Phy), the oracle similarity matrix is continuous, where the entry is the input feature similarity. We follow previous work (Dong et al., 2021) to utilize ERR@K (Chapelle et al., 2009) and NDCG@K (Järvelin and Kekäläinen, 2002), where is the same as the threshold of to determine the edge existence in fairness graph in Section 3.3 for based on input features. Higher ERR and NDCG values represent better individual fairness promotion. These two metrics measure the similarity between the ranking lists obtained from the oracle similarity matrix and the outcome similarity matrix . In the following experiments, we choose and report the results of NDCG@10 and ERR@10 for all models for comparison. We also provide the sensitivity analysis of difference choices of values on the model performance in Appendix F.

For the Crime dataset, where the entry of the oracle similarity matrix is binary, we follow (Lahoti et al., 2019) and use Consistency as the evaluation metric. It measures the consistency of outcomes between individuals who are similar to each other. The formal definition regarding a fairness similarity matrix is

Consistency measures how the predictions align with the oracle fairness similarity . ERR and NDCG measure a similar concept from a ranking perspective.

4.4. Implementation Details

All the backbone GNN models and our auxiliary link prediction models are implemented in the Pytorch framework, especially the package PyTorch Geometric (Paszke et al., 2017; Fey and Lenssen, 2019). For each of our five datasets, we experiment with two backbone GNN settings, small and large model size. For the small model size setting, the number of layers and the dimension of the embeddings in the hidden layers are set to 2 and 16. For the big model size setting, we set these two numbers to 10 and 128 respectively. For all experiments, we fix the values of the hyperparameters and at 1 and 10 as suggested in the previous work (Dong et al., 2021), where is the weighting factor when integrating with the ranking-based loss (Equation 9) and is the number of top entries used to calculate the ranking loss and fairness evaluation metrics NDCG@K and ERR@K.

Our learned fairness hint for each node contains individual fairness information, and we expect that the fairness hint helps promote fairness in various GNN models. We choose three popular GNN models: GCN (Kipf and Welling, 2016), GraphSAGE (Hamilton et al., 2017), and Graph Attention Networks (GAT) (Veličković et al., 2017) to demonstrate the compatibility of GFairHint with various GNN model designs. Note that we do not need to relearn the fairness hint for the same dataset even if the backbone models have changed. Other details of the implementation are in Appendix A.

5. Experimental Results

We aim to answer the questions in the following sections. Section 5.1: How well can GFairHint and its extension method promote individual fairness compared with other baselines with different similarity measures? Section 5.2: How important are the fairness hint for GNN models when making predictions? Section 5.3: How does the fairness constraint hyperparemter influence the performance of GFairHint? Section 5.4: How computationally efficient is GFairHint and how does it compare with other baselines?

5.1. Effectiveness of GFairHint

In this section, we present the results of our proposed methods and baselines on collected datasets. For each dataset, we choose model hyperparameter settings with better average utility between two large and small model size settings as described in Section 4.4.

Oracle Similarity Matrix based on Input Feature

For the citation and coauthorship networks, we use the input feature similarity as the entry of the oracle similarity matrix to construct the fairness graph. The results are shown in Table 2 for the citation networks (i.e., Arxiv and ACM) and Table 5 Appendix B for the co-authorship networks (i.e., Phy and CS). 888We follow previous works (Dong et al., 2021; Kang et al., 2020) to use cosine similarity as the oracle similarity measure. We additionally include the results and discussion of using similarity measure based on Euclidean distance in Appendix B. For utility performance, our proposed GFairHint and GFairHint + REDRESS models achieve comparable results with vanilla backbone GNN models and other fairness promotion models. Indeed, in 5 cases out of 6 experiments for co-authorship datasets, our models achieve the best utility performance. Regarding the fairness performance, our proposed models achieve the best fairness performance in nearly all settings, except for the ERR value of the GNN models on the Phy dataset. We also achieve comparable ERR values with the SoTA REDRESS model for the Phy dataset. Moreover, our proposed GFairHint also behave better than the REDRESS model in the most scenarios of the citation network dataset. InFoRM method does not imporve the fairness performance on the Arxiv dataset, which is consistent with the results on the other academic networks from the REDRESS paper (Dong et al., 2021).

| Backbone | Method | Consistency | Acc |

|---|---|---|---|

| GCN | Vanilla | 54.80 ± 0.23 | 73.83 ± 0.34 |

| PFR | 52.20 ± 0.55 | 71.53 ± 1.10 | |

| InFoRM | 56.84 ± 1.77 | 72.93 ± 0.96 | |

| REDRESS | 54.07 ± 0.96 | 73.98 ± 0.70 | |

| REDRESS + MLP | 53.06 ± 1.04 | 73.58 ± 1.80 | |

| GFairHint | \ul62.76 ± 2.74 | \ul75.44 ± 0.71 | |

| GFairHint + REDRESS | 63.61 ± 4.44 | 75.54 ± 0.90 | |

| SAGE | Vanilla | 62.09 ± 0.50 | 82.16 ± 0.33 |

| PFR | 56.75 ± 0.95 | 80.15 ± 0.67 | |

| InFoRM | 60.93 ± 2.59 | 79.05 ± 0.51 | |

| REDRESS | 61.46 ± 1.91 | \ul82.11 ± 0.52 | |

| REDRESS + MLP | 61.46 ± 1.36 | 81.35 ± 0.34 | |

| GFairHint | \ul62.26 ± 0.98 | 80.60 ± 0.98 | |

| GFairHint + REDRESS | 62.49 ± 4.86 | 80.85 ± 1.21 | |

| GAT | Vanilla | 55.17 ± 0.81 | 73.68 ± 0.79 |

| PFR | 54.06 ± 1.20 | 73.83 ± 0.90 | |

| InFoRM | 53.44 ± 2.24 | 71.38 ± 1.43 | |

| REDRESS | 53.55 ± 1.15 | 72.88 ± 0.74 | |

| REDRESS + MLP | 51.84 ± 0.42 | 72.08 ± 1.24 | |

| GFairHint | \ul64.04 ± 2.74 | 75.34 ± 0.74 | |

| GFairHint + REDRESS | 65.30 ± 3.60 | \ul74.94 ± 1.05 |

Oracle Similarity Matrix based on External Annotation

For the Crime dataset, we construct a fairness graph from collected human expert judgements. We show the results in Table 3. We find that for all three backbone GNN models, GFairHint and GFairHint + REDRESS are the best two methods in the fairness (Consistency) evaluation. This is as expected since Vanilla and REDRESS models do not have access to fairness information in this setting, and InFoRM and PFR methods are not specifically designed for GNN models. GFairHint and GFairHint + REDRESS have close performance in consistency, demonstrating the effectiveness of fairness hint even when it is used alone. Although GFairHint + REDRESS has slightly better results, it has much higher computational cost because of the ranking-based loss. Detailed discussion on computation efficiency is in Section 5.4. For GCN and GAT backbone models, our proposed methods achieve the best two results in utility (accuracy) evaluation.

Summary

We systematically evaluate the utility performance and fairness performance in combinations of dataset and backbone model, which results in utility comparisons and fairness comparisons.999For the four academic networks with oracle similarity matrix based on input feature, we evaluated two fairness metrics, which leads to comparisons for fairness. Our proposed GFairHint + REDRESS method achieved best fairness performance in almost all comparisons (24/27), while GFairHint performed second best in 16/27 of the comparisons when applied alone. These two methods also have comparable utility performance with the Vanilla model, as they ranked top two in 12/15 utility comparisons. Although GFairHint + REDRESS achieved better fairness performance than GFairHint in general, the gaps are small. GFairHint even ranked higher in 6/15 utility performance, especially for large dataset (3/3). These observations empirically show that GFairHint achieves a good balance between utility and fairness, and demonstrate that while GFairHint is better than previous work on individual fairness promotion in most cases, it is also complementary to other methods with fairness regularization loss and can further improve the performance.

| Dataset | Method | Score() | Score() | |

|---|---|---|---|---|

| Arxiv | GFairHint | 0.044 | 0.041 | 0.923 |

| GFairHint+REDRESS | 0.051 | 0.034 | 0.677 | |

| ACM | GFairHint | 0.158 | 0.056 | 0.354 |

| GFairHint+REDRESS | 0.245 | 0.045 | 0.184 | |

| CS | GFairHint | 0.157 | 0.164 | 1.050 |

| GFairHint+REDRESS | 0.109 | 0.092 | 0.848 | |

| Phy | GFairHint | 0.169 | 0.182 | 1.073 |

| GFairHint+REDRESS | 0.225 | 0.166 | 0.738 | |

| Crime | GFairHint | 0.065 | 0.228 | 3.536 |

| GFairHint+REDRESS | 0.022 | 0.057 | 2.594 |

5.2. Importance of Fairness Hint

In Section 3.4, we prove that our learned fairness hints are individually fair and integrate the fairness hints into the training of backbone GNN models to achieve better fairness promotion. We further evaluate: Does GNN backbone model utilize the concatenated fairness hints in the node label prediction?

We answer this question by borrowing the idea of saliency map (Simonyan et al., 2013) to demonstrate the relative importance of utility node embedding, , and fairness hints, , when making predictions. We calculate the gradient of the model output w.r.t. each dimension of the node embedding as the importance score for the dimension. We then average the importance score for utility node embedding and fairness hint as and respectively. The details are shown in Appendix D.

From Table 4, we observe that the magnitude of importance score of utility node embedding and fairness hint for our framework is comparable, which indicates that GNNs in our method actually apply the fairness hint to predict the node labels. Moreover, the values of for GFairHint + REDRESS are lower than the ones for GFairHint, suggesting that the fairness hint becomes more important when integrated with fairness loss. This further demonstrates that GFairHint is a plug-and-play framework as the fairness hint can be effectively utilized by the previous SoTA fairness promotion method with fairness regularization.

5.3. Trade-off between Fairness and Utility

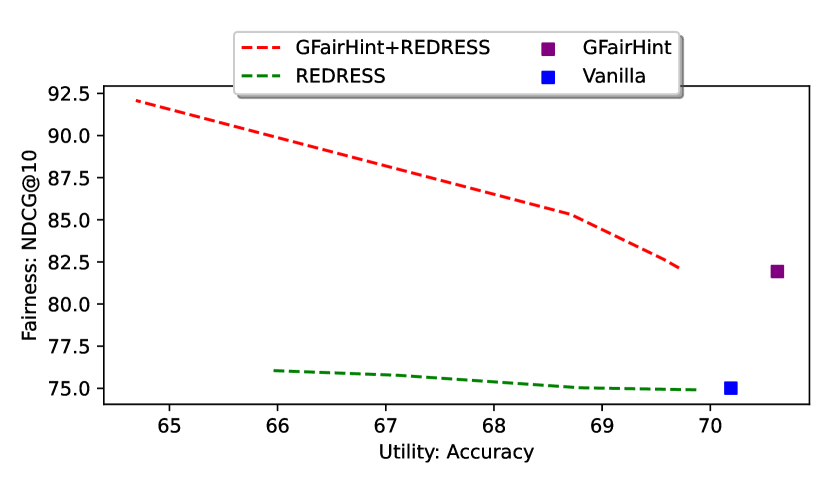

GFairHint + REDRESS achieves the best fairness performance, where we integrate fairness hint with ranking-based loss. The value of the hyperparameter in Equation 9 controls the strength of the fairness constraint. There is a trade-off between utility and fairness when adjusting the value (Dong et al., 2021). To demonstrate the effectiveness of GFairHint, we perform experiments with multiple values of for the REDRESS and GFairHint + REDRESS models on the Arxiv dataset with GCN as the backbone GNN model. Figure 2 shows the trade-off between accuracy and fairness (NDCG@10) with varying values of for the REDRESS and GFairHint + REDRESS methods. The curves in the figure for REDRESS and GFairHint + REDRESS are generated by changing a range of fairness coefficients from 0.01 to 100 and computing the Pareto frontiers. We also further visualize the accuracy and NDCG@10 values for the vanilla and GFairHint models as two data points for reference.

With the value of being small (e.g., ), REDRESS and GFairHint + REDRESS models behave similarly to the vanilla and GFairHint models respectively as expected. When increasing the value of , we can observe fairness improvements for both REDRESS and the GFairHint + REDRESS models. This fairness improvement is more significant for the GFairHint + REDRESS model. We conjecture that little improvement for the REDRESS model is due to the vanishing gradient problem of deep GNN models (Demir et al., 2021), which may reduce the impact of fairness loss. GFairHint + REDRESS model is less impacted because it also directly learns from the fairness hint that is incorporated into the final GNN layers. We observe that with the same accuracy level, our proposed GFairHint + REDRESS model achieves a higher NDCG@10 value than the REDRESS model, demonstrating the effectiveness of the fairness hint.

We expect that the adjustment of the trade-off between fairness and utility can provide more flexibility in practical applications. For example, some tasks may pay more attention to fairness rather than utility.

5.4. Efficiency Evaluation

In addition to fairness and utility results, we compare the efficiency of GFairHint models with other baseline models in terms of time complexity and empirical training time. The time complexity of training a -layer GCN model is , where is the edge number and is the node number (Blakely et al., 2021). During the training of GNN model, the ranking-based loss in REDRESS requires finding a list containing top-k similar nodes for each node and ranking the list. The additional complexity of REDRESS is (Dong et al., 2021) and our additional time complexity is since we only add two MLP layers when training GNN models

The additional cost introduced by the auxiliary link prediction task is small because the complexity is the same as the original node classification task and the learned fairness can be reused for other backbone GNN models on the same dataset, which reduces the marginal cost of this auxiliary task. Moreover, even if the fairness hint is only used once, the time required for training a link prediction GNN and a node classification GNN is significantly smaller than using the REDRESS framework. We empirically show the difference in training time in Figure 3 and the details in Appendix E. The results show that the additional cost of GFairHint is negligible compared to the Vanilla model. Therefore, the proposed GFairHint model is more scalable in practice when applied to large graph datasets.

6. Limitations and Future Work

One crucial question for individual fairness is the source of similarity measures. External annotation is often impractical, subjective, and potentially biased, and the input feature can be an incomplete and imperfect source. It requires comprehensive domain knowledge to develop the fairness similarity measure for a specific real-world application. We note that our framework is compatible with different types of similarity measures as long as the construction of a fairness graph is viable. Moreover, there may be bias in the original input features, and we can have a sense of such bias by looking at the fairness evaluations of the vanilla models. However, it is unavoidable to use original information, as it is the only source of feature information available for making predictions. The intuition for promoting individual fairness is to use oracle individual fairness similarity to guide the model to utilize such fairness information, whereas in this paper we rely on the learned fairness hint. We acknowledge that there are works that debias the original information directly as pre-processing (Kang et al., 2020; Lahoti et al., 2019), but they are not as empirically effective as the proposed method or the in-processing method (Dong et al., 2021) when applied along. Our method is also orthogonal to these pre-processing methods and is thus possible to integrate with them for better performance. Lastly, in this paper, we show the effectiveness of fairness hint with a simple concatenation strategy, and it is possible to develop more complex and better integration methods to utilize fairness hint, especially in compliance with the tasks and data formats at hand. We leave these directions for future work.

7. Conclusions

In this work, we propose GFairHint, a plug-and-play framework for promoting individual fairness in GNNs via fairness hint. Our method learns fairness hint through an auxiliary link prediction task on a constructed fairness graph. The fairness graph can be derived from both continuous and binary oracle similarity matrix, corresponding to two ways of obtaining similarity for individual fairness respectively, i.e., from input feature space and from external human annotations. We also integrate GFairHint with another complementary individual fairness promotion method, REDRESS. We conduct extensive empirical evaluations on node classification tasks to show the effectiveness of our proposed method in achieving good balance in utility and fairness, with much less computational cost.

References

- (1)

- Blakely et al. (2021) Derrick Blakely, Jack Lanchantin, and Yanjun Qi. 2021. Time and Space Complexity of Graph Convolutional Networks. Accessed on: Dec 31 (2021).

- Bose and Hamilton (2019) Avishek Bose and William Hamilton. 2019. Compositional fairness constraints for graph embeddings. In International Conference on Machine Learning. PMLR, 715–724.

- Buyl and De Bie (2020) Maarten Buyl and Tijl De Bie. 2020. DeBayes: a Bayesian Method for Debiasing Network Embeddings. In Proceedings of the 37th International Conference on Machine Learning (Proceedings of Machine Learning Research, Vol. 119), Hal Daumé III and Aarti Singh (Eds.). PMLR, 1220–1229. https://proceedings.mlr.press/v119/buyl20a.html

- Chapelle et al. (2009) Olivier Chapelle, Donald Metlzer, Ya Zhang, and Pierre Grinspan. 2009. Expected reciprocal rank for graded relevance. In Proceedings of the 18th ACM conference on Information and knowledge management. 621–630.

- Dai and Wang (2021) Enyan Dai and Suhang Wang. 2021. Say no to the discrimination: Learning fair graph neural networks with limited sensitive attribute information. In Proceedings of the 14th ACM International Conference on Web Search and Data Mining. 680–688.

- Demir et al. (2021) Andac Demir, Toshiaki Koike-Akino, Ye Wang, Masaki Haruna, and Deniz Erdogmus. 2021. EEG-GNN: Graph Neural Networks for Classification of Electroencephalogram (EEG) Signals. In 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC). IEEE, 1061–1067.

- Devlin et al. (2018) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2018. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805 (2018).

- Dong et al. (2021) Yushun Dong, Jian Kang, Hanghang Tong, and Jundong Li. 2021. Individual fairness for graph neural networks: A ranking based approach. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining. 300–310.

- Dong et al. (2022a) Yushun Dong, Jing Ma, Chen Chen, and Jundong Li. 2022a. Fairness in Graph Mining: A Survey. arXiv preprint arXiv:2204.09888 (2022).

- Dong et al. (2022b) Yushun Dong, Song Wang, Yu Wang, Tyler Derr, and Jundong Li. 2022b. On Structural Explanation of Bias in Graph Neural Networks. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining. 316–326.

- Dwork et al. (2012) Cynthia Dwork, Moritz Hardt, Toniann Pitassi, Omer Reingold, and Richard Zemel. 2012. Fairness through awareness. In Proceedings of the 3rd innovations in theoretical computer science conference. 214–226.

- Fey and Lenssen (2019) Matthias Fey and Jan E. Lenssen. 2019. Fast Graph Representation Learning with PyTorch Geometric. In ICLR Workshop on Representation Learning on Graphs and Manifolds.

- Hamilton et al. (2017) Will Hamilton, Zhitao Ying, and Jure Leskovec. 2017. Inductive representation learning on large graphs. Advances in neural information processing systems 30 (2017).

- Hu et al. (2020b) Binbin Hu, Zhiqiang Zhang, Jun Zhou, Jingli Fang, Quanhui Jia, Yanming Fang, Quan Yu, and Yuan Qi. 2020b. Loan default analysis with multiplex graph learning. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management. 2525–2532.

- Hu et al. (2020a) Weihua Hu, Matthias Fey, Marinka Zitnik, Yuxiao Dong, Hongyu Ren, Bowen Liu, Michele Catasta, and Jure Leskovec. 2020a. Open graph benchmark: Datasets for machine learning on graphs. Advances in neural information processing systems 33 (2020), 22118–22133.

- Järvelin and Kekäläinen (2002) Kalervo Järvelin and Jaana Kekäläinen. 2002. Cumulated gain-based evaluation of IR techniques. ACM Transactions on Information Systems (TOIS) 20, 4 (2002), 422–446.

- Kang et al. (2020) Jian Kang, Jingrui He, Ross Maciejewski, and Hanghang Tong. 2020. Inform: Individual fairness on graph mining. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. 379–389.

- Kingma and Ba (2014) Diederik P Kingma and Jimmy Ba. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).

- Kipf and Welling (2016) Thomas N Kipf and Max Welling. 2016. Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907 (2016).

- Kose and Shen (2022) O Deniz Kose and Yanning Shen. 2022. Fair node representation learning via adaptive data augmentation. arXiv preprint arXiv:2201.08549 (2022).

- Kusner et al. (2017) Matt J Kusner, Joshua Loftus, Chris Russell, and Ricardo Silva. 2017. Counterfactual fairness. Advances in neural information processing systems 30 (2017).

- Lahoti et al. (2019) Preethi Lahoti, Krishna P Gummadi, and Gerhard Weikum. 2019. Operationalizing individual fairness with pairwise fair representations. Proceedings of the VLDB Endowment 13, 4 (2019), 506–518.

- Lambrecht and Tucker (2019) Anja Lambrecht and Catherine Tucker. 2019. Algorithmic bias? An empirical study of apparent gender-based discrimination in the display of STEM career ads. Management science 65, 7 (2019), 2966–2981.

- Li et al. (2021) Peizhao Li, Yifei Wang, Han Zhao, Pengyu Hong, and Hongfu Liu. 2021. On dyadic fairness: Exploring and mitigating bias in graph connections. In International Conference on Learning Representations.

- Li et al. (2020) Zhao Li, Xin Shen, Yuhang Jiao, Xuming Pan, Pengcheng Zou, Xianling Meng, Chengwei Yao, and Jiajun Bu. 2020. Hierarchical bipartite graph neural networks: Towards large-scale e-commerce applications. In 2020 IEEE 36th International Conference on Data Engineering (ICDE). IEEE, 1677–1688.

- Ma et al. (2022) Jing Ma, Ruocheng Guo, Mengting Wan, Longqi Yang, Aidong Zhang, and Jundong Li. 2022. Learning fair node representations with graph counterfactual fairness. In Proceedings of the Fifteenth ACM International Conference on Web Search and Data Mining. 695–703.

- Mehrabi et al. (2021a) Ninareh Mehrabi, Fred Morstatter, Nripsuta Saxena, Kristina Lerman, and Aram Galstyan. 2021a. A Survey on Bias and Fairness in Machine Learning. ACM Comput. Surv. 54, 6, Article 115 (jul 2021), 35 pages. https://doi.org/10.1145/3457607

- Mehrabi et al. (2021b) Ninareh Mehrabi, Fred Morstatter, Nripsuta Saxena, Kristina Lerman, and Aram Galstyan. 2021b. A survey on bias and fairness in machine learning. ACM Computing Surveys (CSUR) 54, 6 (2021), 1–35.

- Mikolov et al. (2013) Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg S Corrado, and Jeff Dean. 2013. Distributed representations of words and phrases and their compositionality. Advances in neural information processing systems 26 (2013).

- Mukherjee et al. (2020) Debarghya Mukherjee, Mikhail Yurochkin, Moulinath Banerjee, and Yuekai Sun. 2020. Two Simple Ways to Learn Individual Fairness Metrics from Data. In Proceedings of the 37th International Conference on Machine Learning (ICML’20). JMLR.org, Article 658, 11 pages.

- Paszke et al. (2017) Adam Paszke, Sam Gross, Soumith Chintala, Gregory Chanan, Edward Yang, Zachary DeVito, Zeming Lin, Alban Desmaison, Luca Antiga, and Adam Lerer. 2017. Automatic differentiation in pytorch. (2017).

- Petersen et al. (2021) Felix Petersen, Debarghya Mukherjee, Yuekai Sun, and Mikhail Yurochkin. 2021. Post-processing for Individual Fairness. In Advances in Neural Information Processing Systems, M. Ranzato, A. Beygelzimer, Y. Dauphin, P.S. Liang, and J. Wortman Vaughan (Eds.), Vol. 34. Curran Associates, Inc., 25944–25955. https://proceedings.neurips.cc/paper/2021/file/d9fea4ca7e4a74c318ec27c1deb0796c-Paper.pdf

- Rahman et al. (2019a) Tahleen Rahman, Bartlomiej Surma, Michael Backes, and Yang Zhang. 2019a. Fairwalk: Towards Fair Graph Embedding. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI-19. International Joint Conferences on Artificial Intelligence Organization, 3289–3295. https://doi.org/10.24963/ijcai.2019/456

- Rahman et al. (2019b) Tahleen Rahman, Bartlomiej Surma, Michael Backes, and Yang Zhang. 2019b. Fairwalk: Towards fair graph embedding. (2019).

- Redmond (2009) Michael Redmond. 2009. Communities and Crime. UCI Machine Learning Repository.

- Shchur et al. (2018) Oleksandr Shchur, Maximilian Mumme, Aleksandar Bojchevski, and Stephan Günnemann. 2018. Pitfalls of graph neural network evaluation. arXiv preprint arXiv:1811.05868 (2018).

- Simonyan et al. (2013) Karen Simonyan, Andrea Vedaldi, and Andrew Zisserman. 2013. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034 (2013).

- Song et al. (2022) Weihao Song, Yushun Dong, Ninghao Liu, and Jundong Li. 2022. GUIDE: Group Equality Informed Individual Fairness in Graph Neural Networks. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining. 1625–1634.

- Tang et al. (2008) Jie Tang, Jing Zhang, Limin Yao, Juanzi Li, Li Zhang, and Zhong Su. 2008. Arnetminer: extraction and mining of academic social networks. In Proceedings of the 14th ACM SIGKDD international conference on Knowledge discovery and data mining. 990–998.

- Veličković et al. (2017) Petar Veličković, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Lio, and Yoshua Bengio. 2017. Graph attention networks. arXiv preprint arXiv:1710.10903 (2017).

- WANG et al. (2022) JIANIAN WANG, SHENG ZHANG, YANGHUA XIAO, and RUI SONG. 2022. A Review on Graph Neural Network Methods in Financial Applications. Journal of Data Science 20, 2 (2022).

- Wang et al. (2022) Yu Wang, Yuying Zhao, Yushun Dong, Huiyuan Chen, Jundong Li, and Tyler Derr. 2022. Improving Fairness in Graph Neural Networks via Mitigating Sensitive Attribute Leakage. In Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining.

- Wu et al. (2019) Qitian Wu, Hengrui Zhang, Xiaofeng Gao, Peng He, Paul Weng, Han Gao, and Guihai Chen. 2019. Dual Graph Attention Networks for Deep Latent Representation of Multifaceted Social Effects in Recommender Systems. In The World Wide Web Conference (San Francisco, CA, USA) (WWW ’19). Association for Computing Machinery, New York, NY, USA, 2091–2102. https://doi.org/10.1145/3308558.3313442

- Wu et al. (2022a) Shiwen Wu, Fei Sun, Wentao Zhang, Xu Xie, and Bin Cui. 2022a. Graph Neural Networks in Recommender Systems: A Survey. ACM Comput. Surv. (mar 2022). https://doi.org/10.1145/3535101 Just Accepted.

- Wu et al. (2022b) Shiwen Wu, Fei Sun, Wentao Zhang, Xu Xie, and Bin Cui. 2022b. Graph neural networks in recommender systems: a survey. Comput. Surveys 55, 5 (2022), 1–37.

- Wu et al. (2021) Zonghan Wu, Shirui Pan, Fengwen Chen, Guodong Long, Chengqi Zhang, and Philip S. Yu. 2021. A Comprehensive Survey on Graph Neural Networks. IEEE Transactions on Neural Networks and Learning Systems 32, 1 (2021), 4–24. https://doi.org/10.1109/TNNLS.2020.2978386

- Yurochkin and Sun (2021) Mikhail Yurochkin and Yuekai Sun. 2021. SenSeI: Sensitive Set Invariance for Enforcing Individual Fairness. In International Conference on Learning Representations. https://openreview.net/forum?id=DktZb97_Fx

- Zhang et al. (2021) Le Zhang, Ding Zhou, Hengshu Zhu, Tong Xu, Rui Zha, Enhong Chen, and Hui Xiong. 2021. Attentive heterogeneous graph embedding for job mobility prediction. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining. 2192–2201.

Appendix A Training Process Details

When training the GCN model for the auxiliary link prediction task, we use a two-layer GCN model with embedding size as 128. We randomly mask the and edges of the fairness graph as the positive edges sampled in the validation set and the test set. We also generate the same number of negative edges. For optimization, we use Adam optimizer (Kingma and Ba, 2014) with learning rate 0.001 and full batch training for 200 epochs.

For the main node classification task, when training without the ranking-based loss (Vanilla and GFairHint methods), the numbers of training epochs of ArXiv, ACM, Phy, CS and Crime datasets are 300, 150, 300, 300, 500 respectively. When training with ranking-based loss, we first train the models with only utility loss for tens of epochs to “warm up” and then the models will be trained with ranking-based loss and utility loss together. The numbers of “warm-up” epochs and training epochs with ranking-based loss are 150 and 300, 250 and 300, 50 and 150, 50 and 500, 50 and 600 for ArXiv, ACM, Phy, CS and Crime datasets, respectively. This warm-up operation also follows the procedure in the REDRESS paper (Dong et al., 2021).

The hyperparameters for model size are as follows. For citation networks, the results are from 10-layer models with hidden layer dimension 128. For co-authorship netowrks, the results are from 2-layer models with hidden layer dimension 16. Specifically, for the ArXiv dataset, since they have about 90k nodes in the training dataset, using 10-layer GAT would cause a memory issue, so we only experiment on the Arxiv data with the 3-layer and 128-dimensional hidden layer GAT model. For Crime network, we select the small model size setting with 2 hidden layers and hidden layer dimension size as 16.

| Dataset | Backbone | Method | Fairness: NDCG@10 | Fairness: ERR@10 | Utility: ACC |

|---|---|---|---|---|---|

| CS | GCN | Vanilla | 44.00 ± 1.14 | 78.93 ± 0.07 | 80.16 ± 9.32 |

| REDRESS | 49.24 ± 2.36 | \ul81.25 ± 0.55 | 79.88 ± 2.68 | ||

| REDRESS + MLP | 40.54 ± 2.45 | 78.76 ± 0.27 | 77.35 ± 2.10 | ||

| GFairHint | \ul51.31 ± 1.17 | 79.56 ± 0.36 | \ul87.08 ± 2.05 | ||

| GFairHint + REDRESS | 64.60 ± 0.58 | 83.48 ± 0.20 | 91.17 ± 0.54 | ||

| SAGE | Vanilla | 46.34 ± 0.98 | 78.33 ± 0.02 | 85.49 ± 6.58 | |

| REDRESS | \ul54.71 ± 1.91 | \ul81.09 ± 0.59 | \ul88.26 ± 3.30 | ||

| REDRESS + MLP | 42.83 ± 1.40 | 77.80 ± 0.44 | 83.29 ± 1.66 | ||

| GFairHint | 51.00 ± 0.73 | 79.32 ± 0.30 | 86.67 ± 3.07 | ||

| GFairHint + REDRESS | 64.49 ± 0.45 | 83.21 ± 0.31 | 91.06 ± 0.02 | ||

| GAT | Vanilla | 46.99 ± 0.98 | 79.44 ± 0.29 | 80.73 ± 7.52 | |

| REDRESS | 51.14 ± 0.25 | 80.62 ± 0.00 | 79.53 ± 2.75 | ||

| REDRESS + MLP | 44.39 ± 0.73 | 79.13 ± 0.48 | 82.42 ± 1.87 | ||

| GFairHint | \ul53.80 ± 0.99 | \ul80.91 ± 0.38 | \ul86.11 ± 0.94 | ||

| GFairHint + REDRESS | 63.67 ± 0.09 | 83.46 ± 0.33 | 90.54 ± 0.57 | ||

| Phy | GCN | Vanilla | 30.46 ± 1.05 | 73.30 ± 0.10 | 88.33 ± 5.11 |

| REDRESS | 35.76 ± 1.72 | 74.69 ± 0.06 | 84.28 ± 2.12 | ||

| REDRESS + MLP | \ul36.06 ± 0.88 | \ul74.61 ± 0.13 | \ul93.40 ± 0.38 | ||

| GFairHint | 33.26 ± 0.21 | 71.60 ± 0.32 | 87.35 ± 0.03 | ||

| GFairHint + REDRESS | 41.53 ± 4.63 | 73.87 ± 1.46 | 94.15 ± 0.15 | ||

| SAGE | Vanilla | 30.66 ± 0.94 | 72.45 ± 0.15 | 94.88 ± 0.66 | |

| REDRESS | \ul41.01 ± 2.25 | 74.89 ± 0.64 | 89.72 ± 0.33 | ||

| REDRESS + MLP | 35.65 ± 0.90 | \ul74.22 ± 0.04 | 93.08 ± 0.04 | ||

| GFairHint | 29.95 ± 0.98 | 71.75 ± 0.28 | 90.00 ± 3.15 | ||

| GFairHint + REDRESS | 41.66 ± 0.23 | 74.06 ± 0.03 | \ul93.24 ± 0.69 | ||

| GAT | Vanilla | 33.27 ± 1.32 | 73.78 ± 0.57 | 90.33 ± 5.19 | |

| REDRESS | \ul36.24 ± 0.06 | \ul74.64 ± 0.34 | 84.74 ± 5.39 | ||

| REDRESS + MLP | 36.43 ± 2.78 | 74.70 ± 0.97 | \ul92.54 ± 0.15 | ||

| GFairHint | 30.16 ± 0.11 | 71.99 ± 0.45 | 88.10 ± 1.77 | ||

| GFairHint + REDRESS | 44.56 ± 2.62 | 74.62 ± 1.10 | 93.67 ± 0.30 |

| Dataset | Method | Fairness: NDCG@10 | Fairness: ERR@10 | Utility: ACC |

|---|---|---|---|---|

| Arxiv | Vanilla | 74.09 ± 0.49 | 48.85 ± 0.45 | \ul70.18 ± 0.04 |

| REDRESS | 74.27 ± 0.42 | 49.15 ± 0.42 | 69.34 ± 0.77 | |

| REDRESS + MLP | 72.71 ± 1.30 | 48.04 ± 1.17 | 69.08 ± 1.84 | |

| GFairHint | \ul80.01 ± 0.46 | \ul50.75 ± 0.48 | 70.81 ± 0.47 | |

| GFairHint + REDRESS | 81.67 ± 1.59 | 51.82 ± 1.00 | 68.22 ± 2.61 | |

| ACM | Vanilla | 37.37 ± 1.25 | 20.00 ± 0.46 | 69.28 ± 1.77 |

| REDRESS | 38.04 ± 1.44 | 20.24 ± 0.59 | 70.14 ± 0.92 | |

| REDRESS + MLP | 34.51 ± 0.80 | 18.71 ± 0.38 | 68.86 ± 1.31 | |

| GFairHint | \ul43.31 ± 0.39 | \ul22.54 ± 0.19 | \ul69.89 ± 1.09 | |

| GFairHint + REDRESS | 43.94 ± 0.25 | 22.89 ± 0.13 | 69.63 ± 0.53 | |

| CS | Vanilla | 42.94 ± 0.72 | 21.07 ± 0.31 | 83.43 ± 2.01 |

| REDRESS | 44.08 ± 0.50 | 21.57 ± 0.22 | 89.78 ± 0.86 | |

| REDRESS + MLP | 27.99 ± 0.87 | 14.64 ± 0.42 | 72.85 ± 1.22 | |

| GFairHint | \ul49.78 ± 1.42 | \ul23.66 ± 0.58 | 85.96 ± 0.45 | |

| GFairHint + REDRESS | 52.16 ± 0.29 | 24.45 ± 0.08 | \ul88.06 ± 0.75 | |

| Phy | Vanilla | 29.39 ± 0.14 | 17.68 ± 0.11 | \ul95.08 ± 0.20 |

| REDRESS | \ul34.03 ± 0.66 | \ul19.88 ± 0.33 | 95.72 ± 0.06 | |

| REDRESS + MLP | 24.56 ± 2.75 | 15.25 ± 1.51 | 87.69 ± 0.23 | |

| GFairHint | 26.96 ± 1.50 | 16.38 ± 0.73 | 90.39 ± 1.09 | |

| GFairHint + REDRESS | 34.73 ± 0.72 | 20.15 ± 0.35 | 92.22 ± 1.46 |

Appendix B Supplementary Results

To verify the robustness of our framework on the other measures of similarity based on input features, we reproduce the experiments for oracle similarity matrix based on input feature but replace the similarity measures from the cosine similarity to euclidean distance. The similarity between two nodes and with by euclidean distance can be calculated as follows:

| (10) |

Here, we apply the SAGE model as the backbone GNN model and present the results of our framework and other baseline methods in Table 6.

We can observe that the behaviour of our framework GFairHint and GFairHint + REDRESS is similar as its performance in Section 5. For utility performance, our proposed GFairHint and GFairHint + REDRESS models achieve comparable results with vanilla backbone GNN models and other fairness promotion models. Regarding the fairness performance, our proposed models GFairHint + REDRESS achieve the best fairness performance in all scenarios. Moreover, our proposed GFairHint also behaves better than the REDRESS model in the most scenarios except on the Coauthor-Phy dataset. Based on these observations, we can indicate that our findings in Section 5 can be generalized on other continuous similarity measures if the oracle similar matrix is based on the input features, which demonstrates the robustness of our framework on different types of similarity measures.

Appendix C Extended Theoretical Analysis

In this section, we will first prove Theorem 2 and provide the justification of the assumption is achievable in practice in Theorem 2.

Proof.

Since in Definition 1 is a constant and can be set to an arbitrary number greater than 0, we can set as the edge existence threshold when constructing the fairness graph where edge exists only when the oracle similarity between two nodes is greater than .

We will separately discuss two scenarios for and : with or without an edge between them on the fairness graph. For two nodes and on the fairness graph, if there is no edge between them, then we have . The fairness bound and the outcome distance hold the condition that

| (11) |

The outcome of GNN models for and are individually fair. Similarly, for two nodes and , if there is an edge between them, then we have . For the fairness bound and the outcome distance, we can deduce that

| (12) |

The individual fair condition still holds. Hence, we can conclude that the outcomes (fairness hint) of our link prediction GNN model training on the fairness graph are individually fair. ∎

Justification of

In both settings of oracle similarity matrix in Section 3.3, only a small proportion of node pairs can be connected so the threshold of edge existence, , is close to the maximum similarity score between any two nodes in the fairness graph. In practice, for datasets with oracle similarity from external annotation, , and for datasets with oracle similarity from input features, . Meanwhile, the link prediction models for learning fairness hint achieved low loss (less than 0.1) in our experiments, which implies a low value of loss tolerance . Hence, our assumption the fairness tolerance in Theorem 2 should hold in most circumstances.

Appendix D Supplementary Material on Importance of Fairness Hint

Suppose that is the model output with respect to the input node , and is the joint node embedding with fairness hint and utility node embedding of node . and represents the -th dimension of the utility node embedding and fairness hint respectively. The importance of the node embedding dimension can be viewed as:

| (13) |

Hence, we can define the importance of the utility node embedding and fairness hint as the average importance of each node embedding dimension,

| (14) |

where and is the dimension number of utility embedding and fairness hint respectively. For the whole dataset, we calculate the average embedding importance across all nodes and obtain the overall utility or fairness importance:

| (15) |

where is the number of nodes in the dataset. We calculate the overall importance for utility node embedding and fairness hint for each dataset with respect to our GFairHint and GFairHint + REDRESS framework and the ratio between two importance scores.

Appendix E Supplementary Material on Efficiency Evaluation

To show the gap more clearly, we perform efficiency evaluation experiments on the largest dataset, ArXiv, which has 90k training nodes. We choose GCN as the backbone model. The experiments were conducted in a controlled computation environment with single GPU (RTX2080ti) and fixed GPU memory (12GB). For each method, we train the models for 300 epochs and visualize the average training time of 50 epochs in Figure 3.

Appendix F Sensitivity Analysis on Value

In this section, we investigate how the value of , which is used to as the threshold to construct the fairness graph, will influence the performance of our proposed methods on both utility and individual fairness. We vary the among , reconstruct the fairness graph by connecting each node to its top- similar nodes, and regenerate the fairness hints. We reproduce the experiments with different values for Vanilla, GFairHint, and GFairHint + REDRESS methods on the Arxiv dataset with SAGE as the backbone model. Figure 4 shows the accuracy and fairness (NDCG@K) with varying values of . From the figure, we can observe that Our proposed GFairHint and GFairHint + REDRESS methods consistently outperform the baseline methods with multiple values of . The utility of our methods is minimally affected as the value of k increases. This pattern indicates that GFairHint successfully strikes a suitable balance between preserving model utility and promoting individual fairness across different thresholds of fairness graph construction.