Geometric constraints improve inference of sparsely observed stochastic dynamics

Abstract

The dynamics of systems of many degrees of freedom evolving on multiple scales are often modeled in terms of stochastic differential equations. Usually the structural form of these equations is unknown and the only manifestation of the system’s dynamics are observations at discrete points in time. Despite their widespread use, accurately inferring these systems from sparse-in-time observations remains challenging. Conventional inference methods either focus on the temporal structure of observations, neglecting the geometry of the system’s invariant density, or use geometric approximations of the invariant density, which are limited to conservative driving forces. To address these limitations, here, we introduce a novel approach that reconciles these two perspectives. We propose a path augmentation scheme that employs data-driven control to account for the geometry of the invariant system’s density. Non-parametric inference on the augmented paths, enables efficient identification of the underlying deterministic forces of systems observed at low sampling rates.

1 Introduction

Unraveling a system’s governing equations from time-series observations is often crucial for understanding unexplained natural phenomena. The goal is to find a mathematical representation that aligns with observational data and provides a comprehensive phenomenological understanding of the underlying mechanisms. This requires employing proper representations that capture key system properties while effectively simplify extraneous degrees of freedom. Stochastic differential equations (SDE) provide such a flexible representation (Arnold, 2014; Lande, 1976; Chandrasekhar, 1943; Nelson, 2004), by representing the dominant system forces in the deterministic part of the equation, (drift function) , and summarising the unresolved or irrelevant degrees as stochastic forces acting on the system (diffusion). The resulting evolution equation has the form

| (1) |

where stands for the noise amplitude, and for the d-dimensional vector of independent Wiener processes. The equation should be interpreted according to the Ito formalism. We observe the system state at distinct points in time through , where , with observations collected at inter-observation intervals , and want approximate the drift function from the observations . Here, we will consider , but the method generalises for monotonic functions .

2 Identifying stochastic systems from sparse-in-time observations

For small inter-observation intervals , we consider that we observe the continuous path of the system state . ††margin: High-frequency observations In that case, we can identify the drift function by the first order Kramers-Moyal coefficient (Kramers, 1940; Moyal, 1949; Tabar, 2019) by empirically estimating conditional expectations of state increments (Friedrich et al., 2000; Ragwitz & Kantz, 2001; Boninsegna et al., 2018; Siegert et al., 1998). For the Bayesian non-parametric counterpart of this approach, (Ruttor et al., 2013; Batz et al., 2018) consider that transition probabilities between observations are Gaussian for , resulting in a (Gaussian) likelihood for the drift (see Sec. A Eq. 7)

| (2) |

To identify the drift Ruttor et al. (2013) impose a Gaussian process prior on the function values (Eq. 12). In Eq. 2 we introduced the weighted inner product and weighted norm .

However, as the inter-observation interval increases, the transition probabilities between consecutive observations cannot be considered Gaussian, and thus the likelihood (Eq. 2) assumed between two successive observations is no longer valid if Eq. 1 is non-linear. ††margin: Low-frequency observations The likelihood for the drift for such settings takes the form of a path integral

| (3) |

where denotes the set of discrete time observations, the prior path probability assuming the dynamics of Eq. 1, identifies the formal volume element on the path space, while stands for the likelihood of observations given the latent path .

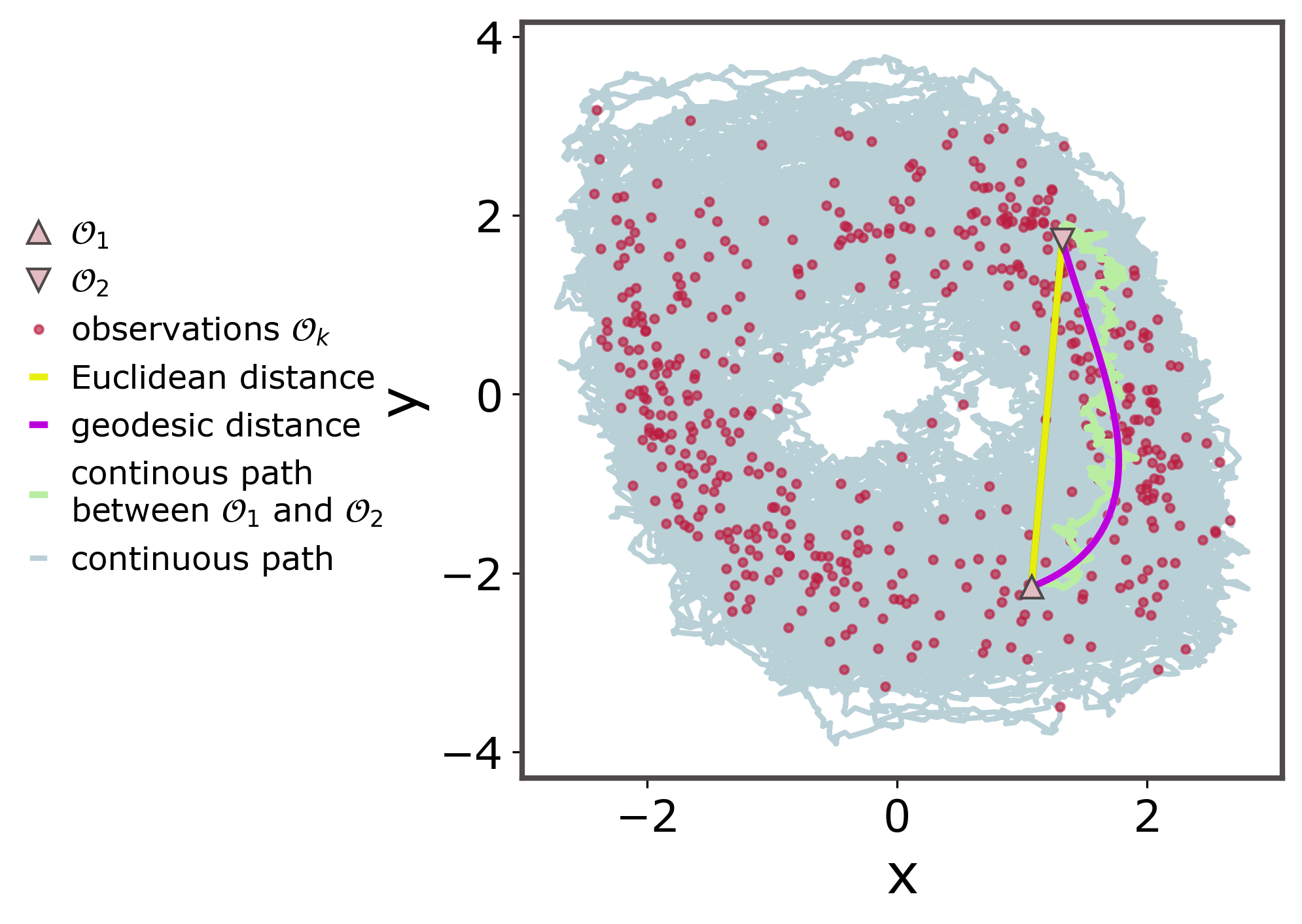

From a geometric perspective, we consider that the nonlinear system dynamics induce an invariant density that may be approximated by a (possibly low dimensional) manifold. The sparse-in-time observations are samples of that manifold. For low-frequency observations, Euclidean distances employed for computing the state increments do not consider the geometry induced by the nonlinear dynamics, and thereby underestimate the curvature of the transition density between consecutive observations (Figure 4).

3 Geometric path augmentation

Since the likelihood of Eq. 3 is intractable, we consider the unobserved continuous path between observations as latent random variables , and obtain a maximum a posteriori estimate for the drift through Expectation Maximisation (EM) (Dempster et al., 1977). Similar parametric (Elerian et al., 2001; Sermaidis et al., 2013) and non-parametric (Batz et al., 2018; Ruttor et al., 2013) methods have addressed the drift inference in the past, primarily in high-frequency observation settings. Our approach is based on the non-parametric method discussed in (Batz et al., 2018; Ruttor et al., 2013), with two significant advancements:

- (i)

-

(ii)

Importantly, we further constrain the augmented paths to align with the geometry of the invariant density between consecutive observations (Fig. 4 b.).

We follow an iterative algorithm, where at each iteration we perform the two following steps:

(1.)

An E(xpectation) step, where given a drift estimate we construct an approximate posterior over the latent variables .

(2.)

A M(aximisation) step, where we update the drift estimation.

Approximate posterior over paths. (E-step)

We approximate the continuous path trajectory between observations by a posterior path measure defined as the minimiser of the free energy

| (4) |

The term forces the latent path to pass through the observations (or close to them depending on the observation process), while guides the latent path towards the geodesic curves that connect consecutive observations on the manifold induced by the system’s invariant density (Sec. A.1.2). Here we denote , where is the geodesic connecting and , and . We identify the geodesic for each interval by learning the local metric of the manifold (see Sec. A.1.2 and Arvanitidis et al. (2019)).

Following Opper (2019), for each inter-observation interval we identify the posterior path measure (minimiser of Eq. 4) by the solution of a stochastic optimal control problem Maoutsa & Opper (2022; 2021); Maoutsa (2022) with the objective to obtain a time-dependent drift adjustment for the system with drift with initial and terminal constraints determined by , and additional path constraints .

Drift estimation. (M-step)

To estimate the drift from a sampled latent path, we assume a Gaussian process prior over function values and employ a sparse kernel approximation similar to Batz et al. (2018) (see Sec. A.2 for details).

4 Numerical experiments

To demonstrate the performance of the proposed method we performed systematic estimations for a two-dimensional Van der Pol oscillator under different noise conditions , observed at different inter-observation intervals for different lengths of trajectories (see Sec. B). For the examined noise amplitudes (Fig. 2 f.), the proposed path augmentation algorithm improves the naive estimation with Gaussian assumptions within two iterations for most noise amplitudes (Fig. 2). For increasing noise the improvement contributed by our approach decreases (Fig. 2f.), but is nevertheless not negligible. For low noise conditions, geodesics approximate the manifold structure better, however the path integral control is limited by the control costs proportional to inverse noise covariance. Our framework had comparable accuracy for all inter-observation lengths, but improvement was small for small lengths since in that setting the estimation with Gaussian likelihood already provides a good approximation of the ground truth drift. The proposed approach was compared to the augmentation method proposed by Batz et al. (2018) (augmentation with Ornstein-Uhlenbeck bridges) and delivered more accurate estimates for larger inter-observation intervals. For inter-observation intervals with only one observation per oscillation period (Figure 3c.,d.), the proposed approach delivered better results by considering additionally knowledge of the direction of movement in the state space (c.f. Sec. B). The variance of estimates of the proposed method was smaller compared to Batz et al. due to conditioning on the invariant geometry of the system.

5 Conclusion and Discussion

We introduced a new method for identifying stochastic systems from sparse-in-time observations of the system’s state that reconciles approaches that rely purely on the temporal structure of the observations with those that approximate the geometry of the invariant density. Our method employs a path augmentation strategy that uses the nonlinear dynamics of a coarse drift estimate and further constrains the augmented paths to follow the local geometry of the system’s invariant density. We found that the proposed approach provides efficient recovery of the underlying drift function for periodic or quasi-periodic systems under several noise conditions. Only a limited number of inference methods have attempted to merge geometric and temporal perspectives for the identification of stochastic systems, such as the Langevin regression (Callaham et al., 2021), TrajectoryNet (Tong et al., 2020), and the diffusion map method of Shnitzer et al. (2020; 2016). However, our method differs from these approaches by employing the geodesic approximation of the underlying data geometry (see also Sec. C Future directions).

Acknowledgements

We thank Mina Miolane for early guidance on extracting geodesics from variational autoencoder based approximation of data manifolds, Stefan Sommer for answering questions on diffusions on manifolds, and Prof. Manfred Opper for prompting us to work on this problem. We further thank Georgios Arvanitidis for maintaining an open repository with his previous work on approximating geodesics from data manifolds. An earlier account of this work has been presented at the NeurIPS 2022 workshop Machine Learning and the Physical Sciences (Maoutsa, 2023). We further acknowledge that previous work from the Python (Van Rossum & Drake Jr, 1995), numpy (Harris et al., 2020), scipy (Virtanen et al., 2020), matplotlib (Hunter, 2007), seaborn (Waskom, 2021), GPflow (Matthews et al., 2017), pyEMD (Pele & Werman, 2009), and pytorch (Paszke et al., 2017) communities facilitated the implementation of the computational part of this work.

An implementation of this work can be found in the following repository: https://github.com/dimitra-maoutsa/Geometric-path-augmentation-for-SDEs.

References

- Arnold (1990) Ludwig Arnold. Stochastic differential equations as dynamical systems. In Realization and Modelling in System Theory, pp. 489–495. Springer, 1990.

- Arnold (2014) Stevan J Arnold. Phenotypic Evolution: The Ongoing Synthesis: (American Society of Naturalists Address), 2014.

- Arvanitidis et al. (2017) Georgios Arvanitidis, Lars Kai Hansen, and Søren Hauberg. Latent space oddity: on the curvature of deep generative models. arXiv preprint arXiv:1710.11379, 2017. doi: https://doi.org/10.48550/arXiv.1710.11379.

- Arvanitidis et al. (2019) Georgios Arvanitidis, Soren Hauberg, Philipp Hennig, and Michael Schober. Fast and robust shortest paths on manifolds learned from data. In The 22nd International Conference on Artificial Intelligence and Statistics, pp. 1506–1515. PMLR, 2019.

- Batz et al. (2018) Philipp Batz, Andreas Ruttor, and Manfred Opper. Approximate Bayes learning of stochastic differential equations. Physical Review E, 98(2):022109, 2018.

- Beskos et al. (2006) Alexandros Beskos, Omiros Papaspiliopoulos, and Gareth O Roberts. Retrospective exact simulation of diffusion sample paths with applications. Bernoulli, 12(6):1077–1098, 2006.

- Boninsegna et al. (2018) Lorenzo Boninsegna, Feliks Nüske, and Cecilia Clementi. Sparse learning of stochastic dynamical equations. The Journal of chemical physics, 148(24):241723, 2018. doi: 10.1063/1.5018409.

- Callaham et al. (2021) Jared L Callaham, J-C Loiseau, Georgios Rigas, and Steven L Brunton. Nonlinear stochastic modelling with Langevin regression. Proceedings of the Royal Society A, 477(2250):20210092, 2021.

- Carverhill (1985) Andrew Carverhill. Flows of stochastic dynamical systems: ergodic theory. Stochastics: An International Journal of Probability and Stochastic Processes, 14(4):273–317, 1985.

- Chandrasekhar (1943) Subrahmanyan Chandrasekhar. Stochastic problems in physics and astronomy. Reviews of modern physics, 15(1):1, 1943.

- Chetrite & Touchette (2015) Raphaël Chetrite and Hugo Touchette. Variational and optimal control representations of conditioned and driven processes. Journal of Statistical Mechanics: Theory and Experiment, 2015(12):P12001, 2015.

- Chib et al. (2006) Siddhartha Chib, Michael K Pitt, and Neil Shephard. Likelihood based inference for diffusion driven state space models. Por Clasificar, pp. 1–33, 2006.

- Cong et al. (1997) Nguyen Dinh Cong et al. Topological dynamics of random dynamical systems. Oxford University Press, 1997.

- Dempster et al. (1977) Arthur P Dempster, Nan M Laird, and Donald B Rubin. Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society: Series B (Methodological), 39(1):1–22, 1977.

- Do Carmo & Flaherty Francis (1992) Manfredo Perdigao Do Carmo and J Flaherty Francis. Riemannian geometry, volume 6. Springer, 1992.

- Elerian et al. (2001) Ola Elerian, Siddhartha Chib, and Neil Shephard. Likelihood inference for discretely observed nonlinear diffusions. Econometrica, 69(4):959–993, 2001.

- Fenichel & Moser (1971) Neil Fenichel and JK Moser. Persistence and smoothness of invariant manifolds for flows. Indiana University Mathematics Journal, 21(3):193–226, 1971.

- Friedrich & Peinke (1997) Rudolf Friedrich and Joachim Peinke. Description of a turbulent cascade by a Fokker-Planck equation. Physical Review Letters, 78(5):863, 1997. doi: https://doi.org/10.1103/PhysRevLett.78.863.

- Friedrich et al. (2000) Rudolf Friedrich, Silke Siegert, Joachim Peinke, Marcus Siefert, Michael Lindemann, Jan Raethjen, Güntner Deuschl, Gerhard Pfister, et al. Extracting model equations from experimental data. Physics Letters A, 271(3):217–222, 2000.

- Fröhlich et al. (2021) Christian Fröhlich, Alexandra Gessner, Philipp Hennig, Bernhard Schölkopf, and Georgios Arvanitidis. Bayesian Quadrature on Riemannian Data Manifolds. In International Conference on Machine Learning, pp. 3459–3468. PMLR, 2021. doi: https://doi.org/10.48550/arXiv.2102.06645.

- Girya & Chueshov (1995) TV Girya and Igor Dmitrievich Chueshov. Inertial manifolds and stationary measures for stochastically perturbed dissipative dynamical systems. Sbornik: Mathematics, 186(1):29–45, 1995.

- Golightly & Wilkinson (2008) Andrew Golightly and Darren J Wilkinson. Bayesian inference for nonlinear multivariate diffusion models observed with error. Computational Statistics & Data Analysis, 52(3):1674–1693, 2008.

- Harris et al. (2020) Charles R. Harris, K. Jarrod Millman, Stéfan J. van der Walt, Ralf Gommers, Pauli Virtanen, David Cournapeau, Eric Wieser, Julian Taylor, Sebastian Berg, Nathaniel J. Smith, Robert Kern, Matti Picus, Stephan Hoyer, Marten H. van Kerkwijk, Matthew Brett, Allan Haldane, Jaime Fernández del Río, Mark Wiebe, Pearu Peterson, Pierre Gérard-Marchant, Kevin Sheppard, Tyler Reddy, Warren Weckesser, Hameer Abbasi, Christoph Gohlke, and Travis E. Oliphant. Array programming with NumPy. Nature, 585(7825):357–362, September 2020. doi: 10.1038/s41586-020-2649-2. URL https://doi.org/10.1038/s41586-020-2649-2.

- Honisch & Friedrich (2011) Christoph Honisch and Rudolf Friedrich. Estimation of Kramers-Moyal coefficients at low sampling rates. Physical Review E, 83(6):066701, 2011.

- Hunter (2007) J. D. Hunter. Matplotlib: A 2d graphics environment. Computing In Science & Engineering, 9(3):90–95, 2007. doi: 10.1109/MCSE.2007.55.

- Kramers (1940) Hendrik Anthony Kramers. Brownian motion in a field of force and the diffusion model of chemical reactions. Physica, 7(4):284–304, 1940.

- Lade (2009) Steven J Lade. Finite sampling interval effects in Kramers–Moyal analysis. Physics Letters A, 373(41):3705–3709, 2009.

- Lande (1976) Russell Lande. Natural selection and random genetic drift in phenotypic evolution. Evolution, pp. 314–334, 1976.

- Lee (2018) John M Lee. Introduction to Riemannian manifolds, volume 176. Springer, 2018.

- Li et al. (2020a) Zongyi Li, Nikola Kovachki, Kamyar Azizzadenesheli, Burigede Liu, Kaushik Bhattacharya, Andrew Stuart, and Anima Anandkumar. Fourier neural operator for parametric partial differential equations. International Conference on Learning Representations, 2020a.

- Li et al. (2020b) Zongyi Li, Nikola Kovachki, Kamyar Azizzadenesheli, Burigede Liu, Kaushik Bhattacharya, Andrew Stuart, and Anima Anandkumar. Neural operator: Graph kernel network for partial differential equations. arXiv preprint arXiv:2003.03485, 2020b.

- Liptser & Shiryaev (2013) Robert S Liptser and Albert N Shiryaev. Statistics of random processes II: Applications, volume 6. Springer Science & Business Media, 2013. doi: 10.1007/978-3-662-10028-8.

- Majumdar & Orland (2015) Satya N Majumdar and Henri Orland. Effective Langevin equations for constrained stochastic processes. Journal of Statistical Mechanics: Theory and Experiment, 2015(6):P06039, 2015.

- Maoutsa (2022) Dimitra Maoutsa. Revealing latent stochastic dynamics from single-trial spike train observations. Bernstein Conference for Computational Neuroscience, Berlin 2022, 09 2022.

- Maoutsa (2023) Dimitra Maoutsa. Geometric path augmentation for inference of sparsely observed stochastic nonlinear systems. arXiv preprint arXiv:2301.08102, 2023.

- Maoutsa & Opper (2021) Dimitra Maoutsa and Manfred Opper. Deterministic particle flows for constraining SDEs. Machine Learning and the Physical Sciences, Workshop at the 35th Conference on Neural Information Processing Systems (NeurIPS), arXiv preprint arXiv:2110.13020, 2021. doi: https://doi.org/10.48550/arXiv.2110.13020.

- Maoutsa & Opper (2022) Dimitra Maoutsa and Manfred Opper. Deterministic particle flows for constraining stochastic nonlinear systems. Phys. Rev. Research, 4:043035, Oct 2022. doi: 10.1103/PhysRevResearch.4.043035. URL https://link.aps.org/doi/10.1103/PhysRevResearch.4.043035.

- Maoutsa et al. (2020) Dimitra Maoutsa, Sebastian Reich, and Manfred Opper. Interacting particle solutions of Fokker–Planck equations through gradient–log–density estimation. Entropy, 22(8):802, 2020.

- Matthews et al. (2017) Alexander G. de G. Matthews, Mark van der Wilk, Tom Nickson, Keisuke. Fujii, Alexis Boukouvalas, Pablo León-Villagrá, Zoubin Ghahramani, and James Hensman. GPflow: A Gaussian process library using TensorFlow. Journal of Machine Learning Research, 18(40):1–6, apr 2017. URL http://jmlr.org/papers/v18/16-537.html.

- Mohammed & Scheutzow (1999) Salah-Eldin A Mohammed and Michael KR Scheutzow. The stable manifold theorem for stochastic differential equations. Annals of probability, pp. 615–652, 1999. doi: https://doi.org/10.1214/aop/1022677380.

- Moyal (1949) Jose Moyal. Stochastic processes and statistical physics. Journal of the Royal Statistical Society. Series B (Methodological), 11(2):150–210, 1949.

- Nelson (2004) Philip Nelson. Biological physics. WH Freeman New York, 2004.

- Opper (2019) Manfred Opper. Variational inference for stochastic differential equations. Annalen der Physik, 531(3):1800233, 2019.

- Papaspiliopoulos et al. (2012) Omiros Papaspiliopoulos, Yvo Pokern, Gareth O Roberts, and Andrew M Stuart. Nonparametric estimation of diffusions: a differential equations approach. Biometrika, 99(3):511–531, 2012.

- Paszke et al. (2017) Adam Paszke, Sam Gross, Soumith Chintala, Gregory Chanan, Edward Yang, Zachary DeVito, Zeming Lin, Alban Desmaison, Luca Antiga, and Adam Lerer. Automatic differentiation in pytorch. 2017.

- Pele & Werman (2009) Ofir Pele and Michael Werman. Fast and robust earth mover’s distances. In 2009 IEEE 12th International Conference on Computer Vision, pp. 460–467. IEEE, September 2009.

- Ragwitz & Kantz (2001) Mario Ragwitz and Holger Kantz. Indispensable finite time corrections for Fokker-Planck equations from time series data. Physical Review Letters, 87(25):254501, 2001. doi: 10.1103/physrevlett.87.254501.

- Rasmussen (2003) Carl Edward Rasmussen. Gaussian processes in machine learning. In Summer School on Machine Learning, pp. 63–71. Springer-Verlag, 2003.

- Ruttor et al. (2013) Andreas Ruttor, Philipp Batz, and Manfred Opper. Approximate Gaussian process inference for the drift function in stochastic differential equations. Advances in Neural Information Processing Systems, 26, 2013.

- Sermaidis et al. (2013) Giorgos Sermaidis, Omiros Papaspiliopoulos, Gareth O Roberts, Alexandros Beskos, and Paul Fearnhead. Markov chain Monte Carlo for exact inference for diffusions. Scandinavian Journal of Statistics, 40(2):294–321, 2013.

- Shnitzer et al. (2016) Tal Shnitzer, Ronen Talmon, and Jean-Jacques Slotine. Manifold learning with contracting observers for data-driven time-series analysis. IEEE Transactions on Signal Processing, 65(4):904–918, 2016. doi: https://doi.org/10.1109/TSP.2016.2616334.

- Shnitzer et al. (2020) Tal Shnitzer, Ronen Talmon, and Jean-Jacques Slotine. Manifold Learning for Data-Driven Dynamical System Analysis. In The Koopman Operator in Systems and Control, pp. 359–382. Springer, 2020.

- Siegert et al. (1998) Silke Siegert, R Friedrich, and J Peinke. Analysis of data sets of stochastic systems. Physics Letters A, 243(5-6):275–280, 1998. doi: 10.1016/S0375-9601(98)00283-7.

- Stark et al. (2003) Jaroslav Stark, David S Broomhead, Michael Evan Davies, and J Huke. Delay embeddings for forced systems. II. Stochastic forcing. Journal of Nonlinear Science, 13:519–577, 2003.

- Tabar (2019) M Tabar. Kramers–Moyal Expansion and Fokker–Planck Equation. In Analysis and Data-Based Reconstruction of Complex Nonlinear Dynamical Systems, pp. 19–29. Springer, 2019.

- Tamir et al. (2023) Ella Tamir, Martin Trapp, and Arno Solin. Transport with Support: Data-Conditional Diffusion Bridges. arXiv preprint arXiv:2301.13636, 2023.

- Tong et al. (2020) Alexander Tong, Jessie Huang, Guy Wolf, David Van Dijk, and Smita Krishnaswamy. TrajectoryNet: A dynamic optimal transport network for modeling cellular dynamics. In International conference on machine learning, pp. 9526–9536. PMLR, 2020.

- Van Rossum & Drake Jr (1995) Guido Van Rossum and Fred L Drake Jr. Python tutorial, volume 620. Centrum voor Wiskunde en Informatica Amsterdam, The Netherlands, 1995.

- Virtanen et al. (2020) Pauli Virtanen, Ralf Gommers, Travis E. Oliphant, Matt Haberland, Tyler Reddy, David Cournapeau, Evgeni Burovski, Pearu Peterson, Warren Weckesser, Jonathan Bright, Stéfan J. van der Walt, Matthew Brett, Joshua Wilson, K. Jarrod Millman, Nikolay Mayorov, Andrew R. J. Nelson, Eric Jones, Robert Kern, Eric Larson, C J Carey, İlhan Polat, Yu Feng, Eric W. Moore, Jake VanderPlas, Denis Laxalde, Josef Perktold, Robert Cimrman, Ian Henriksen, E. A. Quintero, Charles R. Harris, Anne M. Archibald, Antônio H. Ribeiro, Fabian Pedregosa, Paul van Mulbregt, and SciPy 1.0 Contributors. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nature Methods, 17:261–272, 2020. doi: 10.1038/s41592-019-0686-2.

- Wang et al. (2020) Ziyu Wang, Shuyu Cheng, Li Yueru, Jun Zhu, and Bo Zhang. A Wasserstein minimum velocity approach to learning unnormalized models. In International Conference on Artificial Intelligence and Statistics, pp. 3728–3738. PMLR, 2020.

- Waskom (2021) Michael L Waskom. Seaborn: statistical data visualization. Journal of Open Source Software, 6(60):3021, 2021.

- Wiggins (1994) Stephen Wiggins. Normally hyperbolic invariant manifolds in dynamical systems, volume 105. Springer Science & Business Media, 1994.

Appendix A Drift inference for high and low frequency observations

We consider systems whose evolution is captured by the stochastic differential equation Eq. 1.

High frequency observations.

When the system path is observed in continuous time, the infinitesimal transition probabilities of the diffusion process between consecutive observations are Gaussian, i.e.,

| (5) |

In turn, the transition probability of (discretised) Wiener paths (i.e., paths from a drift-less process) can be expressed as

| (6) |

where denotes the weighted norm with indicating the noise covariance. We can thus express the likelihood for the drift by the Radon-Nykodym derivative between and for paths within the time interval (Liptser & Shiryaev, 2013)

| (7) |

where for brevity we have introduced the notation for the weighted inner product with respect to the inverse noise covariance . This expression results from applying the Girsanov theorem on the path measures induced by a process with drift and a Wiener process, with same diffusion , and employing an Euler-Maruyama discretisation on the continuous path .

The likelihood given a continuously observed path of the SDE (Eq. 7) has a quadratic form in terms of the drift function. Therefore a Gaussian measure over function values (Gaussian process) is a natural conjugate prior for this likelihood. To identify the drift in a non-parametric form, we assume a Gaussian process prior for the function values , where and denote the mean and covariance function of the Gaussian process (Ruttor et al., 2013). The prior measure can be written as

| (8) |

if we consider a zero mean Gaussian process .

Bayesian inference for the drift function requires the computation of a probability distribution in the function space, the posterior probability distribution . From the Bayes’ rule the posterior can be expressed as

| (9) |

where denotes a normalising factor defined as a path integral

| (10) |

where denotes integration over the Hilbert space . Here we have expressed the prior probability over functions as . In (Ruttor et al., 2013) the authors show that in the continuous time limit, nonparametric estimation of drift functions becomes equivalent to Gaussian process regression, with the objective to identify the mapping from the system state to state increments (Rasmussen, 2003). More precisely, we consider observations of the system state as the regressor, with associated response variables

| (11) |

and denote the kernel function of the Gaussian process by .

If we denote with and the set of state observations and observation increments, the mean of the posterior process over drift functions can be expressed as

| (12) |

where we abused the notation and denoted with the vector resulting from evaluating the kernel at points and . Similarly stands for the matrix resulting from evaluation of the kernel on all observation pairs. In a similar vein, the posterior variance can be written as

| (13) |

where the term plays the role of observation noise.

Low frequency observations.

When the inter-observation interval becomes large (low frequency observations), the Gaussian likelihood of Eq. 7 becomes invalid, since for large inter-observation intervals the transition density is no longer Gaussian. Thus, drift estimation with Gaussian assumptions (Friedrich & Peinke, 1997; Ruttor et al., 2013) becomes inaccurate. To mitigate this issue Lade (Lade, 2009) introduced a method to compute finite time corrections for the drift estimates, which has been applied (to the best of our knowledge) mostly to one dimensional problems (Honisch & Friedrich, 2011). On the other hand, the statistics community has proposed path augmentation schemes that augment the observed trajectory to a nearly continuous-time trajectory by sampling a simplified system’s dynamics between observations (Golightly & Wilkinson, 2008; Papaspiliopoulos et al., 2012; Sermaidis et al., 2013; Beskos et al., 2006; Chib et al., 2006). However for large inter-observation intervals and for nonlinear systems the simplified dynamics employed for path augmentation match poorly the underlying path statistics, and these methods show poor convergence rates or fail to identify the correct dynamics (Figure 1 c. and d.). We point out here, that path augmentation with Ornstein Uhlenbeck bridges using as drift the local linearisation of the correct dynamics, provides a good approximation of the underlying transition density. However, during inference, the true underlying dynamics are unknown, and the proposed local linearisations on inaccurate drift estimates (Batz et al., 2018) perform poorly for low frequency observations.

Notice that as the inter-observation interval increases, the Gaussian likelihood assumed between two successive observations is no longer valid if the system is non-linear or when the noise is state dependent. The likelihood for the drift for such settings can be expressed in terms of a path integral

| (14) |

where denotes the set of discrete time observations, the prior path probability resulting from a diffusion process with drift , identifies the formal volume element on the path space, and stands for the likelihood of observations given the latent path .

However, the path integral of Eq. 14 is intractable for nonlinear systems, thus we need to simultaneously estimate the drift and latent state of the diffusion process, i.e., to approximate the joint posterior measure of latent paths and drift functions . Therefore we consider the unobserved continuous path as latent random variables and employ an Expectation Maximisation (EM) algorithm to identify a maximum a posteriori estimate for the drift function. More precisely, we follow an iterative algorithm, where at each iteration we alternate between the two following steps:

An Expectation step, where given a drift estimate we construct an approximate posterior over the latent variables , and compute the expected log-likelihood of the augmented path

| (15) |

A Maximisation step, where we update the drift estimation by maximising the expected log likelihood

| (16) |

In Eq. 16 denotes the Gaussian process prior over function values.

A.1 Approximate posterior over paths.

Here we first formulate the approximate posterior over paths (conditional distribution for the path given the observations) by considering only individual observations as constraints (Section A.1.1). However, this approach results computationally taxing calculations during path augmentation, since the observations are atypical states of the initially estimated drift. To overcome this issue, we subsequently extend the formalism (Section A.1.2) to incorporate constraints that consider also the local geometry of the observations.

A.1.1 Approximate posterior over paths without geometric constraints.

Given a drift function (or a drift estimate) we can apply variational techniques to approximate the posterior measure over the latent path conditioned on the observations . We consider that the prior process (the process without considering the observations ) is described by the equation

| (17) |

We will define an approximating (posterior) process that is conditioned on the observations. The conditioned process is also a diffusion process with the same diffusion as Eq. 17 but with a modified, time-dependent drift that accounts for the observations (Chetrite & Touchette, 2015; Majumdar & Orland, 2015). We identify the approximate posterior measure with the posterior measure induced by an approximating process that is conditioned by the observations (Opper, 2019), with governing equation

| (18) |

The effective drift of Eq. 18 may be obtained from the solution of the variational problem of minimising the free energy

| (19) |

By applying the Cameron-Girsanov-Martin theorem we can express the Kullback-Leibler divergence between the two path measures induced by the diffusions with drift and as

| (20) | ||||

| (21) | ||||

| (22) | ||||

| (23) |

where stands for the marginal density for of the approximate process. In the third line we have introduced the random variable . Under the assumption that the function is bounded, piece-wise continuous, and in , follows the distribution , which for a given will result into a constant . Thus the second term in Eq. 23 is not relevant for the minimisation of the free energy and will be omitted.

We can thus express the free energy of Eq. 19 as (Opper, 2019)

| (24) |

where the term accounts for the observations .

The minimisation of the functional of the free energy can be construed as a stochastic control problem (Opper, 2019) with the objective to identify a time-dependent drift adjustment for the system with drift so that the controlled dynamics fulfil the constraints imposed by the observations.

For the case of exact observations, i.e., for an observation process , we can compute the drift adjustment for each of the inter-observation intervals independently. Thus for each interval between consecutive observations, we identify the optimal control required to construct a stochastic bridge following the dynamics of Eq. 17 with initial and terminal states the respective observations and .

The optimal drift adjustment for such a stochastic control problem for the inter-observation interval between and can be obtained from the solution of the backward equation (see (Maoutsa & Opper, 2022; 2021))

| (25) |

with terminal condition and with denoting the adjoint Fokker-Planck operator for the process of Eq. 17. As shown in Maoutsa et al. (Maoutsa & Opper, 2022; 2021) the optimal drift adjustment can be expressed in terms of the difference of the logarithmic gradients of two probability flows

| (26) |

where fulfils the forward (filtering) partial differential equation (PDE)

| (27) |

while is the solution of a time-reversed PDE that depends on the logarithmic gradient of

| (28) |

with initial condition .

A.1.2 Approximate posterior over paths with geometric constraints.

The previously described construction of the approximate measure in terms of stochastic bridges is relevant when the observations have non vanishing probability under the law of the prior diffusion process of Eq. 17. However, when the prior process (with the estimated drift ) differs considerably from the process that generated the observations, such a construction might either provide a bad approximation of the underlying path measure, or show slow numerical convergence in the construction of the diffusion bridges. To overcome this issue, we consider here additional constraints for the posterior process that force the paths of the posterior measure to respect the local geometry of the observations. In the following we provide a brief introduction on the basics of Riemannian geometry and consequently continue with the geometric considerations of the proposed method.

Riemannian geometry.

A -dimensional Riemannian manifold (Do Carmo & Flaherty Francis, 1992; Lee, 2018) embedded in a -dimensional ambient space is a smooth curved -dimensional surface endowed with a smoothly varying inner product (Riemannian) metric on . A tangent space is defined at each point . The Riemannian metric defines a canonical volume measure on the manifold . Intuitively this characterises how to compute inner products locally between points on the tangent space of the manifold , and therefore determines also how to compute norms and thus distances between points on .

A coordinate chart provides the mapping from an open set on to an open set in the Euclidean space. The dimensionality of the manifold is if for each point there exists a local neighborhood . We can represent the metric on the local chart by the positive definite matrix (metric tensor) at each point .

For and , their inner product can be expressed in terms of the matrix representation of the metric on the tangent space as , where .

The length of a curve on the manifold is defined as the integral of the norm of the tangent vector

| (29) |

where the dotted letter indicates the velocity of the curve . A geodesic curve is a locally length minimising smooth curve that connects two given points on the manifold.

Riemannian geometry of the observations.

For approximating the posterior over paths we take into account the geometry of the invariant density as it is represented by the observations. To that end, we consider systems whose dynamics induce invariant (inertial) manifolds that contain the global attractor of the system and on which system trajectories concentrate (Wiggins, 1994; Mohammed & Scheutzow, 1999; Girya & Chueshov, 1995; Fenichel & Moser, 1971; Arnold, 1990; Carverhill, 1985). We assume thus that the continuous-time trajectories of the underlying system concentrates on an invariant manifold of dimensionality (possibly) smaller than . The discrete-time observations are thus samples of the manifold . The central premise of our approach is that unobserved paths between successive observations will be lying either on or in the vicinity of the manifold . In particular, we postulate that unobserved paths should lie in the vicinity of geodesics that connect consecutive observations on . To that end we propose a path augmentation framework that constraints the augmented paths to lie in the vicinity of identified geodesics between consecutive observations.

However, while this view of a lower dimensional manifold embedded in a higher dimensional ambient space helps to build our intuition for the proposed method, for computational purposes we adopt a complementary view inspired by the discussion in (Fröhlich et al., 2021). According to this view, we consider the entire observation space as a smooth Riemannian manifold, , characterised by a Riemannian metric . The effect of the nonlinear geometry of the observations is then captured by the metric . Thus to approximate the geometric structure of the system’s invariant density, we learn the Riemannian metric tensor and compute the geodesics between consecutive observations according to the learned metric. Intuitively according to this view the observations introduce distortions in the way we compute distances on the state space.

In effect this approach does not reduce the dimensionality of the space we operate, but changes the way we compute inner products and thus distances, lengths, and geodesic curves on . The alternative perspective of working on a lower dimensional manifold would strongly depend on the correct assessment of the dimensionality of said manifold. For example, one could use a Variational Autoencoder to approximate the observation manifold and subsequently obtain the Riemannian metric from the embedding of the manifold mediated by the decoder. However, our preliminary results of such an approach revealed that such a method requires considerable fine tuning to adapt to the characteristics of each dynamical system and is sensitive to the estimation of the dimensionality of the approximated manifold.

To learn the Riemannian metric and compute the geodesics we follow the framework proposed by Arvanitidis et al. (2019). In particular, we approximate the local metric induced by the observations at location of the state space, in a non-parametric form by the inverse of the weighted local diagonal covariance computed on the observations as (Arvanitidis et al., 2019)

| (30) |

with weights , and denoting the -th dimensional component of the vector . The parameter ensures non-zero diagonals of the weighted covariance matrix, while characterises the curvature of the manifold.

Between consecutive observations for each interval , we identify the geodesic as the energy minimising curve, i.e., as the minimiser of the kinetic energy functional

| (31) |

where denotes the Lagrangian. The minimising curve of this functional is the same as the minimiser of the curve length functional (Eq. 29), i.e., the geodesic (Do Carmo & Flaherty Francis, 1992).

By applying calculus of variations, the minimising curve of the functional can be obtained from the Euler-Lagrange equations, resulting in the following system of second order differential equations (Arvanitidis et al., 2017; Do Carmo & Flaherty Francis, 1992)

| (32) |

with boundary conditions and , where stands for the Kroenecker product, and denotes the vectorisation operation of matrix through stacking the columns of into a vector. Arvanitidis et al. (2019) obtain the geodesics by approximating the solution of the boundary value problem of Eq. 32 with a probabilistic differential equation solver.

Extended free energy functional.

We denote the collection of individual geodesics by , where is the geodesic connecting and , and denotes a rescaled time variable. Additional to the constraints imposed in the previously explained setting (Sec A.1.1), here we add an extra term in the free energy that accounts for the local geometry of the invariant density, and guides the latent path towards the geodesic curves that connect consecutive observations

| (33) |

Here we denote the observation term by , while stands for a weighting constant that determines the relative weight of the geometric term in the control objective.

Following Opper (2019), for each inter-observation interval we identify the posterior path measure (minimiser of Eq. 33) by the solution of a stochastic optimal control problem (Maoutsa & Opper, 2022; 2021) with the objective to obtain a time-dependent drift adjustment for the system with drift with initial and terminal constraints defined by , and additional path constraints .

A.2 Approximate posterior over drift functions.

For a fixed path measure , the optimal measure for the drift is a Gaussian process given by

| (34) |

with

and

where denotes the marginal constrained density of the state . The function denotes the effective drift.

We assume a Gaussian process prior for the unknown function , i.e., where and denote the mean and covariance function of the Gaussian process. Following Ruttor et al. (Ruttor et al., 2013), we employ a sparse kernel approximation for the drift by optimising the function values over a sparse set of inducing points . We obtain the resulting drift from

| (35) |

where we have defined introduced the notation

| (36) |

| (37) |

We employ a sample based approximation of the densities in Eq. 34 resulting from the particle sampling of the path measure . Thus by representing the densities by samples, we can rewrite the density in terms of a sum of Dirac delta functions centered around the particles positions

and replace the Riemannian integrals with summation over particles. Here represents the position of the -th particle at time point .

Appendix B Details on numerical experiments

We simulated a two dimensional Van der Pol oscillator with drift function

| (38) | ||||

| (39) |

starting from initial condition and under noise amplitudes for total duration of time units. The employed inter-observation intervals . The last inter-observation interval exceeds the half period of the oscillator and thus samples only a single state per period. This resulted in erroneous estimates. In this setting this indicates the upper limit of for which we can provide estimates. However for any inference method, if the observation process samples only one observation per period, identifying the underlying force field without additional assumptions is not possible with temporal methods. The discretisation time-step used for simulation of the ground truth dynamics, and path augmentation . For sampling the controlled bridges we employed particles evolving the associated ordinary differential equation as described in Maoutsa & Opper (2022; 2021); Maoutsa et al. (2020). The logarithmic gradient estimator used inducing points. The sparse Gaussian process for estimating the drift was based on a sparse kernel approximation of points. In the presented simulation we have employed a weighting parameter (Eq. 33). This provides a moderate pull towards the invariant density. The example in Figure 1 was constructed with and provides a better approximation of the transition density, than .

For identifying geodesic curves in settings where only one observation is collected per oscillation period, we have to bias the method that computes the geodesics to identify the geodesic towards the correct direction of movement, that in this case is not always the shortest curve on the manifold between two consecutive observations. To that end we assigned to each observation a phase-like variable and considered for the computation of the geodesics only the observations that had properly valued phases given the direction of movement and the phases of the two end-point observations. For example, for a counter-clockwise rotation and for phases at the end points of the bridge and , we construct the geodesics only on the observations that have phases within thin if , or within if . With this approach we consider essentially only the relevant part of the manifold that aligns with the direction of movement. With the function we denote the function that assigns a phase variable to each observation. Here for the Van der Pol oscillator we considered . An alternative option for assigning phase to each observation is measuring the angle between the Hilbert transform of and itself, and rescaling the values to the interval. Here denotes one dimensional component of the observations.

Appendix C Future directions

The study of topological and geometric properties of invariant densities induced by stochastic dynamical systems is a relatively unexplored area with limited research available. Cong and Huang (Cong et al., 1997) approached the study of topological properties of systems perturbed by noise through the lens of random dynamical systems, which, under certain conditions and assumptions, can be translated to the language of stochastic dynamical systems. This direction has great potential for future investigation, especially in conjunction with delay embedding approaches devised for random dynamical systems (Stark et al., 2003) for estimation of the dimensionality of the invariant manifold.

One potential direction for future research is to directly employ Riemannian Langevin dynamics (Wang et al., 2020) to construct the augmented paths on the approximated metric. Alternatively, future directions for inference at the low sampling rate limit may tackle the problem through an operation learning perspective. Neglecting geometric considerations, constructing augmented paths for each inter-observation interval essentially solves the same control problem multiple times, only with different initial and terminal constraints. Thus neural network operator learning approaches like the neural operator used in Li et al. (2020a; b) that can provide generalizations for different initial and possibly terminal conditions may be relevant for tackling the inference problem through path augmentation in a more computationally efficient way.

Further promising future directions may consider path augmentation in terms of Schrödinger bridges that would account for noisy observations (here we implicitly assume no noise in the observations or small Gaussian noise). Tamir et al. (2023) propose an efficient algorithm for introducing path constraints in the Schrödinger bridge problem that may be employed in our framework to account for geometric inductive biases for inference of stochastic dynamics.

Appendix D Additional figures