Generating Adjacency-Constrained Subgoals in Hierarchical Reinforcement Learning

Abstract

Goal-conditioned hierarchical reinforcement learning (HRL) is a promising approach for scaling up reinforcement learning (RL) techniques. However, it often suffers from training inefficiency as the action space of the high-level, i.e., the goal space, is often large. Searching in a large goal space poses difficulties for both high-level subgoal generation and low-level policy learning. In this paper, we show that this problem can be effectively alleviated by restricting the high-level action space from the whole goal space to a -step adjacent region of the current state using an adjacency constraint. We theoretically prove that the proposed adjacency constraint preserves the optimal hierarchical policy in deterministic MDPs, and show that this constraint can be practically implemented by training an adjacency network that can discriminate between adjacent and non-adjacent subgoals. Experimental results on discrete and continuous control tasks show that incorporating the adjacency constraint improves the performance of state-of-the-art HRL approaches in both deterministic and stochastic environments.-1-1-1Code is available at https://github.com/trzhang0116/HRAC.

1 Introduction

Hierarchical reinforcement learning (HRL) has shown great potentials in scaling up reinforcement learning (RL) methods to tackle large, temporally extended problems with long-term credit assignment and sparse rewards [39, 31, 2]. As one of the prevailing HRL paradigms, goal-conditioned HRL framework [5, 37, 20, 42, 26, 22], which comprises a high-level policy that breaks the original task into a series of subgoals and a low-level policy that aims to reach those subgoals, has recently achieved significant success. However, the effectiveness of goal-conditioned HRL relies on the acquisition of effective and semantically meaningful subgoals, which still remains a key challenge.

As the subgoals can be interpreted as high-level actions, it is feasible to directly train the high-level policy to generate subgoals using external rewards as supervision, which has been widely adopted in previous research [26, 25, 22, 20, 42]. Although these methods require little task-specific design, they often suffer from training inefficiency. This is because the action space of the high-level, i.e., the goal space, is often as large as the state space. The high-level exploration in such a large action space results in inefficient learning. As a consequence, the low-level training also suffers as the agent tries to reach every possible subgoal produced by the high-level policy.

One effective way for handling large action spaces is action space reduction or action elimination. However, it is difficult to perform action space reduction in general scenarios without additional information, since a restricted action set may not be expressive enough to form the optimal policy. There has been limited literature [43, 41, 19] studying action space reduction in RL, and to our knowledge, there is no prior work studying action space reduction in HRL, since the information loss in the goal space can lead to severe performance degradation [25].

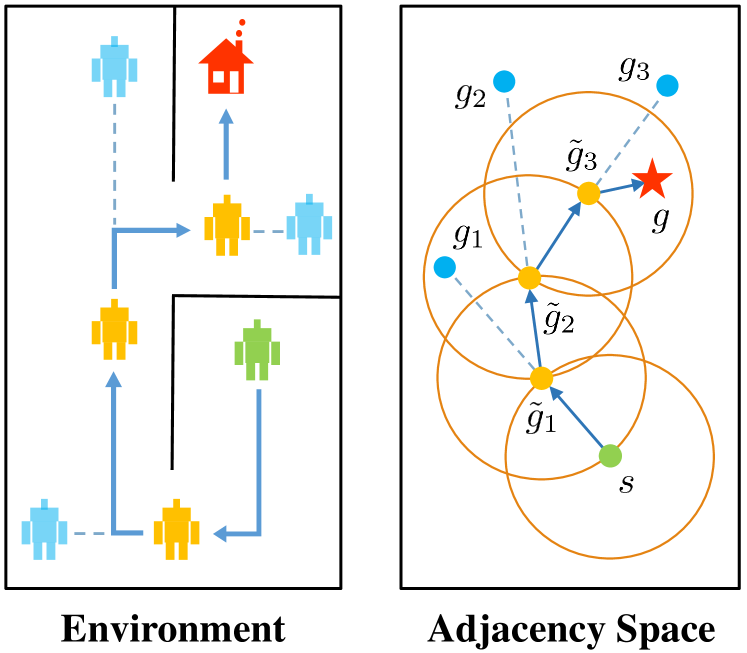

In this paper, we present an optimality-preserving high-level action space reduction method for goal-conditioned HRL. Concretely, we show that the high-level action space can be restricted from the whole goal space to a -step adjacent region centered at the current state. Our main intuition is depicted in Figure 3: distant subgoals can be substituted by closer subgoals, as long as they drive the low-level to move towards the same “direction”. Therefore, given the current state and the subgoal generation frequency , the high-level only needs to explore in a subset of subgoals covering states that the low-level can possibly reach within steps. By reducing the action space of the high-level, the learning efficiency of both the high-level and the low-level can be improved: for the high-level, a considerably smaller action space relieves the burden of exploration and value function approximation; for the low-level, adjacent subgoals provide a stronger learning signal as the agent can be intrinsically rewarded with a higher frequency for reaching these subgoals. Formally, we introduce a -step adjacency constraint for high-level action space reduction, and theoretically prove that the proposed constraint preserves the optimal hierarchical policy in deterministic MDPs. Also, to practically implement the constraint, we propose to train an adjacency network so that the -step adjacency between all states and subgoals can be succinctly derived.

We benchmark our method on various tasks, including discrete control and planning tasks on grid worlds and challenging continuous control tasks based on the MuJoCo simulator [40], which have been widely used in HRL literature [26, 22, 25, 11]. Experimental results exhibit the superiority of our method on both sample efficiency and asymptotic performance compared with the state-of-the-art HRL approach HIRO [26], demonstrating the effectiveness of the proposed adjacency constraint.

2 Preliminaries

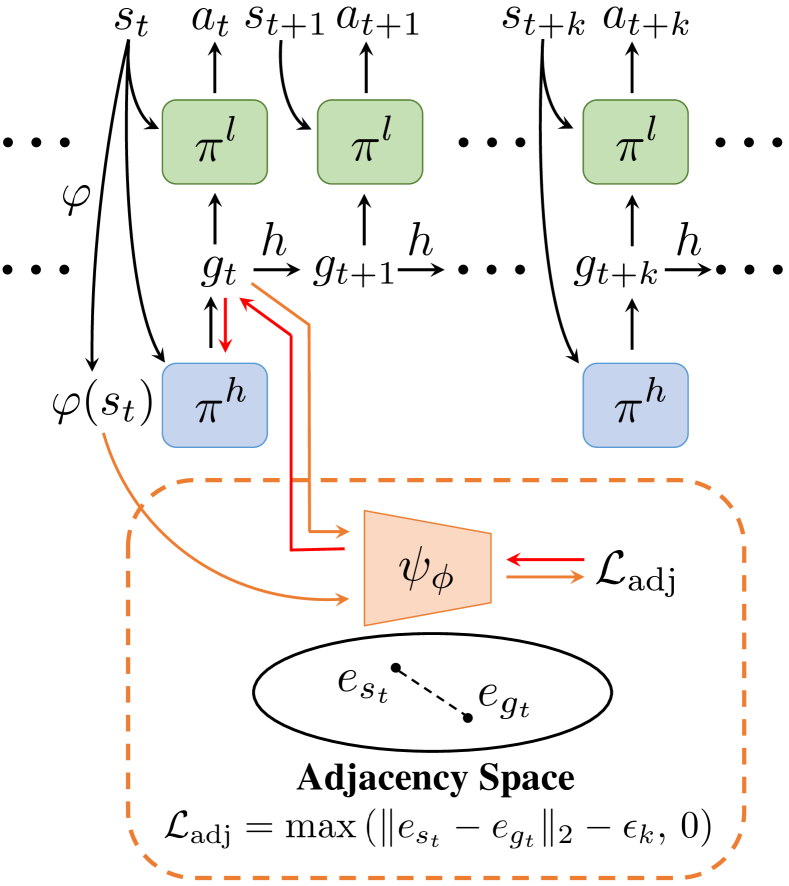

We consider a finite-horizon, goal-conditioned Markov Decision Process (MDP) defined as a tuple , where is a state set, is a goal set, is an action set, is a state transition function, is a reward function, and is a discount factor. Following prior work [20, 42, 26], we consider a framework comprising two hierarchies: a high-level controller with policy and a low-level controller with policy parameterized by two function approximators, e.g., neural networks with parameters and respectively, as shown in Figure 3. The high-level controller aims to maximize the external reward and generates a high-level action, i.e., a subgoal every time steps when , where is a pre-determined hyper-parameter. It modulates the behavior of the low-level policy by intrinsically rewarding the low-level for reaching these subgoals. The low-level aims to maximize the intrinsic reward provided by the high-level, and performs a primary action at every time step. Following prior methods [26, 1], we consider a goal space which is a sub-space of with a known mapping function . When , a pre-defined goal transition process is utilized. We adopt directional subgoals that represent the differences between desired states and current states [42, 26], where the goal transition function is set to . The reward function of the high-level policy is defined as:

| (1) |

which is the accumulation of the external reward in the time interval .

While the high-level controller is motivated by the environmental reward, the low-level controller has no direct access to this external reward. Instead, the low-level is supervised by the intrinsic reward that describes subgoal-reaching performance, defined as , where is a binary or continuous distance function. In practice, we employ Euclidean distance as .

The goal-conditioned HRL framework above enables us to train high-level and low-level policies concurrently in an end-to-end fashion. However, it often suffers from training inefficiency due to the unconstrained subgoal generation process, as we have mentioned in Section 1. In the following section, we will introduce the -step adjacency constraint to mitigate this issue.

3 Theoretical Analysis

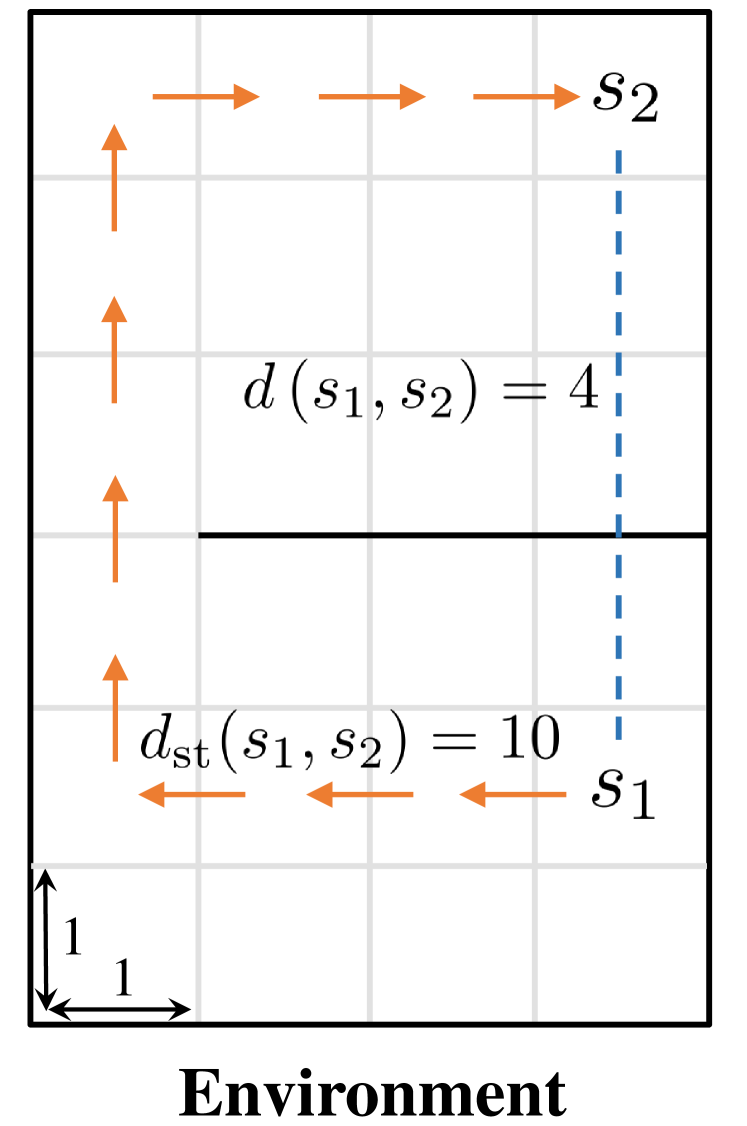

In this section, we provide our theoretical results and show that the optimality can be preserved when learning a high-level policy with -step adjacency constraint. We begin by introducing a distance measure that can decide whether a state is “close” to another state. In this regard, common distance functions such as the Euclidean distance are not suitable, as they often cannot reveal the real structure of the MDP. Therefore, we introduce shortest transition distance, which equals to the minimum number of steps required to reach a target state from a start state, as shown in Figure 3. In stochastic MDPs, the number of steps required is not a fixed number, but a distribution conditioned on a specific policy. In this case, we resort to the notion of first hit time from stochastic processes, and define the shortest transition distance by minimizing the expected first hit time over all possible policies.

Definition 1.

Let . Then, the shortest transition distance from to is defined as:

| (2) |

where is the complete policy set and denotes the first hit time from to .

The shortest transition distance is determined by a policy that connects states and in the most efficient way, which has also been studied by several prior work [10, 8]. This policy is optimal in the sense that it requires the minimum number of steps to reach state from state . Compared with the dynamical distance [15], our definition here does not rely on a specific non-optimal policy. Also, we do not assume that the environment is reversible, i.e., does not hold for all pairs of states. Therefore, the shortest transition distance is a quasi-metric as it does not satisfy the symmetry condition. However, this limitation does not affect the following analysis as we only need to consider the transition from the start state to the goal state without the reversed transition.

Given the definition of the shortest transition distance, we now formulate the property of an optimal (deterministic) goal-conditioned policy [36]. We have:

| (3) |

where is the known inverse mapping of . We then consider the goal-conditioned HRL framework with high-level action frequency . Different from a flat goal-conditioned policy, in this setting the low-level policy is required to reach the subgoals with limited steps. As a result, only a subset of the original states can be reliably reached even with an optimal goal-conditioned policy. We introduce the notion of -step adjacent region to describe the set of subgoals mapped from this reachable subset of states.

Definition 2.

Let . Then, the -step adjacent region of is defined as:

| (4) |

Harnessing the property of , we can show that in deterministic MDPs, given an optimal low-level policy , subgoals that fall in the -step adjacent region of the current state can represent all optimal subgoals in the whole goal space in terms of the induced -step low-level action sequence. We summarize this finding in the following theorem.

Theorem 1.

Let and let be an optimal goal-conditioned policy. Under the assumptions that the MDP is deterministic and that the MDP states are strongly connected, for all satisfying , there exists a surrogate goal such that:

| (5) | ||||

where is the -step state trajectory starting from state under and .

Theorem 1 suggests that the -step low-level action sequence generated by an optimal low-level policy conditioned on a distant subgoal can be induced using a subgoal that is closer. Naturally, we can generalize this result to a two-level goal-conditioned HRL framework, where the low-level is actuated not by a single subgoal, but by a subgoal sequence produced by the high-level policy.

Theorem 2.

Given the high-level action frequency and the high-level planning horizon , for , let be the high-level subgoal trajectory starting from state under an optimal high-level policy . Also, let be the high-level state trajectory under and an optimal low-level policy . Then, there exists a surrogate subgoal trajectory such that:

| (6) | ||||

where is the optimal high-level -function under policy .

Theorem 1 and 2 show that we can constrain the high-level action space to state-wise -step adjacent regions without the loss of optimality. We formulate the high-level objective incorporating this -step adjacency constraint as:

| (7) |

where is the high-level reward defined by Equation (1) and .

In practice, Equation (7) is hard to optimize due to the strict constraint. Therefore, we employ relaxation methods and derive the following un-constrained optimizing objective:

| (8) |

where is a hinge loss function and is a balancing coefficient.

One limitation of our theoretical results is that the theorems are derived in the context of deterministic MDPs. However, these theorems are instructive for practical algorithm design in general cases, and the deterministic assumption has also been exploited by some prior works that investigate distance metrics in MDPs [15, 3]. Also, we note that many real-world applications can be approximated as environments with deterministic dynamics where the stochasticity is mainly induced by noise. Hence, we may infer that the adjacency constraint could preserve a near-optimal policy when the magnitude of noise is small. Empirically, we show that our method is robust to certain types of stochasticity (see Section 5 for details), and we leave rigorous theoretical analysis for future work.

4 HRL with Adjacency Constraint

Although we have formulated the adjacency constraint in Section 3, the exact calculation of the shortest transition distance between two arbitrary states remains complex and non-differentiable. In this section, we introduce a simple method to collect and aggregate the adjacency information from the environment interactions. We then train an adjacency network using the aggregated adjacency information to approximate the shortest transition distance in a parameterized form, which enables a practical optimization of Equation (8).

4.1 Parameterized Approximation of Shortest Transition Distances

As shown in prior research [30, 10, 8, 15], accurately computing the shortest transition distance is not easy and often has the same complexity as learning an optimal low-level goal-conditioned policy. However, from the perspective of goal-conditioned HRL, we do not need a perfect shortest transition distance measure or a low-level policy that can reach any distant subgoals. Instead, only a discriminator of -step adjacency is needed, and it is enough to learn a low-level policy that can reliably reach nearby subgoals (more accurately, subgoals that fall into the -step adjacent region of the current state) rather than all potential subgoals in the goal space.

Given the above, here we introduce a simple approach to determine whether a subgoal satisfies the -step adjacency constraint. We first note that Equation (2) can be approximated as follows:

| (9) |

where is a finite policy set containing different deterministic policies. Obviously, if these policies are diverse enough, we can effectively approximate the shortest transition distance with a sufficiently large . However, training a set of diverse policies separately is costly, and using one single policy to approximate the policy set () [34, 35] often leads to non-optimality. To handle this difficulty, we exploit the fact that the low-level policy itself changes over time during the training procedure. We can thus build a policy set by sampling policies that emerge in different training stages. To aggregate the adjacency information gathered by multiple policies, we propose to explicitly memorize the adjacency information by constructing a binary -step adjacency matrix of the explored states. The adjacency matrix has the same size as the number of explored states, and each element represents whether two states are -step adjacent. In practice, we use the agent’s trajectories, where the temporal distances between states can indicate their adjacency, to construct and update the adjacency matrix online. More details are in the supplementary material.

In practice, using an adjacency matrix is not enough as this procedure is non-differentiable and cannot generalize to newly-visited states. To this end, we further distill the adjacency information stored in a constructed adjacency matrix into an adjacency network parameterized by . The adjacency network learns a mapping from the goal space to an adjacency space, where the Euclidean distance between the state and the goal is consistent with their shortest transition distance:

| (10) |

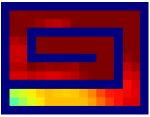

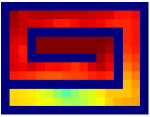

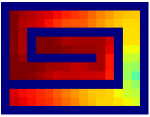

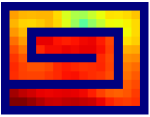

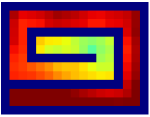

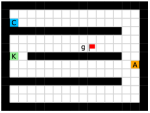

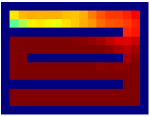

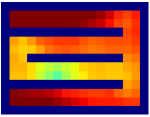

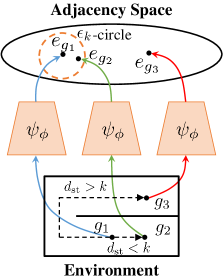

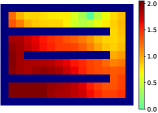

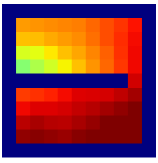

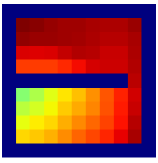

where and is a scaling factor. As we have mentioned above, it is hard to regress the Euclidean distance in the adjacency space to the shortest transition distance accurately, and we only need to ensure a binary relation for implementing the adjacency constraint, i.e., for , and for , as shown in Figure 4. Inspired by modern metric learning approaches [14], we adopt a contrastive-like loss function for this distillation process:

| (11) | ||||

where , , and a hyper-parameter is used to create a gap between the embeddings. represents the label indicating -step adjacency derived from the -step adjacency matrix. Equation (11) penalizes adjacent state embeddings () with large Euclidean distances in the adjacency space and non-adjacent state embeddings () with small Euclidean distances. In practice, we use states evenly-sampled from the adjacency matrix to approximate the expectation, and train the adjacency network each time after the adjacency matrix is updated with newly-sampled trajectories.

Although the construction of an adjacency matrix limits our method to tasks with tabular state spaces, our method can also handle continuous state spaces using goal space discretization (see our continuous control experiments in Section 5). For applications with vast state spaces, constructing a complete adjacency matrix will be problematic, but it is still possible to scale our method to these scenarios using specific feature construction or dimension reduction methods [28, 29, 7], or substituting the distance learning procedure with more accurate distance learning algorithms [10, 8] at the cost of some learning efficiency. We consider possible extensions in this direction as our future work.

4.2 Combining HRL and Adjacency Constraint

With a learned adjacency network , we can now incorporate the adjacency constraint into the goal-conditioned HRL framework. According to Equation (8), we introduce an adjacency loss to replace the original strict adjacency constraint and minimize the following high-level objective:

| (12) |

where is the balancing coefficient, and is derived by replacing with defined by Equation (10) in the second term of Equation (8):

| (13) |

where . Equation (13) will output a non-zero value when the generated subgoal and the current state have an Euclidean distance larger than in the adjacency space, indicating non-adjacency. It is thus consistent with the -step adjacency constraint. In practice, we plug as an extra loss term into the original policy loss term of a specific high-level RL algorithm, e.g., TD error for temporal-difference learning methods.

5 Experimental Evaluation

We have presented our method of Hierarchical Reinforcement learning with -step Adjacency Constraint (HRAC). Our experiments are designed to answer the following questions: (1) Can HRAC promote the generation of adjacent subgoals? (2) Can HRAC improve the sample efficiency and overall performance of goal-conditioned HRL? (3) Can HRAC outperform other strategies that may also improve the learning efficiency of hierarchical agents, e.g., hindsight experience replay [1]?

5.1 Environment Setup

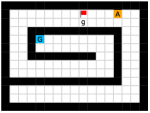

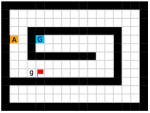

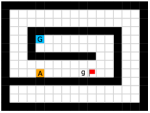

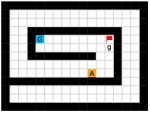

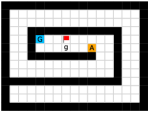

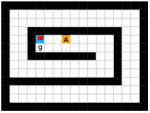

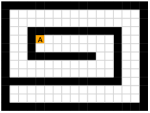

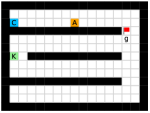

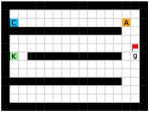

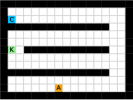

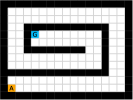

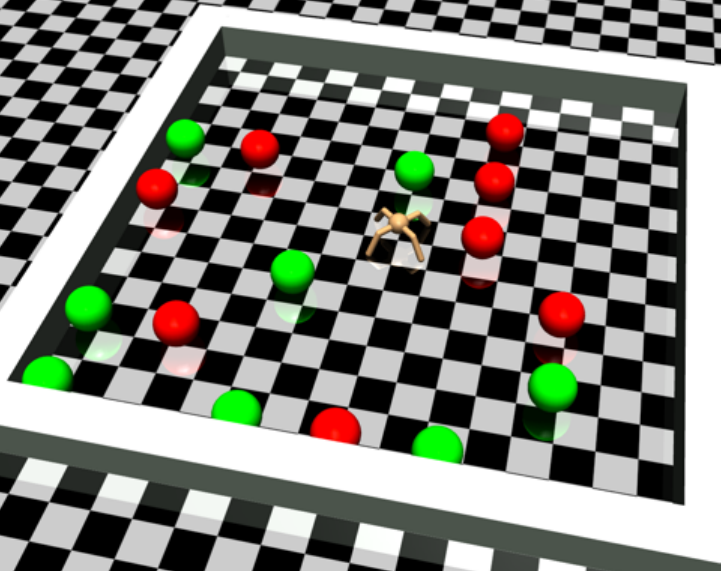

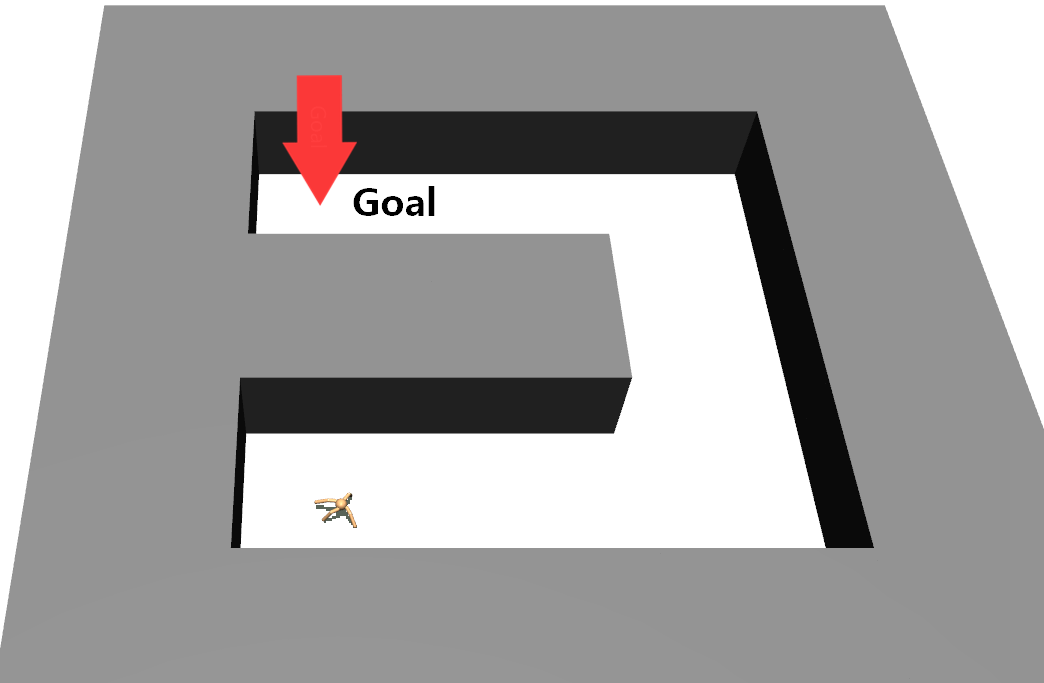

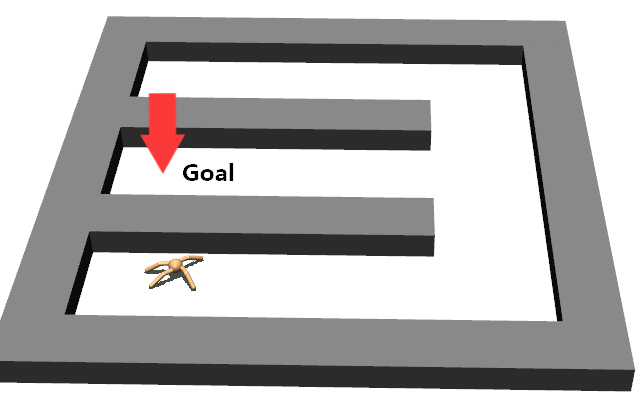

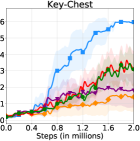

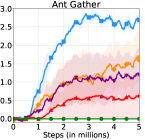

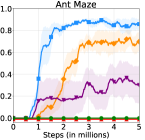

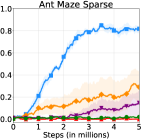

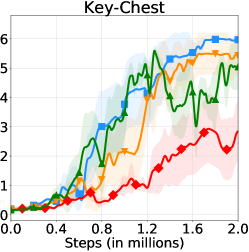

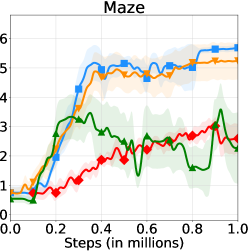

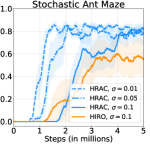

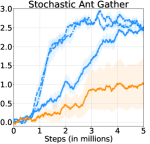

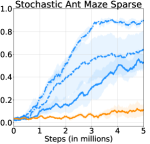

We employed two types of tasks with discrete and continuous state and action spaces to evaluate the effectiveness of our method, as shown in Figure 5. Discrete tasks include Key-Chest and Maze, where the agents are spawned in grid worlds with injected stochasticity and need to accomplish tasks that require both low-level control and high-level planning. Continuous tasks include Ant Gather, Ant Maze and Ant Maze Sparse, where the first two tasks are widely-used benchmarks in HRL community [6, 11, 26, 25, 22], and the third task is a more challenging navigation task with sparse rewards. In all tasks, we used a pre-defined 2-dimensional goal space that represents the position of the agent. More details of the environments are in the supplementary material.

5.2 Comparative Experiments

To comprehensively evaluate the performance of HRAC with different HRL implementations, we employed two different HRL instances for different tasks. On discrete tasks, we used off-policy TD3 [13] for high-level training and on-policy A2C, the syncrhonous version of A3C [24], for the low-level. On continuous tasks, we used TD3 for both the high-level and the low-level training, following prior work [26], and discretized the goal space to grids for adjacency learning.

We compared HRAC with the following baselines. (1) HIRO [26]: one of the state-of-the-art goal-conditioned HRL approaches. (2) HIRO-B: a baseline analagous to HIRO, using binary intrinsic reward for subgoal reaching instead of the shaped reward used by HIRO. (3) HRL-HER: a baseline that employs hindsight experience replay (HER) [1] to produce alternative successful subgoal-reaching experiences as complementary low-level learning signals [22]. (4) Vanilla: Kulkarni et al. [20] used absolute subgoals instead of directional subgoals and adopted a binary intrinsic reward setting. More details of the baselines are in the supplementary material.

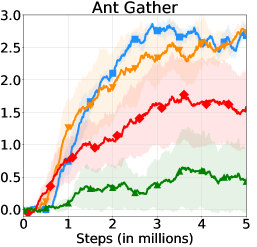

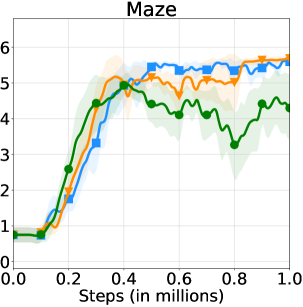

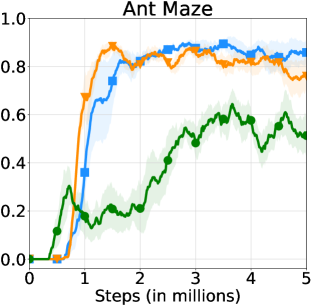

The learning curves of HRAC and baselines across all tasks are plotted in Figure 6. In the Maze task with dense rewards, HRAC achieves comparable performance with HIRO and outperforms other baselines, while in other tasks HRAC consistently surpasses all baselines both in sample efficiency and asymptotic performance. We note that the performance of the baseline HRL-HER matches the results in the previous study [26] where introducing hindsight techniques often degrades the performance of HRL, potentially due to the additional burden introduced on low-level training.

5.3 Ablation Study and Visualizations

We also compared HRAC with several variants to investigate the effectiveness of each component. (1) HRAC-O: An oracle variant that uses a perfect adjacency matrix directly obtained from the environment. We note that compared to other methods, this variant uses the additional information that is not available in many applications. (2) NoAdj: A variant that uses an adjacency training method analagous to the work of Savinov et al. [34, 35], where no adjacency matrix is maintained. The adjacency network is trained using state-pairs directly sampled from stored trajectories, under the same training budget as HRAC. (3) NegReward: This variant implements the -step adjacency constraint by penalizing the high-level with a negative reward when it generates non-adjacent subgoals, which is used by HAC [22].

We provide learning curves of HRAC and these variants in Figure 8. In all tasks, HRAC yields similar performance with the oracle variant HRAC-O while surpassing the NoAdj variant by a large margin, exhibiting the effectiveness of our adjacency learning method. Meanwhile, HRAC achieves better performance than the NegReward variant, suggesting the superiority of implementing the adjacency constraint using a differentiable adjacency loss, which provides a stronger supervision than a penalty. We also empirically studied the effect of different balancing coefficients . Results are shown in Figure 8, which suggests that generally a large can lead to better and more stable performance.

Finally, we visualize the subgoals generated by the high-level policy and the adjacency heatmap in Figure 9. Visualizations indicate that the agent does learn to generate adjacent and interpretable subgoals. We provide additional visualizations in the supplementary material.

5.4 Empirical Study in Stochastic Environments

To empirically verify the stochasticity robustness of HRAC, we applied it to a set of stochastic tasks, including stochastic Ant Gather, Ant Maze and Ant Maze Sparse tasks, which are modified from the original ant tasks respectively. Concretely, we added Gaussian noise with different standard deviations to the position of the ant robot at every step, including and , representing increasing environmental stochasticity. In these tasks we compare HRAC with the baseline HIRO, which has exhibited generally better performance than other baselines, in the most noisy scenario when . As displayed in Figure 10, HRAC achieves similar asymptotic performances with different noise magnitudes in stochastic Ant Gather and Ant Maze tasks and consistently outperforms HIRO, exhibiting robustness to stochastic environments.

6 Related Work

Effectively learning policies with multiple hierarchies has been a long-standing problem in RL. Goal-conditioned HRL [5, 37, 20, 42, 26, 22] aims to resolve this problem with a framework that separates high-level planning and low-level control using subgoals. Recent advances in goal-conditioned HRL mainly focus on improving the learning efficiency of this framework. Nachum et al. [26, 25] proposed an off-policy correction technique to stabilize training, and addressed the problem of goal space representation learning using a mutual-information-based objective. However, the subgoal generation process in their approaches is unconstrained and supervised only by the external reward, and thus these methods may still suffer from training inefficiency. Levy et al. [22] used hindsight techniques [1] to train multi-level policies in parallel and also penalized the high-level for generating subgoals that the low-level failed to reach. However, their method has no theoretical guarantee, and they directly obtain the reachability measure from the environment, using the environmental information that is not available in many scenarios. There are also prior works focusing on unsupervised acquisition of subgoals based on potentially pivotal states [23, 18, 21, 34, 32, 17]. However, these subgoals are not guaranteed to be well-aligned with the downstream tasks and thus are often sub-optimal.

Several prior works have constructed an environmental graph for high-level planning used search nearby graph nodes as reachable subgoals for the low-level [34, 8, 17, 44]. However, these approaches hard-coded the high-level planning process based on domain-specific knowledge, e.g., treat the planning process as solving a shortest-path problem in the graph instead of a learning problem, and thus are limited in scalability. Nasiriany et al. [29] used goal-conditioned value functions to measure the feasibility of subgoals, but a pre-trained goal-conditioned policy is required. A more general topic of goal generation in RL has also been studied in the literature [12, 28, 33]. However, these methods only have a flat architecture and therefore cannot successfully solve tasks that require complex high-level planning.

Meanwhile, our method relates to previous research that studied transition distance or reachability [30, 34, 35, 10, 15]. Most of these works learn the transition distance based on RL [30, 10, 15], which tend to have a high learning cost. Savinov et al. [34, 35] proposed a supervised learning approach for reachability learning. However, the metric they learned depends on a certain policy used for interaction and thus could be sub-optimal compared to our learning method. There are also other metrics that can reflect state similarities in MDPs, such as successor represention [4, 21] that depends on both the environmental dynamics and a specific policy, and bisimulation metrics [9, 3] that depend on both the dynamics and the rewards. Compared to these metrics, the shortest transition distance depends only on the dynamics and therefore may be seamlessly applied to multi-task settings.

7 Conclusion

We present a novel -step adjacency constraint for goal-conditioned HRL framework to mitigate the issue of training inefficiency, with the theoretical guarantee of preserving the optimal policy in deterministic MDPs. We show that the proposed adjacency constraint can be practically implemented with an adjacency network. Experiments on several testbeds with discrete and continuous state and action spaces demonstrate the effectiveness and robustness of our method.

As one of the most promising directions for scaling up RL, goal-conditioned HRL provides an appealing paradigm for handling large-scale problems. However, some key issues involving how to devise effective and interpretable hierarchies remain to be solved, such as how to empower the high-level policy to learn and explore in a more semantically meaningful action space [27], and how to enable the subgoals to be shared and reused in multi-task settings. Other future work includes extending our method to tasks with high-dimensional state spaces, e.g., by encompassing modern representation learning schemes [16, 25, 38], and leveraging the adjacency network to improve the learning efficiency in more general scenarios.

Broader Impact

This work may promote the research in the field of HRL and RL, and has potential real-world applications such as robotics. The main uncertainty of the proposed method might be the fact that the RL training process itself is somewhat brittle, and may break in counterintuitive ways when the reward function is misspecified. Also, since the training data of RL heavily depends on the training environments, designing unbiased simulators or real-world training environments is important for eliminating the biases in the data collected by the agents.

Acknowledgments and Disclosure of Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 61671266, Grant 61836004, Grant 61836014 and in part by the Tsinghua-Guoqiang research program under Grant 2019GQG0006. The authors would also like to thank the anonymous reviewers for their careful reading and their many insightful comments.

References

- [1] Marcin Andrychowicz, Filip Wolski, Alex Ray, Jonas Schneider, Rachel Fong, Peter Welinder, Bob McGrew, Josh Tobin, Pieter Abbeel, and Wojciech Zaremba. Hindsight experience replay. In Advances in Neural Information Processing Systems, 2017.

- [2] Andrew G. Barto and Sridhar Mahadevan. Recent advances in hierarchical reinforcement learning. Discrete event dynamic systems, 13(1-2):41–77, 2003.

- [3] Pablo Samuel Castro. Scalable methods for computing state similarity in deterministic Markov Decision Processes. In AAAI, 2020.

- [4] Peter Dayan. Improving generalization for temporal difference learning: The successor representation. Neural Computation, 5(4):613–624, 1993.

- [5] Peter Dayan and Geoffrey E Hinton. Feudal reinforcement learning. In Advances in Neural Information Processing Systems, 1993.

- [6] Yan Duan, Xi Chen, Rein Houthooft, John Schulman, and Pieter Abbeel. Benchmarking deep reinforcement learning for continuous control. In ICML, 2016.

- [7] Adrien Ecoffet, Joost Huizinga, Joel Lehman, Kenneth O. Stanley, and Jeff Clune. Go-Explore: A new approach for hard-exploration problems. arXiv preprint arXiv:1901.10995, 2019.

- [8] Ben Eysenbach, Russ R Salakhutdinov, and Sergey Levine. Search on the replay buffer: Bridging planning and reinforcement learning. In Advances in Neural Information Processing Systems, 2019.

- [9] Norm Ferns, Prakash Panangaden, and Doina Precup. Metrics for finite Markov Decision Processes. In AAAI, 2004.

- [10] Carlos Florensa, Jonas Degrave, Nicolas Heess, Jost Tobias Springenberg, and Martin Riedmiller. Self-supervised learning of image embedding for continuous control. arXiv preprint arXiv:1901.00943, 2019.

- [11] Carlos Florensa, Yan Duan, and Pieter Abbeel. Stochastic neural networks for hierarchical reinforcement learning. In ICLR, 2017.

- [12] Carlos Florensa, David Held, Xinyang Geng, and Pieter Abbeel. Automatic goal generation for reinforcement learning agents. In ICML, 2018.

- [13] Scott Fujimoto, Herke van Hoof, and David Meger. Addressing function approximation error in actor-critic methods. In ICML, 2018.

- [14] R. Hadsell, S. Chopra, and Y. LeCun. Dimensionality reduction by learning an invariant mapping. In CVPR, 2006.

- [15] Kristian Hartikainen, Xinyang Geng, Tuomas Haarnoja, and Sergey Levine. Dynamical distance learning for semi-supervised and unsupervised skill discovery. In ICLR, 2020.

- [16] Irina Higgins, Loic Matthey, Arka Pal, Christopher Burgess, Xavier Glorot, Matthew Botvinick, Shakir Mohamed, and Alexander Lerchner. Beta-VAE: Learning basic visual concepts with a constrained variational framework. In ICLR, 2017.

- [17] Zhiao Huang, Fangchen Liu, and Hao Su. Mapping state space using landmarks for universal goal reaching. In Advances in Neural Information Processing Systems, 2019.

- [18] Özgür Şimşek, Alicia P. Wolfe, and Andrew G. Barto. Identifying useful subgoals in reinforcement learning by local graph partitioning. In ICML, 2005.

- [19] Khimya Khetarpal, Zafarali Ahmed, Gheorghe Comanici, David Abel, and Doina Precup. What can I do here? A theory of affordances in reinforcement learning. In ICML, 2020.

- [20] Tejas D. Kulkarni, Karthik Narasimhan, Ardavan Saeedi, and Josh Tenenbaum. Hierarchical deep reinforcement learning: Integrating temporal abstraction and intrinsic motivation. In Advances in Neural Information Processing Systems, 2016.

- [21] Tejas D. Kulkarni, Ardavan Saeedi, Simanta Gautam, and Samuel J. Gershman. Deep successor reinforcement learning. arXiv preprint arXiv:1606.02396, 2016.

- [22] Andrew Levy, George Konidaris, Robert Platt, and Kate Saenko. Learning multi-level hierarchies with hindsight. In ICLR, 2019.

- [23] Amy McGovern and Andrew G. Barto. Automatic discovery of subgoals in reinforcement learning using diverse density. In ICML, 2001.

- [24] Volodymyr Mnih, Adria Puigdomenech Badia, Mehdi Mirza, Alex Graves, Timothy Lillicrap, Tim Harley, David Silver, and Koray Kavukcuoglu. Asynchronous methods for deep reinforcement learning. In ICML, 2016.

- [25] Ofir Nachum, Shixiang Gu, Honglak Lee, and Sergey Levine. Near-optimal representation learning for hierarchical reinforcement learning. In ICLR, 2019.

- [26] Ofir Nachum, Shixiang Shane Gu, Honglak Lee, and Sergey Levine. Data-efficient hierarchical reinforcement learning. In Advances in Neural Information Processing Systems, 2018.

- [27] Ofir Nachum, Haoran Tang, Xingyu Lu, Shixiang Gu, Honglak Lee, and Sergey Levine. Why does hierarchy (sometimes) work so well in reinforcement learning? arXiv preprint arXiv:1909.10618, 2019.

- [28] Ashvin V Nair, Vitchyr Pong, Murtaza Dalal, Shikhar Bahl, Steven Lin, and Sergey Levine. Visual reinforcement learning with imagined goals. In Advances in Neural Information Processing Systems, 2018.

- [29] Soroush Nasiriany, Vitchyr H. Pong, Steven Lin, and Sergey Levine. Planning with goal-conditioned policies. In Advances in Neural Information Processing Systems, 2019.

- [30] Vitchyr Pong, Shixiang Gu, Murtaza Dalal, and Sergey Levine. Temporal difference models: Model-free deep RL for model-based control. In ICLR, 2018.

- [31] Doina Precup. Temporal abstraction in reinforcement learning. PhD thesis, University of Massachusetts, Amherst, 2000.

- [32] Jacob Rafati and David C Noelle. Unsupervised methods for subgoal discovery during intrinsic motivation in model-free hierarchical reinforcement learning. In AAAI, 2019.

- [33] Zhizhou Ren, Kefan Dong, Yuan Zhou, Qiang Liu, and Jian Peng. Exploration via hindsight goal generation. In Advances in Neural Information Processing Systems, 2019.

- [34] Nikolay Savinov, Alexey Dosovitskiy, and Vladlen Koltun. Semi-parametric topological memory for navigation. In ICLR, 2018.

- [35] Nikolay Savinov, Anton Raichuk, Raphaël Marinier, Damien Vincent, Marc Pollefeys, Timothy Lillicrap, and Sylvain Gelly. Episodic curiosity through reachability. In ICLR, 2019.

- [36] Tom Schaul, Daniel Horgan, Karol Gregor, and David Silver. Universal value function approximators. In ICML, 2015.

- [37] Jürgen Schmidhuber and Reiner Wahnsiedler. Planning simple trajectories using neural subgoal generators. In From Animals to Animats 2: Proceedings of the Second International Conference on Simulation of Adaptive Behavior, volume 2, page 196. MIT Press, 1993.

- [38] Aravind Srinivas, Michael Laskin, and Pieter Abbeel. CURL: Contrastive unsupervised representations for reinforcement learning. In ICML, 2020.

- [39] Richard S. Sutton, Doina Precup, and Satinder Singh. Between MDPs and semi-MDPs: A framework for temporal abstraction in reinforcement learning. Artificial Intelligence, 112(1-2):181–211, 1999.

- [40] Emanuel Todorov, Tom Erez, and Yuval Tassa. Mujoco: A physics engine for model-based control. In International Conference on Intelligent Robots and Systems, 2012.

- [41] Tom Van de Wiele, David Warde-Farley, Andriy Mnih, and Volodymyr Mnih. Q-learning in enormous action spaces via amortized approximate maximization. In ICLR, 2020.

- [42] Alexander Sasha Vezhnevets, Simon Osindero, Tom Schaul, Nicolas Heess, Max Jaderberg, David Silver, and Koray Kavukcuoglu. FeUdal networks for hierarchical reinforcement learning. In ICML, 2017.

- [43] Tom Zahavy, Matan Haroush, Nadav Merlis, Daniel J Mankowitz, and Shie Mannor. Learn what not to learn: Action elimination with deep reinforcement learning. In Advances in Neural Information Processing Systems, 2018.

- [44] Amy Zhang, Adam Lerer, Sainbayar Sukhbaatar, Rob Fergus, and Arthur Szlam. Composable planning with attributes. In ICML, 2018.

Appendix A Proofs of Theorems

A.1 Proof of Theorem 1

Proof.

Under the assumption that the MDP is deterministic and all states are strongly connected, there exists at least one shortest state trajectory from to . Without loss of generality, we consider one shortest state trajectory , where and . For all and , let , and let be the -step sub-trajectory of from to . Since and is connected by in steps, we have that , i.e., . In the following, we will prove that .

We first prove that the shortest transition distance satisfies the triangle inequality, i.e., consider three arbitrary states , then : let be one shortest state trajectory between and and let be one shortest state trajectory between and . We can concatenate and to form a trajectory that connects and . Then, by Definition 1 we have .

Using the triangle inequality, we can prove that the sub-trajectory is also a shortest trajectory from to : assume that this is not true and there exists a shorter trajectory from to . Then, by Definition 1 we have . Since is a valid trajectory from to , we have . Applying the triangle inequality, we have , which is in contradiction with . Thus, our original assumption must be false, and the trajectory is a shortest trajectory from to .

Finally, let be an inverse dynamics model, i.e., given state and the next state , outputs the action that is performed at to reach . Then, employing Equation (3), for we have given that is a shortest trajectory from to , and given that is a shortest trajectory from to . This indicates that . ∎

A.2 Proof of Theorem 2

Proof.

Using Theorem 1, we have that for each subgoal , there exists a subgoal that can induce the same low-level -step action sequence as . This indicates that the agent’s trajectory and the high-level reward defined by Equation (1) remain the same for all when replacing with . Then, using the high-level Bellman optimality equation for the optimal function

| (14) | ||||

and as is the final state of , we have . ∎

Appendix B Implementation Details

B.1 Adjacency Learning

Constructing and updating the adjacency matrix.

We use the agent’s trajectories to construct and update the adjacency matrix. Concretely, the adjacency matrix is initialized to an empty matrix at the beginning of training. Each time when the agent explores a new state that it has never visited before, the adjacency matrix is augmented by a new row and a new column with zero elements, representing the -step adjacent relation between the new state and explored states. When the temporal distance between two states in one trajectory is not larger than , then the corresponding element in the adjacency matrix will be labeled to 1, indicating the adjacency. (The diagnoal of the adjacency matrix will always be labeled to 1.) Although the temporal distance between two states based on a single trajectory is often larger than the real shortest transition distance, it can be easily shown that the adjacency matrix with this labeling strategy can converge to the optimal adjacency matrix asymptotically with sufficient trajectories sampled by different policies. In practice, we employ a trajectory buffer to store newly-sampled trajectories, and update the adjacency matrix online in a fixed frequency using the stored trajectories. The trajectory buffer is cleared after each update.

Training the adjacency network.

The adjacency network is trained by minimizing the objective defined by Equation (11). We use states evenly-sampled from the adjacency matrix (i.e., from the set of all explored states) to approximate the expectation, and train the adjacency network each time after the adjacency matrix is updated with new trajectories. Note that by explicitly aggregating the adjacency information using an adjacency matrix, we are able to achieve the uniform sampling of all explored states and thus achieve a nearly unbiased estimation of the expectation, which cannot be realized when we directly sample state-pairs from the trajectories (see the following comparison with the work of Savinov et al. [34, 35] for details).

Embedding all subgoals with a single adjacency network is enough to express adjacency when the environment is reversible. However, when this condition is not satisfied, it is insufficient to express directional adjacency using one adjacency network, as the parameterized approximation defined by Equation (10) is symmetric for and . In this case, one can use two separate sub-networks to embed and in Equation (10) respectively using the structure proposed in UVFA [36].

Comparison with the work of Savinov et al.

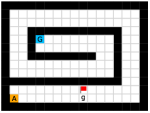

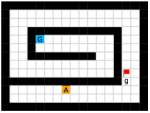

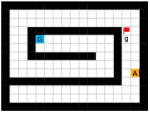

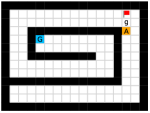

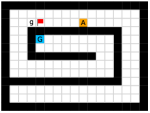

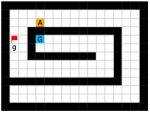

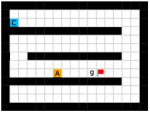

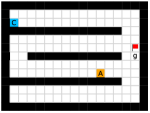

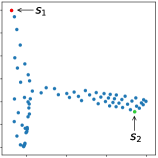

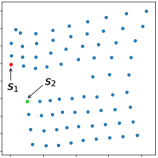

Savinov et al. [34, 35] also propose a supervised learning approach for learning the adjacency between states. The main differences between our method and theirs are: 1) We use trajectories sampled by multiple policies to construct training samples, while they only use trajectories sampled by one specific policy; 2) We use an adjacency matrix to explicitly aggregate the adjacency information and sample training pairs based on the adjacency matrix, while they directly sample training pairs from trajectories. These differences lead to two advantages of our method: 1) By using multiple policies, we achieve a more accurate adjacency approximation, as shown by Equation (9); 2) By maintaining an adjacency matrix, we can uniformly sample from the set of all explored states and realize a nearly unbiased estimation of the expectation in Equation (11), while the estimation by sampling state-pairs from trajectories is biased. As an example, consider a simple grid world in Figure 11, where states are represented by their positions. In this environment, states and are non-adjacent since they are separated by a wall. However, it is hard for the method by Savinov et al. to handle this situation as these two states rarely emerge in the same trajectory due to the large distance, and thus the loss induced by this state-pair is very likely to be dominated by the loss of other nearer state-pairs. Meanwhile, our method treat the loss of all state-pairs equally, and can therefore alleviate this phenomenon. Empirically, we employed a random agent (since the random policy is stochastic, it can be viewed as multiple deterministic policies, and is enough for adjacency learning in this simple environment) to interact with the environment for steps, and trained the adjacency network with collected samples using both methods. We visualize the LLE of state embeddings and two adjacency distance heatmaps by both methods respectively in Figure 11 and 11. Visualizations validate our analysis, showing that our method does learn a better adjacency measure in this scenario.

B.2 Algorithm Pseudocode

We provide Algorithm 1 to show the training procedure of HRAC. Some training details are omitted for brevity, e.g., the concrete training flow of the low-level policy.

B.3 Environment Details

Maze.

This environment has a size of , with a discrete 2-dimensional state space representing the position of the agent and a discrete 4-dimensional action space corresponding to actions moving towards four directions. The agent is provided with a dense reward to facilitate exploration, i.e., each step if the agent moves closer to the goal, and each step if the agent moves farther. Each episode has a maximum length of 200. Environmental stochasticity is introduced by replacing the action of the agent by a random action each step with a probability of 0.25.

Key-Chest.

This environment has a size of , with a discrete 3-dimensional state space in which the first two dimensions represent the position of the agent respectively, and the third dimension represents whether the agent has picked up the key (1 if the agent has the key and 0 otherwise). The agent has the same action space as the Maze task. The agent is provided with sparse reward of and , respectively for picking up the key and opening the chest. Each episode ends if the agent opens the chest or runs out of the step limit of 500. The random action probability of the environment is also 0.25.

Ant Gather.

This environment has a size of , with a continuous state space including the current position and velocity, the current time step , and the depth readings defined by the stardard Gather environment [6]. We use the ant robot pre-defined by Rllab, with a 8-dimensional continuous action space. The ant robot is spawned at the center of the map and needs to gather apples while avoiding bombs. Both apples and bombs are randomly placed in the environment at the beginning of each episode. The agent receives a positive reward of for each apple and a negative reward of for each bomb. Each episode terminates at 500 time steps.

Ant Maze.

This environment has a size of , with a continuous state space including the current position and velocity, the target location, and the current time step . In the training stage, the environment randomly samples a target position at the beginning of each episode, and the agent receives a dense reward at each time step according to its negative Euclidean distance from the target position. At evaluation stage, the target position is fixed to , and the success is defined as being within an Euclidean distance of 5 from the target. Each episode ends at 500 time steps. In practice, we scale the environmental reward by 0.1 equally for all methods.

Ant Maze Sparse.

This environment has a size of , with the same state and action spaces as the Ant Maze task. The target position (goal) is set at the position in the center corridor. The agent is rewarded by only if it reachs the goal, which is defined as having a Euclidean distance that is smaller than 1 from the goal. At the beginning of each episode, the agent is randomly placed in the maze except at the goal position. Each episode is terminated if the agent reaches the goal or after 500 steps.

B.4 HRAC and Baseline Details

We use PyTorch to implement our method HRAC and all the baselines.000We use the open source PyTorch implementation of HIRO at https://github.com/bhairavmehta95/data-efficient-hrl.

HRAC.

For discrete control tasks, we adopt a binary intrinsic reward setting: we set the intrinsic reward to 1 when and , where is the position of the agent and is the position of the desired subgoal. For continuous control tasks, we adopt a dense intrinsic reward setting based on the negative Euclidean distances between states and subgoals.

HIRO.

Following Nachum et al. [26], we restrict the output of high-level to , representing the desired shift of the agent’s position. By limiting the range of directional subgoals generated by the high-level, HIRO can roughly control the Euclidean distance between the absolute subgoal and the current state in the raw goal space rather than the learned adjacency space.

HRL-HER.

As HER cannot be applied to the on-policy training scheme in a straightforward manner, in discrete control tasks where the low level policy is trained using A2C, we modify its implementation so that it can be incorporated into the on-policy setting. For this on-policy variant, during the training phase, we maintain an additional episodic state memory. This memory stores states that the agent has visited from the beginning of each episode. When the high-level generates a new subgoal, the agent randomly samples a subgoal mapped from a stored state with a fixed probability 0.2 to substitute the generated subgoal for the low-level to reach. This implementation resembles the “episode” strategy introduced in the original HER. We still use the original HER in continuous control tasks.

NoAdj.

We follow the training pipeline proposed by Savinov et al. [34, 35], where no adjacency matrix is maintained. Training pairs are constructed by randomly sampling state-pairs from the stored trajectories. The samples with are labeled as positive with , and the samples with are negative ones with . The hyper-parameter is used to create a gap between the two types of samples, where in practice we use .

NegReward.

In this variant, every time the high-level generates a subgoal, we use the adjacency network to judge whether it is -step adjacent. If the subgoal is non-adjacent, the high-level will be penalized with a negative reward .

B.5 Network Architecture

For the hierarchical policy network, we employ the same architecture as HIRO [26] in continuous control tasks, where both the high-level and the low-level use TD3 [13] algorithm for training. In discrete control tasks, we use two networks consisting of 3 fully-connected layers with ReLU nonlinearities as the low-level actor and critic networks of A2C (our preliminary results show that the performances using on-policy and off-policy methods for the low-level training are similar in the discrete control tasks we consider), and use the same high-level TD3 network architecture as the continuous control task. The size of the hidden layers of both low-level actor and critic is . The output of high-level actor is activated using the tanh function and scaled to fit the size of the environments.

For the adjacency network, we use a network consisting of 4 fully-connected layers with ReLU nonlinearities in all tasks. Each hidden layer of the adjacency network has the size of . The dimension of the output embedding is 32.

We use Adam optimizer for all networks.

B.6 Hyper-parameters

We list all hyper-parameters we use in the discrete and continuous control tasks respectively in Table 1 and Table 2, and list the hyper-parameters used for adjacency network training in Table 3. “Ranges” in the tables show the ranges of hyper-parameters considered, and the hyper-parameters without ranges are not tuned.

Appendix C Additional Visualizations

We provide additional subgoal and adjacency heatmap visualizations of the Maze and Key-Chest tasks respectively in Figure 12 and Figure 13.

| Hyper-parameters | Values | Ranges |

|---|---|---|

| High-level TD3 | ||

| Actor learning rate | 0.0001 | |

| Critic learning rate | 0.001 | |

| Replay buffer size | 10000 / 20000 for Maze / K-C | {10000, 20000} |

| Batch size | 64 | |

| Soft update rate | 0.001 | |

| Policy update frequency | 2 | {1, 2} |

| 0.99 | ||

| High-level action frequency | 10 | |

| Reward scaling | 1.0 | |

| Exploration strategy | Gaussian ( for Maze / K-C) | {3.0, 5.0} |

| Adjacency loss coefficient | 20 | {1, 5, 10, 20} |

| Low-level A2C | ||

| Actor learning rate | 0.0001 | |

| Critic learning rate | 0.0001 | |

| Entropy weight | 0.01 | |

| 0.99 | ||

| Reward scaling | 1.0 |

| Hyper-parameters | Values | Ranges |

|---|---|---|

| High-level TD3 | ||

| Actor learning rate | 0.0001 | |

| Critic learning rate | 0.001 | |

| Replay buffer size | 200000 | |

| Batch size | 128 | |

| Soft update rate | 0.005 | |

| Policy update frequency | 1 | |

| 0.99 | ||

| High-level action frequency | 10 | |

| Reward scaling | 0.1 / 1.0 for Ant Maze / others | {0.1, 1.0} |

| Exploration strategy | Gaussian () | {1.0, 2.0} |

| Adjacency loss coefficient | 20 | {1, 5, 10, 20} |

| Low-level TD3 | ||

| Actor learning rate | 0.0001 | |

| Critic learning rate | 0.001 | |

| Replay buffer size | 200000 | |

| Batch size | 128 | |

| Soft update rate | 0.005 | |

| Policy update frequency | 1 | |

| 0.95 | ||

| Reward scaling | 1.0 | |

| Exploration strategy | Gaussian () |

| Hyper-parameters | Values | Ranges |

|---|---|---|

| Adjacency Network | ||

| Learning rate | 0.0002 | |

| Batch size | 64 | |

| 1.0 | ||

| 0.2 | ||

| Steps for pre-training | 50000 | |

| Pre-training epochs | 50 | |

| Online training frequency (steps) | 50000 | |

| Online training epochs | 25 |