Generalized Neural Collapse for a Large Number of Classes

Abstract

Neural collapse provides an elegant mathematical characterization of learned last layer representations (a.k.a. features) and classifier weights in deep classification models. Such results not only provide insights but also motivate new techniques for improving practical deep models. However, most of the existing empirical and theoretical studies in neural collapse focus on the case that the number of classes is small relative to the dimension of the feature space. This paper extends neural collapse to cases where the number of classes are much larger than the dimension of feature space, which broadly occur for language models, retrieval systems, and face recognition applications. We show that the features and classifier exhibit a generalized neural collapse phenomenon, where the minimum one-vs-rest margins is maximized. We provide empirical study to verify the occurrence of generalized neural collapse in practical deep neural networks. Moreover, we provide theoretical study to show that the generalized neural collapse provably occurs under unconstrained feature model with spherical constraint, under certain technical conditions on feature dimension and number of classes.

1 Introduction

Over the past decade, deep learning algorithms have achieved remarkable progress across numerous machine learning tasks and have significantly enhanced the state-of-the-art in many practical applications ranging from computer vision to natural language processing and retrieval systems. Despite their tremendous success, a comprehensive understanding of the features learned from deep neural networks (DNNs) is still lacking. The recent work Papyan et al. (2020); Papyan (2020) has empirically uncovered an intriguing phenomenon regarding the last-layer features and classifier of DNNs, called Neural Collapse () that can be briefly summarized as the following characteristics:

-

•

Variability Collapse (): Within-class variability of features collapses to zero.

-

•

Convergence to Simplex ETF (): Class-mean features converge to a simplex Equiangular Tight Frame (ETF), achieving equal lengths, equal pair-wise angles, and maximal distance in the feature space.

-

•

Self-Duality (): Linear classifiers converge to class-mean features, up to a global rescaling.

Neural collapse provides a mathematically elegant characterization of learned representations or features in deep learning based classification models, independent of network architectures, dataset properties, and optimization algorithms. Building on the so-called unconstrained feature model (Mixon et al., 2020) or the layer-peeled model (Fang et al., 2021), subsequent research (Zhu et al., 2021; Lu & Steinerberger, 2020; Ji et al., 2021; Yaras et al., ; Wojtowytsch et al., 2020; Ji et al., ; Zhou et al., ; Han et al., ; Tirer & Bruna, 2022; Zhou et al., 2022a; Poggio & Liao, 2020; Thrampoulidis et al., 2022; Tirer et al., 2023; Nguyen et al., 2022) has provided theoretical evidence for the existence of the phenomenon when using a family of loss functions including cross-entropy (CE) loss, mean-square-error (MSE) loss and variants of CE loss. Theoretical results regarding not only contribute to a new understanding of the working of DNNs but also provide inspiration for developing new techniques to enhance their practical performance in various settings, such as imbalanced learning (Xie et al., 2023; Liu et al., 2023b), transfer learning (Galanti et al., 2022a; Li et al., 2022; Xie et al., 2022; Galanti et al., 2022b), continual learning (Yu et al., 2022; Yang et al., 2023), loss and architecture designs (Chan et al., 2022; Yu et al., 2020; Zhu et al., 2021), etc.

However, most of the existing empirical and theoretical studies in focus on the case that the number of classes is small relative to the dimension of the feature space. Nevertheless, there are many cases in practice where the number of classes can be extremely large, such as

-

•

Person identification (Deng et al., 2019), where each identity is regarded as one class.

-

•

Language models (Devlin et al., 2018), where the number of classes equals the vocabulary size111Language models are usually trained to classify a token (or a collection of them) that is either masked in the input (as in BERT (Devlin et al., 2018)), or the next one following the context (as in language modeling), or a span of masked tokens in the input (as in T5 (Raffel et al., 2020)), etc. In such cases, the number of classes is equal to the number of all possible tokens, i.e., the vocabulary size..

-

•

Retrieval systems (Mitra et al., 2018), where each document in the dataset represents one class.

-

•

Contrastive learning (Chen et al., 2020a), where each training data can be regarded as one class.

In such cases, it is usually infeasible to have a feature dimension commensurate with the number of classes due to computational and memory constraints. Therefore, it is crucial to develop a comprehensive understanding of the characteristics of learned features in such cases, particularly with the increasing use of web-scale datasets that have a vast number of classes.

Contributions.

This paper studies the geometric properties of the learned last-layer features and the classifiers for cases where the number of classes can be arbitrarily large compared to the feature dimension. Motivated by the use of spherical constraints in learning with a large number of classes, such as person identification and contrastive learning, we consider networks trained with spherical constraints on the features and classifiers. Our contributions can be summarized as follows.

-

•

The Arrangement Problem: Generalizing to a Large Number of Classes. In Section 2 we introduce the generalized () for characterizing the last-layer features and classifier. In particular, 1 and 3 state the same as 1 and 3, respectively. 2 states that the classifier weight is a Softmax Code, which generalizes the notion of a simplex ETF and is defined as the collection of points on the unit hyper-sphere that maximizes the minimum one-vs-all distance (see Figure 1 (a,b) for an illustration). Empirically, we verify that the approximately holds in practical DNNs trained with a small temperature in CE loss. Furthermore, we conduct theoretical study in Section 3 to show that under the unconstrained features model (UFM) (Mixon et al., 2020; Fang et al., 2021; Zhu et al., 2021) and with a vanishing temperature, the global solutions satisfy under technical conditions on Softmax Code and solutions to the Tammes problem (Tammes, 1930), the latter defined as a collection of points on the unit hyper-sphere that maximizes the minimum one-vs-one distance (see Figure 1(c) for an illustration).

-

•

The Assignment Problem: Implicit Regularization of Class Semantic Similarity. Unlike the simplex ETF (as in 2) in which the distance between any pair of vectors is the same, not all pairs in a Softmax Code (as in 2) are of equal distant when the number of classes is greater than the feature space dimension. This leads to the “assignment” problem, i.e., the correspondence between the classes and the weights in a Softmax Code. In Section 4, we show empirically an implicit regularization effect by the semantic similarity of the classes, i.e., conceptually similar classes (e.g., Cat and Dog) are often assigned to closer classifier weights in Softmax Code, compared to those that are conceptually dissimilar (e.g., Cat and Truck). Moreover, such an implicit regularization is beneficial, i.e., enforcing other assignments produces inferior model quality.

-

•

Cost Reduction for Practical Network Training/Fine-tuning. The universality of alignment between classifier weights and class means (i.e., 3) implies that training the classifier is unnecessary and the weight can be simply replaced by the class-mean features. Our experiments in Section 5 demonstrate that such a strategy achieves comparable performance to classical training methods, and even better out-of-distribution performance than classical fine-tuning methods with significantly reduced parameters.

Related work.

The recent work Liu et al. (2023a) also introduces a notion of generalized for the case of large number of classes, which predicts equal-spaced features. However, their work focuses on networks trained with weight decay, for which empirical results in Appendix B and Yaras et al. (2023) show to not produce equal-length and equal-spaced features for a relatively large number of classes. Moreover, the work Liu et al. (2023a) relies on a specific choice of kernel function to describe the uniformity. Instead, we concretely define 2 through the softmax code. When preparing this submission, we notice a concurrent work Gao et al. (2023) that provides analysis for generalized , but again for networks trained with weight decay. In addition, that work analyzes gradient flow for the corresponding UFM with a particular choice of weight decay, while our work studies the global optimality of the training problem. The work Zhou et al. (2022a) empirically shows that MSE loss is inferior to the CE loss when , but no formal analysis is provided for CE loss. Finally, the global optimality of the UFM with spherical constraints has been studied in Lu & Steinerberger (2022); Yaras et al. (2023) but only for the cases or .

2 Generalized Neural Collapse for A Large Number of Classes

In this section, we begin by providing a brief overview of DNNs and introducing notations used in this study in Section 2.1. We will also introduce the concept of the UFM which is used in theoretical study of the subsequent section. Next, we introduce the notion of Softmax Code for describing the distribution of a collection of points on the unit sphere, which prepares us to present a formal definition of Generalized Neural Collapse and empirical verification of its validity in Section 2.2.

2.1 Basics Concepts of DNNs

A DNN classifier aims to learn a feature mapping with learnable parameters that maps from input to a deep representation called the feature , and a linear classifier such that the output (also known as the logits) can make a correct prediction. Here, represents all the learnable parameters of the DNN.222We ignore the bias term in the linear classifier since the bias term is used to compensate the global mean of the features and vanishes when the global mean is zero (Papyan et al., 2020; Zhu et al., 2021), it is the default setting across a wide range of applications such as person identification (Wang et al., 2018; Deng et al., 2019), contrastive learning (Chen et al., 2020a; Chen & He, 2021), etc.

Given a balanced training set , where is the -th sample in the -th class and is the corresponding one-hot label with all zero entries except for unity in the -th entry, the network parameters are typically optimized by minimizing the following CE loss

| (1) |

In above, we assume that a spherical constraint is imposed on the feature and classifier weights and that the logit is divided by the temperature parameter . This is a common practice when dealing with a large number of classes (Wang et al., 2018; Chang et al., 2019; Chen et al., 2020a). Specifically, we enforce for all and . An alternative regularization is weight decay on the model parameters , the effect of which we study in Appendix B.

To simplify the notation, we denote the oblique manifold embedded in Euclidean space by . In addition, we denote the last-layer features by . We rewrite all the features in a matrix form as

Also we denote by the class-mean feature for each class.

Unconstrained Features Model (UFM).

The UFM (Mixon et al., 2020) or layer-peeled model (Fang et al., 2021), wherein the last-layer features are treated as free optimization variables, are widely used for theoretically understanding the phenomena. In this paper, we will consider the following UFM with a spherical constraint on classifier weights and unconstrained features :

| (2) |

2.2 Generalized Neural Collapse

We start by introducing the notion of softmax code which will be used for describing .

Definition 2.1 (Softmax Code).

Given positive integers and , a softmax code is an arrangement of points on a unit sphere of that maximizes the minimal distance between one point and the convex hull of the others:

| (3) |

In above, the distance between a point and a set is defined as , where denotes the convex hull of a set.

We now extend to the Generalized Neural Collapse () that captures the properties of the features and classifiers at the terminal phase of training. With a vanishing temperature (i.e., ), the last-layer features and classifier exhibit the following phenomenon:

-

•

Variability Collapse (). All features of the same class collapse to the corresponding class mean. Formally, as used in Papyan et al. (2020), the quantity , where and denote the between-class and within-class covariance matrices, respectively.

-

•

Softmax Codes (). Classifier weights converge to the softmax code in definition 2.1. This property may be measured by .

-

•

Self-Duality (). Linear classifiers converge to the class-mean features. Formally, this alignment can be measured by .

The main difference between and lies in 2 / 2, which describe the configuration of the classifier weight . In 2, the classifier weights corresponding to different classes are described as a simplex ETF, which is a configuration of vectors that have equal pair-wise distance and that distance is maximized. Such a configuration does not exist in general when the number of classes is large, i.e., . 2 introduces a new configuration described by the notion of softmax code. By Definition 2.1, a softmax code is a configuration where each vector is maximally separated from all the other points, measured by its distance to their convex hull. Such a definition is motivated from theoretical analysis (see Section 3). In particular, it reduces to simplex ETF when (see Theorem 3.3).

Interpretation of Softmax Code.

Softmax Code abides a max-distance interpretation. Specifically, consider the features from classes. In multi-class classification, one commonly used distance (or margin) measurement is the one-vs-rest (also called one-vs-all or one-vs-other) distance (Murphy, 2022), i.e., the distance of class vis-a-vis other classes. Noting that the distance between two classes is equivalent to the distance between the convex hulls of the data from each class (Murphy, 2022), the distance of class vis-a-vis other classes is given by . From 1 and 3 we can rewrite the distance as

| (4) |

By noticing that the rightmost term is minimized in a Softmax Code, it follows from 2 that the learned features have the property that their one-vs-rest distance minimized over all classes is maximized. In other words, measured by one-vs-rest distance, the learned features are such that they are maximally separated. Finally, we mention that the separation of classes may be characterized by other measures of distance as well, such as the one-vs-one distance (also known as the sample margin in Cao et al. (2019); Zhou et al. (2022b)) which leads to the well-known Tammes problem. We will discuss this in Section 3.2.

Experimental Verification of .

We verify the occurence of by training a ResNet18 (He et al., 2016) for image classification on the CIFAR100 dataset (Krizhevsky, 2009), and report the results in Figure 2. To simulate the case of , we use a modified ResNet18 where the feature dimension is . From Figure 2, we can observe that both 1 and 3 converge to , and 2 converges towards the spherical code with relatively small temperature . Additionally, selecting a small is not only necessary for achieving , but also for attaining high testing performance. Due to limited space, we present experimental details and other experiments with different architectures and datasets in Appendix B. In the next section, we provide a theoretical justification for under UFM in (2).

3 Theoretical Analysis of GNC

In this section, we provide a theoretical analysis of under the UFM in (2). We first show in Section 3.1 that under appropriate temperature parameters, the solution to (2) can be approximated by the solution to a “HardMax” problem, which is of a simpler form amenable for subsequent analysis. We then provide a theoretical analysis of in Section 3.2, by first proving the optimal classifier forms a Softmax Code (2), and then establishing 1 and 3 under technical conditions on Softmax Code and solutions to the Tammes problem. In addition, we provide insights for the design of feature dimension given a number of classes by analyzing the upper and lower bound for the one-vs-rest distance of a Softmax Code. All proofs can be found in Appendix C.

3.1 Preparation: the Asymptotic CE Loss

Due to the nature of the softmax function which blends the output vector, analyzing the CE loss can be difficult even for the unconstrained features model. The previous work Yaras et al. (2023) analyzing the case relies on the simple structure of the global solutions, where the classifiers form a simplex ETF. However, this approach cannot be directly applied to the case due to the absence of an informative characterization of the global solution. Motivated by the fact that the temperature is often selected as a small value (, e.g., in Wang et al. (2018)) in practical applications (Wang et al., 2018; Chen & He, 2021), we consider the case of where the CE loss (2) converges to the following “HardMax” problem:

| (5) |

where denotes the inner-product operator. More precisely, we have the following result.

Lemma 3.1 (Convergence to the HardMax problem).

For any positive integers and , we have

| (6) |

Our goal is not to replace CE with the HardMax function in practice. Instead, we will analyze the HardMax problem in (5) to gain insight into the global solutions and the phenomenon.

3.2 Main Result: Theoretical Analysis of

2 and Softmax Code.

Our main result for 2 is the following.

Theorem 3.2 (2).

Let be an optimal solution to (5). Then, it holds that is a Softmax Code, i.e.,

| (7) |

2 is described by the Softmax Code, which is defined from an optimization problem (see Definition 2.1). This optimization problem may not have a closed form solution in general. Nonetheless, the one-vs-rest distance that is used to define Softmax Code has a clear geometric meaning, making an intuitive interpretation of Softmax Code tractable. Specifically, maximizing the one-vs-rest distance results in the classifier weight vectors to be maximally distant. As shown in Figures 1(a) and 1(b) for a simple setting of four classes in a 2D plane, the weight vectors that are uniformly distributed (and hence maximally distant) have a larger margin than the non-uniform case.

For certain choices of the Softmax Code bears a simple form.

Theorem 3.3.

For any positive integers and , let be a Softmax Code. Then,

-

•

: is uniformly distributed on the unit circle, i.e., for some ;

-

•

: forms a simplex ETF, i.e., for some orthonomal ;

-

•

: which can be achieved when are a subset of vertices of a cross-polytope;

For the cases of , the optimal from Theorem 3.3 is the same as that of Lu & Steinerberger (2022). However, Theorem 3.3 is an analysis of the HardMax loss while Lu & Steinerberger (2022) analyzed the CE loss.

1 and Within-class Variability Collapse.

To establish the within-class variability collapse property, we require a technical condition associated with the Softmax Code. Recall that Softmax Codes are those that maximize the minimum one-vs-rest distance over all classes. We introduce rattlers, which are classes that do not attain such a minimum.

Definition 3.4 (Rattler of Softmax Code).

Given positive integers and , a rattler associated with a Softmax Code is an index for which

| (8) |

In other words, rattlers are points in a Softmax Code with no neighbors at the minimum one-to-rest distance. This notion is borrowed from the literature of the Tammes Problem (Cohn, 2022; Wang, 2009), which we will soon discuss in more detail333The occurrence of rattlers is rare: Among the pairs of for which the solution to Tammes problem is known, only have rattlers (Cohn, 2022). This has excluded the cases of or where there is no rattler. The occurrence of ratter in Softmax Code may be rare as well..

We are now ready to present the main results for 1.

Theorem 3.5 (1).

Let be an optimal solution to (5). For all that is not a rattler of , it holds that

| (9) |

where denotes the projection of on .

The following result shows that the requirement in the Theorem 3.5 that is not a rattler is satisfied in many cases.

Theorem 3.6.

If , or , Softmax Code has no rattler for all classes.

3 and Self-Duality.

To motivate our technical conditions for establishing self-duality, assume that any optimal solution to (5) satisfies self-duality as well as 1. This implies that

| (10) |

After simplification we may rewrite the optimization problem on the right hand side equivalently as:

| (11) |

Eq. (11) is the well-known Tammes problem. Geometrically, the problem asks for a distribution of points on the unit sphere of so that the minimum distance between any pair of points is maximized. The Tammes problem is unsolved in general, except for certain pairs of .

Both the Tammes problem and the Softmax Code are problems of arranging points to be maximally separated on the unit sphere, with their difference being the specific measures of separation. Comparing (11) and (3), the Tammes problem maximizes for all the one-vs-one distance, i.e., , whereas the Softmax Code maximizes the minimum one-vs-rest distance, i.e., . Both one-vs-one distance and one-vs-rest distances characterize the separation of the weight vector from . As illustrated in Figure 1, taking , the former is the distance between and its closest point in the set , in this case (see Figure 1(c)), whereas the later captures the minimal distance from to the convex hull of the rest vectors (see Figure 1(b)).

Since the Tammes problem can be derived from the self-duality constraint on the HardMax problem, it may not be surprising that the Tammes problem can be used to describe a condition for establishing self-duality. Specifically, we have the following result.

Theorem 3.7 (3).

For any such that both Tammes problem and Softmax Code have no rattler, the following two statements are equivalent:

-

•

Any optimal solution to (5) satisfies ;

-

•

The Tammes problem and the Softmax codes are equivalent, i.e.,

(12)

In words, Theorem 3.7 states that 3 holds if and only if the Tammes problem in (11) and the Softmax codes are equivalent. As both the Tammes problem and Softmax Code maximize separation between one vector and the others, though their notions of separation are different, we conjecture that they are equivalent and share the same optimal solutions. We prove this conjecture for some special cases and leave the study for the general case as future work.

Theorem 3.8.

If , or , the Tammes problem and the Softmax codes are equivalent.

3.3 Insights for Choosing Feature Dimension Given Class Number

Given a class number , how does the choice of feature dimension affect the model performance? Intuitively, smaller reduces the separability between classes in a Softmax Code. We define this rigorously by providing bounds for the one-vs-rest distance of a Softmax Code based on and .

Theorem 3.9.

Assuming and letting denote the Gamma function, we have

| (13) |

The bounds characterize the separability for classes in -dimensional space. Given the number of classes and desired margin , the minimal feature dimension is roughly an order of , showing classes separate easily in higher dimensions. This also provides a justification for applications like face classification and self-supervised learning, where the number of classes (e.g., millions of classes) could be significantly larger than the dimensionality of the features (e.g., ).

By conducting experiments on ResNet-50 with varying feature dimensions for ImageNet classification, we further corroborate the relationship between feature dimension and network performance in Figure 3. First, we observe that the curve of the optimal distance is closely aligned with the curve of testing performance, indicating a strong correlation between distance and testing accuracy. Moreover, both the distance and performance curves exhibit a slow (exponential) decrease as the feature dimension decreases, which is consistent with the bounds in Theorem 3.9.

4 The Assignment Problem: An Empirical Study

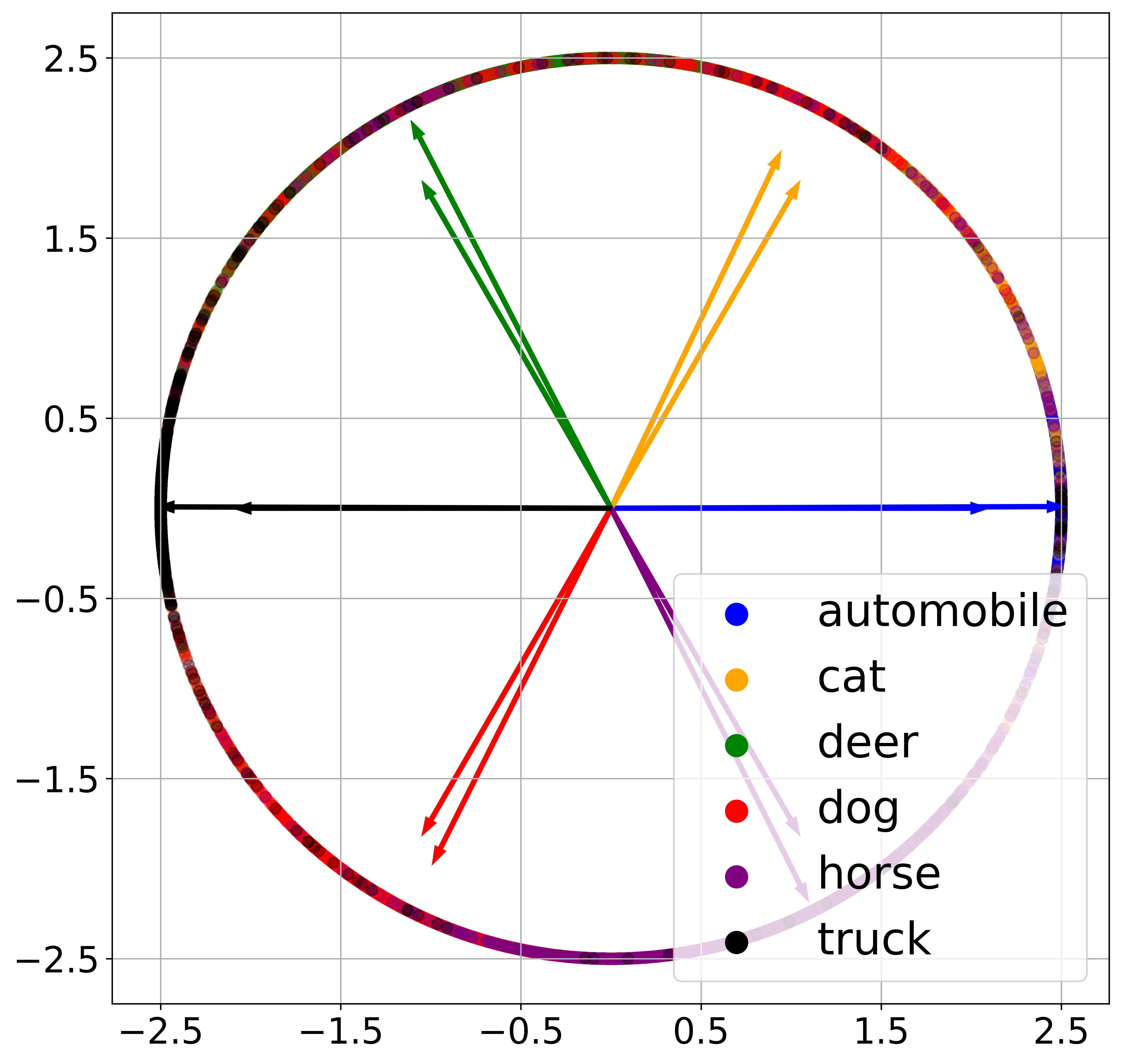

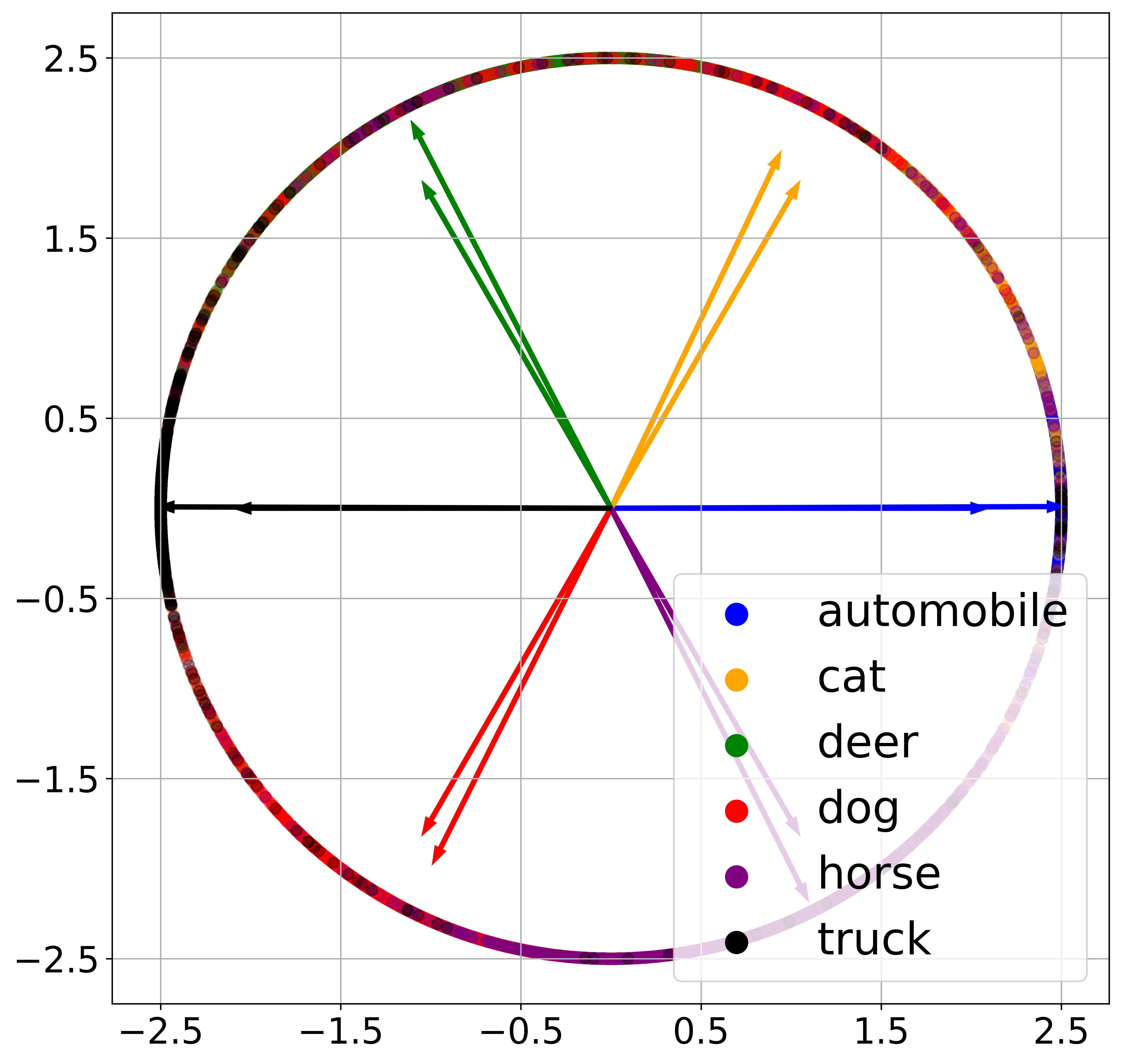

Unlike the case where the optimal classifier (simplex ETF) has equal angles between any pair of the classifier weights, when , not all pairs of classifier weights are equally distant with the optimal (Softmax Code) predicted in Theorem 3.2. Consequently, this leads to a “class assignment” problem. To illustrate this, we train a ResNet18 network with on four classes {Automobile, Cat, Dog, Truck} from CIFAR10 dataset that are selected due to their clear semantic similarity and discrepancy. In this case, according to Theorem 3.3, the optimal classifiers are given by , up to a rotation. Consequently, there are three distinct class assignments, as illustrated in Figures 4(b), 4(c) and 4(d).

When doing standard training, the classifier consistently converges to the case where Cat and Dog are closer together across 5 different trials; Figure 4(a) shows the learned features (dots) and classifier weights (arrows) in one of such trials. This demonstrates the implicit algorithmic regularization in training DNNs, which naturally attracts (semantically) similar classes and separates dissimilar ones.

We also conduct experiments with the classifier fixed to be one of the three arrangements, and present the results in Figures 4(b), 4(c) and 4(d). Among them, we observe that the case where Cat and Dog are far apart achieves a testing accuracy of , which is lower than the other two cases with testing accuracies of and . This demonstrates the important role of class assignment to the generalization of DNNs, and that the implicit bias of the learned classifier is benign, i.e., leads to a more generalizable solutions.

5 Implications for Practical Network Training/Fine-tuning

Since the classifier always converges to a simplex ETF when , prior work proposes to fix the classifier as a simplex ETF for reducing training cost (Zhu et al., 2021) and handling imbalance dataset (Yang et al., 2022). When , the optimal classifier is also known to be a Softmax Code according to 2. However, the same method as in prior work may become sub-optimal due to the class assignment problem (see Section 4). To address this, we introduce the method of class-mean features (CMF) classifiers, where the classifier weights are set to be the exponential moving average of the mini-batch class-mean features during the training process. This approach is motivated from 3 which states that the optimal classifier converges to the class-mean features. We explain the detail of CMF in Appendix B. As in prior work, CMF can reduce trainable parameters as well. For instance, it can reduce % of total parameters in a ResNet18 for BUPT-CBFace-50 dataset (Zhang & Deng, 2020). Here, we compare CMF with the standard training where the classifier is learned together with the feature mapping, in both training from scratch and fine-tuning.

Training from Scratch.

We train a ResNet18 on CIFAR100 by using a learnable classifier or the CMF classifier. The learning curves in Figure 5 indicate that the approach with CMF classifier achieves comparable performance to the classical training protocols.

Fine-tuning.

To verify the effectiveness of the CMF classifiers on fine-tuning, we follow the setting in Kumar et al. (2022) to measure the performance of the fine-tuned model on both in-distribution (ID) task (i.e., CIFAR10 Krizhevsky (2009)) and OOD task (STL10 Coates et al. (2011)). We compare the standard approach that fine-tunes both the classifier (randomly initialized) and the pre-trained feature mapping with our approach (using the CMF classifier). Our experiments show that the approach with CMF classifier achieves slightly better ID accuracy ( VS ) and a better OOD performance ( VS ). The improvement of OOD performance stems from the ability to align the classifier with the class-means through the entire process, which better preserves the OOD property of the pre-trained model. Our approach also simplifies the two-stage approach of linearly probing and subsequent full fine-tuning in Kumar et al. (2022).

6 Conclusion

In this work, we have introduced generalized neural collapse () for characterizing learned last-layer features and classifiers in DNNs under an arbitrary number of classes and feature dimensions. We empirically validate the phenomenon on practical DNNs that are trained with a small temperature in the CE loss and subject to spherical constraints on the features and classifiers. Building upon the unconstrained features model we have proven that holds under certain technical conditions. could offer valuable insights for the design, training, and generalization of DNNs. For example, the minimal one-vs-rest distance provides implications for designing feature dimensions when dealing with a large number of classes. Additionally, we have leveraged to enhance training efficiency and fine-tuning performance by fixing the classifier as class-mean features. Further exploration of in other scenarios, such as imbalanced learning, is left for future work. It is also of interest to further study the problem of optimally assigning classifiers from Softmax Code for each class, which could shed light on developing techniques for better classification performance.

Acknowledgment

JJ, JZ, and ZZ acknowledge support from NSF grants CCF-2240708 and IIS-2312840. PW and QQ acknowledge support from NSF CAREER CCF-2143904, NSF CCF-2212066, NSF CCF-2212326, NSF IIS 2312842, ONR N00014-22-1-2529, an AWS AI Award, a gift grant from KLA, and MICDE Catalyst Grant. We thank CloudBank (supported by NSF under Award #1925001) for providing the computational resources.

References

- Boyd & Vandenberghe (2004) Stephen P Boyd and Lieven Vandenberghe. Convex optimization. Cambridge university press, 2004.

- Braides (2006) Andrea Braides. A handbook of -convergence. In Handbook of Differential Equations: stationary partial differential equations, volume 3, pp. 101–213. Elsevier, 2006.

- Cao et al. (2019) Kaidi Cao, Colin Wei, Adrien Gaidon, Nikos Arechiga, and Tengyu Ma. Learning imbalanced datasets with label-distribution-aware margin loss. Advances in neural information processing systems, 32, 2019.

- Carathéodory (1911) Constantin Carathéodory. Über den variabilitätsbereich der fourier’schen konstanten von positiven harmonischen funktionen. Rendiconti Del Circolo Matematico di Palermo (1884-1940), 32(1):193–217, 1911.

- Chan et al. (2022) Kwan Ho Ryan Chan, Yaodong Yu, Chong You, Haozhi Qi, John Wright, and Yi Ma. Redunet: A white-box deep network from the principle of maximizing rate reduction. The Journal of Machine Learning Research, 23(1):4907–5009, 2022.

- Chang et al. (2019) Wei-Cheng Chang, X Yu Felix, Yin-Wen Chang, Yiming Yang, and Sanjiv Kumar. Pre-training tasks for embedding-based large-scale retrieval. In International Conference on Learning Representations, 2019.

- Chen et al. (2020a) Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey Hinton. A simple framework for contrastive learning of visual representations. In International conference on machine learning, pp. 1597–1607. PMLR, 2020a.

- Chen & He (2021) Xinlei Chen and Kaiming He. Exploring simple siamese representation learning. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 15750–15758, 2021.

- Chen et al. (2020b) Xinlei Chen, Haoqi Fan, Ross Girshick, and Kaiming He. Improved baselines with momentum contrastive learning, 2020b.

- Coates et al. (2011) Adam Coates, Andrew Ng, and Honglak Lee. An analysis of single-layer networks in unsupervised feature learning. In Geoffrey Gordon, David Dunson, and Miroslav Dudík (eds.), Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics, volume 15 of Proceedings of Machine Learning Research, pp. 215–223, Fort Lauderdale, FL, USA, 11–13 Apr 2011. PMLR. URL https://proceedings.mlr.press/v15/coates11a.html.

- Cohn (2022) Henry Cohn. Small spherical and projective codes. 2022.

- Deng et al. (2019) Jiankang Deng, Jia Guo, Niannan Xue, and Stefanos Zafeiriou. Arcface: Additive angular margin loss for deep face recognition. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 4690–4699, 2019.

- Devlin et al. (2018) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- Fang et al. (2021) Cong Fang, Hangfeng He, Qi Long, and Weijie J Su. Exploring deep neural networks via layer-peeled model: Minority collapse in imbalanced training. Proceedings of the National Academy of Sciences, 118(43):e2103091118, 2021.

- Fickus et al. (2017) Matthew Fickus, John Jasper, Dustin G Mixon, and Cody E Watson. A brief introduction to equi-chordal and equi-isoclinic tight fusion frames. In Wavelets and Sparsity XVII, volume 10394, pp. 186–194. SPIE, 2017.

- Galanti et al. (2022a) Tomer Galanti, András György, and Marcus Hutter. Generalization bounds for transfer learning with pretrained classifiers. arXiv preprint arXiv:2212.12532, 2022a.

- Galanti et al. (2022b) Tomer Galanti, András György, and Marcus Hutter. On the role of neural collapse in transfer learning. In International Conference on Learning Representations, 2022b.

- Gao et al. (2023) Peifeng Gao, Qianqian Xu, Peisong Wen, Huiyang Shao, Zhiyong Yang, and Qingming Huang. A study of neural collapse phenomenon: Grassmannian frame, symmetry, generalization, 2023.

- (19) XY Han, Vardan Papyan, and David L Donoho. Neural collapse under mse loss: Proximity to and dynamics on the central path. In International Conference on Learning Representations.

- He et al. (2015) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition, 2015.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778, 2016.

- (22) Wenlong Ji, Yiping Lu, Yiliang Zhang, Zhun Deng, and Weijie J Su. An unconstrained layer-peeled perspective on neural collapse. In International Conference on Learning Representations.

- Ji et al. (2021) Wenlong Ji, Yiping Lu, Yiliang Zhang, Zhun Deng, and Weijie J Su. An unconstrained layer-peeled perspective on neural collapse. arXiv preprint arXiv:2110.02796, 2021.

- Krizhevsky (2009) Alex Krizhevsky. Learning multiple layers of features from tiny images. pp. 32–33, 2009. URL https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf.

- Kumar et al. (2022) Ananya Kumar, Aditi Raghunathan, Robbie Jones, Tengyu Ma, and Percy Liang. Fine-tuning can distort pretrained features and underperform out-of-distribution, 2022.

- Li et al. (2022) Xiao Li, Sheng Liu, Jinxin Zhou, Xinyu Lu, Carlos Fernandez-Granda, Zhihui Zhu, and Qing Qu. Principled and efficient transfer learning of deep models via neural collapse. arXiv preprint arXiv:2212.12206, 2022.

- Liu et al. (2023a) Weiyang Liu, Longhui Yu, Adrian Weller, and Bernhard Schölkopf. Generalizing and decoupling neural collapse via hyperspherical uniformity gap. arXiv preprint arXiv:2303.06484, 2023a.

- Liu et al. (2023b) Xuantong Liu, Jianfeng Zhang, Tianyang Hu, He Cao, Yuan Yao, and Lujia Pan. Inducing neural collapse in deep long-tailed learning. In International Conference on Artificial Intelligence and Statistics, pp. 11534–11544. PMLR, 2023b.

- Lu & Steinerberger (2020) Jianfeng Lu and Stefan Steinerberger. Neural collapse with cross-entropy loss. arXiv preprint arXiv:2012.08465, 2020.

- Lu & Steinerberger (2022) Jianfeng Lu and Stefan Steinerberger. Neural collapse under cross-entropy loss. Applied and Computational Harmonic Analysis, 59:224–241, 2022.

- Mitra et al. (2018) Bhaskar Mitra, Nick Craswell, et al. An introduction to neural information retrieval. Foundations and Trends® in Information Retrieval, 13(1):1–126, 2018.

- Mixon et al. (2020) Dustin G. Mixon, Hans Parshall, and Jianzong Pi. Neural collapse with unconstrained features, 2020.

- Moore (1974) Michael H. Moore. Vector packing in finite dimensional vector spaces. Linear Algebra and its Applications, 8(3):213–224, 1974. ISSN 0024-3795. doi: https://doi.org/10.1016/0024-3795(74)90067-6. URL https://www.sciencedirect.com/science/article/pii/0024379574900676.

- Murphy (2022) Kevin P Murphy. Probabilistic machine learning: an introduction. MIT press, 2022.

- Nguyen et al. (2022) Duc Anh Nguyen, Ron Levie, Julian Lienen, Gitta Kutyniok, and Eyke Hüllermeier. Memorization-dilation: Modeling neural collapse under noise. arXiv preprint arXiv:2206.05530, 2022.

- Papyan (2020) Vardan Papyan. Traces of class/cross-class structure pervade deep learning spectra. Journal of Machine Learning Research, 21(252):1–64, 2020.

- Papyan et al. (2020) Vardan Papyan, XY Han, and David L Donoho. Prevalence of neural collapse during the terminal phase of deep learning training. Proceedings of the National Academy of Sciences, 117(40):24652–24663, 2020.

- Poggio & Liao (2020) Tomaso Poggio and Qianli Liao. Explicit regularization and implicit bias in deep network classifiers trained with the square loss. arXiv preprint arXiv:2101.00072, 2020.

- Raffel et al. (2020) Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J Liu. Exploring the limits of transfer learning with a unified text-to-text transformer. The Journal of Machine Learning Research, 21(1):5485–5551, 2020.

- Rangamani et al. (2023) Akshay Rangamani, Marius Lindegaard, Tomer Galanti, and Tomaso A Poggio. Feature learning in deep classifiers through intermediate neural collapse. In International Conference on Machine Learning, pp. 28729–28745. PMLR, 2023.

- Rockafellar & Wets (2009) R Tyrrell Rockafellar and Roger J-B Wets. Variational analysis, volume 317. Springer Science & Business Media, 2009.

- Tammes (1930) Pieter Merkus Lambertus Tammes. On the origin of number and arrangement of the places of exit on the surface of pollen-grains. Recueil des travaux botaniques néerlandais, 27(1):1–84, 1930.

- Thrampoulidis et al. (2022) Christos Thrampoulidis, Ganesh Ramachandra Kini, Vala Vakilian, and Tina Behnia. Imbalance trouble: Revisiting neural-collapse geometry. Advances in Neural Information Processing Systems, 35:27225–27238, 2022.

- Tirer & Bruna (2022) Tom Tirer and Joan Bruna. Extended unconstrained features model for exploring deep neural collapse. In International Conference on Machine Learning, pp. 21478–21505. PMLR, 2022.

- Tirer et al. (2023) Tom Tirer, Haoxiang Huang, and Jonathan Niles-Weed. Perturbation analysis of neural collapse. In International Conference on Machine Learning, pp. 34301–34329. PMLR, 2023.

- Wang et al. (2018) Hao Wang, Yitong Wang, Zheng Zhou, Xing Ji, Dihong Gong, Jingchao Zhou, Zhifeng Li, and Wei Liu. Cosface: Large margin cosine loss for deep face recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 5265–5274, 2018.

- Wang (2009) Jeffrey Wang. Finding and investigating exact spherical codes. Experimental Mathematics, 18(2):249–256, 2009.

- Wojtowytsch et al. (2020) Stephan Wojtowytsch et al. On the emergence of simplex symmetry in the final and penultimate layers of neural network classifiers. arXiv preprint arXiv:2012.05420, 2020.

- Xie et al. (2023) Liang Xie, Yibo Yang, Deng Cai, and Xiaofei He. Neural collapse inspired attraction-repulsion-balanced loss for imbalanced learning. Neurocomputing, 2023.

- Xie et al. (2022) Shuo Xie, Jiahao Qiu, Ankita Pasad, Li Du, Qing Qu, and Hongyuan Mei. Hidden state variability of pretrained language models can guide computation reduction for transfer learning. arXiv preprint arXiv:2210.10041, 2022.

- Yang et al. (2022) Yibo Yang, Liang Xie, Shixiang Chen, Xiangtai Li, Zhouchen Lin, and Dacheng Tao. Do we really need a learnable classifier at the end of deep neural network? arXiv preprint arXiv:2203.09081, 2022.

- Yang et al. (2023) Yibo Yang, Haobo Yuan, Xiangtai Li, Zhouchen Lin, Philip Torr, and Dacheng Tao. Neural collapse inspired feature-classifier alignment for few-shot class incremental learning. arXiv preprint arXiv:2302.03004, 2023.

- (53) Can Yaras, Peng Wang, Zhihui Zhu, Laura Balzano, and Qing Qu. Neural collapse with normalized features: A geometric analysis over the riemannian manifold. In Advances in Neural Information Processing Systems.

- Yaras et al. (2023) Can Yaras, Peng Wang, Zhihui Zhu, Laura Balzano, and Qing Qu. Neural collapse with normalized features: A geometric analysis over the riemannian manifold, 2023.

- You (2018) Chong You. Sparse methods for learning multiple subspaces from large-scale, corrupted and imbalanced data. PhD thesis, Johns Hopkins University, 2018.

- Yu et al. (2022) Longhui Yu, Tianyang Hu, Lanqing Hong, Zhen Liu, Adrian Weller, and Weiyang Liu. Continual learning by modeling intra-class variation. arXiv preprint arXiv:2210.05398, 2022.

- Yu et al. (2020) Yaodong Yu, Kwan Ho Ryan Chan, Chong You, Chaobing Song, and Yi Ma. Learning diverse and discriminative representations via the principle of maximal coding rate reduction. Advances in Neural Information Processing Systems, 33:9422–9434, 2020.

- Zhang & Deng (2020) Yaobin Zhang and Weihong Deng. Class-balanced training for deep face recognition. In Proceedings of the ieee/cvf conference on computer vision and pattern recognition workshops, pp. 824–825, 2020.

- (59) Jinxin Zhou, Chong You, Xiao Li, Kangning Liu, Sheng Liu, Qing Qu, and Zhihui Zhu. Are all losses created equal: A neural collapse perspective. In Advances in Neural Information Processing Systems.

- Zhou et al. (2022a) Jinxin Zhou, Xiao Li, Tianyu Ding, Chong You, Qing Qu, and Zhihui Zhu. On the optimization landscape of neural collapse under mse loss: Global optimality with unconstrained features. In International Conference on Machine Learning, pp. 27179–27202. PMLR, 2022a.

- Zhou et al. (2022b) Xiong Zhou, Xianming Liu, Deming Zhai, Junjun Jiang, Xin Gao, and Xiangyang Ji. Learning towards the largest margins. arXiv preprint arXiv:2206.11589, 2022b.

- Zhu et al. (2021) Zhihui Zhu, Tianyu Ding, Jinxin Zhou, Xiao Li, Chong You, Jeremias Sulam, and Qing Qu. A geometric analysis of neural collapse with unconstrained features. Advances in Neural Information Processing Systems, 34:29820–29834, 2021.

Part Appendix

Appendix A Organizations and Basic.

The organization of the appendix is as follows. Firstly, we introduce the fundamental theorems that are utilized throughout the appendices. In Appendix B, we offer comprehensive information regarding the datasets and computational resources for each figure, along with presenting additional experimental results on practical datasets. Lastly, in Appendix C, we provide the theoretical proofs for all the theorems mentioned in Section 3.

Lemma A.1 (LogSumExp).

Let denote and the LogSumExp function . With , we have

In other words, the LogSumExp function is a smooth approximation to the maximum function.

Proof of Lemma A.1.

According to the definition of the LogSumExp function, we have

where the first inequality is strict unless and the second inequality is strict unless all arguments are equal . Therefore, with , . ∎

Theorem A.2 (Carathéodory’s theorem Carathéodory (1911)).

Given , if a point lies in the convex hull of , then resides in the convex hull of at most of the points in .

Appendix B Experiment

In this section, we begin by providing additional details regarding the datasets and the computational resources utilized in the paper. Specifically, CIFAR10, CIFAR100, and BUPT-CBFace datasets are publicly available for academic purposes under the MIT license. Additionally, all experiments were conducted on 4xV100 GPU with 32G memory. Furthermore, we present supplementary experiments and implementation specifics for each figure.

B.1 Implementation details

We present implementation details for results in the paper.

Results in Figure 2.

To illustrate the occurrence of the phenomenon in practical multi-class classification problems, we trained a ResNet18 network (He et al., 2016) on the CIFAR100 dataset (Krizhevsky, 2009) using CE loss with varying temperature parameters. In this experiment, we set the dimensions of the last-layer features to . This is achieved by setting the number of channels in the second convolutional layer of the last residual block before the classifier layer to be . Prior to training, we applied standard preprocessing techniques, which involved normalizing the images (channel-wise) using their mean and standard deviation. We also employed standard data augmentation methods. For optimization, we utilized SGD with a momentum of and an initial learning rate of , which decayed according to the CosineAnnealing over a span of epochs.

The optimal margin (represented by the dotted line) in the second left subfigure is obtained from numerical optimization of the Softmax Code problem. In this optimization process, we used an initial learning rate of and decreased it by a factor of every iterations, for a total of iterations.

Results in Figure 4.

To demonstrate the implicit algorithmic regularization in training DNNs, we train a ResNet18 network on four classes Automobile, Cat, Dog, Truck from CIFAR10 dataset. We set the dimensions of the last-layer features to so that the learned features can be visualized, and we used a temperature parameter of . We optimized the networks for a total of epochs using SGD with a momentum of and an initial learning rate of , which is decreased by a factor of every epochs.

Results in Figure 5.

To assess the effectiveness of the proposed CMF (Class Mean Feature) method, we utilized the ResNet18 architecture (He et al., 2015) as the feature encoder on the CIFAR100 dataset (Krizhevsky, 2009), where we set the feature dimension to and the temperature parameter to . Since the model can only access a mini-batch of the dataset for each iteration, it becomes computationally prohibitive to calculate the class-mean features of the entire dataset. As a solution, we updated the classifier by employing the exponential moving average of the feature class mean, represented as . Here, denotes the class mean feature of the mini-batch in iteration , represents the classifier weights, and denotes the momentum coefficient (in our experiment, ). We applied the same data preprocessing and augmentation techniques mentioned previously and employed an initial learning rate of with the CosineAnnealing scheduler.

Results in the fine-tuning experiment of Section 5.

We fine-tune a pretrained ResNet50 on MoCo v2 (Chen et al., 2020b) on CIFAR10 (Krizhevsky, 2009). The CIFAR10 test dataset was chosen as the in-distribution (ID) task, while the STL10 dataset (Coates et al., 2011) served as the out-of-distribution (OOD) task. To preprocess the dataset, we resized the images to using BICUBIC interpolation and normalized them by their mean and standard deviation. Since there is no “monkey” class in CIFAR10 dataset, we remove the “monkey” class in the STL10 dataset. Additionally, we reassign the labels of CIFAR10 datasets to the STL10 dataset in order to match the classifier output. For optimization, we employed the Adam optimizer with a learning rate of and utilized the CosineAnnealing scheduler. The models are fine-tuned for 5 epochs with the batch size of 100. We reported the best ID testing accuracy achieved during the fine-tuning process and also provided the corresponding OOD accuracy.

Results in Figure B.1.

To visualize the different structures learned under weight decay or spherical constraint, we conducted experiments in both practical and unconstrained feature model settings. In the practical setting, we trained a ResNet18 network (He et al., 2016) on the first classes of the CIFAR100 dataset (Krizhevsky, 2009) using either weight decay or spherical constraint with the cross-entropy (CE) loss. For visualization purposes, we set the dimensions of the last-layer features to , and for the spherical constraint, we used a temperature parameter of . Prior to training, we applied standard preprocessing techniques, which included normalizing the images (channel-wise) using their mean and standard deviation. Additionally, we employed standard data augmentation methods. We optimized the networks for a total of 1600 epochs using SGD with a momentum of and an initial learning rate of 0.1, which decreased by a factor of every epochs. For the unconstrained feature model setting, we considered only one sample per class and treated the feature (class-mean features) and weights as free optimization variables. In this case, we initialized the learning rate at and decreased it by a factor of every iterations, for totaling iterations. The temperature parameter was set to for the spherical constraint.

Results in Figure B.6.

To demonstrate the implicit algorithmic regularization in training DNNs, we train a ResNet18 network on four classes Automobile, Cat, Deer, Dog, Horse, Truck from CIFAR10 dataset. We set the dimensions of the last-layer features to so that the learned features can be visualized, and we used a temperature parameter of . We optimized the networks for a total of epochs using SGD with a momentum of and an initial learning rate of , which is decreased by a factor of every epochs.

B.2 Effect of regularization: Spherical constraint vs. weight decay

We study the features and classifiers of networks trained with weight decay for the case , and compare with those obtained with spherical constraint. For the purpose of visualization, we train a ResNet18 with on the first classes of CIFAR100 and display the learned features and classifiers in Figure B.1(a). Below we summarize observations from Figure B.1(a).

-

•

Non-equal length and non-uniform distribution. The vectors of class-mean features do not have equal length, and also appear to be not equally spaced when normalized to the unit sphere.

-

•

Self-duality only in direction. The classifiers point towards the same direction as their corresponding class-mean features, but they have different lengths, and there exists no global scaling to exactly align them. As shown in Figure B.2, the ratios between the lengths of classifier weights and class-mean features vary across different classes. Therefore, there exists no global scaling to align them exactly.

In contrast, using spherical constraint produces equal-length and uniformly distributed features, as well as aligned classifier and classifier weights not only in direction but also in length, see Figure B.1 (b). Such a result is aligned with . This observation may explain the common practice of using feature normalization in applications with an extremely large number of classes (Chen & He, 2021; Wang et al., 2018), and justify our study of networks trained with sphere constraints in (2) rather than weight decay as in Liu et al. (2023a).

We note that the discrepancy between the approaches using weight decay and spherical constraints is not due to the insufficient expressiveness of the networks. In fact, we also observe different performances in the unconstrained feature model with spherical constraints as in (2) and with the following regularized form (Zhu et al., 2021; Mixon et al., 2020; Tirer & Bruna, 2022; Zhou et al., 2022a):

| (B.1) |

where represents the weight decay parameters. We observe similar phenomena in Figure B.1(c, d) as in Figure B.1(a, b) that the weight decay formulation results in features with non-equal lengths, non-uniform distribution, and different lengths than the classifiers. In the next section, we provide a theoretical justification for under the UFM for Problem (2).

B.3 Additional results on prevalence of

We provide additional evidence on the occurrence of the phenomenon in practical multi-class classification problems. Towards that, we train ResNet18, DenseNet121, and ResNeXt50 network on the CIFAR100, Tiny-ImageNet and BUPT-CBFace-50 datasets using CE loss. To illustrate the case where the feature dimension is smaller than the number of classes, we insert another linear layer before the last-layer classifier and set the dimensions of the features as for CIFAR100 and Tiny-ImageNet, and for BUPT-CBFace-50.

The results are reported in Figure B.3. It can be seen that in all the cases the 1, 2 and 3 measures converge mostly monotonically as a function of the training epochs towards the values predicted by (i.e., for 1 and 3 and the objective of Softmax Code for 2, see Section 2.2).

Effect of temperature .

The results on BUPT-CBFace-50 reported in Figure B.3 uses a temperature . To examine the effect of , we conduct experiments with varying and report the 2 in Figure B.4. It can be seen that the 2 measure at convergence monotonically increases as decreases.

Δ

Implementation detail.

We choose the temperature for CIFAR100 and Tiny-ImageNet and for the BUPT-CBFace-50 dataset. The CIFAR100 dataset consists of color images in classes and Tiny-ImageNet contains color images in classes. The BUPT-CBFace-50 dataset consists of images in classes and all images are resized to the size of . To adapt to the smaller size images, we modify these architectures by changing the first convolutional layer to have a kernel size of , a stride of , and a padding size of . For our data augmentation strategy, we employ the random crop with a size of and padding of , random horizontal flip with a probability of , and random rotation with degrees of to increase the diversity of our training data. Then we normalize the images (channel-wise) using their mean and standard deviation. For optimization, we utilized SGD with a momentum of and an initial learning rate of , which decayed according to the CosineAnnealing over a span of epochs. The optimal margin (represented by the dash line) in the second from left column is obtained through numerical optimization of the Softmax Codes problem.

B.4 The Nearest Centroid Classifier

As the learned features exhibit within-class variability collapse and are maximally distant between classes, the classifier also converges to the nearest centroid classifier (a.k.a nearest class-center classifier (NCC), where each sample is classified with the nearest class-mean features), which is termed as 4 in Papyan et al. (2020) and exploited in (Galanti et al., 2022b; a; Rangamani et al., 2023) for studying . To evaluate the convergence in terms of NCC accuracy, we use the same setup as in Figure 2, i.e., train a ResNet18 network on the CIFAR100 dataset with CE loss using different temperatures. We then classify the features by the NCC. The result is presented in Figure B.5. We can observe that with a relatively small temperature , the NCC accuracy converges to 100% and hence the classifier also converges to a NCC.

B.5 Additional results on the effect of assignment problem

We provide additional evidence on the effect of ”class assignment” problem in practical multi-class classification problems. To illustrate this, we train a ResNet18 network with d = 2 on six classes {Automobile, Cat, Deer, Dog, Horse, Truck} from CIFAR10 dataset that are selected due to their clear semantic similarity and discrepancy. Consequently, according to Theorem 3.3, there are fifteen distinct class assignments up to permutation.

When doing standard training, the classifier consistently converges to the case where Cat-Dog, Automobile-Truck and Deer-Horse pairs are closer together across 5 different trials; Figure 6(a) shows the learned features (dots) and classifier weights (arrows) in one of such trials. This demonstrates the implicit algorithmic regularization in training DNNs, which naturally attracts (semantically) similar classes and separates dissimilar ones.

We also conduct experiments with the classifier fixed to be three of the fifteen arrangements, and present the results in Figures 6(b) and 6(d). Among them, we observe that the case where Cat-Dog, Automobile-Truck and Deer-Horse pairs are far apart achieves a testing accuracy of , which is lower than the other two cases with testing accuracies of and . This demonstrates the important role of class assignment to the generalization of DNNs, and that the implicit bias of the learned classifier is benign, i.e., leads to a more generalizable solutions. Moreover, compared with the case of four classes in Figure 4, the discrepancy of test accuracy between different assignments increases from to . This suggests that as the number of classes grows, the importance of appropriate class assignments becomes increasingly paramount.

Appendix C Theoretical Proofs

C.1 Proof of Equation 6

We rewrite Equation 6 below for convenience.

Lemma C.1 (Convergence to the “HardMax” problem).

For any positive integers and , we have

| (C.1) |

In above, all are taken over .

Proof.

Our proof uses the fundamental theorem of -convergence Braides (2006) (a.k.a. epi-convergence Rockafellar & Wets (2009)). Denote

| (C.2) |

and let

| (C.3) |

By Lemma C.2, the function converges uniformly to on as . Combining it with (Rockafellar & Wets, 2009, Proposition 7.15), we have -converges to on as well. By applying (Braides, 2006, Theorem 2.10), we have

| (C.4) |

Note that in above, are taken over which is a strict subset of . However, by Lemma C.3, we know that

| (C.5) |

which holds for all sufficiently small. Hence, we have

| (C.6) |

which concludes the proof. ∎

Lemma C.2.

converges uniformly to in the domain as .

Proof.

Recall from the definition of the CE loss in (1) that

| (C.7) |

Denote for convenience. Fix any and , by the property that for all , we have

| (C.8) |

Note that in the domain we have for all . It follows trivially from monotonicity of exponential function that holds for all . Hence, continuing from the inequality above we have

| (C.9) |

Summing over we obtain

| (C.10) |

Using the property of max function we further obtain

| (C.11) |

Taking logarithmic on both sides and multiplying all terms by we get

| (C.12) |

Noting that , we have

| (C.13) |

Hence, for any , by taking , we have that for any , we have

| (C.14) |

for any . That is, converges uniformly to .

∎

Lemma C.3.

Given any there exists a constant such that for any we have

| (C.15) |

Proof.

Note that for any , there exists , and such that . Hence, we have

| (C.16) |

On the other hand, we show that one may construct a such that for any small enough . Towards that, we take to be any matrix in with distinct columns. Denote the inner product of the closest pair of columns from , which by construction has value . Take for all . We have , for all , and . Plugging this into the definition of we have

| (C.17) |

for some that depends only on . In above, the last inequality holds because one can always find a such that for . This implies that any is not a minimizer of for a sufficiently small , which finishes the proof. ∎

As depicted in Figure C.1, we plot the cosine value of the minimal angle obtained from optimizing the CE loss (blue line) and the ”HardMax” approach (black line) for different temperature parameters. The figure demonstrates that as the temperature parameter , the blue line converges to the black line, thus validating our proof.

C.2 An important lemma

We present an important lemma which will be used to prove many of the subsequent results.

Lemma C.4 (Optimal Features for Fixed Classifier).

For any , suppose , then

| (C.18) |

In addition, the optimal is given by

| (C.19) |

where denotes the projection of on .

Lemma C.5.

Suppose . Then

| (C.20) |

where means the two problems are equivalent, i.e., have the same optimal solutions.

Proof of Lemma C.5.

By the separating hyperplane theorem (e.g. (Boyd & Vandenberghe, 2004, Example 2.20)), there exist a nonzero vector and such that and , i.e., . Let be any optimal solution to the RHS of (C.20). Then, it holds that

Hence, it must be the case that ; otherwise, taking gives lower objective for the RHS of (C.20), contradicting the optimality of . ∎

Lemma C.6.

Suppose . Consider the (primal) problem

| (C.21) |

Its dual problem is given by

| (C.22) |

with zero duality gap. Moreover, for any primal optimal solution there is a dual optimal solution and they satisfy

| (C.23) |

Proof of Lemma C.6.

We rewrite the primal problem as

| (C.24) |

Introducing the dual variable , the Lagragian function is

| (C.25) |

We now derive the dual problem, defined as

| (C.26) |

In above, the second equality follows from the fact that the conjugate (see e.g. Boyd & Vandenberghe (2004)) of the max function is the indicator function of the probability simplex. The third equality uses the assumption that , which implies that under the simplex constraint of , hence the optimal to the optimization in the second line can be easily obtained as .

Finally, the rest of the claims hold as the primal problem is convex with the Slater’s condition satisfied. ∎

C.3 Proof of Theorem 3.2

Here we prove the following result which is a stronger version of Theorem 3.2.

Theorem C.7.

Proof.

The proof is divided into two parts.

Any optimal solution to (5) is a Softmax Code. Our proof is based on providing a lower bound on the objective . We distinguish two cases in deriving the lower bound.

-

•

has distinct columns. In this case we use the following bound:

(C.28) In above, the first equality follows directly from definition of the HardMax function. The inequality follows trivially from the property of the operator. The last equality follows from Lemma C.4, which requires that has distinct columns. Continuing on the rightmost term in (C.28), we have

(C.29) where is any Softmax Code. In above, the equality follows from the definition of the operator , and the inequality follows from the definition of the Softmax Code. In particular, by defining and as

(C.30) all inequalities in (C.28) and (C.29) holds with equality by taking and , at which we have that .

-

•

does not have distinct columns. Hence, there exists , such that but . We have

(C.31)

Combining the above two cases, and by noting that , we have that is an optimal solution to the HardMax problem in (5). Moreover, since is an optimal solution to the HardMax problem, it must attain the lower bound, i.e.,

| (C.32) |

Hence, has to attain the equality in (C.29). By definition, this means that is a Softmax Code, which concludes the proof of this part.