Generalized correlation based Imaging for satellites

Abstract

We consider imaging of fast moving small objects in space, such as low earth orbit satellites. The imaging system consists of ground based, asynchronous sources of radiation and several passive receivers above the dense atmosphere. We use the cross correlation of the received signals to reduce distortions from ambient medium fluctuations. Imaging with correlations also has the advantage of not requiring any knowledge about the probing pulse and depends weakly on the emitter positions. We account for the target’s orbital velocity by introducing the necessary Doppler compensation. We show that over limited imaging regions, a constant Doppler factor can be used, resulting in an efficient data structure for the correlations of the recorded signals. We then investigate and analyze different imaging methods using the cross-correlation data structure. Specifically, we show that using a generalized two point migration of the cross correlation data, the top eigenvector of the migrated data matrix provides superior image resolution compared to the usual single-point migration scheme. We carry out a theoretical analysis that illustrates the role of the two point migration methods as well as that of the inverse aperture in improving resolution. Extensive numerical simulations support the theoretical results and assess the scope of the imaging methodology.

1 Introduction

The imaging of satellites in low earth orbit is motivated by the need to detect and closely track small debris (1-10cm) revolving around the earth at altitudes in the range of km-km, [21]. The amount of debris has been growing steadily in recent years, substantially increasing the risk of satellite damage from collisions [20, 14]. There are roughly debris of size larger than cm in Low Earth Orbit (LEO) and there is concern that future collisions may lead to a chain reaction that will generate an unacceptably risky environment [22],[15]. In this paper, we model the small fast moving debris as point-like reflectors moving with constant velocity, .

The recorded data are the scattered signals from a train of incident pulses emitted by transmitters located on the ground. The receivers are assumed to be located at a height of 15 km or more. They span an area of diameter , which acts as the physical aperture of the imaging system. In synthetic aperture radar (SAR), a single airborne transmit/receive element is moving and its trajectory defines the synthetically created aperture of the imaging system [7, 9]. In a similar way, the trajectory of the moving target defines an inverse synthetic aperture (iSAR) of length , with denoting the total time of data acquisition. Other important parameters of the imaging system are the central frequency, , and the bandwidth, of the probing signals as well as the pulse repetition frequency. We consider here emitters operating at high frequency with a relatively large bandwidth, such as the X-Band (8-12 GHz) with a bandwidth of up to 600 MHz.

Correlation-based imaging uses the cross correlations of the recorded signals between pairs of receivers. The signals are also Doppler compensated and synchronized. However, in this case the synchronization does not require knowledge of the emitter locations since only time differences matter in cross correlations. In correlation-based imaging we also do not need to know the pulse profile or the emission times but we need to record an extended train of scattered pulses. We assume that recording is done at a sufficiently high sampling rate so that signals can be recorded with no loss of information [3, 1]. Correlation-based imaging has been shown to be more robust to ambient medium fluctuations such as atmospheric lensing [16] and aberrations [19]. This is true for receivers that are not located on the ground but are located above the turbulent atmosphere, on drones for example. Indeed, considering airborne receivers transforms the passive correlation-based problem to a virtual source array imaging problem that has been studied for stationary receiver arrays in [10, 11, 12]. The key idea is that passive correlation-based imaging becomes equivalent to having a virtual active array at the location of the passive receivers. By moving the receivers above the turbulent atmosphere, the atmospheric fluctuation effects on imaging are minimized and imaging resolution is as if we were in a homogeneous, fluctuation free medium.
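The claim that only time differences matter can be illustrated with a minimal sketch. It assumes an idealized setting that is not taken from the paper: a random pulse, integer sample delays, no noise, and no Doppler scaling. The helper names `record` and `lag_of_peak` and all numerical values are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 4096                              # samples per recording (illustrative)
pulse = rng.standard_normal(200)      # pulse profile, never used by the estimator below

def record(emission_idx, travel_idx):
    """Signal at one receiver: the pulse delayed by emission time plus travel time."""
    s = np.zeros(n)
    start = emission_idx + travel_idx
    s[start:start + pulse.size] = pulse
    return s

def lag_of_peak(u1, u2):
    """Lag (in samples) that maximizes the circular cross correlation of u1 and u2."""
    c = np.fft.ifft(np.fft.fft(u1) * np.conj(np.fft.fft(u2))).real
    k = int(np.argmax(c))
    return k if k < n // 2 else k - n

travel_1, travel_2 = 300, 340         # travel times to receivers 1 and 2, in samples
# Two different (unknown) emission times give the same correlation peak.
lags = [lag_of_peak(record(e, travel_1), record(e, travel_2)) for e in (100, 1500)]
print(lags)                           # each entry equals travel_1 - travel_2
```

In this toy setting the peak location depends neither on the emission time nor on the pulse shape, only on the difference of the two travel times.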

Correlation-based imaging is, in addition, passive because it can be carried out using opportunistic emitters with largely unknown properties. In the imaging setting considered in this paper, opportunistic sources could be global navigation satellite systems (GNSS). This has been considered in [18] and it is shown that the main challenge in this case is the low signal-to-noise ratio because the scattered signals received at terrestrial receivers are very weak.

The use of correlation based imaging for satellites is considered in [8], where its resolution is analyzed and compared to matched-filter based imaging. Matched-filter imaging depends linearly on the measurements, but requires knowing the position of the emitters with high accuracy, as well as the emitted pulse trains. It also lacks the robustness to medium fluctuations, contrary to correlation based imaging. It is shown in [8] that under certain conditions matched-filter and correlation based imaging attain comparable resolutions, indicating that correlation based imaging is a good method for LEO satellite imaging. The imaging functions introduced in [8] are a generalization of the well known Kirchhoff migration (KM), which forms an image by superposing the recorded signals after translating them by the travel time to a search location in the image region.

As was noted in [8], the resolution analysis is limited to well separated point scatterers. While this is a suitable choice with a linear imaging method, where more complicated scenarios are considered as superpositions of the single target case, this is no longer so when dealing with correlations, especially when there is interference between neighboring targets. In correlation based imaging, the abilities to localize and separate targets need to be addressed separately. We show in this paper that the single point scatterer resolution results do not generalize to multiple closely spaced scatterers in a straightforward manner. Another key result of [8] is that the resolution of the target depends only weakly on the inverse synthetic aperture. This seems to be counter intuitive as the correlation data still contains a significant amount of information. These observations have motivated us to consider other possible extensions of the imaging function.

1.1 Main result

In this paper we present a generalization of the migration method for correlations, which is motivated by the structure of the data. We first define a correlation data structure, which compensates for the Doppler effect on the measurements. We then show that out of this data structure a natural migration scheme arises, which translates the data to two points rather than one. This is natural when considering data correlations, and a similar migration scheme was recently used in [4]. The result of this migration is not an image but rather a two-point interference pattern. We show that there are several possible ways to derive an image from the two-point interference pattern, with one in particular that we call the rank-1 image. This rank-1 image is the result of taking the first eigenvector of the interference pattern, and it provides superior resolution. We demonstrate its superiority both in terms of favorable mathematical properties, such as having an optimization interpretation, as well as by providing analytical explanations of its performance. This is the main result of the paper.

Numerical simulations confirm that the resolution of the rank-1 image is better than that of the previously used correlation based imaging function, and achieves resolution comparable to that of linear migration, while retaining the benefits of imaging with correlations rather than with the data directly. The method is stable with respect to additive noise and can be applied to many other imaging problems.

The rest of the paper is organized as follows. In Section 2 we briefly review the model for our data (further expanded in Appendix A), and introduce the cross-correlation data structure. In Section 3 we recast the problem in matrix form and introduce the interference pattern and the possible extensions of KM to cross-correlation data, particularly the rank-1 image. In Section 4 we present numerical simulations which confirm the superior performance of the rank-1 image. We motivate the results by further simulations in Section 5, and by analysis in Appendix B and Appendix C. We conclude with a summary in Section 6.

2 Correlation based imaging of fast moving objects

In this section we present the general form of the recorded signals and then review the basic algorithms of correlation based imaging with Doppler effects. We also introduce a cross-correlation data structure that is more efficient for imaging, which will be used in subsequent sections for introducing new imaging methods and comparing them with existing methods.

2.1 Scattered signal model

We want to image a cluster of objects, moving in Keplerian low earth orbit at a high velocity , so that without rotation for all the objects we have

| (2.1) |

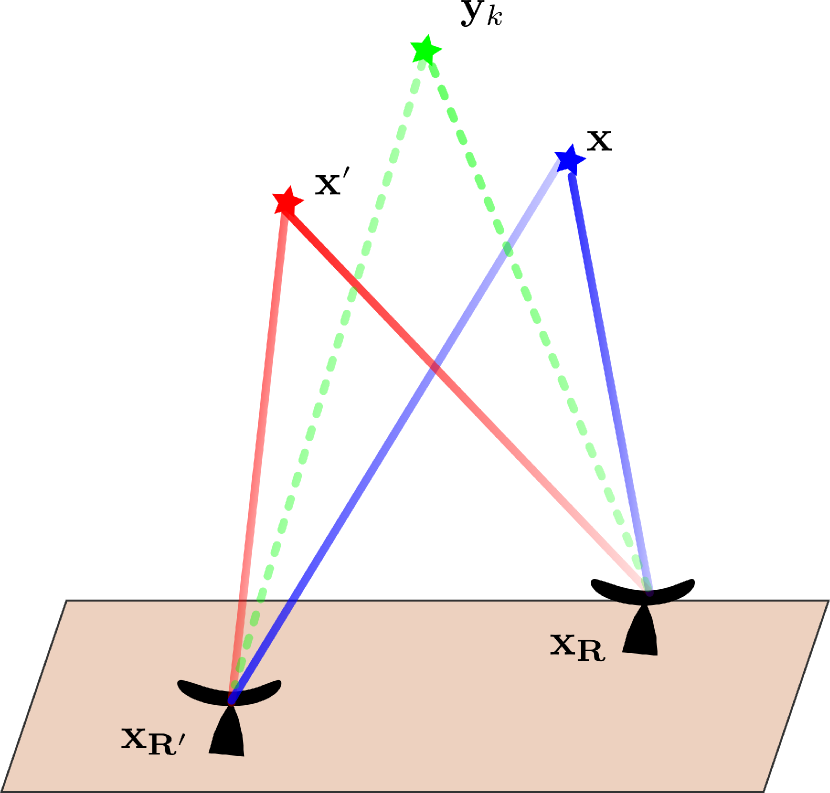

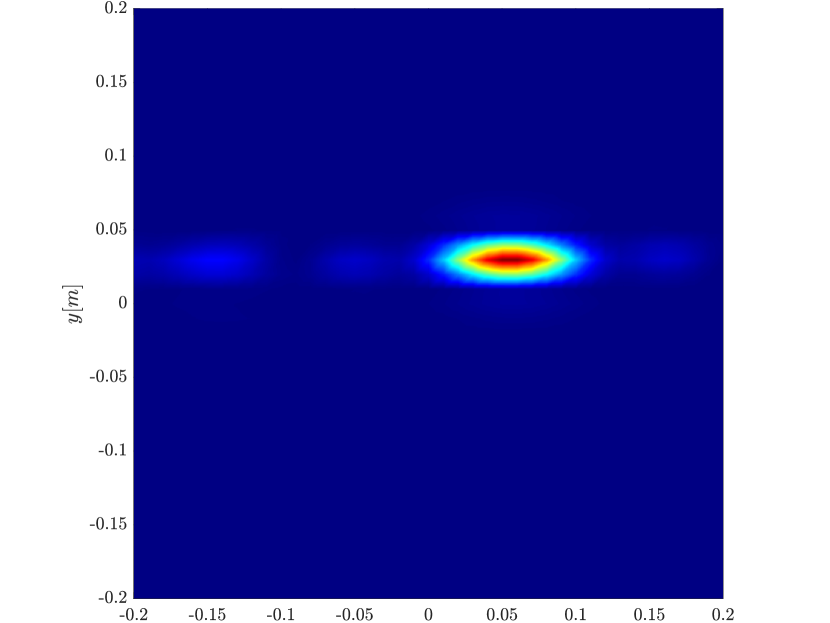

The data is the collection of signals recorded at ground based or at low elevation receivers, with positions . Successive pulses are emitted at a slow time by a source located at on the ground, as illustrated in Figure 1.

The source emits a series of pulses , at slow time intervals of , with a total aperture size , such that the recorded signal at the receiver location due to a pulse, , emitted at slow time , is

| (2.2) |

The derivation of (2.2) is in Appendix A. With the speed of light, is the Doppler scale correction factor and is the signal travel time, which to first order in are given by

| (2.3) |

The formula (2.2) for the recorded data will be used in the theoretical resolution analysis.

An important consideration in the data recording process is the sampling frequency, which in currently available microwave acquisition systems is limited to about 2 GHz. Signals with higher carrier frequency need to be downramped before sampling, to avoid information loss. This is done with a heterodyne oscillator that shifts down the carrier frequency followed by low pass filtering. When using asynchronous emitters, the central frequency and bandwidth might not be known accurately. In that case the frequency of the oscillator and the sampling frequency need to be chosen so that loss of information is minimal.
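The frequency shift followed by low pass filtering can be sketched as follows. This is a minimal illustration under stated assumptions, not the acquisition chain of an actual system: the carrier, oscillator and cutoff frequencies are scaled-down hypothetical values, the oscillator is modeled as a complex exponential, and the low pass filter is an ideal mask in the frequency domain.

```python
import numpy as np

fs = 1.0e6       # sampling rate of the simulated input, Hz (illustrative)
f_c = 200.0e3    # carrier frequency, Hz (illustrative, far below X-band)
f_lo = 190.0e3   # heterodyne oscillator frequency, Hz (assumed)
cutoff = 50.0e3  # low pass cutoff, Hz (assumed)
n = 10000
t = np.arange(n) / fs

# Slowly varying Gaussian envelope modulating the carrier.
envelope = np.exp(-((t - t.mean()) ** 2) / (2 * (0.5e-3) ** 2))
signal = envelope * np.cos(2 * np.pi * f_c * t)

# Mixing with the oscillator shifts the whole spectrum down by f_lo.
mixed = signal * np.exp(-2j * np.pi * f_lo * t)

# Ideal low pass filter: keeps the component near f_c - f_lo and
# removes the image near -(f_c + f_lo).
freqs = np.fft.fftfreq(n, d=1.0 / fs)
spectrum = np.fft.fft(mixed)
spectrum[np.abs(freqs) > cutoff] = 0.0
baseband = np.fft.ifft(spectrum)

peak_freq = freqs[np.argmax(np.abs(np.fft.fft(baseband)))]
print(peak_freq)  # close to f_c - f_lo
```

After this step the signal occupies a band around `f_c - f_lo`, so it can be sampled at a much lower rate than the original carrier would require.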

2.2 Imaging with Doppler-scaled cross correlations

We use the cross correlations of the signals received at different receivers to image. In [8] the imaging function was defined via scaling and translating the signals at the receivers by different Doppler factors for every point in the imaging domain

| (2.4) |

This imaging function is a generalization of the regular Kirchhoff migration, which back propagates the recorded signals by the travel time to a specific search point in the image domain, but now at the level of cross correlations. The idea is that when migrating and correlating the data from the different receivers, the peaks of the signals sum coherently if a reflector exists at a point , with velocity , resulting in a peak for at that point. Since the rapid velocity affects the signal via , the Doppler factor must be used when migrating the data. At low speeds and without the Doppler correction this imaging function reduces to the classical correlation based migration [13].

We assume here that the locations of the receivers are accurately known. The source location is also assumed to be known, but a lesser degree of accuracy is needed, since only affects (see [8]). The formulation (2.4) is less advantageous for two reasons:

1. It is computationally inefficient to recompute the cross correlation for every point in the imaging domain. It would be much more efficient to compute the cross correlations once for any triplet as a function of , the translation, and use that for imaging [13].

2. Important features in the image manifest themselves in the raw cross correlation data. In order to use the data intelligently one must define a data structure, as an intermediate step, before applying the imaging function.

We next define the Doppler-scaled cross correlation function , and show how it can be used in the imaging problem.

2.3 Relative variation of the Doppler factor

In general, (2.3) shows that is dependent on the target’s position and velocity. Having an accurate value for is extremely important in calculating cross correlations, as small changes in the frequency support would greatly affect the outcome of the correlation integral and hence the resolution of the image. However, we can use prior information at our disposal to limit the image region. For example, can be estimated from the fact that the objects are assumed to be in a Keplerian orbit, i.e., . Accurate range measurements based on the signal’s bandwidth can be used to estimate and subsequently . Moreover, when forming an image we are usually interested in a limited search domain in space, based on auxiliary information. Of course is a continuous function of , , such that if , , are small enough then

| (2.5) |

In our numerical simulations over an image window of size mmm, varies at most by %. For signals with our frequency support and time duration, can be very well approximated by a constant in the imaging domain.

2.4 The cross-correlation data structure in iSAR

Relying upon the weak variation of , we use a rescaled signal to image over the image window, rather than rescaling the signal for every point in the image window as was done in [8] (eq. 3.6). Specifically, we construct by scaling the signal received by a reference Doppler factor,

| (2.6) |

Note that for a reflector with a trajectory , the received signal has the form

| (2.7) |

We define the cross correlation function as well,

| (2.8) |

Using (2.7), the imaging function (2.4) reduces to

| (2.9) |

where

| (2.10) |

Notice that is still dependent on . The imaging function in (2.9) migrates the cross correlation data to a search point , based on the expected travel time difference between the signals recorded at both receivers . This is possible because we have adequately scaled the fields with respect to a specific search region, such that is only dependent on travel time differences. Note also that the recorded signals are time shifted by the sum of the travel times from the source to the center of the image window plus the travel time from the center of the image window to the receiver. This ensures that is supported around .

The imaging function (2.9) is an extension of the classical Kirchhoff Migration (KM) imaging function in the linear case, where the travel times are used to migrate the field

| (2.11) |

Thus, we can break down the imaging problem into two separate steps:

1. In the first step, the trajectory and fast linear motion of the object are estimated and thereafter assumed known. The relevant imaging domain can then be taken to be small enough so that the approximation of the ratio (2.5) holds. We express the estimated motion of the center of the image window as , where

| (2.12) |

Alternatively, we can use prior information about the trajectory to center the image window.

2. We then substitute in (2.8) for and for to compute the cross-correlation .

3 Generalized migration schemes for correlation based imaging

The imaging function defined in (2.4) has been analyzed in [8], where its resolution was also compared to that of the matched filter imaging function. While Kirchhoff migration arises naturally in regular SAR/iSAR as the action of the adjoint forward operator applied to the recorded data, this is not necessarily a natural choice when the image is to be formed using correlations of the data. Moreover, as mentioned in Section 1, unlike in classic KM the resolution depends only weakly on the synthetic aperture. Numerical simulations with correlation based imaging suggest deterioration of the resolution when multiple reflectors are not well separated, or when there is a contrast in the reflectivity, with strong and weak reflectors in the same image window. The two-point migration introduced below in Section 3.3 addresses these issues.

As a first step, we want to express the scaled cross correlation data structure in (2.8) in terms of the medium reflectivity. We shall use that to introduce extensions of the migration imaging function to cross correlation data.

3.1 The model for the cross correlation data

Assume we have discretized the medium in a small image window relative to its moving center , as in (2.12), with grid points and corresponds to the center of the window. The unknown reflectivity is discretized by its values on this grid

The unknown reflectivity vector has dimension , which is the number of pixels in the image window. Most of these reflectivities are zero because there are usually few relatively strong reflectors that can be imaged, that is, we can estimate their location in the image window and their strength. We assume the image window is small enough so that the Doppler term is constant over it, as explained in Section 2.

We saw in (2.7) that the signal recorded at receiver location can be written, after an appropriate scaling, as

| (3.13) |

where

| (3.14) |

In the frequency domain the recorded signal is

| (3.15) |

with

| (3.16) |

The phase comes from the reduced travel time from the target to the receiver, relative to that of the image window center.

We assume the distance from the reflectors to the different receivers doesn’t vary greatly, hence we can approximate

| (3.17) |

i.e., we neglect the dependence of the amplitude factor on a specific receiver. By using this approximation we compromise the accuracy with which the amplitude of the reflector is retrieved. However, for most applications, the support and the relative reflectivities of the scatterers are of the greatest importance. Using this notation, and the fact that correlation in time is equivalent to multiplication in frequency, we get that

| (3.18) |

3.2 Matrix formulation of the forward model

We introduce matrix notation that relates our model for reflectivities to the cross correlation data. Denote by the unknown reflectivities in vector form

Denote , our model for the migration matrix, which has dimensions and entries . This matrix acts on the reflectivities and returns data. Denote the recorded signal data as an vector whose entries are given by (3.15). The cross correlation data is also a matrix, of dimension , with entries

Combining these, we have in matrix form the following model for the recorded signal data vector and cross correlation data matrix

| (3.19) |

| (3.20) |

We denote by , the outer product of reflectivities, then our model in matrix form is

| (3.21) |

The cross correlations depend on the reflectivities through their outer product . As a result there can be several different extensions of Kirchhoff migration to cross correlations, which we investigate in the next section.
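The quadratic dependence on the reflectivities can be checked numerically. The sketch below assumes a single frequency and slow time, and replaces the model matrix by a hypothetical matrix of random unit-modulus phases. The names `A`, `rho`, `d`, `C` and `P` are chosen here for illustration and only mirror the roles described in the text.

```python
import numpy as np

rng = np.random.default_rng(1)
n_rec, n_pix = 8, 50                        # receivers and pixels (illustrative sizes)

# Hypothetical stand-in for the model matrix: random unit-modulus phases.
A = np.exp(2j * np.pi * rng.random((n_rec, n_pix)))

rho = np.zeros(n_pix, dtype=complex)        # sparse reflectivity vector
rho[[12, 30]] = [1.0, 0.5j]

d = A @ rho                                 # recorded signal data, linear in rho
C = np.outer(d, d.conj())                   # cross correlations between receiver pairs
P = np.outer(rho, rho.conj())               # outer product of reflectivities
C_model = A @ P @ A.conj().T                # same data, written as a model in P

print(np.allclose(C, C_model))
```

The data matrix `C` is linear in `P` even though it is quadratic in `rho`, which is why migration of cross correlations naturally estimates the outer product rather than the reflectivity vector.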

3.3 Imaging functions for cross correlation data

Given the data and the model , Kirchhoff migration of the data is given by

| (3.22) |

Identifying , (3.22) is a discretized version of the imaging function in (2.11), .

Kirchhoff migration is usually derived by considering the solution of the ordinary least squares (OLS) optimization problem. While the solution of OLS involves the pseudo-inverse of the forward matrix, it is common to approximate it as diagonal for imaging problems, which is well justified for separated targets. In that case, OLS and Kirchhoff migration yield the same support. Kirchhoff migration can also be interpreted as the solution of a different constrained optimization problem, related to the forward models of (3.19). Neglecting the scales , we can interpret migration as looking for the set of reflectivities that yield the set of signals most correlated with our measurements, under a norm constraint.

| (3.23) |

While the solution of OLS for the cross correlation model (3.21) involves writing as a vector and the matrix multiplication operations as matrix-vector multiplication, (3.23) has a direct extension for the model of (3.21). With abuse of notation for matrices . Neglecting the scales again, we have

| (3.24) |

As a result, a natural extension of the migration of (3.22) for migrating cross correlations is the matrix

| (3.25) |

with elements

| (3.26) |

As expected, the result of the migration is an estimation of rather than , as our model is quadratic with respect to the reflectivities. is a square matrix with dimensions , where is the number of search points in the imaging domain. If points are associated with reflectivities , we can think about as a two-variable generalized cross correlation imaging function

| (3.27) |

Note that is Hermitian positive semidefinite by definition. We next see how an image can be extracted from .
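A minimal numerical sketch of the two-point migration follows. It assumes that the migrated matrix is obtained by applying the adjoint of the model matrix on both sides of the cross correlation matrix and summing over a few independent snapshots (frequencies or slow times). The random-phase model matrices and all sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
n_snap, n_rec, n_pix = 5, 8, 40      # snapshots, receivers, pixels (illustrative)

rho = np.zeros(n_pix, dtype=complex)
rho[[10, 25]] = [1.0, 0.7]

X = np.zeros((n_pix, n_pix), dtype=complex)
single_point = np.zeros(n_pix)
for _ in range(n_snap):
    A = np.exp(2j * np.pi * rng.random((n_rec, n_pix)))  # hypothetical model matrix
    d = A @ rho                          # data for this snapshot
    C = np.outer(d, d.conj())            # cross correlations between receiver pairs
    X += A.conj().T @ C @ A              # migrate the two receiver indices to two points
    single_point += np.abs(A.conj().T @ d) ** 2  # both indices migrated to the same point

eigenvalues = np.linalg.eigvalsh(X)
print(np.allclose(X, X.conj().T), eigenvalues.min() >= -1e-9 * eigenvalues.max())
```

In this sketch the diagonal of `X` coincides with the single-point image, and `X` is Hermitian with nonnegative eigenvalues, with at most `n_snap` of them nonzero.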

3.4 Imaging with migrated cross correlation data

The imaging function (3.27) lacks a direct physical interpretation. It evaluates the outer product of reflectivities rather than the reflectivities themselves. We shall see that several ways to extract exist, not all equal in general.

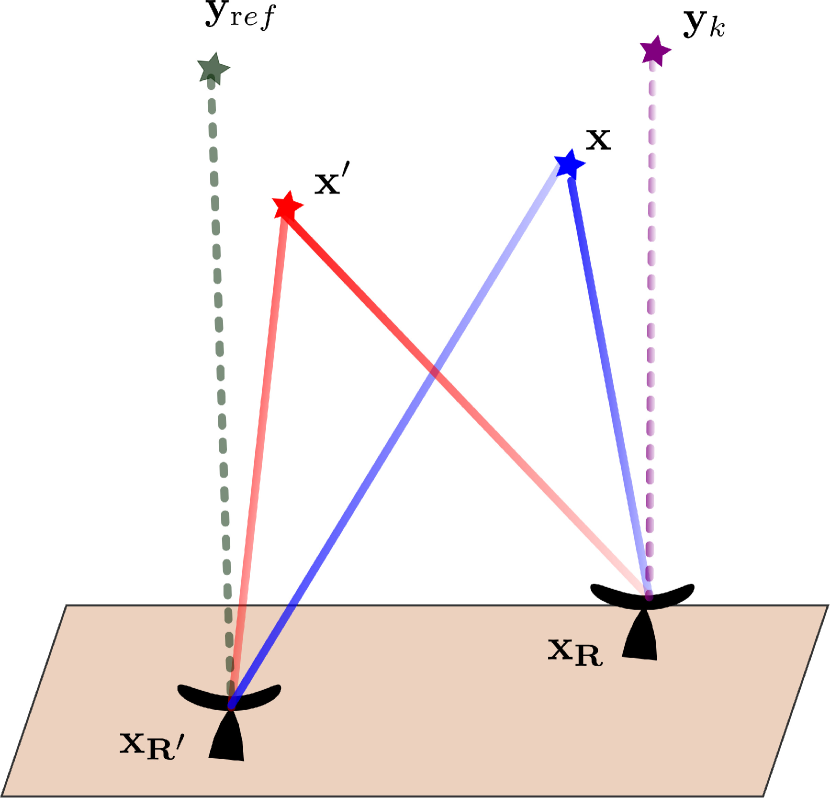

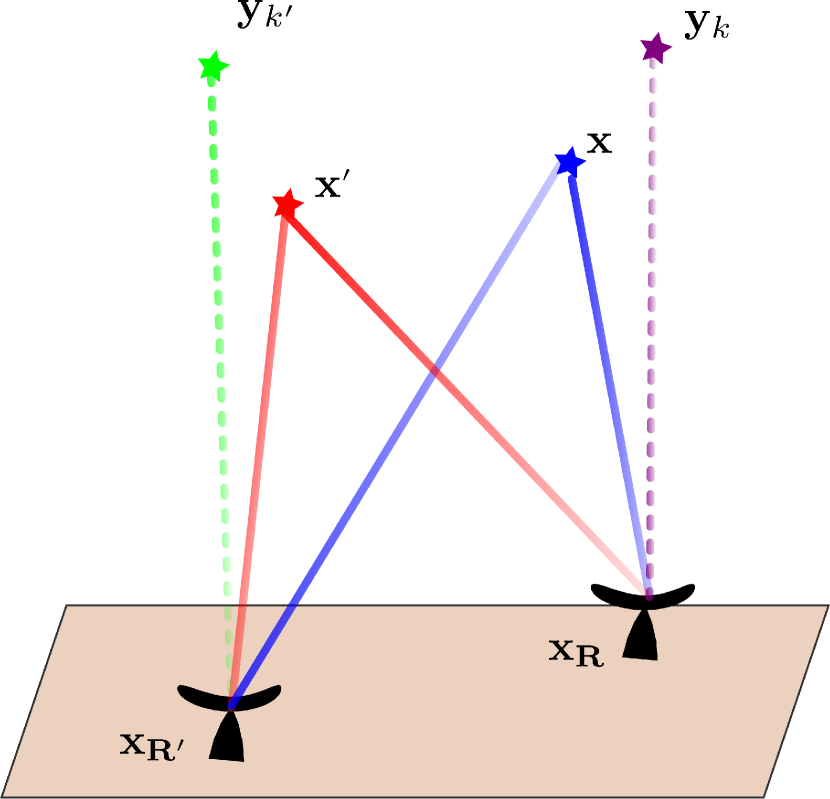

If were exactly rank-1, i.e. , there would be several ways to extract .

1.

2. . This is equivalent to migrating the data to a reference point with respect to one of the receiver pairs and forming an image by migrating the other receiver to a search point. In terms of (3.27) the image is evaluated by plugging a reference term in the first variable and a search point in the other

3. , i.e., calculate the top eigenvector of . In terms of (3.27) the image is evaluated by taking, , the first eigenvector of , thought of as a matrix

We call this the rank-1 image.

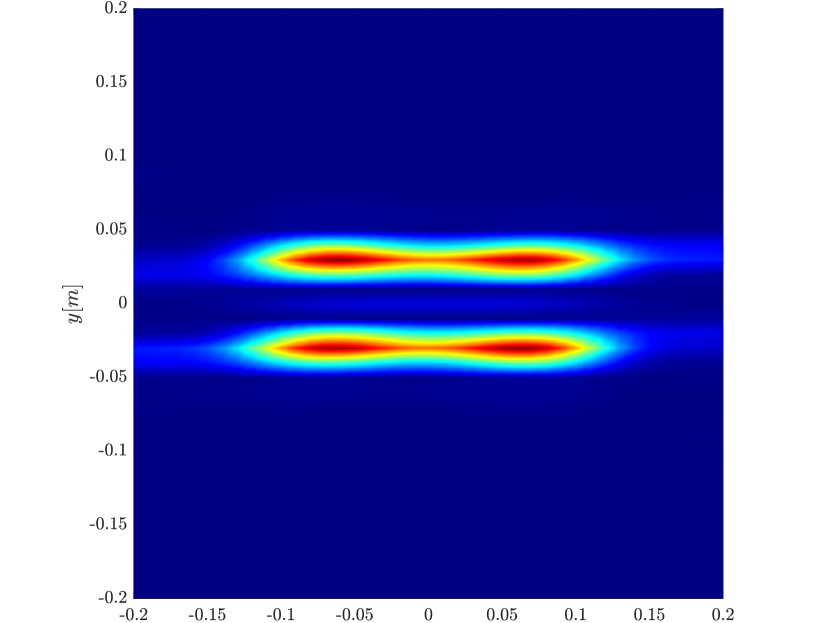

The different methods are illustrated in Figure 2.

All of these methods reconstruct the reflectivity up to a global phase as expected. The first two methods have the advantage that they are only dependent on a small subdomain of the elements of . However, as mentioned in Section 2, they suffer from limitations in the achieved image resolution. The eigenvector on the other hand is a global method, relying on the entire cross correlation data to reconstruct the image.

A physical interpretation would be the following: a cluster of scatterers moving at the same speed would exhibit coherent correlations not only when correlating reflections from the same scatterer at different receivers, but also when correlating reflections from different scatterers at different receivers. Thus, the entire data can be used to estimate the most probable locations for the scatterers, given that the fields measured at both receivers were generated by the same set of scatterers. Note also that single point migration cannot resolve any of the relative phases of the reflectors, while the eigenvector is only invariant with respect to a global phase.

The single point migration and two point migration are related by

| (3.29) |

with being the rank of , i.e., the single point migration is the sum of the squared moduli of all the eigenvectors, weighted by their respective eigenvalues. When there is a rapid decay in the eigenvalues, we expect similar results for both migration methods. However, when this is not the case, we expect a different result, as we show through analysis and numerical simulations in Section 5.
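The relation between the diagonal and the eigendecomposition holds for any Hermitian matrix and is easy to verify. The sketch below uses a random Hermitian positive semidefinite matrix as a hypothetical stand-in for the migrated data matrix.

```python
import numpy as np

rng = np.random.default_rng(3)
n_pix, rank = 30, 4
B = rng.standard_normal((n_pix, rank)) + 1j * rng.standard_normal((n_pix, rank))
X = B @ B.conj().T                       # Hermitian PSD stand-in for the two-point image

lam, V = np.linalg.eigh(X)               # ascending eigenvalues, eigenvectors in columns
single_point = np.diag(X).real           # diagonal of X, i.e. the single-point image
weighted_sum = (np.abs(V) ** 2) @ lam    # sum over k of lambda_k * |v_k(i)|^2 per pixel i

print(np.allclose(single_point, weighted_sum))
```

When one eigenvalue dominates, the weighted sum is close to the squared modulus of the top eigenvector alone. When several eigenvalues are comparable, the additional terms broaden the diagonal image relative to the top eigenvector.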

3.5 The rank-1 imaging function

Based on the observations of the previous section we suggest the following imaging function

1. Backpropagate the cross correlation to two different points using the operator to form the two-point image

2. Take the rank-1 image to be the top eigenvector of
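The second step can be sketched with a standard Hermitian eigensolver. The function name `rank1_image` is hypothetical, and the test matrix is an idealized, exactly rank-1 interference pattern rather than migrated data.

```python
import numpy as np

def rank1_image(X):
    """Modulus of the top eigenvector of a Hermitian two-point image X."""
    X = 0.5 * (X + X.conj().T)           # symmetrize against round-off
    lam, V = np.linalg.eigh(X)           # eigenvalues in ascending order
    return np.abs(V[:, -1])              # the modulus removes the arbitrary global phase

n_pix = 20
rho = np.zeros(n_pix, dtype=complex)
rho[[5, 6]] = [1.0, 0.5 * np.exp(1j * 0.3)]
X = np.outer(rho, rho.conj())            # idealized, exactly rank-1 interference pattern

image = rank1_image(X)
print(np.allclose(image, np.abs(rho) / np.linalg.norm(rho)))
```

For large grids, where only the leading eigenvector is needed, an iterative solver such as `scipy.sparse.linalg.eigsh` with `k=1` may be a cheaper alternative to a full decomposition.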

We can reduce the cost of the algorithm by considering a rectangular rather than a square matrix. This means that when migrating we don’t use the same grid for both receivers. We replace our candidate image with the top left singular vector (for a tall matrix). As we show in Section 4, numerical simulations suggest that we can downsample one dimension by a factor of 10 and still retain the same resolution. There are several motivations to consider the rank-1 image:

1. Robustness to noise. Since the eigendecomposition uses the entire cross correlation data, it is less sensitive to additive noise or even incomplete data. One could use low-rank matrix completion algorithms to reconstruct the image.

2. Optimization interpretation. The top singular vector has an optimization interpretation: plug in the expression for in (3.20), then

| (3.30) |

The top eigenvector can then be expressed as the solution of the optimization problem

| (3.31) |

i.e., we are trying to find the which is the most correlated with the observed field measurement, given our forward model. Notice that while similar to (3.23), this is a nonlinear optimization problem, and the absolute value in (3.31) removes the sensitivity to a global phase.
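The optimization interpretation can be checked numerically in a simplified setting. The sketch assumes a single snapshot, a hypothetical random-phase model matrix `A`, and an objective equal to the squared modulus of the inner product between the modeled signals and the data. This is one reading of the correlation criterion described above, not a reproduction of (3.31).

```python
import numpy as np

rng = np.random.default_rng(4)
n_rec, n_pix = 8, 30
A = np.exp(2j * np.pi * rng.random((n_rec, n_pix)))   # hypothetical model matrix
rho_true = np.zeros(n_pix, dtype=complex)
rho_true[[7, 19]] = [1.0, 0.6j]
d = A @ rho_true                                      # observed data

def objective(rho):
    """Squared correlation between the modeled signals A rho and the data d."""
    return np.abs(np.vdot(A @ rho, d)) ** 2

X = A.conj().T @ np.outer(d, d.conj()) @ A            # two-point migrated data
lam, V = np.linalg.eigh(X)
v_top = V[:, -1]                                      # unit-norm top eigenvector

# Compare against many random unit-norm candidates.
trials = rng.standard_normal((1000, n_pix)) + 1j * rng.standard_normal((1000, n_pix))
trials /= np.linalg.norm(trials, axis=1, keepdims=True)
best_random = max(objective(r) for r in trials)

print(objective(v_top) >= best_random, np.isclose(objective(v_top), lam[-1]))
```

The objective evaluated at the top eigenvector equals the largest eigenvalue, no random unit vector exceeds it, and multiplying the eigenvector by a global phase leaves the objective unchanged.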

3.6 Performance of the rank-1 image

In Sections 4 and 5 we compare through simulations the performance of the two-point and single-point migration schemes for plane images, that is, images with a fixed coordinate (height), so that the image coordinate . We show that as the synthetic aperture increases, the resolution of the rank-1 image tends to improve compared to the usual single point migration image. We explain this by analyzing the stationary points of the interference pattern , in Appendix B.

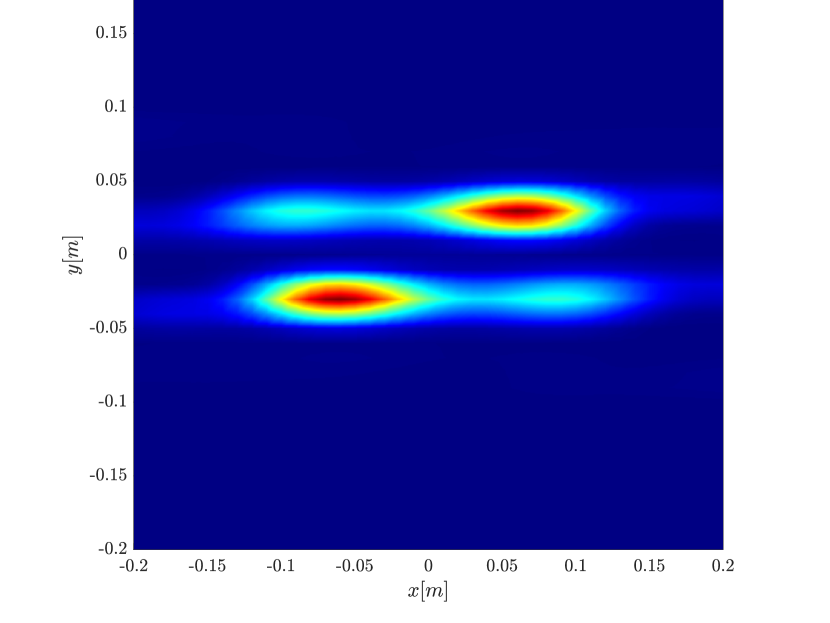

The main result in this appendix is that the synthetic aperture induces an anisotropy in the resolution of in the space . For a small synthetic aperture, the width of the stationary point is approximately in all directions, where is the wavelength of the carrier frequency, is the height of the target (the average distance from the receivers), and is the diameter of the imaging array spanned by the receivers. This is consistent with the resolution analysis in [8], where it was evaluated for a relatively short synthetic aperture, so that the resolution is dominated by the size of the array of receivers.

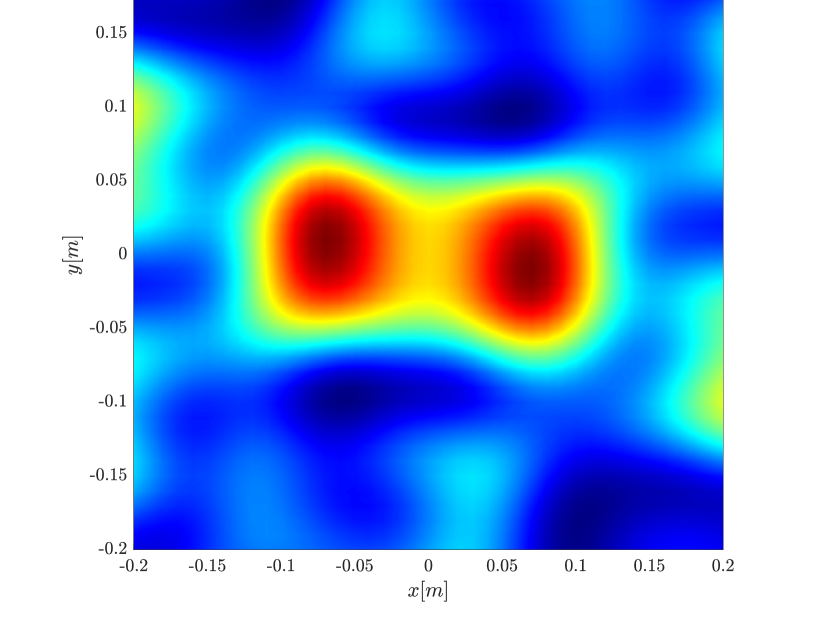

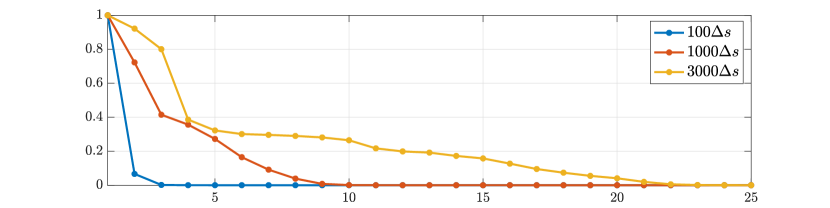

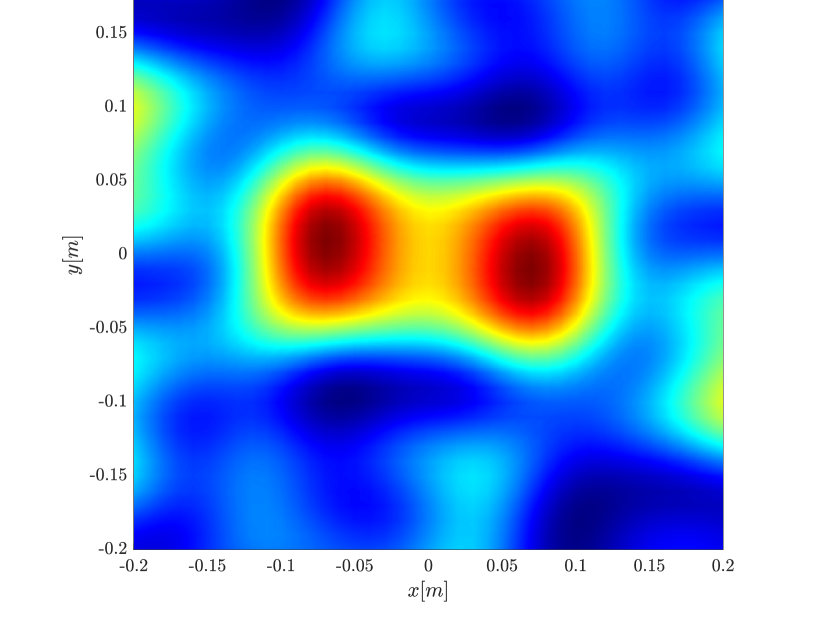

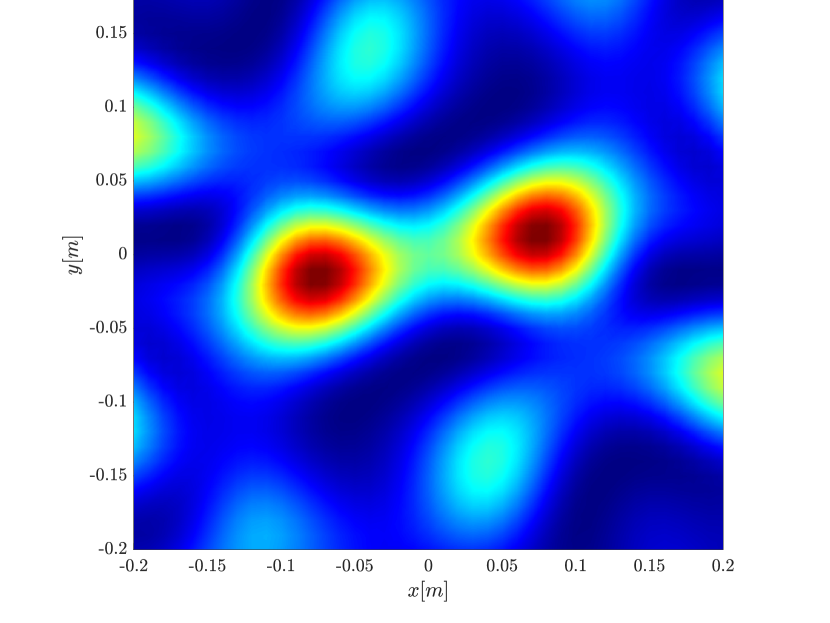

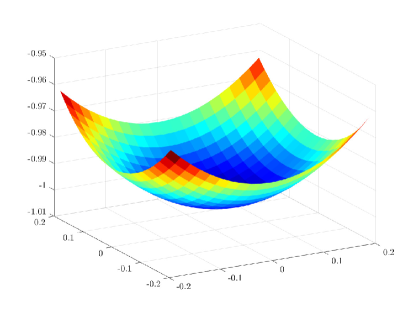

However, as the synthetic aperture grows larger and becomes comparable in size to the physical aperture, the anisotropy becomes significant. As a result, the stationary points have different widths in different directions. They exhibit the narrowest spot size in the direction , which is approximately , with the size of the synthetic aperture. The width is unchanged in , which is the direction corresponding to the diagonal/single point migration (2.9). This is again consistent with the resolution analysis of [8], which suggests that the resolution of planar images is independent of the synthetic aperture size. Anisotropic localized kernels have eigenvectors whose width is smaller than the maximum width, as we show in Appendix C for several one dimensional cases. In the case of the two-point interference patterns the width tends to the harmonic mean, which greatly improves on the maximal width. Thus, the top eigenvector can provide an image with better resolution. This is indeed the case, as illustrated in Figure 3. In numerical simulations, shown in Section 4, the resolution is comparable to the linear KM image.

In Section 4 we present extensive numerical simulations that confirm the superior performance of the rank-1 image with an increasing synthetic aperture size. Then, in Section 5 we further investigate the performance by considering the case of a single target. The numerical results are in accordance with the analysis of Appendix B and Appendix C.

4 Numerical simulations

In this section we compare with numerical simulations the performance of the single point migration, the rank-1 image and the linear Kirchhoff migration. The object to be imaged is a cluster composed of either two or four point scatterers. The scatterers are at km height and they all move with the same linear speed. In the inertial frame of reference the scatterers are in the plane. The distance between the scatterers is cm in the direction, and cm in the direction. We show reconstructions in the plane at a fixed height equal to the true height of the scatterers.

We compute imaging results for different synthetic aperture sizes corresponding to 100 pulses (1.5 seconds), 1000 pulses (15 seconds) and 3000 pulses (45 seconds). The calculations were performed in the frequency domain using as sampling frequencies , with , GHz and MHz corresponding to half the bandwidth of the pulse .

In the following figures we demonstrate the improvement in resolution and target separation when using the rank-1 image, compared to the classical single-point migration. The observed performance is further investigated in Section 5, and given an analytical explanation in Appendix B.

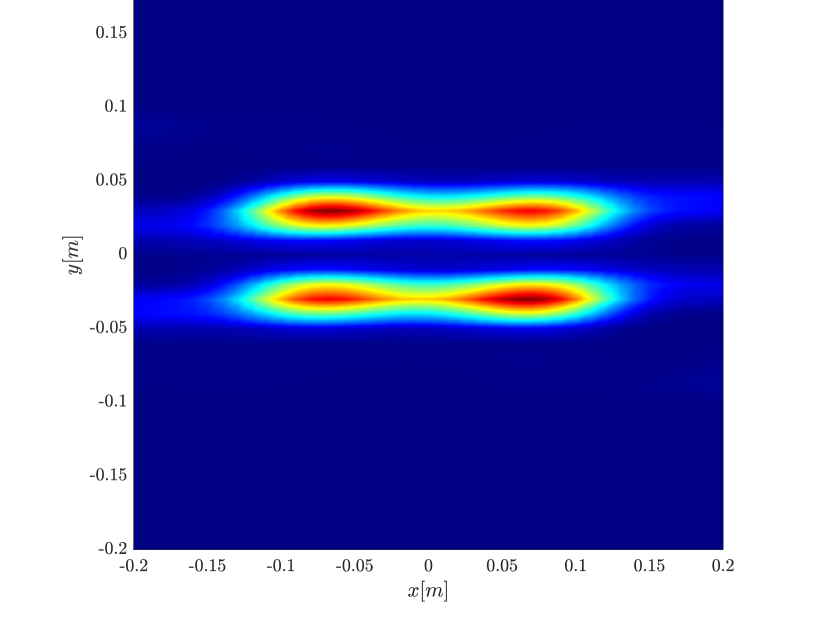

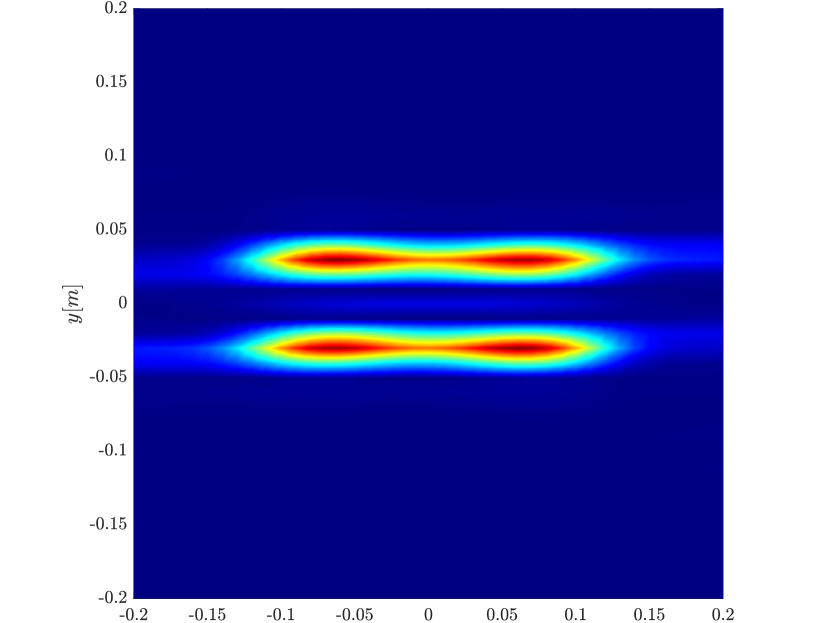

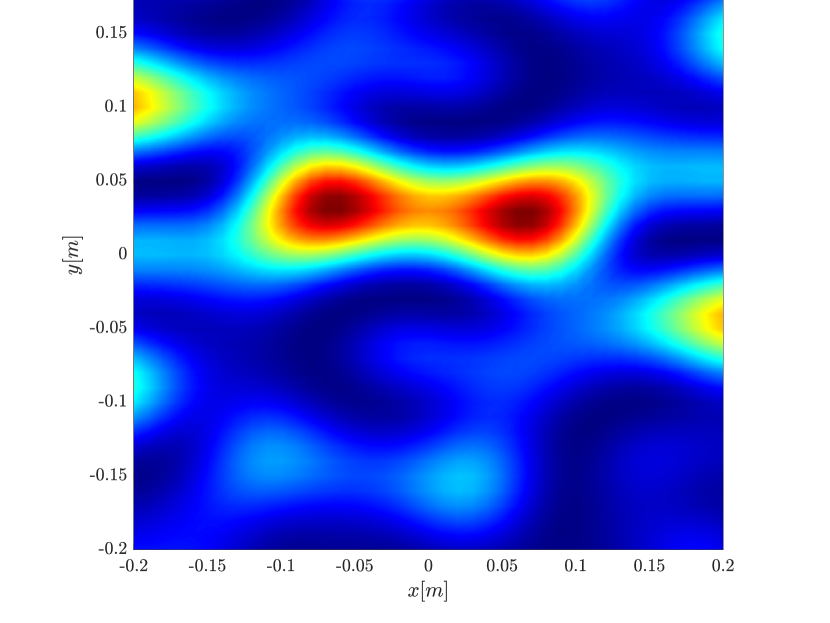

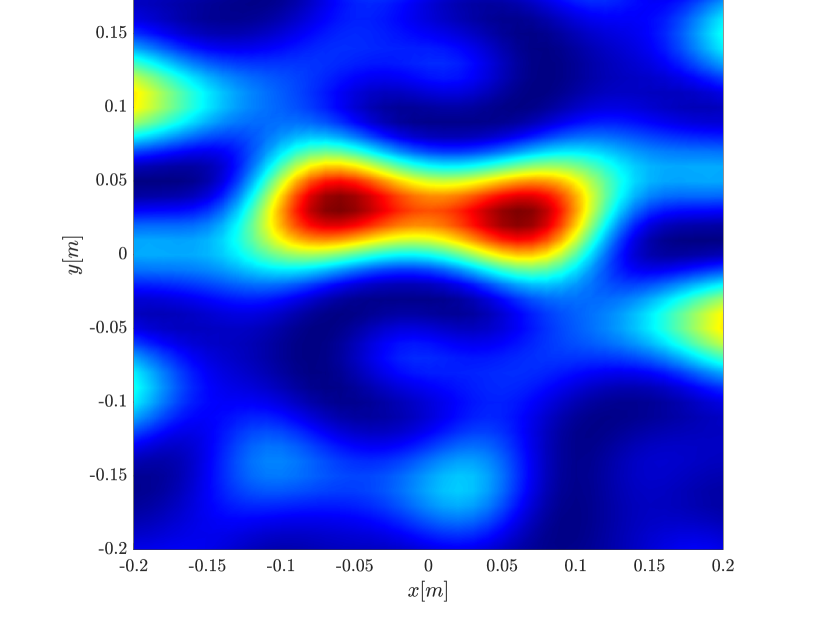

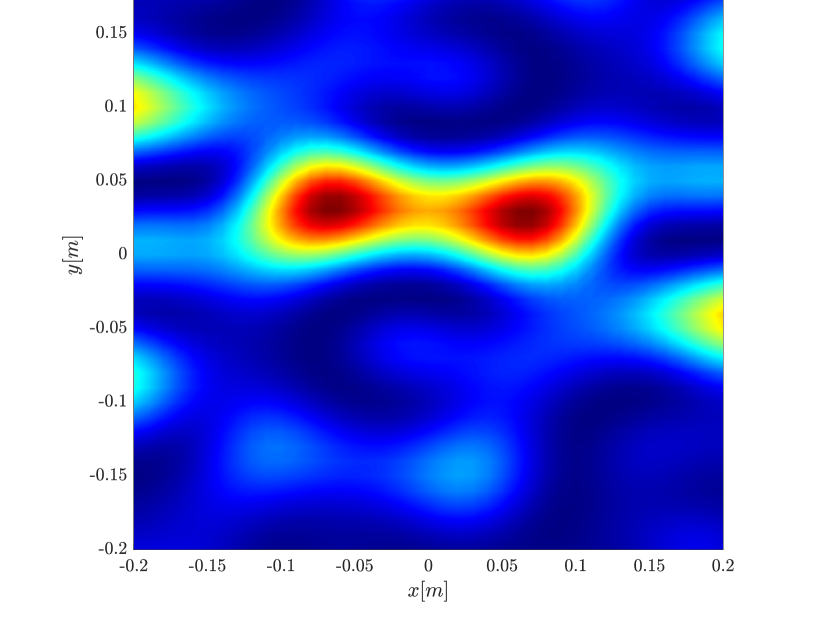

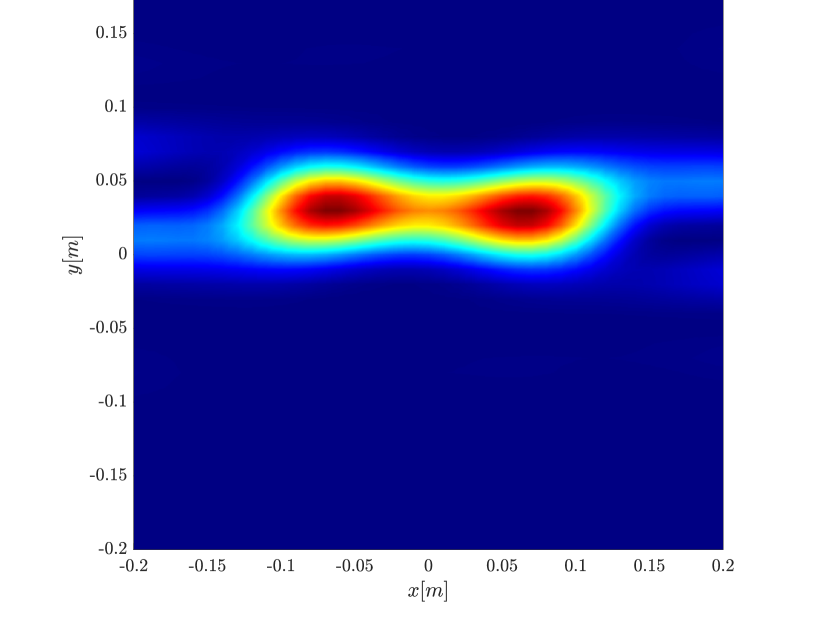

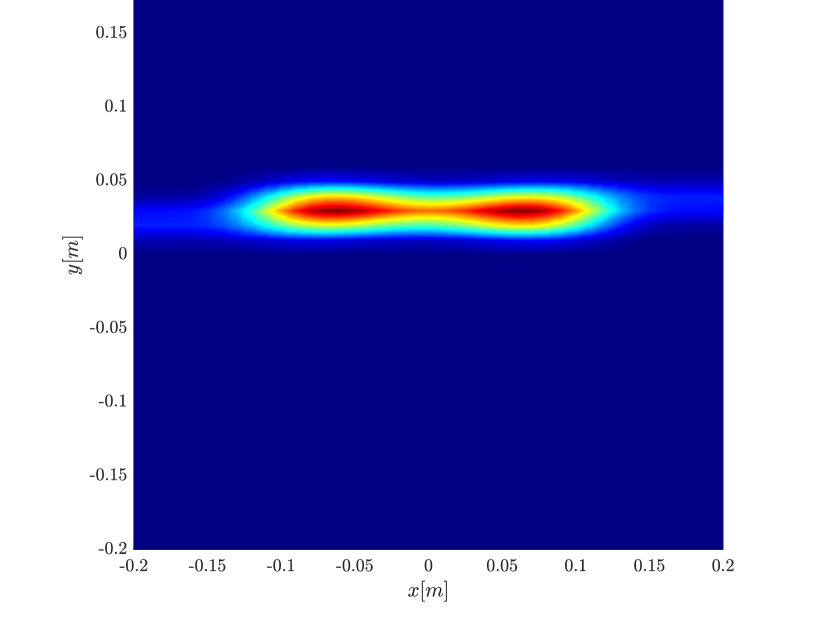

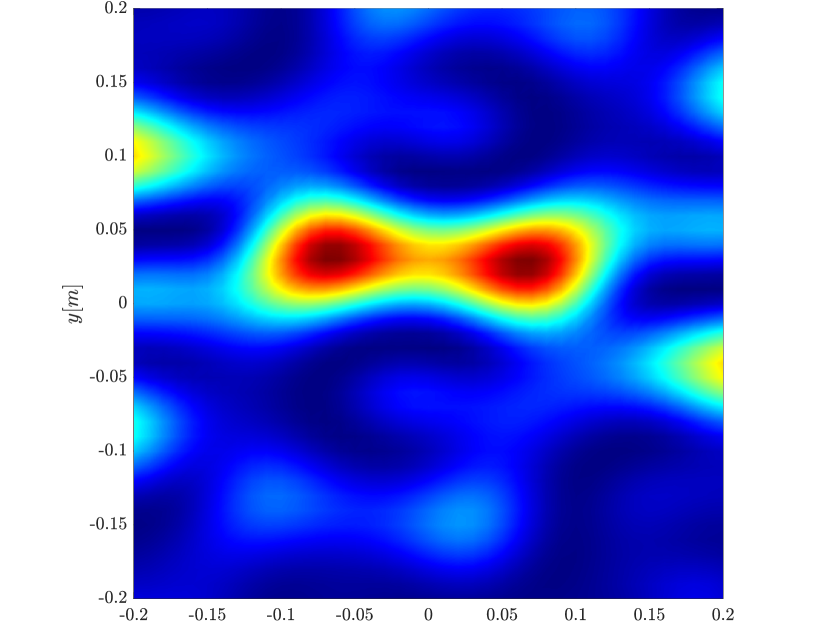

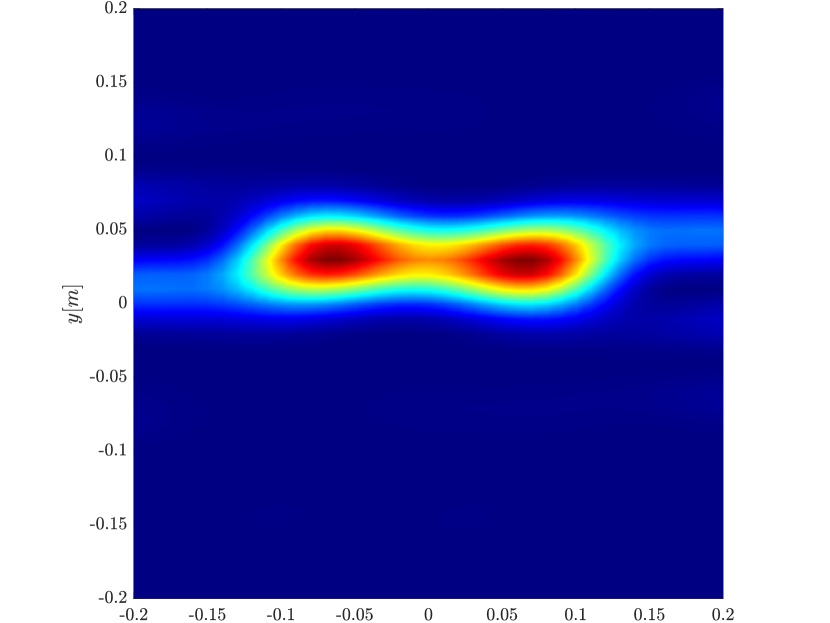

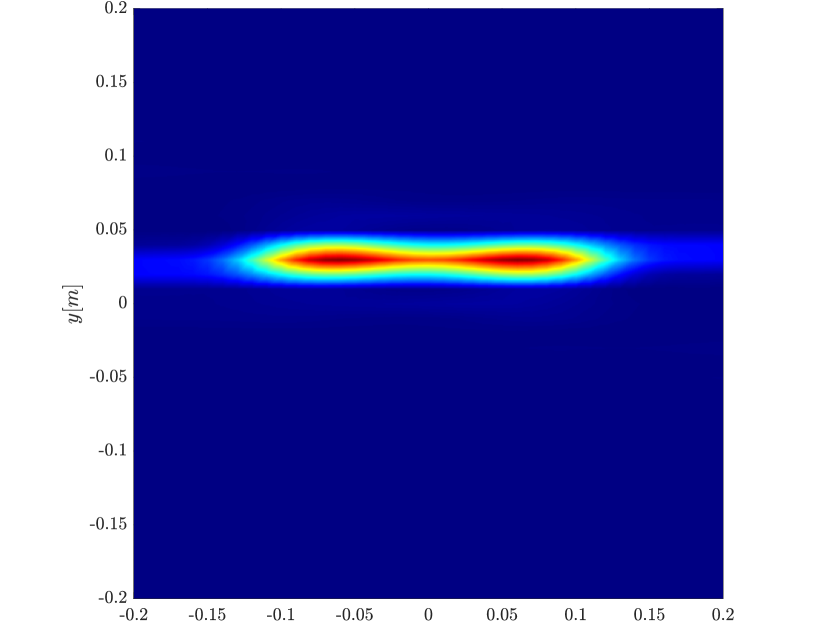

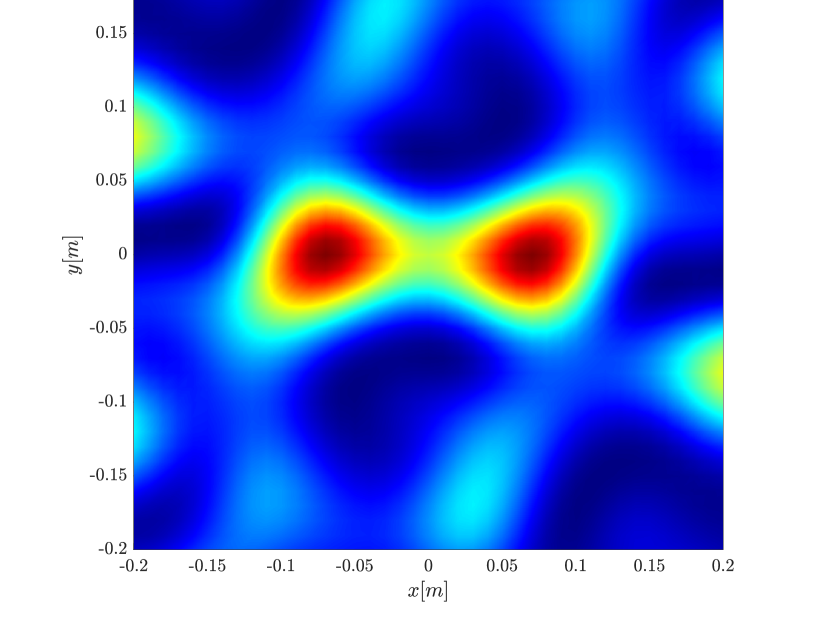

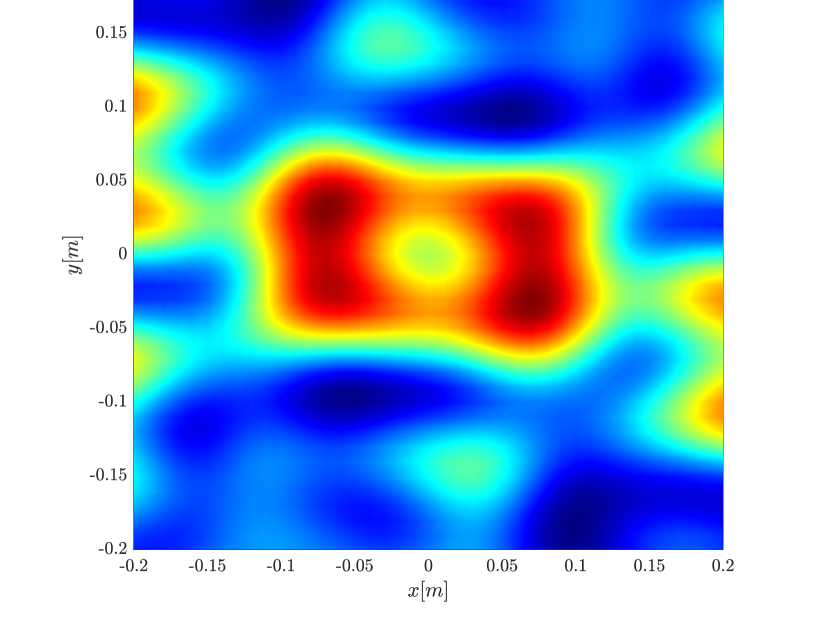

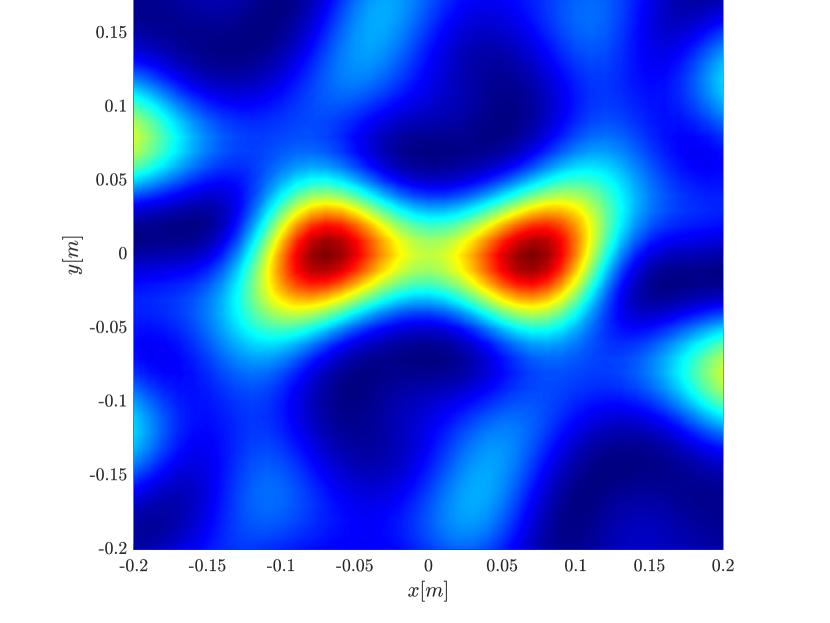

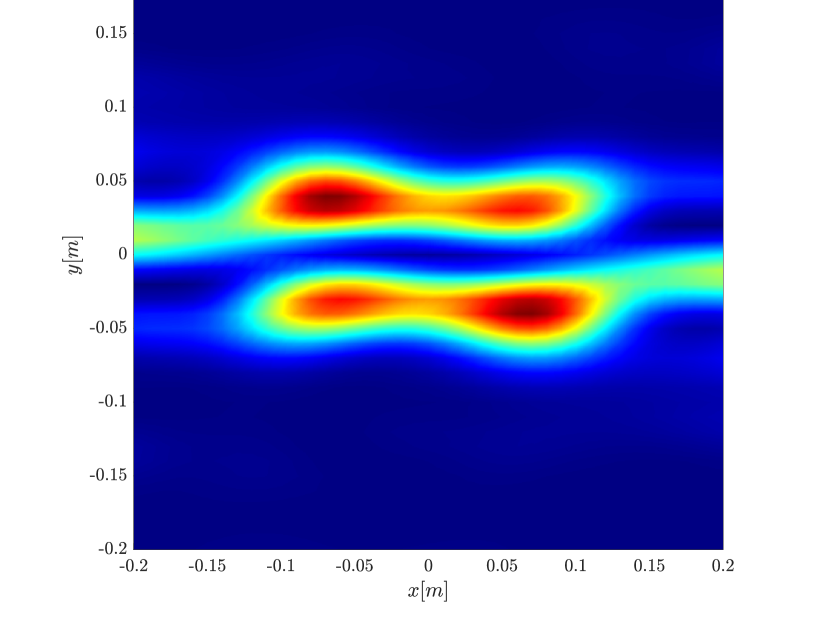

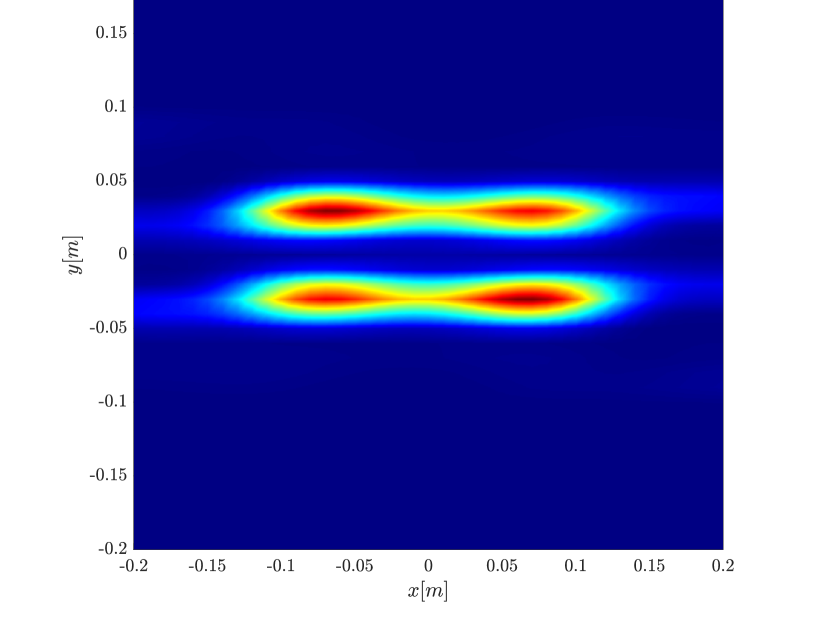

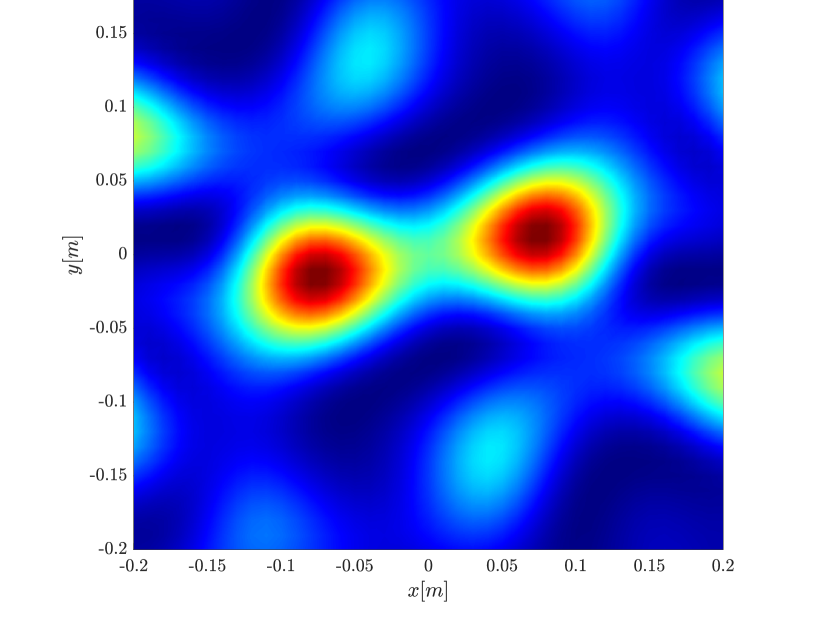

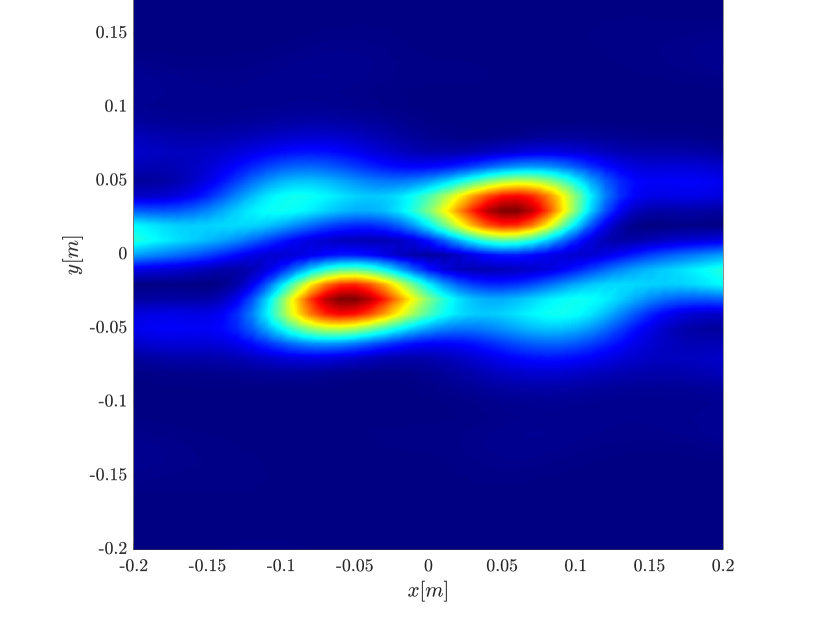

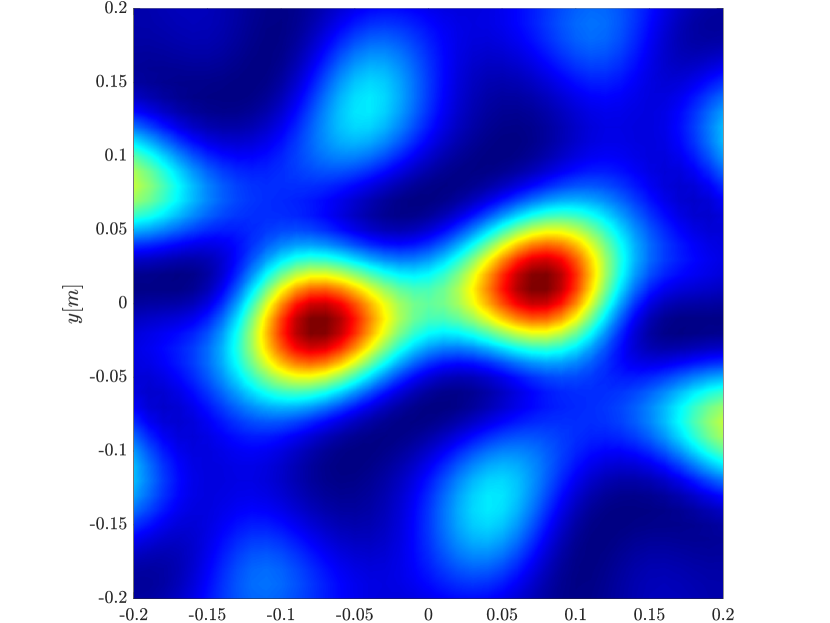

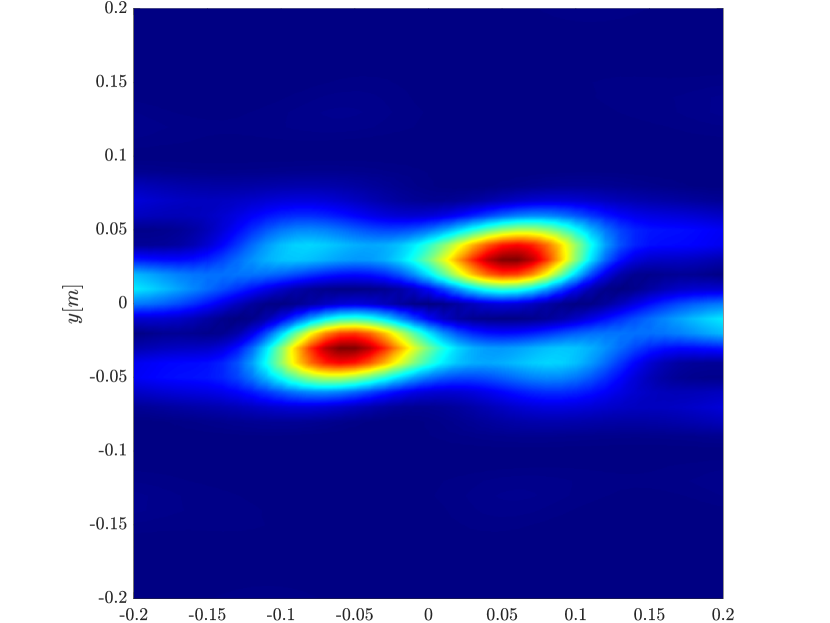

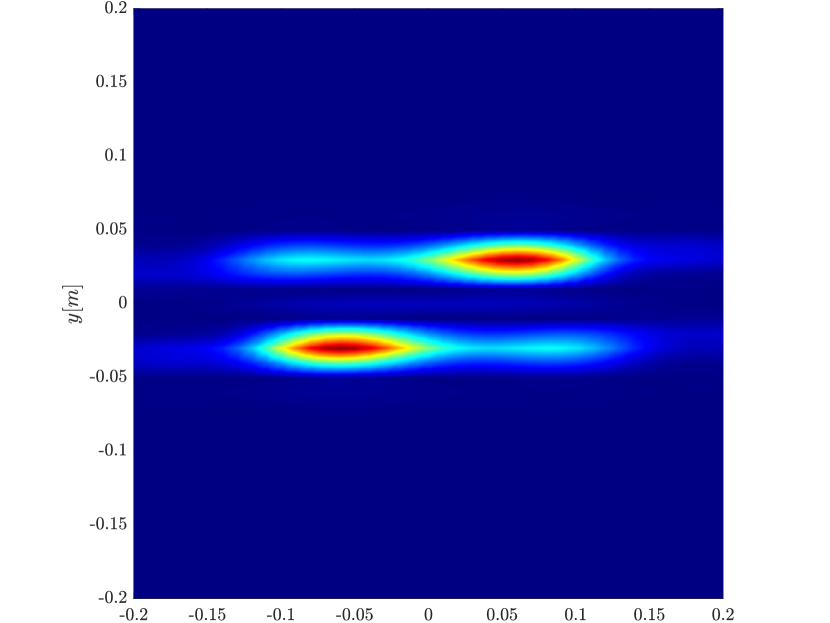

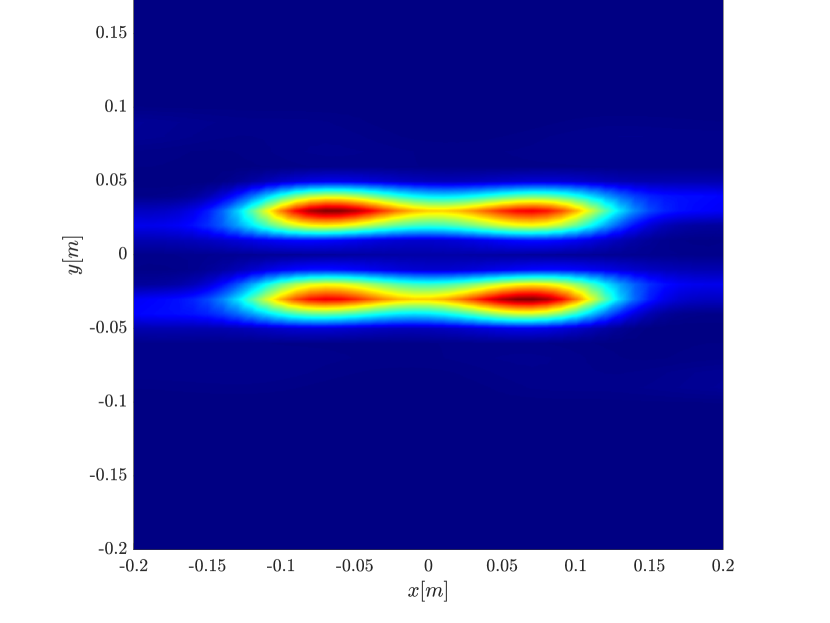

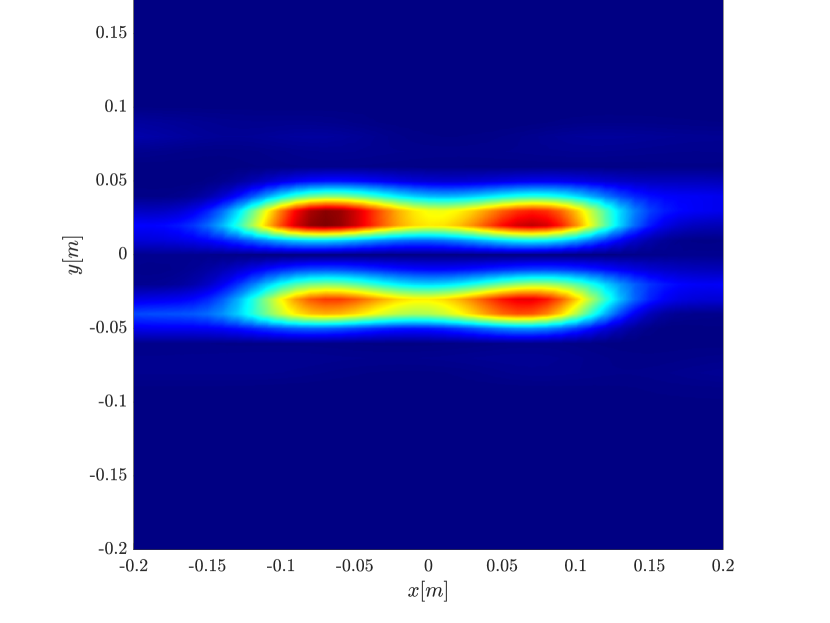

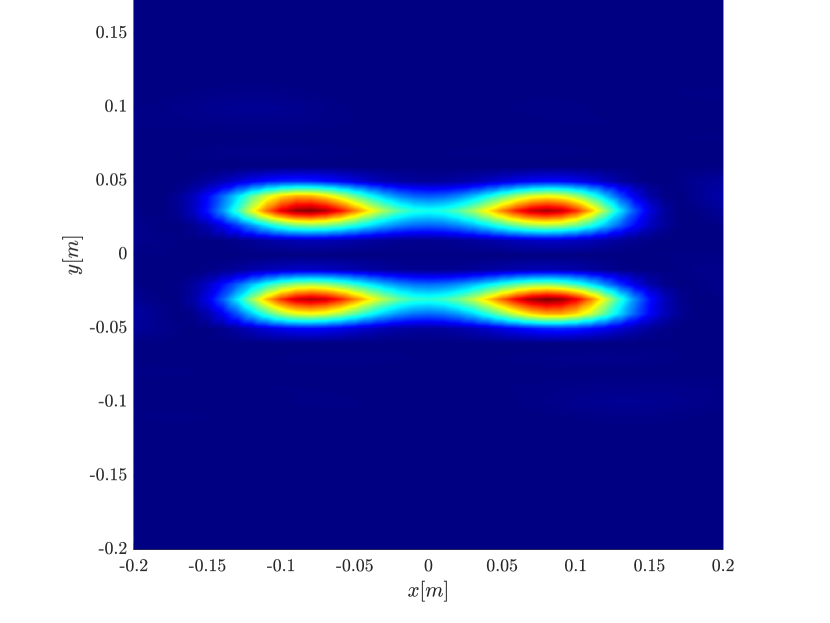

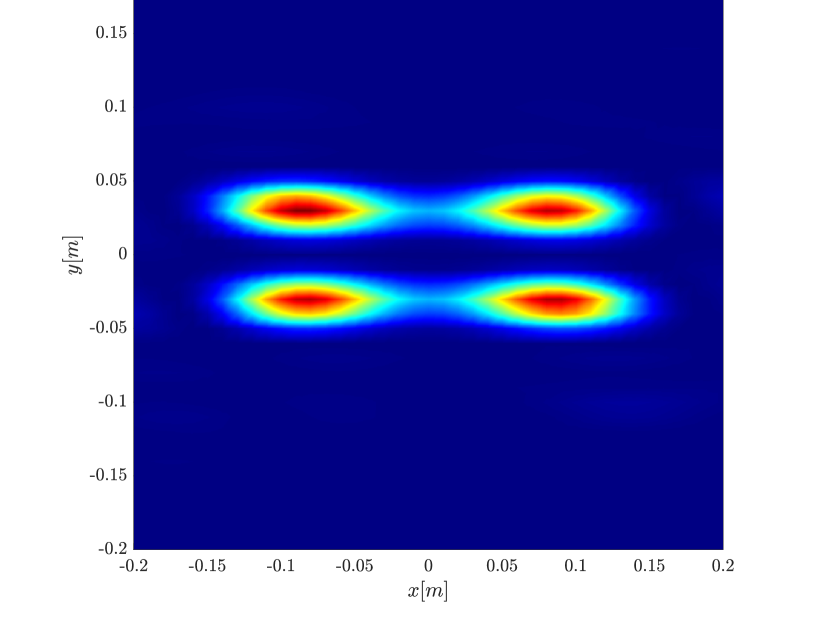

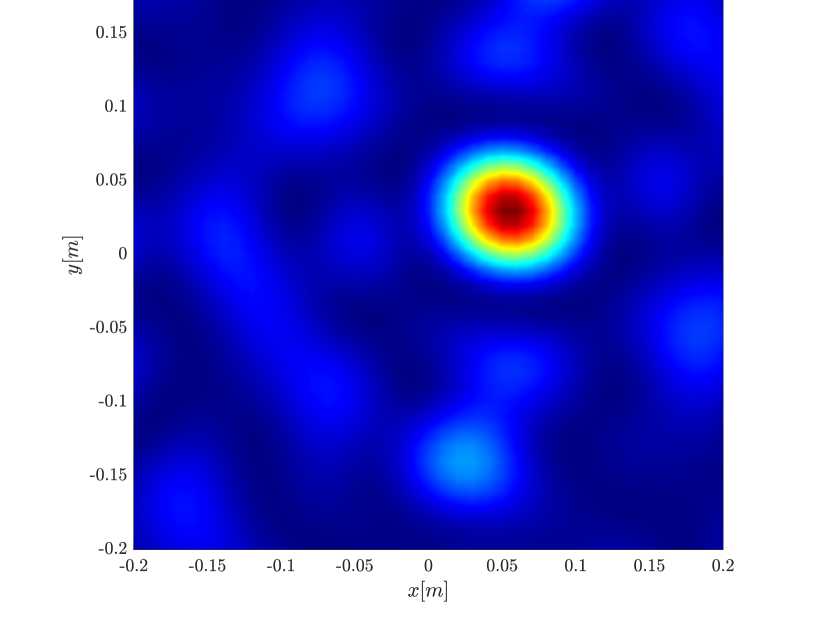

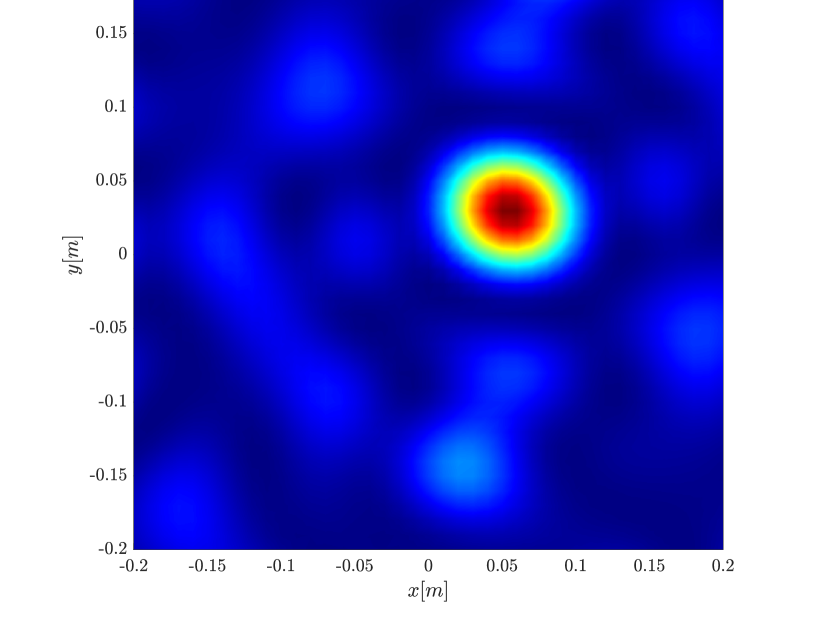

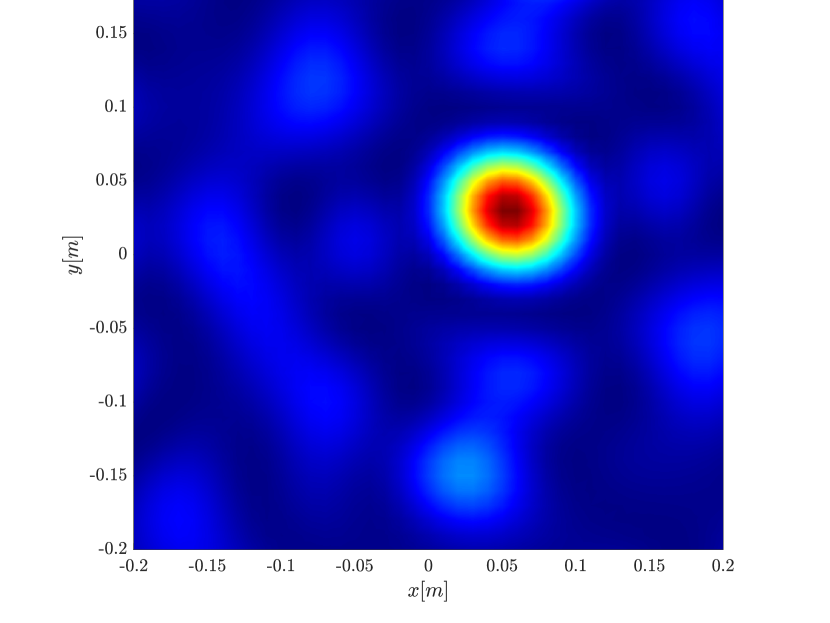

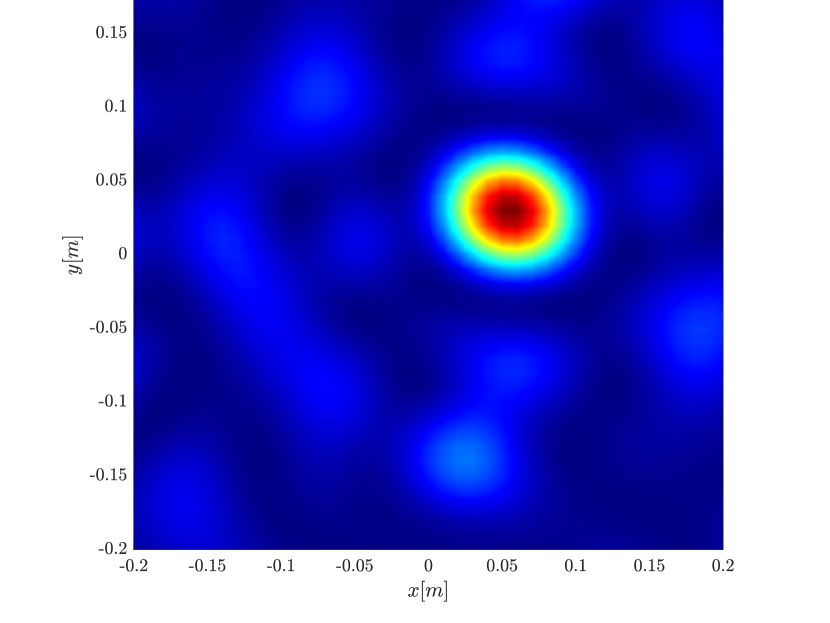

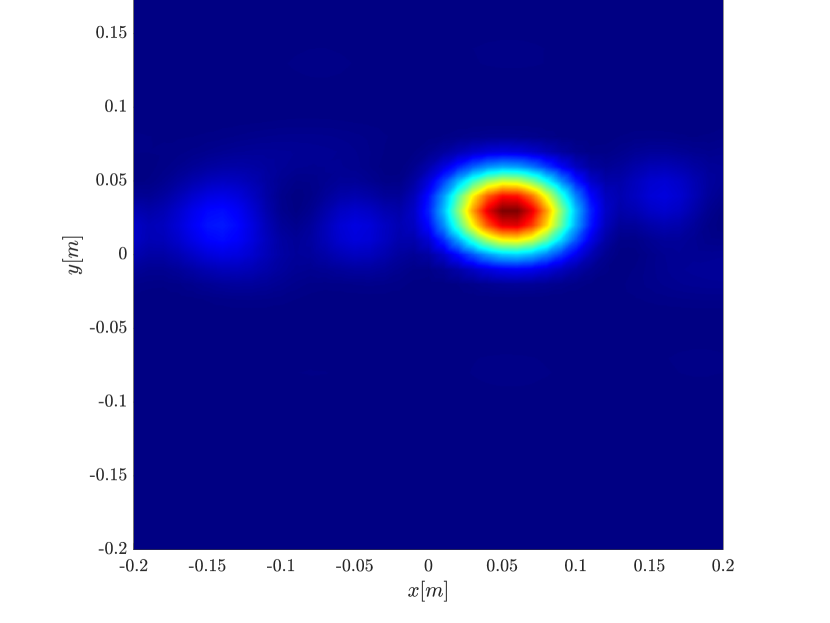

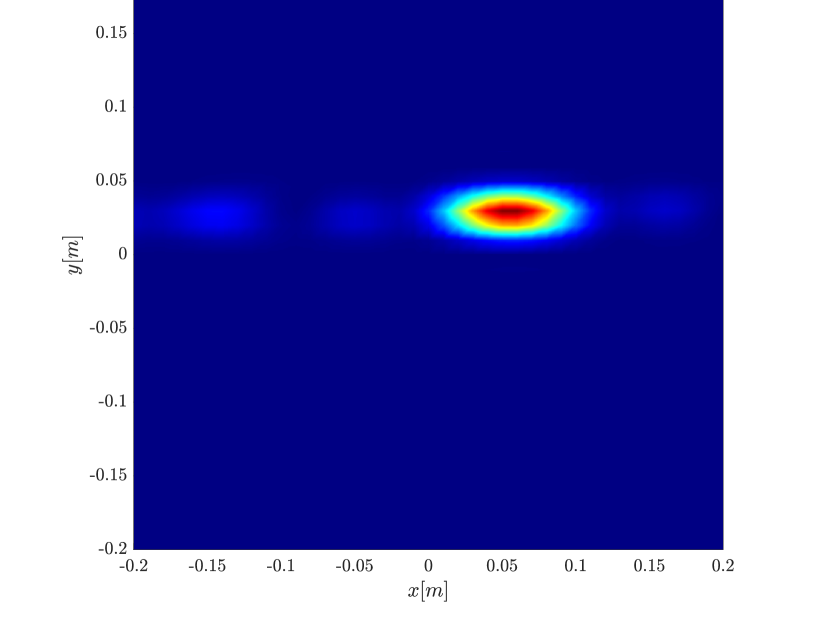

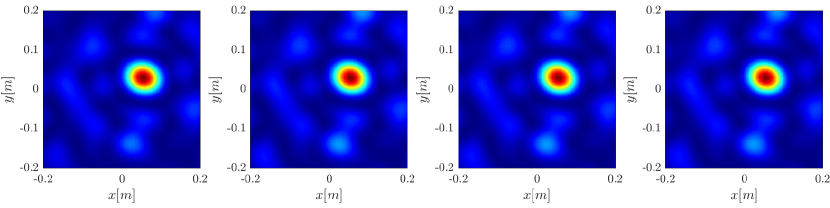

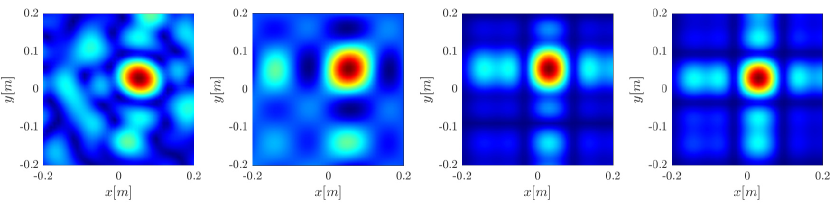

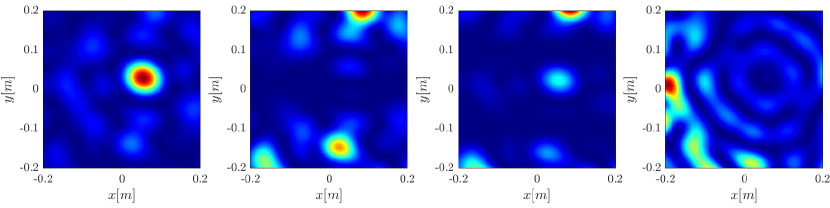

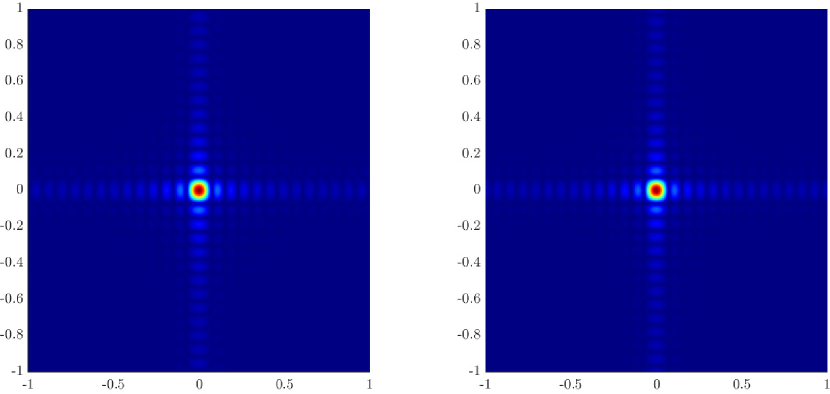

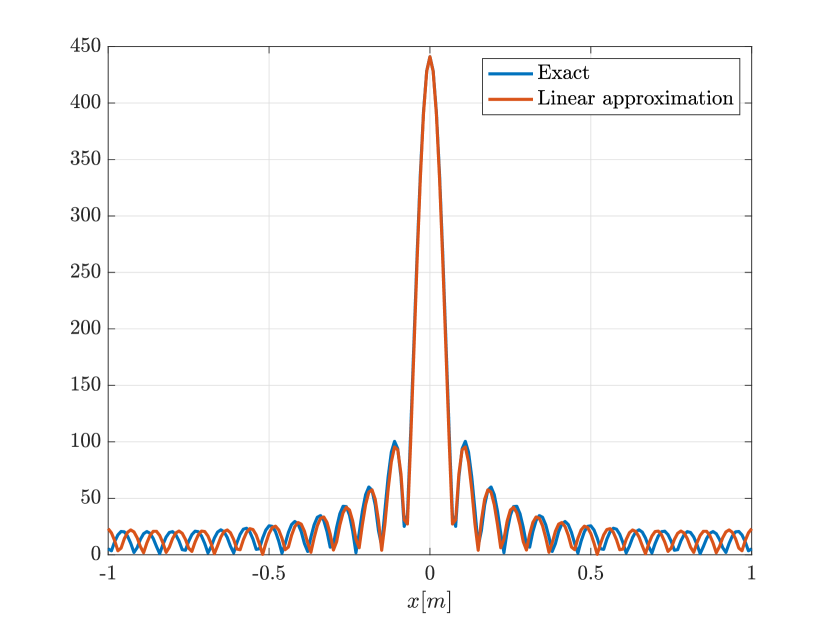

We first consider the two scatterer cluster illustrated in Figure 4. The scatterers are located at with respect to the center of the image window. We observe that as the synthetic aperture increases there is a dramatic improvement in the resolution attained by the rank-1 image compared to the single point one, whose resolution does not significantly change as the synthetic aperture increases. Note that the resolution of the rank-1 image is comparable to that of the linear Kirchhoff migration.

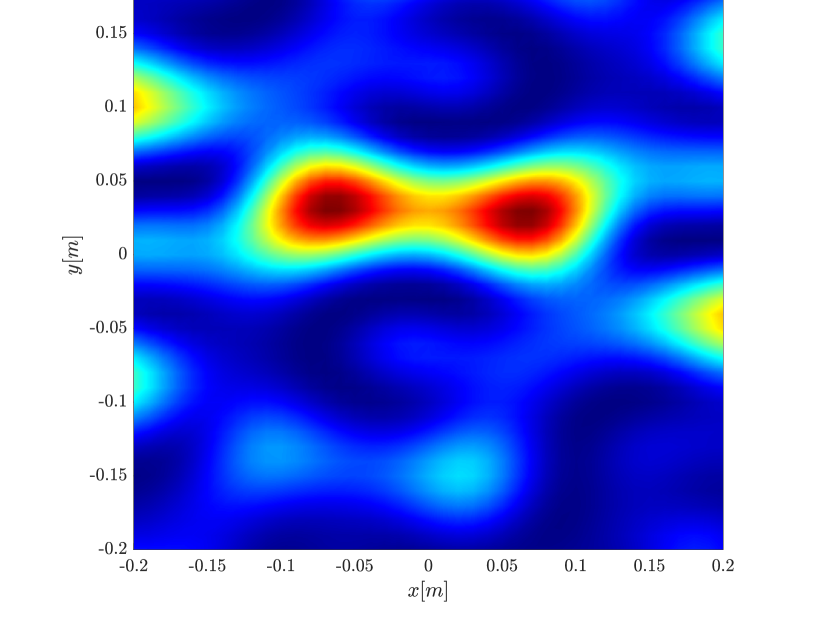

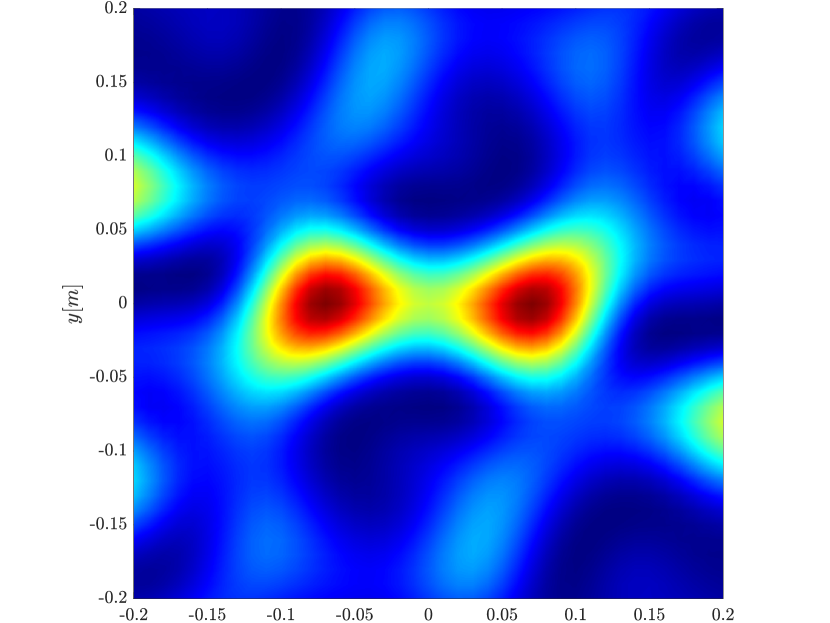

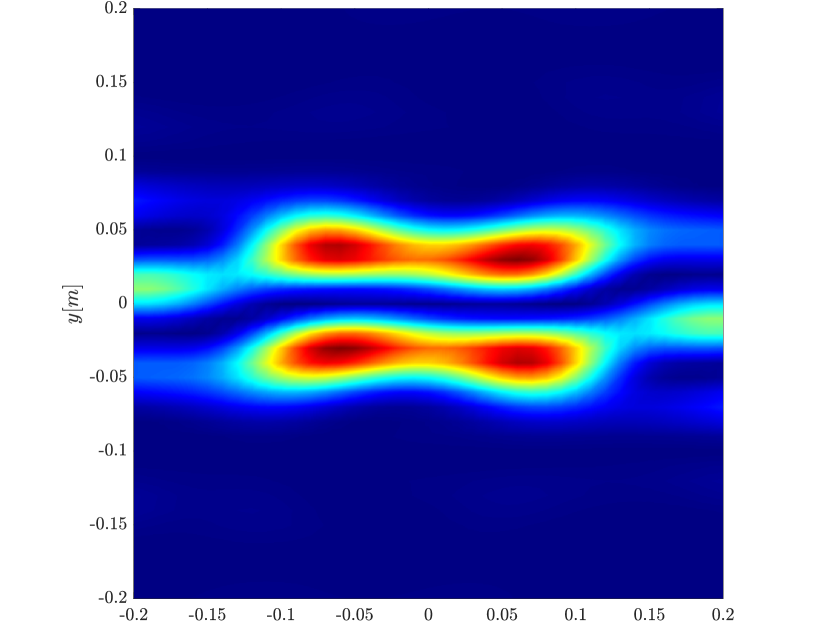

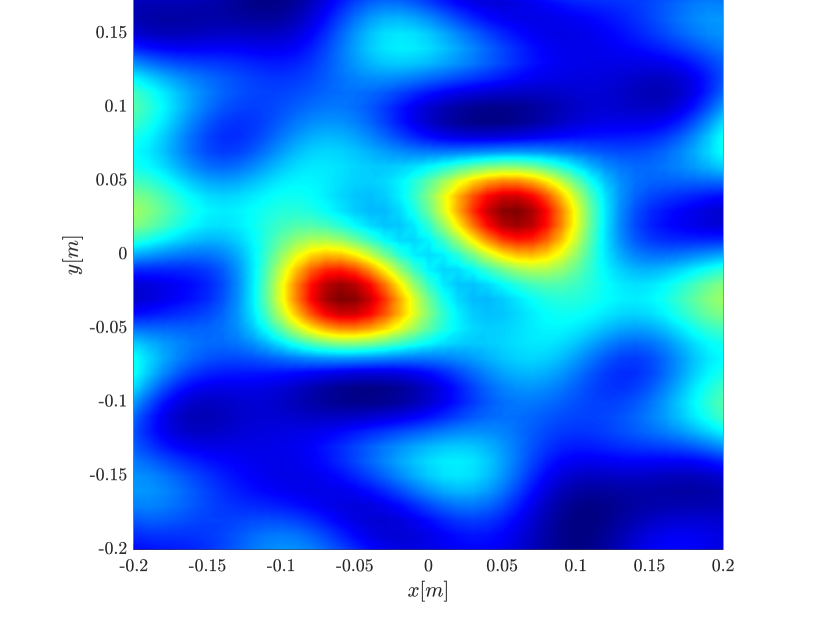

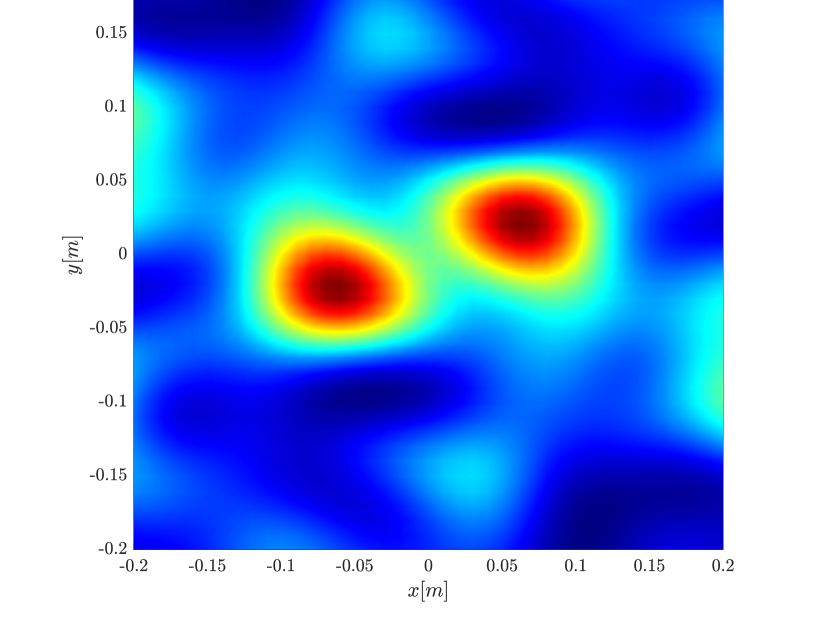

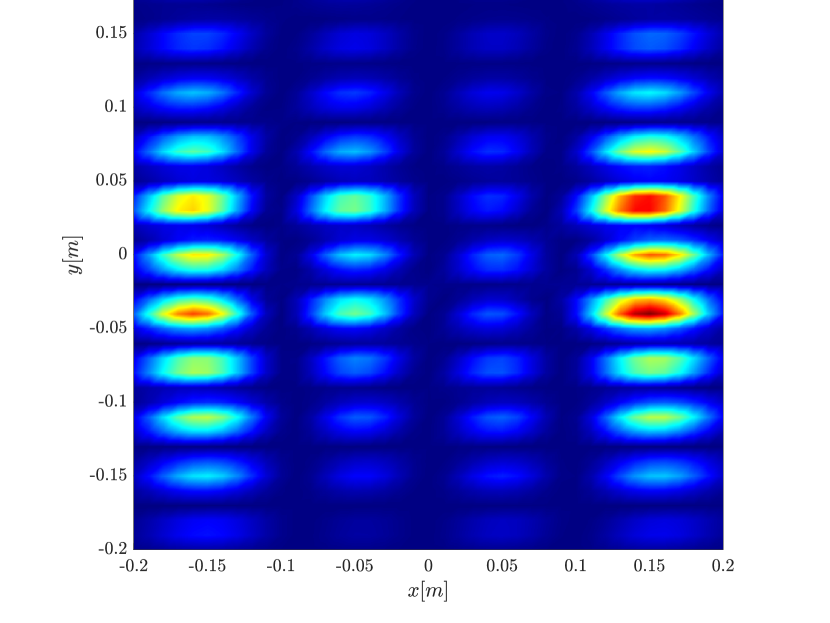

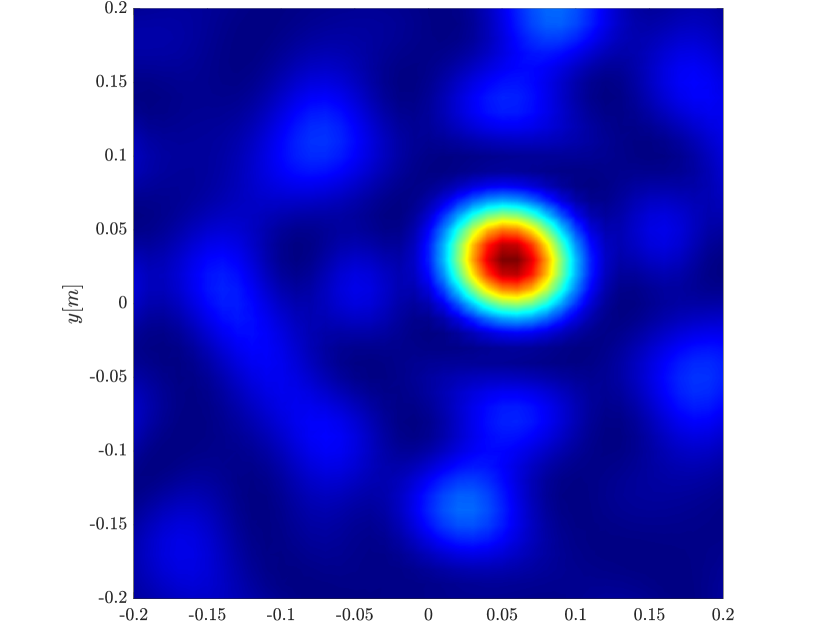

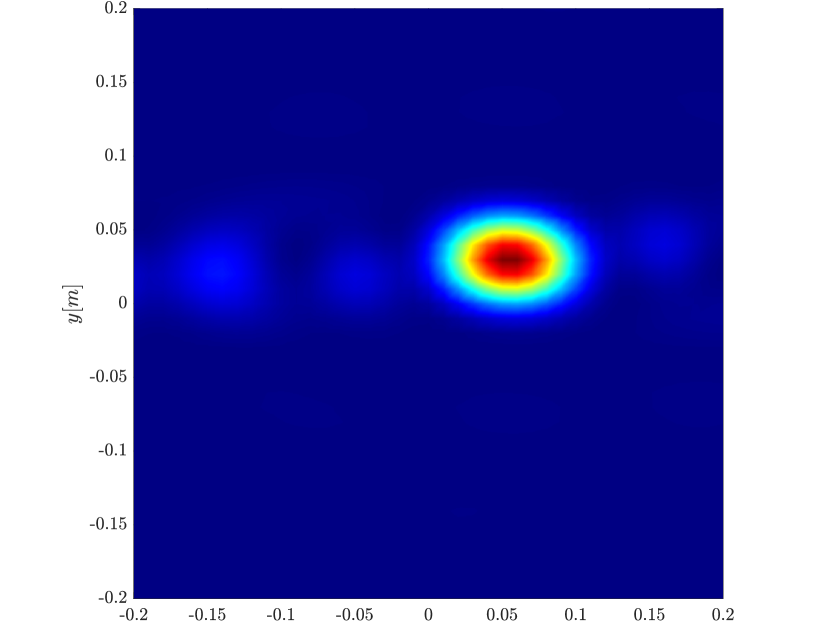

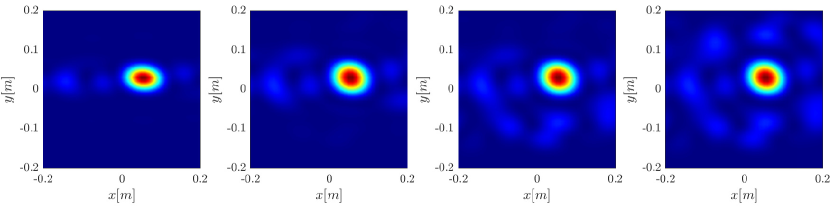

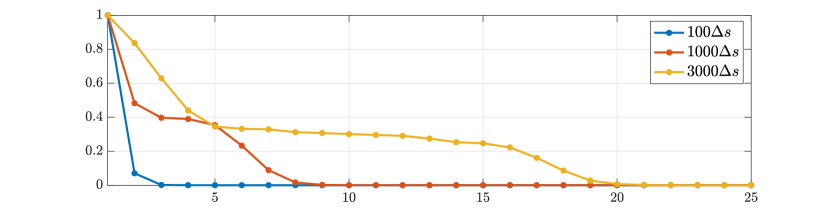

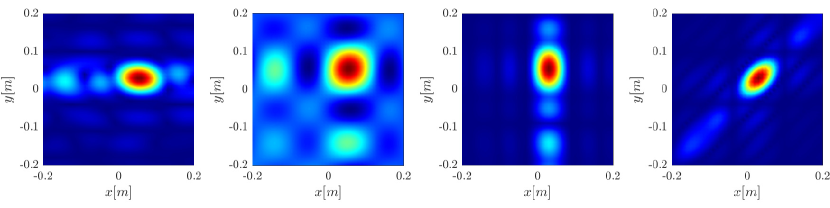

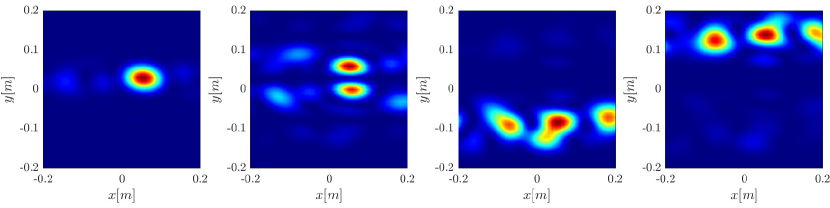

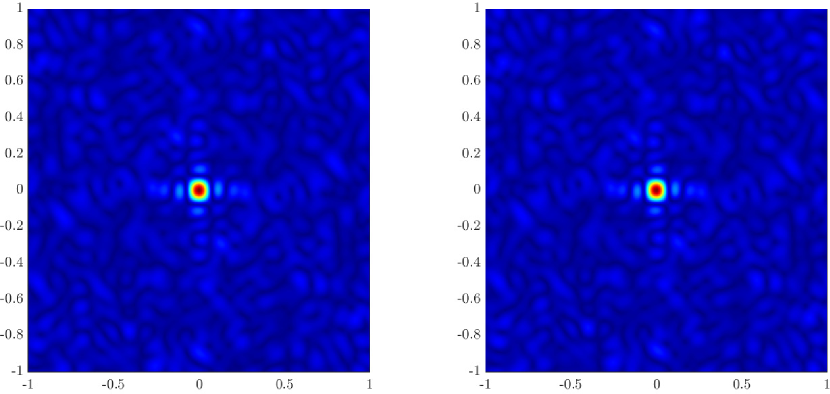

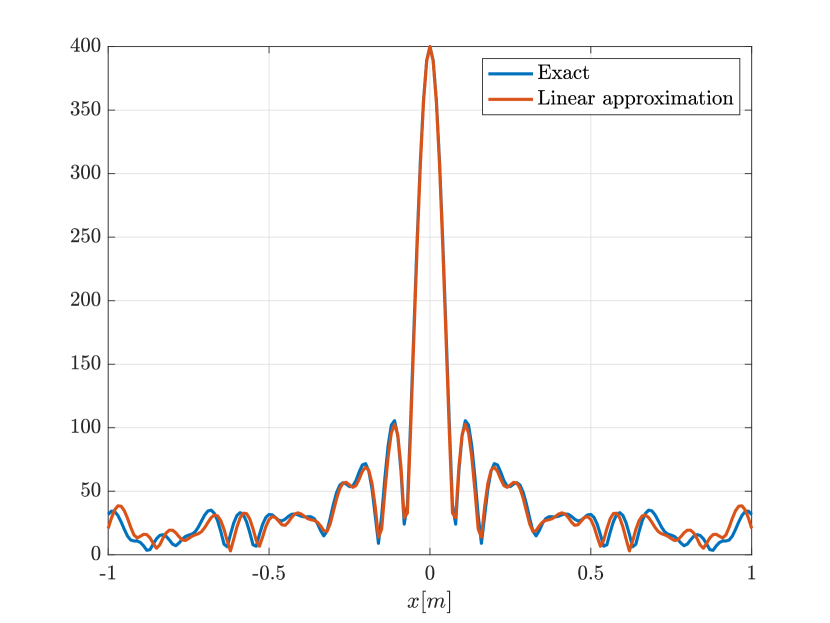

Next we consider the four scatterer cluster illustrated in Figure 5. The scatterers are located at with respect to the center of the image window. Again, the resolution of the rank-1 image improves significantly as the synthetic aperture increases while this is not the case for the single point migration. For all the synthetic apertures considered, the single point migration cannot resolve the scatterers in the -direction and provides a blurry image of the cluster of scatterers. As in Figure 4, the resolution of the rank-1 image is comparable to that of the linear Kirchhoff migration. The top 25 eigenvalues of for the different synthetic aperture sizes are shown in Figure 3-(d). We observe that, as the synthetic aperture size increases, so does the number of significant eigenvalues. That is due to the anisotropy in the resolution of the interference pattern . As mentioned in Section 3.6, in the direction the resolution is while it is in the direction . Therefore, as the size of the synthetic aperture increases () the anisotropy becomes more significant. As a consequence, the rank of increases and we expect a differentiation between the single-point migration and the rank-1 image. As we explain in Appendix C, the rank-1 image provides a resolution which is in-between and .

In Figure 6 we consider again the four scatterer cluster but now the scatterers have different reflectivity. Two of the scatterers are weaker, having % of the reflectivity of the other two. This translates to a ratio of for . In this case the single point migration reconstructs only the strong scatterers, and those with a resolution that is significantly inferior to that of the rank-1 image. As before, the rank-1 image and linear KM provide similar results.

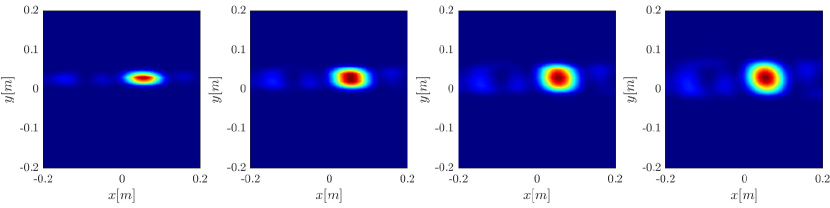

To reduce the computational complexity of the rank-1 image, we can downsample the two-point migration with respect to one of its dimensions. In this case instead of computing the first eigenvector of the interference pattern , we compute its first singular vector. This downsampling does not affect the resolution of the rank-1 image. This is illustrated in Figure 7 where we see that the image retains its resolution even if one of the dimensions is downsampled by a factor of 10 (keeping 10% of the columns chosen at random), reducing the computational complexity by the same factor.
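The replacement of the eigenvector by a singular vector can be sketched on a toy matrix. The example below is only an illustration under stated assumptions: the matrix `M` is a synthetic, nearly rank-one Hermitian stand-in for the two-point migration (not the interference pattern of the simulations), and the names `M`, `v`, and `cols` are hypothetical. It keeps 10% of the columns chosen at random and compares the top left singular vector of the rectangular matrix with the top eigenvector of the full one.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200                                   # number of search points (hypothetical)
idx = np.arange(n)

# Toy "image": two bumps, normalized to unit norm.
v = np.exp(-(idx - 60.0) ** 2 / 200.0) + np.exp(-(idx - 140.0) ** 2 / 200.0)
v = v / np.linalg.norm(v)

# Nearly rank-one Hermitian matrix standing in for the two-point migration.
g = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
noise = 1e-4 * (g + g.conj().T) / 2
M = np.outer(v, v.conj()) + noise

# Full computation: top eigenvector of the n x n Hermitian matrix.
eigvals, eigvecs = np.linalg.eigh(M)      # eigenvalues in ascending order
top_eigvec = eigvecs[:, -1]

# Downsampled computation: keep 10% of the columns, chosen at random,
# and take the top left singular vector of the n x (n/10) matrix.
cols = rng.choice(n, size=n // 10, replace=False)
M_down = M[:, cols]
U, s, Vh = np.linalg.svd(M_down, full_matrices=False)
top_singvec = U[:, 0]

# Both vectors are defined up to a phase, so compare |<u, v>|.
overlap = abs(np.vdot(top_eigvec, top_singvec))
print(f"overlap between the two rank-1 images: {overlap:.6f}")
```

In this toy setting the two vectors agree up to a phase because the retained columns still span the dominant component. How much downsampling a given interference pattern tolerates has to be checked case by case.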

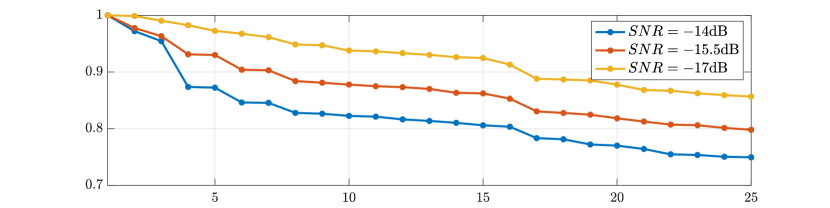

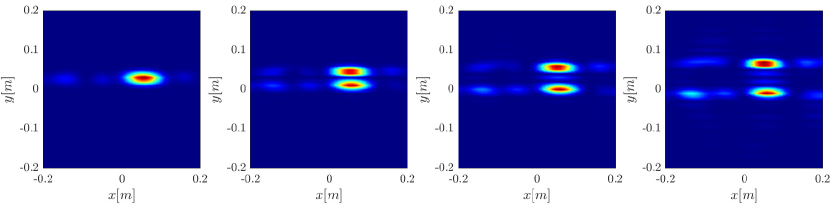

To investigate the robustness of the rank-1 image to noise we corrupt the data corresponding to Figure 5 with additive white Gaussian noise with varying noise levels. We see in Figure 8 that the rank-1 image remains stable down to a low signal to noise ratio (SNR) of dB. Below that point, as Figure 9 illustrates, the spectrum of the noise distorts that of the data.
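A noise test of this kind can be set up as in the following sketch. It assumes that the SNR is defined as the ratio of mean signal power to mean noise power, expressed in dB, which may differ from the convention used for Figures 8 and 9. The helper `add_awgn`, the synthetic record `clean`, and the value of -10 dB are hypothetical and chosen only for illustration.

```python
import numpy as np

def add_awgn(data, snr_db, rng):
    """Return data plus complex white Gaussian noise at the requested SNR (dB)."""
    signal_power = np.mean(np.abs(data) ** 2)
    noise_power = signal_power / 10.0 ** (snr_db / 10.0)
    # Split the noise power equally between real and imaginary parts.
    sigma = np.sqrt(noise_power / 2.0)
    noise = sigma * (rng.standard_normal(data.shape)
                     + 1j * rng.standard_normal(data.shape))
    return data + noise

rng = np.random.default_rng(1)
t = np.linspace(0.0, 1.0, 20000)
clean = np.exp(2j * np.pi * 50.0 * t)     # hypothetical noise-free record
noisy = add_awgn(clean, snr_db=-10.0, rng=rng)

# Empirical SNR of the corrupted record, for comparison with the request.
measured = 10.0 * np.log10(np.mean(np.abs(clean) ** 2)
                           / np.mean(np.abs(noisy - clean) ** 2))
print(f"requested SNR: -10.0 dB, measured SNR: {measured:.2f} dB")
```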

5 Properties of the rank-1 imaging function

The results presented in Section 4 show that the performance of the rank-1 image is superior to the single point migration and comparable to the linear Kirchhoff migration. In order to better understand these results we consider in this section a simplified model problem with a single point scatterer. The results for this simplified case allow us to explain the improved performance of the rank-1 image and are consistent with our theoretical analysis in Appendix B.

We first repeat the results of Figure 4 for a single scatterer, illustrated in Figure 10. We observe the same behavior, namely the resolution increases with the synthetic aperture size for the rank-1 image, greatly improving on the classical migration when the synthetic aperture is large enough.

In order to further investigate the cause of the improved resolution we use (3.29), that is, the single point migration is the sum of all the squared eigenvectors of , weighted by their eigenvalues. We plot in Figure 11 the cumulative sum , for for the different inverse aperture sizes. The results motivate us to look into the eigenvalues of illustrated in Figure 12. We indeed see that as the inverse aperture increases, has more significant eigenvalues.
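The decomposition of the diagonal into eigenvector contributions holds for any Hermitian matrix and can be checked numerically. The sketch below uses a random positive semidefinite matrix `M` as a hypothetical stand-in for the interference pattern, and forms the partial sums of the eigenvalue-weighted squared eigenvectors, from the rank-1 term up to the full diagonal.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 64
A = rng.standard_normal((n, 8)) + 1j * rng.standard_normal((n, 8))
M = A @ A.conj().T                        # Hermitian, positive semidefinite

lam, V = np.linalg.eigh(M)                # ascending eigenvalues
lam, V = lam[::-1], V[:, ::-1]            # reorder to descending

# Single point migration: the diagonal of the two-point matrix.
single_point = np.real(np.diag(M))

# Column k of `terms` holds lam_k * |v_k|^2; cumulative sums over k give
# the images obtained by keeping only the first K eigenvectors.
terms = lam[None, :] * np.abs(V) ** 2
partial = np.cumsum(terms, axis=1)

rank1_term = partial[:, 0]                # contribution of the top eigenvector
full_sum = partial[:, -1]                 # all eigenvectors included
print(np.max(np.abs(full_sum - single_point)))
```

The first column of `partial` is proportional to the squared modulus of the top eigenvector, while the last column reproduces the single point migration, so the intermediate columns interpolate between the two images.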

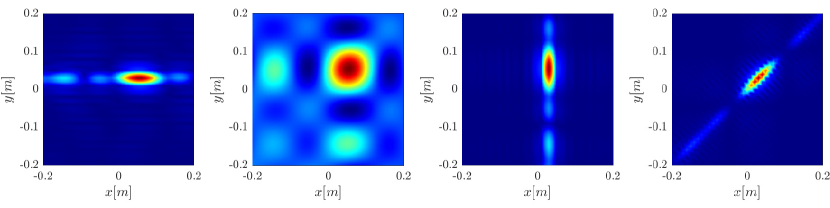

Stationary phase analysis of the two point migration function, carried out in Appendix B, suggests that the stationary points of are all the points , where is the collection of all scatterer locations. In the single scatterer case we would have only one stationary point. We analyze the behavior of around the stationary point, to see in greater detail the effect of the synthetic aperture. Since for a 2D imaging domain would be four dimensional, we look at all the possible planar cross sections of at the stationary point, 6 in total. Since is Hermitian, there are only 4 distinct cross sections (up to conjugation).
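The count of cross sections can be reproduced by direct enumeration. In the sketch below the four coordinates are labeled by hypothetical names: the search point (`y1` or `y2`) and the component index within the 2D image window. Hermitian symmetry is modeled as exchanging the two search points, which identifies a plane with its image under the exchange.

```python
from itertools import combinations

# Coordinates of the 4D pattern for a 2D image window: the two components
# of the first search point and the two components of the second.
axes = [("y1", 0), ("y1", 1), ("y2", 0), ("y2", 1)]

# A planar cross section is spanned by a pair of coordinate axes.
planes = list(combinations(axes, 2))

def swap_points(axis):
    """Exchange the roles of the two search points."""
    point, comp = axis
    return ("y2" if point == "y1" else "y1", comp)

# Group each plane with its image under the exchange of y1 and y2.
classes = {
    frozenset({frozenset(p), frozenset(map(swap_points, p))})
    for p in planes
}

print(len(planes), "planes,", len(classes), "distinct up to conjugation")
```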

As illustrated in Figure 13, as the synthetic aperture size increases the stationary point becomes anisotropic, in the sense that the main lobe has different widths in different directions. Specifically, the directions with the weakest decay are the ones for which , that is also the direction along which the single point migration is computed. In Appendix B, we show by stationary phase analysis that this anisotropy is the result of the synthetic aperture, and indeed we expect anisotropy to increase with the size of the synthetic aperture. By taking the top eigenvector rather than the diagonal of we can gain from the anisotropy and reduce the spot size, as we show in Appendix C.

Anisotropy is also related to the observed increase in rank. If the stationary point is isotropic, it is close to being separable with respect to the two indices, and hence having rank 1. Anisotropy ensures this is no longer the case.
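The link between anisotropy and rank can be illustrated on a one-dimensional model. The sketch below assumes a Gaussian peak with width `sigma_sum` along the diagonal direction and `sigma_diff` across it, in the spirit of the model of Appendix C. The function name, the grid, and the 5% threshold used to call an eigenvalue significant are hypothetical choices.

```python
import numpy as np

def significant_eigenvalues(sigma_sum, sigma_diff, threshold=0.05,
                            n=401, half_width=8.0):
    """Count eigenvalues above threshold * (largest) for a Gaussian peak
    exp(-(x+y)^2 / (2 sigma_sum^2) - (x-y)^2 / (2 sigma_diff^2))."""
    x = np.linspace(-half_width, half_width, n)
    X, Y = np.meshgrid(x, x, indexing="ij")
    K = np.exp(-(X + Y) ** 2 / (2 * sigma_sum ** 2)
               - (X - Y) ** 2 / (2 * sigma_diff ** 2))
    lam = np.abs(np.linalg.eigvalsh(K))   # K is real symmetric
    return int(np.sum(lam > threshold * lam.max()))

# Isotropic peak: the kernel factors as f(x) f(y), hence rank one.
count_iso = significant_eigenvalues(1.0, 1.0)

# Anisotropic peak: slow decay along the diagonal x = y, fast across it.
count_aniso = significant_eigenvalues(1.0, 0.25)

print("isotropic:", count_iso, "anisotropic:", count_aniso)
```

With equal widths the kernel reduces to a product of a function of one index and a function of the other, so only one eigenvalue is significant. Shrinking the width across the diagonal destroys the separability and more eigenvalues rise above the threshold.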

These results can also be interpreted in light of (3.31). We saw that the top eigenvector solves an optimization problem. The second eigenvector would solve the same optimization problem under the added constraint of orthogonality to the first eigenvector. As the first eigenvector becomes more localized, there might be higher modes that are localized as well, and whose eigenvalues do not decay rapidly. The orthogonality constraint means that they cannot have the same support as the first eigenvector. As a result, summing them all would increase the spot size, which is the observed effect in the single point migration limit. This is indeed the case, as illustrated in Figure 14.

We close this section by summarizing our observations:

1. For a point scatterer model, the interference pattern has localized stationary points at all the possible combinations where are positions of the scatterers.

2. As the synthetic aperture increases the spot size around the stationary point develops an anisotropy. The directions of weakest decay are the ones that match the single point migration. This is shown both numerically and analytically in Appendix B.

3. The anisotropy causes the top eigenvector to be more localized, as the spot size is some mean of the spot width in the different directions, as shown in Appendix C.

4. Anisotropy also increases the rank, generating more significant localized eigenvectors, and hence accentuating the difference between the two point and single point migrations.

6 Summary and Conclusions

We have considered the problem of imaging small fast moving objects, such as satellites in low earth orbit, using several receivers flying above the turbulent atmosphere, and asynchronous sources located on the ground. The resolution of the imaging system depends on the aperture spanned by the receivers, the bandwidth and the central frequency of the probing signals, as well as the inverse synthetic aperture created by the fast moving objects. In Section 2 we have shown that by forming the cross-correlations of the recorded signals and compensating for the Doppler effect in small imaging windows, we can create a cross-correlation data structure that can be used for imaging. This data structure suggests a natural extension to linear Kirchhoff migration imaging, which is a two-point interference pattern defined by (3.27).

In Section 3 we have considered several ways of extracting an image from the interference pattern. The usual single point migration image is the diagonal . The alternative that we have introduced here is the rank-1 image , which is the first eigenvector of the interference pattern. This rank-1 image has superior resolution compared to the single point migration. The improvement in resolution can be explained by studying the structure of the stationary points of the interference pattern. We have shown in Appendix B and Appendix C that the inverse synthetic aperture induces anisotropy in the profile of the point spread function. The effect is weakest in the direction that corresponds to the single point migration image, causing the image resolution to depend weakly on the synthetic aperture size. Taking the leading eigenvector as the image exploits the anisotropy and provides a resolution comparable to the linear KM system, even when considering multiple closely spaced targets. This is supported by extensive numerical simulations in Sections 4 and 5. We have noted in Section 3.5 that the interference pattern need not be a square matrix, with numerical simulations suggesting that one dimension can be downsampled by up to a factor of 10 while still retaining the same resolution. Instead of the eigenvector, one then uses the top singular vector as the rank-1 image. We have also shown in Section 4 the robustness of the algorithm to additive noise.

An important effect to be considered in future work is the rotation of the object, which is a more realistic scenario for satellites. Further work will involve a systematic study of the robustness of the proposed rank-1 image to measurement noise, as well as to the effects of atmospheric turbulence.

7 Acknowledgements

The work of M. Leibovich and G. Papanicolaou was partially supported by AFOSR FA9550-18-1-0519. The work of C. Tsogka was partially supported by AFOSR FA9550-17-1-0238 and AFOSR FA9550-18-1-0519.

Appendix A The direct and scattered waves

The derivation follows the one in [8]. We give it here for completeness. We assume that a source located at emits a short pulse whose compact support is in (or any interval of the form ).

Even though electromagnetic waves solve Maxwell’s equations rather than the wave equation, the scalar wave equation captures the main propagation and scattering effects which are of interest, neglecting polarization effects [6].

The scalar wave solves the wave equation excited by the source

| (A.1) |

The velocity model assumes a uniform background and a localized perturbation centered at . We use here the slow/fast time representation so that , and the perturbation is

The scattered field, , is defined as the difference between the total field , solution of (A.1) and the incident field .

| (A.2) |

solves the free space wave equation

| (A.3) |

The incident field has the form

| (A.4) |

where is the free space Green’s function, given by

| (A.5) |

Plugging in Green’s function in (A.4) we obtain,

| (A.6) |

Thus, the scattered field solves,

so that

In the Born approximation, we can neglect as an effective source term in the integral, as its contributions would be quadratic in , assumed to be small, so that

If , i.e. a point-like scatterer, we get

Substituting the expression of and integrating by parts twice, we obtain:

Therefore the scattered field recorded by the receiver at , when the target is at , has the form:

| (A.7) |

Introduce

then we have

Denoting

| (A.8) |

then we can write

| (A.9) |

The unique zero of in is a root of the quadratic equation

which is given by

| (A.10) |
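The way a quadratic equation arises for a target in uniform motion can be sketched as follows. This is an illustration under an assumed form of the condition, namely that a wave emitted from a fixed point at a given time meets the moving target when the distance travelled equals the distance to the target's current position. The function name, argument names, and numerical values are hypothetical and are not taken from (A.8)-(A.10).

```python
import numpy as np

def time_of_flight_to_moving_target(x_e, x0, v, s, c=3.0e8):
    """Delay u > 0 such that a wave emitted at x_e at time s meets the target
    moving as x0 + v * t at time s + u, i.e. c * u = |x0 + v * (s + u) - x_e|."""
    d = x0 + v * s - x_e                  # target offset at the emission time
    a = c ** 2 - np.dot(v, v)             # positive whenever |v| < c
    b = np.dot(d, v)
    # Squaring the condition gives a u^2 - 2 b u - |d|^2 = 0. The product of
    # the roots is negative, so exactly one root is positive.
    return (b + np.sqrt(b ** 2 + a * np.dot(d, d))) / a

# Hypothetical geometry: emitter on the ground, target at 500 km altitude.
x_e = np.array([1.0e5, 0.0, 0.0])
x0 = np.array([0.0, 0.0, 5.0e5])
v = np.array([7.0e3, 0.0, 0.0])
s = 0.0
c = 3.0e8

u = time_of_flight_to_moving_target(x_e, x0, v, s, c)
residual = c * u - np.linalg.norm(x0 + v * (s + u) - x_e)
print(f"delay: {u:.6e} s, residual: {residual:.2e} m")
```

The sign choice in front of the square root selects the physically meaningful root, which is unique as long as the target speed is below the wave speed.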

Appendix B Approximate evaluation of the two point interference pattern

In this appendix we analyze the expression (3.26) of the two point interference pattern. The structure of the interference pattern plays a determining role in the resolution of the rank-1 image.

Assume the scatterers are located at with respect to the center of the image window , that is,

and the reflectivities are . Then, (3.18) takes the form

| (B.1) |

with

Let us define,

| (B.2) |

The two point migration translates the cross correlation data to a pair of points in the image window, and then sums over all receiver pairs , pulses , and frequencies . The interference pattern in (3.26) then has the form

| (B.3) |

We approximate the sum over pulses and frequencies with integrals. The sum over receiver pairs is replaced by a double integral over the physical aperture spanned by the receivers. This is well justified if we assume that the receiver positions are uniformly distributed over an area of as we do here. By making the change , the interference pattern has the form

| (B.4) |

We see that the integrals over the physical receiver aperture separate. We can approximate the travel time difference in the exponent using . We have to first order

Thus, also taking , we can approximate the argument of the exponent as

| (B.5) |

We can then write the integral over the physical aperture as

| (B.6) |

This is a classical result in array imaging [2]. The physical receiver array induces an integral over phases linear in the difference between the positions of the scatterer and the search point . If we further define

the integral is in fact one over a domain in space, which is a subdomain of the 2-sphere with radius , as illustrated in Figure 15,

| (B.7) |

The Jacobian and the integral can be evaluated in a more general setting using the Green-Helmholtz identity [13]. We can then write (B.4) as

| (B.8) |

Similar expressions have also been analyzed in [2]. As expected, the resolution is inversely proportional to the physical aperture size, and is bounded by .

We can make a further approximation to simplify the calculation in our case. Assume

where is the height of the targets. This is well justified for uniformly distributed ground receivers and an inverse aperture of a size comparable to the ground array (, the diameter of the receiver array). In this approximation we also have that . Noting , we can rewrite the integral (B.8) as

| (B.9) |

If we assume a rectangular grid then the point spread function is

| (B.10) |

As illustrated in Figures 15-17, the approximations made above are well justified in the context of our numerical simulations.

We see that is, as a function of , localized around the scatterer location . Hence has peaks at points , where are scatterer positions. has an effective resolution . Recall that this is the classical cross range resolution obtained with an array of size , imaging a point at range . On the other hand, the synthetic aperture produces the term in (B.9), which has resolution . For small the resolution is dominated by the size of the receiver array. However as the inverse aperture increases and becomes comparable to , anisotropy appears, as illustrated in Figure 13 in Section 5. The effect of the synthetic aperture is however not isotropic. Since the argument of the sinc is , the direction in which the argument is constant will be unaffected, while the orthogonal direction experiences the strongest decay. We note again that the single point migration is equivalent to taking with and so its resolution does not benefit from the synthetic aperture.
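The classical cross range resolution mentioned above can be checked with a one-dimensional sketch. It assumes a uniform linear array of aperture `a` at range `L`, a single wavelength, and phases linear in the offset `xi` between the scatterer and the search point. All numerical values and names are hypothetical and are not the parameters of the simulations. The normalized sum over receivers is compared with a sinc whose first zero is at `wavelength * L / a`.

```python
import numpy as np

wavelength = 0.03                 # hypothetical wavelength (m)
L = 500e3                         # hypothetical range to the target (m)
a = 200e3                         # hypothetical array aperture (m)
k = 2 * np.pi / wavelength

n_rec = 2001
x_r = np.linspace(-a / 2, a / 2, n_rec)   # receiver positions along the array

res = wavelength * L / a                  # classical cross range resolution
xi = np.linspace(-4 * res, 4 * res, 801)  # offset from the scatterer position

# Discrete sum over receivers of phases linear in the offset xi.
psf_sum = np.mean(np.exp(-1j * k * np.outer(xi, x_r) / L), axis=1)

# Continuum limit: sin(z) / z with z = pi * xi / res.
# Note that np.sinc(t) is defined as sin(pi t) / (pi t).
psf_sinc = np.sinc(xi / res)

max_err = np.max(np.abs(psf_sum - psf_sinc))
print(f"resolution: {res:.4f} m, max deviation from sinc: {max_err:.2e}")
```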

We have thus shown that we can approximate the form of in (3.26) as a collection of localized peaks, located at pairs of scatterer positions. When considering the extended domain of the interference pattern the peaks exhibit anisotropy with principal widths that are aligned with the directions , .

The rank-1 image takes as an alternative image the top eigenvector of . In Appendix C, we analyze the top eigenfunction of kernels that have a similar anisotropic form, and show that in general the resolution of the eigenfunction is better than the maximal width associated with single point migration.

Appendix C Eigenfunction of localized kernels

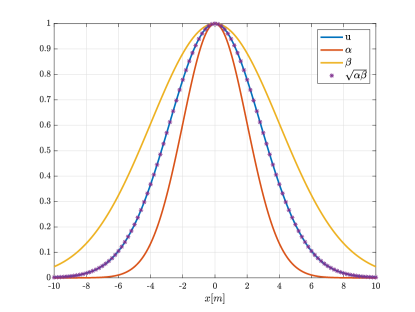

In this appendix we estimate the top eigenfunction of a localized anisotropic kernel in one dimension. This is the continuum limit of the results obtained in Appendix B, where it was shown that the peaks of the two point interference pattern are anisotropic. We evaluate the top eigenfunction, show it is localized, and get an estimate for its width in terms of the principal widths of the kernel. We show results in 1D, but they generalize to higher dimensions.

We saw in (B.9) that the peaks of the two point interference pattern have principal widths in the directions , . Hence, we are interested in analyzing kernels of the form

where is localized around . This is an intermediate case between two extreme cases. If , is approximately separable (indeed separable if is a Gaussian), and has rank 1 with eigenfunction . On the other hand if either , would be a correlation/convolution kernel and will have full rank, with non localized eigenfunctions. We are thus interested in the intermediate case.

We specifically look at two cases for - we start with a Gaussian kernel for which an analytical solution exists, and then consider a more realistic sinc model. While the Gaussian case is given here for illustration purposes, it could represent a situation where the medium has random fluctuations [5].

We first analyze the eigenfunctions of in the two cases. We then show how the eigenfunction of can be expressed using them.

C.1 Gaussian Kernel Approximation

Introduce the following Gaussian model for ,

| (C.1) |

And plug in a candidate eigenfunction . For to be an eigenfunction, it must satisfy

| (C.2) |

The exponential argument of has the form:

| (C.3) |

Denote , then we can rewrite the argument as

| (C.4) |

Integrating over we get

| (C.5) |

i.e. plugging in the value for , the equation for is

| (C.6) |

The eigenfunction has a variance which is the geometric mean of the variances of the two principal axes.
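The geometric mean property can be verified numerically. The sketch below assumes a Gaussian model of the form exp(-(x+y)^2/(2 sigma_sum^2) - (x-y)^2/(2 sigma_diff^2)), which may differ from (C.1) in normalization. The names `sigma_sum` and `sigma_diff`, the grid, and the widths are hypothetical. The variances of the principal axes are measured along the unit directions (1,1)/sqrt(2) and (1,-1)/sqrt(2).

```python
import numpy as np

sigma_sum, sigma_diff = 1.0, 0.5          # hypothetical widths
x = np.linspace(-6.0, 6.0, 601)
X, Y = np.meshgrid(x, x, indexing="ij")
K = np.exp(-(X + Y) ** 2 / (2 * sigma_sum ** 2)
           - (X - Y) ** 2 / (2 * sigma_diff ** 2))

# Top eigenvector of the discretized kernel (eigh sorts ascending).
lam, V = np.linalg.eigh(K)
phi = np.abs(V[:, -1])

# For phi(x) = exp(-x^2 / (2 s^2)) the ratio below equals s^2.
var_numeric = np.sum(x ** 2 * phi) / np.sum(phi)

# Variances of the kernel along its two principal axes. In the rotated
# coordinates u = (x + y)/sqrt(2), w = (x - y)/sqrt(2) the exponent reads
# -u^2 / sigma_sum^2 - w^2 / sigma_diff^2.
var_1 = sigma_sum ** 2 / 2
var_2 = sigma_diff ** 2 / 2
var_geometric = np.sqrt(var_1 * var_2)

print(f"numerical variance: {var_numeric:.5f}, "
      f"geometric mean: {var_geometric:.5f}")
```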

Since has non-negative entries and its support is a non-disjoint set, we know by the Krein-Rutman theorem [17] (extension of the Perron-Frobenius theorem to Banach spaces) that its top eigenfunction can be chosen to be strictly non-negative, so we have indeed recovered the top eigenfunction.

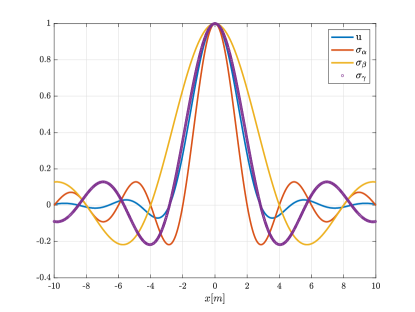

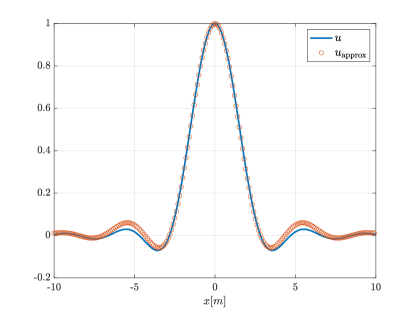

C.2 Sinc kernel approximation

We now wish to approximate the kernel as a product of 2 sinc functions:

| (C.7) |

This is a more realistic case since we saw that the behavior around the peaks of the two point interference pattern can be approximated as a product of sinc functions. We will look for a candidate eigenfunction of the form . Numerical simulations suggest that while this is not an exact eigenfunction, it is close to one. Specifically, .

We are looking to estimate an integral of the form:

| (C.8) |

We first drop the constants from the denominator and use the following identity:

| (C.9) |

Next we decompose the numerator using the following identity

| (C.10) |

Which yields in our case:

| (C.11) |

We can combine all the terms in (C.9) to a single denominator using an appropriate variable transformation .

This will give us the following numerator, up to a constant

| (C.12) |

Which is up to a constant

| (C.13) |

We will use extensively the following result:

| (C.14) |

The integral then yields:

| (C.15) |

We assume , so some of the signs are unambiguous

| (C.16) |

If we further assume we get that the integral is proportional to

| (C.17) |

In order to find the approximate width we wish to find values of for which (C.17) has a zero for .

We can rewrite the numerator as

| (C.18) |

for (the first zero of ), using , the numerator becomes

| (C.19) |

which has zeros at

The most localized eigenvector would be for the largest value of , e.g. for , we get , which indeed matches the numerical estimation, as can be seen in Figures 19 and 20. The effective width of , defined by the first zero in the direction is . So if we denote

we get the equation

| (C.20) |

which is very close to the harmonic mean. It is easy to verify that with the integral is 0 in principal value.

We see that in the sinc case the top eigenfunction is even more favorable than in the case of a Gaussian kernel in the previous subsection. Now is close to the harmonic mean, which is biased toward the smaller width; that is, we gain in resolution compared to the Gaussian case, where we obtained the geometric mean. This makes sense, as the Gaussian case could represent a random medium, as noted before, and we expect better resolution in media with weak fluctuations or no randomness. We also note that the top eigenfunction has a faster decay rate than the diagonal of the kernel, that is, the single point migration image. The estimation suggests as a lower bound, compared to decay of the kernel itself.

C.3 Eigenfunction of the multiple stationary points

If now is a linear combination of translations of (C.1),

then as long as the translations are far enough apart such that

the top eigenfunction of is

with the restriction

i.e., the eigenfunction’s coefficient vector is an eigenvector of the coefficient matrix .
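The statement about the coefficient vector can be checked on a small example. The sketch below assumes two well-separated Gaussian peaks of the same assumed form as in the check for Section C.1 and a symmetric coefficient matrix `C`. The centers, widths, and entries of `C` are hypothetical. The ratio of the top eigenfunction's amplitudes at the two centers is compared with the ratio of the entries of the top eigenvector of `C`.

```python
import numpy as np

sigma_sum, sigma_diff = 1.0, 0.5          # hypothetical widths
centers = np.array([-4.0, 4.0])           # well-separated translations
C = np.array([[1.0, 0.3],
              [0.3, 0.5]])                # hypothetical coefficient matrix

x = np.linspace(-10.0, 10.0, 801)
X, Y = np.meshgrid(x, x, indexing="ij")

def peak(u, w):
    """Single anisotropic Gaussian peak centered at the origin."""
    return np.exp(-(u + w) ** 2 / (2 * sigma_sum ** 2)
                  - (u - w) ** 2 / (2 * sigma_diff ** 2))

# Superposition of translated peaks, one for every pair of centers.
K = sum(C[i, j] * peak(X - centers[i], Y - centers[j])
        for i in range(2) for j in range(2))

# Top eigenfunction of the superposition, sampled at the two centers.
lam, V = np.linalg.eigh(K)
psi = V[:, -1]
at_centers = np.array([psi[np.argmin(np.abs(x - c))] for c in centers])
ratio_numeric = at_centers[1] / at_centers[0]

# Prediction: amplitudes follow the top eigenvector of the matrix C.
mu, A = np.linalg.eigh(C)
a_top = A[:, -1]
ratio_predicted = a_top[1] / a_top[0]

print(f"numerical ratio: {ratio_numeric:.4f}, "
      f"predicted ratio: {ratio_predicted:.4f}")
```

Taking ratios removes the arbitrary sign and normalization of the computed eigenvectors, so the comparison depends only on the relative weights of the two peaks.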

To summarize, we have shown that the top eigenfunction of the superposition of anisotropic kernels is more localized than the maximal width of the kernel. Hence, in addition to the other considerations discussed in Section 3, the rank-1 image provides better resolution than the single point migration image.

References

- [1] IEEE Aerospace and Electronic Systems Magazine, 27, 2012.

- [2] N. Bleistein, J. K. Cohen, and J. W. Stockwell Jr. Mathematics of Multidimensional Seismic Imaging, Migration, and Inversion. Springer, 2001.

- [3] L. Borcea, J. Garnier, G. Papanicolaou, K. Solna, and C. Tsogka. Resolution analysis of passive synthetic aperture imaging of fast moving objects. SIAM J. Imaging Sciences, 28, 2017.

- [4] Liliana Borcea and Josselin Garnier. High-resolution interferometric synthetic aperture imaging in scattering media. arXiv preprint arXiv:1907.02514, 2019.

- [5] Liliana Borcea, George Papanicolaou, and Chrysoula Tsogka. Theory and applications of time reversal and interferometric imaging. Inverse Problems, 19(6):S139, 2003.

- [6] M. Cheney and B. Borden. Fundamentals of radar imaging. SIAM, 2009.

- [7] John C. Curlander and Robert N. McDonough. Synthetic Aperture Radar: Systems and Signal Processing. Wiley-Interscience, 1991.

- [8] Jacques Fournier, Josselin Garnier, George Papanicolaou, and Chrysoula Tsogka. Matched-filter and correlation-based imaging for fast moving objects using a sparse network of receivers. SIAM Journal on Imaging Sciences, 10(4):2165–2216, 2017.

- [9] G. Franceschetti and R. Lanari. Synthetic aperture radar processing. CRC Press, Boca Raton, FL, 1999.

- [10] J. Garnier and G. Papanicolaou. Correlation based virtual source imaging in strongly scattering media. Inverse Problems, 28:075002, 2012.

- [11] J. Garnier and G. Papanicolaou. Passive imaging with ambient noise. Cambridge University Press, 2016.

- [12] J. Garnier, G. Papanicolaou, A. Semin, and C. Tsogka. Signal-to-noise ratio analysis in virtual source array imaging. SIAM J. Imaging Sciences, 8:248–279, 2015.

- [13] Josselin Garnier and George Papanicolaou. Passive imaging with ambient noise. Cambridge University Press, 2016.

- [14] J. A. Haimerl and G. P. Fonder. Space fence system overview. In Proceedings of the Advanced Maui Optical and Space Surveillance Technology Conference, 2015.

- [15] Donald J Kessler and Burton G Cour-Palais. Collision frequency of artificial satellites: The creation of a debris belt. Journal of Geophysical Research: Space Physics, 83(A6):2637–2646, 1978.

- [16] M. E. Lawrence, C. T. Hansen, S. P. Deshmukh, and B. C. Flickinger. Characterization of the effects of atmospheric lensing in SAR images. SPIE Proceedings, 7308, 2009.

- [17] P.D. Lax. Functional Analysis. Pure and Applied Mathematics: A Wiley Series of Texts, Monographs and Tracts. Wiley, 2002.

- [18] M. S. Mahmud, S. U. Qaisar, and C. Benson. Tracking low earth orbit small debris with GPS satellites as bistatic radar. In Proceedings of the Advanced Maui Optical and Space Surveillance Technology Conference, 2016.

- [19] R. W. McMillan. Atmospheric turbulence effects on radar systems. In Proceedings of the IEEE 2010 National Aerospace & Electronics Conference, pages 181–196, 2010.

- [20] D. Mehrholz, L. Leushacke, W. Flury, R. Jehn, H. Klinkrad, and M. Landgraf. Detecting, tracking and imaging space debris. ESA Bulletin, 109:128–134, 2002.

- [21] M. T. Valley, S. P. Kearney, and M. Ackermann. Small space object imaging: LDRD final report. Sandia National Laboratories, 2009.

- [22] K. Wormnes, R. Le Letty, L. Summerer, R. Schonenborg, O. Dubois-Matra, E. Luraschi, A. Cropp, H. Kragg, and J. Delaval. ESA technologies for space debris remediation. In Proceedings of 6th European Conference on Space Debris, Darmstadt, Germany, 2013.