Generalized Constraints as A New Mathematical Problem in Artificial Intelligence: A Review and Perspective

Abstract

In this comprehensive review, we describe a new mathematical problem in artificial intelligence (AI) from a mathematical modeling perspective, following the philosophy stated by Rudolf E. Kalman that “Once you get the physics right, the rest is mathematics”. The new problem is called “Generalized Constraints (GCs)”, and we adopt GCs as a general term to describe any type of prior information in modelings. To understand better about GCs to be a general problem, we compare them with the conventional constraints (CCs) and list their extra challenges over CCs. In the construction of AI machines, we basically encounter more often GCs for modeling, rather than CCs with well-defined forms. Furthermore, we discuss the ultimate goals of AI and redefine transparent, interpretable, and explainable AI in terms of comprehension levels about machines. We review the studies in relation to the GC problems although most of them do not take the notion of GCs. We demonstrate that if AI machines are simplified by a coupling with both knowledge-driven submodel and data-driven submodel, GCs will play a critical role in a knowledge-driven submodel as well as in the coupling form between the two submodels. Examples are given to show that the studies in view of a generalized constraint problem will help us perceive and explore novel subjects in AI, or even in mathematics, such as generalized constraint learning (GCL).

Index Terms:

Constraints, Prior, Transparency, Interpretability, Explainability.

I Introduction

Once you get the physics right, the rest is mathematics [1]. This statement by Kalman is particularly true to the study of artificial intelligence (AI). In contrast to the natural intelligence displayed by humans or other lives, AI demonstrates its intelligence by machines (or tools, systems, models in other terms) programmed from a computer language. The statement will direct us to seek the fundamental of AI at a mathematical level rather than to stay at application levels. Therefore, when deep learning (DL) advanced AI to a new wave, one question seems to be: ”Do we encounter any new mathematical, yet general, problem in AI”?

In this paper, we take the notion of “Generalized Constraints (GCs)” and consider it as a new mathematical problem in AI. The related backgrounds are given below.

I-A Three core terms

Definition 1

Conventional constraint (CC) refers to a constraint in which its representation is fully known and given in a structured form.

Definition 2

Prior information (PI) is any information or knowledge about the particular things (such as problem, data, fact, etc.) that is known for someone.

Definition 3

Generalized constraint (GC) is a term used in mathematical modelings to describe any related prior information.

From the definitions above, we can describe their relations by using a set notation:

| (1) |

where CC is a subset of GC, and GC is equal to PI. When the term PI appears in daily life, the term GC stresses a mathematical meaning in modeling. For simplifying discussions, we take PI as a general term which may be called prior knowledge, prior fact, specification, bias, hint, context, side information, invariance, idea, hypothesis, principle, theory, common sense, etc. In [2], Hu et al. pointed out that PI usually exhibits one or a combination of features in modelings, such as incomplete information and unstructured form in its representation. They showed several examples about incomplete information in GCs, such as, , where and are unknown parameters.

I-B Extra challenges of GCs over CCs

Duda et al. pointed out that [3]: “incorporating prior knowledge can be far more subtle and difficult”. For a better understanding about CCs and GCs, we present an overall comparison between them in respect to several aspects (Table I). One can see that GCs do not only enlarge the application domains and the representation forms over CCs, but also add a significant amount of extra challenges in AI studies. For example, the new subjects may appear from the study of GCs. Some of GCs may involve a transformation between linguistic prior and computational representation, which is a difficult task because one may face a “semantic gap” [4]. We will discuss those extra challenges further in the later sections.

|

|

|

|

|

|

||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

|

|

|

|

|

|

|

|||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

|

|

|

|

|

|

|

I-C Mathematical notation of generalized constraints

In fact, the term GCs is not a new concept and it appeared in literature, such as a paper by Greene [5] in 1966. In the earlier studies, GC was used as a term without a formal definition until the studies by Zadeh [6, 7, 8] in 1986, 1996, and 2011, respectively. Zadeh presented a mathematical formulation of GCs in a canonical form [7]:

| (2) |

where (pronounced “ezar”) is a variable copula which defines the way in which constrains a variable . He stated that “the role of R in relation to X is defined by the value of the discrete variable r”. The values of for GCs are defined by probabilistic, probability, usuality, fuzzy set, rough set, etc; so that a wide variety of constraints can be included. Zadeh used GCs for proposing a new framework called “Generalized Theory of Uncertainty (GTU)”. He described that “The concept of a generalized constraint is the center piece of GTU” [8]. The idea by Zadeh is very stimulating in the sense that we need to utilize all kinds of prior, or GCs, in AI modelings.

In 2009, Hu et al. [2] proposed so called “Generalized Constraint Neural Network (GCNN)” model, in which the GCs considered by them were “partially known relationship (PKR)” knowledge about the system being studied. Without awareness of the pioneer work by Zadeh, they proposed the formulation for a regression problem in a form of:

| (3) |

where and are the input and output for a target function to be estimated, is the approximate function with a parameter set , is the ith PKR of , the symbol “” represents the term “is compatible with” so that “hard” or “soft” constraints can be specified to the PKR, and the symbol denotes “about” because some PKRs cannot be expressed by mathematical functions. They adopted the term “relationships” rather than “functions”, for the reason to express a variety of types of prior knowledge in a wider sense. Their work was inspired by the studies called “Hybrid Neural Network (HNN)” models [9, 10], but adopted “generalized constraint” rather than “hybrid” as a descriptive term so that the mathematical meaning was clear and stressed.

I-D A view from mathematical spaces

Following the philosophy of Kalman [1], we can view any study in machine learning (ML) or in AI is a mapping study among the mathematical spaces as shown in Fig. 1.

The all spaces are interactive to each other, such as a knowledge space (i.e., a set of knowledge) which is connected to other spaces via either an input (i.e., embedded knowledge) or an output (i.e., derived knowledge) means. In fact, a knowledge space is not mutually exclusive with other spaces in the sense of sets. The diagram is given for a schematically understanding about the information flows in modelings as well as in an intelligent machine. The interactive and feedback relationships imply that intelligence is a dynamic process.

Most machine learning systems can be seen as a study on deriving a hypothesis space (i.e., a set of hypotheses) from a data space (i.e., a set of observations or even virtual datasets) [12]. The systems are also viewed as parameter learning machines if the concern is focused on a parameter space (i.e., a set of parameters) [11]. This paper will focus on a constraint space (i.e., a set of constraints) but highlight the problem of GCs in the context of mathematical modeling in AI.

I-E GCs as a new mathematical problem

AI machines generally involve optimizations [13]. However, most existing studies in AI concern more on CCs. There are primarily three types of constraints in CCs, that is, equality, inequality, and integer constraints, respectively. All of them are given in a structured form. It is understandable that any modeling will involve the application of PI. In a real-world setting, PI does not always show it in a mathematical form of CCs. Therefore, in the designs of AI machines, we basically encounter a GC, rather than a CC, problem. However, GCs are still considered as a new mathematical problem due to the following facts.

-

•

From a mathematical viewpoint, we still miss “a mathematical theory of AI” if following the position of Shannon [14]. In other words, we have no theory to deal with GCs. For the given image, we can tell if a cat or a dog. This process is strongly related to the specific GCs embedded in our brains. However, for either deep learning or human brains, we are far away from understanding the specific GCs in a mathematical form. A theoretical study of GCs is needed, such as its notation and formalization as a general problem in AI.

-

•

From an application viewpoint, we need an applicable yet simple modeling tool that is able to incorporate any form of PI maximally and explicitly for understanding AI machines. Although application studies in AI encounter more GCs than CCs, not much studies are given under the notion of GCs. The GC problem is still far away from awareness for every related community, and mostly concerned within a case-by-case procedure. Moreover, the extra challenges listed in GCs have not been addressed systematically.

There are extensive literature implicitly covering GCs. The goal of this paper attempts to provide a comprehensive review about GCs so that readers will be able to understand the basics about them. The paper is not aiming at an extensive review of the existing works nor a rigorous about most terms and problems. For simplification of discussions, we will apply most terms directly and suggest to consider them in a broader sense. Only a few of terms are given or redefined for clarification. We take a “top-down” way, that is, from outlooks to methods in the review. Therefore, in Section II, we will present outlooks given by different researchers, so that one can understand why GCs are critical in AI. Sections III and IV introduce the related methods in embedding GCs and extracting GCs, respectively. The summary and final remarks are given in Section V.

II AI machines and their futures

This section will present overall understandings about AI machines and their futures. Because there exist numerous descriptions to the subjects, only a few of them are presented for the purpose of justifying the notion of GCs.

II-A Five tribes and the Master Algorithm

Domingos [15] gave the systematic descriptions about the current learning machines and divided them with five “tribes”. He suggest that the all tribes should be evolved into so called “the Master Algorithm” in future, which refers to a general-purpose learner. Table II lists the five tribes with respect to the different features (or, GCs). Using a schematic plot, he illustrated the tribes by five circular sectors surrounding a core circle which is the Master Algorithm. He proposed a hypothesis below [15]:

“All knowledge—past, present, and future—can be derived from data by a single, universal learning algorithm.”

The hypothesis suggests one important implication in future AI, that is,

-

•

The future AI machines should be able to deal with any form of prior knowledge with a single algorithm.

The implication presents the necessary conditions of future AI machines in regardless of the existence of the Master Algorithm. In fact, there exist other terms to describe future AI machines, such as “artificial general intelligence (AGI)”[16]. All of them have implied the utilization of GCs as a general tool.

| Tribe | Representation | Evaluation | Optimization | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Symbolists | Logic | Accuracy |

|

||||||

| Connectionists |

|

|

|

||||||

| Evolutionaries |

|

Fitness |

|

||||||

| Bayesians |

|

|

|

||||||

| Analogizers |

|

Margin |

|

II-B Reasoning methods

In 1976, Box [17] illustrated the reasoning procedures (Fig. 2) for “The advancement of learning”. In Fig. 2 (a), if the upper part and the lower part refer to data and knowledge, respectively, an induction procedure follows a direction from data to knowledge and a deduction procedure follows a direction from knowledge to data. He called them “an iteration between theory and practice”. In Fig. 2 (b), he clearly showed that learning is an information processing involving both induction and deduction inferences as a dynamic system having a feedback loop. Usually, induction refers to a “bottom-up” reasoning approach, and deduction a “top-down” reasoning approach [18]. Hence, the positions for upper part of the set “Practice et al.” and the lower part of the set “Hypothesis et al.” are better to be exchanged in Fig. 2 (a), so that the meanings for the top and the bottom are correct.

Hu et al. [19] adopted an image cognition example in [20] for explanations of inferences. One can guess what it is about in Fig. 3. If one cannot provide a guess, it is better to see Fig. 16 in Appendix, and then re-examine Fig. 3. In general, most people can present a correct answer after seeing both figures. Only a small portion of people are able to tell the answer of Fig. 3 directly in the first-time seeing.

If Figs. 3 and 16 represent the original data and prior knowledge respectively, the guessing answer of Fig. 3 is a hypothesis. We can understand a cognition process will involve both induction and deduction procedures. This is true for all people in either seeing or not seeing Fig. 16. Generally, without human face prior in one’s mind, the one is unable to provide a correct answer to the image in Fig. 3. This cognition example confirms the proposal of Box [17] in Fig. 2. The study of Box and the cognition example provide the following implications in relation to GCs:

-

•

The knowledge part in Fig. 2 (a) can be viewed as GCs, but how to formalize GCs in Fig. 16 is still a challenge.

-

•

The GCs are generally updated within a feedback loop, particularly when more data comes in.

II-C Knowledge role in future AI machines

Niyogi et al. [21] pointed out that “incorporation of prior knowledge might be the only way for learning machines to tractably generalize from finite data”. The statement suggested utilization of prior knowledge in a minimum sense. In a maximum sense, Ran and Hu [11] suggested the future AI machines should go to a higher position over “Average Human Being” in both data utilization and knowledge utilization (Fig. 4). When the term “big knowledge” have been appeared, such as in [22], we provided a formal definition based on [19] below:

Definition 4

Big knowledge is a term for a knowledge base that is given on multiple discipline subbases, for multiple application domains, and with multiple forms of knowledge representations.

The knowledge role in future AI can be justified from the ultimate goals of AI. There are numerous understanding about the goals of AI in different communities. For example, Marr [24] described that “the goal of AI is to identify and solve tractable information processing problems”. Other researchers considered it as “passing the Turing Test” [25], General Problem Solver (GPS) [26], or AGI [16, 27]. Some researchers proposed two ultimate goals, namely, scientific goal and engineering goal [28, 29]. Following their positions and descriptions, we redefine the two goals of AI below:

-

•

Engineering goal: To create and use intelligent tools for helping humans maximumly for good.

-

•

Scientific goal: To gain knowledge, better in depth, about humans themselves and other worlds.

When the scientific goal suggests an explicit role of knowledge as an output of AI machines, the engineering goal requires knowledge as an input in constructing the machines. For example, without knowledge, modelers will fail to reach the engineering goal in terms of “maximumly for good”. From the discussions above, we can understand that GCs will play an important role in the studies of knowledge utilization, big knowledge, or realizing the ultimate goals of AI.

II-D Comprehension levels in AI machines

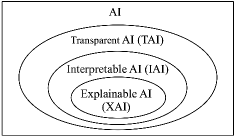

Currently, AI machines are dominated by black-box DL models. For understanding AI machines as a rigorous science [30], more investigations have been reported, such as [31, 32, 33, 34, 35], in together with review papers, like [36, 37, 38, 39]. In those investigations, several terms were given to define the different classes of AI machines, mostly based on application purposes. We present novel definitions below to the three specific classes of AI and show their relations in a mathematical notation.

Definition 5

Transparent AI (TAI) refers to a class of methods in AI that are constructed by adding transparency over their counterparts without such operation.

Definition 6

Interpretable AI (IAI) refers to a class of methods in AI that are comprehensible for humans with interpretations from any natural language.

Definition 7

Explainable AI (XAI) refers to a class of methods in AI that are comprehensible for humans with mathematical explanations.

Fig. 5 shows an Euler diagram of the different classes of AI machines. One can see their strict subset relations as

| (4) |

We can set the four classes of AI in an ordered way of comprehension (or knowledge) levels from “unknown”, “shallow”, “medium shallow” to “deep”, respectively. Their subset relations will remove the ambiguity in examination of methods, and provide a respective link to the comprehension levels in an ordered way. A black-box AI may be a preferred tool for some users. An autofocus camera for dummies is a good example. In this situation, AI tools with an unknown comprehension are fine for the users.

When TAI is a necessary condition of both IAI and XAI, we distinguish the both by their representation forms. A natural language representation is a naturally-evolved form for communication among humans. A mathematical representation is a structured form for communication among mathematicians. The differences of knowledge levels for IAI and XAI are due to the two forms of representations. We use Newton’s second law as an example (Table III) to show the differences in representation forms and knowledge levels. The knowledge levels are given for a relative comparison. We can understand that a natural language representation will suffer the problems of incompleteness, ambiguity, unclarity, and/or inconsistency. The problems will lead us for a shallow or incorrect understanding about the knowledge described. However, a mathematical representation will present a better form in describing knowledge exactly and completely. Although one is able to apply a natural language representation for describing Newton’s second law exactly and completely, there exists no equality of sets for the two forms of representations. We need to note that GCs may be given in terms of either IXI or XAI, such as “different parts of perceptual input have common causes in the external world” and “mutual information”, respectively, in processing adjacent patches of images [40].

|

|

|

Example | ||||||||||||

|

|

|

|

||||||||||||

|

Deep | Yes |

III Embedding GCs

In a study of adding transparency to artificial neural networks (ANNs), Hu et al. [41, 42] described two main strategies, respectively:

-

•

Strategy I: Embedding prior information into the models;

-

•

Strategy II: Extracting knowledge or rules from the models;

where a hierarchical diagram (Fig. 6) was given so that one can get a simple and direct knowledge about the working format behind the method. The two strategies differ in the nature of the knowledge flow with respect to its model. Whereas the first strategy applies the available knowledge more like an input into the model, the second strategy obtains explicit knowledge as an outcome from the system. In this section, we will focus on the first strategy in the notion of GCs.

III-A Generic Priors in GCs

Bengio et al. [43] adopted a notion of “generic priors (GPs)” for representation learning study in AI. They reviewed the works in lines of embedding the ten types of GPs into the models, that is:

-

1.

smoothness,

-

2.

multiple explanatory factors,

-

3.

a hierarchical organization of explanatory factors,

-

4.

semi-supervised learning,

-

5.

shared factors across tasks,

-

6.

manifolds,

-

7.

natural clustering,

-

8.

temporal and spatial coherence,

-

9.

sparsity,

-

10.

simplicity.

They also considered GPs to be “Generic AI-level Priors”. We advocate this notion and attempt to provide its formal definition below:

Definition 8

Generic priors (GPs) are a class of GCs which exhibits the generality in AI machines and is independent to the specific applications.

Note that GPs may be given in a linguistic form so that they are not CCs. No fixed boundary exists between GPs and non-GPs (or called specific priors). Numerous studies have been reported on seeking GPs. Only some of them are given below with different backgrounds.

III-A1 Objective priors

III-A2 Regularization

When addressing an inverse problem, regularization is an important set of GPs to solve such ill-posed problem [46, 47, 48]. When and penalties are used for the Lasso and ridge methods, they are corresponding to different GPs, sparseness [49] and smoothness [50], respectively. For tensor (or matrix) approximations, a regularization penalty can be a low-rank [51], or further GPs, such as, structured low-rank with nonnegative elements [52]. A semantic based regularization is expressed by a set of first-order logic (FOL) clauses [53].

III-A3 Knowledge/fuzzy rules and GTU

III-A4 Hints

Abu-Mostafa [58] was one of pioneers of proposing GCs in AI, but used notions of “intelligent hints” and “common sense hints” [59]. He summarized five types of hints, namely,

-

1.

invariance,

-

2.

monotonicity,

-

3.

approximation,

-

4.

binary,

-

5.

examples,

in learning unknown functions, and developed a canonical representation of the hints [58]. He pointed out that “Providing the machine with hints can make learning faster and easier” [59]. The important implications of his study are:

-

•

In AI modeling, one needs to apply various types of hints, or GCs, as much as possible “from simple observations to sophisticated knowledge” [59].

-

•

A mathematical and canonical representation of hints is necessary so that a learning algorithm is able to deal with hints numerically in a unified form.

III-A5 GPs in unsupervised learning

In [60], Jain presented several GPs in clustering studies, such as, criteria based on the Occam’s razor principle for determining the number of clusters (say, AIC, MDL, MML, etc), sample priors (say, must-link, cannot-link, seeding, etc). In unsupervised ranking of web pages in search engine studies, Google PageRank applied a semantic GP: “More important websites are likely to receive more links from other websites” [61], based on the link-structure data. When without such data in unsupervised ranking of multi-attribute objects, Li et al. [62] suggested five GCs, in the notion of “meta rules”, that should be satisfied for the ranking function, that is,

-

1.

scale and translation invariance,

-

2.

strict monotonicity,

-

3.

compatibility of linearity and nonlinearity,

-

4.

smoothness,

-

5.

explicitness of parameter size.

The important implication of the studies in unsupervised ranking is gained below.

-

•

Meta rules, or GPs, are important for assessing learning tools in terms of “high-level knowledge” [62], and become critically necessary when no ground truth (say, labels) exists.

III-A6 GPs in classification

In [63], Lauer and Bloch presented a comprehensive review of incorporating priors into SVM machines. They considered two main groups of priors, namely, class invariance group and knowledge on data group. In [64], Krupka and Tishby took the notion of meta features, and listed several of them in association with the specific tasks. For example, the suggested GPs in the handwritten recognition are

-

1.

position,

-

2.

orientation,

-

3.

geometric shape descriptors;

and in text classification are

-

1.

stem,

-

2.

semantic meaning,

-

3.

synonym,

-

4.

co-occurrence,

-

5.

part of speech,

-

6.

is stop word.

III-A7 PKR in regression

Considering a regression problem, Hu et al. [2] considered GCs in the notion of PKR. They listed ten types of PKR about nonlinear functions in a regression problem, that is,

-

1.

constants or coefficients,

-

2.

components,

-

3.

superposition,

-

4.

multiplication,

-

5.

derivatives and integrals,

-

6.

nonlinear properties,

-

7.

functional form,

-

8.

boundary conditions,

-

9.

equality conditions,

-

10.

inequality conditions.

For example, the first type of PKR, a physical constant, was studied from the Mackey-Glass dynamic problem in a difference form:

| (5) |

Within the observation data of the dynamics, one is able to have the prior for a time delay , to be a positive, yet unknown, integer in the problem. They showed solutions to gain the estimations of physical constants and using GCNN model based on radius basis function (RBF) networks. In [42], Qu and Hu further studied “linear priors (LPs)” within GCs, which was defined as “a class of prior information that exhibits a linear relation to the attributes of interests, such as variables, free parameters, or their functions of the models”. The total 25 types of LPs were listed in regressions.

III-A8 Virtual samples

Virtual samples are one of the important GPs given in either an explicit or implicit form in modeling. A few of approaches are listed below.

III-B Embedding modes and coupling forms

When GCs are given, there exist numerous methods of incorporating them. Some researchers [41, 63] suggested to catalog them with a few set of simple groups. We take a notion of “embedding modes” [42] and definite it below.

Definition 9

Embedding modes refer to a few and specific means of embedding GCs into a model.

Hu et al. [41, 42] listed the three basic embedding modes (Fig. 6), namely, data, algorithm, and structural modes, respectively. Their classification of the basic embedding modes is roughly correct since some methods may share the different modes simultaneously.

Significant benefits will be gained from using the mode notion. First, one is able to reach a better understanding about numerous methods in terms of the simple modes. Second, each basic mode presents a different level of explicitness for knowing the priors embedded. Second, the best level is suggested to be the structural mode, then followed sequentially by the algorithm mode, and the data mode as the last [42].

When Mitchell [71] described “three different methods for using prior knowledge”, that is, “to initiate the hypothesis, to alter the search objective, and to augment search operators”, we can say the three methods are basically within the algorithm mode. Lauer and Bloch [63] proposed a hierarchy of embedding methods with three groups, namely, “samples”, “kernel”, and “optimization problem”. The first group is the data mode and the last two are within the algorithm mode. For a better understanding, we list a few of approaches in terms of their embedding mode below.

III-B1 Data mode

This is a basic mode of incorporating GCs into a model mostly from its data used. In apart from the methods in the virtual samples discussed previously, the other methods appeared, such as,

III-B2 Algorithm mode

This is a basic mode of incorporating GCs into a model mostly from its algorithm used. The methods mentioned previously, say, in objective prior or regularization, fall within this mode. The other methods can be:

III-B3 Structural mode

This is a basic mode of incorporating GCs into a model mostly from its structure used, such as

III-B4 Hybrid mode

This is a mixed mode by including at lest two basic modes for incorporating GCs into a model, such as

Note that the classification above may be roughly correct to some approaches. For example, we put Bayesian networks within a structural mode to stress on its structural information, but put graphical models within a hybrid mode to stress on its structural information and generation of virtual data.

For coupling forms, we adopt the notion of “Generalized Constraint (GC)” model [11] for explanations. Fig. 7 depicts a GC model, which basically consists of two modules, namely, knowledge-driven (KD) submodel and data-driven (DD) submodel. For simplifying the discussion, a time-invariant model is considered. A two-way coupling connection is applied between two submodels. For stressing on the modeling paradigm, a GC model is considered within the KDDM approach [101]. The general description of a GC model is given in a form of [11]:

| (6) |

where and are the input and output vectors, is a function for a complete model relation between and , and are the functions associated to the KD and DD submodels, respectively. is the parameter vector of the function , and are the parameter vectors associated to the functions and , respectively. The symbol “” represents a coupling operation between the two submodels.

Definition 10

Coupling forms refer to a few and specific means of integrating the KD and DD submodels when they are available.

In fact, we can view the given GCs to be a KD submodel. In 1992, Psichogios and Ungar [9] proposed an idea of using the first principle or empirical function to be KD submodels. When simulating a bioreaction process, they solved partial differential equations (PDEs) by a conventional approach except that a vector of physical parameters was estimated by a neural network submodel. Their HNN model applied the coupling between the two submodels in a composition form:

| (7) |

where the prior was applied implicitly in which the physical parameter vector was a nonlinear function to the input variables, rather than constant. We can call this form to be Composition I (with parameter for learning), which can include a whole set or a part set of parameters for learning. This study is very enlightening to show an important direction to advance the modeling approach by the following implementations:

-

•

Any theoretical or empirical model can be used as KD submodel so that the whole model keeps a physical explanation to the system or process investigated.

-

•

The DD submodel, using either ANNs or other nonlinear tools, is integrated so that some unknown relations, nonlinearity, or parameters of the system investigated can be learnt.

From the implementations above we can see that coupling form seems to be an extra challenge in the design of HNN or GC models. In 1994, Thompson and Kramer [10] presented an extensive discussions about synthesizing HNN models for various types of prior knowledge. After combining a parametric KD submodel and a nonparametric DD submodel, they called the whole mode to be a semiparametric model. They considered two structures, parallel and serial, in coupling two submodels. Based on the two structures, Hu et al. [2] showed the four coupling forms in Fig. 8, and their mathematical expressions:

| (8) |

The four forms are simple and common in applications. In a parallel structure with a superposition form (Fig. 8(a)), when a KD submodel serves as a core element to simulate the main trends about the process investigated, a DD submodel works as an error compensator for uncertainties from the residual between the KD submodel output and the target output. One good example is a design of a nonlinear Kalman filter in [102], where they applied a linear Kalman filter as a KD submodel and a neural network as a DD submodel to compensate for nonlinear deviations of the state variables. A multiplication form (Fig. 8(b)) was shown on a “Sinc” function in [2]:

| (9) |

where the upper part was known, and the lower part was approximated by a RBF submodel. Hu et al. [2] used this example to show a possible problem introduced by a coupling which is discussed in the next section.

In a serial structure, another two composition forms are shown in eq. (8). The form of Composition II (Fig. 8(c)) corresponds to the inner function to be known as a KD submodel, such as a wavelet preprocessor before a neural network submodel in EEG analysis [103]. The idea of Composition III appeared in [104] to force the output to be consistent with a distal teacher, like a KD submodel in Fig. 8(d). Coupling forms can go quite complicated if considering more other structures, such as a cyclic loop in a dynamic process [105], or using a combination of various forms [106, 107].

III-C Extra challenges in embedding GCs

This subsection will discuss three extra procedures, or challenges, which are not discussed in the conventional ML study. More challenges exists, such as the mathematical conditions of guaranteed better performance for GC models over the models without using GCs [2].

III-C1 Constraint mathematization

GC can be given in any representation forms shown in Table I. Actually, GCs in modeling are initially described by natural language. Therefore, in general, a procedure will be involved as defined below:

Definition 11

Constraint mathematization refers to a procedure in modeling to transform GCs from natural language descriptions into mathematical representations.

If GCs are given in a form of mathematical representations, the procedure above will be passed in modeling. However, when GCs are known only in a form of natural language descriptions, this procedure must be processed, like the given example in Table I. The most difficulty in constraint mathematization is due to the problem called semantic gap. When various definitions about it exist from different contexts, such as from retrieval applications in [108], we adopt the general definition:

Definition 12 ([109])

“A semantic gap is the difference in meanings between constructs formed within different representation systems.”

In [4], Hu suggested consider the semantic gap further by distinguishing two ways of transformations in modeling. A direct way is to transform natural language descriptions into mathematical representations. An inverse way is opposite to the direct one. Hence, the problem of semantic gap can be further extended by the two mathematical problems, namely, ill-defined and ill-posed problems. In [110], Lynch et al. discussed various definitions of an ill-defined problem from the different researchers and proposed their definition below:

Definition 13 ([110])

“A problem is ill-defined when essential concepts, relations, or solution criteria are un- or under-specified, open-textured, or intractable, requiring a solver to frame or recharacterize it. This recharacterization, and the resulting solution, are subject to debate.”

Hadamard [111] was the first to define a mathematical term well-posed problems in mathematical modeling. Three criteria are given about well-posed definition to reflect three mathematical properties, namely, existence, uniqueness and continuity, respectively. Based on that, Poggio et al. proposed a simply definition of ill-posed problems below:

Definition 14 ([47])

“Mathematically ill-posed problems are problems where the solution either does not exist or is not unique or does not depend continuously on the data.”

Any violation of one of the three properties will result in an ill-posed problem. Constraint mathematization works like a direct way of semantic gap, in which GCs may be ill defined. However, object recognition or constraint learning is an inverse way in which one generally encounters the problems with both ill-defined and ill-posed characterizations.

In [53, 112], good examples were shown in constraint mathematization of first-order logic GCs for kernel machines and DNNs, respectively. However, it may be not easy to conduct constraint mathematization directly. For example, affective computing [113] will face more serious problems of semantic gap and ill-defined problems. The main difficulty sources mostly come from ambiguity and subjectivity of the linguistic representations about emotional or mental entities in modeling. In a study of emotion/mood recognition of music [114], the linguistic terms were used, such as “valence” and “arousal” in Thayer’s two-dimensional emotion model [115], and emotion states like “happy”, “sad”, “calm”, etc. Many low-level and high-level features were used for establishing the relationship between music and emotion states. This study shows that we may need a model for constraint mathematization.

III-C2 Coupling form selection

If a GC model (Fig. 7) is used, a new procedure will appear in modeling as:

Definition 15

Coupling form selection (CFS) refers to a procedure in modeling to select a coupling form between the KD and DD submodels when they are available.

The subject of coupling form selection has not received much attention in ML or AI studies. Sometimes, the selection is determined by the problem given, like the multiplication form in approximation of “Sinc” function in eq. (9), where its upper part is known. In a general case, for the same GC or KD submodel, there may exist several, yet different, coupling forms to combine with a DD submodel. One example is about monotonicity of the prediction function with respect to some of the inputs. In [116], Gupta et al. presented 20 methods, including their own method. They divided those methods within four categories: A. constrain monotonic functions, B. post-process after training, C. penalize monotonicity violations when training, D. re-label samples to be monotonic before training. The four categories are better to be viewed in a structural mode with different coupling forms, such as Categories A and C are a superposition in Fig. 8(a), B in Fig. 8(d), and D in Fig. 8(c), respectively.

In [101], Fan et al. showed a study of CFS in their KDDM approach (Fig. 9). When a KD submodel (called GreenLab) was given, they used RBF networks as a DD submodel. Two models, KDDMsup in Fig. 9(a) and KDDMcom in Fig. 9(b), were investigated. The input variables were five environmental factors and the output was the total growth yield of tomato crop. After using the real-world data of tomato growth datasets from twelve greenhouse experiments over five years in a 12-fold cross-validation testing, KDDMcom was selected because it showed a better prediction performance over KDDMsup. In fact, KDDMcom demonstrated a better interpretation about one variable, , in plant growth dynamics than that of KDDMsup. Their study also demonstrated several advantages of KDDM approach over the conventional KD or DD models, such as predictions of yields from different types of organs even some training data were missing.

One can see that CFS is an extra challenge over the conventional modeling studies, but required to be explored systematically. We still need to define related criteria in the selection. When a performance is a main concern, some other issues may be also considered, such as learning speed, or interpretation of a model. CFS presents a new subject in AI studies when more models or tribes are merged together as the Master Algorithm.

III-C3 Bio-inspired scheme for imposing constraints

In the conventional ML study, the common scheme for imposing constraints is the Lagrange multiplier as a standard method [47, 74, 48]. The question can be asked like “When ANNs emulate the synaptic plasticity functions of human brains, does a human brain apply the Lagrange multiplier if giving a new set of constraints”? Reviewing the image example of Fig. 3 again, if one relies on Fig. 16 for the correct recognition, we still do not know with which mathematical methods do our brains apply for describing and imposing the constraints.

In [117], Cao et al. attempted to address this question based on the “Locality Principle (LP) ” [118, 119]. LP is originally come from classical physics in the law of gravity and states that an object is mostly influenced by its immediate surroundings. In computer science, Denning and Schwartz [118] pointed out in 1972 that “Programs, to one degree or another, obey the principle of locality” and “The locality principle flows from human cognitive and coordinative behaviory.” In 2005, Denning further described that: “The locality principle found application well beyond virtual memory” and “Locality of reference is a fundamental principle of computing with many applications.” In neuroscience, LP can be supported from the knowledge: certain locations of a brain are responsible for certain functions (e.g. language, perception, etc.) [120]. Hence, Cao et al. [117] stated that “All constraints can be viewed as memory. The principle provides both time efficiency and energy efficiency, which implies that constraints are better to be imposed through a local means.” They proposed a non-Lagrange multiplier method for equality constraints, falling within a locally imposing scheme (LIS). The main idea behind the proposed method was to make local modifications to the solutions after constraints were added. The method transformed equality constraint problems into unconstrained ones and solved them by a linear approach, so that convexity of constraints was no more an issue. The numerical examples were given on solving PDEs with Dirichlet or Neumann boundary constraints. The proposed method was possible to achieve an exact satisfaction of the equality constraints, while the Lagrange multiplier method presented only approximations. They further compared the two methods numerically on a one dimensional “Sinc”, , with the interpolation constraints as: and . The comparison aimed at “how to discover Lagrange multiplier method to be globally imposing scheme (GIS) or LIS?” They found the answers to be interpretation form depended. Suppose an interpretation form is given in the following expression:

| (10) |

where is the RBF output without constraints and is the modification output after constraints are added in. From Fig. 10, one can see that shows a global modification, or GIS, when using the Lagrange multiplier, but a local and smooth modification, or LIS, when using the proposed method. This interpretation form provided a locality interpretation from a “signal modification” sense. They also investigated the other interpretation form, but failed to give the clear conclusion from a “synaptic weight changes” sense.

The study in [117] is very preliminary, but shows an important direction for the future ML or AI studies by the following aspects:

-

•

Locality principle is one of the important bases for realizing time efficiency and energy efficiency of bio-inspired machines. We need to transfer principles, or high-level GCs, into mathematical methods in AI machine designs. Convolutional neural networks (CNNs) [90] and RBFs [121] show the good examples in terms of the principle, satisfying a restricted region of the receptive field in biological processes [122]. More investigations are needed to explore LP in a wider sense for designs, such as LIS on the inequality constraints, or its role in continuous learning with another important GC: no catastrophic forgetting (NCF) [123, 124].

-

•

The hypothesis and selection of LIS and/or GIS suggest a new subject in the study of brain modeling or bio-inspired machines. When LP is rooted on the classical physics, quantum mechanics might violate locality from the entanglement phenomenon [125]. The different studies have been reported about brain modeling based on quantum theory, such as quantum mind [126, 127] and quantum cognition [128]. Those studies suggested that consciousness should be a quantum-type prior, and should be treated with non-classical methods. The subject shows that LP or nonlocality will be a main issue for realizing a brain-inspired machine, and GCs should be treated according to their principle behind.

IV Extracting GCs

In 1983, Scott [129] examined the nature of learning and presented the definition below:

“Learning is any process through which a system acquires synthetic a posteriori knowledge.”

The definition exactly reflects the purpose of learning in AI systems. After a posterioi knowledge is acquired, AI systems are better to take it as a priori so that the systems are able to advance along with explicit knowledge updated and increased through an automatic or interactive means.

Extracting knowledge is a main concern in the areas of knowledge discovery in databases (KDD) and data mining [130, 131, 132, 133]. It is also an important strategy in the context of adding transparency of ANN modeling [2] or IAI/XAI [33]. Fig. 6 shows a selection of representation formats (or forms) to be a first procedure within knowledge (or GCs) extracting approaches. Three basic forms are listed, namely, linguistic, mathematic and graphic, but the mathematic form is considered in a narrow sense here for not including the other two forms. The other form can be a non-formal language or a combination of the basic forms. This classification is roughly correct for the purpose of distinguishing the knowledge forms about extracting approaches. Furthermore, extracting GCs can be viewed as another subject defined below:

Definition 16

Generalized constraint learning (GCL) refers to a problem in machine learning to obtain a set of generalized constraint(s) which is embedded in the dataset(s) and/or the system(s) investigated.

GCL is an extension of prior learning (PL) [134], constrained based approach (CBA) [48], and constraint learning (CL) [135]. It stresses GCs from both data and system sources. GCL suggests that AI machines should serve as a tool for scientific discovery [136], such as on human brains. Supposing that a recognition mechanism, or an objective function, is fixed in a brain, GCs will explain why we identify a man, rather than a woman, from Fig. 3. In the followings, we review the extracting approaches, or GCL, according to the representation formats in Fig. 6.

IV-A Extracting linguistic GCs

The form of linguistic representation of knowledge considered here is symbolic rules, that is,

| (11) |

In 1988, Gallant [137] was a pioneer of extracting rules using ANN machines, for the purpose of generating an expert system automatically from data. He called such machine connectionist expert system (CES). The rules extracted were conventional (Boolean) symbolic ones. This work was very stimulating by showing a novel way for ANN modeling to obtain explicit knowledge:

| (12) |

in comparison with a conventional way:

| (13) |

According to Haykin [78], ANNs gain knowledge from data learning and store the knowledge with its model parameters, i.e. synaptic weights. Dienes and Pemer [138] considered that ANNs produce a type of implicit knowledge from those parameters.

After the study of Gallant [137], a number of investigations [139, 140, 141, 142, 143, 144, 145, 98] appeared on rule extraction from ANNs. In [139], Wang and Mendel developed an approach of generating fuzzy rules from numerical data. Fuzzy rules are a linguistic form to represent vague and imprecise information. In their fuzzy model, its fuzzy rule base was able to be formed by experts or rules extraction from the data. Fuzzy models are the same as ANNs in the sense of universal approximator [146, 91], but beneficial with regards to rules (or linguistic GCs) in modeling. In [142], Tsukimoto extracted rules applicable for both continuous and discrete values from either recurrent neural network (RNN) or multilayer perceptron (MLP). Several review papers [147, 148, 149] reported on rule extractions and presented more different approaches.

In evaluation of ANN based approaches, Andrew et al. [147] provided a taxonomy of five features. Among the features, the one called quality seems mostly important, for which they proposed four criteria in rule quality examinations, namely, accuracy, fidelity, consistency, and comprehensibility. They suggested the measurements of the criteria. This work shows an importance to evaluate quality of extracted GCs from different aspects.

IV-B Extracting mathematic GCs

In this subsection, we refer mathematic GCs in a narrow sense without including the forms of linguistic and graphic representations. We present several investigations which fall within the subjects of extracting mathematic GCs.

IV-B1 GCL for unknown parameters or properties

In [2], a GC was given first in a linguistic form, as shown by the GCs example in Table 1. This example describes the Mackey-Glass dynamic process, shown in eq. (5). The linguistic GC (or hypothesis) was finally transformed into a mathematic form as

| (14) |

For the given time series dataset with and , Hu et al. [2] used their GCNN model for the prediction and also obtained the solutions and .

In the same paper, Hu et al. also used GCNN as a hypothesis tool to discover the hidden property of data in approximating “Sinc” function, eq. (9). For the given GC, a GCNN model exhibited an abrupt change in the output when . They suggested the hypothesis of “removable singularity” for the abrupt change, and confirmed it from the data and analysis procedures. Their study showed that ML models can be a tool for identifying unknown parameters and hidden properties of physical systems.

IV-B2 GCL for unknown functionals

Recently, Alaa and van der Schaar [34] proposed a novel and encouraging direction to extract mathematic GCs in form of analytic functionals from ANN models. In their study, a black-box ANN model was described by . They formed a symbolic metamodel using Meijer -functions. The class of Meijer -functions includes a wide spectrum of common functions used in modeling, such as, polynomial, exponential, logarithmic, trigonometric, and Bessel functions. Moreover, Meijer -functions are analytic and closed-form functions, even for their differential forms. By minimizing a “metamodeling loss” , they obtained with a parameterized representation of symbolic expressions. They conducted numerical experiments on four different functions as the given GCs, that is, exponential, rational, Bessel and sinusoidal functions, respectively. Their approach was able to figure out the first three functional forms among the four functions.

In Fig. 1, we show that functional space is among the primary concerns in ML or AI. When the true functional form is unknown to the system investigated, modelers will involve a subject defined below:

Definition 17

Functional form selection (FFS) refers to a procedure in modeling to select a suitable functional form for describing the system investigated from a set of candidate functionals.

FFS has received little attention in AI communities. For simplification, most of the existing AI models directly apply a preferred functional without involving FFS. If considering AI to be a discovering tool, FFS should be considered first in modeling, particularly to complex processes, say, in economics [150]. In view of system identification [151, 152], FFS will be more basic and difficult than parameter estimations in nonlinear modeling. The study in [34] shows an important “gateway” in FFS, as well as in obtaining more fundamental knowledge, functional forms, about physical systems. Similar to the subject of CFS, FFS shows another open problem requiring a systematic study for ML or AI communities.

IV-B3 Parameter identifiability

Bellman and Åström [153] proposed the definition of structural identifiability (SI) in modeling. The term “structural” means the internal structure of a model, so that SI is independent of the data applied. Because any ML model with a finite set of parameters can be viewed as a “parameter learning machine” [11], Ran and Hu adopted the notion of parameter identifiability to be the theoretical uniqueness of model parameters in statistical learning [154]. Yang et al. [155] showed an example why parameter (or structural) identifiability becomes a prerequisite before estimating a physical parameter in GCNN model (Fig. 8(b)) in a form of:

| (15) |

where damping coefficient () is a single physical parameter in the KD submodel . They demonstrated that is unidentifiable if is a RBF submodel, which suggests no guarantee of convergence to the true value of . However, becomes identifiable if a sigmoidal feed-forward network (FFNs) submodel is applied. In a review paper [154], Ran and Hu presented the reasons of identifiability study in ML models:

“Identifiability analysis is important not only for models whose parameters have physically interpretable meaning, but also for models whose parameters have no physical implications, because identifiability has a significant influence on many aspects of a learning problem, such as estimation theory, hypothesis testing, model selection, learning algorithm, learning dynamic, and Bayesian inference.”

For example, Amari et al. [156] showed that FNNs or Gaussian mixture models (GMMs) will exhibit very slow dynamics of learning due to the nonidentifiability of parameters from plateaus of neuromanifold. Hence, parameter identifiability provides mathematical understanding, or explainability, about learning machines in terms of their parameter spaces involved. For details about parameter identifiability in ML, see [157, 67, 154] and the references therein.

IV-C Extracting graphic GCs

Human is more efficient in gaining knowledge from graphic means than from other means, such as from linguistic one. This paper considers graphic GCs in a wider sense. We refer graphic GCs to be GCs described by a graphic representation as well as shown by its graphic form. Hence, this subsection also includes graphic approaches for extracting GCs.

IV-C1 Data visualization for GCs

Data visualization (DV) is a graphic representation of data for modelers/users to understand the features of the data. In ML studies, DV is one of the most common techniques in modeling so that modelers are able to gain the related GCs for the design guidelines as well as for the interpretation to the response of models [158]. This technique can extend to the subjects of information visualization (IV) [159] or visual analytics (VA) [160]. Some DV tools are able to present knowledge from data in a mathematic form, such as histogram, heatmap, etc. We present an example below to show that this is not a case in general. DV tools usually exhibit knowledge in a form of GCs, which require users to seek and abstract. Constraint mathematization will be another challenge in abstractions of knowledge.

When high-dimensional data are difficult to interpret, researchers developed many approaches for viewing their intrinsic structures in a low-dimensional space [161]. In [162], van der Maaten and Hinton proposed an approach called t-SNE (t-Distributed Stochastic Neighbour Embedding) for visualization of high-dimensional data in a 2-D or 3-D space. Different from a PCA approach, t-SNE is a nonlinear dimensionality reduction technique. For the handwritten digits 0 to 9 in the MNIST dataset, they showed the 2-D visualization results with t-SNE (Fig. 11). The raw data is given in high dimensions with a size of 784 ( pixels). The t-SNE plot of Fig. 11 clearly demonstrates the hidden patterns of clusters in the high dimensional data. The patterns present a rather typical form of GCs instead of CCs. Modelers need to summarize them in form of either natural languages or mathematical descriptions, such as visual outliers [163] in classifications, or semantic emergence, shift, or death in dynamic word embedding [164] from t-SNE plots.

The most challenge in visualization is to develop/seek a DV tool suitable for revealing intrinsic properties, or theoretical understanding, about data. One good example is a study by Shwartz-Ziv and Tishby [165] in proposing a DV tool, called information plane. This tool presented a novel visualization of data from information-theoretic understanding, and was used for explaining the structural properties and leaning properties of DNNs.

IV-C2 Model visualization for GCs

Model visualization (MV) is a graphic representation of ML/AI models about their architectures, learning processes, decision patterns, or related entities for modelers/users to understand the properties of the models. MV is another means to foster insights into models, and it may often involve techniques from DV, such as learning trajectories in optimizations [166], or a heatmap for explaining convolutional neural networks (CNNs) [167, 168].

If regardless of a common representation of architecture diagrams of models, it was reported that Hinton diagrams [169] was among the first tools in visualizing ANN models [170]. This tool was designed for viewing connection strengths in ANNs, using color to denote sign and area to denote magnitude. With the help of Hinton diagram, Wu et al. [171] gained the knowledge about unimportant weights for pruning ANN architectures. In 1992, Craven and Shavlik [170] reviewed a number of visualization techniques for understanding decision-making processes of ANNs, such as Hinton diagrams [169], bond diagram and Trajectory diagrams [172], etc. They also developed a tool, called Lascaux, for visualizing ANNs. Graphic symbols were used to show the connections and neurons with activation and error signals, so that modelers were able to gain some insight into the learning behavior of ANNs. In the early study of MV, some researchers proposed a study of “Illuminating or opening the black box” of ANN models [173, 174]. In [173], Olden and Jackson applied neural interpretation diagram (NID), Garson’s algorithm [175], and sensitivity analysis, so that variable selections can be understood by visual perception of modelers. In [174], Tzeng and Ma used a new MV for knowing the working architectures of ANNs when the voxel of an image was corresponding to the brain material or not. The different architectures provided the relational knowledge between the important variables and voxel types.

When deep learning (DL) models are emerged, more MV studies are reported on understanding the inner-workings of this type of black-box models [176, 177]. In [178], Liu et al. developed a MV tool for visualizing the deep CNNs, called CNNVis. Fig. 12 shows a CNN model including four groups of convolutional layers and two fully connected layers. The model consisted of thousands of neurons and millions of connections in its architecture. Using CNNVis to simplify large graphs, they were able to show representations in clusters for better and fast understanding about pattern knowledge of CNN models. They conducted case studies on the influence of network architectures and diagnosis of a failed training process to demonstrate the benefits of using CNNVis. In a different GC form, Zhang et al. [179] applied decision trees in a semantic form to interpret CNNs models. For the other DL models, one can refer to the studies on recurrent neural networks (RNNs) [180, 181] and reinforcement learning (RL) [182, 183]. Some review papers [184, 177, 185] appeared recently about visualization of DL models. In general, MV techniques provide intuitive GCs in an unstructured form, and present a human-in-the-loop interactive of AI [185], such as to modify models or to adjust learning processes [160, 186].

IV-C3 Discovering GCs from graphical models

In general, we can say that graphical models (GMs), using graphic representations, are the best and direct means to describe worlds, either physical or cyber one. The main reason is from the facts below: (i) most objects are distinguished from their geometries, and (ii) they may be linked internally by their own entities or externally to each others. For every objects or data, their underlying graph structures are the most important knowledge we need to explore [188]. Buntine [189] pointed out that “Probabilistic graphical models are a unified qualitative and quantitative framework for representing and reasoning with probabilities and independencies”, and “are an attractive modeling tool for knowledge discovery”. We will present two sets of studies below to show that the knowledge discovered in GMs is often given in a form of GCs, instead of CCs.

In [187], Zhang et al. developed a tool, called ExpressGNN, by combing Markov logic networks (MLNs) [99] and graph neural networks (GNNs). The motivation was come from the facts that MLNs are computationally intractable from an NP-complete problem and the data-driven GNNs are unable to leverage the domain knowledge. Fig. 13 shows the working principle of ExpressGNN, in which knowledge graph (KG) is a knowledge base representing a collection of interlinked descriptions of entities. ExpressGNN scales MLN inference to KG problems and applies GNN for variational inference. With the GNN controllable embeddings, ExpressGNN were able to achieves more efficiency than MLNs in logical reasoning as well as a balance between expressiveness and simplicity of the model. In the numerical investigations, they demonstrated that ExpressGNN was able to fulfill zero-shot learning (ZSL). Fig. 14 illustrates an example [190] about ZLS, which is a learning to recognize unseen visual classes with some semantic information (or GCs) given. The term “zero-shot” means no instance to be given in the training dataset. ZLS is a kind of GCL because semantic information and/or unseen classes may be ill-defined or incompletely described in a form of GCs. Semantic gap may be involved for GMs, both in encoding and decoding of GCs, such as discovering unseen classes.

Another example is about studying co-evolving financial time-serious problems from GMs. In [191], Bai et al. proposed a tool, called EDTWK (Entropic Dynamic Time Warping Kernels), for time-varying financial networks. They computed the commute time matrix on each of the network structures for satisfying a GC as “the financial crises are usually caused by a set of the most mutually correlated stocks while having less uncertainty [192, 191]”. Based on the commute time matrix, a dominant correlated stock set was identified for processing. They conducted numerical investigations from the New York Stock Exchange (NYSE) dataset. For the given data, EDTWK was formed as a family of time-varying financial network with a fixed number of 347 vertices from 347 stocks and varying edge weights for the 5976 trading days. They used EDTWK to classify the time-varying financial networks into corresponding stages of each financial crisis period. Five sets of the crisis periods were studied, that is, Black Monday, Dot-com Bubble, 1997 Asia Financial Crisis, Newcentury Financial Bankruptcy, and Lehman Crisis. EDTWK was able to detect them from the given data and to characterize different stages in time-varying financial network evolutions. Some crisis events were shown in 3-D embedding, such as the two plots from two events in Fig. 15. One can see that the main challenge is still about how to discover and abstract GCs from the model and its visualization, either for modelers or for machines.

The examples given above indicate that, in AI modeling, it is a promising direction of merging other models with GMs, or utilizing graphic representations as much as possible. The studies in natural language processing (NLP) [193, 194, 195] have demonstrated that GMs can process natural language and have achieved much better performance than the conventional NLP models. Due to a transparent feature of GMs [67], more studies have been reported, such as on GNNs [196, 100, 197] and references therein.

|

| (a) |

|

| (b) |

V Summary and final remarks

In this paper, we presented a comprehensive review of GCs as a novel mathematical problem in AI modeling. Comparisons between CCs and GCs were given from several aspects, such as problem domains, representation forms, related tasks, etc. in Table I. One can observe that GCs provide a much larger space over CCs in the future AI studies. We introduced the history of GCs and considered the idea behind Zadeh [7] on GCs quite stimulating for us to go further. Various methods were provided along a hierarchical way in Fig. 6, so that one can get a direct knowledge about working format behind every method. Although most researchers have not applied the notion of GCs, they originally encountered problems of GCs, rather than CCs. The given examples demonstrated GCs to be a general problem in constructions of AI machines, and exhibited extra challenges. One may question about the “new mathematical problem” proposed in the paper, because it is significantly different with the conventional descriptions in mathematics. The history that Shannon [14] developed a mathematical theory of communication from seeking new problems inspired the authors to identify the new mathematical problem as a starting point for a mathematical theory of AI. However, defining new mathematical problems of AI will be far more complicated than the conventions by covering a broad spectrum of disciplines. This paper attempted to support that GCs should be a necessary set in learning target selection [4] towards a mathematical theory of AI. GCs will define every learning approaches and their outcomes fundamentally.

In the paper, we also presented our perspectives about future AI machines in related to GCs. We advocated the notion of big knowledge and redefined XAI for pursuing deep knowledge. GCs will provide a critical solution to achieving XAI. We expect that AI machines will advance themselves in terms of explicit knowledge via increased GCs for its big and deep knowledge goals. However, increased GCs will bring more theoretical challenges, such as:

- •

The challenge itself falls within XAI and implies importance in seeking a theoretical boundary of AI. An excellent example is from Turing’s study on the halting problem for Turing machines [200]. This theoretical study suggests that we need to avoid the efforts of generating a perpetual motion machine in AI applications. For the concerns or studies like “Can AI takeover humans?” or “superhuman intelligence”, we suggest that they should be finally answered in explanations of mathematics, rather than only in descriptions of linguistic arguments.

For the final remarks of the paper, we cite one saying from Confucius and present brief discussions on it below:

“Learning without thinking results in

confusion (knowledge).

Thinking without learning ends in

empty (new knowledge).”

by Confucius (551–479 BCE) When Confucius suggested the saying for his students, we take it by adding key words “knowledge” and “new knowledge” from AI perspective. The saying above exactly suggests that human-level AI should have both procedures for their developments, i.e., bottom-up learning and top-down thinking, where GCs will play a key role in mathematical modeling at both input and output levels for future AI machines.

[GCs for Fig. 3]

.

References

- [1] R. E. Kalman, “Kailath lecture, may 11,” Stanford University, Tech. Rep., 2008.

- [2] B.-G. Hu, H.-B. Qu, Y. Wang, and S.-H. Yang, “A generalized constraint neural network model: Associating partially known relationships for nonlinear regressions,” Inf. Sci., vol. 179, no. 12, pp. 1929–1943, May 2009.

- [3] R. O. Duda, P. E. Hart, and D. Stork, Pattern Classification, 2nd ed. New York, NY, USA: Wiley, 2001.

- [4] B.-G. Hu, “Information theory and its relation to machine learning,” in Proc. Chin. Intell. Autom. Conf. Berlin, Heidelberg: Springer, 2015, pp. 1–11.

- [5] B. E. Greene, “Application of generalized constraints in the stiffness method of structural analysis,” AIAA J., vol. 4, no. 9, pp. 1531–1537, Sep. 1966.

- [6] L. A. Zadeh, “Outline of a computational approach to meaning and knowledge representation based on the concept of a generalized assignment statement,” in Proc. Intl. Conf. Artif. Intell. Man-Mach. Syst. Springer, 1986, pp. 198–211.

- [7] ——, “Fuzzy logic = computing with words,” IEEE Trans. Fuzzy Syst., vol. 4, no. 2, pp. 103–111, May 1996.

- [8] ——, “Generalized theory of uncertainty: Principal concepts and ideas,” in Fundamental Uncertainty, S. M. D. Brandolini and R. Scazzieri, Eds. London: Palgrave Macmillan, 2011, pp. 104–150.

- [9] D. Psichogios and L. H. Ungar, “A hybrid neural network – first principles approach to process modeling,” AIChE J., vol. 38, no. 10, p. 1499–1511, Oct. 1992.

- [10] M. L. Thompson and M. A. Kramer, “Modeling chemical processes using prior knowledge and neural networks,” AIChE J., vol. 40, no. 8, p. 1328–1340, Aug. 1994.

- [11] Z.-Y. Ran and B.-G. Hu, “Determining structural identifiability of learning machines,” Neurocomputing, vol. 127, pp. 88–97, Mar. 2014.

- [12] H. Blockee, “Hypothesis language,” in Encyclopedia of Machine Learning, C. Sammut and G. I. Webb, Eds. Boston, MA: Springer, 2001.

- [13] S. J. Russell and P. Norvig, Artificial Intelligence-A Modern Approach, 3rd ed. Pearson, 2010.

- [14] C. E. Shannon, “A mathematical theory of communication,” Bell Syst. Tech. J., vol. 27, no. 3, pp. 379–423, 1948.

- [15] P. Domingos, The Master Algorithm: How The Quest for The Ultimate Learning Machine Will Remake Our World. New York: Basic Books, 2015.

- [16] P. Wang and B. Goertzel, “Introduction: Aspects of artificial general intelligence,” in Advance of Artificial General Intelligence, B. Goertzel and P. Wang, Eds. Amsterdam: IOS Press, 2007, pp. 1–16.

- [17] G. E. Box, “Science and statistics,” J. Am. Stat. Assoc., vol. 71, no. 356, pp. 791–799, 1976.

- [18] W. M. Trochim and J. P. Donnelly, Research Methods Knowledge Base (Vol.2). Cincinnati, OH: Atomic Dog Publishing, 2001.

- [19] B.-G. Hu, W.-M. Dong, and F.-Y. Huang, “Towards a big-data-and-big-knowledge based modeling approach for future artificial general intelligence,” Comm. CAA, vol. 37, no. 3, pp. 25–31, 2016.

- [20] P. B. Porter, “Find the hidden man,” Am. J. Psychol., vol. 67, pp. 550–551, 1954.

- [21] P. Niyogi, F. Girosi, and T. Poggio, “Incorporating prior information in machine learning by creating virtual examples,” in Proc. IEEE, vol. 86, no. 11, Nov. 1998, pp. 2196–2209.

- [22] X.-D. Wu, J. He, R.-Q. Lu, and N.-N. Zheng, “From big data to big knowledge: Hace + bigke,” Acta Automatica Sinica, vol. 42, no. 7, pp. 965–982, Jun. 2016.

- [23] Z.-Y. Ran and B.-G. Hu, “Parameter identifiability and its key issues in statistical machine learning,” Acta Automatica Sinica, vol. 43, no. 10, pp. 1677–1686, Oct. 2017.

- [24] D. Marr, “Artificial intelligence - a personal view,” Artif. Intell., vol. 9, no. 1, pp. 37–48, Aug. 1977.

- [25] A. Saygin, I. Cicekli, and V. Akman, “Turing test: 50 years later,” Minds Mach., vol. 10, p. 463–518, Nov. 2000.

- [26] A. Newell and H. Simon, “Computer science as empirical inquiry: Symbols and search,” Commun. ACM, vol. 19, no. 3, pp. 113–126, Mar. 1976.

- [27] B. Goertzel, “Artificial general intelligence: Concept, state of the art, and future prospects,” J. Artif. General Intelli., vol. 5, no. 1, pp. 1–48, Dec. 2014.

- [28] R. A. Brooks and L. A. Stein, “Building brains for bodies,” Auton. Robot., vol. 1, pp. 7–25, 1994.

- [29] P. Wang, “The goal of artificial intelligence,” in Rigid Flexibility: The Logic of Intelligence. Netherlands: Springer, 2006, pp. 3–27.

- [30] F. Doshi-Velez and B. Kim, “Towards a rigorous science of interpretable machine learning,” arXiv preprints arXiv:1702.08608, 2017.

- [31] M. T. Ribeiro, S. Singh, and C. Guestrin, “Why should i trust you? explaining the predictions of any classifier,” in Proc. 22nd ACM SIGKDD Int. Conf., Aug. 2016, pp. 1135–1144.

- [32] S. M. Lundberg and S. I. Lee, “A unified approach to interpreting model predictions,” in Proc. Adv. Neural Inf. Process Syst. (NeurIPS), 2017, pp. 4765–4774.

- [33] W. J. Murdoch, C. Singh, K. Kumbier, R. Abbasi-Asl, and B. Yu, “Interpretable machine learning: Definitions, methods, and applications,” arXiv preprints arXiv:1901.04592, 2019.

- [34] A. M. Alaa and M. van der Schaar, “Demystifying black-box models with symbolic metamodels,” in Proc. Adv. Neural Inf. Process Syst.(NeurIPS), 2019, pp. 11 304–11 314.

- [35] Z. Yang, A. Zhang, and A. Sudjianto, “Enhancing explainability of neural networks through architecture constraints,” IEEE Trans. Neural Netw. Learn. Syst., pp. 1–12, Jul. 2020.

- [36] D. Doran, S. Schulz, and T. R. Besold, “What does explainable AI really mean? A new conceptualization of perspectives,” arXiv preprints arXiv:1710.00794, 2017.

- [37] R. Guidotti, A. Monreale, S. Ruggieri, F. Turini, F. Giannotti, and D. Pedreschi, “A survey of methods for explaining black box models,” ACM Comput. Surv., vol. 51, no. 5, pp. 1–42, 2018.

- [38] S. Mohseni, N. Zarei, and E. D. Ragan, “A multidisciplinary survey and framework for design and evaluation of explainable ai systems,” arXiv preprints arXiv:1811.11839, 2018.

- [39] N. Xie, G. Ras, M. van Gerven, and D. Doran, “Explainable deep learning: A field guide for the uninitiated,” arXiv preprints arXiv:2004.14545, 2020.

- [40] S. Becker and G. Hinton, “A self-organizing neural network that discovers surfaces in random-dot stereograms,” Nature, vol. 355, pp. 161–163, Jan. 1992.

- [41] B.-G. Hu, Y. Wang, H.-B. Qu, and S.-H. Yang, “How to add transparency to artificial neural networks?” Pattern Recognit. Artif. Intell., vol. 20, no. 1, pp. 72–84, Mar. 2007.

- [42] Y.-J. Qu and B.-G. Hu, “Generalized constraint neural network regression model subject to linear priors,” IEEE Trans. Neural Netw., vol. 22, no. 12, p. 2447–2459, Sep. 2011.

- [43] Y. Bengio, A. Courville, and P. Vincent, “Representation learning: A review and new perspectives,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 35, no. 7, pp. 1798–1828, Aug. 2013.

- [44] H. Jeffreys, “An invariant form for the prior probability in estimation problems,” Proceedings of the Royal Society of London. Series A. Mathematical and Physical Sciences, vol. 186, no. 1007, pp. 453–461, 1946.

- [45] J. M. Bernardo and A. F. Smith, Bayesian theory. John Wiley & Sons, 2009, vol. 405.

- [46] A. N. Tikhonov, “On the solution of ill-posed problems and the method of regularization,” Dokl. Akad. Nauk SSSR, vol. 151, no. 3, pp. 501–504), 1963.

- [47] T. Poggio, H. Voorhees, and A. Yuille, “A regularized solution to edge detection,” J. Complex., vol. 4, no. 2, pp. 106–123, Jun. 1988.

- [48] M. Gori, Machine Learning: A Constrained-Based Approach. Morgan Kauffman, 2018.

- [49] R. Tibshirani, “Regression shrinkage and selection via the lasso,” J. Royal Stat. Soc. Ser. B (Methodol.), vol. 58, no. 1, pp. 267–288, 1996.

- [50] C. M. Bishop, “Curvature-driven smoothing: a learning algorithm for feedforward networks,” IEEE Trans. Neural Netw., vol. 4, no. 5, pp. 882–884, Sep. 1993.

- [51] Q. Zhao, G. Zhou, L. Zhang, A. Cichocki, and S. I. Amari, “Bayesian robust tensor factorization for incomplete multiway data,” IEEE Trans. Neural Netw. Learn. Syst., vol. 27, no. 4, pp. 736–748, Apr. 2015.

- [52] Y. Wang, L. Wu, X. Lin, and J. Gao, “Multiview spectral clustering via structured low-rank matrix factorization,” IEEE Trans. Neural Netw. Learn. Syst., vol. 29, no. 10, pp. 4833–4843, Oct. 2018.

- [53] M. Diligenti, M. Gori, M. Maggini, and L. Rigutini, “Bridging logic and kernel machines,” Mach. Learn., vol. 86, no. 1, pp. 57–88, 2012.

- [54] G. G. Towell and J. W. Shavlik, “Knowledge-based artificial neural networks,” Artif. Intell., vol. 70, no. 1-2, pp. 119–165, Oct. 1994.

- [55] H. Ying, Fuzzy Control and Modeling:Analytical Foundations and Applications. Wiley-IEEE Press, Aug. 2000.

- [56] Z. Pawlak, Rough Sets. In Theoretical Aspects of Reasoning About Data. Netherlands: Kluwer, 1991.

- [57] P. Smets, “Belief functions: The disjunctive rule of combination and the generalized bayesian theorem,” Int. J. Approx. Reasoning, vol. 9, no. 1, pp. 1–35, Aug. 1993.

- [58] Y. S. Abu-Mostafa, “A method for learning from hints,” in Proc. Adv. Neural Inf. Process Syst.(NeurIPS), San Francisco, CA, USA, 2018, pp. 73–80.

- [59] ——, “Machines that learn from hints,” Sci. Am., vol. 272, no. 4, pp. 64–69, Apr. 1995.

- [60] A. K. Jain, “Data clustering: 50 years beyond k-means,” Pattern Recognit. Lett., vol. 31, no. 8, pp. 651–666, Jun. 2010.

- [61] A. Peretti and A. Roveda, “On the mathematical background of google pagerank algorithm,” University of Verona, Department of Economics, Tech. Rep. 25, 2014.

- [62] C.-G. Li, X. Mei, and B.-G. Hu, “Unsupervised ranking of multi-attribute objects based on principal curves,” IEEE Trans. Knowl. Data Eng., vol. 27, no. 12, pp. 3404–3416, 2015.

- [63] F. Lauer and G. Bloch, “Incorporating prior knowledge in support vector regression,” Mach. Learn., vol. 70, pp. 89–118, 2008.