Generalization on the Enhancement of Layerwise Relevance Interpretability of Deep Neural Network

Abstract

The practical application of deep neural networks are still limited by their lack of transparency. One of the efforts to provide explanation for decisions made by artificial intelligence (AI) is the use of saliency or heat maps highlighting relevant regions that contribute significantly to its prediction. A layer-wise amplitude filtering method was previously introduced to improve the quality of heatmaps, performing error corrections by noise-spike suppression. In this study, we generalize the layerwise error correction by considering any identifiable error and assuming there exists a groundtruth interpretable information. The forms of errors propagated through layerwise relevance methods are studied and we propose a filtering technique for interpretability signal rectification taylored to the trend of signal amplitude of the particular neural network used. Finally, we put forth arguments for the use of groundtruth interpretable information.

1 Introduction

The success of deep learning (DL) predictive capability has not been followed by the understanding of its inner working mechanism. Generally, DL algorithms are still considered black-boxes. The need to understand the algorithms becomes clear especially when machine learning (ML) and neural network (NN) algorithms start to permeate into many aspects of the society, such as the medical sector, where accountability and responsibility are of utmost importance. Thus, a variety of attempts to understand them have emerged [1, 2, 3, 4]. Amongst them, visualization method is popular for understanding machine decision. Class Activation Maps (CAM) and its variants [5, 6] have been developed to extract more precise features in a data sample that contributes to the prediction. The information is presented as saliency maps or heatmaps, which are often considered readily interpretable and reader-friendly. Likewise, Layerwise Relevance Propagation (LRP) [7, 8] produces saliency map by decomposing and back-propagating modified signals that have been fed-forward during the prediction phase. In this manner, the prediction is “explained”, in particular by normalization and selection of positive signals. Also see guided backpropagation [9] and the excellent interactive presentation of heatmap visual comparisons in [10].

The quality and effectiveness of interpretable heatmaps have been demonstrated in several ways. CAM [5] and GradCAM [6] heatmaps were shown to improve the localization on ILSVRC datasets. [11] provides an ingenious method to pick important pixels to the prediction of a class compared to one other selected class . These pixels are deleted, and the desired change in log odds scores is successfully demonstrated. In [12], 3D U-Nets are used to perform lesion segmentation using multi-modal MRI data, a process of automation aimed at improving stroke diagnosis pipeline. LRP is used to extract interpretable information to analyse the region in the MRI data that contributes to the localization of lesion. Inclusivity coefficient is used to quantify the heatmap quality.

However, the heatmaps themselves are often not transparently presented. For some of the saliency methods mentioned above, conceptual uniformity may not have been attained. We define conceptual uniformity as the consistent attribution of relevance to features within or outside the object of interest being classified, segmented etc. Some heatmaps may highlight inexplicably different regions for the same class of prediction, even with correct predictions (consider [13, 14, 15]). Note that the standard for conceptual uniformity is not yet established, but preferably it is flexible enough to accommodate prediction based on the contribution of background information (otherwise the problem becomes similar to instance segmentation). Using the ideas defined in [16], this means the concept activation vectors (CAV) are pointing towards similar directions for a specific concept.

This paper extends the work in [12], which hypothesized that heatmap errors are propagated through spikes in the LRP signals in the U-Net. [12] thus applied amplitude filtering to reduce the spikes to rectify the LRP signals. The contributions of this paper are the following:

-

1.

We present a theoretical framework on the effect of filtering for the rectification of interpretability signals, generalizing amplitude filtering for the LRP signals in [12]. The framework assumes that groundtruth interpretable information exists and shows that optimized filter parameters could put an upper bound to the mean error value.

-

2.

We show that the hypothesis regarding error propagation in [12] is not general by defining the quantity MP (see later). It is however still possible to apply similar logic to rectify LRP signals through filtering, as suggested by point 1. In particular, if spikes are informative features (rather than errors) in a particular NN, then we use amplitude amplification filters (rather than amplitude clamp filters) for the spikes to improve the quality of interpretable information.

The importance of interpretability groundtruth is not only theoretical, but also evident in practice. Observe the relevance provided by the heatmap shown in figure 1 of [17]. Number 3 is predicted by supposedly considering the relevance of the regions around the middle protrusion of the digit 3. We can agree with this easily with human intuition. However, without conceptual uniformity (i.e. when there are many other predictions of the digit 3 with different heatmaps) the explanation provided by the heatmap [17] is said to be given after the fact and suffers from hindsight justification. If groundtruths for heatmaps are available, we can avoid such arbitrariness. With groundtruth, inexplicable parts of the heatmaps such as label 8 "Military Uniform" (observe the stars in the jet-plane) and label 13 "bison" (the wirenet appears with high attribution values) [10] might be improved. Deciding on such groundtruths may also help spur discussions on what is considered interpretable information. As an example, in figure 1 of [5], the heatmap for the classification "cutting-tree" may be reconsidered, perhaps with higher attribution on the whole tree. As for [12], the metric used to measure the quality of heatmaps does not contain constraint from medical knowledge. It acknowledges that a robust relevant medical knowledge is not available, raising possible questions of what groundtruth should be provided. The explainable AI community may need more discussions on what constitutes relevant interpretable information.

2 Background and Definitions

In this section, we aim to generalize the structure of the algorithm used to generate “explanation” used by LRP while retaining its layered form, as shown in figure 1. In this work, is used in place of LRP, although it should also admit any different algorithms that work by propagating some “relevance” values back through the layers with trainable weights.

Image-like components. Each is image-like; the superscript (k) is used here to denote layer k. We can set where corresponds to greyscale image, to the three RGB channels, width and height. Feature maps are given by , and they could be for example the usual feature maps from convolutional layers or a layer in the dense network. is the predictive output for the particular image , where we do not include the index denoting the n-th data point in the dataset.

Since these components are image-like, they have values in spatial domains. Indeed, we can treat as functions and is for example, discrete index . In this case, is a functional. We can also treat as a high-dimensional vector instead, which will be more convenient for some computation.

Neural network formula and relevance propagation. We can then write the NN and the relevance propagation (the algorithm to generate “explanation”) in the recursive form

| (1) |

| (2) |

In this work, at each layer, spatial domains for both forward and relevance propagation are the same. In particular, when using RGB images of dimension normalized to , we have with . This ensures that each pixel in the image has a relevance value. In figure 1, high relevance corresponds to darker patch in ; the region generally points to the position of the cat. The choice to sum up over all channels of seems to be, in practice, arbitrarily made.

First-order Errors and Arbitrary Errors. We are interested in separating “small” errors from “large” errors in the signals that are used to propagate interpretable information . The “large" error is separated from “small" error, inspired by the observation that some erroneous signals are spike-like, very large. How “small" or “large" the error is can be relative. However, in this work, we will refer to the usual first-order Taylor approximation error as the small error, and other errors as large.

For any image-like component , we now denote small deviation from at spatial coordinates as where denotes first order error. We denote large deviation at spatial coordinate as where denotes a large deviation. In both cases, is the true value at coordinate . We use set theoretic notation naturally, so that the function of a spatial domain , as a set, includes both the small and large errors . As a standalone set, will be used to denote small deviation from across its domain . The domains for and in are disjoint since is still a function, and we will refer to them mostly as respectively for an to be described later. Also, wherever sign is used, it will correspond to the small error.

Contracted Notation. We first focus on one arbitrary layer and replace equation (2) with where includes both input feature map to and parameter . The layer denotes true relevance since it contains no terms with errors such as . Note that this generalization is applicable for interpretability methods like LRP. The LRP process itself is independent of optimization that occurs during the training process. It thus takes in both the weights and image both as input on the same footing, justifying our contraction to .

3 Denoising Explanation with

Suppose we have a sub-optimally trained NN, i.e. an NN not yet achieving the desired evaluation performance. Studying sub-optimal NN may help provide more information about how to better guide the training process of a NN, although we acknowledge the subtle difficulty in deciding how optimal is optimal where evaluation performance such as accuracy cannot yet attain, say, . Nevertheless, let us proceed by denoting a sub-optimally trained layer as . Recall that we use apostrophe symbol ‘ to denote large error. Then, using contracted notation, suppose a relevance signal at this layer is i.e. the relevance propagated from the previous layer is already “denoised”, no longer having large errors (thus no longer denoted ). The objective here is to apply filter that denoise to so that it can be propagated to the next layer without carrying over large error. See a later section Unrectified Relevance Propagation where we observe the consequence of using instead.

Error separability and . Now, let the relevance be error separable, such that and is still largely erroneous. We have separated by small and large error domains and note that this is meaningful only when volume of error is manageable (see next section). In another words, there exists a property distinguishing large error encoded in some property (see example later).

Then, we define the corresponding denoising function for the separable error as such that for some . In [12], this corresponds to fraction-pass or clamp filters. Let us now define sum of errors

| (3) |

where which contains first order errors in the input to explanations. Furthermore,

| (4) |

Volume of domain containing errors. From [12], what appears to be spike-like errors motivated the use of filters dependent on signal magnitude. Our results in the experiment section later shows that spikes are not the general forms of errors in sub-optimal NN and thus suggestion in [12] possibly applies only to its 3D U-Net. However, a spike or an unnaturally large signal could be easily distinguished using computer algorithm, thus we will use it for illustration. Finding the general form of errors is not within the scope of this paper.s

An example of distinguishable large error is finding the subset of with property given by for some . This is the starting point that inspired the use of notation . If the relevance propagation signal layer is controlled in the sense that where is the maximum value in allowed in relevant components the system, then errors could thus be easily identified by their high amplitudes. However, this is not generally the case in existing designs of NN, especially with layers using ReLu or other activation functions that have no finite upper bound.

Suppose only a fraction of the domain manifests as large errors (for example spikes), i.e. for some small , where is the probability of finding the errors. Then from equation (3), we have and thus mean absolute error

| (5) |

where is some linear function because first order term from Taylor expansion is simply a linear term weighted by the gradient of the function.

Filters, Effectiveness and Their Optimization. In this section, we show how the optimization of filter parameter is related to the upper bound of errors, as indicated by figure 5 of [12].

Now suppose we apply no filter, that is, we set identity function. Then, from the second term of equation (4), using triangle inequality, we have hence where:

-

1.

The following has been used , which is expected in the case of non-erroneous parameters .

-

2.

Error from the first term in MAE is linear w.r.t first order errors, and thus is a typical small perturbation.

-

3.

The error in term is irrelevant for now, because of the next point.

-

4.

There is no best known upper bound for the term as is still present. The error can be arbitrarily large. We represented it as above. If , for example when the signals are capped via sigmoid functions, then we need to be on the order of or the error to not be disruptive; this is expected. Otherwise, the order of the errors is significant, which is also expected, since no filter is used. Often, is not true, for example when activation functions used are ReLu, which has not upper bound. This also means there is no upper bound for the error.

Optimality of filter parameter. Now, we apply a type of filter by setting and see the difference between its error upper bound with that above with no filter. For spike-like error, using triangle inequality, similarly we have with so that might still be significant, and only gives non-disruptive errors. Increasing reduces , though the error might spill over into the constants of the linear function due to the failure to capture the error because of the continuity towards the spikes. This is because as is the constant corresponding to total size of elements of (e.g. the total number of pixels from the input), increasing may reduce but increases where the error spills into. This error will be insignificant in case of very isolated spikes or high gradient spikes, where adjusting may not alter much the subset of the domain containing errors. This expresses the optimum setting that could be attained depending on the gradient of the spikes, as suggested by figure (5D) of [12]. As mentioned earlier in this section, this is the term allows for optimization of error minimization.

Finally, if , we are left with . This provides more relaxed condition to achieve small error compared to because both and can contribute to the error upper bound of the order of . Expecting to be on the order of means flattening to the level of uniform function with local variance, which might be feasible in the case of images with rather uniform datasets. Otherwise, in natural images, it might mean changing color channels and reducing color contrasts. In any case, optimizing and choosing optimum degree of “flattening” could give a low error upper bound as well.

Unrectified Relevance Propagation. Now, we observe the consequence of using instead, i.e. we attempt to rectify signals given unrectified signal. Suppose such that contains pixels that suffer only small errors even with , while contain the rest. By comparison, in the previous sections, we immediately separate to . Then . Thus

| (6) |

where because . Hence, thus is a larger error by the amount related to the size of . This reflects the need to control the error at each layer.

4 Experiment

4.1 Experimental Setup

The neural networks for our discussion are named S1, S2, AL1 and AL2, described in the following and the dataset used is MNIST. First, S1 is a generic, relatively small convolutional neural network (CNN) with 270578 parameters, which we just conveniently call SmallNet. AL1 is like AlexNet with 2664138 parameters. S2 is like S1, except that images are first enlarged by interpolation to and the SmallNet is modified so that the no. of parameters is similar, 273381. Likewise, AL2 is similar to AL1 with the same image enlargement and network modification, 2874810 parameters. Adam optimizer is used and batch size . Training dataset consists of 60000 images, hence each training epoch consists of iterations. Each evaluation of accuracy is performed on 1000 handwritten digits randomly drawn from the test dataset, while each evaluation of mean powers (defined in the next paragraph) on 240 randomly drawn from test dataset. To generate heatmaps for interpretability, we use LRP [7, 8]. Other settings can be found in the source code111https://github.com/etjoa003/explainable_ai/tree/master/fcalc.

As we are initially interested in observing the spikiness of the errors, we defined the quantity Mean Power, MP. Given threshold and a layer signal , let be positive part of i.e. negative signals are set to zero; likewise is the absolute value of the negative part of . Define the upper strength to be and is a pixel in , i.e. the mean value of all the signals in (assumed non-negative) that are greater equal to . Also, define the lower strength to be . Then , and . Spikier signals will give larger , and both positive and negative MPs are not sensitive to the absolute amplitude of the signal.

Following [12], clamp-filters and fraction-pass filters with are applied during layerwise backward propagation. However, after observing the trend, we apply also fractional-amplifier with at some of the layers. For relevance signal , it performs conditional pixel-wise signal amplification if and no effect to the pixel otherwise, where is the maximum absolute value across ’s pixels, and subscript is an index of some pixel. In this experiment, .

4.2 Results and Discussions

[12] proposes a general hypothesis on the forms of errors being propagated. If we describe it using MP, the proposed hypothesis can be simply summarized as “MP trend generally decreases". However, the hypothesis may only be applicable to the neural network used in [12] , i.e. it may not be general. To show that it is not general, we simply need to provide a counter-example on any dataset and model, i.e. by showing that the MP trend is not always increasing. Here, we choose to work with small or standard neural networks and show that some of them do serve as counter-examples. Small neural networks on simple MNIST data are chosen for fair comparison, as each neural networks will achieve equally high accuracy. Indeed, AlexNets AL1 and AL2 quickly exceeded 0.9 accuracy (within around 1000 iterations) while SmallNets S1 and S2 reached it at the end of epoch 2.

MP trends have been defined to test the way errors are propagated. The hypothesis suggested in [12] can be reformulated in terms of MP values: if errors consistently take the form of spikes, then MP values will decrease as neural networks undergo training, assuming that errors are reduced along the training. Hence, to show that the hypothesis is not general, we only need to show some examples of neural networks with MP values that are not decreasing. From figure 5, the variations in MP trends are clear. S1 and S2 generally have increasing MP values while AL2 shows decrease in MP and AL1 shows a less pronounced increase. On a side note, the same networks with different initialization show some resemblance in their MP trends (not shown), though it is not clear to what extent this applies.

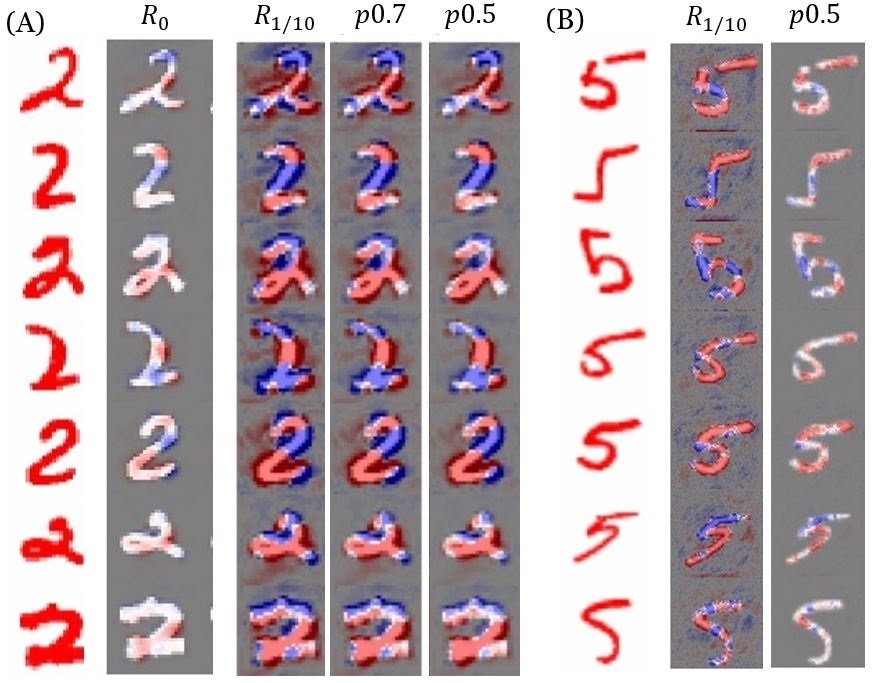

In [12], when the errors are perceived to be spikes, culling the spikes with clamp or pass filters appear to "rectify" the heatmaps to some extent. On the other hand, the increasing MP of S1 and S2 shows that for these NNs, the amount of spikes increase with the performance i.e. spikes are instead used to store useful information, and thus we conversely argue that sub-optimality manifests in the form of less-defined hills in the relevance signals, or the lack of spikes. The suggestion from [12] that errors in interpretable information are propagated as spikes in relevance signals does not hold; the spiking error may be unique to the 3D U-Net that is used. However, reversing the logic, the "rectification" comes in the form of amplifying the hills in the relevance signals to form spikes, which is why fractional-amplifier is used for S1 and S2. In particular, for S2, amplification result is displayed at iteration 10000, not at the end of the training. At this point, the MP values are still increasing, as marked in figure 5(B) Conv 2 orange dashed line. Figure 3(A,B) show some degree of success: if better interpretability means better hot/cold regions relevant to the areas in the images that contain the handwritten digits, then the improvement due to the amplifier with (shown as in the figure respectively) is to some degree shown by lower intensity noises outside the digits. In this argument, it is assumed that the groundtruth exactly overlaps with non-zero-intensity region of the digits (in another words, the digit itself). So far, we have addressed objective (2) of the paper without quantifying what it means to be “better" heatmaps. In fact, here, the “improvement" is only dependent on our visual observation, where heatmaps are closer to the assumed segmentation mask than the noisy version. This brings us back to the main point: we need a groundtruth at some point in the future. Before proceeding with it, we explore the notion of “better" or useful heatmaps with the following short detour.

To illustrate the importance of conceptually uniform groundtruth for interpretable information, we raise a small question on the utility of heatmaps. First, observe figure 3. The prediction for number “2" does not yield heatmaps of the same shapes. Next, observe figure 1 in the supp. material. We give a hindsight justification on the digit 2 boxed in green. Higher intensity red region contributes to the prediction “2", particularly the open curve in the center and left regions. Blue region is the opposite; it might indicate “7", although the tail region (bottom-right) helps conclude the prediction as “2". With such interpretation, the question is, which of the digit(s) in figure 1 of supp. material is/are correctly predicted? Is it possible to predict them reliably? Such situations lack conceptual uniformity, and the main suggestion of current work is precisely to avoid this by showing the importance of providing groundtruth heatmaps.

Back to the main point, if groundtruths are available, we might be able to utilise demonstrate quantitatively improvements in interpretability, for example, using equation 5. Consider figure 3(A) first row. With estimates (see supplementary materials error estimates), for and respectively. Finally, the answer to the previous question is, all predictions for number 2 and 1 in the figure 1 of supp. material are correct. It is correct even for the last row, where only a few local high intensities (spikes of intensities) contribute to the prediction. High relevance regions are hard to pinpoint, and this might raise doubts to the usefulness of heatmaps. For completeness sake, in figure 3(A,B), the predictions of 2s and 5s are 1101101 and 1100000 going down the row where 1 is correct and 0 is wrong. A heatmap has many potentials as the representation of interpretable information. It might need some improvements to boost its credibility, and providing groundtruth for interpretable information may be a solution. Training NN architecture that optimizes against the correct, desired groundtruth might be helpful, though admittedly the process may incur more resources required for manual annotation and labelling.

5 Conclusion

This paper presents two main results: (1) theoretical framework for the use of filters to put upper-bounds on noise-induced errors when groundtruth interpretability information exists and (2) an experimental demonstration to show the variety of errors propagated through layerwise relevance methods. Although general trend of spike-like errors suggested in [12] is not observed, the technique of applying filters to counter the trend of MP values can possibly be used to reduce the errors of the heatmaps. The results have been presented in the hope of spurring more research into the direction of having robust, quantifiable and verifiable interpretable information.

Broader Impact

Providing interpretable information for the automation of some tasks, such as medical diagnosis, is very important. Due to the lack of transparency in the working mechanism of a neural network, wrong prediction or misdiagnosis lower the credibility of a neural network, hence preventing its practical deployment. However, while interpretability research has been booming, the presentation of improvements in interpreteable information is beset by the lack of agreeable framework that match human understanding. Either a critical assessment of displayed heatmap is presented or a completely different pipeline on how interpretable information is displayed should be developed.

References

- [1] Andreas Holzinger, Georg Langs, Helmut Denk, Kurt Zatloukal, and Heimo Müller. Causability and explainability of artificial intelligence in medicine. WIREs Data Mining and Knowledge Discovery, 9(4):e1312, 2019.

- [2] Zachary Chase Lipton. The mythos of model interpretability. CoRR, abs/1606.03490, 2016.

- [3] Wojciech Samek, Thomas Wiegand, and Klaus-Robert Müller. Explainable artificial intelligence: Understanding, visualizing and interpreting deep learning models. CoRR, abs/1708.08296, 2017.

- [4] Erico Tjoa and Cuntai Guan. A survey on explainable artificial intelligence (XAI): towards medical XAI. CoRR, abs/1907.07374, 2019.

- [5] B. Zhou, A. Khosla, A. Lapedriza, A. Oliva, and A. Torralba. Learning deep features for discriminative localization. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 2921–2929, June 2016.

- [6] Ramprasaath R. Selvaraju, Abhishek Das, Ramakrishna Vedantam, Michael Cogswell, Devi Parikh, and Dhruv Batra. Grad-cam: Why did you say that? visual explanations from deep networks via gradient-based localization. CoRR, abs/1610.02391, 2016.

- [7] Sebastian Bach, Alexander Binder, Grégoire Montavon, Frederick Klauschen, Klaus-Robert Müller, and Wojciech Samek. On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLOS ONE, 10(7):1–46, 07 2015.

- [8] Sebastian Lapuschkin, Stephan Wäldchen, Alexander Binder, Grégoire Montavon, Wojciech Samek, and Klaus-Robert Müller. Unmasking clever hans predictors and assessing what machines really learn. Nature Communications, 10(1):1096, 2019.

- [9] Jost Tobias Springenberg, Alexey Dosovitskiy, Thomas Brox, and Martin Riedmiller. Striving for simplicity: The all convolutional net, 2014.

- [10] Daniel Smilkov, Nikhil Thorat, Been Kim, Fernanda Viégas, and Martin Wattenberg. Smoothgrad. https://pair-code.github.io/saliency/.

- [11] Avanti Shrikumar, Peyton Greenside, and Anshul Kundaje. Learning important features through propagating activation differences. CoRR, abs/1704.02685, 2017.

- [12] Erico Tjoa, H. Guo, Yuhao Lu, and Cuntai Guan. Enhancing the extraction of interpretable information for ischemic stroke imaging from deep neural networks. ArXiv, abs/1911.08136, 2019.

- [13] Luisa M. Zintgraf, Taco S. Cohen, Tameem Adel, and Max Welling. Visualizing deep neural network decisions: Prediction difference analysis. CoRR, abs/1702.04595, 2017.

- [14] Amirata Ghorbani, James Wexler, James Y Zou, and Been Kim. Towards automatic concept-based explanations. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, editors, Advances in Neural Information Processing Systems 32, pages 9277–9286. Curran Associates, Inc., 2019.

- [15] Pieter-Jan Kindermans, Sara Hooker, Julius Adebayo, Maximilian Alber, Kristof T. Schütt, Sven Dähne, Dumitru Erhan, and Been Kim. The (Un)reliability of Saliency Methods, pages 267–280. Springer International Publishing, Cham, 2019.

- [16] Been Kim, Martin Wattenberg, Justin Gilmer, Carrie Cai, James Wexler, Fernanda B. Viégas, and Rory Sayres. Interpretability beyond feature attribution: Quantitative testing with concept activation vectors (tcav). In Jennifer G. Dy and Andreas Krause, editors, ICML, volume 80 of JMLR Workshop and Conference Proceedings, pages 2673–2682. JMLR.org, 2018.

- [17] W. Samek, A. Binder, G. Montavon, S. Lapuschkin, and K. Müller. Evaluating the visualization of what a deep neural network has learned. IEEE Transactions on Neural Networks and Learning Systems, 28(11):2660–2673, 2017.

6 Supplementary Materials

7 Error Estimates

Consider figure 3(A) in the main text. If we take the groundtruth as the minus all the hot/cold region outside the digit, then the error in is approximately the following. For let by visual observation, i.e about 1/3 erroneous region. Errors can be considered perturbative since the heatmaps core hot/cold regions between , and are overlapping. The heatmap is normalized, i.e. the order is 1. The view is 1/10, the linear part of error can be considered like the usual perturbative error, assume of the order 0.001. Thus the first term is . The between and (which we assume to be groundtruth-like) appears to be approximately half-intensity of the erroneous region in 1/10-view, giving the second term which dominates the error. Hence, . Likewise, the error is dominated by the second term, although the erroneous region appears in half the intensity, thus . In another words, gives smaller error. Using similar methods to compute the error for intermediate values, we may arrive at figure 2 of supp. material that shows an apparent trend of improved heatmap quality. We do not include the full plot in the main text, since the analysis assumes that the parts of the digit are the only correct contributor to interpretable information (i.e. ignoring all other background information, even if it is blank in the case of MNIST dataset). In reality, this is akin to ignoring background of an image, which may actually be informative.