Generalizable Episodic Memory for Deep Reinforcement Learning

Supplementary Material

Abstract

Episodic memory-based methods can rapidly latch onto past successful strategies by a non-parametric memory and improve sample efficiency of traditional reinforcement learning. However, little effort is put into the continuous domain, where a state is never visited twice, and previous episodic methods fail to efficiently aggregate experience across trajectories. To address this problem, we propose Generalizable Episodic Memory (GEM), which effectively organizes the state-action values of episodic memory in a generalizable manner and supports implicit planning on memorized trajectories. GEM utilizes a double estimator to reduce the overestimation bias induced by value propagation in the planning process. Empirical evaluation shows that our method significantly outperforms existing trajectory-based methods on various MuJoCo continuous control tasks. To further show the general applicability, we evaluate our method on Atari games with discrete action space, which also shows a significant improvement over baseline algorithms.

1 Introduction

Deep reinforcement learning (RL) has been tremendously successful in various domains, like classic games (Silver et al., 2016), video games (Mnih et al., 2015), and robotics (Lillicrap et al., 2016). However, it still suffers from high sample complexity, especially compared to human learning (Tsividis et al., 2017). One significant deficit comes from gradient-based bootstrapping, which is incremental and usually very slow (Botvinick et al., 2019).

Inspired by psychobiological studies of human’s episodic memory (Sutherland & Rudy, 1989; Marr et al., 1991; Lengyel & Dayan, 2007) and instance-based decision theory (Gilboa & Schmeidler, 1995), episodic reinforcement learning (Blundell et al., 2016; Pritzel et al., 2017; Lin et al., 2018; Hansen et al., 2018; Zhu et al., 2020) presents a non-parametric or semi-parametric framework that fast retrieves past successful strategies to improve sample efficiency. Episodic memory stores past best returns, and the agents can act accordingly to repeat the best outcomes without gradient-based learning.

However, most existing methods update episodic memory only by exactly re-encountered events, leaving the generalization ability aside. As Heraclitus, the Greek philosopher, once said, “No man ever steps in the same river twice.” (Kahn et al., 1981) Similarly, for an RL agent acting in continuous action and state spaces, the same state-action pair can hardly be observed twice. However, humans can connect and retrospect from similar experiences of different times, with no need to re-encounter the same event (Shohamy & Wagner, 2008). Inspired by this human’s ability to learn from generalization, we propose Generalizable Episodic Memory (GEM), a novel framework that integrates the generalization ability of neural networks and the fast retrieval manner of episodic memory.

We use Figure 1 to illustrate our basic idea. Traditional discrete episodic control methods usually build a non-parametric slot-based memory table to store state-value pairs of historical experiences. In the discrete domain, states can be re-encountered many times. This re-encountering enables traditional methods to aggregate trajectories by directly taking maximum among all historical returns. However, this is not feasible in environments with high dimensional states and action space, especially in the continuous domain, where an agent will never visit the same state twice. On the contrary, GEM learns a virtual memory table memorized by deep neural networks to aggregate similar state-action pairs that essentially have the same nature. This virtual table naturally makes continuous state-action pairs generalizable by reconstructing experiences’ latent topological structure with neural networks. This memory organization enables planning across different trajectories, and GEM uses this capability to do implicit planning by performing value propagation along trajectories saved in the memory and calculating the best sequence over all possible real and counterfactual combinatorial trajectories.

We further propose to use twin networks to reduce the overestimation of these aggregated returns. Merely taking maximum among all possible plans leads to severe overestimation since overestimated values are preserved along the trajectory. Thus, we use two networks to separately calculate which trajectory has the maximal value and how large the maximum value is, which shares the similar idea with double Q-learning (van Hasselt, 2010).

The main contribution of our proposed method is threefold: 1. We present a novel framework inspired by psychology, GEM, to build generalizable episodic memory and improve sample efficiency. GEM consists of a novel value planning scheme for the continuous domain and a twin back-propagation process to reduce overestimation along the trajectories; 2. We formally analyze the estimation and convergence properties of our proposed algorithm; 3. We empirically show the significant improvement of GEM over other baseline algorithms and its general applicability across continuous and discrete domains. Besides, we analyze the effectiveness of each part of GEM by additional comparisons and ablation studies.

2 Preliminaries

We consider a Markov Decision Process (MDP), defined by the tuple , where is the state space and the action space. denotes the transition distribution function and the reward function. is the discount factor.

The goal of an RL agent is to learn a policy which maximizes the expectation of a discounted cumulative reward, i.e.,

where denotes the distribution of initial states.

2.1 Deterministic Policy Gradient

In the context of continuous control, actor-critic architecture is widely used (Sutton et al., 1999; Peters & Bagnell, 2010) to handle the continuous action space. In actor-critic framework, a critic is used to estimate the action-value function , while the parametric actor optimizes its policy using the policy gradient from parametric . When is a deterministic function, the gradient can be expressed as follows:

which is known as deterministic policy gradient theorem (Silver et al., 2014).

can be learned from the TD-target derived from the Bellman Equation (Bellman, 1957):

In the following section, when it does not lead to confusion, we use instead of for simplicity.

2.2 Double Q-Learning

Q-learning update state-action values by the TD target (Sutton, 1988a) :

However, the max operator in the target can easily lead to overestimation, as discussed in van Hasselt (2010). Thus, They propose to estimate the maximum -values over all actions using the double estimator, i.e.,

where are two independent function approximators for -values.

2.3 Model-Free Episodic Control

Traditional deep RL approaches suffer from sample inefficiency because of slow gradient-based updates. Episodic control is proposed to speed up the learning process by a non-parametric episodic memory (EM). The key idea is to store good past experiences in a tabular-based non-parametric memory and rapidly latch onto past successful policies when encountering similar states, instead of waiting for many steps of optimization. When different experiences meet up at the same state-action pair , model-free episodic control (MFEC; Blundell et al., 2016) aggregates the values of different trajectories by taking the maximum return among all these rollouts starting from the intersection . Specifically, is updated by the following equation:

| (1) |

At the execution time, MFEC selects the action according to the maximum -value of the current state. If there is no exact match of the state, MFEC performs a -nearest-neighbors lookup to estimate the state-action values, i.e.,

| (2) |

where are the nearest states from .

3 Generalizable Episodic Memory

3.1 Overview

In generalizable episodic memory (GEM), we use a parametric network to represent the virtual memory table with parameter , which is learned from tabular memory . To leverage the generalization ability of , we enhance the returns stored in the tabular memory by propagating value estimates from and real returns from along trajectories in the memory and take the best value over all possible rollouts, as depicted in Equation (4). Generalizable memory is trained by performing regression toward this enhanced target and is then used to guide policy learning as well as to build the new target for GEM’s learning.

A significant issue for the procedure above is the overestimation induced from taking the best value along the trajectory. Overestimated values are preserved when doing back-propagation along the trajectory and hinder learning efficiency. To mitigate this issue, we propose to use twin networks for the back-propagation of value estimates. This twin back-propagation process uses the double estimator for estimating the maximum along trajectories, which resembles the idea of Double Q-learning (van Hasselt, 2010) and is illustrated in detail in Section 3.4. It is well known that vanilla reinforcement learning algorithms with function approximation already has a tendency to overestimate (van Hasselt, 2010; Fujimoto et al., 2018), making the overestimation reduction even more critical. To solve this challenge, we propose to use additional techniques to make the value estimation from more conservative, which is illustrated in Section 3.5.

A formal description for GEM algorithm is shown in Algorithm 1. In the following sections, we use to represent for simplicity. Our implementation of GEM is available at https://github.com/MouseHu/GEM.

3.2 Generalizable Episodic Memory

Traditional discrete episodic memory is stored in a lookup table, learned as in Equation (2) and used as in Equation (1). This kind of methods does not consider generalization when learning values and enjoy little generalization ability from non-parametric nearest-neighbor search with random projections during execution. To enable the generalizability of such episodic memory, we use the parametric network to learn and store values. As stated in Section 3.1, this parametric memory is learned by doing regression toward the best returns starting from the state-action pair :

| (3) |

where is computed from implicit planning over both real returns in tabular memory and estimates in target generalizable memory table , as depicted in the next section. This learned virtual memory table is then used for both policy learning and building new target.

3.3 Implicit Memory-Based Planning

To leverage the analogical reasoning ability of our parametric memory, GEM conducts implicit memory-based planning to estimate the value for the best possible rollout for each state-action pair. At each step, GEM compares the best return so far along the trajectory with the value estimates from and takes the maximum between them. is generalized from similar experiences and can be regraded as the value estimates for counterfactual trajectories. This procedure is conducted recursively from the last step to the first step along the trajectory, forming an implicit planning scheme within episodic memory to aggregate experiences along and across trajectories. The overall back-propagation process can be written in the following form:

| (4) |

where denotes steps along the trajectory and the episode length. Further, the back-propagation process in Equation (4) can be unrolled and rewritten as follows:

| (5) |

where denotes different length of rollout steps, and we define and for .

3.4 Twin Back-Propagation Process

In this section, we describe our novel twin back-propagation process to reduce trajectory-induced overestimation.

As mentioned in Section 3.1, using Equation (3.3) for planning directly can lead to severe overestimation, even if is unbiased. Formally, for any unbiased set of estimators , where is the true value and is a set of independent, zero-mean random noise, we have

| (6) |

which can be derived directly from Jensen’s inequality.

Thus trajectory-augmented values have a tendency to overestimate and this overestimation bias can hurt performance greatly. We propose to use twin networks, , to form a double estimator for estimating the best value in the value back-propagation in Equation (3.3). One network is used to estimate the length of rollouts with the maximum returns along a trajectory ( in Equation (3.3)), while the other estimates how large the return is following this estimated best rollout ( in Equation (3.3)). Formally, the proposed twin back-propagation process (TBP) is given by 111In Equation (7) & (8), index represents it can be either or , while reversed index refers to the other network’s estimation, i.e. represents when refers to and vice versa.:

| (7) | ||||

| (8) |

And each -network is updated by

Note that this twin mechanism is different from double Q-learning, where we take the maximum over timesteps while double Q-learning takes the maximum over actions.

Formal analysis in Section 4.1 shows that this twin back-propagation process does not overestimate the true maximum expectation value, given unbiased . As stated in van Hasselt (2010) and Fujimoto et al. (2018), while this update rule may introduce an underestimation bias, it is much better than overestimation, which can be propagated along trajectories.

3.5 Conservative Estimation on Single Step

The objective in Equation (8) may still prone to overestimation since is not necessarily unbiased, and it is well known that deterministic policy gradient has a tendency to overestimate (Fujimoto et al., 2018). To address this issue, we further augment each functions with two independent networks , and use clipped double Q-learning objective as in Fujimoto et al. (2018) for the estimation at each step:

| (9) |

Finally, we propose the following asymmetric objective to penalize overestimated approximation error,

| (10) |

where is the regression error and . is the hyperparameter to control the degree of asymmetry.

4 Theoretical Analysis

In this section, we aim to establish a theoretical characterization of our proposed approach through several aspects. We begin by showing an important property that the twin back-propagation process itself does not have an incentive to overestimate values, the same property as guaranteed by double Q-learning. In addition, we prove that our approach guarantees to converge to the optimal solution in deterministic environments. Moreover, in stochastic environments, the performance could be bounded by a dependency term regrading the environment stochasticity.

4.1 Non-Overestimation Property

We first investigate the algorithmic property of the twin back-propagation process in terms of value estimation bias, which is an essential concept for value-based methods (Thrun & Schwartz, 1993; van Hasselt, 2010; Fujimoto et al., 2018). Theorem 1 indicates that our method would not overestimate the real maximum value in expectation.

Theorem 1.

Given unbiased and independent estimators , Equation (8) will not overestimate the true objective, i.e.

| (11) |

where

| (12) |

and is a trajectory.

4.2 Convergence Property

In addition to the statistical property of value estimation, we also analyze the convergence behavior of GEM. Following the same environment assumptions as related work (Blundell et al., 2016; Zhu et al., 2020), We first derive the convergence guarantee of GEM in deterministic scenarios as the following statement.

Theorem 2.

In a finite MDP with a discount factor , the tabular parameterization of Algorithm 1 would converge to w.p.1 under the following conditions:

-

1.

-

2.

The transition function of the given environment is fully deterministic, i.e., for some deterministic transition function

where denotes the scheduling of learning rates. A formal description for tabular parameterization of Algorithm 1 is included in Appendix A.

The proof of Theorem 2 is extended based on van Hasselt (2010), and details are deferred to Appendix B. Note that this theorem only applies to deterministic scenarios, which is a common assumption for memory-based algorithms (Blundell et al., 2016; Zhu et al., 2020). To establish a more precise characterization, we consider a more general class of MDPs, named near-deterministic MDPs, as stated in Definition 4.1.

Definition 4.1.

We define as the maximum value possible to receive starting from , i.e.,

An MDP is said to be nearly-deterministic with parameter , if

where is a dependency threshold to bound the stochasticity of environments.

Based on the definition of near-deterministic MDPs, we formalize the performance guarantee of our approach as the following statements:

Lemma 1.

Proof Sketch.

We only need to show that is a -contraction and also . The rest of the proof is similar to Theorem 2. ∎

Theorem 3.

For a nearly-deterministic environment with factor , GEM’s performance can be bounded w.p.1 by

5 Experiments

Our experimental evaluation aims to answer the following questions: (1) How well does GEM perform on the continuous state and action space? (2) How well does GEM perform on discrete domains? (3) How effective is each part of GEM?

5.1 Evaluation on Continuous Control Tasks

We compare our method with the most popular model-free RL algorithms, including DDPG (Lillicrap et al., 2016), TD3 (Fujimoto et al., 2018) and SAC (Haarnoja et al., 2018). Conventional episodic RL such as MFEC (Blundell et al., 2016), NEC (Pritzel et al., 2017), EMDQN (Lin et al., 2018), EVA (Hansen et al., 2018) and ERLAM (Zhu et al., 2020) adopts discrete episodic memory that are only designed for discrete action space, thus we cannot directly compare with them. To compare with episodic memory-based approaches on the continuous domain, we instead choose self-imitation learning (SIL; Oh et al., 2018) in our experiments. It also aims to exploit past good experiences and share a similar idea with episodic control; thus, it can be regarded as a continuous version of episodic control. For a fair comparison, We use the same code base for all algorithms, and we combine the self-limitation learning objective with techniques in TD3 (Fujimoto et al., 2018), rather than use the origin implementation, which combines with PPO (Schulman et al., 2017).

We conduct experiments on the suite of MuJoCo tasks (Todorov et al., 2012), with OpenAI Gym interface (Brockman et al., 2016). We truncate the maximum steps available for planning to reduce the overestimation bias. The memory update frequency is set to 100 with a smoothing coefficient . The rest of the hyperparameters are mostly kept the same as in TD3 to ensure a fair comparison. The detailed hyperparameters used are listed in Appendix C.

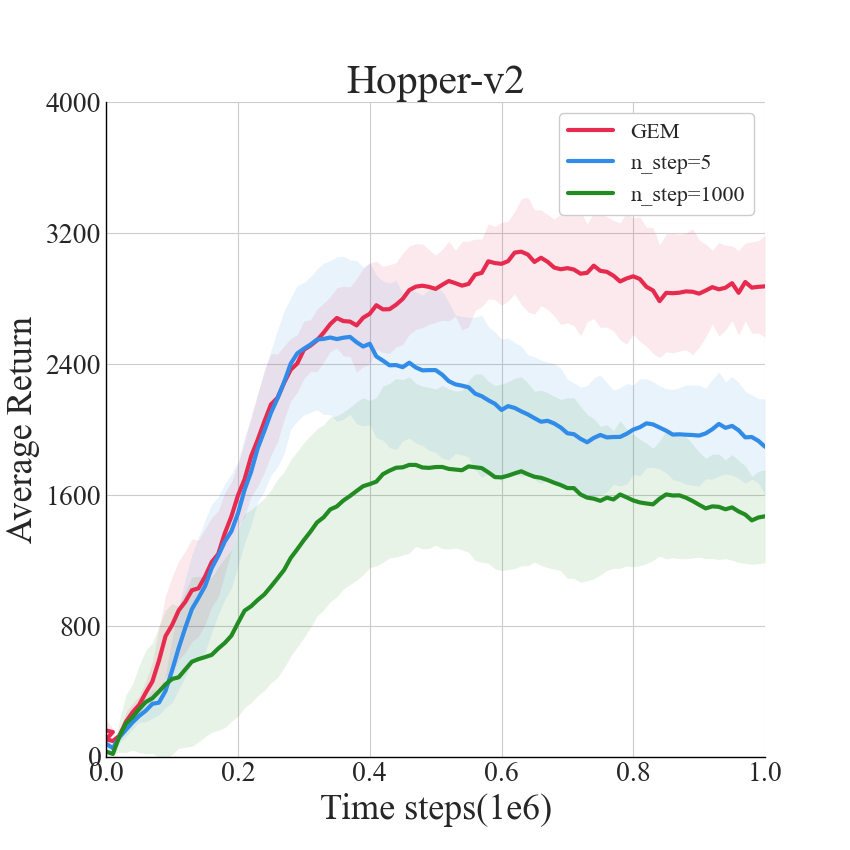

The learning curve on different continuous tasks is shown in Figure 2. We report the performance of 1M steps, which is evaluated with rollouts for every steps with deterministic policies. As the results suggest, our method significantly outperforms other baseline algorithms on most tasks. Only on Hopper, GEM is not the absolute best, but all the algorithms have similar performance.

5.2 Evaluation on Discrete Domains

Though GEM is proposed to facilitate continuous control tasks, it also has the general applicability of boosting learning on discrete domains. To demonstrate this, we compare GEM with several advanced deep Q-learning algorithms for discrete action space, including DQN (Mnih et al., 2015), DDQN (van Hasselt et al., 2016), and Dueling DQN (Wang et al., 2016). We also include the clipped double DQN, which is adopted from a state-of-the-art algorithm of the continuous domain, TD3 (Fujimoto et al., 2018). Most of these baseline algorithms focus on learning stability and the reduction of estimation bias. We evaluate all the above algorithms on 6 Atari games (Bellemare et al., 2013). We use hyper-parameters suggested in Rainbow (Hessel et al., 2018), and other hyper-parameters for our algorithm are kept the same as in the continuous domain. As shown in Figure 3, although not tailored for the discrete domain, GEM significantly outperforms baseline algorithms both in terms of sample efficiency and final performance.

5.3 Ablation Study

This section aims to understand each part’s contribution to our proposed algorithm, including the generalizable episodic memory, implicit memory-based planning, and twin back-propagation. We also empirically demonstrate the effect of overestimation. To check whether our method, which uses four networks, benifits from ensembling effect, we also compare our method with the random ensembling method as used in REDQ (Chen et al., 2021).

To verify the effectiveness of generalizable episodic memory, we compare our algorithm with SIL, which directly uses historical returns (which can be seen as a discrete episodic memory). As shown in both Figure 2 and Figure 4, although SIL improves over TD3, it only has marginal improvement. On the contrary, our algorithm improves significantly over TD3.

To understand the contribution of implicit planning, we compare with a variant of our method that uses -return rather than taking the maximum over different steps. We also compare our method with Optimality Tightening (He et al., 2017), which shares the similar idea of using trajectory-based information. As Figure 4 shows, Our method outperforms both methods, which verifies the effectiveness of implicit planning within memories.

To check the contribution of our twin network and conservative estimation, we compare the results without the twin back-propagation process and the results without asymmetric losses. The result and the estimation error are summarized in Figure 4. We can see that overestimation is severe without TBP or conservative estimation, and the performance is greatly affected, especially in games like Humanoid. In these games, the living condition for the agent is strict; thus overestimation is more severely punished.

To understand the contribution of the ensembling effect in our method, we compare our result with random ensembling as used in Chen et al. (2021), since we are not able to directly remove TBP while still using all four networks. We use the same update-to-data ratio as in GEM for a fair comparison. As Figure 4 shows, naive ensembling contributes little to performance, and overestimation reduction contributes much more than the ensembling effect.

From these comparisons, we can conclude that our generalizable memory aggregates return values much more effectively than directly using historical returns, and the twin back-propagation process contributes to the performance of GEM significantly. In addition, we verify that the merits of the twin back-propagation process mainly come from overestimation reduction rather than ensembling.

6 Related Work

Episodic Control.

Our method is closely related to episodic reinforcement learning, pioneered by Blundell et al. (2016), which introduces the concept of episodic memory into reinforcement learning and uses a non-parametric k-NN search to recall past successful experiences quickly. Pritzel et al. (2017) and Lin et al. (2018) consider to include a parametric counterpart for episodic memory, which enables better generalizability through function approximators. Although they provide some level of generalization ability, the aggregation of different trajectories still relies on the exact re-encountering, and their methods cannot handle continuous action space. Several advanced variants (Zhu et al., 2020; Lee et al., 2019) propose several ways to connect different experiences within the episodic memory to improve learning speed further. However, their methods are still not generally applicable in the continuous domain since they require taking maximum among all possible actions or require exact re-encountering. Hansen et al. (2018) adopts the similar idea of soft aggregation as ours, but they only consider decision time and leaves the ability of learning by analogy aside. Self-imitation learning (Oh et al., 2018), another memory-based approach built upon policy gradient methods, uses a return-based lower bound to restrict the value function, which cannot aggregate associative trajectories effectively.

From Discrete to Continuous.

Researchers have long been attracted to the challenge of making the RL algorithm applicable in the general continuous case. Kernel-based reinforcement learning (Ormoneit & Sen, 2002) proposes a way to overcomes the stability problems of temporal-difference learning in continuous state-spaces. Lillicrap et al. (2016) extends the success of deep Q-learning into the continuous domain, which successes on various MuJoCo tasks. Similarly, in episodic RL, GEM generalizes the idea of a discrete episodic memory by representing the episodic memory as a network-generated virtual table, making it generally applicable in the continuous case.

Maximization Bias in Q-Learning.

The maximization bias of Q-learning, first highlighted by Thrun & Schwartz (1993), is a long-lasting issue that hinders the learning efficiency of value-based methods. van Hasselt (2010) proposed to use a second value estimator as cross-validation to address this bias, and this technique has been extended to the deep Q-learning paradigm (van Hasselt et al., 2016). In practice, since it is intractable to construct two fully independent value estimators, double Q-learning is observed to overestimate sometimes, especially in continuous domains. To address this concern, Fujimoto et al. (2018) proposed clipped double Q-learning to repress further the incentive of overestimation, which has become the default implementation of most advanced approaches (Haarnoja et al., 2018; Kalashnikov et al., 2018). Recently, to control the estimation bias more precisely, people utilize an ensemble of value networks (Lan et al., 2020; Kuznetsov et al., 2020; Chen et al., 2021), which causes high computational costs.

Multi-step Bootstrapping.

The implementation of our proposed algorithm is also related to a technique named multi-step bootstrapping. This branch originates from the idea of eligibility traces (Singh & Sutton, 1996; Sutton, 1988b). Recently, it is prevalent in policy gradient methods such as GAE (Schulman et al., 2016) and its variants (Touati et al., 2018). In the context of temporal-difference learning, multi-step bootstrapping is also beneficial to improving the stability of Q-learning algorithms (Van Hasselt et al., 2018). The main limitation of multi-step bootstrapping is that the performance of multi-step training is sensitive to the bootstrapping horizon choice, and the trajectory used is required to be at least nearly on-policy rather than an arbitrary one.

7 Conclusion

This work presents Generalizable Episodic Memory, an effective memory-based method that aggregates different experiences from similar states and future consequences. We perform implicit planning by taking the maximum over all possible combinatorial trajectories in the memory and reduces overestimation error by using twin networks.

We provide theoretical analysis to show that our objective does not overestimate in general and converges to under mild conditions. Experimental results on continuous control tasks show that our method outperforms state-of-the-art model-free RL methods, as well as episodic memory-based algorithms. Our method also demonstrates general applicability in the discrete domain, such as Atari games. Our method is not primarily designed for discrete domains but can still significantly improve the learning efficiency of RL agents under this setting.

We make the first step to endow episodic memory with generalization ability. Besides, generalization ability also relies highly on the representation of states and actions. We leave the study of representation learning in episodic memory as interesting future work.

8 Acknowledgement

This work is supported in part by Science and Technology Innovation 2030 – “New Generation Artificial Intelligence” Major Project (No. 2018AAA0100904), and a grant from the Institute of Guo Qiang,Tsinghua University.

References

- Bellemare et al. (2013) Bellemare, M. G., Naddaf, Y., Veness, J., and Bowling, M. The arcade learning environment: An evaluation platform for general agents. Journal of Artificial Intelligence Research, 47:253–279, 2013.

- Bellman (1957) Bellman, R. Dynamic programming princeton university press princeton. New Jersey Google Scholar, 1957.

- Blundell et al. (2016) Blundell, C., Uria, B., Pritzel, A., Li, Y., Ruderman, A., Leibo, J. Z., Rae, J., Wierstra, D., and Hassabis, D. Model-free episodic control. arXiv preprint arXiv:1606.04460, 2016.

- Botvinick et al. (2019) Botvinick, M., Ritter, S., Wang, J. X., Kurth-Nelson, Z., Blundell, C., and Hassabis, D. Reinforcement learning, fast and slow. Trends in cognitive sciences, 23(5):408–422, 2019.

- Brockman et al. (2016) Brockman, G., Cheung, V., Pettersson, L., Schneider, J., Schulman, J., Tang, J., and Zaremba, W. Openai gym. arXiv preprint arXiv:1606.01540, 2016.

- Chen et al. (2021) Chen, X., Wang, C., Zhou, Z., and Ross, K. Randomized ensembled double q-learning: Learning fast without a model. In International Conference on Learning Representations, 2021.

- Fujimoto et al. (2018) Fujimoto, S., van Hoof, H., and Meger, D. Addressing function approximation error in actor-critic methods. In Dy, J. G. and Krause, A. (eds.), Proceedings of the 35th International Conference on Machine Learning, ICML 2018, volume 80 of Proceedings of Machine Learning Research, pp. 1582–1591. PMLR, 2018.

- Gilboa & Schmeidler (1995) Gilboa, I. and Schmeidler, D. Case-based decision theory. The Quarterly Journal of Economics, 110(3):605–639, 1995.

- Haarnoja et al. (2018) Haarnoja, T., Zhou, A., Abbeel, P., and Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Dy, J. G. and Krause, A. (eds.), Proceedings of the 35th International Conference on Machine Learning, ICML 2018, volume 80 of Proceedings of Machine Learning Research, pp. 1856–1865. PMLR, 2018.

- Hansen et al. (2018) Hansen, S., Pritzel, A., Sprechmann, P., Barreto, A., and Blundell, C. Fast deep reinforcement learning using online adjustments from the past. In Bengio, S., Wallach, H. M., Larochelle, H., Grauman, K., Cesa-Bianchi, N., and Garnett, R. (eds.), Advances in Neural Information Processing Systems 31: Annual Conference on Neural Information Processing Systems 2018, NeurIPS 2018, pp. 10590–10600, 2018.

- He et al. (2017) He, F. S., Liu, Y., Schwing, A. G., and Peng, J. Learning to play in a day: Faster deep reinforcement learning by optimality tightening. In 5th International Conference on Learning Representations, ICLR 2017. OpenReview.net, 2017.

- Hessel et al. (2018) Hessel, M., Modayil, J., van Hasselt, H., Schaul, T., Ostrovski, G., Dabney, W., Horgan, D., Piot, B., Azar, M. G., and Silver, D. Rainbow: Combining improvements in deep reinforcement learning. In McIlraith, S. A. and Weinberger, K. Q. (eds.), Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, (AAAI-18), pp. 3215–3222. AAAI Press, 2018.

- Kahn et al. (1981) Kahn, C. H. et al. The art and thought of Heraclitus: a new arrangement and translation of the Fragments with literary and philosophical commentary. Cambridge University Press, 1981.

- Kalashnikov et al. (2018) Kalashnikov, D., Irpan, A., Pastor, P., Ibarz, J., Herzog, A., Jang, E., Quillen, D., Holly, E., Kalakrishnan, M., Vanhoucke, V., et al. Qt-opt: Scalable deep reinforcement learning for vision-based robotic manipulation. In Conference on Robot Learning. PMLR, 2018.

- Kuznetsov et al. (2020) Kuznetsov, A., Shvechikov, P., Grishin, A., and Vetrov, D. P. Controlling overestimation bias with truncated mixture of continuous distributional quantile critics. In Proceedings of the 37th International Conference on Machine Learning, ICML 2020, volume 119 of Proceedings of Machine Learning Research, pp. 5556–5566. PMLR, 2020.

- Lan et al. (2020) Lan, Q., Pan, Y., Fyshe, A., and White, M. Maxmin q-learning: Controlling the estimation bias of q-learning. In 8th International Conference on Learning Representations, ICLR 2020. OpenReview.net, 2020.

- Lee et al. (2019) Lee, S. Y., Choi, S., and Chung, S. Sample-efficient deep reinforcement learning via episodic backward update. In Wallach, H. M., Larochelle, H., Beygelzimer, A., d’Alché-Buc, F., Fox, E. B., and Garnett, R. (eds.), Advances in Neural Information Processing Systems 32: Annual Conference on Neural Information Processing Systems 2019, NeurIPS 2019, pp. 2110–2119, 2019.

- Lengyel & Dayan (2007) Lengyel, M. and Dayan, P. Hippocampal contributions to control: The third way. In Platt, J. C., Koller, D., Singer, Y., and Roweis, S. T. (eds.), Advances in Neural Information Processing Systems 20, Proceedings of the Twenty-First Annual Conference on Neural Information Processing Systems, pp. 889–896. Curran Associates, Inc., 2007.

- Lillicrap et al. (2016) Lillicrap, T. P., Hunt, J. J., Pritzel, A., Heess, N., Erez, T., Tassa, Y., Silver, D., and Wierstra, D. Continuous control with deep reinforcement learning. In Bengio, Y. and LeCun, Y. (eds.), 4th International Conference on Learning Representations, ICLR 2016,, 2016.

- Lin et al. (2018) Lin, Z., Zhao, T., Yang, G., and Zhang, L. Episodic memory deep q-networks. In Lang, J. (ed.), Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, IJCAI 2018, pp. 2433–2439. ijcai.org, 2018. doi: 10.24963/ijcai.2018/337.

- Machado et al. (2018) Machado, M. C., Bellemare, M. G., Talvitie, E., Veness, J., Hausknecht, M., and Bowling, M. Revisiting the arcade learning environment: Evaluation protocols and open problems for general agents. Journal of Artificial Intelligence Research, 61:523–562, 2018.

- Marr et al. (1991) Marr, D., Willshaw, D., and McNaughton, B. Simple memory: a theory for archicortex. In From the Retina to the Neocortex, pp. 59–128. Springer, 1991.

- Mnih et al. (2015) Mnih, V., Kavukcuoglu, K., Silver, D., Rusu, A. A., Veness, J., Bellemare, M. G., Graves, A., Riedmiller, M., Fidjeland, A. K., Ostrovski, G., et al. Human-level control through deep reinforcement learning. nature, 518(7540):529–533, 2015.

- Oh et al. (2018) Oh, J., Guo, Y., Singh, S., and Lee, H. Self-imitation learning. In Dy, J. G. and Krause, A. (eds.), Proceedings of the 35th International Conference on Machine Learning, ICML 2018, volume 80 of Proceedings of Machine Learning Research, pp. 3875–3884. PMLR, 2018.

- Ormoneit & Sen (2002) Ormoneit, D. and Sen, Ś. Kernel-based reinforcement learning. Machine learning, 49(2):161–178, 2002.

- Peters & Bagnell (2010) Peters, J. and Bagnell, J. A. Policy gradient methods. Scholarpedia, 5(11):3698, 2010.

- Pritzel et al. (2017) Pritzel, A., Uria, B., Srinivasan, S., Badia, A. P., Vinyals, O., Hassabis, D., Wierstra, D., and Blundell, C. Neural episodic control. In Precup, D. and Teh, Y. W. (eds.), Proceedings of the 34th International Conference on Machine Learning, ICML 2017, volume 70 of Proceedings of Machine Learning Research, pp. 2827–2836. PMLR, 2017.

- Schulman et al. (2016) Schulman, J., Moritz, P., Levine, S., Jordan, M. I., and Abbeel, P. High-dimensional continuous control using generalized advantage estimation. In Bengio, Y. and LeCun, Y. (eds.), 4th International Conference on Learning Representations, ICLR 2016, 2016.

- Schulman et al. (2017) Schulman, J., Wolski, F., Dhariwal, P., Radford, A., and Klimov, O. Proximal policy optimization algorithms. arXiv preprint arXiv:1707.06347, 2017.

- Shohamy & Wagner (2008) Shohamy, D. and Wagner, A. D. Integrating memories in the human brain: hippocampal-midbrain encoding of overlapping events. Neuron, 60(2):378–389, 2008.

- Silver et al. (2014) Silver, D., Lever, G., Heess, N., Degris, T., Wierstra, D., and Riedmiller, M. A. Deterministic policy gradient algorithms. In Proceedings of the 31th International Conference on Machine Learning, ICML 2014, volume 32 of JMLR Workshop and Conference Proceedings, pp. 387–395. JMLR.org, 2014.

- Silver et al. (2016) Silver, D., Huang, A., Maddison, C. J., Guez, A., Sifre, L., Van Den Driessche, G., Schrittwieser, J., Antonoglou, I., Panneershelvam, V., Lanctot, M., et al. Mastering the game of go with deep neural networks and tree search. nature, 529(7587):484–489, 2016.

- Singh & Sutton (1996) Singh, S. P. and Sutton, R. S. Reinforcement learning with replacing eligibility traces. Machine learning, 22(1):123–158, 1996.

- Sutherland & Rudy (1989) Sutherland, R. J. and Rudy, J. W. Configural association theory: The role of the hippocampal formation in learning, memory, and amnesia. Psychobiology, 17(2):129–144, 1989.

- Sutton (1988a) Sutton, R. S. Learning to predict by the methods of temporal differences. Machine learning, 3(1):9–44, 1988a.

- Sutton (1988b) Sutton, R. S. Learning to predict by the methods of temporal differences. Machine learning, 3(1):9–44, 1988b.

- Sutton et al. (1999) Sutton, R. S., McAllester, D. A., Singh, S. P., Mansour, Y., et al. Policy gradient methods for reinforcement learning with function approximation. In NIPS, volume 99, pp. 1057–1063. Citeseer, 1999.

- Thrun & Schwartz (1993) Thrun, S. and Schwartz, A. Issues in using function approximation for reinforcement learning. In Proceedings of the Fourth Connectionist Models Summer School, pp. 255–263. Hillsdale, NJ, 1993.

- Todorov et al. (2012) Todorov, E., Erez, T., and Tassa, Y. Mujoco: A physics engine for model-based control. In 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 5026–5033. IEEE, 2012.

- Touati et al. (2018) Touati, A., Bacon, P., Precup, D., and Vincent, P. Convergent TREE BACKUP and RETRACE with function approximation. In Dy, J. G. and Krause, A. (eds.), Proceedings of the 35th International Conference on Machine Learning, ICML 2018, volume 80 of Proceedings of Machine Learning Research, pp. 4962–4971. PMLR, 2018.

- Tsividis et al. (2017) Tsividis, P., Pouncy, T., Xu, J. L., Tenenbaum, J. B., and Gershman, S. J. Human learning in atari. In 2017 AAAI. AAAI Press, 2017.

- van Hasselt (2010) van Hasselt, H. Double q-learning. In Lafferty, J. D., Williams, C. K. I., Shawe-Taylor, J., Zemel, R. S., and Culotta, A. (eds.), Advances in Neural Information Processing Systems 23: 24th Annual Conference on Neural Information Processing Systems 2010, pp. 2613–2621. Curran Associates, Inc., 2010.

- van Hasselt et al. (2016) van Hasselt, H., Guez, A., and Silver, D. Deep reinforcement learning with double q-learning. In Schuurmans, D. and Wellman, M. P. (eds.), Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, pp. 2094–2100. AAAI Press, 2016.

- Van Hasselt et al. (2018) Van Hasselt, H., Doron, Y., Strub, F., Hessel, M., Sonnerat, N., and Modayil, J. Deep reinforcement learning and the deadly triad. arXiv preprint arXiv:1812.02648, 2018.

- Wang et al. (2016) Wang, Z., Schaul, T., Hessel, M., van Hasselt, H., Lanctot, M., and de Freitas, N. Dueling network architectures for deep reinforcement learning. In Balcan, M. and Weinberger, K. Q. (eds.), Proceedings of the 33nd International Conference on Machine Learning, ICML 2016, volume 48 of JMLR Workshop and Conference Proceedings, pp. 1995–2003. JMLR.org, 2016.

- Zhu et al. (2020) Zhu, G., Lin, Z., Yang, G., and Zhang, C. Episodic reinforcement learning with associative memory. In 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020. OpenReview.net, 2020.

Appendix A GEM Algorithm in Tabular Case

In this section, we present the formal description of the GEM algorithm in tabular case, as shown in Algorithm 3.

Appendix B Proofs of Theoremss

Theorem 4.

Given unbiased and independent estimators , Equation (8) will not overestimate the true objective, i.e.

| (13) |

where

| (14) |

and is a trajectory.

Proof.

By unrolling and rewritten Equation (7), we have

Where is the abbrevation for for simplicity. Then we have

Then naturally

∎

To prepare for the theorem below, we need the following lemma:

Lemma 2.

Consider a stochastic process , where satisfy the equations

| (15) |

Let be a filter such that and are -measurable, is -measurable, . Assume that the following hold:

-

•

is finite: .

-

•

, , a.s. for all .

-

•

, where and .

-

•

, where is some constant.

Then converge to zero w.p.1.

This lemma is also used in Double-Q learning (van Hasselt, 2010) and we omit the proof for simplicity.

In the following sections, we use to represent the infinity norm .

Theorem 5.

Algorithm 3 converge to w.p.1 with the following conditions:

-

1.

The MDP is finite, i.e.

-

2.

-

3.

The Q-values are stored in a lookup table

-

4.

-

5.

The environment is fully deterministic, i.e. for some deterministic transition function

Proof.

This is a sketch of proof and some technical details are omitted.

We just need to show that without double-q version, the update will be a -contraction and will converge. Then we need to show that converge to zero, which is similar with double-q learning.

We only prove convergence of , and by symmetry we have the conclusion.

Let , and ,

To use Lemma 2, we only need to prove that is a -contraction and converge to zero.

On the one hand,

On the other hand,

Thus is a -contraction w.r.t .

Finally we show converges to zero.

Note that , it suffices to show that converge to zero.

Depending on whether or is updated, the update rule can be written as

or

where and .

Now let , we have

when by definition we have

Then

Similarly, , we have

Now in both scenairos we have holds. Applying Lemma 2 again we have the desired results. ∎

The theorem apply only to deterministic scenairos. Nevertheless, we can still bound the performance when the environment is stochastic but nearly deterministic.

Theorem 6.

Proof.

We just need to prove that and converge to 0 w.p.1, where .

On the one hand, similar from the proof of Theorem 2 and let .

The rest is the same as the proof of Theorem 2, and we have converge to zero w.p.1.

On the other hand, let ,

We have

The rest is the same as the proof of Theorem 2, and we have converge to zero w.p.1.

∎

When the enironment is nearly-deterministic, we can bound the performance of Q despite its non-convergence:

Theorem 7.

For a nearly-deterministic environment with factor , in limit, GEM’s performance can be bounded by

| (17) |

Proof.

since we have , It is easy to show that

So we have the conclusion. ∎

Appendix C Hyperparameters

Here we listed the hyperparameters we used for the evaluation of our algorithm.

| Task | HalfCheetah | Ant | Swimmer | Humanoid | Walker | Hopper |

|---|---|---|---|---|---|---|

| Maximum Length | 1000 | 1000 | 1000 | 5 | 5 | 5 |

| Hyper-parameter | GEM |

| Critic Learning Rate | 1e-3 |

| Actor Learning Rate | 1e-3 |

| Optimizer | Adam |

| Target Update Rate() | 0.6 |

| Memory Update Period() | 100 |

| Memory Size | 100000 |

| Policy Delay() | 2 |

| Batch Size | 100 |

| Discount Factor | 0.99 |

| Exploration Policy | |

| Gradient Steps per Update | 200 |

The hyper-parameters for Atari games are kept the same as in the continuous domain, and other hyper-parameters are kept the same as Rainbow (Hessel et al., 2018).

Appendix D Additional Ablation Results

Here we include more ablation results of GEM. To verify the effectiveness of our proposed implicit planning, we compare our method with simple n-step Q learning combined with TD3. For a fair comparison, we include all different rollout lengths used in GEM’s result. The result is shown in Figure 6. We can see that GEM significantly outperform simple n-step learning.

To understand the effects of rollout lengths, we also compare the result of different rollout lengths on Atari games. The result is shown below in Figure 7. We can see that using short rollout length greatly hinders the performance of GEM.

To verify the effectiveness of GEM on the stochastic domain, we conduct experiments on Atari games with sticky actions, as suggested in (Machado et al., 2018). As illustrated in Figure 5, GEM is still competitive on stochastic domains.