Generalist: Decoupling Natural and Robust Generalization

Abstract

Deep neural networks obtained by standard training have been constantly plagued by adversarial examples. Although adversarial training demonstrates its capability to defend against adversarial examples, unfortunately, it leads to an inevitable drop in the natural generalization. To address the issue, we decouple the natural generalization and the robust generalization from joint training and formulate different training strategies for each one. Specifically, instead of minimizing a global loss on the expectation over these two generalization errors, we propose a bi-expert framework called Generalist where we simultaneously train base learners with task-aware strategies so that they can specialize in their own fields. The parameters of base learners are collected and combined to form a global learner at intervals during the training process. The global learner is then distributed to the base learners as initialized parameters for continued training. Theoretically, we prove that the risks of Generalist will get lower once the base learners are well trained. Extensive experiments verify the applicability of Generalist to achieve high accuracy on natural examples while maintaining considerable robustness to adversarial ones. Code is available at https://github.com/PKU-ML/Generalist.

1 Introduction

Modern deep learning techniques have achieved remarkable success in many fields, including computer vision [DBLP:conf/nips/KrizhevskySH12, DBLP:conf/cvpr/HeZRS16], natural language processing [DBLP:conf/nips/VaswaniSPUJGKP17, DBLP:conf/naacl/DevlinCLT19], and speech recognition [DBLP:conf/interspeech/SakSRB15, wang2017residual]. Yet, deep neural networks (DNNs) suffer a catastrophic performance degradation by human imperceptible adversarial perturbations where wrong predictions are made with extremely high confidence [DBLP:journals/corr/SzegedyZSBEGF13, DBLP:journals/corr/GoodfellowSS14, wang2020transferable]. The vulnerability of DNNs has led to the proposal of various defense approaches [DBLP:conf/ndss/Xu0Q18, wang2020hamiltonian, DBLP:conf/iclr/QinFSRCH20, DBLP:conf/sp/PapernotM0JS16, bai2019hilbert] for protecting DNNs from adversarial attacks. One of those representative techniques is adversarial training (AT) [DBLP:conf/iclr/MadryMSTV18, wang2019dynamic, wang2020improving, mo2022adversarial], which dynamically injects perturbed examples that deceive the current model but preserve the right label into the training set. Adversarial training has been demonstrated to be the most effective method to improve adversarially robust generalization [athalye2018obfuscated, wu2020adversarial].

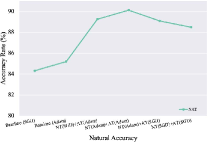

Despite these successes, such attempts of adversarial training have found a tradeoff between natural and robust accuracy, i.e., there exists an undesirable increase in the error on unperturbed images when the error on the worst-case perturbed images decreases, as illustrated in Figure 1. Prior works [DBLP:conf/iclr/TsiprasSETM19, DBLP:conf/icml/ZhangYJXGJ19] even argue that natural and robust accuracy are fundamentally at odds, which indicates that a robust classifier can be achieved only when compromising the natural generalization. However, the following works found that the tradeoff may be settled in a roundabout way, such as incorporating additional labeled/unlabeled data [DBLP:conf/nips/AlayracUHFSK19, DBLP:conf/nips/NajafiMKM19, DBLP:conf/nips/CarmonRSDL19, DBLP:conf/icml/RaghunathanXYDL20] or relaxing the magnitude of perturbations to generate suitable adversarial examples for better optimization [DBLP:conf/icml/ZhangXH0CSK20, DBLP:conf/cvpr/LeeLY20]. These works all focus on the data used for training while we propose to tackle the tradeoff problem from the perspective of the training paradigm in this paper.

Inspired by the spirit of the divide-and-conquer method, we decouple the objective function of adversarial training into two sub-tasks: one is used for natural example classification while the other one is used for adversarial example classification. Specifically, for each sub-task, we train a base learner on natural/adversarial datasets with the task-specific configuration while sharing the same model architecture. The parameters of base learners are collected and combined to form a global learner at intervals during the training process, which is then distributed to base learners as initialized parameters for continued training. We name the framework as Generalist whose proof-of-concept pipeline is shown in Figure 2. Different from the traditional joint training framework for natural and robust generalization, our proposed Generalist fully leverages task-specific information to individually train the base learners, which makes each sub-task to be solved better. Theoretically, we show that if the base learners are well trained, the final global learner is guaranteed to have a lower risk. Our proposed Generalist is the first to effectively address the tradeoff between natural and robust generalization by utilizing task-aware training strategies to achieve high clean accuracy in the natural setting, while also maintaining considerable robustness to the adversarial setting (as shown in Figure 1).

In summary, the main contributions are as follows:

-

•

For the tradeoff between natural and robust generalization, previous methods have struggled to find a sweet point to meet both goals in the joint training framework. Here, we propose a novel Generalist paradigm, which constructs multiple task-aware base learners to respectively achieve the generalization goal on natural and adversarial counterparts separately.

-

•

For each task, rather than being constricted in a stiff manner, every detail of the training strategies (e.g., optimization scheme) can be totally customized, thus each base learner can better explore the optimal trajectory in its field while the global learner can fully leverage the merits of all base learners.

-

•

We conduct extensive experiments in common settings against a wide range of adversarial attacks to demonstrate the effectiveness of our approach. Results show that our Generalist paradigm greatly improves both clean and robust accuracy on benchmark datasets compared to relevant techniques.

2 Preliminaries and Related Work

In this section, we briefly introduce some relevant background knowledge and terminology about adversarial training and meta-learning.

Notations. Consider an image classification task with input space and output space . Let denote a natural image and denote the corresponding ground-truth label. The natural and adversarial datasets and are sampled from a distribution and , respectively. We denote a DNN model as whose parameters are , which should classify any input image into one of classes. The objective functions and for the natural and adversarial setting can be defined as: and , which are usually positive, bounded, and upper-semi continuous [DBLP:journals/mor/BlanchetM19, Villani2003TopicsIO, DBLP:conf/colt/BartlettM01].

2.1 Standard Adversarial Training

The goal of the adversary is to generate a malignant example by adding an imperceptible perturbation to . And the generated adversarial example should be in the vicinity of so that it looks visually similar to the original one. This neighbor region anchored at with apothem can be defined as . For adversarial training, it first generates adversarial examples and then updates the parameters over these samples. The iteration process of adversarial training can be summed up as:

| (1) |

where is the projection operator, is the step size, is the learning rate, and is the loss difference of . The tradeoff factor balances the importance of natural and robust errors. Various adversarial training methods can be derived from Eq. 1. For instance, when , it is equivalent to the vanilla PGD training [DBLP:conf/iclr/MadryMSTV18], and when , it is transformed into the half-half loss in [DBLP:journals/corr/GoodfellowSS14]. The formulation degenerates to standard natural training as . Besides, we can get the formulation in TRADES [DBLP:conf/icml/ZhangYJXGJ19] when replacing with the KL-divergence.

2.2 Multi-Task Learning and Meta-Initialization

Multi-Task Learning. Multi-Task Learning (MTL) is to improve performance across tasks through joint training of different models [DBLP:conf/nips/BilenV16, DBLP:conf/cvpr/LuKZCJF17, DBLP:conf/iclr/YangH17]. Consider a set of assignments containing data distribution and loss function defined as with corresponding models parameterized by trainable tensors . In MTL, these sets have non-trivial pairwise intersections, and are trained in a joint model to find optimal parameters for each task:

| (2) |

where measures the performance of a model trained using on dataset . Our approach Generalist is directly related to MTL at first glance because both of them tend to learn a specific predictive model for different sources. However, Generalist differs significantly from MTL, i.e., multiple tasks are still learned jointly under a unified form in MTL while each assignment can be optimized by heterogeneous strategies in Generalist.

Meta-Learning. Meta-learning is to train a model that can quickly adapt to a new task. Suppose is divided into non-overlapping splits and , the model is first trained on the training sets and then guided by a small validation set on a set of tasks to make the trained model can be well adapted to new tasks:

| (3) |

meta-learning [DBLP:conf/icml/FinnAL17, DBLP:journals/corr/abs-1803-02999] is often designed to generalize across unseen tasks, whereas the goal of MTL is to tackle a series of known tasks. Nonetheless, our approach Generalist uses the technique of meta-learning to set good initializations for base learners to transfer knowledge between tasks.

3 The Proposed New Framework: Generalist

Similar to a physical-world Generalist who has broad knowledge across many topics and expertise in a few, our proposed Generalist can deal with both natural and adversarial samples during test time. It consists of different base learners gradually specialized in their own disjointed fields. Over time they stretch their expertise to encompass knowledge respectively. From a starting point, Generalist takes his suitcase packed full of wide-ranging experience with him wherever it goes (i.e., the global learner spreads accumulated knowledge and each expert learns from the re-initialization after a certain epoch).

3.1 Overview

The overall procedure of our proposed algorithm is shown in Algorithm 1, which mainly comprises two steps: optimizing parameters of the base learner in its assigned data distribution and distributing parameters of the global learner to all base learners. Base learners and the global learner share the same architecture, i.e., . Since we only focus on recognizing natural examples and adversarial ones in our setting, the total number of tasks is set to two.

3.2 Task-aware Base Learners

Given a global data distribution for the tradeoff problem, as denoted in Section 2, are subject to the distribution of training data . And natural images while adversarial examples generated by Eq. 1. So the training process of base learners is to solve the inner minimization of Eq. 3 over different distributions in a distributed manner:

| (4) |

Specifically, during the process, base learners and are assigned different subproblems that only requires accessing their own data distribution, respectively. Note that two base learners work in a complementary manner, meaning the update of parameters is independent among base learners and the global learner always collects parameters of both base learners. So the subproblem for each base learner is defined as:

| (5) |

where the task-aware optimizer search the optimal parameter states over the subproblem in rounds. Loss functions can also be task-specific and applied to each base learner separately. It is natural to consider minimizing the 0-1 loss in the natural and robust errors, however, solving the optimization problem is NP-hard thus computationally intractable. In practice, we select cross-entropy as the surrogate loss for both and since it is simple but good enough.

3.3 Initialization from the Global Learner

During the initial training periods, base learners are less instrumental since they are not adequately learned. Directly initializing parameters of base learners may mislead the training procedure and further accumulate bias when mixing them. Therefore, we set aside epochs from the beginning for fully training base learners and just aggregates states on the searching trajectory of base learners through optimization by exponential moving average (EMA), computed as: , where is the exponential decay rates for EMA and is the mixing ratio for base learners. They then learn an initialization from parameters of the global learner every epochs when each base learner is well trained in its field. Thus, the optimization of each base learner for every interlude can be expressed in Eq. 6:

| (6) |

Note that contains both and , meaning there always exists a term updated by gradient information of distribution different from the current subproblem. This mechanism enables fast learning within a given assignment and improves generalization, and the acceleration is applicable to the given assignment for its corresponding base learner only (proof in Appendix B.1).

With all discussed above, the learning progress of Generalist can be constructed by decending the gradient of and mixing both of them. The calculating steps in Algorithm 1 can be summarized in Eq. 7.

| (7) |

where is a Boolean function that returns one only when both and , otherwise it returns zero. and are optimizers for natural training and adversarial training assignments.

3.4 Theoretical Analysis

In this part, we theoretically analyze how base learners help global learner in Generalist. For brevity, we omit the expectation notation over samples from each distribution without losing generalization.

Definition 1.

(Tradeoff Regret with Mixed Strategies) For the natural training assignment and adversarial training assignment , consider an algorithm generates the trajectory of states and for two base learners, the regret of both base learners on its corresponding loss function , is

| (8) |

The last term obtains the oracle state , theoretically optimal parameters for each task . is the sum of the difference between the parameters of each base learner and the theoretically optimal parameters for each task. Based on the definition, we can give the following upper bound on the expected error of classifier trained by Generalist with respect to as:

Theorem 1.

(Proof in Appendix B.2) Consider an algorithm with regret bound that generates the trajectory of states for two base learners, for any parameter state , given a sequence of convex surrogate evaluation functions drawn i.i.d. from some distribution , the expected error of the global learner on both tasks over the test set can be bounded with probability at least :

| (9) |

So the above inequality indicates that any strategy beneficial to reducing the error of each task that makes smaller will decrease the error bound of the global learner. Considering Generalist divides the tradeoff problem into two independent tasks, Theorem 1 guarantees the upper bound of the risks given by the global learner trained by Generalist will get lower once the error for each task becomes lower. In practice, we can apply customized learning rate strategies, optimizers, and weight averaging to guarantee the error reduction of each base learner.

| (a) Evaluation results based on ResNet-18. | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Method | NAT | PGD20 | PGD100 | MIM | CW | Square | AA | ||||

| NT | 93.04 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

| AT () | 84.32 | 48.29 | 48.12 | 47.95 | 49.57 | 47.47 | 48.57 | 45.14 | 46.17 | 54.21 | 44.37 |

| AT () | 87.84 | 44.51 | 44.53 | 47.30 | 44.93 | 40.58 | 42.55 | 40.20 | 44.56 | 50.76 | 40.06 |

| TRADES () | 83.91 | 54.25 | 52.21 | 55.65 | 52.22 | 53.47 | 50.89 | 48.23 | 48.53 | 55.75 | 48.20 |

| TRADES () | 87.88 | 45.58 | 45.60 | 47.91 | 45.05 | 42.95 | 42.49 | 40.38 | 43.89 | 53.49 | 40.32 |

| FAT | 87.72 | 46.69 | 46.81 | 47.03 | 49.66 | 46.20 | 47.51 | 44.88 | 45.76 | 52.98 | 43.14 |

| IAT | 84.60 | 40.83 | 40.87 | 43.07 | 39.57 | 37.56 | 37.95 | 35.13 | 36.06 | 49.30 | 35.13 |

| RST | 84.71 | 44.23 | 44.31 | 45.33 | 42.82 | 41.25 | 42.01 | 40.41 | 46.54 | 50.49 | 37.68 |

| Generalist | 89.09 | 50.01 | 50.00 | 52.19 | 50.04 | 46.53 | 48.70 | 46.37 | 47.32 | 56.68 | 46.07 |

| (b) Evaluation results based on WRN-32-10. | |||||||||||

| Method | NAT | PGD20 | PGD100 | MIM | CW | Square | AA | ||||

| NT | 93.30 | 0.01 | 0.02 | 0.05 | 0.00 | 0.00 | 0.00 | 0.00 | 0.87 | 0.28 | 0.00 |

| AT () | 87.32 | 49.01 | 48.83 | 48.25 | 52.80 | 48.83 | 49.00 | 46.34 | 48.17 | 54.26 | 46.11 |

| AT () | 89.27 | 48.95 | 48.86 | 51.35 | 49.56 | 45.98 | 47.66 | 44.89 | 46.42 | 56.83 | 44.81 |

| TRADES () | 85.11 | 54.58 | 54.82 | 55.67 | 54.91 | 54.89 | 55.50 | 52.71 | 52.61 | 57.62 | 52.19 |

| TRADES () | 87.20 | 51.33 | 51.65 | 52.47 | 53.19 | 51.60 | 51.88 | 49.97 | 50.01 | 54.83 | 49.81 |

| FAT | 89.65 | 48.74 | 48.69 | 48.24 | 52.11 | 48.50 | 48.81 | 46.70 | 46.17 | 51.51 | 44.73 |

| IAT | 87.93 | 50.55 | 50.72 | 52.37 | 48.71 | 47.71 | 46.55 | 43.84 | 45.78 | 56.52 | 43.80 |

| RST | 87.27 | 46.55 | 46.76 | 47.02 | 45.99 | 45.73 | 46.58 | 45.78 | 43.18 | 52.44 | 41.52 |

| Generalist | 91.03 | 56.88 | 56.92 | 58.87 | 57.23 | 53.94 | 55.80 | 53.00 | 53.65 | 63.10 | 52.91 |

4 Experiments

We conduct a series of experiments on ResNet-18 [DBLP:conf/cvpr/HeZRS16] and WRN-32-10 [DBLP:journals/corr/ZagoruykoK16] on benchmark datasets MNIST, SVHN, CIFAR-10, and CIFAR-100 under the norm.

Baselines. We select six approaches to compare with: AT using PGD ( in Eq. 1) [DBLP:conf/iclr/MadryMSTV18], AT using the half-half loss ( in Eq. 1) [DBLP:journals/corr/GoodfellowSS14], TRADES with different [DBLP:conf/icml/ZhangYJXGJ19], Friendly Adversarial Training (FAT) [DBLP:conf/icml/ZhangXH0CSK20], Interpolated Adversarial Training (IAT) [DBLP:conf/ccs/LambVKB19], and Robust Self Training (RST) [DBLP:conf/icml/RaghunathanXYDL20] used labeled data for fair comparison. For Generalist, we set and the optimal mixing strategy will be discussed in Section 4.2.2.

Evaluation. To evaluate the robustness of the proposed method, we apply several adversarial attacks including PGD [DBLP:conf/iclr/MadryMSTV18], MIM [DBLP:conf/cvpr/DongLPS0HL18], CW [DBLP:conf/sp/Carlini017], AutoAttack (AA) [DBLP:conf/icml/Croce020a] and all its components (, , , , and Square attacks).

4.1 Tradeoff Performance on Benchmark Datasets

To comprehensively manifest the power of our Generalist method, we present the results of both ResNet-18 and WRN-32-10 on CIFAR-10 in Table 1.

In Table 1(a), Generalist consistently improves standard test error relative to models trained by several robust methods, while maintaining adversarial robustness at the same level. More specifically, Generalist achieves the second highest standard accuracy of 89.09% (only lower than 93.04% obtained by natural training (NT)), while meantime robust accuracy against AA is 46.07%, hanging on to 48.2% from TRADES. If we force TRADES to meet the same level of clean accuracy as Generalist (89%), the robustness of TRADES against APGD will drop to 30% (see TRADES in Appendix A.4), which is significantly worse than Generalist. That means it is hard to obtain acceptable robustness but maintain clean accuracy above 89% in the joint training framework even if it is equipped with an advanced loss function, while the improvement of Generalist is notable since we only use the naive cross-entropy loss. Contrary to FAT managing the tradeoff through adaptively decreasing the step size of PGD, which still hurts robustness a lot, Generalist is the only method with clean accuracy above 89% and robust accuracy against AA above 46%. We should emphasize the final obtained model of Generalist is the same size as other trained models are. For the training time, Generalist does perform both NT and naive AT but the cost of NT is negligible, so the overhead of Generalist is smaller than TRADES, and whatever serial and parallel versions of Generalist are even faster than TRADES (see Appendix A.4).

Things become more obvious when it comes to WRN-32-10. In Table 1(b), the gap between test natural accuracy of Generalist and NT is reduced to 2.27%, a relative decrease of 3.65% in standard test error as compared to the second highest natural accuracy (except NT) achieved by FAT. It is also remarkable that the boost of accuracy does not hurt the robustness of Generalist, instead, Generalist even outperforms TRADES across multiple types of adversarial attacks. In particular, we find that Generalist has a standard test error of 6.7% while TRADES with has a standard test error of 14.89% only. And the improved robustness of Generalist among PGD20/100, MIM, CW, and Square is conspicuous. Besides, the best performance on AA, which is an ensemble of different attacks and the most powerful adaptive adversarial attack so far, demonstrates the reliability of Generalist. Likewise, only Generalist attains robust accuracy of AA higher than 52% along with clean accuracy higher than 90%. It should be emphasized that these features confirm the practicability of Generalist. In short, Generalist has consistently improved robustness without loss of natural accuracy. More results on benchmark datasets of MNIST, SVHN, and CIFAR-100 are in Appendix A.2 and A.3.

4.2 Comprehensive Understanding of Generalist

We run a number of ablations to analyze the Generalist framework in this part. As illustrated in Algorithm 1, two factors control the tradeoff between accuracy and robustness of the global learner: frequency of communication and mixing ratio . Here, we investigate how these parameters affect performance. If not specified otherwise, the experiments are conducted on CIFAR-10 using ResNet-18.

4.2.1 Mixing Strategies of

In Generalist, controls the tradeoff via balancing the contribution of individuals to the global learner when base learners are gradually well trained. Note that is a scalar but we do not explicitly assign a fixed value to it. Instead, we set several breakpoints and dynamically adjust the value along the training process using a piecewise linear function to decrease.

Results are shown in Figure 3. The numbers in brackets are the values at the 0/40/80/120-th epoch. If gets smaller, the base learner in charge of natural classification has a pronounced influence on the global learner. Among all configurations, the best one is to apply and to the global learner after the 75th epoch. When compared to strategies that decays during late periods, shows lower standard and robust accuracy, confirming that more sophisticated initialization could be useful for both accuracy and robustness. With the increase of the last breakpoint of dynamical strategies, the robust accuracy gradually increases; while the standard accuracy decreases by a small margin. We also investigate the static/dynamic strategy for . By observing and , the scheduled mixing strategy makes Generalist more robust to various attacks.

4.2.2 Communication Frequency

In Generalist, controls the communication frequency between the global learner and base learners. Therefore, for , with the fixed mixing ratio strategy, we sweep over the frequency of communication from 1 to 15.

Results are shown in Figure 3, and we have the following observations.

Intuitively, a larger means base learners communicate with the global learner less frequently to get the initialization, so they barely have the opportunity to move alternately towards two optimal solution manifolds. But specifically, the natural accuracy falls back down after reaching the peak while the robust accuracy in different adversarial settings roughly shows a trough. Such observation manifests that too much/little communication has a negative influence on standard accuracy but results in relatively higher robustness. It captures a tradeoff between natural and robust errors with respect to .

(a) Weight Averaging(b) Different Optimizers

4.2.3 Parameter Selection

In practice, it is natural to select the mixing parameter and the frequency of communication under a scenario without knowing the target model or dataset. We can find the best parameters on specific architecture and dataset, which is then transferred to others, i.e., choosing the best , , and their strategies on one model/dataset and then used for other models/datasets, which still works well. Specifically, for the experimental results on MNIST/SVHN/CIFAR100 shown in Appendix A.2 and A.3, we just find the optimal parameters and updating strategies on CIFAR10 and apply similar and on the target datasets and architectures without much fine-tuning. The performance is still very good.

4.3 Customized Policies for Individuals

As stressed above, one of the major advantages of Generalist in comparison with the standard joint training framework is that each base learner enables to customize the corresponding strategy for their own tasks freely rather than using the same strategy for all tasks. In this part, we investigate whether Generalist performs better when cooperating with diverse techniques.

Weight Averaging. Recent works [DBLP:journals/corr/abs-2103-01946, DBLP:conf/uai/IzmailovPGVW18, wang2022selfensemble] have shown that weight averaging (WA) greatly improves both natural and robust generalization. The average parameters of all history model snapshot through the training process to build an ensemble model on the fly. However, such technique cannot benefit both accuracy and robustness in the joint training framework. Therefore, we introduce WA into base learners separately. Results are shown in Figure 4 (a). We employ WA in either NT (NT_only) or AT (AT_only) or both of them (NT+AT). Overall, the results confirm that the performance of the global learner can be further improved after both base learners exploit WA. But unfortunately, an obvious tradeoff happens if only one of the base learner is equipped with WA. For instance, the standard test accuracy of NT_only continues to increase at the expense of the drop in the ability to defend attacks. A likely reason is that WA implicitly controls the learning speed of base learners. Indeed, the base learner with WA becomes an expert much faster than the one without WA in its sub-task, meaning the fast one is not in accordance with the slow one. This result is important because it not only illustrates the potential of Generalist comes from its base learners but also identifies a key challenge of tradeoff for future improvement.

Different Optimizers. We also investigate the effect of optimizers designed for different tasks. We choose AT () using SGD with momentum and Adam for piecewise learning rate schedule optimized by joint training as the baseline. The initial learning rate for Adam is 0.0001. We alternately apply these two optimizers in each subproblem. The comparison of the results is shown in Figure 4 (b). We can see that the gap of robust accuracy between models adversarially trained by Adam and the ones trained by SGD is significant. All three schemes equipped with Adam, namely NT (Adam)+AT (Adam), NT (SGD)+AT (Adam), and Baseline (Adam), perform worse than the ones using SGD when evaluated by adversarial attacks. But on the other hand, by comparing the results of Baseline (Adam) and NT (Adam)+AT (SGD), it confirms a proper optimization scheme with respect to data distribution can effectively benefit the corresponding performance without overlooking the other. That not only demonstrates the necessity of Generalist to decouple task-aware assignments from joint training but also indicates using Adam may not be the principal reason for robustness drop. It is just ill-suited for the outer and inner optimization in AT. Besides, though the best results still come from using SGD, the learning rate for different tasks can be customized which is not feasible in the joint framework, as shown in Appendix A.5.

(a)(b)

4.4 Visualization

Considering the proposed method achieves impressive clean accuracy without a harsh drop in robustness, it is naturally to ask what improvements Generalist has secured in comparison with robust methods in detail. Thus, we further investigate the predictions that robust classifiers are prone to make. As shown in Figure 5, we provide two perspectives to analyze the differences that classifiers trained by different AT methods.

To broadly study the case, we perform experiments on NT, TRADES with different , FAT and Generalist, then plot the distribution of the correct predictions of all methods for each class in Figure 5(a). As evident at first glance, we note that animals are more frequently misclassified, especially cats/dogs in the natural scenario and cats/deers in the adversarial scenario. In addition, the classifier trained by standard natural training does not always outperform the ones adversarially trained. Actually, they are equally skilled at most categories and the outcome is decided by specific categories (e.g. birds, cats and dogs). Generalist keeps pace with NT in the natural task, and meanwhile promotes the higher improvements in difficult items (e.g. cats and deers) against AA attack.

In Figure 5(b), we display specific samples in the testing dataset that are misclassified by robust classifiers (TRADES and FAT) but recognized by our proposed method, including both natural examples (the first two rows) and adversarial examples (the last two rows). Here, images shown in the first row are easy ones where the foreground objects stand out from the clear backgrounds, while hard samples are referred to those having confused objects with messy backgrounds. It is worth noting that TRADES delivers poor performances not only on hard examples with complex backgrounds or obscured objects but also on simple ones. For example, each image in the first row is typically plain and regular, however, TRADES fails in categorizing them into the right class. A plausible explanation for the issue is that TRADES lacks in a set of support measures specially devised for the natural classification task unlike Generalist does, highlighting design differentiation for sub-tasks is necessary.

Another interesting finding is that though both TRADES and FAT can build a robust classifier, they still rely on spurious background information and thus are easily deceived when encountering images with similar backgrounds but different objects. This phenomenon can be verified from the misclassification of the fourth and fifth images in the first row (taking white/blue backgrounds as evidence), and the fifth image in the fourth row (confused by the green background). But Generalist has the ability to sift the invariant feature of the foreground object while ignoring the background information spuriously correlated with the categories in both natural and adversarial settings. On the whole, Generalist demonstrates its strength to differentiate difficult samples close to the decision boundary and its potential to learn a background-invariant classifier.

5 Conclusion

In this paper, we propose a bi-expert framework named Generalist for improving the tradeoff issue between natural and robust generalization, which trains two base learners responsible for complementary fields and collects their parameters to construct a global learner. By decoupling from the joint training paradigm, each base learner can wield customized strategies based on data distribution. We provide theoretical analysis to justify the effectiveness of task-aware strategies and extensive experiments show that Generalist better mitigates the tradeoff of accuracy and robustness.

Acknowledgement

Yisen Wang is partially supported by the National Key R&D Program of China (2022ZD0160304), the National Natural Science Foundation of China (62006153), Open Research Projects of Zhejiang Lab (No. 2022RC0AB05), and Huawei Technologies Inc.

Appendix A Additional Experiments

A.1 Detailed Configurations

All images are normalized into . We train ResNet-18 using SGD with 0.9 momentum for 120 epochs (200 epochs for CIFAR-100) and the weight decay factor is set to for ResNet-18 and for WRN-32-10. We use the piecewise linear learning rate strategy for performing weight averaging in base-learners. For the base-learner of AT, the initial learning rate for ResNet-18 is set to 0.01 and 0.1 for WRN-32-10 till Epoch 40 and then linearly reduced by 10 at Epoch 60 and 120, respectively. The magnitude of maximum perturbation at each pixel is with step size and the PGD steps number in the inner maximization is 10. For the base-learner of NT, we fix the initial learning rate as 0.1 and the weight decay is for both ResNet-18 and WRN-32-10.

A.2 Experiments on MNIST/SVHN

We conducted experiments on MNIST () and SVHN using ResNet-18 with the same setup in Sec. A. We ran 5 individual trials and results with standard deviations are shown in Table 2. Our Generalist still achieves the best performance.

| MNIST | SVHN | |||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Methods | NAT | PGD20 | AA | NAT | PGD20 | AA | ||||||||||||

| TRADES |

|

|

|

|

|

|

||||||||||||

| FAT |

|

|

|

|

|

|

||||||||||||

| Generalist |

|

|

|

|

|

|

||||||||||||

A.3 Experiments on CIFAR-100

To further demonstrate our proposed Generalist achieves a better tradeoff between accuracy and robustness, we also conduct experiments on CIFAR-100 datasets. Here we still use ResNet-18 as the backbone model with the same configurations as claimed in Sec. A. We report the results of natural accuracy and several advanced adversarial attack methods in Table 3. Note that we do not design a specialized strategy for Generalist on CIFAR-100 but Generalist still achieves a gratifying tradeoff, so it still has the potential to perform better.

| Method | NAT | PGD20 | PGD100 | MIM | CW | Square | AA | ||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| NT | 65.74 | 0.02 | 0.01 | 0.02 | 0.01 | 0.00 | 0.00 | 0.00 | 0.07 | 0.37 | 0.00 |

| AT () | 60.10 | 28.22 | 28.27 | 28.31 | 24.87 | 26.63 | 24.13 | 21.98 | 23.91 | 27.93 | 23.87 |

| AT () | 60.84 | 22.64 | 22.61 | 23.86 | 22.28 | 20.66 | 21.67 | 19.2 | 20.09 | 25.36 | 19.17 |

| TRADES () | 59.93 | 29.90 | 29.88 | 29.55 | 26.14 | 27.93 | 25.43 | 24.72 | 25.16 | 30.03 | 23.72 |

| TRADES () | 60.18 | 28.93 | 28.91 | 29.12 | 25.79 | 27.07 | 25.00 | 23.65 | 24.31 | 28.76 | 23.22 |

| FAT | 61.71 | 22.93 | 22.87 | 22.64 | 23.45 | 24.78 | 24.91 | 20.56 | 23.16 | 26.37 | 20.01 |

| IAT | 57.04 | 21.40 | 21.39 | 22.37 | 19.18 | 19.63 | 18.92 | 15.50 | 16.63 | 23.26 | 15.50 |

| RST | 60.30 | 23.56 | 23.61 | 23.71 | 22.40 | 24.69 | 24.18 | 21.66 | 23.82 | 27.05 | 21.18 |

| Generalist | 62.97 | 29.48 | 29.49 | 30.35 | 27.77 | 27.45 | 27.42 | 24.04 | 25.54 | 31.41 | 23.96 |

A.4 Computational Cost and Tradeoff Comparison of Generalist

We compute the actual training time of TRADES and Generalist (serial/parallel version) using ResNet-18 on RTX 3090 GPU in Table 4. We also report the standard deviations over 5 runs to show the sensitivity of Generalist. Neither version of Generalist is slower than TRADES. Generalist does perform both NT and naive AT, but the cost of NT is negligible so the overhead (NT+AT) is smaller than TRADES.

Besides, Table 4 delivers another important message. For the tradeoff between robustness and accuracy, it is hard to obtain acceptable robustness while maintaining clean accuracy above 89% in the joint training framework (TRADES). For every percentage point increase in clean accuracy, the robust accuracy will decrease dramatically (e.g. TRADES can meet 89% on clean accuracy but its robustness against APGD will drop to 30%).

| Method | NAT | PGD100 | APGD |

|

||||||

|---|---|---|---|---|---|---|---|---|---|---|

| TRADES |

|

|

|

414 | ||||||

| Generalist (Serial) |

|

|

|

397 | ||||||

| Generalist (Parallel) |

|

|

|

342 |

A.5 Influence of Learning Rate

In this part, we also study the influence of the learning rate for different distribution-aware tasks. For simplicity, we set , and as their best options according to the main body of the paper. We search the most grid of learning rate configurations in the range of 0.1, 0.01, 0.001 for both natural training and adversarial training. Our Generalist achieves its best and second-best natural accuracy when the learning rate for the clean learner is set to 0.1. And the optimal learning rate for robust accuracy is 0.01. Based on all the observations from Table 5, the learning pace of learners is a little different but the process is compatible.

| NAT | AA | |

|---|---|---|

| NT=0.1, AT=0.01 | 89.09 | 46.37 |

| NT=0.1, AT=0.1 | 90.12 | 41.86 |

| NT=0.1, AT=0.001 | 90.45 | 43.55 |

| NT=0.01, AT=0.01 | 88.4 | 48.03 |

| NT=0.01, AT=0.1 | 88.25 | 42.98 |

Appendix B Proofs of Theoretical Results

B.1 Proof of Claim in Section 3.3

Proof.

At epoch , the parameters of the global learner are distributed to the experts and each expert train from this initialization with steps by calculating the gradients (e.g. using SGD optimizer). Following [DBLP:journals/corr/abs-1803-02999], we approximate the update performed by the initialization based on the Taylor expansion:

| (10) | ||||

Recalling that represents an optimizer that updates the parameter vector at the -th step: . For each base-learner, we approximate the gradient at intervals:

| (11) | ||||

Replacing with and substituting for Eq. 10, we expand to leading order:

| (12) | ||||

Therefore, we take the expectation of over steps, and obtain:

| (13) |

Recalling that is mixed by and . For simplicity of exposition, we use and to stand for the scalar factors, meaning . Ignoring the higher order terms, for each expert initialized by the global learner (e.g. ), we have:

| (14) | ||||

The first term pushes to move forward the minimum of its assigned loss over its data distribution; while the second one improves generalization by increasing the inner product between gradients of different mini-batches and updating the parameters from the other task. ∎

B.2 Proof of Theorem 1

Before we present the proof of the Theorem we present useful intermediate results which we require in our proof.

Proposition 1.

Consider a sequence of loss functions drawn i.i.d. from some distribution is given to an algorithm that generates a sequence of hypotheses then the following inequality each hold w.p. :

| (15) |

Proof.

The proof of the Proposition can be directly derived from the Proposition 1 in [DBLP:journals/tit/Cesa-BianchiCG04]. ∎

Then we could immediately obtain the below inequality by the symmetric version of the Azuma-Hoeffding inequality [Azuma1967WEIGHTEDSO]

Remark 1.

| (16) |

Finally, we give the definition of the regret of minimizing any subproblem:

Definition 2.

(Subproblem Regret) Consider an algorithm generates the trajectory of states , the regret of such an algorithm on loss function is:

| (17) |

Theorem 2.

(Restated) Consider an algorithm with regret bound that generates the trajectory of states for two base learners, for any parameter state , given a sequence of convex surrogate evaluation functions drawn i.i.d. from some distribution , the expected error of the global learner on both tasks over the test set can be bounded with probability at least :

| (18) |

Proof.

From Theorem 1 and Remark 1, we obtain that

| (19) |

It is obvious that:

| (20) |

So we obtain:

| (21) |

Recalling that in Section 3.3, can be expressed by the linear combination of and through since is aggregateed by EMA, so the above inequality can be further derived by the Jensen’s inequality (convex surrogate functions could be selected to evaluate the test errors instead of the 0-1 loss):

| (22) | ||||

Note that this inequality also holds when applying weight averaging technique to the base-learner, because weight averaging is still the linear combination of all history states. ∎