Gaussian Multi-head Attention for Simultaneous Machine Translation

Abstract

Simultaneous machine translation (SiMT) outputs translation while receiving the streaming source inputs, and hence needs a policy to determine where to start translating. The alignment between target and source words often implies the most informative source word for each target word, and hence provides unified control over translation quality and latency; unfortunately, existing SiMT methods do not explicitly model the alignment to perform this control. In this paper, we propose Gaussian Multi-head Attention (GMA) to develop a new SiMT policy by modeling alignment and translation in a unified manner. For the SiMT policy, GMA models the aligned source position of each target word, and accordingly waits until its aligned position to start translating. To integrate the learning of alignment into the translation model, a Gaussian distribution centered on the predicted aligned position is introduced as an alignment-related prior, which cooperates with translation-related soft attention to determine the final attention. Experiments on En→Vi and De→En tasks show that our method outperforms strong baselines on the trade-off between translation quality and latency.

1 Introduction

Simultaneous machine translation (SiMT) Gu et al. (2017); Ma et al. (2019); Arivazhagan et al. (2019), which outputs translation before receiving the complete source sentence, is mainly used for streaming translation tasks, such as simultaneous interpretation, live broadcast and online translation. Different from full-sentence machine translation which waits for the complete source sentence, SiMT requires a policy to determine where to start translating when given the streaming inputs. The SiMT policy has to trade off between translation quality and latency and an ideal one should wait for the right number of source words, which are sufficient but not excess, until deciding to output target words Arivazhagan et al. (2019).

For full-sentence translation, each target word is generated based on the attended source information, where each source word provides a different amount of information for the target word. Among them, the most informative source word can be considered as the word aligned to the target word Garg et al. (2019). For SiMT, the alignment can therefore be a good guide for the policy to determine where to start translating. For high translation quality, the SiMT policy is supposed to start translating after receiving the aligned source word, to ensure enough source information for the translation. For low latency, the SiMT policy is expected not to wait for too many words after receiving the aligned source word. Therefore, if the alignment can be modeled explicitly in the SiMT model, translation quality and latency can be controlled in a unified manner for an ideal SiMT policy.

However, existing SiMT methods, which mostly employ a fixed or adaptive policy, do not reflect alignment information in their modeling, so they trade off translation quality and latency separately. A fixed policy, such as the wait-k policy Ma et al. (2019), waits for a fixed number of source words and then performs READ and WRITE operations one after another, so it is rule-based and precludes alignment modeling. An adaptive policy, such as MILk Arivazhagan et al. (2019) and MMA Ma et al. (2020), determines READ/WRITE operations by sampling from a Bernoulli distribution, as shown in Figure 1(a), where the decisions are made independently and no relationship between the decision and translation is introduced, so it has to employ an additional loss to control the latency. Besides, some methods Wilken et al. (2020); Arthur et al. (2021) apply external ground-truth alignments as the ideal position to start translating, but their performance is inferior to MMA because translation and alignment are modeled separately.

In this paper, to explicitly involve alignments in SiMT modeling, we propose Gaussian Multi-head Attention (GMA) to develop a SiMT policy with the guidance of alignments. To determine where to start translating with alignment, GMA first models the aligned source position of the current target word by predicting its relative distance from the previous aligned source position, called the incremental step, as shown in Figure 1(b). Meanwhile, a relaxation offset after the aligned position is set to allow the model to wait for some additional source inputs, thereby providing a controllable trade-off between translation quality and latency in practice. Accordingly, GMA starts translating after receiving the aligned source position and waiting for the extra relaxation offset. To jointly learn alignments (i.e., the SiMT policy) and translation, a Gaussian distribution centered on the predicted aligned position is introduced as a prior attention over the received source words. As a result, GMA uses for translation the posterior attention derived from the alignment-related Gaussian prior and the translation-related soft attention. Experiments on En→Vi and De→En SiMT tasks show that GMA outperforms strong baselines on the trade-off between translation quality and latency.

2 Background

GMA is applied on the multi-head attention in Transformer Vaswani et al. (2017), so we briefly introduce SiMT and the multi-head attention.

2.1 Simultaneous Machine Translation

In a translation task, we denote the source sentence as $\mathbf{x}=\{x_1,\dots,x_J\}$ and the corresponding source hidden states as $\mathbf{z}=\{z_1,\dots,z_J\}$ with source length $J$. The model generates a target sentence $\mathbf{y}=\{y_1,\dots,y_I\}$ and the corresponding target hidden states $\mathbf{s}=\{s_1,\dots,s_I\}$ with target length $I$. Different from full-sentence machine translation, the source words received by the SiMT model are incremental, and hence the model needs to decide where to output translation.

Output position Define $g(i)$ Ma et al. (2019) as a monotonic non-decreasing function of step $i$ that denotes the number of source words received by the SiMT model when translating $y_i$, i.e., $g(i)$ is the output position of $y_i$.

In SiMT, $g(i)$ is determined by the specific policy, and the probability of generating the target word $y_i$ is $p(y_i \mid \mathbf{x}_{\le g(i)}, \mathbf{y}_{<i})$, where $\mathbf{x}_{\le g(i)}$ is the first $g(i)$ source words and $\mathbf{y}_{<i}$ is the previous target words. Therefore, the decoding probability of $\mathbf{y}$ is calculated as:

$$p(\mathbf{y}\mid\mathbf{x}) = \prod_{i=1}^{I} p\left(y_i \mid \mathbf{x}_{\le g(i)}, \mathbf{y}_{<i}\right) \tag{1}$$
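To make the role of the output position concrete, the following minimal sketch computes $g(i)$ for a wait-k policy (the fixed policy of Ma et al. (2019), using the standard form $g(i)=\min(k+i-1, J)$) and lists the source prefix visible at each target step. The function names and the toy sentence are illustrative assumptions, not part of the original work.

```python
def wait_k_output_positions(k, source_len, target_len):
    """g(i) for wait-k: read k words first, then alternate WRITE and READ."""
    return [min(k + i - 1, source_len) for i in range(1, target_len + 1)]


def visible_prefixes(source_tokens, g):
    """Source prefix x_{<=g(i)} the model may condition on at each target step."""
    return [source_tokens[:g_i] for g_i in g]


# Hypothetical toy input, used only for illustration.
source = ["ich", "habe", "es", "gesehen"]
g = wait_k_output_positions(k=2, source_len=len(source), target_len=4)
prefixes = visible_prefixes(source, g)
for i, prefix in enumerate(prefixes, start=1):
    print(f"target step {i}: g(i)={g[i - 1]}, visible source={prefix}")
```

Any policy, fixed or adaptive, can be plugged into the same interface as long as it yields a monotonic non-decreasing sequence of output positions.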

2.2 Multi-head Attention

Multi-head attention Vaswani et al. (2017) contains multiple attention heads, where each attention head performs scaled dot-product attention. Our method is based on the cross-attention, where the queries are the target hidden states $\mathbf{s}$, and the keys and values both come from the source hidden states $\mathbf{z}$. The soft attention weight $e_{ij}$ is calculated as:

$$e_{ij} = \mathrm{softmax}\left(\frac{Q(s_i)\,K(z_j)^{\top}}{\sqrt{d_k}}\right) \tag{2}$$

where $Q(\cdot)$ and $K(\cdot)$ are projection functions from the input space to the query and key space respectively, and $d_k$ is the dimension of the inputs. Then the context vector $c_i$ is calculated as:

$$c_i = \sum_{j=1}^{J} e_{ij}\,V(z_j) \tag{3}$$

where $V(\cdot)$ is a projection function to the value space.
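The two attention equations above can be sketched for a single query in plain Python. This is only an illustration under simplifying assumptions: the query, key and value projections are assumed to have been applied already, a single head is used, and all names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(query, keys, values):
    """Scaled dot-product attention of one target query over source keys/values.

    `query`, each key and each value are already-projected vectors (assumption).
    Returns the soft attention weights and the context vector.
    """
    d_k = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d_k) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return weights, context


weights, context = cross_attention(
    query=[1.0, 0.0],
    keys=[[1.0, 0.0], [1.0, 0.0]],
    values=[[2.0], [4.0]],
)
```

With two identical keys the weights are uniform, so the context vector is the mean of the two values.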

3 The Proposed Method

The architecture of GMA is shown in Figure 2. For SiMT policy, GMA predicts the aligned source position of the current target word, and accordingly determines the output position. To integrate the learning of SiMT policy within the translation, we introduce a Gaussian prior centered on the predicted aligned position, which is multiplied with soft attention (Eq.(2)) to get final attention distribution. Due to the unimodality of Gaussian prior, it enables the model to learn the position that gets the highest soft attention (i.e., alignment), thereby developing a reasonable SiMT policy. Besides, since the Gaussian prior is continuous and differentiable, it can be integrated into the translation model directly and adjusted with the learning of translation.

3.1 Alignment-Guided SiMT Policy

Alignments prediction To develop a SiMT policy with alignments, GMA first predicts the aligned source position of the current target word. Due to the incrementality of streaming inputs in SiMT, it is unstable to directly predict the absolute position of the aligned source word. Instead, we predict the relative distance from the previous aligned source position, called incremental step.

Formally, we denote the aligned source position of the $i$-th target word as $p_i$ and the incremental step as $\Delta p_i$. Therefore, the aligned source position is calculated as:

$$p_i = p_{i-1} + \Delta p_i \tag{4}$$

where we set the initial aligned position $p_0$ to the first source word, and the incremental step $\Delta p_i$ is predicted through a multi-layer perceptron (MLP) based on the previous target hidden state $s_{i-1}$:

$$\Delta p_i = \exp\left(V_p^{\top}\tanh\left(W_p\, s_{i-1}\right)\right) \tag{5}$$

where $V_p$, $W_p$ are learnable parameters of the MLP.
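The recurrence for the aligned position can be sketched as follows. The exact MLP form is an assumption of this sketch: the step is taken to be the exponential of a one-hidden-layer tanh network, which keeps every step positive so the aligned position never moves backwards. The parameter names `W` and `v` and the toy values are hypothetical.

```python
import math


def incremental_step(prev_state, W, v):
    """Assumed form: exp(v^T tanh(W s_{i-1})), which is always positive."""
    hidden = [math.tanh(sum(w * s for w, s in zip(row, prev_state))) for row in W]
    return math.exp(sum(a * h for a, h in zip(v, hidden)))


def aligned_positions(prev_states, W, v, p0=1.0):
    """Accumulate steps: p_i = p_{i-1} + delta_p_i, starting at the first source word."""
    positions, p = [], p0
    for state in prev_states:
        p += incremental_step(state, W, v)
        positions.append(p)
    return positions


# Hypothetical decoder states and MLP parameters, for illustration only.
states = [[0.2, -0.1], [0.5, 0.3], [-0.4, 0.1]]
W = [[0.3, -0.2], [0.1, 0.4]]
v = [0.5, -0.5]
positions = aligned_positions(states, W, v)
```

Predicting a positive step rather than an absolute position means the predicted positions are monotonic by construction.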

SiMT policy Besides the predicted aligned position $p_i$, we also introduce a relaxation offset $\delta$ to allow the model to wait for some additional source inputs, thereby providing a controllable trade-off between translation quality and latency in practice. Specifically, the output position $g(i)$ (i.e., wait for the first $g(i)$ source words and then translate the $i$-th target word) is calculated as:

$$g(i) = \left\lfloor p_i + \delta \right\rfloor \tag{6}$$

where $\lfloor\cdot\rfloor$ is a floor operation. In our experiments, the relaxation offset $\delta$ is a hyperparameter that we set to obtain the translation quality under different latency levels. Overall, the SiMT policy is shown in Algorithm 1.
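The resulting READ/WRITE behaviour can be sketched as a small loop. This is a hedged illustration rather than the authors' Algorithm 1: the aligned positions are passed in as a precomputed list (in a real system each one is predicted on the fly from the decoder state), and clamping the output position to the source length is an assumption added so the loop terminates.

```python
import math


def gma_policy(aligned_positions, delta, source_len):
    """Emit READ until floor(p_i + delta) source words are received, then WRITE."""
    actions, received = [], 0
    for p_i in aligned_positions:
        g_i = min(math.floor(p_i + delta), source_len)  # clamp: assumption
        while received < g_i:
            actions.append("READ")
            received += 1
        actions.append("WRITE")
    return actions


actions = gma_policy([1.5, 2.2, 4.0], delta=1, source_len=4)
print(actions)
```

A larger relaxation offset shifts every WRITE later, which is how a single hyperparameter moves the model along the latency-quality curve.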

3.2 Integrating Alignment in Translation

To jointly learn the SiMT policy (i.e., aligned positions which determine latency) with translation, we weaken the attention of source words far away from the predicted aligned position in advance, thereby forcing the model to move the predicted aligned position to the source word that is most informative for translation (i.e., with the highest soft attention).

To this end, for the $i$-th target word, we introduce a Gaussian distribution centered on the aligned position $p_i$ as the prior probability $\alpha_{ij}$, calculated as:

$$\alpha_{ij} = \frac{1}{\sqrt{2\pi}\,\sigma_i}\exp\left(-\frac{(j-p_i)^2}{2\sigma_i^2}\right) \tag{7}$$

where the Gaussian distribution is limited to the first $g(i)$ source words. $\sigma_i$ is the standard deviation, which controls how quickly the prior probability attenuates with distance from the aligned position $p_i$. To prevent the prior probability of the furthest source word from being too small, we set $\sigma_i = p_i/2$ according to the “two-sigma rule” Pukelsheim (1994). We will compare the performance of different settings of the prior probability in Sec.6.1. Note that since the source position is discrete, we normalize the Gaussian distribution with $\sum_{j=1}^{g(i)}\alpha_{ij}$.

Given the prior probability $\alpha_{ij}$ and the soft attention $e_{ij}$ (calculated as Eq.(2)), which is considered as the likelihood probability, we calculate the posterior probability and normalize it as the final attention distribution $\beta_{ij}$:

$$\tilde{\beta}_{ij} = \alpha_{ij} \times e_{ij} \tag{8}$$

$$\beta_{ij} = \frac{\tilde{\beta}_{ij}}{\sum_{l=1}^{g(i)} \tilde{\beta}_{il}} \tag{9}$$

Then, the context vector $c_i$ is calculated as:

$$c_i = \sum_{j=1}^{g(i)} \beta_{ij}\,V(z_j) \tag{10}$$
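The combination of the Gaussian prior with the soft attention can be sketched as below. It is an illustrative sketch under stated assumptions: source positions are 1-indexed, the prior is normalized over the received positions only (so the constant Gaussian factor cancels), and the function names and example numbers are hypothetical.

```python
import math


def gaussian_prior(p_i, g_i, sigma):
    """Discrete Gaussian prior over the first g_i source positions, centered at p_i."""
    raw = [math.exp(-((j - p_i) ** 2) / (2 * sigma ** 2)) for j in range(1, g_i + 1)]
    total = sum(raw)
    return [r / total for r in raw]


def posterior_attention(soft_attention, prior):
    """Final attention: prior times likelihood (soft attention), renormalized."""
    unnormalized = [a * e for a, e in zip(prior, soft_attention)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


prior = gaussian_prior(p_i=3.0, g_i=4, sigma=1.5)
soft = [0.4, 0.3, 0.2, 0.1]  # hypothetical soft attention weights
final = posterior_attention(soft, prior)
```

Because the prior is unimodal, attention mass far from the predicted aligned position is damped, so the translation loss can only be served well if the predicted position sits near the source word with the highest soft attention.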

3.3 Adaptation to Multi-head Structure

When GMA is integrated into the Transformer with $N$ decoder layers and $H$ attention heads per layer, if multiple heads ($N \times H$ heads in total) independently predict their alignments, some outlier heads will cause unnecessary latency Ma et al. (2020); Zhang and Feng (2022a). Outlier heads arise when most heads are aligned to early positions while a few individual heads are aligned to much later positions, which requires the model to wait until the farthest aligned word is received.

Therefore, to better adapt to multi-head attention and capture alignments, for each decoder layer, the $H$ heads in GMA jointly predict the aligned source position and share it among heads, while the predicted alignments in each decoder layer still remain independent. Since the output position (determined by the predicted alignments) may differ across layers, the model starts translating after reaching the furthest one. We will compare the performance of different sharing settings in Sec.6.2.
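The rule that the model waits for the furthest layer can be sketched in a few lines. The sketch assumes one shared aligned position per decoder layer and the floor-plus-offset output position described in Sec.3.1; clamping to the source length and all names are assumptions made for illustration.

```python
import math


def model_output_position(p_per_layer, delta, source_len):
    """Overall output position: the furthest per-layer output position."""
    per_layer = [math.floor(p + delta) for p in p_per_layer]
    return min(max(per_layer), source_len)


# Hypothetical aligned positions predicted by three decoder layers.
g_i = model_output_position([2.3, 4.7, 3.1], delta=1, source_len=10)
print(g_i)
```

This also shows why independent per-head predictions are costly: every extra independent prediction is another candidate for the maximum.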

4 Related Work

A reasonable policy is the key to the SiMT performance. Early policies used segmented translation Bangalore et al. (2012); Cho and Esipova (2016); Siahbani et al. (2018). Gu et al. (2017) used reinforcement learning to train an agent to decide read/write. Alinejad et al. (2018) added a predict operation to the agent based on Gu et al. (2017).

Recent SiMT policies fall into fixed and adaptive categories. For fixed policies, Dalvi et al. (2018) proposed STATIC-RW, which first reads a fixed number of source words and then alternately reads and writes a fixed number of words. Ma et al. (2019) proposed the wait-k policy, which translates after lagging $k$ source words. Elbayad et al. (2020a) enhanced the wait-k policy by sampling different $k$. Zhang et al. (2021) proposed future-guided training for the wait-k policy. Han et al. (2020) applied meta-learning to wait-k. Zhang and Feng (2021a) proposed a char-level wait-k policy. Zhang and Feng (2021c) proposed a mixture-of-experts wait-k policy.

For adaptive policies, Zheng et al. (2019a) trained an agent with golden READ/WRITE actions generated by rules. Zheng et al. (2019b) added a “delay” token to read source words. Arivazhagan et al. (2019) proposed MILk, using a Bernoulli variable to determine READ/WRITE. Ma et al. (2020) proposed MMA to implement MILk on the Transformer. Bahar et al. (2020) and Wilken et al. (2020) used external ground-truth alignments to train the policy. Zhang et al. (2020) proposed an adaptive segmentation policy, MU, for SiMT. Liu et al. (2021) proposed a cross-attention augmented transducer for SiMT. Alinejad et al. (2021) introduced a full-sentence model to generate a ground-truth action sequence and accordingly train a SiMT policy. Miao et al. (2021) proposed a generative SiMT policy.

Previous methods often neglect to jointly model alignments with translation, and introduce additional loss functions to control the latency. In contrast, GMA jointly learns alignment and translation, and thereby controls the latency through a simple Gaussian prior probability.

5 Experiments

5.1 Datasets

We evaluate GMA on the following public datasets.

IWSLT15 (nlp.stanford.edu/projects/nmt/) English→Vietnamese (En→Vi) (133K pairs) Cettolo et al. (2015) We use TED tst2012 as the validation set (1553 pairs) and TED tst2013 as the test set (1268 pairs). Following the previous setting Raffel et al. (2017); Ma et al. (2020), we replace tokens whose frequency is less than 5 with $\langle unk\rangle$, and the vocabulary sizes are 17K and 7.7K for English and Vietnamese respectively.

WMT15 (www.statmt.org/wmt15/translation-task) German→English (De→En) (4.5M pairs) Following Ma et al. (2019), Arivazhagan et al. (2019) and Ma et al. (2020), we use newstest2013 as the validation set (3000 pairs) and newstest2015 as the test set (2169 pairs). BPE Sennrich et al. (2016) is applied with 32K merge operations and the vocabulary is shared across languages.

5.2 Systems Setting

We conduct experiments on the following systems.

Offline Conventional Transformer Vaswani et al. (2017) model for full-sentence translation.

Wait-k Wait-k policy proposed by Ma et al. (2019), the most widely used fixed policy with strong performance and simple structure, which first waits for $k$ source words and then alternately translates a target word and waits for a source word.

MU A segmentation policy proposed by Zhang et al. (2020), which classifies whether the current input is a complete meaning unit (MU), and then feeds each MU into the full-sentence MT model for translation until generating $\langle EOS\rangle$. We compare our method with MU on De→En (Big) since they report their results on De→En with Transformer-Big.

MMA (github.com/pytorch/fairseq/tree/master/examples/simultaneous_translation) Monotonic multi-head attention (MMA) proposed by Ma et al. (2020), the state-of-the-art adaptive policy. At each step, MMA predicts a Bernoulli variable to decide whether to start translating or wait for the next source token.

GMA Proposed method in Sec.3.

The implementations of all systems are adapted from the Fairseq library Ott et al. (2019) based on Transformer Vaswani et al. (2017). The setting is the same as Ma et al. (2020). For En→Vi, we apply Transformer-Small (6 layers, 4 heads per layer). For De→En, we apply Transformer-Base (6 layers, 8 heads per layer) and Transformer-Big (6 layers, 16 heads per layer). We evaluate these systems with BLEU Papineni et al. (2002) for translation quality and Average Lagging (AL) Ma et al. (2019) for latency. Average lagging is currently the most widely used latency metric, which evaluates the number of words lagging behind the ideal policy. Given $g(i)$, AL is calculated as:

$$\mathrm{AL} = \frac{1}{\tau}\sum_{i=1}^{\tau}\left[g(i) - \frac{i-1}{|\mathbf{y}|/|\mathbf{x}|}\right] \tag{11}$$

$$\tau = \min\left\{\, i \mid g(i) = |\mathbf{x}| \,\right\} \tag{12}$$

where $|\mathbf{x}|$ and $|\mathbf{y}|$ are the lengths of the source sentence and target sentence respectively.
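Average Lagging can be computed from a list of output positions in a few lines. The sketch below follows the definition of Ma et al. (2019), where the sum stops at the first target step that has received the whole source sentence; the function name and the fallback to the last step when the source is never fully read are assumptions of this sketch.

```python
def average_lagging(g, source_len, target_len):
    """Average Lagging for output positions g[0..], where g[i-1] is g(i)."""
    gamma = target_len / source_len
    # tau: first target step at which the full source has been received.
    tau = next((i for i, g_i in enumerate(g, start=1) if g_i >= source_len), len(g))
    return sum(g[i - 1] - (i - 1) / gamma for i in range(1, tau + 1)) / tau


wait2 = average_lagging([2, 3, 4, 5, 5], source_len=5, target_len=5)
offline = average_lagging([5, 5, 5, 5, 5], source_len=5, target_len=5)
print(wait2, offline)
```

For equal source and target lengths, a wait-2 policy lags the ideal policy by 2 words and full-sentence translation lags by the whole source length.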

5.3 Main Results

We compare GMA with Wait-k, MU and MMA, the current best representatives of fixed, segmentation and adaptive policies respectively, and plot latency-quality curves in Figure 3, where the GMA curve is drawn with various $\delta$ (in Eq.(6)), the Wait-k curve with various lagging numbers $k$, the MU curve with various classification thresholds of the meaning unit, and the MMA curve with various latency loss weights $\lambda$.

Compared with Wait-k, GMA achieves a significant improvement, since Wait-k ignores the alignments and thus a target word may be forced to be translated before its aligned source word is received, which seriously affects the translation quality. Compared with MMA and MU, our method achieves better performance under most latency levels. Since MU first segments the source sentence based on the meaning unit and then translates each segment with the full-sentence MT model, MU performs particularly well under high latency, but it is difficult to extend to lower latency.

Compared with the SOTA adaptive policy MMA, GMA has stable performance and simpler training method. MMA introduces two additional loss functions to control the latency, and meanwhile applies the expectation training to train Bernoulli variables Ma et al. (2020). GMA successfully balances the translation quality and latency without any additional loss function. Owing to the proposed Gaussian prior probability centered on predicted alignments, the source words far away from the aligned position get less Gaussian prior, so that the model is forced to move the aligned position close to the most informative source word, thereby capturing the alignments and controlling the latency. With GMA, the translation quality and latency are integrated into a unified manner and jointly optimized without any additional loss function.

6 Analysis

We conduct extensive analyses to study the specific improvements of GMA. Unless otherwise specified, all the results are reported on De→En (Base).

6.1 Ablation Study

| Variants | AL | BLEU |
|---|---|---|
| Aligned Source Position | | |
| Incremental step | 4.66 | 28.50 |
| Absolute position | 7.33 | 25.61 |
| Prior Probability | | |
| Gaussian (two-sigma $\sigma$, proposed) | 4.66 | 28.50 |
| Gaussian (larger $\sigma$) | 6.87 | 28.96 |
| Gaussian (smaller $\sigma$) | 4.55 | 27.61 |
| Gaussian (predicted $\sigma$) | 5.34 | 27.12 |
| Laplace | 8.14 | 29.19 |
| Linear | 12.83 | 27.86 |
| None | 1.48 | 20.84 |

We conduct sufficient ablation studies on the method of modeling aligned position and the proposed prior probability in Table 1. For modeling aligned source position, predicting incremental step performs better. Since the complete length of the streaming inputs in SiMT is unknown, predicting absolute position easily exceeds the source length, resulting in unnecessary latency. In practice, the value range of incremental step is more regular than absolute position and thus easier to learn.

Among the prior probabilities of different distributions, the Gaussian distribution performs best. The Laplace distribution is more fat-tailed than the Gaussian distribution (i.e., it places more prior probability on positions far away from the aligned position), resulting in a later learned aligned position and higher latency. The linear distribution performs worse since its attenuation with distance is smoother. When the prior probability is removed, the parameters that predict alignments receive no back-propagated gradient, so the model cannot learn the alignments at all, resulting in very low latency and poor translation quality. Focusing on the best-performing Gaussian prior probability, when $\sigma$ is larger (the attenuation degree is small), the predicted aligned position is much later and the latency is higher. When $\sigma$ is smaller, the translation quality declines since the prior of some source words far away from the aligned position is too small. When $\sigma$ is predicted, some small predicted values of $\sigma$ make the prior probability of distant source words almost 0, resulting in poor translation quality. In comparison, the Gaussian prior with the two-sigma setting that we propose not only guarantees a certain attenuation degree, but also assigns the furthest source word some prior probability.

6.2 Effect of Sharing Alignments

| #Predicted Alignments | AL | BLEU |
|---|---|---|
| All independent | 7.85 | 29.18 |
| Share among heads | 4.66 | 28.50 |
| Share among layers | 4.46 | 27.82 |
| Share all | 3.07 | 27.26 |

When integrated into multi-head attention, to reduce the overall latency of the model, GMA shares the predicted alignments among heads in each layer. Table 2 reports the performance of sharing alignments in different parts (all independent, among heads, among layers or among all).

‘All independent’ achieves the best translation quality and also brings a higher latency, as the overall latency of the model is determined by the farthest one among all predicted positions. Besides, ‘Share all’ gets the lowest latency but loses translation quality. Comparing ‘Share among heads’ and ‘Share among layers’, sharing among heads performs better, which is in line with the previous conclusion that there are obvious differences between the alignments in each decoder layer Garg et al. (2019); Voita et al. (2019); Li et al. (2019).

6.3 Statistics of Incremental Steps

Unlike MMA, which predicts the READ/WRITE action, GMA directly predicts the incremental step between adjacent target outputs. To analyze the advantages of modeling the incremental step, we show the distribution of step size (i.e., the number of source words waited for between two adjacent outputs) in Figure 4, where we select SiMT models with similar latency (AL ≈ 4.5).

For Wait-k, the step size distribution is blunt and there are only three cases, which occur before the start of translation (step size $k$: first lag $k$ words), during translation (step size 1: wait and output one word alternately), and after the end of the source inputs (step size 0: output the remaining translation at one time). The proposed GMA and MMA have obvious differences in the step size distribution. The step size distribution of GMA is more even, distributed only between 0 and 4, which shows that each source segment is shorter and thus the translating process is more streaming. The step size of MMA is more widely distributed: most steps are 0 (consecutively outputting target words), and there is also a large proportion of step sizes greater than 5, which shows that MMA tends to consecutively wait for more source words and then output more target words, resulting in longer source segments. Therefore, although GMA and MMA have similar latency (AL), their behavior in real applications is different: the translation process of GMA is more streaming, while that of MMA is more segmented.

Furthermore, to more accurately evaluate the streaming degree of the translation process, we apply Consecutive Wait (CW) Gu et al. (2017) as the latency metric to evaluate the systems. Consecutive wait evaluates the number of source words waited for between two target words, which reflects the streaming degree of SiMT. Given $g(i)$, CW is calculated as:

$$\mathrm{CW} = \frac{\sum_{i=1}^{|\mathbf{y}|}\left(g(i) - g(i-1)\right)}{\sum_{i=1}^{|\mathbf{y}|}\mathbb{1}_{g(i)-g(i-1)>0}} \tag{13}$$

where $\mathbb{1}_{g(i)-g(i-1)>0}$ counts the number of steps with $g(i)-g(i-1)>0$. In other words, CW measures the average source segment length (the best case is 1 for word-by-word streaming translation and the worst case is $|\mathbf{x}|$ for full-sentence MT): the smaller the CW, the shorter the average source segment and the more streaming the translation.
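Consecutive Wait can be computed directly from the output positions as the total number of source words read divided by the number of target steps that read at least one word. The sketch assumes that no source word has been read before the first target step; the function name is hypothetical.

```python
def consecutive_wait(g):
    """Average source segment length for output positions g (g[i-1] is g(i))."""
    previous, total_read, segments = 0, 0, 0  # assumes nothing is read before step 1
    for g_i in g:
        step = g_i - previous
        total_read += step
        if step > 0:
            segments += 1
        previous = g_i
    return total_read / segments if segments else 0.0


streaming = consecutive_wait([1, 2, 3])   # one new source word per target word
segmented = consecutive_wait([2, 2, 4])   # two segments of two words each
offline = consecutive_wait([5, 5, 5])     # whole source read before any output
print(streaming, segmented, offline)
```

Two policies with the same AL can differ widely in CW, which is why the metric is useful for judging how streaming the output actually is.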

As shown in Figure 5, GMA gets much smaller CW scores than MMA, where the average source segment length is about 2 words, which shows that GMA achieves more streaming translation than the previous adaptive policy.

Additionally, although the alignments between the two languages are not necessarily monotonic, we require the predicted incremental step $\Delta p_i \geq 0$ to guarantee that the model performs READ/WRITE monotonically. This is due to two considerations. First, we do not want to waste any useful source content, i.e., we avoid the model moving back to a previous position and thereby ignoring some received source information, which would be caused by $\Delta p_i < 0$. Second, we argue that monotonic alignments are more friendly to SiMT learning. We use fast-align (https://github.com/clab/fast_align) Dyer et al. (2013) to generate the ground-truth aligned source position of the $i$-th target token, denoted as $a_i$, and then show the distribution of distances between adjacent alignments under monotonic and non-monotonic alignments in Figure 6, where ‘Non-Monotonic’ measures the distance between the adjacent alignments $a_{i-1}$ and $a_i$ directly, and ‘Monotonic’ measures it after the alignments are constrained to be monotonic. The distance distribution between adjacent alignments under monotonic alignment is more concentrated, between 0 and 4, which makes the incremental step easier for the model to learn. In fact, the incremental steps predicted by GMA are almost all distributed between 0 and 4 (see Figure 4), which shows that GMA successfully learns the relative distance between monotonic alignments.

6.4 Quality of Predicted Alignments

| Latency | AER | Ground-truth aligned source words located before the output position |
|---|---|---|
| Low | 0.49 | 81.00% |
| Mid | 0.61 | 88.27% |
| High | 0.76 | 95.58% |

To evaluate the quality of the aligned source position predicted by GMA, we measure the alignment accuracy on the RWTH De→En alignment dataset (https://www-i6.informatik.rwth-aachen.de/goldAlignment/), whose reference alignment was manually annotated by experts Liu et al. (2016); Zhang and Feng (2021b). As shown in Table 3, we sample one decoder layer to calculate the alignment error rate (AER) Vilar et al. (2006), and meanwhile count how many ground-truth aligned source words are located before the output position (i.e., the model translates after receiving the aligned source word).

GMA achieves good alignment accuracy, especially at low latency, since the model is required to output immediately after receiving the aligned source words ($\delta$ in Eq.(6) is small). More importantly, most of the ground-truth alignments are within the first $g(i)$ source words, showing that in most cases the model starts translating a target word after receiving its aligned source words, which is beneficial to translation quality.
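Both quantities reported in Table 3 can be sketched as follows. The AER function uses the standard definition with sure and possible reference links; the second function computes the share of ground-truth aligned source words that fall within the output position. How predicted positions are turned into alignment links is not specified here, so the functions simply take sets of (target, source) pairs, and all names and toy data are hypothetical.

```python
def alignment_error_rate(predicted, sure, possible):
    """AER = 1 - (|A & S| + |A & P|) / (|A| + |S|), with S treated as a subset of P."""
    possible = possible | sure
    hits = len(predicted & sure) + len(predicted & possible)
    return 1.0 - hits / (len(predicted) + len(sure))


def received_ratio(gold_by_target, g):
    """Share of gold aligned source positions j with j <= g(i) for target step i."""
    pairs = [(i, j) for i, sources in gold_by_target.items() for j in sources]
    received = sum(1 for i, j in pairs if j <= g[i - 1])
    return received / len(pairs)


# Hypothetical toy links as (target index, source index), both 1-indexed.
aer = alignment_error_rate(
    predicted={(1, 1), (2, 3)},
    sure={(1, 1), (2, 2)},
    possible={(2, 3)},
)
ratio = received_ratio({1: [1], 2: [3], 3: [2, 4]}, g=[2, 2, 4])
print(aer, ratio)
```

The second measure is the one tied most directly to the policy: a gold link counts as received only if its source word arrives no later than the step's output position.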

6.5 Balancing Translation and Latency

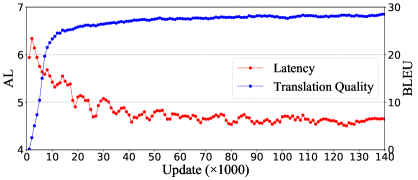

To study how GMA learns to balance translation quality and latency without any additional loss function during training, we draw a learning curve for translation quality and latency in Figure 7.

Initially, the high latency indicates that the model first moves the predicted aligned position to a further position, to learn the translation by seeing more source words. Then, as the number of training steps increases, the translation quality improves and the latency gradually decreases, which shows that for better translation, the model moves the predicted aligned source position to a more appropriate position due to the introduced Gaussian prior probability. Overall, for better translation, GMA constantly adjusts the predicted aligned source position to a suitable position and thereby controls the latency, which is completely different from the previous method of introducing the additional latency loss to constrain the latency with translation Ma et al. (2020); Miao et al. (2021).

6.6 Characteristics of Attention in GMA

We explore the characteristics of GMA by visualizing the attention distributions in Figure 8. We show two cases with the alignments of different difficulty levels, where the reverse orders in alignments are considered as a major challenge for SiMT Ma et al. (2019); Zhang and Feng (2021c).

For more monotonic alignments, GMA can predict the aligned source position well and output the target word after receiving the aligned word. Meanwhile, due to the characteristics of the Gaussian distribution, GMA can also avoid focusing too much on source words in the front positions and strengthen the attention on the newly received source words, which has been shown to be beneficial to SiMT performance Elbayad et al. (2020b); Zhang and Feng (2022b). For more complex alignments, the aligned position predicted by GMA is close to the ground-truth alignments, so that GMA starts translating after receiving most of the aligned words. Besides, GMA learns some implicit prediction ability, e.g., before receiving “worden sein”, GMA generates the correct translation “have been” based on the context. We attribute this to the fact that the predicted alignments during training are monotonic due to the incremental step, and modeling monotonic alignments forces the model to learn the correct translation from the incomplete source and previous outputs Ma et al. (2019).

7 Conclusion

In this paper, we propose Gaussian multi-head attention to develop a SiMT policy which starts translating a target word after receiving its aligned source word. Experiments and analyses show that our method achieves promising results on translation performance, alignment quality and streaming degree.

Acknowledgements

We thank all the anonymous reviewers for their insightful and valuable comments. This work was supported by National Key R&D Program of China (NO. 2017YFE0192900).

References

- Alinejad et al. (2021) Ashkan Alinejad, Hassan S. Shavarani, and Anoop Sarkar. 2021. Translation-based supervision for policy generation in simultaneous neural machine translation. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pages 1734–1744, Online and Punta Cana, Dominican Republic. Association for Computational Linguistics.

- Alinejad et al. (2018) Ashkan Alinejad, Maryam Siahbani, and Anoop Sarkar. 2018. Prediction improves simultaneous neural machine translation. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 3022–3027, Brussels, Belgium. Association for Computational Linguistics.

- Arivazhagan et al. (2019) Naveen Arivazhagan, Colin Cherry, Wolfgang Macherey, Chung-Cheng Chiu, Semih Yavuz, Ruoming Pang, Wei Li, and Colin Raffel. 2019. Monotonic infinite lookback attention for simultaneous machine translation. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 1313–1323, Florence, Italy. Association for Computational Linguistics.

- Arthur et al. (2021) Philip Arthur, Trevor Cohn, and Gholamreza Haffari. 2021. Learning coupled policies for simultaneous machine translation using imitation learning. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume, pages 2709–2719, Online. Association for Computational Linguistics.

- Bahar et al. (2020) Parnia Bahar, Patrick Wilken, Tamer Alkhouli, Andreas Guta, Pavel Golik, Evgeny Matusov, and Christian Herold. 2020. Start-before-end and end-to-end: Neural speech translation by AppTek and RWTH Aachen University. In Proceedings of the 17th International Conference on Spoken Language Translation, pages 44–54, Online. Association for Computational Linguistics.

- Bangalore et al. (2012) Srinivas Bangalore, Vivek Kumar Rangarajan Sridhar, Prakash Kolan, Ladan Golipour, and Aura Jimenez. 2012. Real-time incremental speech-to-speech translation of dialogs. In Proceedings of the 2012 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 437–445, Montréal, Canada. Association for Computational Linguistics.

- Cettolo et al. (2015) Mauro Cettolo, Jan Niehues, Sebastian Stüker, Luisa Bentivogli, R. Cattoni, and Marcello Federico. 2015. The IWSLT 2015 evaluation campaign.

- Cho and Esipova (2016) Kyunghyun Cho and Masha Esipova. 2016. Can neural machine translation do simultaneous translation?

- Dalvi et al. (2018) Fahim Dalvi, Nadir Durrani, Hassan Sajjad, and Stephan Vogel. 2018. Incremental decoding and training methods for simultaneous translation in neural machine translation. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 2 (Short Papers), pages 493–499, New Orleans, Louisiana. Association for Computational Linguistics.

- Dyer et al. (2013) Chris Dyer, Victor Chahuneau, and Noah A. Smith. 2013. A simple, fast, and effective reparameterization of IBM model 2. In Proceedings of the 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, pages 644–648, Atlanta, Georgia. Association for Computational Linguistics.

- Elbayad et al. (2020a) Maha Elbayad, Laurent Besacier, and Jakob Verbeek. 2020a. Efficient wait-k models for simultaneous machine translation.

- Elbayad et al. (2020b) Maha Elbayad, Michael Ustaszewski, Emmanuelle Esperança-Rodier, Francis Brunet-Manquat, Jakob Verbeek, and Laurent Besacier. 2020b. Online versus offline NMT quality: An in-depth analysis on English-German and German-English. In Proceedings of the 28th International Conference on Computational Linguistics, pages 5047–5058, Barcelona, Spain (Online). International Committee on Computational Linguistics.

- Garg et al. (2019) Sarthak Garg, Stephan Peitz, Udhyakumar Nallasamy, and Matthias Paulik. 2019. Jointly learning to align and translate with transformer models. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pages 4453–4462, Hong Kong, China. Association for Computational Linguistics.

- Gu et al. (2017) Jiatao Gu, Graham Neubig, Kyunghyun Cho, and Victor O.K. Li. 2017. Learning to translate in real-time with neural machine translation. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 1, Long Papers, pages 1053–1062, Valencia, Spain. Association for Computational Linguistics.

- Han et al. (2020) Hou Jeung Han, Mohd Abbas Zaidi, Sathish Reddy Indurthi, Nikhil Kumar Lakumarapu, Beomseok Lee, and Sangha Kim. 2020. End-to-end simultaneous translation system for IWSLT2020 using modality agnostic meta-learning. In Proceedings of the 17th International Conference on Spoken Language Translation, pages 62–68, Online. Association for Computational Linguistics.

- Li et al. (2019) Xintong Li, Guanlin Li, Lemao Liu, Max Meng, and Shuming Shi. 2019. On the word alignment from neural machine translation. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 1293–1303, Florence, Italy. Association for Computational Linguistics.

- Liu et al. (2021) Dan Liu, Mengge Du, Xiaoxi Li, Ya Li, and Enhong Chen. 2021. Cross attention augmented transducer networks for simultaneous translation. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pages 39–55, Online and Punta Cana, Dominican Republic. Association for Computational Linguistics.

- Liu et al. (2016) Lemao Liu, Masao Utiyama, Andrew Finch, and Eiichiro Sumita. 2016. Neural machine translation with supervised attention. In Proceedings of COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers, pages 3093–3102, Osaka, Japan. The COLING 2016 Organizing Committee.

- Ma et al. (2019) Mingbo Ma, Liang Huang, Hao Xiong, Renjie Zheng, Kaibo Liu, Baigong Zheng, Chuanqiang Zhang, Zhongjun He, Hairong Liu, Xing Li, Hua Wu, and Haifeng Wang. 2019. STACL: Simultaneous translation with implicit anticipation and controllable latency using prefix-to-prefix framework. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 3025–3036, Florence, Italy. Association for Computational Linguistics.

- Ma et al. (2020) Xutai Ma, Juan Miguel Pino, James Cross, Liezl Puzon, and Jiatao Gu. 2020. Monotonic multihead attention. In International Conference on Learning Representations.

- Miao et al. (2021) Yishu Miao, Phil Blunsom, and Lucia Specia. 2021. A generative framework for simultaneous machine translation. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pages 6697–6706, Online and Punta Cana, Dominican Republic. Association for Computational Linguistics.

- Ott et al. (2019) Myle Ott, Sergey Edunov, Alexei Baevski, Angela Fan, Sam Gross, Nathan Ng, David Grangier, and Michael Auli. 2019. fairseq: A fast, extensible toolkit for sequence modeling. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics (Demonstrations), pages 48–53, Minneapolis, Minnesota. Association for Computational Linguistics.

- Papineni et al. (2002) Kishore Papineni, Salim Roukos, Todd Ward, and Wei-Jing Zhu. 2002. Bleu: a method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, pages 311–318, Philadelphia, Pennsylvania, USA. Association for Computational Linguistics.

- Pukelsheim (1994) Friedrich Pukelsheim. 1994. The three sigma rule. The American Statistician, 48(2):88–91.

- Raffel et al. (2017) Colin Raffel, Minh-Thang Luong, Peter J. Liu, Ron J. Weiss, and Douglas Eck. 2017. Online and linear-time attention by enforcing monotonic alignments. In Proceedings of the 34th International Conference on Machine Learning, volume 70 of Proceedings of Machine Learning Research, pages 2837–2846. PMLR.

- Sennrich et al. (2016) Rico Sennrich, Barry Haddow, and Alexandra Birch. 2016. Neural machine translation of rare words with subword units. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 1715–1725, Berlin, Germany. Association for Computational Linguistics.

- Siahbani et al. (2018) Maryam Siahbani, Hassan Shavarani, Ashkan Alinejad, and Anoop Sarkar. 2018. Simultaneous translation using optimized segmentation. In Proceedings of the 13th Conference of the Association for Machine Translation in the Americas (Volume 1: Research Papers), pages 154–167, Boston, MA. Association for Machine Translation in the Americas.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. In I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, editors, Advances in Neural Information Processing Systems 30, pages 5998–6008. Curran Associates, Inc.

- Vilar et al. (2006) David Vilar, Maja Popovic, and Hermann Ney. 2006. AER: do we need to “improve” our alignments? In Proceedings of the Third International Workshop on Spoken Language Translation: Papers, Kyoto, Japan.

- Voita et al. (2019) Elena Voita, David Talbot, Fedor Moiseev, Rico Sennrich, and Ivan Titov. 2019. Analyzing multi-head self-attention: Specialized heads do the heavy lifting, the rest can be pruned. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 5797–5808, Florence, Italy. Association for Computational Linguistics.

- Wilken et al. (2020) Patrick Wilken, Tamer Alkhouli, Evgeny Matusov, and Pavel Golik. 2020. Neural simultaneous speech translation using alignment-based chunking. In Proceedings of the 17th International Conference on Spoken Language Translation, pages 237–246, Online. Association for Computational Linguistics.

- Zhang et al. (2020) Ruiqing Zhang, Chuanqiang Zhang, Zhongjun He, Hua Wu, and Haifeng Wang. 2020. Learning adaptive segmentation policy for simultaneous translation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), pages 2280–2289, Online. Association for Computational Linguistics.

- Zhang and Feng (2021a) Shaolei Zhang and Yang Feng. 2021a. ICT’s system for AutoSimTrans 2021: Robust char-level simultaneous translation. In Proceedings of the Second Workshop on Automatic Simultaneous Translation, pages 1–11, Online. Association for Computational Linguistics.

- Zhang and Feng (2021b) Shaolei Zhang and Yang Feng. 2021b. Modeling concentrated cross-attention for neural machine translation with Gaussian mixture model. In Findings of the Association for Computational Linguistics: EMNLP 2021, pages 1401–1411, Punta Cana, Dominican Republic. Association for Computational Linguistics.

- Zhang and Feng (2021c) Shaolei Zhang and Yang Feng. 2021c. Universal simultaneous machine translation with mixture-of-experts wait-k policy. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pages 7306–7317, Online and Punta Cana, Dominican Republic. Association for Computational Linguistics.

- Zhang and Feng (2022a) Shaolei Zhang and Yang Feng. 2022a. Modeling dual read/write paths for simultaneous machine translation. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland. Association for Computational Linguistics.

- Zhang and Feng (2022b) Shaolei Zhang and Yang Feng. 2022b. Reducing position bias in simultaneous machine translation with length-aware framework. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics, Dublin, Ireland. Association for Computational Linguistics.

- Zhang et al. (2021) Shaolei Zhang, Yang Feng, and Liangyou Li. 2021. Future-guided incremental transformer for simultaneous translation. Proceedings of the AAAI Conference on Artificial Intelligence, 35(16):14428–14436.

- Zheng et al. (2019a) Baigong Zheng, Renjie Zheng, Mingbo Ma, and Liang Huang. 2019a. Simpler and faster learning of adaptive policies for simultaneous translation. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pages 1349–1354, Hong Kong, China. Association for Computational Linguistics.

- Zheng et al. (2019b) Baigong Zheng, Renjie Zheng, Mingbo Ma, and Liang Huang. 2019b. Simultaneous translation with flexible policy via restricted imitation learning. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 5816–5822, Florence, Italy. Association for Computational Linguistics.

Appendix A Numerical Results

We additionally use Consecutive Wait (CW) Gu et al. (2017), Average Proportion (AP) Cho and Esipova (2016), Average Lagging (AL) Ma et al. (2019), and Differentiable Average Lagging (DAL) Arivazhagan et al. (2019) to evaluate the latency of GMA. The numerical results are shown in Tables 4, 5, and 6.

IWSLT15 EnVi (Small)

| | CW | AP | AL | DAL | BLEU |
|---|---|---|---|---|---|
| 0.9 | 1.20 | 0.65 | 3.05 | 4.08 | 27.95 |
| 1.0 | 1.27 | 0.68 | 4.01 | 4.77 | 28.20 |
| 2.0 | 1.49 | 0.74 | 5.47 | 6.37 | 28.44 |
| 2.2 | 1.60 | 0.77 | 6.04 | 6.96 | 28.56 |
| 2.5 | 1.74 | 0.78 | 6.55 | 7.55 | 28.72 |

WMT15 DeEn (Base)

| | CW | AP | AL | DAL | BLEU |
|---|---|---|---|---|---|
| 0.9 | 1.33 | 0.64 | 3.87 | 4.61 | 28.12 |
| 1.0 | 1.49 | 0.67 | 4.66 | 5.56 | 28.50 |
| 2.0 | 1.85 | 0.72 | 5.79 | 7.75 | 28.71 |
| 2.2 | 2.01 | 0.73 | 6.13 | 8.43 | 29.23 |
| 2.4 | 5.89 | 0.96 | 14.05 | 25.76 | 31.31 |

WMT15 DeEn (Big)

| | CW | AP | AL | DAL | BLEU |
|---|---|---|---|---|---|
| 1.0 | 1.54 | 0.68 | 4.60 | 5.89 | 30.20 |
| 2.0 | 1.98 | 0.74 | 6.34 | 8.18 | 30.64 |
| 2.2 | 2.13 | 0.75 | 6.86 | 8.91 | 31.33 |
| 2.4 | 2.28 | 0.76 | 7.28 | 9.59 | 31.62 |
| 2.5 | 3.10 | 0.88 | 12.06 | 20.43 | 31.91 |
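To make two of the latency columns concrete, the following is a minimal sketch of how AP and AL are commonly computed from a read schedule, following the standard definitions in Cho and Esipova (2016) and Ma et al. (2019). It is not the evaluation script used for the results above. The function names, the list `g` (number of source tokens read when each target token is written), and the example schedule are all hypothetical and chosen only for illustration.

```python
from typing import Sequence


def average_proportion(g: Sequence[int], src_len: int) -> float:
    """AP: mean fraction of the source already read when each target token is written."""
    return sum(g) / (src_len * len(g))


def average_lagging(g: Sequence[int], src_len: int) -> float:
    """AL: average lag behind an ideal diagonal policy, measured up to the cut-off step."""
    rate = len(g) / src_len  # target-to-source length ratio r = |y| / |x|
    # tau: first target step (1-based) at which the whole source has been read.
    # Assumption: if the schedule never reads the full source, use the last step.
    tau = next((t for t, read in enumerate(g, start=1) if read >= src_len), len(g))
    return sum(g[t - 1] - (t - 1) / rate for t in range(1, tau + 1)) / tau


# Hypothetical schedule: wait for 3 source tokens, then alternate read/write
# (6-token source, 6-token target).
g = [3, 4, 5, 6, 6, 6]
print(average_proportion(g, 6))
print(average_lagging(g, 6))
```

For this illustrative schedule the lag is exactly 3 source tokens at every step before the source is exhausted, so AL evaluates to 3, while AP is 30/36. CW and DAL are omitted here because they need the full read/write action sequence or a recursive correction, respectively.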