Gaussian in the Wild: 3D Gaussian Splatting for Unconstrained Image Collections

Abstract

Novel view synthesis from unconstrained in-the-wild images remains a meaningful but challenging task. The photometric variation and transient occluders in those unconstrained images make it difficult to reconstruct the original scene accurately. Previous approaches tackle the problem by introducing a global appearance feature in Neural Radiance Fields (NeRF). However, in the real world, the unique appearance of each tiny point in a scene is determined by its independent intrinsic material attributes and the varying environmental impacts it receives. Inspired by this fact, we propose Gaussian in the wild (GS-W), a method that uses 3D Gaussian points to reconstruct the scene and introduces separated intrinsic and dynamic appearance feature for each point, capturing the unchanged scene appearance along with dynamic variation like illumination and weather. Additionally, an adaptive sampling strategy is presented to allow each Gaussian point to focus on the local and detailed information more effectively. We also reduce the impact of transient occluders using a 2D visibility map. More experiments have demonstrated better reconstruction quality and details of GS-W compared to NeRF-based methods, with a faster rendering speed. Video results and code are available at https://eastbeanzhang.github.io/GS-W/.

Keywords:

Novel view synthesis 3D Gaussian Splatting Unconstrained image collections

1 Introduction

Novel view synthesis has long been a high-profile and complicated task in computer vision which aims to recover the 3D structure of a scene from 2D image collections and plays a significant role in many applications like virtual reality (VR) and autonomous driving. Recently, implicit representations, especially Neural Radiance Field (NeRF)[26] and its subsequent work [1, 2, 27] have shown impressive progress in rendering photorealistic images from arbitrary viewpoints.

Meanwhile, explicit representations have also drawn increasing attention thanks to their real-time rendering speed. 3D Gaussian Splatting(3DGS)[16] introduces 3D Gaussian as a novel and flexible scene representation and designs a fast differentiable rendering approach, allowing real-time rendering while maintaining high-fidelity for view synthesis.

However, these aforementioned methods only focus on static scenes from which images are captured without transient occluders and dynamic appearance variation such as ever-changing sky, weather, and illumination. Unfortunately, in practice, input unconstrained image collections may be captured by cameras with different settings at different times, and typically include pedestrians or vehicles. The assumption of the previous methods that images must be captured in static scenes is severely violated, resulting in sharp performance degradation[23, 50].

Several recent attempts [23, 6, 41, 19] adopt a global latent embedding for each image, granting their model the ability to handle the appearance variations between images. CR-NeRF[50] proposes a new cross-ray paradigm that utilizes information from multiple rays to obtain colors of several pixels, achieving more realistic and efficient appearance modeling. Nonetheless, the methods above still suffer from three defects: 1) Global appearance representation: The representation they use to control the dynamic appearance variation is shared by the whole scene, which may struggle to describe local high-frequency changes. By contrast, in the 3D real world, every single point of the scene has its appearance feature which brings them unique gloss and texture in different views; 2) Blurring intrinsic and dynamic appearance: An object’s intrinsic appearance is determined by its own material and surface properties while dynamic appearance is affected by environmental factors like highlight and shadow. Previous approaches blur the two appearances together, causing confusion in applications such as appearance tuning; 3) High time cost: Similar to volume rendering based methods, most NeRF-based approaches above suffer from high training costs and low rendering speed due to a large amount of network evaluation.

To address these challenges, we propose Gaussian in the wild (GS-W), a method to achieve high-quality and flexible scene reconstruction for unconstrained image collections. Specifically, we first use 3D Gaussian points to represent the scene and introduce independent appearance features to each point, enabling their unique appearance expression. Second, the intrinsic and dynamic appearance is separated and an adaptive sampling strategy is presented to grant every point the freedom to focus on various detailed dynamic appearance information. Additionally, the scene rendering speed is significantly accelerated thanks to the tile-based rasterizer.

Our contribution can be summarized as follows:

-

•

We propose a new framework GS-W, a 3D Gaussian Splatting based method, in which each Gaussian point is equipped with separated intrinsic and dynamic appearance features to enable more flexible varying appearance modeling from unconstrained image collections.

-

•

To better incorporate environmental factors from the image into the scene, we propose adaptive sampling, allowing each point to sample dynamic appearance features more effectively from the feature maps, thereby focusing on more local and detailed information.

-

•

Experimental results demonstrate that our method not only outperforms the state-of-the-art NeRF-based methods in terms of quality but also surpasses them in rendering speed by over .

2 Related Work

2.1 3D representations

Diverse 3D representations are developed to represent the geometric and appearance information of three-dimensional objects or scenes, among which implicit and explicit representation are two common methods for practical applications like 3D object generation and scene reconstruction. Implicit representation represents 3D data as continuous functions or fields, like occupancy fields[24], distance fields [29], color, and density. NeRF [26] is an outstanding work among them which models the scene as a continuous field of density and radiance. NeRF indicates that using MLP can represent complex scenes and render novel photo-realistic views with the help of volume rendering. On the contrary, explicit representation describes scenes by storing and manipulating 3D data using discrete structures like meshes[45, 14, 15], point clouds[31, 32, 40], voxels[47, 48, 38] and so on. More recently, methods represented by 3DGS[16] have entered researchers’ vision by their real-time rendering speed while preserving high-resolution synthesis quality. An efficient tile-based rasterizer is introduced by 3DGS to splat Gaussians to the image plane and accelerate the rendering speed many times compared to previous methods. Many researchers are exploring its potential by extending it to various tasks[46, 33, 52]. Several hybrid representations [4, 3, 5, 10, 39] are also emerging and creating more possibilities.

2.2 Novel view synthesis

Synthesizing arbitrary views of a scene using a set of 2D images is a long-standing problem in computer vision. Many NeRF-based methods[27, 11, 53, 12, 34] have achieved expressive synthesis quality [1, 49, 42] along with good view-consistency[7, 43, 28]. Mip-NeRF[1] replaces the rays with a 3D conical frustum and proposes integrated position embedding to anti-aliasing. Instant-NGP [27] introduces multi-resolution hash encoding that permits the use of a smaller network to reduce the training cost. There are also some Gaussian-based methods[51, 22, 9, 54] contributing to this task by modifying 3DGS. For example, to handle scenes with specular elements, Spec-Gaussian[51] departs from using spherical harmonics and instead adopts an anisotropic spherical Gaussian appearance field to model each point. Since these aforementioned methods all assume that input images are captured in a static scene, their performance declines intensely when reconstructing from unconstrained photo collections. Thus, several attempts[25, 23, 36, 6, 50, 19] are proposed to address this challenging in-the-wild task by handling appearance variation and transient occluders. Other works [20, 21] focus on scenes with time-varying appearances, while methods [55, 18, 8] use physical rendering models for diverse lighting conditions. This field still faces some remaining issues and looks forward to advancements.

As one of them, our proposed method tries to push the field one step forward by achieving a more delicate and flexible synthesis with higher rendering speed, through our modifications mentioned in Sec. 4.

3 Preliminaries

3D Gaussian Splatting (3DGS)[16] is a method for reconstructing 3D scenes from static images with camera pose information. It uses explicit 3D Gaussian points to represent the scene and achieves real-time image rendering through a differentiable tile-based rasterizer. These Gaussian points’ positions are initialized with point clouds extracted by SFM[37] from the image set. Particularly, it uses 3D covariance to model the impact of each Gaussian point on the color anisotropy of the surrounding area:

| (1) |

For ease of optimizing the covariance while maintaining its positive semi-definiteness, the method decomposes the covariance of each Gaussian point into a scaling matrix and a rotation matrix , which are then stored as the Gaussian point attributes and respectively, using 3D vectors and quaternions.

| (2) |

Additionally, each Gaussian point is equipped with two more attributes: opacity and color , with the color attribute represented by third-order spherical harmonic coefficients. When rendering, besides projecting each Gaussian point onto a grid of tiles on the image plane, the 3D covariance is projected to 2D using the viewing transformation and the Jacobian of the affine approximation of the projective transformation :

| (3) |

Then, based on the Gaussian points sorted by the rasterizer, the color of each pixel is aggregated using -blending:

| (4) | ||||

| (5) |

Where represents the position of a pixel, and denotes the sorted Gaussian points associated with that pixel. The final rendered image is then used to compute loss with reference images for training, jointly optimizing all Gaussian attributes. Moreover, it devises a strategy for point growth and pruning based on gradients and opacity.

4 Method

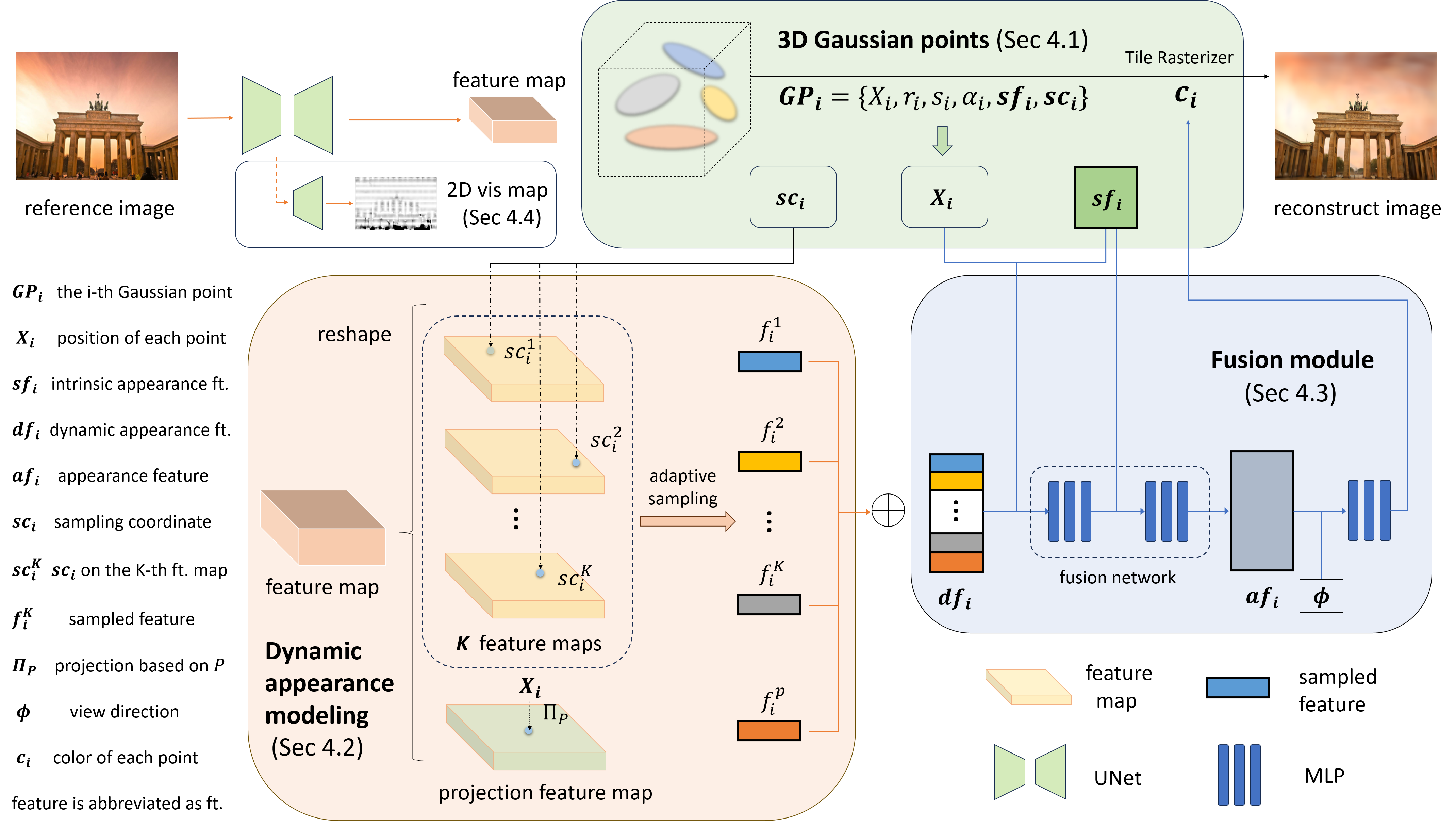

Based on previous analysis, aforementioned NeRF-based methods[23, 6, 50] lack enough attention to high-frequency and local detailed information in appearance, along with significant rendering costs due to the large number of sampling points. To address these issues, we utilize 3D Gaussian points to explicitly model the scene in Sec. 4.1 and introduce a new appearance modeling method for each Gaussian point in Sec. 4.2. Intrinsic and dynamic appearance features are separated and then fused in Sec. 4.3. Additionally, when calculating the losses in Sec. 4.5, a visibility map is employed to reduce the impact of transient objects in Sec. 4.4. The whole pipeline is visualized as Fig. 2.

4.1 3D Gaussian Splatting in the Wild

Appearance. Since 3DGS[16] is designed for reconstructing static scenes, GS-W abandons the conventional color modeling approach using spherical harmonic coefficients. Instead, in the subsequent section, we introduce a new appearance feature for each Gaussian point, adapting to variations in the reference image by fusing intrinsic appearance feature with dynamic appearance feature extracted from the image.

Transient object. Handling transient objects is also challenging for 3DGS, in which Gaussian points around transient object regions may receive gradients and move or grow, bringing the emergence of meaningless floating points and rendering artifacts. Therefore, a visibility map is employed to mitigate this issue in Sec. 4.4. In addition, we maintain the pruning and growing strategies for points to reconstruct buildings that are absent in the initial point cloud.

4.2 Dynamic appearance features modeling

In the real physical world, even within the same scene, most object points experience varying environmental influences, such as light from different directions. Combined with unique intrinsic material properties, each point displays a different color, gloss, and texture. NeRF-W[23] and Ha-NeRF[6] employ a global feature embedding from the reference image for the entire scene’s appearance, assuming uniform environmental information across all points. CR-NeRF[50] integrates reference image features into rendered image features at the 2D level, lacking dynamic environmental information in 3D. These methods tend to roughly restore the global color tone of the scene and struggle to capture local details such as highlight and shadow. To address this, we introduce diverse information for each point, better aligning with real-world scenarios.

Projection feature map. We extract 2D features from the image and then map each point to the 2D space for feature sampling. With the known camera pose of the image, the mapping relationship from 3D points to the 2D image can be determined. Therefore, from the image we extract a projection feature map , onto which each 3D point is projected using a projection matrix , followed by the bilinear interpolation feature sampling, as illustrated below:

| (6) |

where represents the position coordinate of the i-th Gaussian point, while denotes the features sampled from the projection feature map for this Gaussian point, constituting a portion of its dynamic appearance feature. The term indicates the bilinear interpolation sampling.

By employing this method, Gaussian points along different rays can effectively capture features at their corresponding positions on the reference image.

K feature maps. Limited by the single-view reference image , features sampled from the projection feature map are identical along the same ray, which is unreasonable under uneven lighting. Besides, since the reference image sometimes only contains part of the scene, many points may not be projected into effective regions for obtaining valid feature samples, causing inconsistencies in new viewpoints. To address these issues and grant Gaussian points the freedom to focus on diverse information, we propose extracting additional feature maps from to construct a high-dimensional sampling space. Each Gaussian point is mapped to these maps respectively for adaptive sampling, allowing them to focus on high-frequency features.

Adaptive sampling. It is necessary to map the Gaussian points to different positions on K feature maps, to better focus on diverse information. Inspired by the motive of each Gaussian point independently learning its own attributes, we consider it an efficient way to allow every point to determine its sampling positions through self-learning. Therefore, we assign each Gaussian point with K learnable sampling coordinate attributes , enabling them to adaptively select the information they need to focus on. The sampling process using these K sampling coordinates in K feature maps is as follows:

| (7) |

where represents the features sampled from K feature maps for the i-th Gaussian point. Next, we concatenate the sampled features with to jointly represent the dynamic appearance feature of the Gaussian point as follows:

| (8) |

During training, to prevent sampling coordinates from deviating beyond the effective sampling range, we introduce a regularization term:

| (9) |

where N represents the total number of Gaussian points, and denotes the absolute value.

Feature maps extraction. We utilize a Unet[35] model with ResNet[13] backbone to generate both the projection feature map and K feature maps. The Unet model takes the reference image as input and produces a 2D feature map of the same spatial size. Subsequently, this feature map is evenly divided along the channel dimension into feature maps, each serving as one of the K feature maps , and the projection feature map . We choose Unet as the feature extractor due to its simplicity and effectiveness in extracting features from images at the same scale.

4.3 Intrinsic and dynamic appearance

Separation of intrinsic and dynamic appearance. As mentioned before, an object’s appearance is influenced by both its intrinsic material as well as surface properties, and dynamic environmental factors. However, previous methods like Ha-NeRF primarily rely on image features, positional data, and viewing direction to decode appearance using MLPs. This implicit modeling of the scene’s intrinsic attributes through MLPs makes it difficult to express the high-frequency features solely with small MLPs, thus relying on the extracted dynamic features to capture comprehensive information. Consequently, such blurring of features hinders the model’s ability to distinguish between intrinsic and dynamic appearance accurately, particularly in scenarios involving changes in illumination and weather conditions. To address this, we explicitly separate the scene appearance into two forms: intrinsic and dynamic appearance features.

Similar to modeling the static Gaussian points’ positions, we assign a new learnable intrinsic appearance attribute to each Gaussian point. Meanwhile, the dynamic appearance features are obtained by features extracted from the reference image.

Fusion of intrinsic and dynamic appearance features. After acquiring independent dynamic appearance features for each Gaussian point through adaptive sampling, it’s essential to combine them with the corresponding intrinsic appearance features to generate a comprehensive appearance feature . To accomplish this, we design a fusion network composed of two MLPs, which takes two appearance features and position information as inputs and produces a holistic appearance feature influenced by both. Specifically:

| (10) |

When rendering images, the fused appearance feature , along with the view direction , are jointly decoded by an MLP to obtain the color of the i-th Gaussian point, as in Eq. 11. Finally, the Gaussian points are rendered using a differentiable tile rasterizer and colors are aggregated according to Eq. 5, producing image with the appearance features of the reference image.

| (11) |

4.4 Transient objects handling

To mitigate the impact of transient objects and prevent the occurrence of artifacts like floating points, we employ a 2D visibility map obtained from a Unet model, facilitating accurate segmentation between transient and static objects. Leveraging the visibility map, we weight the loss calculation between the reference image and the rendered image , as Sec. 4.5. During training, the model often struggles to reconstruct the geometry and appearance of transient objects, leading to larger losses in regions containing such objects. Therefore, in unsupervised scenarios, the 2D visibility map tends to decrease the visibility of transient objects to minimize the training loss. As a result, regions with higher visibility receive more emphasis, while those with lower visibility are disregarded. Additionally, to prevent the visibility map from marking all pixels invisible, we introduce a regularization loss term for the visibility map:

| (12) |

4.5 Optimization

Similar to [16], we apply two types of the loss function, and [44], to calculate the pixel error between the rendered image and the reference image . Differently, a visibility map is incorporated to guide the supervision of rendered images by reference images. Moreover, we introduce the perceptual loss [56]. Therefore, the overall image loss function is represented as in Sec. 4.5, where denotes the Hadamard product. Combining the regularization loss terms mentioned in Eq. 9 and Eq. 12, the total loss function is formulated as in Eq. 14, where , , , and are 0.8, 0.2, 0.005, 0.001, 0.15, respectively.

| (13) |

| (14) |

5 Experiments

5.1 Implementation details

We implement our method using Pytorch [30] and train our networks with Adam optimizer [17]. We set in our experiment as the increasing K brings no performance improvement with meaningless computational cost. We train the full model on a single Nvidia RTX 3090 GPU for steps and downsample all the images 2 times during training and evaluation, which takes approximately 2 hours. We also perform the adaptive control of the 3D Gaussians and follow other hyperparameter settings similar to 3DGS [16].

| Brandenburg Gate | Sacre Coeur | Trevi Fountain | |||||||

|---|---|---|---|---|---|---|---|---|---|

| PSNR | SSIM | LPIPS | PSNR | SSIM | LPIPS | PSNR | SSIM | LPIPS | |

| 3DGS | 19.33 | 0.8838 | 0.1317 | 17.70 | 0.8454 | 0.1761 | 17.08 | 0.7139 | 0.2413 |

| NeRF-W | 24.17 | 0.8905 | 0.1670 | 19.20 | 0.8076 | 0.1915 | 18.97 | 0.6984 | 0.2652 |

| Ha-NeRF | 24.04 | 0.8873 | 0.1391 | 20.02 | 0.8012 | 0.1710 | 20.18 | 0.6908 | 0.2225 |

| CR-NeRF | 26.53 | 0.9003 | 0.1060 | 22.07 | 0.8233 | 0.1520 | 21.48 | 0.7117 | 0.2069 |

| GS-W (Ours) | 27.96 | 0.9319 | 0.0862 | 23.24 | 0.8632 | 0.1300 | 22.91 | 0.8014 | 0.1563 |

| Brandenburg Gate | Sacre Coeur | Trevi Fountain | |

|---|---|---|---|

| 3DGS | 221 | 268 | 198 |

| NeRF-W | 0.0518 | 0.0514 | 0.0485 |

| Ha-NeRF | 0.0489 | 0.0497 | 0.0498 |

| CR-NeRF | 0.0445 | 0.0447 | 0.0446 |

| Ours | 55.8 | 58.3 | 38 |

| Ours-cache | 221 | 301 | 197 |

5.2 Evaluation

Dataset, metrics, baseline. We evaluate our proposed method on three scenes from the PhotoTourism dataset: Brandenburg Gate, Sacre Coeur, and Trevi Fountain, which include varying appearances and transient objects. For quantitative comparison, we use PSNR, SSIM[44], and LPIPS[56] as metrics to assess the performance of our method. We also present rendered images generated from the same pose as the input view for visual inspection. To demonstrate the superiority of our method, we evaluate our proposed method against 3DGS[16], NeRF-W [23], Ha-NeRF[6], and CR-NeRF[50].

Quantitative comparison. Quantitative results are shown in Tab. 1. 3DGS performs poorly on both PSNR and SSIM metrics, as it does not explicitly model the appearance variation and transient objects. NeRF-W and Ha-NeRF achieve moderate performance by introducing a global appearance embedding and anti-transient module. It’s worth noting that NeRF-W needs to optimize the appearance embedding of test images, thus the comparison with NeRF-W is unfair. CR-NeRF achieves competitive performance due to cross-ray manner. Utilizing an adaptive sampling strategy that allows each point to focus on local details, our method outperforms the baselines on three scenes in terms of PSNR, SSIM, and LPIPS, which verifies that we can capture more details and render higher-quality images.

Render speed. To compare the render speed of different methods during inference, we experiment on the three scenes by setting the image resolution to and calculating the average rendering time per image using a single RTX 3090 GPU. The time taken for feature extraction from the reference images in Ha-NeRF, CR-NeRF, and our method is included in the overall inference time. As shown in Tab. 2, our method achieves a significant improvement in rendering speed, which is 1000 faster than previous NeRF-based methods.

Since our method only requires one feature extraction step and can cache appearance feature for each Gaussian point, only a small MLP decoder is needed for color decoding when synthesizing a novel view. This enables GS-W to achieve a 200 FPS rendering speed, comparable to 3DGS.

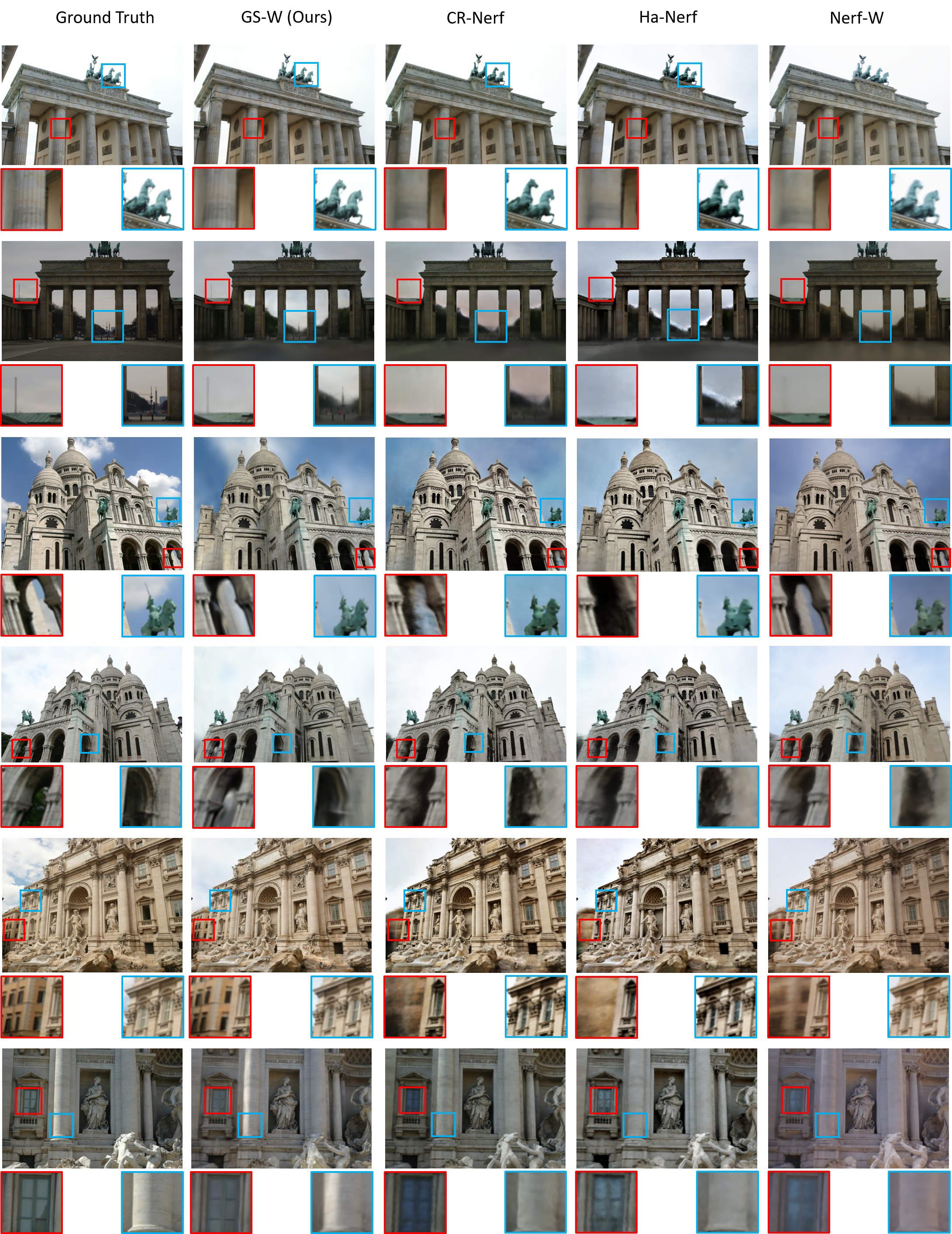

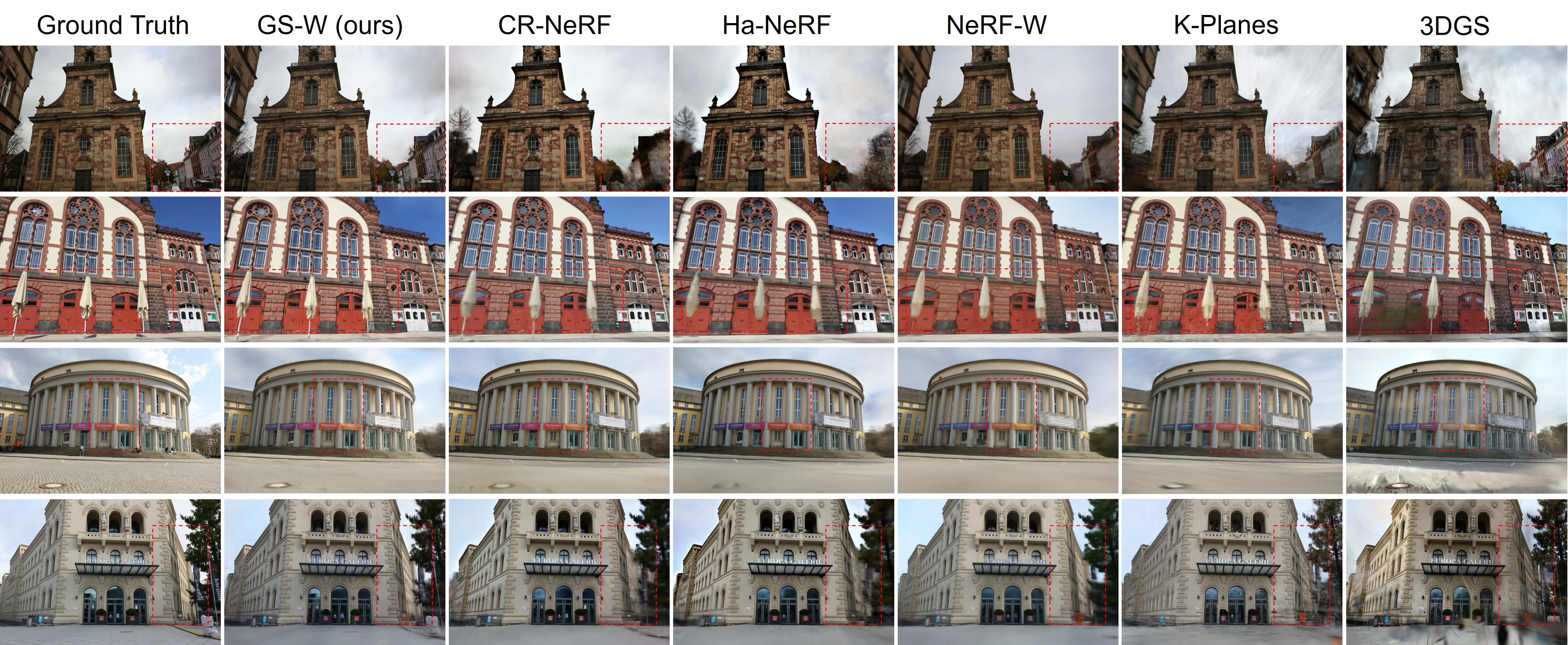

Qualitative comparison. Fig. 3 presents the qualitative result for all methods. NeRF-W and Ha-NeRF can model varying appearances from the reference image by introducing a global appearance embedding. CR-NeRF can reconstruct better geometry and model appearance variation compared with Ha-NeRF and NeRF-W. However, they all struggle to reconstruct the details of scenes in the distance and the intricate textures of the scenes, e.g. the door pillars and distant tower in Brandenburg, the cavity in Sacre, and the distant buildings in Trevi. In contrast, thanks to the higher-frequency dynamic appearance features, our method recovers more accurate appearance details, e.g. the horse sculpture in Brandenburg, the sky and clouds in Sacre, and the light on columns in Trevi.

5.3 Ablation studies

We summarize the ablation studies of our method on Brandenburg, Sacre, and Trevi datasets in Tab. 3 and produce qualitative results in Fig. 4 and Fig. 5 to validate the effectiveness of each component.

Without the visibility map. Removing the transient object handling module leads to higher metric performances but introduces artifacts in the rendered image due to the influence of transient objects, as depicted in Fig. 4. Since most test images lack dynamic objects, these artifacts may not significantly impact the metrics.

Without K feature maps or projection feature map. The removal of K feature maps or the projection feature map results in performance degradation. Especially, synthesizing novel views without the K feature maps not only reduces the ability to capture information from the reference image but also produces view-inconsistent appearances, as shown in Fig. 4.

Without adaptive sampling. Retaining K feature maps while keeping the sampling coordinates fixed for each point significantly decreases performance. This highlights the importance of our adaptive sampling strategy for K feature maps, enabling Gaussian points to adaptively focus on local and detailed features.

| Brandenburg Gate | Sacre Coeur | Trevi Fountain | |||||||

|---|---|---|---|---|---|---|---|---|---|

| PSNR | SSIM | LPIPS | PSNR | SSIM | LPIPS | PSNR | SSIM | LPIPS | |

| w/o visibility map | 28.64 | 0.9324 | 0.0867 | 23.76 | 0.8723 | 0.1234 | 23.03 | 0.8025 | 0.1532 |

| w/o K feature maps | 26.37 | 0.9210 | 0.1018 | 21.59 | 0.8521 | 0.1417 | 22.27 | 0.7859 | 0.1700 |

| w/o Projection map | 26.61 | 0.9285 | 0.0955 | 22.99 | 0.8635 | 0.1320 | 22.33 | 0.7980 | 0.1580 |

| w/o adaptive sampling | 26.44 | 0.9219 | 0.0961 | 21.45 | 0.8479 | 0.1439 | 22.18 | 0.7866 | 0.1674 |

| w/o separation | 28.22 | 0.9291 | 0.0942 | 22.94 | 0.8520 | 0.1469 | 23.27 | 0.7907 | 0.1714 |

| full (Ours) | 27.96 | 0.9319 | 0.0862 | 23.24 | 0.8632 | 0.1300 | 22.91 | 0.8014 | 0.1563 |

Without separation. We eliminate the intrinsic feature and predict the color of Gaussian points by the dynamic appearance feature only. The results in Tab. 3 show a noticeable decrease in both LPIPS and SSIM, which are more in line with human visual perception. In Fig. 5, the absence of dynamic appearance features results in incomplete scene appearance. This emphasizes the significance of intrinsic feature in retaining essential scene characteristics. Both illustrate that separation is beneficial for the model to accurately comprehend and reconstruct sharp appearances.

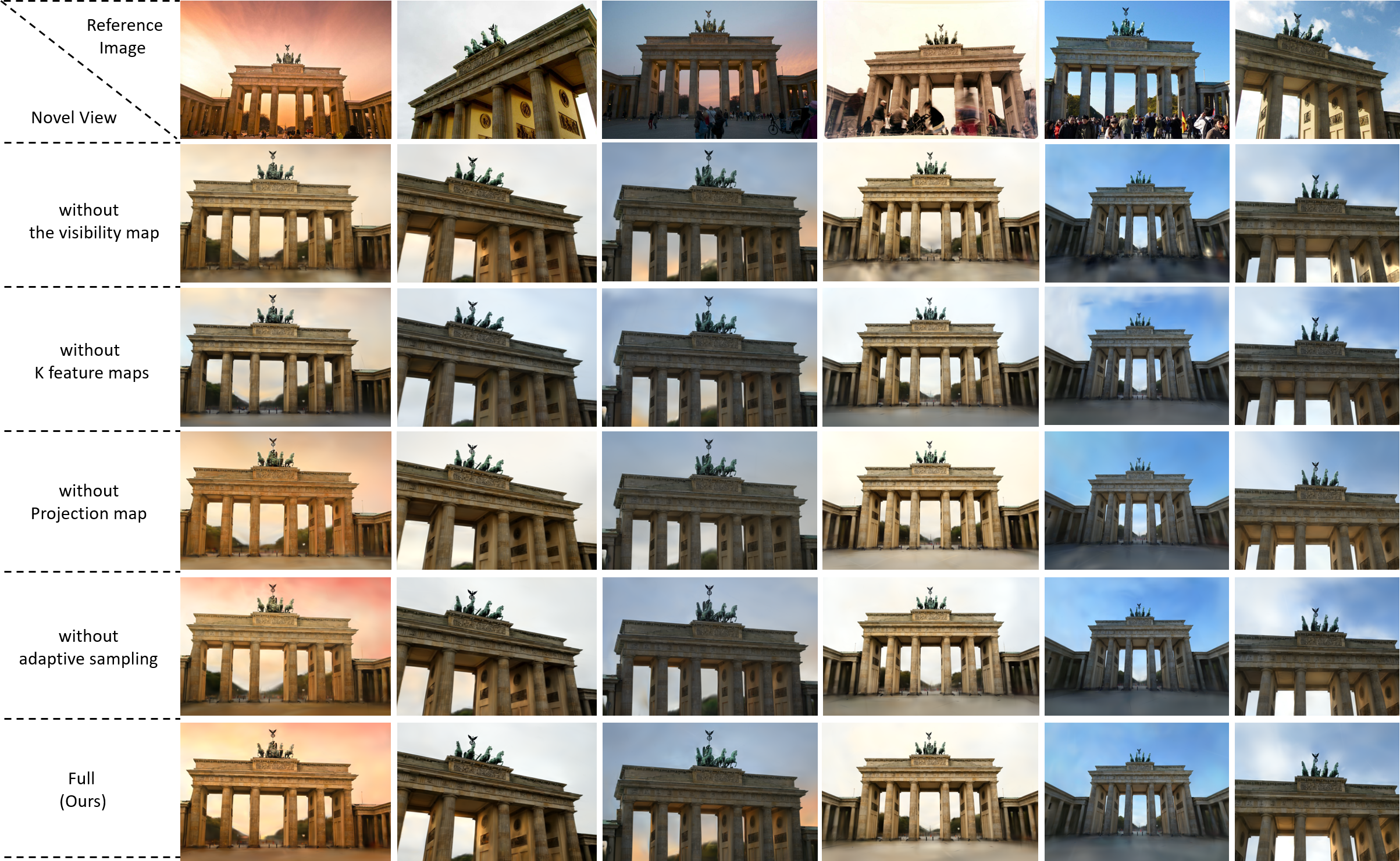

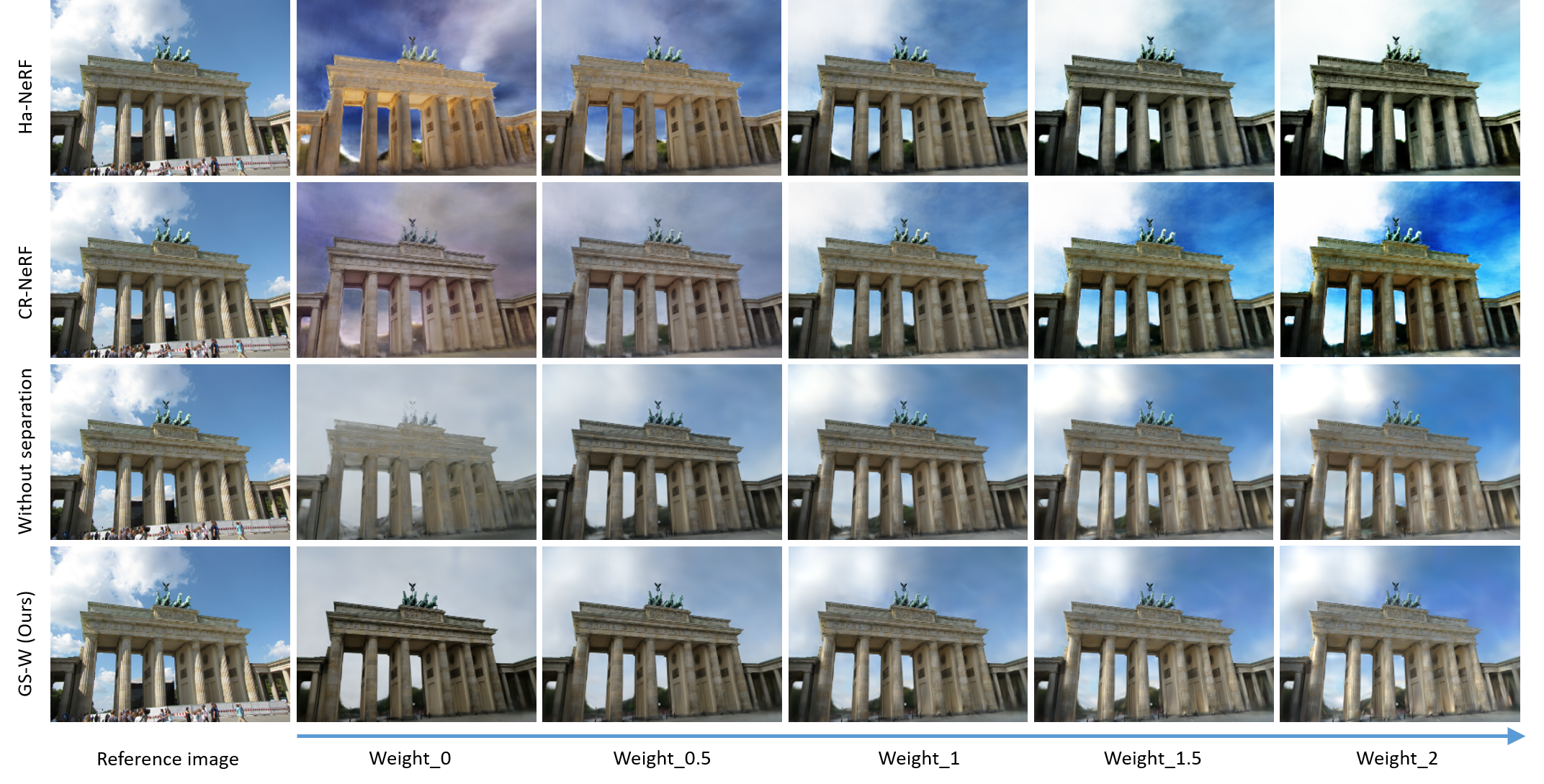

5.4 Appearance tuning experiment

Since we explicitly model the scene’s appearance as unchanged intrinsic and varying dynamic features, we can adjust the impact of dynamic appearance features on intrinsic appearance by multiplying by a proportional weight. We also compare it with Ha-NeRF and CR-NeRF by applying the same weights to extracted image features. Qualitative results are shown in Fig. 5. Ha-NeRF and CR-NeRF show odd coloration in the sky and buildings at low weights, while at high weights, they fail to capture the detailed highlights and enhance the style of sufficient illumination, resulting in darker building colors. In contrast, our method aligns more closely with human understanding of the physical world. As the weight increases from small to large, our method gradually applies extracted environmental impacts to the scene, e.g. appearing highlights on pillars and enhancing illumination. This demonstrates the importance of dynamic features in capturing environmental information and explicitly separating intrinsic and dynamic appearances aid the model in clearly learning and distinguishing between the two, thereby achieving more flexible tuning over the scene’s appearance.

5.5 Limitations

While GS-W outperforms previous methods, it still has limitations. It struggles with complex lighting variations, specular reflections, and accurately reconstructing textures in frequently occluded scenes, like the floor texture in the Brandenburg Gate scene. Additionally, it assumes known image poses when incorporating appearance information from reference images. Future research may focus on developing new appearance modeling techniques to address these issues.

6 Conclusion

In this paper, we introduce GS-W, a method for reconstructing scenes from unconstrained image collections. Using 3D Gaussian points as 3D representation, we introduce separated intrinsic and dynamic appearance features for each point to effectively model scene appearance. We propose an adaptive sampling strategy to capture local environmental factors like highlights and utilize a visibility map to handle transient objects. Our approach outperforms previous NeRF-based methods by providing better extraction of dynamic environmental impacts from images and addressing slow rendering speeds. Experimental results demonstrate the superiority and efficiency of our method compared to previous approaches.

References

- [1] Barron, J.T., Mildenhall, B., Tancik, M., Hedman, P., Martin-Brualla, R., Srinivasan, P.P.: Mip-nerf: A multiscale representation for anti-aliasing neural radiance fields. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 5855–5864 (2021)

- [2] Barron, J.T., Mildenhall, B., Verbin, D., Srinivasan, P.P., Hedman, P.: Mip-nerf 360: Unbounded anti-aliased neural radiance fields. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 5470–5479 (2022)

- [3] Cao, A., Johnson, J.: Hexplane: A fast representation for dynamic scenes. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 130–141 (2023)

- [4] Chan, E.R., Lin, C.Z., Chan, M.A., Nagano, K., Pan, B., De Mello, S., Gallo, O., Guibas, L.J., Tremblay, J., Khamis, S., et al.: Efficient geometry-aware 3d generative adversarial networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 16123–16133 (2022)

- [5] Chen, A., Xu, Z., Geiger, A., Yu, J., Su, H.: Tensorf: Tensorial radiance fields. In: European Conference on Computer Vision. pp. 333–350. Springer (2022)

- [6] Chen, X., Zhang, Q., Li, X., Chen, Y., Feng, Y., Wang, X., Wang, J.: Hallucinated neural radiance fields in the wild. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 12943–12952 (2022)

- [7] Deng, K., Liu, A., Zhu, J.Y., Ramanan, D.: Depth-supervised nerf: Fewer views and faster training for free. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 12882–12891 (2022)

- [8] Engelhardt, A., Raj, A., Boss, M., Zhang, Y., Kar, A., Li, Y., Sun, D., Brualla, R.M., Barron, J.T., Lensch, H., et al.: Shinobi: Shape and illumination using neural object decomposition via brdf optimization in-the-wild. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 19636–19646 (2024)

- [9] Fan, Z., Wang, K., Wen, K., Zhu, Z., Xu, D., Wang, Z.: Lightgaussian: Unbounded 3d gaussian compression with 15x reduction and 200+ fps. arXiv preprint arXiv:2311.17245 (2023)

- [10] Fridovich-Keil, S., Meanti, G., Warburg, F.R., Recht, B., Kanazawa, A.: K-planes: Explicit radiance fields in space, time, and appearance. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 12479–12488 (2023)

- [11] Fridovich-Keil, S., Yu, A., Tancik, M., Chen, Q., Recht, B., Kanazawa, A.: Plenoxels: Radiance fields without neural networks. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 5501–5510 (2022)

- [12] Garbin, S.J., Kowalski, M., Johnson, M., Shotton, J., Valentin, J.: Fastnerf: High-fidelity neural rendering at 200fps. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 14346–14355 (2021)

- [13] He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 770–778 (2016)

- [14] Kanazawa, A., Tulsiani, S., Efros, A.A., Malik, J.: Learning category-specific mesh reconstruction from image collections. In: Proceedings of the European Conference on Computer Vision (ECCV). pp. 371–386 (2018)

- [15] Kato, H., Ushiku, Y., Harada, T.: Neural 3d mesh renderer. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 3907–3916 (2018)

- [16] Kerbl, B., Kopanas, G., Leimkühler, T., Drettakis, G.: 3d gaussian splatting for real-time radiance field rendering. ACM Transactions on Graphics 42(4) (2023)

- [17] Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

- [18] Kuang, Z., Olszewski, K., Chai, M., Huang, Z., Achlioptas, P., Tulyakov, S.: Neroic: Neural rendering of objects from online image collections. ACM Transactions on Graphics (TOG) 41(4), 1–12 (2022)

- [19] Li, P., Wang, S., Yang, C., Liu, B., Qiu, W., Wang, H.: Nerf-ms: Neural radiance fields with multi-sequence. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 18591–18600 (2023)

- [20] Li, Z., Xian, W., Davis, A., Snavely, N.: Crowdsampling the plenoptic function. In: Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part I 16. pp. 178–196. Springer (2020)

- [21] Lin, H., Wang, Q., Cai, R., Peng, S., Averbuch-Elor, H., Zhou, X., Snavely, N.: Neural scene chronology. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 20752–20761 (2023)

- [22] Lu, T., Yu, M., Xu, L., Xiangli, Y., Wang, L., Lin, D., Dai, B.: Scaffold-gs: Structured 3d gaussians for view-adaptive rendering. arXiv preprint arXiv:2312.00109 (2023)

- [23] Martin-Brualla, R., Radwan, N., Sajjadi, M.S., Barron, J.T., Dosovitskiy, A., Duckworth, D.: Nerf in the wild: Neural radiance fields for unconstrained photo collections. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 7210–7219 (2021)

- [24] Mescheder, L., Oechsle, M., Niemeyer, M., Nowozin, S., Geiger, A.: Occupancy networks: Learning 3d reconstruction in function space. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 4460–4470 (2019)

- [25] Meshry, M., Goldman, D.B., Khamis, S., Hoppe, H., Pandey, R., Snavely, N., Martin-Brualla, R.: Neural rerendering in the wild. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 6878–6887 (2019)

- [26] Mildenhall, B., Srinivasan, P.P., Tancik, M., Barron, J.T., Ramamoorthi, R., Ng, R.: Nerf: Representing scenes as neural radiance fields for view synthesis. Communications of the ACM 65(1), 99–106 (2021)

- [27] Müller, T., Evans, A., Schied, C., Keller, A.: Instant neural graphics primitives with a multiresolution hash encoding. ACM Transactions on Graphics (ToG) 41(4), 1–15 (2022)

- [28] Niemeyer, M., Barron, J.T., Mildenhall, B., Sajjadi, M.S., Geiger, A., Radwan, N.: Regnerf: Regularizing neural radiance fields for view synthesis from sparse inputs. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 5480–5490 (2022)

- [29] Park, J.J., Florence, P., Straub, J., Newcombe, R., Lovegrove, S.: Deepsdf: Learning continuous signed distance functions for shape representation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 165–174 (2019)

- [30] Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., et al.: Pytorch: An imperative style, high-performance deep learning library. Advances in neural information processing systems 32 (2019)

- [31] Qi, C.R., Su, H., Mo, K., Guibas, L.J.: Pointnet: Deep learning on point sets for 3d classification and segmentation. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 652–660 (2017)

- [32] Qi, C.R., Yi, L., Su, H., Guibas, L.J.: Pointnet++: Deep hierarchical feature learning on point sets in a metric space. Advances in neural information processing systems 30 (2017)

- [33] Qin, M., Li, W., Zhou, J., Wang, H., Pfister, H.: Langsplat: 3d language gaussian splatting. arXiv preprint arXiv:2312.16084 (2023)

- [34] Reiser, C., Peng, S., Liao, Y., Geiger, A.: Kilonerf: Speeding up neural radiance fields with thousands of tiny mlps. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 14335–14345 (2021)

- [35] Ronneberger, O., Fischer, P., Brox, T.: U-net: Convolutional networks for biomedical image segmentation. In: Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18. pp. 234–241. Springer (2015)

- [36] Rudnev, V., Elgharib, M., Smith, W., Liu, L., Golyanik, V., Theobalt, C.: Nerf for outdoor scene relighting. In: European Conference on Computer Vision. pp. 615–631. Springer (2022)

- [37] Schonberger, J.L., Frahm, J.M.: Structure-from-motion revisited. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 4104–4113 (2016)

- [38] Schwarz, K., Sauer, A., Niemeyer, M., Liao, Y., Geiger, A.: Voxgraf: Fast 3d-aware image synthesis with sparse voxel grids. Advances in Neural Information Processing Systems 35, 33999–34011 (2022)

- [39] Shao, R., Zheng, Z., Tu, H., Liu, B., Zhang, H., Liu, Y.: Tensor4d: Efficient neural 4d decomposition for high-fidelity dynamic reconstruction and rendering. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 16632–16642 (2023)

- [40] Shi, S., Guo, C., Jiang, L., Wang, Z., Shi, J., Wang, X., Li, H.: Pv-rcnn: Point-voxel feature set abstraction for 3d object detection. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition. pp. 10529–10538 (2020)

- [41] Tancik, M., Casser, V., Yan, X., Pradhan, S., Mildenhall, B., Srinivasan, P.P., Barron, J.T., Kretzschmar, H.: Block-nerf: Scalable large scene neural view synthesis. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 8248–8258 (2022)

- [42] Verbin, D., Hedman, P., Mildenhall, B., Zickler, T., Barron, J.T., Srinivasan, P.P.: Ref-nerf: Structured view-dependent appearance for neural radiance fields. In: 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 5481–5490. IEEE (2022)

- [43] Wang, G., Chen, Z., Loy, C.C., Liu, Z.: Sparsenerf: Distilling depth ranking for few-shot novel view synthesis. arXiv preprint arXiv:2303.16196 (2023)

- [44] Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE transactions on image processing 13(4), 600–612 (2004)

- [45] Wen, C., Zhang, Y., Li, Z., Fu, Y.: Pixel2mesh++: Multi-view 3d mesh generation via deformation. In: Proceedings of the IEEE/CVF international conference on computer vision. pp. 1042–1051 (2019)

- [46] Wu, G., Yi, T., Fang, J., Xie, L., Zhang, X., Wei, W., Liu, W., Tian, Q., Wang, X.: 4d gaussian splatting for real-time dynamic scene rendering. arXiv preprint arXiv:2310.08528 (2023)

- [47] Wu, Z., Song, S., Khosla, A., Yu, F., Zhang, L., Tang, X., Xiao, J.: 3d shapenets: A deep representation for volumetric shapes. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 1912–1920 (2015)

- [48] Xu, Y., Peng, S., Yang, C., Shen, Y., Zhou, B.: 3d-aware image synthesis via learning structural and textural representations. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 18430–18439 (2022)

- [49] Yang, J., Pavone, M., Wang, Y.: Freenerf: Improving few-shot neural rendering with free frequency regularization. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 8254–8263 (2023)

- [50] Yang, Y., Zhang, S., Huang, Z., Zhang, Y., Tan, M.: Cross-ray neural radiance fields for novel-view synthesis from unconstrained image collections. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 15901–15911 (2023)

- [51] Yang, Z., Gao, X., Sun, Y., Huang, Y., Lyu, X., Zhou, W., Jiao, S., Qi, X., Jin, X.: Spec-gaussian: Anisotropic view-dependent appearance for 3d gaussian splatting. arXiv preprint arXiv:2402.15870 (2024)

- [52] Yi, T., Fang, J., Wu, G., Xie, L., Zhang, X., Liu, W., Tian, Q., Wang, X.: Gaussiandreamer: Fast generation from text to 3d gaussian splatting with point cloud priors. arXiv preprint arXiv:2310.08529 (2023)

- [53] Yu, A., Li, R., Tancik, M., Li, H., Ng, R., Kanazawa, A.: Plenoctrees for real-time rendering of neural radiance fields. In: Proceedings of the IEEE/CVF International Conference on Computer Vision. pp. 5752–5761 (2021)

- [54] Yu, Z., Chen, A., Huang, B., Sattler, T., Geiger, A.: Mip-splatting: Alias-free 3d gaussian splatting. arXiv preprint arXiv:2311.16493 (2023)

- [55] Zhang, J., Yang, G., Tulsiani, S., Ramanan, D.: Ners: Neural reflectance surfaces for sparse-view 3d reconstruction in the wild. Advances in Neural Information Processing Systems 34, 29835–29847 (2021)

- [56] Zhang, R., Isola, P., Efros, A.A., Shechtman, E., Wang, O.: The unreasonable effectiveness of deep features as a perceptual metric. In: Proceedings of the IEEE conference on computer vision and pattern recognition. pp. 586–595 (2018)

Supplementary material for “Gaussian in the Wild”

A Video demo

We strongly recommend readers to watch the video demo provided in webpage. The video showcases the novel view synthesis achieved by GS-W across various scenes, based on different reference images. It also illustrates the variations in scene appearance attained through the interpolation of different dynamic features . Additionally, we provide a visual comparison between GS-W and CR-NeRF in terms of novel view synthesis and appearance tuning. The video illustrates GS-W’s superior reconstruction of scene geometry and precise capture of environmental factors from the reference images, maintaining multi-view consistency. Furthermore, it highlights GS-W’s ability to adjust scene appearance in a manner more aligned with human perception by weighting the features extracted from the reference images.

B More implementation details

B.1 Model parameters

Below, we provide a detailed overview of the network parameters and other hyperparameter settings in GS-W. The Unet’s encoder utilizes the first 8 sub-convolution modules from a pre-trained ResNet-18 to extract image features. On the other hand, the decoder for generating feature maps comprises 4 up-sampling convolution modules, along with a decoder including 3 up-sampling convolution modules for producing the visibility map. The two decoder modules are both equipped with batch normalization and ReLU activation functions. To reduce computation, there are no skip connections between the encoder and the visibility map generating decoder during the forward process. Transpose convolution is employed for up-sampling, with the number of channels for each feature map fixed at 16. The learning rate for the Unet network gradually decreases from to .

The Fusion network consists of two MLP modules, each with 3 and 2 hidden layers, and each with hidden unit counts of and , respectively. Meanwhile, the MLP for color decoding comprises only one hidden layer with 48 units. Xavier initialization is used for initializing the weight parameters of these MLPs. During the forward process, we use 10 frequencies to encode position. For training, random dropout with 0.1 probability is applied to the dynamic appearance features to prevent overfitting, and the learning rate for training these MLPs is set to . The dimension of the intrinsic feature is set to 48.

Furthermore, the learning rate for Gaussian point positions decreases from to . Gaussian points are densified from 500 iterations to 15k iterations during training, with a gradient threshold set to . Other hyperparameters are set according to the guidelines of 3DGS.

B.2 Initialization of sampling coordinates

In Sec. 4.2, we equip each Gaussian point with K learnable sampling coordinate attributes to enable it to adaptively sample features on K feature maps. Specifically, here we describe how to initialize them before training. To ensure the points close to each other have similar initial sampling coordinates, we utilize linear transformation to convert the position of each Gaussian point into K sampling coordinates. First, we randomly generate K matrices , while ensuring that the sum of each matrix’s rows is 1. Then, we multiply these matrices with each Gaussian point’s position to obtain the initial values of these K sampling coordinates, as follows:

| (15) |

| PSNR | SSIM | LPIPS | FPS | Size(MB) | |

|---|---|---|---|---|---|

| global feature | 22.43 | 0.8555 | 0.1349 | 56.4 | 123.0 |

| K-planes-hybrid | 22.40 | 0.7604 | 0.2731 | 0.67 | 133.3 |

| Ours | 24.70 | 0.8655 | 0.1242 | 50.7 | 122.7 |

| Sacre Coeur | |||||

|---|---|---|---|---|---|

| PSNR | SSIM | LPIPS | FPS | Size(MB) | |

| K=1 | 23.08 | 0.8598 | 0.1318 | 60.3 | 93.8 |

| K=2 | 23.13 | 0.8590 | 0.1334 | 59.6 | 96.1 |

| K=3 | 23.24 | 0.8632 | 0.1300 | 58.3 | 97.2 |

| K=4 | 23.02 | 0.8616 | 0.1303 | 58.0 | 98.2 |

| K=8 | 22.94 | 0.8618 | 0.1296 | 55.8 | 101.8 |

| K=16 | 22.98 | 0.8611 | 0.1321 | 51.8 | 113.9 |

| Synthetic Lego Dataset | |||

|---|---|---|---|

| PSNR | SSIM | LPIPS | |

| 3DGS | 23.73 | 0.9250 | 0.0748 |

| NeRF-W | 26.76 | 0.9224 | 0.0552 |

| Ha-NeRF | 26.51 | 0.9416 | 0.0339 |

| GS-W (Ours) | 29.64 | 0.9522 | 0.0323 |

C Further experiments

C.1 More comparison

Global appearance feature. To highlight the superiority of independently sampled dynamic appearance features over a single global feature, We replace each point’s dynamic appearance feature with a global one extracted from the reference image. As shown in Tab. 4 and Fig. 6, the global feature method achieves an inferior result and fails to recover local appearance details on the pillars and sky.

K-Planes. Aiming to further demonstrate the advantages of our method in rendering quality and speed, we compared it with K-Planes[10]. Unlike NeRF-based methods, K-Planes uses a hybrid 3D representation that achieves lower storage and faster rendering. Quantitative results, including metrics for storage and rendering efficiency, along with qualitative results, are presented in Tab. 4,Tab. 7,Fig. 6 and Fig. 9. Our method continues to achieve superior rendering quality and speed in comparison.

C.2 K value selection

In our method statement, K represents the number of feature maps used for sampling. We conducted experiments under the Sacre Coeur scene to determine an effective K, setting K to 1, 2, 3, 4, 8, and 16 respectively. The experimental results are presented in Tab. 5. We observe that the performance on the test set does not improve when K exceeds 3 while bringing more computation cost. Thus, we reasonably set K to 3.

C.3 More appearance tuning results

In Fig. 7, we present additional qualitative comparison results for appearance tuning. From the figure, it’s fair to conclude that our method applies the environmental factors from the reference images to the scene more reasonably, gradually increasing their influence. In the first example from top to bottom, the colors of the buildings become strange as the weight increases in the other two methods which is against practical human understanding. In the second example, our method captures the highlights on the pillars better and enhances them as the weight increases.

| stjohann | lwp | st | europa | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| PSNR | SSIM | LPIPS | PSNR | SSIM | LPIPS | PSNR | SSIM | LPIPS | PSNR | SSIM | LPIPS | |

| 3DGS | 17.32 | 0.7430 | 0.313 | 15.43 | 0.7000 | 0.3360 | 15.23 | 0.5920 | 0.4240 | 16.32 | 0.6520 | 0.3270 |

| K-planes-hybrid | 20.39 | 0.7548 | 0.3366 | 21.65 | 0.7482 | 0.3397 | 19.66 | 0.6151 | 0.4361 | 18.75 | 0.6487 | 0.4549 |

| NeRF-W | 21.38 | 0.8200 | 0.1940 | 21.29 | 0.7610 | 0.2950 | 19.68 | 0.6310 | 0.4010 | 19.55 | 0.6870 | 0.3470 |

| Ha-NeRF | 19.93 | 0.7870 | 0.2100 | 21.32 | 0.7560 | 0.2780 | 20.56 | 0.6360 | 0.3780 | 18.76 | 0.6610 | 0.3480 |

| CR-NeRF | 22.27 | 0.8350 | 0.1750 | 22.61 | 0.7850 | 0.2580 | 21.67 | 0.6610 | 0.3600 | 19.92 | 0.6960 | 0.3100 |

| GS-W (Ours) | 26.23 | 0.8927 | 0.1133 | 24.44 | 0.8272 | 0.2082 | 22.45 | 0.6900 | 0.3147 | 22.04 | 0.7577 | 0.2309 |

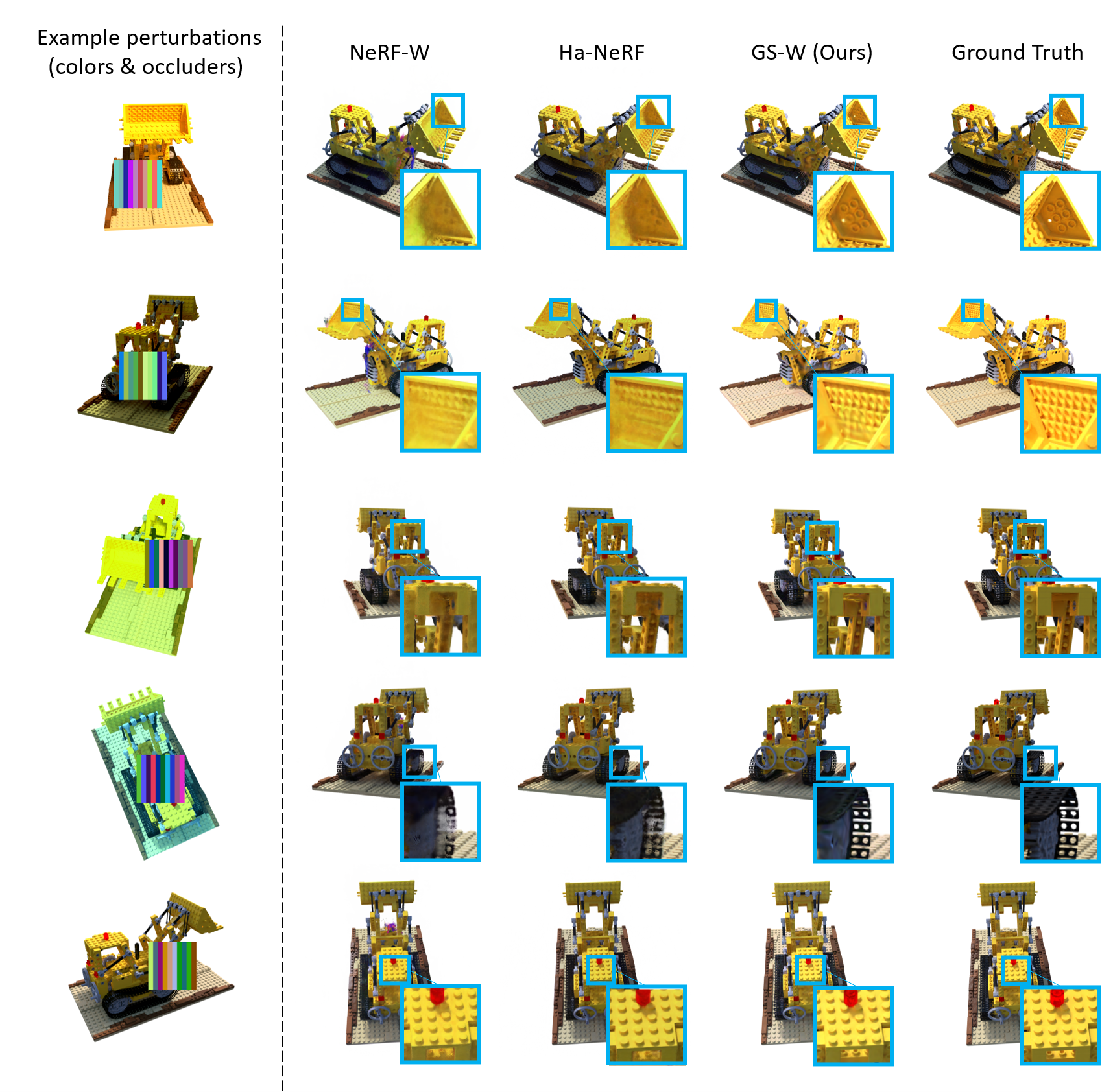

C.4 Synthetic Lego dataset

Following NeRF-W and Ha-NeRF, we further compared GS-W with them on the synthetic Lego dataset to validate our method’s effectiveness. To ensure a fair comparison, we followed their setup by manually introducing perturbations such as colors and occluders in the training set of the synthetic Lego dataset to simulate scenarios that might be encountered in the wild, as the first column in Fig. 8. The training set comprises 100 images, while the test set consists of 200 images. During testing, only a single unperturbed training image is used as the reference image to extract features. Subsequently, novel views of the test images are rendered, and metrics are calculated against the test images.

As CR-NeRF does not provide hyperparameter settings for this dataset, the attempts to use its default parameters from other datasets result in training crashes and produce poor and peculiar results. Therefore, we only compare with NeRF-W, Ha-NeRF, and 3DGS on this dataset. Due to the limited size of the Lego training dataset and each view being influenced by a fixed color, we decode color using position instead of view direction to prevent dependency on the latter in appearance modeling. During training, without initialized point clouds, we adjust the gradient threshold for Gaussian point densification to to allow the model to generate more valid points. Other hyperparameters remain the same, with training 20k iterations.

Quantitative and qualitative results are presented in Tab. 6 and Fig. 8, respectively. We can observe that 3DGS performs poorly while our method performs the best under scenarios with added color and occluder perturbations. This testing process, conducted on novel viewpoints rather than on the original reference image viewpoints, also partially validates the multi-view consistency of GS-W and its ability to generalize single-image features to new viewpoints.

C.5 NeRF-OSR dataset

We further evaluate the robustness of GS-W using the NeRF-OSR dataset[36]. We experiment with four scenes - europa, lwp, st, and stjohann - with 12.5% of images from each sequence as the test set. Each training set contains approximately 350 images, and each test set contains about 50 images.

We compared our method with NeRF-W, Ha-NeRF, CR-NeRF, 3DGS, and K-Planes, using their default settings. Evaluation results on the test set, shown in Tab. 7 and Fig. 9, demonstrate that our method consistently achieves the best performance. Other methods struggle to capture local details, and 3DGS fails to handle variations in scene appearance and transient occlusions. In contrast, our method accurately captures local details and effectively handles transient occlusions.

Despite our method achieving good performance, this dataset, not sourced from the internet, features limited appearance variations per scene. Consequently, the reconstructed scenes may exhibit inconsistencies in appearance across different viewpoints. In the future, training a more generalized dynamic appearance extractor across multiple scenes could effectively address and improve this issue.

D Style transfer

Similar to CR-NeRF, our method can also transfer style from images to the scene, as shown in Fig. 10. However, for style transfer across scenes or from unseen images, the absence of camera poses from the provided reference images makes it difficult to map Gaussian points to the projection feature map for sampling. Meanwhile, in style transfer, there lack of physical significance to using the projection sampling method. Therefore, when performing style transfer from unseen images, we set all features sampled from the projection feature map to 0. The results demonstrate that our method can effectively transfer the style provided by reference images to the scene.