GATSBI: An Online GTSP-Based Algorithm

for Targeted Surface Bridge Inspection

Abstract

We study the problem of visual surface inspection of a bridge for defects using an Unmanned Aerial Vehicle (UAV). We do not assume that the geometric model of the bridge is known beforehand. Our planner, termed GATSBI, plans a path in a receding horizon fashion to inspect all points on the surface of the bridge. The input to GATSBI consists of a 3D occupancy map created online with LiDAR scans. Occupied voxels corresponding to the bridge in this map are semantically segmented and used to create a bridge-only occupancy map. Inspecting a bridge voxel requires the UAV to take images from a desired viewing angle and distance. We then create a Generalized Traveling Salesperson Problem (GTSP) instance to cluster candidate viewpoints for inspecting the bridge voxels and use an off-the-shelf GTSP solver to find the optimal path for the given instance. As the algorithm sees more parts of the environment over time, it replans the path to inspect novel parts of the bridge while avoiding obstacles. We evaluate the performance of our algorithm through high-fidelity simulations conducted in AirSim and real-world experiments. We compare the performance of GATSBI with a classical exploration algorithm. Our evaluation reveals that targeting the inspection to only the segmented bridge voxels and planning carefully using a GTSP solver leads to a more efficient and thorough inspection than the baseline algorithm.

I Introduction

In this work, we are interested in designing a high-level planner that inspects a 3D surface, i.e., a bridge for identifying visual defects. Inspection is closely related to coverage and exploration, which are problems that have been well-studied in the literature. However, as we will show, coverage and exploration are not necessarily the best approaches for inspection. Given a 3D model of the environment (including the bridge), we can find a coverage path that covers all points on the bridge using an offline planner [1]. In practice, we often do not have any prior model of the layout of the bridges. Even if a prior 3D model is available, it may be inaccurate due to changes in the environment surrounding the bridge as well as structural changes made to the bridge. In this work, we address the problem of designing targeted inspection plans as the 3D model of the environment is built online.

Recently, a number of commercial solutions such as the ones from Skydio [2] and Exyn [3] and ongoing work in academia provide robust autonomy including SLAM and low-level planning (how to navigate from point A to point B). Our work on high-level planning (determining what the next waypoint B should be) is complementary to these works. Current forms of planning mostly consist of someone clicking on waypoints for the UAVs to fly to. As a result, we develop tools that autonomously solve the more general problem of inspecting a bridge with no prior information about its geometry.

Frontier-based strategies [4] are typically used for exploring an initially unknown environment. A frontier is a boundary between explored and unexplored regions. The strategy chooses which of the frontiers to visit (and which path to follow to get to the chosen frontier) to help speed up exploration. The algorithm terminates when there are no more accessible unexplored regions. A bounding box placed around the bridge can restrict exploration when operating in an open environment. Exploring the bridge does not necessarily mean that the UAV will get inspection-quality images. Instead, an inspection planner that can take into account viewing and distance constraints may be more efficient. We present such a planner, termed GTSP-Based Algorithm for Targeted Surface Bridge Inspection (GATSBI), and show that it outperforms the frontier-based exploration strategies in efficiently inspecting the bridge (Section V).

GATSBI consists of four modules: 3D occupancy grid mapping using LiDAR data, a semantic segmentation algorithm to find LiDAR points that correspond to the surface of the bridge, a Generalized Traveling Salesperson Problem (GTSP) solver for finding inspection paths for the UAV, and a navigation algorithm for executing the planned path. We use off-the-shelf modules for occupancy grid mapping (OctoMap [5]), solving GTSP (GLNS [6]), and point-to-point navigation (MoveIt [7]). Our key algorithmic contribution is to show how to reduce the inspection problem to a GTSP instance and the full pipeline that outperforms baseline strategies. Our technique takes into account overlapping viewpoints that can view the same parts of the bridge and simultaneously selects where to take images and what order to visit those viewpoints. We also show when and how to replan as we obtain more information about the environment.

II Related Work

As described earlier, frontier-based exploration is a widely-used method for 3D exploration of unknown environments [8, 9, 10]. Other works proposed variants of frontier exploration focused on choosing the next frontier to visit [11, 12]. Another popular approach is to model the exploration problem as one of information gathering and choose a path (or a frontier) that maximizes the information gain [13, 14]. Additionally, there are Next-Best-View approaches [15] that, as the name suggests, plan the next-best location to take an image from to explore the environment. We refer the reader to a recent, comprehensive survey on multi-robot exploration by Li [16] that covers a variety of exploration strategies.

As shown in our simulations, generic exploration strategies are inefficient when performing targeted inspection (e.g., bridge inspection). There has been work on designing inspection algorithms that plan paths that take into account the viewpoint considerations [17, 18, 19, 20]. When prior information is available, one can plan inspection paths carefully by considering the geometric model of the environment. Typically, algorithms use prior information, such as a low-resolution version of the environment, to create an inspection path and obtain high-resolution measurements of the environment [17, 19]. Unlike these works, we consider a scenario where the robot has no prior environmental information and must plan using incrementally revealed data.

Bircher et al. [21] presented a receding horizon planner for exploration and inspection. Both algorithms use a Rapidly-Exploring Random Tree to generate a set of candidate paths in the known, free space of the environment. Then the algorithm selects a path based on a criterion that values how much information a path gains about the environment. The planner uses a receding horizon algorithm repeatedly invoked with new information. We follow a similar approach; however, their algorithm knows the inspection surface a priori, while our work only requires the UAV to see a portion of the bridge at the start of inspection. The environment is not known for their exploration algorithm but it is known for their inspection algorithm. GATSBI has no prior knowledge about the environment and minimal knowledge of the target inspection surface. Furthermore, we cluster potential viewpoints using GTSP which leads to further efficiency.

Song et al. [20] recently proposed an online algorithm that consists of a high-level coverage planner and a low-level inspection planner. The low-level planner takes into account the viewpoint constraints and chooses a local path that gains additional information about the structure under inspection. Our work differentiates by guaranteeing the quality of inspection, not requiring a bounding box around the target infrastructure, and segmenting the infrastructure of interest from the environment which guarantees inspection of only the target infrastructure.

In summary, we make the following contributions:

-

•

Present a receding horizon algorithm, GATSBI, that plans paths to efficiently inspect bridges when no prior information about them is present;

-

•

Demonstrate that GATSBI outperforms a baseline frontier-based exploration 15x at bridge surface inspection and 17x at crack detection through numerous simulations in AirSim using 3D models of bridges;

-

•

Validate the practical feasibility with experiments on a mock bridge and a simplified version of GATSBI;

-

•

Provide ROS packages that integrate MoveIt with AirSim for simulations and DJI’s SDK for real-world experiments.111https://github.com/raaslab/GATSBI

III Problem Formulation

We describe the overarching problem below.

Problem 1

Given a UAV with a 3D LiDAR and RGB camera, find a path to inspect every point on the bridge surface to minimize the total flight distance.

We consider the scenario where the geometric model of the bridge is unknown a priori. We assume that the UAV starts the algorithm at a location where at least some part of the bridge is visible. If this is not the case, we can run a frontier exploration strategy until the bridge is visible. We then plan an inspection path for the part of the bridge that is visible. As the UAV sees more of the bridge, we replan to find a better tour in a receding horizon fashion. Problem 2 (defined shortly) focuses on finding a path for the UAV based on the partial information. We solve the larger Problem 1 by repeatedly solving Problem 2 as we gain new information.

We use a 3D semantic, occupancy grid built using localized pointcloud data from the 3D LiDAR to represent the model of the bridge built online. GATSBI assigns each voxel in the occupancy grid a semantic label. The label indicates whether the voxel is free space ; is occupied space, part of the bridge, and previously inspected ; is occupied, part of the bridge, but not yet inspected ; and occupied but not a bridge voxel (i.e., obstacles) . Our goal is to inspect all the voxels that correspond to the bridge surface, i.e., to ensure that .

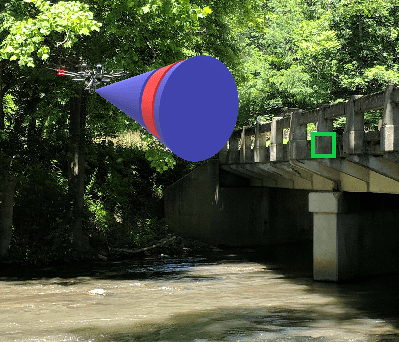

A voxel is inspected if we inspect at least one of its six faces. A face is inspected if the center of that face falls within a cone given apex angle centered at the UAV camera and within a minimum and maximum range of the UAV camera. The apex angle represents the field of view of the camera that is rigidly attached to the UAV. The viewing distance is a minimum and maximum distance range that the UAV should inspect a bridge voxel to ensure quality images for inspection. Figure 2 shows an example of these viewing constraints. For the rest of the paper, we refer to the viewing cone and distance as . The RGB camera is used to take pictures of the bridge once the UAV has reached a target point.

Problem 2

Given a 3D occupancy map consisting of four sets of voxels (), find a minimum length path that inspects every voxel in .

In the following section, we show how to model this problem as a GTSP instance.

IV The GATSBI Planner

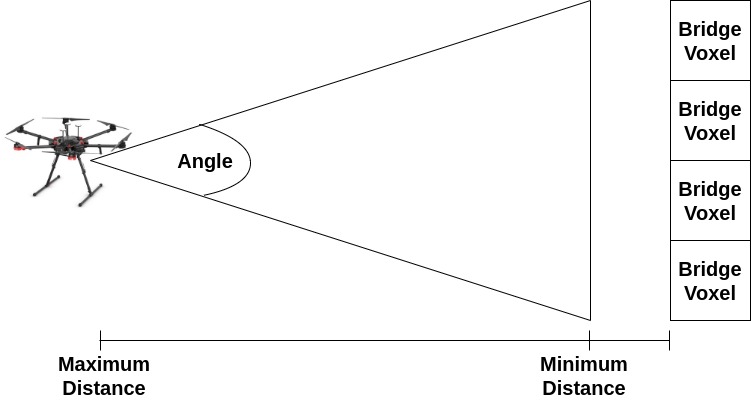

In this section, we give an overview of the GATSBI algorithm. We show the full pipeline (Fig. 3) which broadly consists of two modules: perception and planning. We describe each in detail next.

IV-A Perception

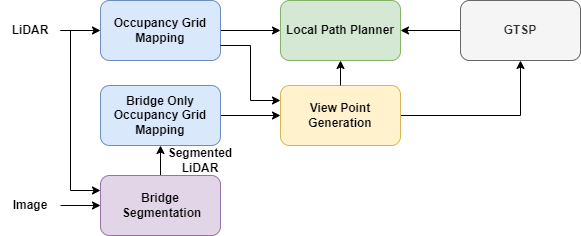

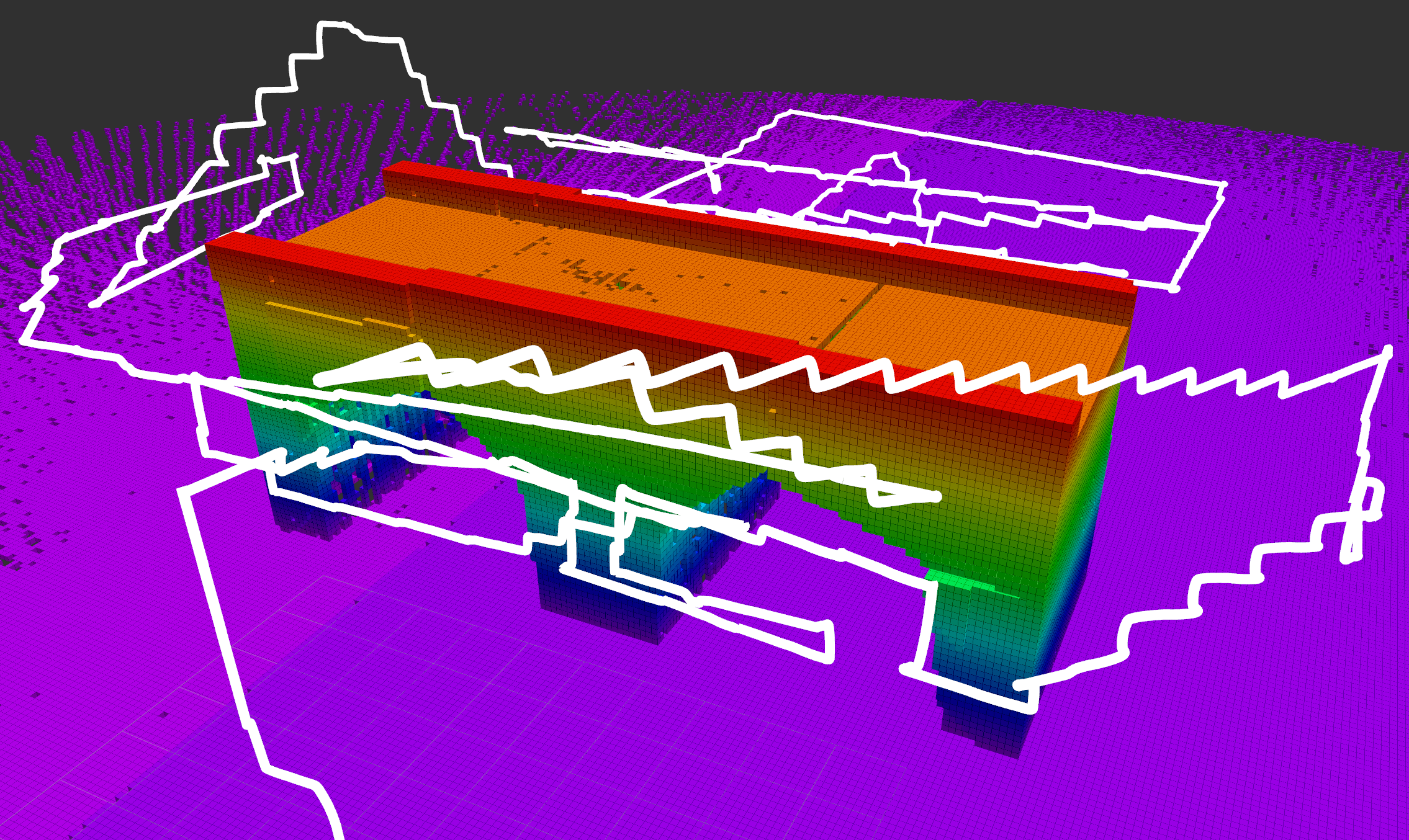

GATSBI starts by segmenting the bridge from the environment. Segmenting out the bridge allows the algorithm to differentiate between the bridge and obstacles in the environment. This allows only the bridge to be inspected as opposed to every object in the environment. The 3D LiDAR points that lie on the segmented bridge are classified as bridge points. These bridge points are then used to create a 3D occupancy grid. In parallel, the complete point cloud (segmented and non-segmented points), is used to generate an environmental 3D occupancy grid. Together, these two occupancy grids output a set of voxels: free , bridge , bridge , and obstacle . The algorithm uses the segmented voxels () to plan inspection paths. The algorithm uses the other voxels (, ) to plan collision-free paths and take into account viewing constraints. OctoMap [5] is used to generate our 3D occupancy grids. An example of the 3D occupancy grid is shown in Fig. 4.

IV-B Planner

To inspect a bridge, we need to inspect all voxels in (as described in Section III). GATSBI works in a receding horizon fashion. The set keeps track of inspected voxels. This avoids unnecessarily inspecting the same voxel more than once. Specifically, the UAV must view each voxel in from some point on its path within . We formulate this problem as a Generalized Traveling Salesperson Problem (GTSP) instance. GTSP generalizes the Traveling Salesperson Problem and is NP-Hard [6]. The input to GTSP consists of a weighted graph, , where vertices are clustered into sets. The edges of the graph are the distances between the vertices. The objective is a minimum weight tour that visits at least one vertex in each set once. Our GTSP configuration is explained next.

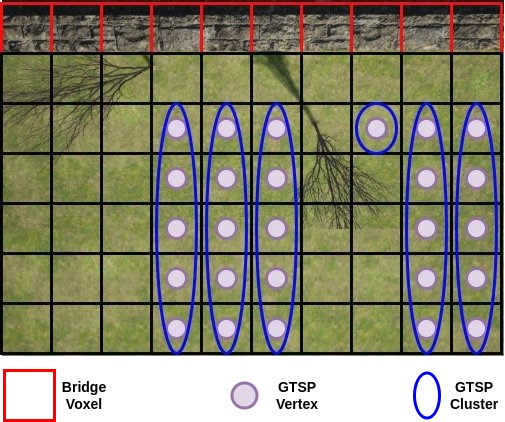

For our implementation, vertices are the center-points of voxels that the UAV can fly to and clusters are the set of all a specific can be inspected at. Each vertex in corresponds to a candidate viewpoint. We check all pairs of and to see if lies within of one of the faces of . If so, we add a vertex in the graph corresponding to the pair and .

Each free voxel that can inspect the same will add one vertex each to the cluster corresponding to . A simplified example of this graph setup is shown in Fig. 5. The GTSP tour will ensure the UAV visits at least one viewpoint in this cluster.

Next, we create an edge between every pair of vertices in . The cost for each of these edges is initially the Euclidean distance between the two vertices. With the vertices, edges, and clusters, we create a GTSP instance and use the GTSP solver, GLNS [6], to find a path for the UAV. We use GTSP as our planner because it will guarantee at least one point corresponding to every will be visited.

Before moving from one point to the next, we check the distance from the current location of the UAV to the next vertex in the GTSP path. Instead of Euclidean distance, we find the distance of the path between these points using a Rapidly-exploring random tree (RRT) connect algorithm with our environment occupancy grid. This ensures the path between these points is collision-free. We use RRT Connect to quickly find a path within a threshold as opposed to using another RRT variant such as RRT* to find the optimal path. If the difference between the RRT distance and the Euclidean distance is greater than (discrepancy distance), we update the edge costs in the GTSP instance and replan the GTSP path. We replan as needed to ensure that the first edge in the returned tour is within of the Euclidean distance. We call this a lazy evaluation of edge costs. Computing the RRT distance (which is a more accurate approximation of the actual travel distances) between every pair of vertices would be time-consuming. By checking the discrepancy lazily, we find a tour quickly while also not executing any edge where the actual distance is significantly larger than the expected distance. For our experiments, we set to be 125% of the Euclidean distance to account for some of the variances in paths generated using RRT algorithms but still allow replanning when necessary.

We keep track of each newly visited cluster during the flight. Each of these newly visited clusters corresponds to a non-inspected bridge voxel. The camera is also used to take an image at each visited point in the path to obtain inspection images. Once inspected, GATSBI moves them from set to . We execute the plan until one of two conditions is met: either a time limit () elapses, or we complete the path, whichever occurs first. We also record the raw sensor data during the flight. Once we complete navigation, we use the stored data to update the bridge inspected and non-inspected voxels and replan. Once is empty, GATSBI considers the bridge inspected and terminates. An overview of a single iteration of the GATSBI algorithm can be viewed in Algorithm 1.

V Evaluation

In this section, we evaluate the algorithm in both simulation and hardware experiments. For the simulations, we compare it against a baseline algorithm as well as discuss parameter tuning.

V-A Simulations

We present simulation results to evaluate the performance of GATSBI. We first present a qualitative example of the inspection paths produced by GATSBI. We also evaluate the computational time for three subroutines within GATSBI. Finally, we compare GATSBI with the baseline frontier-based exploration.

V-A1 Setup

We use Robot Operating System (ROS) Melodic on Ubuntu 18.04 and AirSim to carry out the simulations. We equipped the simulated UAV with a LiDAR with specifications set to match a Velodyne VLP-16 3D LiDAR and an RGB camera. The 3D LiDAR generates around 300,000 points/sec. It also has a 360∘ horizontal field of view with 15∘ vertical field of view. The VLP-16 has a range of 100m [22]. Due to the LiDAR’s orientation, the LiDAR had a 30∘ horizontal FoV and measured distances ahead, behind, and below the UAV (with respect to the UAV’s coordinate frame). We use the MoveIt [7] software package based on the work done by Köse [23] to implement the RRT connect algorithm. MoveIt uses RRT connect and the environmental 3D occupancy grid to find collision-free paths for point-to-point navigation.

For all experiments, we use a viewing cone with an apex angle of 0∘ and a viewing distance between two to ten meters. We set the viewing cone to the strictest possibility — a straight line from the camera. This is to ensure the highest quality of images captured for inspection. We set the viewing distance based on Dorafshan et al. [24], where they suggest a minimum flight distance of two meters to allow for the safe flight of the UAV.

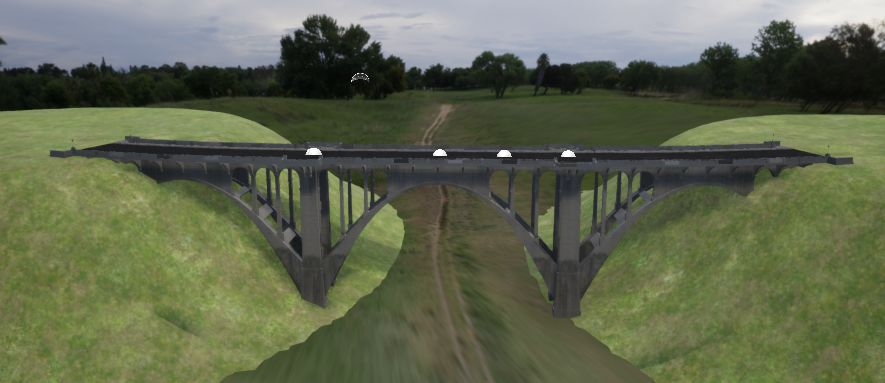

V-A2 Qualitative Example

We evaluate GATSBI on five bridges shown in Fig. 6. The five bridges used are the arch bridge, box girder bridge, covered bridge, iron bridge, and steel bridge. All bridges, except the steel bridge, do not contain any other object in the environment except for the ground. The steel bridge, on the other hand, has other obstacles such as the landscape. They were all chosen because they distinctly represent different types of bridges. Figure 7 shows the path followed by the UAV as given by GATSBI around the Arch Bridge environment. We used AirSim’s built-in segmentation algorithm to segment the bridge out from the rest of the environment. AirSim labels each LiDAR point with the segmentation color it belongs to. We modified the AirSim ROS wrapper to publish a segmented pointcloud containing points only belonging to the bridge using their built-in segmentation. The choice of the segmentation algorithm does not affect the main contribution of this paper which is the GATSBI planner. We also created a MoveIt implementation that integrates with AirSim. This package is available in our repository. Next, we present quantitative results on GATSBI.

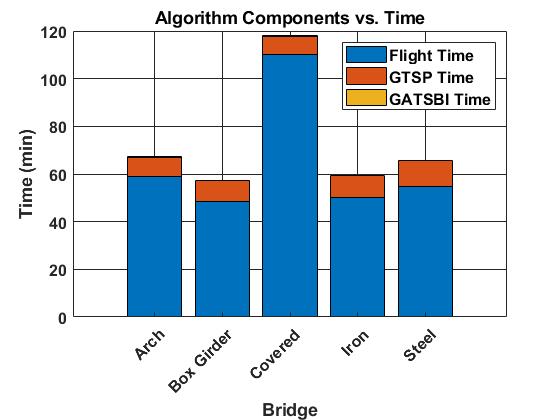

V-A3 Computational Time

We examine the time it takes for executing GATSBI. In Fig. 8, we report the average time for different components of the algorithm during all the simulations. We report three times: the time spent in the planner to create the GTSP instance (GATSBI), the time taken to solve the GTSP instance (GTSP), and the flight time before the algorithm calls the planner again (flight). We see that the time it takes GATSBI to perform segmentation and create a GTSP instance takes an average of 0.14 minutes. The GTSP solver takes an average of 8.82 minutes. Compared to the flight time (average of 64.5 minutes), the time taken by the planner is not significant. This suggests that GATSBI is not a bottleneck and is capable of running in real-time on UAVs that are executing 3D bridge inspection in unknown environments.

V-A4 Comparison with Baseline

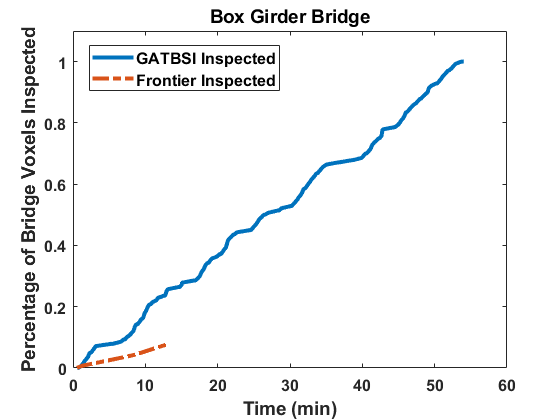

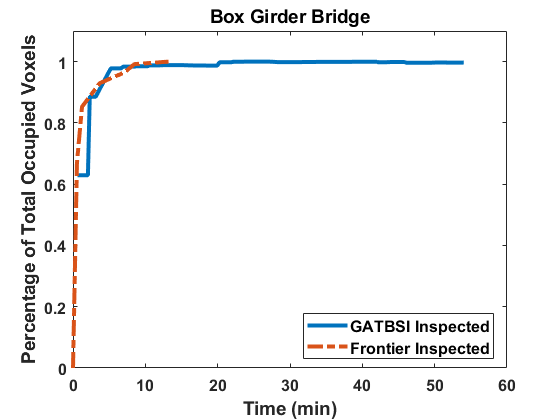

We compare the performance of GATSBI with a baseline algorithm that we developed that is based on frontier exploration. Since the baseline method does not directly count the number of inspected voxels, we implement a package on top of the baseline to count the inspected voxels. This way we compare only the inspected voxels, not the covered voxels. We can see in Fig. 9 and Table I that our method does better than the baseline method when comparing the percentage of bridge voxels inspected. We obtain this value by dividing the inspected bridge voxels () upon the termination of the algorithm by the total inspectable bridge voxels. Note, obstructed bridge voxels that have no candidate viewpoints are uninspectable.

| Bridge | Algorithm | Bridge Vox. | Inspected | Runtime |

|---|---|---|---|---|

| Arch | Frontier | 124 | 10 | 15.2 min |

| GATSBI | 124 | 44.8 min | ||

| Box Girder | Frontier | 140 | 11 | 13.2 min |

| GATSBI | 140 | 53.7 min | ||

| Covered | Frontier | 60 | 5 | 8.7 min |

| GATSBI | 60 | 69.3 min | ||

| Iron | Frontier | 167 | 6 | 8.4 min |

| GATSBI | 167 | 66.9 min | ||

| Steel | Frontier | 214 | 12 | 24.5 min |

| GATSBI | 214 | 77.3 min |

Nevertheless, we observe that GATSBI achieves inspection of 100% voxels while the baseline only achieves a maximum of 10%. Frontier exploration does not explicitly take inspection into account. As shown in the right plot in Fig. 9, it performs as well as GATSBI at exploration. The environment is also simple; in a more complicated environment, it would be better at exploration than the GATSBI algorithm. This validates our claim that GATSBI targets the inspection of bridge surfaces instead of just covering the environment. We also see that GATSBI executes a more thorough inspection than the baseline. Therefore, we justify the claim that GATSBI is more efficient in inspection compared to a frontier exploration algorithm.

V-A5 Parameter Tuning

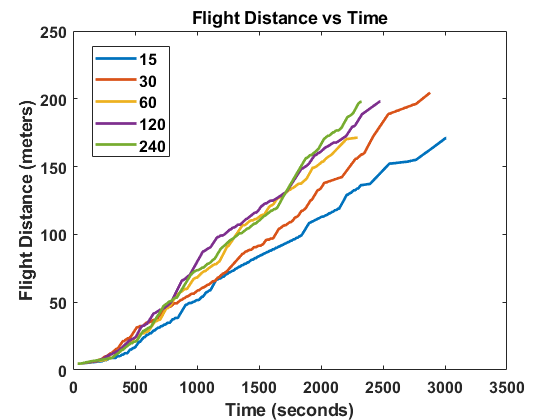

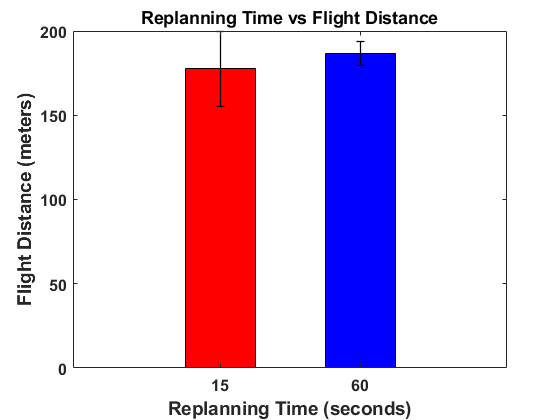

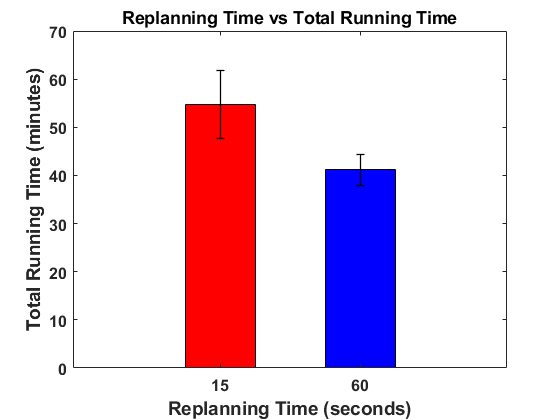

One parameter used in the algorithm is replanning time, . This time determines when to stop on the current GTSP tour if it has not been completed and replan with GATSBI using the most up-to-date environment and bridge information. Here, we discuss how we determined what to set to. Initially, we evaluated different values of for one of the bridge simulations. The results of this are shown in Fig. 10a. values of 15 and 60 seconds were then chosen for further evaluation due to them having the shortest flight distance. 5 runs each at these values were conducted using the simulation setup. Figure 10b shows the average flight distance during these 5 runs. On average, an value of 15 seconds had a slightly shorter total flight time (177.5 vs 186.5 meters) compared to an value of 60 seconds but with a higher standard deviation (22.4 vs 7.1). However, the average total runtime was longer using an value of 15 seconds (54.7 vs 41.2 min) while also having a higher standard deviation (7.0 vs 3.3) compared to an value of 60 seconds as shown in Fig. 10c. Because of this, an value of 60 seconds was used for the algorithm.

V-A6 Crack Detection

We also evaluated GATSBI on a simplified crack detection setup and compared its performance to frontier exploration. We randomly placed defects on 10% of the bridge’s surface voxels and determined how many of them were detected by both algorithms. Detected is defined as inspecting the voxel that the crack is on. This evaluation was done in an offline manner and each bridge was evaluated 10,000 times for both algorithms. The results are shown in Table II. GATSBI performed at least 11.5x better at inspecting voxels with defects than frontier exploration. The reason that GATSBI’s detection is not close to 100% is that cracks were also placed on top of the bridge and below. For our bridge models, on average 40% of the bridge surface is inspectable using a front-facing camera. Since the UAV is not equipped with an upwards and downwards-facing camera, the remaining 60% cracks are not possible to be found. However, by adding these cameras and setting up the GTSP instance accordingly, these cracks would also be detected as long as they are not obstructed. Cracks could also be blocked by obstacles in the environment making them impossible to inspect.

| Bridge | Algorithm | % Found | Std. Dev. |

|---|---|---|---|

| Arch | Frontier | 2.6% | 2.5% |

| GATSBI | 32.5% | 7.2% | |

| Box Girder | Frontier | 3.2% | 2.8% |

| GATSBI | 49.4% | 8.0% | |

| Covered | Frontier | 3.0% | 3.9% |

| GATSBI | 34.5% | 10.9% | |

| Iron | Frontier | 1.6% | 1.9% |

| GATSBI | 44.1% | 7.6% | |

| Steel | Frontier | 2.4% | 2.1% |

| GATSBI | 42.8% | 6.7% |

V-B Real-World Experiments

This section presents real-world experiments that evaluate the performance of GATSBI. Here, we show results with GATSBI since our simulated experiments demonstrated that GATSBI outperformed frontier exploration (our baseline in Section V-A4). Below, we present quantitative results that compare the computation time, flight time, and the number of voxels inspected with GATBSI.

V-B1 Setup

We performed these experiments using a DJI Matrice M600 Pro (see Fig. 11). The M600 Pro was equipped with an NVIDIA Jetson TX2 (which ran Ubuntu 16.04 and ROS Kinetic), Velodyne VLP-16 3D LiDAR, and GPS. Here, we used the same LiDAR model and parameters in the simulated experiments.

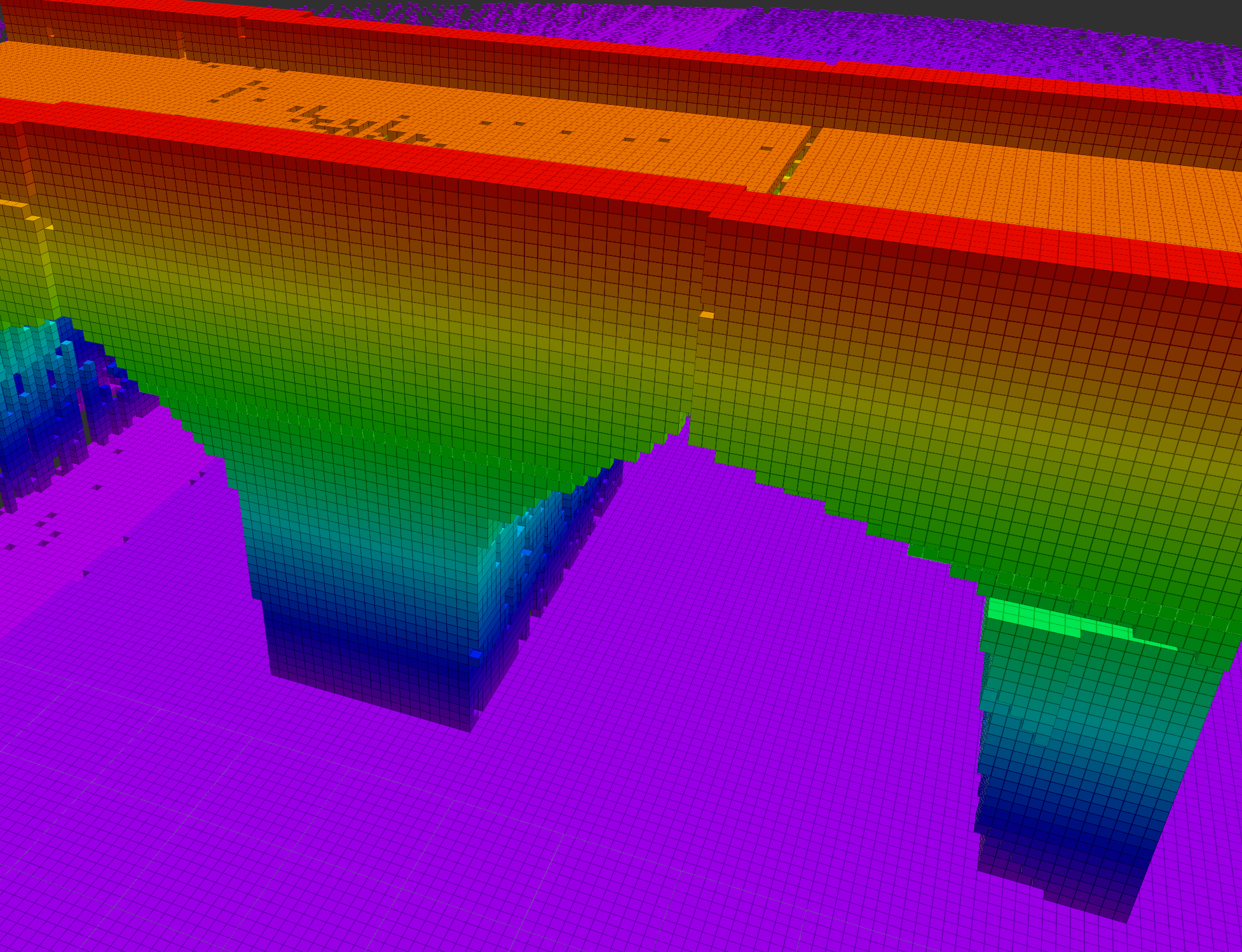

We constructed a mock bridge (Fig. 11) and flew the UAV around it within an outdoor UAV cage called the Fearless Flight Facility (F3) at the University of Maryland, College Park. Due to mapping limitations, a simplified version of GATSBI was run. These limitations are addressed in Section VI. For our experiments, we used the UAV’s LiDAR to create a map of our bridge and then ran GATSBI in an offline manner to find the inspection path. To localize the pointcloud, we used the pose of the DJI M600 Pro obtained using its GPS array. Unlike simulations where the localization is perfect, there is noise in the real world. To combat this, we can use off-the-shelf Visual Inertial Odometry along with GPS for localization along the bridge surface [25, 26]. For our experiments, the bridge was segmented out using a box filter on the localized pointcloud. For our use-case, geographical segmentation worked well but for more complicated experiments, color-based or learning-based segmentation networks can be used. Another implementation of MoveIt was integrated with DJI’s SDK and used for our obstacle-avoidance navigation planning. This package is also provided in our repository and is the first of its kind. For larger structures, battery-life of the UAV would limit structure coverage during the single flight. We can account for this by returning the UAV to the home-point when it’s battery gets low and swapping out the batteries with charged ones. In the future, we are interested in investigating how GATSBI scales when we use multiple agents to inspect for bridge.

V-B2 Results

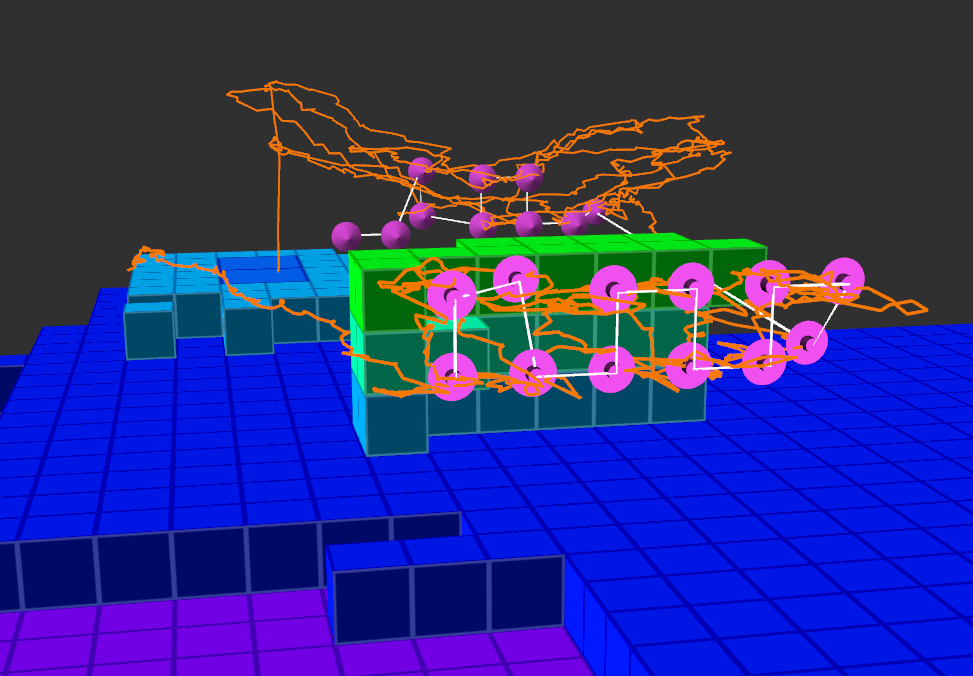

We implemented a simplified version of the GATSBI algorithm on the real-world mock bridge. Our algorithm was able to inspect all 15 inspectable bridge voxels. The target inspection path and actual flown path are shown in Fig. 12. The white line is the direct path between the target inspection points. The orange line is the actual flown path. The pink cones represent the inspection points. This experiment validates that GATSBI can be used for real-world infrastructure inspection. The computation time of the GATSBI algorithm was 0.32 seconds, GTSP time was 8.25 seconds, and flight time was 772 seconds. As shown in the simulations, the GATSBI algorithm time is not the bottleneck. Including the GTSP solver time, the total algorithm time is still much smaller than the flight time.

VI Conclusion

We present GATSBI, a 3D bridge inspection planner. We evaluate the performance of the algorithm through AirSim simulations and real-world hardware experiments with a UAV equipped with a 3D LiDAR and an RGB camera. The simulations show that GATSBI outperforms a frontier-based exploration algorithm. The hardware experiments show that GATSBI is a viable solution to real-world infrastructure inspection. In particular, we show that the algorithm is efficient in the sense that it targets inspectable voxels rather than simply exploring a volume. The simulations and experiments also demonstrate that the algorithm can run in real-time. In future work, we intend to improve our real-world experiments. In particular, implementing a SLAM module to account for noise found in the real world is needed to conduct more complex experiments. We are also looking into implementing a multi-agent solution to account for limited battery-life of UAV’s.

References

- [1] B. Kakillioglu, J. Wang, S. Velipasalar, A. Janani, and E. Koch. 3D Sensor-Based UAV Localization for Bridge Inspection. In 2019 53rd Asilomar Conference on Signals, Systems, and Computers, pages 1926–1930, 2019.

- [2] Skydio 2+™ and x2™ – skydio inc. https://www.skydio.com/. Accessed: 2023-2-1.

- [3] Exyn Technologies. Industrial drone technology. https://www.exyn.com/. Accessed: 2023-2-1.

- [4] Brian Yamauchi. A frontier-based approach for autonomous exploration. In Proceedings 1997 IEEE International Symposium on Computational Intelligence in Robotics and Automation CIRA’97.’Towards New Computational Principles for Robotics and Automation’, pages 146–151. IEEE, 1997.

- [5] Armin Hornung, Kai M. Wurm, Maren Bennewitz, Cyrill Stachniss, and Wolfram Burgard. OctoMap: An efficient probabilistic 3D mapping framework based on octrees. Autonomous Robots, 2013. Software available at http://octomap.github.com.

- [6] S. L. Smith and F. Imeson. GLNS: An Effective Large Neighborhood Search Heuristic for the Generalized Traveling Salesman Problem. Computers & Operations Research, 87:1–19, 2017.

- [7] David Coleman, Ioan Sucan, Sachin Chitta, and Nikolaus Correll. Reducing the Barrier to Entry of Complex Robotic Software: a MoveIt! Case Study. arXiv preprint arXiv:1404.3785, 2014.

- [8] Cheng Zhu, Rong Ding, Mengxiang Lin, and Yuanyuan Wu. A 3D Frontier-Based Exploration Tool for MAVs. In 2015 IEEE 27th International Conference on Tools with Artificial Intelligence (ICTAI), pages 348–352. IEEE, 2015.

- [9] Daniel Louback da Silva Lubanco, Markus Pichler-Scheder, and Thomas Schlechter. A Novel Frontier-Based Exploration Algorithm for Mobile Robots. In 2020 6th International Conference on Mechatronics and Robotics Engineering (ICMRE), pages 1–5. IEEE, 2020.

- [10] Farzad Niroui, Ben Sprenger, and Goldie Nejat. Robot exploration in unknown cluttered environments when dealing with uncertainty. In 2017 IEEE International Symposium on Robotics and Intelligent Sensors (IRIS), pages 224–229. IEEE, 2017.

- [11] Anna Dai, Sotiris Papatheodorou, Nils Funk, Dimos Tzoumanikas, and Stefan Leutenegger. Fast Frontier-based Information-driven Autonomous Exploration with an MAV. arXiv preprint arXiv:2002.04440, 2020.

- [12] Shaojie Shen, Nathan Michael, and Vijay Kumar. Autonomous indoor 3d exploration with a micro-aerial vehicle. In 2012 IEEE international conference on robotics and automation, pages 9–15. IEEE, 2012.

- [13] Micah Corah, Cormac O’Meadhra, Kshitij Goel, and Nathan Michael. Communication-Efficient Planning and Mapping for Multi-Robot Exploration in Large Environments. IEEE Robotics and Automation Letters, 4(2):1715–1721, 2019.

- [14] Aravind Preshant Premkumar, Kevin Yu, and Pratap Tokekar. Combining Geometric and Information-Theoretic Approaches for Multi-Robot Exploration. arXiv preprint arXiv:2004.06856, 2020.

- [15] Richard Pito. A solution to the next best view problem for automated surface acquisition. IEEE Transactions on pattern analysis and machine intelligence, 21(10):1016–1030, 1999.

- [16] Alberto Quattrini Li. Exploration and Mapping with Groups of Robots: Recent Trends. Current Robotics Reports, pages 1–11, 2020.

- [17] Cheng Peng and Volkan Isler. Adaptive View Planning for Aerial 3D Reconstruction. In 2019 International Conference on Robotics and Automation (ICRA), pages 2981–2987. IEEE, 2019.

- [18] Geoffrey A Hollinger, Brendan Englot, Franz S Hover, Urbashi Mitra, and Gaurav S Sukhatme. Active planning for underwater inspection and the benefit of adaptivity. The International Journal of Robotics Research, 32(1):3–18, 2013.

- [19] Mike Roberts, Debadeepta Dey, Anh Truong, Sudipta Sinha, Shital Shah, Ashish Kapoor, Pat Hanrahan, and Neel Joshi. Submodular Trajectory Optimization for Aerial 3D Scanning. In Proceedings of the IEEE International Conference on Computer Vision, pages 5324–5333, 2017.

- [20] Soohwan Song, Daekyum Kim, and Sungho Jo. Online coverage and inspection planning for 3d modeling. Autonomous Robots, pages 1–20, 2020.

- [21] Andreas Bircher, Mina Kamel, Kostas Alexis, Helen Oleynikova, and Roland Siegwart. Receding horizon path planning for 3d exploration and surface inspection. Autonomous Robots, 42(2):291–306, 2018.

- [22] Velodyne puck vlp-16 sensor — lidar — autonomoustuff. https://autonomoustuff.com/product/velodyne-puck-vlp-16/. (Accessed on 09/21/2020).

- [23] tahsinkose/hector-moveit: Hector quadrotor with moveit! motion planning framework. https://github.com/tahsinkose/hector-moveit. (Accessed on 03/03/2021).

- [24] Sattar Dorafshan, Marc Maguire, Nathan V Hoffer, and Calvin Coopmans. Fatigue Crack Detection Using Unmanned Aerial Systems in Under-Bridge Inspection. 2017.

- [25] Burak Kakillioglu, Jiyang Wang, Senem Velipasalar, Alireza Janani, and Edward Koch. 3D Sensor-Based UAV Localization for Bridge Inspection. In 2019 53rd Asilomar Conference on Signals, Systems, and Computers, pages 1926–1930. IEEE, 2019.

- [26] Seungwon Song, Sungwook Jung, Hyungjin Kim, and Hyun Myung. A Method for Mapping and Localization of Quadrotors for Inspection under Bridges Using Camera and 3D-LiDAR. In Proceedings of the 7th Asia-Pacific Workshop on Structural Health Monitoring, Hong Kong SAR, PR China, 2019.