GATOR: Graph-Aware Transformer with Motion-Disentangled Regression for Human Mesh Recovery from a 2D Pose

Abstract

3D human mesh recovery from a 2D pose plays an important role in various applications. However, it is hard for existing methods to simultaneously capture the multiple relations during the evolution from skeleton to mesh, including joint-joint, joint-vertex and vertex-vertex relations, which often leads to implausible results. To address this issue, we propose a novel solution, called GATOR, that contains an encoder of Graph-Aware Transformer (GAT) and a decoder with Motion-Disentangled Regression (MDR) to explore these multiple relations. Specifically, GAT combines a GCN and a graph-aware self-attention in parallel to capture physical and hidden joint-joint relations. Furthermore, MDR models joint-vertex and vertex-vertex interactions to explore joint and vertex relations. Based on the clustering characteristics of vertex offset fields, MDR regresses the vertices by composing the predicted base motions. Extensive experiments show that GATOR achieves state-of-the-art performance on two challenging benchmarks. Code is available at https://github.com/kasvii/GATOR.

Index Terms— 3D Human Mesh Recovery, Transformer, Graph Convolutional Network, Motion Disentangling

1 Introduction

3D human mesh recovery from the 2D observation is an essential task for many 3D applications [1]. However, image-based methods suffer from the domain gap in image appearance between well-controlled datasets and in-the-wild scenes, while pose-based methods naturally relieve this problem with the skeleton inputs [2, 3, 4, 5]. But existing pose-based methods neglect the multiple relations during the evolution from skeleton to mesh, including joint-joint, joint-vertex, and vertex-vertex relations, that are prone to produce implausible results.

Existing pose-based methods follow an encoder-decoder manner [1]. In encoders, Graph Convolution Networks (GCNs) and Transformers have become the mainstream [6, 7, 8]. Benefiting from the graph structure of human skeleton, GCNs naturally capture the physical relations between neighboring joints [9, 10]. But it is difficult to capture non-local relations. In contrast, Transformers can explore global information by the attention mechanism while weakening the graph topology and local relations. Recently, several methods combine GCNs and Transformers to complement each other [4, 11]. But the neglect of graph structures in Transformers and the adopted cascaded architecture may limit the effectiveness.

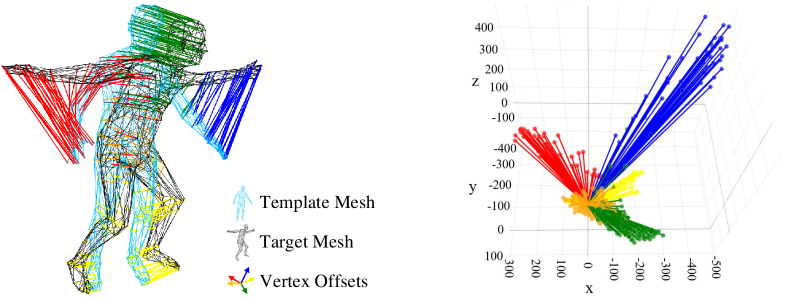

For decoders, some methods regress vertex coordinates [11, 12, 13], and some recent works predict the offset fields then add to the template mesh [4, 14, 15]. They regress 3D coordinates directly from high-dimension features, which is data-driven ignoring the physical plausibility. As shown in Fig. 1, the offset field from template mesh to target mesh can be clustered to several base motions due to the motion similarity in the same body part. This inspires us to generate the vertex motions by predicting the base motions and using them to constitute each vertex offset. Compared to directly regressing the vertex offsets, predicting and aggregating the base motions shall release the network training burden and provide more accurate results.

Based on the above observations, we present a novel network, termed GATOR, including an encoder of Graph-Aware Transformer (GAT) and a decoder with Motion-Disentangled Regression (MDR), which recovers 3D human mesh from a 2D human pose. (1) In GAT, we design a two-branch module that contains a GCN branch and a Graph-Aware Self-Attention (GA-SA) branch to explore physical and hidden joint relations, where GA-SA takes two important skeleton encodings to enhance graph awareness. (2) Moreover, MDR models joint-vertex and vertex-vertex interactions and generates the vertex offsets by composing the predicted base motions. (3) Experimental results show that GATOR outperforms previous state-of-the-art methods on two benchmark datasets.

2 Method

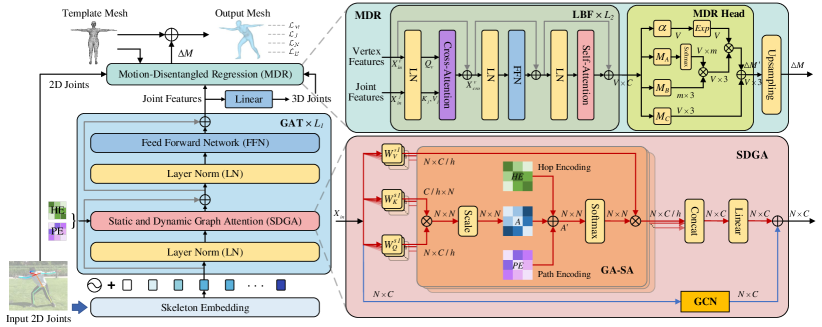

Fig. 2 illustrates the architecture of GATOR, including GAT and MDR. Given 2D human joints estimated by an off-the-shelf 2D pose detector, GAT first extracts physical and hidden joint features and then generates a 3D pose. MDR learns vertex features through joint-vertex and vertex-vertex interactions, then predicts base motions to constitute the vertex offsets which are added to the template mesh as the final mesh.

2.1 Graph-Aware Transformer Encoder

The joint relations include physical skeleton topology and action-specific information (e.g., the relation between hands and feet is strong during running but weak when sitting), which is difficult to capture by a static graph [6]. Therefore, we propose a two-branch module named Static and Dynamic Graph Attention (SDGA). One branch is GA-SA which takes two important skeleton structures to improve graph awareness for global and dynamic feature learning. The other is the GCN branch to enhance the physical topology along a static graph.

Graph-Aware Self-Attention. Inspired by graph representation tasks [16], wherein the injected priors in the attention mechanism can adaptively change the attention distribution, we design GA-SA by introducing two crucial skeleton priors.

One is the multi-hop connectivity between joints, represented by a matrix , where is the number of joints and denotes the hop distance between joint and joint . A learnable embedding table is used to project each hop number in to a vector of size , the head number, and thus embeds the matrix to a learnable tensor named Hop Encoding (HE) :

| (1) |

where represents the indexing operation.

The other is the path information between joints, which reflects the bone length and the body proportion [1]. The Path Encoding (PE) mechanism is built upon a distance graph , where denotes the joints, and denotes the spatial distances between adjacent joints. The vector is defined as the path from joint to joint . A linear embedding layer is used to project each path to a learnable tensor: . The path encoding of joint pair is defined as an average of the dot-products of the edge embeddings and the learnable weights in the path:

| (2) |

where denotes the learnable weights for .

By adding up the hop and path encodings to the attention matrix , the improved attention matrix can be written as:

| (3) |

| (4) |

where is the feature dimension, are the input features, and are the learnable weight matrices that project the input to different representations.

Static and Dynamic Graph Learning. GA-SA is updated by input features in both training and inference processes. When the input pose varies, the attention maps also change to capture the dynamic action-specific relations. However, GA-SA weakens the physical and local interaction. A GCN branch is further introduced following MGCN [9], whose parameters are updated during training and fixed during inference to capture the physical topology. The joint feature enters each branch and is transformed by the attention maps and the adjacent matrices. By adding up the two features, the output contains the information from both static and dynamic graphs.

2.2 Motion-Disentangled Regression Decoder

We design MDR including a Linear Blend Featuring (LBF) module to learn vertex features through joint-vertex and vertex-vertex interactions, and a Motion-Disentangled Regression Head (MDR Head) to predict base motions and use them to constitute vertex offsets. To avoid redundancies and make training more effcient [11, 13], MDR processes a coarse mesh with 431 vertices, then samples the vertex offsets up to 6K and adds to the original template mesh as the final result.

Linear Blend Featuring. Previous pose-based methods [2, 3, 4] ignore the inherent joint-vertex relations in the transition from skeleton to mesh. In the algorithm of Linear Blend Skinning (LBS), each vertex is driven by joints and its coordinate can be represented as a weighted sum of all joints [18, 19].

Inspired by LBS, a cross-attention module is designed to perform joint-vertex interaction, which can be expressed as:

| (5) |

| (6) |

where is the feature dimension. and denote the input features of vertices and joints, and denote the numbers of vertices and joints, respectively. are the learnable weight matrices. Thus, each vertex feature is a weighted sum of the joint features. The input joint feature is the concatenation of 2D and 3D joint coordinates and the output joint feature from GAT. Besides, the input feature of a vertex is the concatenation of the vertex coordinate from the coarse template mesh and its nearest 3D joint coordinate. After the joint-vertex interaction, we introduce a self-attention module for vertex-vertex interaction.

Motion-Disentangled Regression Head. Traditional mesh regression heads project the high-dimension features to the vertex coordinates by a linear layer while ignoring the physical plausibility. Motivated by the observation in Sec. 1, we propose a novel regression head based on the motion similarity in the same body part. Specifically, we predict several base motions to constitute the vertex offsets of coarse mesh (431 vertices) and add them to the original template mesh (6K vertices) after the upsampling operation. The coarse vertex offsets consist of the weighted sum of base motions determining the general orientation and translation, and the learnable biases for refinement, which is expressed as:

| (7) |

where is the motion weight matrix, denotes base motions, denotes motion biases, and denotes scaling factors. They are all learned from the network. The coarse vertex offset is upsampled to the original resolution with 6K vertices through a linear projection and added to the template mesh to get the final mesh result.

2.3 Loss Functions

GAT is first pretrained using the 3D joint loss to supervise the intermediate 3D pose. Then following [2, 4], the whole model is supervised by four losses: mesh vertex loss , 3D joint loss (joints from the final mesh), surface normal loss , and surface edge loss . The total loss is calculated as:

| (8) |

where , , , in our experiments.

| Method | Human3.6M | 3DPW | ||||

| MPJPE | PA-MPJPE | MPJPE | PA-MPJPE | MPVE | ||

| image | HMR [22] | 88.0 | 56.8 | 81.3 | 130.0 | |

| GraphCMR [12] | 50.1 | 70.2 | ||||

| SPIN [23] | 62.5 | 41.1 | 96.9 | 59.2 | 116.4 | |

| PyMAF [24] | 57.7 | 40.5 | 92.8 | 58.9 | 110.1 | |

| I2LMeshNet [17] | 55.7 | 41.1 | 93.2 | 57.7 | ||

| ProHMR [25] | 41.2 | 59.8 | ||||

| OCHMR [26] | 89.7 | 58.3 | 107.1 | |||

| video | VIBE [27] | 65.6 | 41.4 | |||

| TCMR [28] | 62.3 | 41.1 | ||||

| AdvLearning [29] | 92.6 | 55.2 | 111.9 | |||

| MPS-Net [30] | 91.6 | 54.0 | 109.6 | |||

| 2D pose | Pose2Mesh [2] | 64.9 | 46.3 | 88.9 | 58.3 | 106.3 |

| PQ-GCN [3] | 64.6 | 47.9 | 89.2 | 58.3 | 106.4 | |

| GTRS [4] | 64.3 | 45.4 | 88.5 | 58.9 | 106.2 | |

| GATOR (Ours) | 64.0 | 44.7 | 87.5 | 56.8 | 104.5 | |

| Pose2Mesh* [2] | 51.3 | 35.9 | 65.1 | 34.6 | ||

| GTRS* [4] | 50.6 | 34.4 | 53.8 | 34.5 | 61.6 | |

| GATOR* (Ours) | 48.8 | 31.2 | 50.8 | 30.5 | 59.6 | |

3 Experiments

Datasets. Human3.6M [31], 3DPW [32], COCO [33], and MuCo-3DHP [34] are used following previous works [2, 3, 4].

Evaluation Metrics. Three metrics are used to report the experimental results: Mean Per Joint Position Error (MPJPE), Procrustes-Aligned MPJPE (PA-MPJPE) that denotes MPJPE after rigid alinement, and Mean Per Vertex Error (MPVE).

Implementation Details. We first pretrain GAT and then train the whole GATOR in an end-to-end manner. The GAT is stacked by layers with the feature dimension . In MDR, the LBF is stacked by layers with the feature dimension . GAT is pretrained by Adam optimizer for 30 epochs with a batch size of 256 and a learning rate of , while the whole GATOR is trained with a batch size of 64 and a learning rate of for 30 epochs. All experiments are conducted on one NVIDIA RTX 3090 GPU.

3.1 Comparison with State-of-the-Art Methods

Table 1 compares GATOR with previous image/video/pose-based methods on Human3.6M and 3DPW datasets. For a fair comparison, we follow the same setting as previous works [4, 2, 22, 23]. For Human3.6M, GATOR is trained on the Human3.6M training set and measured PA-MPJPE on the frontal camera set. For 3DPW, GATOR is trained on multi-datasets including Human3.6M, COCO, and MuCo-3DHP, and evaluated on the 3DPW testing set to examine cross-dataset generalization ability. When using detected 2D poses [20, 21] as input, our method outperforms previous pose-based methods and achieves comparable results with image/video-based methods. Especially on 3DPW, although the input poses are not particularly accurate and without any image or temporal information, GATOR outperforms existing methods on the metrics of MPJPE and MPVE. When using GT 2D poses as inputs, the performance boosts by a large margin (improves 9.3% from 34.4 mm to 31.2 mm on Human3.6M, 11.6% from 34.5 mm to 30.5 mm on 3DPW in the metric of PA-MPJPE). It indicates that with a more accurate 2D pose detector, our method can further improve the performance.

3.2 Ablation Study

Effectiveness of GA-SA and GCN. Table 3.2 examines the components of GAT on the intermediate 3D pose and the final mesh. The proposed GAT improves both pose and mesh performance, and a more accurate 3D pose is beneficial to higher mesh accuracy. Individual HE, PE or GCN brings similar improvements but combining them together boosts the performance by a clear margin (improve 2.3 mm in MPJPE and 3.2 mm in MPVE of 3D mesh). The improvements indicate that the hidden action-specific information explored by GA-SA and the physical skeleton topology extracted by GCN effectively complement each other to achieve better results.

tableComparison of GAT components on 3DPW. HE PE GCN 3D Pose 3D Mesh MPJPE MPJPE MPVE 87.1 89.8 107.7 ✓ 86.5 88.6 105.5 ✓ 86.4 88.7 106.0 ✓ 86.6 88.7 105.6 ✓ ✓ 86.0 88.0 105.3 ✓ ✓ 85.9 88.3 105.3 ✓ ✓ 85.2 88.0 105.1 ✓ ✓ ✓ 84.3 87.5 104.5

tableComparison of different regressors on 3DPW. Regressor 3D Mesh PA-MPJPE MPVE Linear 63.2 119.9 Linear + LBF 58.8 107.7 MDR20 w/o LBF 58.2 107.2 MDR5 56.8 104.8 MDR10 57.4 105.5 MDR20 56.8 104.5 MDR30 57.4 105.2 MDR40 57.5 105.5

Effectiveness of MDR. Table 3.2 evaluates the impact of MDR by removing LBF, replacing MDR Head with a general linear layer, and setting different base motion numbers. The top two lines show that the joint and vertex interactions in LBF are effective for relations exploring and significantly improve the results. When considering MDR Heads, the performance is further improved, where MDR Head with 20 base motions achieves the optimal results. More or fewer base motions may bring inappropriate clustering, which drops the performance.

3.3 Qualitative Results

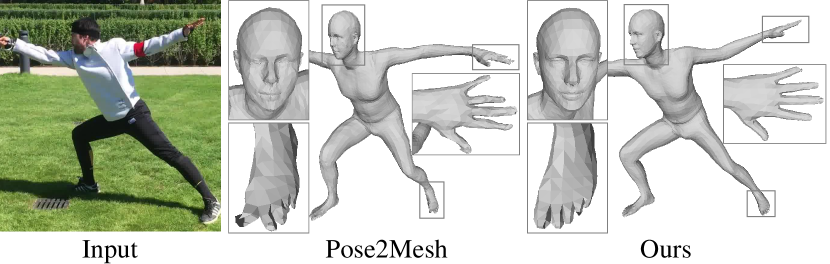

Fig. 3 shows qualitative results compared to GraphCMR [12], I2L-MeshNet [17], and GTRS [4] on the COCO dataset. The first two are image-based methods that are often impacted by the background, while the latter two pose-based methods are more robust, whereas GATOR produces more plausible results. Fig. 4 compares mesh details between Pose2Mesh [2] and GATOR. Pose2Mesh is prone to generate artifacts due to the sub-optimal prediction of vertex positions, while our method can provide more accurate vertices for fine-grained meshes.

4 Conclusion

In this paper, we present GATOR, a novel network for 3D human mesh recovery from a 2D pose. To explore multiple joint and vertex relations in the evolution from skeleton to mesh, GAT is designed to explore joint relations by combining a GCN branch and a GA-SA branch in parallel for static and dynamic graph learning, where GA-SA takes two important skeleton encodings to enhance the graph awareness. Besides, MDR is proposed to model joint-vertex and vertex-vertex interactions and generate the vertex coordinates in a motion-disentangled regression, which provides more accurate results. Extensive experiments show that our method achieves state-of-the-art performance on two challenging benchmarks.

References

- [1] Y. Tian, H. Zhang, Y. Liu, and L. Wang, “Recovering 3D human mesh from monocular images: A survey,” arXiv preprint arXiv:2203.01923, 2022.

- [2] H. Choi, G. Moon, and K. M. Lee, “Pose2Mesh: Graph convolutional network for 3D human pose and mesh recovery from a 2D human pose,” in Proc. ECCV, 2020, pp. 769–787.

- [3] L. Wang, X. Liu, X. Ma, J. Wu, J. Cheng, and M. Zhou, “A progressive quadric graph convolutional network for 3D human mesh recovery,” IEEE TCSVT, pp. 104–117, 2022.

- [4] C. Zheng, M. Mendieta, P. Wang, A. Lu, and C. Chen, “A lightweight graph transformer network for human mesh reconstruction from 2D human pose,” in Proc. ACM MM, 2022, pp. 5496–5507.

- [5] C. Wu, Y. Li, X. Tang, and J. Wang, “MUG: Multi-human graph network for 3D mesh reconstruction from 2D pose,” arXiv preprint arXiv:2205.12583, 2022.

- [6] A. Zeng, X. Sun, L. Yang, N. Zhao, M. Liu, and Q. Xu, “Learning skeletal graph neural networks for hard 3D pose estimation,” in Proc. ICCV, 2021, pp. 11436–11445.

- [7] W. Li, H. Liu, R. Ding, M. Liu, P. Wang, and W. Yang, “Exploiting temporal contexts with strided transformer for 3D human pose estimation,” IEEE TMM, 2022.

- [8] W. Li, H. Liu, H. Tang, P. Wang, and L. Van Gool, “MHFormer: Multi-hypothesis transformer for 3D human pose estimation,” in Proc. CVPR, 2022, pp. 13147–13156.

- [9] Z. Zou and W. Tang, “Modulated graph convolutional network for 3D human pose estimation,” in Proc. ICCV, 2021, pp. 11477–11487.

- [10] W. Li, H. Liu, T. Guo, H. Tang, and R. Ding, “GraphMLP: A graph MLP-like architecture for 3D human pose estimation,” arXiv preprint arXiv:2206.06420, 2022.

- [11] K. Lin, L. Wang, and Z. Liu, “Mesh graphormer,” in Proc. ICCV, 2021, pp. 12939–12948.

- [12] N. Kolotouros, G. Pavlakos, and K. Daniilidis, “Convolutional mesh regression for single-image human shape reconstruction,” in Proc. CVPR, 2019, pp. 4501–4510.

- [13] K. Lin, L. Wang, and Z. Liu, “End-to-end human pose and mesh reconstruction with transformers,” in Proc. CVPR, 2021, pp. 1954–1963.

- [14] T. Luan, Y. Wang, J. Zhang, Z. Wang, Z. Zhou, and Y. Qiao, “PC-HMR: Pose calibration for 3D human mesh recovery from 2D images/videos,” in Proc. AAAI, 2021, vol. 35, pp. 2269–2276.

- [15] M. Zanfir, A. Zanfir, E. G. Bazavan, W. T. Freeman, R. Sukthankar, and C. Sminchisescu, “THUNDR: Transformer-based 3D human reconstruction with markers,” in Proc. ICCV, 2021, pp. 12971–12980.

- [16] C. Ying, T. Cai, S. Luo, S. Zheng, G. Ke, D. He, Y. Shen, and T. Y. Liu, “Do transformers really perform badly for graph representation?,” in Proc. NeurIPS, 2021, pp. 28877–28888.

- [17] G. Moon and K. M. Lee, “I2L-MeshNet: Image-to-lixel prediction network for accurate 3D human pose and mesh estimation from a single RGB image,” in Proc. ECCV, 2020, pp. 752–768.

- [18] A. Jacobson, Z. Deng, L. Kavan, and J. P. Lewis, “Skinning: Real-time shape deformation,” in ACM SIGGRAPH 2014 Courses. 2014.

- [19] M. Loper, N. Mahmood, J. Romero, G. Pons-Moll, and M. J. Black, “SMPL: A skinned multi-person linear model,” ACM TOG, vol. 34, no. 6, pp. 1–16, 2015.

- [20] X. Sun, B. Xiao, F. Wei, S. Liang, and Y. Wei, “Integral human pose regression,” in Proc. ECCV, 2018, pp. 529–545.

- [21] F. Zhang, X. Zhu, H. Dai, M. Ye, and C. Zhu, “Distribution-aware coordinate representation for human pose estimation,” in Proc. CVPR, 2020, pp. 7093–7102.

- [22] A. Kanazawa, M. J. Black, D. W. Jacobs, and J. Malik, “End-to-end recovery of human shape and pose,” in Proc. CVPR, 2018, pp. 7122–7131.

- [23] N. Kolotouros, G. Pavlakos, M. J. Black, and K. Daniilidis, “Learning to reconstruct 3D human pose and shape via model-fitting in the loop,” in Proc. ICCV, 2019, pp. 2252–2261.

- [24] H. Zhang, Y. Tian, X. Zhou, W. Ouyang, Y. Liu, L. Wang, and Z. Sun, “PyMAF: 3D human pose and shape regression with pyramidal mesh alignment feedback loop,” in Proc. ICCV, 2021, pp. 11446–11456.

- [25] N. Kolotouros, G. Pavlakos, D. Jayaraman, and K. Daniilidis, “Probabilistic modeling for human mesh recovery,” in Proc. ICCV, 2021, pp. 11605–11614.

- [26] R. Khirodkar, S. Tripathi, and K. Kitani, “Occluded human mesh recovery,” in Proc. CVPR, 2022, pp. 1715–1725.

- [27] M. Kocabas, N. Athanasiou, and M. J. Black, “VIBE: Video inference for human body pose and shape estimation,” in Proc. CVPR, 2020, pp. 5253–5263.

- [28] H. Choi, G. Moon, J. Y. Chang, and K. M. Lee, “Beyond static features for temporally consistent 3D human pose and shape from a video,” in Proc. CVPR, 2021, pp. 1964–1973.

- [29] Y. Sun, J. Zhang, and W. Wang, “Adversarial learning enhancement for 3D human pose and shape estimation,” in Proc. ICASSP, 2022, pp. 3743–3747.

- [30] W. L. Wei, J. C. Lin, T. L. Liu, and H. Y. M. Liao, “Capturing humans in motion: temporal-attentive 3D human pose and shape estimation from monocular video,” in Proc. CVPR, 2022, pp. 13211–13220.

- [31] C. Ionescu, D. Papava, V. Olaru, and C. Sminchisescu, “Human3.6M: Large scale datasets and predictive methods for 3D human sensing in natural environments,” IEEE TPAMI, vol. 36, no. 7, pp. 1325–1339, 2013.

- [32] T. Von Marcard, R. Henschel, M. J. Black, B. Rosenhahn, and G. Pons-Moll, “Recovering accurate 3D human pose in the wild using imus and a moving camera,” in Proc. ECCV, 2018, pp. 601–617.

- [33] T. Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick, “Microsoft COCO: Common objects in context,” in Proc. ECCV, 2014, pp. 740–755.

- [34] D. Mehta, O. Sotnychenko, F. Mueller, W. Xu, S. Sridhar, G. Pons-Moll, and C. Theobalt, “Single-shot multi-person 3D pose estimation from monocular RGB,” in Proc. 3DV, 2018, pp. 120–130.