Further author information: Quan Liu : E-mail: [email protected]

Contact Author: Yuankai Huo: E-mail: [email protected]

GAN based Unsupervised Segmentation:

Should We Match the Exact Number of Objects

Abstract

The unsupervised segmentation is an increasingly popular topic in biomedical image analysis. The basic idea is to approach the supervised segmentation task as an unsupervised synthesis problem, where the intensity images can be transferred to the annotation domain using cycle-consistent adversarial learning. The previous studies have shown that the macro-level (global distribution level) matching on the number of the objects (e.g., cells, tissues, protrusions etc.) between two domains resulted in better segmentation performance. However, no prior studies have exploited whether the unsupervised segmentation performance would be further improved when matching the exact number of objects at micro-level (mini-batch level). In this paper, we propose a deep learning based unsupervised segmentation method for segmenting highly overlapped and dynamic sub-cellular microvilli. With this challenging task, both micro-level and macro-level matching strategies were evaluated. To match the number of objects at the micro-level, the novel fluorescence-based micro-level matching approach was presented. From the experimental results, the micro-level matching did not improve the segmentation performance, compared with the simpler macro-level matching.

keywords:

Unsupervised learning, Segmentation, Synthesis, GAN1 INTRODUCTION

Semantic segmentation is one of the central tasks in microscope image analysis, which segments targeting objects from background context [1]. Traditionally, the semantic segmentation was performed by unsupervised intensity based methods, such as watershed [2], Gaussian mixture model (GMM) [3], graph-cut [4] etc. In the past few years, the deep learning based methods have been increasingly popular in microscopy imaging, due to the superior accuracy and better generalizability [5]. However, one of the major limitations in deep learning based semantic segmentation is the need of large-scale annotated images, which is not only tedious, but also resource intensive [6]. CycleGAN [7], a breakthrough generative adversarial network (GAN) [8] was proposed recently, which shed light on semantic segmentation with minimal or even no manual annotation [9, 10, 11, 12].

Within the CycleGAN framework [7], many previous studies have tackled the unsupervised semantic segmentation in microscopy imaging. Ihle et al. [13] proposed to use the CycleGAN framework to segment bright-field images of cell cultures, a live-dead assay of C.Elegans, and X-ray-computed tomography of metallic nanowire meshes. A similar approach was proposed by [14] for facilitating stain-independent supervised and unsupervised segmentation on kidney histology. DeepSynth [15] was proposed to further extend the CycleGAN framework from 2D to 3D nuclear segmentation. Even though the CycleGAN based unsupervised segmentation approaches have shown decent performance on microscope images, very few studies have investigated the challenging sub-cellular microvilli segmentation with fluorescence microscopy imaging. The sub-cellular microvilli segmentation is challenging due to the highly overlapping and dynamic nature of such small sub-celluar objects [16, 17].

Different from Pix2Pix GAN [18], which requires pixel-level matching between images across two domains, CycleGAN is able to perform image synthesis without paired images. However, the previous studies emphasized that the macro-level (global distribution level) matching on the number of objects between intensity images and simulated masks improved the segmentation performance [13]. That fact inspired us with the question that if the segmentation performance could be further improved by doing more careful matching than the macro-level. To answer the question, we propose a new micro-level matching (mini-batch level) strategy to match the rough number of objects across two domains when training the CycleGAN framework.

In this paper, we develop a deep learning based unsupervised semantic segmentation method for sub-cellular microvilli segmentation using fluorescence microscopy. Meanwhile, we evaluate the performance of micro-level matching strategy, which is enabled by the multi-channel nature of fluorescence images. The contributions of this study are three-fold: (1) We propose the first deep learning based unsupervised sub-cellular microvilli segmentation method; (2) We propose the micro-level matching to ensure the roughly same number of objects across two modalities within each mini-batch, without introducing extra human annotation efforts; (3) Comprehensive analyses are provided to evaluate the outcomes of different augmentation strategies when generating the simulated masks for unsupervised microvilli segmentation.

2 METHODS

Our proposed unsupervised segmentation method consists of two parts: (1) image synthesis, and (2) segmentation. In image synthesis, our goal is to synthesize realistic looking images from the simulated masks. Then, the paired synthetic images and masks are used to train another segmentation network. Note that, no manual annotations are used in our training either for CycleGAN or U-Net, as an unsupervised framework.

2.1 Cycle-Consistent Image Synthesis

The CycleGAN [7] is used to generate our synthetic training data. As the standard CycleGAN implementation, generators and discriminators are used to transfer the styles between two image modalities. The role of the generators is to convert the real images to another domain, which are typically called ”fake” images. The discriminators then judge if a given image is real or fake. The CycleGAN model design creatively forms the entire learning process as a cycle-consistent loop, where the reconstructed fake images after two generators should be close to the original real images. In each branch, the generator tries to generate realistic images, while the discriminator try to distinguish the fake images from the real ones.

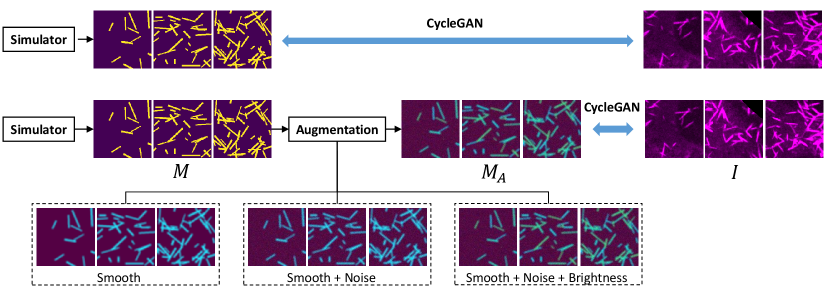

In our unsupervised image segmentation framework (Fig. 1), the CycleGAN is employed to synthesize segmentation masks (or called ”annotations”) from the real images , and synthesize realistic looking images from simulated segmentation masks . In the ideal case, the trained generator can be directly used as a segmentation network to segment new images. However, the quality of synthesis between real images and clean binary masks is typically not optimal since the underlying Poisson distribution of the binary masks is not a realistic distribution in real images [13]. Moreover, the optimization of the KL divergence for training discriminators is more difficult to converge [14] using clean binary masks. Therefore, the Gaussian smoothing, random noise, and brightness variations are used to generate augmented masks in additional to the simulated mask images for better synthetic performance (Fig. 2). Then, the trained generator will provide us unlimited fake but realistic looking images from the simulated and augmented masks . Eventually, the fake images and the clean binary masks (before augmentation) are used to train another independent segmentation network (see Image Segmentation section).

2.2 Micro- and Macro-level Matching

Compared with traditional pixel-to-pixel conditional GAN design which needs pixel-level correspondence between two modalities, the CycleGAN does not need paired images for training. However, the superior synthetic segmentation performance is typically achieved if the distributions of the number of objects in real image modality and annotation modality are roughly matched [13, 14], named as ”macro-level” matching. However, no studies have explored the level of matching in the middle of pixel-level and macro-level. In this study, we proposed the idea called ”micro-level” matching, which matches the number of objects in each mini-batch (Fig. 1). For example, if a real image has roughly 21 microvilli, we will provide a simulated mask with the same 21 sticks, when forming the mini-batch. Then, the next question is that how can we get rough number of objects from the real images. In this study, we utilize the multi-channel nature of fluorescence microscopy to split the microvilli marker mCherry-Espin (magenta color objects) and microvilli tip marker EGFP-EPS8 (green color objects). Using the simple intensity thresholding based cell counting algorithm [19], the rough number of protein objects are easily achieved. The numbers are then used as the rough number of microvilli to simulate the corresponding mask files with the same number of objects, as the micro-level matching. Note that we only match the number of the objects in the micro-level matching, where the spatial distribution of the objects is still random.

2.3 Image Segmentation

U-Net [20] is employed as the segmentation backbone network, which is a fully convolutional neural network and widely used in image segmentation tasks. The segmentation part of our framework is shown in Fig. 3. In our proposed unsupervised segmentation framework, the input images of U-Net are the fake microvilli images, which are generated from the simulated masks using or from trained CycleGAN. Then the Dice loss function is calculated by comparing the predicted segmentation with the simulated binary masks. Traditional deep neural network typically needs a large number of annotated images to train a segmentation network. Using our design, however, we can generate unlimited number of training data to train the segmentation network without any manual annotation efforts.

3 Data and Experimental Design

3.1 Microvilli Images

Twelve microvilli images acquired using fluorescence microscopy were used as training data, where each image had pixels with pixel resolution 1.1 m. Then, 500 image patches with pixels were randomly sampled from the twelve images to train the CycleGAN as the real images. Then, another independent microvilli video with 20 frames was used as testing data to evaluate the performance of the proposed unsupervised segmentation methods. Each frame has pixels with pixel resolution 1.1 m. All microvilli in each frame were densely annotated manually by an experienced biologist as the gold standard segmentation. The video of microvilli frames and manual annotations are presented in the supplementary materials: https://github.com/iamliuquan/GAN_based_segmentation.

3.2 Experimental Design

In order to test if micro-level matching can improve the unsupervised segmentation performance, we performed experiments using both macro-level and micro-level matching. For micro-level matching, the number of sticks of each images was obtained by automatically counting the number of green proteins. For macro-level matching, the number of sticks for each image was randomly sampled from a uniform distribution (range from 11 to 63), according to distribution of proteins.

As shown in Fig 2, we have four different augmentation settings to generate the simulated masks in annotation domain:

Binary masks: The binary masks were directly simulated as the images in the annotation domain, without any augmentation. Based on [17] and the prior biological knowledge of microvilli, the width of each microvilli was simulated between 2 to 5 m, while the length was simulated between 10 to 50 m. As the pixel resolution of all our images was 1.1 m, we randomly generated sticks with 2 to 4 pixels width and 9 to 45 pixels length from uniform distribution.

Gaussian Smoothing: The first augmentation was Gasussian smoothing, where a Gaussian filter with kernal size of was applied to the binary masks.

Random noise: Upon the Gaussian smoothing, the random Gaussian noise was further applied to the entire mask image. The values of random noise ranged from 0 to 255 follows Gaussian distribution.

Different brightness: To further introduce the global intensity variations, random intensity values (200 to 255) were assigned to each stick in binary masks, where maximum foreground intensity value was 255.

To improve the segmentation performance, CycleGAN was employed to synthesis cell images for U-Net model training. In our experiment, CycleGAN is used to learn the mapping from simulated masks to real microvilli cell images. We built up dataset in these two domains as CycleGAN model’s input. Our CycleGAN model was trained for 60 epochs. According to the training loss, generator trained for 50 epochs shows the best performance. Generator trained in CycleGAN will be used to synthesis microvilli cell images based on simulated mask images.

CycleGAN model cannot cover all details using original frames as input which has too many cells. For both CycleGAN and U-Net, the input images were all with resolution cropped from original frames, and then resized to during training. When applying trained U-Net on testing microvilli images, each testing image was first split to four images, and the final segmentation were achieved by concatenating the corresponding four predictions back to the original resolution. The Dice results were calculated in the original resolution for testing images. The CycleGAN and U-Net were deployed on a computer with GeForce GTX 1060 Graphic Card with 6 GB memory. To get better synthesised data and avoid over-fitting, the CycleGAN was trained with 50 epochs and the U-Net was trained with 10 epochs for all experiments. According to the prediction performance, U-Net has the best performance after 10 epochs. The results from the last epochs were reported in this paper.

| Exp. | Smooth | Noise | Bright. | |||||

|---|---|---|---|---|---|---|---|---|

| Micro-level matching | 0.3818 | 0.4860 | 0.5459 | 0.5605 | 0.5628 | |||

| ✓ | 0.3650 | 0.4691 | 0.5301 | 0.5535 | 0.5667 | |||

| ✓ | ✓ | 0.3738 | 0.4783 | 0.5367 | 0.5511 | 0.5547 | ||

| ✓ | ✓ | ✓ | 0.3810 | 0.4865 | 0.5479 | 0.5650 | 0.5730 | |

| Macro-level matching | 0.3639 | 0.4717 | 0.5364 | 0.5583 | 0.5675 | |||

| ✓ | 0.3811 | 0.4918 | 0.5557 | 0.5776 | 0.5888 | |||

| ✓ | ✓ | 0.3902 | 0.4981 | 0.5607 | 0.5965 | 0.6169 | ||

| ✓ | ✓ | ✓ | 0.3894 | 0.4903 | 0.5467 | 0.5615 | 0.5631 |

“” indicate the Dice score, means the width of the ground truth.

4 Results

Considering both micro- and macro-level matching with different augmentation strategies, we performed eight experiments by training eight different CycleGAN networks. The qualitative results of image synthesis from eight different CycleGAN networks are provided in Fig. 4.

Then, the synthetic images were used to train eights different U-Net models using synthetic training image patches and applied to the real testing images. For testing images, the manual annotation was performed by tracking center line fragments of each microvillus (annotated by experienced biologist) since the traditional contour based annotations were extremely difficult on the tiny sub-cellular structures. To evaluate the segmentation results, we assigned different width to the manual segmentation and reported the results in Table. 1. The corresponding qualitative results of segmentation are provided in Fig. 5. According to microvilli cell’s biologic characteristic, manual annotation images are presented with width=3. From the results, the macro-level matching with Gaussian smoothing and random noise achieved the best performance across different widths of manual annotation. The micro-level matching did not improve the segmentation performance. Micro-level pairing can achieve higher accuracy on training dataset because its pairing is detailed to fit training dataset properties. Macro-level pairing is more robust. U-Net model trained by macro-level pairing performs better than micro-level pairing on new dataset. The standard Dice similarity coefficient metrics were used to evaluate different methods. The video of microvilli frames and our unsupervised segmentation results are presented in the supplementary materials: https://github.com/iamliuquan/GAN_based_segmentation.

5 Conclusion

In this study, we proposed the first deep learning solution to enable unsupervised sub-cellular microvilli segmentation. Beyond the current standard macro-level matching strategy, we utilized the multi-channel nature of fluorescence microscopy to enable the micro-level matching of the number of objects in each mini-batch without introducing new human annotation efforts. From the experimental results, we conclude that the micro-level matching of object numbers at the mini-batch level did not lead to better segmentation performance. From the comprehensive analyses of introducing noise, smoothness and brightness, the Gaussian smoothing and random noise on the simulated annotations with macro-level matching resulted in the best microvilli segmentation performance.

References

- [1] Wu, K., Gauthier, D., and Levine, M. D., “Live cell image segmentation,” IEEE Transactions on biomedical engineering 42(1), 1–12 (1995).

- [2] Pinidiyaarachchi, A. and Wählby, C., “Seeded watersheds for combined segmentation and tracking of cells,” in [International Conference on Image Analysis and Processing ], 336–343, Springer (2005).

- [3] Ragothaman, S., Narasimhan, S., Basavaraj, M. G., and Dewar, R., “Unsupervised segmentation of cervical cell images using gaussian mixture model,” in [Proceedings of the IEEE conference on computer vision and pattern recognition workshops ], 70–75 (2016).

- [4] Leskó, M., Kato, Z., Nagy, A., Gombos, I., Torok, Z., Vigh Jr, L., and Vigh, L., “Live cell segmentation in fluorescence microscopy via graph cut,” in [2010 20th International Conference on Pattern Recognition ], 1485–1488, IEEE (2010).

- [5] Moen, E., Bannon, D., Kudo, T., Graf, W., Covert, M., and Van Valen, D., “Deep learning for cellular image analysis,” Nature methods , 1–14 (2019).

- [6] Zhang, L., Lu, L., Nogues, I., Summers, R. M., Liu, S., and Yao, J., “Deeppap: deep convolutional networks for cervical cell classification,” IEEE journal of biomedical and health informatics 21(6), 1633–1643 (2017).

- [7] Zhu, J.-Y., Park, T., Isola, P., and Efros, A. A., “Unpaired image-to-image translation using cycle-consistent adversarial networks,” in [Proceedings of the IEEE international conference on computer vision ], 2223–2232 (2017).

- [8] Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., and Bengio, Y., “Generative adversarial nets,” in [Advances in neural information processing systems ], 2672–2680 (2014).

- [9] Huo, Y., Xu, Z., Bao, S., Assad, A., Abramson, R. G., and Landman, B. A., “Adversarial synthesis learning enables segmentation without target modality ground truth,” in [2018 IEEE 15th international symposium on biomedical imaging (ISBI 2018) ], 1217–1220, IEEE (2018).

- [10] Huo, Y., Xu, Z., Moon, H., Bao, S., Assad, A., Moyo, T. K., Savona, M. R., Abramson, R. G., and Landman, B. A., “Synseg-net: Synthetic segmentation without target modality ground truth,” IEEE transactions on medical imaging 38(4), 1016–1025 (2018).

- [11] Zhang, Z., Yang, L., and Zheng, Y., “Translating and segmenting multimodal medical volumes with cycle-and shape-consistency generative adversarial network,” in [Proceedings of the IEEE conference on computer vision and pattern recognition ], 9242–9251 (2018).

- [12] Chen, C., Dou, Q., Chen, H., Qin, J., and Heng, P.-A., “Synergistic image and feature adaptation: Towards cross-modality domain adaptation for medical image segmentation,” in [Proceedings of the AAAI Conference on Artificial Intelligence ], 33, 865–872 (2019).

- [13] Ihle, S., Reichmuth, A. M., Girardin, S., Han, H., Stauffer, F., Bonnin, A., Stampanoni, M., Vörös, J., and Forró, C., “Udct: Unsupervised data to content transformation with histogram-matching cycle-consistent generative adversarial networks,” bioRxiv , 563734 (2019).

- [14] Gadermayr, M., Gupta, L., Appel, V., Boor, P., Klinkhammer, B. M., and Merhof, D., “Generative adversarial networks for facilitating stain-independent supervised and unsupervised segmentation: a study on kidney histology,” IEEE transactions on medical imaging 38(10), 2293–2302 (2019).

- [15] Dunn, K. W., Fu, C., Ho, D. J., Lee, S., Han, S., Salama, P., and Delp, E. J., “Deepsynth: Three-dimensional nuclear segmentation of biological images using neural networks trained with synthetic data,” Scientific reports 9(1), 1–15 (2019).

- [16] Julio, G., Merindano, M. D., Canals, M., and Ralló, M., “Image processing techniques to quantify microprojections on outer corneal epithelial cells,” Journal of anatomy 212(6), 879–886 (2008).

- [17] Meenderink, L. M., Gaeta, I. M., Postema, M. M., Cencer, C. S., Chinowsky, C. R., Krystofiak, E. S., Millis, B. A., and Tyska, M. J., “Actin dynamics drive microvillar motility and clustering during brush border assembly,” Developmental cell 50(5), 545–556 (2019).

- [18] Isola, P., Zhu, J.-Y., Zhou, T., and Efros, A. A., “Image-to-image translation with conditional adversarial networks,” in [Proceedings of the IEEE conference on computer vision and pattern recognition ], 1125–1134 (2017).

- [19] Refai, H., Li, L., Teague, T. K., and Naukam, R., “Automatic count of hepatocytes in microscopic images,” in [Proceedings 2003 International Conference on Image Processing (Cat. No. 03CH37429) ], 2, II–1101, IEEE (2003).

- [20] Ronneberger, O., Fischer, P., and Brox, T., “U-net: Convolutional networks for biomedical image segmentation,” in [International Conference on Medical image computing and computer-assisted intervention ], 234–241, Springer (2015).