FusionLoc: Camera-2D LiDAR Fusion Using Multi-Head Self-Attention for End-to-End Serving Robot Relocalization

Abstract

As technology advances in autonomous mobile robots, mobile service robots have been actively used more and more for various purposes. Especially, serving robots have been not surprising products anymore since the COVID-19 pandemic. One of the practical problems in operating a serving robot is that it often fails to estimate its pose on a map that it moves around. Whenever the failure happens, servers should bring the serving robot to its initial location and reboot it manually. In this paper, we focus on end-to-end relocalization of serving robots to address the problem. It is to predict robot pose directly from only the onboard sensor data using neural networks. In particular, we propose a deep neural network architecture for the relocalization based on camera-2D LiDAR sensor fusion. We call the proposed method FusionLoc. In the proposed method, the multi-head self-attention complements different types of information captured by the two sensors to regress the robot pose. Our experiments on a dataset collected by a commercial serving robot demonstrate that FusionLoc can provide better performances than previous end-to-end relocalization methods taking only a single image or a 2D LiDAR point cloud as well as a straightforward fusion method concatenating their features.

Index Terms:

FusionLoc, serving robot, camera-2D LiDAR fusion, multi-head self-attention, end-to-end relocalization.I Introduction

Recently, various mobile robots can be seen in many places around us for reasons such as the development of autonomous mobile robot technology, the pursuit of efficiency in repetitive tasks, and the increase in the value of non-face-to-face services. For instance, some mobile robots are helping people in various places, such as guiding people at airports or museums, disinfecting schools or hospitals, following people with heavy objects, and serving food in restaurants. Focusing on in restaurants and cafes, servers are known to walk around 8 to 15 kilometers a day. Also, carrying dishes in hand is more laborious than walking empty-handed. As a result, it can lower the quality of essential services for customers. For these reasons, serving robots are employed in more and more sites to improve more efficient working environments while reducing physical burden primarily.

For the autonomous mobile robots, localization is indispensable. Some mobile robots use a global positioning solution based on markers attached to the ceiling for localization. However, it has limitations due to the cost of infrastructure construction and maintenance and the damage to aesthetic elements in cafes and restaurants. Another localization methodology first produces a map of the place where the robot operates using simultaneous localization and mapping (SLAM) methods [1], [2]. Then, the serving robot estimates its location on the map based on information captured from its various sensors like wheel encoders, cameras, LiDARs, or IMUs. However, it often fails to estimate its location for some reasons, e.g., many people around the robot. The failure in the localization requires the serving robot user to reboot it at a predetermined starting position and orientation. That is one of the reasons why relocalization is needed.

For mobile robot relocalization, many studies were presented based on conventional visual features, and some studies utilized visual information together with geometric information measured by a LiDAR sensor [3]. However, deep learning studies for relocalization have been actively conducted in the recent few years. PoseNet [4] applied a convolutional neural network (CNN) to a single RGB image to regress a 6 degrees of freedom (DoF) camera pose. It was shown that PoseNet is more robust to illumination variation and occlusion than point feature-based relocalization methods. This advantage is one of the reasons why we focus on deep learning-based relocalization methods. Various studies followed PoseNet to apply a Bayesian CNN [5], long-short term memory (LSTM) [6], or geometric reprojection loss function [7]. Also, temporal information was utilized with an LSTM module [8], and an encoder-decoder architecture was employed for camera relocalization in [9]. Moreover, geometric constraints of two consecutive poses were considered to improve relocalization accuracy in [10], and a self-attention block was adopted in regressing the camera pose [11]. In recent studies, deep neural networks (DNN) took LiDAR [12] or IMU [13] data instead of using images for relocalization in an end-to-end manner.

In this paper, we leverage two sensors equipped with a commercial serving robot to improve relocalization accuracy. From the perspective of the extension of previous studies, this paper proposes a fusion DNN taking both an RGB image and a 2D LiDAR point cloud as input to regress the 3-DoF pose of the serving robot. We call the proposed architecture FusionLoc. To our best knowledge, this work is the first deep learning-based study fusing camera and LiDAR to address the mobile robot relocalization in two-dimensional planar space. The proposed network extracts features by adopting the AtLoc [11] architecture and the PointLoc [12] architecture from RGB images and 2D LiDAR point clouds, respectively. These features are combined through a concatenation operation. Then, the information captured from each sensor interacts together within multi-head self-attention (MHSA) layers [14]. Finally, FusionLoc outputs the position and orientation of the serving robot. Furthermore, this study introduces a new dataset consisting of tuples of an RGB image, a 2D LiDAR point cloud, and a 3-DoF pose. The data were collected using a Polaris3D serving robot named ereon. Experiments on the dataset show that the proposed network outperforms the previous methods taking a single image or a 2D LiDAR point cloud only. The contributions of this paper are summarized as follows:

-

•

A fusion deep neural network leveraging multi-head self-attention layers is proposed to take an image and a 2D LiDAR point cloud as input.

-

•

A new dataset, collected using a commercial serving robot, is introduced.

This paper is organized as follows. Section II briefly introduces the previous DNN-based studies for relocalization. Section III describes the proposed DNN architecture for camera-2D LiDAR fusion in detail. The new dataset is explained, and the experiments based on the dataset are shown in Section IV. Finally, Section V concludes the paper and provides future work.

II Related works

II-A Deep learning-based camera relocalizaton

There have been a lot of studies on deep learning for localization using cameras. The visual localization studies include place recognition and end-to-end camera relocalization. Given a query image, place recognition performs localization by image retrieval, i.e., transforming the query image into a descriptor by a deep network and then searching the most similar descriptor with the input descriptor in a database consisting of descriptors and their location information. NetVLAD [15] is a representative study belonging to visual place recognition. On the other hand, end-to-end camera relocalization directly predicts the pose of the mobile robot from the input image. PoseNet [4] was a breaking ground work that tried to directly regress 6-DoF camera pose from a single image using a CNN. It was noted that it was robust to motion blur, darkness, and unknown camera intrinsics compared to conventional methods based on the scale-invariant feature transform (SIFT [16]). Probabilistic PoseNet [5], an extension of PoseNet, adopted a Bayesian CNN with Monte Carlo dropout sampling to handle uncertainty in pose estimation. In [6], LSTM was presented as a substitute for the fully-connected layer before the pose regression layer to prevent overfitting and to perform a structured dimensionality reduction. In [7], loss function was also a consideration to improve relocalization performance. To this end, two alternatives were presented and tested in the study. One was a learnable balancing parameter between position and orientation, and the other was the reprojection error between predicted and actual camera poses. In [8], VidLoc was proposed. It leveraged a bidirectional LSTM to utilize temporal information in successive images. The trial led to some reduction in the relocalization error. In [9], the authors employed an encoder-decoder CNN architecture, which was called hourglass, for fine-grained information restoration. In the encoder, ResNet-34 [17] was adopted instead of GoogLeNet [18] used in PoseNet. In [10], MapNet was proposed using an additional loss term related to the relative poses between image pairs together with the loss term of the absolute poses of images. It was meaningful concerning encoding geometric constraints between consecutive poses into the loss function. Moreover, in the study, the logarithm of the unit quaternion was also proposed as the representation of the camera orientation instead of the unit quaternion. The logarithm of the unit quaternion has been popular in most follow-up studies. In [19] and [20], a Siamese architecture was presented to reduce the relocalization error with the relative poses of the image pairs. ViPNet [21] utilized the squeeze-and-excitation blocks and a Bayesian CNN with ResNet-50 to deal with uncertainty in predicting a camera pose like Probabilistic PoseNet [5]. AtLoc [11] adopted a self-attention module to focus on geometrically rigid objects rather than dynamic ones. VMLoc [22] encoded the features of depth images (or projection of sparse LiDARs to RGB images) and RGB images in each CNN branch. Then, the two types of features were fused by Product-of-Experts.

II-B Deep learning-based other sensors relocalizaton

There have been a few studies on deep learning for relocalization with other sensors. Compared to camera relocalization, those studies have recently been published. The 3D LiDAR point clouds have more geometric information because they can see with a wide angle of 360 degrees and vertical field of view. In [23], a point cloud odometry method was proposed, which took two consecutive 3D point clouds as input and predicted the transformation between them. Each point cloud was first encoded to a panoramic depth image, and then the two depth images were stacked. The DeepPCO network estimated 3D translation and 3D orientation from the stacked depth image using its two sub-networks. Different from [23], PointLoc [12] regressed a 6-DoF pose of a 3D LiDAR sensor directly from a point cloud. In PointLoc, the PointNet++ [24] architecture was employed to extract features from unordered and unstructured 3D point clouds, and a self-attention module was also employed to remove outliers. Unlike DeepPCO and PointLoc, StickyLocalization [25] relocalized input 3D point cloud within a pre-built map. In the pillar layer where PointPillar [13] method was utilized, local and global key points were extracted from a current point cloud and the global point cloud corresponding to a map, respectively. Then, self-attention and cross-attention modules in the graph neural network layer aggregated context information to improve robustness. The final optimal transport layer output the pose of the current point cloud by a matching process between the outputs of the graph neural network layer. Although its task was not to estimate the pose of a mobile robot, 2DLaserNet [26] processed 2D laser scan data with a neural network developed from PointNet++ [24] to classify the location of the mobile robot as one of room, corridor, and doorway.

In addition to camera and LiDAR, IMU has also been used for relocalization. RoNIN [27] utilized the backbone networks such as ResNet, LSTM, and temporal convolutional network to estimate human 3-DoF poses from a sequence of IMU sensor data. IDOL [28] regressed 5-DoF poses with a two-stage procedure consisting of an orientation module using extended Kalman filters (EKFs) and LSTMs and a position module using bidirectional LSTMs. In NILoc [29], a neural inertial navigation technique was presented to convert IMU sensor data to a sequence of velocity vectors. The methods leveraged a Transformer-based neural network architecture to reduce high uncertainty in IMU data. Moreover, in [30], a neural network framework was proposed to handle laser scan data and IMU sensor data together for mobile robot localization. For feature extraction in the method, a stack of two laser scans and a IMU data sequence between the two laser scans were passed through CNN and LSTM, respectively. Then, another LSTM regressed the 3-DoF robot pose from the fused feature.

III Method

III-A Self-attention

Since Transformer [14] made a great success in the literature on natural language process, its self-attention, one of the essential elements in Transformer, has been utilized in many studies for computer vision applications. In particular, it was shown in [11] that a self-attention on CNN features was effective in camera pose regression. We use the same self-attention, but its input consists of image features computed from an input image and point cloud features computed from an input 2D point cloud. The input of the self-attention , which is a column vector, is first projected to generate query , key , and value by three learnable projections. Then, the value is weighted based on the normalized correlations between the query and the key. The correlations are calculated using the softmax function , and these procedures can be represented as

Here, is the output of scaled dot-product attention in [14]. The output of the self-attention is computed based on a linear projection of and a residual connection as

According to [11], it was illustrated that this self-attention makes activation more intense in fixed objects like buildings and furniture in its input image than in dynamic ones like moving vehicles and pedestrians, which leads to robust relocalization. It was also shown that the self-attention is effective in capturing the correlations among the elements of in camera relocalization as well as in other computer vision applications [31], [32].

III-B Camera-LiDAR fusion for relocalization with multi-head self-attention

The proposed network consists of two feature extraction modules, an MHSA module, and a regression module as shown in Fig. 1. Receiving two types of data obtained from different sensors, an image captured by a camera and a point cloud captured by a 2D LiDAR, it provides a robot pose, which consists of a position and an orientation . We assume that they are synchronized in time.

At first, in the feature extraction modules, the image and point cloud features are computed from the image and the point cloud, respectively. The image feature is computed by the feature extractor of AtLoc [11], which consists of a CNN based on ResNet-34 [17] followed by the self-attention module described above. On the other hand, the point cloud feature is computed by the feature extractor of the PointLoc [12], which consists of the set abstraction layers, the self-attention layer, and the group all layer. Except for the self-attention layers, the other layers in the network were presented in PointNet++ [24], which was proposed for 3D point cloud classification and semantic segmentation. Since the input point cloud is two-dimensional, not three-dimensional, we modified the feature extraction module so that it receives a 2D point cloud as input. As in a CNN where a basic block consisting of convolution, nonlinear activation, and pooling operations is consecutively performed, several successive set abstraction layers extract features from a 2D LiDAR scan to give a feature matrix. The self-attention layer in PointLoc is slightly different from the one in AtLoc described above. In the layer, a weight matrix is computed by passing the feature matrix through a shared multi-layer perceptron (MLP) layer, a sigmoid function, and a broadcasting operation. Then, the output of the self-attention layer is obtained by multiplying the feature matrix and the weight matrix element-wise. Note that the input and output of the self-attention layer in PointLoc also have the same dimension as in AtLoc. The group all layer takes the output of the self-attention layer as its input and provides the point cloud feature by conducting an MLP and max-pooling operations. More details are referred to as [12].

From the perspective of sensor fusion, it may be important to make data measured from different sensors interact with each other. For the relocalization using both camera and 2D LiDAR, the most straightforward way to use their data together in a network is to combine the two features by summation or concatenation as in [33], [34], [35] and perform a pose regression using the combined feature, which is called the fusion feature below. In our network, we chose concatenation to make the fusion feature from the image and point cloud features because summation is impossible but concatenation allows them to have different dimensions. However, we figured out from many experiments that those simple concatenation is not enough to effectively fuse different information obtained from the two sensors. To overcome this limitation, we propose to apply additional MHSA as shown in Fig. 2 to the fusion feature. Since the self-attention effectively captures the correlations between its input elements, it can allow different information contained in and to interact with each other. In other words, we utilize the MHSA module for information fusion.

In [14], MHSA was presented to capture different correlations among input elements by performing several scaled dot-product attentions in parallel. To this end, the outputs of all attentions are integrated and a linear projection is performed as

where is the output of the -th scaled dot-product attention, is the number of the attention heads, and is the concatenation of . In this operation, each attention is scaled by a scaling factor so that its output has the same dimension as the input. Like the Transformer encoder in [14], a normalization layer is applied before MHSA, and a residual connection is attached after MHSA. We employ batch normalization (BN, [36]) instead of layer normalization (LN, [37]) different from [14]. It was demonstrated in [36] that LN is more effective than BN for recurrent networks. However, we find out from experiments that BN is more effective than LN in this work. Also, we do not use the positional encoding, another input of the Transformer encoder, because the order of elements in the fusion feature is not important in this task, unlike a sequence. This MHSA block with identical architecture repeats times as in [14]. It will be demonstrated in experiments that the repetition of MHSA improves the accuracy of the relocalization based on the camera-2D LiDAR fusion.

Finally, the regression module predicts the pose, the position and the orientation from the output of the MHSA module. It consists of a position branch and an orientation branch as in [11], [12]. Each branch is composed of consecutive MLPs. In [12], a leaky ReLU activation function was used after each MLP except for the last one in its regression head, but we replace it with the ReLU activation function in our network. Different from most of the previous studies for end-to-end relocalization, both the position and the orientation are two-dimensional under the assumption that typical serving robots move on planar space. To take into account the continuity of the rotation angle [38], we present the rotation as rather than . To our best knowledge, this work is the first study addressing the end-to-end relocalization for a serving robot based on the camera-2D LiDAR fusion in two-dimensional planar space.

IV Experiments

| Dataset | Sequence | Length | Training | Evaluation |

| Set-01 | seq-01 | 394 | ✓ | |

| seq-02 | 374 | ✓ | ||

| seq-03 | 389 | ✓ | ||

| seq-04 | 359 | ✓ | ||

| seq-05 | 429 | ✓ | ||

| seq-06 | 401 | ✓ | ||

| seq-07 | 390 | ✓ | ||

| seq-08 | 404 | ✓ | ||

| seq-09 | 408 | ✓ | ||

| seq-10 | 416 | ✓ | ||

| Set-02 | seq-01 | 4,820 | ✓ | |

| seq-02 | 7,805 | ✓ | ||

| seq-03 | 6,377 | ✓ |

IV-A Dataset

In order to train and evaluate the neural networks for serving robot relocalization, we constructed a dataset using a commercial serving robot Polaris3D ereon as shown in Fig. 3. The dataset contains two sets, Set-01 and Set-02. We gathered the data samples in Set-01 by moving the robot around an area with tables and chairs in our testbed as shown in the left of Fig. 4a. Set-01 originally consisted of a single sequence of 3,964 lengths. We split it into ten shorter ones as in the right of Fig. 4a. Seven sequences and the others among them were used for training and evaluation, respectively. In addition, we collected the data samples for Set-02 by operating the robot in a relatively wider area with long corridors as shown in the left of Fig. 4b. Set-02 consists of three sequences with lengths of 4,820, 7,805, and 6,377 as in the right of Fig. 4b. Two sequences and the other one among them were used for training and evaluation, respectively. Table I summarizes the ereon dataset described above.

As shown in Fig. 3, ereon has two cameras, Intel RealSense D435 and one 2D LiDAR, SLAMTEC RPLiDAR A1M8. The cameras are installed at the side of the upper and lower serving tray, and the LiDAR is mounted in the center of the drive unit located under the lower serving tray. In order to capture the whole body of people around the robot, the lower and upper cameras face upwards and downwards, respectively, rather than facing straight ahead. We gathered images and point clouds obtained from the lower camera and the LiDAR because the upper camera was affected by vibration during robot movement. The obtained RGB images have the size of 420240 pixels with a frequency of 1.5 Hz. It may be seen that the image resolution and the saving frequency are low. The serving robot is equipped with a single-board computer (SBC) instead of a PC or a laptop due to the unit cost of production. It is not easy in practice to capture and store high-resolution images and 2D LiDAR point clouds at a high frequency on the SBC performing a localization to obtain ground truth pose information mentioned below. The LiDAR sensor captures 2D point clouds with a range radius of up to 12 meters (m) and a field of view of 360 degrees (∘). Although it can measure point clouds at a frequency of 8 Hz, we acquired only the point clouds synchronized with images. Since the angular resolution of the LiDAR sensor is equal to or greater than 0.313∘, the number of 2D points in a point cloud is up to about 1,150. Fig. 5 shows the example RGB images and 2D point clouds captured by our sensors. Together with the sensor data, the robot poses corresponding to images and point clouds are necessary to train and evaluate relocalization algorithms. To do this, we first constructed a map of our testbed using a 2D LiDAR-based SLAM technique and then measured the poses on the constructed map by its localization mode using only the 2D LiDAR. Since the SBC in ereon has not powerful as mentioned above, 2D LiDAR-based mapping and localization is a better solution than visual SLAM and camera-based localization for real-time navigation. The localization mode provides a 6-DoF pose with the quaternion representation for orientation. However, we used the x- and y-axis values for position and the yaw angle value for orientation under the consideration that typical serving robots operate in a flat environment. Given a quaternion , the yaw angle is calculated as

| Layer name | Point num. | Radius | Sample num. | MLP |

| SA1 | 256 | 0.2 | 32 | [16, 16, 32] |

| SA2 | 128 | 0.4 | 16 | [32, 32, 64] |

| SA3 | 64 | 0.8 | 8 | [64, 64, 64] |

| AtLoc (Image) | PointLoc (Point cloud) | |||

| or | median | mean | median | mean |

| 256 | 1.03 m, 22.14∘ | 1.48 m, 38.56∘ | 1.77 m, 5.77∘ | 2.38 m, 19.44∘ |

| 512 | 1.03 m, 23.98∘ | 1.49 m, 39.75∘ | 1.44 m, 5.03∘ | 2.07 m, 17.59∘ |

| 1024 | 1.07 m, 24.75∘ | 1.50 m, 40.06∘ | 1.42 m, 4.10∘ | 2.11 m, 16.23∘ |

| 2048 | 1.05 m, 25.72∘ | 1.52 m, 40.44∘ | 1.26 m, 4.20∘ | 1.93 m, 16.20∘ |

IV-B Training details

We implemented and trained our proposed network and other networks presented for the same task under the setting below. The Adam method was employed as the solver or optimizer. The learning rate was set to 0.0001, and the weight decay was determined to be the same value. The networks were trained up to 1000 epochs using the ereon dataset with a batch size of 256 on a single GPU of NVIDIA GeForce RTX 3090. As in AtLoc [11] and PointLoc [12], we adopted distances to measure the dissimilarity between the ground truth pose and the estimated pose in our loss function as

where and are learnable parameters to balance the position and orientation loss terms. Their initial values and were set to and , respectively, as in [11] and [12]. In the above loss function, we employed the distance between the predicted angle and the ground truth angle instead of the distance between two logarithms of their unit quaternions. This replacement came from the dimension of the space in which our robot operates.

| 256 | 512 | 1024 | 2048 | |||||

| median | mean | median | mean | median | mean | median | mean | |

| 256 | 0.95 m, 8.19∘ | 1.37 m, 34.70∘ | 0.96 m, 3.78∘ | 1.38 m, 29.95∘ | 0.99 m, 3.07∘ | 1.39 m, 30.48∘ | 0.83 m, 2.38∘ | 1.19 m, 22.38∘ |

| 512 | 0.97 m, 8.73∘ | 1.45 m, 33.72∘ | 0.89 m, 4.66∘ | 1.34 m, 31.80∘ | 0.98 m, 3.77∘ | 1.38 m, 29.89∘ | 0.87 m, 3.59∘ | 1.35 m, 28.64∘ |

| 1024 | 0.85 m, 7.81∘ | 1.26 m, 32.47∘ | 0.86 m, 5.06∘ | 1.35 m, 31.98∘ | 0.90 m, 3.51∘ | 1.32 m, 29.39∘ | 0.87 m, 3.18∘ | 1.36 m, 27.78∘ |

| 2048 | 0.90 m, 5.11∘ | 1.34 m, 33.43∘ | 0.90 m, 5.83∘ | 1.43 m, 32.27∘ | 0.85 m, 3.72∘ | 1.30 m, 32.56∘ | 0.82 m, 3.51∘ | 1.26 m, 28.22∘ |

For the purpose of comparison, AtLoc and PointLoc were selected as the image- and the point cloud-based baseline methods for end-to-end serving robot relocalization, respectively. We also utilized their networks as backbones for image and point cloud feature extraction. For image feature extraction, as in AtLoc, we scaled the short side of the image to have 256 pixels, then randomly cropped it to 256256 pixels and normalized the cropped image when training. The random cropping was replaced by the center cropping when testing. For data augmentation, the color jittering method was also conducted by setting the brightness, contrast, and saturation values to 0.7 and the hue value to 0.5. The pre-trained ResNet34 with the ImageNet dataset was chosen as the backbone for image feature extraction. We also applied a dropout operation with a probability of 0.5 whereas no dropout was applied when using BN. Table II shows the parameter values of the set abstraction layers in the point cloud feature extractor used in our experiments. We reduced the numbers of layers and neurons in MLP compared to the original PointLoc setting because the 2D point cloud has fewer input points than the 3D point cloud. We also employed ReLU instead of LeakyReLU as the activation function in the fully connected layers. After the feature extractions mentioned above, the image and the point cloud features were concatenated into the fusion feature. Then, the fusion feature was fed into the several MHSA blocks described in the previous section and the final regression head to provide the pose estimate. In these procedures, both the image and the point cloud features could be set to 256-, 512-, 1024-, and 2048-dimensional giving 16 combinations of fusion. Also, we conducted experiments applying MHSA with 1, 2, 4, and 8 heads and 1, 2, 4, and 6 layers.

IV-C Evaluation results on Set-01

Baselines. In the original AtLoc and PointLoc, the image and point cloud features were 2048- and 1024-dimensional, respectively. We first tried to determine the dimensions of the image and point cloud features represented as and , respectively. To do this, we trained the two methods using the data in the seven training sequences in Set-01 in Table I. Then, we measured the median and mean of the position (m) and orientation errors (∘) using the data in each evaluation sequence in the same set for each value of the and . Most previous end-to-end relocalization studies reported only the median errors. However, we report the mean errors with the median errors since the latter can not reflect a few large errors. We computed the averages of the median and mean errors, and Table III shows the average median and mean errors of position and orientation. We can see from the table that AtLoc gives lower position errors than PointLoc whereas PointLoc yields lower orientation errors than AtLoc. Another interesting point is that the tendency between the error values and their feature dimension is opposite, i.e., the error values of AtLoc and PointLoc decrease and increase as their dimension increases, respectively. Also, in the case of position error, AtLoc provided similar error values varying the dimension of the image features, and the difference was up to 0.04 m and 3.58∘ for position and orientation, respectively. However, the error values of PointLoc had a large difference, the maximum of which was 0.51 m and 3.24∘.

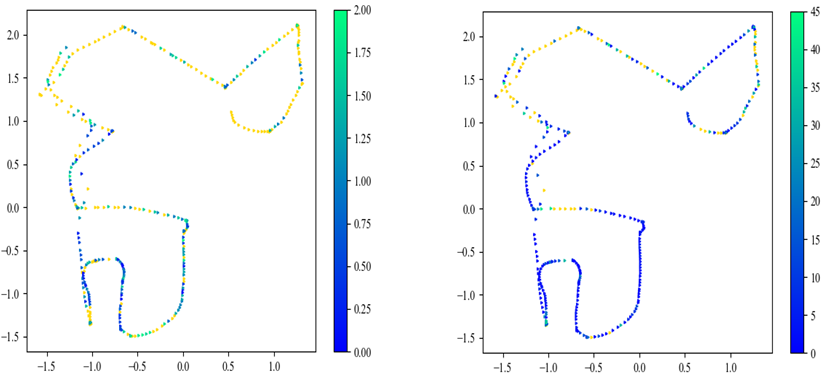

A simple fusion. We present experiments with the fusion feature corresponding to the concatenation of the image and point cloud features extracted by AtLoc and PointLoc without MHSA. Table IV shows the average median and mean errors of position and orientation depending on the fusion combinations, which have different dimensions of the image and point cloud features. Note that the position error decreased to less than 1 m by fusing the image and point cloud features in terms of the average median error. The average median orientation error also maximally decreased to 2.38∘, which was less than the PointLoc’s one. This result clearly shows the benefit of fusing different sensor data. However, the fusion feature still provided a large average mean error of orientation compared to the point cloud features. Additionally, we analyzed these results by visualizing position and orientation errors measured using seq-03 as colors. Fig. 6 shows the position and orientation errors represented as colors. In the figure, the positions of the points indicate the ground truth positions. Also, the yellow indicates outlier values exceeding 2 m in position error and 45∘ in orientation error. Comparing Fig. 6c to Fig. 6a, we can find the points at which orientation error decreased. However, we can also see that some points, e.g., on the top right and the bottom middle of Fig. 6c, still have somewhat large orientation errors compared to the corresponding points in Fig. 6b.

| FusionLoc | (, )=(256, 256) | (, )=(2048, 2048) | |||

| median | mean | median | mean | ||

| 1 | 1 | 0.88 m, 5.23∘ | 1.34 m, 27.06∘ | 0.65 m, 2.10∘ | 1.09 m, 8.47∘ |

| 2 | 0.84 m, 5.22∘ | 1.18 m, 24.01∘ | 0.70 m, 2.15∘ | 1.25 m, 7.80∘ | |

| 4 | 0.78 m, 2.61∘ | 1.19 m, 20.38∘ | 0.62 m, 2.05∘ | 1.13 m, 10.34∘ | |

| 6 | 0.77 m, 2.34∘ | 1.14 m, 17.10∘ | 0.66 m, 1.84∘ | 1.00 m, 8.30∘ | |

| 2 | 1 | 0.91 m, 5.06∘ | 1.36 m, 28.16∘ | 0.61 m, 2.29∘ | 1.37 m, 8.66∘ |

| 2 | 0.81 m, 3.15∘ | 1.21 m, 23.27∘ | 0.66 m, 1.96∘ | 1.35 m, 6.86∘ | |

| 4 | 0.79 m, 2.41∘ | 1.14 m, 17.69∘ | 0.73 m, 1.74∘ | 1.25 m, 7.75∘ | |

| 6 | 0.72 m, 1.95∘ | 1.09 m, 14.19∘ | 0.65 m, 1.83∘ | 1.13 m, 9.87∘ | |

| 4 | 1 | 0.77 m, 4.74∘ | 1.12 m, 25.01∘ | 0.58 m, 1.68∘ | 1.13 m, 8.57∘ |

| 2 | 0.80 m, 3.60∘ | 1.19 m, 23.22∘ | 0.66 m, 2.24∘ | 1.28 m, 11.38∘ | |

| 4 | 0.77 m, 1.93∘ | 1.20 m, 15.30∘ | 0.68 m, 2.03∘ | 1.21 m, 9.25∘ | |

| 6 | 0.74 m, 1.99∘ | 1.15 m, 13.41∘ | 0.63 m, 1.78∘ | 1.16 m, 8.05∘ | |

| 8 | 1 | 0.77 m, 4.89∘ | 1.22 m, 26.37∘ | 0.58 m, 1.52∘ | 0.99 m, 7.25∘ |

| 2 | 0.83 m, 2.82∘ | 1.24 m, 20.69∘ | 0.63 m, 1.89∘ | 1.20 m, 6.63∘ | |

| 4 | 0.81 m, 2.33∘ | 1.20 m, 19.26∘ | 0.60 m, 1.96∘ | 1.09 m, 6.89∘ | |

| 6 | 0.71 m, 2.62∘ | 1.07 m, 13.16∘ | 0.72 m, 2.25∘ | 1.28 m, 9.56∘ | |

FusionLoc. In addition to AtLoc, PointLoc, and the simple concatenation, we finally conducted relocalization experiments by adopting repetitive MHSAs taking the fusion feature as input. As mentioned in the previous section, we applied BN instead of LN to each MHSA block based on the fact that the range of the image feature values was different from that of the point cloud feature values. Moreover, it was mentioned in [37] that LN is not as effective in CNN as in recurrent networks. Fig. 7 shows the trajectories of the estimated positions by FusionLoc with LN and BN using the training sequences seq-01 and seq-10, respectively. This result indicates that BN is more effective than LN to train the proposed network for relocalization. Table V shows the results of some ablation studies on the number of attention heads and the number of MHSA layers in the MSHA module. For efficiency, the position and orientation errors were measured under only the settings of (, )=(256, 256) and (, )=(2048, 2048). When (, )=(2048, 2048), we set the batch size to 64 due to memory limitation. We can find from the table that the errors generally decrease as each of and increases in the case of (, )=(256, 256). Especially, most average mean orientation errors become smaller than those of PointLoc when the number of layers is equal to or greater than 4. This result could not be obtained without the MHSA module as shown in Table IV. We can also see that the position and orientation errors are minimized when =2 and =6 in terms of the average median and when =8 and =6 in terms of the average mean in the case of (, )=(256, 256). Compared to the (, )=(256, 256) without the MHSA module in Table IV, the minimum error values get smaller by (0.23 m, 6.24∘) and (0.3 m, 21.54∘), respectively. The improvement can also be found by comparing Fig. 6c and Fig. 6d. And, they are lower than those of (, )=(2048, 2048) in Table IV though using the lower numbers of image and point cloud features. Moreover, the minimum errors decreased more as the MHSA module was applied to the case of (, )=(2048, 2048). Using the MHSA module made differences by (0.24 m, 1.99∘) in terms of average median error and (0.27 m, 20.97∘) in terms of average mean error when (, )=(2048, 2048).

IV-D Evaluation results on Set-02

In order to validate the proposed method in a larger place, we compared the position and orientation errors using Set-02 data. For efficiency, we set to = = 256. As in the above experiments using the data in Set-01, we trained every neural network aforementioned using the training sequences in Set-02, and we measured the median and mean errors of position and orientation using the evaluation sequence. Fig. 8 shows the median and mean errors of AtLoc, PointLoc, the simple concatenation method, FusionLoc using LN, and FusionLoc using BN, which were computed at every 100 up to 1000 epochs. Different from the results of Set-01 in Table III that AtLoc was better than PointLoc in terms of the position error whereas PointLoc was better than AtLoc in terms of the orientation error, we can see in Fig. 8 that AtLoc provided lower position and orientation errors than PointLoc. This inconsistency may come from the fact that i) Set-02 was collected by making the robot move similar paths multiple times unlike Set-01 as shown in Fig. 4, and ii) the maximum range of the used LiDAR sensor is relatively lower than the area of the place where Set-02 was gathered. Note that using only the fusion feature could decrease the position and the orientation errors if the learning progresses enough. Actually, it yielded a more accurate result than AtLoc by 0.24 m and 1.09∘ in terms of the mean errors at 1000 epochs. We can also see that FusionLoc using LN provided higher errors than the simple concatenation method. However, FusionLoc using BN gave the minimum errors in position and orientation, which were lower than the concatenation method by 0.1 m and 0.5∘ in terms of the mean error at 1000 epochs. It corresponds to 15% and 18% reductions in the mean position and orientation errors. Fig. 9 shows a visualization of the position and orientation errors represented as color using Set-02. We also figure out from the figure that FusionLoc is more effective than the other methods in the relocalization task. In summary, our experimental results demonstrate that MHSA can be an effective solution to fuse the features captured by different sensors, and BN is more appropriate than LN in MHSA for robot relocalization based on the camera-2D LiDAR fusion.

V Conclusions and Future Works

In this paper, we proposed FusionLoc, an end-to-end relocalization method for serving robots based on the fusion of RGB images and 2D LiDAR point clouds. The proposed network performs the pose regression through AtLoc and PointLoc feature extractors, the MHSA module, and the regression module. To evaluate the proposed network, we constructed a dataset by collecting images, 2D point clouds, and robot poses using a commercial serving robot. Conducting relocalization experiments using the dataset, FusionLoc showed better performances than the previous relocalization approaches taking only an image or a 2D point cloud as their input. We observed from the experiments that images and point clouds play a role in complementing the lack of information in each modality. In particular, MHSA was an effective way to make the interaction between different information contained in the image and point cloud. Our fusion method using MHSA can help the serving robot find its current pose with less error when it lost its location based on conventional methods such as adaptive Monte Carlo localization.

From the perspective of dataset, our dataset used in the experiments includes only static scenarios at a single site although the proposed method was demonstrated to be more effective than the previous methods. In future works, we are supposed to supplement the dataset by collecting more data under various variations on dynamic scenarios at several places where the serving robots will be applied. Using the newly collected dataset, we will also try to additionally reduce relocalization errors with vision Transformers which have shown successes for many computer vision tasks.

Acknowledgments

This work was mainly supported by Electronic and Telecommunications Research Institute (ETRI) grant funded by the Korean government [23ZD1130, Development of ICT Convergence Technology for Daegu-Gyeongbuk Regional Industry]. The first author (J. Lee) was supported by the Industrial Strategic Technology Development Program (20009396) funded By the Ministry of Trade, Industry & Energy (MOTIE, Korea).

References

- [1] S. Thrun, W. Burgard, and D. Fox, Probabilistic Robotics. Cambridge, Mass.: MIT Press, 2005.

- [2] Hong, Seonghun and Park, Soon-Yong and Lee, Sejin and Kim, Junho and Park, Jong-Il, “Special issue on recent advancements in simultaneous localization and mapping (SLAM) and its applications,” ETRI Journal, vol. 43, no. 4, pp. 577–579, 2021. [Online]. Available: https://onlinelibrary.wiley.com/doi/abs/10.4218/etr2.12398

- [3] Z. Su, X. Zhou, T. Cheng, H. Zhang, B. Xu, and W. Chen, “Global localization of a mobile robot using lidar and visual features,” in 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO), 2017, pp. 2377–2383.

- [4] A. Kendall, M. Grimes, and R. Cipolla, “PoseNet: A Convolutional Network for Real-Time 6-DOF Camera Relocalization,” in 2015 IEEE International Conference on Computer Vision (ICCV), 2015, pp. 2938–2946.

- [5] A. Kendall and R. Cipolla, “Modelling Uncertainty in Deep Learning for Camera Relocalization,” in 2016 IEEE International Conference on Robotics and Automation (ICRA), 2016, pp. 4762–4769.

- [6] F. Walch, C. Hazirbas, L. Leal-Taixé, T. Sattler, S. Hilsenbeck, and D. Cremers, “Image-Based Localization Using LSTMs for Structured Feature Correlation,” in 2017 IEEE International Conference on Computer Vision (ICCV), 2017, pp. 627–637.

- [7] A. Kendall and R. Cipolla, “Geometric Loss Functions for Camera Pose Regression with Deep Learning,” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 6555–6564.

- [8] R. Clark, S. Wang, A. Markham, N. Trigoni, and H. Wen, “VidLoc: A Deep Spatio-Temporal Model for 6-DoF Video-Clip Relocalization,” in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 2652–2660.

- [9] I. Melekhov, J. Ylioinas, J. Kannala, and E. Rahtu, “Image-Based Localization Using Hourglass Networks,” in 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), 2017, pp. 870–877.

- [10] S. Brahmbhatt, J. Gu, K. Kim, J. Hays, and J. Kautz, “Geometry-Aware Learning of Maps for Camera Localization,” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2018, pp. 2616–2625.

- [11] B. Wang, C. Chen, C. Xiaoxuan Lu, P. Zhao, N. Trigoni, and A. Markham, “AtLoc: Attention Guided Camera Localization,” vol. 34, no. 06, Apr. 2020, pp. 10 393–10 401.

- [12] W. Wang, B. Wang, P. Zhao, C. Chen, R. Clark, B. Yang, A. Markham, and N. Trigoni, “PointLoc: Deep Pose Regressor for LiDAR Point Cloud Localization,” IEEE Sensors Journal, vol. 22, no. 1, pp. 959–968, 2022.

- [13] A. H. Lang, S. Vora, H. Caesar, L. Zhou, J. Yang, and O. Beijbom, “PointPillars: Fast Encoders for Object Detection From Point Clouds,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 12 689–12 697.

- [14] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is All you Need,” in Advances in Neural Information Processing Systems, I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, Eds., vol. 30. Curran Associates, Inc., 2017.

- [15] R. Arandjelovic, P. Gronat, A. Torii, T. Pajdla, and J. Sivic, “NetVLAD: CNN Architecture for Weakly Supervised Place Recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 5297–5307.

- [16] D. G. Lowe, “Distinctive Image Features from Scale-Invariant Keypoints,” International Journal of Computer Vision, vol. 60, pp. 91–110, 2004.

- [17] K. He, X. Zhang, S. Ren, and J. Sun, “Deep Residual Learning for Image Recognition,” in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 770–778.

- [18] Szegedy, Christian and Wei Liu and Yangqing Jia and Sermanet, Pierre and Reed, Scott and Anguelov, Dragomir and Erhan, Dumitru and Vanhoucke, Vincent and Rabinovich, Andrew, “Going Deeper with Convolutions,” in 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015.

- [19] Z. Laskar, I. Melekhov, S. Kalia, and J. Kannala, “Camera Relocalization by Computing Pairwise Relative Poses Using Convolutional Neural Network,” in 2017 IEEE International Conference on Computer Vision Workshops (ICCVW), 2017, pp. 920–929.

- [20] V. Balntas, S. Li, and V. Prisacariu, “RelocNet: Continuous Metric Learning Relocalisation Using Neural Nets,” in Proceedings of the European Conference on Computer Vision (ECCV), V. Ferrari, M. Hebert, C. Sminchisescu, and Y. Weiss, Eds. Cham: Springer International Publishing, 2018, pp. 782–799.

- [21] H. Hu, A. Wang, M. Sons, and M. Lauer, “ViPNet: An End-to-End 6D Visual Camera Pose Regression Network,” in 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), 2020.

- [22] K. Zhou, C. Chen, B. Wang, M. R. U. Saputra, N. Trigoni, and A. Markham, “VMLoc: Variational Fusion For Learning-Based Multimodal Camera Localization,” vol. 35, no. 7, May 2021, pp. 6165–6173.

- [23] W. Wang, M. R. U. Saputra, P. Zhao, P. Gusmao, B. Yang, C. Chen, A. Markham, and N. Trigoni, “DeepPCO: End-to-End Point Cloud Odometry through Deep Parallel Neural Network,” in 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019, pp. 3248–3254.

- [24] C. R. Qi, L. Yi, H. Su, and L. J. Guibas, “PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space,” in Advances in Neural Information Processing Systems, I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, Eds., vol. 30. Curran Associates, Inc., 2017.

- [25] K. Fischer, M. Simon, S. Milz, and P. Mäder, “StickyLocalization: Robust End-To-End Relocalization on Point Clouds using Graph Neural Networks,” in 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), 2022, pp. 307–316.

- [26] B. Kaleci, K. Turgut, and H. Dutagaci, “2DLaserNet: A deep learning architecture on 2D laser scans for semantic classification of mobile robot locations,” Engineering Science and Technology, an International Journal, vol. 28, p. 101027, 2022. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S2215098621001397

- [27] S. Herath, H. Yan, and Y. Furukawa, “RoNIN: Robust Neural Inertial Navigation in the Wild: Benchmark, Evaluations, & New Methods,” in 2020 IEEE International Conference on Robotics and Automation (ICRA), 2020, pp. 3146–3152.

- [28] S. Sun, D. Melamed, and K. Kitani, “IDOL: Inertial Deep Orientation-Estimation and Localization,” vol. 35, no. 7, May 2021, pp. 6128–6137.

- [29] S. Herath, D. Caruso, C. Liu, Y. Chen, and Y. Furukawa, “Neural Inertial Localization,” in 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022.

- [30] C. Li, S. Wang, Y. Zhuang, and F. Yan, “Deep Sensor Fusion between 2D Laser Scanner and IMU for Mobile Robot Localization,” IEEE Sensors Journal, vol. 21, no. 6, pp. 8501–8509, 03 2021.

- [31] J. Fu, J. Liu, H. Tian, Y. Li, Y. Bao, Z. Fang, and H. Lu, “Dual Attention Network for Scene Segmentation,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 3141–3149.

- [32] Y. Yu, Y. Xiong, W. Huang, and M. R. Scott, “Deformable Siamese Attention Networks for Visual Object Tracking,” in 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2020, pp. 6727–6736.

- [33] A. Oertel, T. Cieslewski, and D. Scaramuzza, “Augmenting Visual Place Recognition With Structural Cues,” IEEE Robotics and Automation Letters, vol. 5, no. 4, pp. 5534–5541, 2020.

- [34] Y. Pan, X. Xu, W. Li, Y. Wang, and R. Xiong, “CORAL: Colored structural representation for bi-modal place recognition,” 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 2084–2091, 2021.

- [35] J. Komorowski, M. Wysoczanska, and T. Trzcinski, “MinkLoc++: Lidar and Monocular Image Fusion for Place Recognition,” in International Joint Conference on Neural Networks (IJCNN). IEEE, July 2021.

- [36] S. Ioffe and C. Szegedy, “Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift,” in Proceedings of the 32nd International Conference on International Conference on Machine Learning (ICML), vol. 37, 2015, pp. 448–456.

- [37] J. L. Ba, J. R. Kiros, and G. E. Hinton, “Layer Normalization,” 2016. [Online]. Available: https://arxiv.org/abs/1607.06450

- [38] Y. Zhou, C. Barnes, J. Lu, J. Yang, and H. Li, “On the Continuity of Rotation Representations in Neural Networks,” in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 5738–5746.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/194ef05e-878e-4d8f-96fc-30cc90159d4b/Leej.png) |

Jieun Lee received the B.E., M.E., and Ph.D degrees in department of electrical and computer engineering from Ajou University, Korea, in 2009, 2011, and 2019, respectively. From September to December in 2019, she was a Researcher in Advanced Institute of Convergence Technology, Korea. Since October 2021, she has been a Post Doctoral Researcher in Electronics and Telecommunications Research Institute (ETRI), Korea. Her research interests include computer vision, machine learning, robot perception, and their applications. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/194ef05e-878e-4d8f-96fc-30cc90159d4b/Leeh.jpg) |

Hakjun Lee received the B.S. degree in electrical engineering from Chungbuk National University, Cheongju, South Korea and the M.S. and Ph.D. degrees in electrical engineering from the Pohang University of Science and Technology (POSTECH), Pohang, South Korea, in 2014, 2016, and 2020, respectively. He was a Post Doctoral Researcher in POSTECH from Sep. 2020 to Apr. 2021. He is currently a Senior Researcher with Polaris3D Company, Ltd., Pohang, South Korea. His research interests include service robot, robust control, and navigation system. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/194ef05e-878e-4d8f-96fc-30cc90159d4b/oh.png) |

Jiyong Oh (M’08) received the B.S. degree from the School of Electronic Engineering, Ajou University, Korea in 2004 and the M.S. and Ph.D. degrees from the School of Electrical Engineering and Computer Science, Seoul National University, Korea in 2006 and 2012, respectively. He was a Post Doctoral Researcher in Sungkyunkwan and Ajou University, Korea in 2012 and 2013, respectively. From Sept. 2013 (March 2015) to March 2015 (May 2016), he was a Research Fellow (BK Assistant Professor) in the Graduate School of Convergence Science and Technology, Seoul National University, Korea. Since June 2016, he has been a Senior Researcher in Electronics and Telecommunications Research Institute (ETRI), Korea. His research interests include computer vision, machine learning, robot perception, and their applications. |