Functional differentiations in evolutionary reservoir computing networks

Abstract

We propose an extended reservoir computer that shows the functional differentiation of neurons. The reservoir computer is developed to enable changing of the internal reservoir using evolutionary dynamics, and we call it an evolutionary reservoir computer. To develop neuronal units to show specificity, depending on the input information, the internal dynamics should be controlled to produce contracting dynamics after expanding dynamics. Expanding dynamics magnifies the difference of input information, while contracting dynamics contributes to forming clusters of input information, thereby producing multiple attractors. The simultaneous appearance of both dynamics indicates the existence of chaos. In contrast, sequential appearance of these dynamics during finite time intervals may induce functional differentiations. In this paper, we show how specific neuronal units are yielded in the evolutionary reservoir computer.

One of the universal characteristics of the brain is functional differentiation, where each elementary unit of the brain, that is, a neuron or a neuron assembly, plays a role in a respective specific function. The emergence of functional differentiation in the brain depends not only on gene expressions but on self-organization with constraints. Here, constraints may stem from physical, chemical, and even informational factors that develop fetal brain in its developmental process. In this paper, we propose an artificial neural network that realizes functional differentiation. In particular, we focus on computation ability of an extended reservoir computer, introducing evolutionary dynamics, by which parameters such as the connection weights of an internal network can be changed and optimized for a given constraint. In such an evolutionary reservoir computer, we found that neurons differentiated to respond to specific input stimulations, where we used spatial and temporal patterns as representations of visual and auditory stimulations, respectively. In this dynamic development, the network topology changed from a random network to a feedforward network including feedback connections. The development of this type of network structure is consistent with animal and human cortical local network structures. Although the structure of the present system and its dynamical rule are different from those in Rössler’s optimization system, both converged dynamics are similar, in the sense that an optimized solution adapts to the environment.

I Introduction

Functional differentiations have occurred in animal and human brains in biological evolution associated with structural changes of the brain networks Sporns and Betzel (2016); Glasser et al. (2016); Treves, Skaggs, and Barnes (1996); Sharma, Angelucci, and Sur (2000). Furthermore, in the cerebral cortex, more than 180 different areas were recently identified using modern imaging technology, where assemblies of multiple modules temporarily emerged depending on given tasks via cooperative dynamics across such assemblies, and this process is called functional parcellation Glasser et al. (2016). In contrast, similar correlated behaviors between modules have also been observed in resting states, in association with transition dynamics of neural activity between functional areas Biswal et al. (1995); Greicius et al. (2003); Fox et al. (2005). This is known as transitions between default modes. The questions have been presented regarding why and how functional differentiation and/or functional parcellation occurred. However, only a few theoretical studies have been proposed thus farVon der Malsburg (1973); Amari (1980); Kohonen (1982), and the underlying basic principle is still unclear. In this paper, we use the term functional differentiations as a generic term expressing both functional differentiation and functional parcellation.

Clarifying the computational principle of functional differentiations leads not only to a fundamental understanding of the brain’s functions but also to a promising direction of research for artificial neural networks that possess a flexible and adaptive structure. The study of such artificial networks may provide a better design for artificial intelligence and roboticsLe (2013); Asada et al. (2001). It is also known that genetic factors do not entirely determine the form of differentiationsSharma, Angelucci, and Sur (2000). In the present paper, we treat functional differentiations from the perspective of self-organization in the brain, whereby the computational principle of functional differentiations can be clarified.

We have proposed the self-organization with constraints concept for describing functional differentiations in the brain and studied several mathematical models Tsuda, Yamaguti, and Watanabe (2016). In this study, we observed the emergence of system components (i.e., elements) caused by a given constraint that acts on a whole system. In Ref. Yamaguti and Tsuda, 2015, using a genetic algorithm, we studied how heterogeneous modules develop in the networks consisting of coupled phase oscillators of the modified Kuramoto modelKuramoto (1984). In that study, the constraint was provided by maximizing the bidirectional information transmission between two subnetworks. Starting from the random networks of phase oscillators, two functionally differentiated modules evolved. The emergence of the differentiated modules from random and homogeneous networks may be caused by symmetry breaking via chaotic behaviors of the network.

As a further extended study about self-organization with constraints, here we treat the functional differentiation of networks in terms of a biologically inspired model that can operate cognitive tasks. We also investigate what constraints can be appropriate for the emergence of functional differentiations. The present model is based on reservoir computersMaass, Natschläger, and Markram (2002); Jaeger and Haas (2004); Dominey, Arbib, and Joseph (1995).

Reservoir computers were proposed as a kind of recurrent neural network (RNN) and were considered as computational models of cerebellum cortexYamazaki and Tanaka (2007) and neocortexEnel et al. (2016) because of similar network structures to microcircuits in the brain, such as random and sparse connections. Unlike other RNNs and deep neural networks, only synaptic weights to output units (called readout units) are modified, and recurrent synaptic weights remained unchanged throughout the learning process. This scheme drastically speeds up the supervised learning process compared with back-propagation-based methods. Furthermore, fully developed chaos in the internal network produces poor performance, but several studies have reported that the ”edge of chaos”, or weakly chaotic states of the internal network, can bring about effective performance for given tasks Bertschinger and Natschläger (2004); Sussillo and Abbott (2009); Hoerzer, Legenstein, and Maass (2014). However, other studies argue that the importance of the edge of chaos does not always hold Laje and Buonomano (2013); Carroll (2020). It may be more essential for certain tasks, such as short-term memory, that the system state is at the ”edge of stability” Carroll (2020); Yamane et al. (2016), where the largest Lyapunov exponent of an attractor is near zero. Examples of information processing in neural networks using such neutral stability have been discussed in, for example, Refs. Mante et al., 2013; Yamane et al., 2016.

As stated in the following paragraphs, we treat optimization problems by introducing evolutionary dynamics. Using an optimization method, the complexity of network dynamics is reduced by the appearance of fixed-point states in the case without inputs, and then the network dynamics is easily entrained by the input dynamics, thereby adapting to environments. Coupled optimizers of RösslerRössler (1987) provide a typical example of the appearance of fixed-point dynamics in relative dynamic behaviors between controlled and controlling systems, while overall dynamics appears to be weakly chaotic states.

In conventional studies of reservoir computers (RCs), the internal network, which plays a role in a reservoir, is constructed by random connections of neuronal units. However, recently, the study of different types of network topology such as small-world network is attracting attention because such a network provides effective performanceKawai, Park, and Asada (2019); Park et al. (2019). Accordingly, we here study the functional differentiations of RCs that can perform multiple tasks, by exploring an optimized network. In this respect, we propose an extended model of RCs, by adopting genetic algorithms to such an internal recurrent network, which we call evolutionary RCs (ERCs). In an optimization problem in an ERC, we combined an evolutionary algorithm, which modifies the structure of recurrent networks, with a supervised learning algorithm, which changes the output weights.

In the present study, we consider a network that receives combined signals coming from multiple sensory modalities, such as visual and auditory sensations, which were encoded by spatial and temporal patterns, respectively. The task of the network is to separate the spatial and temporal patterns from the combined input signals. The performance of the network is evaluated after determining the output weights. Using an evolutionary algorithm, we investigate a network structure that realizes high task performance. From the perspective of functional differentiations, the internal structure of the developed network was studied, using mutual information analysis.

The organization of the paper is as follows. In Section II, we describe the mathematical model, tasks, genetic algorithm, and the method of network analysis. In Section III, we show how ERCs can solve the given tasks by changing internal network structures. The information mechanism of functional differentiations in neuronal units of the networks is clarified using mutual information analysis, and the parameter dependence on the functional differentiations is also shown. Section IV is devoted to summary and discussion.

II Model and Method

II.1 Network architecture

We extended RCs Dominey, Arbib, and Joseph (1995); Maass, Natschläger, and Markram (2002); Jaeger and Haas (2004), adopting the optimization principle via evolutionary dynamics to the internal random recurrent networks, called ERCs. We employed a continuous-valued, discrete-timestep model of ERC. The network consists of neurons, whose dynamics is described by

| (1) | |||||

where is a state of the -th neuron at time , and are weights of recurrent connections and weights of connections to an input signal , respectively, and is a constant term. In addition, represents a decay constant, and is a noise term. The initial state of was randomly taken from a uniform distribution over . The network consisting of these neurons was constructed with sparse connections, such that only 10% of synaptic connections on the network were nonzero.

Because of effective computations on differentiations, we used the following additional structure of internal networks. The internal networks were divided into two groups of neurons that construct an input and an output layer within internal networks, unlike a standard RC that is constructed by homogeneous random networks. Neurons in the input layer receive input signals but have no direct connections to output units, called readout units. In contrast, neurons in the output layer do not receive input signals directly but have direct connections to the readout units (Fig. 1).

The model was trained to perform the discrimination task of two different kinds of patterns: simultaneous discrimination of spatial and temporal patterns. Correspondingly, the model possesses two distinct output vectors, spatial and temporal vectors. Each output vector was determined by a linearly weighted sum of the internal states, as shown in the following equation.

| (2) |

where (*) indicates spatial or temporal patterns, are indices of readout units and are weights for readout. In the present simulation, output weights are determined, using ridge regression. For input patterns denoted by an asterisk, the following loss function was minimized:

| (3) |

where the first term indicates the loss stemming from the differences between output and target patterns during a training period of length and the second term indicates the loss from the weight regularization.

II.2 Task setting

II.2.1 Separation Task

To represent a simultaneous input of visual and auditory patterns, we considered the product of spatial and temporal patterns as inputs. The input was represented by an -dimensional signal . We predefined finite sets of spatial patterns and temporal patterns . Then, for every time period , we multiplied randomly selected temporal and spatial patterns to generate the input signal. Spatial patterns represent static components in sensory inputs, which we realized as square waves by the following equation:

Temporal patterns were represented as one-dimensional sinusoidal signals:

where . Here, let and be the indices of spatial and temporal patterns at time , respectively. Thus, when the -th spatial pattern and -th temporal pattern at time are presented, the input signal of the -th unit, in Eq. (1) is described by . The combination of spatial and temporal patterns, , was randomly switched for each step. In the present paper, the computational results are shown in the case of .

The task of ERCs is to discriminate spatial and temporal patterns and classify each pattern correctly at the readout units. The network was trained such that the -th unit of the spatial and the -th unit of the temporal readout units exhibit ones, and the other neuronal units exhibit zeros. Considering the delay from the input to the output layers, the input pattern of four steps ago was set as a teacher signal, i.e., in Eq. (3) takes if and otherwise. The same rule was applied to . To perform this kind of task, the network needs short-term memory and nonlinear operations. The accuracy of network learning was calculated as a proportion of time such that the readout unit taking the maximum value at each time coincides with the correct index .

II.2.2 Combination Task

For comparison with the presented performance of the network, we tried to evolve the network with another task called the combination task. The task was detecting the specific combinations of spatial and temporal inputs. In the previous task, processing two information sources after separating such sources was necessary, while the present task needs processing of combined information of two sources. We used the same input patterns as the previous task. The same number of readout units as the spatial (and temporal) readout units in the separation task () was used for fair comparison between these two conditions. In supervised learning at readout units , , we changed the connection weights to detect some specific combinations of spatial and temporal patterns. In more detail, let be a set of combinations of spatial and temporal patterns, which the -th readout unit should detect. Furthermore, each combination is included in either set of . An example of this allocation of a combination of patterns to readout units is shown in Fig. 7. Considering the time delay in a similar manner of the case of a separation task, the teacher signals takes 1 only when , and otherwise 0. In the evolution experiment, the network size and other system parameters were the same as in the separation task. The combination of was randomly allocated in each generation.

II.3 Genetic algorithm

For the presented tasks, reservoir computing networks with random internal networks, which belong to conventional RCs, did not show good performance, even after the output weights were learned using the ridge regression. This implies that the structure of internal networks must be changed for such tasks. This is a reason why we introduced a genetic algorithm for changing the internal networks and extended conventional RCs to evolutionary ones. For the first time, we prepared an initial ensemble of randomly connected networks, and second, we performed the following procedures (1) to (3) for each network.

-

(1)

Collection of data for learning. Input a pattern sequence consisting of spatial and temporal patterns, and continue to update internal states according to Eq. (1), and discard the first transient data.

-

(2)

Learning period. Perform supervised learning of the readout weights with ridge regression, using the internal states and teacher signals collected in (1).

-

(3)

Test period. Update the states of both internal and readout units, and then calculate the root mean square of errors between teacher signals and outputs for both spatial and temporal patterns. Then, evaluate Eq.(4).

For all networks, the following procedure (4) was performed.

-

(4)

Evolutionary period. Among all networks, select networks with smaller errors for which the next generation of the networks were produced using mutation and crossover.

In the present simulation, the targets for mutation and crossover are the weights of recurrent connections and decay constants, . Mutation of the weights of recurrent connections was performed, randomly reconnecting % of the total connections and adding Gaussian noise, in a randomly selected 40% of the weights of all internal connections. Decay constants were also changed by randomly adding Gaussian noise, , where the upper and lower cutoffs of were commonly given as [. As for crossover, we randomly selected two survived networks and constructed one new network, where a half connection of a new network stems from a randomly chosen half connection of one survived network and the other half connection from the other survived network. For each generation, we repeated (1) to (4), and the selected networks show high adaptability to the given task. As a constraint for evolutionary dynamics, the following loss function was adopted, which indicates the summation of mean squared errors of the activity of all readout units, based on the teacher signals for both spatial and temporal patterns. Here, an average was taken over both of all readout units and a test period of length .

| (4) | |||||

II.4 Mutual information analysis

To clarify an indication of the functional differentiation of neurons, we adopted mutual information between neural activity in the internal network and teacher signals. Mutual information was calculated for spatial and temporal patterns, separately according to the following steps; Discretize neuronal states by equally dividing the interval between its minimum and maximum values into states, which are represented by . Then, calculate the joint-probability distributions and , whose distributions provide the basis of the calculation of mutual information for spatial and temporal patterns defined in the following manner.

III Computation Results

III.1 Learning of task by evolution

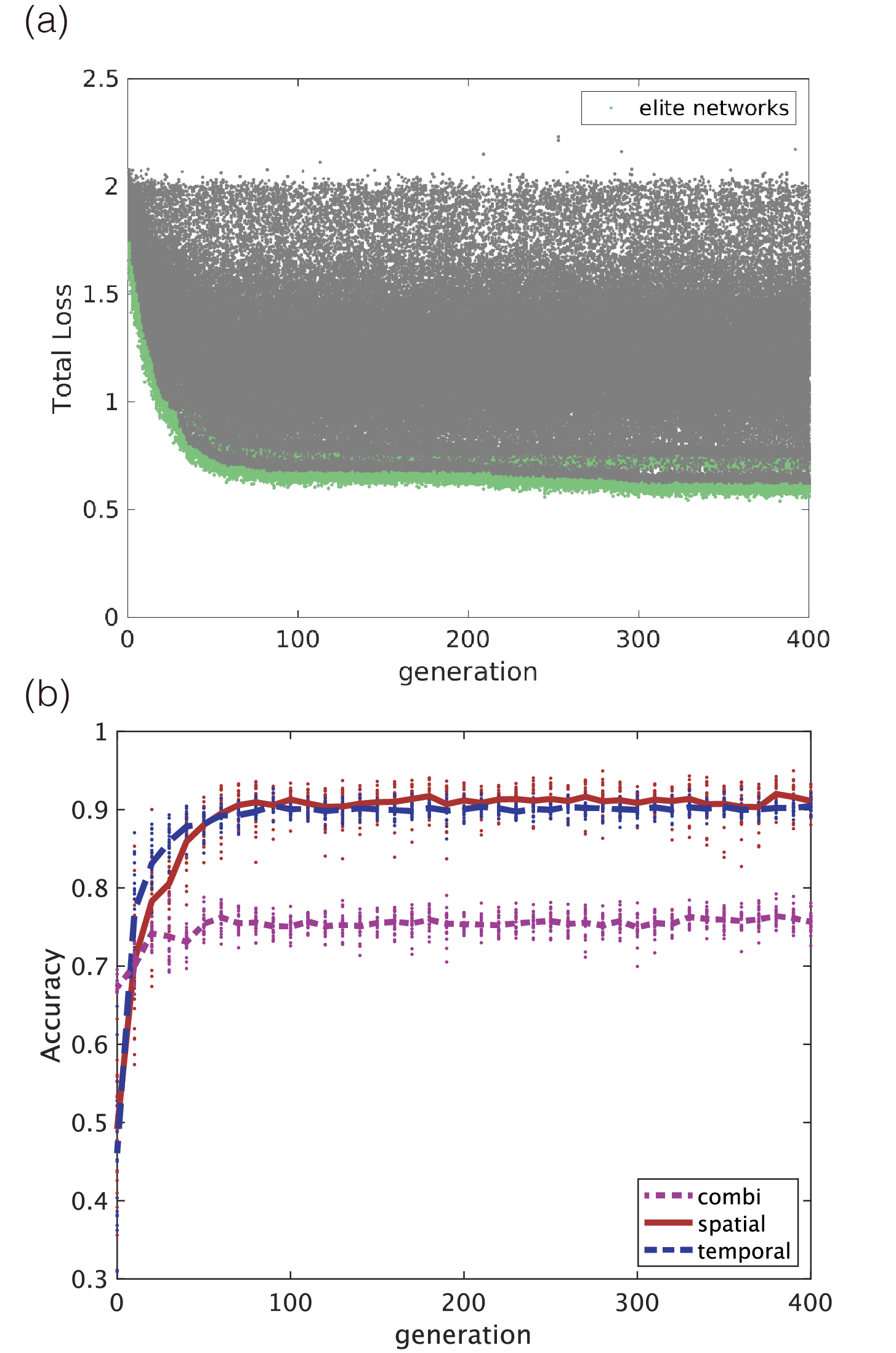

Computation results of evolutionary dynamics showed high accuracy for both spatial and temporal patterns, which were around 90 in the case that the number of neuron was (Fig. 2(b)). The process of evolution is shown in Fig. 2(a). Although the performance of the early networks was low, it rapidly improved during the first hundred generations, thereby converging to a certain equilibrium state.

In this experiment, the objective function depends on the discriminations independently performed for spatial and temporal patterns but independent of the performance of the combination task. Nevertheless, the network performance can be estimated through learning only the readout units for each generation, because a common feature of RCs is that multiple-readout units can learn in parallel. In Fig. 2(b), the performance of the network in the case that the combination task was learned by ridge regression for each network, and each generation was also indicated by the purple dotted line and dots. Because the network evolution was not optimized for the combination task, the network performance had an accuracy of around 70%.

We show the output time series for spatial and temporal patterns at initial and evolved networks in Fig. 3. The evolved network successfully outputs correct signals for the classification of spatial and temporal patterns, except for the first few steps after exchanging the inputs. Evolutions of spectral radii of the recurrent weight matrix and the distribution of decay constant are discussed in Appendix B.

III.2 Differentiation of neurons and the change of network structure

To investigate functional differentiation of each neuron through evolution, we examined mutual information between the state of neuron and spatial patterns , and between and temporal patterns . If two quantities are negatively correlated over neuron ensembles, this implies the existence of many differentiated neurons that primarily represent only one type of information. The correlation coefficient between the two mutual information values in the input layer was about , indicating little change with evolution, and variance between trials was large (Fig. 4(a)). In contrast, correlation in the output layer significantly decreased in early evolution stages, when classification performance was improving, finally reaching a trial average of about (Fig. 4(a)). Focusing on the output layer that showed a negative correlation, we made histograms to approximate the joint probability distribution of the two mutual information values and in the output layer neurons (Fig. 4(b)). Compared with the initial networks, the distribution extends to the upper left and lower right after evolution. In particular, neurons with higher increased in the evolved networks. This result implies the appearance of the functional differentiation of the neural specificity of sensory information. To test robustness of the emergence of functional differentiation, we conducted two additional experiments: one using spatiotemporal patterns in which every component has a different oscillatory phase and another using a different set of frequencies. Appendix B presents the results.

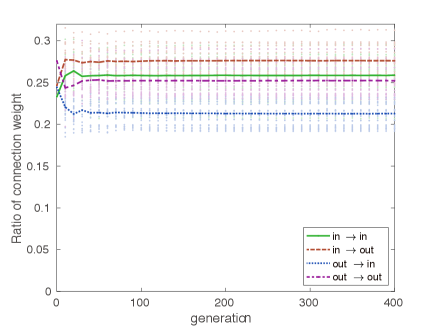

We also investigated how the network structure changed from a random network after evolutionary optimization was established. We found that the feedforward connections from the input layer to the output layer in the internal network were strengthened much more than the other connections (Fig. 5). Furthermore, the feedback connections from the output to the input layer were rather weakened, and were also weakened for internal feedback connections in both the input and output layers. We estimated the effective connectivity of the network type for the feedforward or feedback type of networks by such connection strength. The ratio of effective connectivity of the feedback to the feedforward networks varied depending on the system size : about 2:3 at , and 3:4– 6:7 at . This tendency of the reduction of feedback connections relative to the feedforward connections does not contradict the structure of cortical local connections that was recently found by Seeman et al.Seeman et al. (2018), where the ratio of the number of connections was reported as 1:10 for mouse primary visual cortex and 1:5– 1:7 for human frontal and temporal cortices. Furthermore, the evolved structure shown in the present ERC is similar to the evolutionary change of the network structure of hippocampus. In the biological evolution from reptiles to mammals, the hippocampus evolved from rather randomly uniform networks consisting of a small-cell and a large-cell layer to differentiated feedforward networks including feedback connections Treves (2004). It is also known in mammals that some amount of feedback connections exist in CA3, whereas only a few feedback connections exist in CA1. Thus, the ratio of feedback connections to feedforward connections in the mammalian hippocampus looks consistent with our findings in ERCs. However, it is questionable if the constraint adopted here was used in biological evolution. In biological evolution, sexual and social constraints as well as natural selections would have been more significant than the information constraint adopted here.

The structural changes described may induce functional changes. It is known that uniformly random networks in reptiles give rise to a simple memory, which can be realized by a single association. In contrast, RNNs with inhibitory neurons in mammals can produce an episodic memory, which can be realized by a successive association of memories (see, for example, Tsuda, Koerner, and Shimizu (1987); Tsukada, Yamaguti, and Tsuda (2013)). The hippocampal CA3 plays a role in the formation of such sequences of memories, while the hippocampal CA1 receives such sequences, and encodes the sequential information in a form of fractal geometry, called Cantor coding (see, for example, Tsuda (2001); Fukushima et al. (2007); Tsuda and Kuroda (2001); Kuroda et al. (2009); Yamaguti et al. (2011)). Considering these findings, the evolution of the network structure to the feedforward network including feedback connections was a decisive milestone in the evolution of memory. Therefore, the present ERC possesses sufficient structures of neural networks to yield high performance for not only the discrimination of patterns but also memory formation.

III.3 Combination task

For comparison with the presented task, called here the separation task, we investigated the development of networks in the case of the combination task, where the same network size and parameters were used. The combination task identifies specific combinations of the spatial and temporal patterns. Learning succeeded with a high accuracy (Fig. 6, 7), but a similar differentiation was not observed in the internal network structure, that is, and did not show negative correlations(Fig. 8(a)). The joint probability distribution of the evolved networks (Fig. 8(b)) shows a concentration along a diagonal line, which was not seen in the separation task. This implies that a different type of optimization occurred. Specifically, because many neurons possessing both spatial and temporal information were observed in the output layer, such neurons possibly do not separate sensory information, but rather unify sensations, thereby processing a combination of sensory inputs. The emergence of such neurons may be related to the concept of “mixed selectivity,” which has recently been intensively studied both experimentally and theoretically in the neocortex Rigotti et al. (2013).

III.4 Dependence on constraints

We investigated two kinds of conditions on which differentiation depends: the size of the network and the strength of the weights. As for the network size, the computation results showed that a decreasing network size corresponds to an increasing number of neurons that specifically respond to either input signal of spatial or temporal patterns (Fig. 9). Classification accuracy increased with network size (Fig. 9(a)). However, the degree of functional differentiation in the output layer, as measured by the strength of negative correlation of mutual information, was higher at and than at (Fig. 9(b)). There was no negative correlation in any case in the combination task. As for network structure, we found that ratios of feedforward connections increased and those of feedback connections decreased as the network size decreased (Fig. 9(c)).

To investigate the effect of penalty for weight size, the following penalty term, , on the connections of the internal network was added to the loss function and evolved the network. Here, we show the computation results in the case of . Because the network with sufficiently differentiates even without such an additional penalty term, the effect of the additional term was very weak. In contrast, in , because the degree of differentiations was low in the case without the penalty term (Fig. 9(b), we investigated how differentiations changed by such an additional term (Fig. 10). As the strength of the penalty increased, accuracy fell at and rose again at (Fig. 10(a)). A detailed investigation of the reasons for this decrease and increase is beyond the scope of this paper. Negative correlations between two mutual information values became stronger as increased, implying an increase in differentiated neurons (Fig. 10(b)). The feedforward-to-feedback ratio did not strongly depend on the penalty strength (Fig. 10(c)). It looks the penalty strength did not affect the differentiation on feedforward/feedback structure of the network. In summary, there was an evident range of penalty strengths that enhances differentiation without adversely affecting classification accuracy.

IV Discussion

In this paper, we proposed a new computation network type that simultaneously decodes multiple kinds of inputs, using the evolution of an internal network of RCs. The network performance studied with mutual information analysis showed neuronal differentiations in the sense of the emergence of the specificity of neurons, responding to a single specific input pattern. In the simulation, some other neurons still evolved, responding to multiple inputs. This type of neuron seems capable of evolving to neurons that may specifically respond to other new kinds of inputs. The computation results suggest the information mechanism underlying functional differentiations in the brain.

We also investigated the dynamics of the evolved networks. After the optimization is established, all evolved networks produced a single fixed-point attractor in the case without inputs. In the presence of input sequence, a limit-cycle type of quasi-attractors emerged and transitions between such quasi-attractors occurred. These dynamic behaviors appear different from chaotic itinerancy Kaneko and Tsuda (2003), which represents chaotic transitions between quasi-attractors.

Acknowledgements.

The authors would like to thank Otto E. Rössler for his continual encouragement and stimulation via both personal communication and his outstanding work on chaos. We would like to thank Y. Kawai, J. Park, M. Asada, T. Hirakawa, T. Yamashita, H. Fujiyoshi, and H. Watanabe for their helpful discussions. This study was partially supported by the JST Strategic Basic Research Programs (Symbiotic Interaction: Creation and Development of Core Technologies Interfacing Human and Information Environments, CREST Grant Number JPMJCR17A4). This work was also partially supported by a Grant-in-Aid for Scientific Research on Innovative Areas (Non-Linear Neuro-Oscillology: Towards Integrative Understanding of Human Nature, KAKENHI Grant Number 15H05878) from the Ministry of Education, Culture, Sports, Science and Technology, Japan.Data Availability

The data that supports the findings of this study are available within the article.

Appendix A Parameter values

Here, we describe parameter values used in numerical simulations of this article. The number of the reservoir units: , except for Fig. 10 where . Both the number of neurons in the input layer and the number of input units are . The size of the population in the gene pool: . The number of survived elite networks in each generation: , the number of new networks generated by mutation: , and for the networks generated by crossover: . The probability of an occurrence of the recombination of connections during mutation is 0.04. The probability of changing connection weights during mutation is 0.4. The standard deviation of perturbation for weights is . The probability of an occurrence of perturbation for the decay constant during mutation is 0.1, whose standard deviation is 0.01.

For each network in each generation, steps after the first steps of transient were used for learning, in which we recorded the internal states and the teacher readout signals, and then determined the weights and such as minimizing the loss function in Eq. (3) using a ridge regression method. Then, further steps were used for the test, in which we evaluated the difference between the teacher signal and the readout state , and determined the values of the objective function for evolution, following Eq. (4).

At the beginning of the evolution, a sparse recurrent weight matrix was randomly created, and their weights were rescaled such that the spectral radius of the matrix became one. The values of the input weights took 0.1 if and zero otherwise. The noise term was given by a normal distribution of mean and a standard deviation of .

Appendix B Additional analysis and experiments

In this section, we describe additional analyses and experiments.

B.1 Evolution of the spectral radius

To investigate the evolution of overall connection strengths that may affect network dynamics, we observed evolution of the spectral radius of the recurrent weight matrix (Fig. 11). In both the separation and the combination task, averages of spectral radii exceeded 1.0 after evolution. The spectral radius was higher in the combination task than in the separation task, indicating different optimal connection strengths between the two tasks. Nevertheless, in all cases, fixed-point attractors were observed when we ran the evolved networks with no input (figures not shown).

B.2 Evolution of the decay constant

In our genetic algorithm, the decay constant of each neuron was also subject to evolution. To determine whether decay constants adapted to the time scale of the input signal through evolution, we studied changes in the distribution of the decay constant (Fig. 12). This distribution did not change much from the initial network to the evolved network. The question of what information is encoded by a neuron with specific time constants is left for future research.

B.3 Temporal pattern with phase shift

To test the robustness of emergence of the functional differentiation, we conducted an additional evolution experiment in which input signal elements had mutually different phases. Specifically, the temporal patterns were modified to

where and . Then the -th component of the input signal was

Figure 13 shows examples of spatiotemporal input patterns, teacher signals, and readouts of the evolved network. Performance of the evolved network was at the same level as that in the main results (Fig. 14(a)). The degree of differentiation, as measured by the strength of negative correlation of mutual information, was slightly higher than that in the main results (Fig. 14(b)). In summary, the network showed the same evolutionary adaptability and differentiation as with the standard conditions, even when there was a phase difference in the input.

B.4 Temporal pattern with different periods

To further test robustness of the functional differentiation against change in frequency, we performed evolution experiments with a different frequency set , whose inverses (oscillation periods) are prime numbers. Other parameters values were the same as in the other simulations. Under these conditions, the classification accuracy of spatial patterns decreased to about (Fig. 15(a)), but the degree of differentiation did not change (Fig. 15(b)). This degradation in spatial pattern classification might be related to sinusoidal waveform distortions due to coarse sampling using odd periods.

References

- Sporns and Betzel (2016) O. Sporns and R. F. Betzel, Annu. Rev. Psychol. 67, 613–640 (2016).

- Glasser et al. (2016) M. F. Glasser, T. S. Coalson, E. C. Robinson, C. D. Hacker, J. Harwell, and E. Yacoub, Nature 536, 171–178 (2016).

- Treves, Skaggs, and Barnes (1996) A. Treves, W. E. Skaggs, and C. A. Barnes, Hippocampus 6, 666–74 (1996).

- Sharma, Angelucci, and Sur (2000) J. Sharma, A. Angelucci, and M. Sur, Nature 404, 841–847 (2000).

- Biswal et al. (1995) B. Biswal, F. Zerrin Yetkin, V. M. Haughton, and J. S. Hyde, Magn. Reson. Med. 34, 537–541 (1995).

- Greicius et al. (2003) M. D. Greicius, B. Krasnow, A. L. Reiss, and V. Menon, Proc. Natl. Acad. Sci. U. S. A. 100, 253–258 (2003).

- Fox et al. (2005) M. D. Fox, A. Z. Snyder, J. L. Vincent, M. Corbetta, D. C. V. Essen, and M. E. Raichle, Proc. Natl. Acad. Sci. U. S. A. 102, 9673–8 (2005).

- Von der Malsburg (1973) C. Von der Malsburg, Kybernetik 14, 85–100 (1973).

- Amari (1980) S.-I. Amari, Bull. Math. Biol. 42, 339–364 (1980).

- Kohonen (1982) T. Kohonen, Biol. Cybern. 43, 59–69 (1982).

- Le (2013) Q. V. Le, in 2013 IEEE international conference on acoustics, speech and signal processing (IEEE, 2013) pp. 8595–8598.

- Asada et al. (2001) M. Asada, K. F. MacDorman, H. Ishiguro, and Y. Kuniyoshi, Rob. Auton. Syst. 37, 185–193 (2001).

- Tsuda, Yamaguti, and Watanabe (2016) I. Tsuda, Y. Yamaguti, and H. Watanabe, Entropy 18, 74 (2016).

- Yamaguti and Tsuda (2015) Y. Yamaguti and I. Tsuda, Neural Netw. 62, 3–10 (2015).

- Kuramoto (1984) Y. Kuramoto, Chemical oscillations, waves, and turbulence (Berlin and New York: Springer-Verlag, 1984).

- Maass, Natschläger, and Markram (2002) W. Maass, T. Natschläger, and H. Markram, Neural Comput. 14, 2531–2560 (2002).

- Jaeger and Haas (2004) H. Jaeger and H. Haas, Science 304, 78–80 (2004).

- Dominey, Arbib, and Joseph (1995) P. Dominey, M. Arbib, and J.-P. Joseph, J. Cogn. Neurosci. 7, 311–336 (1995).

- Yamazaki and Tanaka (2007) T. Yamazaki and S. Tanaka, Neural Netw. 20, 290–297 (2007).

- Enel et al. (2016) P. Enel, E. Procyk, R. Quilodran, and P. F. Dominey, PLoS Comput. Biol. 12 (2016).

- Bertschinger and Natschläger (2004) N. Bertschinger and T. Natschläger, Neural Comput. 16, 1413–1436 (2004).

- Sussillo and Abbott (2009) D. Sussillo and L. F. Abbott, Neuron 63, 544–557 (2009).

- Hoerzer, Legenstein, and Maass (2014) G. M. Hoerzer, R. Legenstein, and W. Maass, Cereb. Cortex 24, 677–690 (2014).

- Laje and Buonomano (2013) R. Laje and D. V. Buonomano, Nat. Neurosci. 16, 925–933 (2013).

- Carroll (2020) T. L. Carroll, Chaos 30, 013102 (2020).

- Yamane et al. (2016) T. Yamane, S. Takeda, D. Nakano, G. Tanaka, R. Nakane, S. Nakagawa, and A. Hirose, in International Conference on Neural Information Processing (Springer, 2016) pp. 205–212.

- Mante et al. (2013) V. Mante, D. Sussillo, K. V. Shenoy, and W. T. Newsome, Nature 503, 78–84 (2013).

- Rössler (1987) O. E. Rössler, Ann. N. Y. Acad. Sci. 504, 229–240 (1987).

- Kawai, Park, and Asada (2019) Y. Kawai, J. Park, and M. Asada, Neural Netw. 112, 15–23 (2019).

- Park et al. (2019) J. Park, K. Ichinose, Y. Kawai, J. Suzuki, M. Asada, and H. Mori, Entropy 21, 214 (2019).

- Seeman et al. (2018) S. C. Seeman, L. Campagnola, P. A. Davoudian, A. Hoggarth, T. A. Hage, A. Bosma-Moody, C. A. Baker, J. H. Lee, S. Mihalas, C. Teeter, et al., eLife 7, e37349 (2018).

- Treves (2004) A. Treves, Hippocampus 14, 539–556 (2004).

- Tsuda, Koerner, and Shimizu (1987) I. Tsuda, E. Koerner, and H. Shimizu, Prog. Theor. Phys. 78, 51–71 (1987).

- Tsukada, Yamaguti, and Tsuda (2013) H. Tsukada, Y. Yamaguti, and I. Tsuda, Cogn. Neurodyn. 7, 409–416 (2013).

- Tsuda (2001) I. Tsuda, “Toward an interpretation of dynamic neural activity in terms of chaotic dynamical systems,” Behavioral and Brain Sciences 24, 793–810 (2001).

- Fukushima et al. (2007) Y. Fukushima, M. Tsukada, I. Tsuda, Y. Yamaguti, and S. Kuroda, Cogn. Neurodyn. 1, 305–16 (2007).

- Tsuda and Kuroda (2001) I. Tsuda and S. Kuroda, Japan J. Ind. Appl. Math. 18, 249–258 (2001).

- Kuroda et al. (2009) S. Kuroda, Y. Fukushima, Y. Yamaguti, M. Tsukada, and I. Tsuda, Cogn. Neurodyn. 3, 205–22 (2009).

- Yamaguti et al. (2011) Y. Yamaguti, S. Kuroda, Y. Fukushima, M. Tsukada, and I. Tsuda, Neural Netw. 24, 43–53 (2011).

- Rigotti et al. (2013) M. Rigotti, O. Barak, M. R. Warden, X.-J. Wang, N. D. Daw, E. K. Miller, and S. Fusi, Nature 497, 585–590 (2013).

- Kaneko and Tsuda (2003) K. Kaneko and I. Tsuda, Chaos 13, 926–936 (2003).