Fully Distributed Optimization based CAV Platooning Control under Linear Vehicle Dynamics

Abstract

This paper develops distributed optimization based, platoon centered CAV car following schemes, motivated by the recent interest in CAV platooning technologies. Various distributed optimization or control schemes have been developed for CAV platooning. However, most existing distributed schemes for platoon centered CAV control require either centralized data processing or centralized computation in at least one step of their schemes, referred to as partially distributed schemes. In this paper, we develop fully distributed optimization based, platoon centered CAV platooning control under the linear vehicle dynamics via the model predictive control approach with a general prediction horizon. These fully distributed schemes do not require centralized data processing or centralized computation through the entire schemes. To develop these schemes, we propose a new formulation of the objective function and a decomposition method that decomposes a densely coupled central objective function into the sum of several locally coupled functions whose coupling satisfies the network topology constraint. We then exploit the formulation of locally coupled optimization and operator splitting methods to develop fully distributed schemes. Control design and stability analysis is carried out to achieve desired traffic transient performance and asymptotic stability. Numerical tests demonstrate the effectiveness of the proposed fully distributed schemes and CAV platooning control.

Keywords: Connected and autonomous vehicle, car following control, distributed algorithm, constrained optimization, control and stability

1 Introduction

The recent advancement of connected and autonomous vehicle (CAV) technologies provides a unique opportunity to mitigate urban traffic congestion through innovative traffic flow control and operations. Supported by advanced sensing, vehicle communication, and portable computing technologies, CAVs can sense, share, and process real-time mobility data and conduct cooperative or coordinated driving. This has led to a surging interest in self-driving technologies. Among a number of emerging self-driving technologies, vehicle platooning technology receives substantial attention. Specifically, the vehicle platooning technology links a group of CAVs through cooperative acceleration or speed control. It allows adjacent group members to travel safely at a higher speed with smaller spacing between them and thus has a great potential to increase lane capacity, improve traffic flow efficiency, and reduce congestion, emission, and fuel consumption [2, 7].

There is extensive literature on CAV platooning control. The widely studied approaches include adaptive cruise control (ACC) [8, 10, 11, 18, 24], cooperative adaptive cruise control (CACC) [14, 15, 17, 22], and platoon centered vehicle platooning control [4, 5, 19, 20]. The first two approaches intend to improve an individual vehicle’s safety, mobility, and string stability rather than systematical performance of the entire platoon, even though enhanced system performance is validated by analysis, simulations, or field experiments. In contrast, the platoon centered approach aims to improve the performance of the entire platoon and seeks a control input that optimizes the platoon’s transient traffic dynamics for a smooth traffic flow while achieving stability and other desired long-time dynamical behaviors.

The platoon centered CAV platooning control often gives rise to sophisticated, large-scale optimal control or optimization problems, and requires extensive computation. In order to successfully implement these control schemes, efficient real-time computation is needed [19]. However, due to high computation load and the absence of roadside computing facilities, centralized computation is either inefficient or infeasible [21]. By leveraging portable computing capability of each vehicle, distributed computing is a favorable option because it has potentials to be more adaptive to different platoon network topologies, be more robust to network malfunctions, and accommodate for communication delays effectively [12, 21]. In spite of these advantages, the development of efficient distributed algorithms to solve platoon centered optimization or optimal control problems in real-time is nontrivial, especially under complicated traffic conditions and constraints.

A number of effective distributed control or optimization schemes have been proposed for CAV platooning [19, 21, 22, 25]. In particular, the recent paper [5] develops model predictive control (MPC) based car-following control schemes for CAV platooning by exploiting transportation, control and optimization methodologies. These control schemes take vehicle constraints, transient dynamics and and asymptotic dynamics of the entire platoon into account, and can be computed in a distributed manner. The paper [4] extends these distributed schemes to a mixed traffic flow including both CAVs and human-driven vehicles. However, to the best of our knowledge, the proposed schemes in [4, 5] as well as many other existing distributed or decentralized schemes [9] either require all vehicles to exchange information with a central component for centralized data processing or perform centralized computation in at least one step of these schemes. We refer to these schemes as partially distributed schemes. In contrast, the distributed schemes developed in this paper do not require centralized data processing or carry out centralized computation through the entire schemes and thus are called fully distributed. Distinct advantages of fully distributed schemes include but are not limited to: (i) no data synchronization is needed such that no central computing equipment is required; and (ii) each vehicle only interacts with its nearby vehicles through a vehicle communication network. Hence, these schemes impose less restriction on vehicle communication networks and can be easily implemented on a wide range of vehicle networks. They are also suitable for a large CAV platoon in remote areas where communication network is unreliable or roadside equipments are scarce. Further, they are more robust to network malfunction or cyber attacks.

In this paper, we develop fully distributed optimization based and platoon centered CAV car following control schemes over a general vehicle communication network. We propose a general -horizon MPC model subject to the linear vehicle dynamics of the CAVs and various physical or safety constraints. Typically, a fully distributed optimization scheme requires the objective function and constraints of the underlying optimization problem to be decoupled [6]. However, the constrained optimization problem arising from the proposed MPC model does not satisfy this requirement since its objective function is densely coupled and its constraints are locally coupled; see Remark 3.2 for more details. Therefore, this paper develops new techniques to overcome this difficulty.

The main contributions of this paper are summarized as follows:

-

(1)

We propose a new form of the objective function in the MPC model with new sets of weight matrices. This new formulation facilitates the development of fully distributed schemes and closed loop stability analysis whereas it can achieve desired traffic transient performance of the whole platoon. Based on the new formulation, a decomposition method is developed for the strongly convex quadratic objective function. This method decomposes the central objective function into the sum of locally coupled (strongly) convex quadratic functions, where local coupling satisfies the network topology constraint under a mild assumption on network topology. Along with locally coupled constraints in the MPC model, the underlying optimization model is formulated as a locally coupled convex quadratically constrained quadratic program (QCQP).

-

(2)

Fully distributed schemes are developed for solving the above-mentioned convex QCQP arising from the MPC model using the techniques of locally coupled optimization and operator splitting methods. Specifically, by introducing copies of local coupling variables of each vehicle, an augmented optimization model is formulated with an additional consensus constraint. A generalized Douglas-Rachford splitting method based distributed scheme is developed, where only local information exchange is needed, leading to a fully distributed scheme. Other operator splitting method based distributed scheme are also discussed.

-

(3)

The new formulation of the weight matrices and objective function leads to different closed loop dynamics in comparison with that in [5]. Besides, since a general -horizon MPC is considered, it calls for new stability analysis of the closed loop dynamics. We perform detailed stability analysis and choose suitable weight matrices for desired traffic transient performance for a general horizon length . In particular, we prove that up to a horizon of , the closed loop dynamic matrix is Schur stable. Extensive numerical tests are carried out to test the proposed distributed schemes under different MPC horizon ’s and to evaluate the closed loop stability and performance.

The rest of the paper is organized as follows. Section 2 introduces the linear vehicle dynamics, state and control constraints, and vehicle communication networks. The model predictive control model with a general prediction horizon is proposed and formulated as a constrained optimization problem in Section 3; fundamental properties of this optimization problem are established. Section 4 develops fully distributed schemes by exploiting a decomposition method for the central quadratic objective function, locally coupled optimization, and operator splitting methods. Control design and stability analysis for the closed loop dynamics is presented in Section 5 with numerical results given in Section 6. Finally, conclusions are given in Section 7.

2 Vehicle Dynamics, Constraints, and Communication Networks

We consider a platoon consisting of vehicles on a roadway, where the (uncontrolled) leading vehicle is labeled by the index 0 and its following CAVs are labeled by the indices , respectively. Let denote the longitudinal position and speed of the th vehicle, respectively. Let be the sampling time, and each time interval is given by for . We consider the following kinematic model for linear vehicle dynamics that is widely adopted in system-level studies with the acceleration as the control input:

| (1) |

State and control constraints. Each vehicle in a platoon is subject to several important state and control constraints. For each ,

-

(i)

Control constraints: , where and are pre-specified acceleration and deceleration bounds for a vehicle.

-

(ii)

Speed constraints: , where are pre-specified bounds on longitudinal speed for a vehicle;

-

(iii)

Safety distance constraints: these constraints guarantee sufficient spacing between neighboring vehicles to avoid collision even if the leading vehicle comes to a sudden stop. This gives rise to the safety distance constraint of the following form:

(2) where is a constant depending on vehicle length, and is the reaction time.

Note that constraints (i) and (ii) are decoupled across vehicles, whereas the safety distance constraint (iii) is state-control coupled since such a constraint involves control inputs of two vehicles. This yields challenges to distribution computation. Further, we consider the identical bounds of the acceleration and deceleration and ignore their variations due to road surface condition changes in this paper, although the proposed approach can handle a general case with different acceleration or deceleration bounds.

Communication network topology. We consider a general communication network whose topology is modeled by a graph , where is the set of nodes where the th node corresponds to the th CAV, and is the set of edges connecting two nodes in . Let denote the set of neighbors of node , i.e., . The following assumption on the communication network topology is made throughout the paper:

-

The graph is undirected and connected. Further, two neighboring vehicles form a bidirectional edge of the graph, i.e., .

Since the graph is undirected, for any with , means that there exists an edge between node and node . In other words, vehicle can receive information from vehicle and send information to vehicle , and so does vehicle . We also assume that the first vehicle can detect and receive , and from the leading vehicle. The setting given by includes many widely used communication networks of CAV platoons, e.g., immediate-preceding, multiple-preceding, and preceding-and-following networks [23].

3 Model Predictive Control for CAV Platooning Control

We exploit the model predictive control (MPC) approach for car following control of a platoon of CAVs. Let be the desired (constant) spacing between two adjacent vehicles, and be the position, speed, and control input of the leading vehicle, respectively. Define the vectors: (i) , representing the relative spacing error; (ii) , representing the relative speed between adjacent vehicles; and (iii) , representing the control input. Further, let for each , and , which stands for the difference of control input between adjacent vehicles. Hence, for any , , where is the vector of ones, and

| (3) |

Given a prediction horizon , the -horizon MPC control is determined by solving the following constrained optimization problem at each , involving all vehicles’ control inputs for given feasible state and at time subject to the vehicle dynamics model (1):

| (4) | |||

subject to: for each and each ,

| (5) | |||||

| (6) |

where , and are symmetric positive semidefinite weight matrices to be discussed soon. Note that when , are unknown at time for . In this case, we assume that for all and use these ’s and the vehicle dynamics model (1) to predict for . Here we assume that represents the actual acceleration of the leading vehicle at time .

Remark 3.1.

The three terms in the objective function intend to minimize traffic flow oscillations via mild control: the first term penalizes the magnitude of control, whereas the second and last terms penalize the variations of the relative spacing and relative speed, respectively. The presence of the matrix in the first term is due to the coupled vehicle dynamics through the CAV platoon. To illustrate this, let for . Thus for each . Therefore, the first term in satisfies for each .

The weight matrices , , and , determine transient and asymptotic dynamics, and they depend on vehicle network topologies and can be chosen by stability analysis and transient dynamics criteria of the closed loop system. To develop fully distributed schemes for a broad class of vehicle network topologies and to facilitate control design and analysis, we make the following blanket assumption on , , and throughout the rest of the paper:

-

For each , and are diagonal and positive semidefinite (PSD), and is diagonal and positive definite (PD).

The reasons for considering this class of diagonal positive semidefinite or positive definite weight matrices are three folds: (i) Diagonal matrices have a simpler interpretation in transportation engineering so that the selection of such matrices is easier to practitioners. For instance, the diagonal and mean that one imposes penalties on each element of and without considering their coupling. Further, by suitably choosing the weight matrices , it can be shown that the ride comfort term in equation (4), which corresponds to acceleration of CAVs, is similar to imposing direct penalties on ’s, which simplifies control design. (ii) This class of weight matrices facilitates the development of fully distributed schemes for general vehicle network topologies. (iii) Closed-loop stability and performance analysis is relatively simpler (although still nontrivial) when using this class of weight matrices. The detailed discussions of choosing diagonal, positive semidefinite or positive definite weight matrices for satisfactory closed loop dynamics will be given in Section 5.

The sequential feasibility has been established in [4, 5] for the MPC model (4) when . Define where for each . Specifically, the sequential feasibility implies that for any feasible at time , the constraint set is non-empty such that the MPC model (4) has a solution such that the constraint set is non-empty. Using this result, we show below that under a mild assumption, the constraint sets of the MPC model have nonempty interior for any MPC horizon . This result is important to the development of distributed algorithms.

Corollary 3.1.

Proof.

Fix an arbitrary . Since , it follows from [5, Proposition 3.1] that there exists a vector denoted by in the interior of the set . Let and be generated by (and ). Since , we deduce via [5, Proposition 3.1] again that there exists a vector denoted by in the interior of the constraint set . Continuing this process in -steps, we derive the existence of an interior point in the constraint set of the -horizon MPC model (4). ∎

3.1 Constrained MPC Optimization Model

Consider the constrained MPC optimization model (4) at an arbitrary but fixed time subject to the linear vehicle dynamics (1). In view of the following results: for each ,

we formulate (4) as the constrained convex minimization problem (where we omit since it is fixed):

| (7) |

where with , is a PD matrix to be shown in Lemma 3.1 below, , , each is a polyhedral set, and each is a convex quadratic function characterizing the safety distance given by (12). Here is the matrix of the form given by (3). Further, for the given . An important property of the matrix in (7) is given below.

Lemma 3.1.

Suppose that and are PSD and are PD for all (but not necessarily diagonal). Then the matrix in (7) is PD.

Proof.

Let u be an arbitrary nonzero vector in . Since is quadratic, we have . In view of the equivalent formulation of given by (4), we deduce that for any , , where the first inequality follows from the fact that and are PSD, and the second inequality holds because , and thus , are PD. Therefore, , leading to . Hence, is PD. ∎

To establish the closed form expressions of the matrix and the vector in (7), we define the following matrices for any :

Clearly, for any . Moreover, let for . Hence, the symmetric matrix is given by , where

| (8) |

and is the permutation matrix satisfying

Specifically, the -entry of the matrix is given by

| (9) |

In particular, when , .

For a fixed , we also define for each ,

In light of given by (3), we have . Therefore, we obtain

| (10) |

In view of

the linear terms in the objective function are given by

| (11) |

Using the permutation matrix given in (9), we can write the above linear terms as where is the subvector of corresponding to . Since and are diagonal, it is easy to obtain the following lemma via in (10) and the structure of given by (3):

Lemma 3.2.

Consider the vector given above. Then for each , the subvector depends only on ’s for , and depends only on . Further, only depends on .

The above lemma shows that each only depends on the information of the adjacent vehicles of vehicle , and thus can be easily established from any vehicle network. This property is important for developing fully distributed schemes to be shown in Section 4.3.

To find the vector form of the safety constraint, we note that for ,

The safety distance constraint for the -th vehicle at time is given by: for ,

| (12) | ||||

where is a convex quadratic function for each . Hence, the set is closed and convex. The problem (7) becomes subject to and for all , which is a convex quadratically constrained quadratic program (QCQP) and can be solved via a second-order cone program or a semi-definite program in the centralized manner.

Remark 3.2.

The above results show that each ’s are decoupled from the other vehicles, whereas the constraint function for vehicle is locally coupled with its neighboring vehicles. Specifically, depends not only on but also on of vehicle , which can exchange information with vehicle . We will explore this local coupling property to develop fully distributed schemes for solving (7).

4 Fully Distributed Algorithms for Constrained Optimization in MPC

We develop fully distributed algorithms for solving the underlying optimization problem given by (7) at each time using the techniques of locally coupled convex optimization and operator splitting methods.

4.1 Brief Overview of Locally Coupled Convex Optimization

One of major techniques for developing fully distributed schemes for the underlying optimization problem given by (7) is to formulate it as a locally coupled convex optimization problem [6]. To be self-contained, we briefly describe its formulation.

Consider a multi-agent network of agents whose communication is characterized by a connected and undirect graph , where is the set of agents, and denotes the set of edges. For , let be the set of neighbors of agent , i.e., . Let be a disjoint union of the index set . Hence, for any , forms a partition of . We call a local variable of each agent . For each , define . Hence, for each , contains the local variable and the variables from its neighboring agents (or locally coupled variables). Consider the convex optimization problem

where is an extended-valued, proper, and lower semicontinuous convex function for each . Clearly, each is locally coupled such that the problem bears the name of “locally coupled convex optimization”. Although the problem is seemingly unconstrained, it does include constrained convex optimization since may contain the indicator function of a closed convex set. To impose the locally coupled convex constraint explicitly, the problem can be equivalently written as:

| (13) |

where for each , is a real-valued convex function, and is a closed convex set.

By introducing copies of the locally coupled variables for each agent and imposing certain consensus constraints on these copies, the paper [6] formulates the problem (or equivalently ) as a separable consensus convex optimization problems. Under suitable assumptions, Douglas-Rachford and other operator splitting based distributed schemes are developed; details can be found in [6].

4.2 Decomposition of a Strongly Convex Quadratic Objective Function

The framework of locally coupled optimization problems requires that both an objective function and constraints are expressed in a locally coupled manner. Especially, the central objective function in (13) is expected to be written as the sum of a few locally coupled functions preserving certain desired properties, e.g., the (strong) convexity if the central objective function is so, where local coupling satisfies network topology constraints. While the constraints of the problem (7) have been shown to be locally coupled (cf. Remark 3.2), the central strongly convex quadratic objective function, particularly its quadratic term , is highly coupled and thus need to be decomposed into the sum of locally coupled (strongly) convex quadratic functions, where the local coupling should satisfy the network topology constraint. In this subsection, we address this decomposition problem under a mild assumption on network topology. Specifically, our results yield a decomposition into convex and strongly convex functions.

We start from a slightly general setting. Let and be a diagonal matrix, i.e., is the vector representation of the diagonal entries of . Therefore, the following matrix is tridiagonal:

| (14) |

Consider a general . Let be a symmetric block diagonal matrix given by

where is diagonal for some , and for all . Let denote the th entry of the vector . For each , define the matrix

| (15) |

It can be shown that , where is the permutation matrix given by (9). Hence, is PD (resp. PSD) if and only if each is PD (resp. PSD).

Let

where is symmetric and tridiagonal. Letting be the permutation matrix given by (9), a straightforward computation shows that is a symmetric block tridiagonal matrix given by

where each is symmetric and . Furthermore, for each and ,

where denotes the -entry of the block . In view of and (14), we have that and for , and . Moreover, since , is PD (resp. PSD) if and only if is PD (resp. PSD), which is also equivalent to that each is PD (resp. PSD); see the comment after (15).

In what follows, we consider PSD (resp. PD) matrix decomposition for a PSD (resp. PD) generated by for and . The goal of this decomposition is to construct PSD matrices for such that the following conditions hold:

-

(i)

-

(ii)

for each ,

-

(iii)

.

For notational simplicity, let denote the possibly nonzero block in each . Specifically,

and for each ,

When is PD, we also want each in the above decomposition to be PD.

Proposition 4.1.

Let be a PSD matrix generated by for . Then there exist PSD matrices satisfying the above conditions. Moreover, suppose is PD. Then there exist PD matrices such that their corresponding ’s satisfy the above conditions.

Proof.

Let be generated by ’s such that is PSD, and let ’s be defined in (15) corresponding to ’s. Note that each is PSD as is PSD. Let

and for each ,

Since each is PSD, so is for each . Clearly, .

Now suppose is PD. Hence, each given by (15) is PD. Define

and for each ,

Note that and the two matrices on the right hand side are both PSD and the intersection of their null spaces is the zero subspace. Hence, is PD. Similarly, is PD, and the other ’s are PSD. Since is PD, we see that for an arbitrary , is PD. Hence,

is also PD. Therefore, for an arbitrary , the matrix is PD. Further, it is easy to show that the matrix is PD such that for any , the matrix is PD. Continuing this process by induction, we see that is PD for all and is PD for an arbitrary , where is PD. Finally, define which is clearly PD. Using these PD , we construct by setting the possibly nonzero block in each as . Specifically,

and for each ,

A straightforward calculation shows that , yielding the desired result. ∎

To obtain a desired decomposition using the above proposition, we observe that the matrix in (8) is given by for some matrix of the form given below (14) whose blocks are positive combinations of and . Since and are diagonal and PSD and are diagonal and PD, each block of is diagonal and PD or PSD. Moreover, by Lemma 3.1, is PD. Hence, there are uncountably many ways to construct positive , and thus PD , as shown in the above proposition. Therefore, we obtain the following strongly convex decomposition for the objective function in (7), where we set the constant without loss of generality:

where the strongly convex functions are given by

| (16) | |||||

Remark 4.1.

Clearly, the above decomposition method is applicable to any vehicle communication network satisfying the assumption in Section 2, i.e., for all . Besides, various alternative approaches can be developed to construct PD matrices using the similar idea given in the above proposition. Further, a similar decomposition method can be developed for other vehicle communication networks different from the the cyclic-like graph. For instance, if such a graph contains edges other than , one can add or subtract certain small terms pertaining to these extra edges in relevant matrices, which will preserve the desired PD property.

In what follows, we write each as for notational convenience, where denotes the set of neighbors of vehicle in a vehicle network such that for and .

4.3 Operator Splitting Method based Fully Distributed Algorithms

For illustration simplicity, we consider the cyclic like network topology through this subsection, although the proposed schemes can be easily extended to other network topologies under a suitable assumption ((cf. Remark 4.1). In this case, , , and for .

Define the constraint set

Recall that is defined by convex quadratic functions. The underlying optimization problem (7) at time becomes subject to .

We formulate this problem as a locally coupled convex optimization problem [6] and solve it using fully distributed algorithms. Specifically, in view of the decompositions given by (16), the objective function in (7) can be written as

In view of Remark 3.2, the safety constraints are also locally coupled. Let denote the indicator function of a (closed convex) set . Define, for each ,

As in [6], define , where the new variables represent the predicted values of of vehicle in the neighbor of vehicle , and let . Define the consensus subspace

Then the underlying optimization problem (7) can be equivalently written as

Let for notational simplicity. Then the underlying optimization problem becomes

| (17) |

where denotes the extended-valued objective function. Thus is the sum of two indictor functions of closed convex sets and the convex quadratic function given by , by slightly abusing the notation. Note that is polyhedral. It is easy to show via Corollary 3.1 that the Slater’s condition holds under the mild assumptions given in Corollary 3.1, e.g., for all . Hence, by [13, Corollary 23.8.1], in light of , where denotes the normal cone of a closed convex set at . Finally, the formulation given by (17) is a locally coupled convex optimization problem; see Section 4.1. This formulation allows one to develop fully distributed schemes. Particularly, in fully distributed computation, each vehicle only knows and (i.e., and ) but does not know and with . Each vehicle will exchange information with its neighboring vehicles to update via a distributed scheme.

We introduce more notation first. For a proper, lower semicontinuous convex function , let denote the proximal operator, i.e., for any given ,

Further, denotes the Euclidean projection onto a closed convex set . Using this notation, we present a specific operator splitting method based distributed scheme for solving (17). By grouping the first two sums (with separable variables) in the objective function of (17), we apply the generalized Douglas-Rachford splitting algorithm [6]. Recall that for each . For any constants and satisfying and , this algorithm is given by:

It is shown in [3, 6] that the sequence converges to the unique minimizer of the underlying optimization problem (17). In the above scheme, is the orthogonal projection onto the consensus subspace such that the following holds: for any where , is given by [6, Section IV]:

| (18) |

Furthermore, due to the decoupled structure of ’s, we obtain the distributed version of the above algorithm, which is also summarized in Algorithm 1:

| (19a) | ||||

| (19b) | ||||

In the distributed scheme (19), the first step (or Line 5 of Algorithms 1) is a consensus step, and the consensus computation is carried out in a fully distributed and synchronous manner as indicated in [6, Section IV]. The second step in (19) (or Line 8 of Algorithms 1) does not need inter-agent communication [6] and is performed using local computation only. For effective computation in the second step, recall that for each such that the proximal operator becomes

Since is the intersection of a polyhedral set and a quadratically constrained convex set and is a quadratic convex function, is formulated as a QCQP and can be solved via a second order cone program [1] or a semidefinite program. Efficient numerical packages, e.g., SeDuMi [16], can be used for solving the QCQP. Lastly, a typical (global) stopping criterion in the scheme (19) (or Algorithm 1) is defined by the error tolerance of two neighboring ’s, i.e., , where is an error tolerance. For distributed computation, one can use its local version, i.e., , as a stopping criterion for each vehicle.

Remark 4.2.

Other distributed algorithms can be used to solve the underlying optimization problem (17). For example, the three operator splitting schemes developed in [3] can be applied. To describe such schemes, let . the Hessian . Hence, is -Lipschitz continuous and thus -cocoercive. Further, the two indicator functions are proper, closed, and convex functions. Choose the constants such that and . Then for any initial condition , the iterative scheme is given by [3, Algorithm 1]:

In view of the similar discussions for consensus computation and decoupled structure of the projection , we obtain the distributed version of the above algorithm:

In this scheme, the Euclidean projection can be formulated as a QCQP and be solved via a second order cone program.

When each is PD, each is strongly convex. Thus is -strongly monotone with , i.e., . Since the subdifferential of the indicator function of a closed convex set is monotone, an accelerated scheme developed in [3, Algorithm 2] can be exploited. In particular, let be a constant with , and . Set the initial points for an arbitrary , and . The distributed version of this scheme is given by:

where . It is shown in [3, Theorem 1.2] that converges to the unique minimizer with .

5 Control Design and Stability Analysis of the Closed Loop Dynamics

In this section, we discuss how to choose the weight matrices , and to achieve the desired closed loop performance, including stability and traffic transient dynamics. For the similar reasons given in [5, Section 5], we focus on the constraint free case.

Under the linear vehicle dynamics, the closed-loop system is also a linear system. Specifically, the linear closed-loop dynamics are given by

| (20) |

where is a unique solution to an unconstrained optimization problem arising from the MPC and is a linear function of and to be determined as follows.

Case (i): . In this case, we write as respectively. Then the objective function becomes

where we recall that . It follows from the similar argument in [5, Section 5] that the the closed-loop system is given by the following linear system:

| (21) |

where is the closed loop dynamics matrix, and

| (22) |

The matrix in (21) plays an important role in the closed loop stability and desired transient dynamical performance. Since and are all diagonal and PSD (resp. PD), we have , , and , where and with , , and . Hence, we write the matrix as to emphasize its dependence on these parameters. The following result asserts the asymptotic stability of the linear closed-loop dynamics; its proof resembles that for [5, Proposition 5.1] and is thus omitted.

Proposition 5.1.

Given any and any , the matrix is Schur stable, i.e., each eigenvalue of satisfies . Moreover, for any eigenvalue of , the following hold:

-

(1)

if is non-real, then , where ;

-

(2)

if is real, then .

Case (ii): . Fix . For a general , let . Recall that for each ,

and with slightly abusing notation, the objective function is

where introduced in Remark 3.1. It follows from the similar development in Section 3.1 that

where is a constant. By a similar argument as in Lemma 3.1, it can be shown that is a symmetric PD matrix. Further, it resembles the matrix in (8) (by replacing with ), i.e.,

| (23) |

where ’s are diagonal PD matrices given by

Moreover, it follows from (10) and (11) that the matrix and constant vector are

| (24) |

where are given by: for each ,

Hence, the optimal solution is , and . Define the matrix and the vector as

| (25) |

The closed loop system becomes

where is the closed loop dynamics matrix, and the subscript of represents the closed loop.

Since are diagonal PSD and are diagonal PD for all , we write them as , , and , where and for all with , , and for each . Let , , and . We write the matrix as to emphasize its dependence on these parameters.

It can be shown that there exists a permutation matrix such that is a block diagonal matrix, i.e., whose each block is given by

Here for each , , where

and

Note that for the permutation matrix given by (9). Since is PD, so are all the ’s.

As examples, we give the closed form expressions of and for some small ’s. When , and for each . When , we have, for each ,

and

Lemma 5.1.

Let . For any , and for each , the matrix is Schur stable, i.e., its spectral radius is strictly less than 1.

Proof.

By the previous argument, it suffices to show that each is Schur stable for . Fix an arbitrary . Letting , we have

where

and , and for . Hence, . Define

Clearly, are all positive for any , and . Moreover, we deduce from a somewhat lengthy but straightforward calculation that . Hence, , , and

It follows from [5, Proposition 5.1] that is Schur stable, and so is . ∎

Using a similar technique but more lengthy calculations, it can be shown that when , the matrix is Schur stable for , , and for each . For , we expect that the same result holds (supported by numerical experience) although its proof becomes much more complicated. Nevertheless, it is observed that in the -horizon MPC, when the parameters , (and possibly including ) with are medium or large, large control inputs are generated, which causes control or speed saturation and may lead to undesired close-loop dynamics. Motivated by this observation, we obtain the following stability result for small for .

Proposition 5.2.

Let . For any , and for each , and for and , there exists a positive constant such that for any for and , the matrix is Schur stable.

Proof.

Consider . Fix arbitrary , , and for each and for and . Suppose for all and . Then for all . Hence, for all and any . Thus it is easy to show that for each ,

where and correspond to given before. Hence, . It follows from Lemma 5.1 that is Schur stable, i.e., its spectral radius is strictly less than 1. Since the spectral radius of is continuous in for all and , a small perturbation to for all and still leads to the Schur stable matrix . This yields the desired result. ∎

Based on the above results, one may choose in the following way. Let and be positive or nonnegative vectors of the same order. Let (e.g., or higher) be a constant and let and be some positive constants. Then let

6 Numerical Results

6.1 Numerical Experiments and Weight Matrices Design

We conduct numerical tests to evaluate the performance of the proposed fully distributed schemes and the CAV platooning control. In these tests, we consider a platoon of an uncontrolled leading vehicle labeled by the index 0 and ten (i.e., ) CAVs following the leading vehicle. The following physical parameters are used for the CAVs and their constraints throughout this section unless otherwise stated: the desired spacing , the vehicle length , the sample time , the reaction time , the acceleration and deceleration limits and , and the speed limits and . The initial state of the platoon is and for all . Further, the vehicle communication network is given by the cyclic-like graph, i.e., the bidirectional edges of the graph are .

When , a particular choice of these weight matrices is given as follows: for ,

For , we choose , , , and

The above vectors define the weight matrices for , which further yield the closed loop dynamics matrix ; see the discussions below (25). It is shown that when these weights are used, the spectral radius of is for , and for , respectively.

Discussion on the selection of MPC horizon. We discuss the choice of the MPC prediction horizon based on numerical tests as follows. Our numerical experience shows that for , the weight matrices and play a more important role for the closed loop dynamics. For fixed and with the large penalties in and for , the closed loop dynamics may be mildly improved but at the expense of undesired large control. Hence, we choose smaller penalties in and for , which only lead to slightly better closed loop performance compared with the case of . Further, when a large is used, the underlying optimization problem has a larger size, resulting in longer computation time and slow convergence of the proposed distributed scheme. Besides, the current MPC model assumes that the future for all at each . This assumption is invalid when the true is substantially different from , which implies that the prediction performance is poor for a large . For these reasons, it is recommended that a smaller be used, for example, .

The following scenarios are used to evaluate the proposed CAV platooning control.

Scenario 1: The leading vehicle performs instantaneous deceleration/acceleration and then keeps a constant speed for a while. The goal of this scenario is to test if the platoon can maintain stable spacing and speed when the leading vehicle is subject to acceleration or deceleration disturbances. The motion profile of the leading vehicle is as follows: the leading vehicle decelerates from to with the deceleration , and maintains a constant speed till . After , it restores to its original speed with the acceleration .

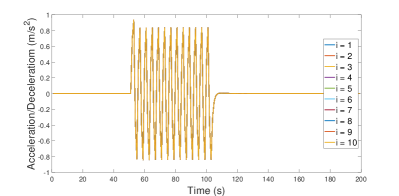

Scenario 2: The leading vehicle performs periodical acceleration/deceleration. The goal of this scenario is to test whether the proposed control scheme can reduce periodical spacing and speed fluctuation. The motion profile of the leading vehicle in this scenario is as follows: the leading vehicle periodically changes its acceleration and deceleration from to with the period and acceleration/deceleration . Then it maintains its original constant speed after .

Scenario 3: In this scenario, we aim to test the performance of the proposed control scheme in a real traffic environment, particularly when the leading vehicle undergoes traffic oscillations. We use real world trajectory data from an oscillating traffic flow to generate the leading vehicle’s motion profile. Specifically, we consider NGSIM data on eastbound I-80 in San Francisco Bay area in California. We use the data of position and speed of a real vehicle to generate its control input at each second and treat this vehicle as a leading vehicle. Since the maximum of acceleration of this vehicle is close to , we choose . All the other parameters or physical limits remain the same. The experiment setup of this scenario is: , for each , and the time length is . To further test the proposed CAV platooning control in a more realistic traffic setting in Scenario 3, random noise is added to each CAV to simulate dynamical disturbances, model mismatch, signal noise, communication delay, and road condition perturbations. In particular, at each , the random noise with the normal distribution is added to the first CAV, and the noise with the normal distribution is added to each of the rest of the CAVs. Here a larger noise is imposed to the first CAV since there are more noises and disturbances between the leading vehicle and the first CAV.

6.2 Performance of Fully Distributed Schemes and CAV Platooning Control

The generalized Douglas-Rachford splitting method based distributed algorithm (i.e., Algorithm 1) is tested. For each MPC horizon , the parameters , , and the error tolerance for the stopping criteria in this algorithm are chosen to achieve desired numerical accuracy and efficiency; see the discussions below (19) for error tolerances and Table 1 for a list of these parameters and error tolerances. In particular, we choose a larger error tolerance for a larger to meet the desired computation time requirement of one second per vehicle. For comparison, we also test the three operator splitting based distributed scheme and its accelerated version given in Remark 4.2, where we choose , and . Here is the Lipschitz constant defined in Remark 4.2. For the accelerated scheme, we let and .

| MPC horizon | |||||

|---|---|---|---|---|---|

| 0.95 | 0.95 | 0.95 | 0.8 | 0.8 | |

| 0.3 | 0.3 | 0.3 | 0.1 | 0.1 | |

| Error tolerance |

Initial guess warm-up. For a given , the augmented locally coupled optimization problem (17) has nearly scalar variables and scalar constraints when the cyclic-like network topology is considered. These sizes can be even larger for other network topologies satisfying the assumption A1. Hence, when is large, the underlying optimization problem is of large size, which may affect the numerical performance of the distributed schemes. Several techniques are developed to improve the efficiency of the proposed Douglas-Rachford distributed schemes for real-time computation, particularly for a large . For illustration, we discuss the initial guess warm-up technique as follows. When implementing the proposed scheme, we often choose a numerical solution obtained from the last step as an initial guess for the current step and run the proposed Douglas-Rachford scheme. Such the choice of an initial guess usually works well when two neighboring control solutions are relatively close. However, it is observed that the convergence of the proposed distributed scheme is sensitive to an initial guess, especially when the CAV platoon is subject to significant traffic oscillations, which results in highly different control solutions between two neighboring instants. In this case, using a neighboring control solution as an initial guess leads to rather slow convergence. To solve this problem, we propose an initial guess warm-up technique, motivated by the fact that control solutions are usually unconstrained for most of ’s. Specifically, we first compute an unconstrained solution in a fully distributed manner, which can be realized by setting as the Euclidean space in Algorithm 1. This step can be efficiently computed since the proximal operator is formulated by an unconstrained quadratic program and has a closed form solution. In fact, letting , the closed form solution to the proximal operator is given by , where is PD. We then project this unconstrained solution onto the constrained set in one step. Due to the decoupled structure of the problem (17), this one-step projection can be computed in a fully distributed manner. We thus use this projected solution as an initial guess for the Douglas-Rachford scheme. Numerical experience shows that this new initial guess significantly improves computation time and solution quality when is large.

| MPC horizon | Computation time per CAV (s) | Relative numer. error | ||

| Mean | Variance | Mean | Variance | |

| 0.0248 | 0.0017 | |||

| 0.0603 | 0.0034 | |||

| 0.1596 | 0.0764 | |||

| 0.1528 | 0.1500 | |||

| 0.2365 | 0.2830 | |||

| MPC horizon | Computation time per CAV (s) | Relative numer. error | ||

| Mean | Variance | Mean | Variance | |

| 0.0464 | 0.0039 | |||

| 0.1086 | 0.0153 | |||

| 0.3296 | ||||

| 0.5049 | 0.6257 | |||

| 0.5784 | 0.7981 | |||

| MPC horizon | Computation time per CAV (s) | Relative numer. error | ||

| Mean | Variance | Mean | Variance | |

| 0.0825 | 0.0023 | |||

| 0.2011 | 0.0051 | |||

| 0.5830 | 0.3462 | |||

| 0.8904 | 0.4685 | |||

| 0.9967 | 0.7467 | |||

| MPC horizon | Computation time per CAV (s) | Relative numer. error | ||

| Mean | Variance | Mean | Variance | |

| 0.0243 | 0.0023 | |||

| 0.0097 | ||||

| 0.0579 | ||||

| 0.1063 | 0.1103 | |||

| 0.1258 | 0.1155 | |||

Performance of distributed schemes. Distributed algorithms are implemented on MATLAB and run on a computer of the following processor with 4 cores: Intel(R) Core(TM) i7-8550U CPU @ and RAM: . We test the fully distributed Algorithm 1 for Scenarios 1-3. At each , we use the optimal solution obtained from the last step as an initial guess unless otherwise stated. To evaluate the numerical accuracy of the proposed distributed scheme, we compute the relative error between the numerical solution from the distributed scheme and that from a high precision centralized scheme when the latter solution, labeled as the true solution, is nonzero. The mean and variance of computation time per vehicle and relative errors for different MPC horizon ’s in noise-free Scenarios 1-3 are displayed in Table 2-4, respectively. The numerical performance for Scenario 3 under noises is similar to that without noise; it is thus omitted due to space limit.

It is observed from the numerical results that when the MPC horizon increases, more computation time is needed with mildly deteriorating numerical accuracy. This observation agrees with the discussion on the choice of given in Section 6.1, which suggests a relatively small for practical computation. Besides, we have tested the proposed initial guess warm-up technique on Scenario 3 for different ’s using the same parameters and error tolerances for Algorithm 1; see Table 1. To compute a warm-up initial guess using an iterative distributed scheme, we use the same and for each with error tolerance for and for the other ’s. A summary of the numerical results is shown in Table 5. Compared with the results given in Table 4 without initial guess warm-up, the averaging computation time is reduced by at least and the relative numerical error is reduced by at least two thirds for when the initial guess warm-up is used. This shows that the initial guess warm-up technique considerably improves the numerical efficiency and accuracy, and it is especially suitable for real-time computation when a large is used. Hence, we conclude that Algorithm 1, together with the initial guess warm-up technique, is suitable for real-time computation with satisfactory numerical precision.

We have also tested the three-operator splitting based distributed scheme and its accelerated version given in Remark 4.2. These schemes provide satisfactory computation time and numerical accuracy when is small. For example, when , the mean of computation time per CAV is 0.0553 seconds with the variance 0.0284 for Scenario 1 and 0.219 seconds with the variance 0.138 for Scenario 2, respectively. However, for a slightly large , e.g., , it takes much longer than 1 second for an individual CAV to complete computation. These schemes are thus not suitable for real-time computation for .

Performance of the CAV platooning control. We discuss the closed-loop performance of the proposed CAV platooning control for the three aforementioned scenarios with different MPC horizon ’s. In each scenario, we evaluate the performance of the spacing between two neighboring vehicles (i.e., ), the vehicle speed , and the control input for . Due to the paper length limit, we present the closed-loop performance for and only for each scenario; see Figures 1-3 for (noise free) Scenarios 1-3 respectively, and Figure 4 for Scenario 3 with noises. In fact, it is observed from these figures (and the other tests) that there is little difference in control performance between and a higher , e.g., . We comment more on the closed-loop performance of each scenario as follows:

-

(i)

Scenario 1. Figure 1 shows that the spacing between the uncontrolled leading vehicle and the first CAV, i.e., , has mild deviation from the desired when the leading vehicle performs instantaneous acceleration or deceleration, while the spacings between the other CAVs remain the desired constant . Further, it takes about for to converge to the steady state with the maximum spacing deviation . The similar performance can be observed for the vehicle speed and control input. In particular, it can be seen that all the CAVs show the exactly same speed change and control, implying that the CAV platoon performs a “coordinated” motion with “consensus” under the proposed platooning control.

-

(ii)

Scenario 2. Figure 2 displays that under the periodic acceleration/deceleration of the leading vehicle, the CAV platoon also demonstrates a “coordinated” motion with “consensus”. For example, only demonstrates mild fluctuation, whereas the spacings between the other CAVs remain the desired constant, and all the CAVs show the exactly same speed change and control. Moreover, under the proposed platooning control, the oscillations of are relatively small with the maximal magnitude less than . Such oscillations quickly decay to zero within when the leading vehicle stops its periodical acceleration/deceleration.

-

(iii)

Scenario 3. In this scenario, the leading vehicle undergoes various traffic oscillations through the time window of . In spite of such oscillations, it is seen from Figure 3 that only demonstrates small spacing variations with the maximum magnitude less than , but the spacings between the other CAVs remain almost constant through the entire time window. This shows that the CAV platoon also demonstrates a “coordinated” motion with “consensus” as in Scenarios 1-2.

-

(iv)

Scenario 3 subject to noises. Figure 4 shows the control performance of the CAV platoon in Scenario 3 under noises. It can be seen that there are more noticeable spacing deviations from the desired constant for all CAVs due to the noises. However, the variation of remains to be within , and the maximum deviation of each with is less than . Further, the profiles of the CAV speed and control still demonstrate a nearly “coordinated” motion in spite of the noises.

In summary, the proposed platooning control effectively mitigates traffic oscillations of the spacing and vehicle speed of the platoon; it actually achieves a (nearly) consensus motion of the entire CAV platoon even under small random noises and perturbations. Compared with other platoon centered approaches, e.g., [5], the proposed control scheme performs better since it uses different weight matrices that lead to decoupled closed loop dynamics; this choice of the weight matrices also facilitates the development of fully distributed computation.

7 Conclusion

The present paper develops fully distributed optimization based MPC schemes for CAV platooning control under the linear vehicle dynamics. Such schemes do not require centralized data processing or computation and are thus applicable to a wide range of vehicle communication networks. New techniques are exploited to develop these schemes, including a new formulation of the MPC model, a decomposition method for a strongly convex quadratic objective function, formulating the underlying optimization problem as locally coupled optimization, and Douglas-Rachford method based distributed schemes. Control design and stability analysis of the closed loop dynamics is carried out for the new formulation of the MPC model. Numerical tests are conducted to illustrate the effectiveness of the proposed fully distributed schemes and CAV platooning control. Our future research will address nonlinear vehicle dynamics and robust issues under uncertainties, e.g., model mismatch, sensing errors, and communication delay.

8 Acknowledgements

This research work is supported by the NSF grants CMMI-1901994 and CMMI-1902006. We thank Benjamin Hyatt of University of Maryland Baltimore County for his contribution to the proof of the closed loop stability when .

References

- [1] F. Alizadeh and D. Goldfarb. Second-order cone programming. Mathematical Programming, 95(1):3–51, 2003.

- [2] C. Bergenhem, S. Shladover, E. Coelingh, C. Englund, and S. Tsugawa. Overview of platooning systems. In Proceedings of the 19th ITS World Congress, Oct 22-26, Vienna, Austria (2012), 2012.

- [3] D. Davis and W. Yin. A three-operator splitting scheme and its optimization applications. Set-valued and Variational Analysis, 25(4):829–858, 2017.

- [4] S. Gong and L. Du. Cooperative platoon control for a mixed traffic flow including human drive vehicles and connected and autonomous vehicles. Transportation Research Part B: Methodological, 116:25–61, 2018.

- [5] S. Gong, J. Shen, and L. Du. Constrained optimization and distributed computation based car following control of a connected and autonomous vehicle platoon. Transportation Research Part B: Methodological, 94:314–334, 2016.

- [6] J. Hu, Y. Xiao, and J. Liu. Distributed algorithms for solving locally coupled optimization problems on agent networks. In 2018 IEEE Conference on Decision and Control, pages 2420–2425. IEEE, 2018.

- [7] P. Kavathekar and Y. Chen. Vehicle platooning: A brief survey and categorization. In ASME 2011 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, pages 829–845. American Society of Mechanical Engineers, 2011.

- [8] A. Kesting, M. Treiber, M. Schönhof, and D. Helbing. Adaptive cruise control design for active congestion avoidance. Transportation Research Part C: Emerging Technologies, 16(6):668–683, 2008.

- [9] J. Koshal, A. Nedic, and U. V. Shanbhag. Multiuser optimization: distributed algorithms and error analysis. SIAM Journal on Optimization, 21(3):1046–1081, 2011.

- [10] S. Li, K. Li, R. Rajamani, and J. Wang. Model predictive multi-objective vehicular adaptive cruise control. IEEE Transactions on Control Systems Technology, 19(3):556–566, 2011.

- [11] G. Marsden, M. McDonald, and M. Brackstone. Towards an understanding of adaptive cruise control. Transportation Research Part C: Emerging Technologies, 9(1):33–51, 2001.

- [12] M. Mesbahi and M. Egerstedt. Graph Theoretic Methods in Multiagent Networks. Princeton University Press, 2010.

- [13] R. T. Rockafellar. Convex Analysis. Princeton University Press, 1970.

- [14] S. E. Shladover, C. Nowakowski, X.-Y. Lu, and R. Ferlis. Cooperative adaptive cruise control: definitions and operating concepts. Transportation Research Record: Journal of the Transportation Research Board, (2489):145–152, 2015.

- [15] S. E. Shladover, D. Su, and X.-Y. Lu. Impacts of cooperative adaptive cruise control on freeway traffic flow. Transportation Research Record: Journal of the Transportation Research Board, 2324:63–70, 2012.

- [16] J. F. Sturm. Using SeDuMi 1.02, a MATLAB toolbox for optimization over symmetric cones. Optimization Methods and Software, 11(1-4):625–653, 1999.

- [17] B. Van Arem, C. J. Van Driel, and R. Visser. The impact of cooperative adaptive cruise control on traffic-flow characteristics. IEEE Transactions on Intelligent Transportation Systems, 7(4):429–436, 2006.

- [18] J. Vander Werf, S. E. Shladover, M. A. Miller, and N. Kourjanskaia. Effects of adaptive cruise control systems on highway traffic flow capacity. Transportation Research Record, 1800(1):78–84, 2002.

- [19] J. Wang, S. Gong, S. Peeta, and L. Lu. A real-time deployable model predictive control-based cooperative platooning approach for connected and autonomous vehicles. Transportation Research Part B: Methodological, 128:271–301, 2019.

- [20] M. Wang, W. Daamen, S. P. Hoogendoorn, and B. van Arem. Rolling horizon control framework for driver assistance systems. Part II: Cooperative sensing and cooperative control. Transportation Research Part C: Emerging Technologies, 40:290–311, 2014.

- [21] M. Wang, W. Daamen, S. P. Hoogendoorn, and B. van Arem. Cooperative car-following control: Distributed algorithm and impact on moving jam features. IEEE Transactions on Intelligent Transportation Systems, 17(5):1459–1471, 2016.

- [22] S. Zhao and K. Zhang. A distributionally robust stochastic optimization-based model predictive control with distributionally robust chance constraints for cooperative adaptive cruise control under uncertain traffic conditions. Transportation Research Part B: Methodological, 138:144–178, 2020.

- [23] Y. Zheng, S. E. Li, K. Li, F. Borrelli, and J. K. Hedrick. Distributed model predictive control for heterogeneous vehicle platoons under unidirectional topologies. IEEE Transactions on Control Systems Technology, 25(3):899–910, 2016.

- [24] Y. Zhou, S. Ahn, M. Chitturi, and D. A. Noyce. Rolling horizon stochastic optimal control strategy for ACC and CACC under uncertainty. Transportation Research Part C: Emerging Technologies, 83:61–76, 2017.

- [25] Y. Zhou, M. Wang, and S. Ahn. Distributed model predictive control approach for cooperative car-following with guaranteed local and string stability. Transportation Research part B: Methodological, 128:69–86, 2019.