Fully Bayesian inference for latent variable Gaussian process models

Abstract

Real engineering and scientific applications often involve one or more qualitative inputs. Standard Gaussian processes (GPs), however, cannot directly accommodate qualitative inputs. The recently introduced latent variable Gaussian process (LVGP) overcomes this issue by first mapping each qualitative factor to underlying latent variables (LVs), and then uses any standard GP covariance function over these LVs. The LVs are estimated similarly to the other GP hyperparameters through maximum likelihood estimation, and then plugged into the prediction expressions. However, this plug-in approach will not account for uncertainty in estimation of the LVs, which can be significant especially with limited training data. In this work, we develop a fully Bayesian approach for the LVGP model and for visualizing the effects of the qualitative inputs via their LVs. We also develop approximations for scaling up LVGPs and fully Bayesian inference for the LVGP hyperparameters. We conduct numerical studies comparing plug-in inference against fully Bayesian inference over a few engineering models and material design applications. In contrast to previous studies on standard GP modeling that have largely concluded that a fully Bayesian treatment offers limited improvements, our results show that for LVGP modeling it offers significant improvements in prediction accuracy and uncertainty quantification over the plug-in approach.

Keywords: Gaussian process, Latent variables, Categorical variables, Fully Bayesian inference, Uncertainty quantification,

1 Introduction

Many scientific and engineering applications often require the use of surrogate models or emulators in various tasks such as optimization, sensitivity analysis, active learning, etc. Gaussian processes (GPs) are a popular class of surrogate models. Their principled uncertainty quantification (UQ) makes them useful for Bayesian optimization (BO) [32] and model calibration [1]. Traditionally, GP models have been developed for quantitative/numerical inputs. However, many applications involve one or more qualitative/categorical inputs. For example, in several material design applications, a goal is to find material compositions (e.g. atomic compositions) that have the desired target properties (such as resistivity, bandgap, etc.).

Previous work that has developed GP models for systems involving one ore more qualitative input(s) include [29, 16, 40, 11, 39], and [31]. Among them, the latent variable Gaussian process [39] (LVGP, not to be confused with Gaussian process latent variable models [24, 36] that are used for performing nonlinear dimensionality reduction) has typically achieved comparatively better modeling performance for such systems. When used within a BO framework, it has been applied to several material design and engineering design problems [21, 38, 22, 37, 27] and has yielded improved results. The LVGP method maps the levels of each qualitative input to a set of numerical values for some latent numerical variables. The latent variable values for each qualitative factor quantify the “distances” between the different levels, and therefore can be treated the same as numerical inputs in a GP model. In addition to the improved modeling performance, the latent variable mapping of the qualitative factors provides an inherent ordering and structure for the levels of the factor(s), which can provide insights into the effects of the qualitative factors on the response (see the examples in [39]).

The latent variables that represent the levels are treated as unknown and must be estimated along with other GP hyperparameters before being used for prediction or UQ. By “UQ”, we essentially mean a prediction interval on the predicted response, as a function of the inputs. A common strategy for inference in standard GPs is to plug in point estimates, such as maximum likelihood estimates or the maximum a-posteriori (MAP) estimates, into the different quantities of interest such as the expected improvement sampling criterion for BO. This plug-in approach, however, does not account for the uncertainty in the estimation of these hyperparameters. On the other hand, fully Bayesian inference, where one marginalizes over the posterior distribution of the hyperparameters, takes this uncertainty into account in a principled manner (for e.g., [17]). However, as it is more computationally expensive than plug-in inference, the plug-in approach is more commonly used. For standard GPs with numerical inputs, the benefits of the fully Bayesian approach appear to be mixed. Some works (for e.g., [18, 23]) have found the fully Bayesian approach to significantly improve performance, while some others (for e.g., [25, 9, 10]) have found the uncertainty in the estimated parameters to contribute relatively little to the total uncertainty in the predicted response. However, fully Bayesian inference has been found to be more robust for BO applications [13, 5], where small initial designs are commonly used.

Unlike for the standard GP model, the effect of the estimating the latent variables from data on the performance of the LVGP model is yet to be studied. Prior works on LVGP modeling, including ones for BO applications, have all used a plug-in approach with maximum likelihood estimates. The estimation uncertainty can be especially significant for the latent variables, whose numbers are usually much larger than that of the GP hyperparameters. This is of particular relevance for small initial datasets that are often encountered in material design applications (see [2], e.g.), especially when one or more qualitative variables have many levels, in which case there will typically be some levels for which no response observations are available. Improving the quality of UQ improves the performance of BO algorithms in these applications, which is a primary motivation for this work.

In this work, we develop a fully-Bayesian approach for LVGP modeling and conduct numerical studies comparing it to plug-in inference on a few of material design applications that motivated this work, and also on a few engineering models. This involves developing appropriate prior distributions for the latent variables and the other hyperparameters. The fully Bayesian approach complicates the interpretation of the latent variables, since there is a different latent variable mapping for each Markov chain Monte Carlo (MCMC) draw. Consequently, we develop an approach for finding a single common latent variable mapping in order to capitalize on the interpretability advantages of LVGP modeling. Finally, we also develop approximations for scaling fully Bayesian inference for LVGPs to much larger data sets than is possible with exact inference.

One important finding is that a fully Bayesian treatment appears to be substantially more beneficial in LVGP modeling with qualitative inputs than in standard GP modeling with only numerical inputs. Whereas the improvements in standard GP modeling have been mixed (see above discussion), we observed substantial improvements for the fully Bayesian approach in every example that we considered. The improvement in the UQ (as measured by the mean interval score metric [15]) was substantial in every example we considered. Moreover, and somewhat surprisingly, the predictive accuracy (as measured by the mean square error in predicting test response cases) was often improved substantially and never substantially worsened. We, therefore, advocate for a fully Bayesian treatment of the LVGP hyperparameters, especially when one or more qualitative inputs have many levels.

The remainder of the paper is structured as follows. We review GPs with numerical inputs and LVGPs in Section 2. We derive the fully Bayesian inference for the LVGP model in Section 3. We also discuss the ambiguity in interpreting the latent spaces under fully Bayesian inference, and provide a solution for the same. In Section 4, we develop approximations for scaling up LVGPs, and fully Bayesian inference for the LVGP hyperparameters using sparse inducing point methods. We discuss the numerical studies in Section 5. Finally, we offer concluding remarks in Section 6.

2 Background and notation

In this section, we review the standard GP model for quantitative inputs, related estimation and inference methods, and the LVGP model to handle qualitative factors. Let denote the true physical response surface model with inputs , where represents quantitative variables and represents qualitative variables with levels respectively. Without loss of generality, we assume that the levels of the qualitative factor are coded as a . Let denote the product input space.

2.1 GP regression

We consider GP regression models of the form:

| (1) |

is a GP, and is the zero-mean Gaussian noise term with variance . GPs define a prior over a space of functions with the property that any finite number of function values associated with different locations , have a joint Gaussian distribution. GPs are completely characterized by a mean function and a covariance or kernel function . A common choice for the mean function is a constant , which we will assume throughout this paper. The kernel function encodes assumptions about the function’s properties and the choice is problem dependent. A common choice for numerical inputs is the squared-exponential kernel

| (2) |

where is the prior variance hyperparameter, and are positive length-scale hyperparameters.

Suppose we have a set of observations (e.g., from N runs of some complex code that simulates a physical system) at inputs . By the marginalization properties of jointly Gaussian variables, the posterior distribution of at input conditioned on these observations, is again Gaussian with mean and variance given by:

| (3) | ||||

| (4) |

where is the vector of hyperparameters including the noise variance , is the covariance matrix of the observations whose element at the row and column is , is the dimensional covariance vector between and the observations, and is a vector of ones.

The GP hyperparameters are usually unknown in practice. A common strategy is to use a plug-in Bayes approach, also known as Empirical Bayes, where an estimate is first computed from data, and then plugged into the posterior predictive distribution. Under this plug-in approach, the posterior distribution of is again Gaussian whose mean and variance are calculated by plugging in into (3) and (4) respectively. A common strategy for estimation is to optimize with respect to either the log-likelihood

| (5) |

which gives the maximum likelihood estimate, or the log-posterior,

| (6) |

which gives the maximum a-posteriori (MAP) estimate. Here, is the prior distribution density over .

A more rigorous approach to obtain the posterior predictive distributions is to use fully Bayesian inference, where one marginalizes over the posterior distribution of given data. The density of the posterior distribution of given the data is

| (7) |

There is usually no closed form expression for the above distribution, as the integrals involved are often intractable. In practice, one often uses MCMC methods to draw a finite number of samples from the distribution , which can then be used to compute different quantities of interest. In this work, we use the No-U-Turn-Sampler [20] algorithm for drawing the MCMC samples.

2.2 LVGP models for systems with qualitative inputs

Standard GP correlation functions, such as the squared exponential, Matérn, power exponential, etc. cannot be directly applied to qualitative inputs because there is no inherent distance metric in the qualitative space. The recently proposed LVGP approach [39] provides a natural and convenient way to handle qualitative inputs by mapping the levels of each qualitative factor onto low-dimensional continuous latent spaces. To be more specific, consider a qualitative input with levels. The LVGP approach constructs a mapping , where is the dimension of the latent variable (LV) space. With this mapping, the LVGP approach defines kernels of the form

| (8) |

where is any kernel function on .

The LVGP is based on the premise that for any real physical system, the effects of a qualitative input must be due to some underlying (and perhaps very high-dimensional) quantitative variables . Consider the situation in Figure 1, where the three levels of are associated with points on the high-dimensional space of . The LVGP uses sufficient dimensionality reduction arguments to implicitly construct a low dimensional representation that accounts for most of their effects. Based on empirical studies, [39] argued that was often a good choice.

When there are multiple qualitative inputs, a separate latent space is used for each qualitative input. Let denote the -dimensional LV mapping for the qualitative input . If the squared exponential kernel is used for both the quantitative variables and the LVs (i.e., ) for the qualitative inputs, the covariance kernel for the LVGP is given by

| (9) |

In the above, the length scales for the LVs are set to unity. This is because these length scales are implicitly estimated along with the LV mapping.

The LVs are additional hyperparameters that must be estimated along with the other GP hyperparameters. Existing works in the literature employing the LVGP have all used maximum likelihood estimation followed by the plug-in Bayes approach for generating predictions. The impact of estimation of LVs on the performance of the LVGP model is yet to be studied. In the remainder of the paper, we demonstrate that the impact of the estimation uncertainty in the LVs can be substantial, and that the fully Bayesian approach that we develop for LVGPs appropriately accounts for the uncertainty, improving both the prediction accuracy and the UQ.

3 Fully Bayesian inference for LVGPs

In this section, we discuss various aspects for fully Bayesian inference for LVGPs - the choice of the prior distributions (Section 3.1), our choice of the MCMC algorithm and obtaining predictions from the model (Section 3.2), and interpreting the estimated LVs (Section 3.3). As in [39], we will assume that the kernel over the LVs is stationary.

3.1 Priors for the latent variables

For fully Bayesian inference, we need to specify prior distributions for the LVs and for the other GP hyperparameters. In this section, we will discuss our choice of the prior distribution for the LVs. Prior distributions used for the other GP hyperparameters are standard choices. In the following, we drop the superscript from the LVs for notational convenience. For a qualitative variable with levels and its LV mapping , let denote the mapped values for level .

The prior on the LVs for a qualitative variable can be used to encode any domain knowledge (if available) about the similarity between the different levels of in terms of their effects on the response. This domain knowledge may be available in multiple forms. For example, we could be given an incomplete set of numerical descriptors that are either known to or suspected to have significant effects on the response. The Euclidean distances between these descriptor values could potentially be used to be define some appropriate prior similarity measure for the LVs. However, in this work we focus on the more general setting where no such domain knowledge exists.

In the absence of any domain information, we define the following weakly informative prior over the LVs for :

| (10) |

where is a precision hyperparameter that is common for all LVs for , and is jointly inferred along with these LVs. Note that different qualitative factors have different precision hyperparameters. The prior (10) encourages the values of the LVs to be concentrated towards 0. This has an indirect effect of shrinking the distances between the different levels in the LV space, and can be viewed as a form of regularization of the effects of . The degree of regularization for is controlled by the precision . The factor of is included to account for the fact that the number of LVs to be estimated increases with and a larger regularization will be needed for larger values of .

Alternatively, one can place a completely non-informative prior on the LVs. In practice, one can approximate this via i.i.d. uniform priors with relatively large lower and upper limits (e.g., -10 and 10, respectively). With a completely non-informative prior, there is effectively no regularization on the effects of the qualitative variable. We find that the completely non-informative priors negatively impacted MCMC convergence, irrespective of the choice of the MCMC algorithm. We will, therefore, restrict our attention to the weakly informative prior (10).

With a stationary kernel over the LVs, the correlations between the different levels is dependent only on the pairwise distances between the corresponding LV values of the levels. Therefore, unconstrained LVs are subject to translation and rotation symmetries under the LVGP likelihood (see Figure 2 for illustration in a 2D LV space). While the prior (10) reduces translation symmetries in the LVs under the posterior, rotation symmetries still exist - the two sets of LVs and have the same posterior probabilities, where is any orthogonal matrix in . This rotational symmetry in the LVs can potentially reduce sampling efficiencies of the MCMC scheme, which would necessitate large number of MCMC draws, thereby increasing the computational expense of fully Bayesian inference. To eliminate these symmetries, [39] fix the coordinate frame of reference. For example, in a 2D LV space, they constrain the mapped LVs for the first level, , at the origin, and those of the second level, , to lie on the horizontal axis. For a general , they set , , and for . This results in fewer free parameters. However, a challenge with this parameterization for fully Bayesian inference is that the prior distribution cannot represent independent and identically distributed LVs, because this parameterization treats the first levels differently from the other levels. For example, under independent normal or uniform prior distributions with mean at 0, the LVs for any level would be closer on average to the first level than to any other level. This treatment of the LVs is problematic, because the ordering of the levels is arbitrary, and it can affect the quality of the LVGP model, especially with a small number of training observations, or when some of the levels are unobserved in the training data.

To rectify this, we instead modify the prior (10) by first defining “raw” LV parameters , which are i.i.d. for all levels, and then transforming them to the coordinate frame of reference used by [39] to obtain the actual LVs. We first consider the case of a 2D LV space. The resulting two-stage prior is as follows:

| (11) | |||

| (12) |

where

| (13) |

and is a rotation matrix in parameterized by and given by

| (14) |

In (12), the raw LVs are first translated so that the first level is at the origin, and then rotated by so that the mapped values of the second level lie on the horizontal axis. Note that the mapped LVs for the remaining levels are not constrained to lie on either axis. The prior distribution on the actual LVs are, clearly, not i.i.d., although by symmetry arguments the distances between each pair of levels follow the same prior distribution. The modifications represented by (12) ensure that the results are theoretically invariant (barring numerical errors) to which two levels are chosen to lie at the origin and on the horizontal axis.

For , the matrix in (12) is replaced by a product of Givens rotation matrices in dimensions. Givens rotation matrices in -dimensions, , are matrices that take the form of the identity matrix except for the and positions which are replaced by , and the and positions which are replaced by and respectively. The LVs for a general are given by

| (15) |

where

| (16) |

and is set such that the LV for the level to be zero. In practice, the matrix multiplication operations in (15) are implemented sequentially using Algorithm 1.

With this formulation, for a qualitative variable with levels and using a -dimensional LV spave, there are parameters to estimate. And so, with qualitative variables, we have parameters to estimate. Algorithm 1 has an computational cost for a qualitative variable with L levels and d number of latent variable dimensions. However, the main computational cost in each posterior evaluation comes from computing the Cholesky factorization, which is .

Finally, for a prior distribution on , we consider the Gamma distribution. The support of the Gamma distribution is the set of positive real numbers. Larger values of result in larger amounts of regularization on the LVs. The Gamma distribution has two additional hyperparameters, the concentration and the rate . We restrict and set , so that the mode of the distribution is at . This results in only a single hyperprior parameter. We find that the convergence and performance of the model is not sensitive to the choice of . Refer to Appendix A for more details. In our numerical experiments in Section 5, we use .

3.2 Predictions and UQ

In this section, we briefly discuss our choice of the MCMC algorithm for drawing samples from the posterior distribution of the LVGP hyperparameters, and then we state the formulae and procedures for obtaining predictions and confidence intervals on these predictions.

The joint posterior distribution for the LVs and the other GP hyperparameters is typically high dimensional. The MCMC algorithm should have a few desired properties in order to efficiently converge to this posterior distribution. Firstly, the algorithm needs to suppress random walk behavior for the Markov chain to mix well in high dimensions. Otherwise, the chain will take too long to converge to the posterior, and thereby require many evaluations for the posterior. Secondly, if the algorithm has additional tuning parameters, there must be a mechanism to tune them during the burn-in or warm-up phase. Among the various algorithms that we have tested, the No-U-Turn-Sampler [20] algorithm111as implemented by the NumPyro [28, 7] library in Python satisfies both properties, and is found to work well for our case.

Under MCMC sampling, the posterior predictive distribution of at input is approximated as a Gaussian mixture distribution

| (17) |

where are MCMC samples drawn from , and and are defined in (3) and (4) respectively. The mean and variance of this distribution are straightforward to compute and are as follows:

| (18) | ||||

| (19) |

In this work, we define UQ in terms of prediction intervals on the predicted response (as a function of the inputs). Unfortunately, there are no analytical expressions for the quantiles of a Gaussian mixture distribution. So, the variance term (19) cannot be used to directly compute the quantiles. Instead, we estimate the quantiles empirically by drawing samples from this distribution as shown in Algorithm 2. When computing the confidence intervals in our experiments in Section 5, we use about samples. This is likely overkill, and we could obtain sufficiently accurate confidence intervals using as few as 500-1000 samples. However, the confidence interval computations are very fast, in part because the operations in Algorithm 2 can be easily vectorized. So, we erred on the side of caution and used a large number of samples.

3.3 Latent space interpretation

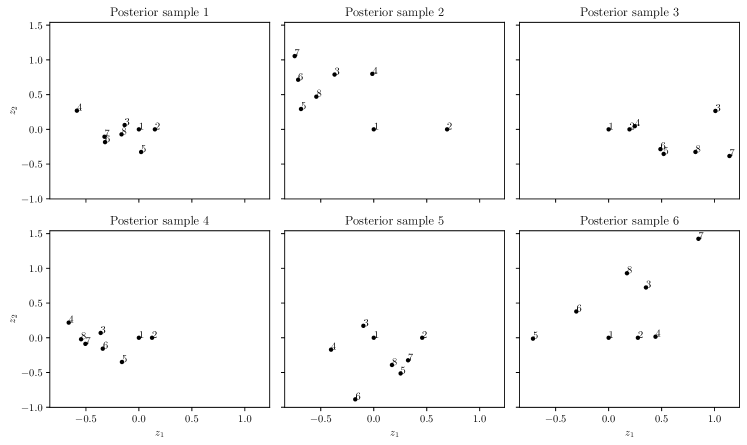

The LV mapping provides an inherent ordering and structure for the different levels of a qualitative input, in terms of their effects on the response. Therefore, a secondary benefit of the LVGP approach is to provide an interpretation of the effects of these different levels. Interpreting the LVs with fully Bayesian inference is, however, less straightforward. As shown in Figure 3, there are multiple latent spaces, each corresponding to a different MCMC sample drawn from the posterior. Since the estimation uncertainty in the LVs can be large, especially with small training data sets, the variation in the MCMC latent space samples can be large. Due to this sample-to-sample variability, the different latent space samples can result in different interpretations of the relations among the levels, thereby making interpretation of the effects of the qualitative variable challenging.

To resolve this ambiguity in interpretation and to help average out sample-to-sample variability, we propose to find a common latent space that reconciles the differences between the different latent spaces by minimizing a discrepancy measure over these different latent spaces. Since the latent variables are ultimately used to compute the covariance matrix over the levels, we define the discrepancy measure in terms of differences in their resulting covariance matrices. In the following, we drop the subscript from the LVs for notational convenience. For a LV mapping for a qualitative input with levels, let denote the corresponding LV matrix. Let denote the covariance matrix across levels corresponding to the LV matrix , where the element at the row and column is . We define the discrepancy measure between two LV matrices and (e.g., corresponding to two different MCMC draws of the LVs) as , where is the Frobenius norm.

Let be the latent variable matrices corresponding to the different hyperparameter samples from the posterior. We find a representative latent variable matrix as

| (20) | ||||

| (21) |

where is the LV for the level. Constraints (21) fix the coordinate frame of reference to the one used by [39] to deal with the translation and rotation symmetries in the LVs, which were discussed in Section 3.1.

Once is obtained, the representative LV space can be interpreted in a similar way as the LV space obtained from point estimates. For systems with multiple qualitative inputs, the optimization problem (20) is run separately for each qualitative input.

For illustration, consider the borehole function, that is commonly used to illustrate GP modeling [26]. The flow rate of water through a borehole, that is drilled from the ground surface through two aquifers is

where the 8 inputs are (). We modify this model by first discretizing two underlying numerical variables and to have 4 levels each, and then creating a new qualitative variable whose 16 levels represent discrete combinations of those two inputs, as shown in Figure 4a. The total Sobol sensitivity indices [34] of the original numerical inputs and are 0.86 and 0.05, respectively. Therefore, is much more important than , and we would hope that the (representative) latent space for reflects this. In particular, we would hope that the levels corresponding to the same value (for e.g. levels 1,2,3, and 4) to be closer to each other on average than those with different values. We consider two different training set sizes of and observations, corresponding to having 2 and 4 observations per each level. The training sets are generated using a two-step design of experiments (DoE) approach. First, the quantitative variables are generated using Latin hypercube sampling. Then, the qualitative factor levels of each data point are assigned using random stratified sampling, with stratification on the levels to ensure that each level occurs for the same number of training observations.

The estimated representative LV spaces for the two training sets are shown in Figures 4b and 4c, respectively. In both cases, the LV corresponds roughly to , and the corresponds fairly closely to , more so for the set of 64 training observations. Moreover, levels with the same value are closer to each other than those with different values. Note that the distances between different levels in the (representative) LV space are larger in the case of the training set with 32 observations, and the agreement between the estimated LV space and the true LV space is not as tight as for the case of 64 training observations. This is likely due to larger estimation uncertainty with just 2 observations per level, and hence, greater discrepancies between the different posterior LV samples. Also, the effect of is not very clear for the smaller training set, since the orderings of the levels are different at different values. However, with the larger training set in Figure 4c, the effects of both and are more clear. In fact, we can conclude that approximately represents the effects of , while the approximately represent the effects of .

The above example shows that LVGP with Bayesian inference can offer useful interpretations even with small training datasets. As we collect more data, we can draw more definitive conclusions from the representative LV space.

4 Scaling fully Bayesian inference for LVGPs using sparse approximations

GPs, and LVGPs by extension, do not scale well with . The computational requirements scale as time and as memory. In addition, LVGPs have many more parameters to estimate than regular GPs, as the latent variables must also be estimated. Since fully Bayesian inference typically requires many more likelihood evaluations than does maximum likelihood estimation, approximations may be needed for certain applications with larger datasets. In this section, we consider sparse approximations to the GP likelihood (5) using inducing points. These methods lower the computational costs to time and memory, where is a pre-defined number of inducing points. We also address a few challenging issues that arise in defining inducing points for the qualitative inputs.

Inducing point methods augment the model with inducing points (also referred to as pseudo-inputs) and their corresponding function values . A common assumption behind all inducing point methods is that the function value at a test location and the function values at the training locations are conditionally independent given . Under this assumption, we use the standard approximation [30, 35]

| (22) |

where the are some approximating distributions. The function values are marginalized analytically in the right-hand side of (22), leaving the inducing points as the only additional parameters. We consider two such methods - the fully independent training conditional (FITC) [33], and the variational free energy (VFE) [35] methods. The two methods use slightly different approximating distributions and likelihood objectives but have quite different performances in practice. See [3] for more details.

These inducing point methods were developed for numerical inputs, for which the inducing points are continuous parameters. However, the domain for qualitative inputs is a finite set of values, which requires inference techniques over a mixed-variable parameter space. To avoid difficulties associated with such inference, we first define the inducing points for a qualitative variable in its corresponding latent variable space. We then relax the constraint that they belong to the finite set of the mapped LV values (illustrated in Figure 5a), and allow them to lie in the convex hull of this set, as illustrated in Figure 5b. To ensure the inducing points lie in the convex hull, we represent the inducing point location for , denoted by , as

| (23) |

where are weight parameters with , and . These can be further expressed as a function of simple bound-constrained parameters (see [6], e.g.). If the mapped LV values are fixed, could potentially be represented using fewer weight parameters, i.e., those corresponding to the levels whose mapped values make up the vertices of the convex hull. However, the mapped LV values must be estimated from data in practice. The subset of levels whose mapped values make up the vertices of the convex hull can repeatedly change during optimization which complicates the joint estimation of the inducing points and the LVs using this sparser parameterization. In contrast, the formulation (23) allows for more convenient joint optimization of the inducing points and the LVs.

For tractable fully Bayesian inference over these sparse models, we perform fully Bayesian inference only over the LVs and other LVGP hyperparameters and we fix the parameters for the inducing points (of both the numerical and the qualitative variables) to their maximum likelihood estimates.

5 Numerical studies

In this section, we present numerical studies to compare plug-in inference with MAP estimates against fully Bayesian inference for the LVGP. As in [39], we will use a 2-D latent space for all the case studies. For each case study, we compare the methods using two metrics. The prediction quality of the methods is quantified as the relative root mean-squared error (RRMSE) of their predictions over test points:

| (24) |

where and denote the true and predicted values respectively for the test sample, and is the average across the true test observations. To assess the quality of UQ, we use the negatively oriented mean interval score (MIS) [15] for the 95% (central) prediction intervals. For an observation , the interval score for a 95% prediction interval is given by

| (25) |

where denotes the positive part of the argument. The interval score penalizes confidence intervals that do not contain their corresponding observations. Since fully Bayesian inference accounts for the uncertainty in estimating the LVs while plugin inference does not, the confidence intervals obtained through fully Bayesian inference will be wider than those obtained through plugin inference, and therefore are less likely to incur this penalty. However, the MIS also penalizes overly wide confidence intervals. The MIS results in Sections 5.1 and 5.2 indicate that our Bayesian LVGP model results in superior uncertainty quantification by appropriately widening, but not overly widening, the confidence intervals. Finally, we also report the coverage probabilities for these 95% prediction intervals. The Python code used for these experiments can be found at https://github.com/syerramilli/lvgp-bayes.

5.1 Engineering models

We first compare the performance of the two methodologies on a set of engineering models that are commonly used for assessing surrogate models with numerical inputs. In each case, we discretize two numerical inputs and then construct a new qualitative factor whose levels represent discrete combinations of those two numerical inputs. In addition to the borehole model discussed in Section 3.3, we also consider the following models:

OTL Circuit

The midpoint voltage of a transformerless (OTL) circuit function is

where , and the 6 inputs are (). Refer to [4] for complete definitions and physical meanings of these inputs. We discretize and to have 6 and 3 levels respectively, and define the 18 levels of as the Cartesian product of the levels of and .

Piston

The resulting cycle time for a piston moving within a cylinder is

where , A = , and the 7 inputs are ( ). Refer to [4] for complete definitions and physical meanings of these inputs. We discretize amd to have 4 and 5 levels respectively, and define the 20 levels of as the Cartesian product of the levels of amd .

For each system, we randomly sample 1000 points over the input space as test data over which the metrics are computed. We compare the estimation methods for three different training set sizes, corresponding to having 2, 3, and 4 observations, respectively, per level of the qualitative variable . For example, the training set sizes for the borehole function would be 32, 48 and 64 respectively. The training sets are generated using a two-step design of experiments (DoE) approach. First, the quantitative variables are generated using Latin hypercube sampling. Then, the qualitative factor levels of each data point assigned using random stratified sampling, with stratification on the levels to ensure that each level occurs for the same number of training observations. We perform the analysis for 25 replicates, each using different training sets with a different random Latin hypercube sample in the quantitative variables.

The RRMSE results are shown in Figure 6. In all three systems, the prediction quality of fully Bayesian inference is either better than (sometimes substantially better) or comparable to that of plug-in inference. The fully Bayesian inference had slightly worse RRMSE on average only in the case of the borehole function with 32 training observations, but even in this case it resulted in better consistency across replicates (in Figure 6, its boxplot is narrower than that for the MAP approach). For most of the other situations in Figure 6, the fully Bayesian RRMSE is substantially better, with lower average RRMSE and better consistency (narrower box plots) across replicates. Regarding the consistency, even for the borehole function with 32 training observations, the fully Bayesian inference has better worse case performance, and is therefore more robust.

The corresponding MIS results are shown in Figure 7. Irrespective of training set size, there is a sizable improvement in the MIS from fully Bayesian inference. There is also much lower variance in the MIS across the different replicates, as is evident from the smaller interquartile ranges. The improvement in quality of UQ is expected, as there are many LVs to be estimated for each system, and hence the estimation uncertainty for the LVs will be large. This is reflected in the coverage probabilities, shown in Figure 8. The MAP versions have poor coverage probabilities, especially for the OTL circuit function. While their coverage probabilities improve with increased training set sizes, they are still quite inaccurate. In contrast, with fully Bayesian inference, the models consistently have far better coverage probabilities irrespective of the training set size.

5.2 Material design datasets

We now compare the estimation methodologies on the two different material design applications that motivated this work. In each case, we train independent LVGP models for multiple response properties of interest as a function of the atomic compositions of the materials, which constitute the purely qualitative inputs. In material design applications, the primary bottleneck is in obtaining physical measurements or first-principles calculations for the properties of interest, as these are typically expensive and time consuming [19]. Therefore, it is important to develop cheap surrogate models that can learn efficiently from (relatively small) available datasets.

5.2.1 Electronic properties of lacunar spinels

The first dataset consists of high-fidelity density functional theory (DFT) calculations for bandgaps and stabilities of 270 material compounds belonging to the lacunar spinel family [37]. The atom has three levels Al, In, Ga, the atom has six levels V, Nb, Ta, Cr, Mo, W, the atom has five levels V, Nb, Ta, Mo, W, and the atom has 3 levels S, Se, Te. In the original study [37], the authors used the LVGP with point estimates in a multi-objective LVGP-BO framework to find suitable metal-insulator transition materials in the lacunar spinel family. For this family of materials, choosing suitable numerical features for modeling the properties was challenging, as there was a lack of a clear understanding of the microscopic interactions governing the two properties. The LVGP methodology circumvented this issue by allowing modeling of the two properties as a function of the atomic compositions alone.

Here, we are interested in assessing the change in model performance on small training sets through a fully Bayesian treatment of the LVGP hyperparameters. For this analysis, we generate training sets of sizes of 50, 75 and 100 through random sampling. For each training set of size , we use the remaining observations as the test set over which the metrics are computed. We train and test the model across 25 replicates, where the replicates use different training and test splits.

The RRMSE results are shown in Figure 9. For predicting both bandgap and stability, there is a large improvement from fully Bayesian inference irrespective of training set size. The corresponding MIS results are shown in Figure 10. The fully Bayesian inference provides a large improvement in the quality of UQ, and for all sample sizes considered. As in the previous case, this improvement in UQ is largely from much better coverage probabilities with fully Bayesian inference, as show in Figure 11. The extent of improvement in UQ may seem a bit surprising given that none of the four qualitative inputs have many levels. However, the total number of LVs to be estimated across all four qualitative variables is still large and hence, there is a significant amount of estimation uncertainty.

5.2.2 Elastic moduli of AX materials

The second dataset is from another adaptive material exploration study [2] involving a combinatorial search of compounds belonging to the family of phases. The responses variables are the three different elastic moduli - Young’s, shear, and bulk. The elastic moduli were estimated via DFT calculations. The M atom has ten levels Sc, Zr, Nb, Cr, Ti, Hf, V, W, Ta, Mo, the A atom has twelve levels Cd, Tl, In, Pb, Al, Ga, Sn, Ge, Si, As, P, S, and the X atom has two levels C, N. Among the 240 possible combinations, 17 were found to have negative elastic constants, and thus were not considered.

In the original study [2], the authors have considered GP modeling with a small number of numerical descriptors for each qualitative factor, that were expected to have large effects on the response. They used seven descriptors in total: the s-, p-, and d-orbital radii for the M atom, and the s-, and p-orbital radii for the A and X atoms. In the following, we compare the performance of the standard (for numerical inputs only) GP with the chosen descriptors against the LVGP with just the qualitative factors (i.e., the LVGP does not consider the numerical descriptors at all) for each of the three responses. In addition, we also compare the impact of fully Bayesian inference on the performance of both models. Since the X atom has only two levels, it makes no difference whether it is treated as a numerical descriptor or as a qualitative input. Therefore, we treat X as a binary numerical variable for both models. For analysis, 100 of the 223 data points are randomly selected as training data and the rest are used as the test data. We repeated this procedure for 25 replicates, where on each replicate we choose different random subsets to serve as training and test data. In all the 25 different training sets, there were no levels with missing observations.

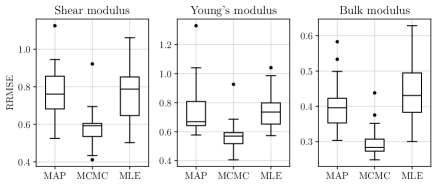

The RRMSE results are shown in Figure 12. Multiple observations that can be made from these results. Firstly, fully Bayesian inference results in a substantial improvement in the case of LVGP models, but offers much less improvement in the case of the GP models with only the numerical descriptors. This is perhaps due to the LVGP models having a much greater number of hyperparameters, and therefore a higher level of estimation uncertainty, in which case the MAP estimates may be misleading in the case of LVGPs. A second important observation from Figure 12 is that the GP models with the descriptors have consistently worse performance than the LVGP models (especially the fully Bayesian LVGP) on all three responses. This may be due to the numerical descriptors providing an insufficient representation of the effects of the qualitative variables.

The corresponding MIS results are shown in the Figure 13. As in the case of the RRMSE comparison, the fully Bayesian LVGP offers substantial improvement in UQ relative to its MAP version, whereas the fully Bayesian version of the GP model offers much less improvement over its MAP version. This intuitively makes sense, as LVGP models have more hyperparameters to estimate and hence the associated estimation uncertainty is much larger. Notice that despite having poorer predictive performance, the MAP version of the GP models have better UQ than the MAP version of the LVGP model. However, the fully Bayesian version of the LVGP model has substantially better UQ than any of the other methods (in addition to substantially better RRMSE). The trends observed in MIS are also reflected in the coverage probabilities, shown in Figure 14.

5.3 Formation energies of AB perovskites

We now consider a dataset of DFT computed formation energies of AB perovskites [12]. These compounds are widely used for thermochemical water spitting applications. In these compounds, O is the oxygen atom, while A and B are variables that represent the cations that may occupy these two sites of the compound. There are 73 elements from the periodic table that can occupy either of these sites. The dataset contains a total of 5276 compounds for which formation energies are available. To test the approach, we randomly select 1000 observations as training data, and the remainder of the 5276 compounds are used as test data. We repeated this procedure for 25 replicates, where on each replicate we choose different random subsets to serve as training and test data. In each of the 25 different training sets, there were no levels with missing observations. For this application, fully Bayesian inference with all = 1000 observations was too prohibitively expensive to run across multiple replicates. Instead, we compare the MAP version of the LVGP model with all = 1000 observations (which we refer to as the exact MAP) versus both MAP and fully Bayesian versions of the two sparse LVGP approximations discussed in Section 4. For each sparse approximation, we set the number of inducing points to 50.

The RRMSE results are shown in Figure 15a. The first panel corresponds to the exact MAP LVGP, the second to the FITC approximation (MAP and fully Bayesian), and the third to the VFE approximation (MAP and fully Bayesian). Comparing the MAP versions, we can see that the sparse models have much better prediction accuracies than the exact MAP LVGP model, which may seem surprising. However, the optimization of the exact MAP LVGP model suffers from stability issues arising from computing the Cholesky decomposition of a much larger (covariance) matrix than that for the sparse LVGP models, which explains its poorer performance. The VFE model has slightly better prediction accuracy than the FITC model. For both models, there are significant improvements in prediction accuracy with fully Bayesian inference versus MAP.

The MIS results are shown in Figure 15b. Comparing the MAP versions, the VFE model has worse UQ than the exact LVGP model. However, the FITC model has much better UQ than the exact LVGP model. With fully Bayesian inference, there are large improvements in the quality of UQ for both the sparse models. The relative improvement in UQ for the VFE model with the fully Bayesian inference is much larger than that for the FITC models. This can be explained by their respective coverage probabilities, which are shown in Figure 15c. The coverage probability of the MAP FITC model is much larger than the of the MAP VFE model.

These results show that fully Bayesian inference for the LVs and the other hyperparameters results in improved performance even for larger training sets. However, these improvements come with a large increase in computation time. As shown in Figure 15d, the training time for fully Bayesian inference (which include the training time for obtaining the MAP estimates for the inducing points) is about an order of magnitude larger than that for obtaining the corresponding MAP estimates.

6 Conclusions

In this work, we have developed a fully-Bayesian approach for LVGP modeling and conducted numerical studies comparing it to plug-in inference on a few engineering models and real datasets for material design problems. This involved developing prior distributions for the latent variables and developing an approach for interpreting the LVGP model with fully Bayesian inference. We have also developed approximations for scaling up LVGPs and for fully Bayesian inference for the LVGP hyperparameters using sparse inducing point methods. Through our numerical studies, we have observed substantial improvements in UQ from the fully Bayesian approach in every example that we considered. Moreover, and somewhat surprisingly, the predictive accuracy, as measured by the RRMSE, was often improved substantially and never significantly worsened. Finally, the LVGP with fully Bayesian inference is as interpretable as the LVGP with point estimates using our proposed approach for constructing a single representative LV space. We, therefore, advocate for a fully Bayesian treatment of the LVGP hyperparameters, especially when one or more qualitative inputs have many levels.

Acknowledgements

This work was supported by the Advanced Research Projects Agency-Energy (ARPA-E), U.S. Department of Energy, under Award Number DE-AR0001209. This research was also supported, in part, through the computational resources and staff contributions provided for the Quest high-performance computing facility at Northwestern University, which is jointly supported by the Office of the Provost, the Office for Research, and Northwestern University Information Technology.

References

- Arendt et al. [2012] Paul D. Arendt, Daniel W. Apley, and Wei Chen. Quantification of Model Uncertainty: Calibration, Model Discrepancy, and Identifiability. Journal of Mechanical Design, 134(10), 09 2012.

- Balachandran et al. [2016] Prasanna V Balachandran, Dezhen Xue, James Theiler, John Hogden, and Turab Lookman. Adaptive strategies for materials design using uncertainties. Scientific reports, 6(1):1–9, 2016.

- Bauer et al. [2016] Matthias Bauer, Mark van der Wilk, and Carl Edward Rasmussen. Understanding probabilistic sparse Gaussian process approximations. Advances in neural information processing systems, 29, 2016.

- Ben-Ari and Steinberg [2007] Einat Neumann Ben-Ari and David M. Steinberg. Modeling data from computer experiments: An empirical comparison of kriging with mars and projection pursuit regression. Quality Engineering, 19(4):327–338, 2007. doi: 10.1080/08982110701580930.

- Benassi et al. [2011] Romain Benassi, Julien Bect, and Emmanuel Vazquez. Robust Gaussian process-based global optimization using a fully Bayesian expected improvement criterion. In Carlos A. Coello Coello, editor, Learning and Intelligent Optimization, pages 176–190, Berlin, Heidelberg, 2011. Springer Berlin Heidelberg. ISBN 978-3-642-25566-3.

- Betancourt [2012] Michael Betancourt. Cruising the simplex: Hamiltonian Monte Carlo and the Dirichlet distribution. In AIP Conference Proceedings 31st, volume 1443, pages 157–164. American Institute of Physics, 2012.

- Bingham et al. [2019] Eli Bingham, Jonathan P Chen, Martin Jankowiak, Fritz Obermeyer, Neeraj Pradhan, Theofanis Karaletsos, Rohit Singh, Paul Szerlip, Paul Horsfall, and Noah D Goodman. Pyro: Deep universal probabilistic programming. The Journal of Machine Learning Research, 20(1):973–978, 2019.

- Byrd et al. [1995] Richard H. Byrd, Peihuang Lu, Jorge Nocedal, and Ciyou Zhu. A limited memory algorithm for bound constrained optimization. SIAM Journal on Scientific Computing, 16(5):1190–1208, 1995. doi: 10.1137/0916069.

- Chen et al. [2016] Hao Chen, Jason L. Loeppky, Jerome Sacks, and William J. Welch. Analysis Methods for Computer Experiments: How to Assess and What Counts? Statistical Science, 31(1):40 – 60, 2016. doi: 10.1214/15-STS531.

- Chen et al. [2017] Hao Chen, Jason L. Loeppky, and William J. Welch. Flexible correlation structure for accurate prediction and uncertainty quantification in Bayesian Gaussian process emulation of a computer model. SIAM/ASA Journal on Uncertainty Quantification, 5(1):598–620, 2017. doi: 10.1137/15M1008774.

- Deng et al. [2017] X. Deng, C. Devon Lin, K.-W. Liu, and R. K. Rowe. Additive Gaussian process for computer models with qualitative and quantitative factors. Technometrics, 59(3):283–292, 2017. doi: 10.1080/00401706.2016.1211554.

- Emery and Wolverton [2017] Antoine A Emery and Chris Wolverton. High-throughput DFT calculations of formation energy, stability and oxygen vacancy formation energy of ABO3 perovskites. Scientific data, 4(1):1–10, 2017.

- Forrester and Jones [2008] Alexander Forrester and Donald Jones. Global optimization of deceptive functions with sparse sampling. In 12th AIAA/ISSMO multidisciplinary analysis and optimization conference, 2008.

- Gardner et al. [2018] Jacob Gardner, Geoff Pleiss, Kilian Q Weinberger, David Bindel, and Andrew G Wilson. GPyTorch: Blackbox matrix-matrix Gaussian process inference with GPU acceleration. In S. Bengio, H. Wallach, H. Larochelle, K. Grauman, N. Cesa-Bianchi, and R. Garnett, editors, Advances in Neural Information Processing Systems, volume 31, pages 7576–7586. Curran Associates, Inc., 2018.

- Gneiting and Raftery [2007] Tilmann Gneiting and Adrian E Raftery. Strictly proper scoring rules, prediction, and estimation. Journal of the American Statistical Association, 102(477):359–378, 2007. doi: 10.1198/016214506000001437.

- Han et al. [2009] Gang Han, Thomas J. Santner, William I. Notz, and Donald L. Bartel. Prediction for computer experiments having quantitative and qualitative input variables. Technometrics, 51(3):278–288, 2009. doi: 10.1198/tech.2009.07132.

- Handcock and Stein [1993] Mark S. Handcock and Michael L. Stein. A Bayesian analysis of kriging. Technometrics, 35(4):403–410, 1993. doi: 10.1080/00401706.1993.10485354.

- Helbert et al. [2009] Céline Helbert, Delphine Dupuy, and Laurent Carraro. Assessment of uncertainty in computer experiments from universal to Bayesian kriging. Applied Stochastic Models in Business and Industry, 25(2):99–113, 2009.

- Himanen et al. [2019] Lauri Himanen, Amber Geurts, Adam Stuart Foster, and Patrick Rinke. Data-driven materials science: Status, challenges, and perspectives. Advanced Science, 6(21):1900808, 2019. doi: 10.1002/advs.201900808.

- Hoffman and Gelman [2014] Matthew D. Hoffman and Andrew Gelman. The No-U-Turn sampler: adaptively setting path lengths in Hamiltonian Monte Carlo. Journal of Machine Learning Research, 15(47):1593–1623, 2014.

- Iyer et al. [2019] Akshay Iyer, Yichi Zhang, Aditya Prasad, Siyu Tao, Yixing Wang, Linda Schadler, L. Catherine Brinson, and Wei Chen. Data-Centric Mixed-Variable Bayesian Optimization for Materials Design. volume Volume 2A: 45th Design Automation Conference of International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, 08 2019. doi: 10.1115/DETC2019-98222.

- Iyer et al. [2020] Akshay Iyer, Yichi Zhang, Aditya Prasad, Praveen Gupta, Siyu Tao, Yixing Wang, Prajakta Prabhune, Linda S. Schadler, L. Catherine Brinson, and Wei Chen. Data centric nanocomposites design via mixed-variable Bayesian optimization. Molecular Systems Design and Engineering, 5:1376–1390, 2020. doi: 10.1039/D0ME00079E.

- Lalchand and Rasmussen [2020] Vidhi Lalchand and Carl Edward Rasmussen. Approximate Inference for Fully Bayesian Gaussian Process Regression . In Cheng Zhang, Francisco Ruiz, Thang Bui, Adji Bousso Dieng, and Dawen Liang, editors, Proceedings of The 2nd Symposium on Advances in Approximate Bayesian Inference, volume 118 of Proceedings of Machine Learning Research, pages 1–12. PMLR, 08 Dec 2020.

- Lawrence [2003] Neil Lawrence. Gaussian process latent variable models for visualisation of high dimensional data. Advances in neural information processing systems, 16, 2003.

- Minasny et al. [2011] Budiman Minasny, Jasper A. Vrugt, and Alex B. McBratney. Confronting uncertainty in model-based geostatistics using Markov Chain Monte Carlo simulation. Geoderma, 163(3):150–162, 2011. ISSN 0016-7061. doi: 10.1016/j.geoderma.2011.03.011.

- Morris et al. [1993] Max D. Morris, Toby J. Mitchell, and Donald Ylvisaker. Bayesian design and analysis of computer experiments: Use of derivatives in surface prediction. Technometrics, 35(3):243–255, 1993. doi: 10.1080/00401706.1993.10485320.

- Pelamatti et al. [2021] Julien Pelamatti, Loïc Brevault, Mathieu Balesdent, El-Ghazali Talbi, and Yannick Guerin. Bayesian optimization of variable-size design space problems. Optimization and Engineering, 22(1):387–447, 2021.

- Phan et al. [2019] Du Phan, Neeraj Pradhan, and Martin Jankowiak. Composable effects for flexible and accelerated probabilistic programming in numpyro. arXiv preprint arXiv:1912.11554, 2019.

- Qian et al. [2008] Peter Z. G Qian, Huaiqing Wu, and C. F. Jeff Wu. Gaussian process models for computer experiments with qualitative and quantitative factors. Technometrics, 50(3):383–396, 2008. doi: 10.1198/004017008000000262.

- Quiñonero-Candela and Rasmussen [2005] Joaquin Quiñonero-Candela and Carl Edward Rasmussen. A unifying view of sparse approximate Gaussian process regression. Journal of Machine Learning Research, 6(65):1939–1959, 2005.

- Roustant et al. [2020] Olivier Roustant, Espéran Padonou, Yves Deville, Aloïs Clément, Guillaume Perrin, Jean Giorla, and Henry Wynn. Group kernels for Gaussian process metamodels with categorical inputs. SIAM/ASA Journal on Uncertainty Quantification, 8(2):775–806, 2020. doi: 10.1137/18M1209386.

- Shahriari et al. [2016] Bobak Shahriari, Kevin Swersky, Ziyu Wang, Ryan P Adams, and Nando De Freitas. Taking the human out of the loop: A review of Bayesian optimization. Proceedings of the IEEE, 104(1):148–175, 2016.

- Snelson and Ghahramani [2005] Edward Snelson and Zoubin Ghahramani. Sparse Gaussian processes using pseudo-inputs. Advances in Neural information processing systems, 18, 2005.

- Sobol [2001] I.M Sobol. Global sensitivity indices for nonlinear mathematical models and their Monte Carlo estimates. Mathematics and Computers in Simulation, 55(1):271–280, 2001. ISSN 0378-4754. doi: 10.1016/S0378-4754(00)00270-6. The Second IMACS Seminar on Monte Carlo Methods.

- Titsias [2009] Michalis Titsias. Variational learning of inducing variables in sparse gaussian processes. In David van Dyk and Max Welling, editors, Proceedings of the Twelth International Conference on Artificial Intelligence and Statistics, volume 5 of Proceedings of Machine Learning Research, pages 567–574, Hilton Clearwater Beach Resort, Clearwater Beach, Florida USA, 16–18 Apr 2009. PMLR.

- Titsias and Lawrence [2010] Michalis Titsias and Neil D. Lawrence. Bayesian Gaussian process latent variable model. In Yee Whye Teh and Mike Titterington, editors, Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, volume 9 of Proceedings of Machine Learning Research, pages 844–851, Chia Laguna Resort, Sardinia, Italy, 13–15 May 2010. PMLR.

- Wang et al. [2020] Yiqun Wang, Akshay Iyer, Wei Chen, and James M. Rondinelli. Featureless adaptive optimization accelerates functional electronic materials design. Applied Physics Reviews, 7(4):041403, 2020. doi: 10.1063/5.0018811.

- Zhang et al. [2020a] Yichi Zhang, Daniel W Apley, and Wei Chen. Bayesian optimization for materials design with mixed quantitative and qualitative variables. Scientific reports, 10(1):1–13, 2020a.

- Zhang et al. [2020b] Yichi Zhang, Siyu Tao, Wei Chen, and Daniel W. Apley. A latent variable approach to Gaussian process modeling with qualitative and quantitative factors. Technometrics, 62(3):291–302, 2020b. doi: 10.1080/00401706.2019.1638834.

- Zhou et al. [2011] Qiang Zhou, Peter Z. G. Qian, and Shiyu Zhou. A simple approach to emulation for computer models with qualitative and quantitative factors. Technometrics, 53(3):266–273, 2011. doi: 10.1198/TECH.2011.10025.

- Zhu et al. [1997] Ciyou Zhu, Richard H. Byrd, Peihuang Lu, and Jorge Nocedal. Algorithm 778: L-BFGS-B: Fortran subroutines for large-scale bound-constrained optimization. ACM Transactions on Mathematical Software, 23(4):550–560, dec 1997. ISSN 0098-3500. doi: 10.1145/279232.279236.

Appendix A Sensitivity of results to the choice of hyperprior

As mentioned in Section 3.1, we place a Gamma prior on the precision hyperparameter , and constrain the concentration hyperparameter and set the rate hyperparameter , so that the mode of this prior will always be 1, irrespective of the value of . In this section, we test the sensitivity of the LVGP model to the value of on a couple of cases in Section 5. Refer to Figures 16 and 17. We can see that there are no significant differences in prediction accuracy and uncertainty quantification across different values of between 1.1 and 3.0.

Appendix B Training times

In this section, we report the training times for both MAP and fully Bayesian inference for the examples discussed in Sections 5.1 and 5.2. Note that we use different libraries for performing MAP estimation and fully Bayesian inference. We use GPyTorch [14], PyTorch and Scipy for obtaining the MAP estimates, while we use JAX and NumPyro [28, 7] for running the No-U-Turn sampler [20] algorithm. As such, they have different computational overheads, which can be significant for small training set sizes.

The training times for the engineering models discussed in Section 5.1 are shown in Figure 18. For these models, fully Bayesian inference is actually faster than obtaining MAP estimates with smaller training set sizes, which could be due to a larger computational overhead with obtaining the MAP estimates. However, for the largest training set in each case, fully Bayesian inference is slower. Another odd result here is that for the piston model, the training times for the MAP models actually decreased with the number of training observations. On a closer look, we found that the total number of likelihood evaluations during optimization also decreased considerably with the number of training set observations, and this decrease more than offset the increase in the computational expense of each likelihood evaluation.

Appendix C Comparison of the representative latent space and the MAP latent space

In Section 3.3, we proposed to construct a representative latent space to average out the sample-to-sample differences in the estimated LVs across each MCMC sample, and then use this representative latent space to interpret the effects of the qualitative variable. Alternatively, one might consider choosing the single latent sample corresponding to the MAP estimate for interpretation. These latent spaces can differ in terms of the positions of the levels in the latent space, and hence can result in slightly different interpretations. Consider the scenario in Figure 21, where we plot the latent space corresponding to the MAP estimate (left plot) and the representative latent space (right plot) for the M-site element when modeling the Shear modulus of materials. There are a few differences in the relative positions of the levels. For example, element Mo is close to elements Ti, Hf, and Zr in the MAP latent space, while it is much further away from them in the representative latent space. Aside from these differences, the two latent spaces largely agree with each other.

Appendix D Comparison with maximum likelihood estimates

In the numerical studies, we have used the MAP estimates for plug-in inference. Alternatively, one can use the maximum likelihood estimates (MLEs). The MLE can be viewed as a MAP estimate with a completely non-informative prior. The performance of the MLE estimates for the LVGP models on the examples in Section 5.1 and 5.2 are shown in Figures 22 and 23 for the engineering models, Figure 24 and 25 for the lacunar spinels, and Figure 26 for the materials. In general, we find that MLE estimates are less robust and slower to compute than the MAP estimates.

Appendix E Optimizing the sparse LVGP approximations

For the sparse LVGP approximations, one must optimize the regular hyperparameters (including the latent variables), and the parameters for the inducing points . For a qualitative variable with levels, we characterize its inducing points through the weight parameters described in (4.2), which can be further expressed through bound constrained parameters [6]. Thus, there are inducing point parameters for a qualitative variable with levels.

Let denote the covariance matrix of the function values at the training locations , denote the covariance matrix of the function values at the induncing point locations locations , and denote the covariance matrix between and . Using the notation of [3], the likelihood objective for the FITC [33] the VFE [36] approximation is

| (26) |

where is a rank-M approximation to , and

| (27) | ||||||

| (28) |

This objective for the sparse LVGP approximations can be optimized with any standard numerical optimization algorithm. In our experiments, we use the L-BFGS-B algorithm [8, 41] with multiple restarts, since the objective (26) typically has multiple local optima. In practice, we find that models with the FITC approximation are much easier to optimize than models with the VFE approximation. We also find that initializing the optimization for the VFE model using the global optima of the corresponding FITC model finds the global optima for the VFE model much faster than with multi-start numerical optimization alone.