Full Reference Video Quality Assessment for Machine Learning-Based Video Codecs

Abstract

Machine learning-based video codecs have made significant progress in the past few years. A critical area in the development of ML-based video codecs is an accurate evaluation metric that does not require an expensive and slow subjective test. We show that existing evaluation metrics that were designed and trained on DSP-based video codecs are not highly correlated to subjective opinion when used with ML video codecs due to the video artifacts being quite different between ML and video codecs. We provide a new dataset of ML video codec videos that have been accurately labeled for quality. We also propose a new full reference video quality assessment (FRVQA) model that achieves a Pearson Correlation Coefficient (PCC) of 0.99 and a Spearman’s Rank Correlation Coefficient (SRCC) of 0.99 at the model level. We make the dataset and FRVQA model open source to help accelerate research in ML video codecs, and so that others can further improve the FRVQA model.

1 Introduction

Internet traffic statistics show that internet video traffic will be 82% of all consumer Internet traffic by 2022, up from 73% in 2017, which is a compound annual growth rate of 34%[2]. This number is only expected to grow, with the ever-increasing popularity of the video social media platforms like TikTok, Reels, and YouTube, and videoconferencing applications like Microsoft Teams and Zoom. Since the physical internet infrastructure is limited and cannot be scaled up fast enough to keep up with the exponential growth of internet traffic, video transmission has the potential to choke up the internet[16]. Video compression technologies enable video to be streamed across the internet at a small fraction of the uncompressed bandwidth, which enables video streaming and video conferencing applications to be possible. These video compression methods involve fast and efficient hand-coded digital signal processing (DSP) algorithms to reduce the size of the video files by over 1000x with an acceptable reduction in quality.

In the late 1980s, the common H.26X video compression standards were introduced, starting with H.261 in 1988 [13], followed by H.262 in 1994 [1], H.263 in 1996 [37], H.264 in 2003 [49], H.265 in 2013 [41], and the latest one H.266 in 2020 [6]. Each one of these DSP codecs roughly doubled the coding efficiency of their predecessors, and the average time between versions for the past three codecs is 8.5 years.

Machine learning (ML) is now actively being used to improve the coding efficiency of video codecs. However, the vast majority of ML video codecs [22, 23, 28, 29, 27, 4] only evaluate the codec quality using metrics like Peak Signal-to-Noise ratio (PSNR) [12] and Multi-Scale Structural Similarity Index Measure (MS-SSIM) [48], which as we show in the paper, are poorly correlated with human subjective quality assessment.

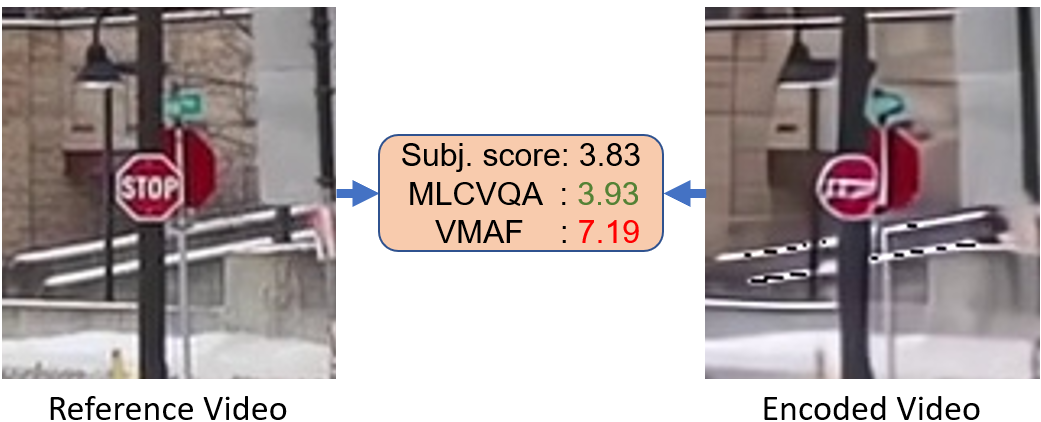

The development and adoption of ML video codecs have faced a roadblock in terms of perceptual quality evaluation, i.e., measuring how good or bad a human viewer perceives the videos compressed by an ML video codec. ML video codecs have different artifacts than DSP-based video codecs, as shown in Fig. 1. Existing FRVQAs do not work well with ML video codecs (see Section 5). The gold standard approach for measuring perceptual quality is subjective testing in which a group of test participants watch the processed videos and rate their quality following different standardized test methods depending on the use case, e.g., ITU-T Rec. P.910 [34], P.911 [35], P.913 [36], and ITU-R BT.500 [7]. Besides the test method, the standards define the viewing conditions, participant qualification tests, and device properties to reduce their effects on subjective ratings. The aggregated ratings represent the processing system’s perceptual quality and are used to directly compare the performance of different codecs. This process is costly and time-consuming, especially since we expect many versions of a codec during development, differing in model architectures, model sizes, and hyper-parameters among other possible design choices.

In order to address this issue, we propose an ML-based video quality assessment model that achieves a PCC of 0.99, SRCC of 0.99, and Kendall’s Tau-b 95 (see Section 4.5) of 0.93 with the subjective scores, outperforming the existing state-of-the-art objective metrics. We also release a dataset with a wide variety of videos encoded by many ML video codecs, complete with subjective scores for each video-codec pair. Our model can be used to get accurate perceptual quality measures for videos, and thus allow for faster development and evaluation of ML video codecs. We make our code, trained model, and the full dataset available to the community to accelerate the development and evaluation of ML video codecs111https://github/microsoft/MLCVQA.

Our contributions are:

-

1.

We provide the first FRVQA for ML video codecs and show it provides best-in-class results.

-

2.

We provide the first large dataset, MLVC-FRVQA, for training and testing FRVQA models for ML video codecs.

-

3.

We provide a new statistical metric, Tau-b 95, for evaluating perceptual metrics and provide the theoretical limit to an FRVQA on the given test set with this metric.

2 Related work

While learning-based methods have taken over in a majority of vision-based problem domains, video quality assessment still remains dominated by quantitative formula-based approaches. Almost all of the current video quality assessment tasks utilize metrics such as PSNR [12], Structural Similarity Index measure (SSIM) [47], and its advanced version, MS-SSIM [48]. These metrics are calculated at the frame level and use pooling, usually just a mean of frame level scores, to get the final video quality score.

Netflix developed a machine learning solution, Video Multi-method Assessment Fusion (VMAF) [44, 25], which predicts the perceptual quality using different objective quality metrics. Specifically, VMAF fuses two image quality metrics, Visual Information Fidelity (VIF) [45] and Detail Loss Metric (DLM) [24]. It also uses a very simple motion metric which is a pixel-wise temporal difference of the luminance channels between adjacent frames. VMAF fuses these metrics using support vector regression to calculate a weighted average, which measures the final perceptual quality score. Since VMAF relies on objective metrics and performs a frame-level temporal pooling of quality, it is not able to take into account the motion component of the video, which is more complex than pixel-wise differences in adjacent frames. As a result, it has a lower performance on videos with more motion. This limitation is discussed in detail in Section 5.

Recently, multiple end-to-end deep learning approaches have been proposed for video quality assessment. Xu et al. proposed C3DVQA [50], which consists of using 3D convolutions to account for the temporal nature of the video data. This allows the model to learn spatial and temporal variations, thus resulting in a better measurement of perceptual quality. One limitation is that it only uses the luminance channel, which hinders its performance since video codecs can include color distortions. It also downsizes the input video, which makes the subtle distortions caused by ML codecs hard to perceive.

Sun et al. proposed CompressedVQA [42] which is also an end-to-end trainable method for full as well as no reference video quality assessment. Their framework consists of a feature extraction module to extract features from different layers of a convolutional neural network which are transformed by hand-coded functions into “texture” and “structure” features. These transformed features are used to regress the frame level quality scores followed by a subjectively-inspired temporal pooling strategy proposed in [21] which considers the memory effect of previous frames and the hysteresis effect of the next frames to the current frame. This approach does not use 3D convolutions or any learnable pooling to better model the temporal nature of the video data. Also, like C3DVQA, CompressedVQA also downsizes the input videos.

With the success of transfer learning in computer vision, there has been considerable interest in studying the nature and re-usability of features extracted by deep models trained on large datasets such as ImageNet [9], and Kinetics [8]. Zhang et al. [52] studied the use of deep features for perceptual quality measurement of images, and the proposed Learned Perceptual Image Patch Similarity (LPIPS) metric. Tariq et al. [43] discussed the reasoning behind why deep features work as good perceptual metrics. Li et al. [20] demonstrate this idea for no-reference video quality assessment by using only the Fast pathway of the SlowFast model. In this paper, we show the effectiveness of the pre-trained SlowFast[10] model (using both slow and fast pathways) at full-reference video quality assessment.

3 Datasets

3.1 CLIC challenge dataset

The CVPR 2022 CLIC video compression challenge222http://compression.cc resulted in 12 teams compressing 30 videos in the test set targeting 1 Mbps and 0.1 Mbps average bitrate. This included 27 combinations of codecs and bitrates, including the original sequences and two sets encoded by H.264 and AV1 [14]. Overall, the resulting dataset contains 810 videos which are either processed by 27 encoder-bitrates combinations or are uncompressed videos.

The Microsoft P.910 Crowdsourcing Toolkit [32] was used to assess the quality of the videos using three different subjective test methodologies. The toolkit provides an open-source implementation of ITU-T Rec. P.910 [34] for crowdsourcing. The CLIC 2022 dataset is available at http://compression.cc.

The Absolute Category Rating (ACR) is a single-stimulus subjective test method, in which participants watch a sequence and rate the quality on a five-point discrete scale. The Degradation Category Rating (DCR) and Comparison Category Rating (CCR) are double-stimulus test methods, in which participants watch the source and the processed clips before casting their votes. In DCR, participants are informed which sequence is the reference clip and rate the level of degradation in the processed sequence compared to the reference. However, in CCR participants are not aware of which sequence is processed and rate the quality of the second clip compared to the first clip they watched. As the source clips were included in the dataset, ACR with Hidden Reference (ACR-HR) was computed as well (see [34] for further description of the test methods). On average, 21.6 valid votes per sequence in the ACR test, 12.1 in the DCR test (on a 9-point discrete scale), and 15.2 in the CCR test were collected. Table 1 reports the correlation between Mean Opinion Scores (MOS) of different test methods aggregated in clip or model level. The highest correlations are observed within double-stimulus methods.

| Test | Clip level | Model level | |||||||

|---|---|---|---|---|---|---|---|---|---|

| method | ACR | ACR-HR | DCR | CCR | ACR | ACR-HR | DCR | CCR | |

| ACR | - | 0.975 | 0.948 | 0.920 | - | 0.999 | 0.981 | 0.963 | |

| ACR-HR | 0.964 | - | 0.939 | 0.912 | 0.999 | - | 0.983 | 0.966 | |

| DCR | 0.948 | 0.935 | - | 0.962 | 0.976 | 0.981 | - | 0.990 | |

| CCR | 0.936 | 0.928 | 0.960 | - | 0.987 | 0.989 | 0.981 | - | |

According to the P.910 recommendation, the double-stimulus test methods with an explicit reference should be employed when high-quality systems are under test, or the transparency or fidelity of the underlying process or transmission should be evaluated. These test methods lead to longer test sessions and consequently higher costs compared to the single-stimulus approach (about ). We also observed a large difference in the quality rating of clips according to single- or double-stimulus tests when they contain (1) imperfect source sequence (e.g., low image quality, or slow motioned), (2) specific impairment due to encoding process (e.g., color changes or blur effect on non-saliency area), (3) source sequence with bokeh.

As FRVQA models use both reference and processed videos for prediction, we decided to use scores from a double-stimulus subjective test method. Consequently, participants are also exposed to both reference and processed videos before casting their votes. We chose DCR scores (known as DMOS) since the test set includes only high-quality video clips and the current codecs do not improve the original video quality. We repeated the DCR subjective test (9-point scale) again to increase the number of valid votes. The results of both subjective tests highly correlated (see Table 2) leading to 26.6 valid votes per clip on average. Reported statistical metrics also indicate the presence of uncertainty in subjective ratings, even in test-retest experiments, and the importance of considering this uncertainty when evaluating objective models.

| Level | SRCC | PCC | Tau-b 95 | RMSE |

|---|---|---|---|---|

| Clip | 0.975 | 0.983 | 0.900 | 0.489 |

| Model | 0.999 | 0.998 | 0.987 | 0.181 |

3.2 ML Video Codec Full Reference Video Quality Assessment (MLVC-FRVQA) Dataset

Two issues with the CVPR 2022 CLIC dataset are (1) It does not have broad coverage of spatial and temporal information (see Figure 3), and (2) It only includes high-quality videos, which is not the case in user-generated content and video conferencing scenarios. To address these issues, we created a more comprehensive dataset based on several datasets described in Table 3. Each clip in the MLVC-FRVQA is resized to the resolutions given in Table 4 and processed by ML video codecs at the target bitrates given in Table 4. The MLVC-FRVQA dataset uses 7 open source ML video codec implementations [22, 23, 18, 4, 30, 27, 51], 4 traditional DSP codecs (H.264/AVC, H.265/HEVC, H.266/VVC, AV1), and 2 from CVPR CLIC 2022 challengers, for a total of 13 codecs. The total number of rated clips in the MLVC-FRVQA dataset is .

The video clips were subjectively labeled using P.910 CCR with an average number of raters of . We used the CCR test method as a portion of videos in the MLVC-FRVQA dataset have less than perfect quality, and some of the codecs enhance the perceived quality. The MLVC-FRVQA dataset is available at https://github.com/microsoft/MLCVQA. MLVC-FRVQA is not used in the MLCVQA model in this paper due to the timing of the dataset completion.

| Source | Source clips | Encoded clips |

|---|---|---|

| UVG dataset [31] | 16 | 2496 |

| MCL-JCV [46] | 30 | 4680 |

| HEVC [5] | 25 | 3900 |

| CLIC 2022 challenge test set | 30 | 4680 |

| MS-Webcam dataset | 46 | 7176 |

| Total | 147 | 22,932 |

| Resolution | Bitrates (Kbps) |

|---|---|

| 1080p | 1000, 500, 250, 125 |

| 720p | 500, 250, 125, 62.5 |

| 480p | 250, 125, 62.5, 31.25 |

3.3 User bias correction

Raw measurements of subjective quality scores are often very noisy [3]. To get more accurate subjective quality scores and help machine learning-based FRVQA models learn easier we applied SUREAL [26]. We first checked the effectiveness of SUREAL by performing bootstrapping simulations with the VQEG HDTV datasets and the subjective quality scores collected in [32]. This dataset contains 81 votes per clip on average and we performed similar bootstrapping simulations as in Section 4.6 in the paper. N votes were randomly selected per clip with a replacement while making sure a minimum of N or 10 votes are selected from each voter. The selected votes were used to calculate both raw DMOS and SUREAL corrected DMOS and then resulting DMOS values were compared against the ground truth DMOS values - the average of all votes collected per clip - in terms of correlations and RMSE. We repeated this process 250 times per number of selected votes (N). Figure 2 shows the results. Raw DMOS aligns better with the ground truth DMOS than SUREAL corrected DMOS until the number of votes reaches around 20. The pattern is reversed after 20 votes. This may be because SUREAL is a Maximum Likelihood Estimator and it requires a certain number of samples to perform reliably per the Central Limit Theorem. Our dataset has 26 votes per clip on average and it lies on the borderline of getting benefits by applying SUREAL, so we compared our results with and without SUREAL-corrected DMOS. We trained and tested our models without any augmentation using 5-fold cross-validation. Table 5 summarizes the results. We observe that SUREAL does not improve our results significantly. We believe that this is mainly because the P.910 Crowdsourcing Toolkit [32] we used for data collection already applies intensive rater, environment, and device qualification tests, and includes rating quality tests, which altogether leads to less bias and noise in the aggregated data.

| Clip level | Model level | ||||

|---|---|---|---|---|---|

| DMOS | raw | SUREAL | raw | SUREAL | |

| PCC | 0.89 | 0.89 | 0.99 | 0.99 | |

| SRCC | 0.88 | 0.89 | 0.98 | 0.98 | |

| RMSE | 1.18 | 1.18 | 0.52 | 0.56 | |

| Tau-b 95 | 0.74 | 0.74 | 0.92 | 0.92 | |

3.4 Spatial and temporal information distribution of MLVC-FRVQA

We used the SI-TI Tool333https://github.com/VQEG/siti-tools to calculate the Spatial perceptual Information (SI) and Temporal perceptual Information (TI) of all the source videos in the MLVC-FRVQA dataset. The SI indicates the amount of spatial detail in a frame, and TI refers to the amount of temporal changes in a video sequence [34]. Figure 3 illustrates the average SI and TI distribution and 95% percentile range per video. Besides the MS-Webcam set, the average SI and TI for all videos cover a wide range of spatial and temporal information. The new MS-Webcam dataset focuses on head and shoulder scenarios, mainly representing the video conferencing use case, showing a lower range of TI values.

4 Method

We propose the Machine Learning Codec Video Quality Assessment (MLCVQA) FRVQA model, which involves a two-step approach: a feature extraction step, followed by a temporal quality prediction using the extracted features. This extends the ideas proposed in [52] and [43] which show the effectiveness of using deep features as a perceptual metric for images, to the domain of videos. Note the feature extractor is frozen during the entire process (train and test), and the weights are never updated. Different from previous learning-based approaches which specifically mention avoiding augmentations other than random crops[50] while aggressively resizing the input videos, we propose relative perceptual quality invariant (RPQI) augmentations that do not affect the perceptual quality of a compressed video relative to its original version. We show that our simple approach with RPQI augmentations outperforms the current baselines.

4.1 Feature Extraction

We choose the SlowFast [10] model pre-trained using the action recognition task on the Kinetics-400 [8] for feature extraction. This choice was motivated by: (1) the fully convolutional nature of the model, allowing input videos of arbitrary resolution, and (2) the design of SlowFast being inspired by the Human Visual system.

We extract the features using the SlowFast model pre-trained on Kinetics [8]. Specifically, we use the SlowFast 8x8 with a ResNet-101 [15] backbone available on PyTorch Hub.

For each video, we fed a sliding window of 32 frames (sampled with a step size of 2 from a chunk of 64 consecutive frames), with a stride of 16, extracting 2304-D features before the last fully connected layer. Each window thus covers seconds (64 frames / 30 fps) of the video.

As mentioned above, SlowFast is a fully convolutional model allowing us to forward each clip (sequence of frames), in full resolution () without resizing or cropping. Avoiding resizing is critical because many distortions introduced by the ML video codecs are not noticeable if the videos are resized to a smaller resolution. This is especially true for the top-performing ML video codecs from the CLIC 2022 Challenge.

For each pair of encoded video () and reference video () features, we calculate the difference () as:

| (1) |

We further concatenate this difference to the encoded video features resulting in the concatenated feature vector ():

| (2) |

Theoretically, the model should learn to model the difference on its own, but, in our experiments, we found providing the difference explicitly helps the model achieve better results as well as converge faster.

In our experiments, we noticed that in the few cases where a video does not have a lot of motion (i.e., it is more or less like a still image), then as one would expect, SlowFast features as well as learning-based baselines [50, 42] do not perform as well as frame-metric based methods such as VMAF (see Figure 10). Thus, in order to make our model more robust to these outlier videos, we also input frame-level metrics. We pool frame-level metrics such as Visual Information Fidelity (VIF) [45] and Detail Loss Metric (DLM) [24], in a similar manner as the sliding window approach described above. For each window of 32 frames, we end up with frame level metrics which are flattened to a vector of size 352. This vector is concatenated with the SlowFast features resulting in the final feature representation () of the video pair which is fed to the model:

| (3) |

4.2 Data Augmentation

In order to increase the data size, we propose augmentation strategies that do not affect a clip’s perceptual quality relative to a reference clip. For spatial augmentation, we extract features using different transforms such as horizontal and vertical flips, and rotation by small angles (). We also add a center crop () augmentation to implicitly give more weight to the center region of each frame, since the human visual system often pays more attention to the center of its view[53], thus having more impact on the perceived quality of the video.

Before settling on the final set of augmentations, we explored different strategies, including modifying the distorted videos at the just noticeable difference (JND) level, and 3x the JND level. For these experiments we included changes in brightness, contrast, hue, saturation, gamma, Gaussian noise, rotation, and horizontal flipping, parameter levels were defined through crowd-sourced P.910 DCR subjective tests. These experiments are discussed in Section 5.2 in more detail and did not improve the model’s performance.

We also augment (temporal sample) the data along the temporal axis. Specifically, given a feature representation of a video, we sample feature vectors with a step size of 2, resulting in two features of size .

We follow the standard practices of training augmentation [50, 10, 8, 39]. During training, random augmentations are applied to both the encoded video as well as the reference video. The resulting features are then sampled temporally as mentioned above. It should be noted that the same augmentation is applied to both the encoded and reference video to ensure the relative perceptual quality stays the same. We also experimented with test-time augmentation, but it showed no improvement in performance.

4.3 Model Architecture

An overview of the model is shown in Fig. 4. Our model consists of a projection layer followed by two 1-D convolutional layers and a quality prediction head.

The projection layer consists of a 1-D convolutional layer with a kernel size of 3, a stride of size 1, and padding of size 1. This layer projects the input 4608-D features to a size of 128-D. The two subsequent convolutional layers have the same configuration with the only difference being that of keeping the number of input and output channels the same, viz. 256-D. ReLU activation functions follow each convolutional layer.

We chose the kernel size of to help incorporate information from neighboring features. Setting stride and padding to ensures the temporal dimension does not change during convolutions.

After the 1-D convolutions is a 2-layer multi-layer perceptron to predict the score at each time step. This allows a fine-grained quality score prediction since the quality of a video clip can be dynamic over time. Finally, we average (arithmetic mean) the scores across the time dimension to get the final predicted score for the video.

We use Smooth L1 loss to model the error between the predicted quality score () and the ground truth MOS ():

| (4) |

4.4 Training details

We optimize the model using the Adam optimizer with a batch size of , weight decay set to , and momentum of . The maximum learning rate is set to and a linear warm-up is applied in the first epochs. After epochs the learning rate is decayed using the cosine decay schedule. We train for epochs after warm-up, which takes about minutes on 8 NVidia V100 GPUs 444We pre-extract the features to speed up the training process. Without pre-extraction, we expect the training to take longer..

4.5 Evaluation metrics

A key performance indicator for an FRVQA model is the validity of the rank order of ML video codecs produced by it and compared to the subjective test rank order. Typically, SRCC and Kendall’s Tau () [19] are used for measuring the ordinal association between two sets of scores (i.e., DMOS values from a subjective test and predicted scores from an FRVQA model). The SRCC represents the linear relationship between two rankings, for which a high coefficient can be achieved when the two ranked sets are monotonically related. Kendall’s Tau measures how much two ranking procedures agree for all combinations of item pairs in the set. It is well known that Kendall’s Tau is a better estimate of correlation in population and is the preferred method when there is a small number of items, and many tied ranks [17, 11]. It also provides a better interpretation compared to the SRCC. shows that for a randomly selected pair of items (e.g., ML video codecs), the probability that the two items will be ranked in the same order as the subjective test is higher than the probability that they will be ranked in the reverse order. In other words, it shows how far the conclusions made from the prediction of an objective model correspond to the conclusions made from the subjective test. Fig. 5 illustrates the prediction of a poor-performing FRVQA model compared to the subjective scores resulting in SRCC and . Consequently, a high SRCC score, in this case, can be a misleading metric.

Furthermore, none of these methods consider the uncertainty in subjective scores. Both methods consider two items to construct a tied rank only when they have equal numeric values. However, the MOS from a subjective test represents the sample mean and is subject to uncertainty, i.e., the numeric MOS value can change by a minor change in the rating of a single participant. Therefore, it is recommended to consider the distribution of ratings (e.g., through 95% CI ranges) when comparing MOS values of two items [33, 38]. For our evaluation, first, we create a rank order of the items given the DMOS and the 95% CI values from the subjective test according to [33], i.e., two items considered a tied rank when the DMOS value of one item is in the 95% CI range of the other item. Afterward, we calculate Kendall’s Tau-b on the provided ranked-order list (hereafter referred to as Tau-b 95). Given the higher number of tied ranks, a small number of ML video codecs, and better interpretability of Kendall’s Tau, we consider Tau-b 95 the primary metric in our evaluation.

Besides ranked-based metrics, we also calculate Pearson Correlation Coefficient (PCC) and Root Mean Square Error (RMSE) to evaluate the linearity and accuracy of predictions, respectively.

4.6 Number of votes and expected accuracy

We used a bootstrapping simulation to estimate the accuracy of DMOS scores as a function of the number of votes used per clip and model. In the simulation, N votes were randomly selected per clip (model) with replacement and used to calculate the DMOS values of that subset. Consequently, we calculated correlations and RMSE between DMOS from the subset and when the entire ratings are used. We repeated this process 200 times per the number of selected votes (N) and calculated the mean and 95% CI for all metrics555The average 95% CI for all metrics in model level was 0.001.. Fig. 6 illustrates how each metric changes as a function of the number of votes used in the calculation of DMOS. At the model level, PCC is saturated by 100 votes, whereas the SRCC shows a slight increase up to 200 votes. Kendall’s Tau-b and Tau-b 95 partially saturated by 500 votes however with only a change of 0.01 compared to 200 votes. RMSE is also saturated by 600 votes although with only a 0.05 difference compared to 200 votes (see Figure 2(b)). At the clip level, although PCC and SRCC are saturated by 25 votes, Tau-b has a steady slope within the range of simulation. Similarly, RMSE tends to decrease further when more votes are used. A similar comparison for ACR ratings showed that 40 votes per clip are recommended [32].

Subjective scores have an uncertainty that decreases by increasing the number of votes collected in a dataset. One can conclude that the prediction accuracy of a hypothetical objective model, when compared to subjective scores, may only reach the limit observed during simulation, given the number of subjective votes collected for the test set. In this paper, we use five-fold cross-validation, in which 6 videos (encoded by 27 codecs) belong to the test set in each fold (details in section 5). Consequently, the predictions of the FRVQA models were compared to the subjective DMOS values calculated from 26 votes per clip and 160 votes per model. Given the simulation result, the expected upper limit for each statistical metric is given in Table 6.

Figure 8 illustrates how the RMSE is changing as a function of the number of selected votes in the bootstrapping simulation.

| Level | SRCC | PCC | Tau-b | Tau-b 95 | RMSE |

|---|---|---|---|---|---|

| Clip | 0.992 | 0.995 | 0.930 | 0.949 | 0.252 |

| Model | 0.995 | 0.999 | 0.967 | 0.975 | 0.102 |

5 Experiments and Results

The FRVQA models predict the quality of a given compressed clip in comparison to a reference clip. This per clip score when compared to the DMOS per clip from the subjective test is referred to as clip level evaluation. However, the main focus of this paper is to compare the codec performance, which is referred to as model level evaluation, and accomplished by comparing the arithmetic mean of the DMOS values for all the videos encoded by that codec (i.e., DMOS per model), with the arithmetic mean of the predicted scores. It should be noted that each codec compressed the same set of videos, therefore the predicted scores are directly comparable. We evaluate the prediction of FRVQA models, at both the clip level and model level.

| Objective | Clip level | Model level | |||||||

|---|---|---|---|---|---|---|---|---|---|

| Model | PCC | SRCC | RMSE | Tau-b 95 | PCC | SRCC | RMSE | Tau-b 95 | |

| LPIPS | 0.84a | 0.88a | 2.28a | 0.71a | 0.95a | 0.95a | 2.38a | 0.87a | |

| MS-SSIM | 0.74a | 0.87a | 3.62a | 0.72a | 0.89a | 0.95a | 3.60a | 0.88a | |

| C3DVQA | 0.90a | 0.91a | 1.28a | 0.75a | 0.97a | 0.97b | 0.97a | 0.90 | |

| PSNR | 0.77a | 0.77a | 1.60a | 0.60a | 0.92a | 0.91a | 1.18a | 0.82a | |

| CompressedVQA | 0.94b | 0.94 | 1.01a | 0.81 | 0.97a | 0.96a | 0.77a | 0.89a | |

| VMAF (retrained) | 0.95 | 0.94 | 0.85 | 0.81 | 0.98a | 0.97a | 0.49a | 0.90b | |

| VMAF | 0.92a | 0.92b | 0.96b | 0.77 | 0.96a | 0.93a | 0.71a | 0.82a | |

| MLCVQA (ours) | 0.94 | 0.94 | 0.85 | 0.80 | 0.99 | 0.99 | 0.34 | 0.93 | |

| Augmentation | Frame Metrics | Clip Level | Model Level | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| PCC | SRCC | RMSE | Tau-b 95 | PCC | SRCC | RMSE | Tau-b 95 | |||

| ✓ | ✓ | 0.94 | 0.94 | 0.85 | 0.80 | 0.99 | 0.99 | 0.34 | 0.93 | |

| - | ✓ | 0.92 | 0.91 | 1.04 | 0.76 | 0.99 | 0.98 | 0.41 | 0.92 | |

| ✓ | - | 0.92 | 0.91 | 0.99 | 0.78 | 0.99 | 0.98 | 0.46b | 0.93 | |

| - | - | 0.89 | 0.88 | 1.18b | 0.74 | 0.99b | 0.98 | 0.52a | 0.92 | |

We report the performance of our model and other FRVQA models on the CLIC dataset (Section 3.1) with 5-fold cross-validation. For each fold, six videos (each encoded by 27 codecs) are chosen for the test split, while 24 videos are kept in the training split. For a fair comparison, we retrained our model, VMAF, C3DVQA, and CompressedVQA for each fold with and without augmented data and always report the best results for each method in Table 7. For PSNR, MS-SSIM, VMAF, and LPIPS 666LPIPS calculates distance d between a processed and a reference frame. For a video with frames, is considered as LPIPS’ quality prediction. we calculated their performance in each test fold as well. Performance of all FRVQA models777Best performing instance of each model (with or without augmentation) is reported here. See Section 5.2., averaged over 5 test folds are reported in Table 7. We perform a statistical significance test comparing the performance of each model metric with our model and marked cases with statistically significant differences. To the authors’ best knowledge, this is the first VQA comparison that performs statistical significance tests.

VMAF, MLCVQA, and CompressedVQA performed closely in the clip level, where retrained VMAF and MLCVQA showed better accuracy (i.e., RMSE). In the model-level evaluation, MLCVQA performed better than all the other models in all metrics.

Distribution of the predictions in both clip and model levels for MLCVQA, retrained VMAF, CompressVQA, LPIPS, MS-SSIM, and PSNR are illustrated in Fig. 7. MLCVQA shows excellent performance at the model level and competitive performance at the clip level. The theoretical maximum performance that can be achieved by an objective model given this test set was reported in Table 6. Compared to that, MLCVQA’s performance can be improved in clip-level for all metrics and in model-level in RMSE () and Tau-b 95 () metrics in future work.

Figure 9 illustrates the empirical cumulative distribution of absolute errors. Although MLCVQA and the retrained VMAF obtained equal RMSE = 0.85, the prediction error of the MLCVQA was below 0.46 (i.e., the average 95% CI of subjective ratings in clips level) more than 60% of the time.

5.1 Ablation Study

In this section, we show how different components of MLCVQA contribute to its performance. Table 8 shows the results of our ablation study. Specifically, we test the contribution of (1) SlowFast features, (2) Augmentation, and (3) Adding image level metrics. We observe how using SlowFast features alone results in very high performance at the model level. This performance is boosted by simple geometric augmentations and further improved by adding image-level metrics.

5.2 Performance with and without augmentation

To utilize augmentation, we performed multiple experiments with different types, levels, and augmentation strategies. First, within an expert viewing session, we established the highest level of augmentation without a noticeable difference in quality for all augmentations. Table 9 reports the type and level of augmentations. We also examined these levels in a follow-up DCR crowdsourcing test, where the quality of the augmented clips was rated compared to the reference clips. We observed less than a 1 DMOS change in quality for Rotation and Additive Gaussian Noise and less than a 0.5 DMOS change for the rest of the augmentations. Therefore, we considered these as minimum augmentation levels.

| Augmentation | Parameter Range | Baseline value | Value description |

|---|---|---|---|

| Additive Gaussian Noise | [0, 0.025] | 0.0 | % of pixels affected |

| Brightness | [0.9, 1.1] | 1.0 | % w.r.t baseline |

| Center crop | [0, 0.1] | 0.0 | % w.r.t image shape |

| Gamma | [0.9, 1.1] | 1.0 | % w.r.t baseline |

| Hue | [-0.025, +0.025] | 1.0 | Value range |

| Rotate | [-1, 1] | 0.0 | Degree |

| Saturation | [0.75, 1.25] | 1.0 | % w.r.t baseline |

We examined different augmentation strategies with the following configurations: 1) only processed clips augmented with minimum levels (AugDistortedOnly), 2) Both reference and processed clips are augmented, with 3 of the minimum levels (ExtremeAug), 3) geometric augmentations as described in Section 4.2 of the paper and the subset of geometric augmentations from table 9 (GeometryOnly), 4) ensemble testing [40] where many augmentations are averaged for a final prediction value (TestAugmentation), 5) a subset of GeometryOnly where all geometric augmentations coming from Table 9 were removed (SubsetGeometryOnly). We evaluated these strategies on the performance of the MLCVQA model. Results are reported in Table 10 and show that the SubsetGeometryOnly and TestAugmentation strategies beat the rest. We use SubsetGeometryOnly in the next steps as it is the simpler approach.

| Objective | Augmentation | Clip level | Model level | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Model | strategy | PCC | SRCC | RMSE | Tau-b 95 | PCC | SRCC | RMSE | Tau-b 95 | |

| MLCVQASF1 | AugDistortedOnly | 0.70a | 0.73b | 2.14a | 0.60b | 0.96a | 0.96a | 1.88a | 0.87a | |

| ExtremeAugmentations | 0.87 | 0.86 | 1.23 | 0.71 | 0.97a | 0.96a | 0.64 | 0.88a | ||

| TestAugmentation | 0.92 | 0.91 | 0.99 | 0.78 | 0.99 | 0.98 | 0.46 | 0.93 | ||

| GeometryOnly | 0.92 | 0.91 | 0.97 | 0.77 | 0.99 | 0.98 | 0.43 | 0.92 | ||

| SubsetGeometryOnly | 0.92 | 0.91 | 0.99 | 0.78 | 0.99 | 0.98 | 0.46 | 0.93 | ||

| C3DVQA-aug | SubsetGeometryOnly | 0.89a | 0.89a | 1.44a | 0.73a | 0.97a | 0.96a | 1.14a | 0.89a | |

| CompressedVQA-aug | SubsetGeometryOnly | 0.86a | 0.89a | 1.56a | 0.72a | 0.96a | 0.95a | 1.17a | 0.86a | |

| MLCVQA (ours) | SubsetGeometryOnly + Frame-level | 0.94 | 0.94 | 0.85 | 0.80 | 0.99 | 0.99 | 0.34 | 0.93 | |

| 1 A version of MLCVQA model only with SlowFast features. | ||||||||||

We also evaluated the effect of augmentation on other ML-based FRVQA models. Table 10 also shows the results of experiments with CompressedVQA and C3VQA, both retrained using SubsetGeometryOnly, the best set of performing augmentations for MLCVQA. The MLCVQA (ours) result adds frame-level features to the best-performing features which are described in Section 4.1 of the paper. 5-fold cross-validation results are used to compare all experiments with MLCVQA.

5.3 MLCVQA performance and its limitations

| Feature | Avg. Importance |

|---|---|

| 95% CI | 0.366 |

| Codec | 0.109 |

| N of ratings | 0.082 |

| 2.5% Percentile of TI | 0.067 |

| Standard deviation of SI | 0.064 |

| Minimum TI | 0.042 |

| Bitrate | 0.037 |

We observed that MLCVQA is performing well in predicting the quality of videos on both extremes of the DMOS scale (1 and 9) and shows lower accuracy for videos in the middle-quality range. We trained a random forest regressor to predict the absolute error of MLCVQA prediction (per clip) given some features from source videos like SI and TI, and the uncertainty of subjective ratings. We randomly selected 80% of the data for training and repeated the process 20 times with newly selected training and test sets. On average, the regressor’s prediction on the test set has a PCC = 0.63 with the absolute error. The essential features and their average importance over all repetitions are reported in Table 11. Results show that the uncertainty of subjective ratings is moderately correlated with the absolute error. However, it should be interpreted with caution as it is known that items located in the middle-quality range mostly have a larger 95% CI compared to items located in both poles of the scale. There could be a confounding factor that both increases uncertainty in subjective rating and prediction error of MLCVQA.

Figure 10 shows the TI and SI for each split. In addition, we show if VMAF is performing better than MLCVQA based on which model has a higher SRCC for that split. Note that VMAF tends to do better for lower TI and lower SI values.

6 Conclusions

We have provided the first large open-source dataset MLVC-FRVQA for ML video codec FRVQA development and an FRVQA model MLCVQA that exceeds the performance of the existing FRVQA methods that have tested. The MLVC-FRVQA dataset can be used to create a challenge to improve MLCVQA. MLCVQA can be used for ML video codec development for ranking new ML video codecs, hyper-parameter tuning, and training.

There is still significant room for improvement for MLCVQA as measured using Kendall’s Tau-b 95 and through the theoretical analysis done in Table 6. Future development includes utilizing the MLVC-FRVQA dataset for training. We can also try additional pre-trained models, including an ensemble of models that should improve MLCVQA’s performance. We found that the leading predictor of the performance of MLCVQA for a specific clip is the 95% confidence interval of the clip under evaluation. Therefore, we can significantly improve the results of MLCVQA by adaptively getting more quality ratings in the DMOS=[3,7] range where there is more uncertainty in the ratings (see Fig. 7), thereby reducing the confidence intervals for those clips.

References

- [1] ISO/IEC 13818-2:1996 - Information technology – Generic coding of moving pictures and associated audio information: Video, 1995.

- [2] VNI Complete Forecast Highlights. Technical report, CISCO, 2018.

- [3] ITU-T Recommendation P.1401 : Methods, metrics and procedures for statistical evaluation, qualification and comparison of objective quality prediction models, 2020.

- [4] Eirikur Agustsson, David Minnen, Nick Johnston, Johannes Balle, Sung Jin Hwang, and George Toderici. Scale-Space Flow for End-to-End Optimized Video Compression. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 8500–8509, Seattle, WA, USA, June 2020. IEEE.

- [5] Frank Bossen. Common test conditions and software reference configurations. JCTVC-L1100, 12(7), 2013.

- [6] Benjamin Bross, Ye-Kui Wang, Yan Ye, Shan Liu, Jianle Chen, Gary J. Sullivan, and Jens-Rainer Ohm. Overview of the Versatile Video Coding (VVC) Standard and its Applications. IEEE Transactions on Circuits and Systems for Video Technology, 31(10):3736–3764, Oct. 2021. Conference Name: IEEE Transactions on Circuits and Systems for Video Technology.

- [7] ITU-R Recommendation BT.500. Methodologies for the subjective assessment of the quality of television images, 2019.

- [8] Joao Carreira and Andrew Zisserman. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 4724–4733, Honolulu, HI, July 2017. IEEE.

- [9] Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. ImageNet: A large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition, pages 248–255, June 2009.

- [10] Christoph Feichtenhofer, Haoqi Fan, Jitendra Malik, and Kaiming He. SlowFast Networks for Video Recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 6202–6211, 2019.

- [11] Andy Field. Discovering Statistics Using IBM SPSS Statistics. Sage Publications Inc, Nov. 2022.

- [12] R. Gonzalez and R. Woods. Digital Image Processing. Prentice Hall, 3rd edition, 2006.

- [13] H.261. Video codec for audiovisual services at p x 384 kbit/s - Recommendation H.261, 1998.

- [14] Jingning Han, Bohan Li, Debargha Mukherjee, Ching-Han Chiang, Adrian Grange, Cheng Chen, Hui Su, Sarah Parker, Sai Deng, Urvang Joshi, Yue Chen, Yunqing Wang, Paul Wilkins, Yaowu Xu, and James Bankoski. A Technical Overview of AV1. Proceedings of the IEEE, 109(9):1435–1462, Sept. 2021. Conference Name: Proceedings of the IEEE.

- [15] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep Residual Learning for Image Recognition. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 770–778, June 2016.

- [16] Jeff Hecht. The bandwidth bottleneck that is throttling the Internet. Nature, 536(7615):139–142, Aug. 2016.

- [17] David Howell. Statistical Methods for Psychology, 8th Edition - 9781111835484 - Cengage. https://www.cengage.com/c/statistical-methods-for-psychology-8e-howell/9781111835484.

- [18] Zhihao Hu, Guo Lu, and Dong Xu. FVC: A New Framework towards Deep Video Compression in Feature Space. In 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 1502–1511, Nashville, TN, USA, June 2021. IEEE.

- [19] M. G. Kendall. A New Measure of Rank Correlation. Biometrika, 30(1/2):81–93, 1938. Publisher: [Oxford University Press, Biometrika Trust].

- [20] Bowen Li, Weixia Zhang, Meng Tian, Guangtao Zhai, and Xianpei Wang. Blindly Assess Quality of In-the-Wild Videos via Quality-aware Pre-training and Motion Perception, Apr. 2022. arXiv:2108.08505 [cs, eess].

- [21] Dingquan Li, Tingting Jiang, and Ming Jiang. Quality Assessment of In-the-Wild Videos. In Proceedings of the 27th ACM International Conference on Multimedia, MM ’19, pages 2351–2359, New York, NY, USA, Oct. 2019. Association for Computing Machinery.

- [22] Jiahao Li, Bin Li, and Yan Lu. Deep Contextual Video Compression. In Advances in Neural Information Processing Systems, volume 34, pages 18114–18125. Curran Associates, Inc., 2021.

- [23] Jiahao Li, Bin Li, and Yan Lu. Hybrid Spatial-Temporal Entropy Modelling for Neural Video Compression. In Proceedings of the 30th ACM International Conference on Multimedia, MM ’22, pages 1503–1511, New York, NY, USA, Oct. 2022. Association for Computing Machinery.

- [24] Songnan Li, Fan Zhang, Lin Ma, and King Ngi Ngan. Image Quality Assessment by Separately Evaluating Detail Losses and Additive Impairments. IEEE Transactions on Multimedia, 13(5):935–949, Oct. 2011.

- [25] Z. Li, C. Bampis, J. Novak, A. Aaron, K. Swanson, A. Moorthy, and J. De Cock. VMAF: The Journey Continues. Technical report, 2018.

- [26] Zhi Li and Christos G. Bampis. Recover Subjective Quality Scores from Noisy Measurements. In 2017 Data Compression Conference (DCC), pages 52–61, Apr. 2017. ISSN: 2375-0359.

- [27] Jianping Lin, Dong Liu, Houqiang Li, and Feng Wu. M-LVC: Multiple Frames Prediction for Learned Video Compression. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 3543–3551, Seattle, WA, USA, June 2020. IEEE.

- [28] Guo Lu, Wanli Ouyang, Dong Xu, Xiaoyun Zhang, Chunlei Cai, and Zhiyong Gao. DVC: An End-To-End Deep Video Compression Framework. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 10998–11007, Long Beach, CA, USA, June 2019. IEEE.

- [29] Guo Lu, Xiaoyun Zhang, Wanli Ouyang, Li Chen, Zhiyong Gao, and Dong Xu. An End-to-End Learning Framework for Video Compression. IEEE Transactions on Pattern Analysis and Machine Intelligence, 43(10):3292–3308, Oct. 2021.

- [30] Fabian Mentzer, George Toderici, David Minnen, Sung-Jin Hwang, Sergi Caelles, Mario Lucic, and Eirikur Agustsson. VCT: A Video Compression Transformer, Oct. 2022. arXiv:2206.07307 [cs, eess].

- [31] Alexandre Mercat, Marko Viitanen, and Jarno Vanne. UVG dataset: 50/120fps 4K sequences for video codec analysis and development. In Proceedings of the 11th ACM Multimedia Systems Conference, pages 297–302, Istanbul Turkey, May 2020. ACM.

- [32] Babak Naderi and Ross Cutler. A crowdsourcing approach to video quality assessment, Sept. 2022. arXiv:2204.06784 [eess].

- [33] Babak Naderi and Sebastian Möller. Transformation of Mean Opinion Scores to Avoid Misleading of Ranked Based Statistical Techniques. In 2020 Twelfth International Conference on Quality of Multimedia Experience (QoMEX), pages 1–4, May 2020.

- [34] ITU-T Recommendation P.910. Subjective video quality assessment methods for multimedia applications, 2021.

- [35] ITU-T Recommendation P.911. Subjective audiovisual quality assessment methods for multimedia applications, 1998.

- [36] ITU-T Recommendation P.913. Methods for the subjective assessment of video quality, audio quality and audiovisual quality of Internet video and distribution quality television in any environment, 2021.

- [37] K. Rijkse. H.263: video coding for low-bit-rate communication. IEEE Communications Magazine, 34(12):42–45, Dec. 1996. Conference Name: IEEE Communications Magazine.

- [38] Michael Seufert. Fundamental Advantages of Considering Quality of Experience Distributions over Mean Opinion Scores. In 2019 Eleventh International Conference on Quality of Multimedia Experience (QoMEX), pages 1–6, June 2019.

- [39] Divya Shanmugam, Davis Blalock, Guha Balakrishnan, and John Guttag. Better Aggregation in Test-Time Augmentation. In 2021 IEEE/CVF International Conference on Computer Vision (ICCV), pages 1194–1203, Oct. 2021.

- [40] Divya Shanmugam, Davis Blalock, Guha Balakrishnan, and John Guttag. Better Aggregation in Test-Time Augmentation. In 2021 IEEE/CVF International Conference on Computer Vision (ICCV), pages 1194–1203, Oct. 2021. ISSN: 2380-7504.

- [41] Gary J. Sullivan, Jens-Rainer Ohm, Woo-Jin Han, and Thomas Wiegand. Overview of the High Efficiency Video Coding (HEVC) Standard. IEEE Transactions on Circuits and Systems for Video Technology, 22(12):1649–1668, Dec. 2012. Conference Name: IEEE Transactions on Circuits and Systems for Video Technology.

- [42] Wei Sun, Xiongkuo Min, Wei Lu, and Guangtao Zhai. A Deep Learning based No-reference Quality Assessment Model for UGC Videos. In Proceedings of the 30th ACM International Conference on Multimedia, MM ’22, pages 856–865, New York, NY, USA, Oct. 2022. Association for Computing Machinery.

- [43] Taimoor Tariq, Okan Tarhan Tursun, Munchurl Kim, and Piotr Didyk. Why Are Deep Representations Good Perceptual Quality Features? In Andrea Vedaldi, Horst Bischof, Thomas Brox, and Jan-Michael Frahm, editors, Computer Vision – ECCV 2020, Lecture Notes in Computer Science, pages 445–461, Cham, 2020. Springer International Publishing.

- [44] Pankaj Topiwala, Wei Dai, Jiangfeng Pian, Katalina Biondi, and Arvind Krovvidi. VMAF And Variants: Towards A Unified VQA. In Applications of Digital Image Processing XLIV, volume 11842, pages 96–104. SPIE, Oct. 2021.

- [45] Phong V. Vu and Damon M. Chandler. ViS3: An algorithm for video quality assessment via analysis of spatial and spatiotemporal slices. Journal of Electronic Imaging, 23(1):013016, 2014.

- [46] Haiqiang Wang, Weihao Gan, Sudeng Hu, Joe Yuchieh Lin, Lina Jin, Longguang Song, Ping Wang, Ioannis Katsavounidis, Anne Aaron, and C.-C. Jay Kuo. MCL-JCV: A JND-based H.264/AVC video quality assessment dataset. In 2016 IEEE International Conference on Image Processing (ICIP), pages 1509–1513, Sept. 2016. ISSN: 2381-8549.

- [47] Zhou Wang, A.C. Bovik, H.R. Sheikh, and E.P. Simoncelli. Image quality assessment: From error visibility to structural similarity. IEEE Transactions on Image Processing, 13(4):600–612, Apr. 2004.

- [48] Z. Wang, E.P. Simoncelli, and A.C. Bovik. Multiscale structural similarity for image quality assessment. In The Thrity-Seventh Asilomar Conference on Signals, Systems & Computers, 2003, pages 1398–1402, Pacific Grove, CA, USA, 2003. IEEE.

- [49] Thomas Wiegand, Gary J Sullivan, Gisle Bjøntegaard, and Ajay Luthra. Overview of the H.264 / AVC Video Coding Standard. IEEE Transactions On Circuits And Systems For Video Technology, page 19, 2003.

- [50] Munan Xu, Junming Chen, Haiqiang Wang, Shan Liu, Ge Li, and Zhiqiang Bai. C3DVQA: Full-Reference Video Quality Assessment with 3D Convolutional Neural Network. In International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2020.

- [51] Ren Yang, Fabian Mentzer, Luc Van Gool, and Radu Timofte. Learning for Video Compression with Recurrent Auto-Encoder and Recurrent Probability Model. IEEE Journal of Selected Topics in Signal Processing, 15(2):388–401, Feb. 2021.

- [52] Richard Zhang, Phillip Isola, Alexei A. Efros, Eli Shechtman, and Oliver Wang. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 586–595, Salt Lake City, UT, June 2018. IEEE.

- [53] Wei Zhang and Hantao Liu. Study of Saliency in Objective Video Quality Assessment. IEEE Transactions on Image Processing, 26(3):1275–1288, Mar. 2017.