From the Greene–Wu Convolution to Gradient Estimation over Riemannian Manifolds

Abstract

Over a complete Riemannian manifold of finite dimension, Greene and Wu studied a convolution of defined as

where is a kernel that integrates to 1, and is the base measure on . In this paper, we study properties of the Greene–Wu (GW) convolution and apply it to non-Euclidean machine learning problems. In particular, we derive a new formula for how the curvature of the space would affect the curvature of the function through the GW convolution. Also, following the study of the GW convolution, a new method for gradient estimation over Riemannian manifolds is introduced.

1 Introduction

Recently, as data structures are becoming increasingly non-Euclidean, many non-Euclidean operations are studied and applied to machine learning problems (e.g., Absil et al.,, 2009). Among these operations, the Greene-Wu (GW) convolution (Greene and Wu,, 1973, 1976, 1979) is important but relatively less understood.

Over a complete Riemannian manifold, the seminal Greene-Wu convolution (or approximation) of function at is defined as

| (GW) |

where is a convolution kernel that integrates to 1, and is the base measure on . The GW convolution generalizes standard convolutions in Euclidean spaces and has been subsequently studied in mathematics (e.g., Parkkonen and Paulin,, 2012; Azagra and Ferrera,, 2006; Azagra,, 2013). This convolution operation also naturally arises in machine learning scenarios. For example, if one is interested in studying a function over a matrix manifold, the output of such a function is GW convoluted if the input is noisy (i.e., we can only obtain an average function value near whenever we try to obtain the function value at ). A real-life scenario where the GW convolution happens is photo taking with shaky camera. Any function defined over the manifolds of images (e.g., likelihood of containing a cat) is GW convoluted if the camera is perturbed.

Despite the importance of the GW convolution, many of its elementary properties are only inadequately understood. In particular, how would the curvature of the manifold affect the curvature of the function through the GW convolution? (Q1) The answer to this question is important to machine learning problems, since the curvature of a function depicts fundamental properties of a function, including how convex the function is. In the above-mentioned example scenario, a better understanding of the GW convolution would lead to a better understanding of how the landscape of the function would be affected by the curvature of the space when the input is noisy.

In this paper, we give a quantitative answer to question (Q1). As an example, consider the GW convolution defined with kernel whose radial density (see Definition 1) is uniform over . For this GW convolution, we show that

where

-

1.

for any twice continuously differentiable and any , denotes the Hessian matrix of at ;

-

2.

exacts the minimal eigenvalue of a matrix;

-

3.

denotes the unit sphere in and denotes the expectation taken with respect to uniformly sampled from ;

-

4.

denotes the covariant derivative associated with the Levi-Civita connection.

This result implies that the GW convolution can sometimes make the function more convex, and thus often more friendly to optimize. Specifically, if and satisfy that

then the minimal eigenvalue of at is larger than the minimal eigenvalue of near . This means sometimes noisy input can make the function more convex and thus more friendly to optimize, thanks to the curvature of the space.

Besides understanding of how the curvature of the space would affect curvature of the function via GW convolution, another important question is how can we directly apply the GW convolution operation to machine learning problems? (Q2) Naturally, the GW convolution is related to anti-derivatives and thus gradient estimation, as suggested by the fundamental theorem of calculus or the Stokes’ theorem. Along this line, we introduce a new gradient estimation method over Riemannian manifolds. Our gradient estimation method improves the state-of-the-art scaling with dimension from to , while holding all other quantities the same§§§More details for the comparison are explained in Remark 3.. Apart from curvature, Riemannian gradient estimation differs from its Euclidean counterpart in that an open set is diffeomorphic to an Euclidean set only within the injectivity radius. This difference implies that one needs to be extra careful when taking finite difference steps in non-Euclidean spaces. To this end, we show that as long as the function is geodesically -smooth, the finite difference method always works, no matter how small the injectivity radius is. Empirically, our method outperforms the best existing method for gradient estimation over Riemannian manifolds, as evidenced by thorough experimental evaluations. Based on our answer to (Q2), we study the online convex learning problems over Hadamard manifolds under stochastic bandit feedback. In particular, single point gradient estimator is designed. Thus online convex learning problem under bandit-type feedback can be solved, and a curvature-dependent regret bound is derived.

Related Works

The study of geometric methods in machine learning has never stalled. Researchers have investigated this topic from different angles, include kernel methods (e.g., Schölkopf et al.,, 2002; Muandet et al.,, 2017; Jacot et al.,, 2021), manifold learning (e.g., Lin and Zha,, 2008; Li et al.,, 2017; Zhu et al.,, 2018; Li and Dunson,, 2020), metric learning (e.g., Kulis et al.,, 2012; Li and Dunson,, 2019). Recently, attentions are concentrated on problems of learning with Riemannian geometry. Examples include convex optimization method over negatively curved spaces (Zhang and Sra,, 2016; Zhang et al.,, 2016), online learning algorithms with Riemannian metric (Antonakopoulos et al.,, 2019), mirror map based methods for relative Lipschitz objectives (Zhou et al.,, 2020).

Despite the rich literature on geometric methods for machine learning (e.g., Absil et al.,, 2009), some important non-Euclidean operations are relatively poorly understood, including the Greene–Wu (GW) convolution (Greene and Wu,, 1973, 1976, 1979; Parkkonen and Paulin,, 2012; Azagra and Ferrera,, 2006; Azagra,, 2013). To this end, we derive new properties of the GW convolution and apply it to machine learning problems.

Enabled by our study of the GW convolution, we design a new gradient estimation method over Riemannian manifolds. Perhaps the history of gradient estimation can date back to Sir Isaac Newton and the fundamental theory of calculus. Since then, many gradient estimation methods have been invented (Lyness and Moler,, 1967; Hildebrand,, 1987). In its more recent form, gradient estimation methods are often combined with learning and optimization. Flaxman et al., (2005) used the fundamental theorem of geometric calculus and solved online convex optimization problems with bandit feedback. Nesterov and Spokoiny, (2017); Li et al., (2020) introduced a stochastic gradient estimation method using Gaussian sampling, in Euclidean space or manifolds embedded in Euclidean spaces. We improve the previous results in two ways. 1. Our gradient estimation method generalizes this previous work by removing the ambient Euclidean space. 2 We tighten the previous error bound. Over an -dimensional Riemannian manifold, we show that the error of gradient estimation is bounded by in contrast to (Li et al.,, 2020), where is the dimension of the manifold, is the smoothness parameter of the objective function and is an algorithm parameter controlling finite-difference step size.

The application of our method to convex bandit optimization is related to online convex learning (e.g., Shalev-Shwartz,, 2011). The problem of online convex optimization with bandit feedback was introduced and studied by Flaxman et al., (2005). Since then, a sequence of works has focused on bandit convex learning in Euclidean spaces. For example, Hazan and Kale, (2012); Chen et al., (2019); Garber and Kretzu, (2020) have studied the gradient-free counterpart for the Frank-Wolfe algorithm, and Hazan and Levy, (2014) studied this problem with self-concordant barrier. An extension to this scheme is combining the methodology with a partitioning of the space Kleinberg, (2004). By doing so, regret rate of can be achieved in Euclidean spaces (Bubeck et al.,, 2015; Bubeck and Eldan,, 2016). This rate is optimal in terms of for stochastic environement, as shown by Shamir, (2013). While there has been extensive study of online convex optimization problems, they are all restricted to Euclidean spaces. In this paper, we generalize previous results from Euclidean space to Hadamard manifolds, using our new gradient estimation method.

2 Preliminaries and Conventions

Preliminaries for Riemannian Geometry

A Riemannian manifold is a smooth manifold equipped with a metric tensor such that at any point , defines a positive definite inner product in the tangent space . For a point , we use to denote the inner product in induced by . Also, we use to denote the norm associated with . Intuitively, a Riemannian manifold is a space that can be locally identified as an Euclidean space. With such structures, one can locally carry out calculus and linear algebra operations.

A vector field assigns each point a unique vector in . Let be the space of all smooth vector fields. An affine connection is a mapping so that (i) for , (ii) for , and (iii) for all . The Levi-Civita connection is the unique affine connection that is torsion free and compatible with the Riemannian metric . A geodesic curve is a curve so that for all , where here denotes the Levi-Civita connection. One can think of geodesic curves as generalizations of straight line segments in Euclidean space. At any point , the exponential map is a local diffeomorphism that maps to such that there exists a geodesic curve with , and . Since the exponential map is (locally) diffeomorphic, the inverse exponential map is also properly defined (locally). We use to denote the inverse exponential map of .

We say a vector field is parallel along a geodesic if for all . For , we call the map a parallel transport, if it holds that for any vector field such that is parallel along , is mapped to . Parallel transport induced by the Levi-Civita connection preserves the Riemannian metric, that is, for , , and geodesic , it holds that for any .

The Riemann curvature tensor is defined as , where is the Lie bracket for vector fields. The sectional curvature is defined as , which is the high dimensional generalization of Gaussian curvature. Intuitively, for a point , is the the Gaussian curvature of the sub-manifold spanned by at .

For a continuously connected Riemannian manifold , a distance metric can be defined. The distance between is the infimum over the lengths of piecewise smooth curves in connecting and , where the length of a curve is defined with respect to the metric tensor . A Riemannian manifold is complete if it is complete as a metric space. By the celebrated Hopf–Rinow theorem, if a Riemannian manifold is complete, the exponential map is defined over the entire tangent space for all . The cut locus of a point is the set of points such that there exists more than two distinct shortest path from to .

Notations and Conventions

For better readability, we list here some notations and conventions that will be used throughout the rest of this paper.

-

•

For any , let denote the open set near that is a diffeomorphic to a subset of via the local normal coordinate chart . Define the distance () such that

where is the Euclidean distance in .

-

•

As mentioned in the preliminaries, for any , we use and to denote the inner produce and norm induced by the Riemannian metric in the tangent space . We omit the subscript when it is clear from context.

-

•

For any and differentiable function over , We use to denote the gradient of at .

-

•

For any and , we use to denote the origin-centered sphere in with radius . For simplicity, we write .

-

•

We will use another set of notations for parallel transport. For two points and a smooth curve connecting and , we use to denote the parallel transport from to along the curve . Let denote the set of minimizing geodesics connecting and . Define be defined such that for any , where the expectation is taken with respect to the uniform probability measure over . Since for any point , its cut locus is of measure zero, the operation is deterministically defined for almost every .

-

•

For any , and , define .

-

•

As a convention, if is diffeomorphic to , we simply say is diffeomorphic. Similarly, a map is said to be diffeomorphic when the domain of the map is diffeomorphic to an open subset of the Euclidean space. For simplicity, we omit all musical isomorphisms when there is no confusion.

3 The Greene–Wu Convolution

The Greene–Wu convolution can be decomposed into two steps. In the first step, a direction in the tangent space is picked, a convolution along the direction is performed, and the extension of this convolution to other points is defined. In the second step, the direction is randomized over a sphere. To properly describe these two steps, we need to formally define kernels.

Definition 1.

A function is called a kernel if

-

1.

For any and , there exists a density on such that , and for all , where is the uniform probability density over the Euclidean sphere of radius ¶¶¶Here as a convention.;

-

2.

, where is the Dirac point mass at .

We call the radial density for .

By the Radon-Nikodym theorem, item 1 in the definition of a kernel is satisfied when the measure on induced by is absolutely continuous with respect to the base measure on . Examples of kernels include uniform mass over a ball of radius .

Remark 1.

For simplicity, we restrict our attention to symmetric kernels , where the value of only depends on . Yet many of the results generalize to non-symmetric kernels. To see this, we decompose by , where is the uniform density over the sphere of radius , and is the density induced by along the direction of (or a parallel transport of ) up to a scaling by . We can replace item 1 in Definition 1 and many of the follow-up results can be extended to these cases.

With the kernel defined in Definition 1, the GW approximation/convolution can be defined as follows. For and , we define

| (1) |

where

| (2) |

Next we show that the convolution in (1) is identical to the (GW) convolution everywhere.

Proposition 1.

For any and defined on , it holds that .

Proof.

Let be the base measure on and let be the base measure on . We then have . Thus it holds that

where a change of variable is used.

∎

What’s interesting with (1) is that it extends (GW) from a local definition to a global definition, as pointed out in Remark 2.

Remark 2.

With the Levi-Civita connection, spheres are preserved after parallel transport, and so are the uniform base measures over the spheres. Thus the spherical integration of defined in (2) can be carried out at regardless whether or not. Hence, the convolution defined by (1) and (2) is indeed the GW convolution in the sense that agrees with (GW) almost everywhere∥∥∥The cut locus is of measure zero and thus is deterministically defined almost everywhere. if the convolution at every point is restricted to the direction specified by (or parallel transport of ). Now the advantage of our decomposition is clear – is globally defined and agrees with (GW) along the the direction of . This advantage leads to easy computations and meaningful applications, as we will see throughout the rest of the paper.

3.1 Properties of the GW Convolution

In their seminal works, Greene and Wu showed that the approximation is convexity-preserving when the width of the kernel approaches 0 (Theorem 2 by Greene and Wu, (1973)). We show that the GW convolution is convexity-preserving even if the width of the kernel is strictly positive. Also, second order properties of the GW approximation is proved.

3.1.1 Convexity-Preserving Property of the GW Convolution

Definition 2 (Convexity).

Let be a complete Riemannian manifold. A smooth function is convex near with radius exceeding if there exists , such that for any , the function is convex in over .

Using our decomposition trick of the GW approximation (defined in Eq. 1), one can show that the GW convolution is convexity preserving even if the width of the kernel is strictly positive.

Theorem 1.

Let be a complete Riemannian manifold, and fix an arbitrary . If there exists such that 1) is convex near with radius exceeding , and 2) the density (a shorthand for ) induced by kernel satisfies that , then the Greene-Wu approximation is also convex near with radius exceeding .

Proof of Theorem 1.

By Proposition 1 and Remark 2, it is sufficient to consider . Since is convex near with radius exceeding , we have, for any and any real numbers ,

| (3) |

Let () for simplicity. We can then rewrite (3) as

| (4) |

We can integrate the first term in (4) with respect to and , and get

| (5) |

where (5) uses that parallel transport along geodesics preserves length and angle, for the change of tangent space for the outer integral.

Since the density satisfies that , the term on the right-hand-side of (5) is the exact definition of . We repeat this computation for the second term in (4), and get

| (6) |

which is the midpoint convexity (around , along ) for . Since midpoint convexity implies convexity for smooth functions, we know is convex near along . Since the above argument is true for any , we know that is convex near with radius exceeding .

∎

Previous Convexity-Preserving Result

Previously, Greene and Wu, (1973, 1976, 1979) studied the GW convolution from an analytical and topological perspective, and showed that the GW convolution is convexity-preserving (Greene and Wu,, 1973) when the width of the kernel approaches zero. For completeness, this previous result is summarized in Theorem 2. In Theorem 1 above, we show that the output of GW convolution is convex with a strictly positive radius, as long as the input is convex with a strictly positive radius.

Theorem 2 (Greene and Wu, (1973)).

Consider a smooth function defined over a complete Riemannian manifold . Let be the GW approximation of with kernel . Then it holds that

| (7) |

where is the set of all geodesics parametrized by arc-length such that .

3.2 Second Order Properties of the GW Convolution

Our decomposition of the Greene-Wu convolution also allows other properties of the GW convolution easily derived. Perhaps one of the most important property of a function is its Hessian (matrix), which we discuss now. The definition of Hessian is in Definition 3 (e.g., Schoen and Yau, (1994); Petersen, (2006)).

Definition 3 (Hessian).

Consider an -dimensional Riemannian manifold . For , define the Hessian of as such that

where is the Levi-Civita connection. Using Christoffel symbols, it holds that

The Hessian matrix of at is a map , such that for any .

With the Levi-Civita connection, which is torsion-free, one can verify that

For any , with the normal coordinate specified by the exponential map , the Christoffel symbol vanishes at . This means in local normal coordinate. Similar to the Euclidean case, the eigenvalues of the Hessian matrix describes the curvature of the function. Next, we will show how the curvature of the function is affected after applying the GW convolution.

In the study of the second order properties of the GW approximation, we will restrict our attention to kernels whose radial density function is uniform. More specifically, we will use and . Since one can construct other kernels by combining kernels with uniform radial density functions, investigating kernels with uniform radial density is sufficient for our purpose. With this in mind, we look at

| (8) |

The following theorem tells us how the geometry of the function and the geometry of the space are related after the GW convolution.

Theorem 3.

Consider a complete Riemannian manifold and a kernel with radial density . For any , there exists such that for all , it holds that

for all .

We will use the double exponential map (Gavrilov,, 2007) notation in the proof. The double exponential map (also written ) is defined as such that, when is small enough (),

| (9) |

With this notation, we are ready to prove Theorem 3.

Proof of Theorem 3.

With this notion of double exponential map, we have

| (10) |

Let , and let

We can then write (10) as

| (11) |

For the terms involving , one has

| (12) |

where and .

For , we consider the term for a smooth function and small vectors . For any , and , define . We can Taylor expand by

Thus we have, for any , of small norm,

where the last equality uses .

Thus we have

Thus we have

| (13) |

where denotes terms that are odd in .

∎

Theorem 3 says that when the space is not flat, the GW convolution changes the curvature of the function (Hessian matrix) in a non-trivial way. A more concrete example of this fact is in Corollary 1.

Corollary 1.

Consider a kernel with radial density function . For a smooth function defined over a complete Riemannian manifold and any , there exists , such that for all , it holds that

Since the minimum eigenvalue of the Hessian matrix measures the degree of convexity of a function, this corollary establish a quantitative description of how the GW convolution would affect the degree of convexity.

4 Estimating Gradient over Riemannian Manifolds

From our formulation of the GW approximation, one can obtain tighter bounds for gradient estimation over Riemannian manifolds, for geodesically -smooth functions defined as follows.

Definition 4.

Let . A function is called geodesically -smooth near if

| (15) |

where is the gradient of at . If is -smooth near for all , we say is -smooth over .

For a geodesically -smooth function, one has the following theorem that bridges the gradient at and the zeroth-order information near .

Theorem 4.

Fix . Let be geodesically -smooth near . It holds that

| (16) |

where is the origin-centered sphere in of radius .

This theorem provides a very simple and practical gradient estimation method: At point , we can select small numbers and , and uniformly sample a vector . With these , the random vector

| (17) |

gives an estimator of .

One can also independently sample uniformly from . Using these samples, an estimator for is

| (18) |

Note that Theorem 4 also provides an in expectation bound for the estimator in (18). Before proving Theorem 4, we first present Propositions 2 and 3, and Lemma 1.

Proposition 2.

Consider a continuously differentiable function defined over a complete Riemannian manifold . If function is geodesically -smooth over , then it holds that

-

1.

for all and ;

-

2.

for any , , where .

Proof.

For any and , let be a sequence of points such that and for all that is sufficiently small. Since the Levi-Civita connection preserves the Riemannian metric, we know . At any , we have

| (19) | ||||

where (19) uses the definition of geodesic -smoothness.

Also, it holds that

| (20) |

By the definition of geodesic -smoothness and the Cauchy-Schwarz inequality, it holds that

| (21) |

Adding and subtracting to (20) and using (21) gives

Thus we have

| (22) |

where the notation omits constants that do not depend on or . Since and (22) is true for arbitrarily small , letting in (22) finishes the proof.

The second item can be proved in a similar way. Specifically, we can also find a sequence of points on the curve of with and , and repeatedly use the definition of geodesic -smoothness.

∎

Proposition 3.

For any vector , we have

Proof.

It is sufficient to consider , where is the local canonical basis for with respect to . For any ,

Since for any when , we have . When , by symmetry, we have for all .

We have shown , on which taking inner product with shows that . ∎

Lemma 1.

Pick and . Let be a geodesic in . We then have

| (23) |

Proof.

Consider the function . By fundamental theorem of calculus, we have

Also, the directional derivative over Riemannian manifolds can be computed by

Combining the above results finishes the proof. ∎

Lemma 1 can be viewed as a corollary of the fundamental theorem of calculus, or a consequence of Stokes’ theorem. If one views the curve as a manifold with boundary ( and ), and as a vector field parallel to , then applying Stokes’ theorem proves Lemma 1.

We are now ready to prove Theorem 4, using our decomposition trick with the radial density function being .

Proof of Theorem 4.

From the definition of directional derivative, we have

| (24) |

For simplicity, let () for any . Since is geodesically -smooth, we have, for any and ,

| (25) | ||||

| (26) | ||||

| (27) |

where (25) uses (24), (26) uses the fact that the parallel transport preserves the Riemannian metric, and (27) uses Proposition 2 and the Cauchy-Schwarz inequality.

Applying Lemma 1 and the above results gives

Combining the above result with (28) gives

| (29) |

∎

Theorem 5.

If is geodesically -smooth, then it holds that

Proof.

By Lemma 1 and that is geodesically -smooth, we have, for any ,

| (30) |

By (30), it holds that,

| (31) |

By Theorem 4, we have

where is the all-one vector and the big-O notation here means that, with respect to any coordinate system, each entry in and differs by at most .

Since are mutually independent, we have, for any ,

where the last inequality uses the Cauchy-Schwartz inequality. Thus by expanding out all terms we have

∎

4.1 Previous Methods for Riemannian Gradient Estimation

Previously, Nesterov and Spokoiny, (2017); Li et al., (2020) have introduced gradient estimators using the following sampler. The estimator is

| (32) |

where are Gaussian vectors, and is a parameter controlling the width of the estimator. We use this Gaussian sampler as a baseline for empirical evaluations. For this estimator, Li et al., (2020) studied its properties over manifolds embedded in Euclidean space. Their result is in Proposition 4.

Proposition 4 (Li et al., (2020)).

Let be a manifold embedded in a Euclidean. If is geodesically -smooth over , then it holds that

| (33) |

where is the estimator in (32).

To fairly compare our method (17) and this previous method (32), one should set for our method, as pointed out in Remark 3.

Remark 3.

If one picks in Theorem 4, one can obtain the error bound for our method, and the error bound for the previous method is (Proposition 4). Why should we pick in Theorem 4? This is because when , we have . In words, when , the random vectors used in our method (17) and the random vector used in the previous method (32) have the same squared norm in expectation. This leads to a fair comparison for the bias, while holding the second moment of the random vector exactly the same.

5 Empirical Studies

In this section, we empirically study the spherical estimator in (18), in comparison with the Gaussian estimator in (32). The two methods (18) and (32) are compared over three manifolds: 1. the Euclidean space , 2. the unit sphere , and 3. the surface specified by the equation . The evaluation is carried out at three different point, one for each manifold. The three manifolds, and corresponding points for evaluation, are listed in Table 1. Two test functions are used: a. the linear function , and b. the function . The two test functions are listed in Table 2.

| Label | Manifold | Point | Exponential map |

| 1 | |||

| 2 | |||

| 3 | , |

| Label | Function |

| a | |

| b |

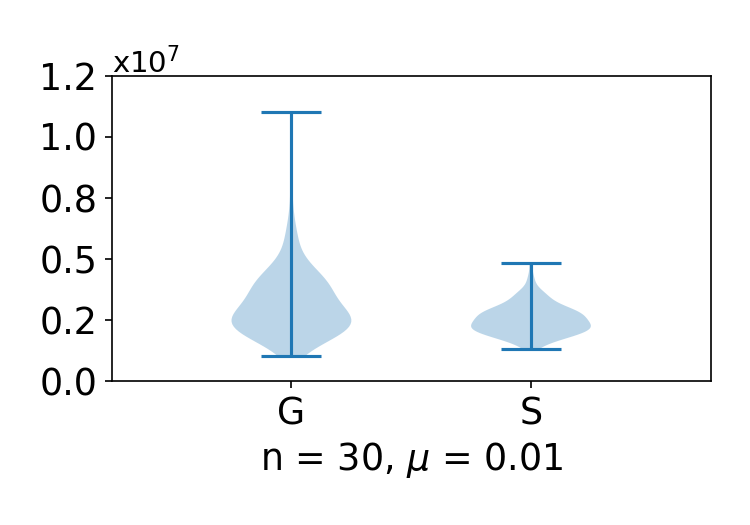

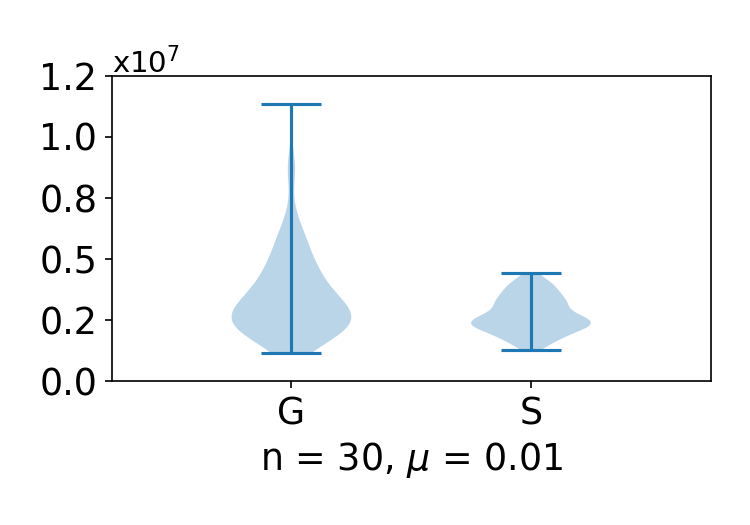

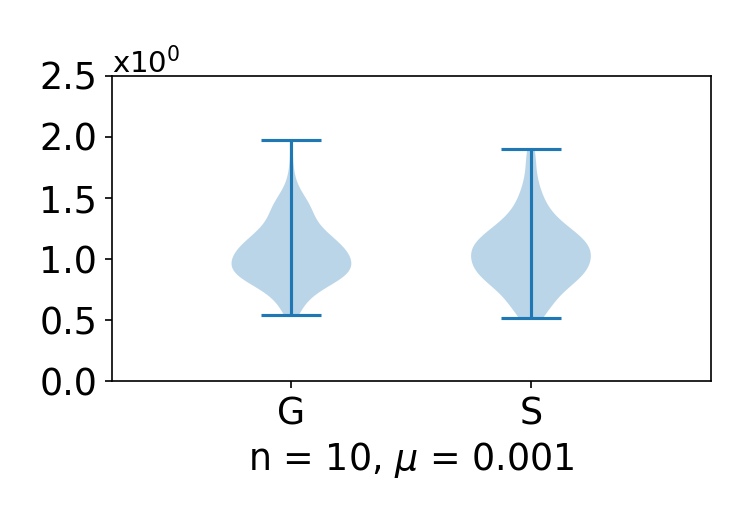

The three manifolds in Table 1 and the two functions in Table 2 together generate 6 settings. We use 1a, 1b, 2a, 2b, 3a, 3c to label these 6 setting, where 1a refers to the setting over manifold 1 using function a, and so on. The experimental results are summarized in Figures 1-12. In all figures, “G” on the -axis stands for the previous method using Gaussian estimator (32), and “S” on the -axis stands for our method using the spherical estimator (18). To fairly compare (32) and (18), we use for all spherical estimators so that for both the spherical estimator and the Gaussian estimator, one has . The figure captions specify the settings used. For example, setting 1a is for function in Table 2 over manifold 1 in Table 1. Below each subfigure, the values of and are the dimension of the manifold and the parameter used in the estimators (both G and S).

Since are set to the same value for both G and S, we use

| (34) |

to measure the error (bias) of the estimator, and

| (35) |

to measure robustness of the estimator. For both the estimation error and the robustness, smaller values mean better performance. For both “G” and “S” in all figures, each violin plot summarizes values of (34) or (35), where we use for all of them. Take G and S in Figure 1 as an example. We compute (35) for 100 times for G and estimations for S, all with . Then we use violin plots to summarize these 100 values of (35) for G and the 100 values of (35) for S.

Figures 1-6 plot the robustness of the two estimators and , and Figures 7-12 show the corresponding errors. As shown in the figures, in all of the settings, the spherical estimator S is much more robust than the Gaussian estimator G, while achieving the same level of estimation error.

6 Application to Online Convex Optimization

In online learning with bandit feedback, each time , the learner chooses points and , and the environment picks a convex loss function . The learner suffers and observes loss and observes the function value . The learner then picks based on historical observations and the learning process moves on. Online learning agents, unlike their offline learning (or batch learning) counterpart, do not have knowledge about when they choose . Such models capture real-world scenarios such as learning with streaming data. In the machine learning community, such problem settings are often referred to as the Online Convex Optimization problem with bandit-type feedback.

6.1 Online Convex Optimization over Hadamard Manifolds: an Algorithm

We consider an online convex optimization problem (with bandit-type feedback) over a negatively curved manifold. Let there be a Hadamard manifold and a closed geodesic ball . Each time , the agent and the environment executes the above learning protocol, with defined over and . The goal of the agent is to minimize the -step hindsight regret: , where .

With the approximation guarantee in Theorem 4, one can solve this online learning problem. At each , we can approximate gradient at using the function value , and perform an online gradient descent step. In particular, we can generalize the online convex optimization algorithm (with bandit-type feedback) (Flaxman et al.,, 2005) to Hadamard manifolds.

By Theorem 4, we have

| (36) |

Thus the gradient of at can be estimated by

where is uniformly sampled from . This estimator generalizes the two-evaluation estimator in the Euclidean case (Duchi et al.,, 2015). As shown in (36), the bias of this estimator is also bounded by .

With this gradient estimator, one can perform estimated gradient descent by

where is uniformly sampled from , and is the learning rate.

To ensure that the algorithm stays in the geodesic ball , we need to project back to a subset of , which is

where and are the center and radius of the geodesic ball . Since the set is a geodesic ball in a Hadamard manifold, the projection onto it is well-defined. Thus we can project onto to get , or let . Now one iteration finishes and the learning process moves on to the next round. This learning algorithm is summarized in Algorithm 1.

6.2 Online Convex Optimization over Hadamard Manifolds: Analysis

Theorem 6.

If, for all , the loss function satisfies that is geodesically -smooth, then the regret of Algorithm 1 satisfies:

| (37) |

where is the diameter of , and .

In particular, when , and , the regret satisfies . This sublinear regret rate implies that, when are identical, the algorithm converges to the optimal point at a rate of order . We now start the proof of Theorem 6, by presenting an existing result by Zhang and Sra.

Lemma 2 (Corollary 8, Zhang and Sra, (2016)).

Let be a Hadamard manifold, with sectional curvature for all and . Then for any , and for some , then it holds that

where .

Proof of Theorem 6.

By convexity of , we have

Together with the Lemma of Zhang and Sra, we get

where the last line uses Lemma 2. Taking expectation (over ) on both sides gives

| (38) |

where the last line uses Theorem 4, and that ( for all ). Henceforth, we will omit the expectation operator for simplicity.

By definition of , we have, . Plugging this back to (38), we get, in expectation,

Summing over gives, in expectation,

where the last line telescopes out the summation term.

∎

6.3 Online Convex Optimization over Hadamard Manifolds: Experiments

In this section, we study the effectiveness of our algorithm on the benchmark task of finding the Riemannian center of mass of several positive definite matrices (Bini and Iannazzo,, 2013). The Riemannian center of mass of a set of symmetric positive definite matrices seeks to minimize

| (39) |

where is symmetric and positive definite.

This objective is non-convex in the Euclidean sense, but is geodesically convex on the manifold of positive definite matrices. The gradient update step for objective (39) over the manifold of symmetric and positive definite matrices is

For our experiments, we can only estimate the gradient by querying function values of . We randomly sample several positive definite matrices , and apply Algorithm 1 to search for to minimize (39). The results are summarized in Figure 13.

7 Conclusion

In this paper, we study the GW convolution/approximation and gradient estimation over Riemannian manifolds. Our new decomposition trick of the GW approximation provides a tools for computing properties of the GW convolution. Using this decomposition trick, we derive a formula for how the curvature of the manifold will affect the curvature of the function via the GW convolution. Empowered by our decomposition trick, we introduce a new gradient estimation method over Riemannian manifolds. Over an -dimensional Riemannian manifold, we improve best previous gradient estimation error (Li et al.,, 2020). We also apply our gradient estimation method to bandit convex optimization problems, and generalize previous results from Euclidean spaces to Hadamard manifolds.

References

- Absil et al., (2009) Absil, P.-A., Mahony, R., and Sepulchre, R. (2009). Optimization algorithms on matrix manifolds. Princeton University Press.

- Antonakopoulos et al., (2019) Antonakopoulos, K., Belmega, E. V., and Mertikopoulos, P. (2019). Online and stochastic optimization beyond lipschitz continuity: A riemannian approach. In International Conference on Learning Representations.

- Azagra, (2013) Azagra, D. (2013). Global and fine approximation of convex functions. Proceedings of the London Mathematical Society, 107(4):799–824.

- Azagra and Ferrera, (2006) Azagra, D. and Ferrera, J. (2006). Inf-convolution and regularization of convex functions on riemannian manifolds of nonpositive curvature. Revista Matemática Complutense, 19(2):323–345.

- Bini and Iannazzo, (2013) Bini, D. A. and Iannazzo, B. (2013). Computing the karcher mean of symmetric positive definite matrices. Linear Algebra and its Applications, 438(4):1700–1710.

- Bubeck et al., (2015) Bubeck, S., Dekel, O., Koren, T., and Peres, Y. (2015). Bandit convex optimization: regret in one dimension. In Conference on Learning Theory, pages 266–278. PMLR.

- Bubeck and Eldan, (2016) Bubeck, S. and Eldan, R. (2016). Multi-scale exploration of convex functions and bandit convex optimization. In Conference on Learning Theory, pages 583–589. PMLR.

- Chen et al., (2019) Chen, L., Zhang, M., and Karbasi, A. (2019). Projection-free bandit convex optimization. In The 22nd International Conference on Artificial Intelligence and Statistics, pages 2047–2056. PMLR.

- Duchi et al., (2015) Duchi, J. C., Jordan, M. I., Wainwright, M. J., and Wibisono, A. (2015). Optimal rates for zero-order convex optimization: The power of two function evaluations. IEEE Transactions on Information Theory, 61(5):2788–2806.

- Flaxman et al., (2005) Flaxman, A. D., Kalai, A. T., and McMahan, H. B. (2005). Online convex optimization in the bandit setting: gradient descent without a gradient. In Proceedings of the sixteenth annual ACM-SIAM symposium on Discrete algorithms, pages 385–394.

- Garber and Kretzu, (2020) Garber, D. and Kretzu, B. (2020). Improved regret bounds for projection-free bandit convex optimization. In International Conference on Artificial Intelligence and Statistics, pages 2196–2206. PMLR.

- Gavrilov, (2007) Gavrilov, A. V. (2007). The double exponential map and covariant derivation. Siberian Mathematical Journal, 48(1):56–61.

- Greene and Wu, (1979) Greene, R. E. and Wu, H. (1979). -approximations of convex, subharmonic, and plurisubharmonic functions. In Annales Scientifiques de l’École Normale Supérieure, volume 12, pages 47–84.

- Greene and Wu, (1973) Greene, R. E. and Wu, H.-H. (1973). On the subharmonicity and plurisubharmonicity of geodesically convex functions. Indiana University Mathematics Journal, 22(7):641–653.

- Greene and Wu, (1976) Greene, R. E. and Wu, H.-h. (1976). -convex functions and manifolds of positive curvature. Acta Mathematica, 137(1):209–245.

- Hazan and Kale, (2012) Hazan, E. and Kale, S. (2012). Projection-free online learning. In Proceedings of the 29th International Coference on International Conference on Machine Learning, pages 1843–1850.

- Hazan and Levy, (2014) Hazan, E. and Levy, K. Y. (2014). Bandit convex optimization: Towards tight bounds. In NIPS, pages 784–792.

- Hildebrand, (1987) Hildebrand, F. B. (1987). Introduction to numerical analysis. Courier Corporation.

- Jacot et al., (2021) Jacot, A., Gabriel, F., and Hongler, C. (2021). Neural tangent kernel: convergence and generalization in neural networks. In Proceedings of the 53rd Annual ACM SIGACT Symposium on Theory of Computing, pages 6–6.

- Kleinberg, (2004) Kleinberg, R. (2004). Nearly tight bounds for the continuum-armed bandit problem. Advances in Neural Information Processing Systems, 17:697–704.

- Kulis et al., (2012) Kulis, B. et al. (2012). Metric learning: A survey. Foundations and trends in machine learning, 5(4):287–364.

- Lee, (2006) Lee, J. M. (2006). Riemannian manifolds: an introduction to curvature, volume 176. Springer Science & Business Media.

- Li and Dunson, (2019) Li, D. and Dunson, D. B. (2019). Geodesic distance estimation with spherelets. arXiv preprint arXiv:1907.00296.

- Li and Dunson, (2020) Li, D. and Dunson, D. B. (2020). Classification via local manifold approximation. Biometrika, 107(4):1013–1020.

- Li et al., (2017) Li, D., Mukhopadhyay, M., and Dunson, D. B. (2017). Efficient manifold and subspace approximations with spherelets. arXiv preprint arXiv:1706.08263.

- Li et al., (2020) Li, J., Balasubramanian, K., and Ma, S. (2020). Stochastic zeroth-order riemannian derivative estimation and optimization. arXiv preprint arXiv:2003.11238.

- Lin and Zha, (2008) Lin, T. and Zha, H. (2008). Riemannian manifold learning. IEEE Transactions on Pattern Analysis and Machine Intelligence, 30(5):796–809.

- Lyness and Moler, (1967) Lyness, J. N. and Moler, C. B. (1967). Numerical differentiation of analytic functions. SIAM Journal on Numerical Analysis, 4(2):202–210.

- Muandet et al., (2017) Muandet, K., Fukumizu, K., Sriperumbudur, B., and Schölkopf, B. (2017). Kernel mean embedding of distributions: A review and beyond. Foundations and Trends in Machine Learning, 10(1-2):1–144.

- Nesterov and Spokoiny, (2017) Nesterov, Y. and Spokoiny, V. (2017). Random gradient-free minimization of convex functions. Foundations of Computational Mathematics, 17(2):527–566.

- Parkkonen and Paulin, (2012) Parkkonen, J. and Paulin, F. (2012). On strictly convex subsets in negatively curved manifolds. Journal of Geometric Analysis, 22(3):621–632.

- Petersen, (2006) Petersen, P. (2006). Riemannian geometry, volume 171. Springer.

- Schoen and Yau, (1994) Schoen, R. M. and Yau, S.-T. (1994). Lectures on differential geometry, volume 2. International press Cambridge, MA.

- Schölkopf et al., (2002) Schölkopf, B., Smola, A. J., Bach, F., et al. (2002). Learning with kernels: support vector machines, regularization, optimization, and beyond. MIT press.

- Shalev-Shwartz, (2011) Shalev-Shwartz, S. (2011). Online learning and online convex optimization. Foundations and trends in Machine Learning, 4(2):107–194.

- Shamir, (2013) Shamir, O. (2013). On the complexity of bandit and derivative-free stochastic convex optimization. In Conference on Learning Theory, pages 3–24. PMLR.

- Zhang et al., (2016) Zhang, H., Reddi, S. J., and Sra, S. (2016). Riemannian SVRG: fast stochastic optimization on Riemannian manifolds. In Proceedings of the 30th International Conference on Neural Information Processing Systems, pages 4599–4607.

- Zhang and Sra, (2016) Zhang, H. and Sra, S. (2016). First-order methods for geodesically convex optimization. In Conference on Learning Theory, pages 1617–1638. PMLR.

- Zhou et al., (2020) Zhou, Y., Sanches Portella, V., Schmidt, M., and Harvey, N. (2020). Regret bounds without lipschitz continuity: Online learning with relative-lipschitz losses. Advances in Neural Information Processing Systems, 33.

- Zhu et al., (2018) Zhu, B., Liu, J. Z., Cauley, S. F., Rosen, B. R., and Rosen, M. S. (2018). Image reconstruction by domain-transform manifold learning. Nature, 555(7697):487–492.