From Point to Space: 3D Moving Human Pose Estimation Using Commodity WiFi

Abstract

In this paper, we present Wi-Mose, the first 3D moving human pose estimation system using commodity WiFi. Previous WiFi-based works have achieved 2D and 3D pose estimation. These solutions either capture poses from one perspective or construct poses of people who are at a fixed point, preventing their wide adoption in daily scenarios. To reconstruct 3D poses of people who move throughout the space rather than a fixed point, we fuse the amplitude and phase into Channel State Information (CSI) images which can provide both pose and position information. Besides, we design a neural network to extract features that are only associated with poses from CSI images and then convert the features into key-point coordinates. Experimental results show that Wi-Mose can localize key-point with 29.7mm and 37.8mm Procrustes analysis Mean Per Joint Position Error (P-MPJPE) in the Line of Sight (LoS) and Non-Line of Sight (NLoS) scenarios, respectively, achieving higher performance than the state-of-the-art method. The results indicate that Wi-Mose can capture high-precision 3D human poses throughout the space.

Index Terms:

WiFi sensing, 3D human pose estimation, CSI images, neural network design.I Introduction

In recent years, thanks to the increasingly ubiquitous deployment of WiFi infrastructure and the open-source software [1], human sensing based on WiFi Channel State Information (CSI) has gained significant attention [2] [3] [4]. To achieve more fine-grained human sensing, WiFi-based human pose estimation has been a focus of research recently. Pioneering works [5] [6] estimate human poses using radars operating at the WiFi frequency, i.e., 5.46-7.24.

Further, for easy deployment in real world and cost savings, [7] [8] use commodity WiFi devices to obtain fine-grained human poses. [7] enables commodity WiFi devices to capture 2D human skeleton images. But it can merely obtain human poses from one perspective, and performs unsatisfactorily in some special perspectives restricted by annotations.

Different from [7], [8] presents WiPose, the only 3D human pose estimation system using commodity WiFi. It utilizes the amplitude component of CSI and achieves high performance in the experimental environment. However, during the experiment, the subjects are required to perform movements at a fixed point. Moreover, it consists of 9 distributed antennas. Therefore, WiPose is limited to some specific applications and not convenient enough for daily use, such as smart home, health monitoring, etc.

In summary, due to the change of the target position and the difficulty of data collection, WiFi-based human pose estimation still has some limitations for wide adoption in daily life.

To address the above limitations, we propose Wi-Mose, the first system which can capture fine-grained 3D moving human poses with commodity WiFi devices in both Line of Sight (LoS) and Non-Line of Sight (NLoS) scenarios. To reconstruct 3D moving human poses, we mainly design the system from two aspects: data processing and network design.

For one thing, we convert the processed amplitude and phase, which contain the pose and position information, respectively, into a sensitive CSI tensor, called CSI images. And then, we feed CSI images into the network rather than only amplitude or phase information. For another, we design a deep feature extraction network to extract pose related features from the amplitude channel and weaken the influence of position changes by leveraging the information in the phase channel. Specifically, we use the position information contained in the phase channel as prior knowledge to add constraints to the attitude estimation. We also design a pose regress network to convert the features into key-point coordinates. Therefore, the subject can move continuously and freely without space constraints. The main contributions of our work are listed as follows:

1. We propose a method to convert the raw CSI data into CSI images so that the neural network can extract features which contain more pose information but less position component.

2. We design a neural network which is suitable to extract moving human pose features from CSI images and convert WiFi signals into 3D human poses.

3. We build a 3D human pose estimation prototype system for experiment and evaluation. Results show that the system can estimate 3D human poses with 29.7mm (37.8mm) Procrustes analysis Mean Per Joint Position Error (P-MPJPE) in the LoS (NLoS) scenarios, achieving 21% (10%) improvements in accuracy over the state-of-the-art method.

4. Because of the usage of CSI images and specialized network, Wi-Mose utilizes only 6 antennas to capture information, which is lightweight and low-cost compared with the state-of-the-art method [8].

The rest of this paper is organized as follows. Section II is the system overview. Section III discusses data collection and processing. Section IV introduces the neural network. Section V is the baseline. Section VI describes experiments and performance followed by a conclusion in Section VII.

II System Overview

The system consists of three parts: data collection, data processing, and pose estimation, as shown in Fig. 1. The data collection part contains two receivers, a transmitter, and a monocular camera, which are used to collect synchronous CSI and video frames. The data processing part converts the raw CSI data into CSI images and transforms the video frames to human key-point coordinates which are used for supervised learning. The pose estimation part extracts features from CSI images and converts the features into key-point coordinates which are utilized to reconstruct 3D human pose skeletons.

III Data Collection and Processing

In this paper, to reconstruct 3D moving human pose skeletons using commodity WiFi devices, we need to collect synchronous raw CSI and video frames and then process these data so that they can be fed into the neural network.

III-A Data Collection

According to Fresnel zone model [9], to capture human poses in the whole space, we need at least 2 pairs of transceivers. Hence in this paper, we utilize 3 commodity WiFi devices (one transmitter and two receivers) to capture the raw CSI data which contains human pose information. To collect more information, we set 1 transmitting antenna at the transmitter, 3 receiving antennas at each receiver. In order to improve resolution and capture pose-related features efficiently, the 2 pairs of transceivers are mutually perpendicular and the transmitter is placed at the intersection. During collecting CSI data, we collect synchronous video frames using a monocular camera, which are utilized to extract 3D key-point coordinates as the ground truth to train the proposed neural network.

III-B Data Processing

III-B1 Link Selection

We observe that there is always an antenna which receives CSI with a larger variance value than others. It means this antenna has larger dynamic responses. So we choose it as the reference and make use of its amplitude information.

III-B2 Denoising

In reality, there are multiple paths between a pair of transceiver. In an ideal state, the response of the wireless channel at time and frequency can be expressed as:

| (1) |

where is the number of multipath, and are the complex attenuation and time of flight for the -th path, respectively.

According to whether the length of the path changes, CSI can be divided into two parts, the static path and the dynamic path components, which can be expressed as:

| (2) |

where is the sum of responses of all static paths including LoS and other static reflection paths, is the collection of dynamic paths which are not constant over time. Our purpose is to extract the dynamic path component.

We cannot directly utilize raw CSI to capture human poses. Because compared with the signals of LoS and other static paths in raw CSI measurements, pose-related signals are too week and easily influenced by unpredictable interference. To improve the accuracy of pose estimation, we eliminate interference and extract the dynamic paths corresponding to humans.

For more denoising details, please refer to the previous work of our team in [7].

III-B3 Segmentation

In order to capture continuous human poses, we segment the processed CSI according to the synchronous video frames and reconstruct CSI images, as shown in Fig. 1. The CSI images contain an amplitude channel and a phase channel. The amplitude can reflect people’s movements, while the phase is more used to obtain changes in position. To extract pose information and weaken the influence of position changes, we combine them to provide both pose and position information for the neural network.

IV Neural Network Design

In this paper, we design a neural network to extract features and convert them into key-point coordinates. In order to reduce the influence of position changes on pose estimation, different from [8], we choose to directly regress the key-points, similar to the method in computer vision.

IV-A Data and Annotations

To accurately and intuitively associate CSI data with human poses, we use a camera synchronized with a receiver to capture video frames. Then, we apply AlphaPose [10] and VideoPose3D [11] to get the 3D key-point coordinates from the video frames. Since our goal is to reconstruct 3D moving human pose skeletons, we choose key-point coordinates as the annotation rather than the whole skeleton. Because the limbs are rigid, locating key-points can be more accurate and prevent overfitting.

IV-B Network Framework

The design of our neural network must consider the time correlation of human poses and the spatial position of the human body. In addition, the spatial resolution of WiFi signals is low, which makes it difficult to capture complete human poses from just a single CSI sample. To solve these problems, we make the network learn to aggregate information from multiple CSI samples instead of taking a single CSI sample as input.

We design a neural network to convert CSI data into 3D human key-point coordinates, including a feature extraction network and a key-point regression network. Because the input CSI images contain a phase channel which increases the amount of data and introduces linear constraints, the feature extraction network should be able to extract sufficiently deep features.

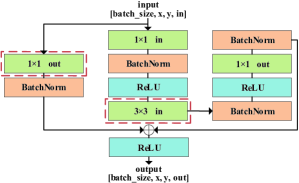

Therefore, in the feature extraction network, we use a residual network, which contains 13 residual blocks as shown in Fig. 2, to extract features related to human poses. Because of its special structure, the residual network can avoid gradient explosion and disappearance caused by the deepening of the network. In the key-point regression network, we utilize two fully connected layers to integrate feature information, and finally convert these features into key-point coordinates. The details of the network are shown in Table I.

Take a set of synchronized CSI data and key-point coordinates as an example, where denotes the CSI images from two pairs of transceivers and denotes the corresponding key-point coordinates from video frames.

In the training stage, we feed into the proposed neural network and get the predicted 3D human key-point coordinates . Then we use as an annotation and compare with it to optimize the entire network.

We define the training process as minimizing the average Euclidean distance error between predicted joints and the ground truth, so we first define the position loss as the norm between the predicted joints and the ground truth:

| (3) |

where and are the predicted and real coordinate of joint in time slot , is the joint number in the model we use, and means the number of data samples.

| Network | Input Size | Output Size | Stride |

|---|---|---|---|

| BLOCK1 | 30204 | 30204 | 11 |

| BLOCK2 | 30204 | 30208 | 11 |

| BLOCK3 | 30208 | 15108 | 22 |

| BLOCK4 | 15108 | 151016 | 11 |

| BLOCK5 | 151016 | 8516 | 22 |

| BLOCK6 | 8516 | 8564 | 11 |

| BLOCK7 | 8564 | 4364 | 22 |

| BLOCK8 | 4364 | 43256 | 11 |

| BLOCK9 | 43256 | 22256 | 22 |

| BLOCK10 | 22256 | 221024 | 11 |

| BLOCK11 | 221024 | 111024 | 22 |

| BLOCK12 | 111024 | 112048 | 11 |

| BLOCK13 | 112048 | 112048 | 11 |

| FC1 | 112048 | 1512 | - |

| FC2 | 1512 | 151 | - |

-

1

1. Stride just applies to the two layers inside the red box in Fig. 2.

-

2

2. Other layers’ stride is always .

Since our network regresses the key-points directly, we introduce Huber Loss in the loss function. Huber Loss is a parameterized loss function for regression problems. It can enhance and reduce the interference of outliers. The Huber Loss in our loss function is expressed as:

| (4) |

where means the Huber norm. It is defined as:

| (5) |

where:

| (6) |

The is a parameter of the Huber Loss and set to 0.75 in our experiment.

Finally, the loss function is defined as:

| (7) |

We use the Adam [12] optimizer to optimize the loss function in our network.

IV-C Network Settings and Training

We collect CSI data at 150Hz and video frames at 30Hz, which means every 5 CSI samples in each receiver are synchronized with one video frame by timestamps. Consider the continuity of movement and the efficiency of training, we use 5 strictly corresponding CSI samples and the preceding 15 samples to correspond to one video frame. Therefore, the final result is 20 unique CSI samples corresponding to one video frame, that is, the final generated action is 7.5 Hz.

The structure of the neural network is shown in Table I. The feature extraction part consists of 13 residual blocks shown in Fig. 2. We add a batch normalization layer after each convolution. In order to add non-linearity to the model, we use Rectified Linear Unit (ReLU) activation functions after each batch normalization layer. To improve training efficiency, we set the stride of the two layers inside the red box in Fig. 2 to 22 in the 3rd, 5th, 7th, 9th, and 11th residual blocks, and all other strides to 11. In addition, in these layers, we perform a convolution operation (kernel size is 11, stride is 22) on the input to make it match the size of the output. After the residual network, two fully connected layers convert high-dimensional features into the key-point coordinates.

V Baseline

WiPose proposed in [8] is currently the state-of-the-art 3D human pose estimation system based on commodity WiFi. In this paper, we apply the deep learning model proposed in WiPose as our baseline. The model contains four-layer Convolutional Neural Network (CNN) and three-layer Long Short Term Memory network (LSTM) on top of the CNN. The system applies the output of LSTM to the initial skeleton model to obtain the current poses according to forward kinematics. In [8], the author proposes two input data formats. Because our system uses 2D CSI data, we only use 2D CSI data to train the model in [8].

VI Experiment and Evaluation

VI-A Setup

Our experimental site is a 7m8m basement. We use 3 transceivers work in the 5GHz frequency with 20MHz bandwidth and we install CSI-Tool [1] on all the transceivers. The two receivers are synchronized using the Network Time Protocol (NTP). And we connect a monocular camera to a receiver to record video frames. Throughout the experiment, other wireless signals are still operated.

VI-B Dataset

The dataset contains 10 hours of data for 5 people with different heights, weights, clothing, and genders, which means that we finally collected 5,400,000 CSI samples at each receiver. We ask each volunteer to perform continuous poses in the room. Meanwhile, the camera will record their poses. Then we obtain the joints synchronized with CSI samples through automatic annotations for joints (AlphaPose and VideoPose3D). For the data of the four persons who are chosen as the training subjects, 75% is used as the training set to train the network and the remaining 25% is used as the test set to test the model. The data of the last person is used for testing the generalization of our model.

| Joints | MidHip | LHip | LKnee | LAnkle | RHip | RKnee | RAnkle | Back | Neck | Nose | Head | LShoulder | LElbow | LWrist | RShoulder | RElbow | RWrist | Overall |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| WiPose | 17 | 30 | 36 | 57 | 34 | 40 | 60 | 31 | 13 | 25 | 38 | 26 | 45 | 53 | 24 | 43 | 67 | 37.6 |

| Wi-Mose | 16 | 22 | 32 | 49 | 23 | 31 | 50 | 18 | 14 | 24 | 36 | 19 | 31 | 46 | 18 | 24 | 52 | 29.7 |

VI-C Performance

To measure the performance, we introduce P-MPJPE which performs Procrutes analysis before calculates MPJPE. We observe that compared with the ground truth, some constructed 3D human pose coordinates have slight and global offset. The reason is that we directly regress key-point coordinates, which introduces an error independent of poses. Since P-MPJPE is more suitable for moving human pose estimation, we utilize it to weaken the effect of the attitude-independent error.

VI-C1 Basic Scenario

We first evaluate the performance in the basic scenario which is the LoS scenario. The left part of Fig. 3 shows a test example of the constructed skeletons of a person who continuously walks in different poses and directions.

Table II reports the P-MPJPE for the basic scenario. We not only calculate the errors for each joint but also measure the overall performance by averaging them. The results show that the proposed system performs much better than the baseline. The overall P-MPJPE of our system is 29.7mm, while that of the baseline is 37.6mm. Note that the error of the root joint in WiPose, which is set to Neck in our model, is smaller than that in Wi-Mose because of the introduction of forward kinematics. The positioning accuracy of other points of Wi-Mose is higher than that of the baseline. For both baseline and the presented system, it is more difficult to locate the joints farther from the trunk, since the reflected signals from these parts are weaker than those from closer parts. Another reason is that these parts of human body have smaller reflection areas and always have a larger moving range in our dataset, which makes it much harder to locate.

The upper part of Fig. 4 shows a test example in different perspectives. Compared with 2D pose estimation, we can show the poses in all perspectives, even if the limbs are obscured. The results show that Wi-Mose is more suitable to capture 3D human pose throughout the space.

VI-C2 Occluded Scenario

In order to verify the performance of Wi-Mose in the occluded environment (the NLoS scenario), we add a wooden screen between the subject and the receiver. The distribution of training and test data is the same as the basic environment. A test example is shown in the right part of Fig. 3. The overall P-MPJPE result of Wi-Mose is 37.8mm, while that of the baseline is 42.0mm, indicating that Wi-Mose outperforms the baseline. Thanks to the penetration of WiFi, we can still receive signals even if there are obstructions. Because the signals will attenuate when passing through the wooden screen, the final error will be larger than the basic scenario. In the NLoS scenario, some poses estimated by the baseline are obviously wrong. The reason is that the baseline is more susceptible to position changes than Wi-Mose, especially when there is less information.

The bottom part of Fig. 4 shows a test example in different perspectives. The results indicate that Wi-Mose can capture high-precision 3D moving human poses in the occluded scenario.

VI-C3 Cross-subject Scenario

Wi-Mose performs well in cross-subject scenario. We collect 5 people’s data in both the basic and occluded scenarios. When we train the network in each scenario, we feed the data of the first four people into the network, and then, use the last person’s data for testing. It should be noted that there are obvious differences in height and weight of the five people. The result is in Table III. As we can see, the performance of the proposed Wi-Mose framework is slightly better than the baseline. Compared with the basic and occluded scenarios, the performance of our system is degraded a little larger than that of the baseline. The reason is that we use the network loss to constrain the pose estimation, which is a weak constraint. While the baseline applies the preset skeleton model and the network loss to constrain the poses, which is a strong constraint. This makes our model more flexible in dealing with full-space scenarios, but less satisfactory in cross-subject pose estimation.

VII Conclusion

In this paper, we present Wi-Mose, the first high-precision 3D moving human pose estimation system using commodity WiFi devices. We construct CSI images which contain both pose and position information so that the neural network can extract features which is related to poses but independent of position. Moreover, we design a neural network to extract features from CSI images and convert them into key-point coordinates. The experiment results show that Wi-Mose achieves 29.7mm and 37.8mm P-MPJPE in the LoS and NLoS scenarios, which is 21% and 10% increased in accuracy compared with the baseline, respectively. In the future, we will prove that Wi-Mose can also construct high-precision 3D moving human pose skeletons in different environments.

| Scenarios | Model | Overall |

|---|---|---|

| Basic | WiPose | 43.7 |

| Wi-Mose | 42.6 | |

| Occluded | WiPose | 50.7 |

| Wi-Mose | 46.8 |

References

- [1] D. Halperin, W. Hu, A. Sheth, and D. Wetherall, “Tool release: Gathering 802.11n traces with channel state information,” ACM SIGCOMM Computer Communication Review, vol. 41, no. 1, pp. 53–53, 2011.

- [2] Y. Xie, J. Xiong, M. Li, and K. Jamieson, “mD-Track: Leveraging multi-dimensionality for passive indoor Wi-Fi tracking,” in The 25th Annual International Conference on Mobile Computing and Networking, pp. 1–16, 2019.

- [3] L. Guo, X. Wen, Z. Lu, X. Shen, and Z. Han, “WiRoI: Spatial region of interest human sensing with commodity WiFi,” in 2019 IEEE Wireless Communications and Networking Conference (WCNC), pp. 1–6, 2019.

- [4] S. Palipana, D. Rojas, P. Agrawal, and D. Pesch, “FallDeFi: Ubiquitous fall detection using commodity Wi-Fi devices,” Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, vol. 1, no. 4, pp. 1–25, 2018.

- [5] M. Zhao, T. Li, M. A. Alsheikh, Y. Tian, and D. Katabi, “Through-wall human pose estimation using radio signals,” in Computer Vision and Pattern Recognition (CVPR), 2018.

- [6] M. Zhao, Y. Tian, H. Zhao, M. A. Alsheikh, T. Li, R. Hristov, Z. Kabelac, D. Katabi, and A. Torralba, “RF-based 3D skeletons,” in Proceedings of the 2018 Conference of the ACM Special Interest Group on Data Communication, pp. 267–281, 2018.

- [7] L. Guo, Z. Lu, X. Wen, S. Zhou, and Z. Han, “From signal to image: Capturing fine-grained human poses with commodity Wi-Fi,” IEEE Communications Letters, vol. 24, no. 4, pp. 802–806, 2019.

- [8] W. Jiang, H. Xue, C. Miao, S. Wang, and L. Su, “Towards 3D human pose construction using WiFi,” in MobiCom ’20: The 26th Annual International Conference on Mobile Computing and Networking, 2020.

- [9] D. Wu, D. Zhang, C. Xu, H. Wang, and X. Li, “Device-free WiFi human sensing: From pattern-based to model-based approaches,” IEEE Communications Magazine, vol. 55, no. 10, pp. 91–97, 2017.

- [10] H.-S. Fang, S. Xie, Y.-W. Tai, and C. Lu, “RMPE: Regional multi-person pose estimation,” in Proceedings of the IEEE International Conference on Computer Vision, pp. 2334–2343, 2017.

- [11] D. Pavllo, C. Feichtenhofer, D. Grangier, and M. Auli, “3D human pose estimation in video with temporal convolutions and semi-supervised training,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 7753–7762, 2019.

- [12] D. Kingma and J. Ba, “Adam: A method for stochastic optimization,” Computer ence, 2014.

- [13] M. Abadi, P. Barham, J. Chen, Z. Chen, A. Davis, J. Dean, M. Devin, S. Ghemawat, G. Irving, M. Isard, et al., “Tensorflow: A system for large-scale machine learning,” in 12th USENIX symposium on operating systems design and implementation (OSDI 16), pp. 265–283, 2016.