From masses and radii of neutron stars to EOS of nuclear matter through neural network

Abstract

The equation of state (EOS) of dense nuclear matter is a key factor to determine the internal structure and properties of neutron stars. However, the EOS of high-density nuclear matter has great uncertainty mainly because the terrestrial nuclear experiments cannot reproduce matter as dense as that in the inner core of a neutron star. Fortunately, continuous improvements in astronomical observations of neutron stars provide the opportunity to inversely constrain the EOS of high-density nuclear matter. A number of methods have been proposed to implement this inverse constraint, such as the Bayesian analysis algorithm, the Lindblom’s approach, and so on. Neural network algorithm is an effective new method developed in recent years. By employing a set of isospin-dependent parametric EOSs as the training sample of neural network algorithm, we set up an effective way to reconstruct the EOS with relative accuracy through a few mass-radius data. Based on the obtained neural network algorithms and according to the NICER observations on masses and radii of neutron stars with assumed precision, we get the inversely constrained EOS and further calculate the corresponding macroscopic properties of the neutron star. The results are basically consistent with the constraint on EOS from the Huth based on Bayesian analysis. Moreover, the results show that even though the neural network algorithm was obtained by using the finite parameterized EOS as the training set, it is valid for any rational parameter combination of the parameterized EOS model.

pacs:

97.60.Jd; 04.40.Dg; 04.30.-w; 95.30.SfI Introduction

Understanding the nature of neutron stars and determining the equation of state (EOS) of high-density asymmetric nuclear matter are both long-standing problems in nuclear physics and astrophysics Chen1 ; Li25 ; Li26 ; Li2019EPJA . The EOS of asymmetric nuclear matter is a key input for probing the structure and properties of neutron stars. However, the theoretical predictions on the EOS at supra-saturation densities diverge broadly. Except possible phase transitions, density dependence of the symmetry energy is the most uncertain part of the EOS, especially at supra-saturation densities Dieperink03PRC ; Li26 ; Li2019EPJA . Although significant progresses have been made in probing the symmetry energy in terrestrial nuclear laboratories Russotto2 ; LeFevre3 ; Adhikari4 , the inability to reproduce the authentic high-density neutron-rich nuclear matter restricts the full and accurate understanding of symmetry energy and EOS at supra-saturation densities.

Fortunately, many groundbreaking observational discoveries from scientific facilities in recent years have led to a new upsurge in understanding the neutron stars and EOS of high-density asymmetric nuclear matter. For example, the mass-radius measurement on neutron stars from the Neutron Star Interior Composition Explorer (NICER) and the gravitational radiation detection of binary neutron star mergers from LIGO and Virgo have taken the understanding of neutron stars and EOS of the asymmetric nuclear matter to a new level Miller5 ; Riley6 ; Pang7 ; Abbott8 . In the near future, the Large Observatory for X-ray Timing (LOFT), the Advanced Telescope for High Energy Astrophysics (ATHENA), the Square Kilometre Array (SKA) and Einstein Telescope (ET) will also bring more observations of neutron stars when they become operational Feroci40 ; Nandra41 ; Punturo42 ; Dewdney43 ; Rezzolla44 . It is believed that they will provide powerful insights in understanding the neutron stars and the EOS.

If we regard obtaining the properties of neutron stars from the EOS of nuclear matter as forward method, which normally can be numerically calculated according to the EOS and TOV equations for static spherically symmetrical stars. In combination with the inverse derivation from the mass-radius to EOS given in Ref. Lindblom9 using enthalpy, there is a one-to-one mapping between EOS and the mass-radius relation of neutron stars. That is, if a set of accurate mass-radius observations of neutron stars is obtained, the EOS of nuclear matter can be reversely achieved. But how can we reversely obtain the EOS from the properties of neutron stars? At present, there is no reliable and widely accepted method. The purpose of this work is to try to find an effective way reversely mapping the EOS of nuclear matter through the mass-radius relations of neutron stars.

Lindblom had pioneered the inverse TOV mapping to obtain the pressure-density sequence P() of the EOS from the accurate mass-radius sequence of a neutron star Lindblom9 . The reality is that the accurate observation data of the mass-radius of the neutron star is still very rare. As more advanced algorithms are introduced, a small amount of observation data can provide effective constraint on the EOS. For example, Ref. XuJPRC21 implemented constraints on EOS-related parameters within the framework of Bayesian analysis. Bayesian analysis is an algorithm for updating information by correcting existing information based on observed events. The use of this algorithm therefore requires ensuring both the correctness of the prior probabilities and the reasonableness of the observed events. This year, Huth also used this algorithm to achieve constraints on the EOS by combining data from heavy-ion collisions (HIC) with results from multi-messenger astronomical observations Huth11 . Furthermore, a substantial amount of Bayesian analysis work has been contributed to neutron star research YX12 ; Silva13 ; Xie14 .

Neural networks (NN) is a branch of machine learning (ML) and a computing system inspired by biological neural networks Amari17 . It has the advantages of Bayesian analysis algorithm and polynomial fitting algorithm. Only a few less precise observations are needed to obtain the effective constraint through NN, which can also be used to calculate other properties of neutron stars. In the 1990s, NN was pioneered by Clark and collaborators for research related to nuclear physics Gazula92 ; Gazula93 ; Gazula95 ; Gazula99 . This algorithm is now widely used in theoretical prediction work to improve the accuracy of nuclear mass and nuclear charge radii measurements ZhaoNPA22 ; Niu18 ; Utama16PRC ; Utama16JPG . In the field of nuclear astrophysics, this algorithms have also been introduced into neutron star research. For example, the inverse TOV mapping of NN was constructed by using the EOS with sound velocity segmentation in Ref. Fujimoto16 , the reconstructed EOS was implemented with NN at 1 to 7 times the saturated nuclear density in Ref. Soma22AX , Krastev constructed NNs from mass-radius and mass-tidal deformations to symmetry energy Krastev15 , and NN from nuclear parameters to neutron star properties was constructed in Ref. Thete22AX . Additionally, the mapping from X-ray spectra to EOS parameters was achieved by using NN in Ref. Farrell22AX .

Although much of the NN work has given exciting results, many questions remain unsolved. For example, the plausibility of the symmetry energy parameters of the EOS after noise addition, the implementation of the NN prediction function and its general applicability, and the validation of the effectiveness. In this work, we will construct a new NN based on the parametric EOS, which can be efficiently implemented for any combination of parameters satisfying terrestrial experiments and multi-messenger astronomical observations with a small number of mass-radius relations to inverse TOV mapping.

This paper is organized as follows. In Sec. II we briefly review the isospin-dependent parametric EOS and the basic formula for calculating the properties of neutron stars. The NN implementation of the inverse TOV mapping is presented in Sec. III. NN combined with NICER observational constraints are presented in Sec. IV. The summary and outlook are in Sec. V.

Unless otherwise stated, in the formulae we use the gravitational units ().

II Isospin-dependent parametric EOS and the fundamental formula of neutron stars

II.1 Isospin-dependent parametric EOS

Implementing a NN for inverse TOV mapping requires a large number of the EOS as training samples. Moreover, these EOS also need to meet the range of nuclear parameters as much as possible, such as , , etc. The isospin-dependent parametric EOS is able to satisfy both the need to generate a large number of EOS and the rationalization of nuclear parameters.

For asymmetric nuclear matter, the energy per nucleon can be expanded by isospin asymmetry Li25 ; Li26

| (1) |

where is the isospin asymmetry. The first term on the right side is usually referred to as the energy of symmetric nuclear matter, and the second term is referred to as the symmetry energy, which has the physical meaning of the energy difference per nucleon between asymmetric nuclear matter and pure neutron matter.

Usually, the two terms on the right side in Eq. (1) can be expanded at the saturation density Zhang18APJ ; Xie14

| (2.2) | |||

| (2.3) |

Constrained by terrestrial nuclear experiments, the most probable values of the parameters in the above equations are as follows: , , , , , Shlomo33 ; Piekarewicz34 ; Zhang35 ; Oertel36 ; Li37 . It is worth pointing out that in recent years, PREX-II has given higher values ( Adhikari4 ). This result is also considered later in this work.

The pressure of the system can be calculated numerically from

| (2.4) |

II.2 Fundamental formula of statically spherically symmetric neutron stars

Neutron stars in this work are considered to be isolated, non-rotating, and statically spherically symmetric. The Tolman-Oppenheimer-Volkoff (TOV) equations consist of the equation of hydrostatic equilibrium Oppenheimer19 ; Tolman20

| (2.5) |

and

| (2.6) |

The external boundary condition of the star is as follows: . Given the center density of the neutron star, it can be solved layer by layer from the interior to the exterior of the star using the method of solving high-precision initial value problems.

Tidal deformation of neutron star is an EOS-dependent property which can be measured through the gravitational wave events such as GW170817 Abbott18PRL . To linear order, the tidal deformation is defined as Flanagan22

| (2.7) |

where and represent the induced quadrupole moment and the static tidal field, respectively. The second tidal Love number is defined as

| (2.8) |

which can be solved together with the TOV equation Chamel23 ; Chamel24 .

III Neural network implementation of inverse TOV mapping

To clearly demonstrate the process of implementing an NN for inverse TOV mapping, we draw the flow chart as shown in Fig. 1. The first step requires the provision of a relatively complete training set. After determining the range of symmetry energy parameters, the massive EOS (output of NN) is generated by the method in Section II.1, while the corresponding mass-radius points (input of NN) are calculated by the TOV equation. The training set of NN is filtered by astronomical observations. In the second step, the training set is substituted into the initialized NN training to examine the loss. Adjust the key parameters of NN until the loss converges. The final step is to find the minimum number of neurons at the input, which means comparing the relative errors under different mass-radius point conditions. The different mass-radius points come from the non-training sample, which is the EOS in the non-training set and the corresponding mass-radius (consistent with the parameter range and filtering in the first step).

The most significant prerequisite for the implementation of this algorithm is the training sample processing. Four groups of variable parameters are used to generate training samples to cover as large EOS range as possible. The variable parameter space is as follows: , , , , , Shlomo33 ; Piekarewicz34 ; Zhang35 ; Oertel36 ; Li37 . It is especially worth noting that the slope range was developed by combining terrestrial Adhikari4 and astrophysical data. To obtain preliminary training samples, we generate EOS (Section II.1) by taking points at equal intervals in the above parameter interval, then solve the corresponding M-R with the TOV equation. Moreover, in order to make the output more reasonable, we used astronomical observation results during the sample generation stage: Cromartie20NatAs , Abbott18PRL , Miller5 corresponding to , Riley6 corresponding to . Similarly to Ref. Fujimoto_JHEP2021 considering the causality condition () and the stability condition (), if the maximum mass filter is then added, the sample size is comparable to that after the screening of astronomical observations as described above, with a limited improvement in the generalization ability. In the sample processing stage, due to the randomness of observations, we randomly sampled the mass-radius sequences over the whole mass range. Note that because it is more difficult to form low-mass neutron stars, as in Ref. Fujimoto_JHEP2021 the sampling point is set to be greater than 1 . But, considering the theoretical lower mass limit of neutron stars that can reach 0.1 Haensel_AA2002 , as well as recent observations of the HESS J1731-347 Doroshenko_NA2022 , a wider mass sampling interval was adopted. The final sample size involved in the NN is approximately 880,000, where M-R points are used as input and P- points are used as output.

| Layer | Number of neurons | Activation function |

|---|---|---|

| 0(Input) | 80 | N/A |

| 1 | 100 | ReLU |

| 2 | 300 | ReLU |

| 3 | 400 | ReLU |

| 4 | 400 | ReLU |

| 5 | 400 | ReLU |

| 6 | 300 | ReLU |

| 7(Output) | 182 | tanh |

The NN was initialized with reference to the framework of Ref. Fujimoto16 and Krastev15 and according to the background of our training set. By adjusting the relevant parameters, such as the number of layers, the optimization function, the epoch size, etc., the loss convergence of the NN is achieved. We finalized the NN architecture as shown in Table 1. The 80 neurons in the input layer means that the NN can provide accurate predictions under the condition of 40 pairs of (M,R) points. The other key parameters for this NN are shown in Appendix A and the predicted results for the different input points are shown in Appendix B.

To verify the accuracy, we used several mass-radius sequences of the EOS to substitute into this NN for prediction. In particular, we use APR3 and APR4 to test the effectiveness of this NN for non-parameter EOS.

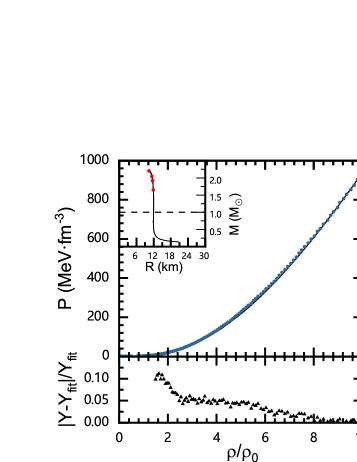

Fig. 2 shows the NN predictions for three adopted EOS. It is shown that our NN method achieves a relatively accurate prediction function with approximately 40 mass-radius points as input. The solid black line for the (a) panel equation of state is randomly generated and substituted into Eq.(2.5) to give the solid black line for the mass-radius sequence. Additionally, We also test some EOS other than the parameterized EOS and find that some of them can also be predicted well, as shown in (b) and (c) in Fig. 2 for APR3 and APR4 EOS. This result means that our NN method will no longer be limited to parametric EOS even though we use only parametric EOS as training samples.

For comparison with the results in Ref. Krastev15 , we also probe the output of NN by setting the parameters of EOS in the panel, where , and and take a range of and , respectively. Using the same sample generation process as for the panel, the sample size at this point is approximately 8,000. Fig. 3 shows the NN predictions for the randomly selected test EOS with the parameters limited in the previously mentioned range. We try to use as few input samples as possible to achieve the prediction function. Here the prediction function for the full density segment is implemented in the NN with 4 rows of mass-radius sequences as input (the data of the input is shown in Table 2). Since the low-dimensional parameter space is simpler compared to the high-dimensional, e.g., the same (M, R) point corresponds to fewer EOS, only four output points and some parameters of the NN in the simplified Table 1 are needed to implement the function. The above results show that our NN design is more suitable for inverse TOV mapping under isospin-dependent parametric EOS conditions.

| Mass () | Radius (km) |

|---|---|

| 1.650030 | 11.8838 |

| 1.916370 | 11.6289 |

| 2.046519 | 11.3741 |

| 2.201511 | 10.3368 |

IV Combining NICER observations to verify the validity of NN

To validate the predictions of the inverse TOV mapping-type NNs, prediction EOS from NICER observations are shown and discussed in this section within the parametric EOS framework as well as adopting the results of Refs. Abbott8 and Huth11 . Furthermore, due to the insufficient number of observations at the neutron star mass-radius, the following approach was adopted to satisfy the NN input points: 1. The EOS is generated in batch with parametric EOS within the range of nuclear parameters (, , , ) mentioned in Sec. III; 2. Take one EOS that fits near the most probable point of the two NICER observations ( Miller21APJ , Riley19APJ ); 3. Forty points were randomly taken on the M-R curve corresponding to this EOS. However, for the real situation, 40 precise (M,R) observations from different neutron stars are necessary for NN input. It is expected that such accurate and sufficient observations will be available in the near future.

The NN-based predicted EOS together with further calculated dimensionless tidal deformations as a function of stellar mass are displayed in Fig. 4(a). To demonstrate the effect of NN, we also plot the constraint on EOS from Huth Huth11 and of canonical neutron star () from GW170817 Abbott8 . It is shown that the predictions obtained under the assumption of observational accuracy prefer relatively stiff EOS at high density, which is basically in agreement with the results of Ref. Huth11 . Further calculation of the predicted EOS shows that the canonical neutron star has a dimensionless tidal deformation of , indicating a high tidal deformability. From Fig. 4(b), we can see that when the stellar mass is less than 1.6 , the tidal deformability decreases relatively quickly as the mass increases, when the mass is higher than 1.6 , the tidal deformability decreases relatively slowly with the increase of mass. For massive neutron stars with , its tidal deformation is , which is far smaller than that of canonical neutron stars.

V Summary and Outlook

The NN algorithms to implement the inverse mapping TOV equation is constructed by employing the parametric EOS as the training sample. Through the practical application of the obtained neural network algorithm, we get the following results.

-

(i)

We implemented the inverse constraint EOS of NN in 4-dimensional parameter space () and 2-dimensional parameter space (), respectively. About 40 mass-radius points are required to output higher precision EOS under the condition that is a variable. Four mass-radius points are required to output a higher precision EOS with as the variable.

-

(ii)

The EOS based on the obtained NN algorithm and the two sets of NICER observational values with assumed precision is predicted. Similar to the results from other method, the EOS constrained by the NICER observation and NN is relatively stiff, and the corresponding tidal deformability of canonical neutron star is relatively high. Our NN predictions are basically consistent with the results of Huth Huth11 .

Despite the generalisation ability of the algorithm we provide, the output of the neural network is difficult to ensure credible results when the EOS is other than the isospin-dependent parametric EOS. To fill this gap, more types of EOSs need to be added to the training samples in the future. We believe that by combining precise observations from multiple sources, NN will be a promising tool for achieving more precise constraints on the EOS of nuclear matter.

Acknowledgements.

This work is supported by NSFC (Grants No. 12375144 and 11975101), Guangdong Natural Science Foundation (Grants No. 2022A1515011552 and 2020A151501820).Appendix A Neural network implementation

NN, one of the common algorithms in ML, is now used in almost every aspects of scientific research and engineering Wright28 . Based on the universal approximation theorem, NN can implement complex nonlinear mappings Hornik29 ; Leshno30 . Its construction is mainly divided into an input layer, a hidden layer and an output layer. Each neuron can be treated as a container of numbers. Similar to the transmission of electrical signals between synapses, neurons are using numbers as signals between them. Starting from the input layer, each layer of neurons goes through the following process between each other

| (2) |

where represents the weight, represents the serial number of layers, and represents the bias value Goodfellow . The calculated is then substituted into the activation function to obtain the value of the output of the neuron to the next layer:

| (3) |

where is called the activation function and is usually used as Sigmoid or ReLU, the latter being adopted in this work. When summarizing the operations of the whole layer of neurons, we can obtain the following expression

| (4) |

At this point, the NN has completed one forward propagation. We also need a metric to evaluate how well the output compares to the true value. This type of evaluation metric is called the loss function, in which we usually use the mean squared error () method . The MSE of each batch is equivalent to the construction drawing, guiding the optimizer to continuously adjust a huge number of weights () and biases () in the NN. The available optimizers are Stochastic Gradient Descent (SGD) and Adam Adam , the latter being adopted in this paper.

The platform for our implementation of NN is Keras, with Tensorflow as its backend Keras ; Tensorflow . It integrates the excellent features of Compute Unified Device Architecture (CUDA) for parallel computing on GPU with Tensorflow as the backend thus providing a rich interface. The training of the NN was performed on NVIDIA GeForce GTX 1650. It can significantly save the time cost of computing while implementing complex NN. To prevent overfitting, Dropout is set to 0.3 between layers. Furthermore, we chose an initial learning rate of 0.0003, a batch size of 500, and an epoch of 800. The proportion of the training dataset used for the validation dataset was 0.1. The hidden layer is used as a fully connected layer. Based on the above conditions, we have designed six layers of NN to achieve inverse TOV mapping. All the data in the NN need to be normalized, which in this paper is used as (M3, R35) and (()40, ). The EOS is taken logarithmic in order to avoid too large a gap in pressure magnitude between different density intervals affecting the prediction accuracy of the NN. For ease of calculation both P and are taken as logarithmic results in and respectively.

Appendix B Results for different input points

We gradually reduce the input points after achieving loss convergence, while improving the relevant parameters of NN. Within the conventional NN framework, most of the improvements to achieve convergence of the NN can only affect the convergence rate of the loss cannot affect the minimum number of input points, such as increasing the number of hidden layers. As the number of neurons in the input layer continues to decrease, a significant error occurs in the panel for 35 pairs of (M,R) (see Fig. 5), while a significant error occurs in the panel for 3 pairs of (M,R) (see Fig. 6). It is important to note that over-concentration of (M,R) sampling points affects the NN predictions. Such sampling is a small probability event in astronomical observations.

Data Availability Statement: The main code used in this work can be found in the Github repository https://github.com/zhscut/NN-for-Neutron-Star.

References

- (1) L. W. Chen, C. M. Ko and B. A. Li, Phys. Rev. Lett. 94, 032701. (2005).

- (2) B. A. Li, X. Han, Phys. Lett. B 727, 276-281 (2013).

- (3) B. A. Li, L. W. Chen and C. M. Ko, Phys. Rep. 464, 113-281 (2008).

- (4) B. A. Li, P. G. Krastev, D. H. Wen, and N. B. Zhang, (2019), arXiv:1905.13175.

- (5) A.E.L. Dieperink, Y. Dewulf, D. Van Neck, M. Waroquier, V. Rodin, Phys. Rev. C 68 064307. (2003).

- (6) P. Russotto, S. Gannon, and S. Kupny, ., Phys. Rev. C 94, 034608 (2016).

- (7) A. Le Fevre, Y. Leifels, ., Nucl. Phys. A 945, 112-133 (2016).

- (8) D. Adhikari, H. Albataineh, ., Phys. Rev. Lett. 126, 172502. (2021).

- (9) M. C. Miller ., Astrophys J. Lett. 918, L28 (2021).

- (10) T. E. Riley ., Astrophysical J. Lett. 918, L27 (2021).

- (11) P. T. Pang, I. Tews, and M. W. Coughlin ., Astrophysical. J. 922, 14 (2021).

- (12) B. P. Abbott ., Phys. Rev. lett. 121, 161101 (2018).

- (13) M. Feroci, L. Stella, ., Experimental Astronomy 34, 415-444 (2012).

- (14) http://www. the-athena-x-ray-observatory. eu.

- (15) M. Punturo, M. Abernathy, ., Classical and Quantum Gravity 27, 194002 (2010).

- (16) P. E. Dewdney, P. J. Hall, ., Proceedings of the IEEE 97, 1482-1496 (2009).

- (17) L. Rezzolla, P. Pizzochero, ., Springer International Publishing.(2018).

- (18) L. Lindblom, Astrophysical. J. 398, 569-573 (1992).

- (19) Y. Fujimoto, K. Fukushima, and K. Murase, Phys. Rev. D 98, 023019 (2018).

- (20) J. Xu, Z. Zhang, B. A. Li, Phys. Rev. C 104(5), 054324 (2021).

- (21) S .Huth, P. T. Pang, ., Nature 606, 276-280 (2022).

- (22) Y. Li, H. Chen, D. Wen, ., Eur. Phys. J. A 57 1-10 (2021).

- (23) H. O. Silva, A. M. Holgado, A. Cardenas-Avendano and N. Yunes, Phys. Rev. Lett. 126, 181101 (2021).

- (24) W. J. Xie, B. A. Li, Astrophysical J. 883, 174 (2019).

- (25) S. I. Amari, M. A. Arbib, Systems neuroscience, 119-165 (1977).

- (26) S. Gazula, J. Clark, and H. Bohr, Nucl. Phys. A 540, 1 (1992).

- (27) K. Gernoth, J. Clark, J. Prater, and H. Bohr, Phys. Lett. B 300, 1 (1993).

- (28) K. Gernoth and J. Clark, Neural Networks 8, 291 (1995).

- (29) J. W. Clark, T. Lindenau, and M. Ristig, Springer Lecture Notes in Physics, Vol. 522 (Springer-Verlag, Berlin, 1999).

- (30) T. Zhao, H. Zhang, Nucl. Phys. A 1021, 122420 (2022).

- (31) Z.M. Niu, H.Z. Liang, Phys. Lett. B 778 48. (2018).

- (32) R. Utama, J. Piekarewicz, H.B. Prosper, Phys. Rev. C 93 014311 (2016).

- (33) R. Utama, W.C. Chen, J. Piekarewicz, J. Phys. G, Nucl. Part. Phys. 43 114002 (2016).

- (34) S. Soma, L. Wang, S. Shi, ., arXiv preprint arXiv:2201.01756. (2022).

- (35) P. G. Krastev, Galaxies 10, 16 (2022).

- (36) A. Thete, K. Banerjee, T. Malik, arXiv:2208.13163. (2022).

- (37) D. Farrell, P. Baldi, J. Ott, A. Ghosh, ., arXiv:2209.02817. (2022).

- (38) N. B. Zhang, B. A. Li, J. Xu, Astrophysical. J. 859(2), 90. (2018).

- (39) N. B. Zhang, B. J. Cai, B. A. Li, W. G. Newton, and J. Xu, Nucl. Sci. Technol. 28, 181 (2017).

- (40) S. Shlomo, V. M. Kolomietz, G. Colo, Eur. Phys. J. A, 30(1), 23-30 (2006).

- (41) J. Piekarewicz. Journal of Physics G Nuclear Physics. 37, 064038 (2010).

- (42) M. Oertel, M. Hempel, T. Klähn, and S. Typel, Rev. Mod. Phys. 89, 015007 (2017).

- (43) B. A. Li, Nuclear Physics News, 27, 7 (2017).

- (44) J. W. Negele, D. Vautherin, Nucl. Phys. A 207, 298-320 (1973).

- (45) G. Baym, C. Pethick, P. Sutherland, Astrophysical. J. 170, 299. (1971).

- (46) J. R. Oppenheimer, G. M. Volkoff, Phys. Rev. 55, 374 (1939).

- (47) R. C. Tolman, Phys. Rev. 55, 364 (1939).

- (48) B. P. Abbott, R. Abbott, T. D. Abbott, ., Phys. Rev. Lett. 121, 161101. (2018).

- (49) E. E. Flanagan, T. Hinderer, Phys. Rev. D 77, 021502(R) (2008).

- (50) T. Hinderer, Astrophysical. J. 677, 1216.(2008).

- (51) T. Hinderer, B. D. Lackey, R. N. Lang, J. S. Read, Phys. Rev. D 81, 123016 (2010).

- (52) H. T. Cromartie, E. Fonseca, S.M Ransom, ., Nat. Astron. 4: 72-76. (2020).

- (53) Y. Fujimoto, M. Nitta, Journal of High Energy Physics, 2021(9), 1-36. (2021).

- (54) P. Haensel, J. L. Zdunik, F. Douchin, Astron. Astrophys. 385, 301-307. (2002).

- (55) V. Doroshenko, ., Nat. Astron., 6(12), 1444-1451. (2022).

- (56) M. C. Miller, F. K. Lamb, A. J. Dittmann, ., Astrophys. J. Lett. 887, L24 (2019).

- (57) T. E. Riley, A. L. Watts, S. Bogdanov, ., Astrophys. J. Lett. 887, L21 (2019).

- (58) L. G. Wright, T. Onodera, and M. M. Stein, ., Nature, 601, 549-555 (2022).

- (59) K. Hornik, M. Stinchcombe, and H. White, Neural networks, 3, 551-560. 171 (1990).

- (60) M. Leshno, V. Y. Lin, ., Neural Networks, 6, 861-867.(1993).

- (61) I. Goodfellow, Y. Bengio, A. Courville Deep learning. (MIT press), (2016).

- (62) D. P. Kingma,J. Ba, Adam: A method for stochastic optimization. arXiv:1412.6980, (2014).

- (63) F. Chollet, "Keras: Deep learning library for theano and tensorflow," https://github.com/fchollet/keras (2015).

- (64) M. Abadi, A. Agarwal, P. Barham, ., Tensorflow: Large-scale machine learning on heterogeneous distributed systems. arXiv:1603.04467, (2016).