From graphs to DAGs: a low-complexity model and a scalable algorithm

Abstract

Learning directed acyclic graphs (DAGs) is long known a critical challenge at the core of probabilistic and causal modeling. The NoTears approach of Zheng et al. [ZARX18], through a differentiable function involving the matrix exponential trace , opens up a way to learning DAGs via continuous optimization, though with an complexity in the number of nodes. This paper presents a low-complexity model, called LoRAM for Low-Rank Additive Model, which combines low-rank matrix factorization with a sparsification mechanism for the continuous optimization of DAGs. The main contribution of the approach lies in an efficient gradient approximation method leveraging the low-rank property of the model, and its straightforward application to the computation of projections from graph matrices onto the DAG matrix space. The proposed method achieves a reduction from a cubic complexity to quadratic complexity while handling the same DAG characteristic function as NoTears and scales easily up to thousands of nodes for the projection problem. The experiments show that the LoRAM achieves efficiency gains of orders of magnitude compared to the state-of-the-art at the expense of a very moderate accuracy loss in the considered range of sparse matrices, and with a low sensitivity to the rank choice of the model’s low-rank component.

1 Introduction

The learning of directed acyclic graphs (DAGs) is an important problem for probabilistic and causal inference [Pea09, PJS17] with important applications in social sciences [MW15], genome research [SB09] and machine learning itself [PBM16, ABGLP19, SG21]. Through the development of probabilistic graphical models [Pea09, BPE14], DAGs are a most natural mathematical object to describe the causal relations among a number of variables. In today’s many application domains, the estimation of DAGs faces intractability issues as an ever growing number of variables is considered, due to the fact that estimating DAGs is NP-hard [Chi96]. The difficulty lies in how to enforce the acyclicity of graphs. Shimizu et al. [SHHK06] combined independent component analysis with the combinatorial linear assignment method to optimize a linear causal model (LiNGAM) and later proposed a direct and sequential algorithm [SIS+11] guaranteeing global optimum of the LiNGAM, for complexities.

Recently, Zheng et al. [ZARX18] proposed an optimization approach to learning DAGs. The breakthrough in this work, called NoTears, comes with the characterization of the DAG matrices by the zero set of a real-valued differentiable function on , which shows that an matrix is the adjacency matrix of a DAG if and only if the exponential trace satisfies

| (1) |

and thus the learning of DAG matrices can be cast as a continuous optimization problem subject to the constraint . The NoTears approach broadens the way of learning complex causal relations and provides promising perspectives to tackling large-scale inference problems [KGG+18, YCGY19, ZDA+20, NGZ20]. However, NoTears is still not suitable for large-scale applications as the complexity of computing the exponential trace and its gradient is . More recently, Fang et al. [FZZ+20] proposed to represent DAGs by low-rank matrices with both theoretical and empirical validation of the low-rank assumption for a range of graph models. However, the adaptation of the NoTears framework [ZARX18] to low-rank model still yields a complexity of due to the DAG characteristic function in (1).

The contribution of the paper is to propose a new computational framework to tackle the scalability issues faced by the low-rank modeling of DAGs. We notice that the Hadamard product in characteristic functions as in (1) poses real obstacles to scaling up the optimization of NoTears [ZARX18] and NoTears-low-rank [FZZ+20]. To address these difficulties, we present a low-complexity model, named Low-Rank Additive Model (LoRAM), which is a composition of low-rank matrix factorization with sparsification, and then propose a novel approximation method compatible with LoRAM to compute the gradients of the exponential trace in (1). Formally, the gradient approximation—consisting of matrix computation of the form , where and is a thin low-rank matrix—is inspired from the numerical analysis of [AMH11] for the matrix action of . We apply the new method to the computation of projections from graphs to DAGs through optimization with the differentiable DAG constraint.

Empirical evidence is presented to identify the cost and the benefits of the approximation method combined with Nesterov’s accelerated gradient descent [Nes83], depending on the considered range of problem parameters (number of nodes, rank approximation, sparsity of the target graph).

The main contributions are summarized as follows:

-

•

The LoRAM model, combining a low-rank structure with a flexible sparsification mechanism, is proposed to represent DAG matrices, together with a DAG characteristic function generalizing the exponential trace of NoTears [ZARX18].

-

•

An efficient gradient approximation method, exploiting the low-rank and sparse nature of the LoRAM model, is proposed. Under the low-rank assumption (), the complexity of the proposed method is quadratic () instead of as shown in Table 1. Large efficiency gains, with insignificant loss of accuracy in some cases, are demonstrated experimentally in the considered range of application.

2 Notation and formal background

A graph on nodes is defined and denoted as a pair , where and . By default, a directed graph is simply referred to as a graph. The adjacency matrix of a graph , denoted as , is defined as the matrix such that if and otherwise. Let be any weighted adjacency matrix of a graph on nodes, then by definition, the adjacency matrix indicates the nonzeros of ; the adjacency matrix is also the support of , denoted as , i.e., if and otherwise. The number of nonzeros of is denoted as . The matrix is called a DAG matrix if is the adjacency matrix of a directed acyclic graph (DAG). We define, by convention, the set of DAG matrices as follows: .

We recall the following theorem that characterizes acyclic graphs using the matrix exponential trace——where denotes the matrix exponential function. The matrix exponential will be denoted as and indifferently. The operator denotes the matrix Hadamard product that acts on two matrices of the same size by elementwise multiplications.

Theorem 1 ([ZARX18]).

A matrix is a DAG matrix if and only if

The following corollary is a straightforward extension of the theorem above:

Corollary 2.

Let be an operator such that: (i) and (ii) , for any . Then, is a DAG matrix if and only if .

In view of the property above, we will refer to the composition of and the operator as a DAG characteristic function. Next, we show some more properties (proof in Appendix A) of the exponential trace.

Proposition 3.

The exponential trace satisfies: (i) For all , and if and only if is a DAG matrix. (ii) is nonconvex on . (iii) The Fréchet derivative of at along any direction is

and the gradient of at is .

3 LoRAM: a low-complexity model

In this section, we describe a low-complexity matrix representation of the adjacency matrices of directed graphs, and then a generalized DAG characteristic function for the new matrix model.

In the spirit of searching for best low-rank singular value decompositions and taking inspiration from [FZZ+20], the search of a full (DAG) matrix is replaced by the search of a pair of thin factor matrices , in for , and the candidate graph matrix is represented by the product . This matrix product has a rank bounded by , with number of parameters . However, the low-rank representation generally gives a dense matrix. Since in many scenarios the sought graph (or Bayesian network) is usually sparse, we apply a sparsification operator on in order to trim abundant entries in . Accordingly, we combine the two operations and introduce the following model.

Definition 4 (LoRAM).

Let be a given index set. The low-rank additive model (LoRAM), noted , is defined from the matrix product of sparsified according to :

| (2) |

where is a mask operator such that if and otherwise. The set is referred to as the candidate set of LoRAM.

The candidate set is to be fixed according to the specific problem. In the case of projection from a given graph to the set of DAGs, can be fixed as the index set of the given graph’s edges.

The DAG characteristic function on the LoRAM search space is defined as follows:

Definition 5.

Let denote the exponential trace function. We define by

| (3) |

where is one of the following elementwise operators:

| (4) |

Note that operators and (4) are two natural choices that meet the conditions (i)–(ii) of Corollary 2, since they both produce a nonnegative surrogate matrix of the matrix while preserving the support of .

3.1 Representativity

In the construction of a LoRAM matrix (2), the low-rank component of the model— with —has a rank smaller or equal to (equality attained when and have full column ranks), and the subsequent sparsification operator generally induces a change in the rank of the final matrix model (2). Indeed, the rank of depends on an interplay between and the discrete set . The following examples illustrate the two extreme cases of such interplay:

-

(i)

The first extreme case: let be the index set of the edges of a sparse graph , and let be the pair of matrices containing all ones, for , then the LoRAM matrix , i.e., the adjacency matrix of . Hence , which depends solely on and is generally much larger than .

-

(ii)

The second extreme case: let be the full index set, then reduces to the identity map such that and for any .

In the first extreme case above, optimizing LoRAM (2) for DAG learning boils down to choosing the most relevant edge set , which is an NP-hard combinatorial problem [Chi96]. In the second extreme case, the optimization of LoRAM (2) reduces to learning the most pertinent low-rank matrices , which coincides with optimizing the NoTears-low-rank [FZZ+20] model.

In this work, we are interested in settings between the two extreme cases above such that both and the candidate set have sufficient degrees of freedom. Consequently, the representativity of LoRAM depends on both the rank parameter and .

Next, we present a way of quantifying the representativity of LoRAM with respect to a subset of DAG matrices. The restriction to a subset is motivated by the revelation that certain types of DAG matrices—such as those with many hubs—can be represented by low-rank matrices [FZZ+20].

Definition 6.

Let be a given set of nonzero DAG matrices. For , let denote any LoRAM matrix (2) such that , then we define the relative error of LoRAM w.r.t. as

where denotes the matrix max-norm. For , is referred to as an -quasi DAG matrix.

Note that the existence of for any is guaranteed by the closeness of the image set of LoRAM (2).

Based on the relative error above, the relevance of the DAG characteristic function is established from the following proposition (proof in Appendix A):

Proposition 7.

Given a set of nonzero DAG matrices. For any such that , without loss of generality, the minima of

belong to the set

| (5) |

where is given in Definition 6 and for a constant .

Remark 8.

The constant in Proposition 7 can be seen as a measure of total capacity of passing from one node to other nodes, and therefore depends on , (bounded by ), the sparsity and the average degree of the graph of . For DAG matrices with sparsity and , one can expect that for a constant .

The result of Proposition 7 establishes that, under the said conditions, a given DAG matrix admits low rank approximations satisfying for a small enough parameter . In other words, the low-rank projection with a relaxed DAG constraint admits solutions.

The general case of projecting a non-acyclic graph matrix onto a low-rank matrix under a relaxed DAG constraint is considered in next section.

4 Scalable projection from graphs to DAGs

Given a (generally non-acyclic) graph matrix , let us consider the projection of onto the feasible set (5):

| (6) |

where is the LoRAM matrix (2), is given by Definition 5, and is a tolerance parameter. Based on Proposition 7 and given the objective function of (6), the solution to (6) provides a quasi DAG matrix closest to and thus enables finding a projection of onto the DAG matrix space . More precisely, we tackle problem (6) using the penalty method and focus on the primal problem, for a given penalty parameter , followed by elementwise hard thresholding:

| (7) | |||

| (8) |

where is the elementwise hard thresholding operator. The choice of and is discussed in Section C.1 in the context of problem (6).

Remark 9.

To obtain any DAG matrix closest to (the non-acyclic) , it is necessary to break the cycles in by suppressing certain edges. Hence, we assume that the edge set of the sought DAG is a strict subset of and thus fix the candidate set to be .

Problems (6) and (7) are nonconvex due to: (i) the matrix exponential trace in (3) is nonconvex (as in [ZARX18]), and (ii) the matrix product in (2) is nonconvex. In view of the DAG characteristic constraint of (6), the augmented Lagrangian algorithms of NoTears [ZARX18] and NoTears-low-rank [FZZ+20] can be applied for the same objective as problem (6); it suffices for NoTears and NoTears-low-rank to replace the LoRAM matrix in (6) by the matrix variable and the dense matrix product respectively.

However, the NoTears-based methods of [ZARX18, FZZ+20] have an complexity due to the composition of the elementwise operations (the Hadamard product ) with the matrix exponential in the function (1) and (3). We elaborate this argument in the next subsection and then propose a new computational method for computations involving the gradient of the DAG characteristic function .

4.1 Gradient of the DAG characteristic function and an efficient approximation

Lemma 10.

Theorem 11.

Proof.

From Proposition 3-(iii), the Fréchet derivative of the exponential trace at is for any . By the chain rule and Lemma 10, the Fréchet derivative of (3) for is as follows: with ,

| (12) | |||

| (13) | |||

| (14) | |||

where (13) holds, i.e., because (for any and ) and , and (14) holds because for any (with compatible sizes). By identifying the above equation with , we have , hence the expression (10) for . The expression of for can be obtained using the same calculations and (4b). ∎

The computational bottleneck for the exact computation of the gradient (9) lies in the computation of the matrix (11) and is due to the difference between the Hadamard product and matrix multiplication; see Section B.1 for details. Nevertheless, we note that the multiplication is similar to the action of matrix exponentials of the form , which can be computed using only a number of repeated multiplications of a matrix with the thin matrix based on Al-Mohy and Higham’s results [AMH11].

The difficulty in adapting the method of [AMH11] also lies in the presence of the Hadamard product in (10)–(11). Once the sparse matrix in (10)–(11) is obtained (using Algorithm 3, Section B.2), the exact computation of , using the Taylor expansion of to a certain order , is to compute at each iteration, which inevitably requires the computation of the matrix product (in the form of ) before computing the Hadamard product, which requires an cost.

To alleviate the obstacle above, we propose to use inexact333It is inexact because . incremental multiplications; see Algorithm 1.

In line 1 of Algorithm 1, the value of is obtained from numerical analysis results of [AMH11, Algorithm 3.2]; often, the value of , depending on the spectrum of , is a bounded constant (independent of the matrix size ). Therefore, the dominant cost of Algorithm 1 is , since each iteration (lines 4–6) costs flops. Table 1 summarizes this computational property in comparison with existing methods.

Reliability of Algorithm 1.

The accuracy of Algorithm 1 with respect to the exact computation of depends notably on the scale of , since the differential at has a greater operator norm when the norm of is greater; see Proposition 13 in Appendix A.

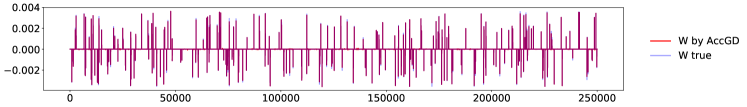

To illustrate the remark above, we approximate (9) by Algorithm 1 on random points of with different scales, with defined from the edges of a random sparse graph; the results are shown in Figure 1.

We observe from Figure 1 that Algorithm 1 is reliable, i.e., having gradient approximations with cosine similarity close to , when the norm of is sufficiently bounded. More precisely, for , Algorithm 1 is reliable in the following set

| (15) |

The degrading accuracy of Algorithm 1 outside (15) can nonetheless be avoided for the projection problem (6), in particular, through rescaling of the input matrix . Note that the edge set of any graph is invariant to the scaling of its weighted adjacency matrix, and that any DAG solution to (6) satisfies since (see Remark 9). Hence it suffices to rescale with a small enough scalar, e.g., replace with , without loss of generality, in (6)–(7). Indeed, this rescaling ensures that both the input matrix and matrices like —a DAG matrix equivalent to —stay confined in the image (through LoRAM) of (15), in which the gradient approximations by Algorithm 1 are reliable.

4.2 Accelerated gradient descent

Given the gradient computation method (Algorithm 1) for the function, we adapt Nesterov’s accelerated gradient descent [Nes83, Nes04] to solve (7). The accelerated gradient descent is used for its superior performance than vanilla gradient descent in many convex and nonconvex problems while it also only requires first-order information of the objective function. Details of this algorithm for our LoRAM optimization is given in Algorithm 2.

| (16) | ||||

Specifically, in line 5 of Algorithm 2, the Barzilai–Borwein (BB) stepsize [BB88] is used for the descent step (16). The computation of the BB stepsize requires evaluating the inner products and the norm , where ; we choose the Euclidean inner product as the metric on :

for any pair of points and on . Note that one can always use backtracking line search based on the stepsize estimation (line 5). We choose to use the BB stepsize directly since it does not require any evaluation of the objective function, and thus avoids the nonnegligeable costs for computing the matrix exponential trace in (3). We refer to [CDHS18] for a comprehensive view on accelerated methods for nonconvex optimization.

Due to the nonconvexity of (3) (see Proposition 3) and thus (7), we aim at finding stationary points of (7). In particular, empirical results in Section 5.3 show that the solutions by the proposed method, with close-to-zero or even zero SHDs to the true DAGs, are close to global optima in practice.

5 Experimental validation

This section investigates the performance (computational gains and accuracy loss) of the proposed gradient approximation method (Algorithm 1) and thereafter reports on the performance of the LoRAM projection (6), compared to NoTears [ZARX18]. Sensitivity to the rank parameter of the proposed method is also investigated.

The implementation is available at https://github.com/shuyu-d/loram-exp.

5.1 Gradient computations

We compare the performance of Algorithm 1 in gradient approximations with the exact computation in the following settings: the number of nodes , , , , , , , and the sparsity () of the index set tested are . The results shown in Figure 2 are based on the computation of (9) on randomly generated points , where and are Gaussian matrices.

From Figure 2 (a), Algorithm 1 shows a significant reduction in the runtime for computing the gradient of (3) at the expense of a very moderate loss of accuracy (Figure 2 (b)): the approximate gradients are mostly sufficiently aligned with exact gradients in the considered range of graph sizes and sparsity levels.

5.2 Sensitivity to the rank parameter

In this experiment, we generate the input matrix of problem (6) as follows:

| (17) |

where is a given DAG matrix and is a graph containing additive noisy edges that break the acyclicity of the observed graph .

The ground truth DAG matrix is generated from the acyclic Erdős-Rényi (ER) model (in the same way as in [ZARX18]), with a sparsity rate . The noise graph of (17) is defined as , which consists of edges that create a confusion between causes and effects, since these edges are reversed, pointing from the ground-truth effects to their respective causes. We evaluate the performance of LoRAM-AGD in the proximal mapping computation (7) for and different values of the rank parameter . In all these evaluations, the candidate set is fixed to be ; see Remark 9.

We measure the accuracy of the projection result by the false discovery rate (FDR, lower is better), false positive rate (FPR), true positive rate (TPR, higher is better), and the structural Hamming distance (SHD, lower is better) of the solution compared to the DAG .

The results in Figure 3 suggest that:

-

(i)

For each sparsity level, increasing the rank parameter generally improves the projection accuracy of the LoRAM.

-

(ii)

While the rank parameter of (2) attains at most around , which is only -th the rank of the input matrix and the ground truth , the rank of the solution attains the same value as . This means that the rank representativity of the LoRAM goes beyond the value of . This phenomenon is understandable in the present case where the candidate set is fairly close to the sparse edge set .

-

(iii)

The projection accuracy in TPR and FDR (and also SHD, see Figure 5 in Section C.2) of LoRAM-AGD is close to optimum on a wide interval of the tested ranks and are fairly stable on this interval.

5.3 Scalability

We examine two different types of noisy edges (in ) as follows. Case (a): Bernoulli-Gaussian , where with probability and all nonzeros of are i.i.d. samples of . Case (b): cause-effect confusions as in Section 5.2.

The initial factor matrices are random Gaussian matrices. For the LoRAM, we set to be the support of ; see Remark 9. The penalty parameter of (7) is varied in with no fine tuning.

In case (a), we test with various noise levels for nodes. In case (b), we test on various graph dimensions, for . The results are given in Table 2–Table 3 respectively.

| LoRAM (ours) / NoTears | ||||

|---|---|---|---|---|

| Runtime (sec) | TPR | FDR | SHD | |

| 0.1 | 1.34 / 5.78 | 1.0 / 1.0 | 9.9e-3 / 0.0e+0 | 25.0 / 0.0 |

| 0.2 | 2.65 / 11.58 | 1.0 / 1.0 | 9.5e-3 / 0.0e+0 | 24.0 / 0.0 |

| 0.3 | 1.35 / 28.93 | 1.0 / 1.0 | 9.5e-3 / 8.0e-4 | 24.0 / 2.0 |

| 0.4 | 1.35 / 18.03 | 1.0 / 1.0 | 9.9e-3 / 3.2e-3 | 25.0 / 9.0 |

| 0.5 | 1.35 / 12.52 | 1.0 / 1.0 | 9.9e-3 / 5.2e-3 | 25.0 / 13.0 |

| 0.6 | 2.57 / 16.07 | 1.0 / 1.0 | 9.5e-3 / 4.4e-3 | 24.0 / 11.0 |

| 0.7 | 1.35 / 18.72 | 1.0 / 1.0 | 9.9e-3 / 5.2e-3 | 25.0 / 13.0 |

| 0.8 | 1.35 / 32.03 | 1.0 / 1.0 | 9.9e-3 / 4.8e-3 | 25.0 / 15.0 |

| LoRAM (ours) / NoTears | |||||

|---|---|---|---|---|---|

| Runtime (sec) | TPR | FDR | SHD | ||

| (5.0, 40) | 100 | 1.82 / 0.67 | 1.00 / 1.00 | 0.00e+0 / 0.0 | 0.0 / 0.0 |

| (5.0, 40) | 200 | 2.20 / 3.64 | 0.98 / 0.95 | 2.50e-2 / 0.0 | 1.0 / 2.0 |

| (5.0, 40) | 400 | 2.74 / 16.96 | 0.98 / 0.98 | 2.50e-2 / 0.0 | 4.0 / 4.0 |

| (5.0, 40) | 600 | 3.40 / 42.65 | 0.98 / 0.96 | 1.67e-2 / 0.0 | 6.0 / 16.0 |

| (5.0, 40) | 800 | 4.23 / 83.68 | 0.99 / 0.97 | 7.81e-3 / 0.0 | 5.0 / 22.0 |

| (2.0, 80) | 1000 | 7.63 / 136.94 | 1.00 / 0.96 | 0.00e+0 / 0.0 | 0.0 / 36.0 |

| (2.0, 80) | 1500 | 13.34 / 437.35 | 1.00 / 0.96 | 8.88e-4 / 0.0 | 2.0 / 94.0 |

| (2.0, 80) | 2000 | 20.32 / 906.94 | 1.00 / 0.96 | 7.49e-4 / 0.0 | 3.0 / 148.0 |

The results in Table 2–Table 3 show that:

-

(i)

In case (a), the solutions of LoRAM-AGD are close to the ground truth despite slighly higher errors than NoTears in terms of FDR and SHD.

-

(ii)

In case (b), the solutions of LoRAM-AGD are almost identical to the ground truth In (17) in all performance indicators (also see Section C.2).

-

(iii)

In terms of computation time (see Figure 4), the proposed LoRAM-AGD achieves significant speedups (around times faster when ) compared to NoTears and also has a smaller growth rate with respect to the problem dimension , showing good scalability.

6 Discussion and Perspectives

This paper tackles the projection of matrices on DAG matrices, motivated by the identification of linear causal graphs. The line of research built upon the LiNGAM algorithms [SHHK06, SIS+11] has recently been revisited through the formal characterization of DAGness in terms of a continuously differentiable constraint by [ZARX18]. The NoTears approach of [ZARX18] however suffers from an complexity in the number of variables, precluding its usage for large-scale problems.

Unfortunately, this difficulty is not related to the complexity of the model (number of parameters of the model): the low-rank approach investigated by NoTears-low-rank [FZZ+20] also suffers from the same complexity, incurred in the gradient-based optimization phase.

The present paper addresses this difficulty by combining a sparsification mechanism with the low-rank model and using a new approximated gradient computation. This approximated gradient takes inspiration from the approach of [AMH11] for computing the action of exponential matrices based on truncated Taylor expansion. This approximation eventually yields a complexity of , where the rank parameter is small (). The experimental validation of the approach shows that the approximated gradient entails no significant error with respect to the exact gradient, for LoRAM matrices with a bounded norm, in the considered range of graph sizes () and sparsity levels. The proposed algorithm combining the approximated gradient with a Nesterov’s acceleration method [Nes83, Nes04] yields gains of orders of magnitude in computation time compared to NoTears on the same artificial benchmark problems. The approximation performance indicators reveal almost no performance loss for the projection problem in the setting of case (b) (where the matrix to be projected is perturbed with anti-causal links), while it incurs minor losses in terms of false discovery rate (FDR) in the setting of case (a) (with random additive spurious links).

Further developments aim to extend the approach and address the identification of causal DAG matrices from observational data. A longer term perspective is to extend LoRAM to the non-linear case, building upon the introduction of latent causal variables and taking inspiration from the non-linear independent component analysis and generalized contrastive losses [HST19]. Another perspective relies on the use of auto-encoders to yield a compressed representation of high-dimensional data, while constraining the structure of the encoder and decoder modules to enforce the acyclic property.

Acknowledgement

The authors warmly thank Fujitsu Laboratories LTD who funded the first author, and in particular Hiroyuki Higuchi and Koji Maruhashi for many discussions.

References

- [ABGLP19] M. Arjovsky, L. Bottou, I. Gulrajani, and D. Lopez-Paz. Invariant risk minimization. arXiv preprint arXiv:1907.02893, 2019.

- [AMH11] A. H. Al-Mohy and N. J. Higham. Computing the action of the matrix exponential, with an application to exponential integrators. SIAM journal on scientific computing, 33(2):488–511, 2011.

- [BB88] J. Barzilai and J. M. Borwein. Two-point step size gradient methods. IMA journal of numerical analysis, 8(1):141–148, 1988.

- [BPE14] P. Bühlmann, J. Peters, and J. Ernest. Cam: Causal additive models, high-dimensional order search and penalized regression. The Annals of Statistics, 42(6):2526–2556, 2014.

- [CDHS18] Y. Carmon, J. C. Duchi, O. Hinder, and A. Sidford. Accelerated methods for nonconvex optimization. SIAM Journal on Optimization, 28(2):1751–1772, 2018.

- [Chi96] D. M. Chickering. Learning bayesian networks is NP-complete. In Learning from data, pages 121–130. Springer, 1996.

- [FZZ+20] Z. Fang, S. Zhu, J. Zhang, Y. Liu, Z. Chen, and Y. He. Low rank directed acyclic graphs and causal structure learning. arXiv preprint arXiv:2006.05691, 2020.

- [Hab18] H. E. Haber. Notes on the matrix exponential and logarithm. Santa Cruz Institute for Particle Physics, University of California: Santa Cruz, CA, USA, 2018.

- [HST19] A. Hyvarinen, H. Sasaki, and R. Turner. Nonlinear ICA using auxiliary variables and generalized contrastive learning. In International Conference on Artificial Intelligence and Statistics (AISTATS), 2019.

- [KGG+18] D. Kalainathan, O. Goudet, I. Guyon, D. Lopez-Paz, and M. Sebag. Structural agnostic modeling: Adversarial learning of causal graphs. arXiv preprint arXiv:1803.04929, 2018.

- [MW15] S. L. Morgan and C. Winship. Counterfactuals and causal inference. Cambridge University Press, 2015.

- [Nes83] Y. Nesterov. A method for solving the convex programming problem with convergence rate . Soviet Mathematics Doklady, 27:372–376, 1983.

- [Nes04] Y. Nesterov. Introductory Lectures on Convex Optimization, volume 87. Springer Publishing Company, Incorporated, 1 edition, 2004. doi:10.1007/978-1-4419-8853-9.

- [NGZ20] I. Ng, A. Ghassami, and K. Zhang. On the role of sparsity and DAG constraints for learning linear DAGs. Advances in Neural Information Processing Systems, 33:17943–17954, 2020.

- [PBM16] J. Peters, P. Bühlmann, and N. Meinshausen. Causal inference by using invariant prediction: identification and confidence intervals. Journal of the Royal Statistical Society. Series B (Statistical Methodology), pages 947–1012, 2016.

- [Pea09] J. Pearl. Causality. Cambridge university press, 2009.

- [PJS17] J. Peters, D. Janzing, and B. Schölkopf. Elements of causal inference: foundations and learning algorithms. The MIT Press, 2017.

- [SB09] M. Stephens and D. J. Balding. Bayesian statistical methods for genetic association studies. Nature Reviews Genetics, 10(10):681–690, 2009.

- [SG21] A. Sauer and A. Geiger. Counterfactual generative networks. In International Conference on Learning Representations (ICLR), 2021.

- [SHHK06] S. Shimizu, P. O. Hoyer, A. Hyvärinen, and A. Kerminen. A linear non-Gaussian acyclic model for causal discovery. Journal of Machine Learning Research, 7(10), 2006.

- [SIS+11] S. Shimizu, T. Inazumi, Y. Sogawa, A. Hyvärinen, Y. Kawahara, T. Washio, P. O. Hoyer, and K. Bollen. DirectLiNGAM: A direct method for learning a linear non-Gaussian structural equation model. The Journal of Machine Learning Research, 12:1225–1248, 2011.

- [YCGY19] Y. Yu, J. Chen, T. Gao, and M. Yu. DAG-GNN: DAG structure learning with graph neural networks. In International Conference on Machine Learning, pages 7154–7163. PMLR, 2019.

- [ZARX18] X. Zheng, B. Aragam, P. K. Ravikumar, and E. P. Xing. DAGs with NO TEARS: Continuous optimization for structure learning. In Advances in Neural Information Processing Systems, volume 31, 2018. URL https://proceedings.neurips.cc/paper/2018/file/e347c51419ffb23ca3fd5050202f9c3d-Paper.pdf.

- [ZDA+20] X. Zheng, C. Dan, B. Aragam, P. Ravikumar, and E. Xing. Learning sparse nonparametric dags. In International Conference on Artificial Intelligence and Statistics, pages 3414–3425. PMLR, 2020.

Appendix A Proofs (Sections 2–4)

Proof of Corollary 2.

By the condition (i), all entries of the matrix are nonnegative. Hence, for all ,

| (18) |

By combining (18) and (ii) that , we confirm that equals the sum of the weighted edge-sum along all -cycles in the graph of (the graph whose adjacency matrix is ), according to classical graph theory (which can be obtained by induction). Therefore, if is a DAG matrix, i.e., there are no cycles (of any length) in the graph of , then for all , hence ; and if , then , hence (due to (18)), which implies that there are no cycles of any length in the graph of .

Proof of Proposition 3.

(i) All entries of any matrix are nonnegative, hence for all . Therefore, . Moreover, Corollary 2 shows that if and only if is a DAG matrix.

(ii) First, we show the nonconvexity of on (for ) by using the property (i) and Corollary 2: let and . Notice that and are two different DAG matrices. Then, consider any matrix on the line segment between and :

Then is a weighted adjacency matrix of graph with -cycles. Hence, is nonnegative and is not a DAG matrix, it follows from the property (i) above that . Property (i) also shows that , since and are DAG matrices. Therefore, we have

Hence is nonconvex along the segment . To extend this example to , it suffices to consider a similar pair of DAG matrices and which differ only on their first submatrices, such that and .

(iii) First, the following definition and property are needed for the differential calculus of the matrix exponential function.

Definition 12.

For any pair of matrices , the commutator between and is defined and denoted as follows:

The operator is a linear operator. More generally, the powers of the commutator are defined as follows: , and for any :

Proposition 13 ([Hab18] (Theorem 2.b)).

For any and any , the derivative of at is , where .

Now, we calculate the directional derivative of along a direction : Note that the differential of is itself anywhere, hence by the chain rule and Proposition 13, for any , the directional derivative of along is

| (19) |

Next, we show that last term of (19) is zero: note that for , by rotating the three matrices in the trace function, we have

| (20) |

for any , where (20) holds because since and commute (for any ). Furthermore, we deduce that for all :

because (20) holds for any direction including . Therefore, the equation (19) becomes , and through the identification , the gradient reads .

Proof of Proposition 7.

From Definition 6, the residual matrix satisfies . Therefore, by the Taylor expansion and Proposition 3-(iii), there exists a constant such that

| (21) | |||

where (21) holds since is a DAG matrix (in view of Theorem 1), and is the number of nonzeros of . It follows that, with relative error and (without loss of generality):

which entails the result.

Proof of Lemma 10.

Appendix B Details of computational methods

B.1 Computational cost of the exact gradient

The computation of the function value and the gradient of (3) mainly includes two types of operations: (i) the computation of the sparse matrix given Definition 4 and , and (ii) the computation of the matrix exponential-related terms (10) or (11).

The exact computation of the gradient (Corollary 2) is as follows:

(i) compute (2): this step has a cost

of flops; see Algorithm 3 in Section B.2.

The output is a sparse matrix. A byproduct of

this step is ( or );

(ii) compute : this step has a cost of ;

(iii) compute , which costs

flops. The output is a sparse matrix;

(iv) Compute the sparse-dense matrix multiplication : this step costs flops.

Therefore, the total cost is .

In scenarios with large and sparse graphs, i.e., and with , the complexity of computing the exact gradient of the learning criterion thus is cubic in , incurred by computing the matrix exponential in step (ii); this step is necessary due to the Hadamard product that lies between the action of and the thin factor matrix (respectively, ) in (10) or (11): one needs to compute the Hadamard product (before the matrix multiplication with ), which requires computing explicitly the matrix exponential .

B.2 Computation of the LoRAM matrix

Appendix C Experiments

C.1 Choice of the parameters and

In the context of projection problem (6), the following features are notable factors that influence the choice of an optimal : (i) the type of graphs underlying the input matrix , and (ii) sparsity of the graph matrix . Note that the scale of is irrelevant to the choice of since we rescale the input graph matrix with for a constant , without loss of generality (see Section 4.1), such that the scale of always stay in the level of (in Frobenius norm). Therefore, we are able to fix a parameter set regarding the following experimental settings: (i) the type of graphs is fixed to be the ER acyclic graph set, same as in [ZARX18], and (ii) the sparsity level of is fixed around . Concretely, we select the penalty parameter , among values in the fixed set , with respect to the objective function value of the proximal mapping (7).

On the other hand, the choice of the hard threshold in (8) is straightforward, according to Definition 6 for the relative error of LoRAM w.r.t. a given subset of DAGs. For the set of graphs in our experiments, we use a moderate value , which has the effect of eliminating only the very weakly weighted edges.

C.2 Additional experimental details

| Algorithm | runtime | FDR | TPR | FPR | SHD | ||

|---|---|---|---|---|---|---|---|

| LoRAM | (5.0, 40) | 100 | 1.82 | 0.0 | 1.0 | 0.0 | 0.0 |

| (5.0, 40) | 200 | 2.20 | 2.5e-2 | 0.98 | 5.0e-5 | 1.0 | |

| (5.0, 40) | 400 | 2.74 | 2.5e-2 | 0.98 | 5.0e-5 | 4.0 | |

| (5.0, 40) | 600 | 3.40 | 1.7e-2 | 0.98 | 3.4e-5 | 6.0 | |

| (5.0, 40) | 800 | 4.23 | 7.8e-3 | 0.99 | 1.6e-5 | 5.0 | |

| (2.0, 80) | 1000 | 7.63 | 0.0 | 1.0 | 0.0 | 0.0 | |

| (2.0, 80) | 1500 | 13.34 | 8.9e-4 | 1.0 | 1.8e-6 | 2.0 | |

| (2.0, 80) | 2000 | 20.32 | 7.5e-4 | 1.0 | 1.5e-6 | 3.0 | |

| NoTears | - | 100 | 0.67 | 0.0 | 1.00 | 0.0 | 0.0 |

| - | 200 | 3.64 | 0.0 | 0.95 | 0.0 | 2.0 | |

| - | 400 | 16.96 | 0.0 | 0.98 | 0.0 | 4.0 | |

| - | 600 | 42.65 | 0.0 | 0.96 | 0.0 | 16.0 | |

| - | 800 | 83.68 | 0.0 | 0.97 | 0.0 | 22.0 | |

| - | 1000 | 136.94 | 0.0 | 0.96 | 0.0 | 36.0 | |

| - | 1500 | 437.35 | 0.0 | 0.96 | 0.0 | 94.0 | |

| - | 2000 | 906.94 | 0.0 | 0.96 | 0.0 | 148.0 |