From Automation to Cognition: Redefining the Roles of Educators and Generative AI in Computing Education

Abstract.

Generative Artificial Intelligence (GenAI) offers numerous opportunities to revolutionise teaching and learning in Computing Education (CE). However, educators have expressed concerns that students may over-rely on GenAI and use these tools to generate solutions without engaging in the learning process. While substantial research has explored GenAI use in CE, and many Computer Science (CS) educators have expressed their opinions and suggestions on the subject, there remains little consensus on how to implement changes to curricula and assessment.

In this paper, we describe our experiences with using GenAI in CS-focused educational settings and the changes we have implemented accordingly in our teaching in recent years since the popularisation of GenAI. From our experiences, we propose two primary actions for the CE community: 1) redesign take-home assignments to incorporate GenAI use and assess students on their process of using GenAI to solve a task rather than simply on the final product; 2) redefine the role of educators to emphasise metacognitive aspects of learning, such as critical thinking and self-evaluation. This paper presents and discusses these stances and outlines several practical methods to implement these strategies in CS classrooms. Then, we advocate for more research addressing the concrete impacts of GenAI on CE, especially those evaluating the validity and effectiveness of new teaching practices.

1. Introduction

Generative Artificial Intelligence (GenAI) has profoundly impacted the Computing Education (CE) landscape (Denny et al., 2024c). Studies have shown that GenAI is capable of producing solutions for introductory and intermediate (CS1/CS2) programming assessments (Denny et al., 2023; Finnie-Ansley et al., 2022; Savelka et al., 2023), with recent models achieving near-perfect scores on CS1 exams (Prather et al., 2023). Furthermore, OpenAI’s GPT-4o model has demonstrated the potential to mimic human educators and provide tutoring independently (Khan Academy, 2024). Leveraging both capabilities may provide Computer Science (CS) students with unlimited, immediate, and personalised tutor assistance, while also reducing the workload of answering student queries for CS educators (Kazemitabaar et al., 2024b; Sheese et al., 2024). GenAI may also be particularly useful for subjects using multimodal input and output. For example, in Computer Graphics, the correctness of a student submission can be assessed using visual output, function output, API invariants (OpenGL states), and code (Wünsche et al., 2018, 2019). In the future, GenAI tools may be able to give formative feedback at all of these levels. This could help students develop a deeper understanding of content and enable educators to pose more open-ended and creative assignments, which would be difficult to assess automatically by more traditional means (Hooper et al., 2024).

While the capabilities and rapid adoption of GenAI are impressive, it is a double-edged sword for CS students and educators. GenAI tools accept an unlimited number of queries and produce immediate responses. This permits students to repeatedly seek assistance until they receive working solutions, even if they don’t understand the underlying concepts, and they may become overly reliant on GenAI to generate answers without actively engaging in the learning process (Becker et al., 2023; Prather et al., 2023). Additionally, despite GenAI’s impressive ability to solve a wide range of problems, it is currently less effective on certain complex questions, such as those in Computer Graphics (Feng et al., 2024a, b, c), where students who use GenAI in their problem-solving process may learn from incorrectly generated information. Unfettered access to tools that students can use to solve exercises with minimal effort threatens the integrity and effectiveness of take-home assessments. To counter this, some educators discourage using GenAI on graded work and attempt to detect and punish any misuse of GenAI tools (Tang et al., 2024; Wu et al., 2023). However, GenAI detection tools are unreliable and could result in unjust punishments (Orenstrakh et al., 2023). Alternatively, educators could adapt course content and adopt teaching practices that encourage productive learning with unrestricted use of GenAI in assignments (Milano et al., 2023), balanced by secure invigilated assessments that ensure valid credentialing of learning (Lye and Lim, 2024).

As GenAI continues to impact the CE landscape, action must be taken to maintain a productive, fair, and effective learning environment for CS. Recent research involving comprehensive interviews with CS educators worldwide found that a common strategy to prevent student misuse of GenAI is to rely more on in-person invigilated assessments and less on take-home assignments, while shifting to grading criteria that assess the process instead of the final product (Prather et al., 2023), a sentiment that is widely shared (Cao and Dede, 2023). These articles suggest that educators should teach skills that GenAI cannot replicate, such as human judgment. However, concrete methods to implement these strategies must still be developed, evaluated, and deployed at scale.

In this paper, we recount our experiences of navigating CE since the popularisation of GenAI and highlight several issues that GenAI raises for CE (Section 2). We then propose two suggestions to the CE community for addressing these issues, along with justifications and recommended courses of action for implementing them in CS classrooms (Section 3).

2. Early Experiences

This section describes our experiences using GenAI in CS-focused educational settings and the changes we have implemented accordingly in our teaching in recent years since the popularisation of GenAI.

2.1. Context

We teach at a large urban research-focused institution. The authors primarily teach classes of approximately 1000 first-year Engineering students learning to program in C, and classes of approximately 300 to 500 first-year Computer Science students learning to program in Python. In both cases, students engage in weekly in-person laboratory activities supported by graduate teaching assistants. The laboratory activities are submitted to CodeRunner, which grades the correctness of programming solutions automatically using a test suite. Students also engage in other activities that are either graded manually by the teaching assistants or involve other online tools (e.g., those described later in this paper). At the undergraduate level, the authors also have experience teaching second-year systems and third-year computer graphics courses.

2.2. Student Use of GenAI

Since the release of ChatGPT (OpenAI, 2024), we have seen a steady increase in student use of GenAI in CS classrooms. During the first few months after ChatGPT’s release, students were wary of using GenAI in classrooms: most were uncertain of its performance and accuracy or were unaware of its existence, and those who knew its capabilities were concerned that using it might violate academic integrity.

However, as GenAI grew in popularity, more students became aware of its capabilities, and the use of ChatGPT and other GenAI-powered tools became more prevalent. Despite guidelines on course pages and in assignment and assessment specifications prohibiting the use of GenAI, there was evidence that many students still used it. For example, the average scores achieved by students on take-home assignments were much higher than those on in-person invigilated assessments. Furthermore, in the assessments of an introductory programming course, we observed a few students struggling to write simple print statements despite having completed all prior take-home programming assignments, often with submissions that used sophisticated code constructs and solution approaches. There were also a small number of cases of students accessing tools like ChatGPT during invigilated assessments, such as tests and quizzes, in violation of the rules. We are aware that students struggle for many reasons, and the difficulties observed are not necessarily all caused by GenAI. Even so, publicly available tools such as ChatGPT have made it possible to complete some take-home assignments without engaging in the learning process, by providing answers to coursework at no cost or risk. This enables less motivated students to generate solutions and submit them as their own. In contrast, we found that more motivated students were less likely to misuse GenAI and instead used it to support their learning, for example by asking constructive questions and receiving immediate feedback to clarify any confusion or misunderstanding that arose.

Our observation aligns with the work of Prather et al., who found that while tools like ChatGPT and Copilot can help motivated students accelerate their work on programming tasks, struggling students often face persistent and compounded metacognitive difficulties when using these tools (Prather et al., 2024). As a result of our own observations, we recognised the importance of teaching students effective ways of using GenAI to support their learning.

As time passed and GenAI became ubiquitous, it became clear that policies had to adapt and that simply prohibiting the use of GenAI was not the solution. In fact, prohibiting GenAI during an academic programme may cause difficulty for graduates transitioning to work environments where GenAI may be encouraged to boost productivity. Therefore, we began to allow, and even incorporate, GenAI in take-home assignments (several concrete examples are given in Subsection 2.3), and it became commonplace for students to use GenAI, even in the presence of teaching staff. To ensure that students were engaging in learning and not relying on GenAI, we enforced strict standards for some assessments by making them secure: either in person, invigilated, and GenAI-prohibited, or online using secure assessment tools. Furthermore, we highlighted the nature of these assessments to students and, thus, the importance of learning and understanding the concepts even when using GenAI.

Anecdotally, over time we have noticed a reduction in student queries about concepts or programming exercises, and a relatively higher proportion of queries about course administration. This is presumably because many students are using GenAI to receive fast and often detailed answers.

2.3. Incorporating GenAI into the classroom

As students become increasingly familiar with using commercial AI tools, like ChatGPT, we recognise the importance of integrating GenAI into classroom activities to align with their expectations. Over the last few years, we have explored several activities that leverage large language models (LLMs) to provide unique and engaging ways for students to interact with computing concepts. These activities aim to develop students’ problem-solving, debugging, and conceptual understanding skills through meaningful interaction with LLMs. To develop critical thinking skills, we have also explored scaffolded tasks where students seek help from LLM-based teaching assistants and evaluate their outputs. Below, we outline six key activities explored in our first-year introductory programming courses.

2.3.1. Prompt Problems

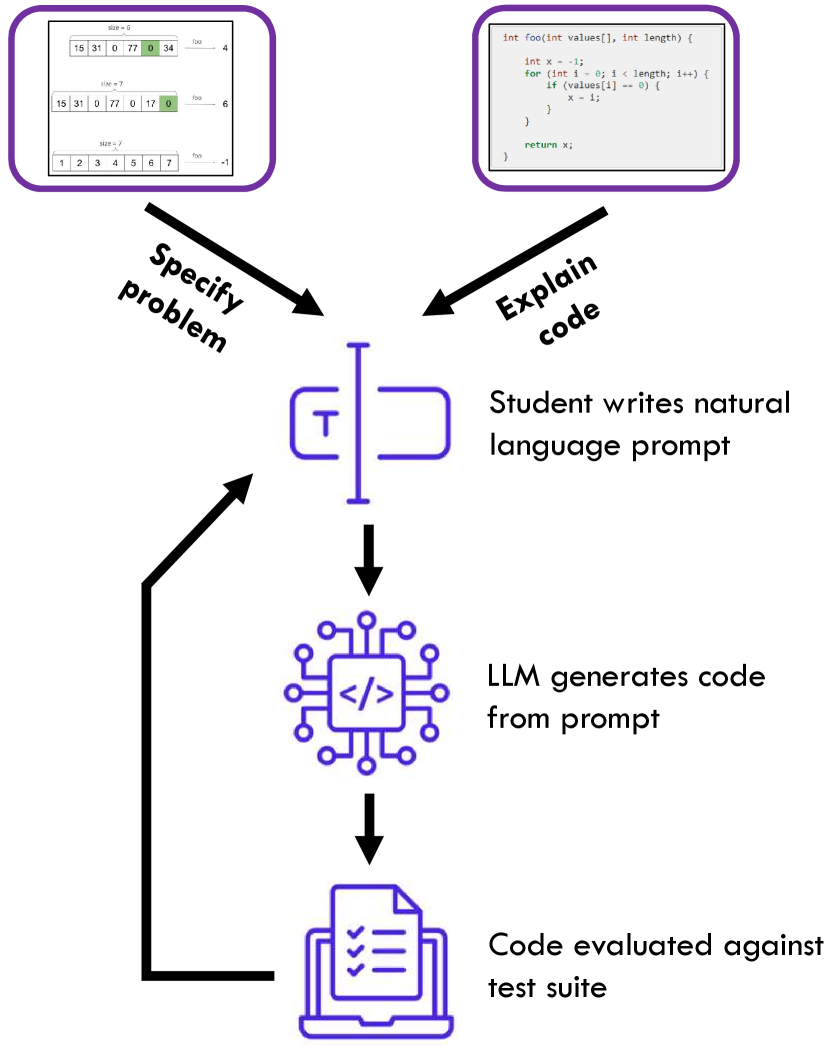

Prompt Problems (Denny et al., 2024a) are a relatively new kind of exercise designed to teach students the emerging skill of writing effective prompts for generating code. These exercises require students to craft natural language prompts that enable an LLM to generate correct code for solving a specified computational task. The activity emphasizes computational thinking and critical evaluation of AI-generated outputs rather than direct programming skills.

In our deployment of Prompt Problems, students were presented with visual representations of programming tasks, encouraging them to infer and articulate requirements in a prompt. This is depicted in the “Specify problem” branch of Figure 1, where the example image shows a series of ‘input’ arrays along with their expected ‘outputs’. We used a web-based tool called Promptly to support these exercises by automating the evaluation of generated code against test cases. Results from our implementation showed that students were highly engaged, finding the activity challenging and rewarding. Students liked that the problems introduced them to new programming constructs and techniques. Importantly, these tasks allow students to refine their problem-specification skills, a critical aspect of AI-assisted programming.
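The evaluation step that a tool like Promptly automates can be sketched as follows. This is our own minimal illustration, not Promptly's implementation: it assumes the LLM returns Python source defining a function with a hypothetical name `solve`, and that the task is specified by input/output examples like those in Figure 1. Because `exec` provides no isolation, a real deployment would need to run generated code in a sandbox.

```python
from typing import Any, Callable


def evaluate_generated_code(source: str, func_name: str,
                            examples: list[tuple[list[int], Any]]) -> tuple[int, int]:
    """Run LLM-generated source and count how many examples it passes.

    Assumption: the generated code defines a function called `func_name`
    that takes a single list argument. exec() is NOT a sandbox; this is
    for illustration only.
    """
    namespace: dict[str, Any] = {}
    exec(source, namespace)  # define the generated function in its own dict
    func: Callable = namespace[func_name]
    passed = 0
    for inputs, expected in examples:
        try:
            # Pass a copy so a mutating function cannot alter the example.
            if func(list(inputs)) == expected:
                passed += 1
        except Exception:
            pass  # a crash counts as a failed example
    return passed, len(examples)


# Stand-in for code an LLM might return for a student's prompt
# (hypothetical task: "return the sum of the positive numbers in the list").
generated = '''
def solve(values):
    return sum(v for v in values if v > 0)
'''

examples = [([1, -2, 3], 4), ([-1, -5], 0), ([], 0), ([10], 10)]
print(evaluate_generated_code(generated, "solve", examples))  # (4, 4)
```

One design option is to report only which examples failed, so that the student's attention stays on refining the prompt rather than on editing the generated code.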

2.3.2. Generating Debugging Exercises

Debugging is a critical skill for programmers, and we have used the BugSpotter (Pădurean et al., 2024) tool as an innovative way to teach this skill. The tool generates buggy code based on a problem specification and requires students to design failing test cases that reveal the errors. This unique format helps students develop systematic reasoning about code and reinforces the importance of understanding problem specifications.
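The core check behind this format can be illustrated with a small sketch: a student's test case reveals the bug only if the buggy code's output differs from the output the specification requires. The specification (return the largest value in a non-empty list), the bug, and the function names below are hypothetical examples chosen for illustration; they are not taken from BugSpotter.

```python
def buggy_max(values: list[int]) -> int:
    # Hypothetical LLM-generated bug: the running maximum starts at 0,
    # so lists containing only negative numbers give the wrong answer.
    best = 0
    for v in values:
        if v > best:
            best = v
    return best


def spec_max(values: list[int]) -> int:
    # Reference behaviour required by the specification.
    return max(values)


def reveals_bug(test_input: list[int]) -> bool:
    """A test case fails (and so exposes the bug) when the buggy
    function disagrees with the specified output."""
    return buggy_max(test_input) != spec_max(test_input)


print(reveals_bug([3, 1, 2]))     # False: this test passes, so it misses the bug
print(reveals_bug([-4, -2, -7]))  # True: this test exposes the bug
```

The student's task is to find inputs like the second one, which requires reasoning about the edge cases the specification covers.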

We deployed BugSpotter in one of our large introductory programming courses and evaluated both student performance on the tasks and, through expert review, the quality of the generated problems. The LLM-generated debugging exercises were comparable in quality to instructor-designed tasks, demonstrating that AI can effectively support the creation of diverse sets of exercises with varying difficulty. There are other emerging LLM-based approaches for teaching debugging, including having students play the role of a teaching assistant to help an LLM-powered agent debug code (Ma et al., 2024). Similar to our approach, this encourages metacognitive practices, as students are required to hypothesize the cause of errors and then verify their solutions. This also aligns with the growing need to prepare students for debugging AI-generated code in real-world scenarios.

2.3.3. Code Comprehension through Prompting

Code comprehension is an essential skill for novice programmers, especially in an era where the volume of AI-generated code is rapidly increasing. We have been using Explain in Plain Language (EiPL) tasks (Kerslake et al., 2024) to help students practise reading and understanding code, with automated feedback from an LLM. These tasks require students to explain the high-level purpose of a provided code fragment by writing a prompt that will generate code equivalent to the original. This dual focus, on understanding code and on articulating problems in natural language, bridges the gap between problem-solving and programming.

Our implementation is depicted in the “Explain code” branch of Figure 1, where the exercise begins with the student being shown a fragment of code (typically a single function with obfuscated identifier names). In the course, we interleaved EiPL tasks with traditional programming-based lab activities. Students who struggled with syntax in traditional tasks found the natural language-focused EiPL exercises more accessible. Performance on these tasks was less correlated with traditional programming skills, indicating that they target a complementary skill set. Additionally, students appreciated the immediate feedback provided by the LLM on their prompts, which facilitated iterative learning.
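To illustrate, the sketch below shows the kind of fragment a student might see, with obfuscated identifiers, and one simple way to check whether code regenerated from the student's description behaves the same. All functions and test inputs are hypothetical, and the regenerated functions stand in for LLM output. Comparing outputs on a fixed set of inputs can suggest, but not prove, that two functions are equivalent.

```python
def f(a):
    # Fragment shown to the student, with obfuscated identifiers.
    # (Its purpose: remove duplicates, keeping first occurrences in order.)
    b = []
    for c in a:
        if c not in b:
            b.append(c)
    return b


def regenerated(items):
    # Stand-in for code an LLM might produce from an accurate description,
    # e.g. "remove duplicate items from a list, keeping the original order".
    return list(dict.fromkeys(items))


def regenerated_vague(items):
    # Stand-in for code produced from a vaguer description,
    # e.g. "return the unique items in a list".
    return sorted(set(items))


def behaves_same(original, candidate, test_inputs) -> bool:
    # Compare outputs on each input; pass copies so neither function
    # can affect the other by mutating its argument.
    return all(original(list(x)) == candidate(list(x)) for x in test_inputs)


test_inputs = [[], [1], [1, 1, 2], [3, 1, 3, 2, 1], ["b", "a", "b"]]
print(behaves_same(f, regenerated, test_inputs))        # True
print(behaves_same(f, regenerated_vague, test_inputs))  # False
```

The second description omits the ordering requirement, and the mismatch on `[3, 1, 3, 2, 1]` is the kind of signal that can prompt a student to make their explanation more precise.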

2.3.4. Student-Generated Analogies

Understanding complex concepts such as recursion often poses challenges for students. To address this, we implemented a novel exercise where students used LLMs to generate analogies to help them understand recursion (Bernstein et al., 2024). In order to produce useful analogies, students were encouraged to use themes that aligned with their personal interests. We also asked students to critically evaluate the analogies that were generated, and reflect on the usefulness of this approach. Our goal in having students produce analogies was to help them anchor abstract concepts in familiar, personally relevant contexts, making them more accessible.

In a study involving over 350 students, participants used ChatGPT to create analogies for recursion. Results showed that analogies tailored to students’ interests were more diverse and memorable than generic examples. Students reported that the activity deepened their understanding of recursion and made the learning process more enjoyable, with some students indicating they planned to use analogy generation in the future. This exercise demonstrates the potential of LLMs as tools for scaffolding personalized learning experiences.

2.3.5. Generating Contextualized Parsons Problems

Parsons Problems, which involve rearranging code fragments into a correct sequence, are widely used to scaffold code-writing skills. Using a custom tool called PuzzleMakerPy (del Carpio Gutierrez et al., 2024), we allowed students to generate personalized Parsons Problems. Students could select contexts and programming topics of interest, making the exercises more engaging and relevant.
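The basic mechanics of a Parsons Problem can be sketched in a few lines: the lines of a solution are shuffled, and a student's proposed ordering is checked against the solution. This is not PuzzleMakerPy's code. In PuzzleMakerPy an LLM generates the contextualised solution, whereas here a hypothetical solution with a cooking context is hard-coded. Accepting only a single stored ordering is also a simplification, since some problems admit more than one correct order.

```python
import random

# Hypothetical solution lines, with indentation kept as part of each line.
SOLUTION = [
    "def total_cooking_time(recipes):",
    "    total = 0",
    "    for recipe in recipes:",
    "        total += recipe['minutes']",
    "    return total",
]


def make_puzzle(lines: list[str], seed: int) -> list[str]:
    """Return the solution lines in a shuffled order (a fixed seed makes
    the puzzle reproducible)."""
    if len(set(lines)) < 2:
        raise ValueError("need at least two distinct lines to shuffle")
    shuffled = lines[:]
    rng = random.Random(seed)
    while shuffled == lines:  # make sure the puzzle is not already solved
        rng.shuffle(shuffled)
    return shuffled


def check_order(attempt: list[str], lines: list[str]) -> bool:
    # Simplification: only the stored ordering is accepted.
    return attempt == lines


puzzle = make_puzzle(SOLUTION, seed=7)
print(check_order(puzzle, SOLUTION))                              # False
print(check_order(sorted(puzzle, key=SOLUTION.index), SOLUTION))  # True
```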

The deployment of PuzzleMakerPy showed several benefits. Students reported high satisfaction levels with the personalized problems, noting that the contextual framing made the exercises more relatable. The ability to select programming concepts was also reported as being useful for learning. From an instructional perspective, the tool significantly reduced the workload of creating diverse, high-quality problems. This work is one example in a growing body of literature in computing education around using LLMs to produce learning resources (Sarsa et al., 2022; Hou et al., 2024b; Lekshmi-Narayanan et al., 2024; MacNeil et al., 2023; Jury et al., 2024; Tran et al., 2023).

2.3.6. Teaching Students to Use AI Teaching Assistants

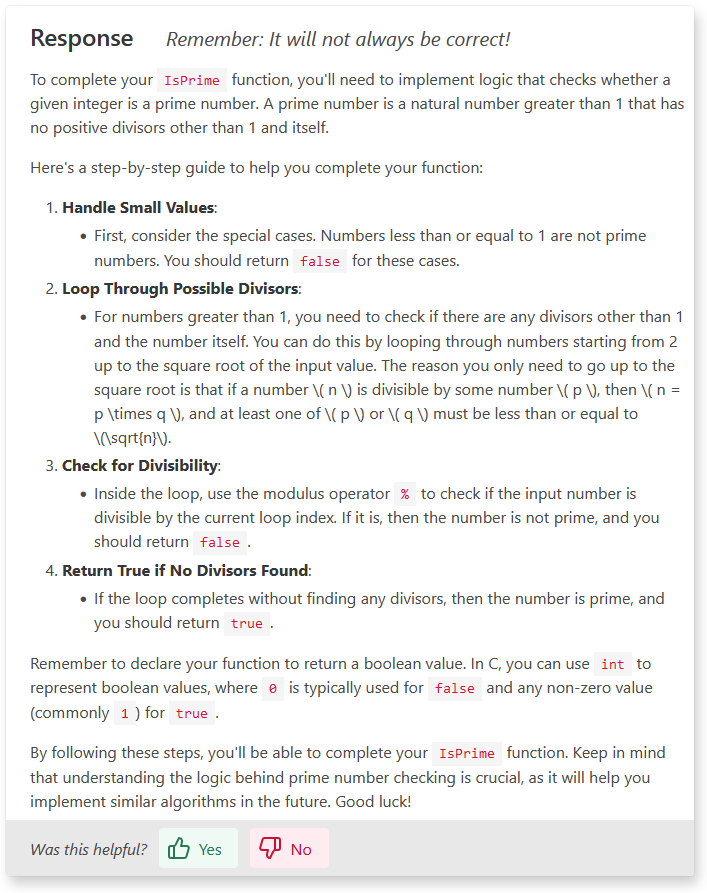

The advent of AI-powered teaching assistants offers scalable solutions for providing personalized feedback. In our courses, we have deployed a digital assistant based on CodeHelp (Denny et al., 2024b) to support students in debugging and code-writing tasks. The most obvious advantage of the AI assistant is that it provides round-the-clock availability and ensures equitable access to support. Indeed, we observed students seeking help from the assistant at all hours, with hundreds of queries submitted between 1am and 3am during the two-week period that the assistant was available. The CodeHelp tool uses guardrails to avoid revealing direct code solutions; an example of a response from the CodeHelp tool is shown in Figure 2.
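The guardrails used by CodeHelp are not reproduced here. Purely to illustrate the general idea, the sketch below applies a naive post-processing filter: any fenced code block in a drafted assistant response is replaced with a reminder for the student to write the code themselves. The function name and placeholder text are hypothetical, and a pattern-based filter like this would miss code written inline in prose.

```python
import re

FENCE = "`" * 3  # a Markdown code fence, built this way to keep the example readable

# Matches a fenced code block, including an optional language tag.
CODE_BLOCK = re.compile(re.escape(FENCE) + r".*?" + re.escape(FENCE), re.DOTALL)

PLACEHOLDER = "[Code removed: try writing this part yourself using the hints above.]"


def strip_code_blocks(response: str) -> tuple[str, bool]:
    """Replace every fenced code block in a drafted response with a
    placeholder. Returns the filtered text and whether anything was removed."""
    filtered, count = CODE_BLOCK.subn(PLACEHOLDER, response)
    return filtered, count > 0


draft = (
    "Your loop stops one element early. Here is a corrected version:\n"
    f"{FENCE}python\nfor i in range(len(xs)):\n    print(xs[i])\n{FENCE}\n"
    "Which index is the last one you need?"
)
filtered, removed = strip_code_blocks(draft)
print(removed)  # True
print(filtered)
```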

Student feedback highlighted several key attributes of the AI assistant that were valued, including instant feedback, and scaffolding that guided students to discover their own solutions rather than simply providing direct answers. Students also expressed a desire for the responses from the AI assistant to be aligned with their current level of knowledge, which is a key skill that human tutors provide. In general, our observations suggest that AI teaching assistants can complement human instructors by addressing gaps in availability and consistency.

2.3.7. Summary

Together, these examples showcase the diverse applications of LLMs in computing education. Integrating GenAI into classroom activities and into the curriculum is essential for equipping students with the skills to leverage AI tools in the future.

3. Discussion

We see two main approaches to incorporating GenAI into CS Education. The first is to embrace GenAI as a core tool (similar to an IDE) used throughout a course. An example of such a course redesign is described by Vadaparty et al. (2024), where the entire course has been refocused on developing programs with the integrated support of GenAI. We believe this approach focuses on contemporary skills for workplace readiness. The second approach is more moderate and retains the existing course design but adopts isolated activities introducing GenAI. This approach focuses on raising student awareness of how GenAI may be used for learning.

From our experiences navigating the introduction, popularisation, and acclimatisation of GenAI in CS education, we summarise two strategies that (a) introduce GenAI for individual tasks and (b) raise student awareness of how to use GenAI successfully for improved educational outcomes.

3.1. Strategy 1: Redesign Assignments

GenAI use is still not widely accepted in education, and using GenAI tools to assist with writing assignments is typically disallowed (Perkins, 2023). This is a sensible approach when the assignments have not been adapted for a GenAI context, since most assignments currently used in courses are designed to be solved individually and without the assistance of GenAI. However, since GenAI tools are becoming more widely used, we believe that restricting GenAI use in all assignments is unrealistic and does not reflect real-world conditions. Hence, our view is that at least some assignments should be designed to support learning with GenAI.

For example, the Prompt Problem exercises described in Subsection 2.3.1 require students to engage with problem specifications, an authentic task for programmers writing programs to solve problems outside the highly constrained educational context. This helps students develop important dispositions outlined in Computer Science Curricula 2023 (Kumar et al., 2024), such as being meticulous and persistent, while also raising their awareness of the capabilities and limitations of GenAI tools.

Activities such as peer code review have been shown to benefit students producing reviews (Indriasari et al., 2020). These activities require students to understand and critique the code produced by others. One potential barrier to such activities is the difficulty of sharing peer code in classrooms — an administrative burden that typically requires a dedicated peer review tool. The same activity (and indeed any activity that requires students to engage with work produced by others) can use GenAI to create the code to be reviewed. Students can be asked to reflect on the quality of the code and comment on differences between the generated code and their solutions. This approach retains the focus on traditional learning outcomes for a given assessment, yet it raises student awareness of GenAI capability (and potentially the limitations of such tools where the quality of the generated code has room for improvement).
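For the comparison step, a structured diff can give students a concrete starting point for their reflection. A minimal sketch using Python's standard `difflib` module follows; both solutions are hypothetical, with `generated` standing in for code produced by a GenAI tool.

```python
import difflib

student = """def average(values):
    total = 0
    for v in values:
        total += v
    return total / len(values)
"""

generated = """def average(values):
    if not values:
        return 0.0
    return sum(values) / len(values)
"""

# Lines prefixed "-" appear only in the student's solution,
# lines prefixed "+" only in the generated one.
diff = difflib.unified_diff(
    student.splitlines(), generated.splitlines(),
    fromfile="student.py", tofile="generated.py", lineterm="",
)
print("\n".join(diff))
```

Here the diff shows that the generated version guards against an empty list, which a student might be asked to comment on (is returning `0.0` for an empty list the right behaviour?), alongside its use of the built-in `sum`.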

As a general example, any traditional assignment involving code writing could be redesigned to focus on code comprehension and to encourage students to link concrete examples more explicitly to abstract concepts. Figure 3 is an example of a typical code-writing question used in a CS1 course. Some students may query GenAI for the solution and submit it as their own work without processing the information. This can occur whenever assessment focuses on the product, since GenAI is excellent at solving programming problems. It is possible to redesign this question to focus on more abstract levels of understanding of programming concepts and to encourage students to use GenAI tools for scaffolding rather than simply producing solutions. Figure 4 guides students to use GenAI as a scaffolding tool that supports learning rather than replaces it. Although this does not guarantee productive learning, it lowers the likelihood of students copying and pasting responses without reading and understanding them. Assignments like this also align with the common suggestion of shifting towards assessing students on the learning and completion process rather than the final product (Cao and Dede, 2023; Prather et al., 2023).

| Write a function that returns a function that takes an argument and adds it to the last even element in the given list. |

| Use the following guide to solve the following question: |

| Write a function that returns a function that takes an argument and adds it to the last even element in the given list. |

| 1. Use ChatGPT to find which programming concepts are used in this question. What are they? |

| 2. Ask ChatGPT to give an example of using each concept in a new context (unrelated to the problem above). Summarise its responses in your own words. |

| 3. Write your solution to the question and then ask ChatGPT to generate a solution. |

| 4. Compare the solutions and comment on the similarities and differences. Submit both solutions and your critique of the main differences between them. |
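For reference, the question in Figures 3 and 4 exercises higher-order functions, closures, and list traversal, which are presumably among the concepts that step 1 asks students to identify. One possible Python solution is sketched below. The question is open to interpretation; we assume that the returned function leaves the list unchanged and returns the sum, and that a list with no even element is an error. The name `make_adder` is hypothetical.

```python
from typing import Callable


def make_adder(values: list[int]) -> Callable[[int], int]:
    """Return a function that adds its argument to the last even element
    of `values` (assumed interpretation: the list itself is not modified)."""
    # Scan from the end so the first even number found is the last one.
    for v in reversed(values):
        if v % 2 == 0:
            last_even = v
            break
    else:
        raise ValueError("the list contains no even element")

    def add(x: int) -> int:
        # `last_even` is captured from the enclosing scope (a closure).
        return last_even + x

    return add


add_to_last_even = make_adder([3, 8, 5, 4, 7])
print(add_to_last_even(10))  # 14
```

An alternative reading finds the last even element when the returned function is called rather than when `make_adder` is called; the two behave differently if the list is modified later, which could itself be a useful point for students to raise in step 4.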

The focus of the redesigned assignments is to assist with learning rather than to evaluate it. Although we believe that GenAI should be used in assignments, we also believe it is beneficial for courses to include secure assessment components (in-person, invigilated, and GenAI-prohibited). These ensure that we measure student capability for accreditation purposes, and they focus attention on developing that capability rather than relying on an outside source (such as GenAI) to produce outputs that students are expected to produce themselves. We enforce secure assessments by having students submit their code to a web-based automated marking tool, such as CodeRunner (Lobb and Harlow, 2016), behind a firewall that only allows Internet access to that marking tool. This prevents students from using GenAI during secure assessment components.

A potential challenge arising from more qualitative assignments, or from focusing on process rather than product, is the increased workload for educators grading student submissions. The traditional approach of manually reading submitted material may be impractical, and innovative methods may need to be devised to speed up the grading process. One possibility is a proxy through which students access GenAI tools and which stores their interactions, so that these can be used to automate parts of the grading. The requirements of the grading process may vary between assignments, but they should be considered when designing the assignments.
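A minimal sketch of such a proxy is shown below, assuming a hypothetical `ask_model` callable that stands in for whatever GenAI service a course uses. The proxy forwards each prompt, records the prompt and response with a timestamp, and exposes simple per-student summaries that could feed into, but not replace, grading. The class and the indicators it computes are illustrative only.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class Interaction:
    student_id: str
    prompt: str
    response: str
    timestamp: datetime


@dataclass
class LoggingProxy:
    ask_model: Callable[[str], str]  # hypothetical stand-in for a GenAI API call
    log: list[Interaction] = field(default_factory=list)

    def ask(self, student_id: str, prompt: str) -> str:
        # Forward the prompt, then record the full exchange.
        response = self.ask_model(prompt)
        self.log.append(Interaction(student_id, prompt, response,
                                    datetime.now(timezone.utc)))
        return response

    def history(self, student_id: str) -> list[Interaction]:
        return [i for i in self.log if i.student_id == student_id]

    def summary(self, student_id: str) -> dict[str, int]:
        # Illustrative indicators only: how many prompts were sent, and how
        # many asked for an explanation rather than code.
        prompts = [i.prompt.lower() for i in self.history(student_id)]
        return {
            "prompts": len(prompts),
            "explanation_requests": sum("explain" in p for p in prompts),
        }


proxy = LoggingProxy(ask_model=lambda p: f"(model reply to: {p})")
proxy.ask("s1", "Explain what a closure is.")
proxy.ask("s1", "Write the full solution.")
print(proxy.summary("s1"))  # {'prompts': 2, 'explanation_requests': 1}
```

A keyword count is a crude proxy for process; in practice the stored history would more likely be sampled or reviewed against a rubric designed alongside the assignment.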

The redesign of assignments also raises concerns about the correctness of responses generated by GenAI, since students risk counterproductive learning if the generated information is incorrect or misleading. However, rather than treating this only as a risk, educators must teach students to identify incorrect information by fact-checking against trusted non-AI-generated resources, such as textbooks and teaching staff. This is an essential skill in a discipline where much recent content knowledge is acquired through community sources (such as forums, blog posts, and online documentation), and it can strengthen student understanding of topics while simultaneously improving their metacognitive skills.

3.2. Strategy 2: Teach Metacognition

With increasing online resources, students are becoming less reliant on educators, as their first source of study assistance is usually the Internet (Hou et al., 2024a), where searches supply answers instantly. One main advantage of educators’ responses to student queries is that they provide personalised feedback that addresses specific details of a question that online searches may not. However, GenAI tools are now easily accessible, and students can receive unlimited, immediate, and personalised feedback from them. Consequently, we have observed fewer help requests from students in our computing laboratories, as described in Subsection 2.2. This suggests that we may better support student learning by paying more attention to skills that students cannot learn simply with assistance from GenAI, such as the metacognitive skills that are crucial when learning CS (Prather et al., 2020).

In Subsection 2.2, we outline differences in how more and less motivated students use GenAI. These differences may also be attributable to differences in metacognitive skills: more motivated students understand the harmful effects of using GenAI without engaging in learning, whereas less motivated students may not consider the future effects of copying from GenAI, such as a lack of understanding that leads to difficulties during assessments. We believe that it should be the educators’ responsibility to highlight the risks of copying from GenAI and to teach the correct and most effective ways of using GenAI when learning, such as asking GenAI to refrain from providing full solutions and instead provide explanations and examples.

Kazemitabaar et al. (2024a) present a variety of GenAI-supported tasks that align with different levels of Bloom’s taxonomy. These tasks require tools designed to scaffold learning with GenAI and could be introduced into CS classrooms with guidance that raises awareness of how the activities impact learning and the role of GenAI in that process.

| Prompt |

| How to write a function to check whether a number is prime? |

| Response |

| 1. Special Cases … |

| 2. Divisibility Check … |

| [FULL CODE SOLUTION WITH LIMITED COMMENTS] |

| Prompt |

| Write a function to check whether a number is prime. Please do not include any code and only explain the algorithm in detail. |

| Response |

| 1. Handle Small Numbers … |

| 2. Eliminate Even and Small Odd Divisors Early … |

| 3. Check for Divisors up to the Square Root of … |

| 4. Terminate if Any Divisor is Found … |

| Prompt |

| Write a function to check whether a number is prime. Please provide more comments in the code and explain how each line works. |

| Response |

| 1. Function Definition … |

| 2. Check if n is less than or equal to 1 … |

| 3. Loop from 2 to the square root of n … |

| 4. Check if n is divisible by any number in the range … |

| 5. Return True if no factors are found … |

| [FULL CODE SOLUTION WITH DETAILED COMMENTS] |

More generally available tools, such as ChatGPT, are more likely to be used in practice by students working independently on programming tasks. The way students formulate their prompts significantly influences the content, and thus the usefulness, of the responses, so educators should teach students the characteristics of effective prompts. Teachers can easily demonstrate how to prompt ChatGPT and the impact of different prompts. The Prompt Problems exercise described in Subsection 2.3.1 illustrates how guidance about prompts could subsequently be assessed as part of a course activity.

For example, consider a CS1 student trying to understand how to write a prime-checking function with the assistance of ChatGPT. Figure 5 shows an example of a general prompt, where the explanation lacks detail and may be difficult for a novice programmer to understand. Additionally, the response provides the full model solution, which removes the opportunity for students to learn by exploring the solution space themselves. In contrast, Figure 6 shows an example of a prompt requesting no code and only explanations. This is more valuable for learning than the general prompt, as students can then attempt to write the code themselves from the information generated by ChatGPT. If students are unable to write the code themselves, they can use the prompt shown in Figure 7, which requests a full code solution but with additional comments and details. As expected, the corresponding explanation and code are more comprehensive and explicit than those shown in Figure 5, even explaining the use of the if-statements and for-loops. Using the prompts shown in Figure 6 and Figure 7, and observing the corresponding annotated outputs, may help a student develop a deeper understanding of the problem and the computational steps involved, compared with only using the prompt shown in Figure 5.
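To make the contrast concrete, the sketch below shows what a heavily commented prime-checking function of the kind requested in Figure 7 might look like, following the steps listed in Figure 6. It is our own illustration, not the response that ChatGPT produced.

```python
import math


def is_prime(n: int) -> bool:
    # Special case: 0, 1 and negative numbers are not prime.
    if n <= 1:
        return False
    # 2 is the only even prime.
    if n == 2:
        return True
    # Any other even number is divisible by 2, so it is not prime.
    if n % 2 == 0:
        return False
    # If n has a factor larger than its square root, it must also have one
    # smaller than it, so only odd candidates up to isqrt(n) need checking.
    for d in range(3, math.isqrt(n) + 1, 2):
        if n % d == 0:
            return False  # found a divisor: n is composite
    # No divisor found: n is prime.
    return True


print([k for k in range(20) if is_prime(k)])  # [2, 3, 5, 7, 11, 13, 17, 19]
```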

The characteristics of effective prompts can vary across courses and disciplines. Therefore, educators should provide course-specific guidance on crafting good prompts for queries within the context of their course. Additionally, students need to recognize that GenAI responses are not always accurate, and they must develop the ability to identify misinformation. This process can serve as both a valuable learning experience and an important metacognitive skill.

AI-generated content comes in various forms, each potentially requiring different fact-checking methods. Educators should demonstrate the fact-checking techniques most relevant to their courses to teach students how to identify misinformation effectively. For example, educators can present snippets of AI-generated content and walk students through the process of verifying this information using course materials, textbooks, documentation, and trusted websites. Similarly, educators can demonstrate how to test AI-generated code for correctness by running it on representative inputs, including edge cases, and comparing the results with the expected output. If the code produces incorrect results, educators can demonstrate the debugging process, which is an essential skill, particularly as code generation becomes more prevalent.
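Testing AI-generated code by running it can be made concrete in class with a small differential test. The sketch below assumes a hypothetical AI-generated `ai_is_prime` containing a deliberate off-by-one bug, and compares it with a slow but obviously correct reference on every input below 50. All function names (`ai_is_prime`, `reference_is_prime`, `find_mismatches`) are illustrative and not taken from any particular tool.

```python
def ai_is_prime(n: int) -> bool:
    # Hypothetical AI-generated snippet with a subtle off-by-one error:
    # range() excludes its upper bound, so int(n ** 0.5) itself is never tested.
    if n < 2:
        return False
    for d in range(2, int(n ** 0.5)):
        if n % d == 0:
            return False
    return True


def reference_is_prime(n: int) -> bool:
    # Slow but obviously correct: n is prime iff it has exactly two divisors in 1..n.
    return n >= 2 and sum(1 for d in range(1, n + 1) if n % d == 0) == 2


def find_mismatches(candidate, reference, inputs):
    """Return every input on which the candidate disagrees with the reference."""
    return [n for n in inputs if candidate(n) != reference(n)]


mismatches = find_mismatches(ai_is_prime, reference_is_prime, range(50))
print(mismatches)  # [4, 6, 8, 9, 15, 25, 35, 49]
```

Every failing input is composite, and in each case its smallest factor equals `int(n ** 0.5)`, precisely the value that `range` excludes. This pattern gives educators a natural starting point for demonstrating the debugging process; one fix is to loop over `range(2, int(n ** 0.5) + 1)`.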

3.3. Future Trends

The CE landscape will inevitably undergo further significant change due to GenAI. While many new teaching practices have emerged in response to GenAI, such as those outlined in this work, we argue that further research is essential to evaluate their validity and effectiveness. This includes trialling innovative teaching methods, gathering student feedback, and assessing student performance. It is critical to thoroughly investigate the concrete impacts of GenAI and proactively prepare for these changes before the research gap becomes too wide. Neglecting this need could result in reduced student productivity due to ineffective GenAI usage and leave graduates ill-prepared because of outdated teaching practices.

We believe that future graduates must be proficient and experienced in leveraging GenAI to support their work. Therefore, methods for effectively using GenAI should be integrated into teaching curricula. We urge the CE community to prioritise research that examines the tangible impacts of GenAI, including its influence on course structure, content, and teaching methods. Such research will enable educators to make well-informed decisions as we adapt our teaching practices to the evolving role of GenAI in education.

4. Conclusion

In this paper, we shared our experiences with integrating GenAI into CS-focused educational settings and the adjustments we have made to our teaching practices in recent years following the popularisation of GenAI. Based on these experiences, we emphasised the need to redefine the role of CS educators in the GenAI era, focusing more on developing students’ metacognitive skills. To support this shift, we proposed two strategies: redesigning take-home assignments to incorporate GenAI use, and adapting teaching approaches to emphasise metacognition by demonstrating the correct and effective use of GenAI. Additionally, we highlighted the importance of conducting further research to evaluate the concrete impacts of GenAI on computing education, particularly the validity and effectiveness of emerging teaching practices. We hope this paper provides valuable insights and inspiration for navigating the evolving landscape of CS education as GenAI continues to advance.

References


- Becker et al. (2023) Brett A Becker, Paul Denny, James Finnie-Ansley, Andrew Luxton-Reilly, James Prather, and Eddie Antonio Santos. 2023. Programming is hard - or at least it used to be: Educational opportunities and challenges of AI code generation. In Proceedings of the 54th ACM Technical Symposium on Computer Science Education V. 1. 500–506.

- Bernstein et al. (2024) Seth Bernstein, Paul Denny, Juho Leinonen, Lauren Kan, Arto Hellas, Matt Littlefield, Sami Sarsa, and Stephen Macneil. 2024. “Like a Nesting Doll”: Analyzing Recursion Analogies Generated by CS Students Using Large Language Models. In Proceedings of the 2024 on Innovation and Technology in Computer Science Education V. 1 (Milan, Italy) (ITiCSE 2024). Association for Computing Machinery, New York, NY, USA, 122–128. https://doi.org/10.1145/3649217.3653533

- Cao and Dede (2023) Lydia Cao and Chris Dede. 2023. Navigating a world of generative AI: Suggestions for educators. The Next Level Lab at Harvard Graduate School of Education. President and Fellows of Harvard College: Cambridge (2023).

- del Carpio Gutierrez et al. (2024) Andre del Carpio Gutierrez, Paul Denny, and Andrew Luxton-Reilly. 2024. Automating Personalized Parsons Problems with Customized Contexts and Concepts. In Proceedings of the 2024 on Innovation and Technology in Computer Science Education V. 1 (Milan, Italy) (ITiCSE 2024). Association for Computing Machinery, New York, NY, USA, 688–694. https://doi.org/10.1145/3649217.3653568

- Denny et al. (2023) Paul Denny, Viraj Kumar, and Nasser Giacaman. 2023. Conversing with Copilot: Exploring prompt engineering for solving CS1 problems using natural language. In Proceedings of the 54th ACM Technical Symposium on Computer Science Education V. 1. 1136–1142.

- Denny et al. (2024a) Paul Denny, Juho Leinonen, James Prather, Andrew Luxton-Reilly, Thezyrie Amarouche, Brett A. Becker, and Brent N. Reeves. 2024a. Prompt Problems: A New Programming Exercise for the Generative AI Era. In Proceedings of the 55th ACM Technical Symposium on Computer Science Education V. 1 (Portland, OR, USA) (SIGCSE 2024). Association for Computing Machinery, New York, NY, USA, 296–302. https://doi.org/10.1145/3626252.3630909

- Denny et al. (2024b) Paul Denny, Stephen MacNeil, Jaromir Savelka, Leo Porter, and Andrew Luxton-Reilly. 2024b. Desirable Characteristics for AI Teaching Assistants in Programming Education. In Proceedings of the 2024 on Innovation and Technology in Computer Science Education V. 1 (Milan, Italy) (ITiCSE 2024). Association for Computing Machinery, New York, NY, USA, 408–414. https://doi.org/10.1145/3649217.3653574

- Denny et al. (2024c) Paul Denny, James Prather, Brett A. Becker, James Finnie-Ansley, Arto Hellas, Juho Leinonen, Andrew Luxton-Reilly, Brent N. Reeves, Eddie Antonio Santos, and Sami Sarsa. 2024c. Computing Education in the Era of Generative AI. Commun. ACM 67, 2 (Jan. 2024), 56–67. https://doi.org/10.1145/3624720

- Feng et al. (2024a) Tony Haoran Feng, Paul Denny, Burkhard Wuensche, Andrew Luxton-Reilly, and Steffan Hooper. 2024a. More Than Meets the AI: Evaluating the performance of GPT-4 on Computer Graphics assessment questions. In Proceedings of the 26th Australasian Computing Education Conference. 182–191.

- Feng et al. (2024b) Tony Haoran Feng, Paul Denny, Burkhard C Wünsche, Andrew Luxton-Reilly, and Jacqueline Whalley. 2024b. An Eye for an AI: Evaluating GPT-4o’s Visual Perception Skills and Geometric Reasoning Skills Using Computer Graphics Questions. In SIGGRAPH Asia 2024 Educator’s Forum. 1–8.

- Feng et al. (2024c) Tony Haoran Feng, Burkhard C. Wünsche, Paul Denny, Andrew Luxton-Reilly, and Steffan Hooper. 2024c. Can GPT-4 Trace Rays. In Eurographics 2024 - Education Papers, Beatriz Sousa Santos and Eike Anderson (Eds.). The Eurographics Association. https://doi.org/10.2312/eged.20241003

- Finnie-Ansley et al. (2022) James Finnie-Ansley, Paul Denny, Brett A Becker, Andrew Luxton-Reilly, and James Prather. 2022. The robots are coming: Exploring the implications of OpenAI Codex on introductory programming. In Proceedings of the 24th Australasian Computing Education Conference. Association for Computing Machinery, 10–19.

- Hooper et al. (2024) Steffan Hooper, Burkhard C Wünsche, Andrew Luxton-Reilly, Paul Denny, and Tony Haoran Feng. 2024. Advancing Automated Assessment Tools - Opportunities for Innovations in Upper-level Computing Courses: A Position Paper. In Proceedings of the 55th ACM Technical Symposium on Computer Science Education V. 1. 519–525.

- Hou et al. (2024a) Irene Hou, Sophia Mettille, Owen Man, Zhuo Li, Cynthia Zastudil, and Stephen MacNeil. 2024a. The Effects of Generative AI on Computing Students’ Help-Seeking Preferences. In Proceedings of the 26th Australasian Computing Education Conference. 39–48.

- Hou et al. (2024b) Xinying Hou, Zihan Wu, Xu Wang, and Barbara J. Ericson. 2024b. CodeTailor: LLM-Powered Personalized Parsons Puzzles for Engaging Support While Learning Programming. In Proceedings of the Eleventh ACM Conference on Learning @ Scale (Atlanta, GA, USA) (L@S ’24). Association for Computing Machinery, New York, NY, USA, 51–62. https://doi.org/10.1145/3657604.3662032

- Indriasari et al. (2020) Theresia Devi Indriasari, Andrew Luxton-Reilly, and Paul Denny. 2020. A Review of Peer Code Review in Higher Education. ACM Trans. Comput. Educ. 20, 3, Article 22 (Sept. 2020), 25 pages. https://doi.org/10.1145/3403935

- Jury et al. (2024) Breanna Jury, Angela Lorusso, Juho Leinonen, Paul Denny, and Andrew Luxton-Reilly. 2024. Evaluating LLM-generated Worked Examples in an Introductory Programming Course. In Proceedings of the 26th Australasian Computing Education Conference (Sydney, NSW, Australia) (ACE ’24). Association for Computing Machinery, New York, NY, USA, 77–86. https://doi.org/10.1145/3636243.3636252

- Kazemitabaar et al. (2024a) Majeed Kazemitabaar, Oliver Huang, Sangho Suh, Austin Z. Henley, and Tovi Grossman. 2024a. Exploring the Design Space of Cognitive Engagement Techniques with AI-Generated Code for Enhanced Learning. arXiv:2410.08922 [cs.HC] https://arxiv.org/abs/2410.08922

- Kazemitabaar et al. (2024b) Majeed Kazemitabaar, Runlong Ye, Xiaoning Wang, Austin Z Henley, Paul Denny, Michelle Craig, and Tovi Grossman. 2024b. CodeAid: Evaluating a Classroom Deployment of an LLM-based Programming Assistant that Balances Student and Educator Needs. arXiv preprint arXiv:2401.11314 (2024).

- Kerslake et al. (2024) Chris Kerslake, Paul Denny, David H. Smith, James Prather, Juho Leinonen, Andrew Luxton-Reilly, and Stephen MacNeil. 2024. Integrating Natural Language Prompting Tasks in Introductory Programming Courses. In Proceedings of the 2024 on ACM Virtual Global Computing Education Conference V. 1 (Virtual Event, NC, USA) (SIGCSE Virtual 2024). Association for Computing Machinery, New York, NY, USA, 88–94. https://doi.org/10.1145/3649165.3690125

- Khan Academy (2024) Khan Academy. 2024. GPT-4o (Omni) math tutoring demo on Khan Academy - YouTube. https://www.youtube.com/watch?v=IvXZCocyU_M. [Accessed 22-05-2024].

- Kumar et al. (2024) Amruth N. Kumar, Rajendra K. Raj, Sherif G. Aly, Monica D. Anderson, Brett A. Becker, Richard L. Blumenthal, Eric Eaton, Susan L. Epstein, Michael Goldweber, Pankaj Jalote, Douglas Lea, Michael Oudshoorn, Marcelo Pias, Susan Reiser, Christian Servin, Rahul Simha, Titus Winters, and Qiao Xiang. 2024. Computer Science Curricula 2023. Association for Computing Machinery, New York, NY, USA.

- Lekshmi-Narayanan et al. (2024) Arun-Balajiee Lekshmi-Narayanan, Priti Oli, Jeevan Chapagain, Mohammad Hassany, Rabin Banjade, Peter Brusilovsky, and Vasile Rus. 2024. Explaining Code Examples in Introductory Programming Courses: LLM vs Humans. arXiv:2403.05538 [cs.CY] https://arxiv.org/abs/2403.05538

- Lobb and Harlow (2016) Richard Lobb and Jenny Harlow. 2016. CodeRunner: A tool for assessing computer programming skills. ACM Inroads 7, 1 (2016), 47–51.

- Lye and Lim (2024) Che Yee Lye and Lyndon Lim. 2024. Generative Artificial Intelligence in Tertiary Education: Assessment Redesign Principles and Considerations. Education Sciences 14, 6 (2024), 569.

- Ma et al. (2024) Qianou Ma, Hua Shen, Kenneth Koedinger, and Sherry Tongshuang Wu. 2024. How to Teach Programming in the AI Era? Using LLMs as a Teachable Agent for Debugging. In Artificial Intelligence in Education, Andrew M. Olney, Irene-Angelica Chounta, Zitao Liu, Olga C. Santos, and Ig Ibert Bittencourt (Eds.). Springer Nature Switzerland, Cham, 265–279.

- MacNeil et al. (2023) Stephen MacNeil, Andrew Tran, Arto Hellas, Joanne Kim, Sami Sarsa, Paul Denny, Seth Bernstein, and Juho Leinonen. 2023. Experiences from Using Code Explanations Generated by Large Language Models in a Web Software Development E-Book. In Proceedings of the 54th ACM Technical Symposium on Computer Science Education V. 1 (Toronto ON, Canada) (SIGCSE 2023). Association for Computing Machinery, New York, NY, USA, 931–937. https://doi.org/10.1145/3545945.3569785

- Milano et al. (2023) Silvia Milano, Joshua A McGrane, and Sabina Leonelli. 2023. Large language models challenge the future of higher education. Nature Machine Intelligence 5, 4 (2023), 333–334.

- OpenAI (2024) OpenAI. 2024. ChatGPT. https://chatgpt.com/. [Accessed 10-12-2024].

- Orenstrakh et al. (2023) Michael Sheinman Orenstrakh, Oscar Karnalim, Carlos Anibal Suarez, and Michael Liut. 2023. Detecting LLM-generated text in computing education: A comparative study for ChatGPT cases. arXiv preprint arXiv:2307.07411 (2023).

- Perkins (2023) Mike Perkins. 2023. Academic Integrity considerations of AI Large Language Models in the post-pandemic era: ChatGPT and beyond. Journal of University Teaching & Learning Practice 20, 2 (2023), 07.

- Prather et al. (2020) James Prather, Brett A Becker, Michelle Craig, Paul Denny, Dastyni Loksa, and Lauren Margulieux. 2020. What do we think we think we are doing? Metacognition and self-regulation in programming. In Proceedings of the 2020 ACM conference on international computing education research. 2–13.

- Prather et al. (2023) James Prather, Paul Denny, Juho Leinonen, Brett A Becker, Ibrahim Albluwi, Michelle Craig, Hieke Keuning, Natalie Kiesler, Tobias Kohn, Andrew Luxton-Reilly, et al. 2023. The robots are here: Navigating the generative AI revolution in computing education. In Proceedings of the 2023 Working Group Reports on Innovation and Technology in Computer Science Education. Association for Computing Machinery, 108–159.

- Prather et al. (2024) James Prather, Brent N Reeves, Juho Leinonen, Stephen MacNeil, Arisoa S Randrianasolo, Brett A. Becker, Bailey Kimmel, Jared Wright, and Ben Briggs. 2024. The Widening Gap: The Benefits and Harms of Generative AI for Novice Programmers. In Proceedings of the 2024 ACM Conference on International Computing Education Research - Volume 1 (Melbourne, VIC, Australia) (ICER ’24). Association for Computing Machinery, New York, NY, USA, 469–486. https://doi.org/10.1145/3632620.3671116

- Pădurean et al. (2024) Victor-Alexandru Pădurean, Paul Denny, and Adish Singla. 2024. BugSpotter: Automated Generation of Code Debugging Exercises. arXiv:2411.14303 [cs.SE] https://arxiv.org/abs/2411.14303

- Sarsa et al. (2022) Sami Sarsa, Paul Denny, Arto Hellas, and Juho Leinonen. 2022. Automatic Generation of Programming Exercises and Code Explanations Using Large Language Models. In Proceedings of the 2022 ACM Conference on International Computing Education Research - Volume 1 (Lugano and Virtual Event, Switzerland) (ICER ’22). Association for Computing Machinery, New York, NY, USA, 27–43. https://doi.org/10.1145/3501385.3543957

- Savelka et al. (2023) Jaromir Savelka, Arav Agarwal, Christopher Bogart, Yifan Song, and Majd Sakr. 2023. Can generative pre-trained transformers (GPT) pass assessments in higher education programming courses? In Proceedings of the 2023 Conference on Innovation and Technology in Computer Science Education V. 1. 117–123.

- Sheese et al. (2024) Brad Sheese, Mark Liffiton, Jaromir Savelka, and Paul Denny. 2024. Patterns of Student Help-Seeking When Using a Large Language Model-Powered Programming Assistant. In Proceedings of the 26th Australasian Computing Education Conference. 49–57.

- Tang et al. (2024) Ruixiang Tang, Yu-Neng Chuang, and Xia Hu. 2024. The Science of Detecting LLM-Generated Text. Commun. ACM 67, 4 (2024), 50–59.

- Tran et al. (2023) Andrew Tran, Kenneth Angelikas, Egi Rama, Chiku Okechukwu, David H. Smith, and Stephen MacNeil. 2023. Generating Multiple Choice Questions for Computing Courses Using Large Language Models. In 2023 IEEE Frontiers in Education Conference (FIE). 1–8. https://doi.org/10.1109/FIE58773.2023.10342898

- Vadaparty et al. (2024) Annapurna Vadaparty, Daniel Zingaro, David H. Smith IV, Mounika Padala, Christine Alvarado, Jamie Gorson Benario, and Leo Porter. 2024. CS1-LLM: Integrating LLMs into CS1 Instruction. In Proceedings of the 2024 on Innovation and Technology in Computer Science Education V. 1 (Milan, Italy) (ITiCSE 2024). Association for Computing Machinery, New York, NY, USA, 297–303. https://doi.org/10.1145/3649217.3653584

- Wu et al. (2023) Junchao Wu, Shu Yang, Runzhe Zhan, Yulin Yuan, Derek F Wong, and Lidia S Chao. 2023. A survey on LLM-generated text detection: Necessity, methods, and future directions. arXiv preprint arXiv:2310.14724 (2023).

- Wünsche et al. (2018) Burkhard C Wünsche, Zhen Chen, Lindsay Shaw, Thomas Suselo, Kai-Cheung Leung, Davis Dimalen, Wannes van der Mark, Andrew Luxton-Reilly, and Richard Lobb. 2018. Automatic assessment of OpenGL computer graphics assignments. In Proceedings of the 23rd annual ACM conference on innovation and technology in computer science education. 81–86.

- Wünsche et al. (2019) Burkhard C Wünsche, Edward Huang, Lindsay Shaw, Thomas Suselo, Kai-Cheung Leung, Davis Dimalen, Wannes van der Mark, Andrew Luxton-Reilly, and Richard Lobb. 2019. CodeRunnerGL - An interactive web-based tool for computer graphics teaching and assessment. In 2019 International Conference on Electronics, Information, and Communication (ICEIC). IEEE, 1–7.